Experiments

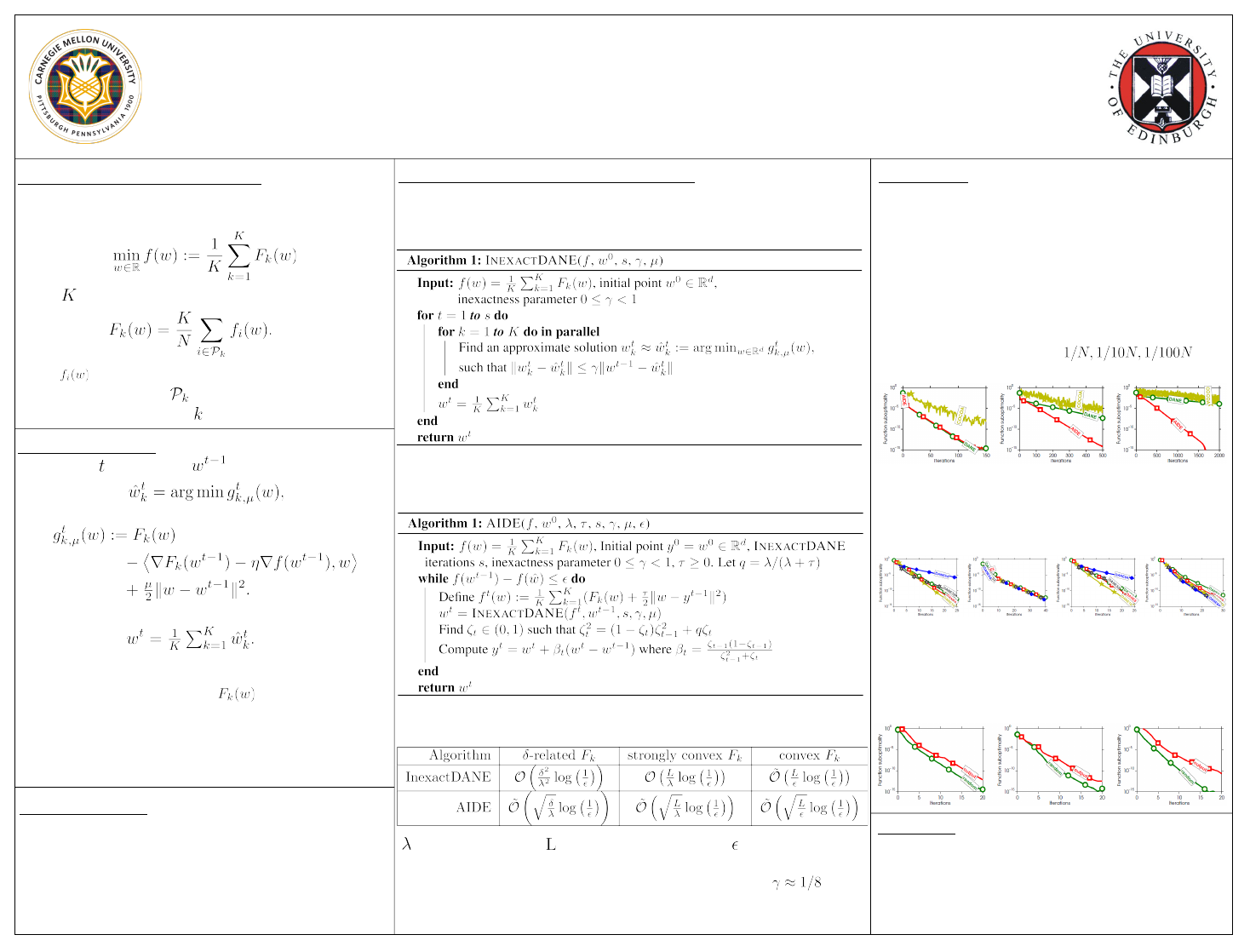

In the following, compare three algorithms

• COCOA ([2]) with SDCA locally

• InexactDANE (DANE) with SVRG locally

• AIDE with SVRG locally

By default for a single pass through local data.

Algorithm comparison

• Rcv1 dataset, smoothed hinge loss

• Regularization strength { }

• Data randomly distributed across 8 nodes.

Node scaling

• Rcv1, covtype, realism, url datasets, logistic loss

• Randomly distributed across 4–64 computers.

• Fixed number of local steps of SVRG

Arbitrary data partitioning

• Rcv1, covtype, realsim datasets, logistic loss

• Partitioned to 2 computers:

Ø Randomly (random)

Ø Based on output label (output)

AIDE: Fast and Communication Efficient Distributed Optimization

Sashank J. Reddi*, Jakub Konečný^, Peter Richtárik^, Barnabás Póczos*, Alex Smola*

*Machine Learning Department, Carnegie Mellon University

^School of Mathematics, University of Edinburgh

Distributed Optimization Problem

Minimize an average of functions, each of which is

stored on different machine. Formally,

where is the number of machines, and

Here, usually represent a loss function incurred on

the i-th data point. Set denotes indices of data points

stored on computer .

Baseline algorithm: DANE [3]

At iteration , with iterate , each machine solves

where

This is followed by aggregation to form new iterate

Properties

• Fast convergence when are similar enough

(i.i.d. data distribution)

• Not robust to arbitrary data distributions

• Exact minimum computationally infeasible in

many applications

Our contributions

• Inexact version of DANE – more robust

• Accelerated version, AIDE, that (nearly) matches

communication complexity lower bounds

Ø First efficient method that can be

implemented using only first-order oracle

Two Distributed Optimization Algorithms

Inexact DANE

Core idea – solve the DANE subproblem approximately.

References

[1] Hongzhou Lin, Julien Mairal, and Zaid Harchaoui. “A universal catalyst for first-

order optimization.” In Advances in Neural Information Processing Systems, 2015.

[2] Chenxin Ma, Jakub Konečný, Martin Jaggi, Virginia Smith, Michael I. Jordan, Peter

Richtárik, and Martin Takáč. “Distributed Optimization with Arbitrary Local Solvers.”

arXiv preprint arXiv:1512.04039 (2015).

[3] Ohad Shamir, Nathan Srebro, and Tong Zhang. “Communication efficient

distributed optimization using an approximate newton-type method.” arXiv preprint

arXiv:1312.7853 (2013).

Communication complexity guarantees

– strong convexity; – smoothness parameter; – target accuracy

• Only modest accuracy necessary for the above rates ( )

• Inexactness changes only constants and adds practical robustness

AIDE: Accelerated Inexact DANE

Core idea – apply Universal Catalyst [1] to InexactDANE