Journal of Machine Learning Research 18 (2018) 1-54 Submitted 12/17; Revised 12/17; Published 4/18

Catalyst Acceleration for First-order Convex Optimization:

from Theory to Practice

Massachusetts Institute of Technology

Computer Science and Artificial Intelligence Laboratory

Cambridge, MA 02139, USA

Univ. Grenoble Alpes, Inria, CNRS, Grenoble INP

∗

, LJK,

Grenoble, 38000, France

University of Washington

Department of Statistics

Seattle, WA 98195, USA

Editor: L´eon Bottou

Abstract

We introduce a generic scheme for accelerating gradient-based optimization methods in

the sense of Nesterov. The approach, called Catalyst, builds upon the inexact acceler-

ated proximal point algorithm for minimizing a convex objective function, and consists of

approximately solving a sequence of well-chosen auxiliary problems, leading to faster con-

vergence. One of the keys to achieve acceleration in theory and in practice is to solve these

sub-problems with appropriate accuracy by using the right stopping criterion and the right

warm-start strategy. We give practical guidelines to use Catalyst and present a compre-

hensive analysis of its global complexity. We show that Catalyst applies to a large class of

algorithms, including gradient descent, block coordinate descent, incremental algorithms

such as SAG, SAGA, SDCA, SVRG, MISO/Finito, and their proximal variants. For all of

these methods, we establish faster rates using the Catalyst acceleration, for strongly convex

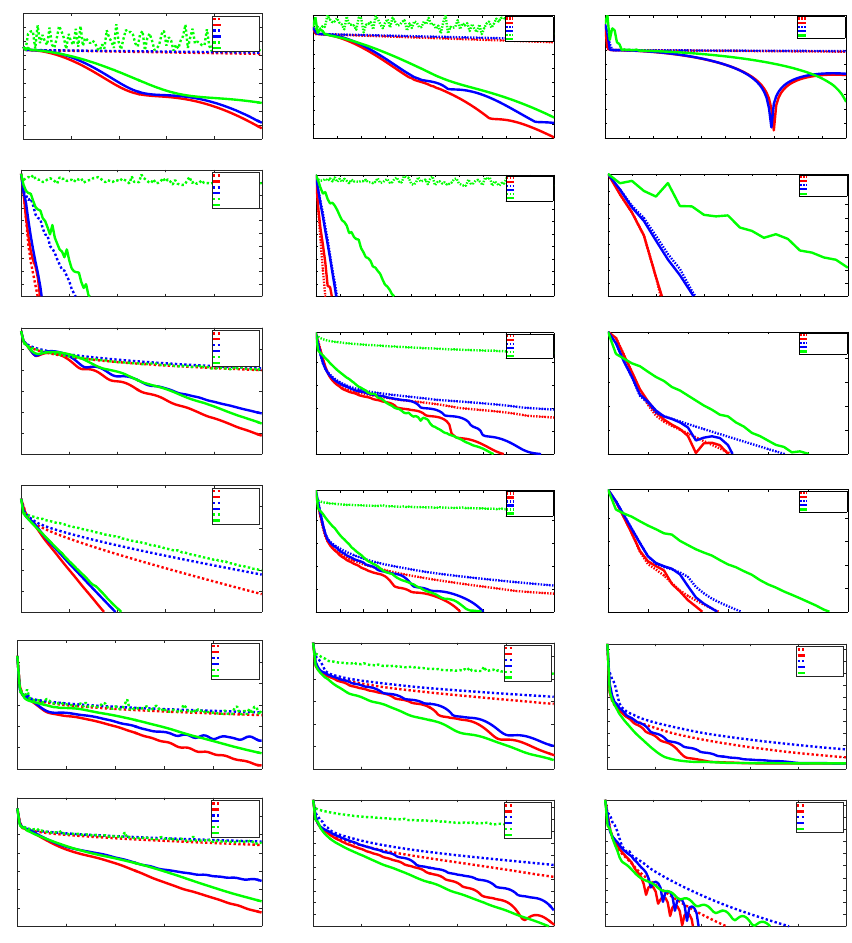

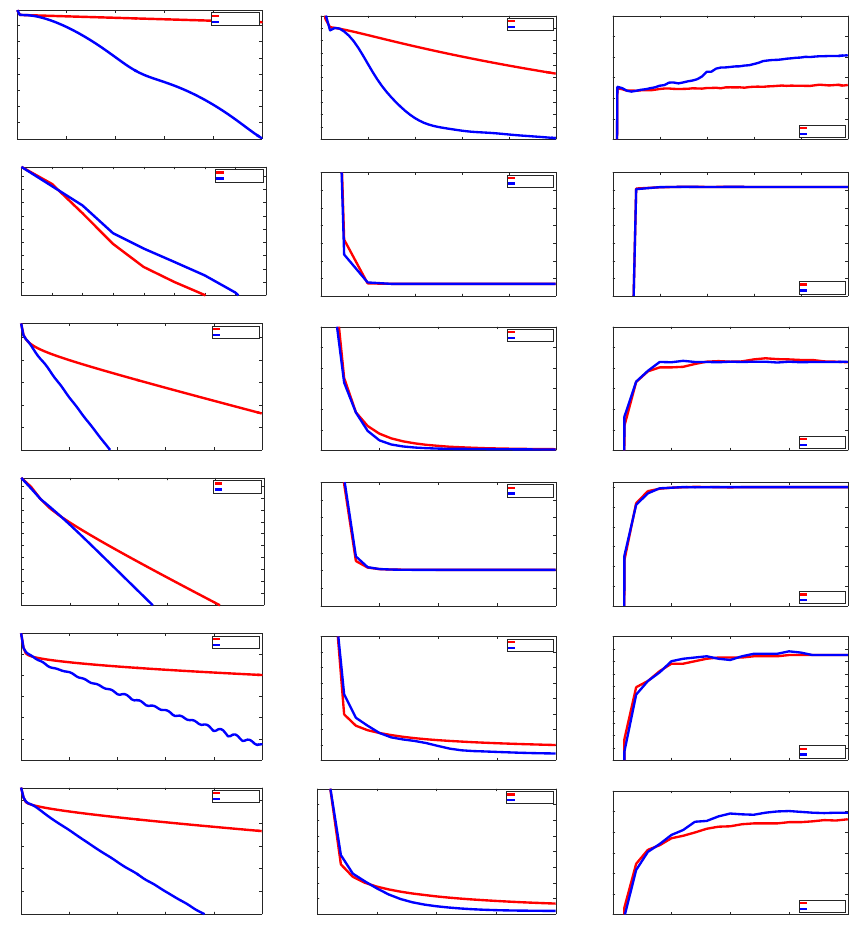

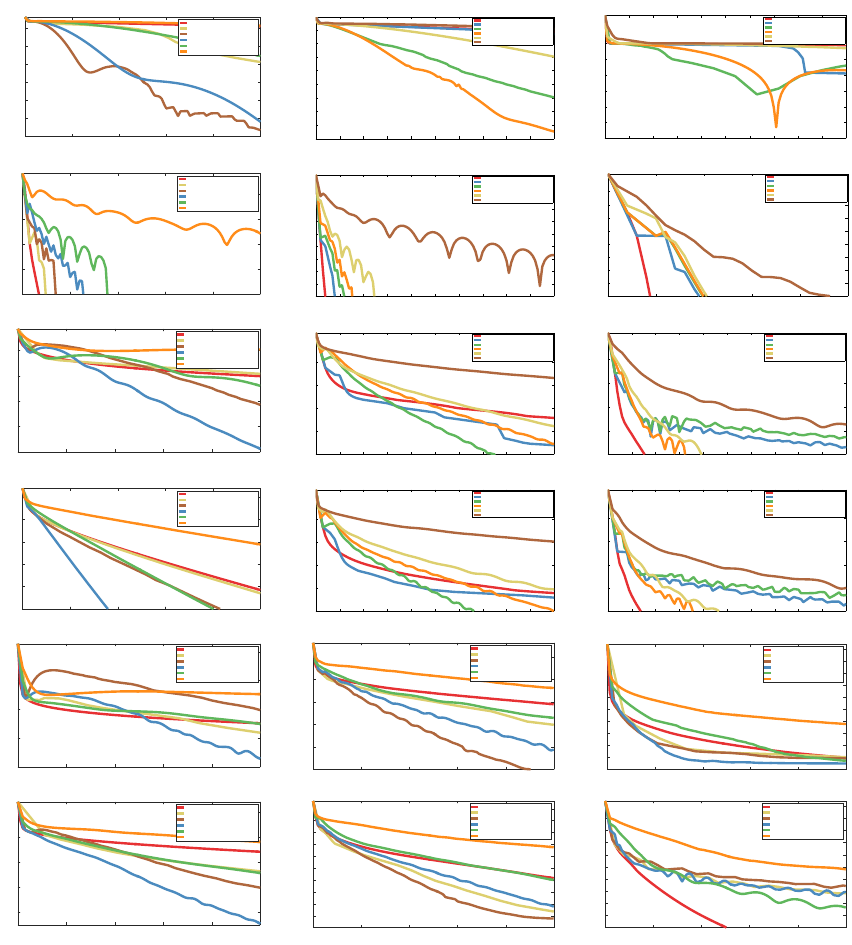

and non-strongly convex objectives. We conclude with extensive experiments showing that

acceleration is useful in practice, especially for ill-conditioned problems.

Keywords: convex optimization, first-order methods, large-scale machine learning

1. Introduction

A large number of machine learning and signal processing problems are formulated as the

minimization of a convex objective function:

min

x∈R

p

n

f(x) , f

0

(x) + ψ(x)

o

, (1)

where f

0

is convex and L-smooth, and ψ is convex but may not be differentiable. We call

a function L-smooth when it is differentiable and its gradient is L-Lipschitz continuous.

∗. Institute of Engineering Univ. Grenoble Alpes

c

2018 Hongzhou Lin, Julien Mairal and Zaid Harchaoui.

License: CC-BY 4.0, see https://creativecommons.org/licenses/by/4.0/. Attribution requirements are provided

at http://jmlr.org/papers/v18/17-748.html.

Lin, Mairal and Harchaoui

In statistics or machine learning, the variable x may represent model parameters, and

the role of f

0

is to ensure that the estimated parameters fit some observed data. Specifically,

f

0

is often a large sum of functions and (1) is a regularized empirical risk which writes as

min

x∈R

p

(

f(x) ,

1

n

n

X

i=1

f

i

(x) + ψ(x)

)

. (2)

Each term f

i

(x) measures the fit between x and a data point indexed by i, whereas the

function ψ acts as a regularizer; it is typically chosen to be the squared `

2

-norm, which

is smooth, or to be a non-differentiable penalty such as the `

1

-norm or another sparsity-

inducing norm (Bach et al., 2012).

We present a unified framework allowing one to accelerate gradient-based or first-order

methods, with a particular focus on problems involving large sums of functions. By “ac-

celerating”, we mean generalizing a mechanism invented by Nesterov (1983) that improves

the convergence rate of the gradient descent algorithm. When ψ = 0, gradient descent

steps produce iterates (x

k

)

k≥0

such that f(x

k

) − f

∗

≤ ε in O(1/ε) iterations, where f

∗

denotes the minimum value of f. Furthermore, when the objective f is µ-strongly convex,

the previous iteration-complexity becomes O((L/µ) log(1/ε)), which is proportional to the

condition number L/µ. However, these rates were shown to be suboptimal for the class

of first-order methods, and a simple strategy of taking the gradient step at a well-chosen

point different from x

k

yields the optimal complexity—O(1/

√

ε) for the convex case and

O(

p

L/µ log(1/ε)) for the µ-strongly convex one (Nesterov, 1983). Later, this acceleration

technique was extended to deal with non-differentiable penalties ψ for which the proximal

operator defined below is easy to compute (Beck and Teboulle, 2009; Nesterov, 2013).

prox

ψ

(x) , arg min

z∈R

p

ψ(z) +

1

2

kx − zk

2

, (3)

where k.k denotes the Euclidean norm.

For machine learning problems involving a large sum of n functions, a recent effort has

been devoted to developing fast incremental algorithms such as SAG (Schmidt et al., 2017),

SAGA (Defazio et al., 2014a), SDCA (Shalev-Shwartz and Zhang, 2012), SVRG (Johnson

and Zhang, 2013; Xiao and Zhang, 2014), or MISO/Finito (Mairal, 2015; Defazio et al.,

2014b), which can exploit the particular structure (2). Unlike full gradient approaches,

which require computing and averaging n gradients (1/n)

P

n

i=1

∇f

i

(x) at every iteration,

incremental techniques have a cost per-iteration that is independent of n. The price to pay is

the need to store a moderate amount of information regarding past iterates, but the benefits

may be significant in terms of computational complexity. In order to achieve an ε-accurate

solution for a µ-strongly convex objective, the number of gradient evaluations required by

the methods mentioned above is bounded by O

n +

¯

L

µ

log(

1

ε

)

, where

¯

L is either the

maximum Lipschitz constant across the gradients ∇f

i

, or the average value, depending

on the algorithm variant considered. Unless there is a big mismatch between

¯

L and L

(global Lipschitz constant for the sum of gradients), incremental approaches significantly

outperform the full gradient method, whose complexity in terms of gradient evaluations is

bounded by O

n

L

µ

log(

1

ε

)

.

2

Catalyst for First-order Convex Optimization

Yet, these incremental approaches do not use Nesterov’s extrapolation steps and whether

or not they could be accelerated was an important open question when these methods were

introduced. It was indeed only known to be the case for SDCA (Shalev-Shwartz and Zhang,

2016) for strongly convex objectives. Later, other accelerated incremental algorithms were

proposed such as Katyusha (Allen-Zhu, 2017), or the method of Lan and Zhou (2017).

We give here a positive answer to this open question. By analogy with substances that

increase chemical reaction rates, we call our approach “Catalyst”. Given an optimization

method M as input, Catalyst outputs an accelerated version of it, eventually the same algo-

rithm if the method M is already optimal. The sole requirement on the method in order to

achieve acceleration is that it should have linear convergence rate for strongly convex prob-

lems. This is the case for full gradient methods (Beck and Teboulle, 2009; Nesterov, 2013)

and block coordinate descent methods (Nesterov, 2012; Richt´arik and Tak´aˇc, 2014), which

already have well-known accelerated variants. More importantly, it also applies to the pre-

viou incremental methods, whose complexity is then bounded by

˜

O

n +

p

n

¯

L/µ

log(

1

ε

)

after Catalyst acceleration, where

˜

O hides some logarithmic dependencies on the condition

number

¯

L/µ. This improves upon the non-accelerated variants, when the condition num-

ber is larger than n. Besides, acceleration occurs regardless of the strong convexity of the

objective—that is, even if µ = 0—which brings us to our second achievement.

Some approaches such as MISO, SDCA, or SVRG are only defined for strongly convex

objectives. A classical trick to apply them to general convex functions is to add a small

regularization term εkxk

2

in the objective (Shalev-Shwartz and Zhang, 2012). The drawback

of this strategy is that it requires choosing in advance the parameter ε, which is related

to the target accuracy. The approach we present here provides a direct support for non-

strongly convex objectives, thus removing the need of selecting ε beforehand. Moreover, we

can immediately establish a faster rate for the resulting algorithm. Finally, some methods

such as MISO are numerically unstable when they are applied to strongly convex objective

functions with small strong convexity constant. By defining better conditioned auxiliary

subproblems, Catalyst also provides better numerical stability to these methods.

A short version of this paper has been published at the NIPS conference in 2015 (Lin

et al., 2015a); in addition to simpler convergence proofs and more extensive numerical evalu-

ation, we extend the conference paper with a new Moreau-Yosida smoothing interpretation

with significant theoretical and practical consequences as well as new practical stopping

criteria and warm-start strategies.

The paper is structured as follows. We complete this introductory section with some

related work in Section 1.1, and give a short description of the two-loop Catalyst algorithm

in Section 1.2. Then, Section 2 introduces the Moreau-Yosida smoothing and its inexact

variant. In Section 3, we introduce formally the main algorithm, and its convergence analysis

is presented in Section 4. Section 5 is devoted to numerical experiments and Section 6

concludes the paper.

1.1 Related Work

Catalyst can be interpreted as a variant of the proximal point algorithm (Rockafellar, 1976;

G¨uler, 1991), which is a central concept in convex optimization, underlying augmented

Lagrangian approaches, and composite minimization schemes (Bertsekas, 2015; Parikh and

3

Lin, Mairal and Harchaoui

Boyd, 2014). The proximal point algorithm consists of solving (1) by minimizing a sequence

of auxiliary problems involving a quadratic regularization term. In general, these auxiliary

problems cannot be solved with perfect accuracy, and several notions of inexactness were

proposed by G¨uler (1992); He and Yuan (2012) and Salzo and Villa (2012). The Cata-

lyst approach hinges upon (i) an acceleration technique for the proximal point algorithm

originally introduced in the pioneer work of G¨uler (1992); (ii) a more practical inexact-

ness criterion than those proposed in the past.

1

As a result, we are able to control the

rate of convergence for approximately solving the auxiliary problems with an optimization

method M. In turn, we are also able to obtain the computational complexity of the global

procedure, which was not possible with previous analysis (G¨uler, 1992; He and Yuan, 2012;

Salzo and Villa, 2012). When instantiated in different first-order optimization settings, our

analysis yields systematic acceleration.

Beyond G¨uler (1992), several works have inspired this work. In particular, accelerated

SDCA (Shalev-Shwartz and Zhang, 2016) is an instance of an inexact accelerated proximal

point algorithm, even though this was not explicitly stated in the original paper. Catalyst

can be seen as a generalization of their algorithm, originally designed for stochastic dual

coordinate ascent approaches. Yet their proof of convergence relies on different tools than

ours. Specifically, we introduce an approximate sufficient descent condition, which, when

satisfied, grants acceleration to any optimization method, whereas the direct proof of Shalev-

Shwartz and Zhang (2016), in the context of SDCA, does not extend to non-strongly convex

objectives. Another useful methodological contribution was the convergence analysis of

inexact proximal gradient methods of Schmidt et al. (2011) and Devolder et al. (2014).

Finally, similar ideas appeared in the independent work (Frostig et al., 2015). Their results

partially overlap with ours, but the two papers adopt rather different directions. Our

analysis is more general, covering both strongly-convex and non-strongly convex objectives,

and comprises several variants including an almost parameter-free variant.

Then, beyond accelerated SDCA (Shalev-Shwartz and Zhang, 2016), other accelerated

incremental methods have been proposed, such as APCG (Lin et al., 2015b), SDPC (Zhang

and Xiao, 2015), RPDG (Lan and Zhou, 2017), Point-SAGA (Defazio, 2016) and Katyusha

(Allen-Zhu, 2017). Their techniques are algorithm-specific and cannot be directly general-

ized into a unified scheme. However, we should mention that the complexity obtained by

applying Catalyst acceleration to incremental methods matches the optimal bound up to a

logarithmic factor, which may be the price to pay for a generic acceleration scheme.

A related recent line of work has also combined smoothing techniques with outer-loop

algorithms such as Quasi-Newton methods (Themelis et al., 2016; Giselsson and F¨alt, 2016).

Their purpose was not to accelerate existing techniques, but rather to derive new algorithms

for nonsmooth optimization.

To conclude this survey, we mention the broad family of extrapolation methods (Sidi,

2017), which allow one to extrapolate to the limit sequences generated by iterative al-

gorithms for various numerical analysis problems. Scieur et al. (2016) proposed such an

approach for convex optimization problems with smooth and strongly convex objectives.

The approach we present here allows us to obtain global complexity bounds for strongly

1. Note that our inexact criterion was also studied, among others, by Salzo and Villa (2012), but their

analysis led to the conjecture that this criterion was too weak to warrant acceleration. Our analysis

refutes this conjecture.

4

Catalyst for First-order Convex Optimization

Algorithm 1 Catalyst - Overview

input initial estimate x

0

in R

p

, smoothing parameter κ, optimization method M.

1: Initialize y

0

= x

0

.

2: while the desired accuracy is not achieved do

3: Find x

k

using M

x

k

≈ arg min

x∈R

p

n

h

k

(x) , f (x) +

κ

2

kx − y

k−1

k

2

o

. (4)

4: Compute y

k

using an extrapolation step, with β

k

in (0, 1)

y

k

= x

k

+ β

k

(x

k

− x

k−1

).

5: end while

output x

k

(final estimate).

convex and non strongly convex objectives, which can be decomposed into a smooth part

and a non-smooth proximal-friendly part.

1.2 Overview of Catalyst

Before introducing Catalyst precisely in Section 3, we give a quick overview of the algo-

rithm and its main ideas. Catalyst is a generic approach that wraps an algorithm M into

an accelerated one A, in order to achieve the same accuracy as M with reduced computa-

tional complexity. The resulting method A is an inner-outer loop construct, presented in

Algorithm 1, where in the inner loop the method M is called to solve an auxiliary strongly-

convex optimization problem, and where in the outer loop the sequence of iterates produced

by M are extrapolated for faster convergence. There are therefore three main ingredients

in Catalyst: a) a smoothing technique that produces strongly-convex sub-problems; b) an

extrapolation technique to accelerate the convergence; c) a balancing principle to optimally

tune the inner and outer computations.

Smoothing by infimal convolution Catalyst can be used on any algorithm M that

enjoys a linear-convergence guarantee when minimizing strongly-convex objectives. How-

ever the objective at hand may be poorly conditioned or even might not be strongly convex.

In Catalyst, we use M to approximately minimize an auxiliary objective h

k

at iteration k,

defined in (4), which is strongly convex and better conditioned than f. Smoothing by

infimal convolution allows one to build a well-conditioned convex function F from a poorly-

conditioned convex function f (see Section 3 for a refresher on Moreau envelopes). We

shall show in Section 3 that a notion of approximate Moreau envelope allows us to define

precisely the information collected when approximately minimizing the auxiliary objective.

Extrapolation by Nesterov acceleration Catalyst uses an extrapolation scheme “ `a

la Nesterov ” to build a sequence (y

k

)

k≥0

updated as

y

k

= x

k

+ β

k

(x

k

− x

k−1

) ,

where (β

k

)

k≥0

is a positive decreasing sequence, which we shall define in Section 3.

5

Lin, Mairal and Harchaoui

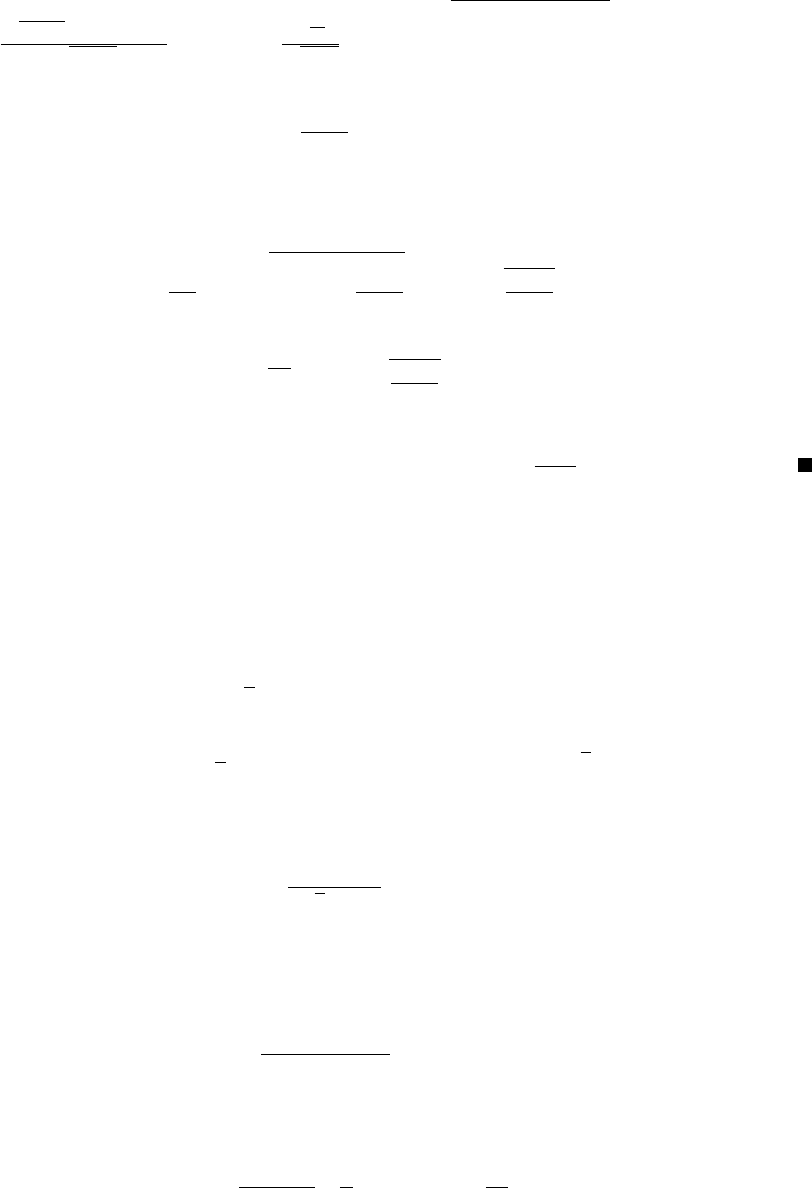

Without Catalyst With Catalyst

µ > 0 µ = 0 µ > 0 µ = 0

FG O

n

L

µ

log

1

ε

O

n

L

ε

˜

O

n

q

L

µ

log

1

ε

˜

O

n

q

L

ε

SAG/SAGA

O

n +

¯

L

µ

log

1

ε

O

n

¯

L

ε

˜

O

n +

q

n

¯

L

µ

log

1

ε

˜

O

q

n

¯

L

ε

MISO

not avail.

SDCA

SVRG

Acc-FG O

n

q

L

µ

log

1

ε

O

n

L

√

ε

no acceleration

Acc-SDCA

˜

O

n +

q

n

¯

L

µ

log

1

ε

not avail.

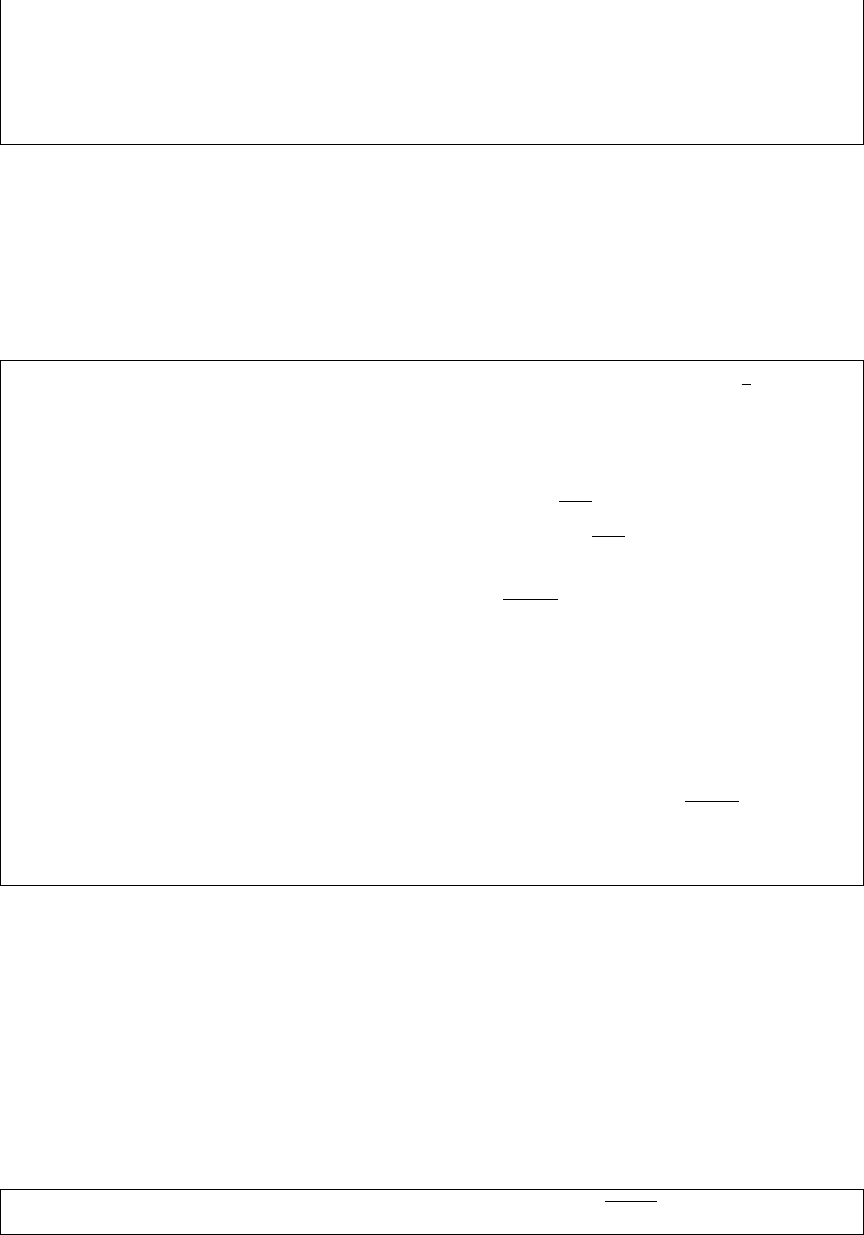

Table 1: Comparison of rates of convergence, before and after the Catalyst acceleration, in

the strongly-convex and non strongly-convex cases, respectively. The notation

˜

O

hides logarithmic factors. The constant L is the global Lipschitz constant of the

gradient’s objective, while

¯

L is the average Lipschitz constants of the gradients

∇f

i

, or the maximum value, depending on the algorithm’s variants considered.

We shall show in Section 4 that we can get faster rates of convergence thanks to this

extrapolation step when the smoothing parameter κ, the inner-loop stopping criterion, and

the sequence (β

k

)

k≥0

are carefully built.

Balancing inner and outer complexities The optimal balance between inner loop and

outer loop complexity derives from the complexity bounds established in Section 4. Given

an estimate about the condition number of f, our bounds dictate a choice of κ that gives the

optimal setting for the inner-loop stopping criterion and all technical quantities involved in

the algorithm. We shall demonstrate in particular the power of an appropriate warm-start

strategy to achieve near-optimal complexity.

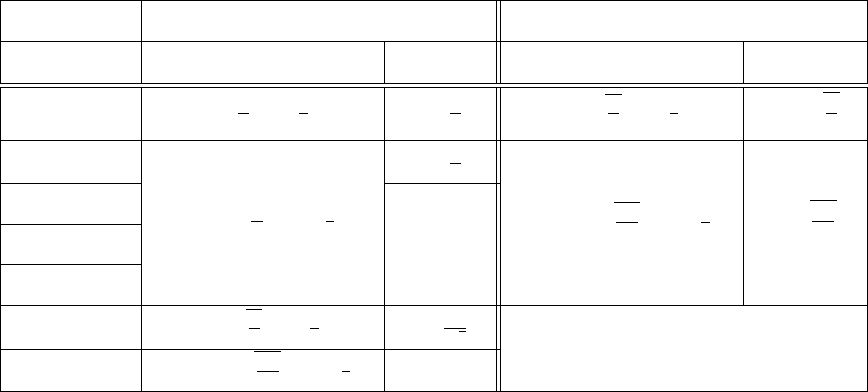

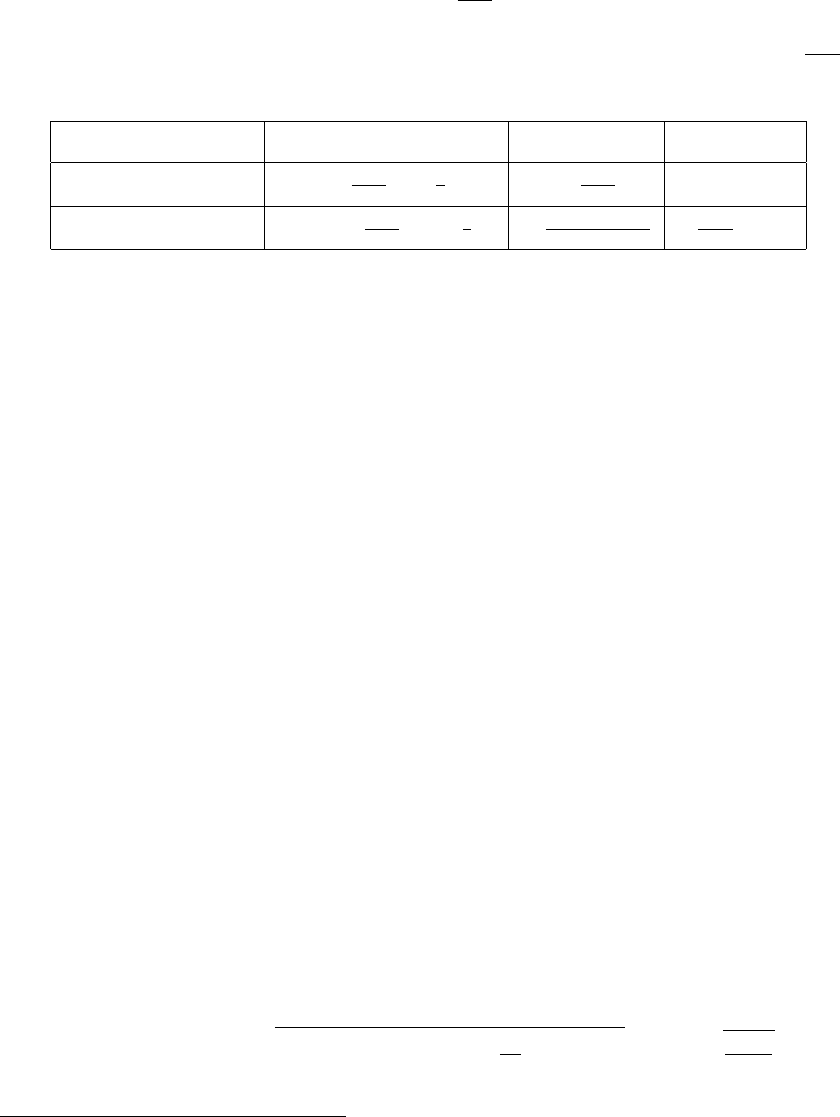

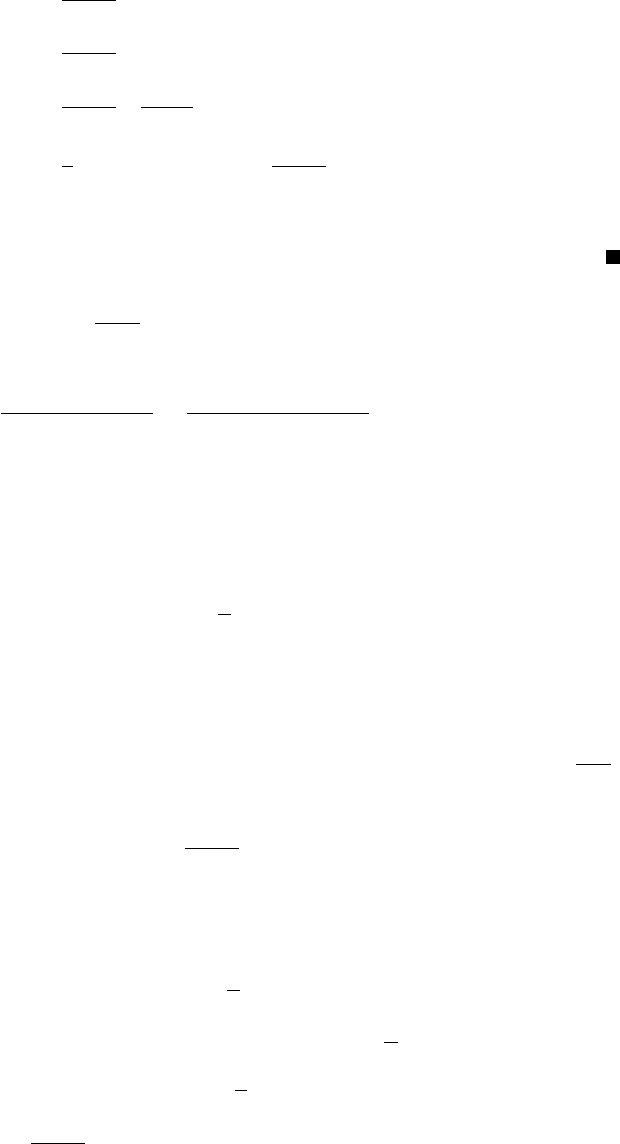

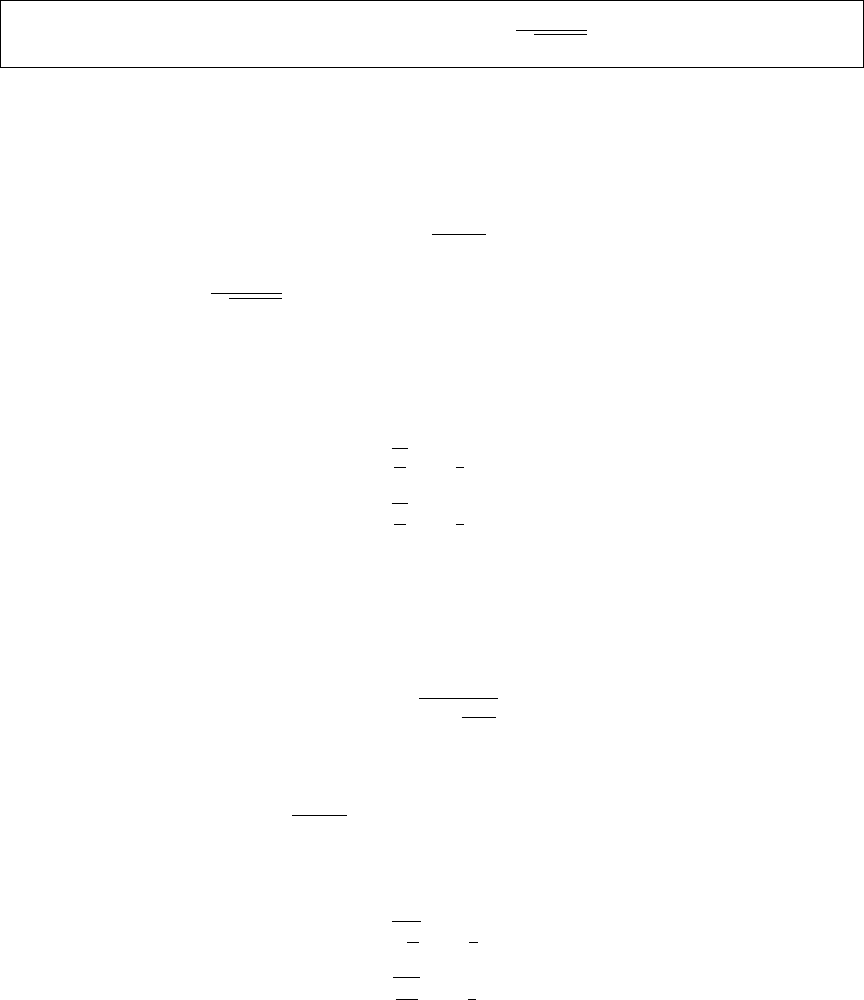

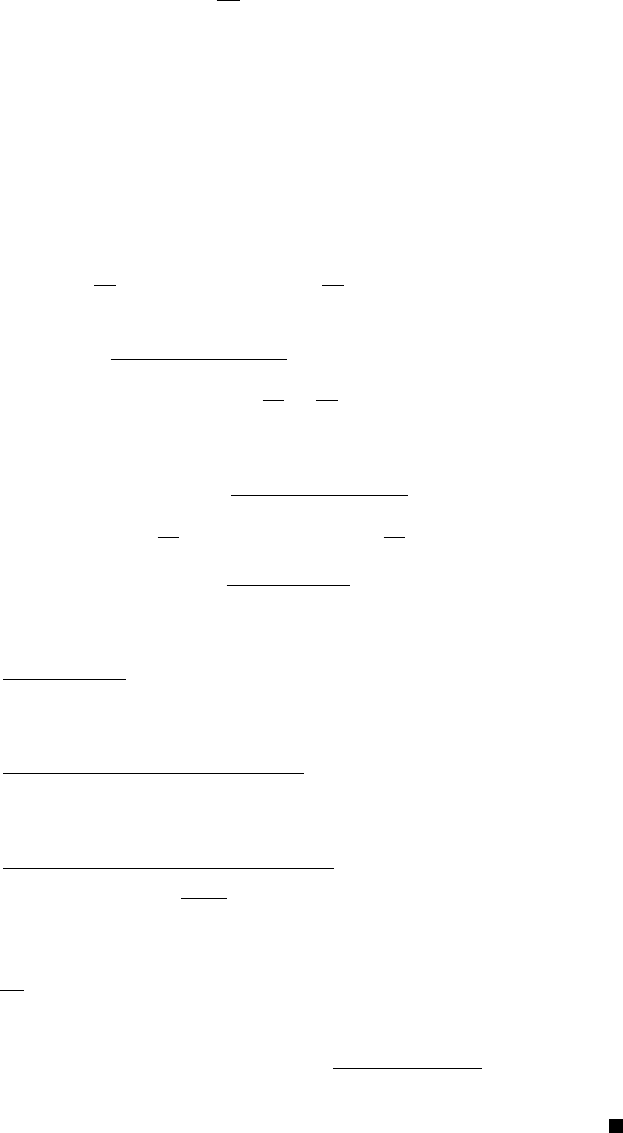

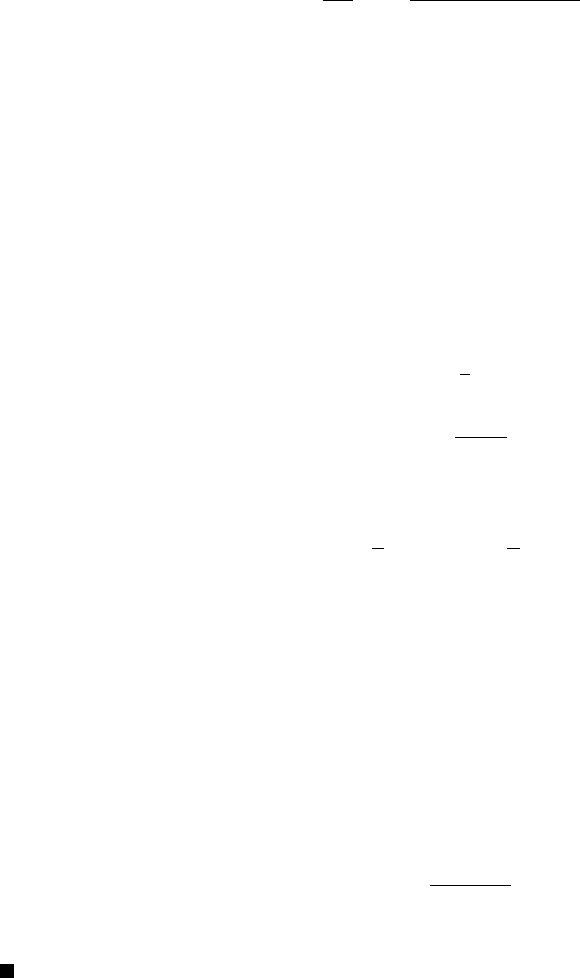

Overview of the complexity results Finally, we provide in Table 1 a brief overview

of the complexity results obtained from the Catalyst acceleration, when applied to various

optimization methods M for minimizing a large finite sum of n functions. Note that the

complexity results obtained with Catalyst are optimal, up to some logarithmic factors (see

Agarwal and Bottou, 2015; Arjevani and Shamir, 2016; Woodworth and Srebro, 2016).

2. The Moreau Envelope and its Approximate Variant

In this section, we recall a classical tool from convex analysis called the Moreau envelope

or Moreau-Yosida smoothing (Moreau, 1962; Yosida, 1980), which plays a key role for

understanding the Catalyst acceleration. This tool can be seen as a smoothing technique,

6

Catalyst for First-order Convex Optimization

which can turn any convex lower semicontinuous function f into a smooth function, and an

ill-conditioned smooth convex function into a well-conditioned smooth convex function.

The Moreau envelope results from the infimal convolution of f with a quadratic penalty:

F (x) , min

z∈R

p

n

f(z) +

κ

2

kz − xk

2

o

, (5)

where κ is a positive regularization parameter. The proximal operator is then the unique

minimizer of the problem—that is,

p(x) , prox

f/κ

(x) = arg min

z∈R

p

n

f(z) +

κ

2

kz − xk

2

o

.

Note that p(x) does not admit a closed form in general. Therefore, computing it requires

to solve the sub-problem to high accuracy with some iterative algorithm.

2.1 Basic Properties of the Moreau Envelope

The smoothing effect of the Moreau regularization can be characterized by the next propo-

sition (see Lemar´echal and Sagastiz´abal, 1997, for elementary proofs).

Proposition 1 (Regularization properties of the Moreau Envelope) Given a con-

vex continuous function f and a regularization parameter κ > 0, consider the Moreau

envelope F defined in (5). Then,

1. F is convex and minimizing f and F are equivalent in the sense that

min

x∈R

p

F (x) = min

x∈R

p

f(x) .

Moreover the solution set of the two above problems coincide with each other.

2. F is continuously differentiable even when f is not and

∇F (x) = κ(x − p(x)) . (6)

Moreover the gradient ∇F is Lipschitz continuous with constant L

F

= κ.

3. If f is µ-strongly convex, then F is µ

F

-strongly convex with µ

F

=

µκ

µ+κ

.

Interestingly, F is friendly from an optimization point of view as it is convex and differen-

tiable. Besides, F is κ-smooth with condition number

µ+κ

µ

when f is µ-strongly convex.

Thus F can be made arbitrarily well conditioned by choosing a small κ. Since both functions

f and F admit the same solutions, a naive approach to minimize a non-smooth function f

is to first construct its Moreau envelope F and then apply a smooth optimization method

on it. As we will see next, Catalyst can be seen as an accelerated gradient descent technique

applied to F with inexact gradients.

2.2 A Fresh Look at Catalyst

First-order methods applied to F provide us several well-known algorithms.

7

Lin, Mairal and Harchaoui

The proximal point algorithm. Consider gradient descent steps on F :

x

k+1

= x

k

−

1

L

F

∇F (x

k

).

By noticing that ∇F (x

k

) = κ(x

k

− p(x

k

)) and L

f

= κ, we obtain in fact

x

k+1

= p(x

k

) = arg min

z∈R

p

n

f(z) +

κ

2

kz − x

k

k

2

o

,

which is exactly the proximal point algorithm (Martinet, 1970; Rockafellar, 1976).

Accelerated proximal point algorithm. If gradient descent steps on F yields the

proximal point algorithm, it is then natural to consider the following sequence

x

k+1

= y

k

−

1

L

F

∇F (y

k

) and y

k+1

= x

k+1

+ β

k+1

(x

k+1

− x

k

),

where β

k+1

is Nesterov’s extrapolation parameter (Nesterov, 2004). Again, by using the

closed form of the gradient, this is equivalent to the update

x

k+1

= p(y

k

) and y

k+1

= x

k+1

+ β

k+1

(x

k+1

− x

k

),

which is known as the accelerated proximal point algorithm of G¨uler (1992).

While these algorithms are conceptually elegant, they suffer from a major drawback

in practice: each update requires to evaluate the proximal operator p(x). Unless a closed

form is available, which is almost never the case, we are not able to evaluate p(x) exactly.

Hence an iterative algorithm is required for each evaluation of the proximal operator which

leads to the inner-outer construction (see Algorithm 1). Catalyst can then be interpreted as

an accelerated proximal point algorithm that calls an optimization method M to compute

inexact solutions to the sub-problems. The fact that such a strategy could be used to solve

non-smooth optimization problems was well-known, but the fact that it could be used for

acceleration is more surprising. The main challenge that will be addressed in Section 3 is

how to control the complexity of the inner-loop minimization.

2.3 The Approximate Moreau Envelope

Since Catalyst uses inexact gradients of the Moreau envelope, we start with specifying the

inexactness criteria.

Inexactness through absolute accuracy. Given a proximal center x, a smoothing

parameter κ, and an accuracy ε>0, we denote the set of ε-approximations of the proximal

operator p(x) by

p

ε

(x) , {z ∈ R

p

s.t. h(z) − h

∗

≤ ε} where h(z) = f(z) +

κ

2

kx − zk

2

, (C1)

and h

∗

is the minimum function value of h.

Checking whether h(z) − h

∗

≤ ε may be impactical since h

∗

is unknown in many sit-

uations. We may then replace h

∗

by a lower bound that can be computed more easily.

We may use the Fenchel conjugate for instance. Then, given a point z and a lower-bound

8

Catalyst for First-order Convex Optimization

d(z) ≤ h

∗

, we can guarantee z ∈ p

ε

(x) if h(z) − d(z) ≤ ε. There are other choices for the

lower bounding function d which result from the specific construction of the optimization

algorithm. For instance, dual type algorithms such as SDCA (Shalev-Shwartz and Zhang,

2012) or MISO (Mairal, 2015) maintain a lower bound along the iterations, allowing one to

compute h(z) −d(z) ≤ ε.

When none of the options mentioned above are available, we can use the following fact,

based on the notion of gradient mapping; see Section 2.3.2 of (Nesterov, 2004). The intuition

comes from the smooth case: when h is smooth, the strong convexity yields

h(z) −

1

2κ

k∇h(z)k

2

≤ h

∗

.

In other words, the norm of the gradient provides enough information to assess how far

we are from the optimum. From this perspective, the gradient mapping can be seen as an

extension of the gradient for the composite case where the objective decomposes as a sum

of a smooth part and a non-smooth part (Nesterov, 2004).

Lemma 2 (Checking the absolute accuracy criterion) Consider a proximal center x,

a smoothing parameter κ and an accuracy ε > 0. Consider an objective with the composite

form (1) and we set function h as

h(z) = f (z) +

κ

2

kx − zk

2

= f

0

(z) +

κ

2

kx − zk

2

| {z }

, h

0

+ψ(x).

For any z ∈ R

p

, we define

[z]

η

= prox

ηψ

(z − η∇h

0

(z)) , with η =

1

κ + L

. (7)

Then, the gradient mapping of h at z is defined by

1

η

(z − [z]

η

) and

1

η

kz − [z]

η

k ≤

√

2κε implies [z]

η

∈ p

ε

(x).

The proof is given in Appendix B. The lemma shows that it is sufficient to check the norm

of the gradient mapping to ensure condition (C1). However, this requires an additional full

gradient step and proximal step at each iteration.

As soon as we have an approximate proximal operator z in p

ε

(x) in hand, we can define

an approximate gradient of the Moreau envelope,

g(z) , κ(x − z), (8)

by mimicking the exact gradient formula ∇F (x) = κ(x − p(x)). As a consequence, we may

immediately draw a link

z ∈ p

ε

(x) =⇒ kz − p(x)k ≤

r

2ε

κ

⇐⇒ kg(z) − ∇F (x)k ≤

√

2κε, (9)

where the first implication is a consequence of the strong convexity of h at its minimum p(x).

We will then apply the approximate gradient g instead of ∇F to build the inexact proximal

point algorithm. Since the inexactness of the approximate gradient can be bounded by an

absolute value

√

2κε, we call (C1) the absolute accuracy criterion.

9

Lin, Mairal and Harchaoui

Relative error criterion. Another natural way to bound the gradient approximation is

by using a relative error, namely in the form kg(z) −∇F (x)k ≤ δ

0

k∇F (x)k for some δ

0

> 0.

This leads us to the following inexactness criterion.

Given a proximal center x, a smoothing parameter κ and a relative accuracy δ in [0, 1),

we denote the set of δ-relative approximations by

g

δ

(x) ,

z ∈ R

p

s.t. h(z) − h

∗

≤

δκ

2

kx − zk

2

, (C2)

At a first glance, we may interpret the criterion (C2) as (C1) by setting ε =

δκ

2

kx − zk

2

.

But we should then notice that the accuracy depends on the point z, which is is no longer

an absolute constant. In other words, the accuracy varies from point to point, which is

proportional to the squared distance between z and x. First one may wonder whether g

δ

(x)

is an empty set. Indeed, it is easy to see that p(x) ∈ g

δ

(x) since h(p(x)) − h

∗

= 0 ≤

δκ

2

kx −p(x)k

2

. Moreover, by continuity, g

δ

(x) is closed set around p(x). Then, by following

similar steps as in (9), we have

z ∈ g

δ

(x) =⇒ kz − p(x)k ≤

√

δkx − zk ≤

√

δ(kx − p(x)k + kp(x) −zk).

By defining the approximate gradient in the same way g(z) = κ(x − z) yields,

z ∈ g

δ

(x) =⇒ kg(z) − ∇F (x)k ≤ δ

0

k∇F (x)k with δ

0

=

√

δ

1 −

√

δ

,

which is the desired relative gradient approximation.

Finally, the discussion about bounding h(z)−h

∗

still holds here. In particular, Lemma 2

may be used by setting the value ε =

δκ

2

kx−zk

2

. The price to pay is as an additional gradient

step and an additional proximal step per iteration.

A few remarks on related works. Inexactness criteria with respect to subgradient

norms have been investigated in the past, starting from the pioneer work of Rockafel-

lar (1976) in the context of the inexact proximal point algorithm. Later, different works

have been dedicated to more practical inexactness criteria (Auslender, 1987; Correa and

Lemar´echal, 1993; Solodov and Svaiter, 2001; Fuentes et al., 2012). These criteria include

duality gap, ε-subdifferential, or decrease in terms of function value. Here, we present a

more intuitive point of view using the Moreau envelope.

While the proximal point algorithm has caught a lot of attention, very few works have

focused on its accelerated variant. The first accelerated proximal point algorithm with

inexact gradients was proposed by G¨uler (1992). Then, Salzo and Villa (2012) proposed

a more rigorous convergence analysis, and more inexactness criteria, which are typically

stronger than ours. In the same way, a more general inexact oracle framework has been

proposed later by Devolder et al. (2014). To achieve the Catalyst acceleration, our main

effort was to propose and analyze criteria that allow us to control the complexity for finding

approximate solutions of the sub-problems.

3. Catalyst Acceleration

Catalyst is presented in Algorithm 2. As discussed in Section 2, this scheme can be inter-

preted as an inexact accelerated proximal point algorithm, or equivalently as an accelerated

10

Catalyst for First-order Convex Optimization

gradient descent method applied to the Moreau envelope of the objective with inexact gradi-

ents. Since an overview has already been presented in Section 1.2, we now present important

details to obtain acceleration in theory and in practice.

Algorithm 2 Catalyst

input Initial estimate x

0

in R

p

, smoothing parameter κ, strong convexity parameter µ,

optimization method M and a stopping criterion based on a sequence of accuracies

(ε

k

)

k≥0

, or (δ

k

)

k≥0

, or a fixed budget T .

1: Initialize y

0

= x

0

, q =

µ

µ+κ

. If µ > 0, set α

0

=

√

q, otherwise α

0

= 1.

2: while the desired accuracy is not achieved do

3: Compute an approximate solution of the following problem with M

x

k

≈ arg min

x∈R

p

n

h

k

(x) , f (x) +

κ

2

kx − y

k−1

k

2

o

,

using the warm-start strategy of Section 3 and one of the following stopping criteria:

(a) absolute accuracy: find x

k

in p

ε

k

(y

k−1

) by using criterion (C1);

(b) relative accuracy: find x

k

in g

δ

k

(y

k−1

) by using criterion (C2);

(c) fixed budget: run M for T iterations and output x

k

.

4: Update α

k

in (0, 1) by solving the equation

α

2

k

= (1 − α

k

)α

2

k−1

+ qα

k

. (10)

5: Compute y

k

with Nesterov’s extrapolation step

y

k

= x

k

+ β

k

(x

k

− x

k−1

) with β

k

=

α

k−1

(1 − α

k−1

)

α

2

k−1

+ α

k

. (11)

6: end while

output x

k

(final estimate).

Requirement: linear convergence of the method M. One of the main characteristic

of Catalyst is to apply the method M to strongly-convex sub-problems, without requiring

strong convexity of the objective f. As a consequence, Catalyst provides direct support

for convex but non-strongly convex objectives to M, which may be useful to extend the

scope of application of techniques that need strong convexity to operate. Yet, Catalyst

requires solving these sub-problems efficiently enough in order to control the complexity of

the inner-loop computations. When applying M to minimize a strongly-convex function h,

we assume that M is able to produce a sequence of iterates (z

t

)

t≥0

such that

h(z

t

) − h

∗

≤ C

M

(1 − τ

M

)

t

(h(z

0

) − h

∗

), (12)

where z

0

is the initial point given to M, and τ

M

in (0, 1), C

M

> 0 are two constants. In such

a case, we say that M admits a linear convergence rate. The quantity τ

M

controls the speed

11

Lin, Mairal and Harchaoui

of convergence for solving the sub-problems: the larger is τ

M

, the faster is the convergence.

For a given algorithm M, the quantity τ

M

depends usually on the condition number of h.

For instance, for the proximal gradient method and many first-order algorithms, we simply

have τ

M

= O((µ+κ)/(L+κ)), as h is (µ+κ)-strongly convex and (L+κ)-smooth. Catalyst

can also be applied to randomized methods M that satisfy (12) in expectation:

E[h(z

t

) − h

∗

] ≤ C

M

(1 − τ

M

)

t

(h(z

0

) − h

∗

), (13)

Then, the complexity results of Section 4 also hold in expectation. This allows us to apply

Catalyst to randomized block coordinate descent algorithms (see Richt´arik and Tak´aˇc, 2014,

and references therein), and some incremental algorithms such as SAG, SAGA, or SVRG.

For other methods that admit a linear convergence rates in terms of duality gap, such as

SDCA, MISO/Finito, Catalyst can also be applied as explained in Appendix C.

Stopping criteria. Catalyst may be used with three types of stopping criteria for solving

the inner-loop problems. We now detail them below.

(a) absolute accuracy: we predefine a sequence (ε

k

)

k≥0

of accuracies, and stop the

method M by using the absolute stopping criterion (C1). Our analysis suggests

– if f is µ-strongly convex,

ε

k

=

1

2

(1 − ρ)

k

(f(x

0

) − f

∗

) with ρ <

√

q .

– if f is convex but not strongly convex,

ε

k

=

f(x

0

) − f

∗

2(k + 2)

4+γ

with γ > 0 .

Typically, γ = 0.1 and ρ = 0.9

√

q are reasonable choices, both in theory and in

practice. Of course, the quantity f(x

0

) − f

∗

is unknown and we need to upper

bound it by a duality gap or by Lemma 2 as discussed in Section 2.3.

(b) relative accuracy: To use the relative stopping criterion (C2), our analysis suggests

the following choice for the sequence (δ

k

)

k≥0

:

– if f is µ-strongly convex,

δ

k

=

√

q

2 −

√

q

.

– if f is convex but not strongly convex,

δ

k

=

1

(k + 1)

2

.

(c) fixed budget: Finally, the simplest way of using Catalyst is to fix in advance the

number T of iterations of the method M for solving the sub-problems without

12

Catalyst for First-order Convex Optimization

checking any optimality criterion. Whereas our analysis provides theoretical bud-

gets that are compatible with this strategy, we found them to be pessimistic and

impractical. Instead, we propose an aggressive strategy for incremental methods

that simply consists of setting T = n. This setting was called the “one-pass”

strategy in the original Catalyst paper (Lin et al., 2015a).

Warm-starts in inner loops. Besides linear convergence rate, an adequate warm-start

strategy needs to be used to guarantee that the sub-problems will be solved in reasonable

computational time. The intuition is that the previous solution may still be a good ap-

proximation of the current subproblem. Specifically, the following choices arise from the

convergence analysis that will be detailed in Section 4.

Consider the minimization of the (k + 1)-th subproblem h

k+1

(z) = f(z) +

κ

2

kz − y

k

k

2

,

we warm start the optimization method M at z

0

as following:

(a) when using criterion (C1) to find x

k+1

in p

ε

k

(y

k

),

– if f is smooth (ψ = 0), then choose z

0

= x

k

+

κ

κ+µ

(y

k

− y

k−1

).

– if f is composite as in (1), then define w

0

= x

k

+

κ

κ+µ

(y

k

− y

k−1

) and

z

0

= [w

0

]

η

= prox

ηψ

(w

0

− ηg) with η =

1

L + κ

and g = ∇f

0

(w

0

)+κ(w

0

−y

k

).

(b) when using criteria (C2) to find x

k+1

in g

δ

k

(y

k

),

– if f is smooth (ψ = 0), then choose z

0

= y

k

.

– if f is composite as in (1), then choose

z

0

= [y

k

]

η

= prox

ηψ

(y

k

− η∇f

0

(y

k

)) with η =

1

L + κ

.

(c) when using a fixed budget T , choose the same warm start strategy as in (b).

Note that the earlier conference paper (Lin et al., 2015a) considered the the warm start

rule z

0

= x

k−1

. That variant is also theoretically validated but it does not perform as well

as the ones proposed here in practice.

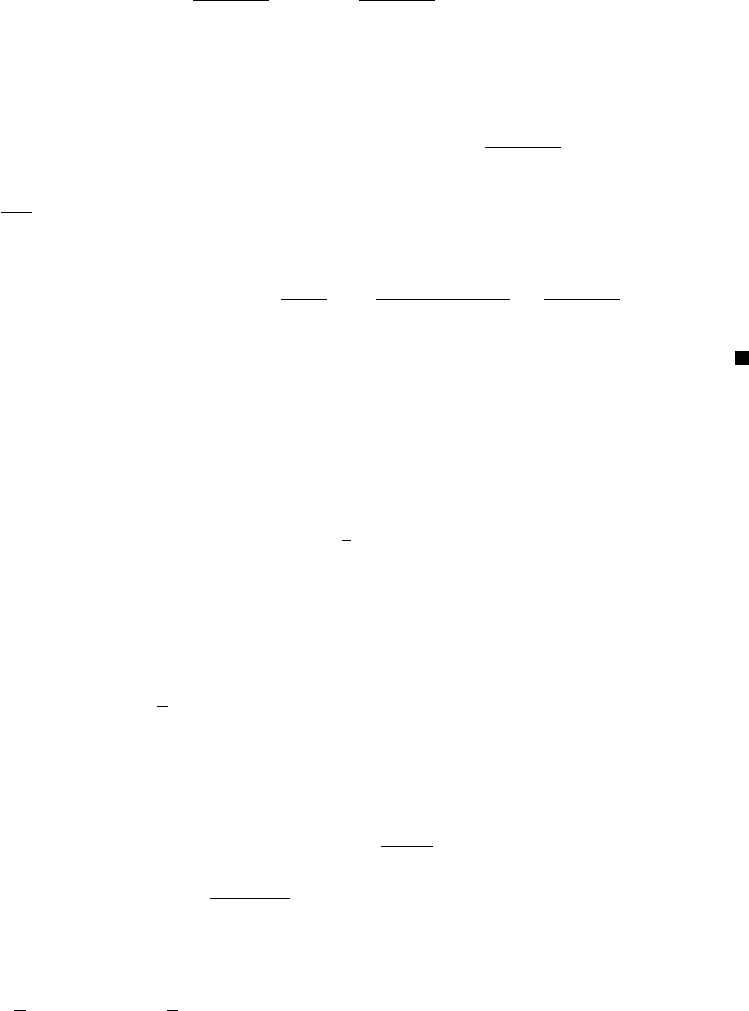

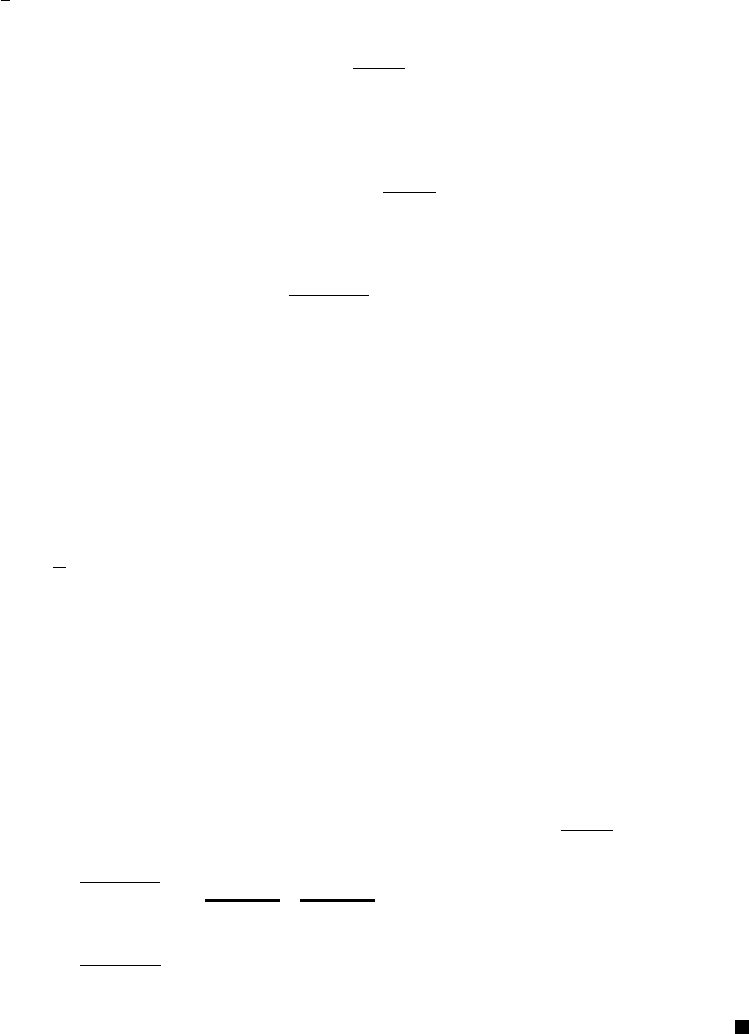

Optimal balance: choice of parameter κ. Finally, the last ingredient is to find an

optimal balance between the inner-loop (for solving each sub-problem) and outer-loop com-

putations. To do so, we minimize our global complexity bounds with respect to the value

of κ. As we shall see in Section 5, this strategy turns out to be reasonable in practice.

Then, as shown in the theoretical section, the resulting rule of thumb is

We select κ by maximizing the ratio τ

M

/

√

µ + κ.

13

Lin, Mairal and Harchaoui

We recall that τ

M

characterizes how fast M solves the sub-problems, according to (12);

typically, τ

M

depends on the condition number

L+κ

µ+κ

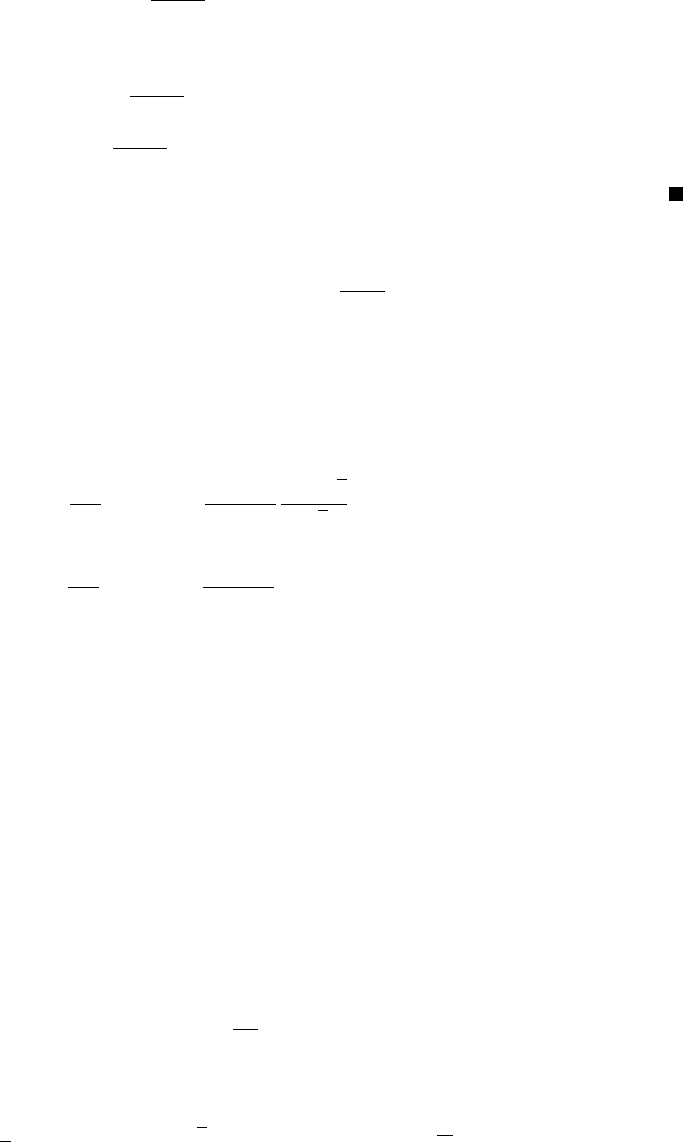

and is a function of κ.

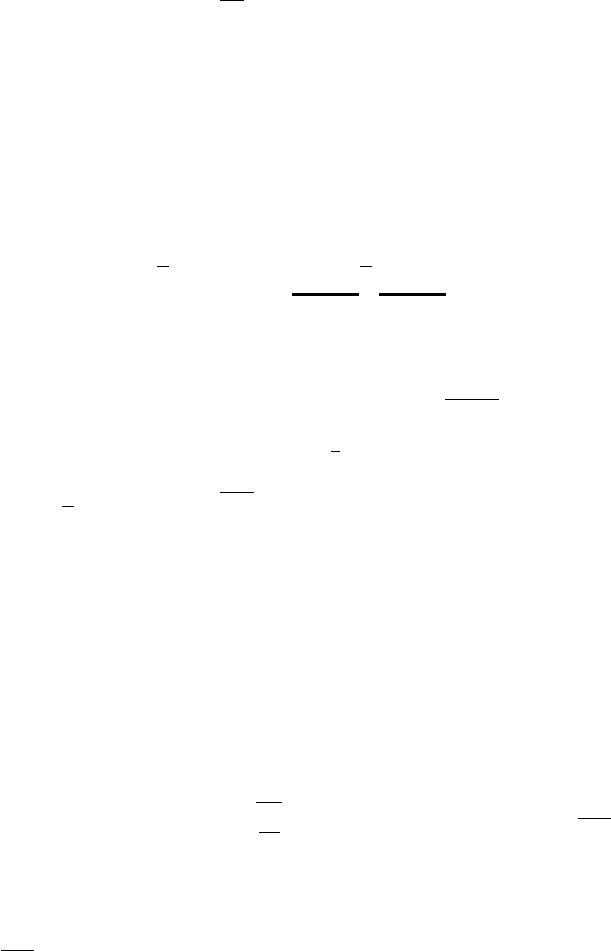

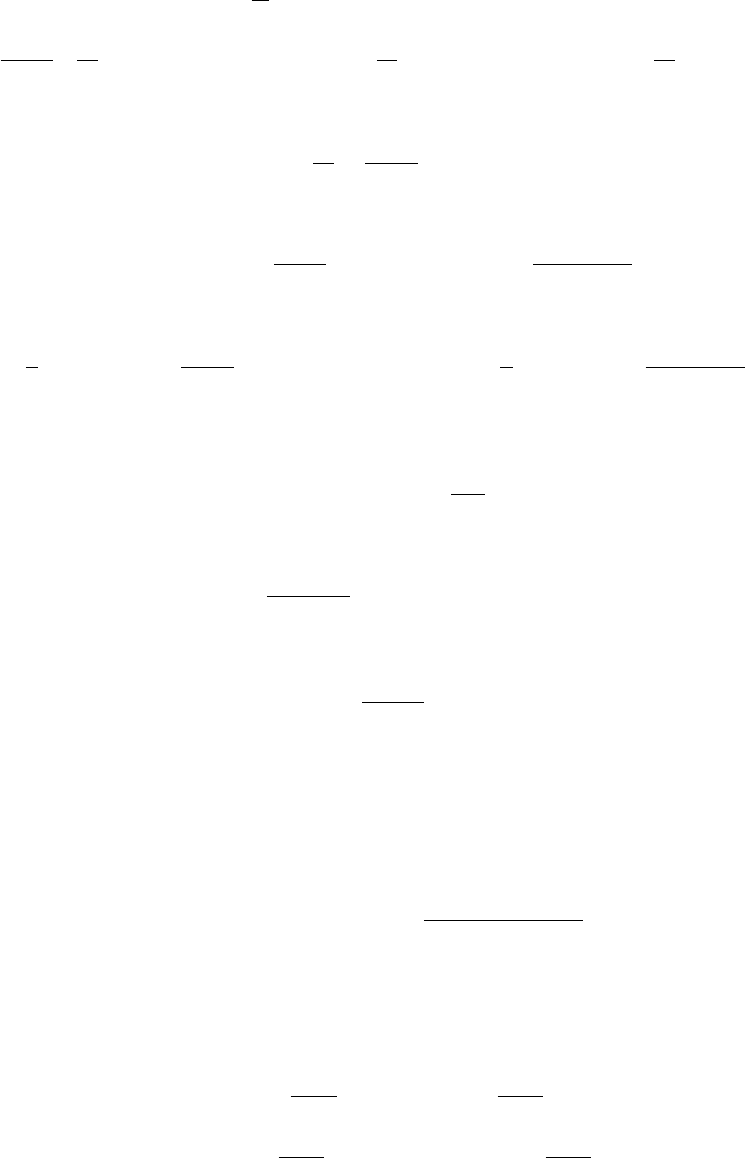

2

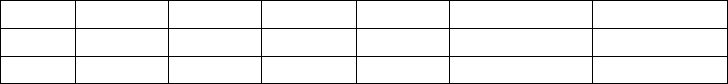

In Table 2, we

illustrate the choice of κ for different methods. Note that the resulting rule for incremental

methods is very simple for the pracitioner: select κ such that the condition number

¯

L+κ

µ+κ

is

of the order of n; then, the inner-complexity becomes O(n log(1/ε)).

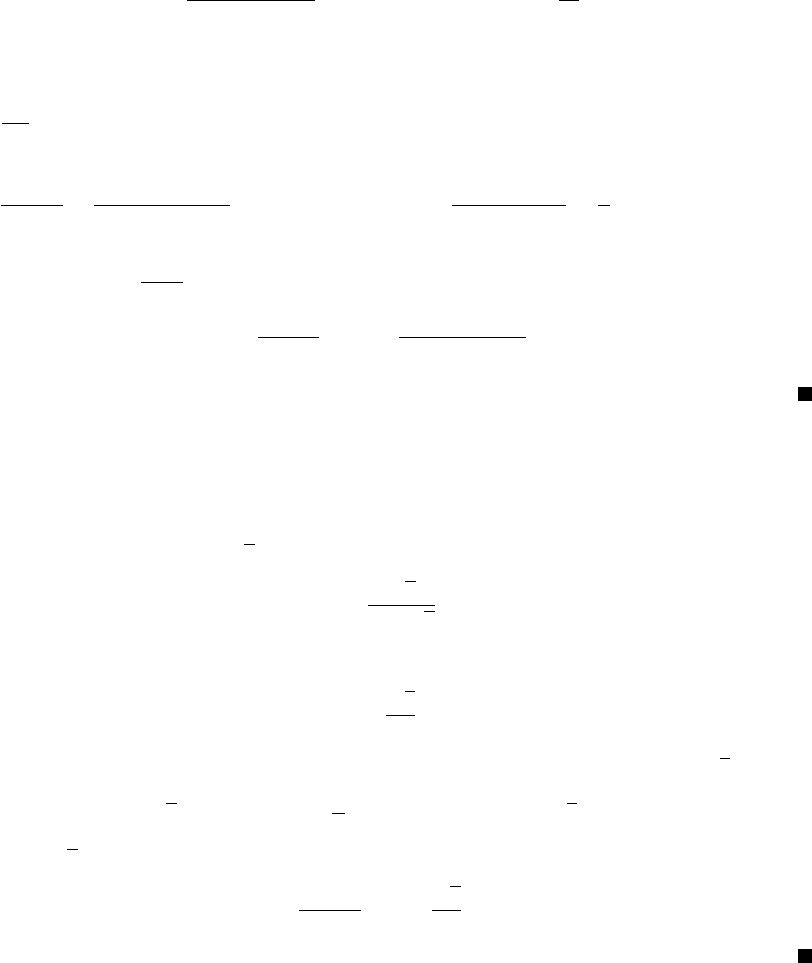

Method M Inner-complexity τ

M

Choice for κ

FG O

n

L+κ

µ+κ

log

1

ε

∝

µ+κ

L+κ

L − 2µ

SAG/SAGA/SVRG O

n +

¯

L+κ

µ+κ

log

1

ε

∝

µ+κ

n(µ+κ)+

¯

L+κ

¯

L−µ

n+1

− µ

Table 2: Example of choices of the parameter κ for the full gradient (FG) and incremental

methods SAG/SAGA/SVRG. See Table 1 for details about the complexity.

4. Convergence and Complexity Analysis

We now present the complexity analysis of Catalyst. In Section 4.1, we analyze the con-

vergence rate of the outer loop, regardless of the complexity for solving the sub-problems.

Then, we analyze the complexity of the inner-loop computations for our various stopping

criteria and warm-start strategies in Section 4.2. Section 4.3 combines the outer- and inner-

loop analysis to provide the global complexity of Catalyst applied to a given optimization

method M.

4.1 Complexity Analysis for the Outer-Loop

The complexity analysis of the first variant of Catalyst we presented in (Lin et al., 2015a)

used a tool called “estimate sequence”, which was introduced by Nesterov (2004). Here, we

provide a simpler proof. We start with criterion (C1), before extending the result to (C2).

4.1.1 Analysis for Criterion (C1)

The next theorem describes how the errors (ε

k

)

k≥0

accumulate in Catalyst.

Theorem 3 (Convergence of outer-loop for criterion (C1)) Consider the sequences

(x

k

)

k≥0

and (y

k

)

k≥0

produced by Algorithm 2, assuming that x

k

is in p

ε

k

(y

k−1

) for all k ≥ 1,

Then,

f(x

k

) − f

∗

≤ A

k−1

r

(1 − α

0

)(f(x

0

) − f

∗

) +

γ

0

2

kx

∗

− x

0

k

2

+ 3

k

X

j=1

r

ε

j

A

j−1

2

,

2. Note that the rule for the non strongly convex case, denoted here by µ = 0, slightly differs from Lin

et al. (2015a) and results from a tighter complexity analysis.

14

Catalyst for First-order Convex Optimization

where

γ

0

= (κ + µ)α

0

(α

0

− q) and A

k

=

k

Y

j=1

(1 − α

j

) with A

0

= 1 . (14)

Before we prove this theorem, we note that by setting ε

k

= 0 for all k, the speed of

convergence of f (x

k

) −f

∗

is driven by the sequence (A

k

)

k≥0

. Thus we first show the speed

of A

k

by recalling the Lemma 2.2.4 of Nesterov (2004).

Lemma 4 (Lemma 2.2.4 of Nesterov 2004) Consider the quantities γ

0

, A

k

defined in (14)

and the α

k

’s defined in Algorithm 2. Then, if γ

0

≥ µ,

A

k

≤ min

(1 −

√

q)

k

,

4

2 + k

q

γ

0

κ

2

.

For non-strongly convex objectives, A

k

follows the classical accelerated O(1/k

2

) rate of

convergence, whereas it achieves a linear convergence rate for the strongly convex case.

Intuitively, we are applying an inexact Nesterov method on the Moreau envelope F , thus

the convergence rate naturally depends on the inverse of its condition number, which is

q =

µ

µ+κ

. We now provide the proof of the theorem below.

Proof We start by defining an approximate sufficient descent condition inspired by a

remark of Chambolle and Pock (2015) regarding accelerated gradient descent methods. A

related condition was also used by Paquette et al. (2018) in the context of non-convex

optimization.

Approximate sufficient descent condition. Let us define the function

h

k

(x) = f(x) +

κ

2

kx − y

k−1

k

2

.

Since p(y

k−1

) is the unique minimizer of h

k

, the strong convexity of h

k

yields: for any k ≥ 1,

for all x in R

p

and any θ

k

> 0,

h

k

(x) ≥ h

∗

k

+

κ + µ

2

kx − p(y

k−1

)k

2

≥ h

∗

k

+

κ + µ

2

(1 − θ

k

) kx − x

k

k

2

+

κ + µ

2

1 −

1

θ

k

kx

k

− p(y

k−1

)k

2

≥ h

k

(x

k

) − ε

k

+

κ + µ

2

(1 − θ

k

) kx − x

k

k

2

+

κ + µ

2

1 −

1

θ

k

kx

k

− p(y

k−1

)k

2

,

where the (µ + κ)-strong convexity of h

k

is used in the first inequality; Lemma 19 is used

in the second inequality, and the last one uses the relation h

k

(x

k

) − h

∗

k

≤ ε

k

. Moreover,

when θ

k

≥ 1, the last term is positive and we have

h

k

(x) ≥ h

k

(x

k

) − ε

k

+

κ + µ

2

(1 − θ

k

) kx − x

k

k

2

.

15

Lin, Mairal and Harchaoui

If instead θ

k

≤ 1, the coefficient

1

θ

k

− 1 is non-negative and we have

−

κ + µ

2

1

θ

k

− 1

kx

k

− p(y

k−1

)k

2

≥ −

1

θ

k

− 1

(h

k

(x

k

) − h

∗

k

) ≥ −

1

θ

k

− 1

ε

k

.

In this case, we have

h

k

(x) ≥ h

k

(x

k

) −

ε

k

θ

k

+

κ + µ

2

(1 − θ

k

) kx − x

k

k

2

.

As a result, we have for all value of θ

k

> 0,

h

k

(x) ≥ h

k

(x

k

) +

κ + µ

2

(1 − θ

k

) kx − x

k

k

2

−

ε

k

min{1, θ

k

}

.

After expanding the expression of h

k

, we then obtain the approximate descent condition

f(x

k

)+

κ

2

kx

k

−y

k−1

k

2

+

κ + µ

2

(1 − θ

k

) kx−x

k

k

2

≤ f (x)+

κ

2

kx−y

k−1

k

2

+

ε

k

min{1, θ

k

}

. (15)

Definition of the Lyapunov function. We introduce a sequence (S

k

)

k≥0

that will act

as a Lyapunov function, with

S

k

= (1 − α

k

)(f(x

k

) − f

∗

) + α

k

κη

k

2

kx

∗

− v

k

k

2

. (16)

where x

∗

is a minimizer of f, (v

k

)

k≥0

is a sequence defined by v

0

= x

0

and

v

k

= x

k

+

1 − α

k−1

α

k−1

(x

k

− x

k−1

) for k ≥ 1,

and (η

k

)

k≥0

is an auxiliary quantity defined by

η

k

=

α

k

− q

1 − q

.

The way we introduce these variables allow us to write the following relationship,

y

k

= η

k

v

k

+ (1 −η

k

)x

k

, for all k ≥ 0,

which follows from a simple calculation. Then by setting z

k

= α

k−1

x

∗

+ (1 −α

k−1

)x

k−1

the

following relations hold for all k ≥ 1.

f(z

k

) ≤ α

k−1

f

∗

+ (1 −α

k−1

)f(x

k−1

) −

µα

k−1

(1 − α

k−1

)

2

kx

∗

− x

k−1

k

2

,

z

k

− x

k

= α

k−1

(x

∗

− v

k

),

and also the following one

kz

k

− y

k−1

k

2

= k(α

k−1

− η

k−1

)(x

∗

− x

k−1

) + η

k−1

(x

∗

− v

k−1

)k

2

= α

2

k−1

1 −

η

k−1

α

k−1

(x

∗

− x

k−1

) +

η

k−1

α

k−1

(x

∗

− v

k−1

)

2

≤ α

2

k−1

1 −

η

k−1

α

k−1

kx

∗

− x

k−1

k

2

+ α

2

k−1

η

k−1

α

k−1

kx

∗

− v

k−1

k

2

= α

k−1

(α

k−1

− η

k−1

)kx

∗

− x

k−1

k

2

+ α

k−1

η

k−1

kx

∗

− v

k−1

k

2

,

16

Catalyst for First-order Convex Optimization

where we used the convexity of the norm and the fact that η

k

≤ α

k

. Using the previous

relations in (15) with x = z

k

= α

k−1

x

∗

+ (1 −α

k−1

)x

k−1

, gives for all k ≥ 1,

f(x

k

) +

κ

2

kx

k

− y

k−1

k

2

+

κ + µ

2

(1 − θ

k

) α

2

k−1

kx

∗

− v

k

k

2

≤ α

k−1

f

∗

+ (1 −α

k−1

)f(x

k−1

) −

µ

2

α

k−1

(1 − α

k−1

)kx

∗

− x

k−1

k

2

+

κα

k−1

(α

k−1

− η

k−1

)

2

kx

∗

− x

k−1

k

2

+

κα

k−1

η

k−1

2

kx

∗

− v

k−1

k

2

+

ε

k

min{1, θ

k

}

.

Remark that for all k ≥ 1,

α

k−1

− η

k−1

= α

k−1

−

α

k−1

− q

1 − q

=

q(1 − α

k−1

)

1 − q

=

µ

κ

(1 − α

k−1

),

and the quadratic terms involving x

∗

− x

k−1

cancel each other. Then, after noticing that

for all k ≥ 1,

η

k

α

k

=

α

2

k

− qα

k

1 − q

=

(κ + µ)(1 −α

k

)α

2

k−1

κ

,

which allows us to write

f(x

k

) − f

∗

+

κ + µ

2

α

2

k−1

kx

∗

− v

k

k

2

=

S

k

1 − α

k

. (17)

We are left, for all k ≥ 1, with

1

1 − α

k

S

k

≤ S

k−1

+

ε

k

min{1, θ

k

}

−

κ

2

kx

k

− y

k−1

k

2

+

(κ + µ)α

2

k−1

θ

k

2

kx

∗

− v

k

k

2

. (18)

Control of the approximation errors for criterion (C1). Using the fact that

1

min{1, θ

k

}

≤ 1 +

1

θ

k

,

we immediately derive from equation (18) that

1

1 − α

k

S

k

≤ S

k−1

+ ε

k

+

ε

k

θ

k

−

κ

2

kx

k

− y

k−1

k

2

+

(κ + µ)α

2

k−1

θ

k

2

kx

∗

− v

k

k

2

. (19)

By minimizing the right-hand side of (19) with respect to θ

k

, we obtain the following

inequality

1

1 − α

k

S

k

≤ S

k−1

+ ε

k

+

p

2ε

k

(µ + κ)α

k−1

kx

∗

− v

k

k,

and after unrolling the recursion,

S

k

A

k

≤ S

0

+

k

X

j=1

ε

j

A

j−1

+

k

X

j=1

p

2ε

j

(µ + κ)α

j−1

kx

∗

− v

j

k

A

j−1

.

17

Lin, Mairal and Harchaoui

From Equation (17), the lefthand side is larger than

(µ+κ)α

2

k−1

kx

∗

−v

k

k

2

2A

k−1

. We may now define

u

j

=

√

(µ+κ)α

j−1

kx

∗

−v

j

k

√

2A

j−1

and a

j

= 2

√

ε

j

√

A

j−1

, and we have

u

2

k

≤ S

0

+

k

X

j=1

ε

j

A

j−1

+

k

X

j=1

a

j

u

j

for all k ≥ 1.

This allows us to apply Lemma 20, which yields

S

k

A

k

≤

v

u

u

t

S

0

+

k

X

j=1

ε

j

A

j−1

+ 2

k

X

j=1

r

ε

j

A

j−1

2

,

≤

p

S

0

+ 3

k

X

j=1

r

ε

j

A

j−1

2

which provides us the desired result given that f(x

k

) − f

∗

≤

S

k

1−α

k

and that v

0

= x

0

.

We are now in shape to state the convergence rate of the Catalyst algorithm with

criterion (C1), without taking into account yet the cost of solving the sub-problems. The

next two propositions specialize Theorem 3 to the strongly convex case and non strongly

convex cases, respectively. Their proofs are provided in Appendix B.

Proposition 5 (µ-strongly convex case, criterion (C1))

In Algorithm 2, choose α

0

=

√

q and

ε

k

=

2

9

(f(x

0

) − f

∗

)(1 − ρ)

k

with ρ <

√

q.

Then, the sequence of iterates (x

k

)

k≥0

satisfies

f(x

k

) − f

∗

≤

8

(

√

q − ρ)

2

(1 − ρ)

k+1

(f(x

0

) − f

∗

).

Proposition 6 (Convex case, criterion (C1))

When µ = 0, choose α

0

= 1 and

ε

k

=

2(f(x

0

) − f

∗

)

9(k + 1)

4+γ

with γ > 0.

Then, Algorithm 2 generates iterates (x

k

)

k≥0

such that

f(x

k

) − f

∗

≤

8

(k + 1)

2

κ

2

kx

0

− x

∗

k

2

+

4

γ

2

(f(x

0

) − f

∗

)

.

18

Catalyst for First-order Convex Optimization

4.1.2 Analysis for Criterion (C2)

Then, we may now analyze the convergence of Catalyst under criterion (C2), which offers

similar guarantees as (C1), as far as the outer loop is concerned.

Theorem 7 (Convergence of outer-loop for criterion (C2)) Consider the sequences

(x

k

)

k≥0

and (y

k

)

k≥0

produced by Algorithm 2, assuming that x

k

is in g

δ

k

(y

k−1

) for all k ≥ 1

and δ

k

in (0, 1). Then,

f(x

k

) − f

∗

≤

A

k−1

Q

k

j=1

(1 − δ

j

)

(1 − α

0

)(f(x

0

) − f

∗

) +

γ

0

2

kx

0

− x

∗

k

2

,

where γ

0

and (A

k

)

k≥0

are defined in (14) in Theorem 3.

Proof Remark that x

k

in g

δ

k

(y

k−1

) is equivalent to x

k

in p

ε

k

(y

k−1

) with an adaptive error

ε

k

=

δ

k

κ

2

kx

k

− y

k−1

k

2

. All steps of the proof of Theorem 3 hold for such values of ε

k

and

from (18), we may deduce

S

k

1 − α

k

−

(κ + µ)α

2

k−1

θ

k

2

kx

∗

− v

k

k

2

≤ S

k−1

+

δ

k

κ

2 min{1, θ

k

}

−

κ

2

kx

k

− y

k−1

k

2

.

Then, by choosing θ

k

= δ

k

< 1, the quadratic term on the right disappears and the left-hand

side is greater than

1−δ

k

1−α

k

S

k

. Thus,

S

k

≤

1 − α

k

1 − δ

k

S

k−1

≤

A

k

Q

k

j=1

(1 − δ

j

)

S

0

,

which is sufficient to conclude since (1 − α

k

)(f(x

k

) − f

∗

) ≤ S

k

.

The next propositions specialize Theorem 7 for specific choices of sequence (δ

k

)

k≥0

in

the strongly and non strongly convex cases.

Proposition 8 (µ-strongly convex case, criterion (C2))

In Algorithm 2, choose α

0

=

√

q and

δ

k

=

√

q

2 −

√

q

.

Then, the sequence of iterates (x

k

)

k≥0

satisfies

f(x

k

) − f

∗

≤ 2

1 −

√

q

2

k

(f(x

0

) − f

∗

) .

Proof This is a direct application of Theorem 7 by remarking that γ

0

= (1 −

√

q)µ and

S

0

= (1 −

√

q)

f(x

0

) − f

∗

+

µ

2

kx

∗

− x

0

k

2

≤ 2(1 −

√

q)(f(x

0

) − f

∗

).

And α

k

=

√

q for all k ≥ 0 leading to

1 − α

k

1 − δ

k

= 1 −

√

q

2

19

Lin, Mairal and Harchaoui

Proposition 9 (Convex case, criterion (C2))

When µ = 0, choose α

0

= 1 and

δ

k

=

1

(k + 1)

2

.

Then, Algorithm 2 generates iterates (x

k

)

k≥0

such that

f(x

k

) − f

∗

≤

4κkx

0

− x

∗

k

2

(k + 1)

2

. (20)

Proof This is a direct application of Theorem 7 by remarking that γ

0

= κ, A

k

≤

4

(k+2)

2

(Lemma 4) and

k

Y

i=1

1 −

1

(i + 1)

2

=

k

Y

i=1

i(i + 2)

(i + 1)

2

=

k + 2

2(k + 1)

≥

1

2

.

Remark 10 In fact, the choice of δ

k

can be improved by taking δ

k

=

1

(k+1)

1+γ

for any

γ > 0, which comes at the price of a larger constant in (20).

4.2 Analysis of Warm-start Strategies for the Inner Loop

In this section, we study the complexity of solving the subproblems with the proposed warm

start strategies. The only assumption we make on the optimization method M is that it

enjoys linear convergence when solving a strongly convex problem—meaning, it satisfies

either (12) or its randomized variant (13). Then, the following lemma gives us a relation

between the accuracy required to solve the sub-problems and the corresponding complexity.

Lemma 11 (Accuracy vs. complexity) Let us consider a strongly convex objective h

and a linearly convergent method M generating a sequence of iterates (z

t

)

t≥0

for minimiz-

ing h. Consider the complexity T (ε) = inf{t ≥ 0, h(z

t

) − h

∗

≤ ε}, where ε > 0 is the target

accuracy and h

∗

is the minimum value of h. Then,

1. If M is deterministic and satisfies (12), we have

T (ε) ≤

1

τ

M

log

C

M

(h(z

0

) − h

∗

)

ε

.

2. If M is randomized and satisfies (13), we have

E[T (ε)] ≤

1

τ

M

log

2C

M

(h(z

0

) − h

∗

)

τ

M

ε

+ 1

The proof of the deterministic case is straightforward and the proof of the randomized case

is provided in Appendix B.4. From the previous result, a good initialization is essential for

fast convergence. More precisely, it suffices to control the initialization

h(z

0

)−h

∗

ε

in order to

bound the number of iterations T (ε). For that purpose, we analyze the quality of various

warm-start strategies.

20

Catalyst for First-order Convex Optimization

4.2.1 Warm Start Strategies for Criterion (C1)

The next proposition characterizes the quality of initialization for (C1).

Proposition 12 (Warm start for criterion (C1)) Assume that M is linearly conver-

gent for strongly convex problems with parameter τ

M

according to (12), or according to (13)

in the randomized case. At iteration k + 1 of Algorithm 2, given the previous iterate x

k

in p

ε

k

(y

k−1

), we consider the following function

h

k+1

(z) = f (z) +

κ

2

kz − y

k

k

2

,

which we minimize with M, producing a sequence (z

t

)

t≥0

. Then,

• when f is smooth, choose z

0

= x

k

+

κ

κ+µ

(y

k

− y

k−1

);

• when f = f

0

+ ψ is composite, choose z

0

= [w

0

]

η

= prox

ηψ

(w

0

− η∇h

0

(w

0

)) with

w

0

= x

k

+

κ

κ+µ

(y

k

− y

k−1

), η =

1

L+κ

and h

0

= f

0

+

κ

2

k · −y

k

k

2

.

We also assume that we choose α

0

and (ε

k

)

k≥0

according to Proposition 5 for µ > 0, or

Proposition 6 for µ = 0. Then,

1. if f is µ-strongly convex, h

k+1

(z

0

) − h

∗

k+1

≤ Cε

k+1

where,

C =

L + κ

κ + µ

2

1 − ρ

+

2592(κ + µ)

(1 − ρ)

2

(

√

q − ρ)

2

µ

if f is smooth, (21)

or

C =

L + κ

κ + µ

2

1 − ρ

+

23328(L + κ)

(1 − ρ)

2

(

√

q − ρ)

2

µ

if f is composite. (22)

2. if f is convex with bounded level sets, there exists a constant B > 0 that only depends

on f, x

0

and κ such that

h

k+1

(z

0

) − h

∗

k+1

≤ B. (23)

Proof We treat the smooth and composite cases separately.

Smooth and strongly-convex case. When f is smooth, by the gradient Lipschitz as-

sumption,

h

k+1

(z

0

) − h

∗

k+1

≤

(L + κ)

2

kz

0

− p(y

k

)k

2

.

Moreover,

kz

0

− p(y

k

)k

2

=

x

k

+

κ

κ + µ

(y

k

− y

k−1

) − p(y

k

)

2

=

x

k

− p(y

k−1

) +

κ

κ + µ

(y

k

− y

k−1

) − (p(y

k

) − p(y

k−1

))

2

≤ 2kx

k

− p(y

k−1

)k

2

+ 2

κ

κ + µ

(y

k

− y

k−1

) − (p(y

k

) − p(y

k−1

))

2

.

21

Lin, Mairal and Harchaoui

Since x

k

is in p

ε

k

(y

k−1

), we may control the first quadratic term on the right by noting that

kx

k

− p(y

k−1

)k

2

≤

2

κ + µ

(h

k

(x

k

) − h

∗

k

) ≤

2ε

k

κ + µ

.

Moreover, by the coerciveness property of the proximal operator,

κ

κ + µ

(y

k

− y

k−1

) − (p(y

k

) − p(y

k−1

))

2

≤ ky

k

− y

k−1

k

2

,

see Appendix B.5 for the proof. As a consequence,

h

k+1

(z

0

) − h

∗

k+1

≤

(L + κ)

2

kz

0

− p(y

k

)k

2

≤ 2

L + κ

µ + κ

ε

k

+ (L + κ)ky

k

− y

k−1

k

2

,

(24)

Then, we need to control the term ky

k

−y

k−1

k

2

. Inspired by the proof of accelerated SDCA

of Shalev-Shwartz and Zhang (2016),

ky

k

− y

k−1

k = kx

k

+ β

k

(x

k

− x

k−1

) − x

k−1

− β

k−1

(x

k−1

− x

k−2

)k

≤ (1 + β

k

)kx

k

− x

k−1

k + β

k−1

kx

k−1

− x

k−2

k

≤ 3 max {kx

k

− x

k−1

k, kx

k−1

− x

k−2

k},

The last inequality was due to the fact that β

k

≤ 1. In fact,

β

2

k

=

α

k−1

− α

2

k−1

2

α

2

k−1

+ α

k

2

=

α

2

k−1

+ α

4

k−1

− 2α

3

k−1

α

2

k

+ 2α

k

α

2

k−1

+ α

4

k−1

=

α

2

k−1

+ α

4

k−1

− 2α

3

k−1

α

2

k−1

+ α

4

k−1

+ qα

k

+ α

k

α

2

k−1

≤ 1,

where the last equality uses the relation α

2

k

+ α

k

α

2

k−1

= α

2

k−1

+ qα

k

from (10). Then,

kx

k

− x

k−1

k ≤ kx

k

− x

∗

k + kx

k−1

− x

∗

k,

and by strong convexity of f

µ

2

kx

k

− x

∗

k

2

≤ f (x

k

) − f

∗

≤

36

(

√

q − ρ)

2

ε

k+1

,

where the last inequality is obtained from Proposition 5. As a result,

ky

k

− y

k−1

k

2

≤ 9 max

kx

k

− x

k−1

k

2

, kx

k−1

− x

k−2

k

2

≤ 36 max

kx

k

− x

∗

k

2

, kx

k−1

− x

∗

k

2

, kx

k−2

− x

∗

k

2

≤

2592 ε

k−1

(

√

q − ρ)

2

µ

.

Since ε

k+1

= (1 − ρ)

2

ε

k−1

, we may now obtain (21) from (24) and the previous bound.

22

Catalyst for First-order Convex Optimization

Smooth and convex case. When µ = 0, Eq. (24) is still valid but we need to control

ky

k

−y

k−1

k

2

in a different way. From Proposition 6, the sequence (f(x

k

))

k≥0

is bounded by

a constant that only depends on f and x

0

; therefore, by the bounded level set assumption,

there exists R > 0 such that

kx

k

− x

∗

k ≤ R, for all k ≥ 0.

Thus, following the same argument as the strongly convex case, we have

ky

k

− y

k−1

k ≤ 36R

2

for all k ≥ 1,

and we obtain (23) by combining the previous inequality with (24).

Composite case. By using the notation of gradient mapping introduced in (7), we

have z

0

= [w

0

]

η

. By following similar steps as in the proof of Lemma 2, the gradient

mapping satisfies the following relation

h

k+1

(z

0

) − h

∗

k+1

≤

1

2(κ + µ)

1

η

(w

0

− z

0

)

2

,

and it is sufficient to bound kw

0

− z

0

k = kw

0

− [w

0

]

η

k. For that, we introduce

[x

k

]

η

= prox

ηψ

(x

k

− η(∇f

0

(x

k

) + κ(x

k

− y

k−1

))).

Then,

kw

0

− [w

0

]

η

k ≤ kw

0

− x

k

k + kx

k

− [x

k

]

η

k + k[x

k

]

η

− [w

0

]

η

k, (25)

and we will bound each term on the right. By construction

kw

0

− x

k

k =

κ

κ + µ

ky

k

− y

k−1

k ≤ ky

k

− y

k−1

k.

Next, it is possible to show that the gradient mapping satisfies the following relation (see

Nesterov, 2013),

1

2η

kx

k

− [x

k

]

η

k

2

≤ h

k

(x

k

) − h

∗

k

≤ ε

k

.

And then since [x

k

]

η

= prox

ηψ

(x

k

− η(∇f

0

(x

k

) + κ(x

k

− y

k−1

))) and [w

0

]

η

= prox

ηψ

(w

0

−

η(∇f

0

(w

0

) + κ(w

0

− y

k

))). From the non expansiveness of the proximal operator, we have

k[x

k

]

η

− [w

0

]

η

k ≤ kx

k

− η(∇f

0

(x

k

) + κ(x

k

− y

k−1

)) − (w

0

− η(∇f

0

(w

0

) + κ(w

0

− y

k

))) k

≤ kx

k

− η(∇f

0

(x

k

) + κ(x

k

− y

k−1

)) − (w

0

− η(∇f

0

(w

0

) + κ(w

0

− y

k−1

))) k

+ ηκky

k

− y

k−1

k

≤ kx

k

− w

0

k + ηκky

k

− y

k−1

k

≤ 2ky

k

− y

k−1

k.

We have used the fact that kx − η∇h(x) − (y − η∇h(y))k ≤ kx − yk. By combining the

previous inequalities with (25), we finally have

kw

0

− [w

0

]

η

k ≤

p

2ηε

k

+ 3ky

k

− y

k−1

k.

23

Lin, Mairal and Harchaoui

Thus, by using the fact that (a + b)

2

≤ 2a

2

+ 2b

2

for all a, b,

h

k+1

(z

0

) − h

∗

k+1

≤

L + κ

κ + µ

2ε

k

+ 9(L + κ)ky

k

− y

k−1

k

2

,

and we can obtain (22) and (23) by upper-bounding ky

k

−y

k−1

k

2

in a similar way as in the

smooth case, both when µ > 0 and µ = 0.

Finally, the complexity of the inner loop can be obtained directly by combining the

previous proposition with Lemma 11.

Corollary 13 (Inner-loop Complexity for Criterion (C1)) Consider the setting of Propo-

sition 12; then, the sequence (z

t

)

t≥0

minimizing h

k+1

is such that the complexity T

k+1

=

inf{t ≥ 0, h

k+1

(z

t

) − h

∗

k+1

≤ ε

k+1

} satisfies

T

k+1

≤

1

τ

M

log (C

M

C) if µ > 0 =⇒ T

k+1

=

˜

O

1

τ

M

,

where C is the constant defined in (21) or in (22) for the composite case; and

T

k+1

≤

1

τ

M

log

9C

M

(k + 2)

4+η

B

2(f(x

0

) − f

∗

)

if µ = 0 =⇒ T

k+1

=

˜

O

log(k + 2)

τ

M

,

where B is the uniform upper bound in (23). Furthermore, when M is randomized, the

expected complexity E[T

k+1

] is similar, up to a factor 2/τ

M

in the logarithm—see Lemma 11,

and we have E[T

k+1

] =

˜

O(1/τ

M

) when µ > 0 and E[T

k+1

] =

˜

O(log(k + 2)/τ

M

). Here,

˜

O(.)

hides logarithmic dependencies in parameters µ, L, κ, C

M

, τ

M

and f(x

0

) − f

∗

.

4.2.2 Warm Start Strategies for Criterion (C2)

We may now analyze the inner-loop complexity for criterion (C2) leading to upper bounds

with smaller constants and simpler proofs. Note also that in the convex case, the bounded

level set condition will not be needed, unlike for criterion (C1). To proceed, we start with

a simple lemma that gives us a sufficient condition for (C2) to be satisfied.

Lemma 14 (Sufficient condition for criterion (C2)) If a point z satisfies

h

k+1

(z) − h

∗

k+1

≤

δ

k+1

κ

8

kp(y

k

) − y

k

k

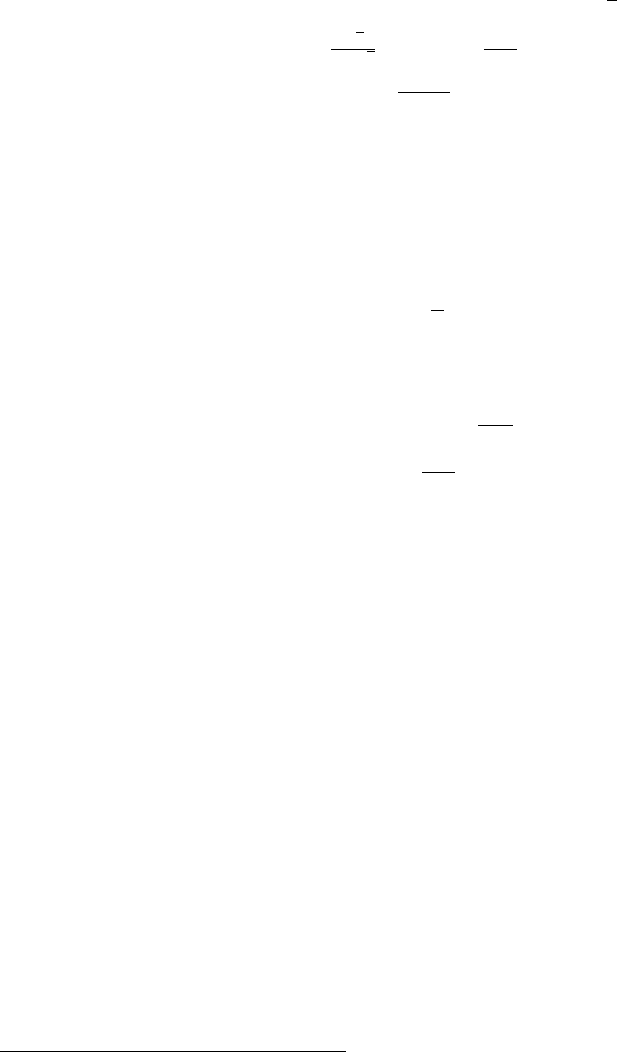

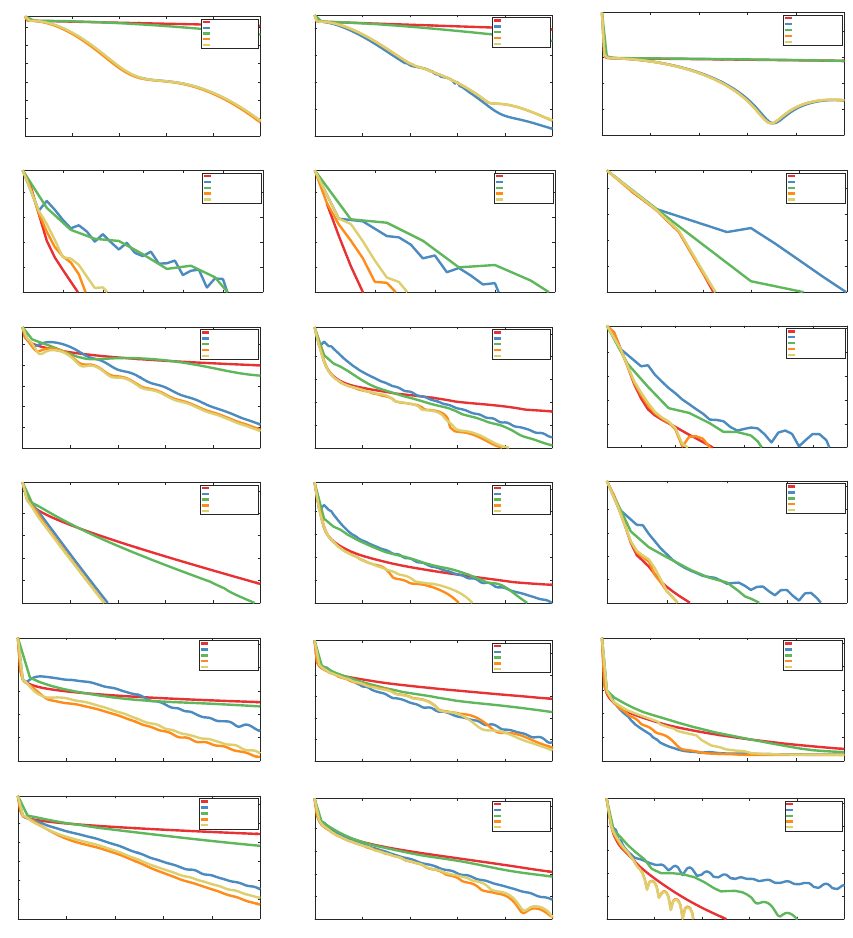

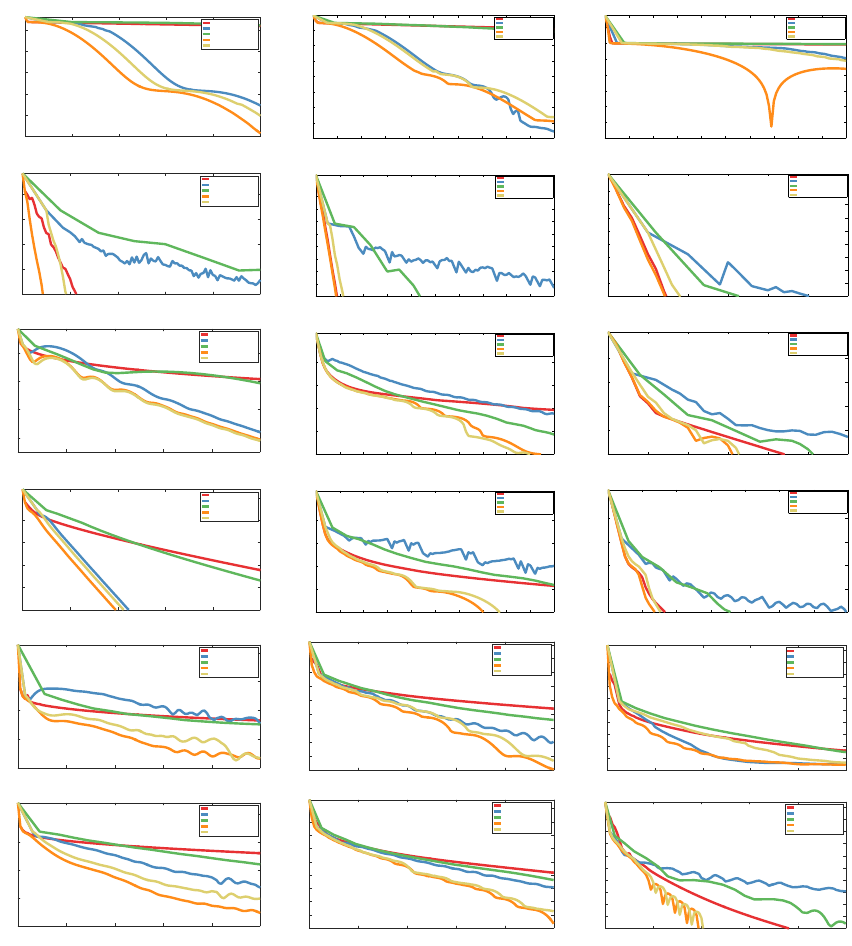

2