USNCCM 2023

Multi-Output Approximate Control Variate Estimation for Enhanced Variance Reduction

Thomas Dixon & A. Gorodetsky, University of Michigan

G. Bomarito & J. Warner, NASA Langley Research Center

July 26, 2023

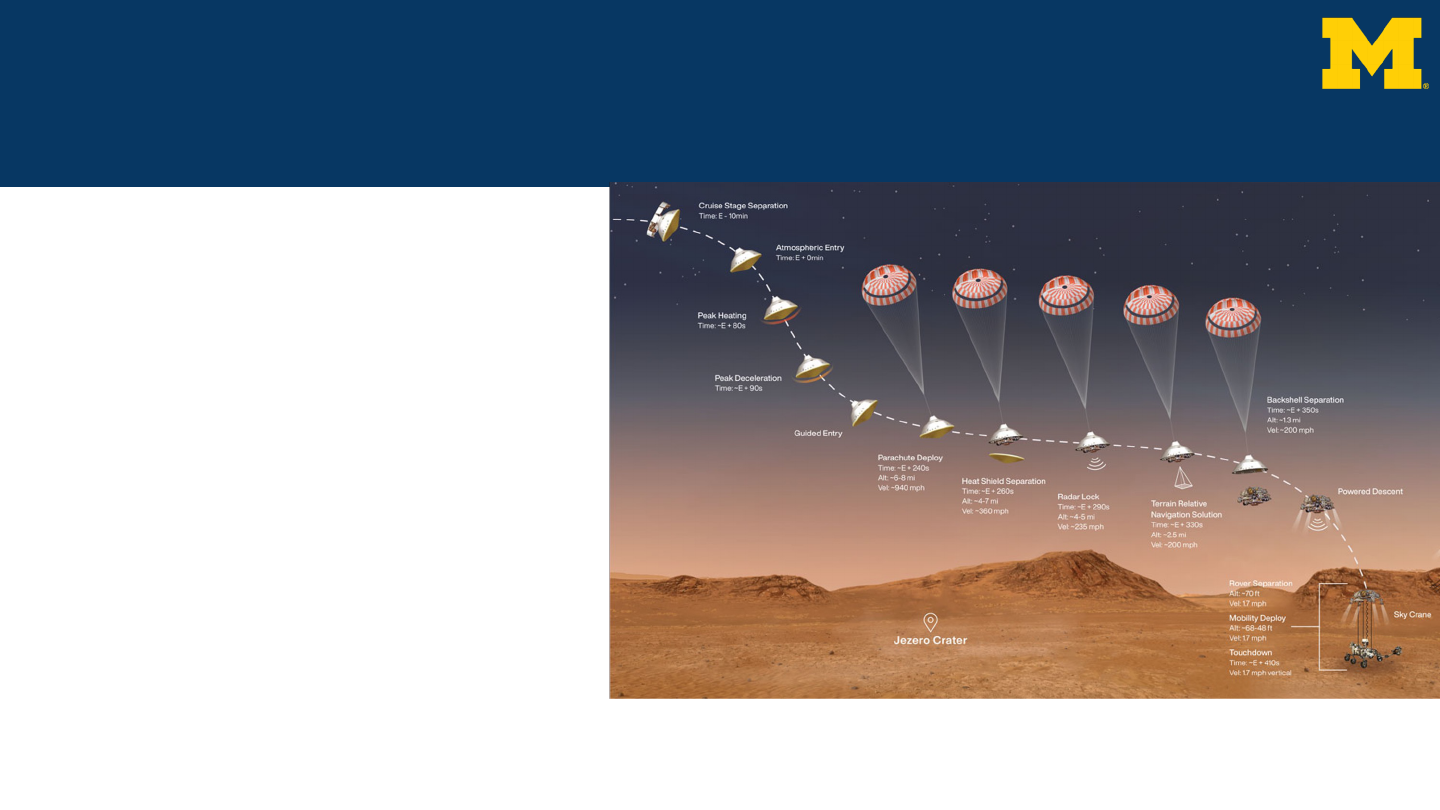

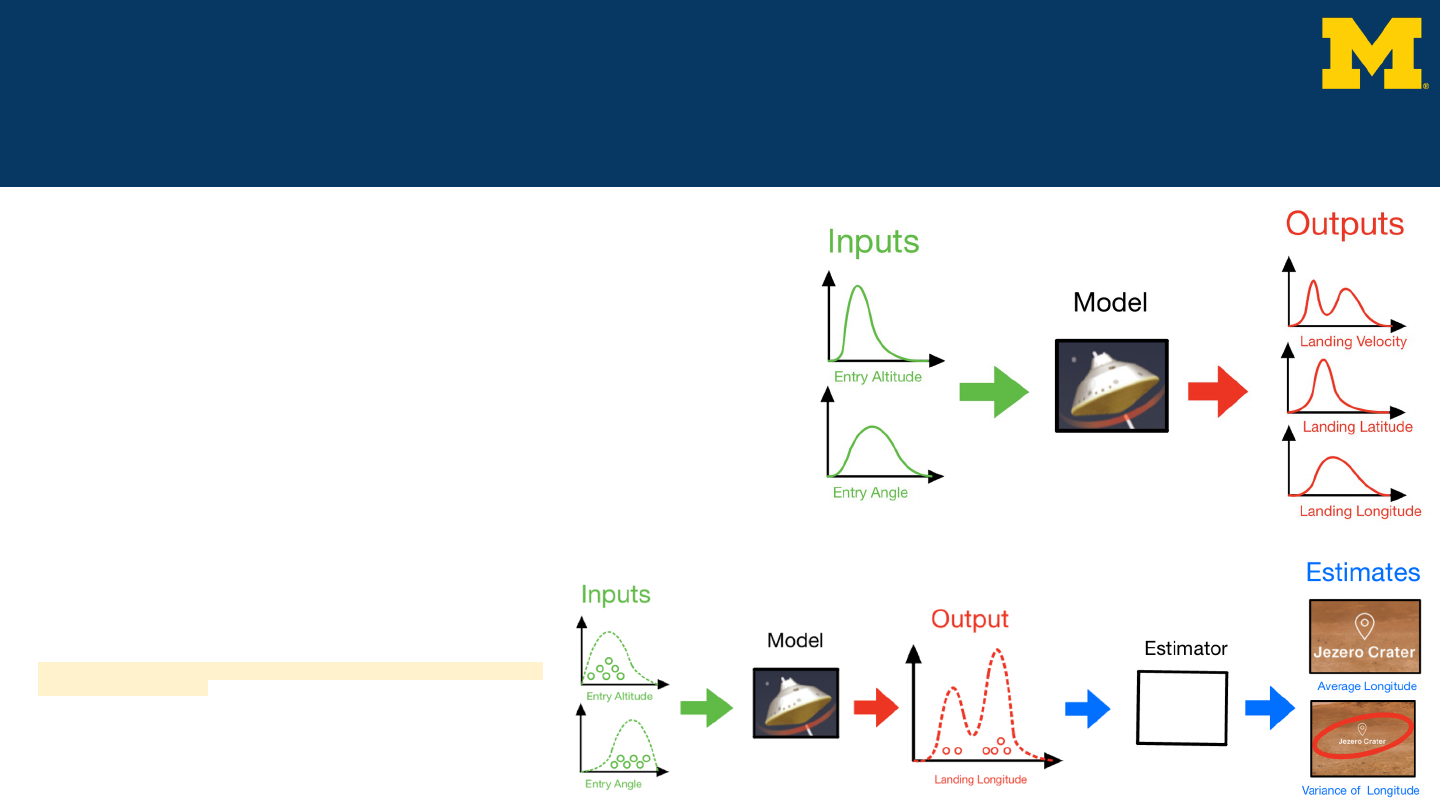

Uncertainty Quantification

● Example system

○ Entry, descent, and landing (EDL)

○ Perseverance Mars rover

● System uncertainties

○ Entry velocity

○ Entry location

● Multiple fidelity models

○ Full physics model

○ Reduced physics models

○ Machine learning

● Multiple outputs

○ Landing location

○ Landing velocity

● Multiple statistics

○ Mean

○ Variance

○ Sobol index

https://mars.nasa.gov/mars2020/timeline/landing/entry-descent-landing/


Thomas Dixon

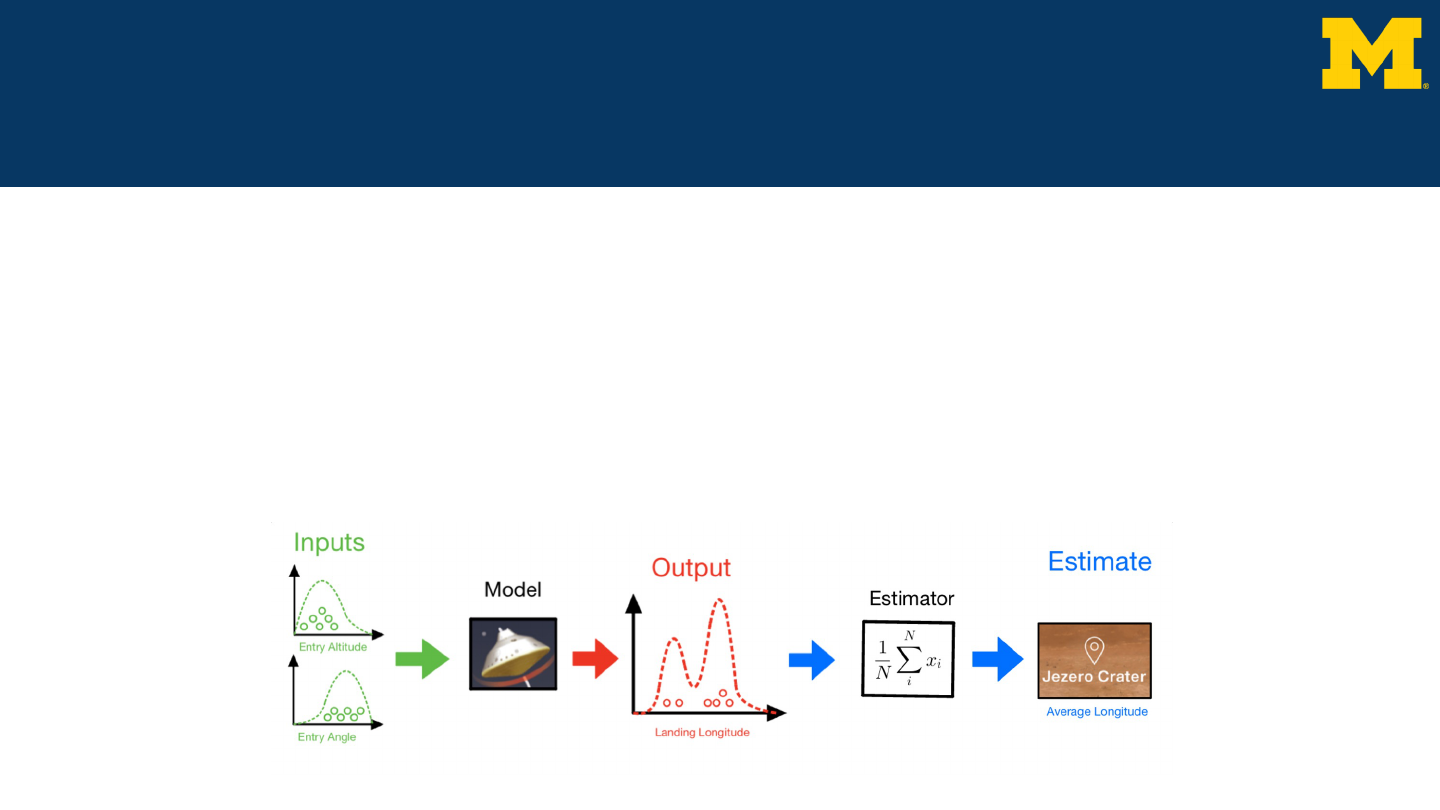

Sampling and Estimation

● Process:

○ Sample from the input space

○ Run the samples through the model

○ Estimate statistics using function evaluations

● Problem:

○ Accurate statistics are very expensive

○ Many function evaluations are required for accurate model statistics
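A minimal sketch of the three-step process above, assuming a cheap hypothetical `toy_model` and standard normal inputs in place of a real simulation (neither is from the talk):

```python
import numpy as np


def toy_model(x):
    """Hypothetical stand-in for an expensive simulation (not the EDL model)."""
    return np.sin(x[:, 0]) + 0.5 * x[:, 1] ** 2


def standard_normal_inputs(n_samples, rng):
    """Assumed input distribution: two independent standard normal inputs."""
    return rng.standard_normal((n_samples, 2))


def monte_carlo_statistics(model, sampler, n_samples, rng):
    """Sample inputs, evaluate the model, and estimate the mean and variance."""
    inputs = sampler(n_samples, rng)   # 1. sample from the input space
    outputs = model(inputs)            # 2. run the samples through the model
    mean_est = outputs.mean()          # 3. estimate statistics
    var_est = outputs.var(ddof=1)      # unbiased sample variance
    return mean_est, var_est


rng = np.random.default_rng(0)
for n in (100, 10_000):
    mean_est, var_est = monte_carlo_statistics(
        toy_model, standard_normal_inputs, n, rng
    )
    print(f"N = {n:6d}: mean ~ {mean_est:.4f}, variance ~ {var_est:.4f}")
```

Each estimate costs `n` model evaluations, which is why accurate statistics become expensive when a single evaluation is a full simulation.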


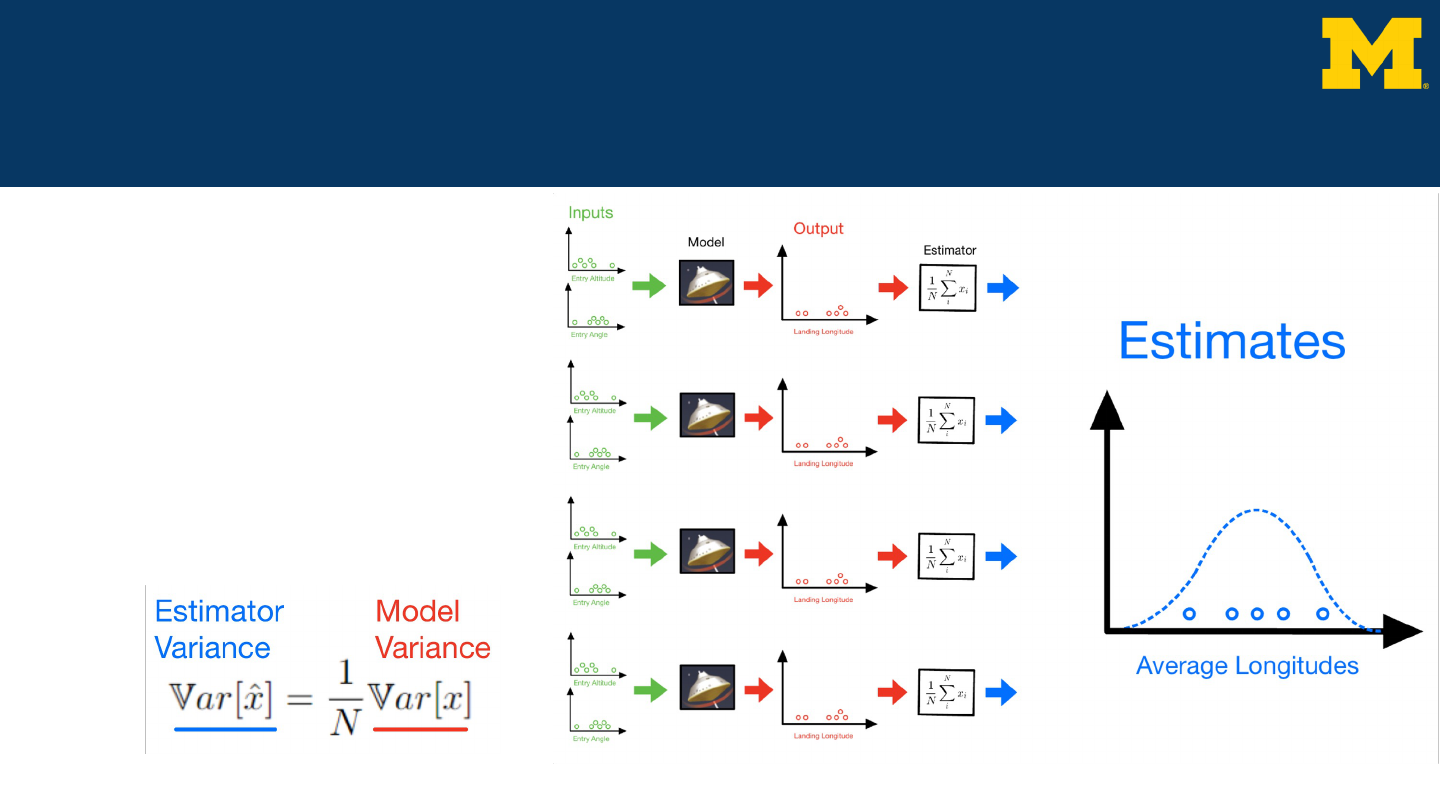

Variance Reduction

● Goal:

○ Reduce the variance of the estimator

○ Increase the confidence in an estimation

○ Reduce the cost of estimation for fixed confidence

● Monte Carlo Variance
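For reference, the standard result for the Monte Carlo mean estimator can be written as follows. The symbols are assumptions for illustration ($Q$ is a scalar model output, $z^{(i)}$ are $N$ independent input samples) and may differ from the slide's notation:

```latex
\hat{Q}_N = \frac{1}{N}\sum_{i=1}^{N} Q\big(z^{(i)}\big),
\qquad
\operatorname{Var}\big[\hat{Q}_N\big] = \frac{\operatorname{Var}[Q]}{N}
```

The standard error decays as $1/\sqrt{N}$, so halving it requires roughly four times as many model evaluations.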


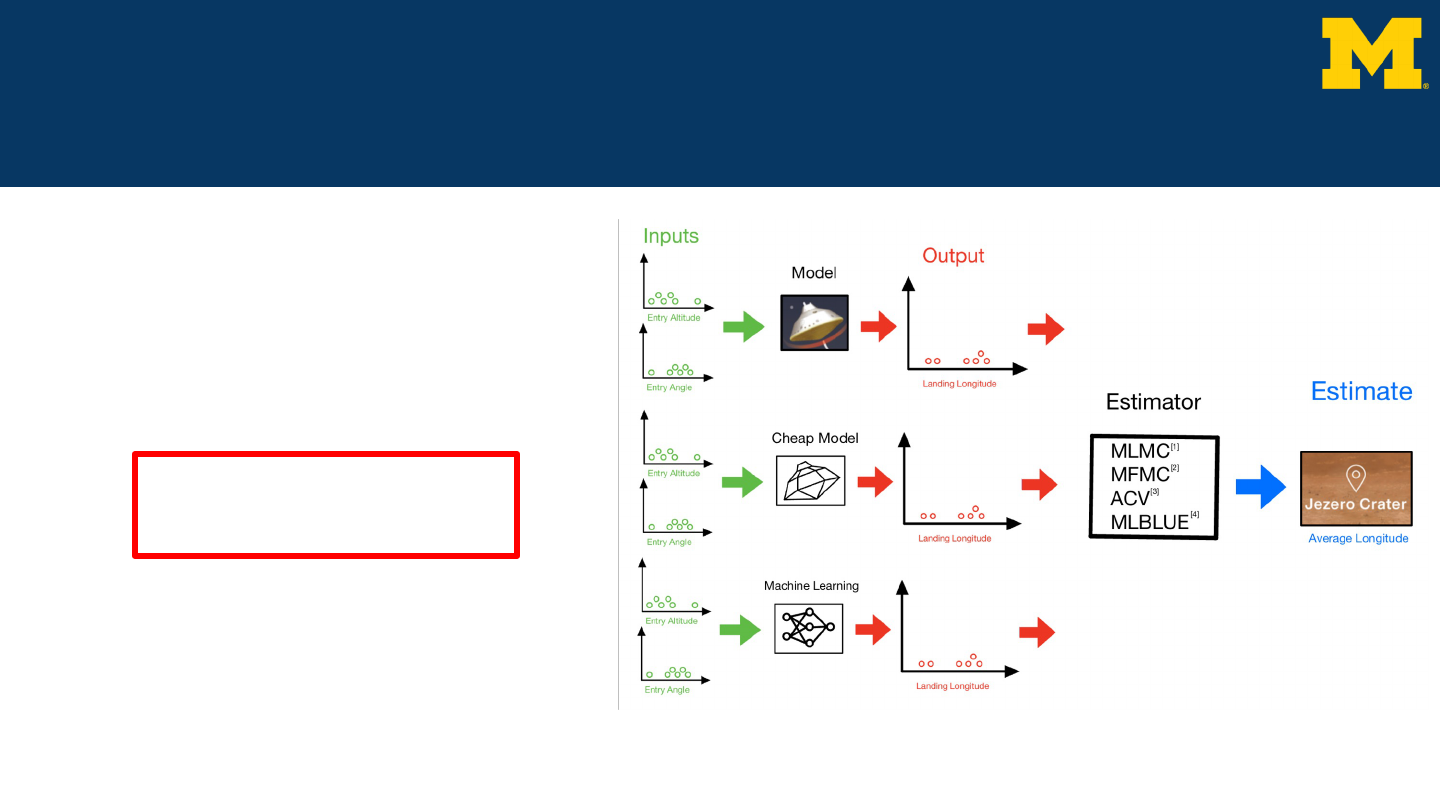

Multi-Fidelity Estimation

● Use multiple models for variance reduction

● Cheaper data is available from multiple sources


[1] Giles. (2007). “Variance Reduction through Multilevel Monte Carlo Path Calculations”

[2] Peherstorfer, et al. (2016). “Optimal Model Management for Multifidelity Monte Carlo Estimation”

[3] Gorodetsky, et al. (2020). “A generalized approximate control variate framework for multifidelity uncertainty quantification”

[4] Schaden, et al. (2020). “On multilevel best linear unbiased estimators”

Every method requires: covariance of estimators

Multi-Output Estimation


● Multiple outputs of the model

○ Velocity, latitude, longitude

● Multiple statistics of the model

○ Simultaneous mean and variance estimation

● Previous work:

○ Multi-Output MLBLUE [6]


[5] Croci, et al. (2023). “Multi-output multilevel best linear unbiased estimators via semidefinite programming”

[6] Destouches, et al. (2023). “Multivariate extensions of the Multilevel Best Linear Unbiased Estimator for ensemble-variational data assimilation”

Contributions and Rest of Talk

● Contributions

○ Closed-form expressions for estimator covariances

○ Multi-output approximate control variate (ACV) estimator

● Outline

○ Control variates

■ Estimator covariances

○ Theoretical results

○ Experiments and results

■ EDL example

○ Future work


[Figure labels: low-fidelity model, many samples; high-fidelity model, few samples]

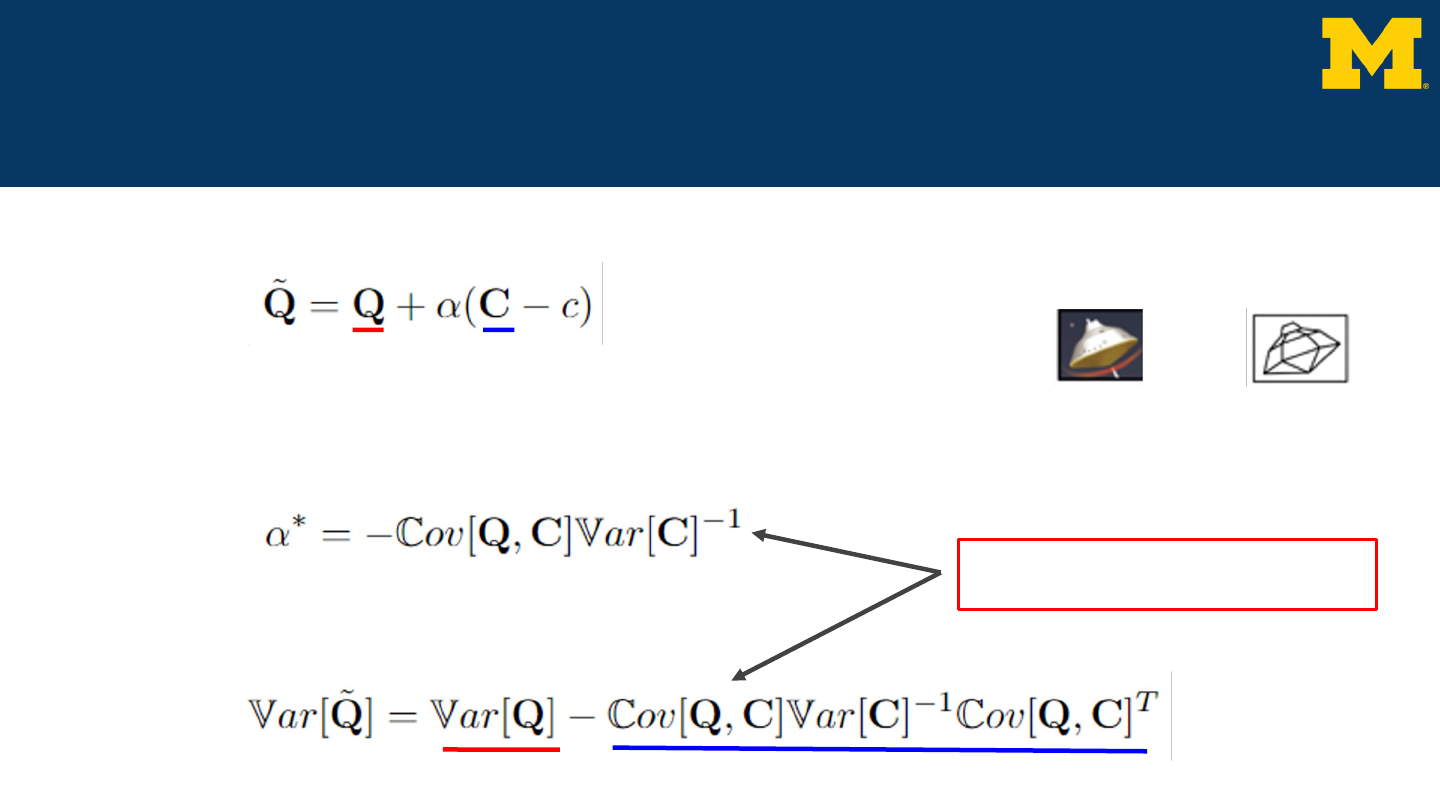

Control Variates

● Using correlated random variable to reduce variance

○ Control variate has known mean

○ Unbiased estimator

● Minimize variance to find optimal weight

● Optimal variance

○ Variance is reduced
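A minimal sketch of a classical control variate, assuming the sign convention $\hat{Q}^{CV} = \hat{Q} + \alpha(\hat{Q}_1 - \mu_1)$ with optimal weight $\alpha^* = -\operatorname{Cov}[Q, Q_1]/\operatorname{Var}[Q_1]$, which gives variance $\operatorname{Var}[\hat{Q}](1 - \rho^2)$. The functions `high_fidelity` and `control` are hypothetical toy choices, not models from the talk:

```python
import numpy as np

rng = np.random.default_rng(1)
n_samples, n_trials = 50, 4000


def high_fidelity(z):
    """Hypothetical quantity whose mean we want: E[exp(z)] = e - 1 for z ~ U(0, 1)."""
    return np.exp(z)


def control(z):
    """Hypothetical correlated control variate with a known mean."""
    return 1.0 + z


known_control_mean = 1.5  # E[1 + z] for z ~ U(0, 1)

# Pilot samples to estimate the optimal weight alpha = -Cov[Q, Q1] / Var[Q1].
z_pilot = rng.uniform(size=10_000)
pilot_cov = np.cov(high_fidelity(z_pilot), control(z_pilot))
alpha = -pilot_cov[0, 1] / pilot_cov[1, 1]

mc_estimates = np.empty(n_trials)
cv_estimates = np.empty(n_trials)
for t in range(n_trials):
    z = rng.uniform(size=n_samples)
    q_hat = high_fidelity(z).mean()
    q1_hat = control(z).mean()
    mc_estimates[t] = q_hat
    # Correct the MC estimate with the control's known deviation from its mean.
    cv_estimates[t] = q_hat + alpha * (q1_hat - known_control_mean)

print("MC estimator variance:", mc_estimates.var(ddof=1))
print("CV estimator variance:", cv_estimates.var(ddof=1))
```

Both estimators are unbiased; the control variate version has lower variance because the two quantities are strongly correlated.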


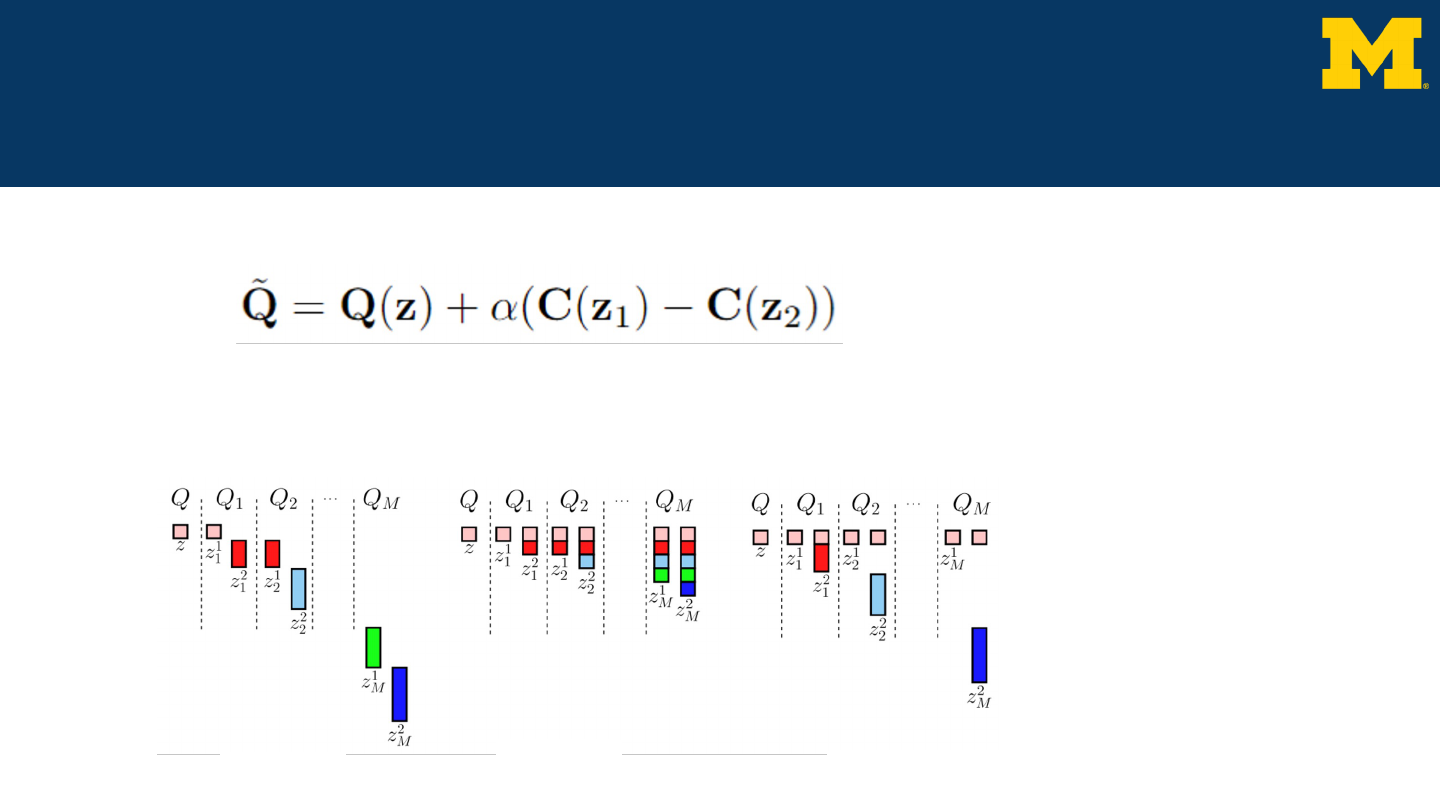

Covariance of Estimators

ACVs

● Control variate with unknown mean

○ Need to estimate the mean of the control variate

○ Control variate estimators take different input samples (z)

● Multiple control variates
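A minimal sketch of an approximate control variate with one low-fidelity model, assuming a nested sample layout in which the low-fidelity mean is estimated from a larger sample set that contains the high-fidelity samples. The layout and both toy models are illustrative assumptions; the cited framework covers many other sample allocations:

```python
import numpy as np

rng = np.random.default_rng(2)
n_hi, n_lo, n_trials = 20, 400, 4000


def high_fidelity(z):
    """Hypothetical expensive model."""
    return np.exp(z)


def low_fidelity(z):
    """Hypothetical cheap surrogate: truncated Taylor series of exp(z)."""
    return 1.0 + z + 0.5 * z**2


# Pilot samples to estimate the control variate weight.
z_pilot = rng.uniform(size=10_000)
pilot_cov = np.cov(high_fidelity(z_pilot), low_fidelity(z_pilot))
alpha = -pilot_cov[0, 1] / pilot_cov[1, 1]

mc_estimates = np.empty(n_trials)
acv_estimates = np.empty(n_trials)
for t in range(n_trials):
    z_all = rng.uniform(size=n_lo)   # all inputs, evaluated by the cheap model
    z_shared = z_all[:n_hi]          # subset also evaluated by the expensive model
    q0_hat = high_fidelity(z_shared).mean()
    q1_hat = low_fidelity(z_shared).mean()
    mu1_hat = low_fidelity(z_all).mean()  # estimated (unknown) control mean
    mc_estimates[t] = q0_hat
    acv_estimates[t] = q0_hat + alpha * (q1_hat - mu1_hat)

print("MC estimator variance: ", mc_estimates.var(ddof=1))
print("ACV estimator variance:", acv_estimates.var(ddof=1))
```

Because the control mean is itself estimated, the achievable reduction is smaller than with a known mean and depends on how many extra low-fidelity samples are used.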


[Figure panels, from Gorodetsky, et al. (2020), “A generalized approximate control variate framework for multifidelity uncertainty quantification”: (a) Multilevel Monte Carlo (MLMC), (b) Multifidelity Monte Carlo (MFMC), (c) ACV Independent Samples]

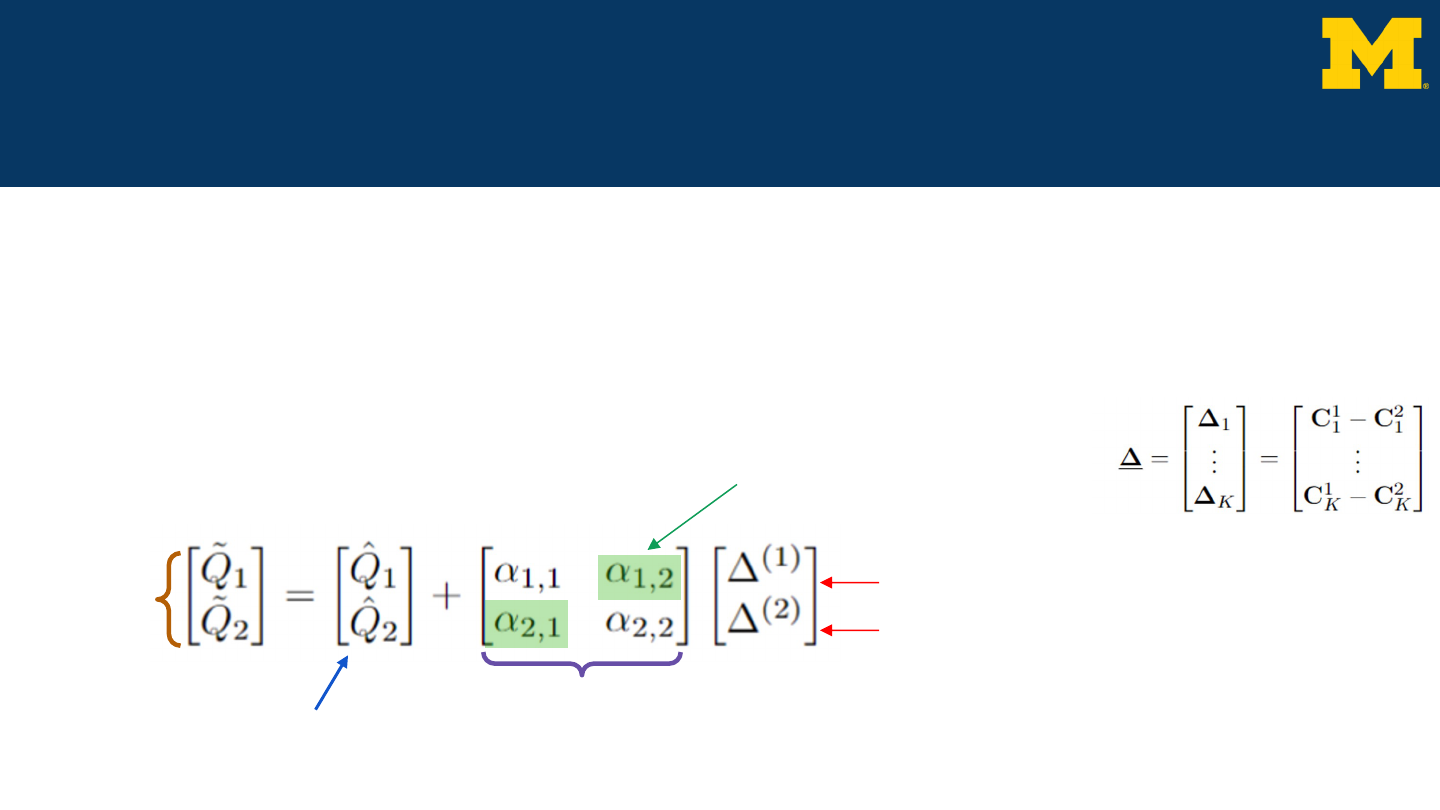

Multi-Output ACV (MOACV)

● Creating one estimator for all outputs

○ Leverages correlations between model outputs

○ Uses model outputs across fidelities as control variates for other outputs

● Previously, separate estimators have been created for each model output

● Old estimator equation:

○ Two outputs

○ One low-fidelity model


[Equation annotations: ACV estimator; high-fidelity estimator; control variate weights; first model output; second model output; unused correlation]

Multi-Output ACV (MOACV)


[Equation annotations: ACV estimator; high-fidelity estimator; control variate weights; first model output; second model output; new weights]
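The slide equations are images and did not survive extraction. The following is a plausible reconstruction for two outputs $a$, $b$ and one low-fidelity model, not the authors' exact expression. The notation is assumed: $\hat{Q}_{0,\cdot}$ are high-fidelity estimators and $\Delta_{1,\cdot} = \hat{Q}_{1,\cdot}(z) - \hat{\mu}_{1,\cdot}(z')$ are the low-fidelity control variate differences. With separate estimators per output, the weight matrix is diagonal and the cross-output correlations go unused:

```latex
\begin{bmatrix} \tilde{Q}_a \\ \tilde{Q}_b \end{bmatrix}
=
\begin{bmatrix} \hat{Q}_{0,a} \\ \hat{Q}_{0,b} \end{bmatrix}
+
\begin{bmatrix} \alpha_{aa} & 0 \\ 0 & \alpha_{bb} \end{bmatrix}
\begin{bmatrix} \Delta_{1,a} \\ \Delta_{1,b} \end{bmatrix}
```

A single multi-output estimator would instead allow a full weight matrix, so each output can also use the other output's low-fidelity difference as a control variate:

```latex
\begin{bmatrix} \tilde{Q}_a \\ \tilde{Q}_b \end{bmatrix}
=
\begin{bmatrix} \hat{Q}_{0,a} \\ \hat{Q}_{0,b} \end{bmatrix}
+
\begin{bmatrix} \alpha_{aa} & \alpha_{ab} \\ \alpha_{ba} & \alpha_{bb} \end{bmatrix}
\begin{bmatrix} \Delta_{1,a} \\ \Delta_{1,b} \end{bmatrix}
```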

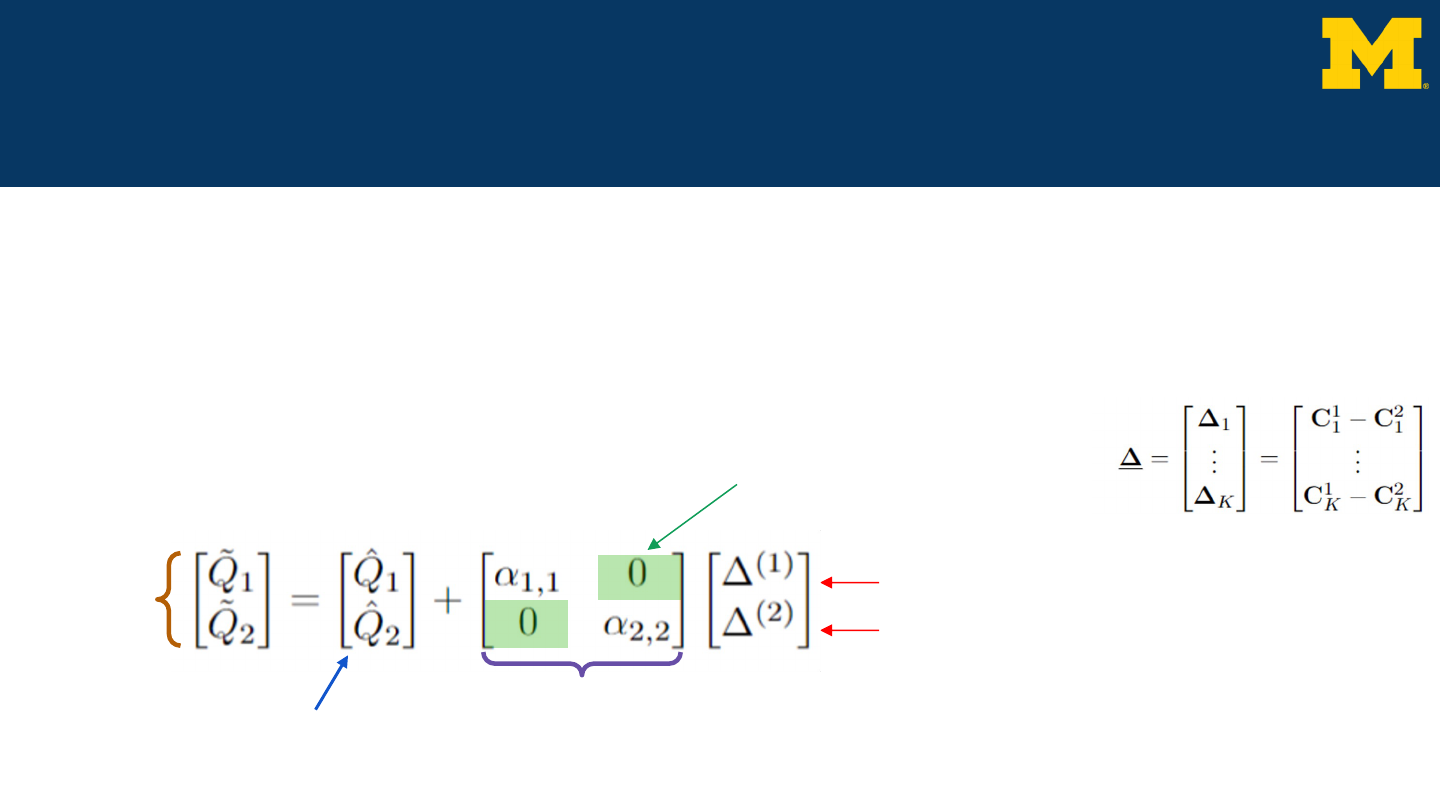

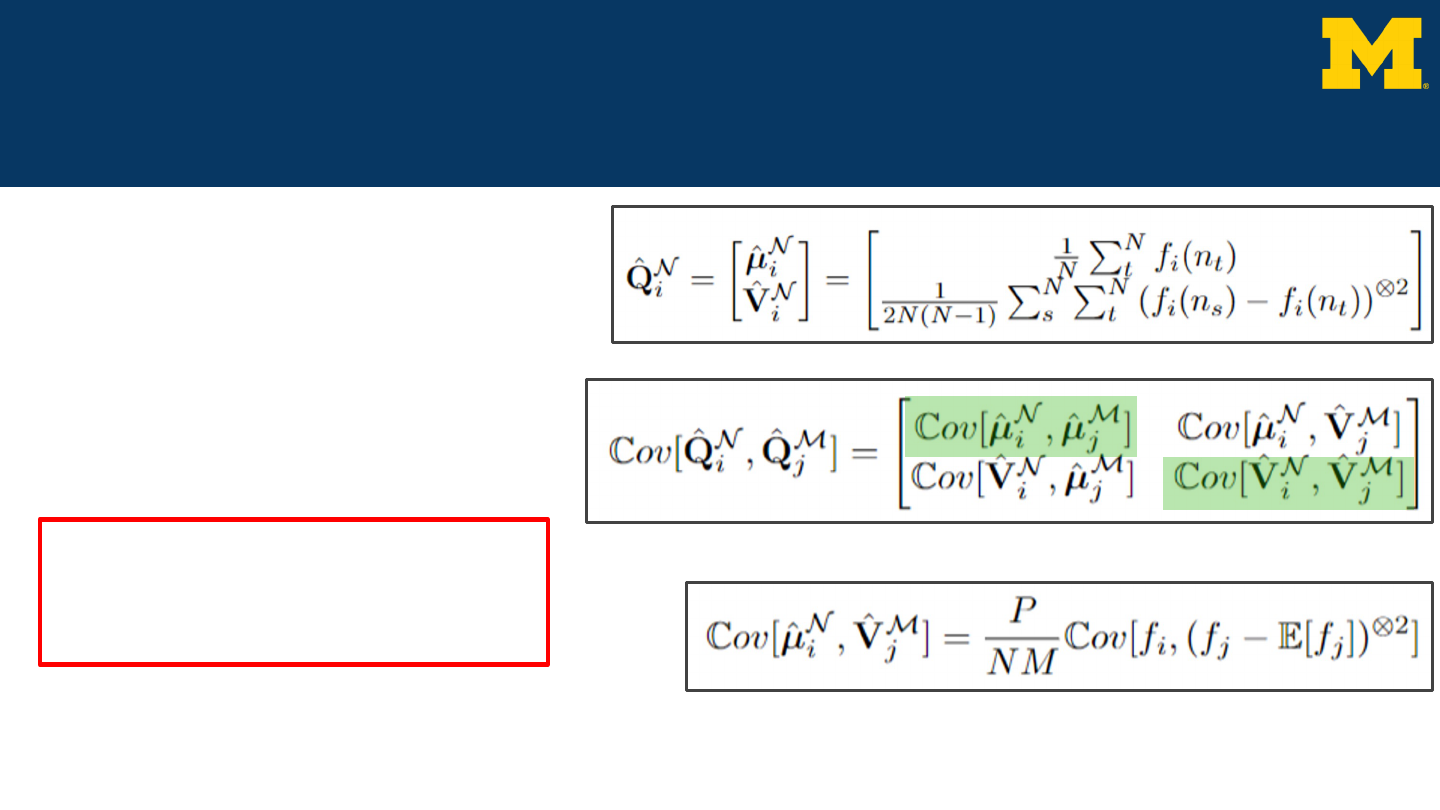

Combining Estimators - Multiple Statistics

● Simultaneous statistic estimation

○ Further reduced variance

● Correlations between estimators can also be extracted

● Equation

○ Any number of outputs

■ Mean

■ Variance

○ One low-fidelity model

○ Boxed in green are the new control variate weights


[Equation annotations: ACV mean estimator; ACV variance estimator]

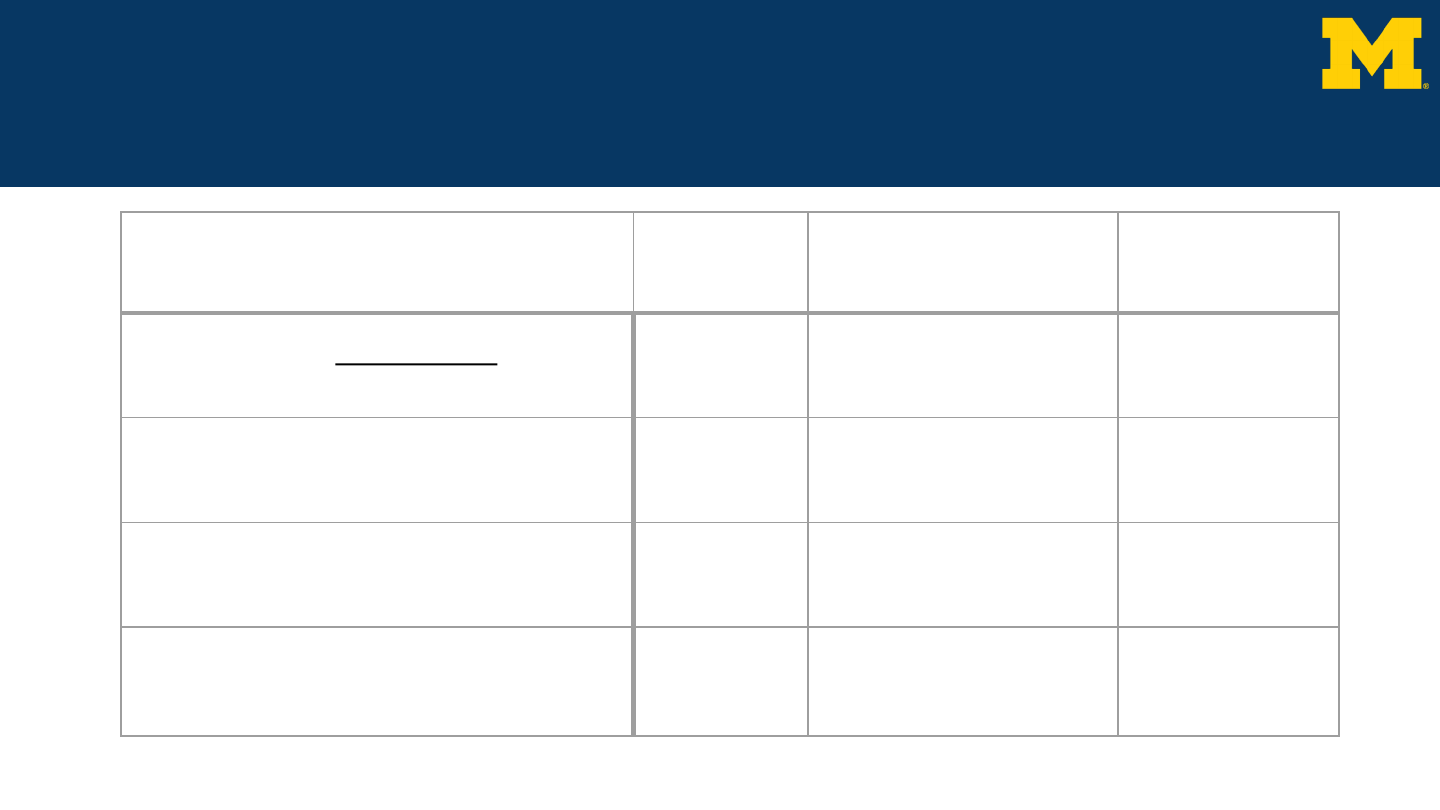

Types of Estimators

| Estimator | Statistics | Quantities of Interest | Fidelities |
|---|---|---|---|
| MC (Monte Carlo) | Single | Single | Single |
| ACV | Single | Single | Multiple |
| MOACV | Single | Multiple | Multiple |
| Combined MOACV | Multiple | Multiple | Multiple |

Theoretical Results

Mean Estimator Covariances

● Mean estimation

● Covariance between mean estimators

● Required covariances
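As a sanity check on the kind of covariance required here, the sketch below compares a standard identity for sample means over overlapping sample sets, $\operatorname{Cov}[\hat{Q}_i(z_a), \hat{Q}_j(z_b)] = \frac{|z_a \cap z_b|}{|z_a|\,|z_b|}\operatorname{Cov}[Q_i, Q_j]$, against simulation. The toy covariance matrix and sample sizes are assumptions, and this is not necessarily the form shown on the slide:

```python
import numpy as np

rng = np.random.default_rng(3)

# Assumed covariance between two toy outputs Q_i and Q_j.
true_cov = np.array([[1.0, 0.8],
                     [0.8, 2.0]])
chol = np.linalg.cholesky(true_cov)

n_a, n_b, n_shared = 30, 50, 10   # |z_a|, |z_b|, |z_a ∩ z_b|
n_total = n_a + n_b - n_shared
n_trials = 20_000


def mean_estimator_covariance(cov_ij, n_a, n_b, n_shared):
    """Covariance between two sample means that share n_shared samples."""
    return n_shared / (n_a * n_b) * cov_ij


# Each trial draws n_total joint samples of (Q_i, Q_j).
q = rng.standard_normal((n_trials, n_total, 2)) @ chol.T
means_a = q[:, :n_a, 0].mean(axis=1)             # Q_i averaged over z_a
means_b = q[:, n_a - n_shared:, 1].mean(axis=1)  # Q_j averaged over z_b

empirical = np.cov(means_a, means_b)[0, 1]
predicted = mean_estimator_covariance(true_cov[0, 1], n_a, n_b, n_shared)
print(f"empirical: {empirical:.5f}, predicted: {predicted:.5f}")
```

Only the shared samples contribute to the covariance, which is why the sample allocation enters the estimator covariance directly.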


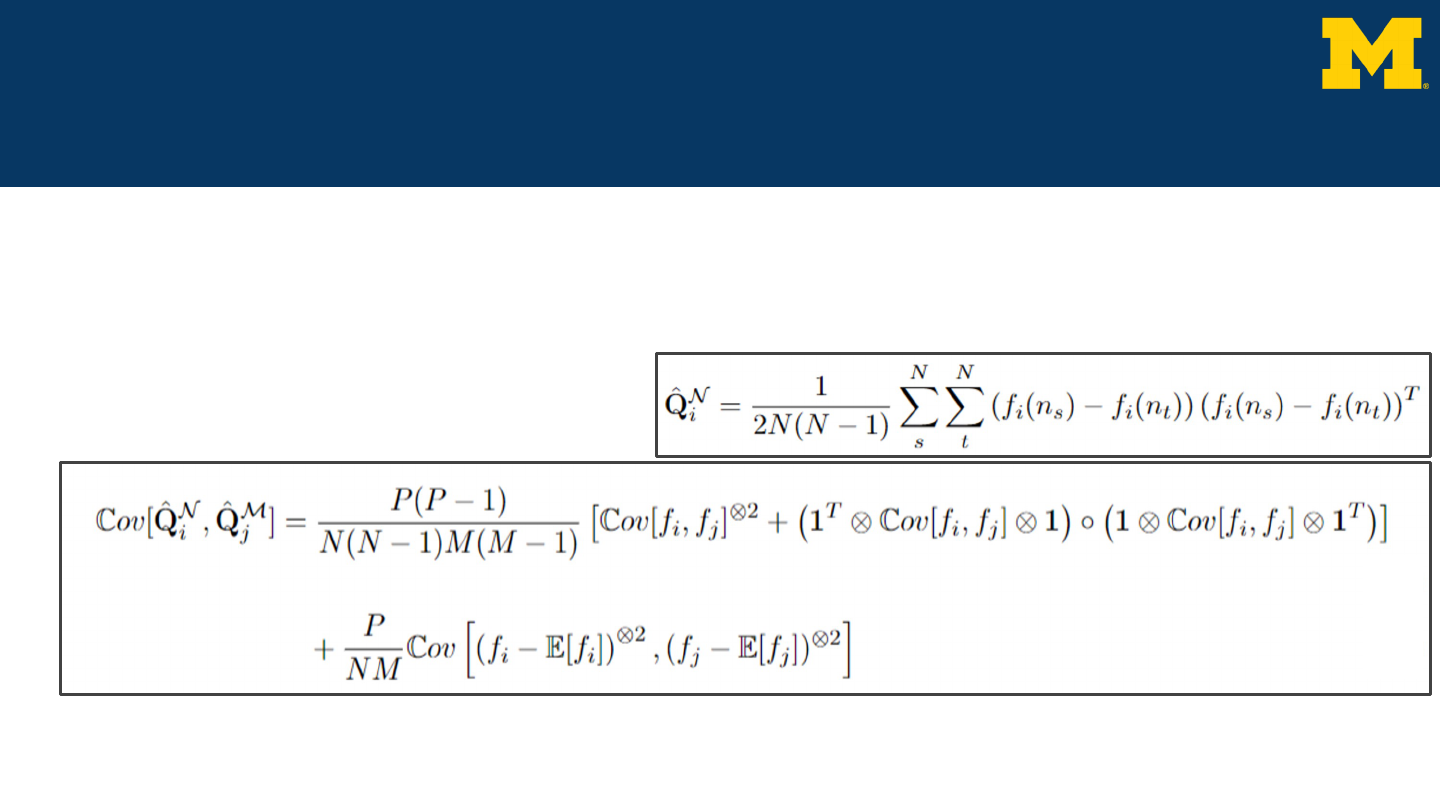

Theoretical Results

Variance Estimator Covariances

● Variance estimation

● Covariance between variance estimators
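For orientation, the simplest special case is a single output on a single set of $N$ independent samples, where the variance of the unbiased sample variance is a standard result. The notation is assumed ($\sigma^2$ is the variance and $\mu_4$ the fourth central moment of $Q$); the general multi-output, multi-fidelity expressions from the slide are not reproduced here:

```latex
\hat{V}_N = \frac{1}{N-1}\sum_{i=1}^{N}\big(Q^{(i)} - \hat{Q}_N\big)^2,
\qquad
\operatorname{Var}\big[\hat{V}_N\big]
= \frac{1}{N}\left(\mu_4 - \frac{N-3}{N-1}\,\sigma^4\right)
```

Even in this case, fourth-order moments of the output appear, so covariances between variance estimators need higher-order statistics than covariances between mean estimators.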


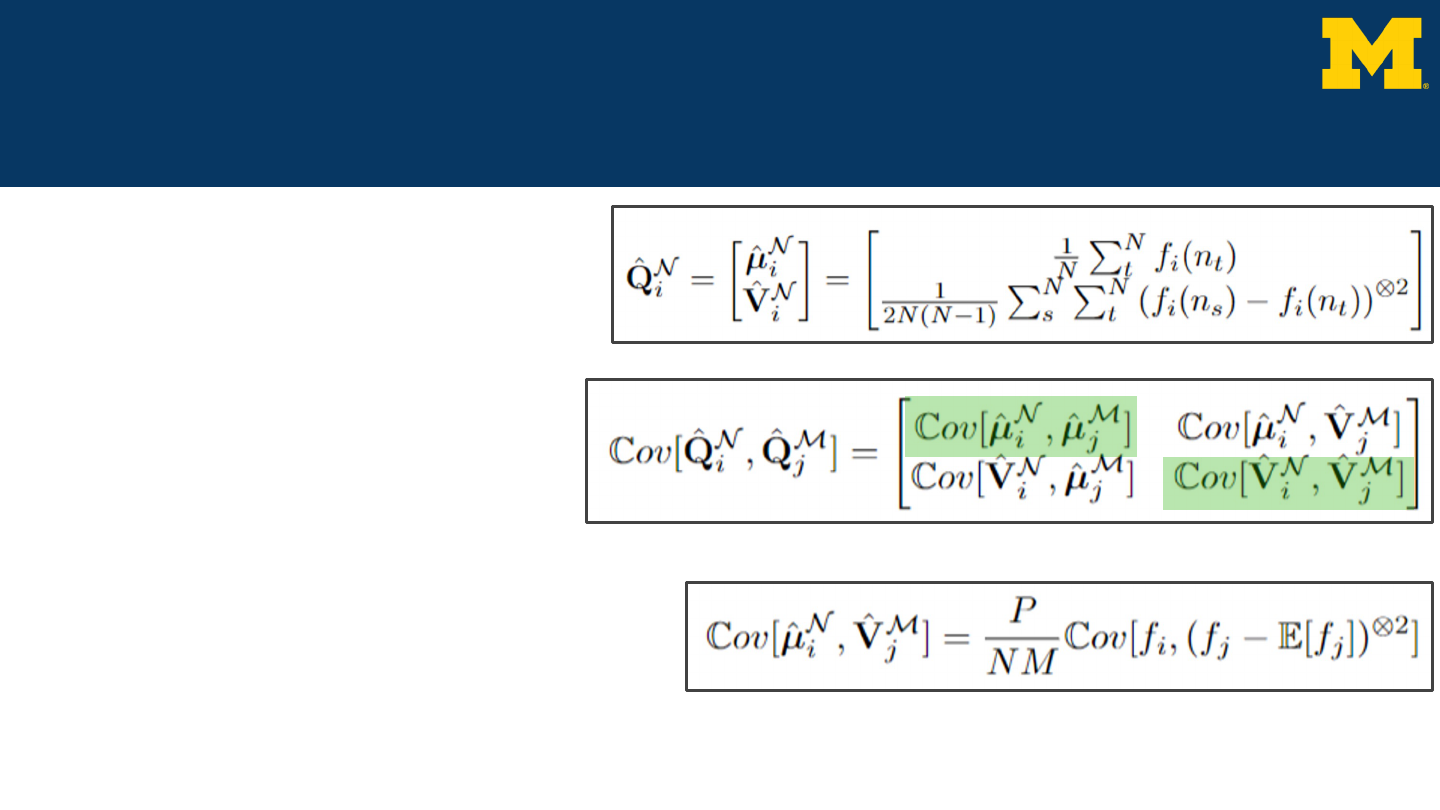

Theoretical Results

Combined Estimator Covariances

● Mean and variance estimation

● Covariance between estimators

○ Using previous results


Main takeaway: closed-form expressions for the covariance between estimators

EDL Application

● “Multi-Model Monte Carlo Estimators for Trajectory Simulation” by Warner, et al. (2021)

● 75 uncertain inputs

● 15 Quantities of Interest (QoIs)

● One high-fidelity model

○ Trajectory simulation

● Three low-fidelity models

○ Reduced physics model

○ Coarse time step model

○ Machine learning model

● Mean and variance estimation

○ Optimized sample allocation

○ Minimized weighted sum of variances


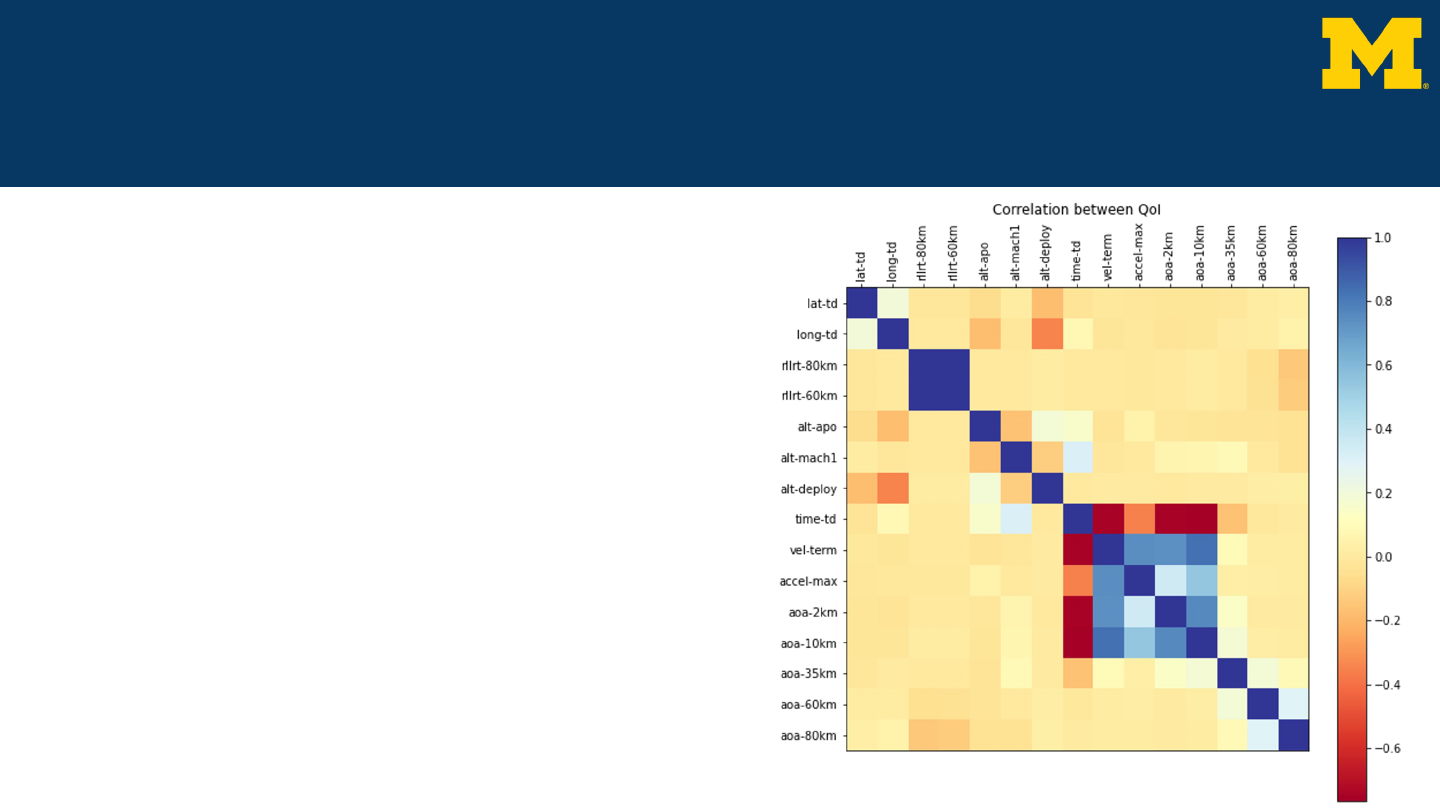

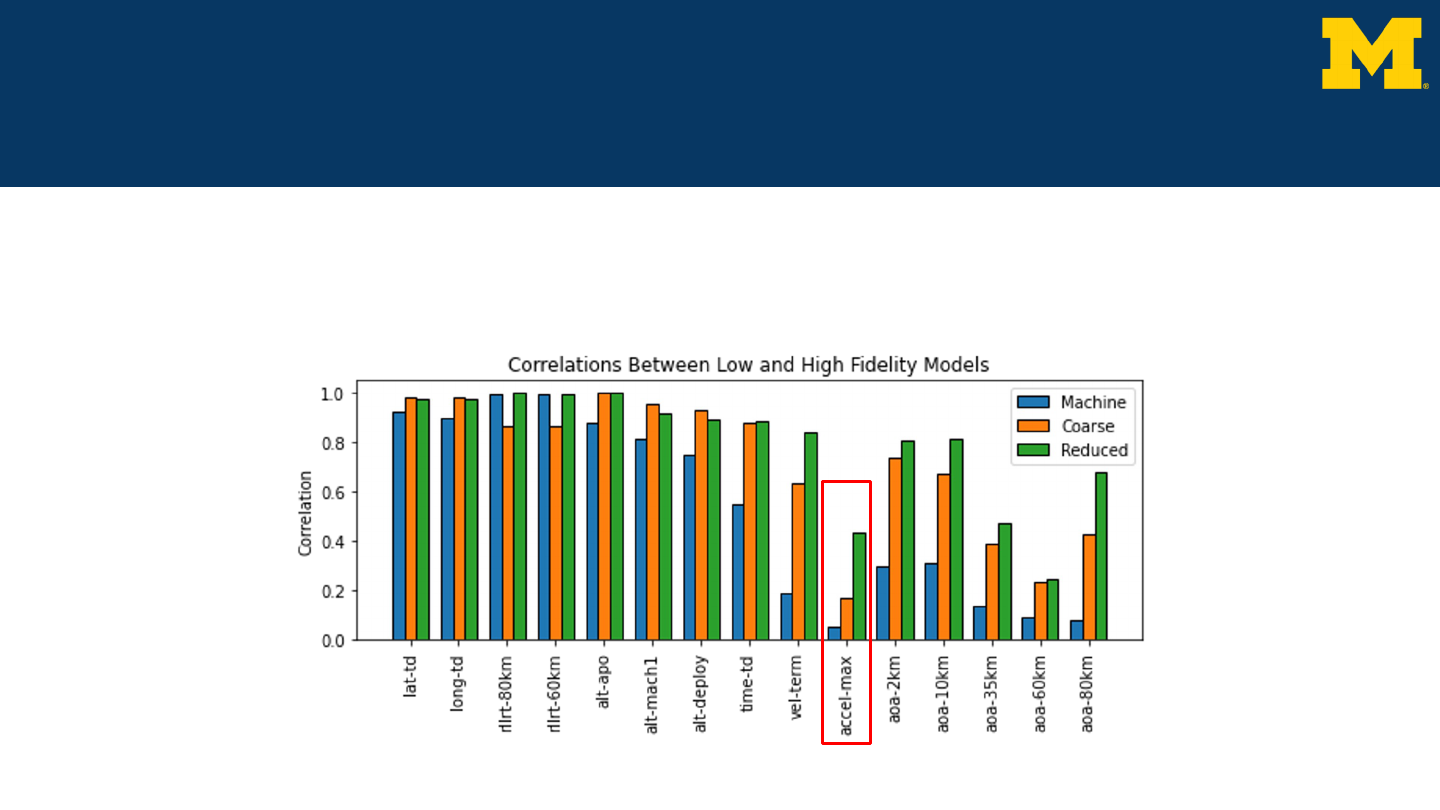

Correlation Between Models

● Correlations between the high-fidelity model and each low-fidelity model

○ Each QoI has a separate correlation between models

● Maximum acceleration has low correlations between models
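A minimal sketch of how per-QoI correlations between two models could be computed from paired evaluations on the same input samples. The data below are synthetic, with three hypothetical QoIs and assumed noise levels; they are not the EDL results:

```python
import numpy as np


def per_qoi_correlation(hf_outputs, lf_outputs):
    """Pearson correlation of each QoI (column) between two models.

    Both arrays have shape (n_samples, n_qois) and must come from the
    same input samples, row by row.
    """
    hf_centered = hf_outputs - hf_outputs.mean(axis=0)
    lf_centered = lf_outputs - lf_outputs.mean(axis=0)
    covariance = (hf_centered * lf_centered).sum(axis=0)
    scale = np.sqrt((hf_centered**2).sum(axis=0) * (lf_centered**2).sum(axis=0))
    return covariance / scale


rng = np.random.default_rng(4)
n_samples = 5000

# Synthetic "high-fidelity" outputs for three hypothetical QoIs.
hf_outputs = rng.standard_normal((n_samples, 3))
# Synthetic "low-fidelity" outputs: the same signal plus increasing model error.
noise_levels = np.array([0.1, 1.0, 3.0])
lf_outputs = hf_outputs + noise_levels * rng.standard_normal((n_samples, 3))

correlations = per_qoi_correlation(hf_outputs, lf_outputs)
print("per-QoI correlations:", np.round(correlations, 3))
```

A QoI with low correlation offers little variance reduction when only its own low-fidelity output is used as a control variate.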


Types of Estimators

| Estimator | Statistics | QoIs | Fidelities |
|---|---|---|---|
| MC | Single | Single | Single |
| ACV | Single | Single | Multiple |
| MOACV | Single | Multiple | Multiple |
| Combined MOACV | Multiple | Multiple | Multiple |

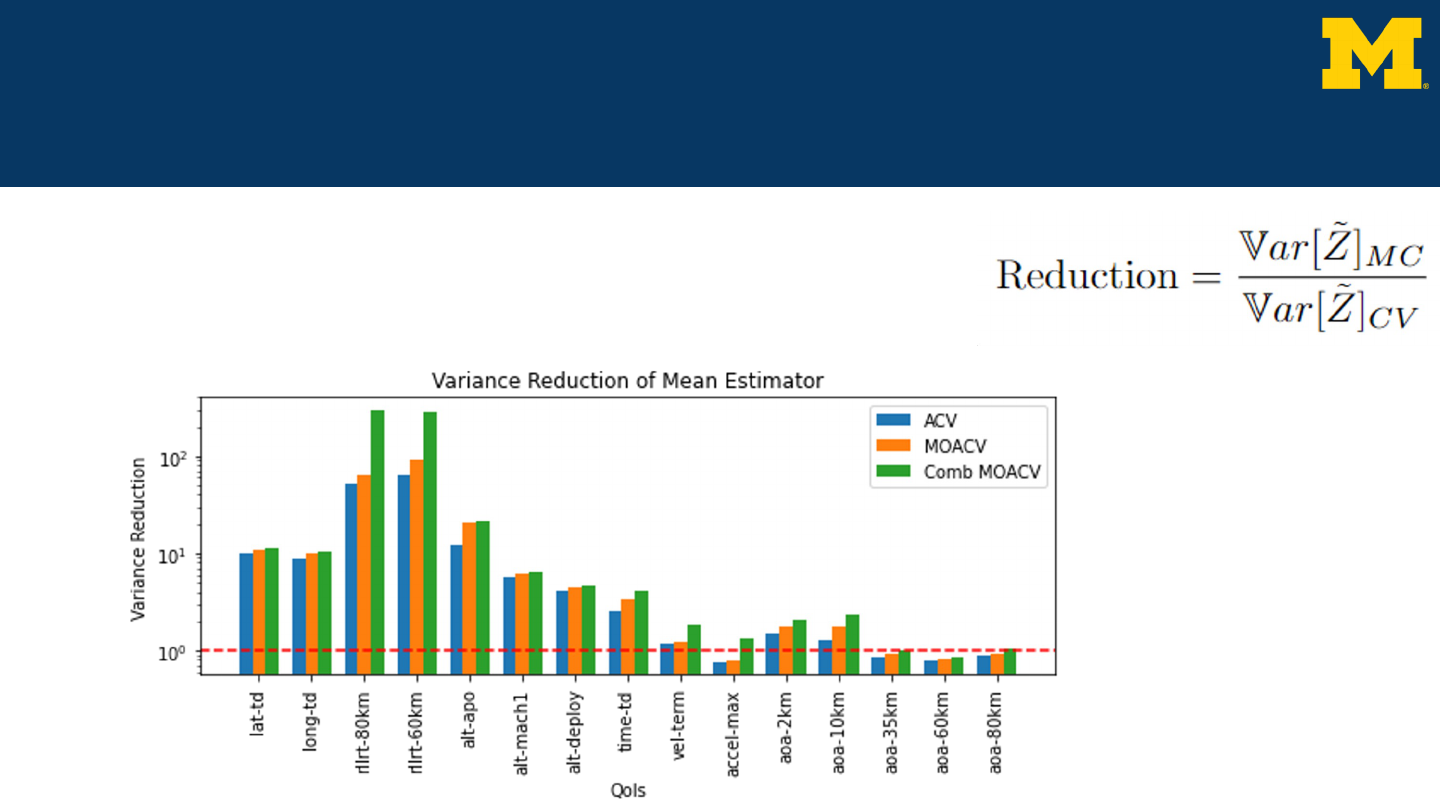

Results - Mean Estimation

● Variance reduction for each QoI

● Significant reduction for QoIs with high correlations

● Red line marks the variance achieved by MC


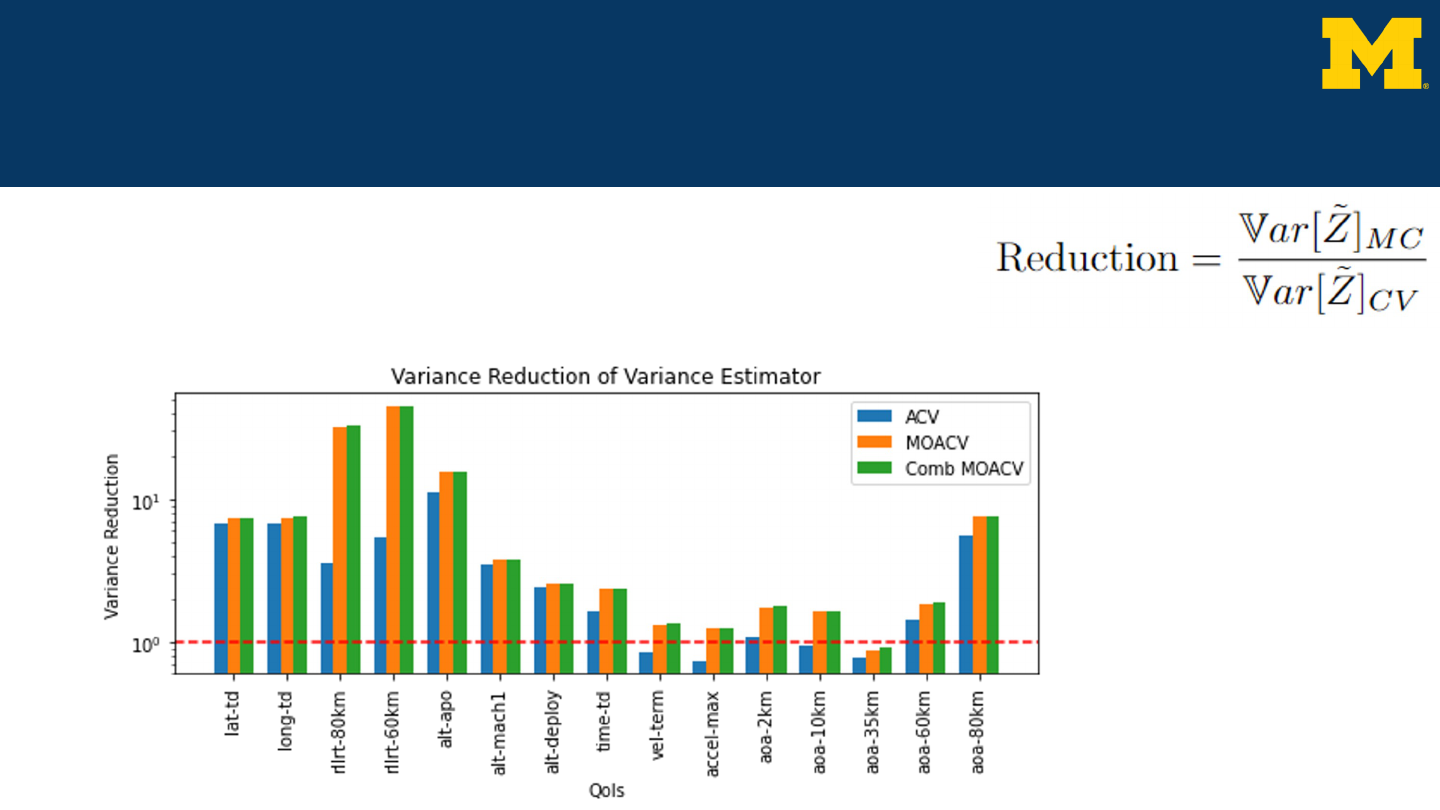

Results - Variance Estimation

● Significant variance reduction achieved compared to traditional approaches


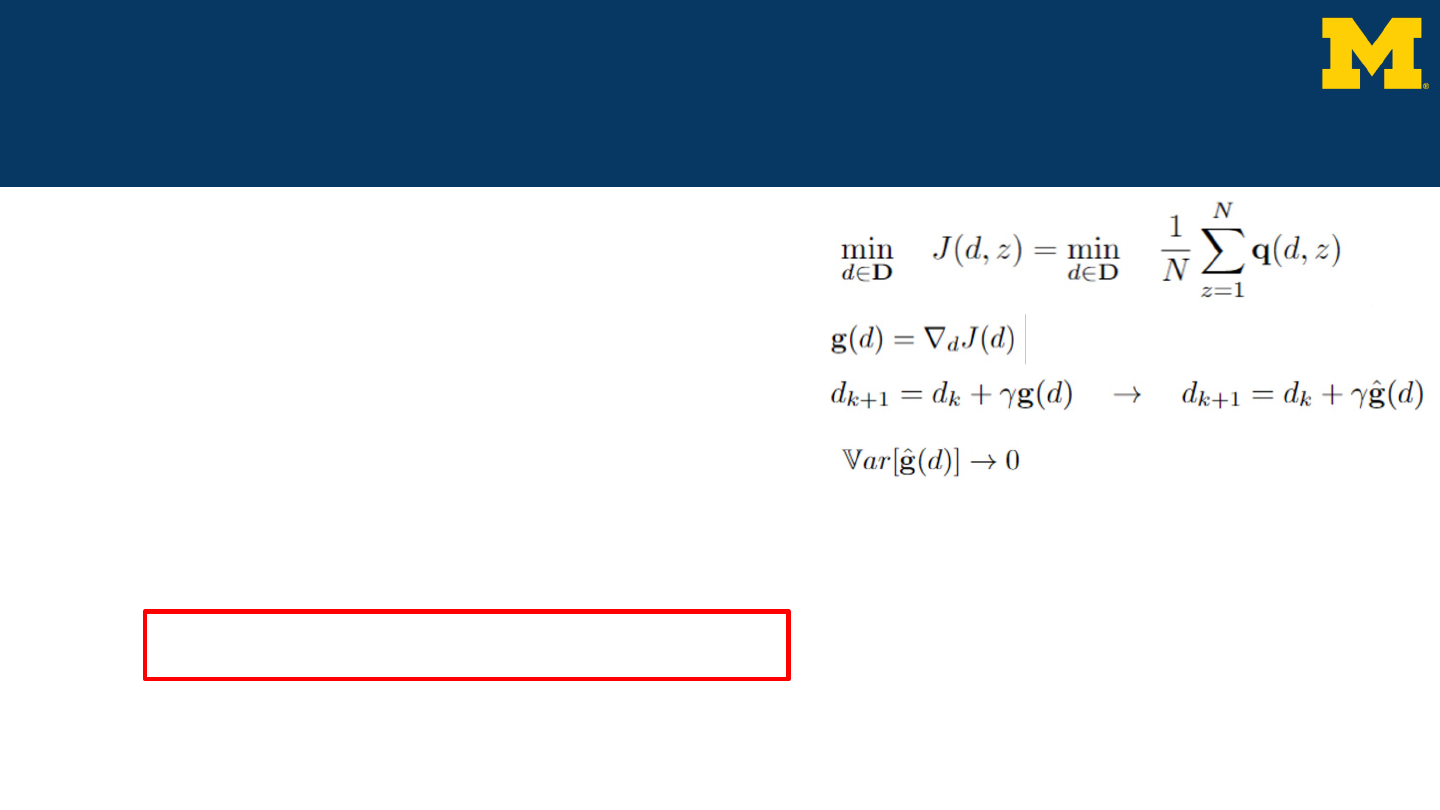

Future Work

Stochastic Optimization

● Goal: Minimize finite-sum objective function

● Stochastic gradient descent

● Approximate the gradient of the objective

● Variance reduction methods

○ Variance of estimate approaches 0

○ Examples:

■ Stochastic variance reduced gradient (SVRG) [1]

■ Stochastic average gradient (SAG) [2]

● Use MOACV to reduce variance further
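A minimal sketch of SVRG on a synthetic least-squares problem, to show how it acts as a control variate on the stochastic gradient: the snapshot's component gradient is the control, and its mean (the full snapshot gradient) is known. The problem data, step size, and loop lengths are illustrative assumptions, and the sketch does not include any MOACV extension:

```python
import numpy as np

rng = np.random.default_rng(5)
n, d = 200, 5

# Synthetic finite-sum problem: f(w) = (1/n) * sum_i 0.5 * (a_i . w - b_i)^2
A = rng.standard_normal((n, d))
w_true = rng.standard_normal(d)
b = A @ w_true + 0.01 * rng.standard_normal(n)


def component_gradient(w, i):
    """Gradient of the i-th term of the finite sum."""
    return (A[i] @ w - b[i]) * A[i]


def full_gradient(w):
    """Gradient of the full objective (average over all terms)."""
    return A.T @ (A @ w - b) / n


def objective(w):
    return 0.5 * np.mean((A @ w - b) ** 2)


def svrg(w0, step_size, n_epochs, inner_steps):
    w = w0.copy()
    for _ in range(n_epochs):
        snapshot = w.copy()
        snapshot_grad = full_gradient(snapshot)  # known mean of the control
        for _ in range(inner_steps):
            i = rng.integers(n)
            # Stochastic gradient corrected by a control variate at the snapshot.
            g = (component_gradient(w, i)
                 - component_gradient(snapshot, i)
                 + snapshot_grad)
            w -= step_size * g
    return w


w_final = svrg(np.zeros(d), step_size=0.005, n_epochs=30, inner_steps=4 * n)
print("initial objective:", objective(np.zeros(d)))
print("final objective:  ", objective(w_final))
```

As the iterate and the snapshot both approach the minimizer, the correction term cancels the sampling noise, which is the sense in which the variance of the gradient estimate approaches 0.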


[1] Johnson, et al. (2013). “Accelerating stochastic gradient descent using predictive variance reduction”

[2] Le Roux, et al. (2012). “A stochastic gradient method with an exponential convergence rate for finite training sets”

Conclusion

● Derived closed-form expressions for estimator covariance

● New multi-output estimator outperforms previous methods

● Questions?


Supplementary Material