Challenges in AI Infrastructure for Enterprise Foundation Models

Jeffrey L. Burns, Ph.D.

Director, AI Compute and IBM Research AI Hardware Center

IBM Research

August 9, 2023

IBM Research / © 2023 IBM Corporation

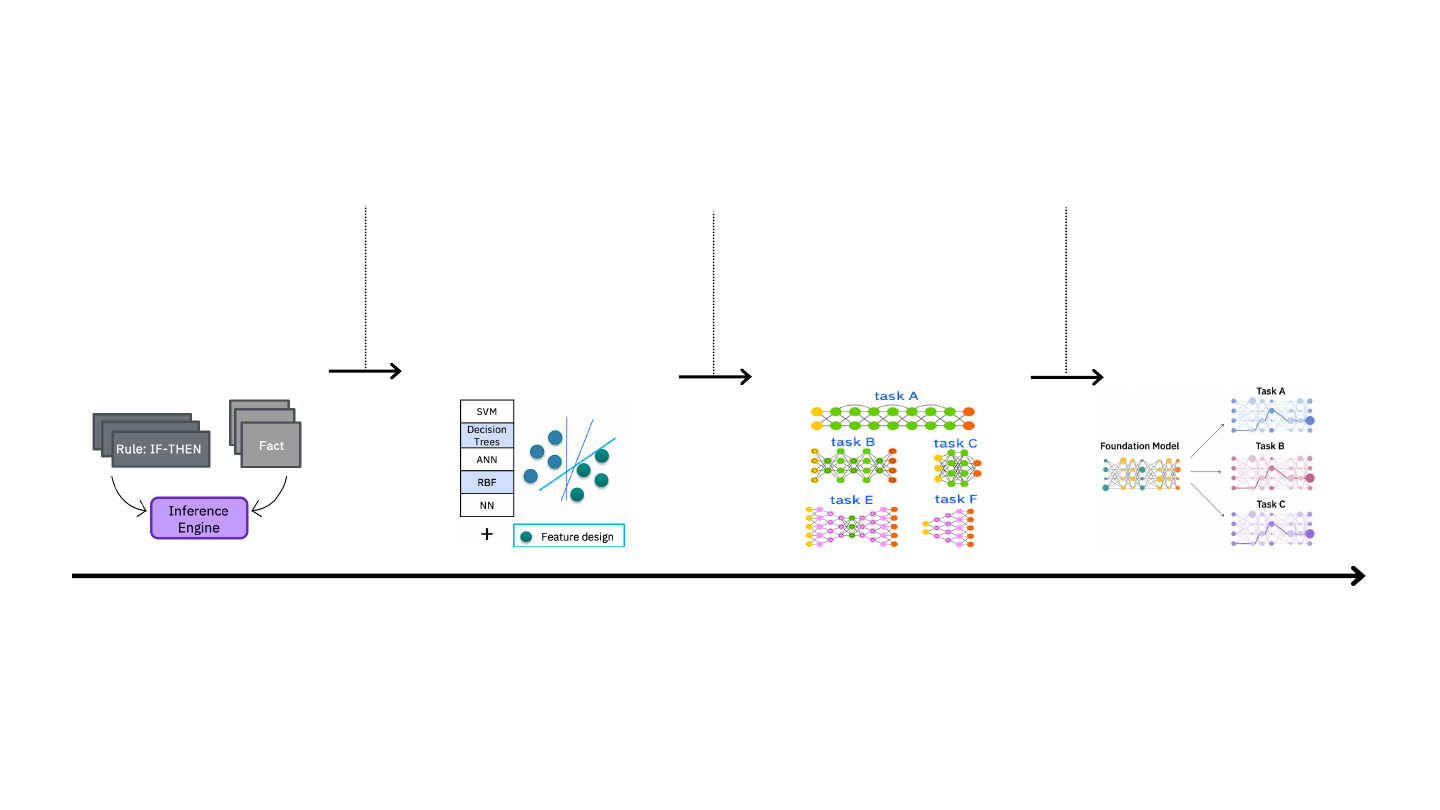

Foundation Models: An inflection point in generalizable and adaptable representations


• Expert Systems (1980s): hand-crafted symbolic representations
• Machine Learning (1980s to 2012): task-specific hand-crafted feature representations
• Deep Learning: task-specific learnt feature representations
  – Enabled by big data (massive labeled data) + compute
• Foundation Models (2018+): generalizable & adaptable learnt representations
  – Enabled by self-supervision at scale + massive unlabeled data + compute

IBM Research AI Hardware Center / © 2023 IBM Corporation

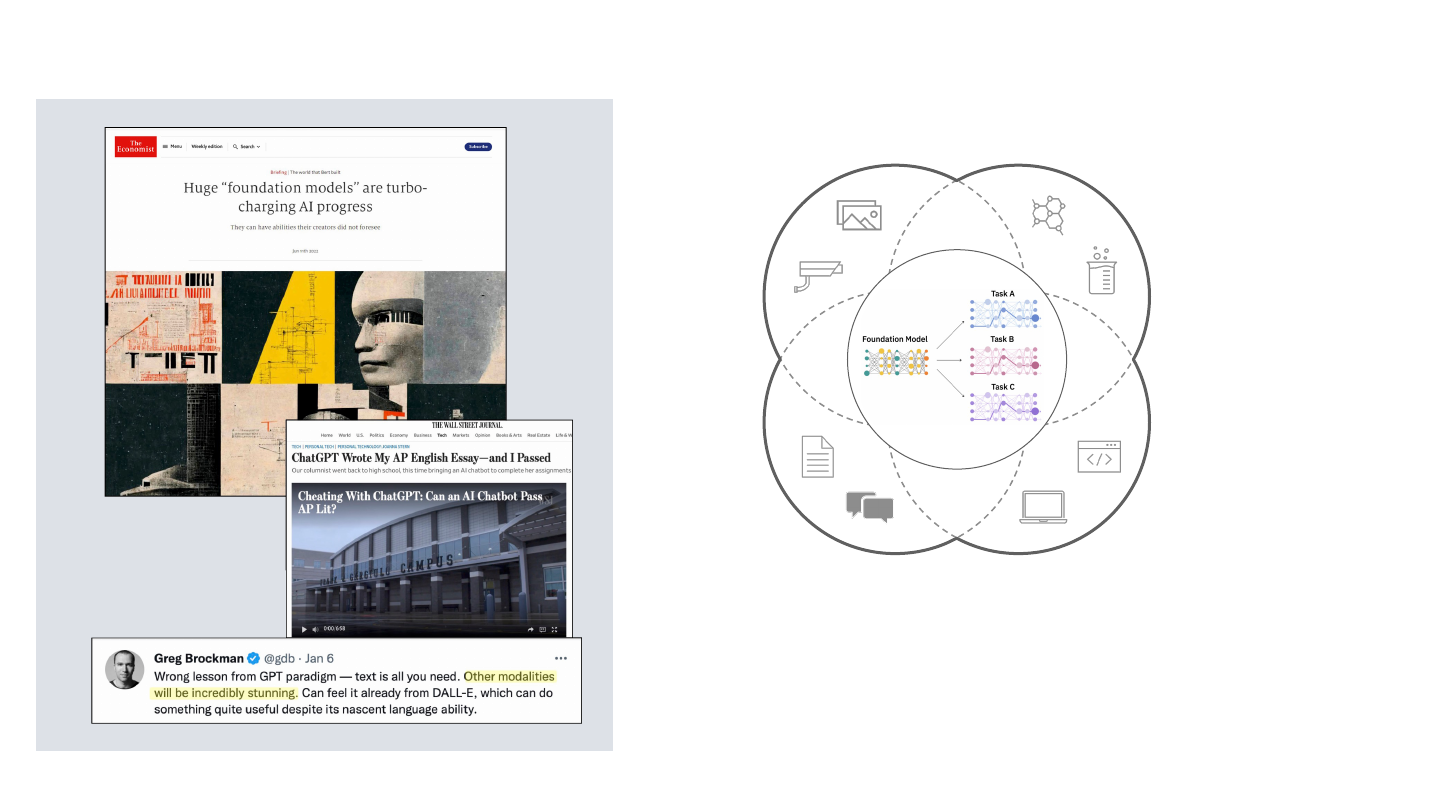

Incredible opportunities around enterprise applications

In each of these domains there is ample unlabeled data available in enterprises, which can be used to train custom foundation models, potentially opening the doors for solving business problems that were previously considered intractable.


• Chemistry & Materials
• Sensor Data
• Natural Language
• Programming Languages (Code)
• Structured Business Data
• Geospatial Data
• Speech
• IT Data


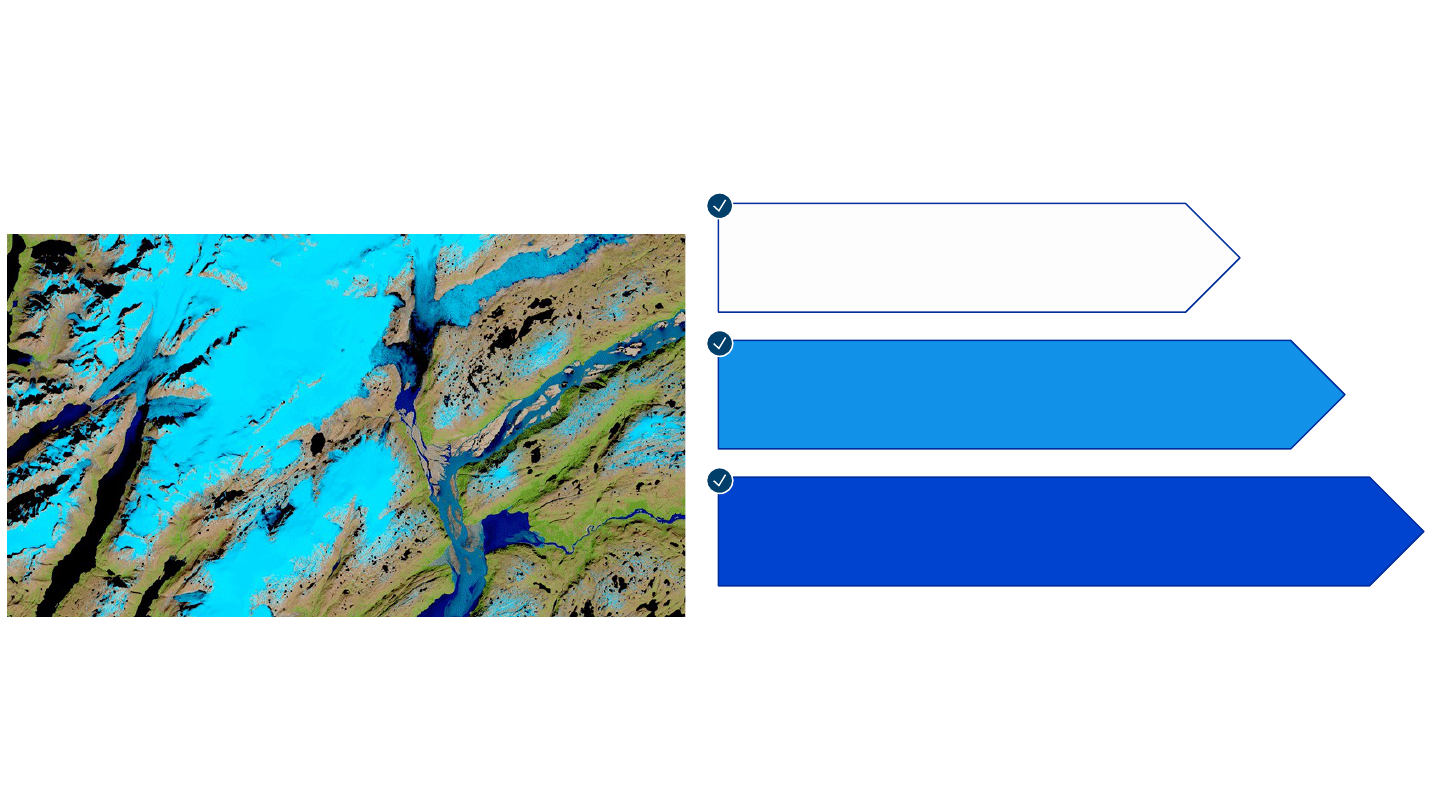

Geospatial Foundation Models


• Pre-trained on sufficient datasets in partnership with content-rich institutions (e.g., NASA)
• Leverage self-supervised learning (i.e., masking imagery or time series)
• Able to effectively complete multiple downstream tasks while meeting accuracy baselines (e.g., flood mapping, land cover classification, outage prediction)

Note: while the transformer architecture is the most prevalent in foundation models, the definition is not restricted by model architecture.
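The masking approach to self-supervised learning mentioned above can be illustrated with a minimal sketch. It uses a toy time series and hypothetical names (`mask_series`, `mask_ratio`, `MASK`, `reconstruction_error`). It is not the IBM/NASA training code, only the general pattern of hiding a fraction of the input and keeping the hidden values as reconstruction targets, so no labels are needed.

```python
import random

MASK = None  # hypothetical placeholder marking a hidden time step


def mask_series(series, mask_ratio=0.5, seed=0):
    """Hide a random fraction of time steps.

    Returns (masked_series, targets), where targets maps each hidden
    index to the original value a model would be trained to reconstruct.
    """
    rng = random.Random(seed)
    n_masked = int(len(series) * mask_ratio)
    hidden = sorted(rng.sample(range(len(series)), n_masked))
    masked = list(series)
    targets = {}
    for i in hidden:
        targets[i] = series[i]
        masked[i] = MASK
    return masked, targets


def reconstruction_error(predictions, targets):
    """Mean squared error computed only over the hidden positions."""
    return sum((predictions[i] - v) ** 2 for i, v in targets.items()) / len(targets)


series = [0.1, 0.4, 0.35, 0.8, 0.75, 0.9, 0.2, 0.05]
masked, targets = mask_series(series, mask_ratio=0.5)
```

A model would receive `masked` as input and be scored with `reconstruction_error` against `targets`; the same idea extends to image patches in place of time steps.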

IBM and NASA have teamed up to apply foundation model AI technology to leverage earth science data for geospatial intelligence. This work with NASA is part of an effort across IBM Research to pioneer applications of foundation models beyond language.


https://www.earthdata.nasa.gov/news/impact-ibm-hls-foundation-model

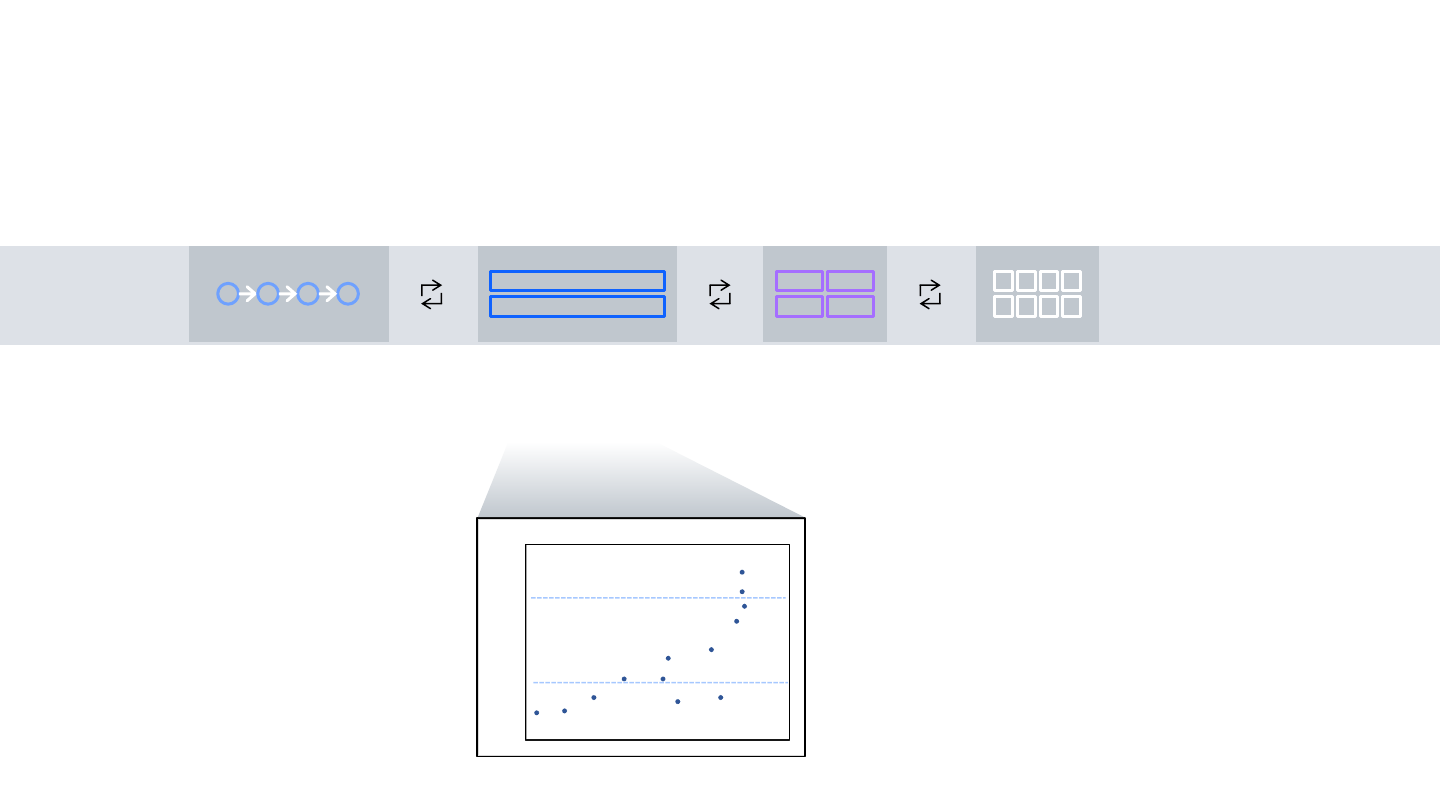

Optimizing the infrastructure for Foundation Models

Across the whole AI workflow

• Data preparation: e.g., remove hate and profanity, deduplicate, etc.
• Distributed training and model validation: long-running job on massive infrastructure
• Model adaptation: model tuning with custom data set for downstream tasks
• Inference: may have sensitivity to latency/throughput, always cost-sensitive

[Figure: Model Size (# of Params) on a log scale from 1.00E+07 to 1.00E+14, plotted by year (2018, 2019, 2020), with reference lines at 1 billion and 1 trillion. Models shown: ELMo, BERT Large, GPT-1, GPT-2, RoBERTa Large, Megatron, ALBERT xxl, Microsoft T-NLG, ELECTRA Large, GPT-3, GShard, Baidu RecSys-C, Baidu RecSys-E.]
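As one illustration of the data preparation stage, the deduplication step can be sketched as exact-match removal over normalized text. The function names `normalize` and `deduplicate` and the normalization rule (lowercase, collapse whitespace) are assumptions made for illustration and are not taken from the slides; real pipelines often add near-duplicate detection, which this sketch does not attempt.

```python
import hashlib


def normalize(text):
    """Lowercase and collapse whitespace so trivial variants hash alike."""
    return " ".join(text.lower().split())


def deduplicate(documents):
    """Keep the first occurrence of each document, comparing by content hash."""
    seen = set()
    unique = []
    for doc in documents:
        digest = hashlib.sha256(normalize(doc).encode("utf-8")).hexdigest()
        if digest not in seen:
            seen.add(digest)
            unique.append(doc)
    return unique


docs = [
    "Foundation models scale.",
    "foundation   models scale.",  # differs only in case and spacing
    "Compute is costly.",
]
unique_docs = deduplicate(docs)
```

Storing only digests keeps memory proportional to the number of distinct documents rather than their total size, which matters at training-corpus scale.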


AWS, Azure, On-Prem

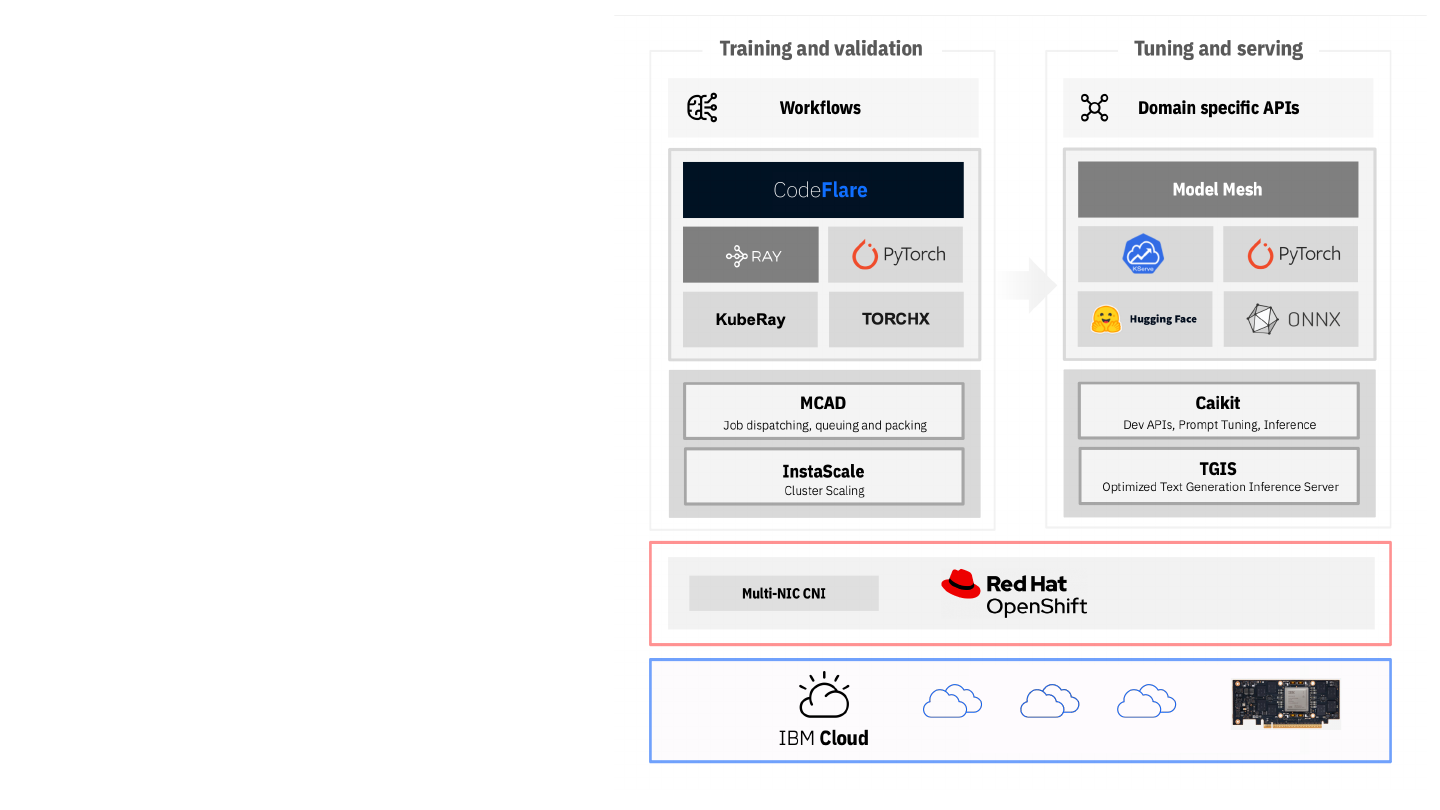

Building the FM technology stack

• World-class infrastructure for training, tuning and serving foundation models (on-prem and in the cloud)
• Platform that delivers portability and abstracts infrastructure complexity
• Middleware that simplifies end-to-end AI workflow and optimizes use of underlying infrastructure


AI-optimized infrastructure

Training: Vela
• Cloud-native design for large-scale distributed model training
• https://research.ibm.com/blog/AI-supercomputer-Vela-GPU-cluster

Inference: IBM AIU
• Designed for energy-efficient AI compute at reduced precision
• https://research.ibm.com/blog/ibm-artificial-intelligence-unit-aiu

N. Wang et al., NeurIPS 2022


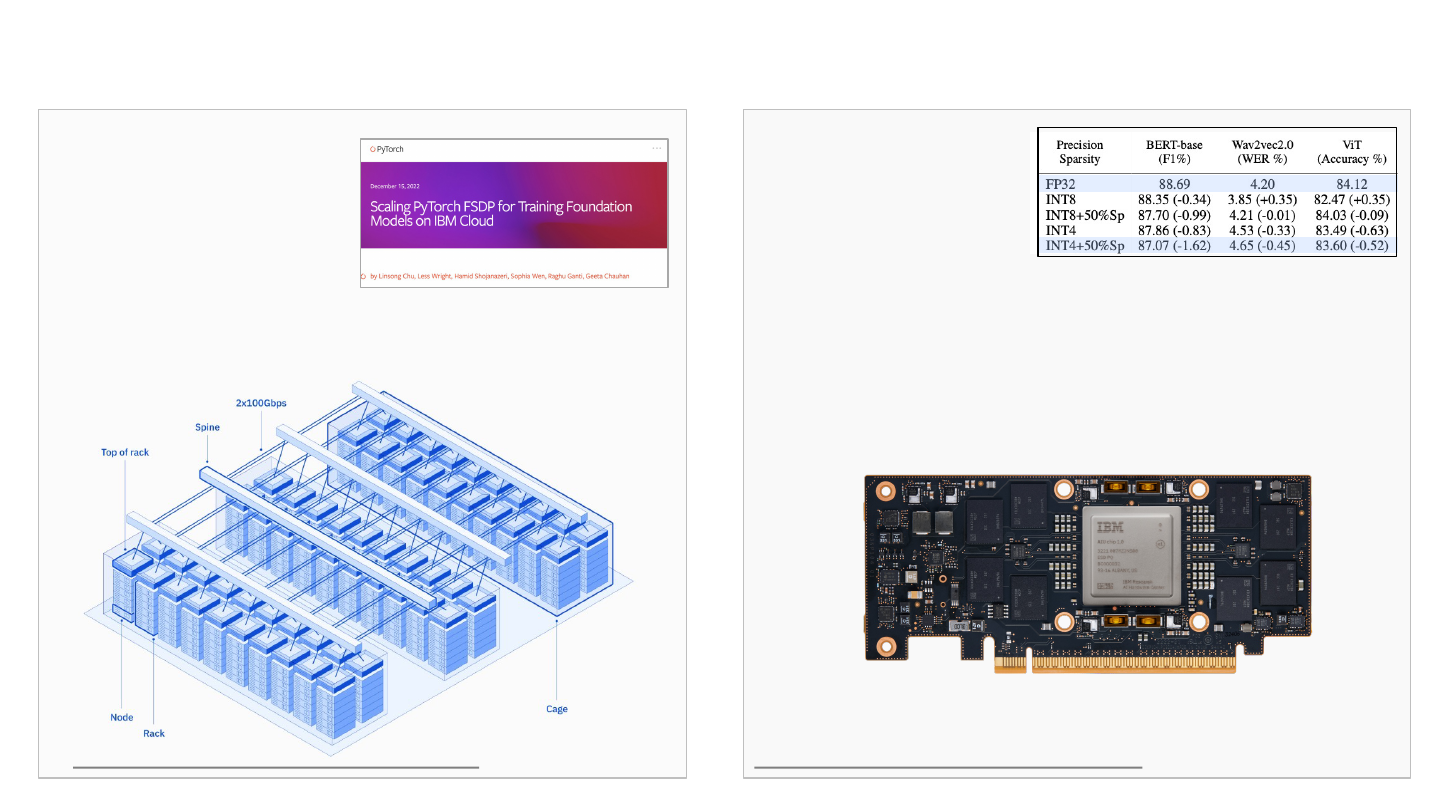

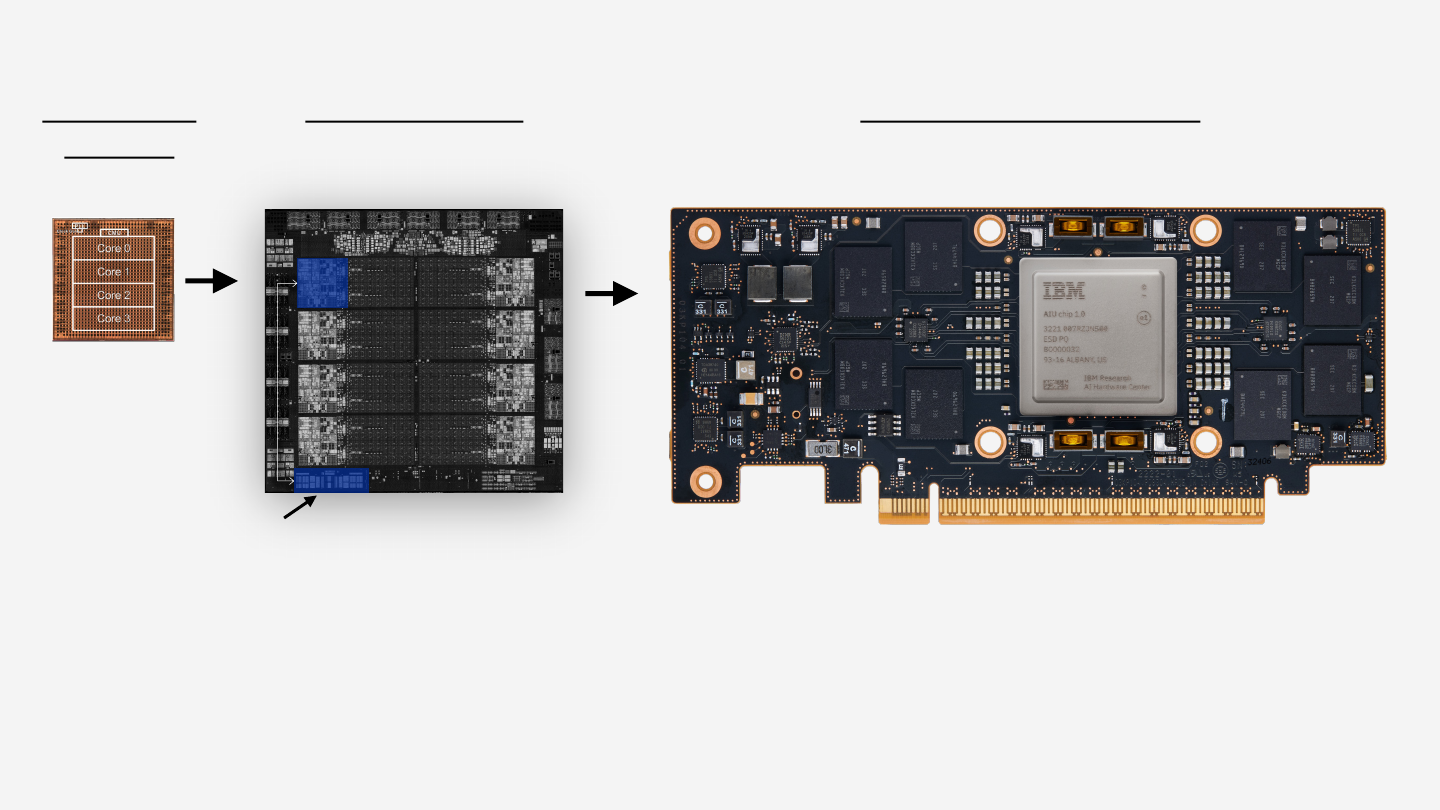

IBM Research AIU background

• 2019: Gen-3 AI Core prototype
• 2022 GA: IBM z16 Telum chip with 1 Gen-3 AI Core (AI accelerator core)
• 2022: AIU (Artificial Intelligence Unit) with 32 Gen-3 AI Cores

AIU overview:
• Complete AI accelerator, plugs into a standard PCIe slot
• 32 Gen-3 AI cores
• Optimized for AI inferencing, supports all operations for fine-tuning and training as well
• Designed to ease cloud integration, enabled in Red Hat stack
• Support for all common neural network types

zAIU overview:
• One Gen-3 AI core, integrated in the z16 processor chip
• Off-loads AI tasks from the 8 CPU cores
• Optimized for in-transaction AI inferencing
• Seamless integration into z software stack


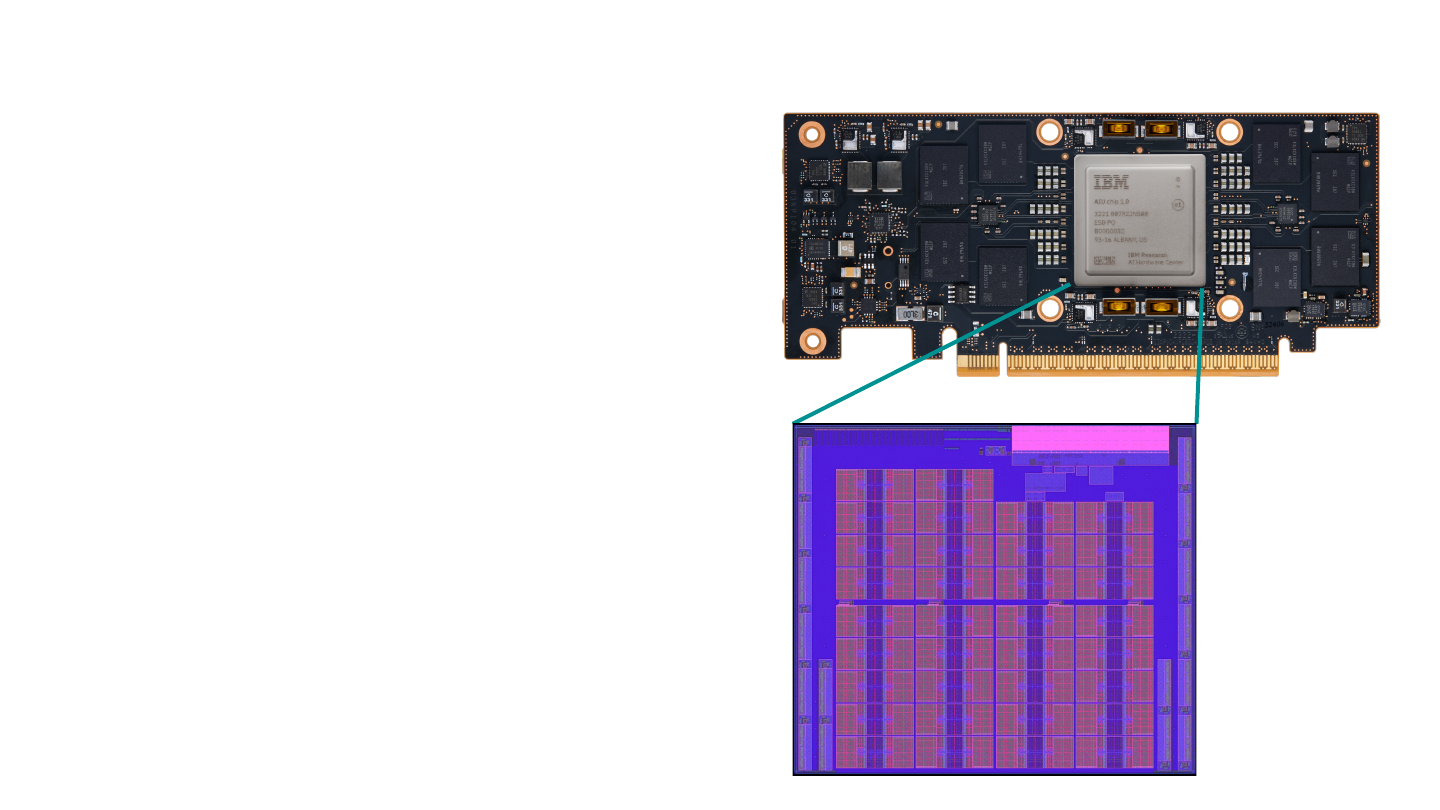

IBM Artificial Intelligence Unit (AIU)

SoC implements IBM’s leadership innovations in low-precision AI arithmetic and algorithms
– Chip architecture optimized for enterprise AI workloads, including foundation models
– Enabled in the Red Hat and Foundation Models software stacks
– Supports multi-precision inference (and some training): FP16, FP8, INT8, INT4, INT2
– Implemented in leading-edge 5 nm technology
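To give a feel for what the integer formats in the precision list trade away, the sketch below applies plain symmetric uniform quantization at 8, 4, and 2 bits and measures the round-trip error. This is a generic textbook scheme chosen for illustration, not IBM's quantization algorithm or the AIU's number formats; the function names and the sample `weights` are hypothetical, and the floating-point formats (FP16, FP8) are not covered.

```python
def quantize(values, bits):
    """Symmetric uniform quantization to a signed integer grid.

    Returns (codes, scale); codes lie in [-qmax, qmax] with
    qmax = 2**(bits - 1) - 1.
    """
    qmax = 2 ** (bits - 1) - 1  # 127 for INT8, 7 for INT4, 1 for INT2
    max_abs = max(abs(v) for v in values)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    codes = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return codes, scale


def dequantize(codes, scale):
    """Map integer codes back to approximate real values."""
    return [c * scale for c in codes]


def mean_abs_error(values, bits):
    """Average absolute round-trip error at the given bit width."""
    codes, scale = quantize(values, bits)
    restored = dequantize(codes, scale)
    return sum(abs(a - b) for a, b in zip(values, restored)) / len(values)


weights = [0.82, -0.41, 0.07, -0.95, 0.33, 0.6]  # illustrative values only
errors = {bits: mean_abs_error(weights, bits) for bits in (8, 4, 2)}
```

For these sample values the error grows as the bit width shrinks, which is the tension low-precision hardware and algorithms have to manage together.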


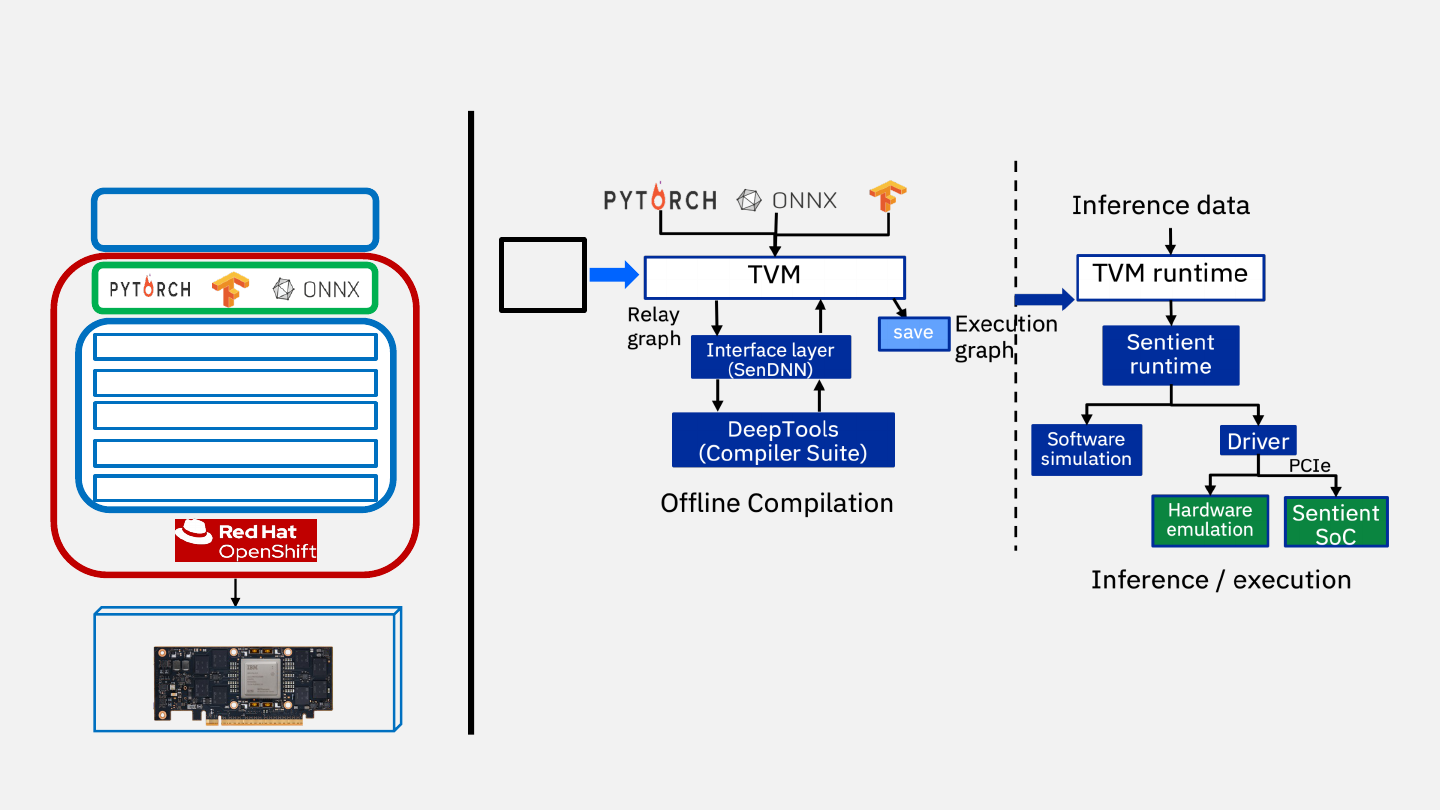

IBM AIU inference stack integrated with watsonx


Key challenge: develop the entire AIU software stack in parallel with developing the SoC and PCIe card

User’s view: watsonx services (only)

Internal software architecture components:
• AI Framework Integration
• Model Optimizer (SenQNN)
• AIU (DeepTools) Compiler
• AIU Runtime
• AIU Driver

Hardware: IBM AIU Card (PCIe)

TVM: Tensor Virtual Machine, open-source framework/runtime


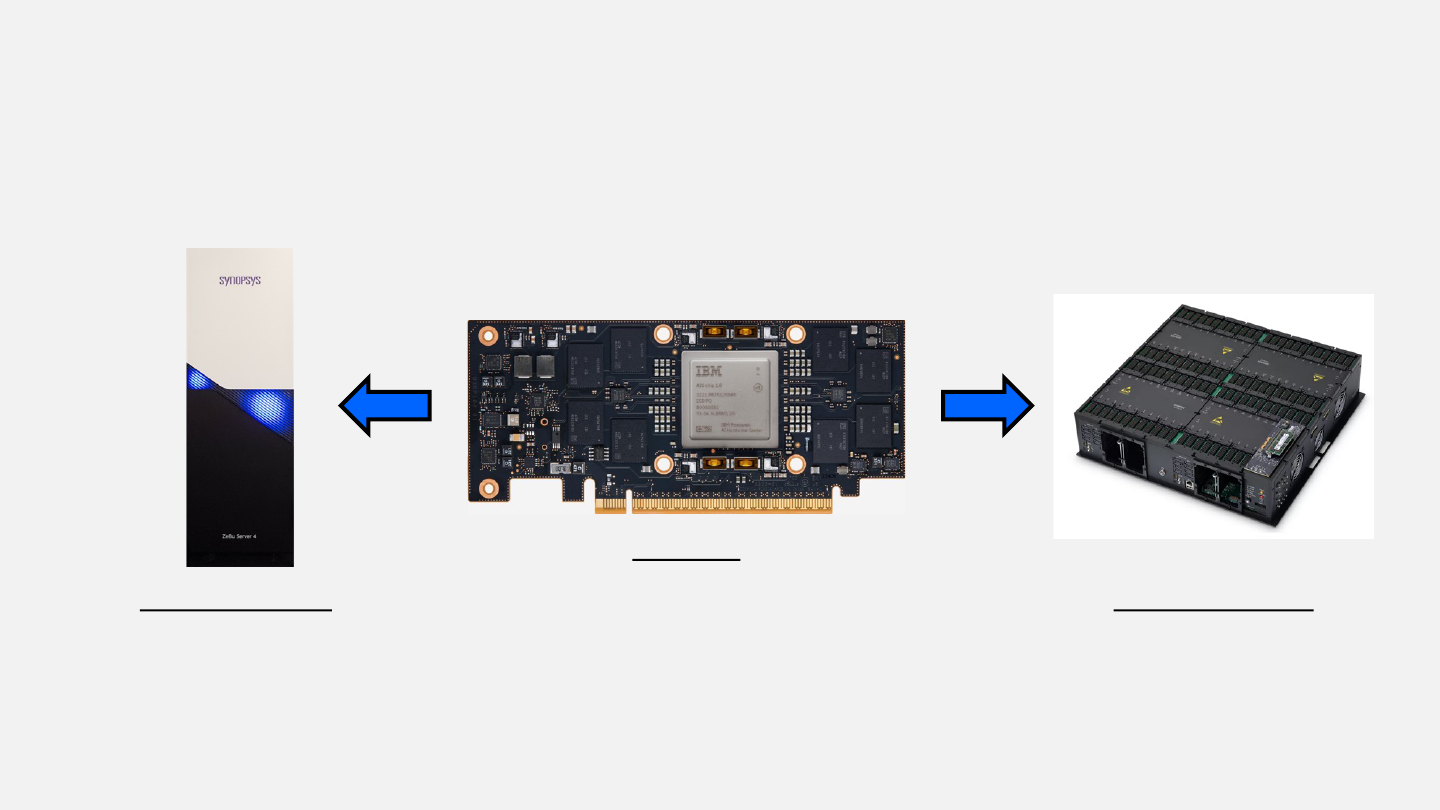

IBM AIU emulation overview

• Emulation systems have been essential for:
  – Hardware verification: uncover functional/performance bugs
  – Software development: provide platform for chip internal/external software development

Synopsys ZeBu
• 96 Xilinx VU440 FPGAs
• Hardware verification
• Compiler / hardware co-development

Synopsys HAPS
• 4-8 Xilinx VU440 FPGAs
• Device driver development

Full AIU computational emulation


[Figure: emulation setup. Labels: SenLib, Driver, Virtual Machine, ZeBu Host PC, PCIe-Xtor Software, PCIe-Xtor Hardware, Virtual PCIe Link, IBM AIU]

• Objective: high-fidelity model of all computational elements (cores and interconnect) of the SoC
• Model build:
  – ZeBu system from Synopsys
  – 96 Xilinx VU440 FPGAs
  – Very high fill rate, ~90% LUT utilization
  – 24h model build time (RTL to bitfiles)
  – 1 to 1.5 MHz operating frequency; limited by memory interface
• Impact highlights:
  – Found several high-impact hardware bugs: rare, hard-to-hit scenarios, practically impossible to find in simulation
  – Vital for compiler development
  – Complete cycle-accurate processing of 1 image: 1 min on ZeBu vs. 9 hours in simulation

Example:

| Metric | Value |
|---|---|
| Number of different NNs exercised | 14 |
| Tests run (32 images/features per run) | 100,000 |
| Image/feature inferences completed | 3.2 million |
| Total emulation run time | 7000 hours |
| Equivalent SoC run time | 7 hours |
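The figures in this example are mutually consistent, which a few lines of arithmetic make explicit. The sketch below only recomputes ratios from numbers stated on this slide; the variable names are illustrative, and the final extrapolation assumes every inference would cost the quoted 9 hours in simulation, which the slide does not claim.

```python
tests_run = 100_000
items_per_test = 32
inferences = tests_run * items_per_test  # matches "3.2 million"

emulation_hours = 7000
soc_hours = 7
slowdown_vs_soc = emulation_hours / soc_hours  # emulation vs. real silicon

# One cycle-accurate image: 1 minute on ZeBu vs. 9 hours in simulation.
zebu_minutes_per_image = 1
simulation_minutes_per_image = 9 * 60
speedup_vs_simulation = simulation_minutes_per_image / zebu_minutes_per_image

# Average emulation wall-clock time per inference over the whole campaign.
seconds_per_inference = emulation_hours * 3600 / inferences

# Rough extrapolation (assumption: 9 simulation hours per inference).
simulation_years = inferences * 9 / (24 * 365)

print(f"{inferences:,} inferences")
print(f"emulation is {slowdown_vs_soc:.0f}x slower than the SoC")
print(f"emulation is {speedup_vs_simulation:.0f}x faster than simulation")
print(f"{seconds_per_inference:.3f} s per inference on average")
print(f"about {simulation_years:,.0f} simulation-years under the stated assumption")
```

The two ratios bracket where emulation sits: roughly three orders of magnitude slower than silicon, yet hundreds of times faster than simulation, which is why a test campaign of this size is feasible on the emulator and not in simulation.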

AIU nest emulation


Why a second emulation platform?
• Develop device driver stack for AIU: requires SoC-like hardware fidelity (e.g., host-PCIe interface)

Platform and model details:
• HAPS system from Synopsys
• 4-8 Xilinx VU440 FPGAs emulate a mini SoC
  – SoC-faithful nest + 1 AI core (vs. 32 AI cores)
  – Running at MHz speed
• Includes PCIe Gen5 PHY daughter card from Synopsys
• Includes DDR4 DIMMs
• Uniquely suited for AIU driver development
  – Faithfully realizes the host-PCIe interface of the SoC


| Network | HAPS runtime (sec/image or sec/feature) | ZeBu runtime (sec/image or sec/feature) |
|---|---|---|
| ResNet50 | 1.46 | 10.02 |
| MobileNetV1 | 0.59 | 3.37 |
| InceptionV4 | 4.35 | 43.76 |
| BERT-large (seq=384) | 67 | 292 |
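The runtime gap between the two platforms is easier to read as a ratio per network. The sketch below recomputes those ratios from the runtimes listed above; the dictionary layout and variable names are illustrative. Because the slides describe the HAPS model as a mini SoC with 1 AI core and the ZeBu model as covering all computational elements, the ratios are descriptive only and not a like-for-like benchmark.

```python
# (HAPS, ZeBu) runtimes in seconds per image or feature, as listed above
runtimes = {
    "ResNet50": (1.46, 10.02),
    "MobileNetV1": (0.59, 3.37),
    "InceptionV4": (4.35, 43.76),
    "BERT-large (seq=384)": (67, 292),
}

# How many times longer the ZeBu run takes than the HAPS run
ratios = {name: zebu / haps for name, (haps, zebu) in runtimes.items()}

for name, ratio in ratios.items():
    print(f"{name}: ZeBu takes {ratio:.1f}x as long as HAPS")
```

The ratio ranges from about 4x to about 10x across the four networks, so the gap depends on the workload rather than being a fixed platform constant.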

Modeling and emulation impact


• Multiple software and FPGA-based methods have been essential to IBM’s full-stack AIU and AI system development
• Our SoC design process leverages multiple levels of simulation for architecture development, logic and chip design, and design verification
• Our software stack development, accelerator software integration development, and compiler / hardware co-optimization leveraged FPGA-based emulation systems
  – Full-chip emulation via ZeBu for full-chip performance & accuracy analyses of AI models on multi-core models, compiler optimizations, architectural modifications and power estimation
  – Detailed SoC nest emulation via HAPS for device driver development, low-level software stack development, and evaluation of multi-chip configurations
• These methods enabled us to develop a full-system, end-to-end hardware and software stack for Foundation Model inference in parallel with SoC and PCIe card development


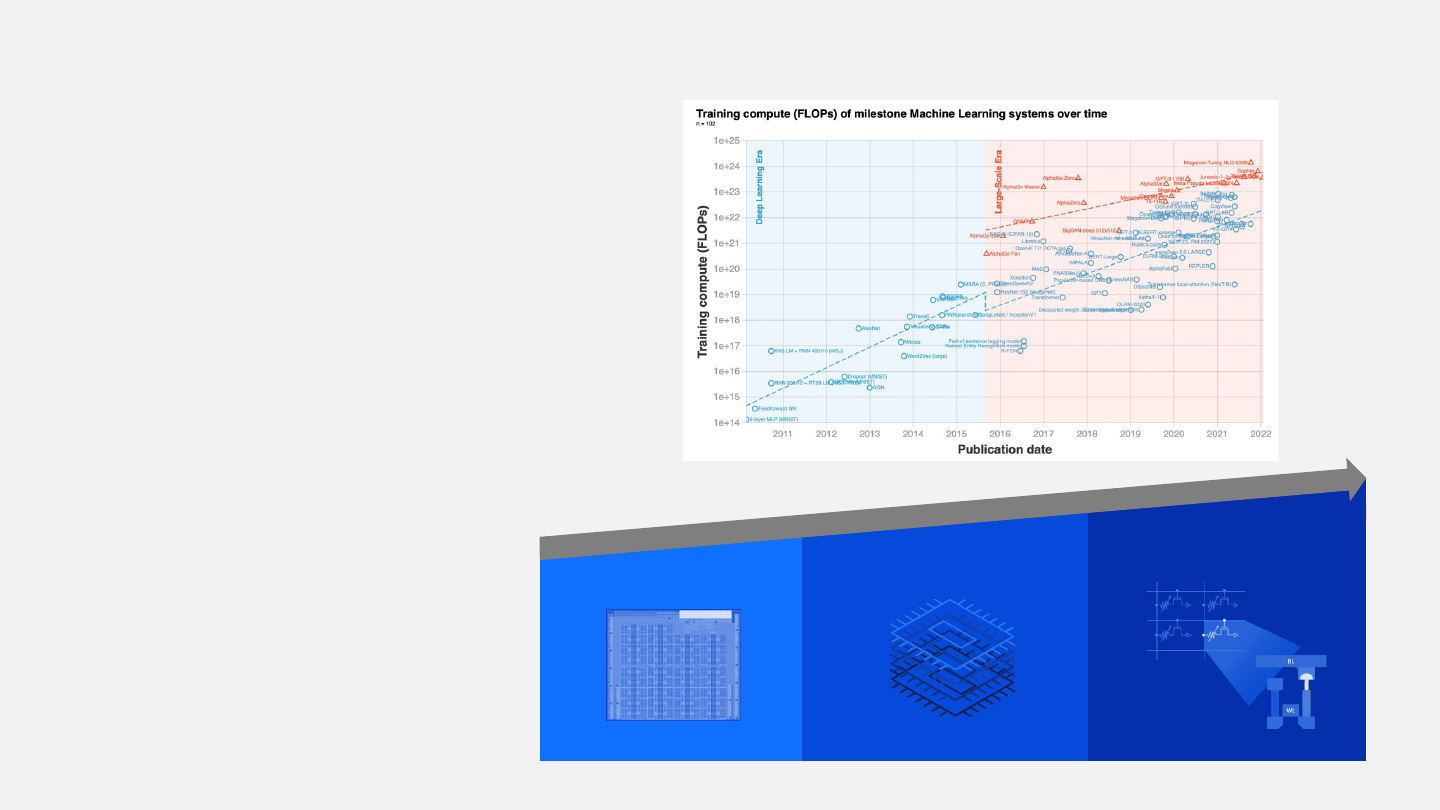

Foundation Models are an inflection point for enterprise AI

Sevilla, arXiv ‘22

• FMs enable a proliferation of task-specific models, but with large and escalating compute demands
• Inference, fine-tuning, and distributed training systems differ in requirements
• Full-system innovation is required
• Our approach emphasizes:
  – Cloud-native architectures
  – Ease-of-use for developers and clients
  – Hybrid cloud consumption
  – AI accelerator design and technology innovations

Innovation: Algorithm + Architecture
Reduced Precision Arithmetic + Augmented w/ Analog Compute + Augmented w/ Heterogeneous Integration
