Microsoft SQLServer To Amazon Aurora with Post-

greSQL Compatibility

Migration Playbook

1.0 Preliminary September 2018

© 2018 Amazon Web Services, Inc. or its affiliates. All rights reserved.

Notices

This document is provided for informational purposes only. It represents AWS’s current product offer-

ings and practices as of the date of issue of this document, which are subject to change without notice.

Customers are responsible for making their own independent assessment of the information in this

document and any use of AWS’s products or services, each of which is provided “as is” without war-

ranty of any kind, whether express or implied. This document does not create any warranties, rep-

resentations, contractual commitments, conditions or assurances from AWS, its affiliates, suppliers or

licensors. The responsibilities and liabilities of AWS to its customers are controlled by AWS agree-

ments, and this document is not part of, nor does it modify, any agreement between AWS and its cus-

tomers.

- 2 -

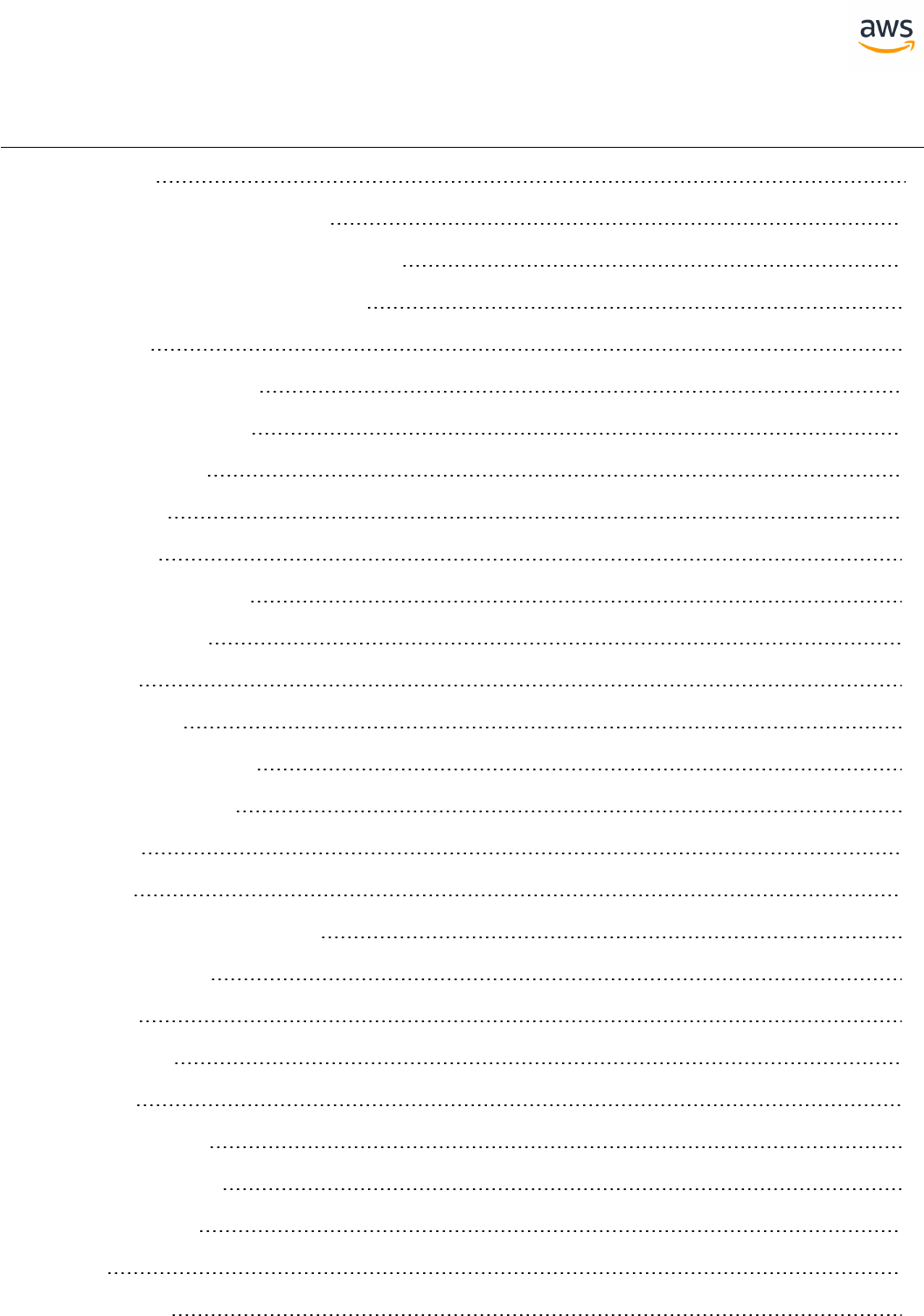

Table of Contents

Introduction 9

Tables of Feature Compatibility 12

AWS Schema and Data Migration Tools 20

AWS Schema Conversion Tool (SCT) 21

Overview 21

Migrating a Database 21

SCT Action Code Index 31

Creating Tables 32

Data Types 32

Collations 33

PIVOT and UNPIVOT 33

TOP and FETCH 34

Cursors 34

Flow Control 35

Transaction Isolation 35

Stored Procedures 36

Triggers 36

MERGE 37

Query hints and plan guides 37

Full Text Search 38

Indexes 38

Partitioning 39

Backup 40

SQL Server Mail 40

SQL Server Agent 41

Service Broker 41

XML 42

Constraints 42

- 3 -

Linked Servers 42

AWS Database Migration Service (DMS) 43

Overview 43

Migration Tasks Performed by AWS DMS 43

How AWS DMS Works 44

ANSI SQL 46

Migrate from: SQL Server Constraints 47

Migrate to: Aurora PostgreSQL Table Constraints 51

Migrate from: SQL Server Creating Tables 58

Migrate to: Aurora PostgreSQL Creating Tables 62

Migrate from: SQL Server Common Table Expressions 67

Migrate to: Aurora PostgreSQL Common Table Expressions (CTE) 70

Migrate from: SQL Server Data Types 74

Migrate to: Aurora PostgreSQL Data Types 76

Migrate from: SQL Server Derived Tables 81

Migrate to: Aurora PostgreSQL Derived Tables 82

Migrate from: SQL Server GROUP BY 83

Migrate to: Aurora PostgreSQL GROUP BY 87

Migrate from: SQL Server Table JOIN 90

Migrate to: Aurora PostgreSQL Table JOIN 95

Migrate from: SQL Server Temporal Tables 99

Migrate to: Aurora PostgreSQL Triggers (Temporal Tables alternative) 101

Migrate from: SQL Server Views 102

Migrate to: Aurora PostgreSQL Views 105

Migrate from: SQL Server Window Functions 108

Migrate to: Aurora PostgreSQL Window Functions 110

T-SQL 114

Migrate from: SQL Server Service Broker Essentials 115

Migrate to: Aurora PostgreSQL AWS Lambda or DB links 119

Migrate from: SQL Server Cast and Convert 120

- 4 -

Migrate to: Aurora PostgreSQL CAST and CONVERSION 122

Migrate from: SQL Server Common Library Runtime (CLR) 124

Migrate to: Aurora PostgreSQL PL/Perl 125

Migrate from: SQL Server Collations 126

Migrate to: Aurora PostgreSQL Encoding 129

Migrate from: SQL Server Cursors 132

Migrate to: Aurora PostgreSQL Cursors 134

Migrate from: SQL Server Date and Time Functions 139

Migrate to: Aurora PostgreSQL Date and Time Functions 141

Migrate from: SQL Server String Functions 143

Migrate to: Aurora PostgreSQL String Functions 145

Migrate from: SQL Server Databases and Schemas 148

Migrate to: Aurora PostgreSQL Databases and Schemas 151

Migrate from: SQL Server Dynamic SQL 153

Migrate to: Aurora PostgreSQL EXECUTE and PREPARE 156

Migrate from: SQL Server Transactions 159

Migrate to: Aurora PostgreSQL Transactions 163

Migrate from: SQL Server Synonyms 169

Migrate to: Aurora PostgreSQL Views, Types & Functions 171

Migrate from: SQL Server DELETE and UPDATE FROM 173

Migrate to: Aurora PostgreSQL DELETE and UPDATE FROM 176

Migrate from: SQL Server Stored Procedures 179

Migrate to: Aurora PostgreSQL Stored Procedures 182

Migrate from: SQL Server Error Handling 186

Migrate to: Aurora PostgreSQL Error Handling 191

Migrate from: SQL Server Flow Control 194

Migrate to: Aurora PostgreSQL Control Structures 197

Migrate from: SQL Server Full-Text Search 200

Migrate to: Aurora PostgreSQL Full-Text Search 203

Migrate from: SQL Server JSON and XML 206

- 5 -

Migrate to: Aurora PostgreSQL JSON and XML 209

Migrate from: SQL Server MERGE 213

Migrate to: Aurora PostgreSQL MERGE 216

Migrate from: SQL Server PIVOT and UNPIVOT 218

Migrate to: Aurora PostgreSQL PIVOT and UNPIVOT 222

Migrate from: SQL Server Triggers 225

Migrate to: Aurora PostgreSQL Triggers 228

Migrate from: SQL Server TOP and FETCH 232

Migrate to: Aurora PostgreSQL LIMIT and OFFSET (TOP and FETCH Equivalent) 235

Migrate from: SQL Server User Defined Functions 238

Migrate to: Aurora PostgreSQL User Defined Functions 241

Migrate from: SQL Server User Defined Types 242

Migrate to: Aurora PostgreSQL User Defined Types 245

Migrate from: SQL Server Sequences and Identity 248

Migrate to: Aurora PostgreSQL Sequences and SERIAL 253

Configuration 258

Migrate from: SQL Server Session Options 259

Migrate to: Aurora PostgreSQL Session Options 262

Migrate from: SQL Server Database Options 265

Migrate to: Aurora PostgreSQL Database Options 266

Migrate from: SQL Server Server Options 267

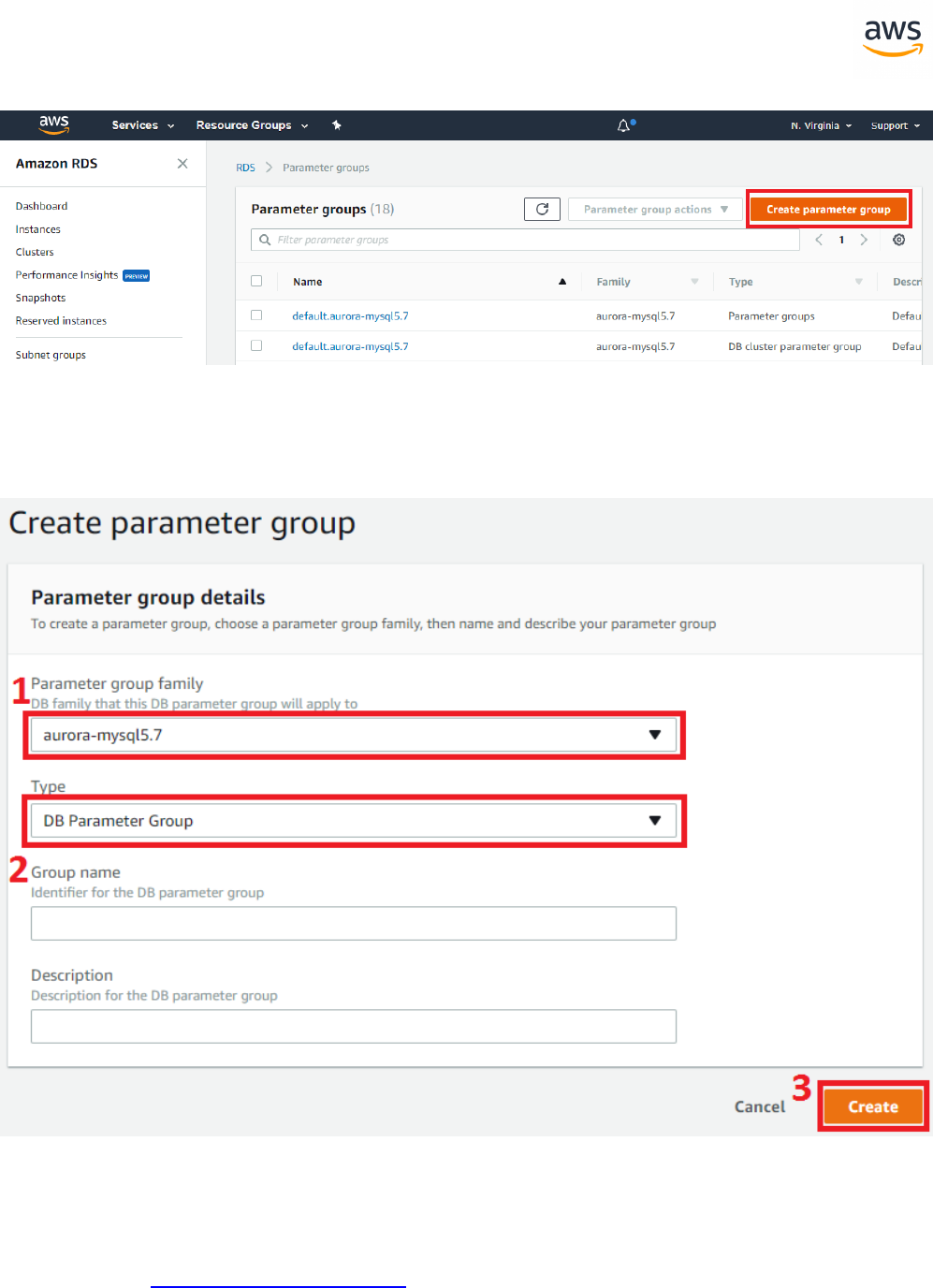

Migrate to: Aurora PostgreSQL Aurora Parameter Groups 269

High Availability and Disaster Recovery (HADR) 276

Migrate from: SQL Server Backup and Restore 277

Migrate to: Aurora PostgreSQL Backup and Restore 281

Migrate from: SQL Server High Availability Essentials 289

Migrate to: Aurora PostgreSQL High Availability Essentials 293

Indexes 303

Migrate from: SQL Server Clustered and Non Clustered Indexes 304

Migrate to: Aurora PostgreSQL Clustered and Non Clustered Indexes 308

- 6 -

Management 313

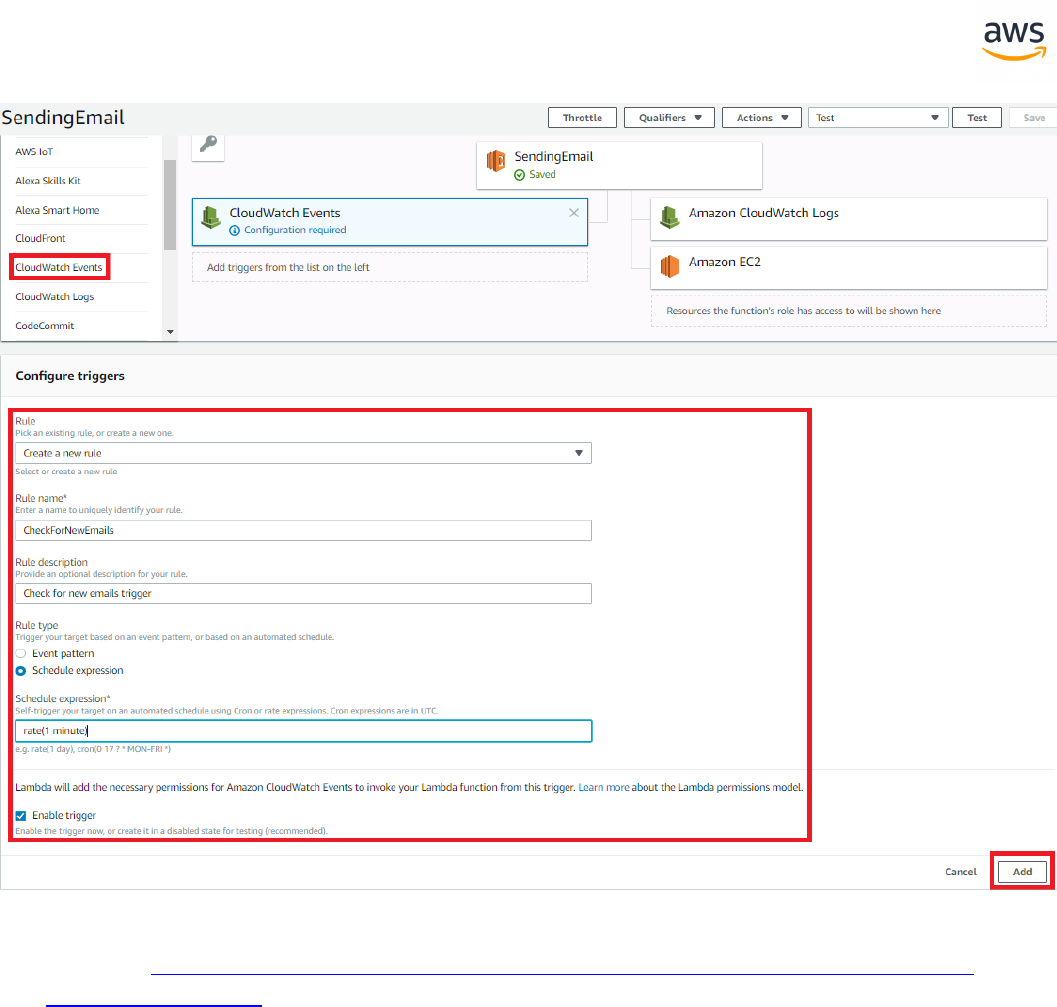

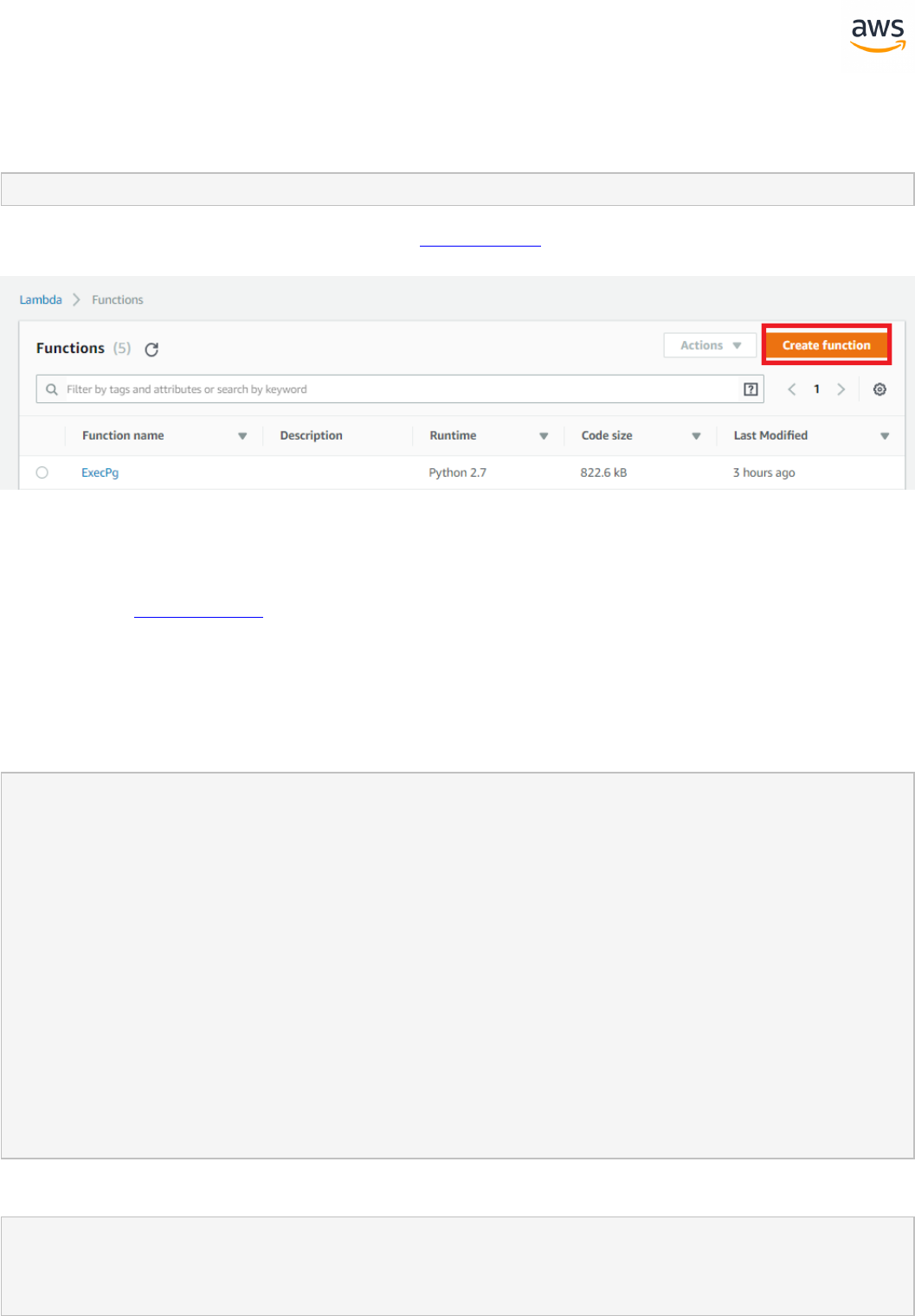

Migrate from: SQL Server Agent 314

Migrate to: Aurora PostgreSQL Scheduled Lambda 315

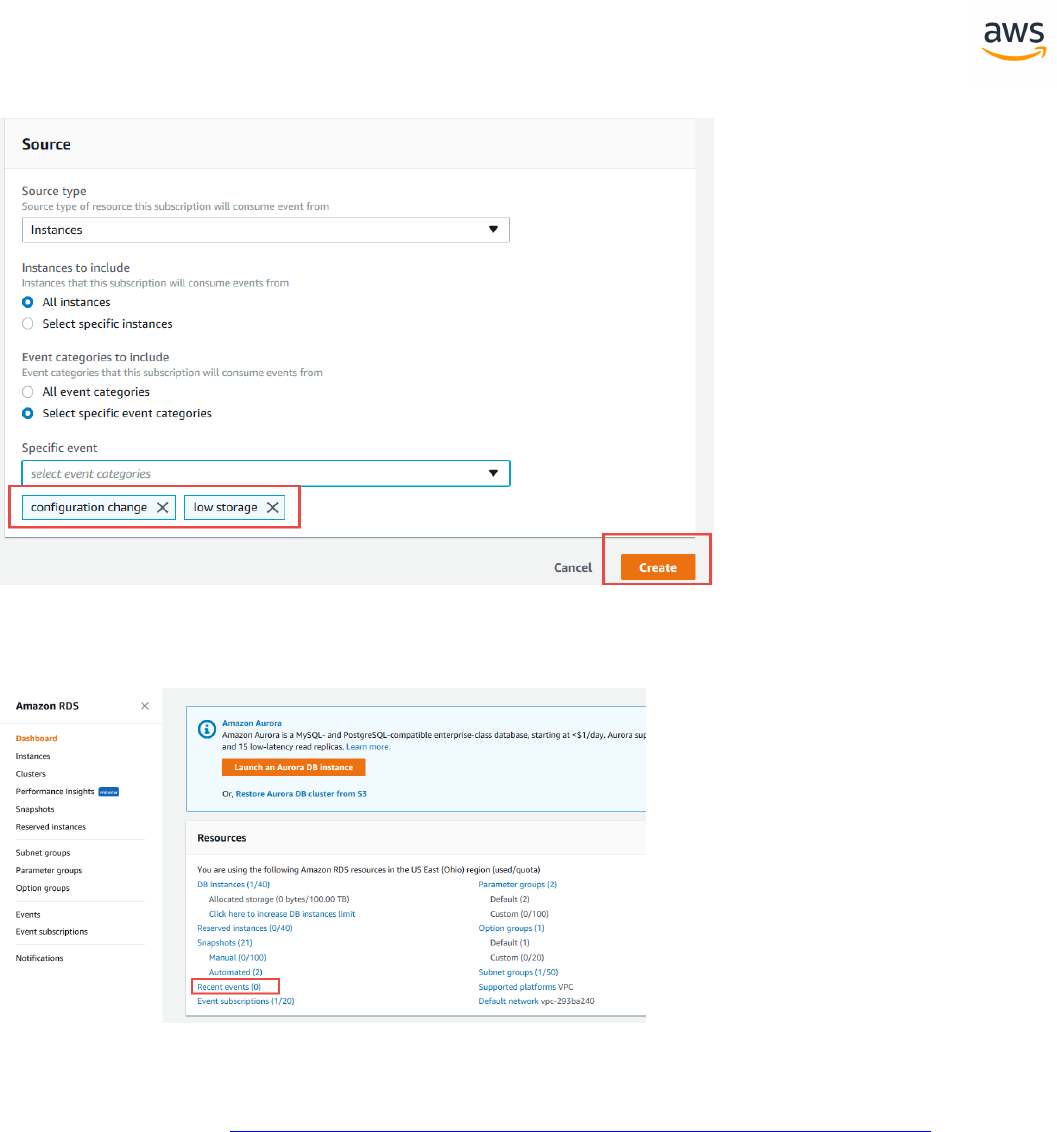

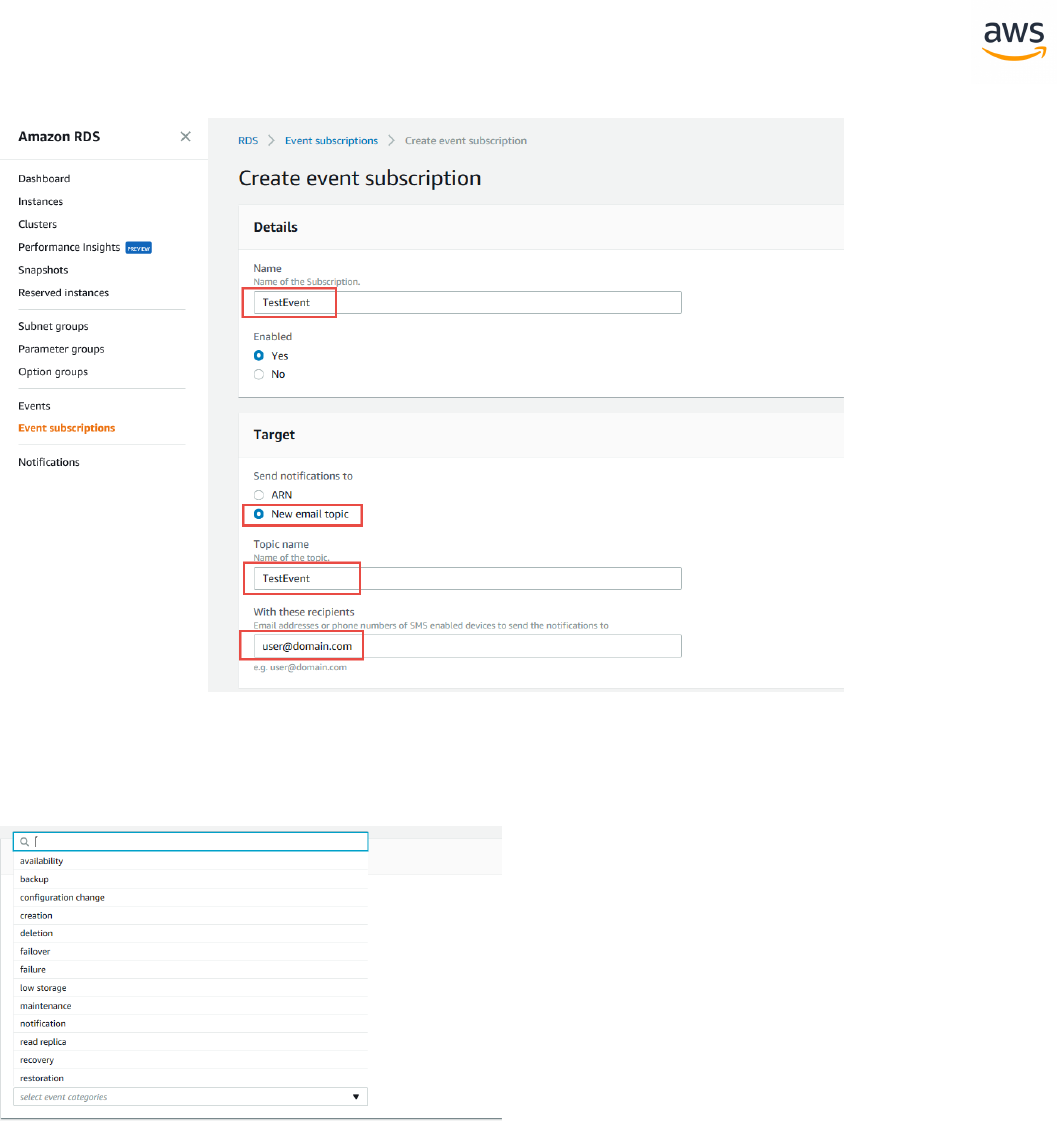

Migrate from: SQL Server Alerting 316

Migrate to: Aurora PostgreSQL Alerting 318

Migrate from: SQL Server Database Mail 323

Migrate to: Aurora PostgreSQL Database Mail 326

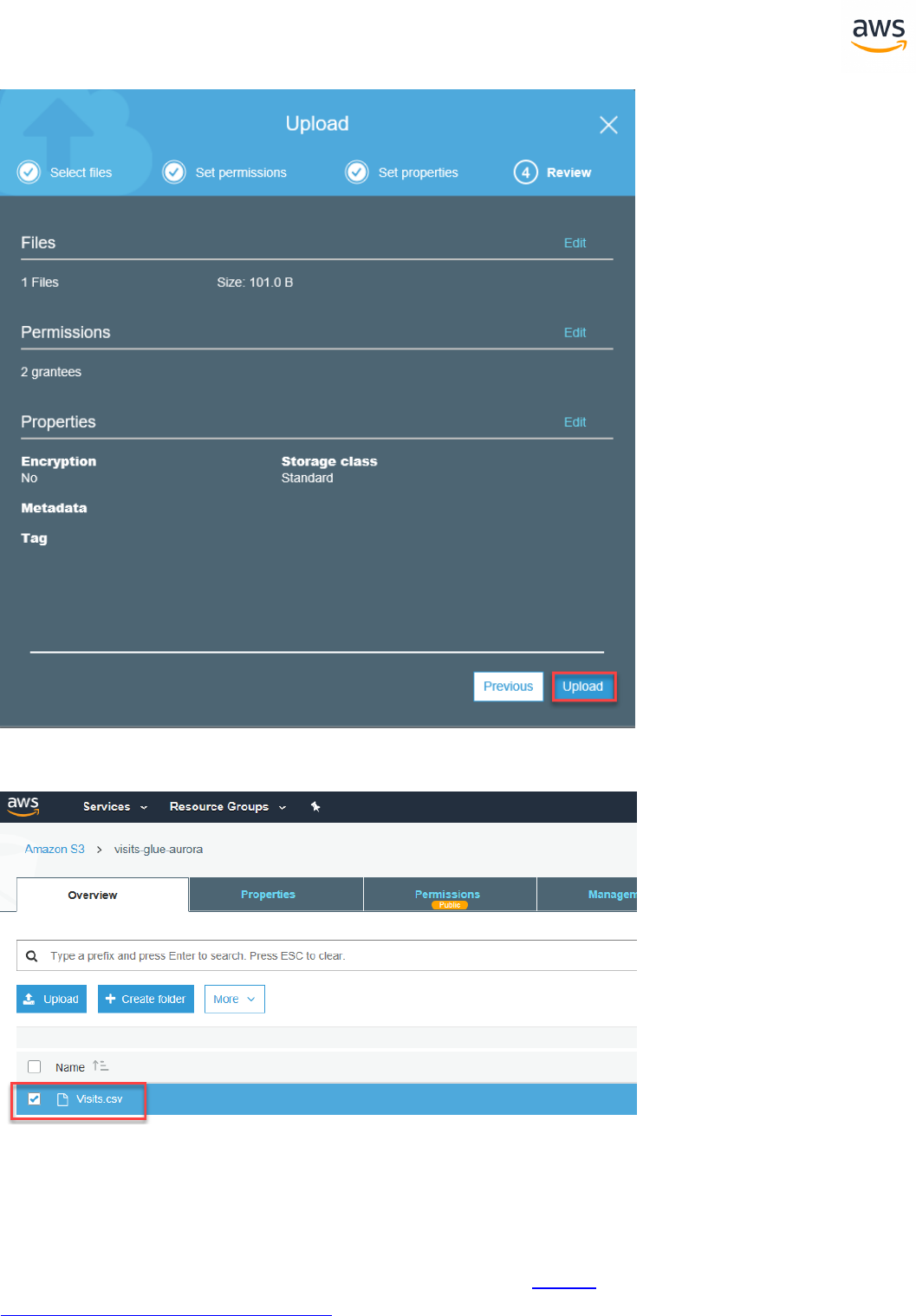

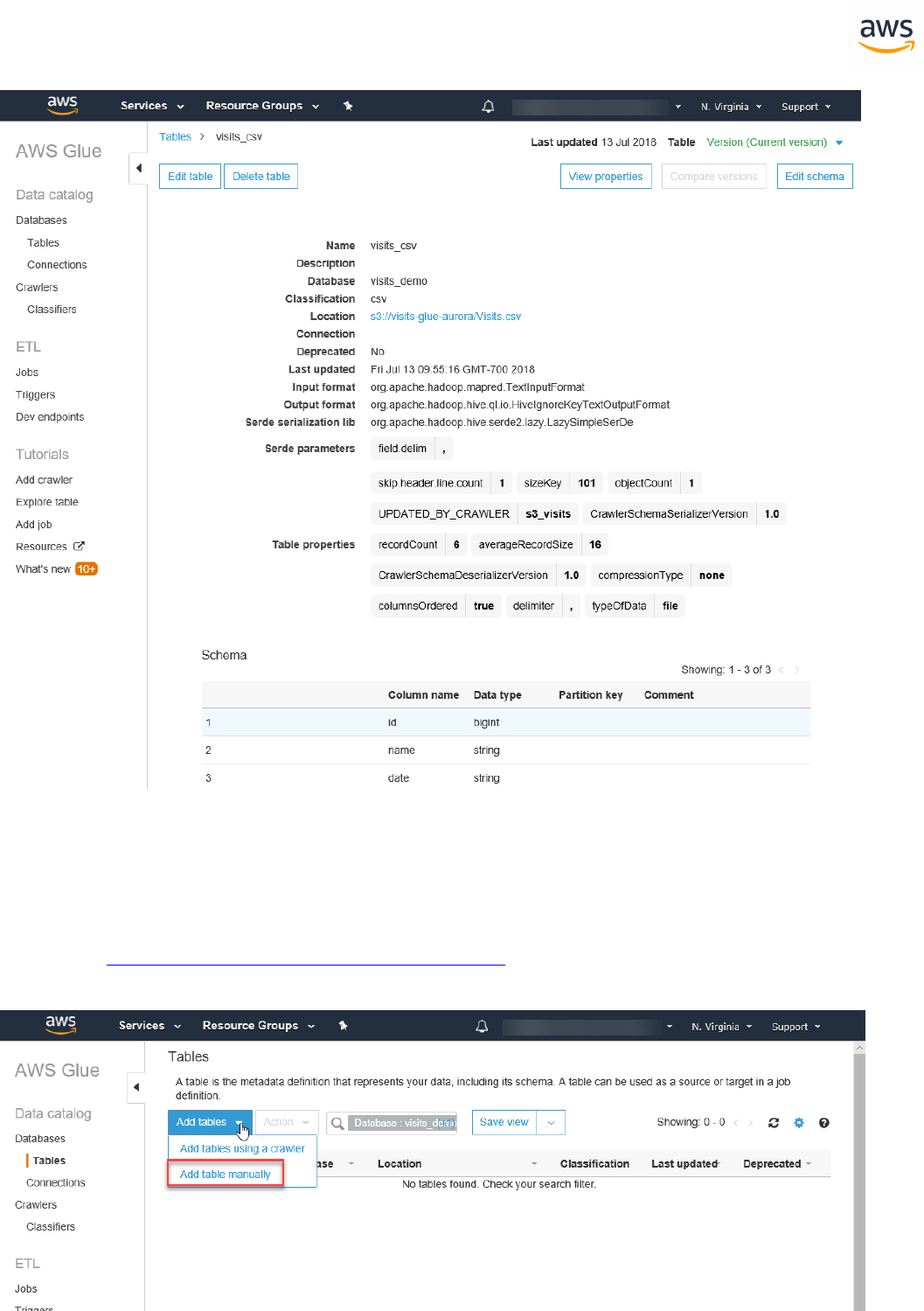

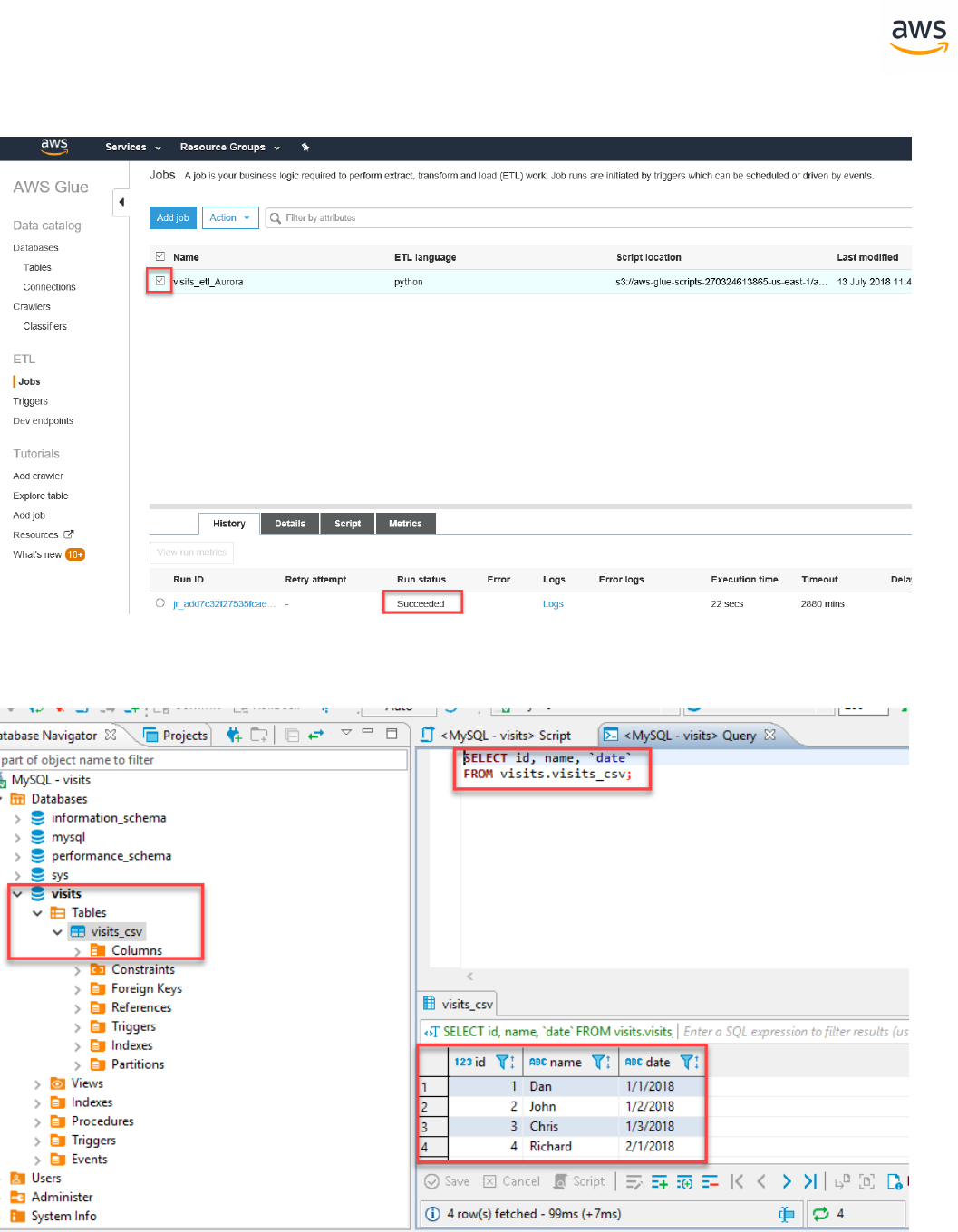

Migrate from: SQL Server ETL 334

Migrate to: Aurora PostgreSQL ETL 336

Migrate from: SQL Server Export and Import with Text files 361

Migrate to: Aurora PostgreSQL pg_dump and pg_restore 363

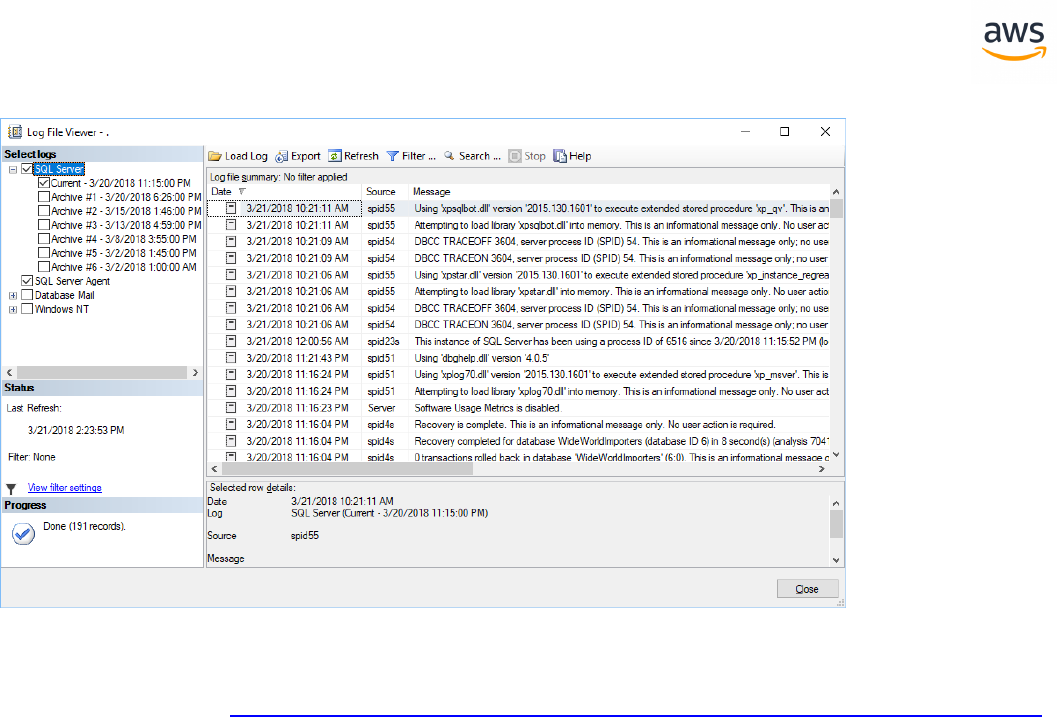

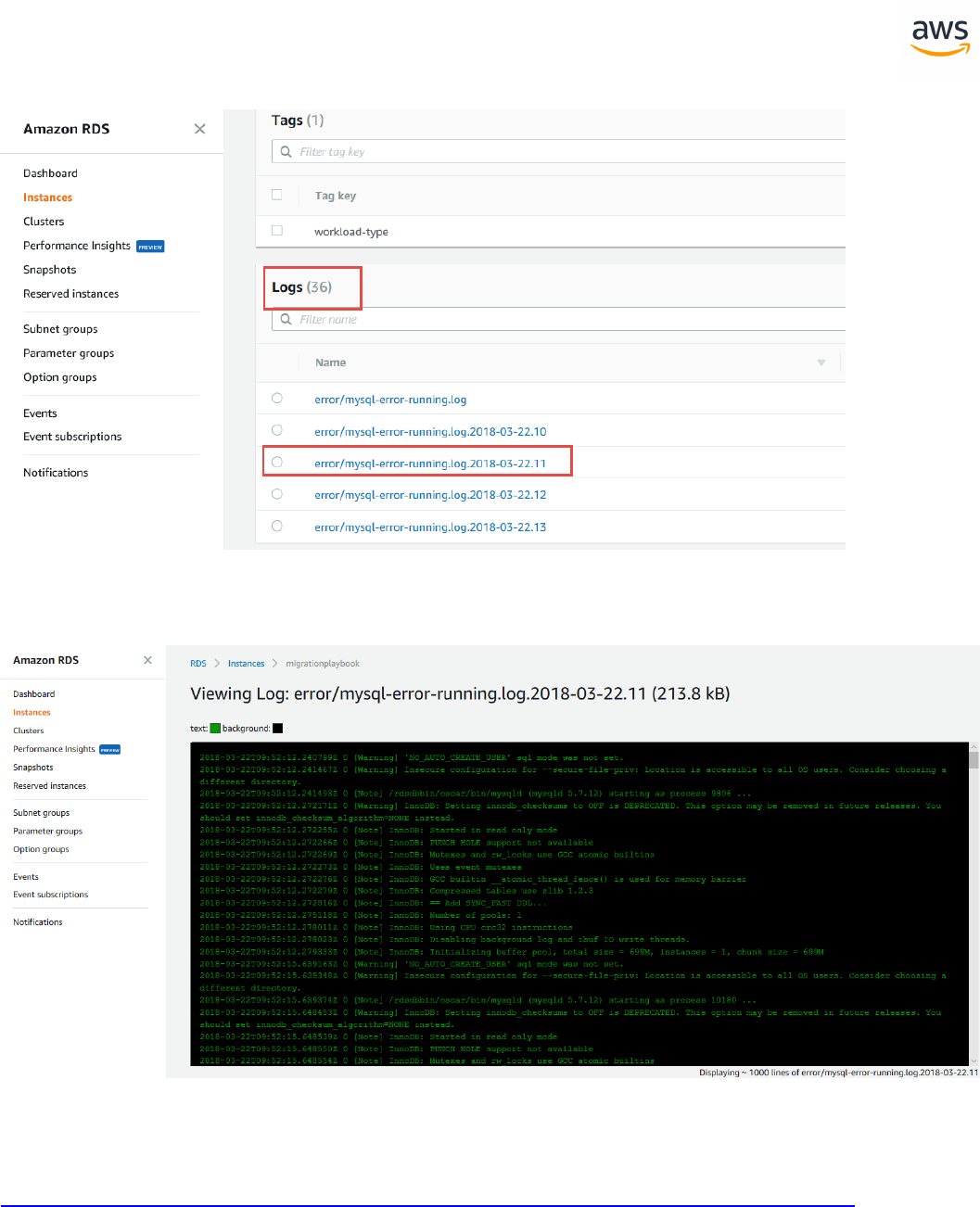

Migrate from: SQL Server Viewing Server Logs 366

Migrate to: Aurora PostgreSQL Viewing Server Logs 368

Migrate from: SQL Server Maintenance Plans 371

Migrate to: Aurora PostgreSQL Maintenance Plans 373

Migrate from: SQL Server Monitoring 378

Migrate to: Aurora PostgreSQL Monitoring 381

Migrate from: SQL Server Resource Governor 383

Migrate to: Aurora PostgreSQL Dedicated Amazon Aurora Clusters 385

Migrate from: SQL Server Linked Servers 388

Migrate to: Aurora PostgreSQL DBLink and FDWrapper 391

Migrate from: SQL Server Scripting 393

Migrate to: Aurora PostgreSQL Scripting 395

Performance Tuning 399

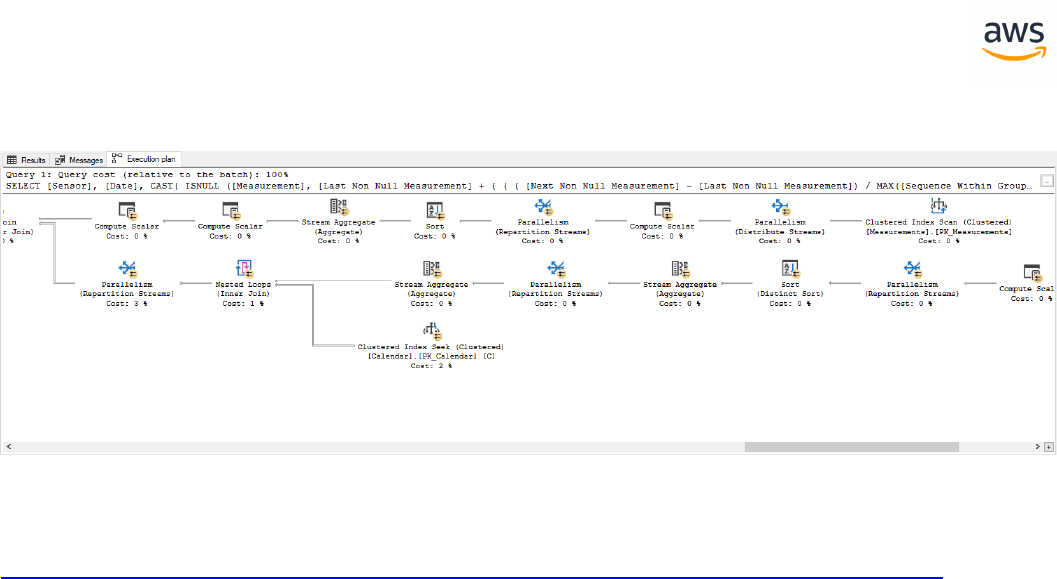

Migrate from: SQL Server Execution Plans 400

Migrate to: Aurora PostgreSQL Execution Plans 402

Migrate from: SQL Server Query Hints and Plan Guides 404

Migrate to: Aurora PostgreSQL DB Query Planning 408

Migrate from: SQL Server Managing Statistics 409

Migrate to: Aurora PostgreSQL Table Statistics 411

- 7 -

Physical Storage 414

Migrate from: SQL Server Columnstore Index 415

Migrate to: Aurora PostgreSQL 416

Migrate from: SQL Server Indexed Views 417

Migrate to: Aurora PostgreSQL Materialized Views 419

Migrate from: SQL Server Partitioning 422

Migrate to: Aurora PostgreSQL Table Inheritance 424

Security 429

Migrate from: SQL Server Column Encryption 430

Migrate to: Aurora PostgreSQL Column Encryption 432

Migrate from: SQL Server Data Control Language 434

Migrate to: Aurora PostgreSQL Data Control Language 435

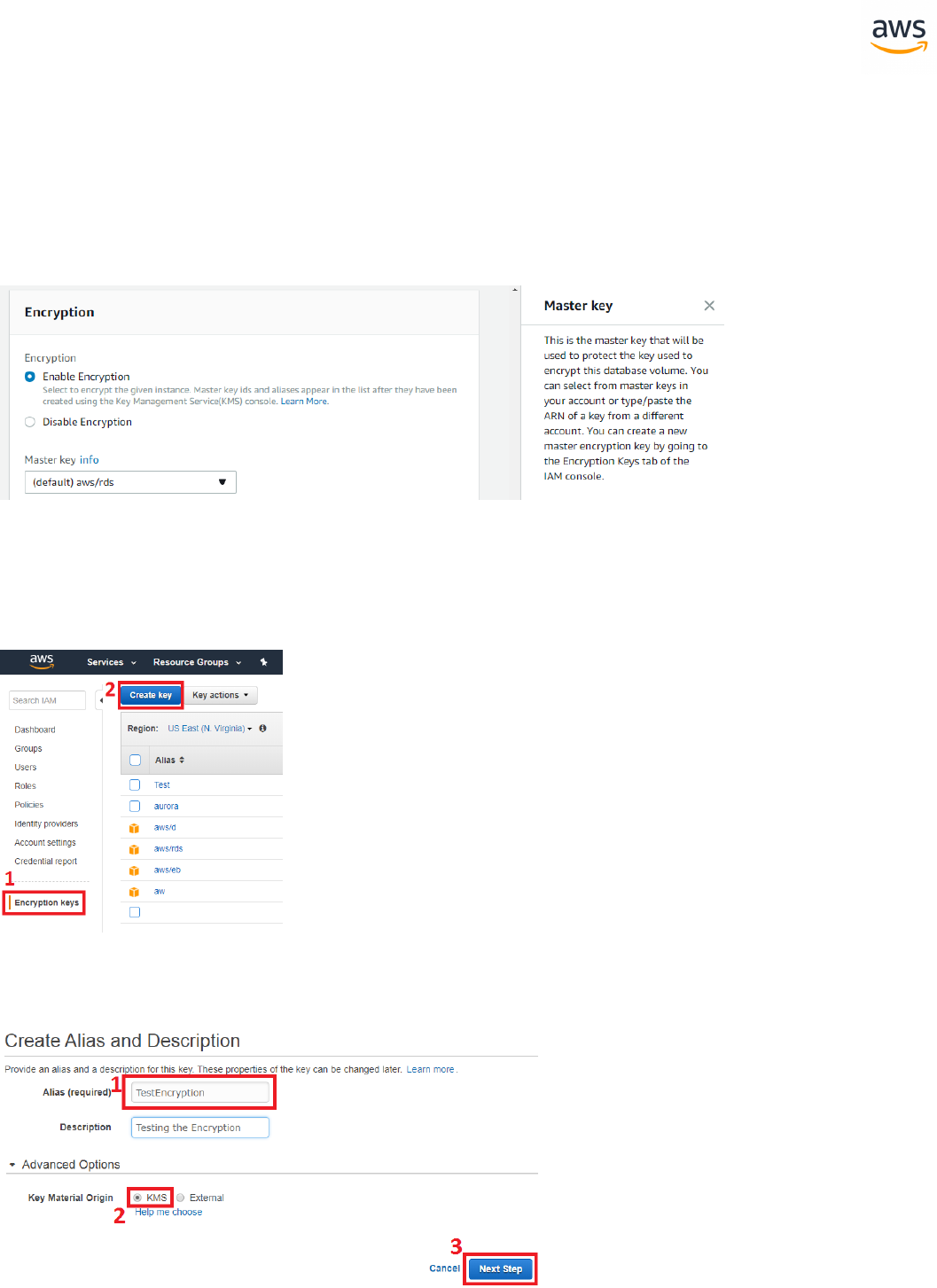

Migrate from: SQL Server Transparent Data Encryption 438

Migrate to: Aurora PostgreSQL Transparent Data Encryption 439

Migrate from: SQL Server Users and Roles 444

Migrate to: Aurora PostgreSQL Users and Roles 446

Appendix A:SQL Server 2018 Deprecated Feature List 448

Migration Quick Tips 449

Migration Quick Tips 450

Management 450

SQL 450

Glossary 453

- 8 -

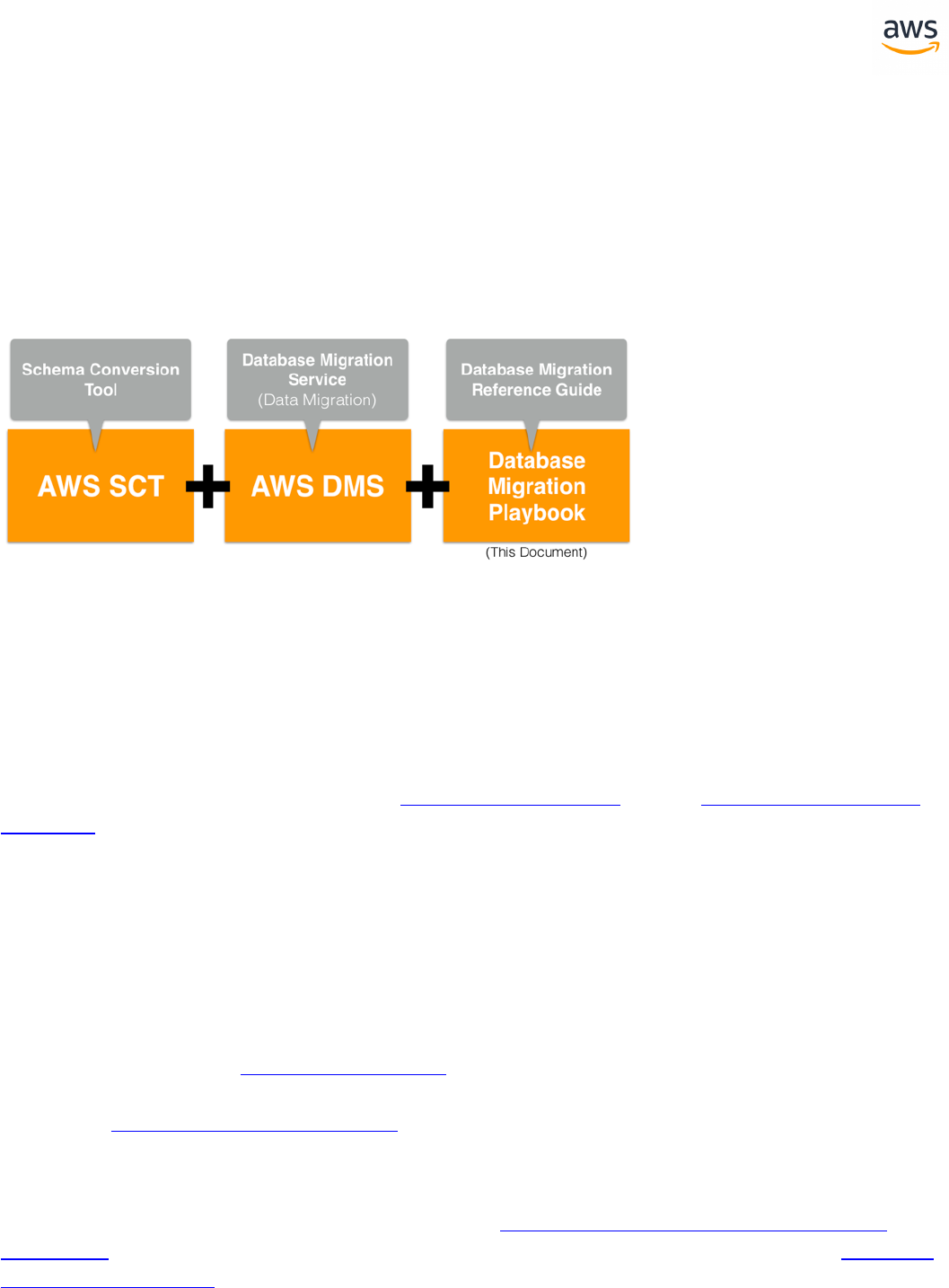

Introduction

The migration process from SQL Server to Amazon Aurora PostgreSQL typically involves several stages.

The first stage is to use the AWS Schema Conversion Tool (SCT) and the AWS Database Migration Ser-

vice (DMS) to convert and migrate the schema and data. While most of the migration work can be auto-

mated, some aspects require manual intervention and adjustments to both database schema objects

and database code.

The purpose of this Playbook is to assist administrators tasked with migrating SQL Server databases to

Aurora PostgreSQL with the aspects that can't be automatically migrated using the Amazon Web Ser-

vices Schema Conversion Tool (AWS SCT). It focuses on the differences, incompatibilities, and sim-

ilarities between SQL Server and Aurora PostgreSQL in a wide range of topics including T-SQL,

Configuration, High Availability and Disaster Recovery (HADR), Indexing, Management, Performance

Tuning, Security, and Physical Storage.

The first section of this document provides an overview of AWS SCT and the AWS Data Migration Ser-

vice (DMS) tools for automating the migration of schema, objects and data. The remainder of the doc-

ument contains individual sections for SQL Server features and their Aurora PostgreSQL counterparts.

Each section provides a short overview of the feature, examples, and potential workaround solutions

for incompatibilities.

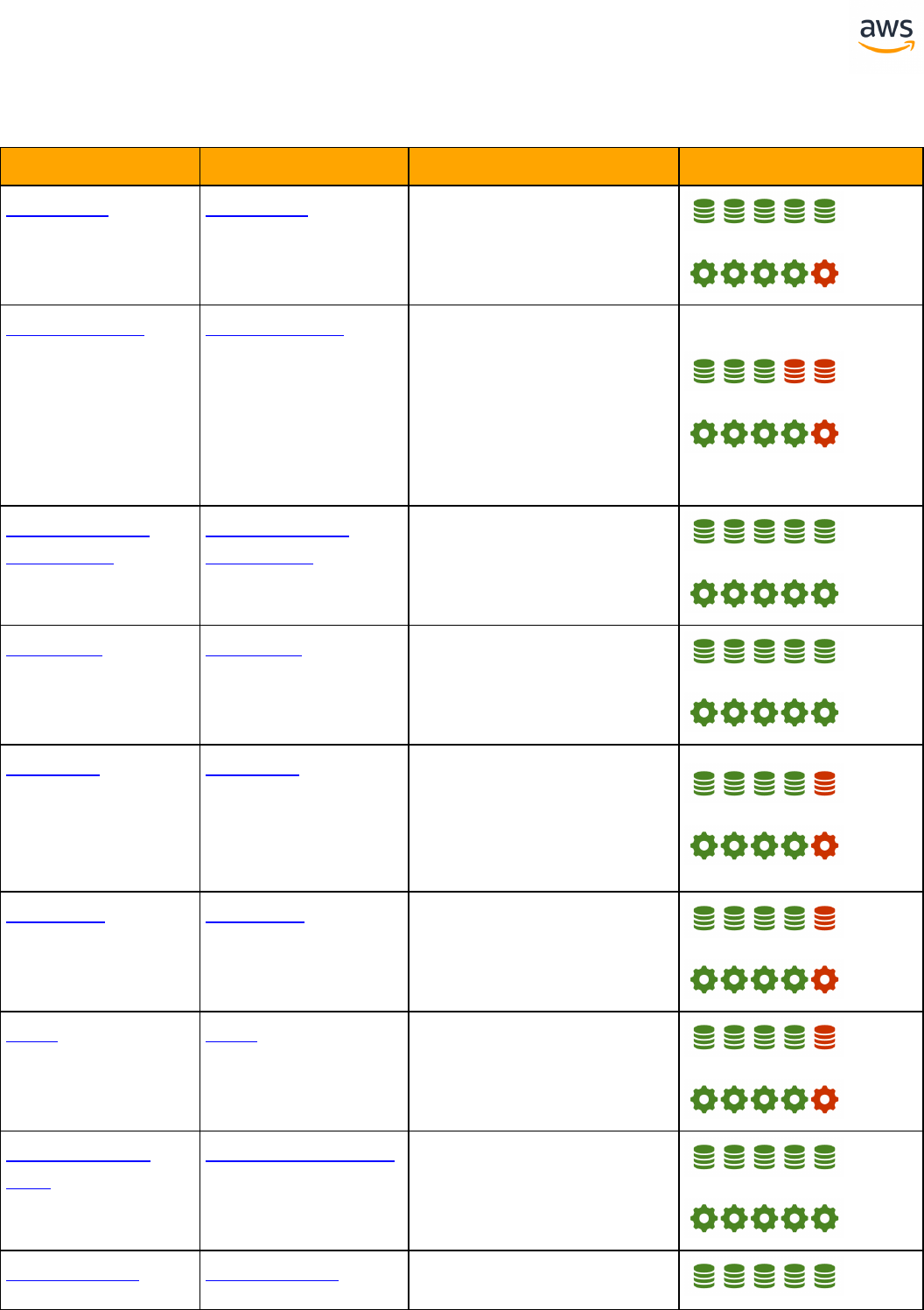

You can use this playbook either as a reference to investigate the individual action codes generated by

the AWS SCT tool, or to explore a variety of topics where you expect to have some incompatibility

issues. When using the AWSSCT, you may see a report that lists Action codes, which indicates some

manual conversion is required, or that a manual verification is recommended. For your convenience,

this Playbook includes an SCT Action Code Index section providing direct links to the relevant topics

that discuss the manual conversion tasks needed to address these action codes. Alternatively, you can

explore the Tables of Feature Compatibility section that provides high-level graphical indicators and

descriptions of the feature compatibility between SQL Server and Aurora PostgreSQL. It also includes a

graphical compatibility indicator and links to the actual sections in the playbook.

There are two appendices at the end of this playbook: Appendix A: SQL Server 2008 Deprecated

Feature List provides focused links on features that were deprecated in SQL Server 2008R2. Appendix

B: Migration Quick Tips provides a list of tips for SQL Server administrators or developers who have

little experience with PostgreSQL. It briefly highlights key differences between SQL Server and Aurora

PostgreSQL that they are likely to encounter.

- 9 -

Note that not all SQL Server features are fully compatible with Aurora PostgreSQL, or have simple work-

arounds. From a migration perspective, this document does not yet cover all SQL Server features and

capabilities. This first release focuses on some of the most important features and will be expanded

over time.

- 10 -

Disclaimer

The various code snippets, commands, guides, best practices, and scripts included in this document

should be used for reference only and are provided as-is without warranty. Test all of the code snip-

petts, commands, best practices, and scripts outlined in this document in a non-production envir-

onment first. Amazon and its affiliates are not responsible for any direct or indirect damage that may

occur from the information contained in this document.

- 11 -

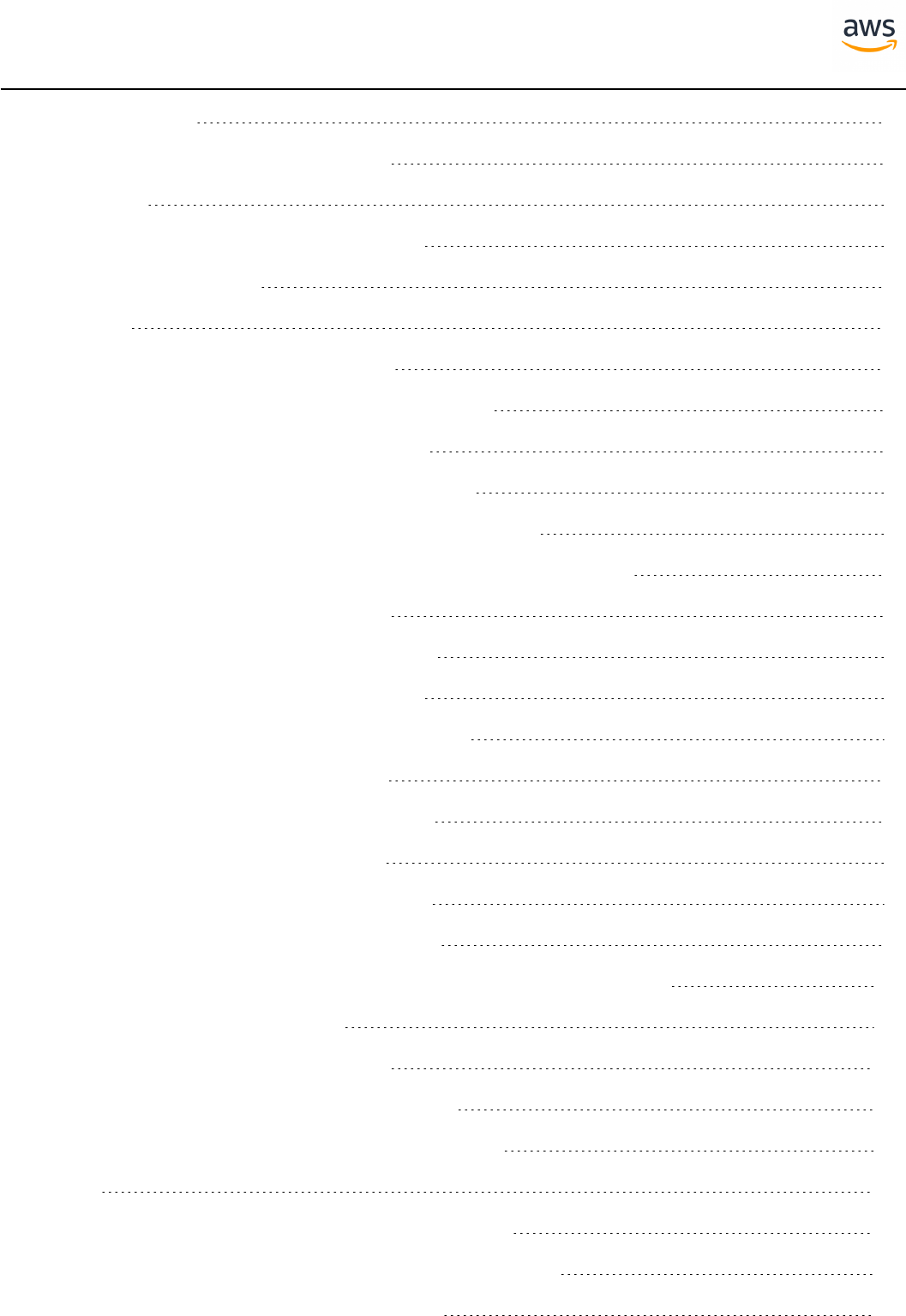

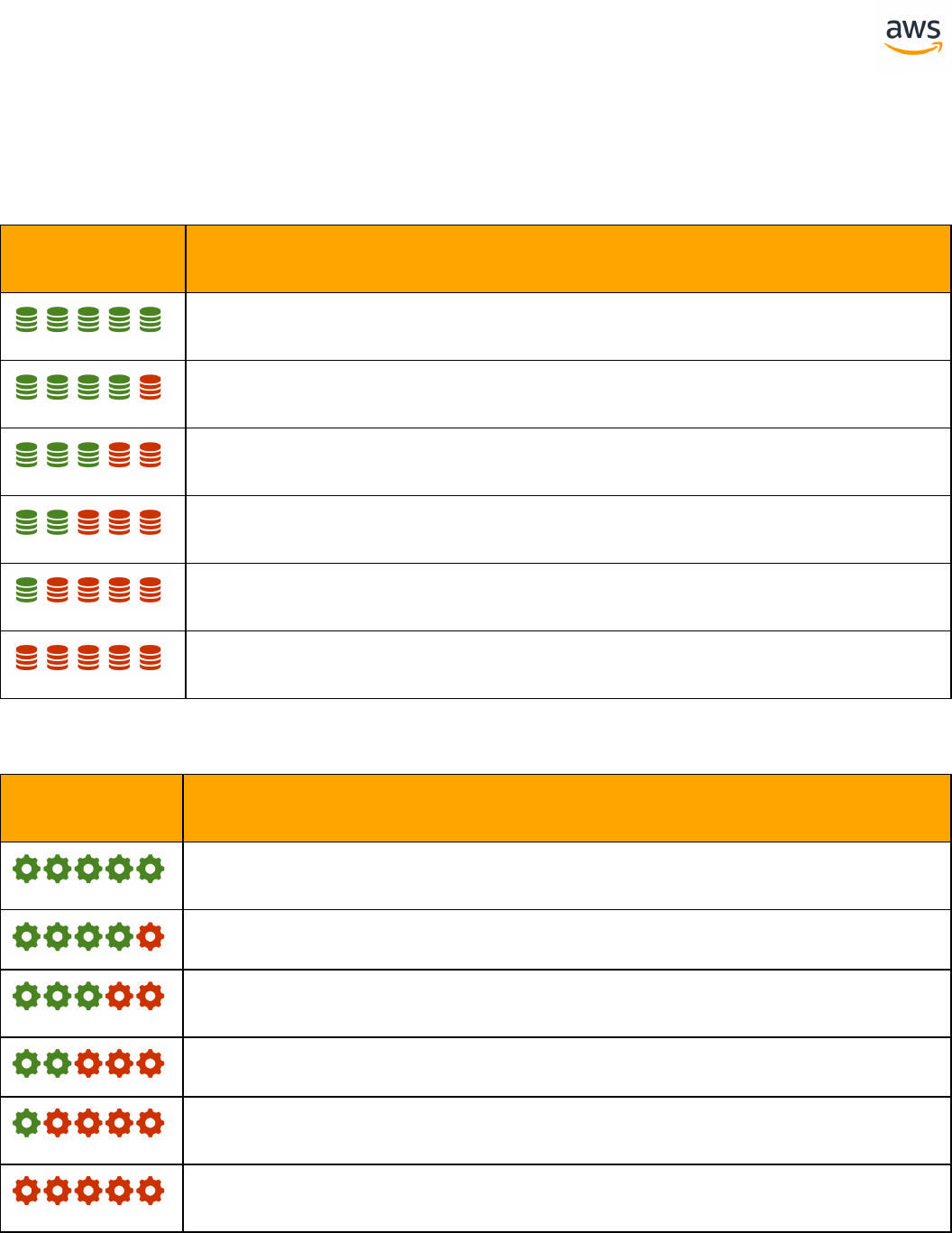

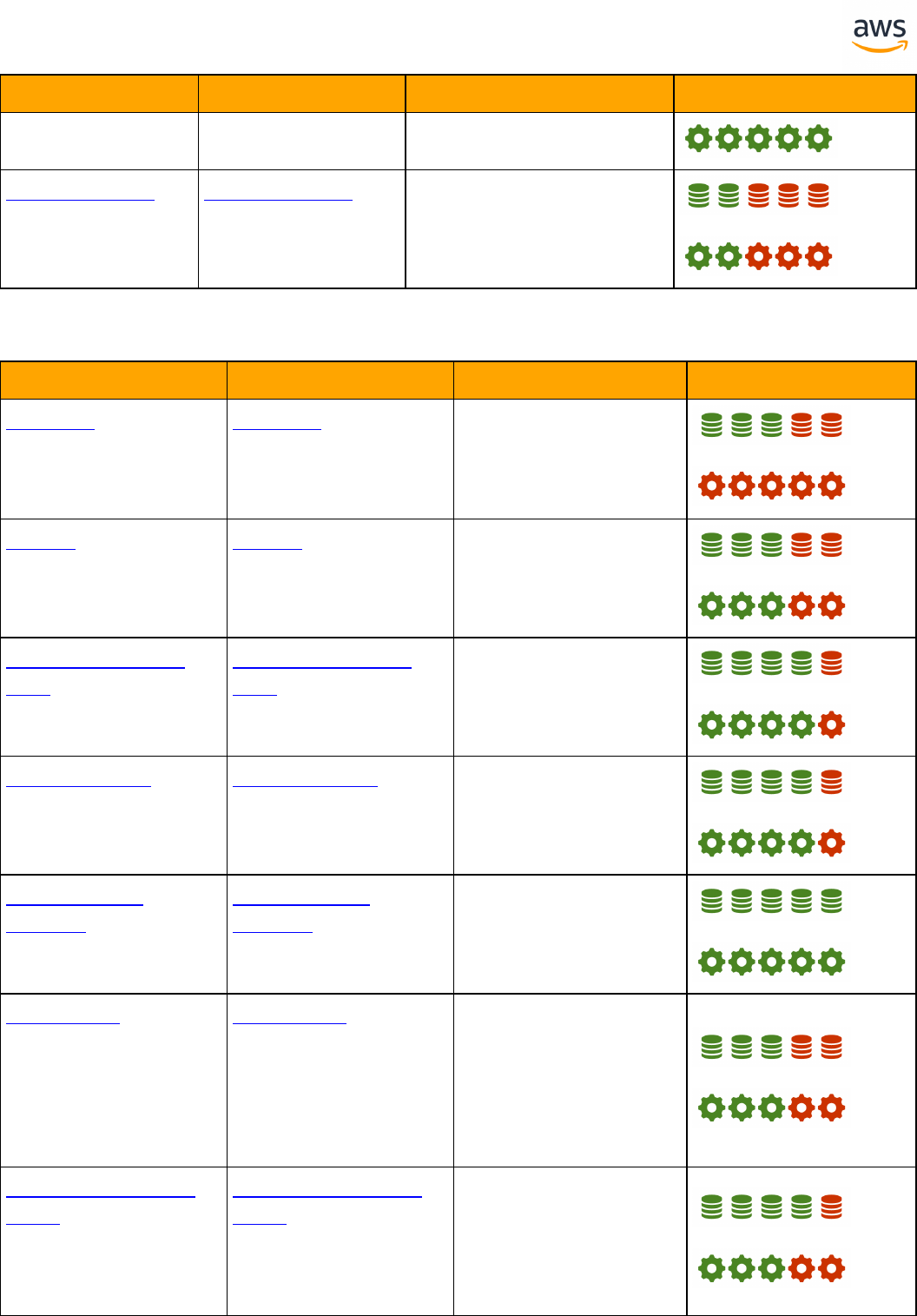

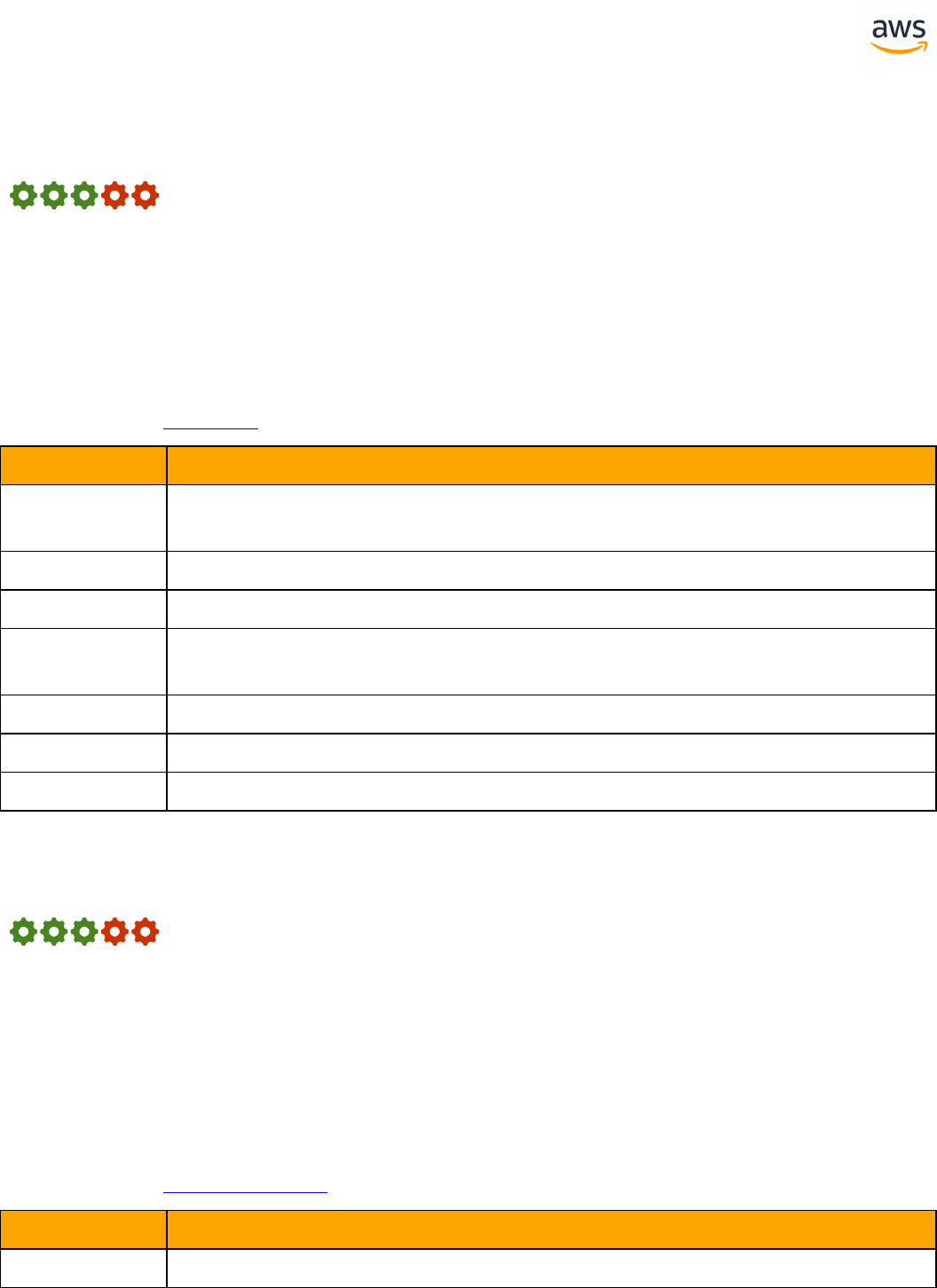

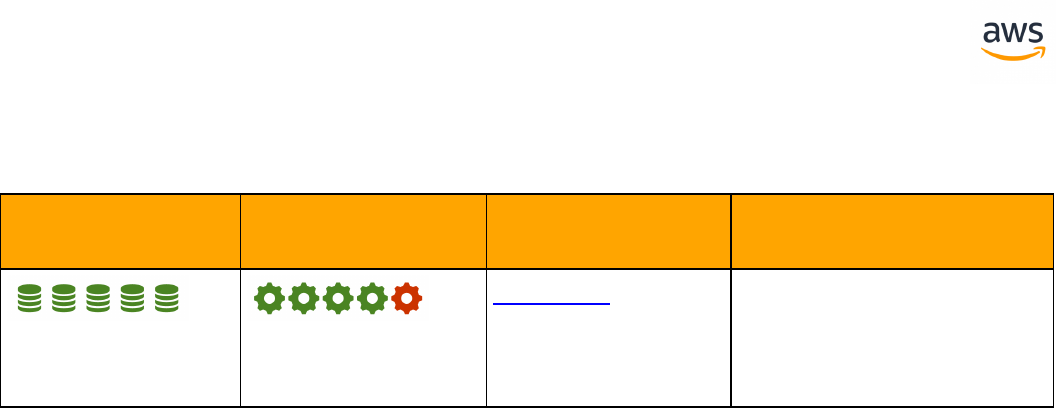

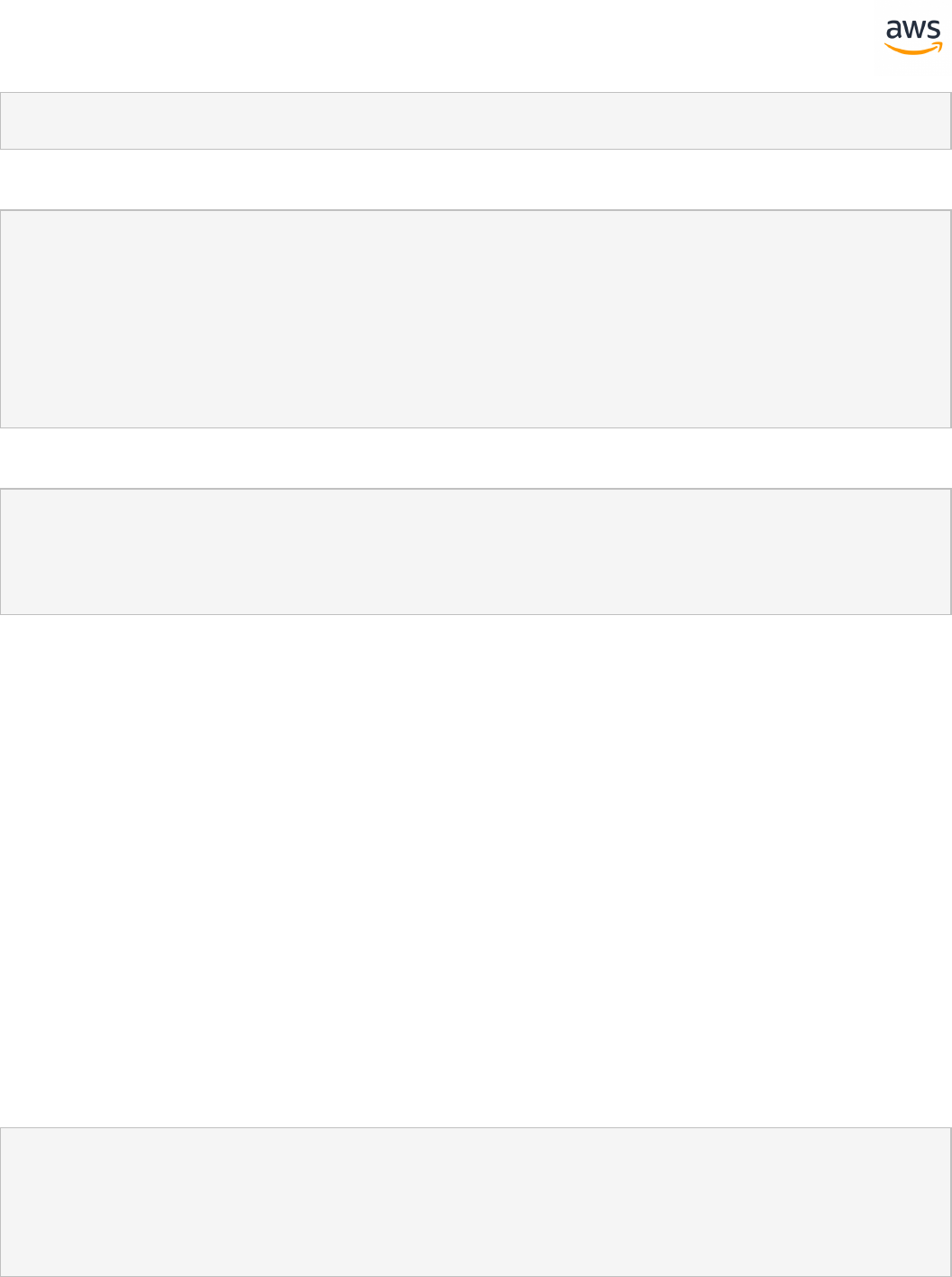

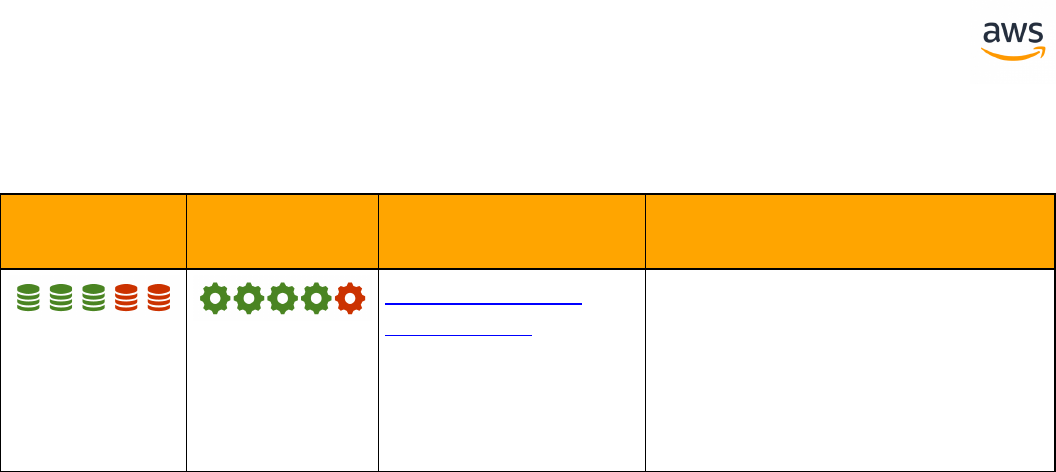

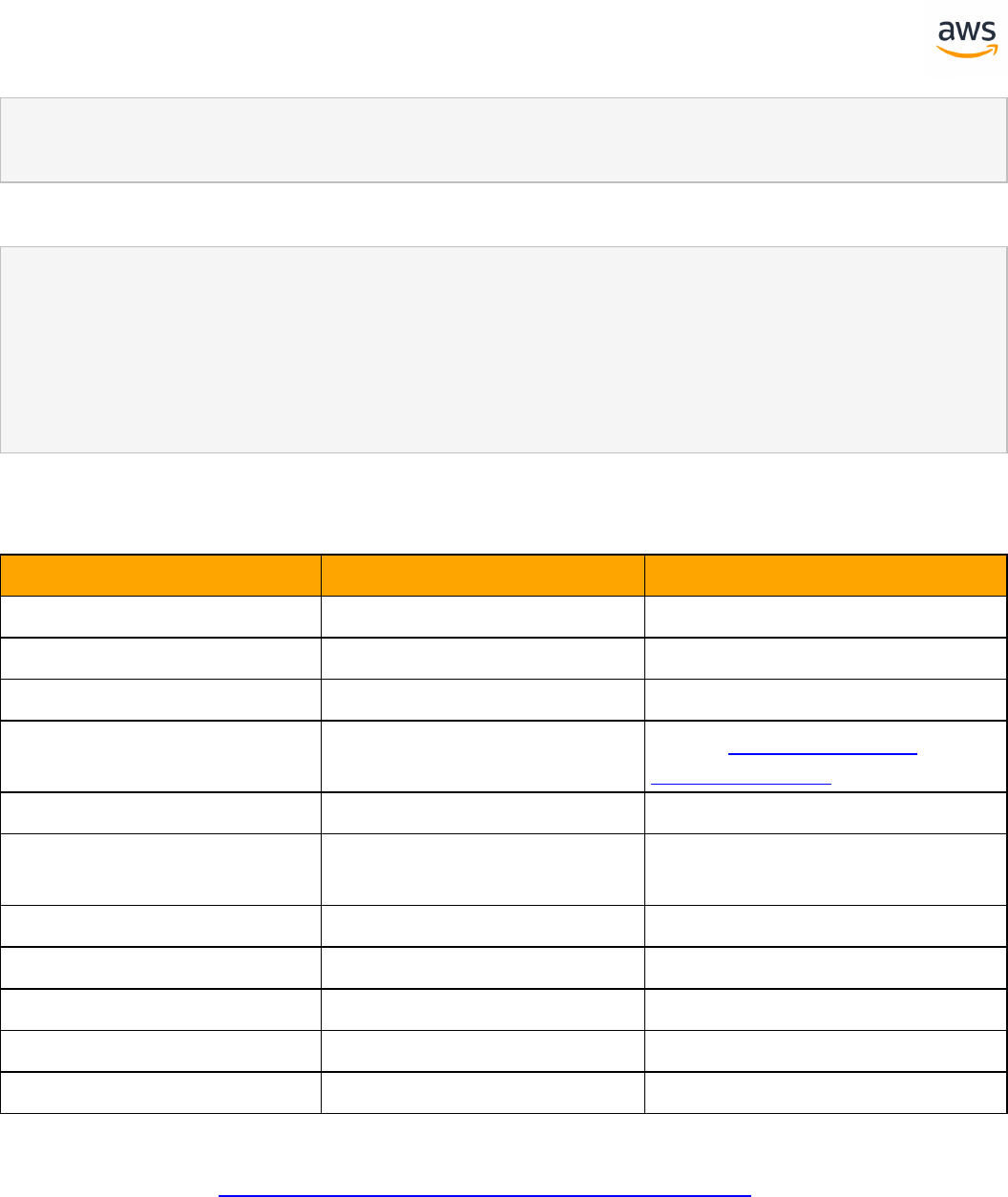

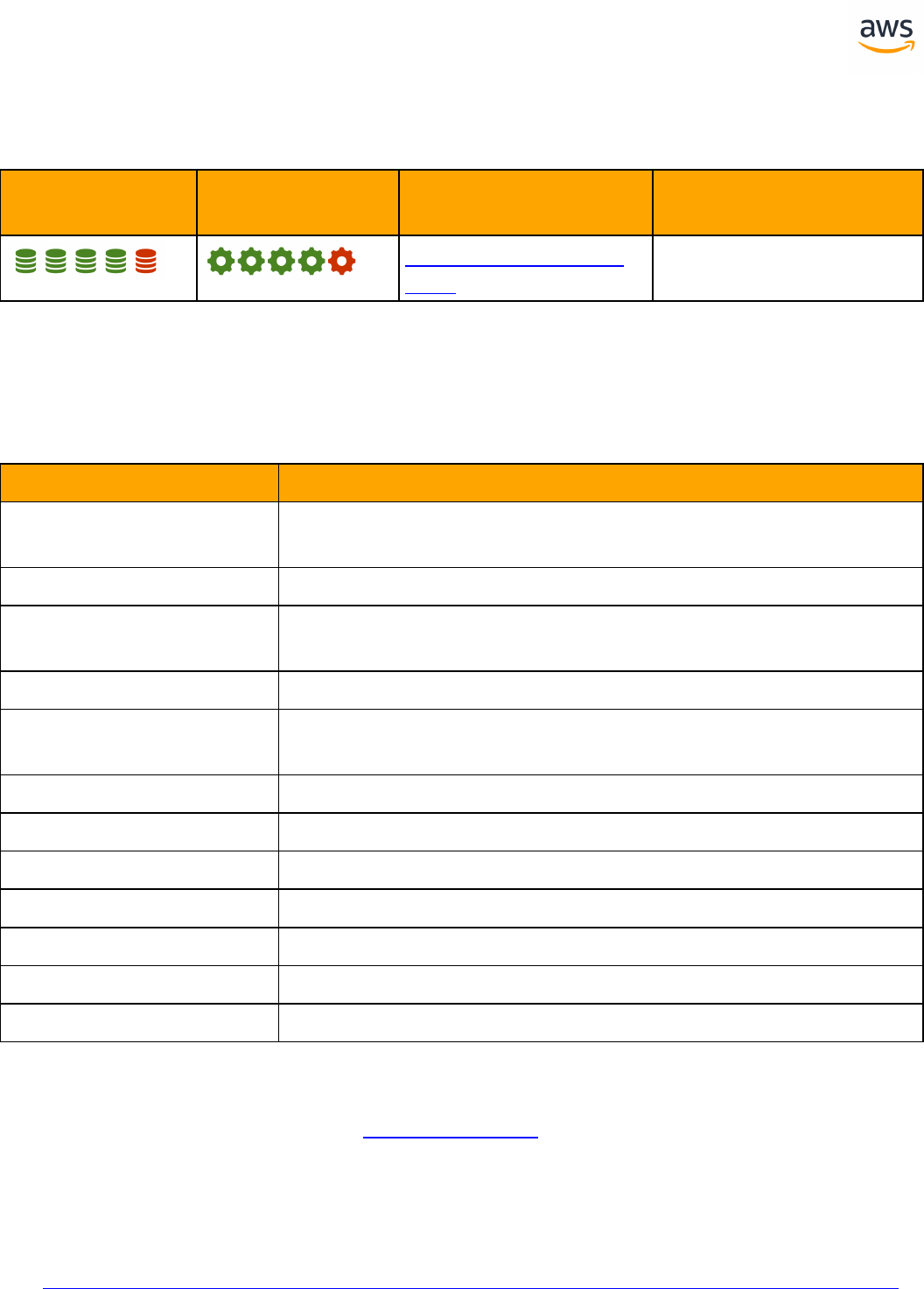

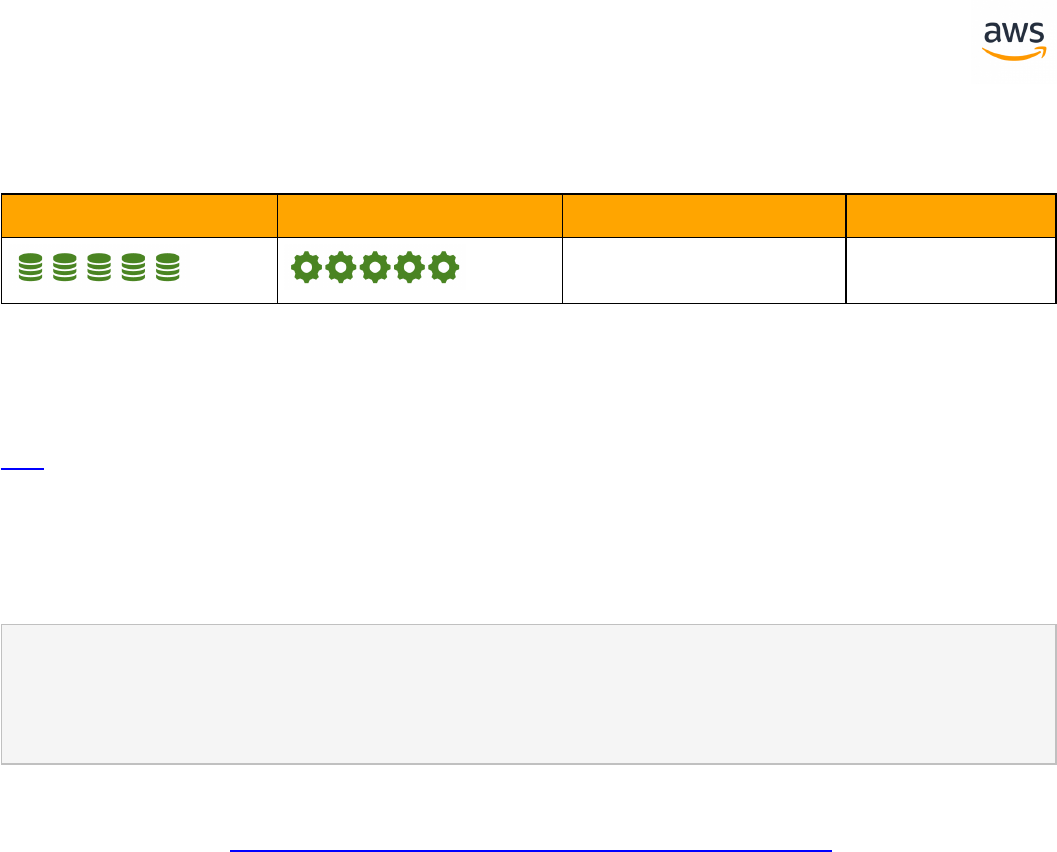

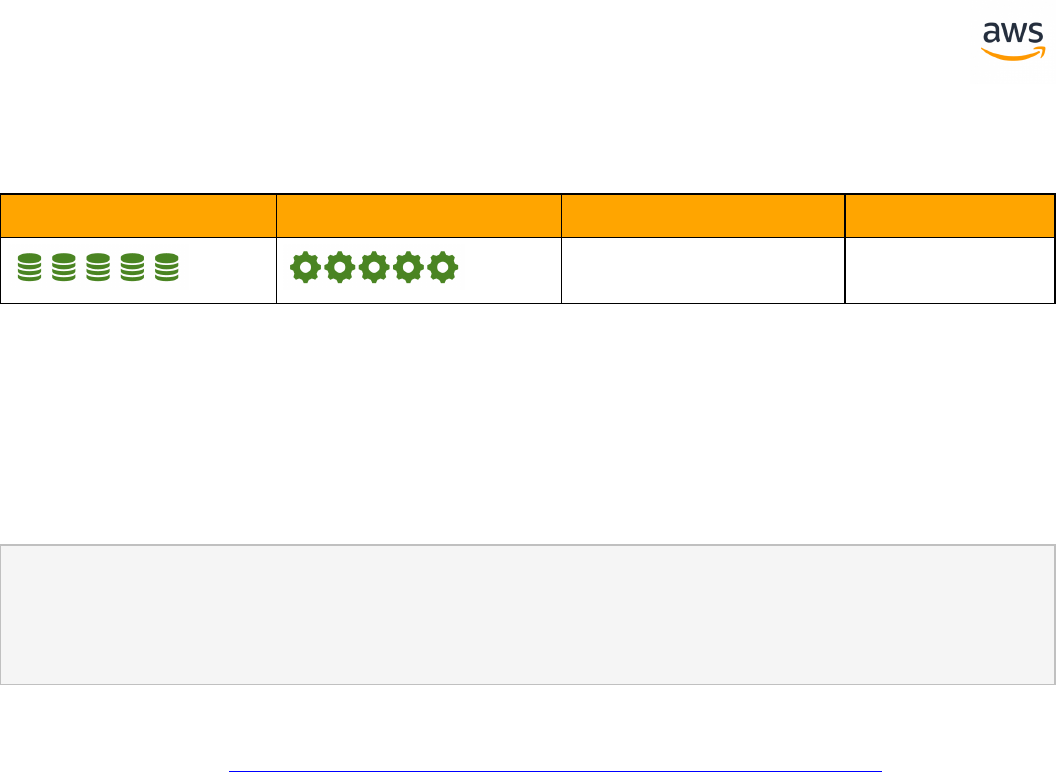

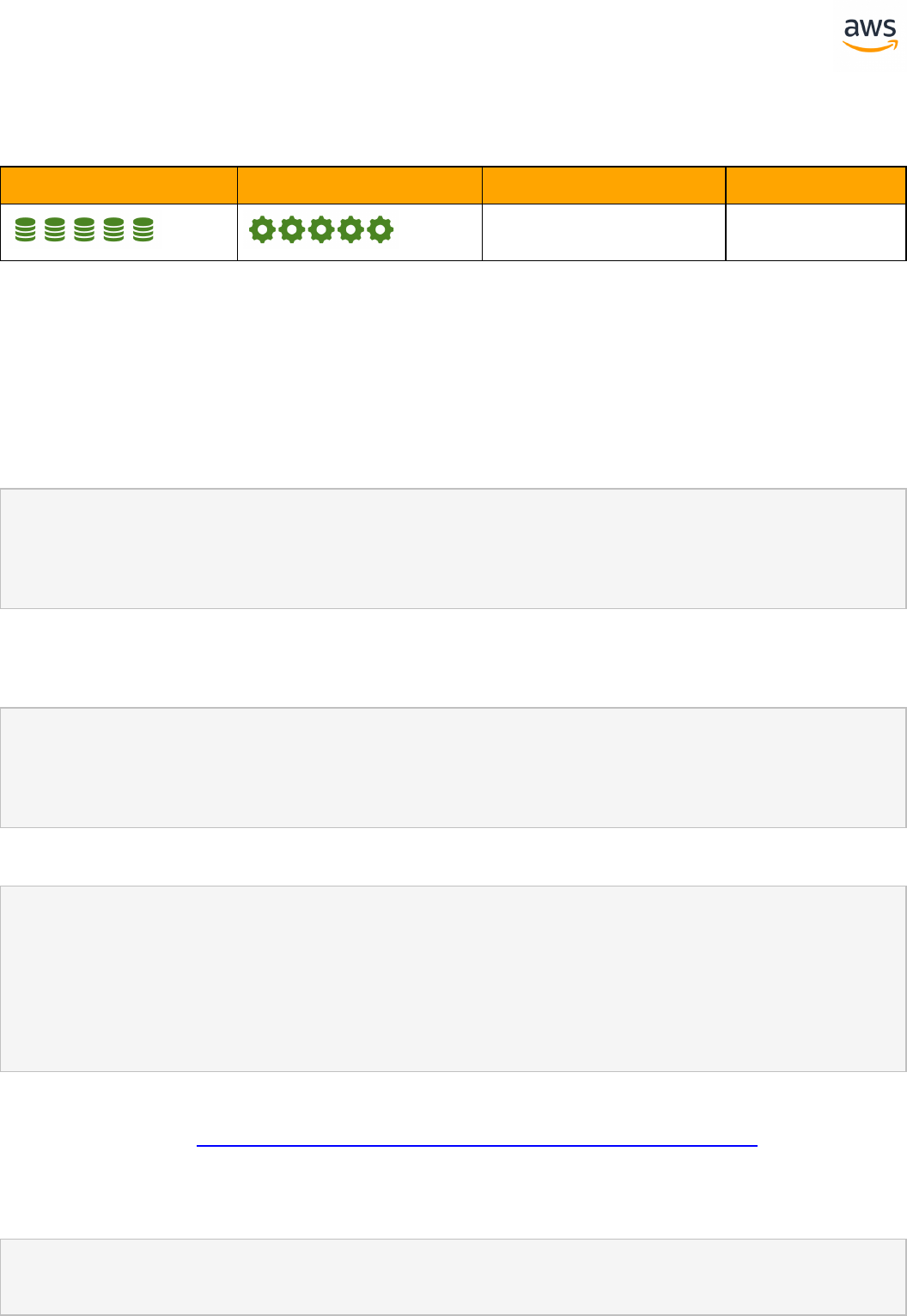

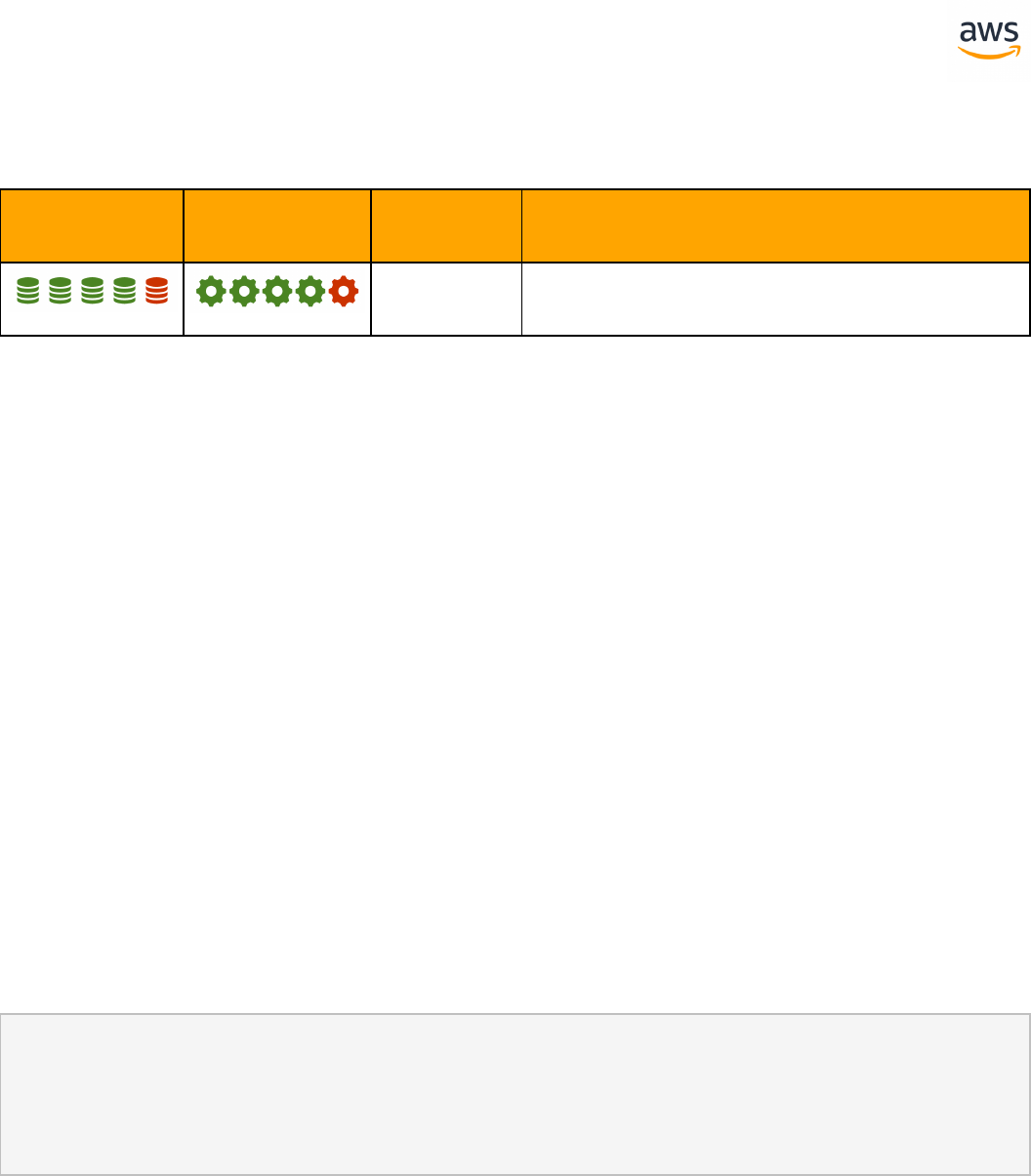

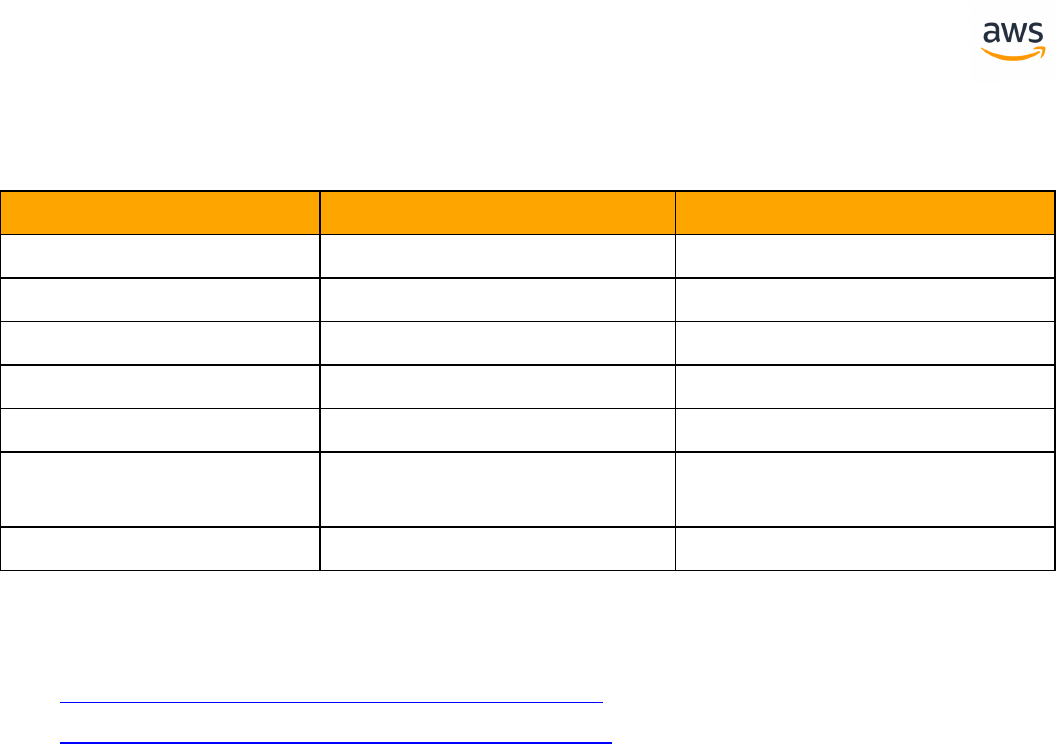

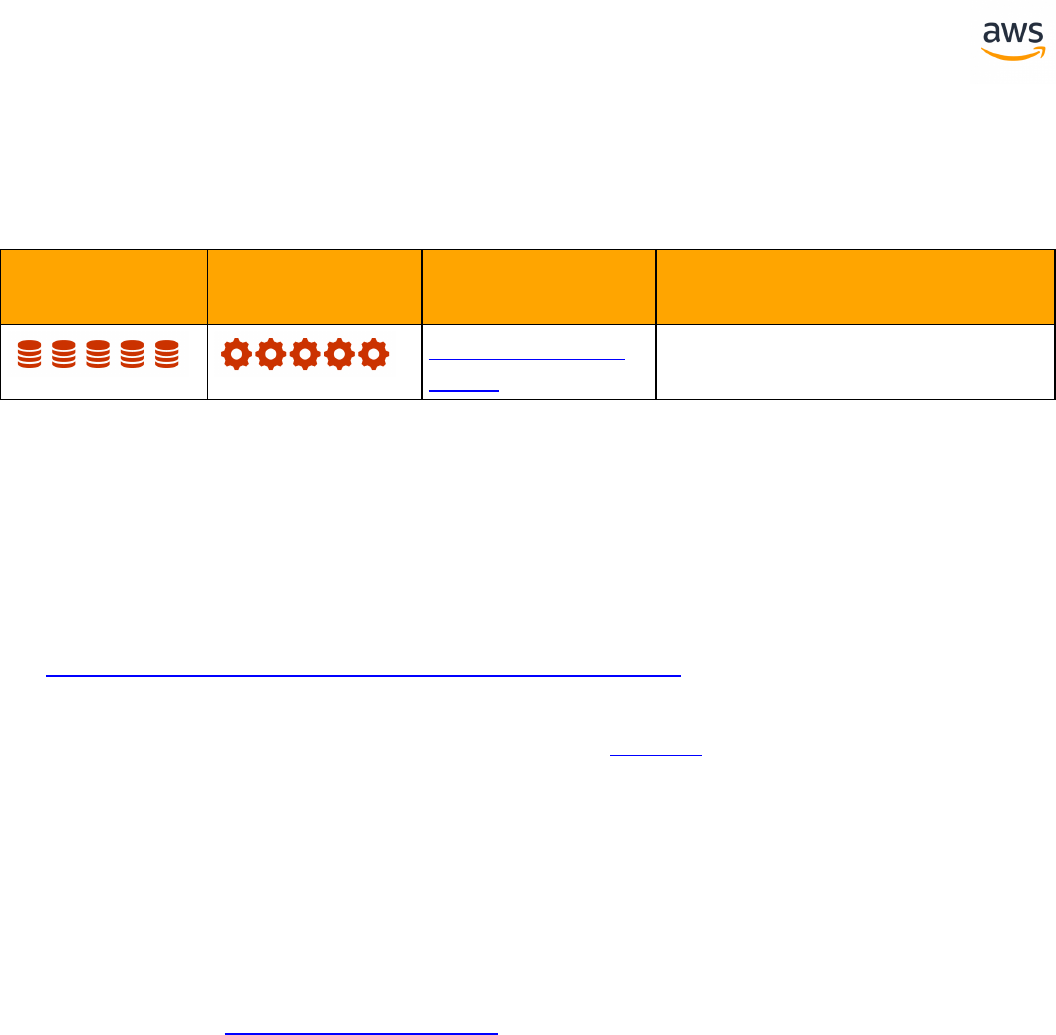

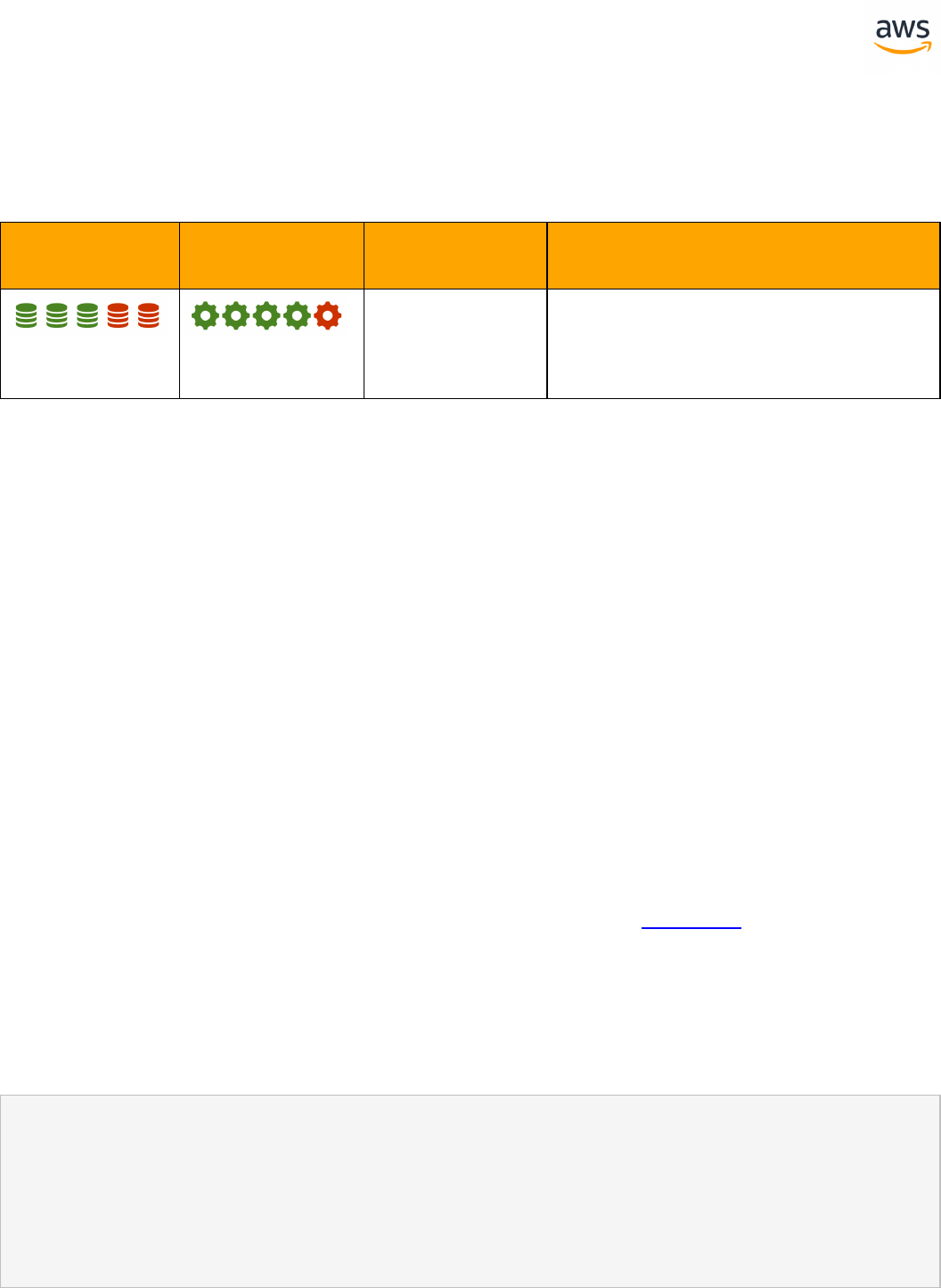

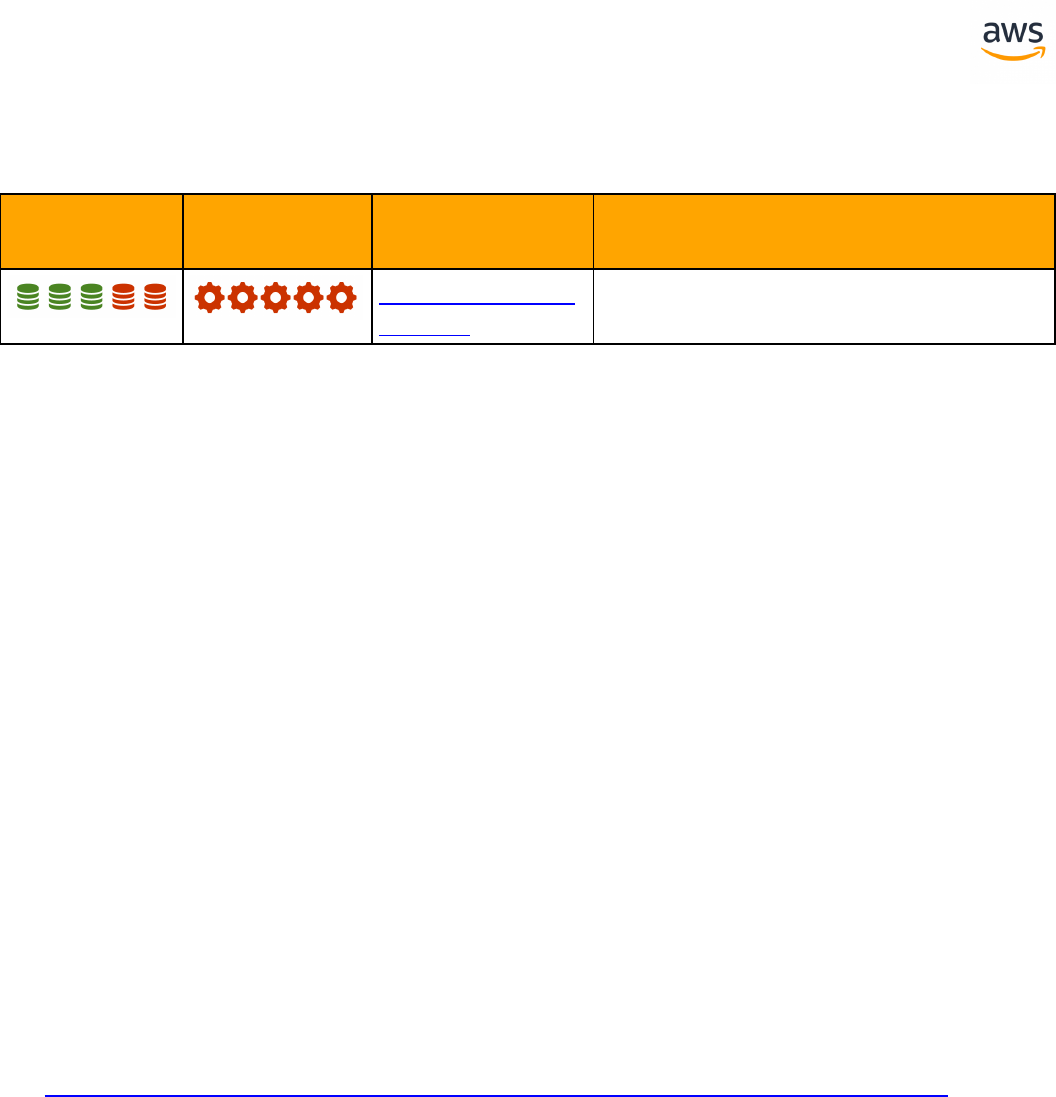

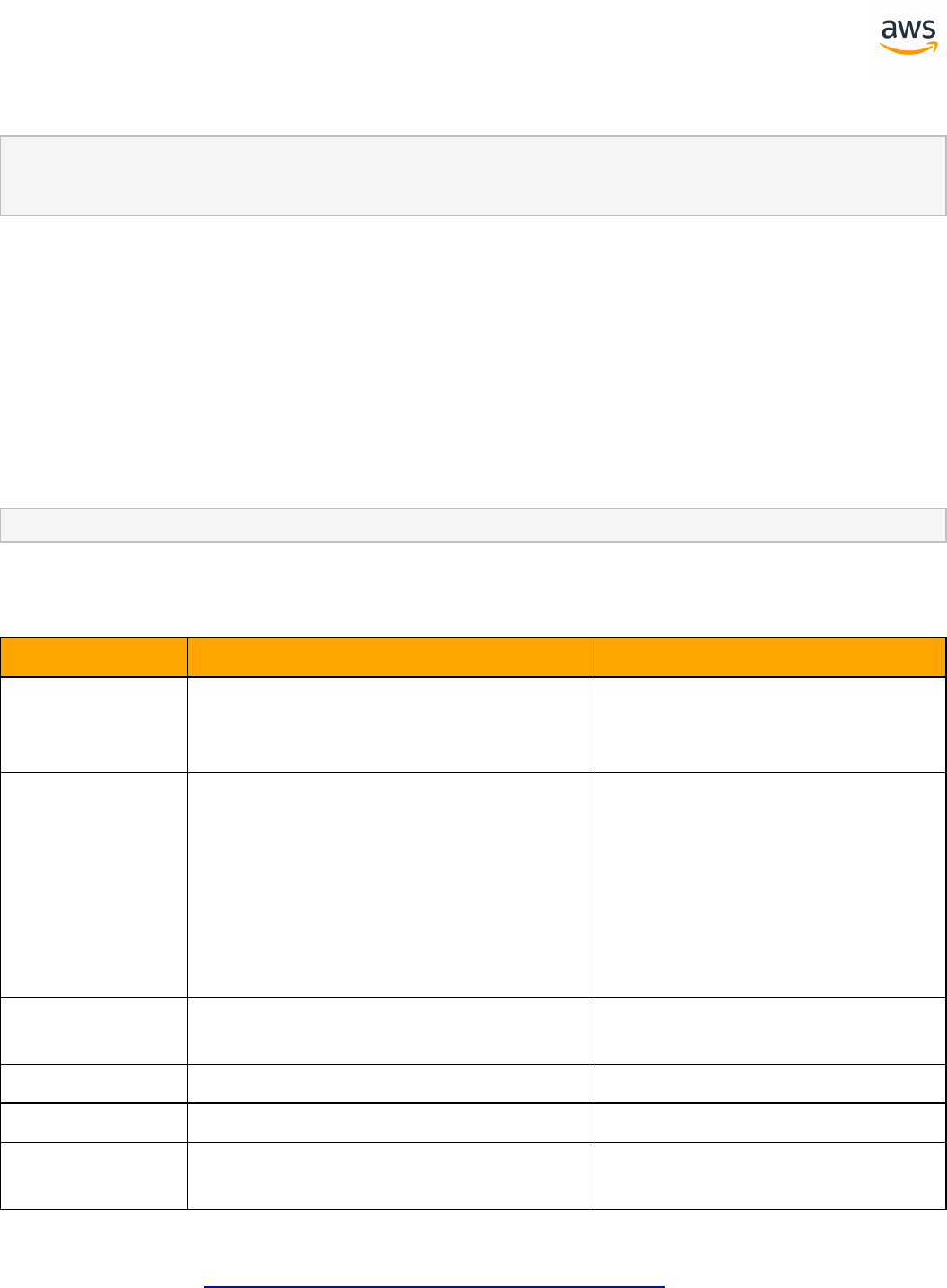

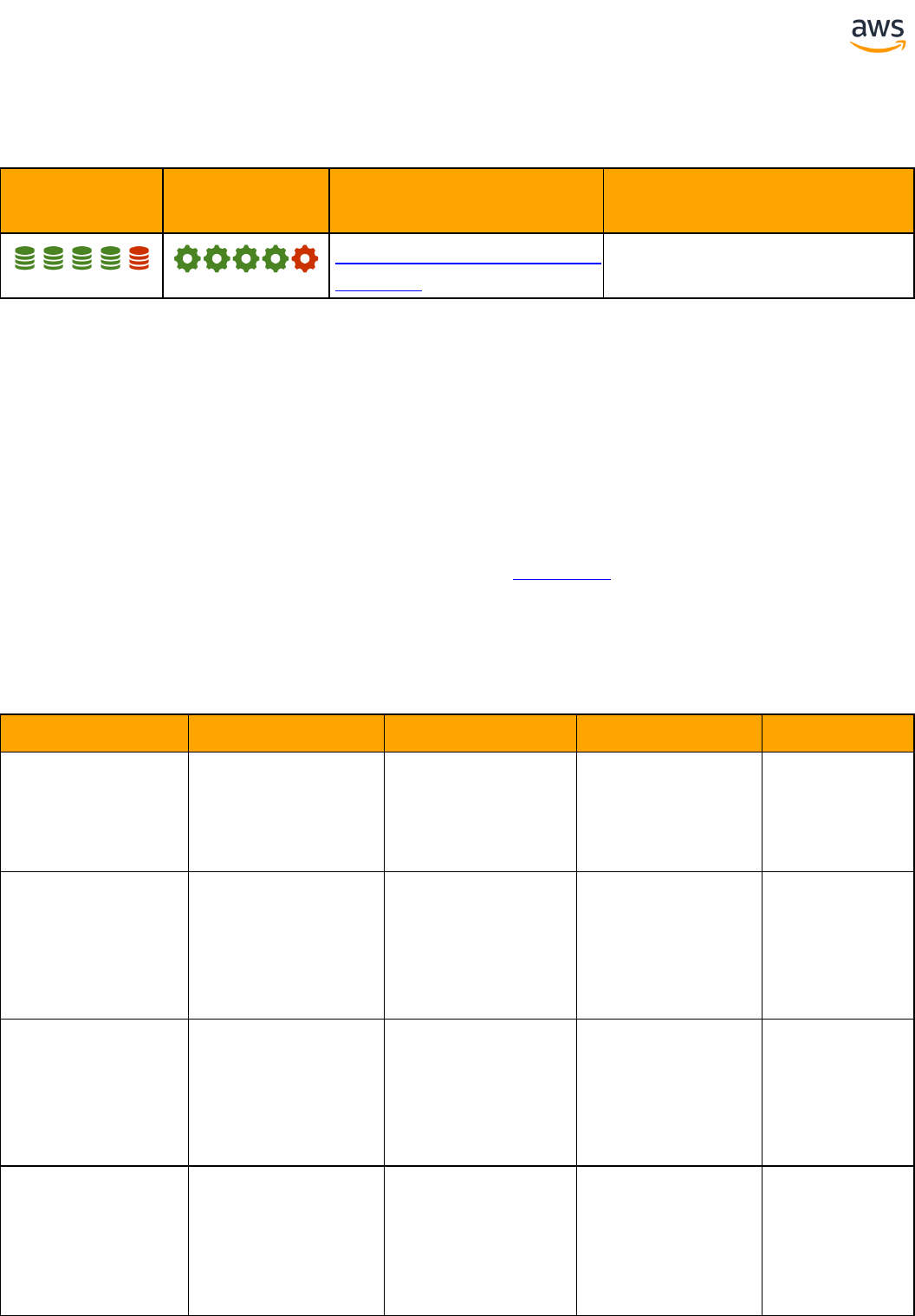

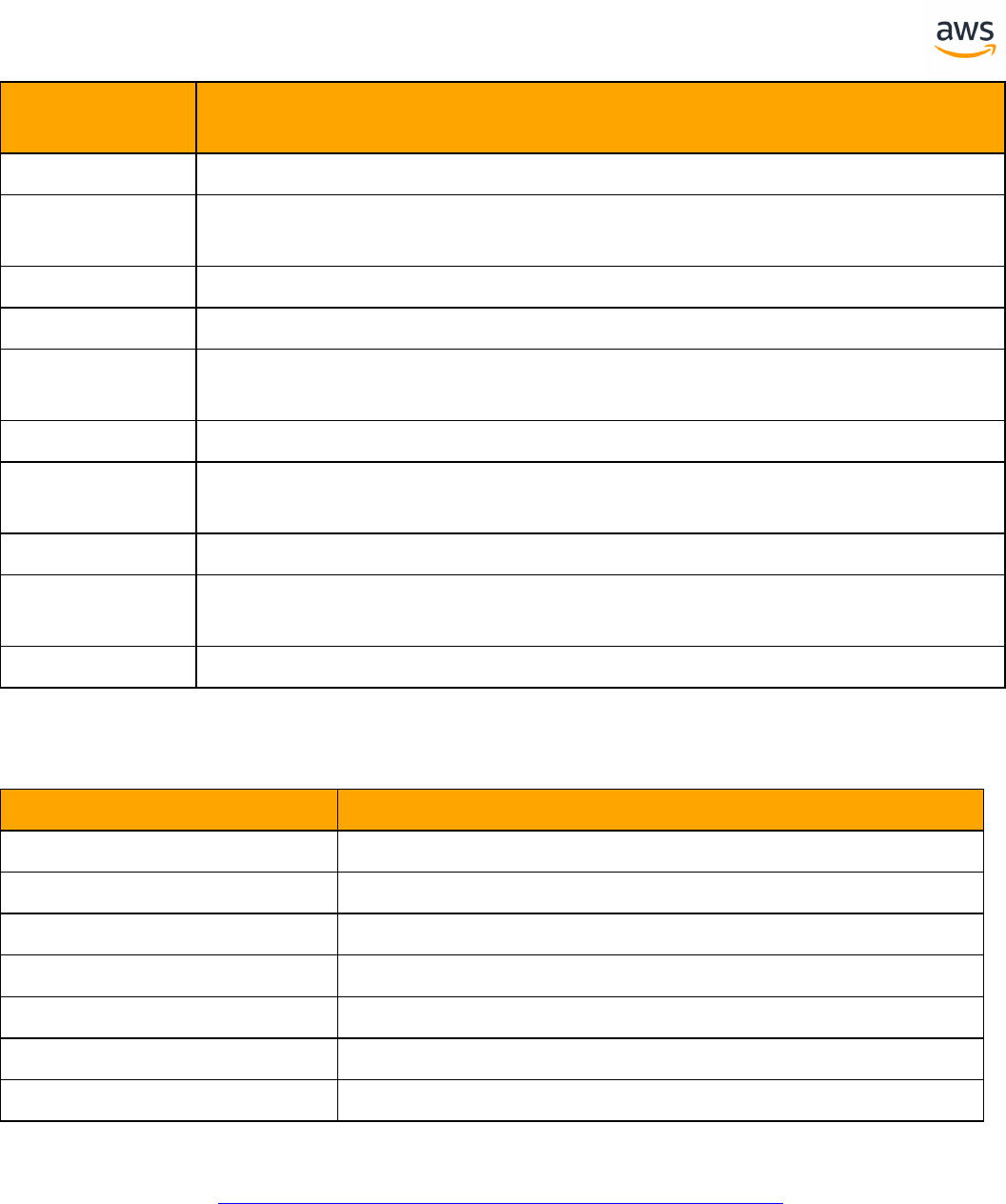

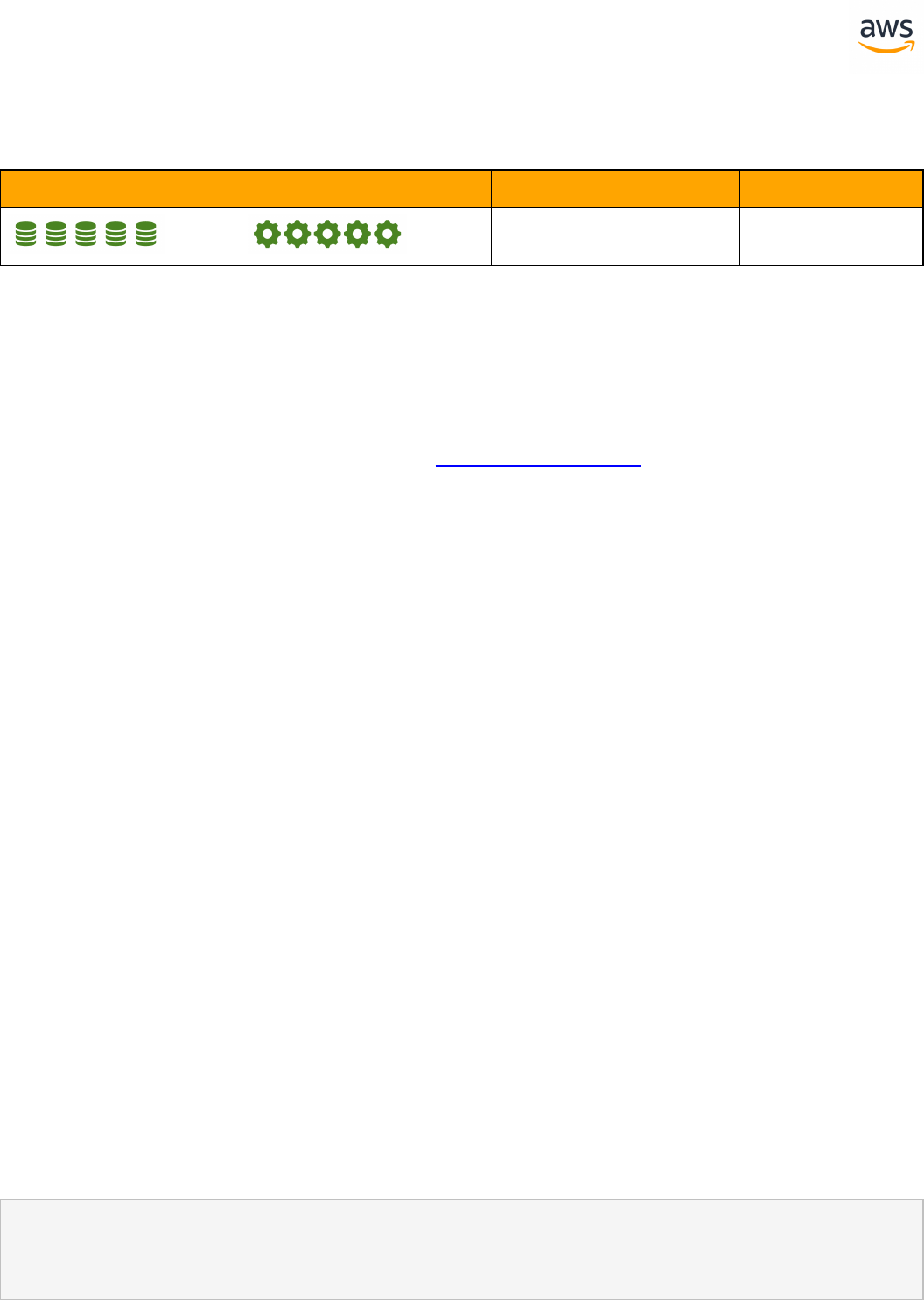

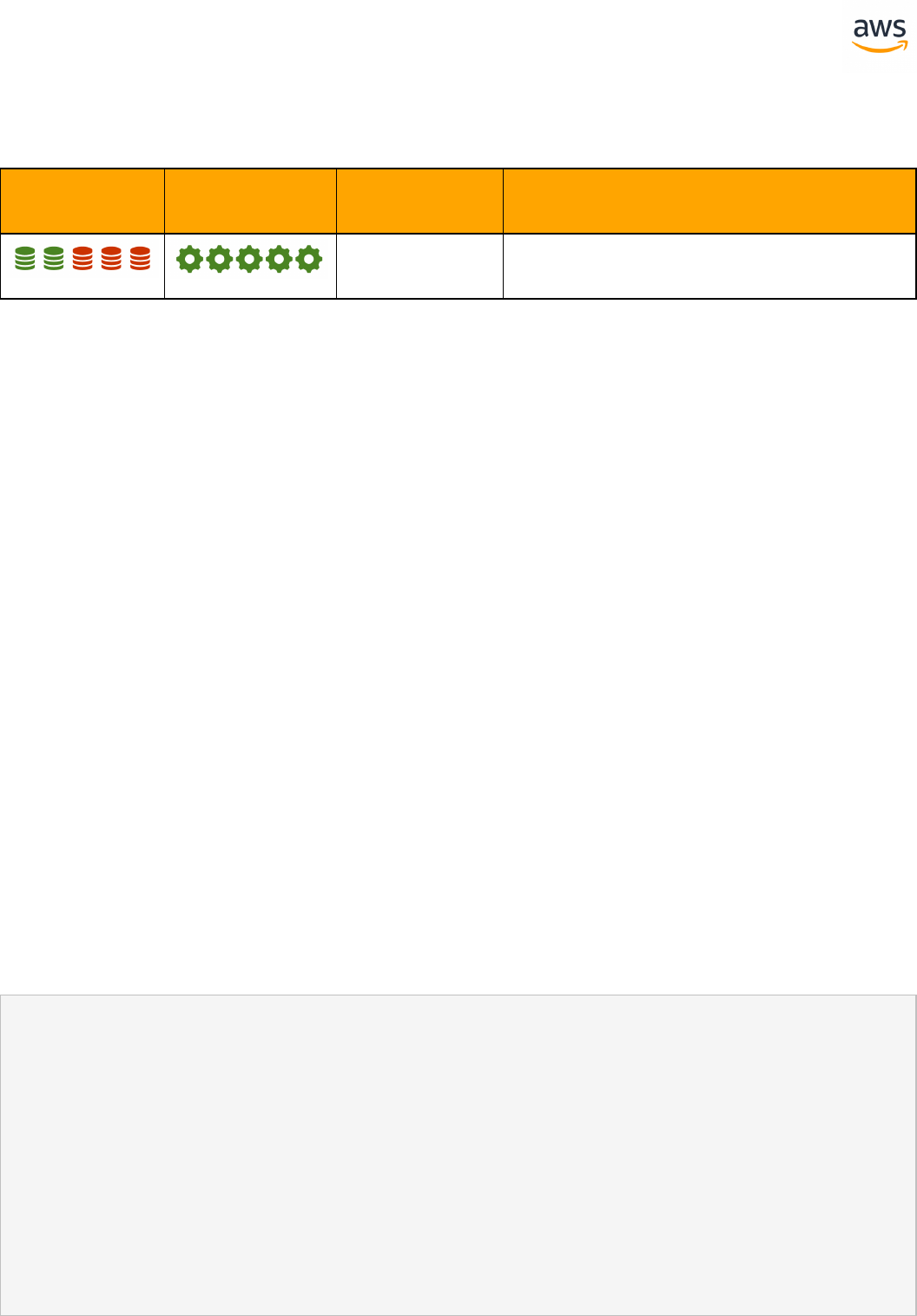

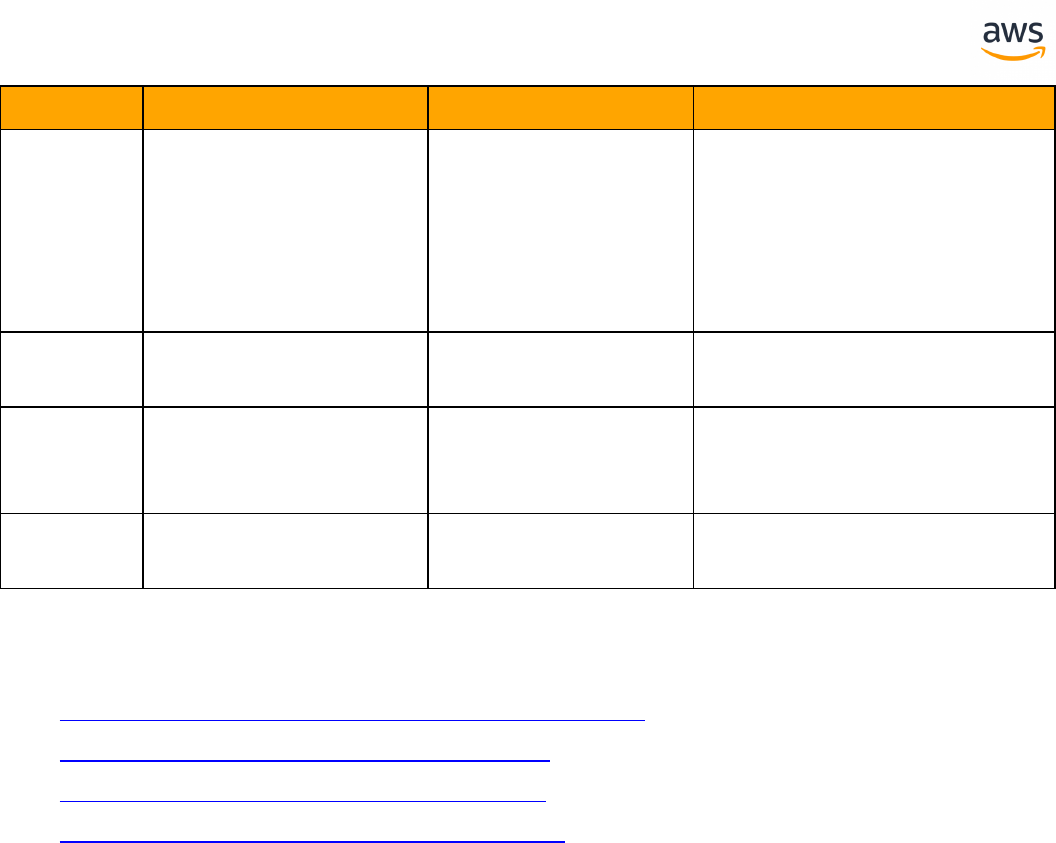

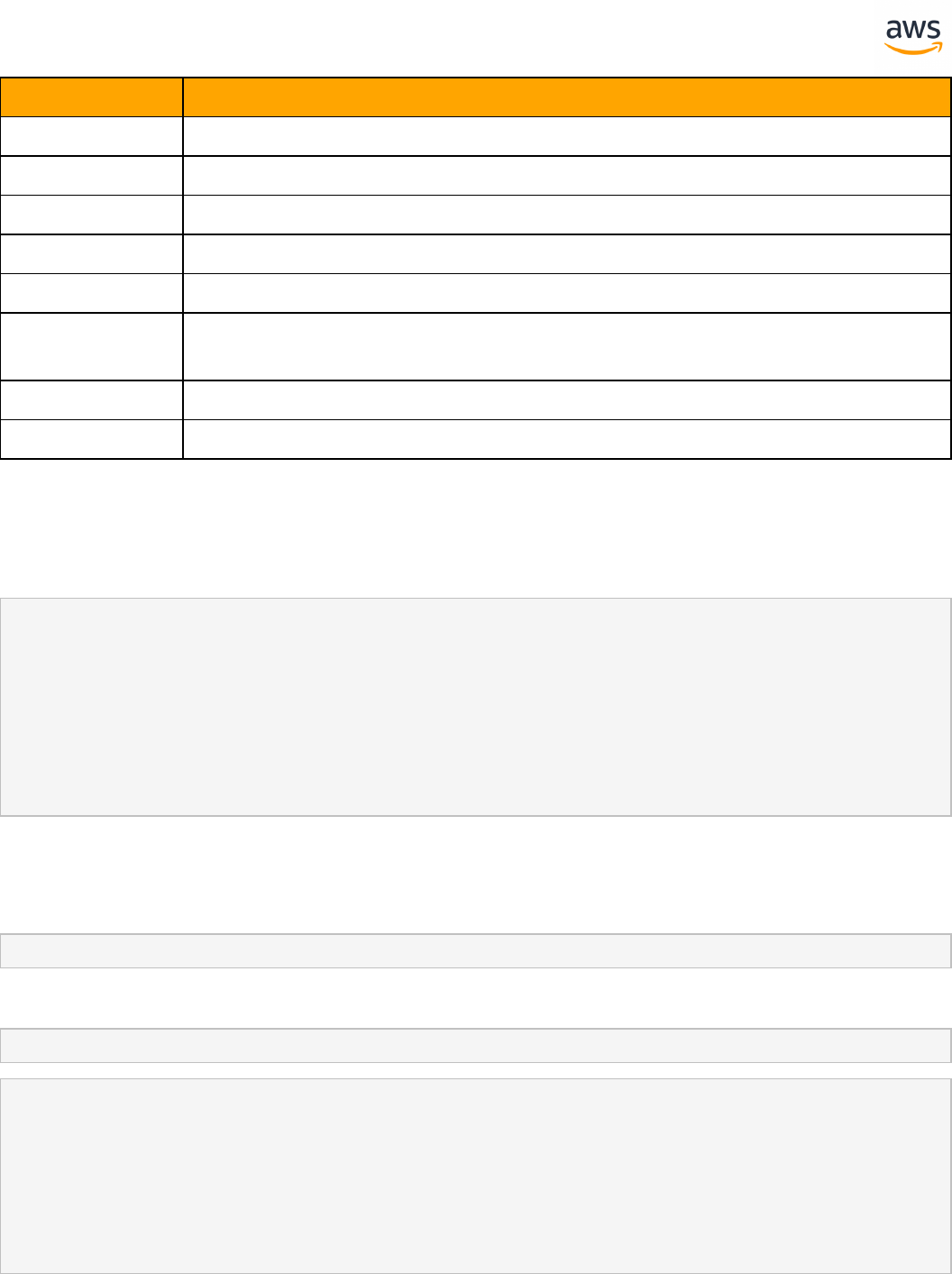

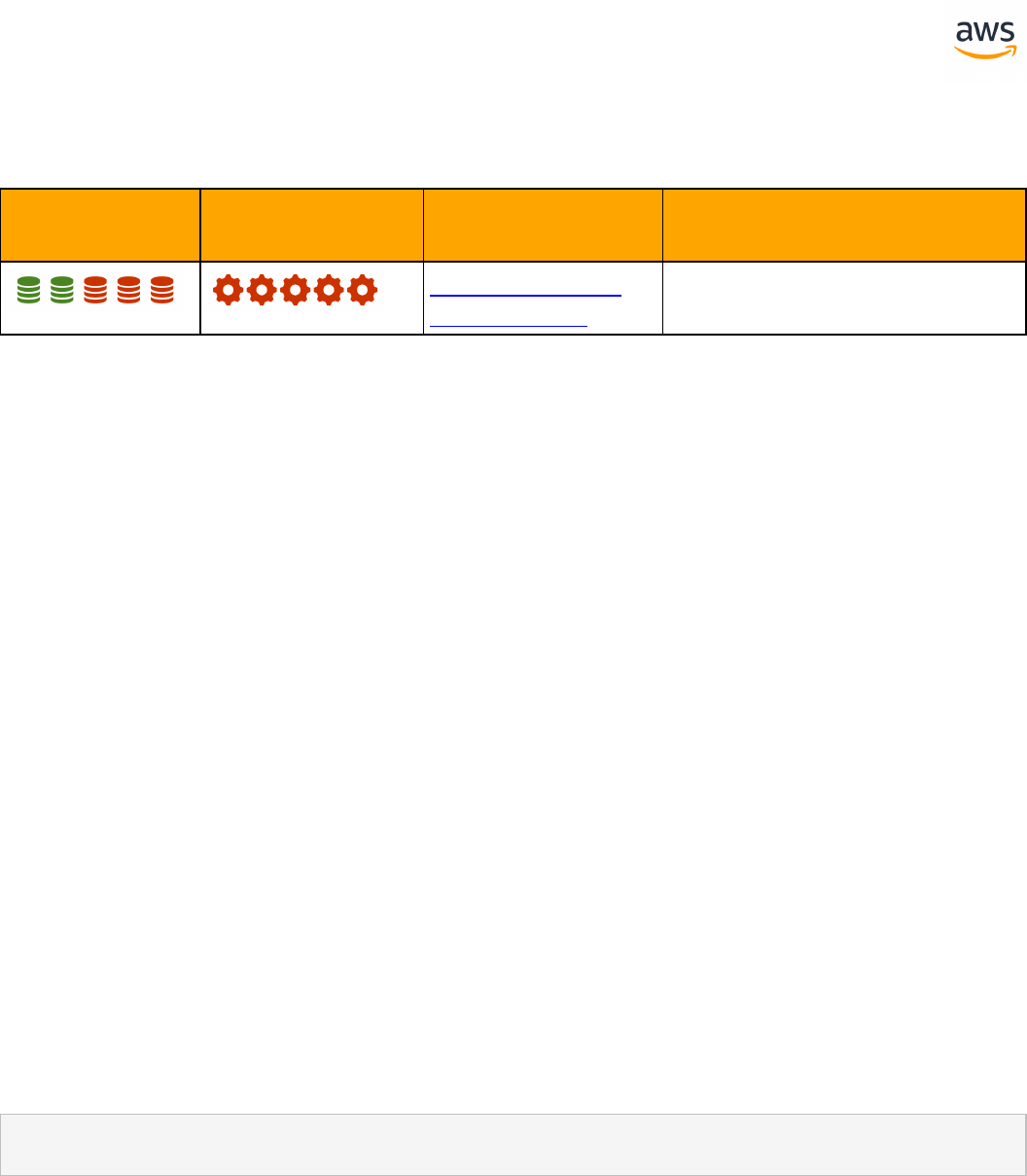

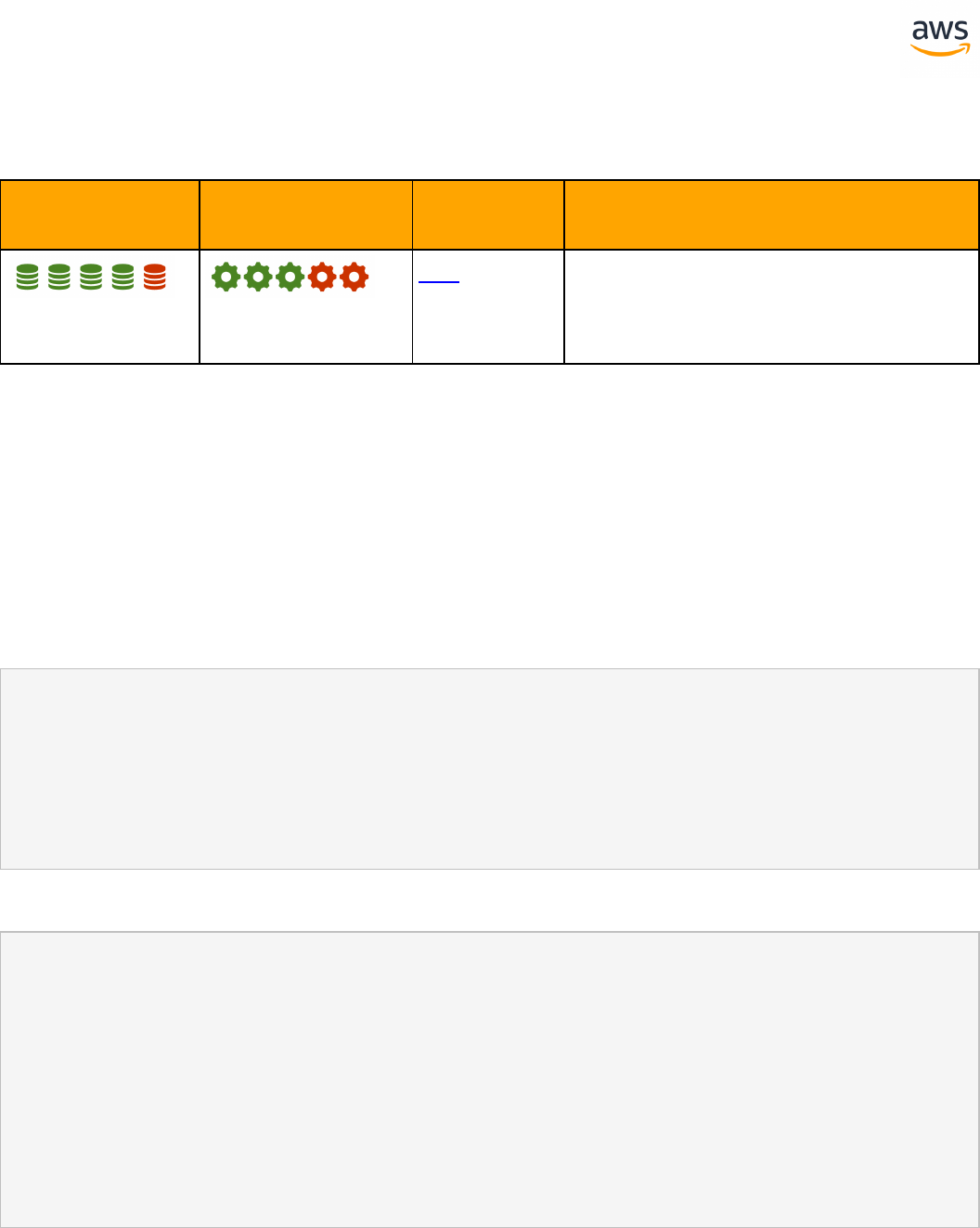

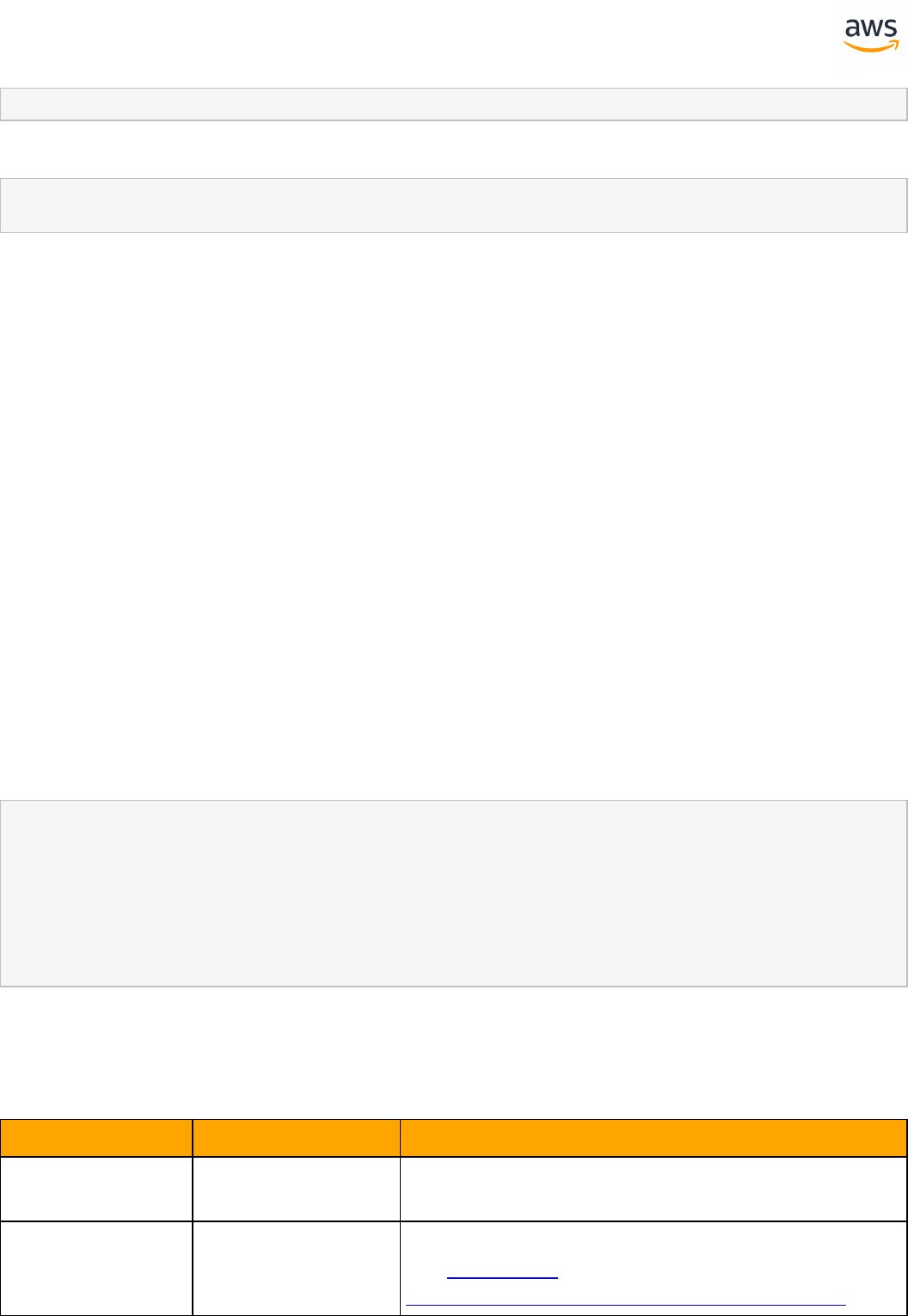

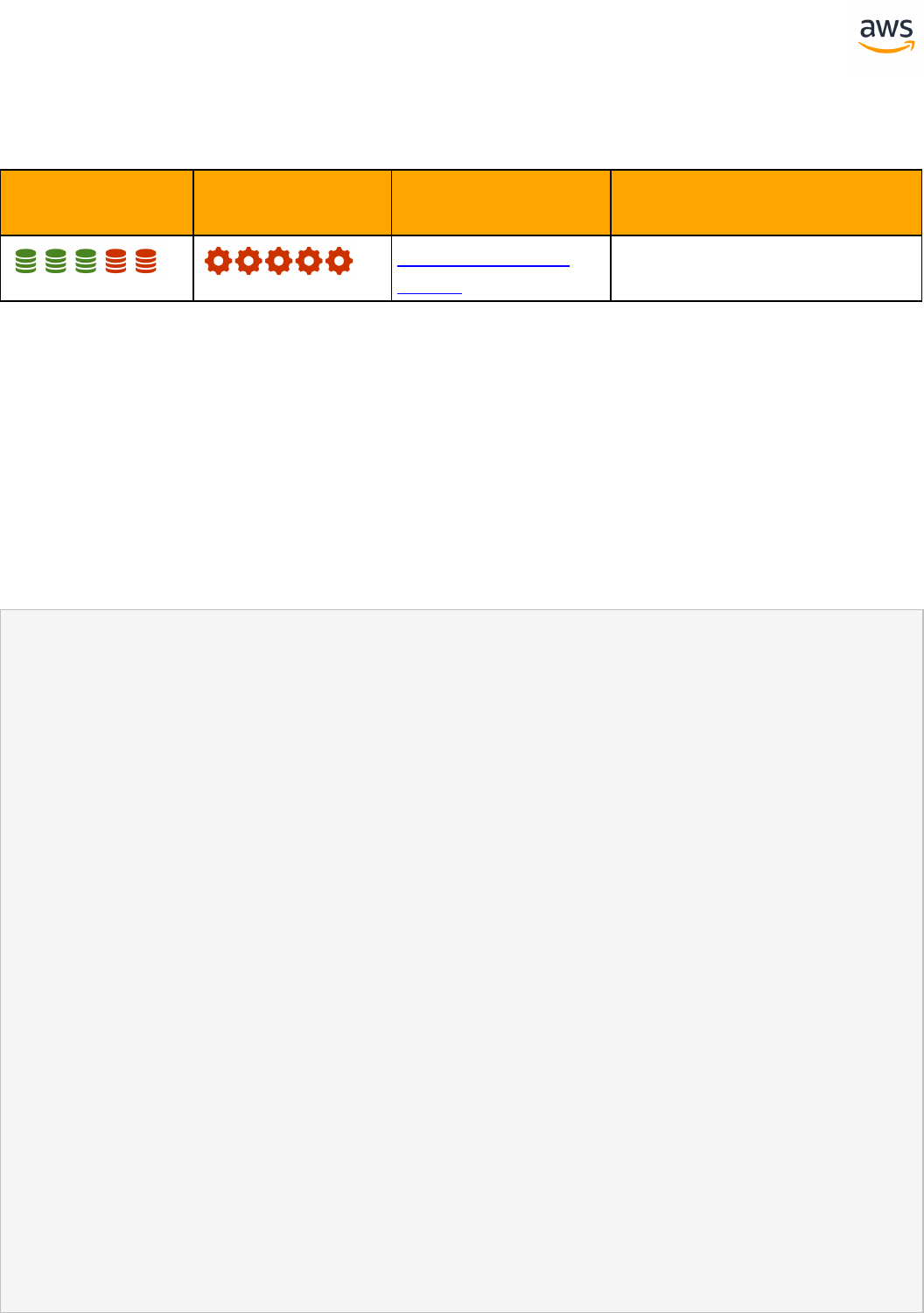

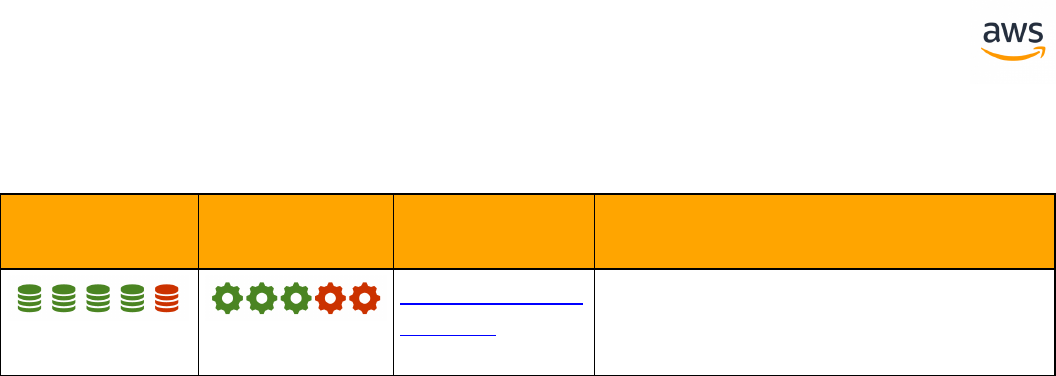

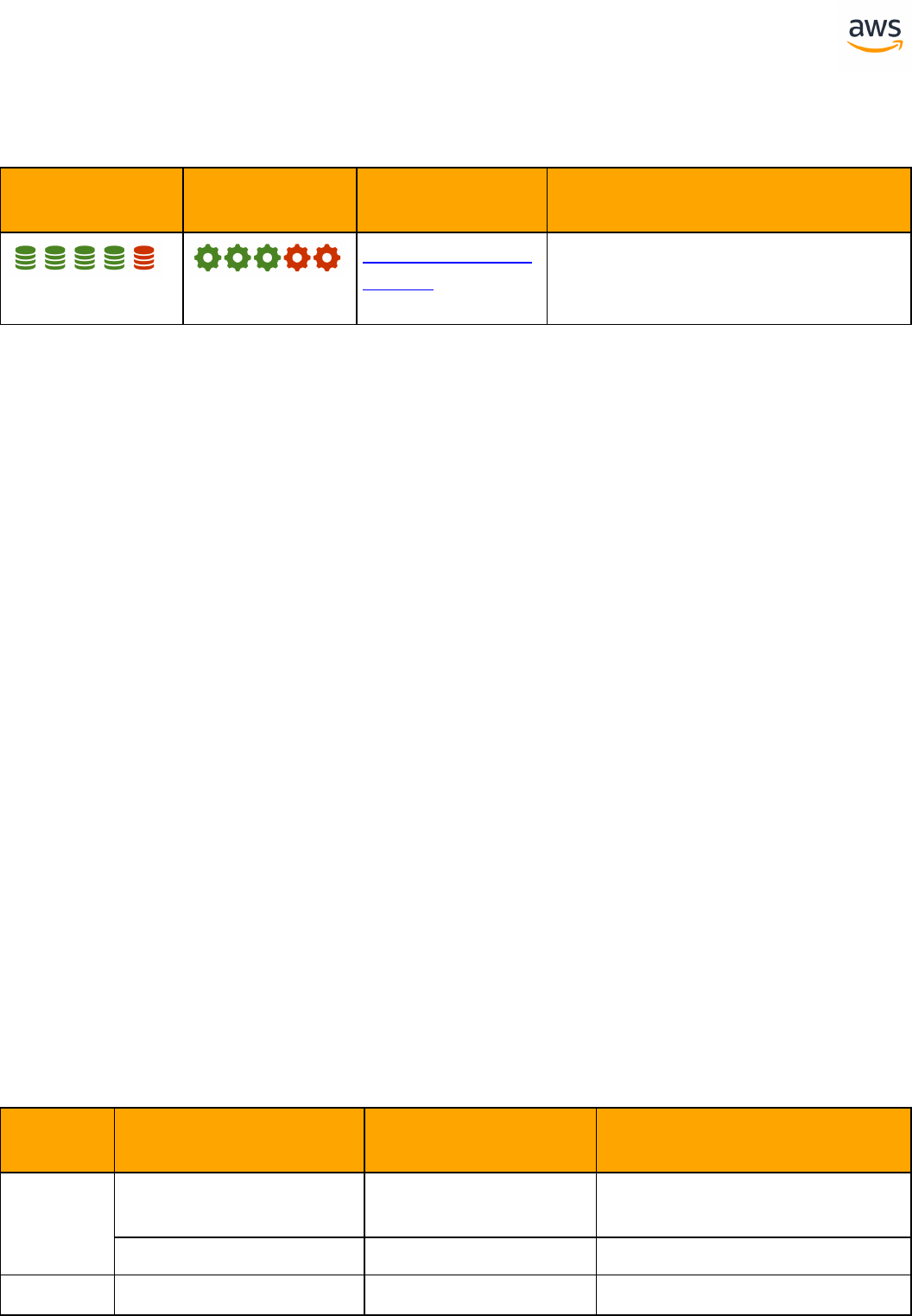

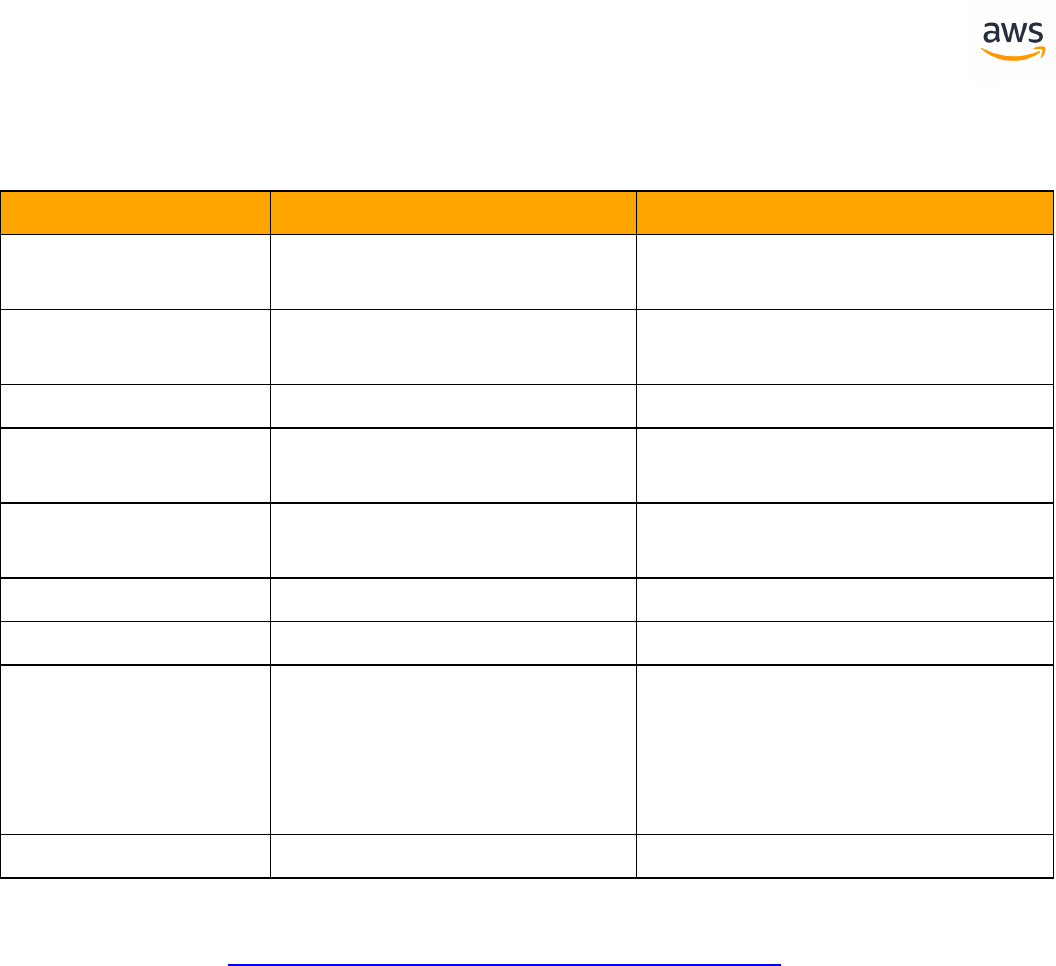

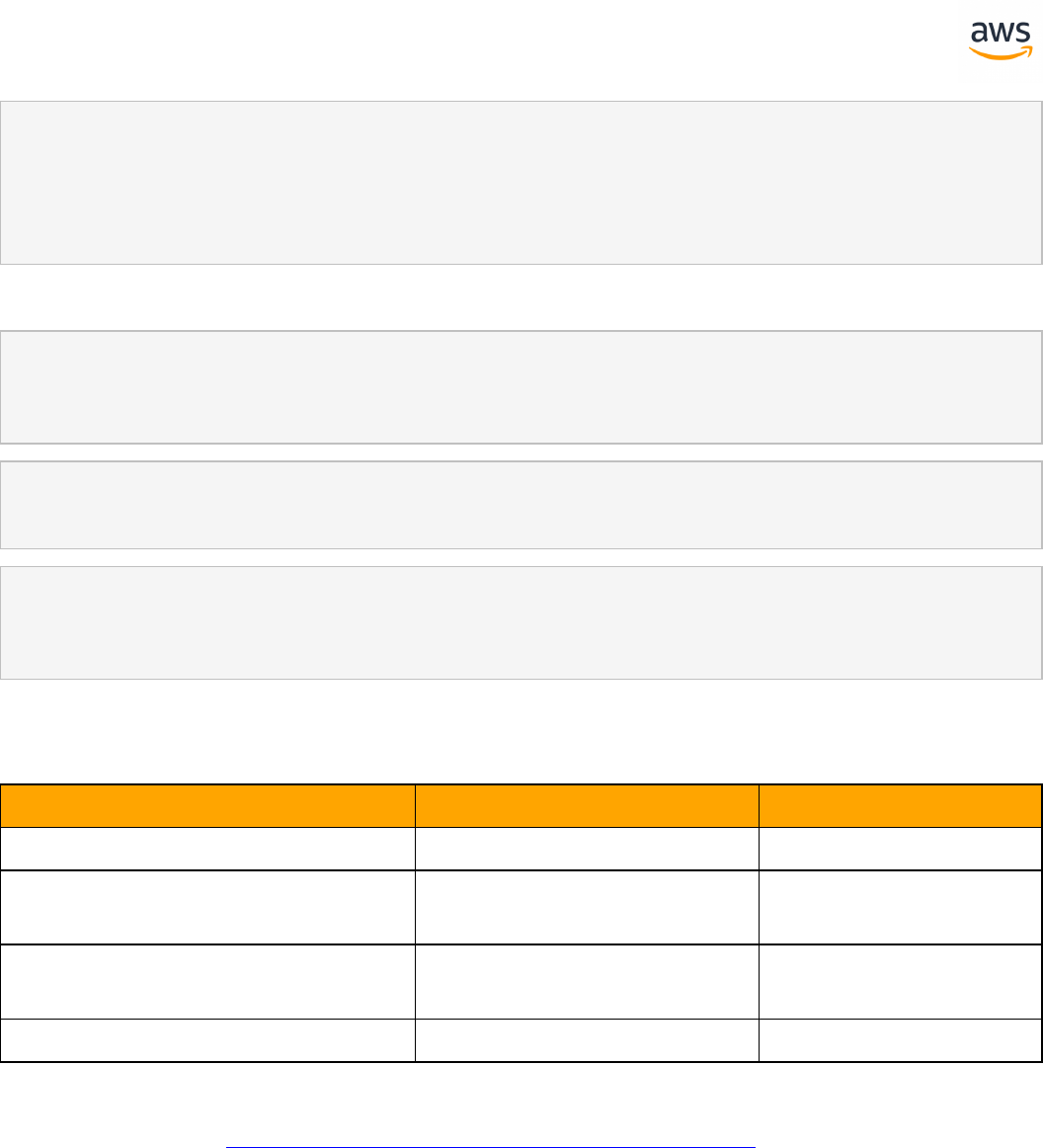

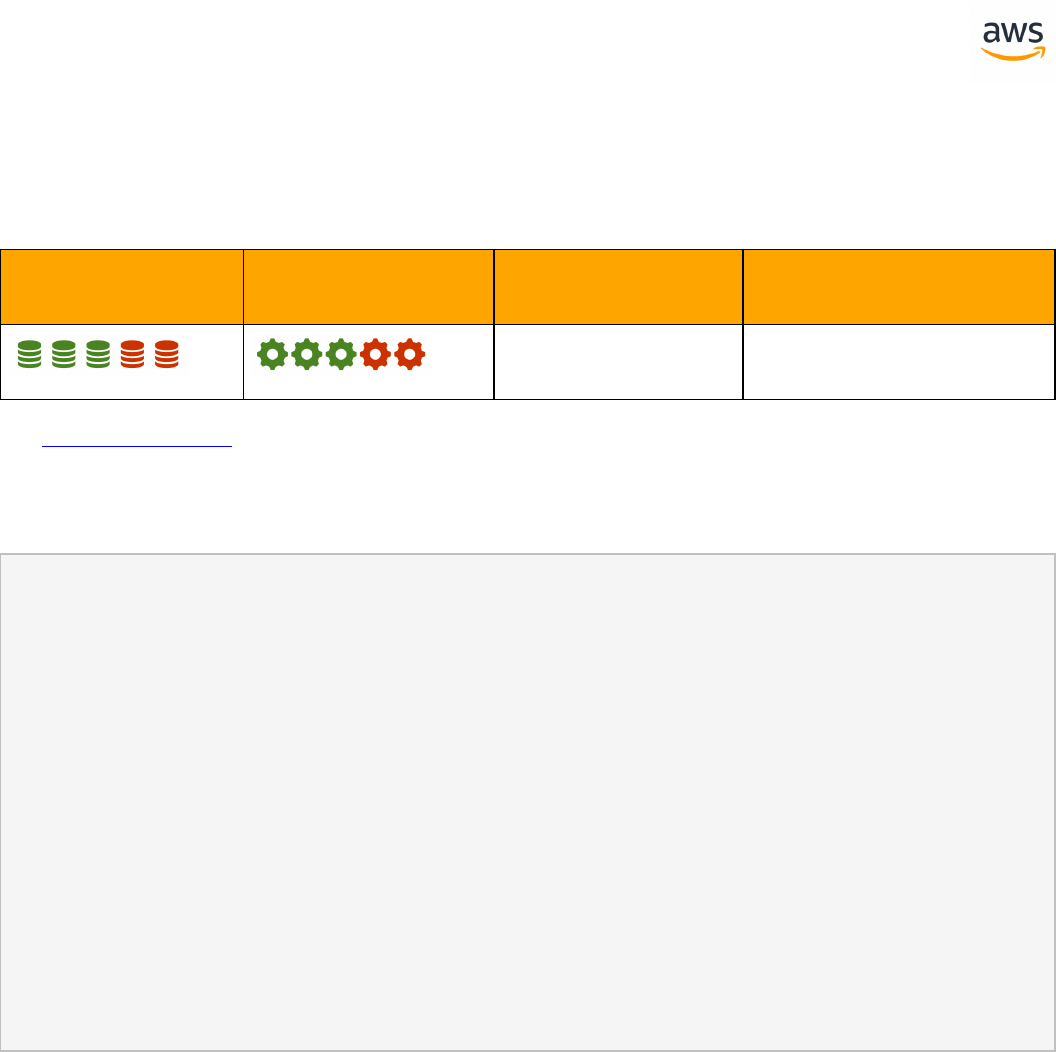

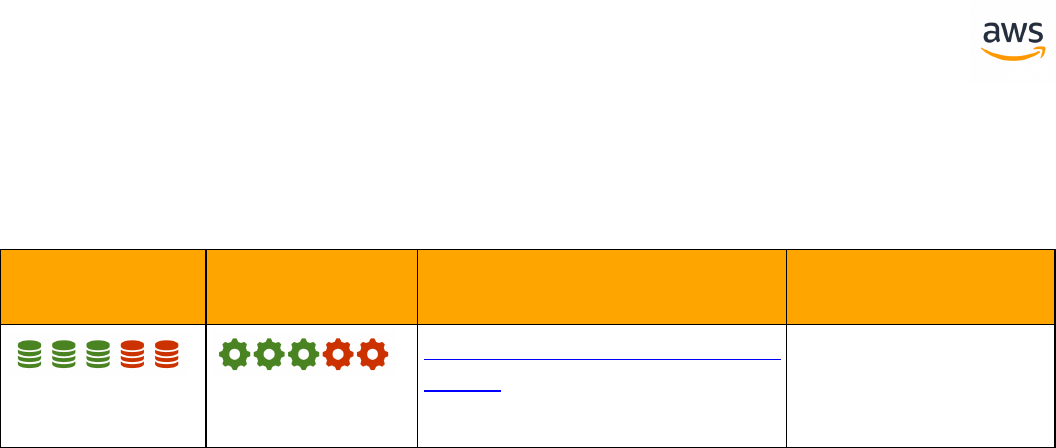

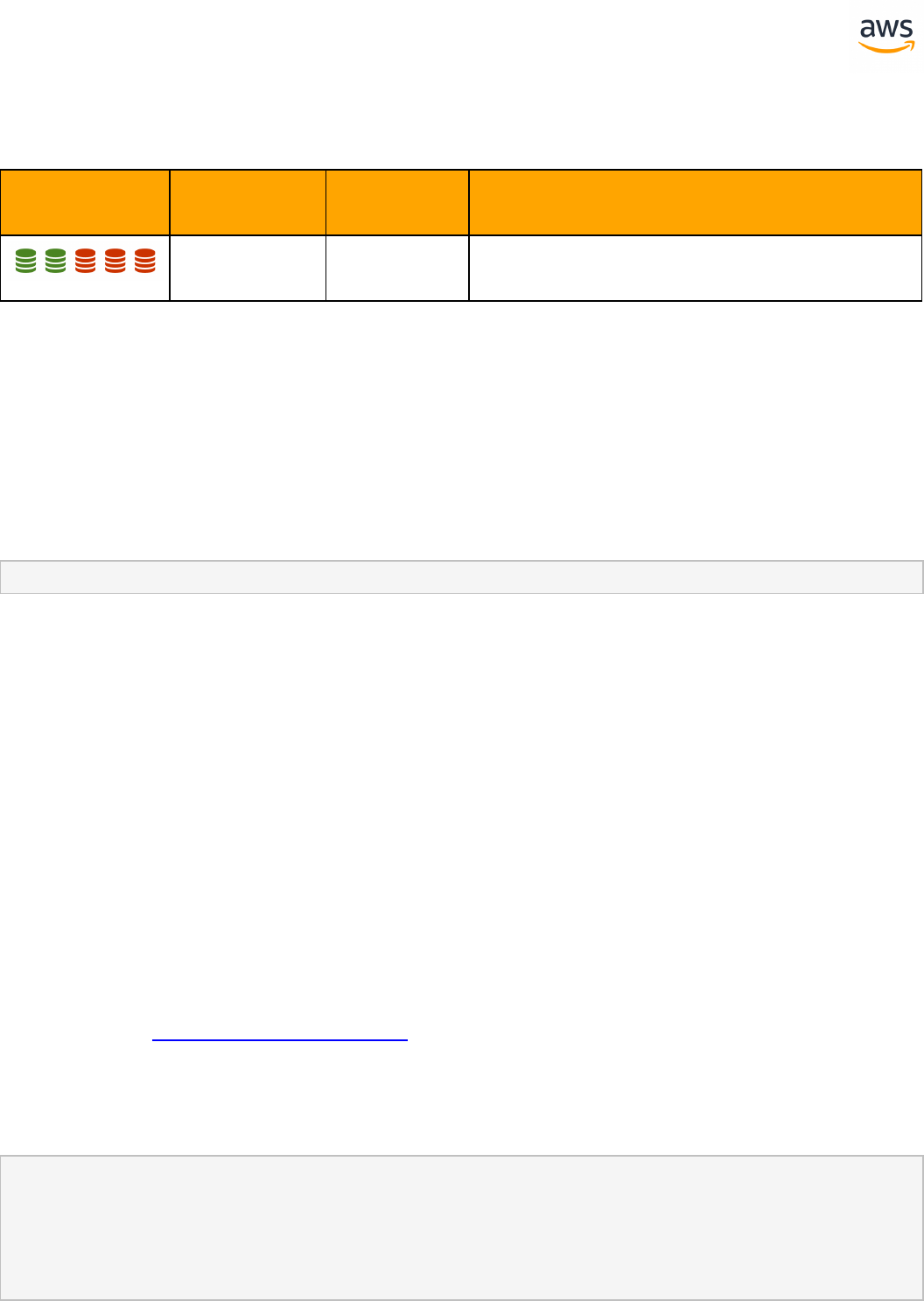

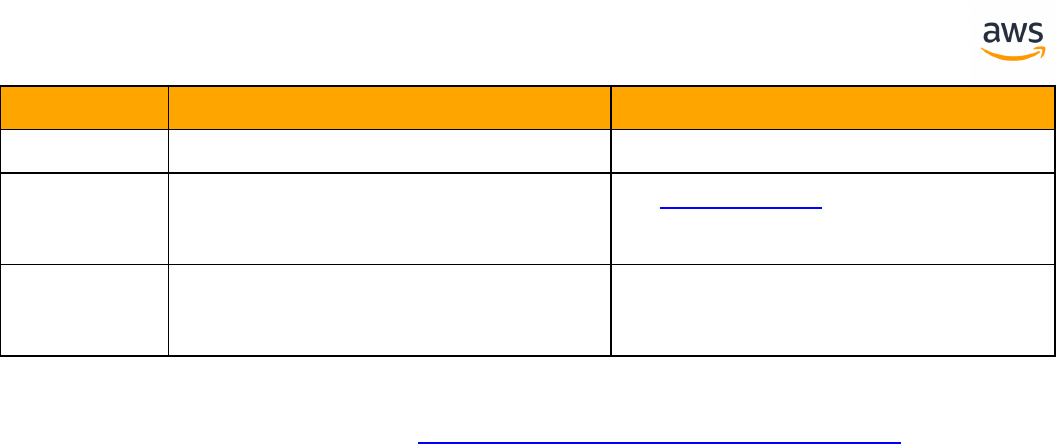

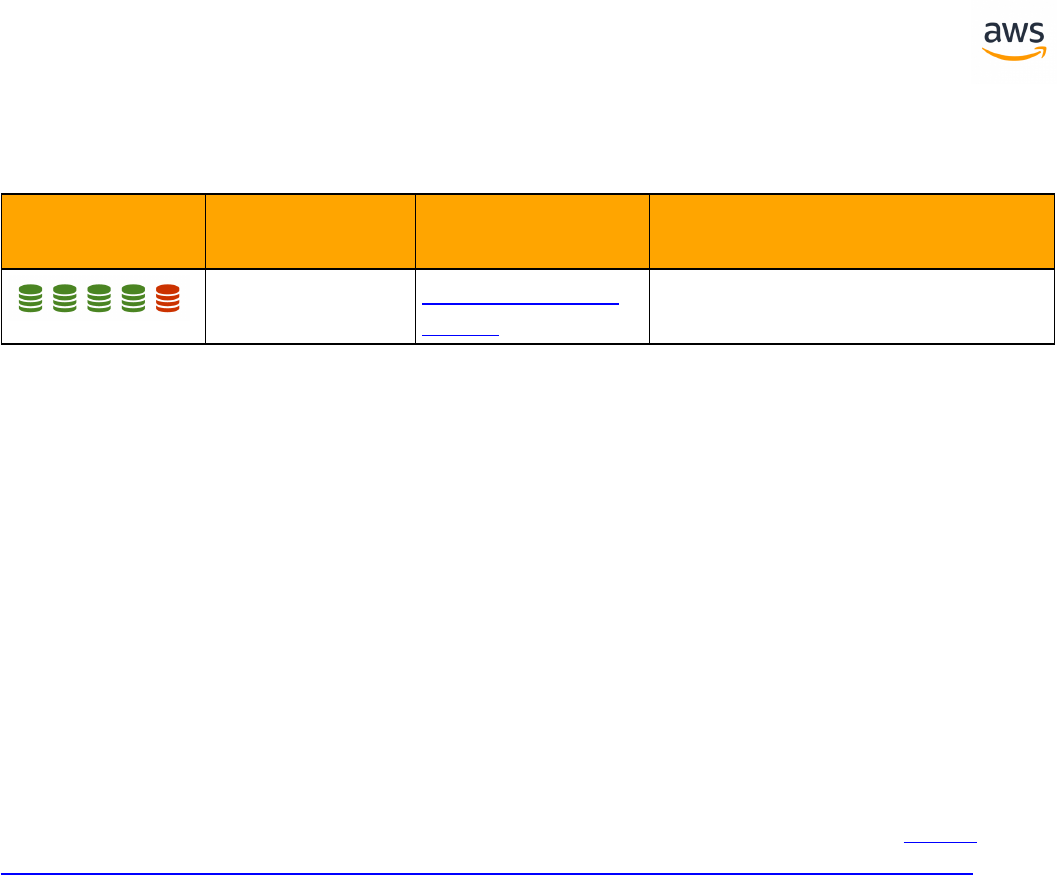

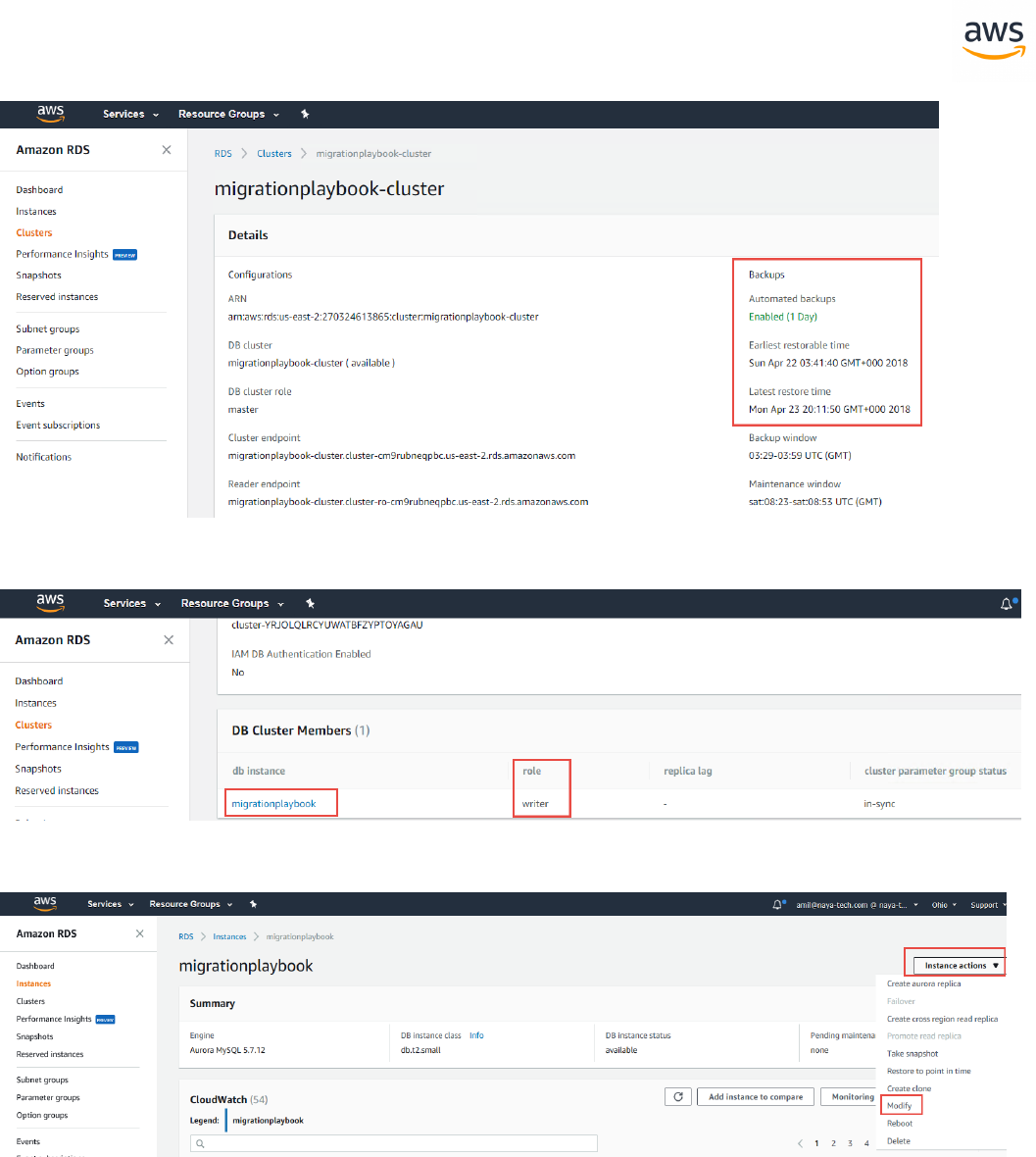

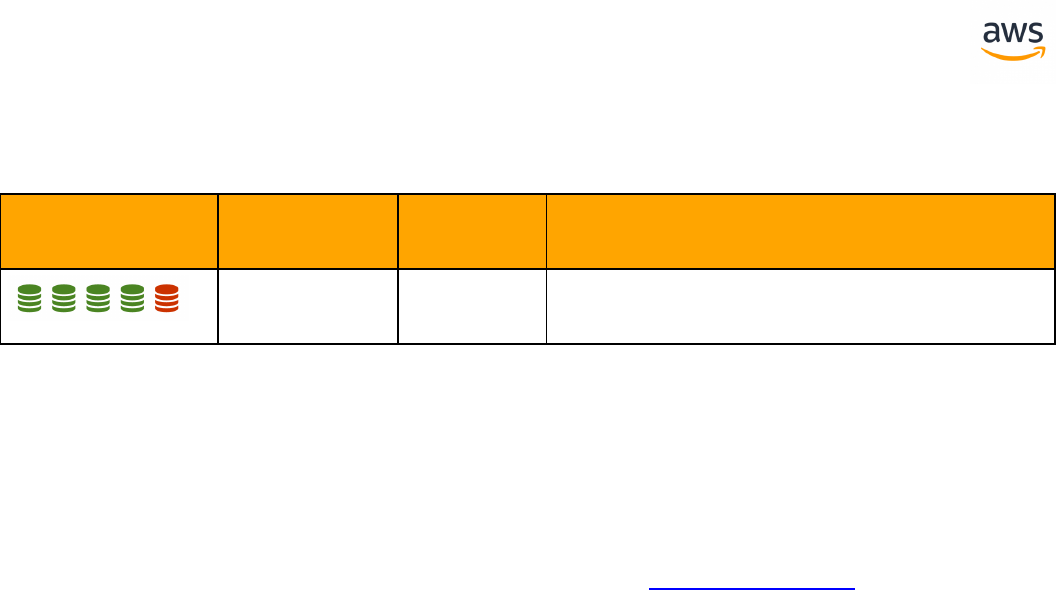

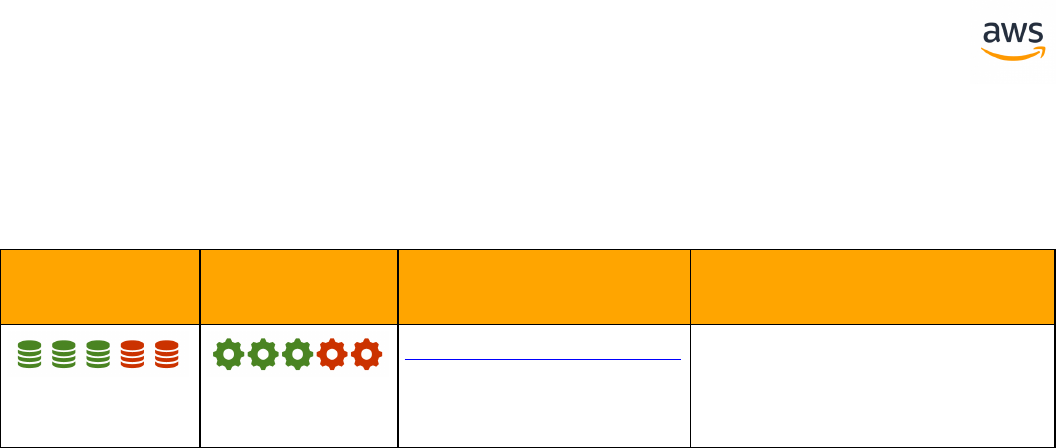

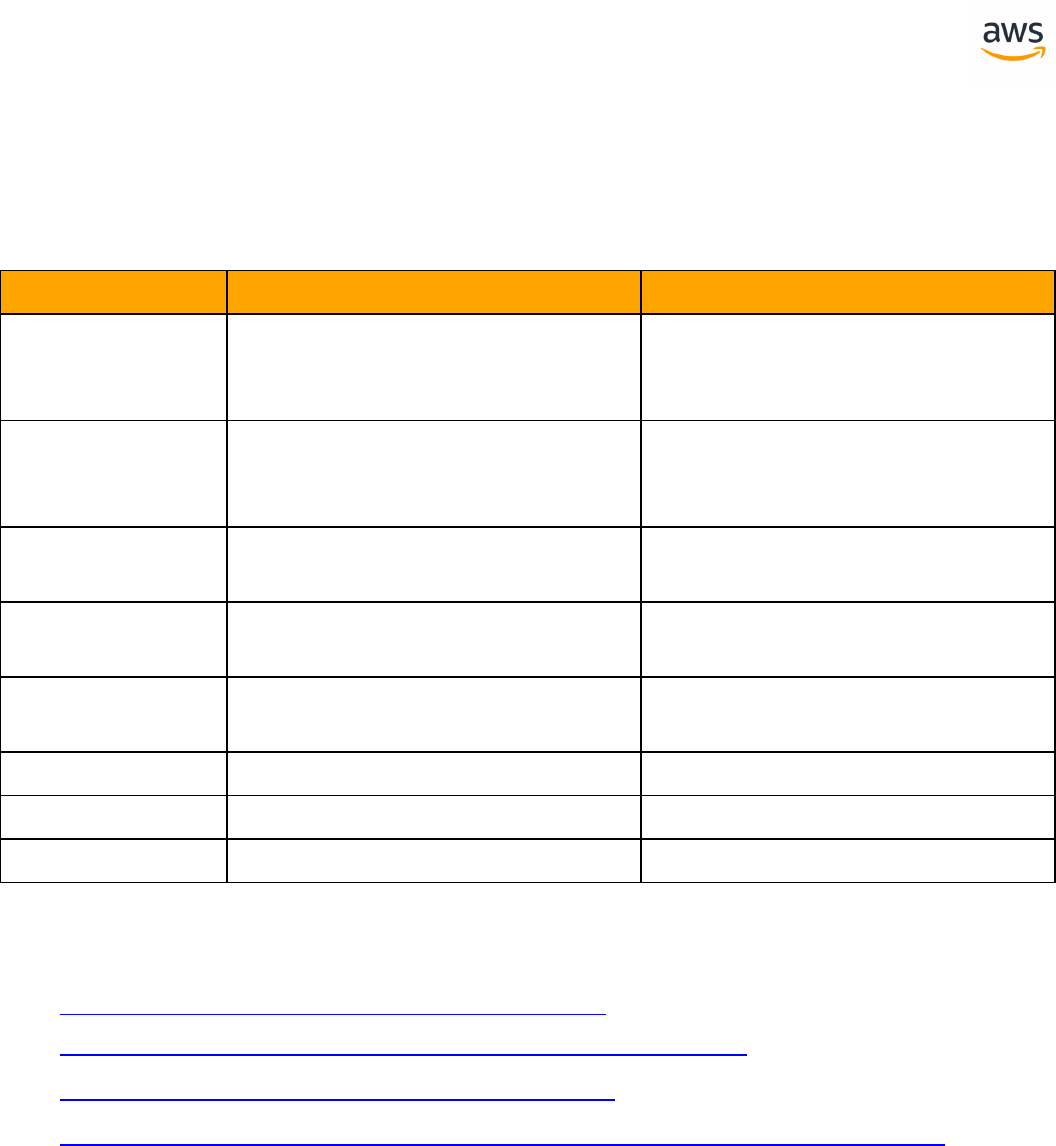

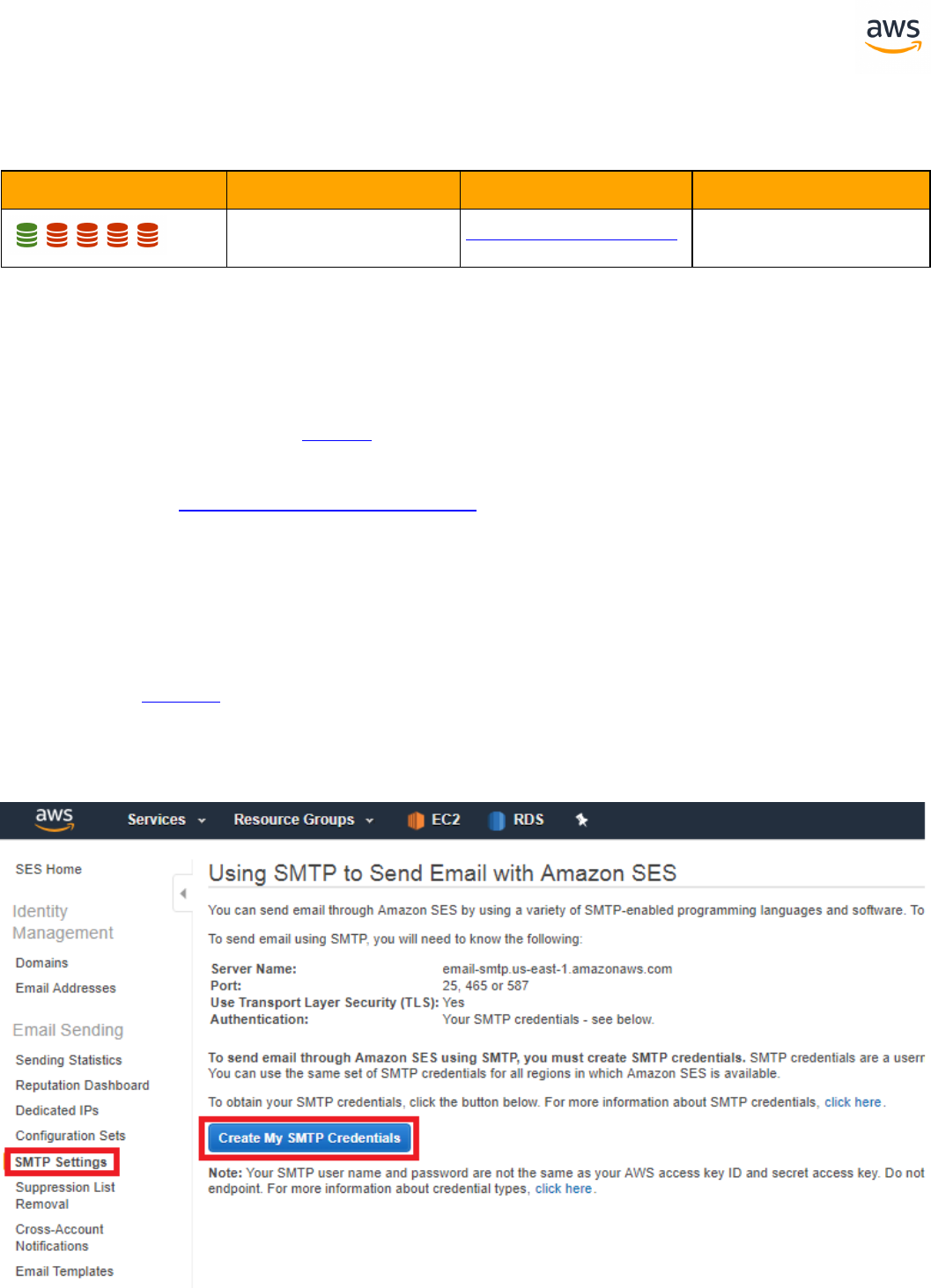

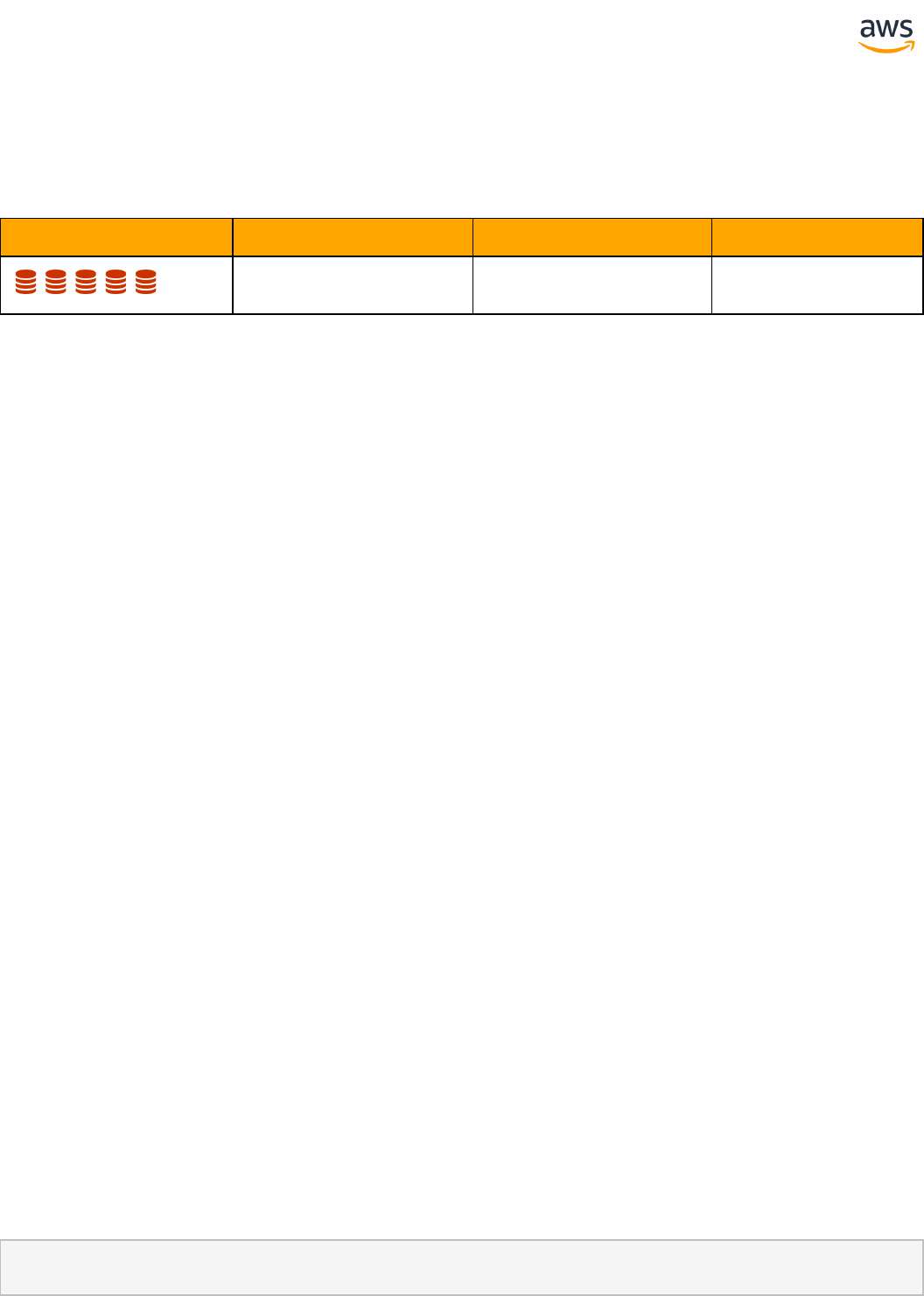

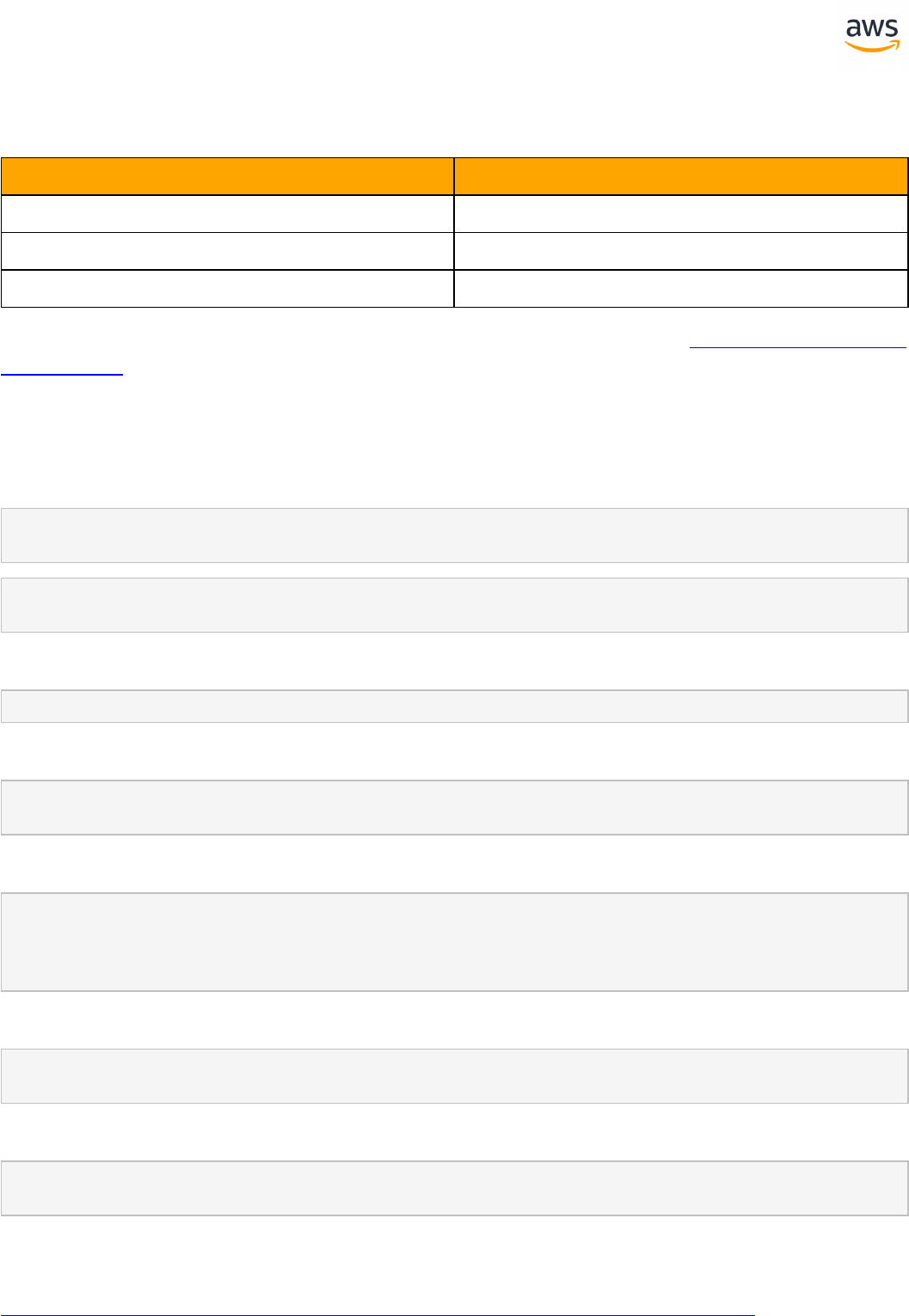

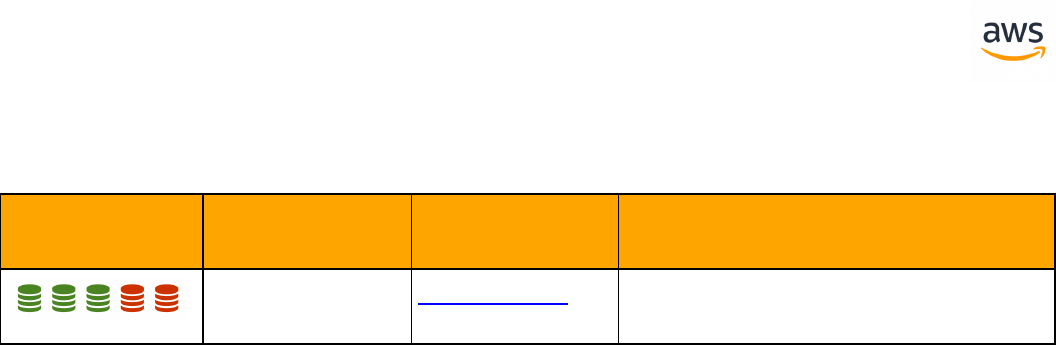

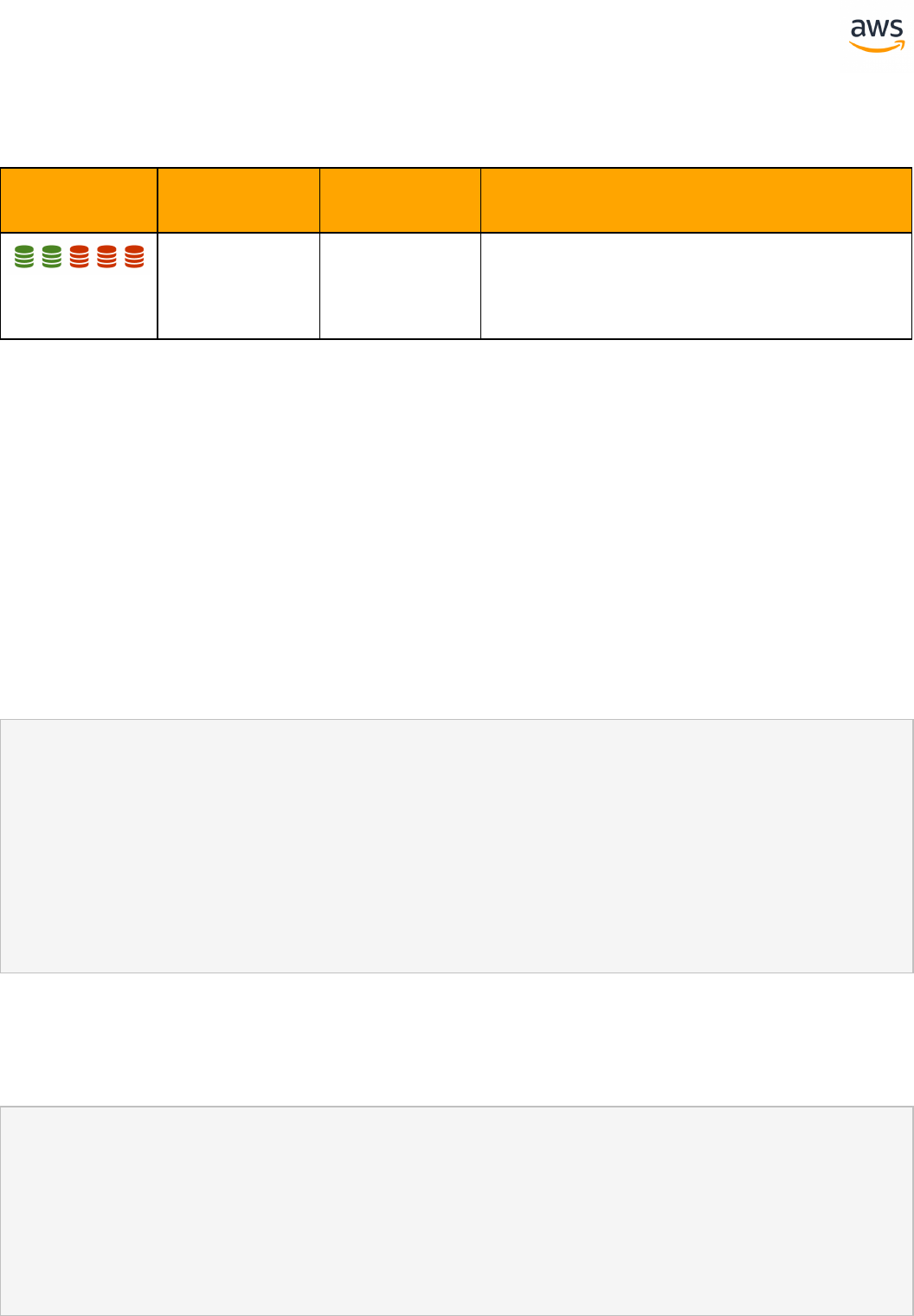

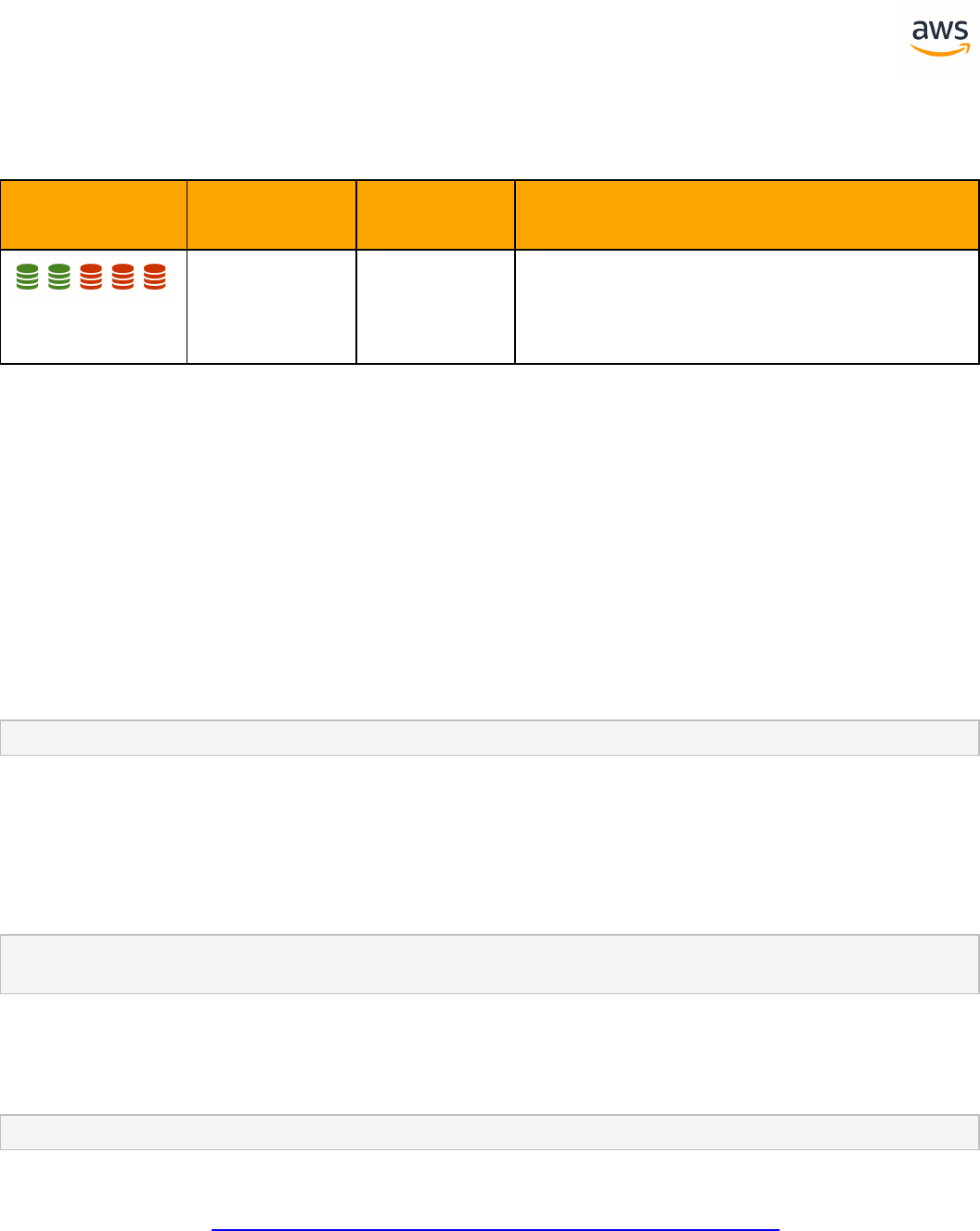

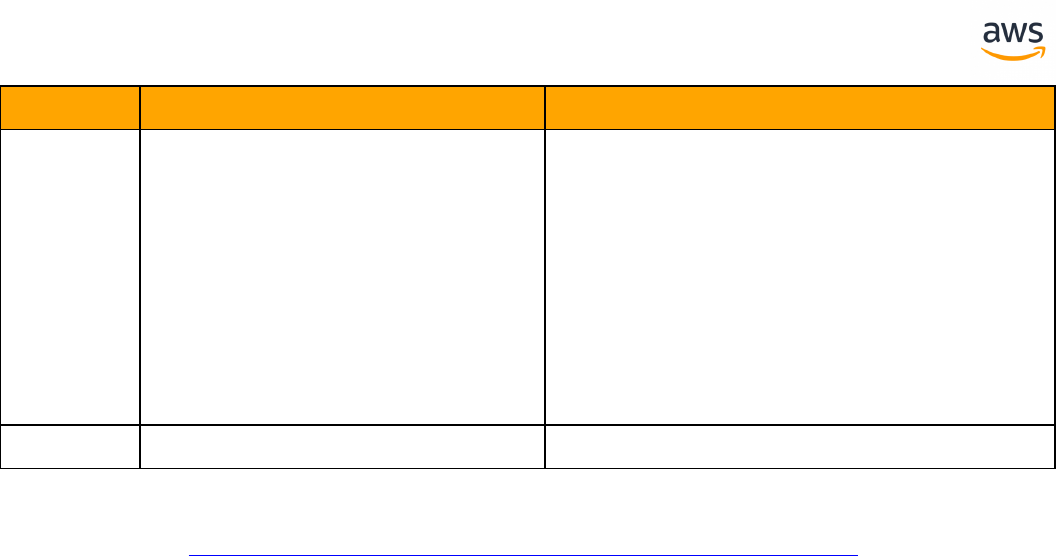

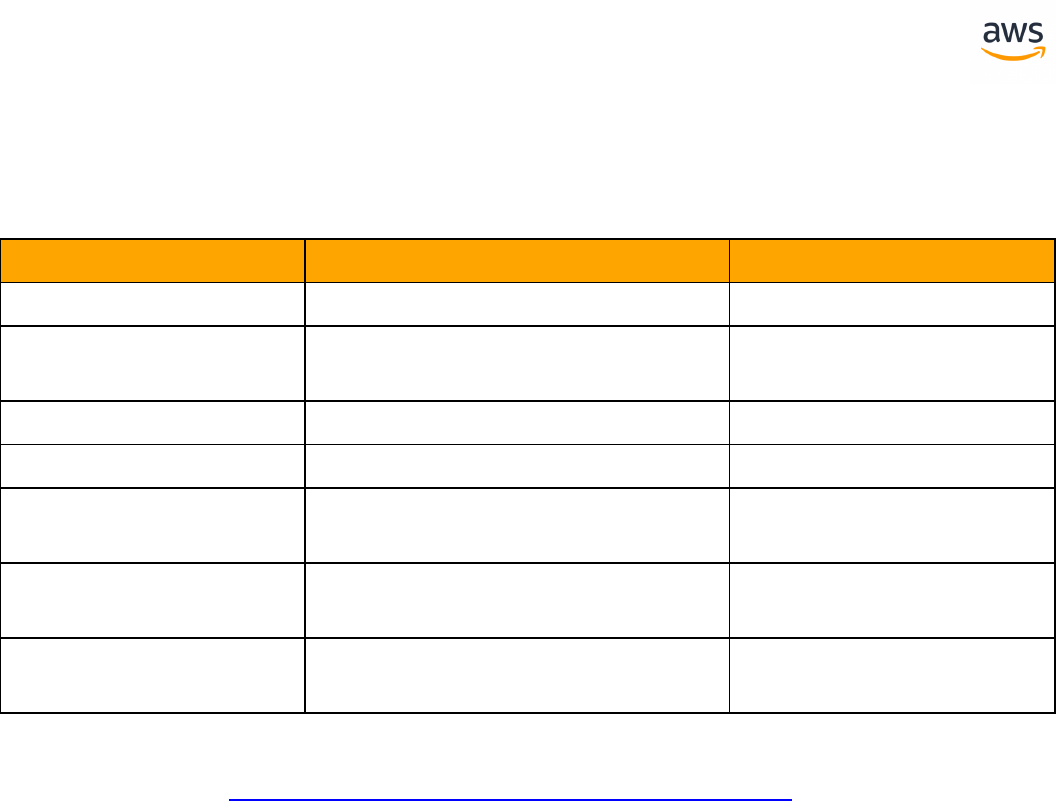

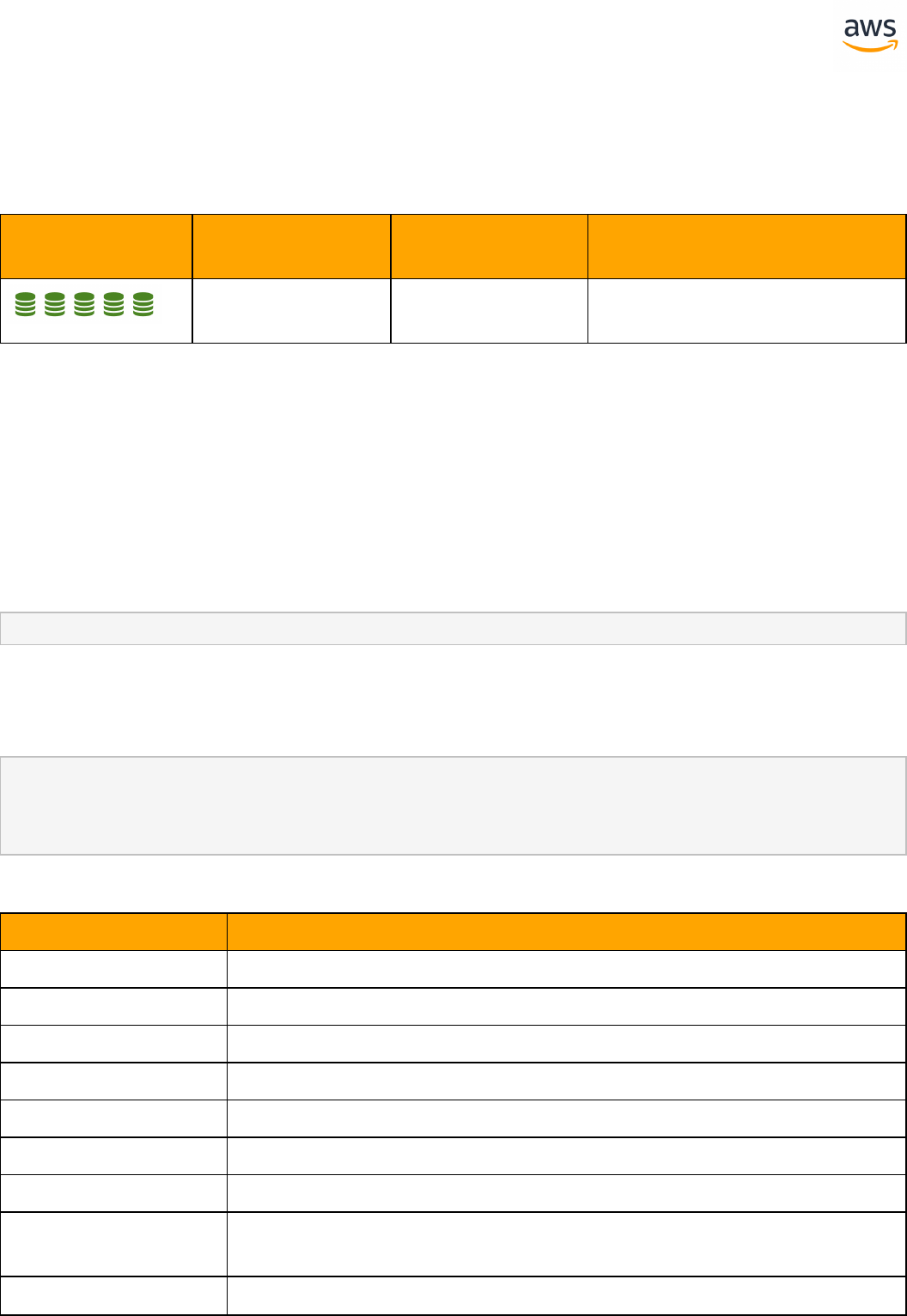

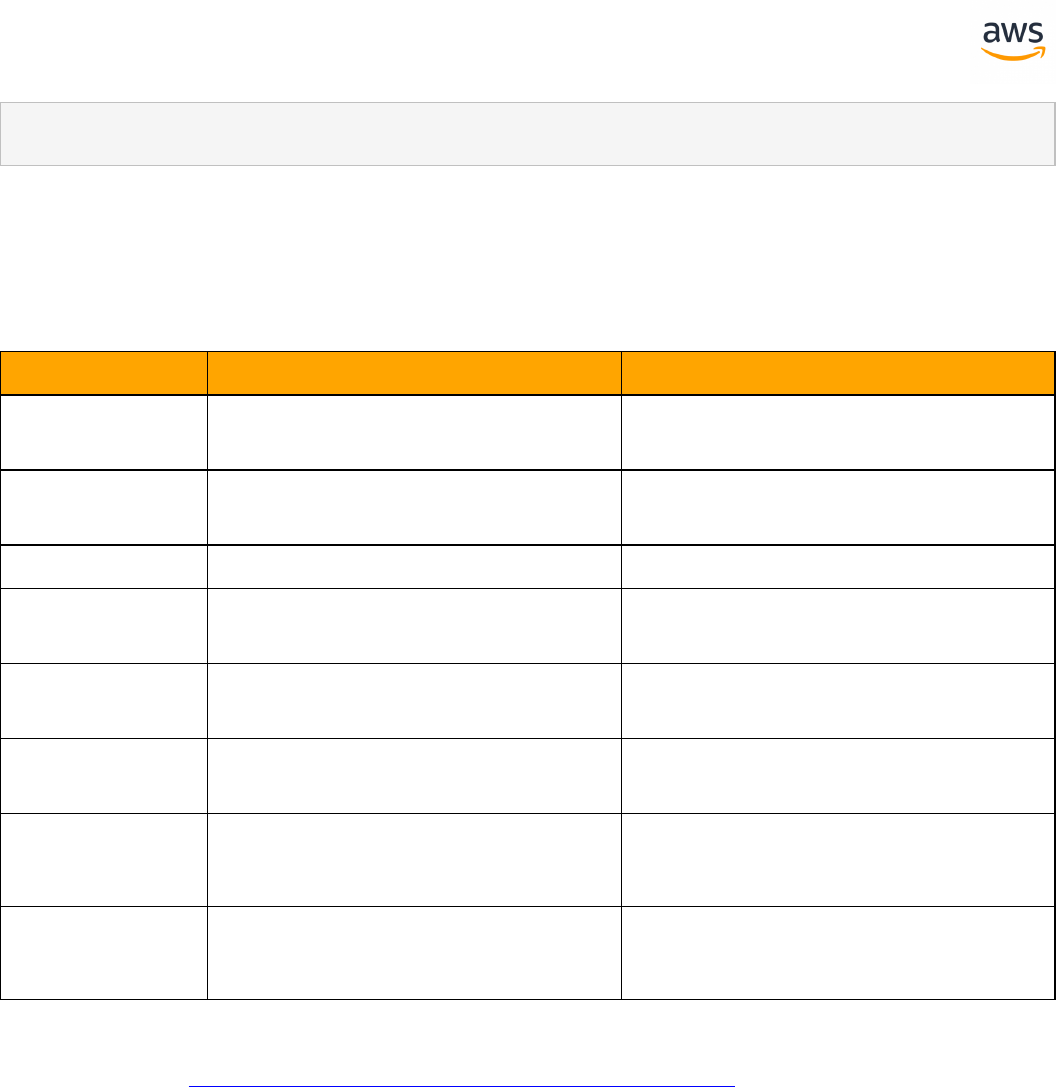

Tables of Feature Compatibility

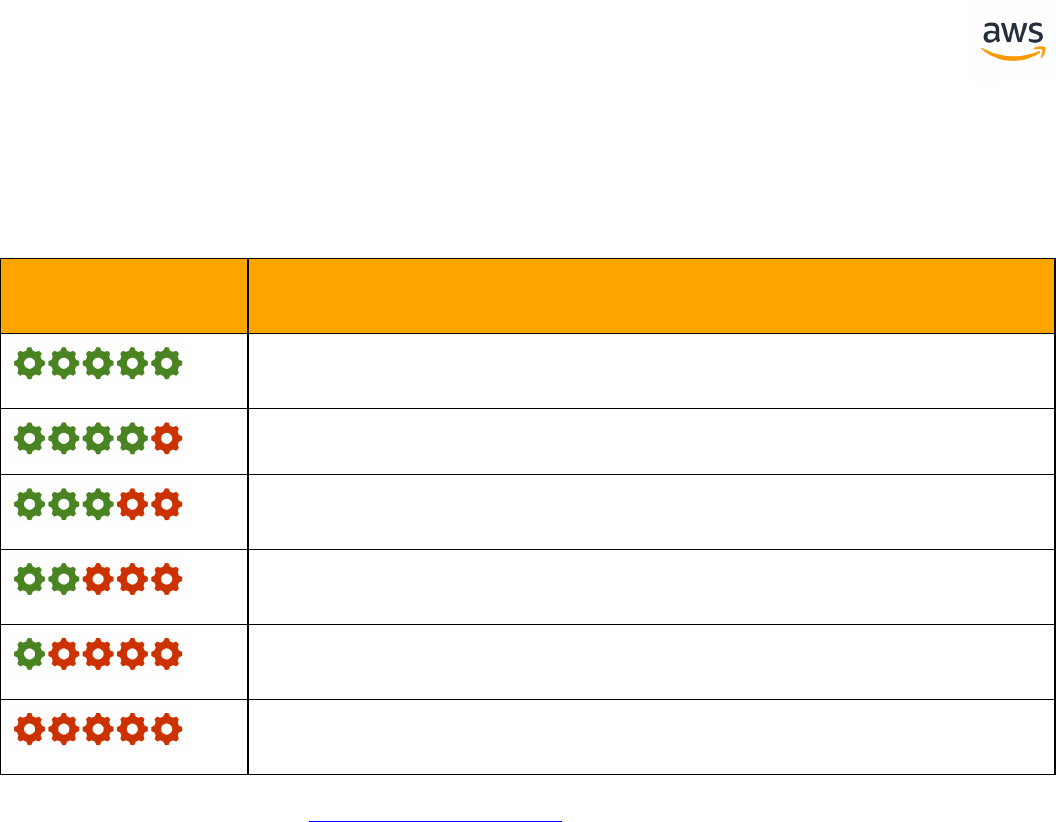

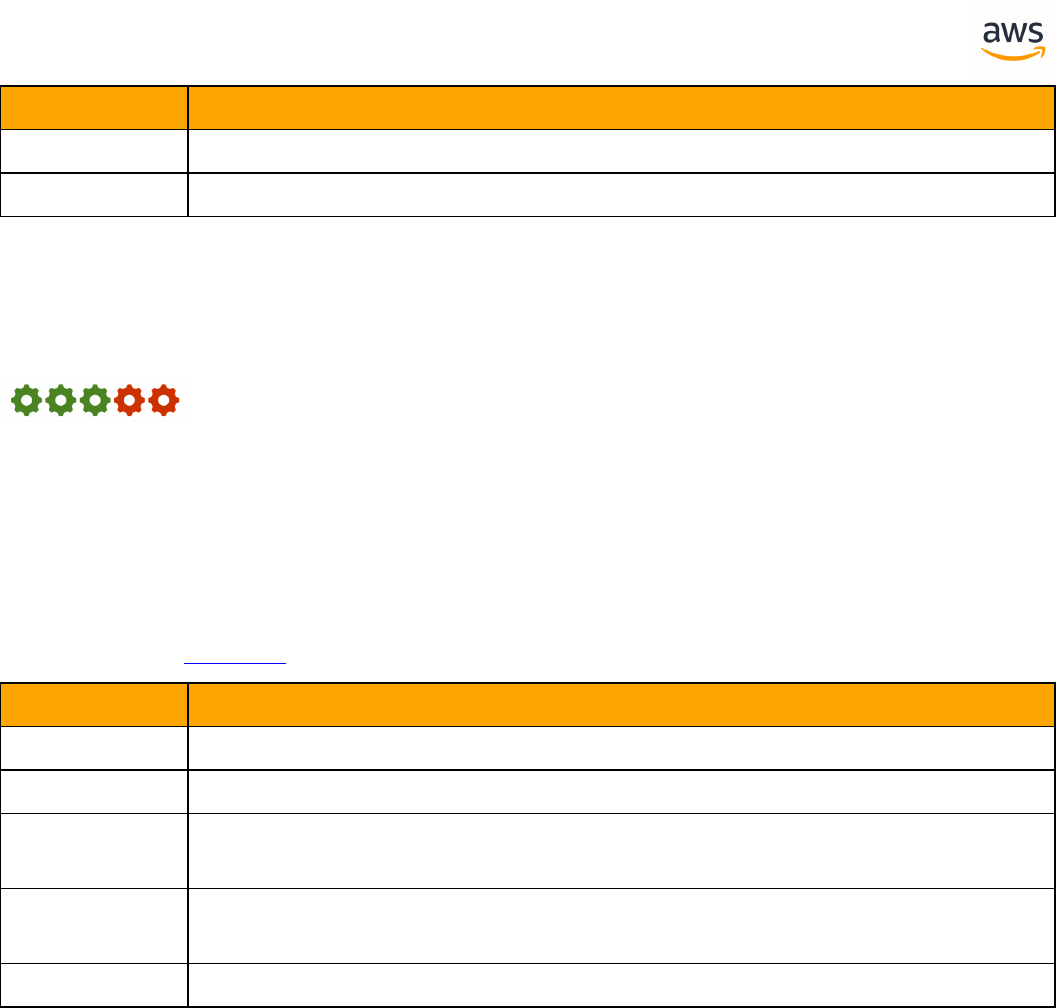

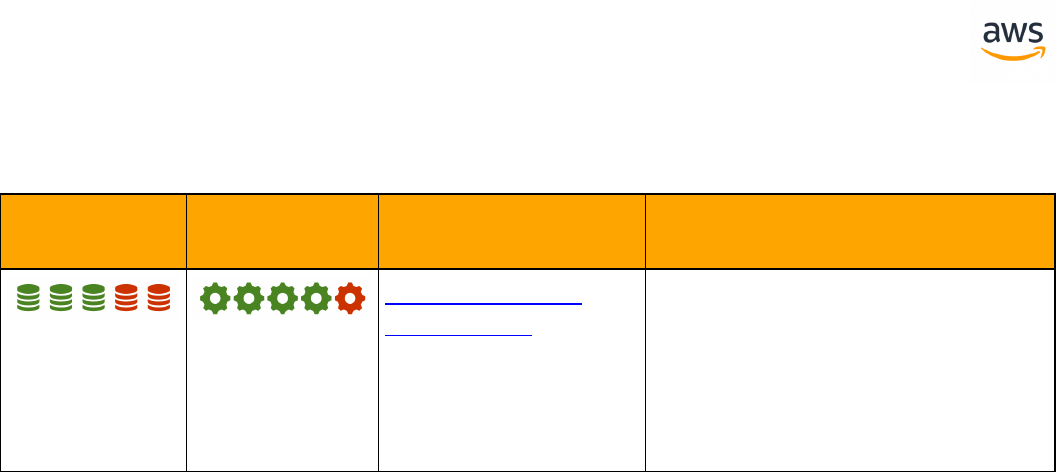

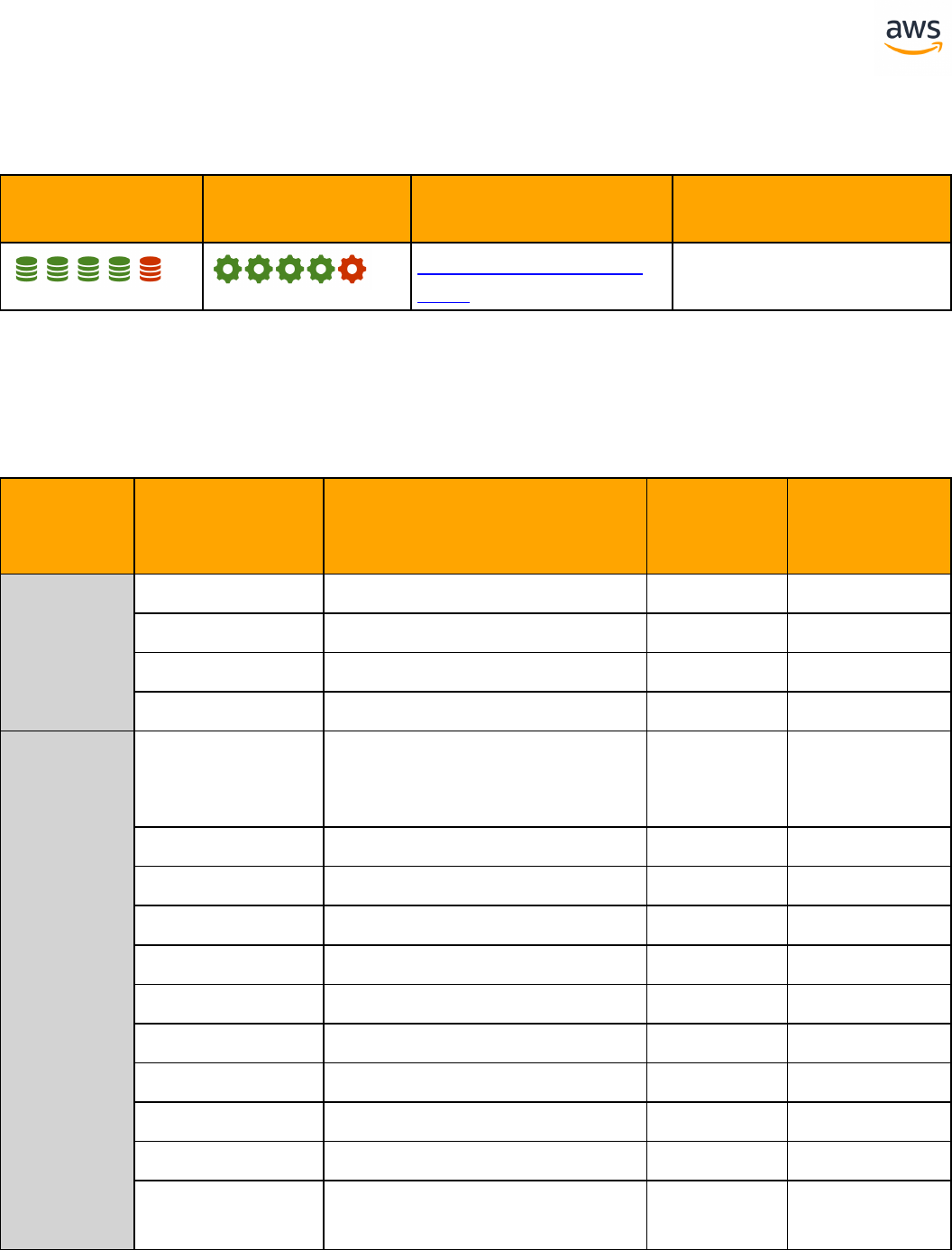

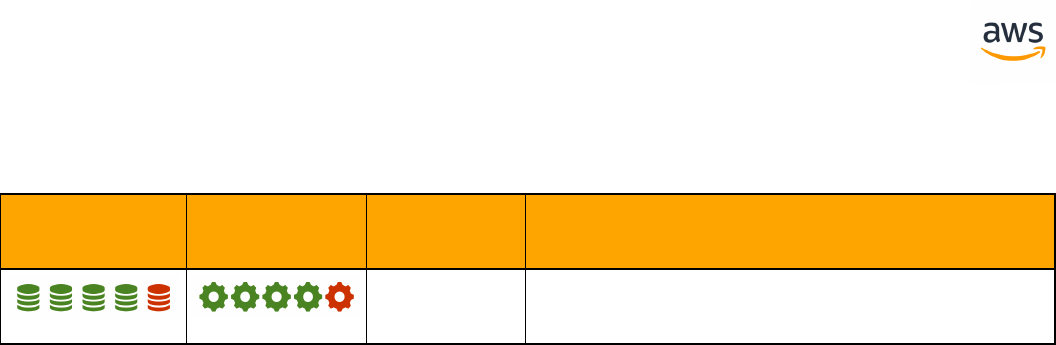

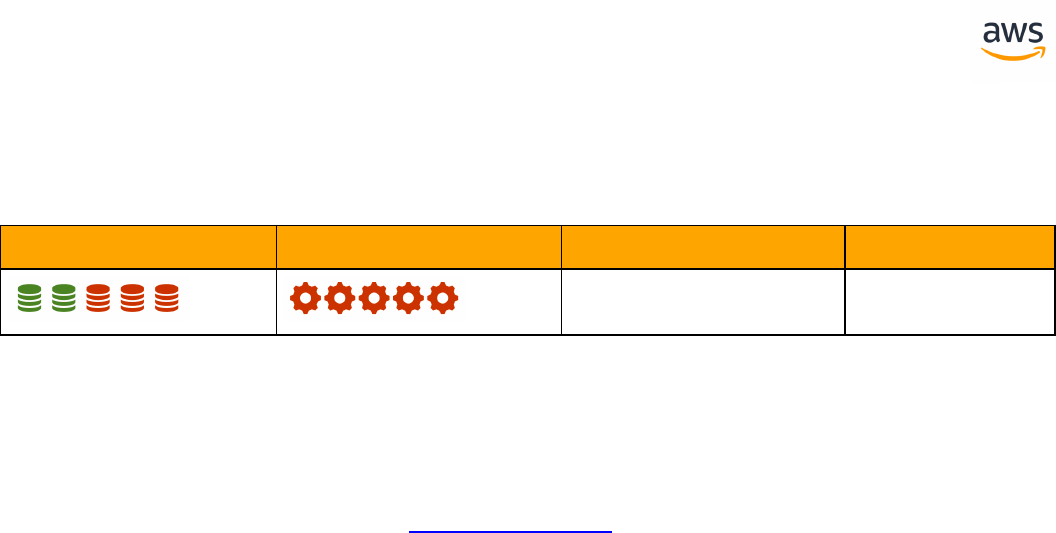

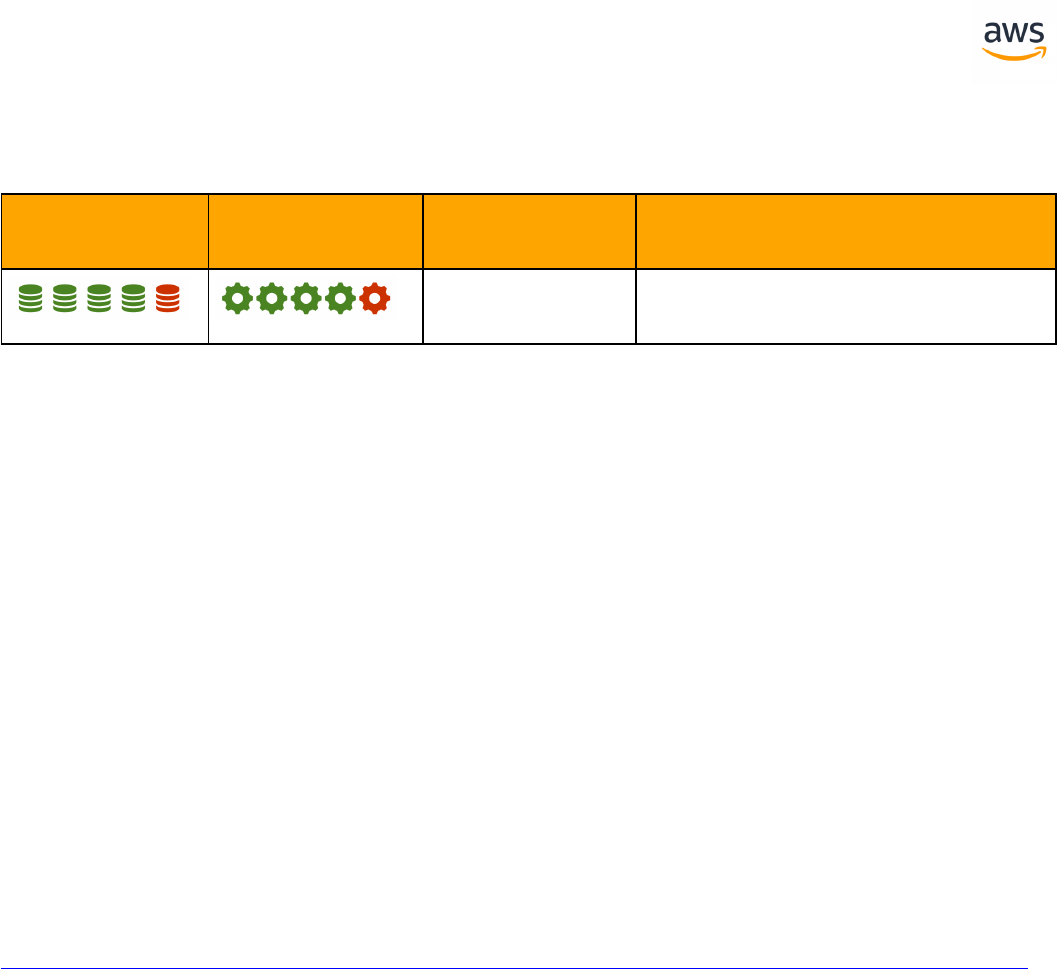

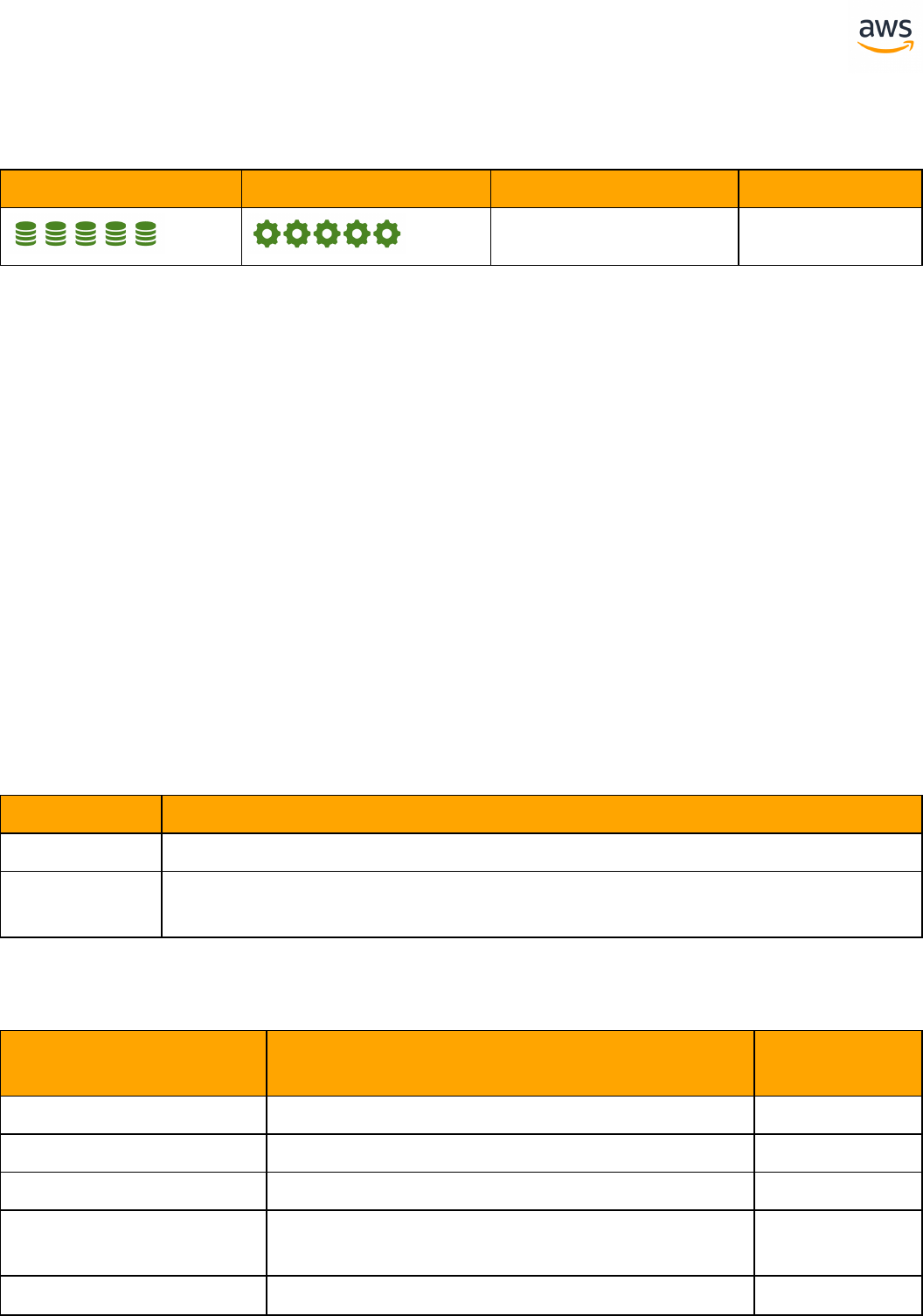

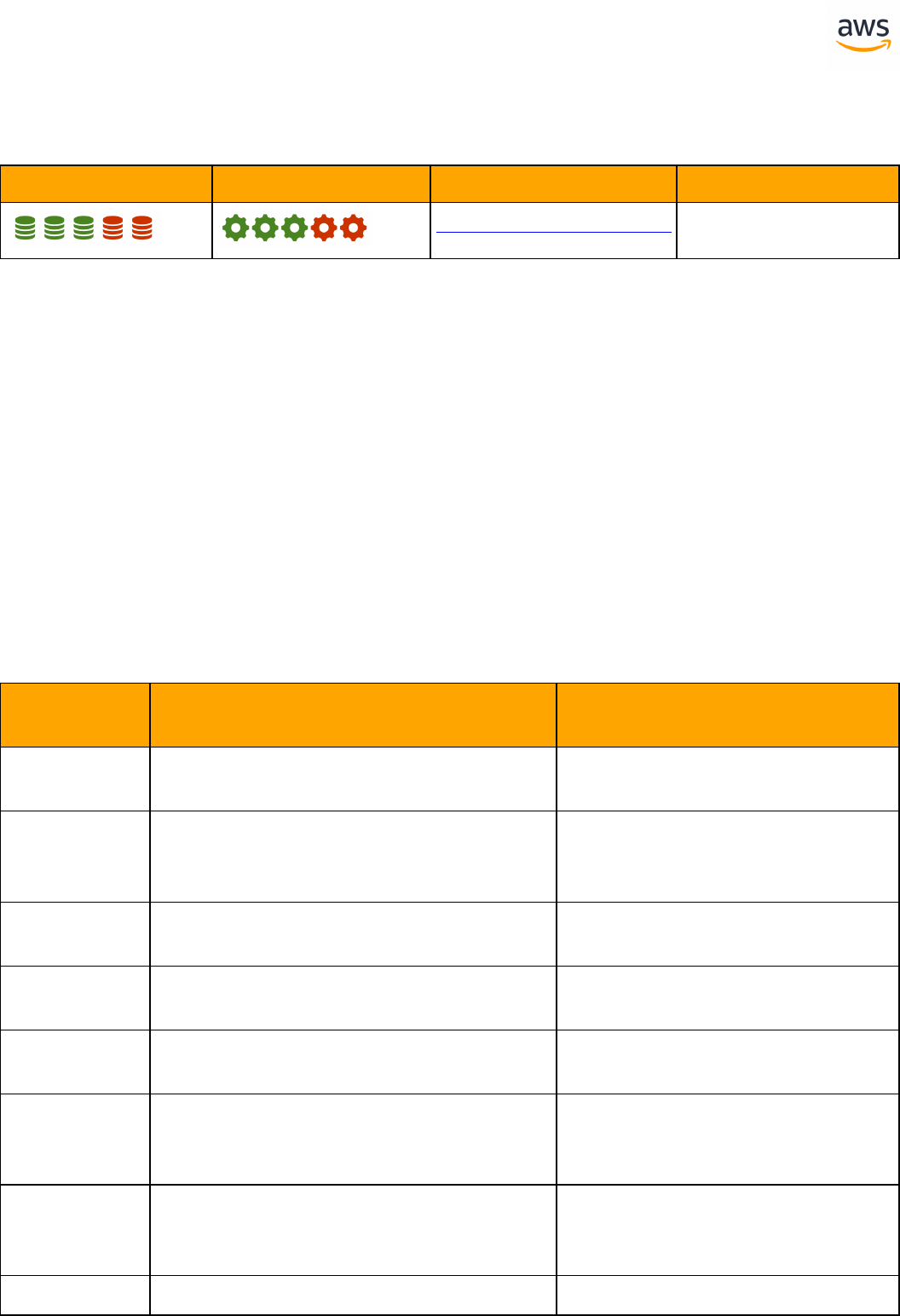

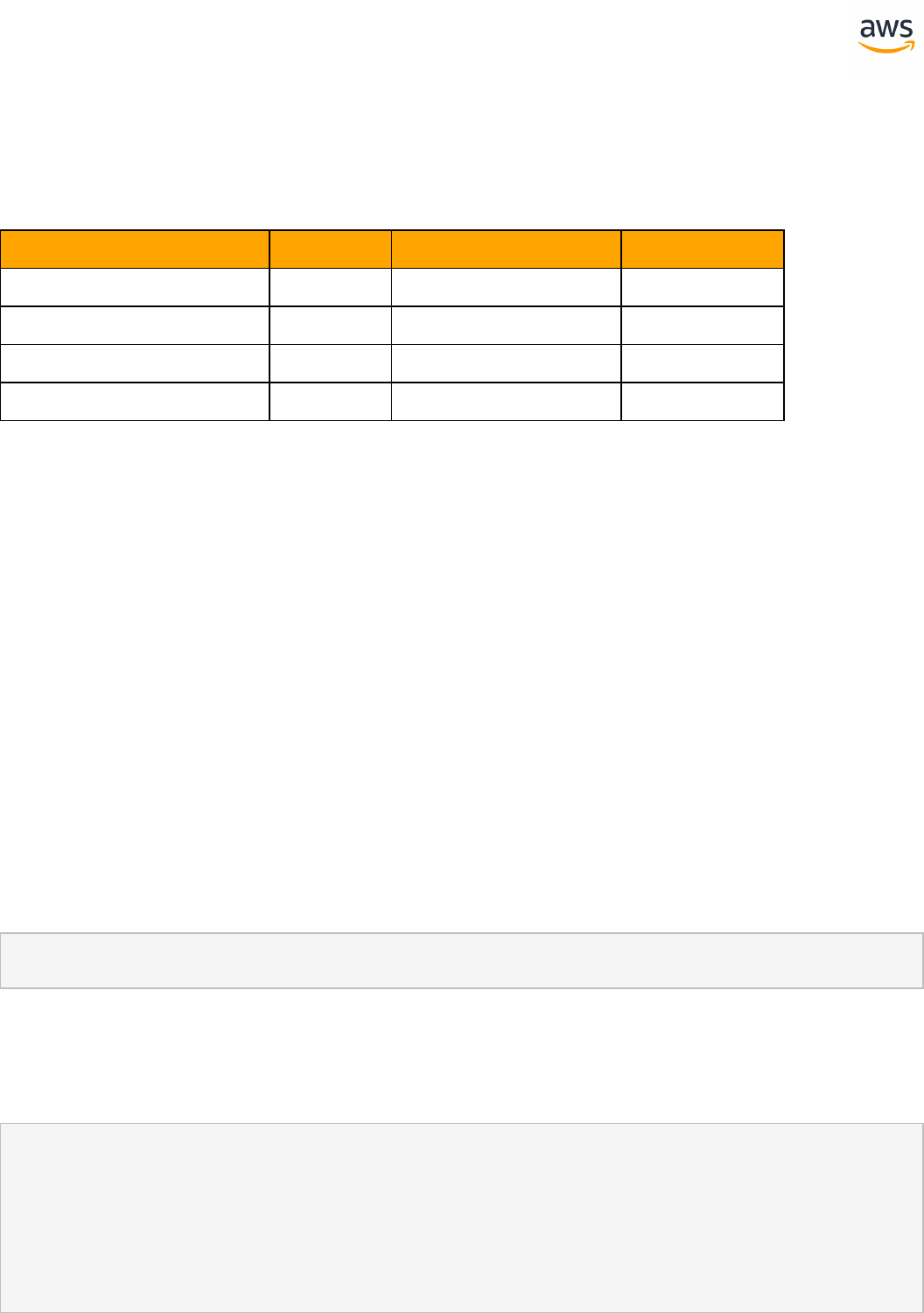

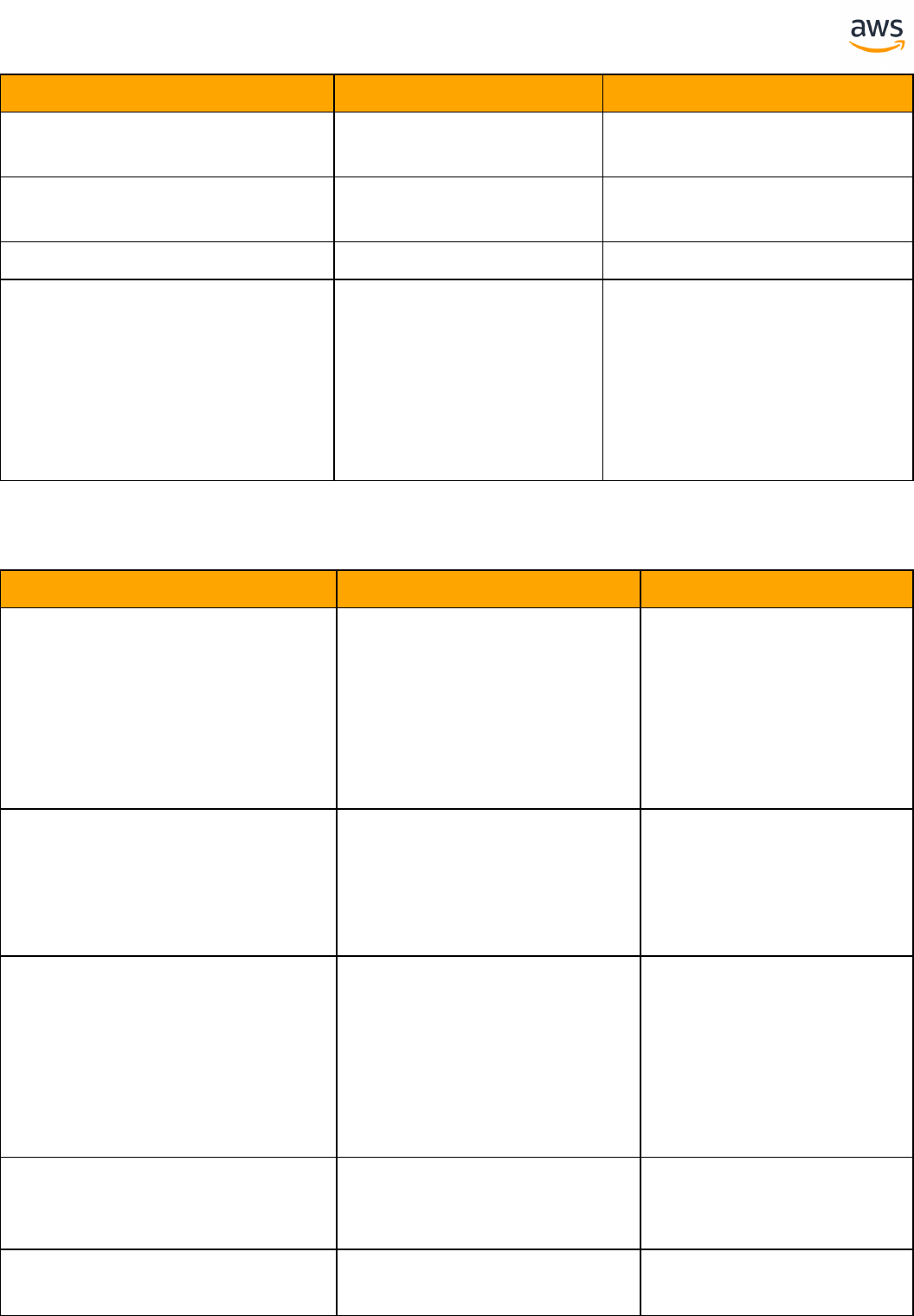

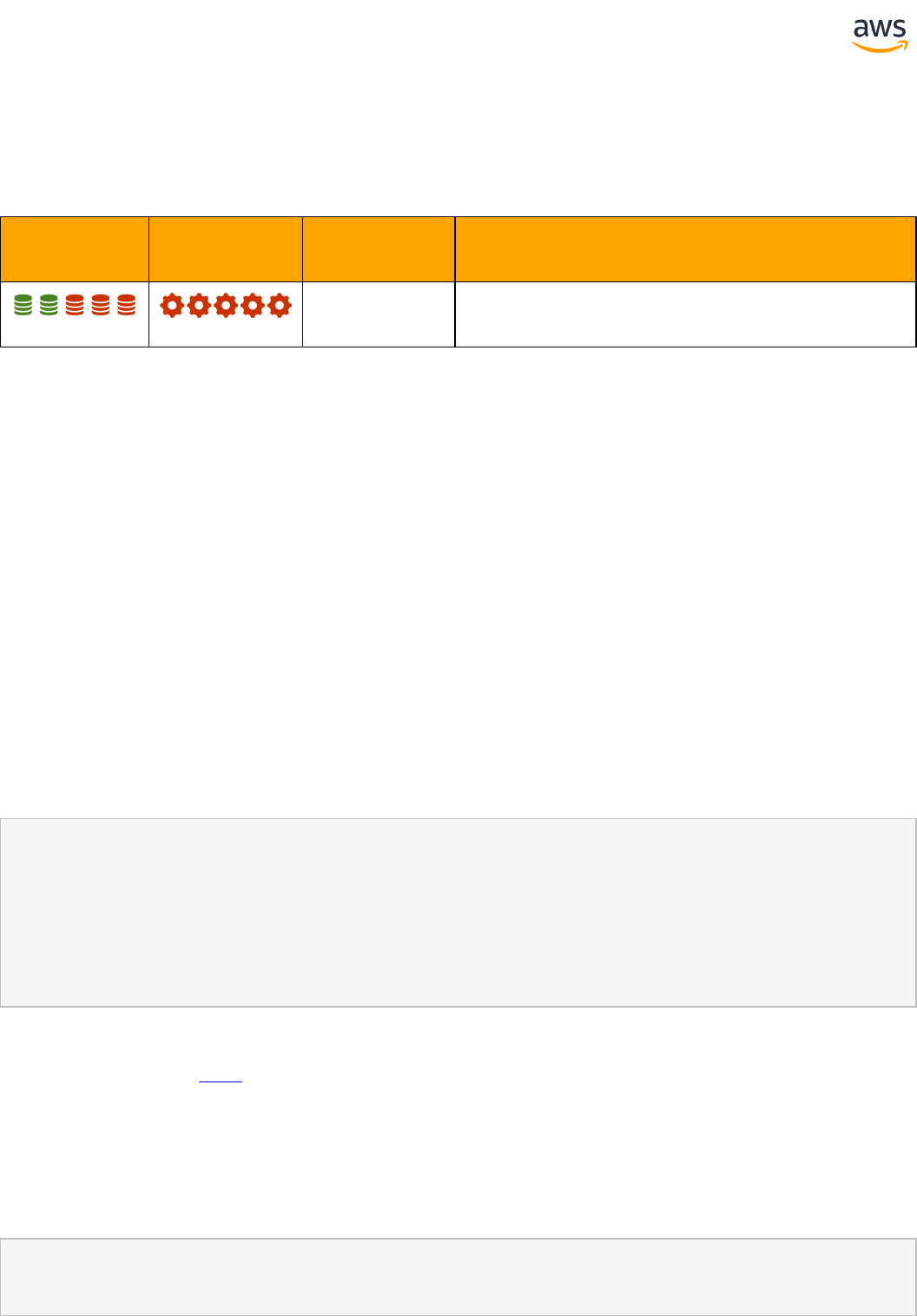

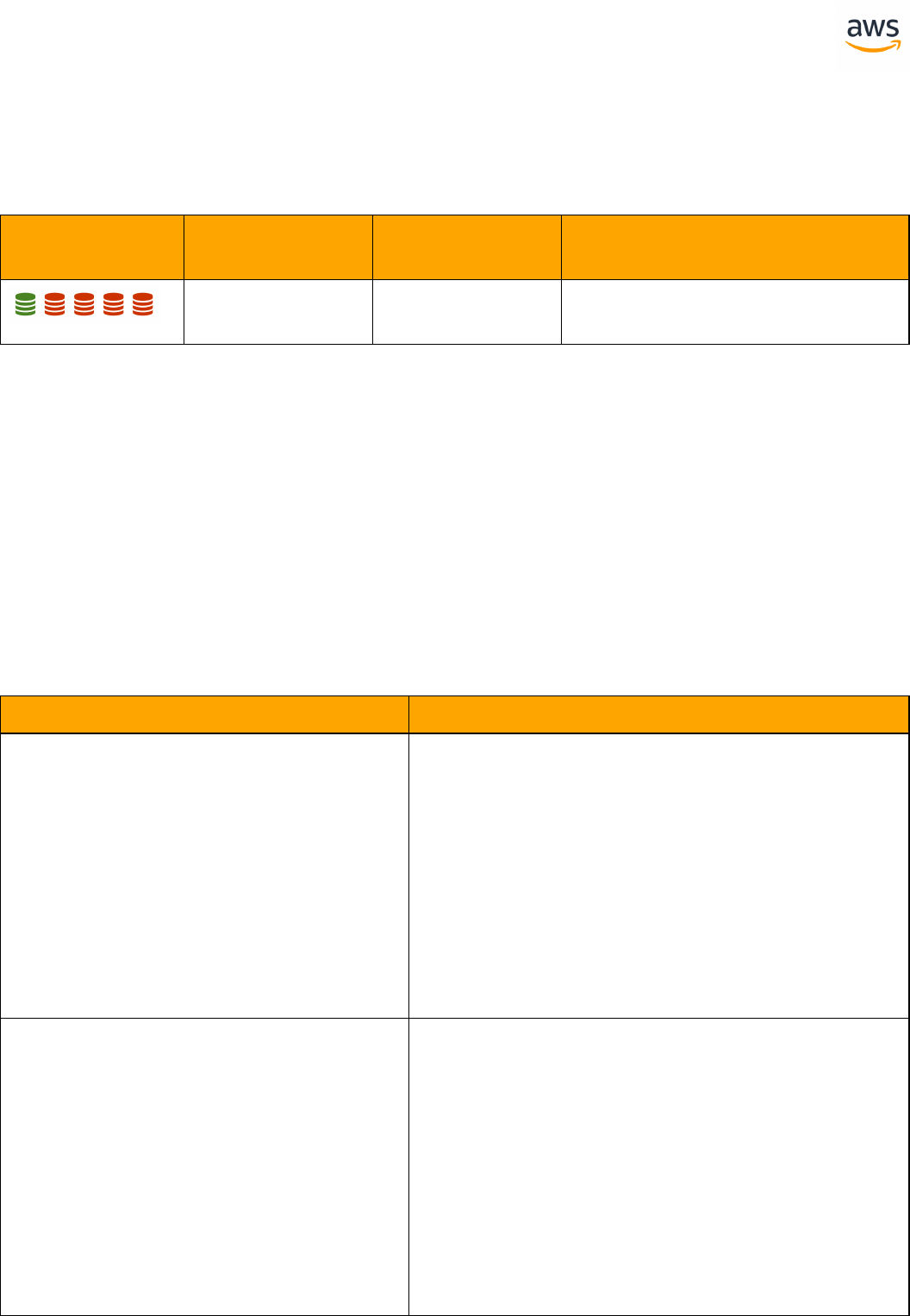

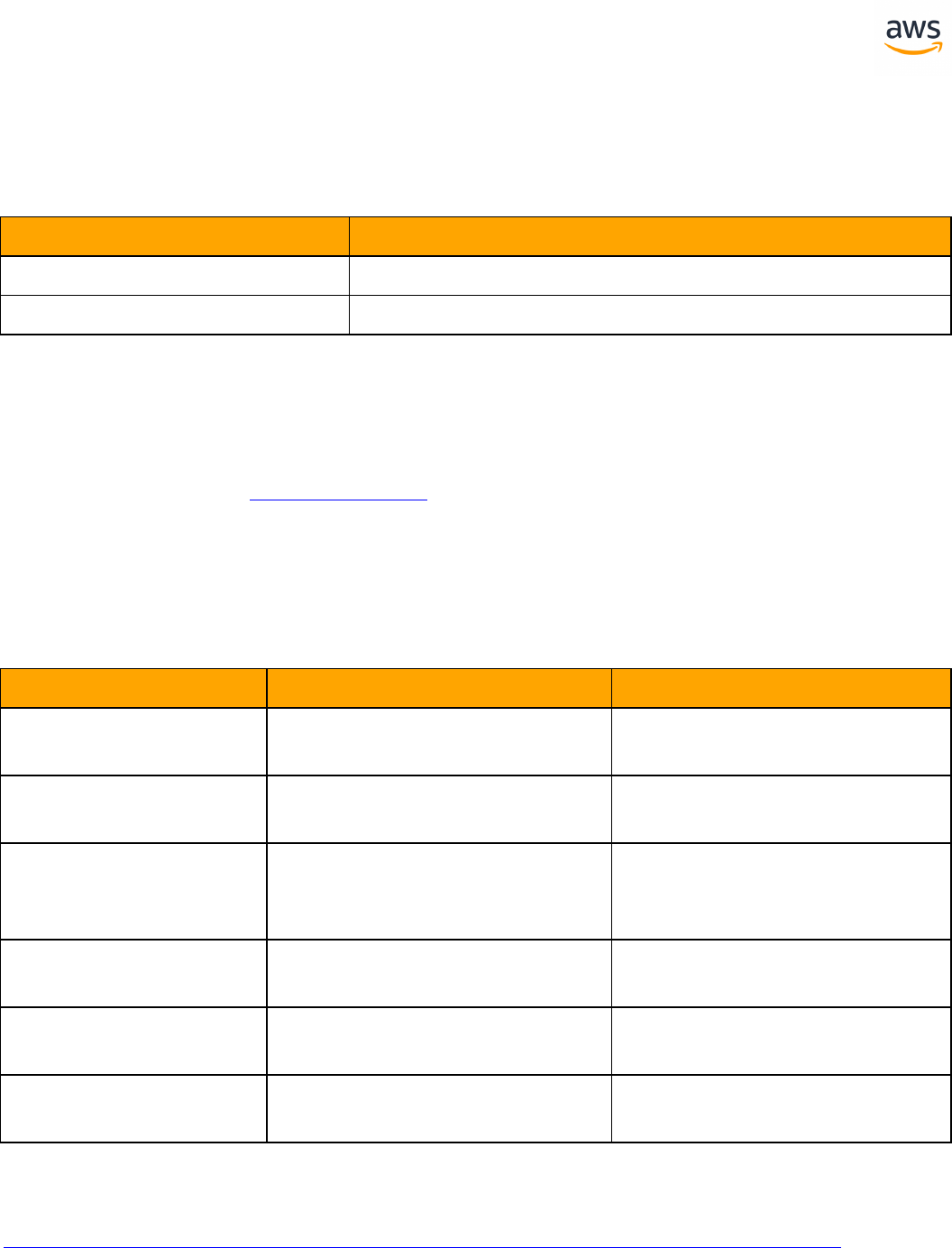

Feature Compatibility Legend

Compatibility

Score Symbol

Description

Very high compatibility: None or minimal low-risk and low-effort rewrites

needed

High compatibility: Some low-risk rewrites needed, easy workarounds exist for

incompatible features

Medium compatibility: More involved low-medium risk rewrites needed, some

redesign may be needed for incompatible features

Low compatibility: Medium to high risk rewrites needed, some incompatible fea-

tures require redesign and reasonable-effort workarounds exist

Very low compatibility: High risk and/or high-effort rewrites needed, some fea-

tures require redesign and workarounds are challenging

Not compatible: No practical workarounds yet, may require an application level

architectural solution to work around incompatibilities

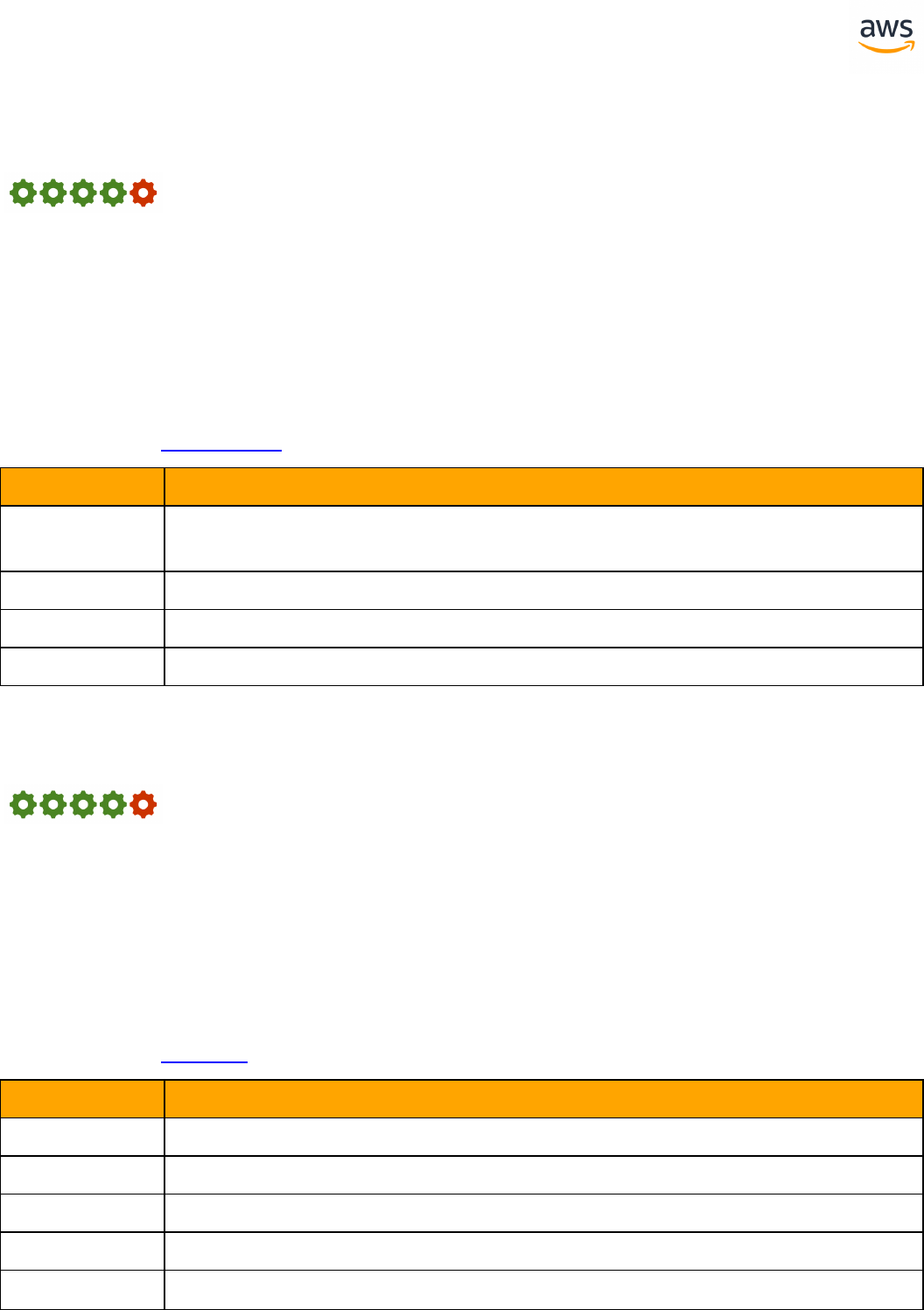

SCT Automation Level Legend

SCT Automation

Level Symbol

Description

Full Automation SCT performs fully automatic conversion, no manual con-

version needed.

High Automation: Minor, simple manual conversions may be needed.

Medium Automation: Low-medium complexity manual conversions may be

needed.

Low Automation: Medium-high complexity manual conversions may be needed.

Very Low Automation: High risk or complex manual conversions may be

needed.

No Automation: Not currently supported by SCT, manual conversion is required

for this feature.

- 12 -

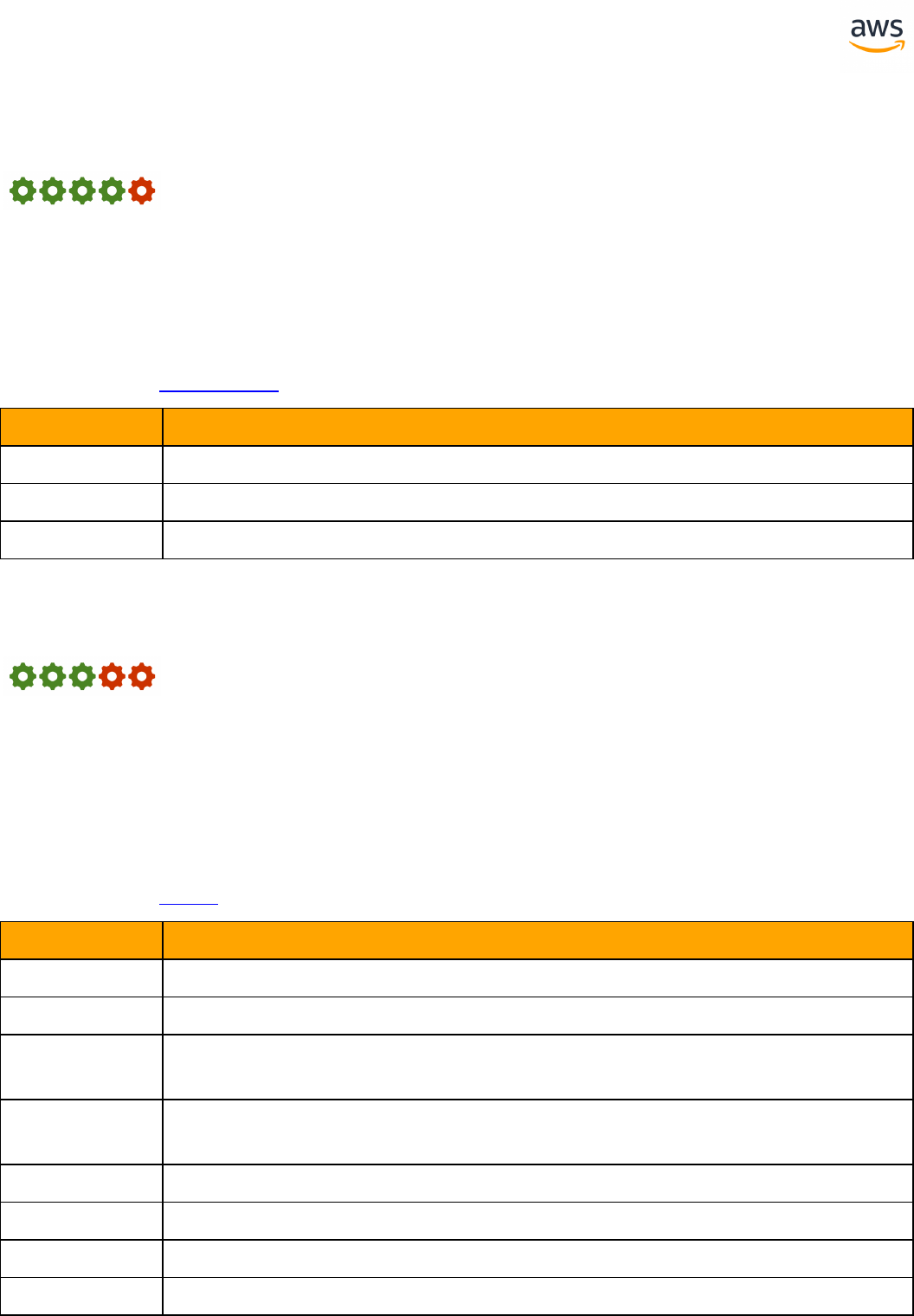

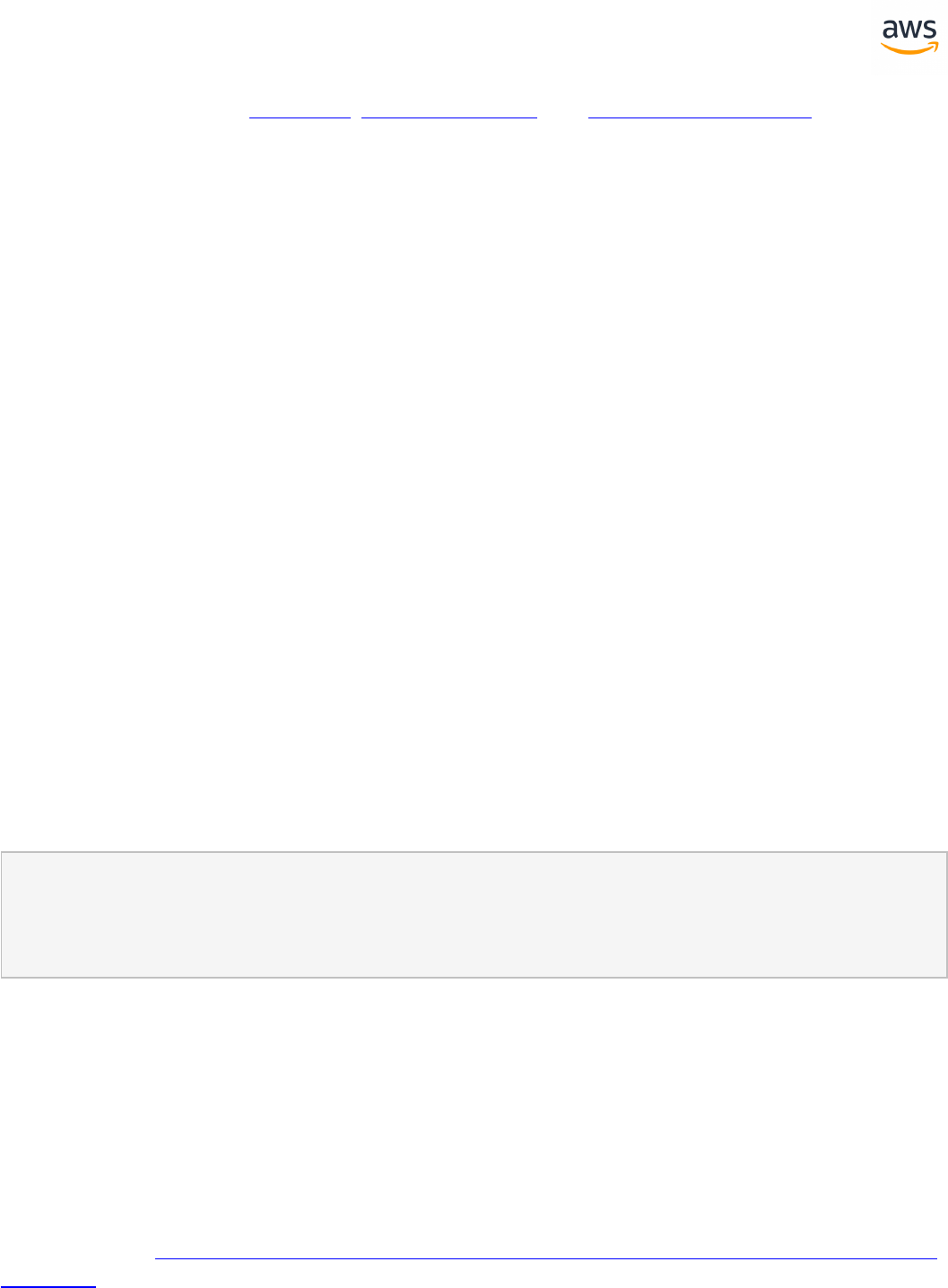

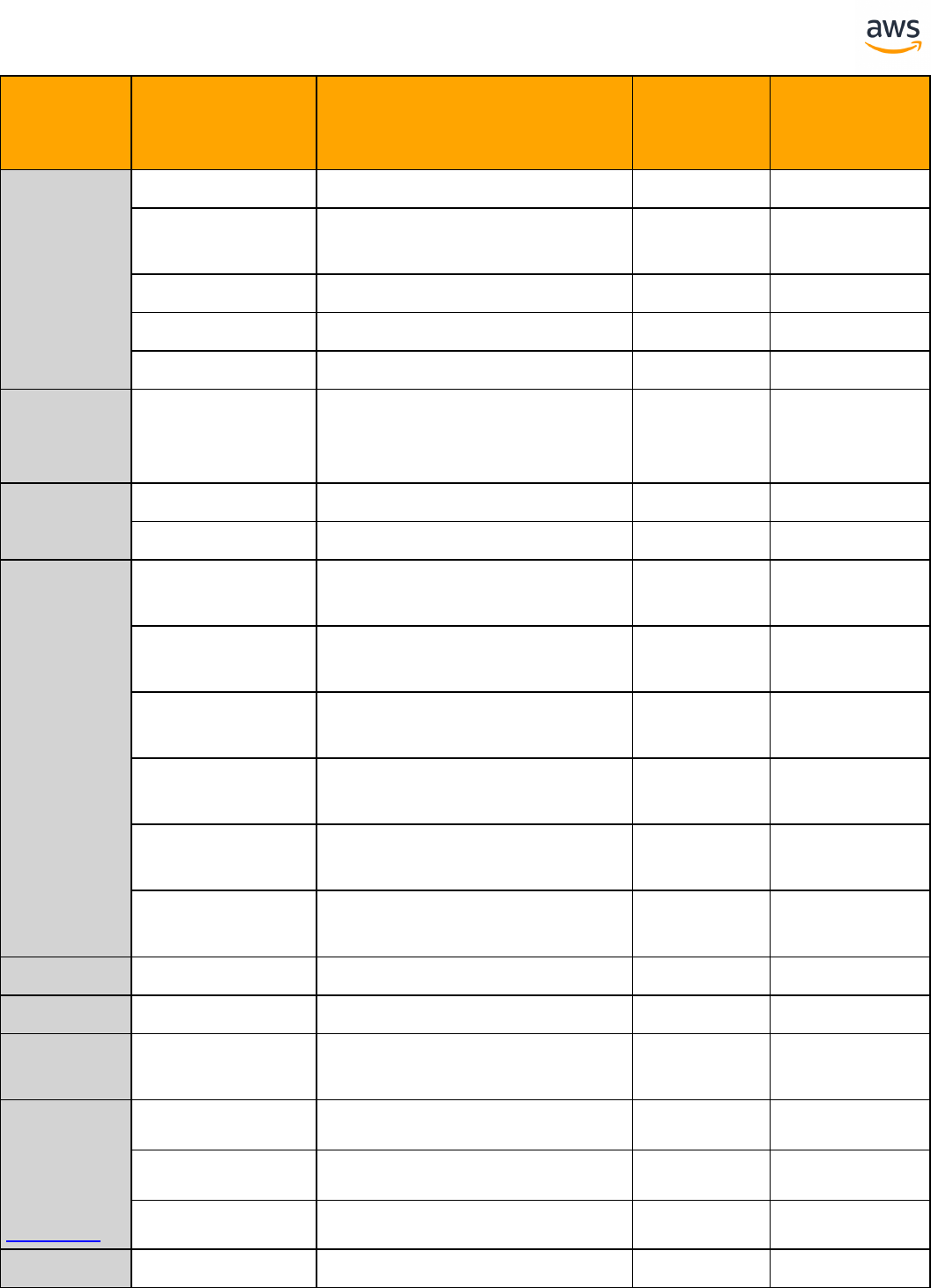

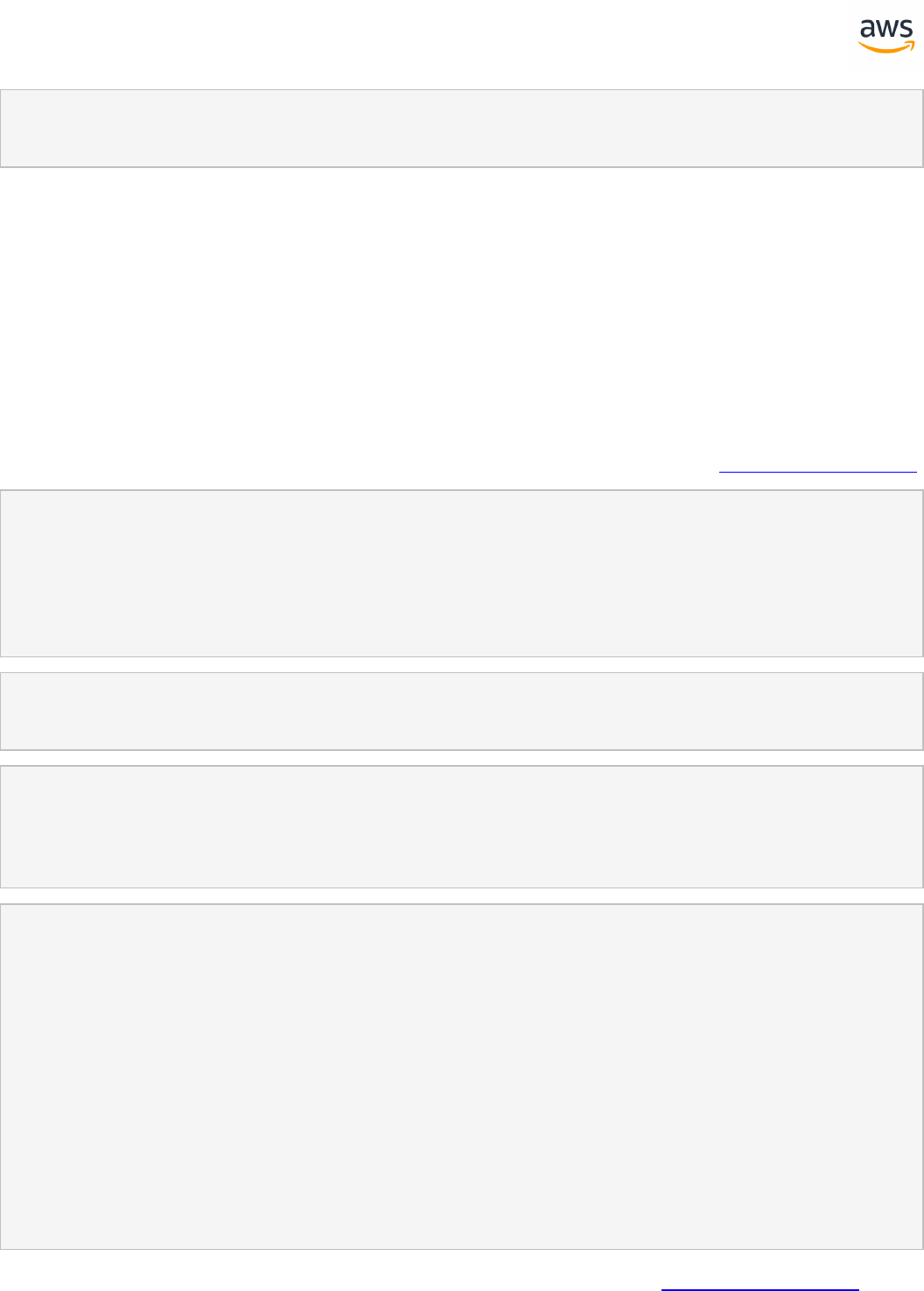

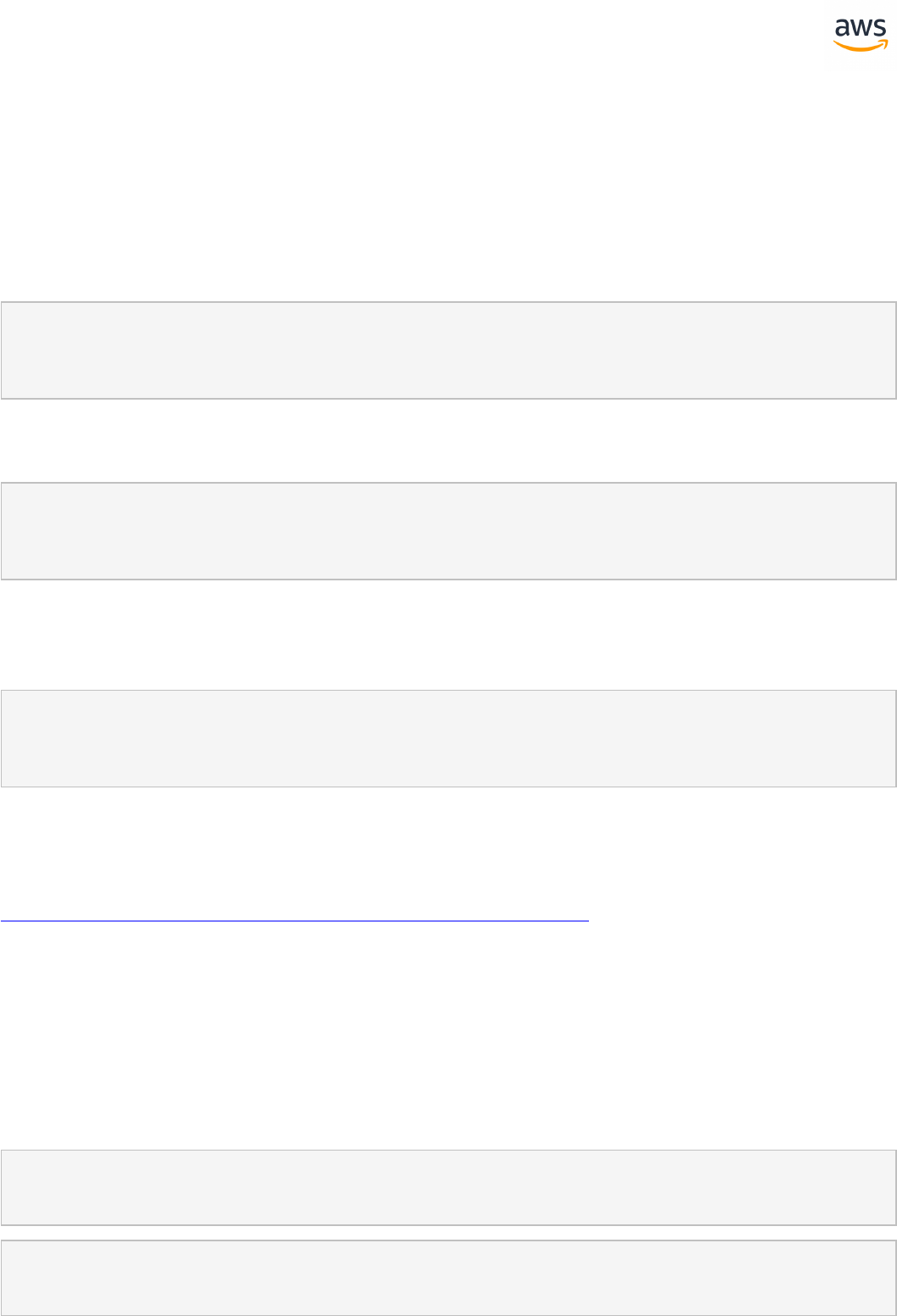

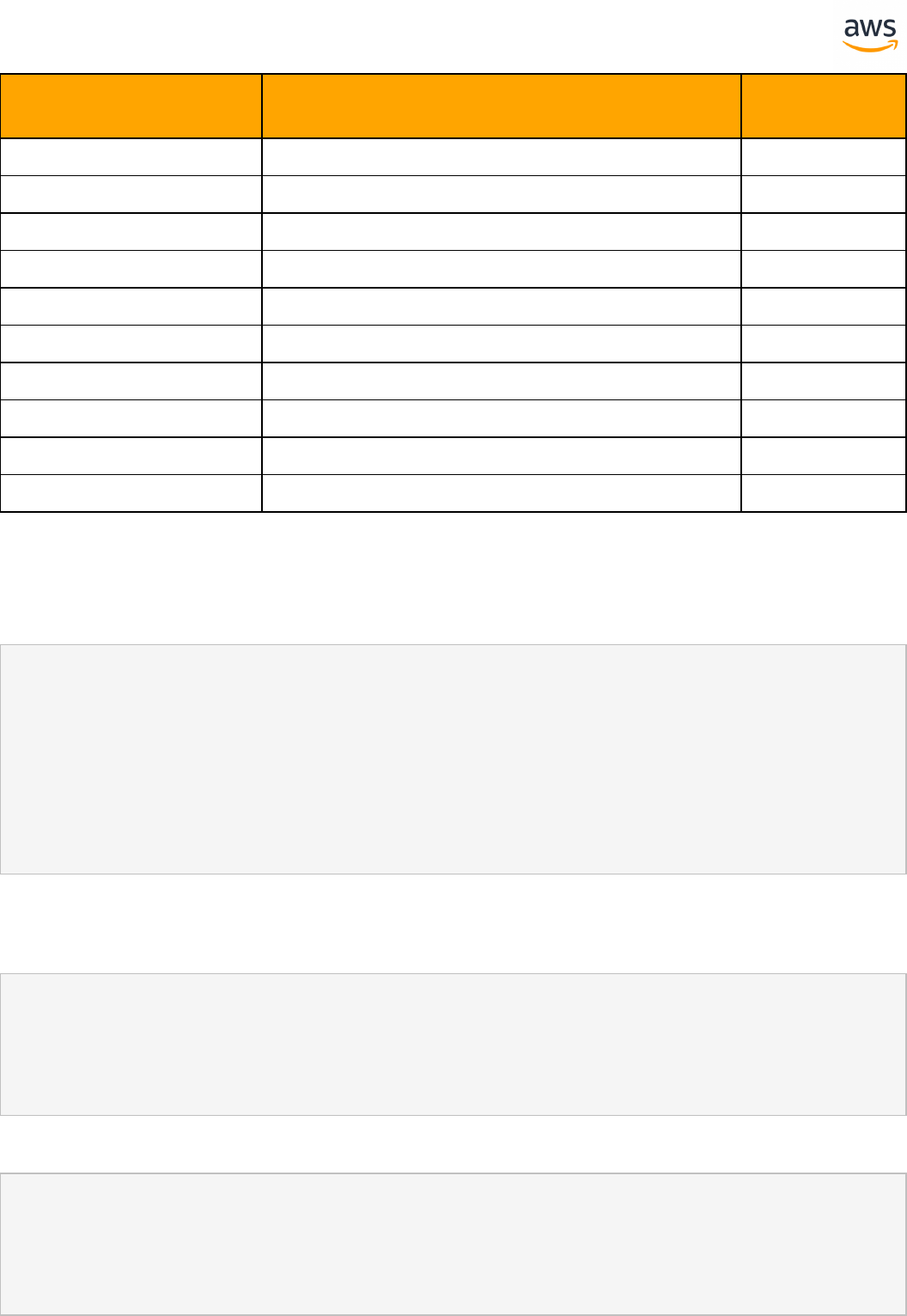

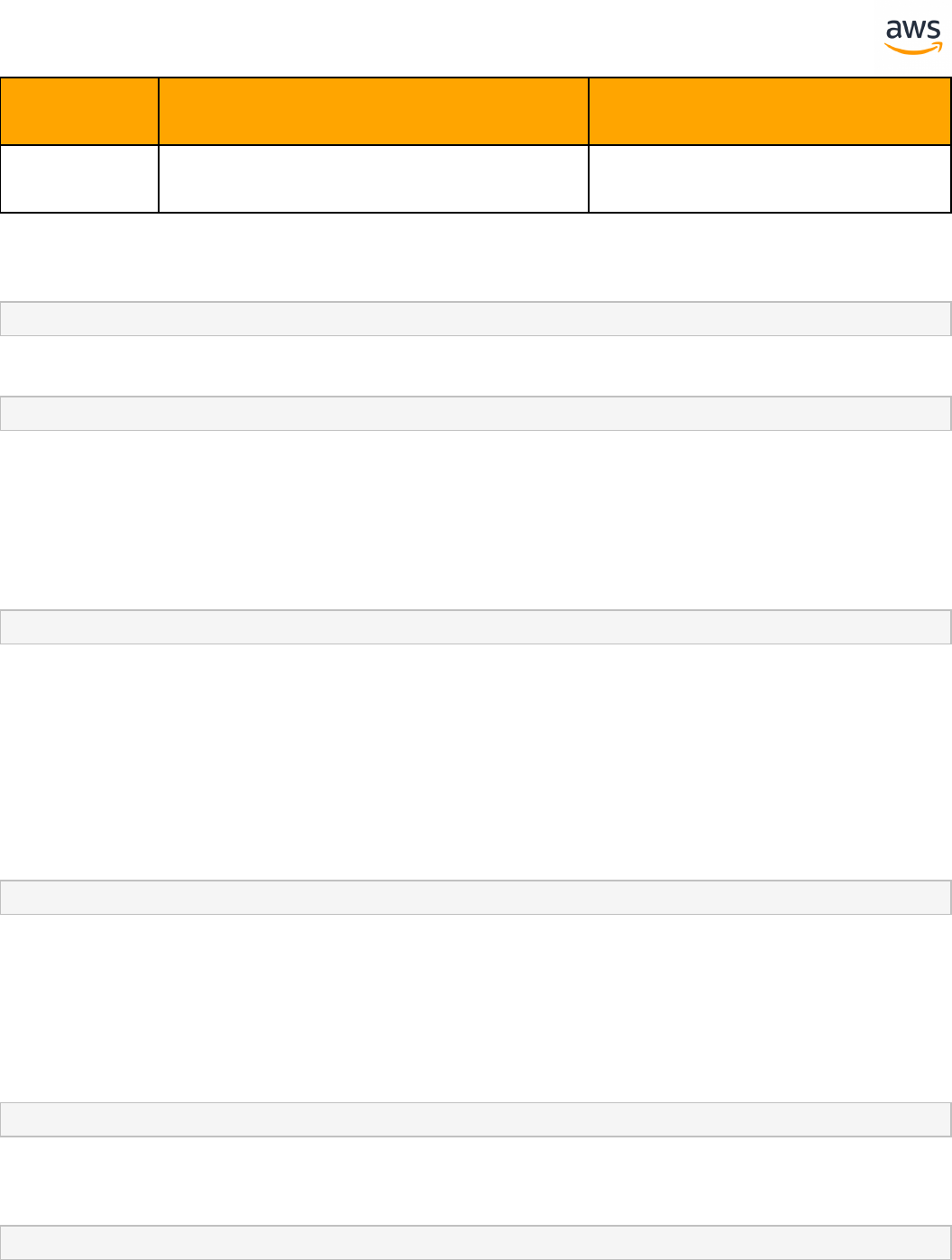

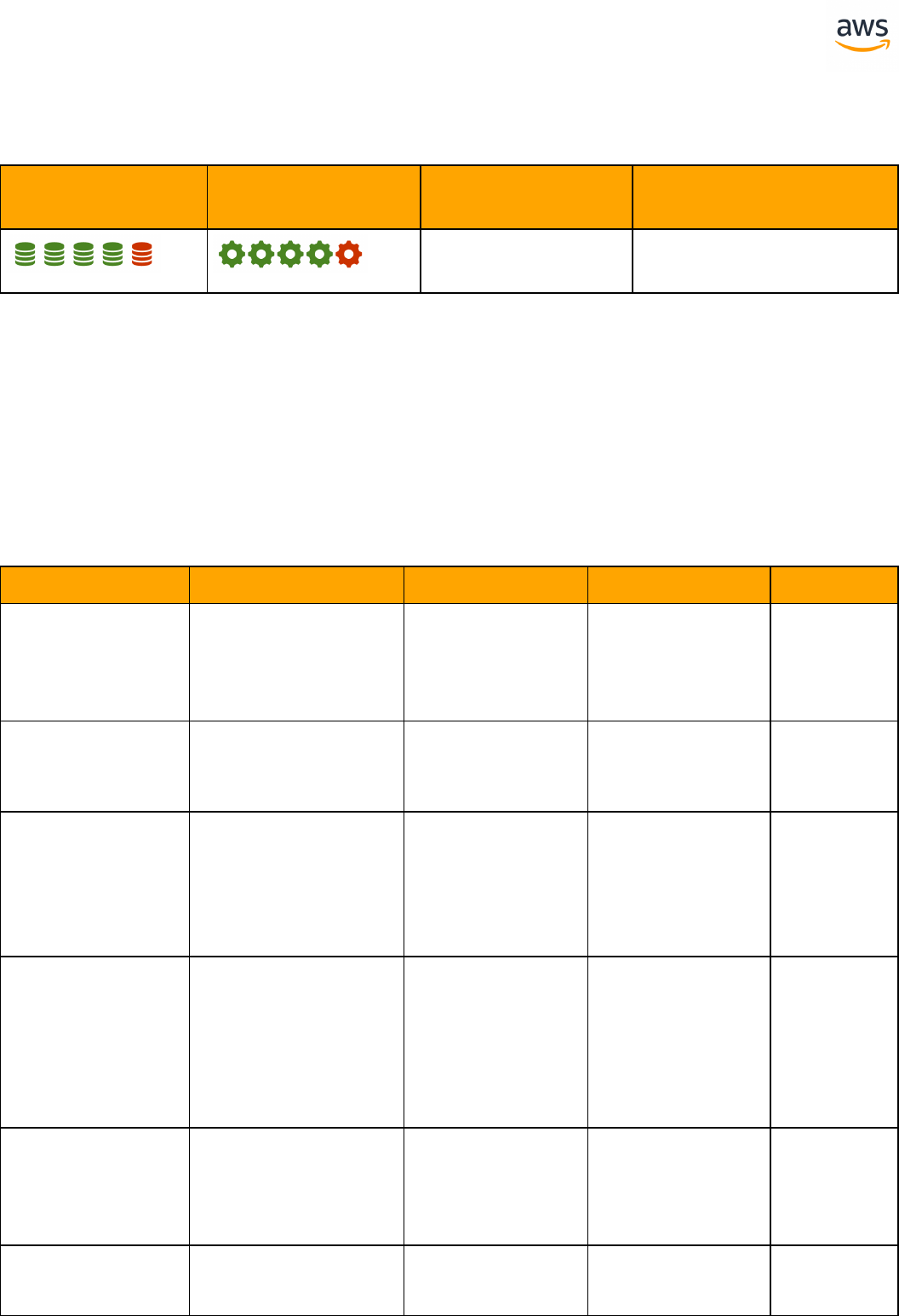

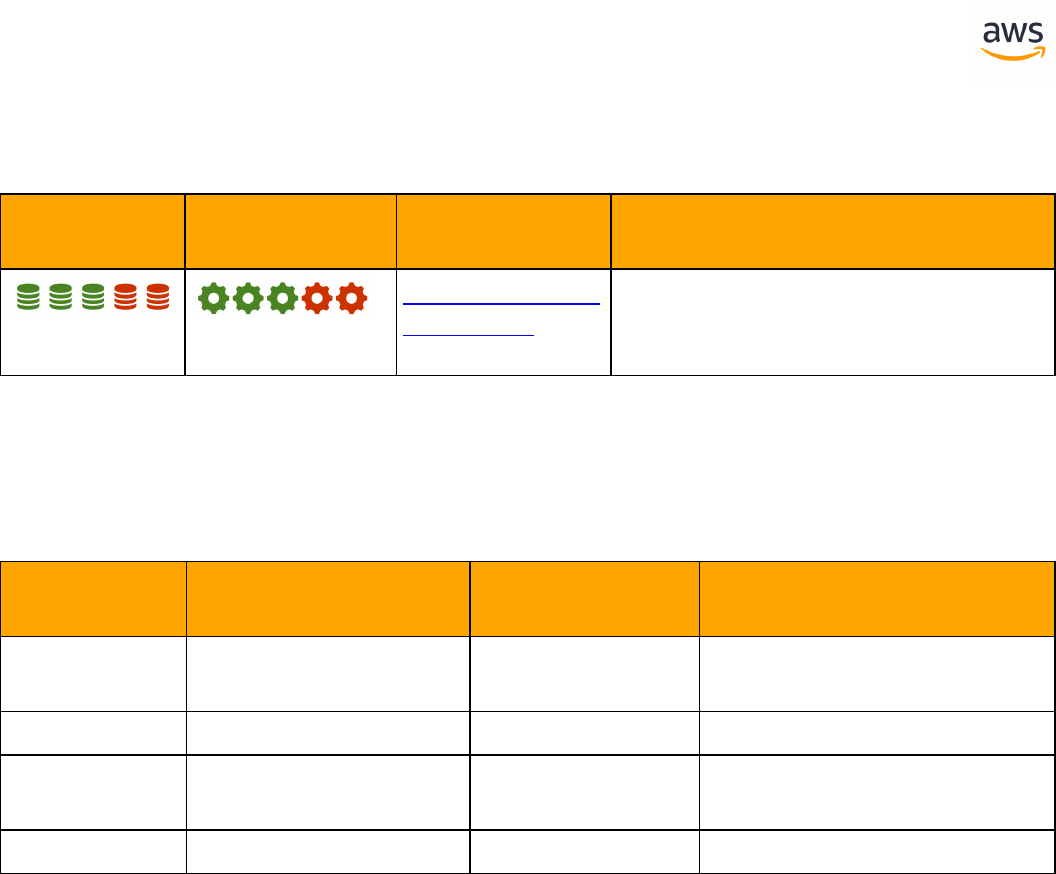

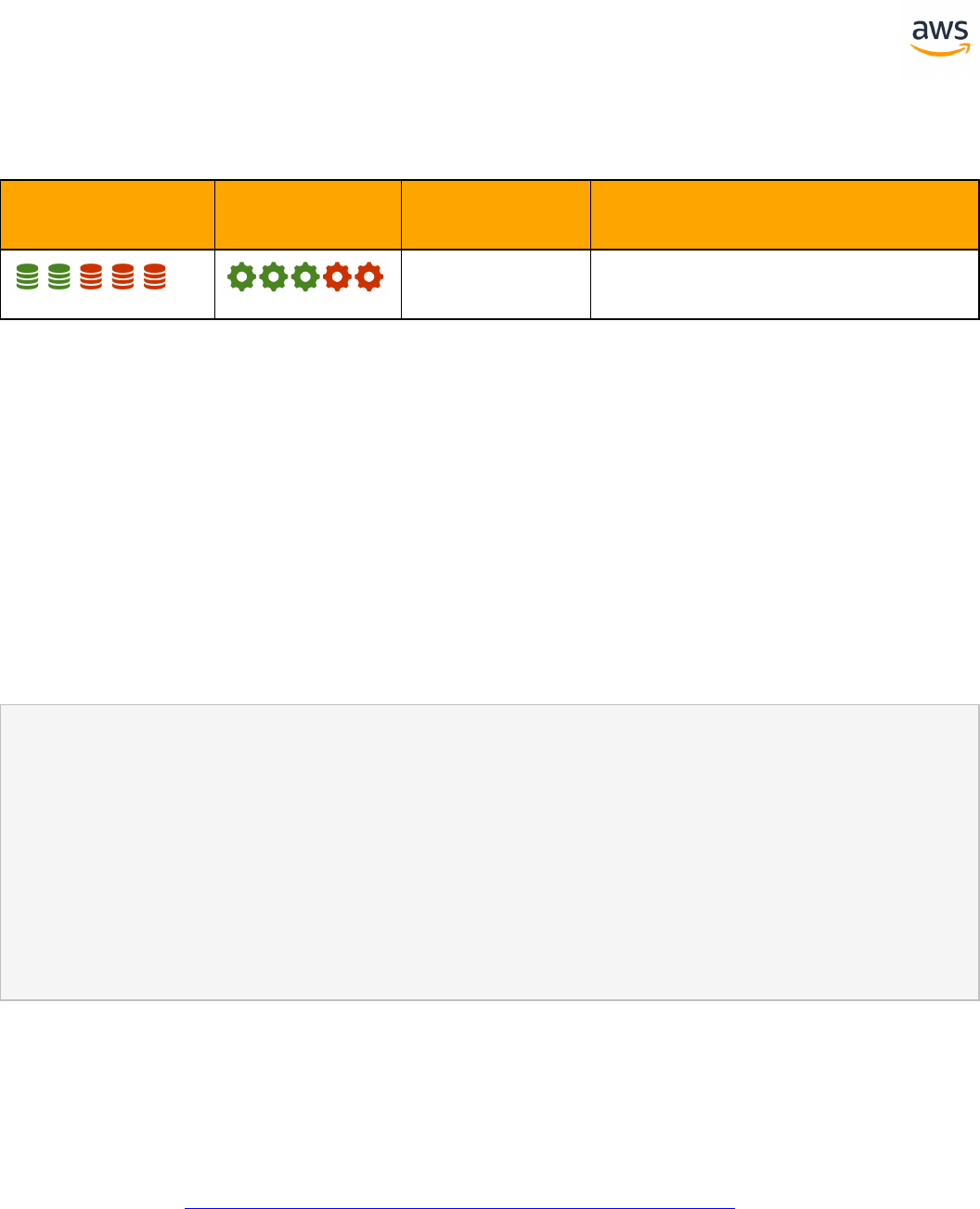

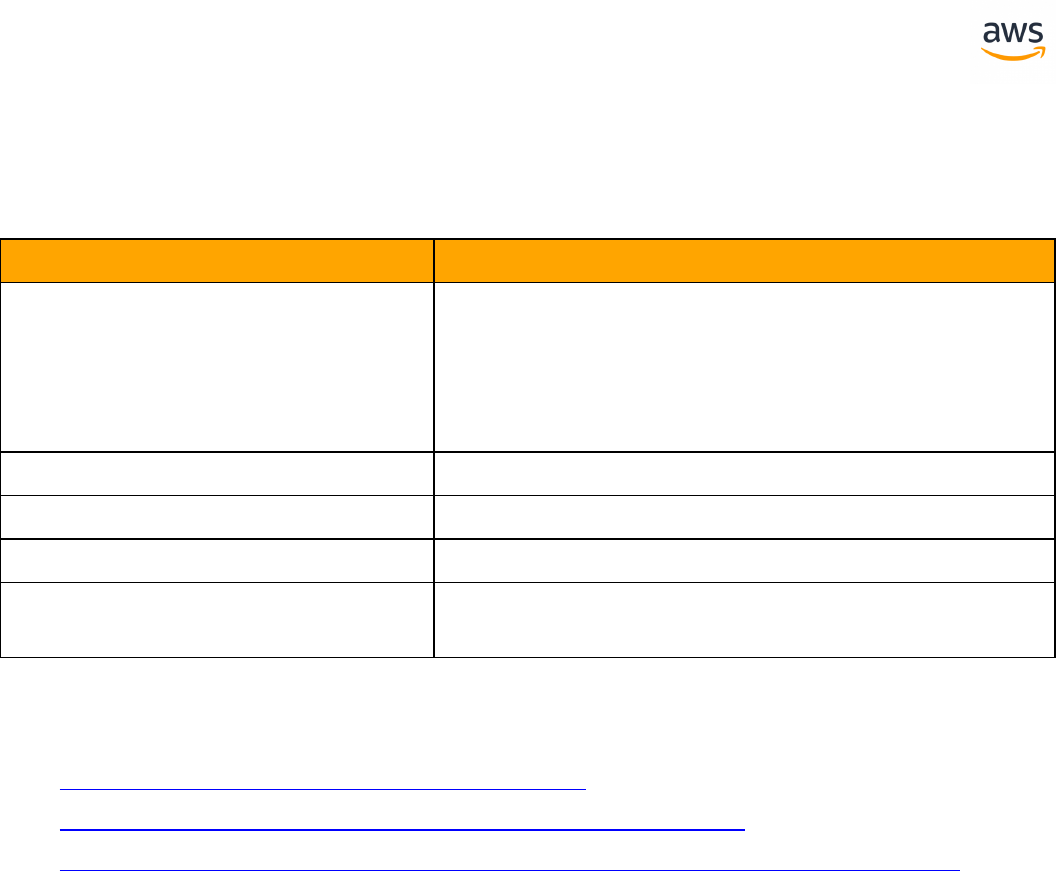

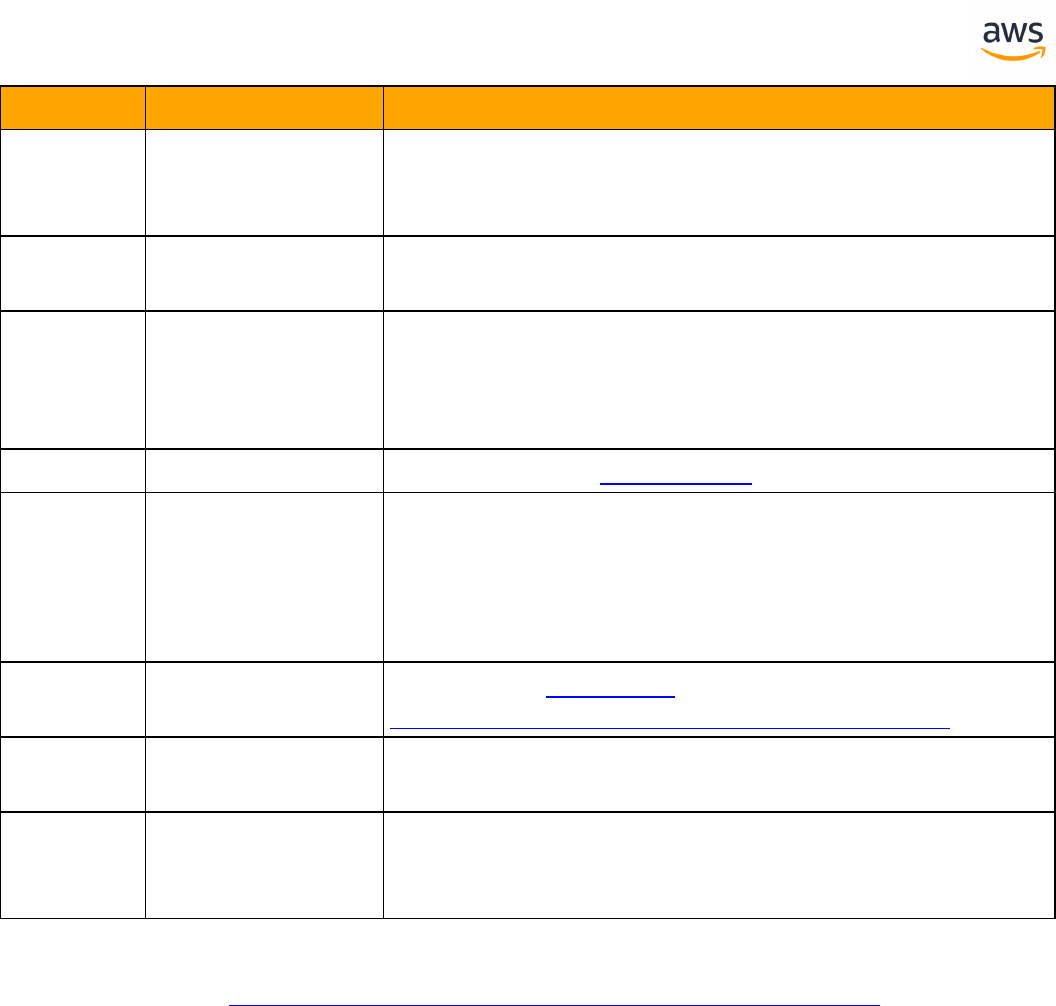

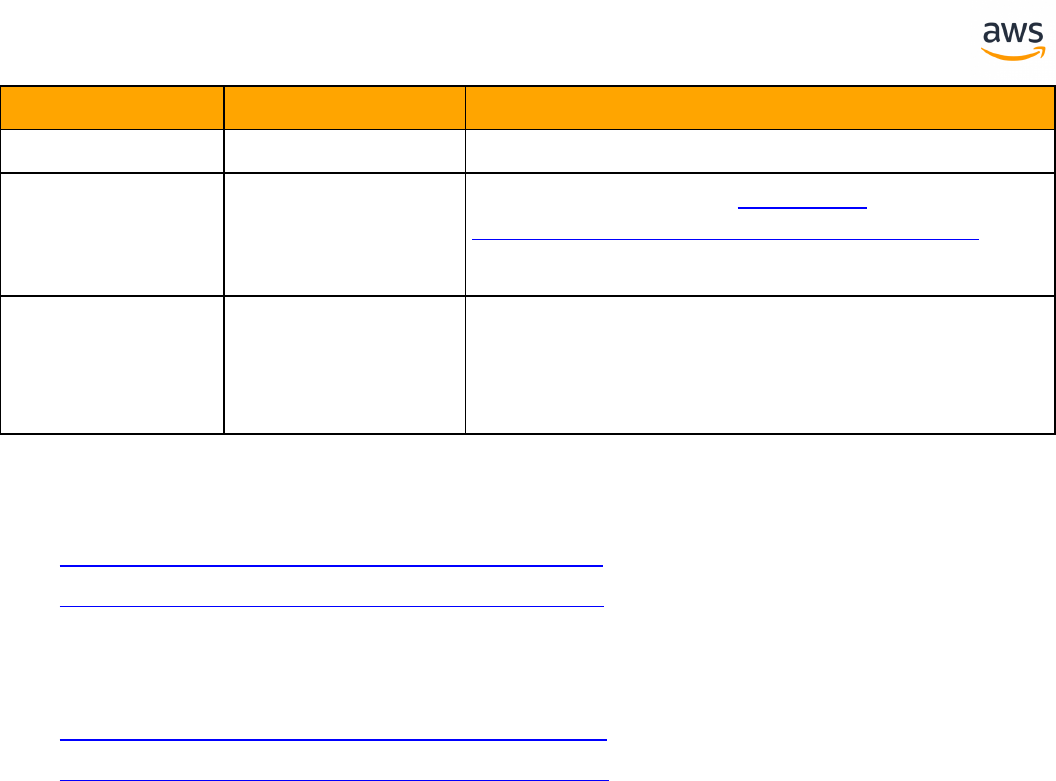

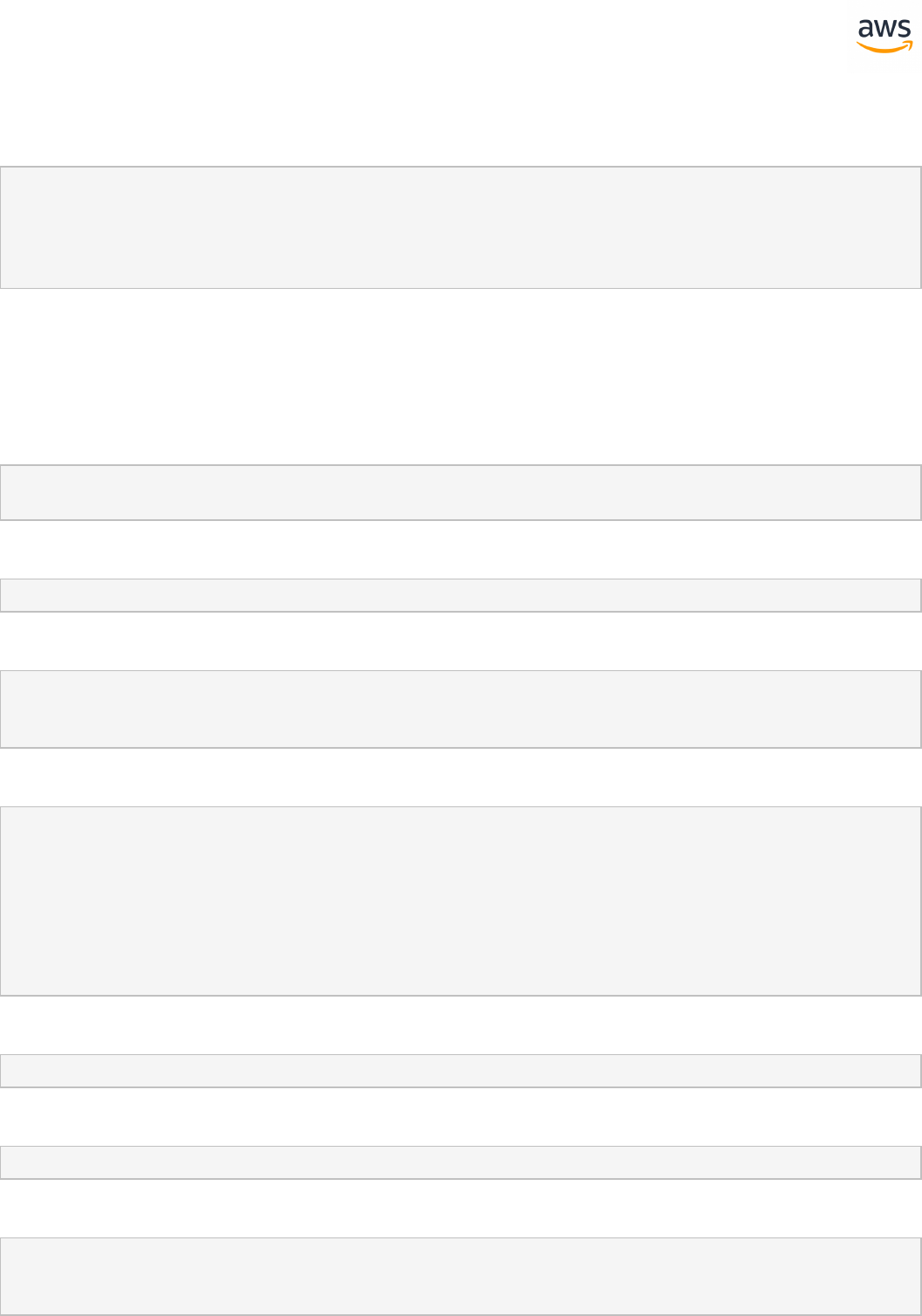

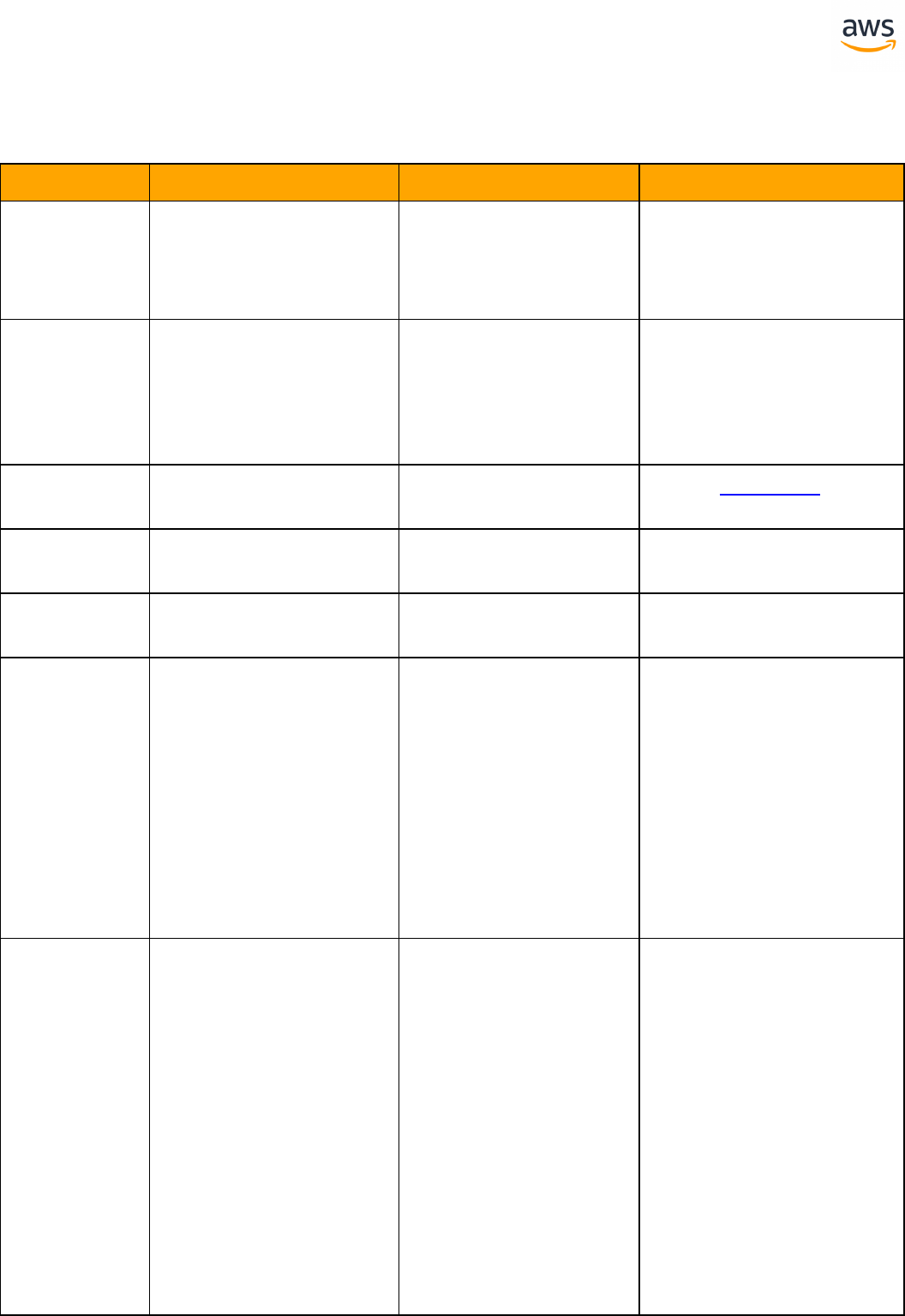

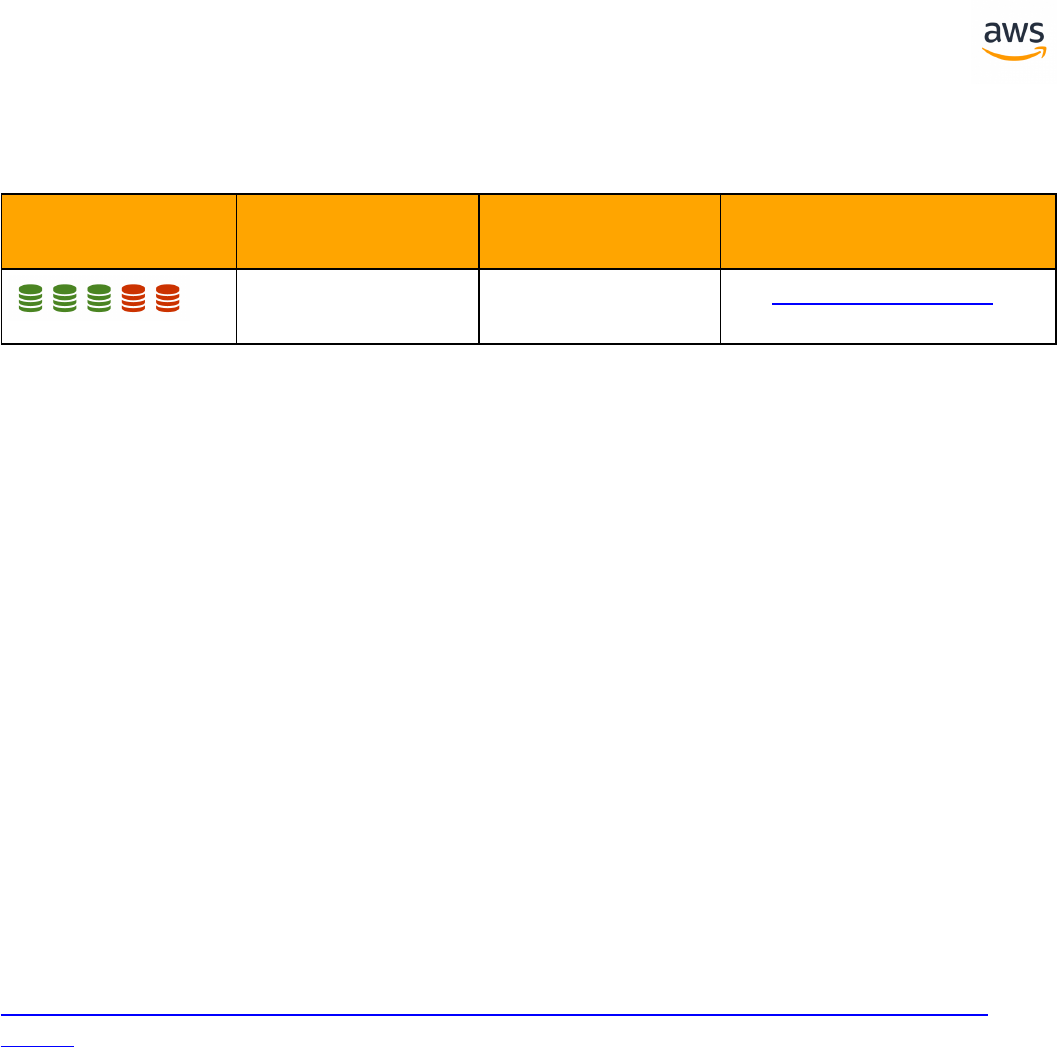

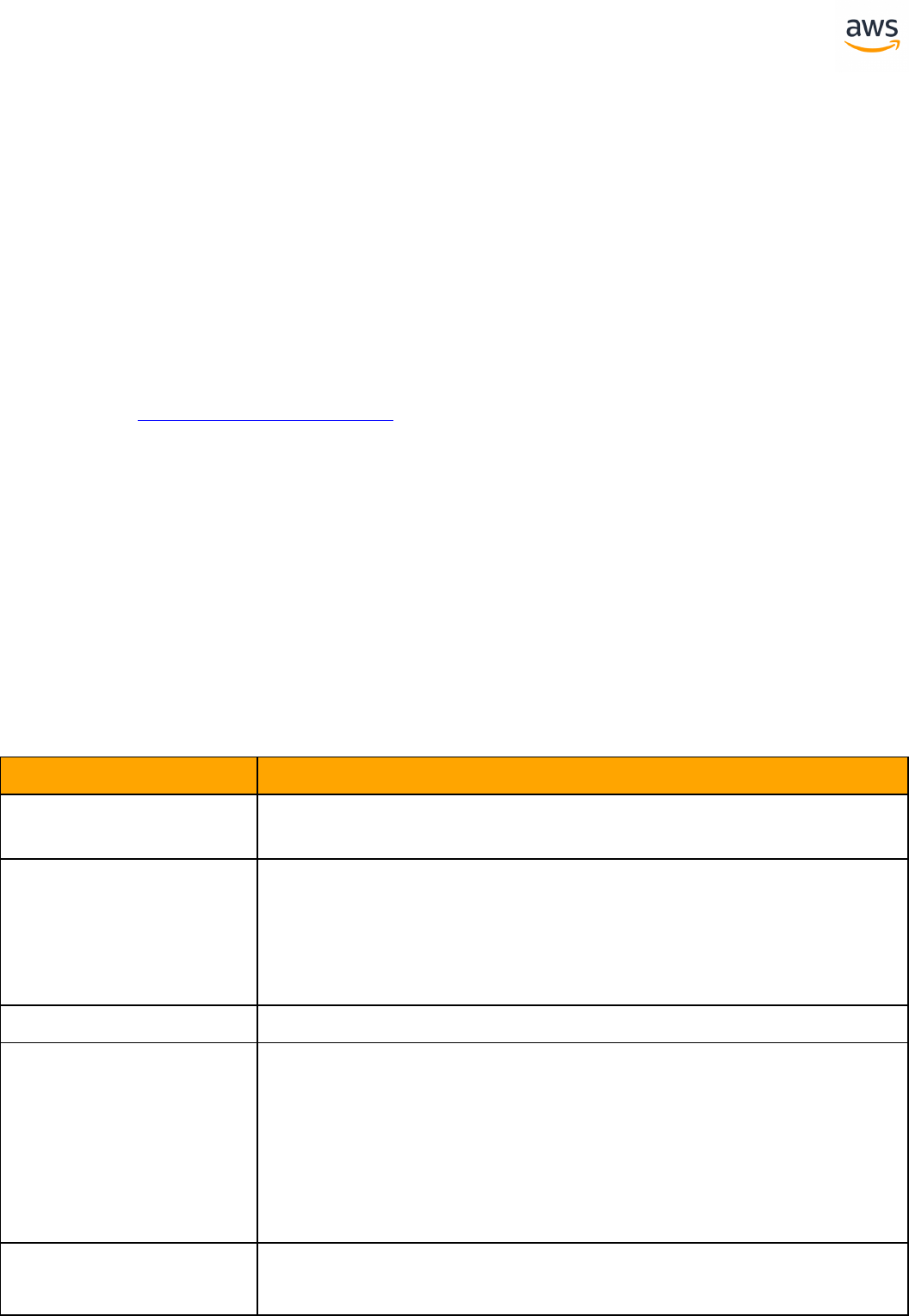

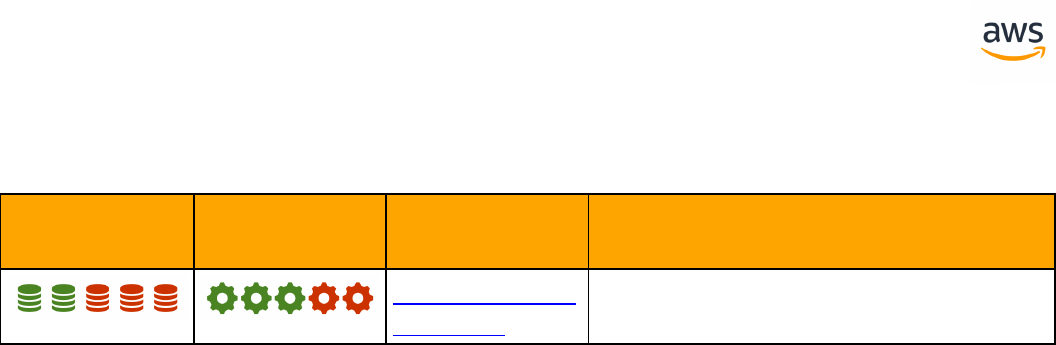

ANSI SQL

SQLServer Aurora PostgreSQL Key Differences Compatibility

Constraints Constraints l SET DEFAULT option is

missing

Creating Tables Creating Tables l Auto generated value

column is different

l Can't use physical

attribute ON

l Missing table variable

and memory optim-

ized table

Common Table

Expressions

Common Table

Expressions

GROUP BY GROUP BY

Table JOIN Table JOIN l OUTER JOIN with com-

mas

l CROSS APPLY and

OUTER APPLY are not

supported

Data Types Data Types l Syntax and handling

differences

Views Views l Indexed and Par-

titioned view are not

supported

Windowed Func-

tions

Windowed Functions

Derived Tables Derived Tables

- 13 -

SQLServer Aurora PostgreSQL Key Differences Compatibility

Temporal Tables Temporal Tables l Temporal tables are

not supported

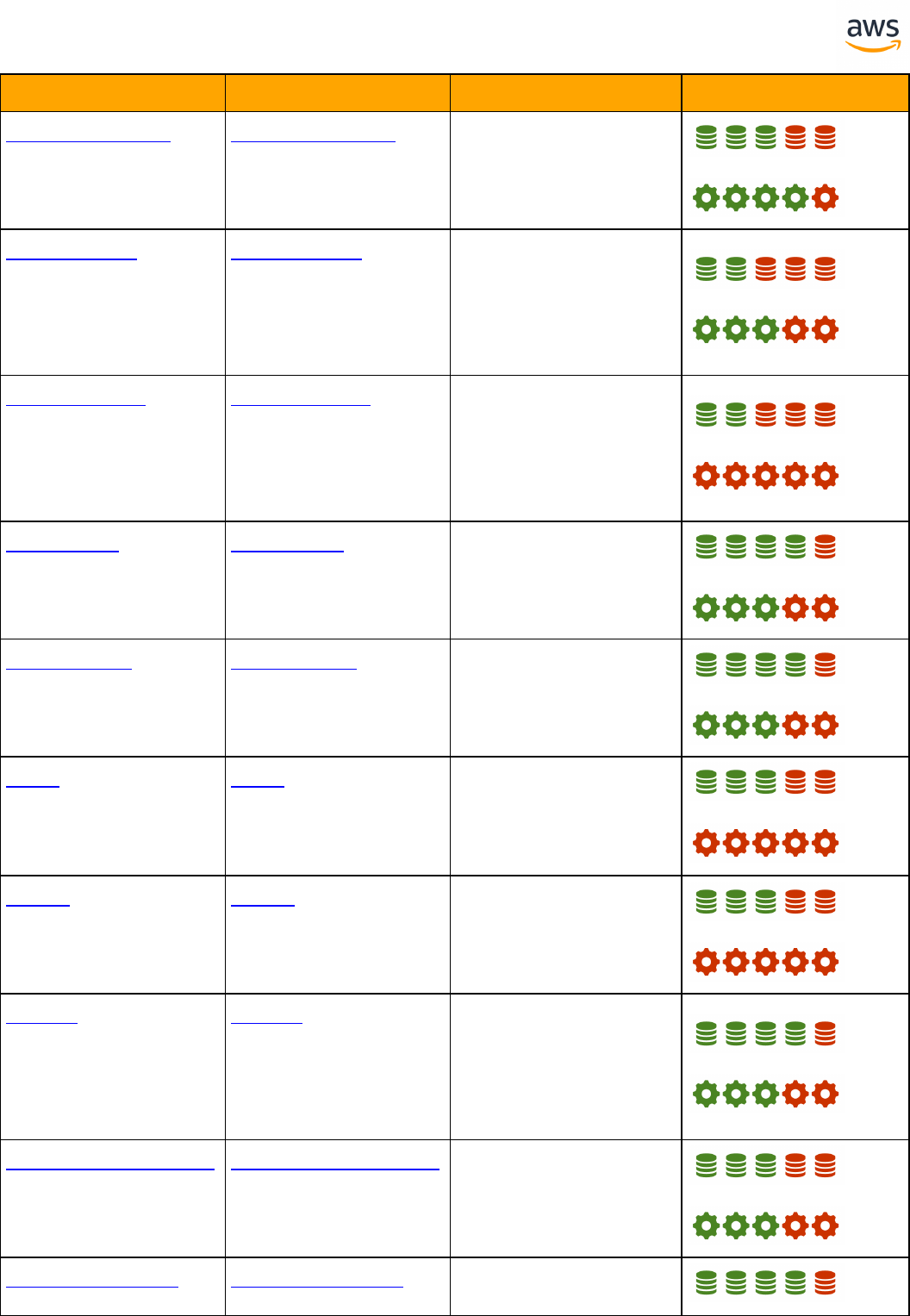

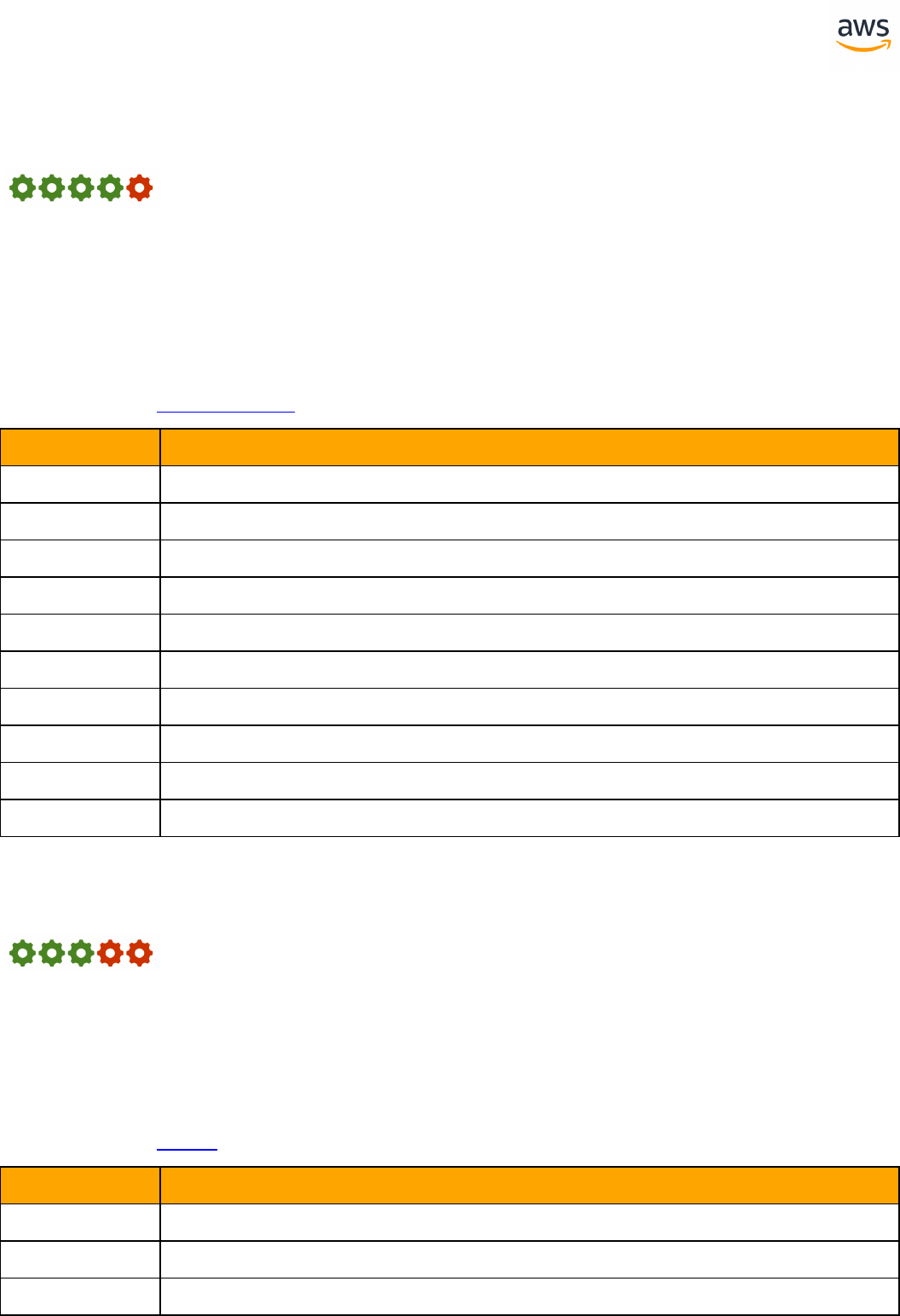

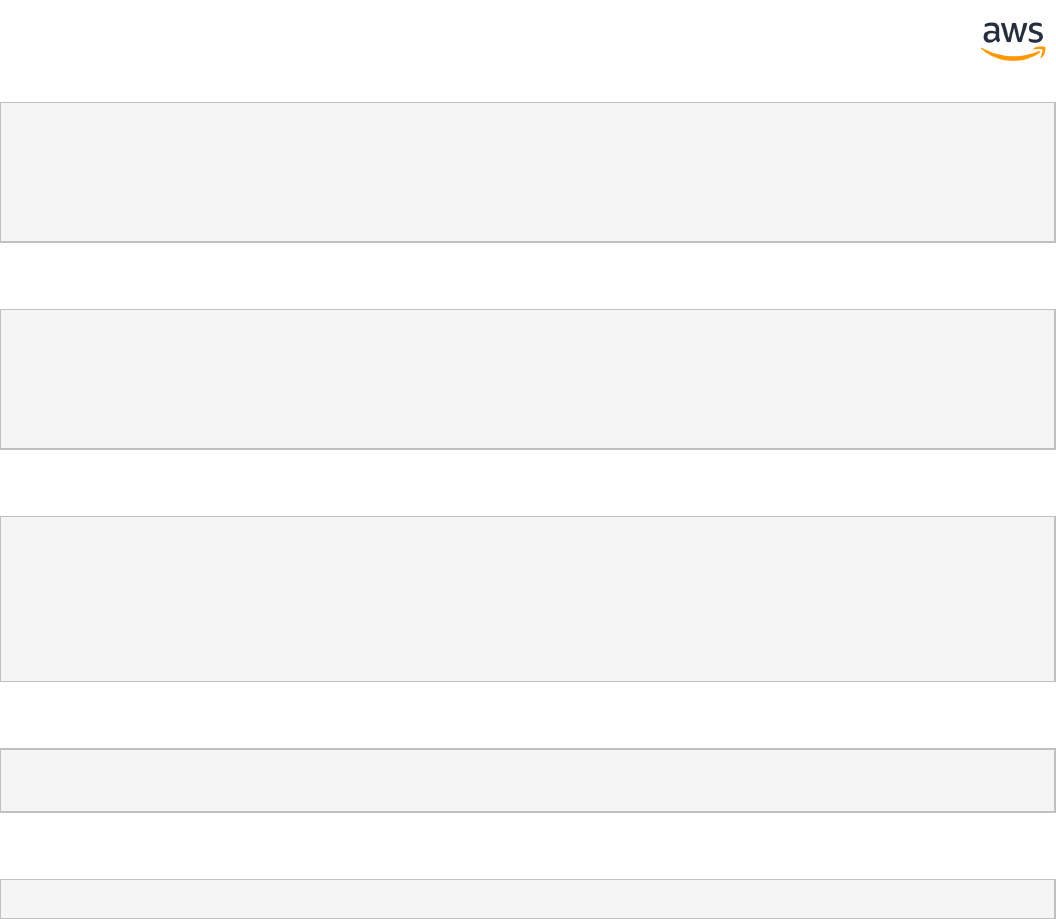

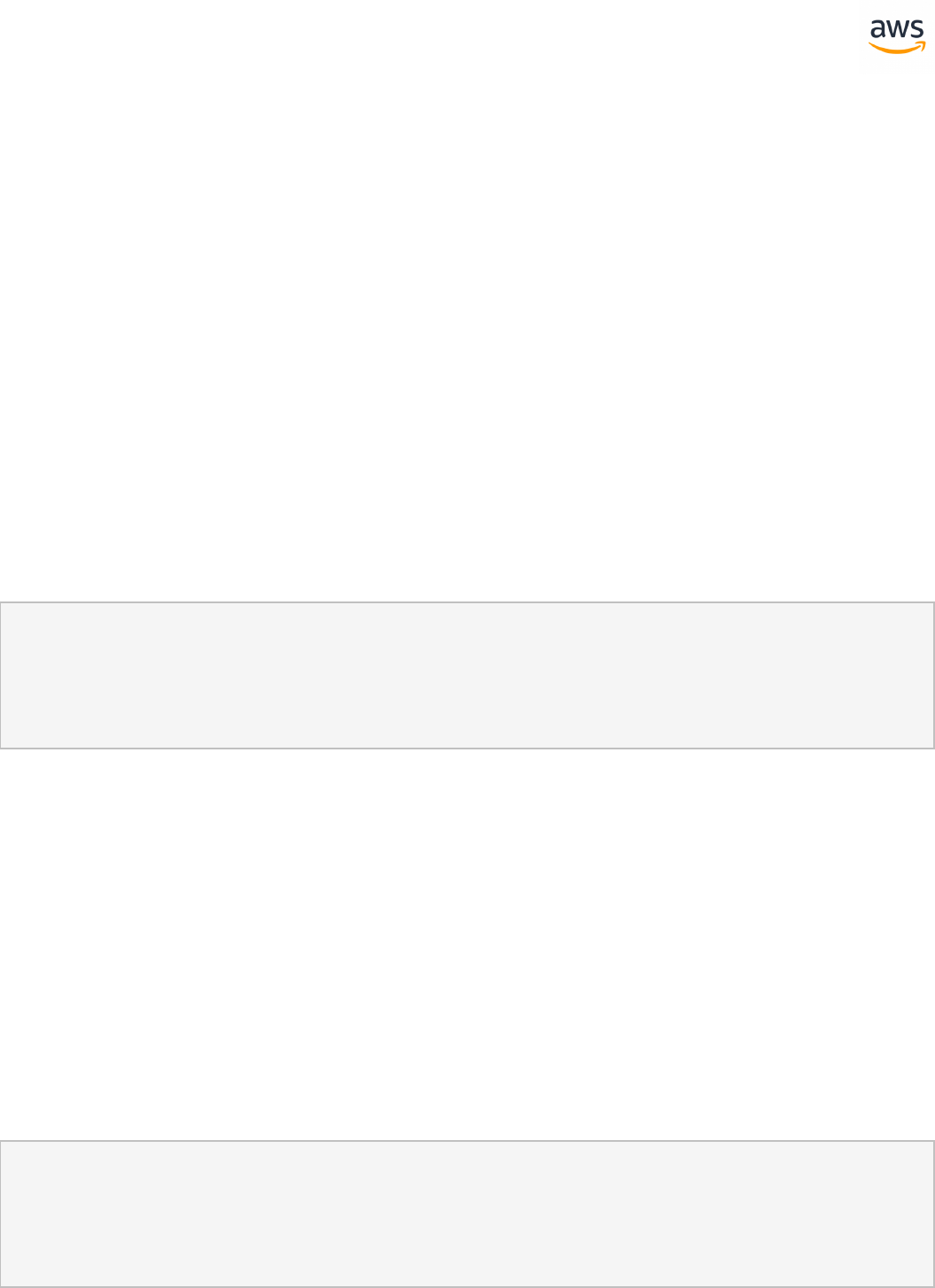

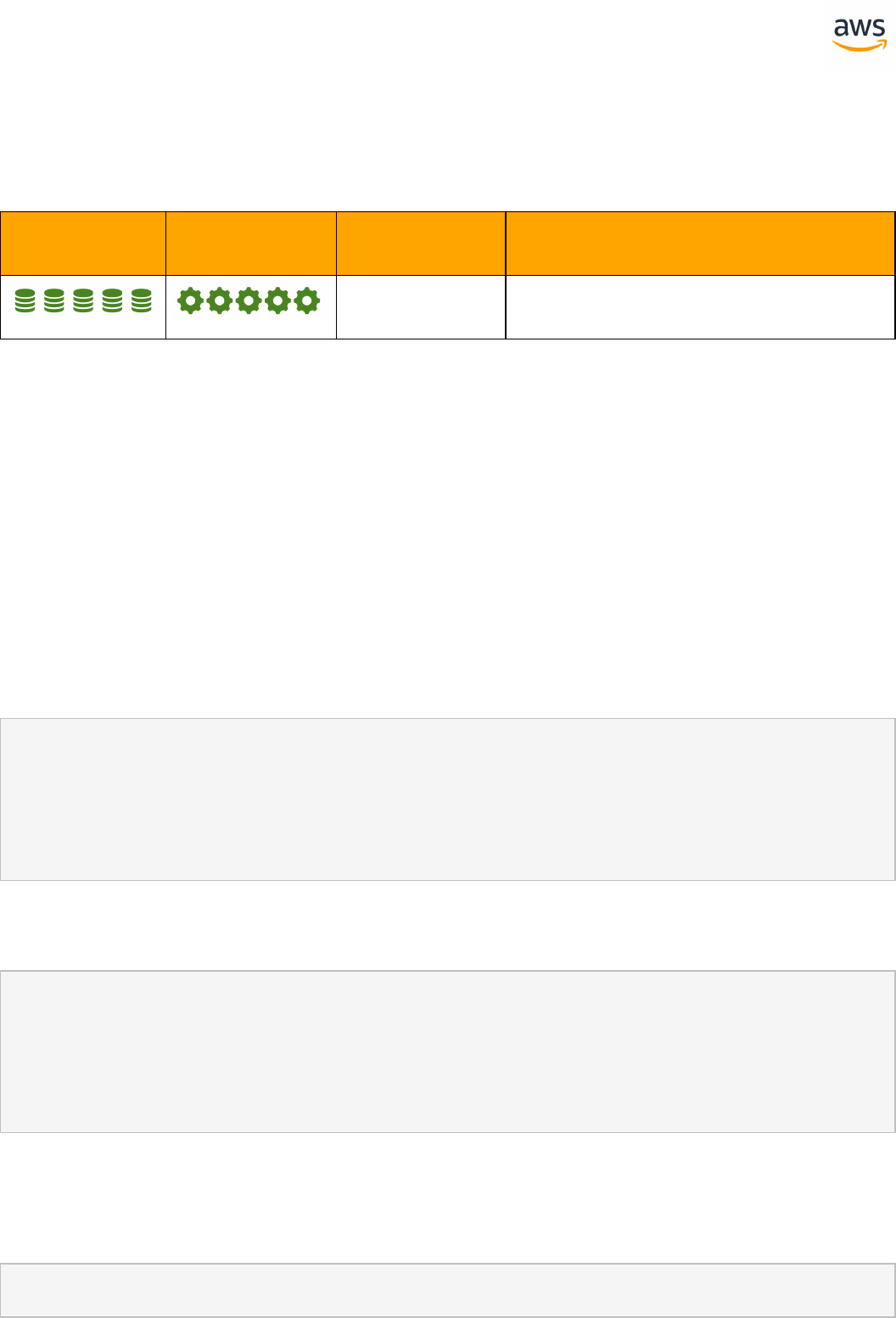

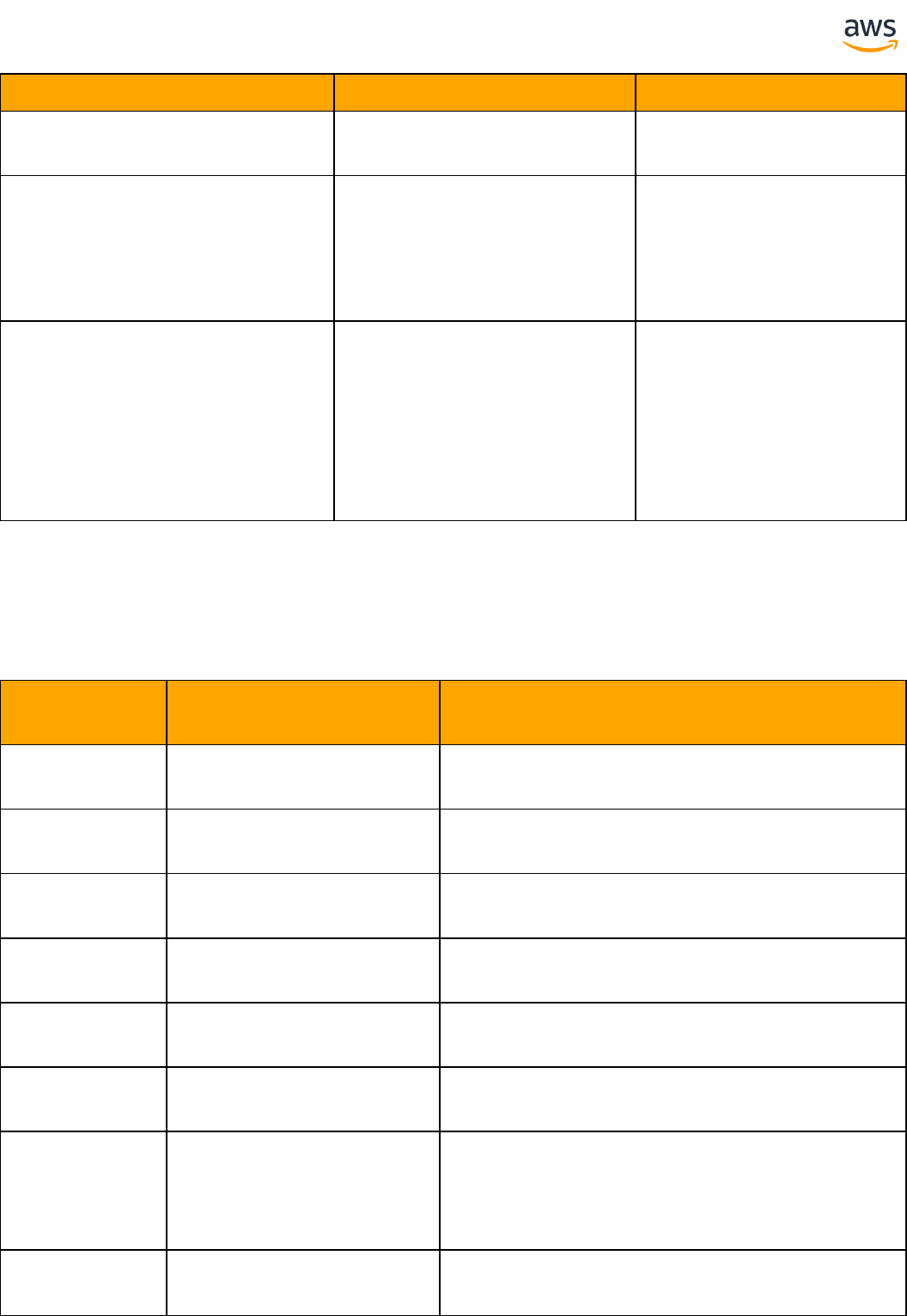

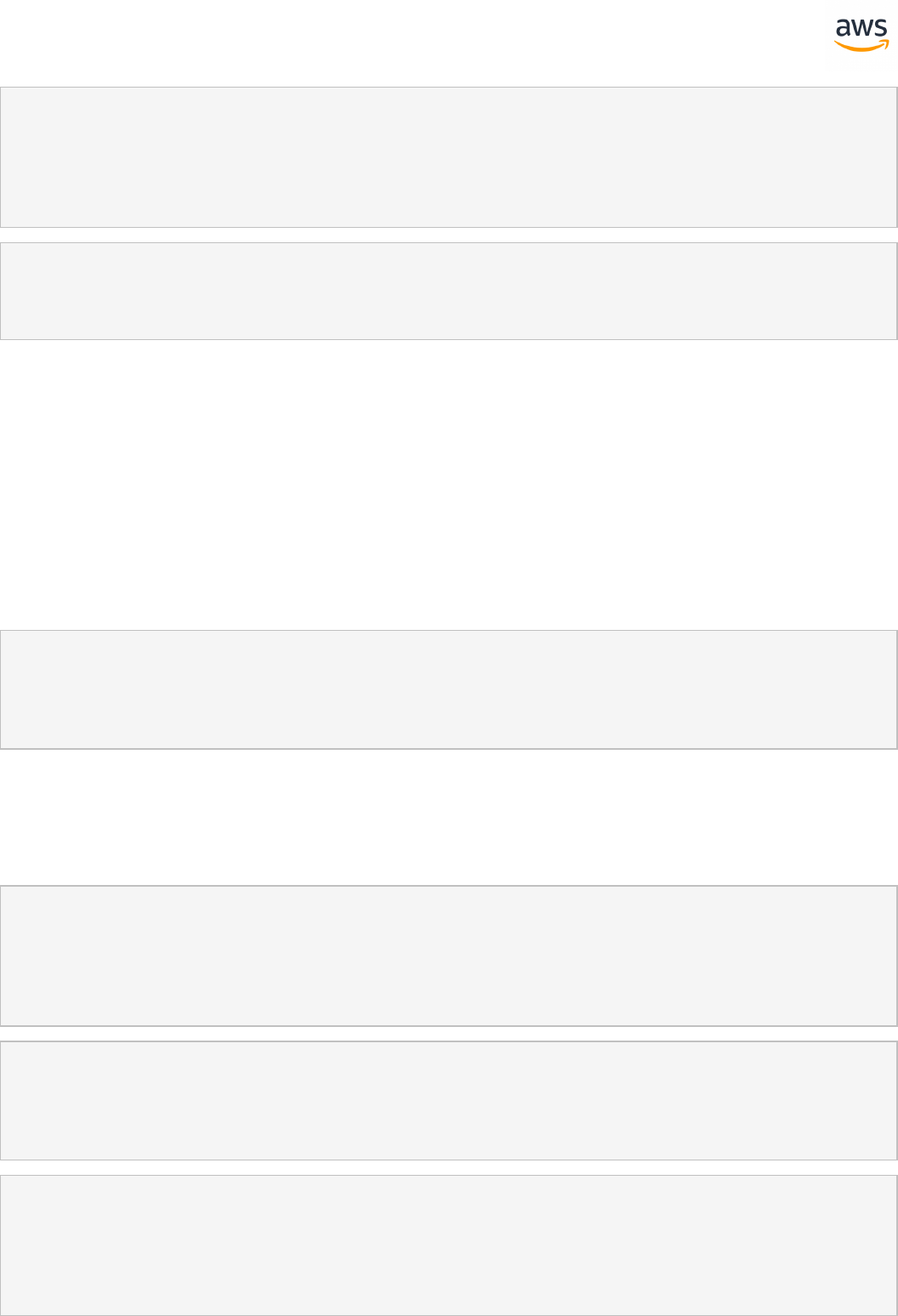

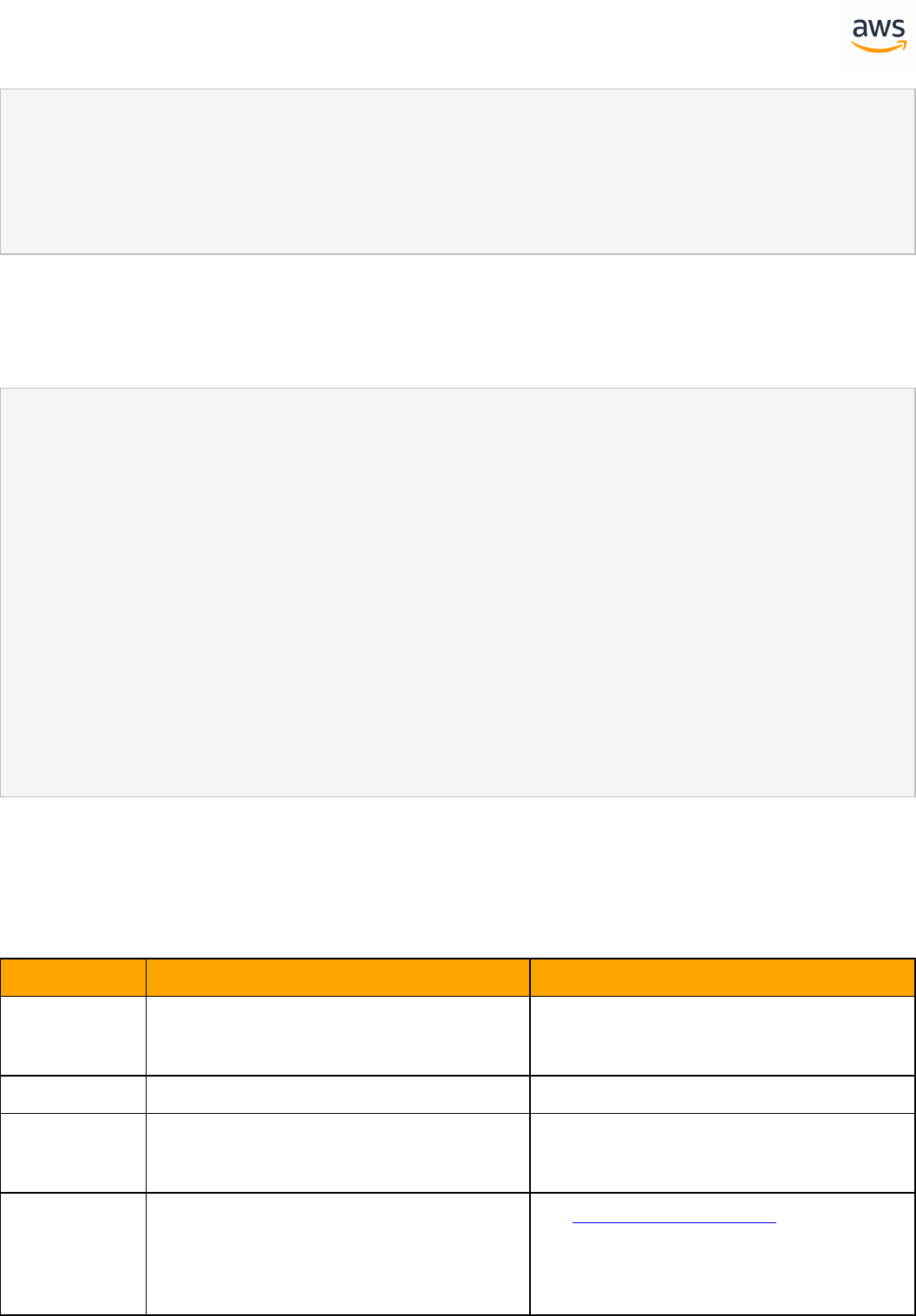

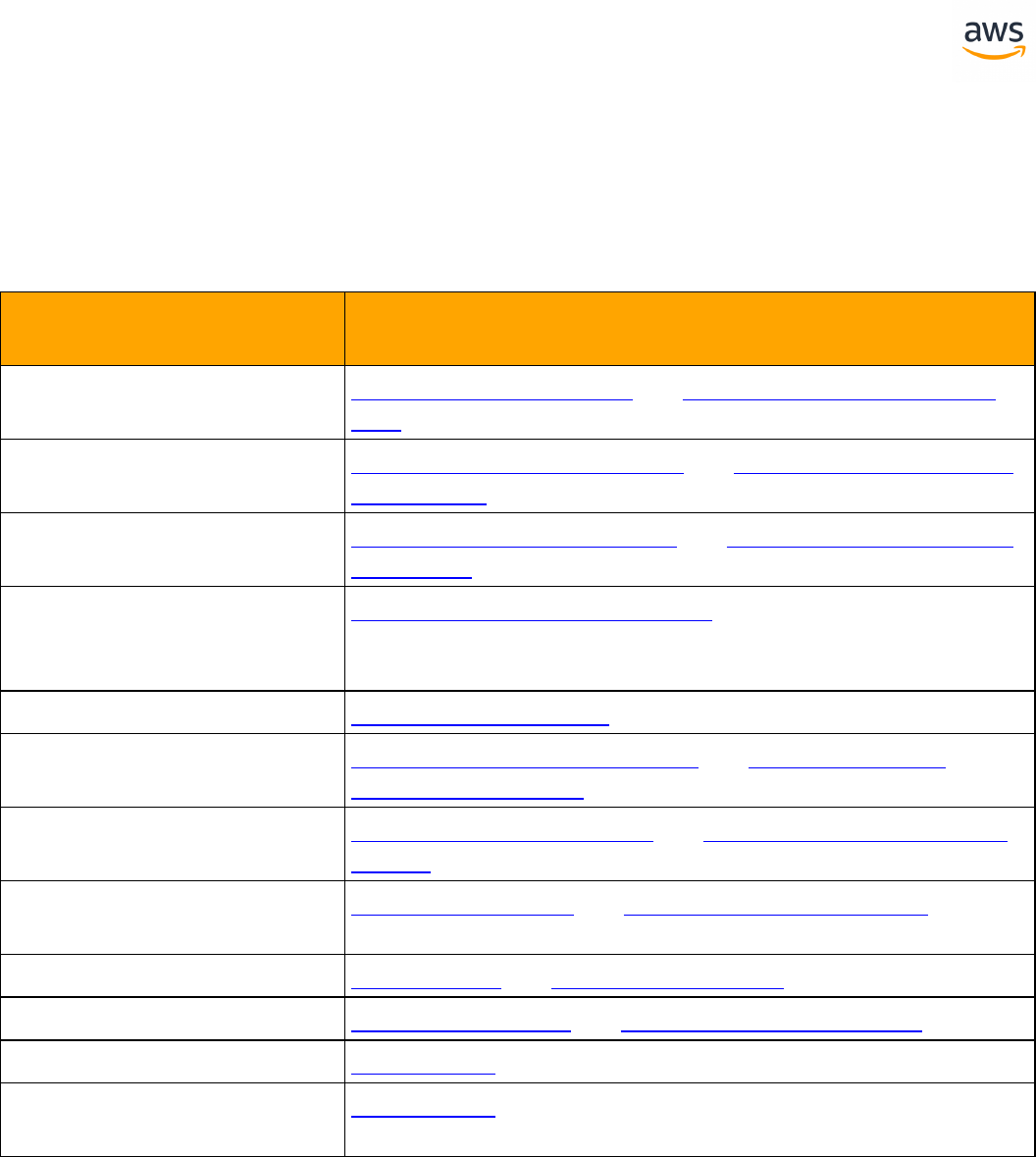

T-SQL

SQLServer Aurora PostgreSQL Key Differences Compatibility

Collations Collations l UTF16 and

NCHAR/NVARCHAR

data types are not

supported

Cursors Cursors l Different cursor

options

Date and Time Func-

tions

Date and Time Func-

tions

l PostgreSQL is

using different

function names

String Functions String Functions l Syntax and option

differences

Databases and

Schemas

Databases and

Schemas

Transactions Transactions l Nested trans-

actions are not

supported

l syntax diffrences

for initializing a

transaction

DELETE and UPDATE

FROM

DELETE and UPDATE

FROM

l DELETE...FROM

from_list is not

supported -

rewrite to use sub-

queries

- 14 -

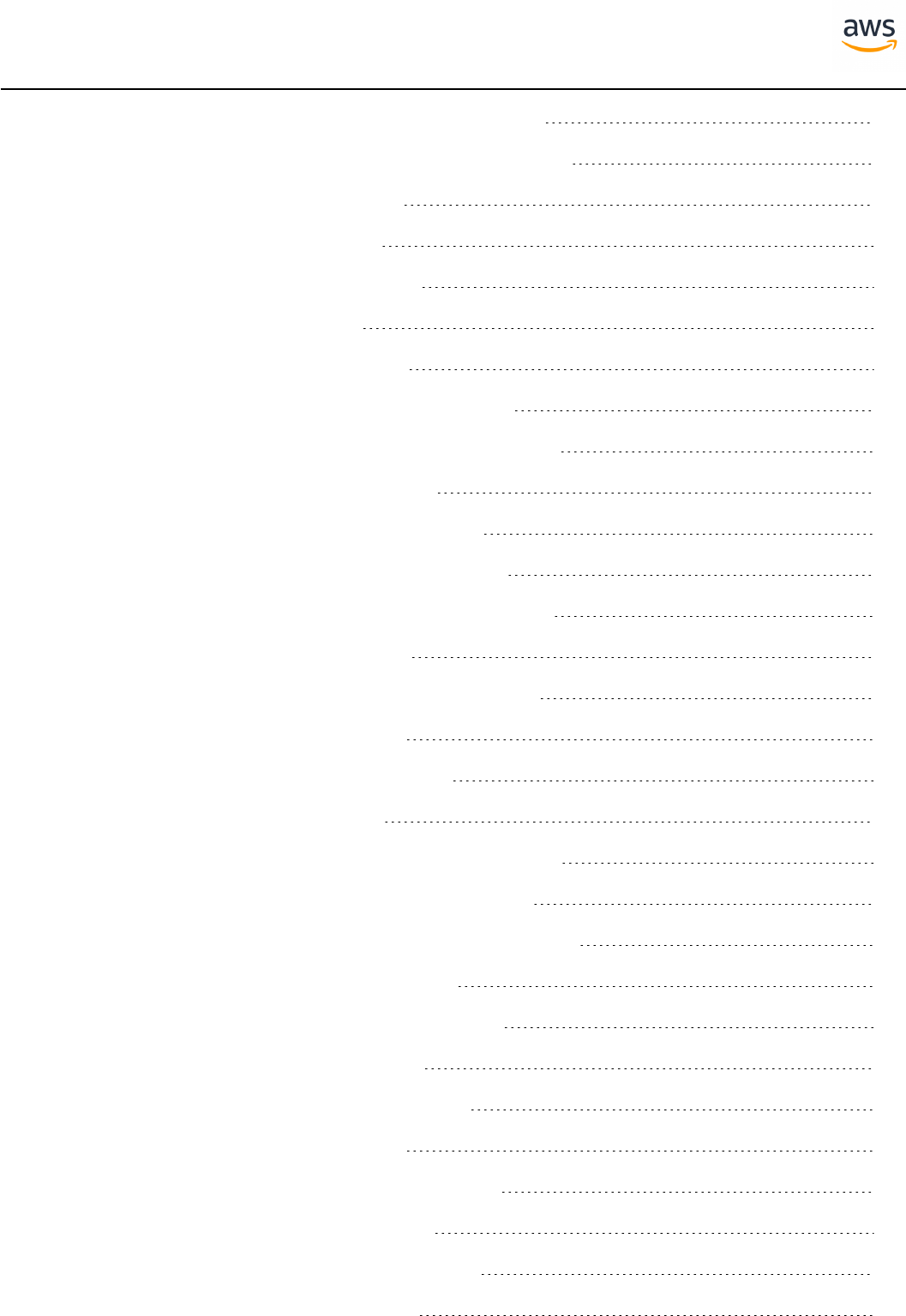

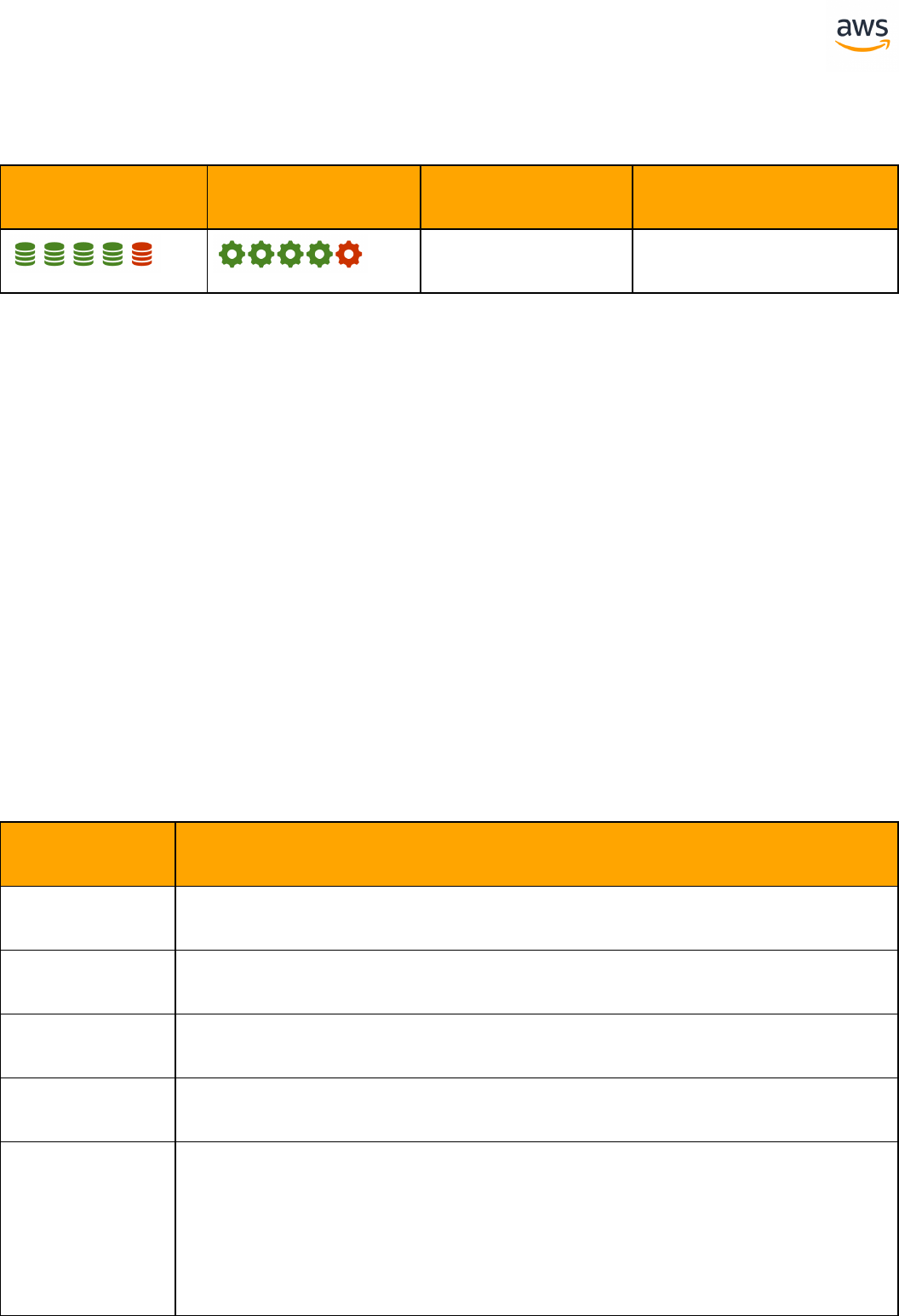

SQLServer Aurora PostgreSQL Key Differences Compatibility

Stored Procedures Stored Procedures l Syntax and option

differences

Error Handling Error Handling l Different

paradigm and syn-

tax will require

rewrite of error

handling code

Full Text Search Full Text Search l Different

paradigm and syn-

tax will require

application/drivers

rewrite.

Flow Control Flow Control l Postgres does not

support GOTO and

WAITFOR TIME

JSON and XML JSON and XML l Syntax and option

differences, sim-

ilar functionality

PIVOT PIVOT l Straight forward

rewrite to use tra-

ditional SQL syn-

tax

MERGE MERGE l Rewrite to use

INSERT… ON

CONFLICT

Triggers Triggers l Syntax and option

differences, sim-

ilar functionality -

PostgreSQL trigger

calling a function

User Defined Functions User Defined Functions l Syntax and option

differences

User Defined Types User Defined Types

- 15 -

SQLServer Aurora PostgreSQL Key Differences Compatibility

Sequences and Identity Sequences and Identity l Less options with

SERIAL

l Reseeding need to

be rewrited

Synonyms Synonyms l PostgreSQL does

not support Syn-

onym - there is an

available work-

around

TOP and FETCH LIMIT and OFFSET l TOP is not sup-

portd

Dynamic SQL Dynamic SQL l Different

paradigm and syn-

tax will require

application/drivers

rewrite.

CAST and CONVERT CAST and CONVERT l CONVERT is used

only to convert

between collations

l CAST uses dif-

ferent syntax

Broker Broker l Use Amazon

Lambda for sim-

ilar functionality

CLR Objects CLR Objects l Migrating CLR

objects will require

a full code rewrite

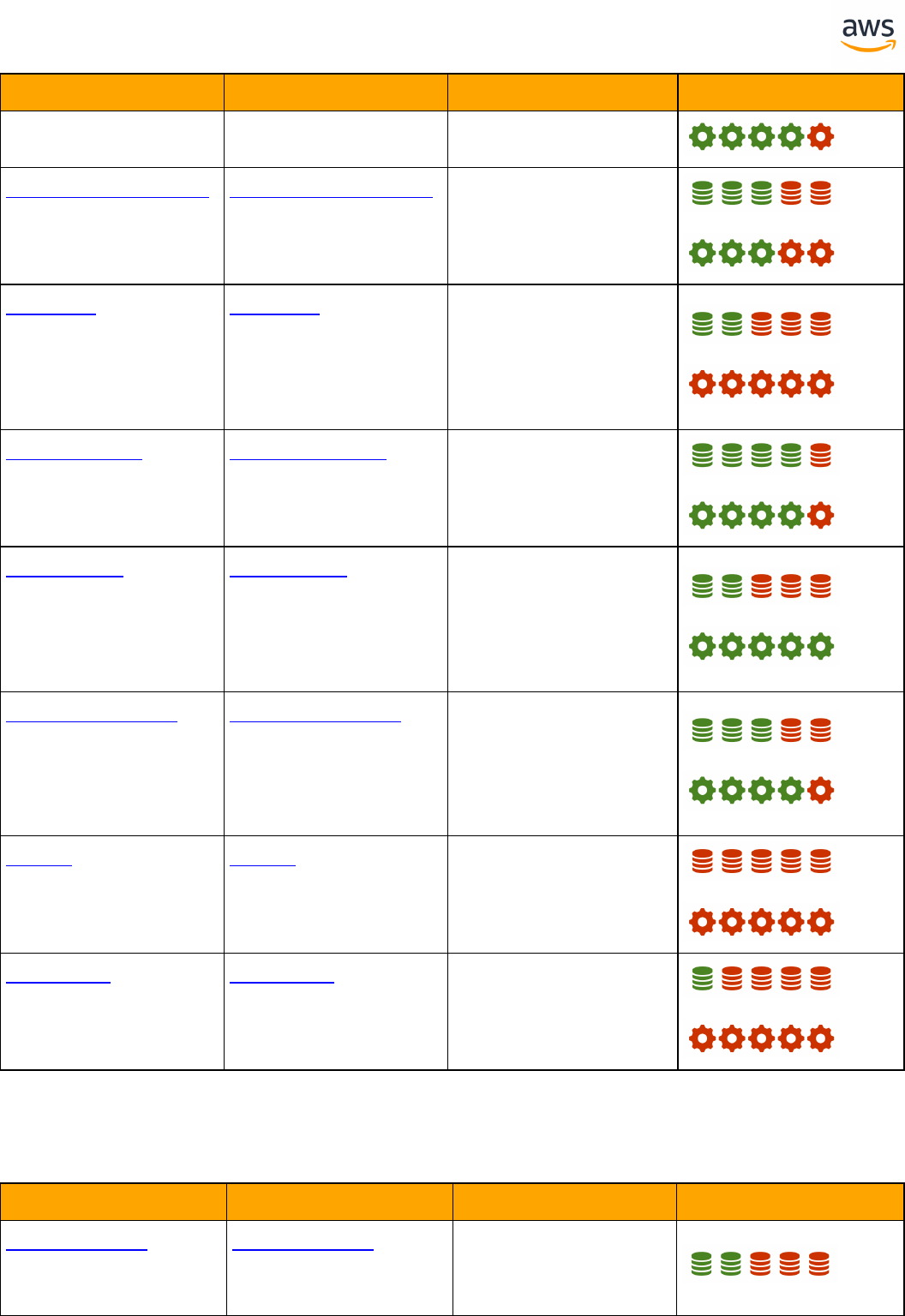

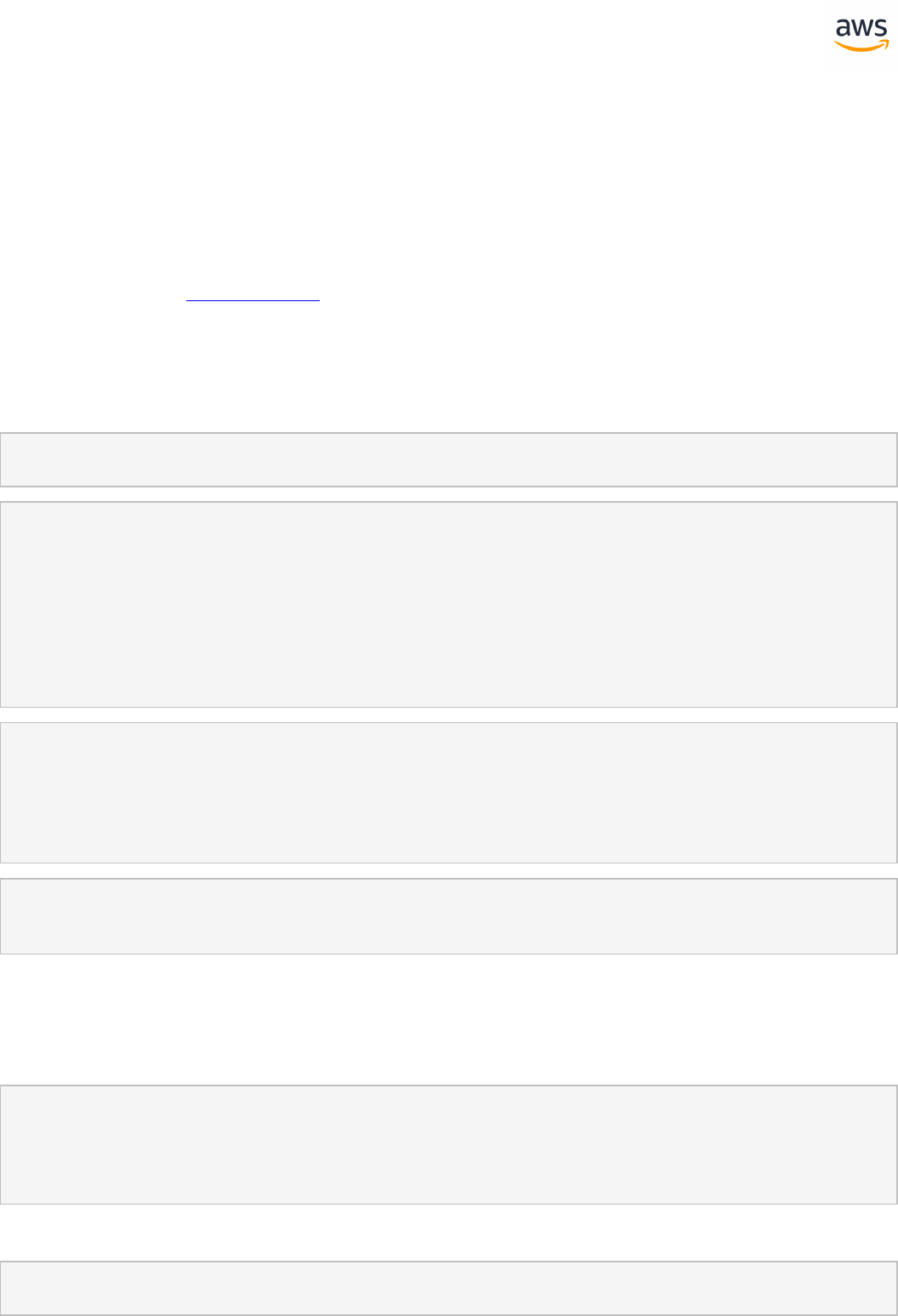

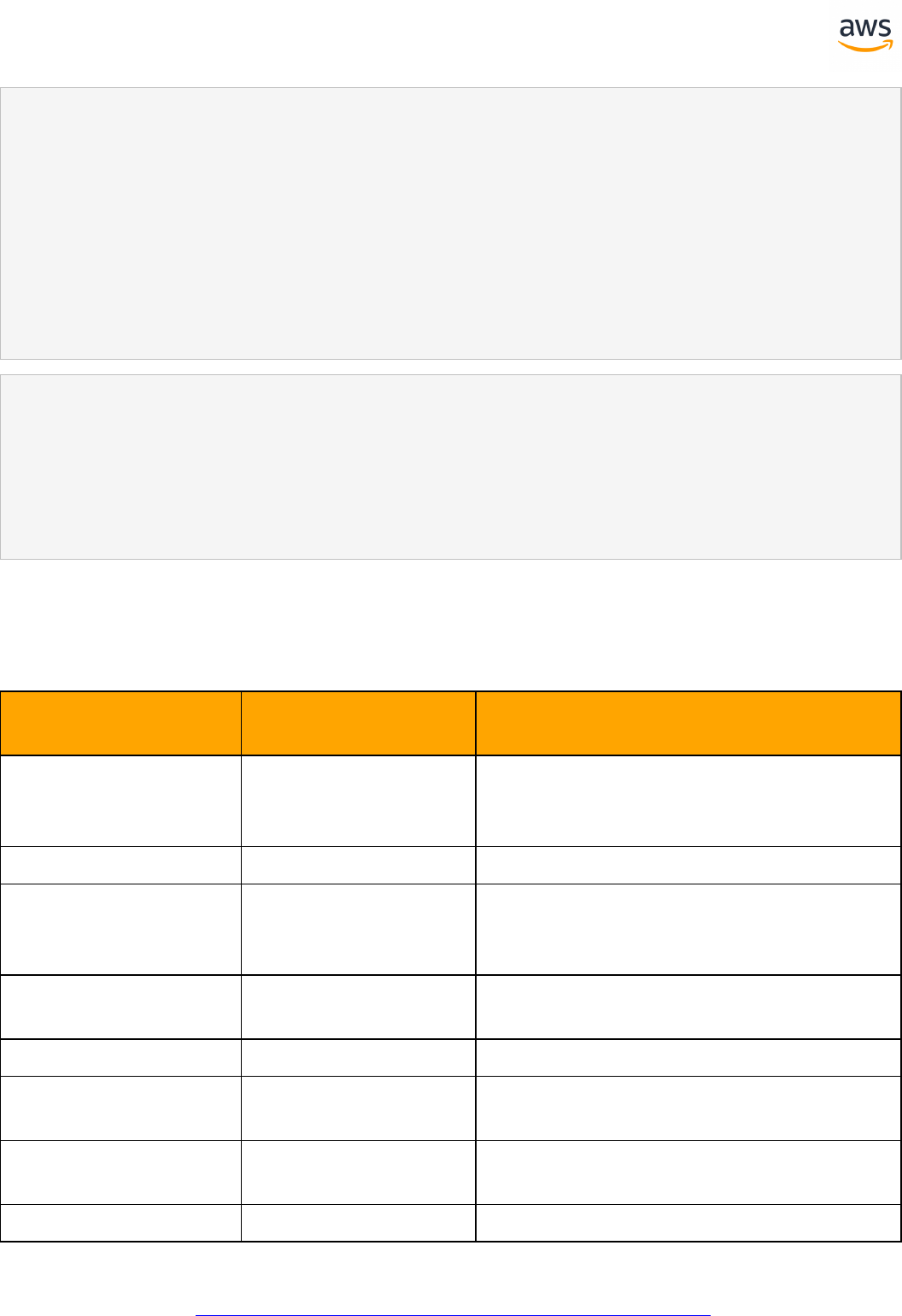

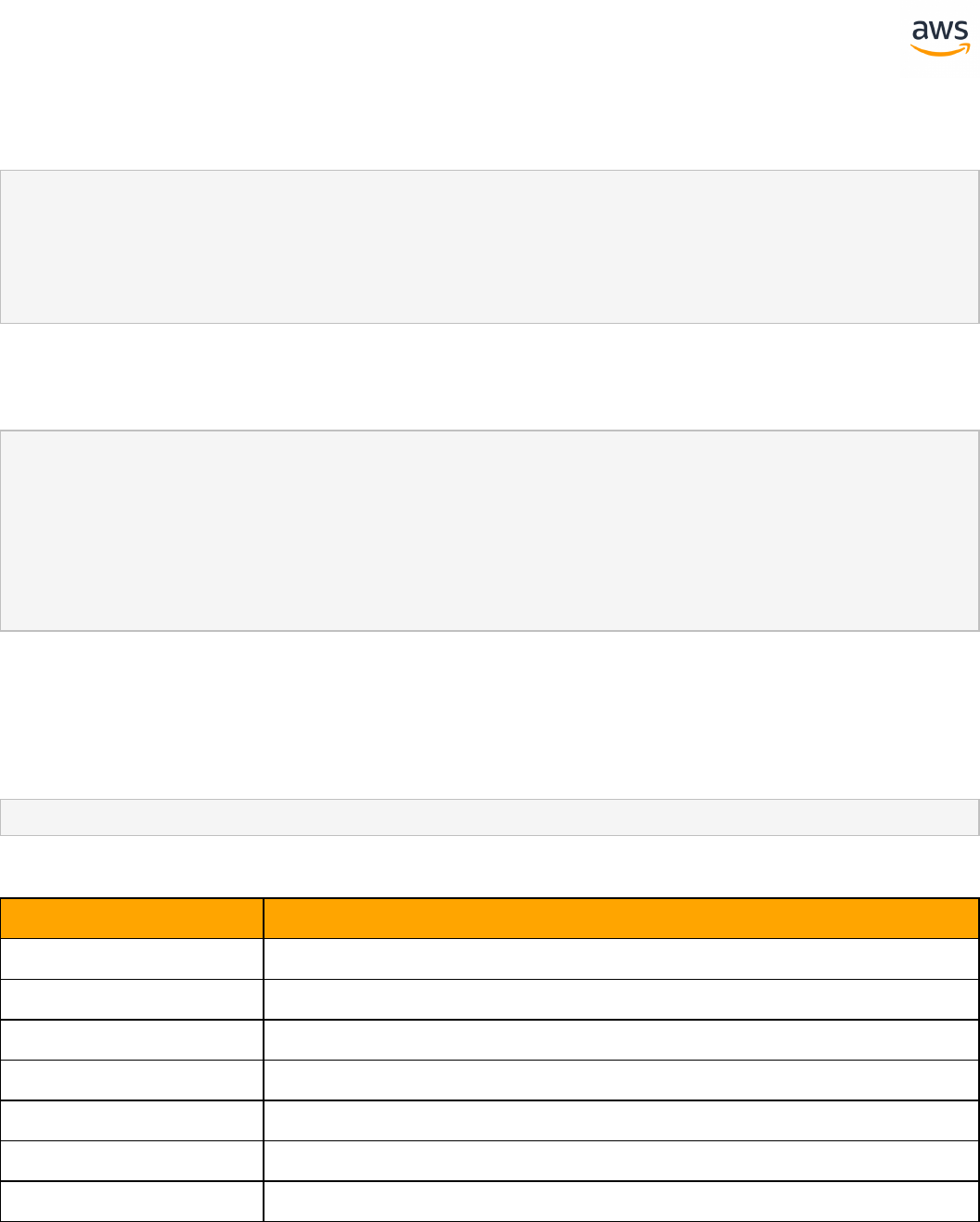

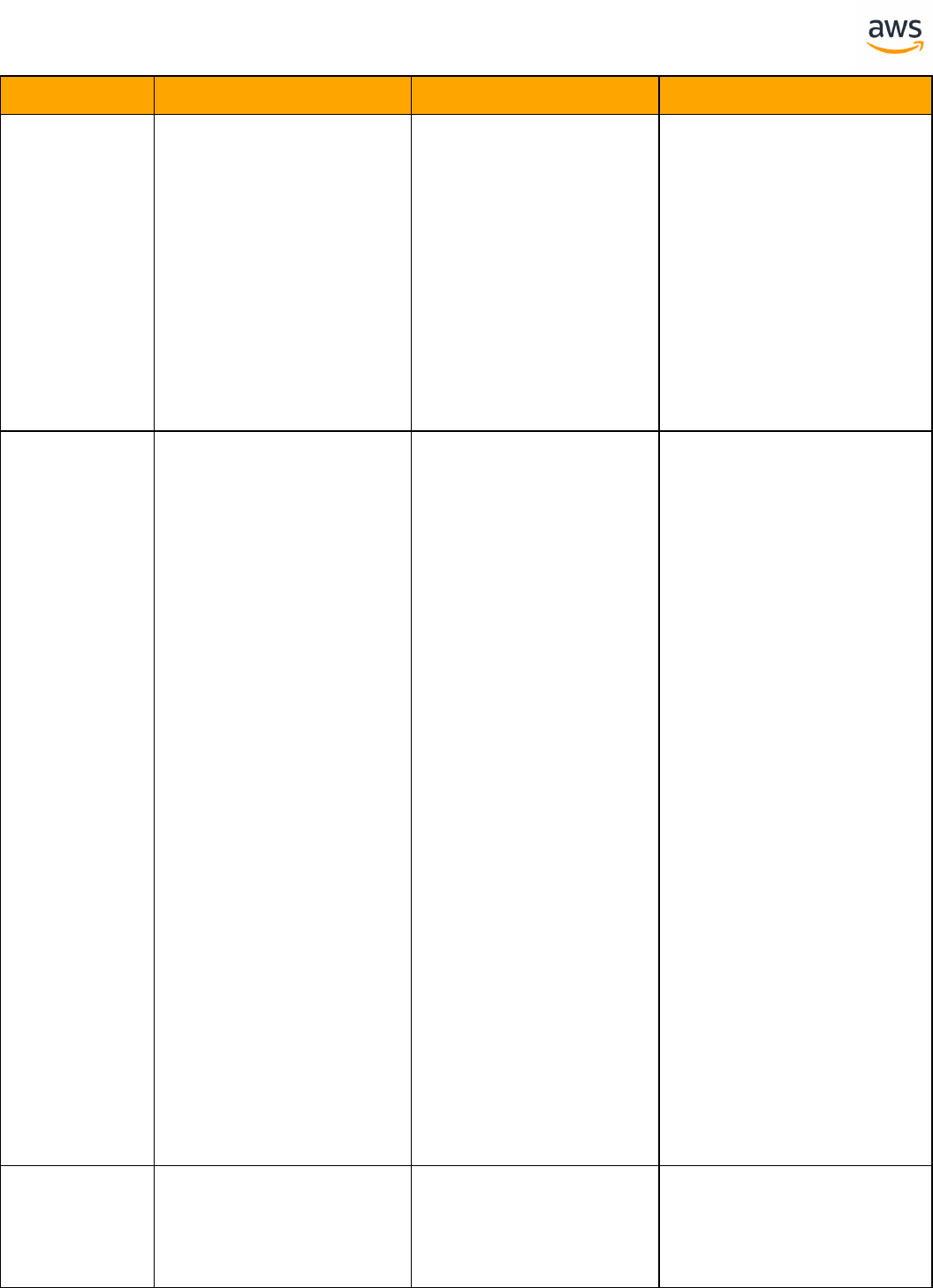

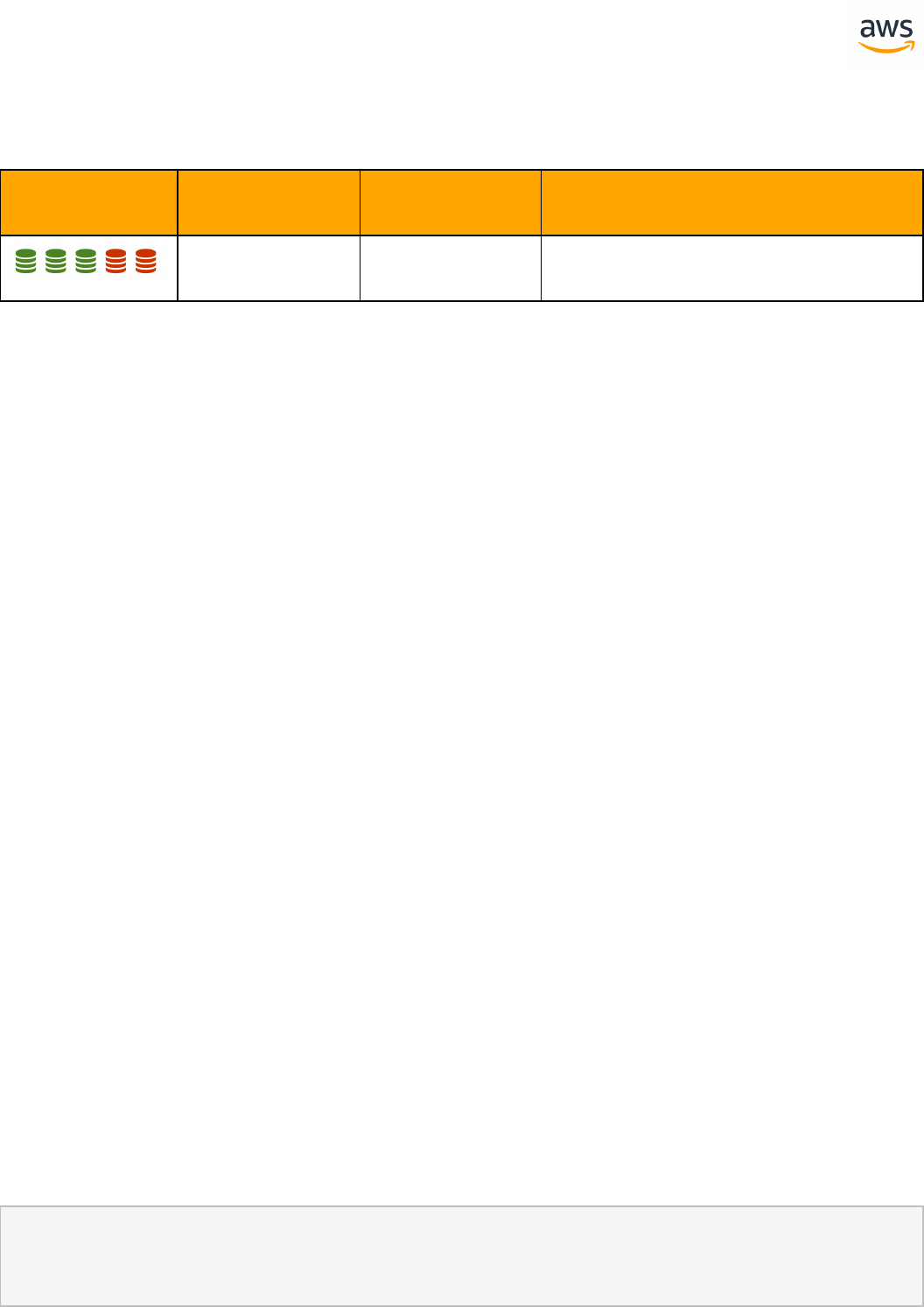

Configuration

SQLServer Aurora PostgreSQL Key Differences Compatibility

Session Options Session Options l SET options are

significantly dif-

ferent, except for

- 16 -

SQLServer Aurora PostgreSQL Key Differences Compatibility

transaction isol-

ation control

Database Options Database Options l Use Cluster and

Database/Cluster

Parameters

Server Options Server Options l Use Cluster and

Database/Cluster

Parameters

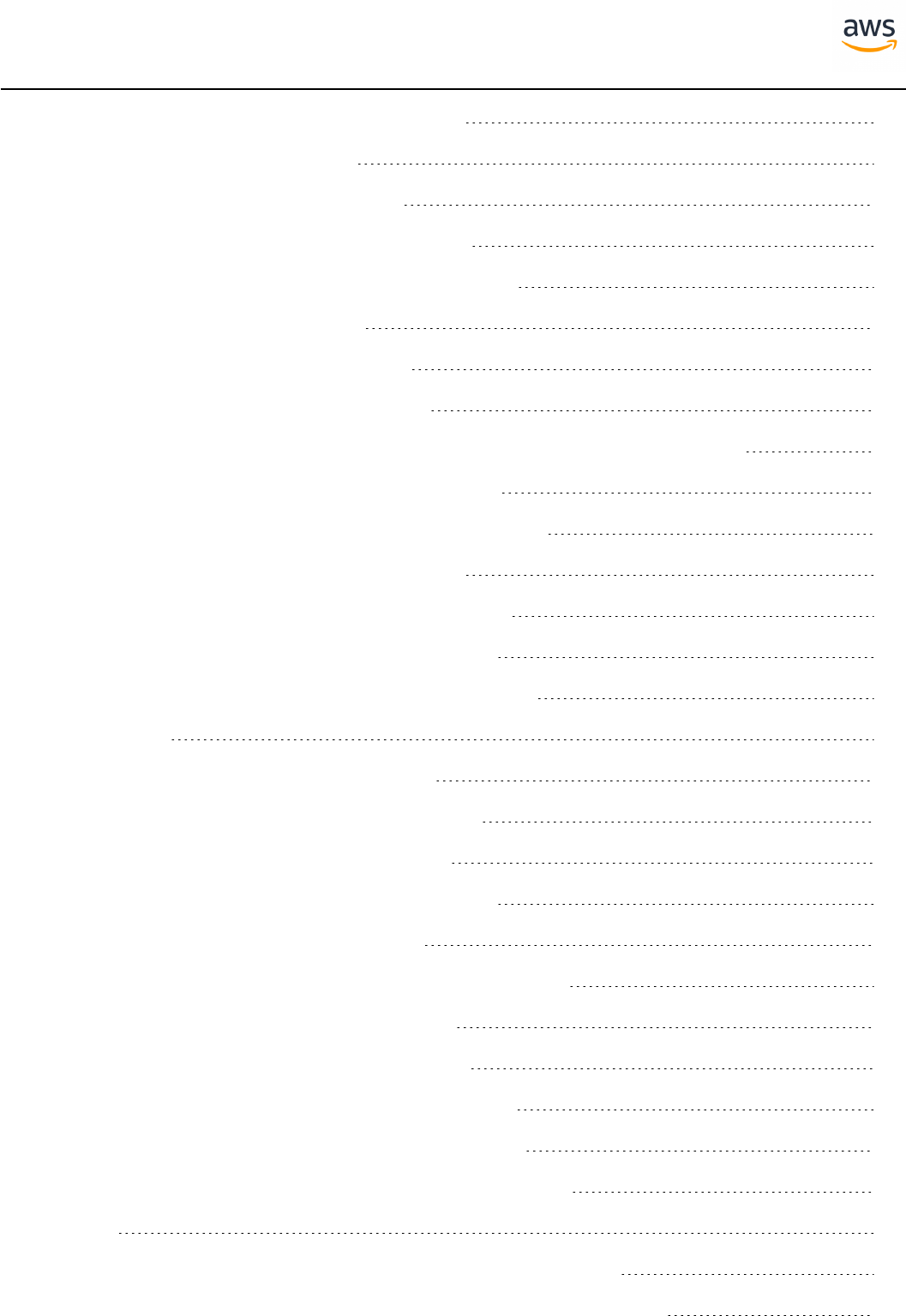

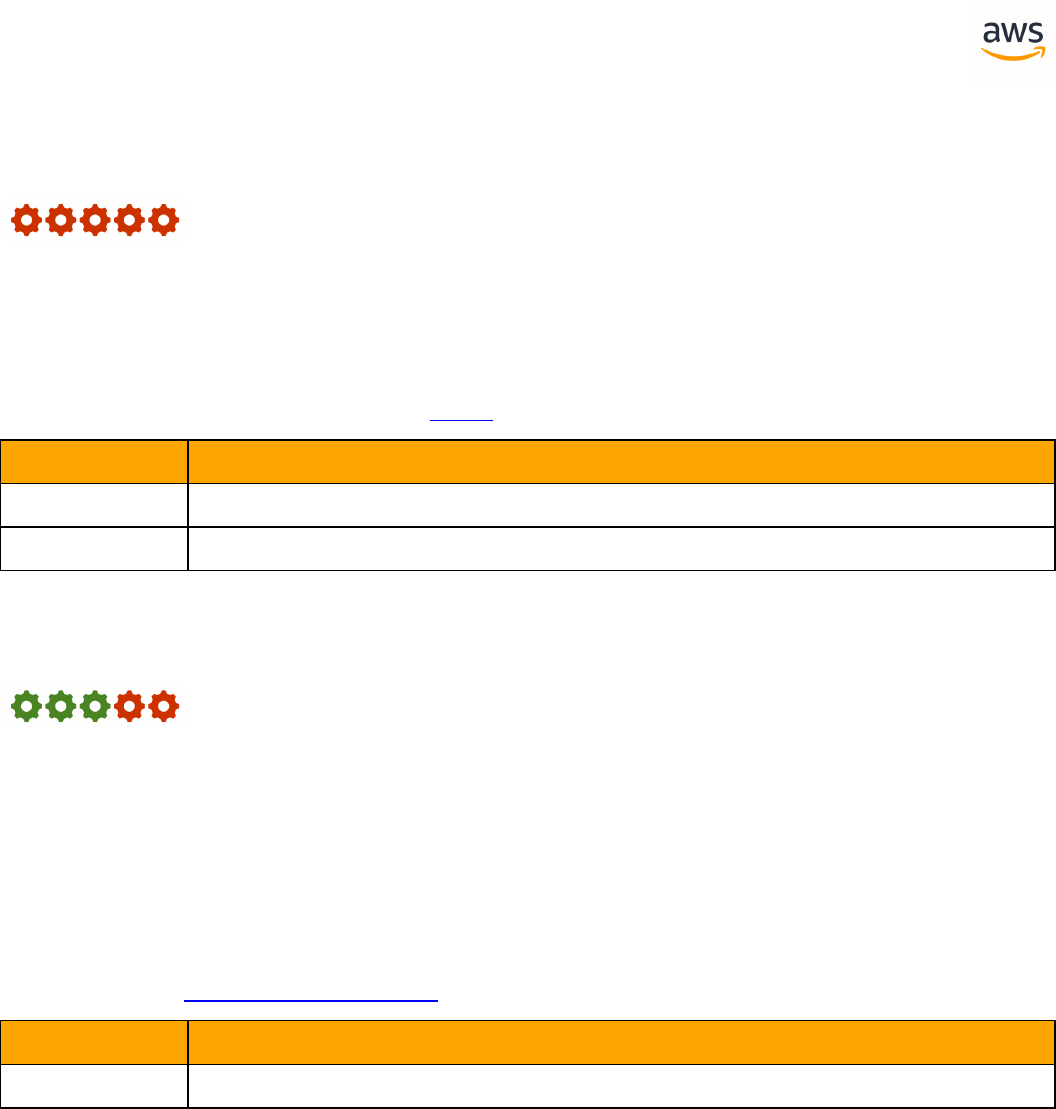

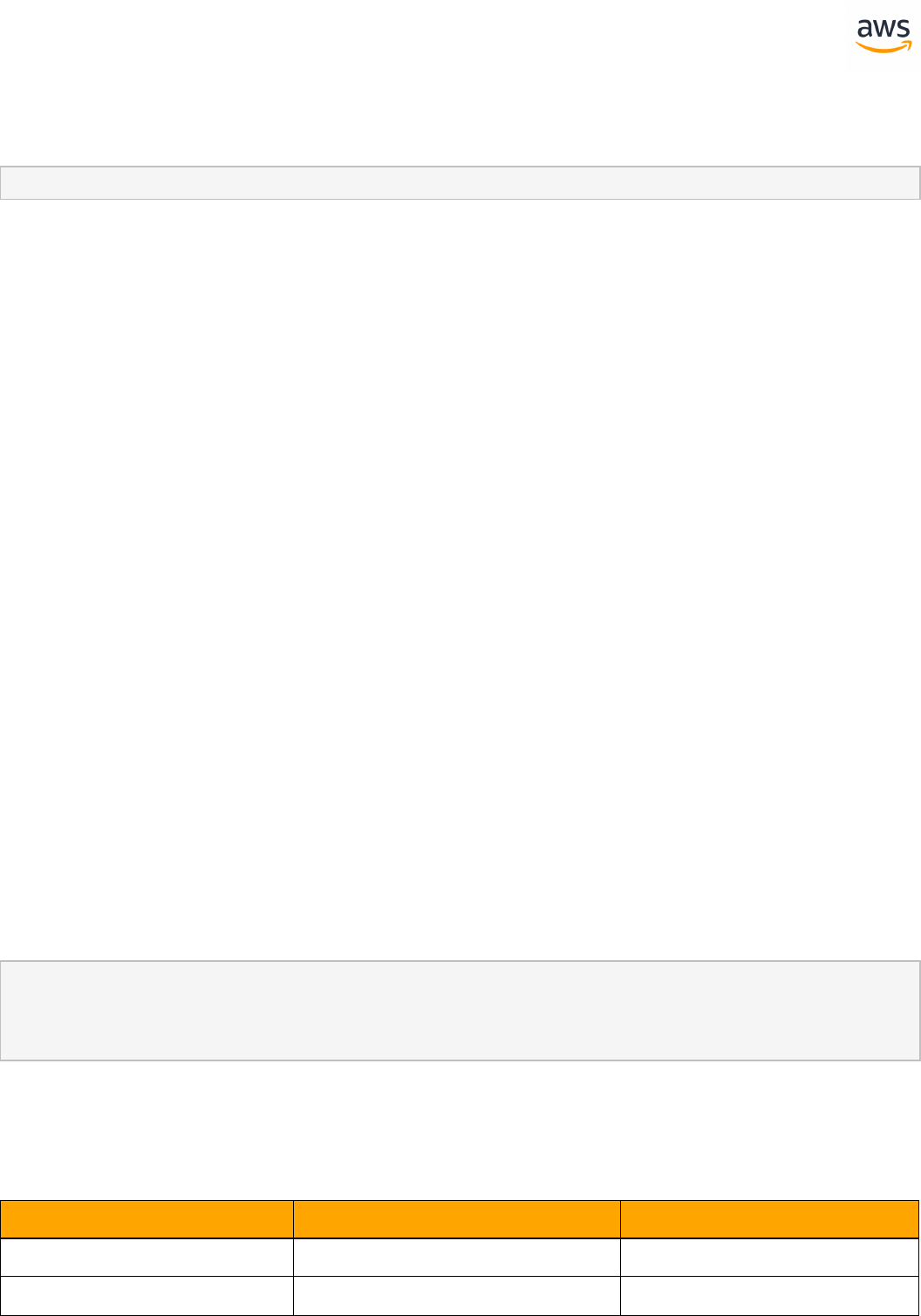

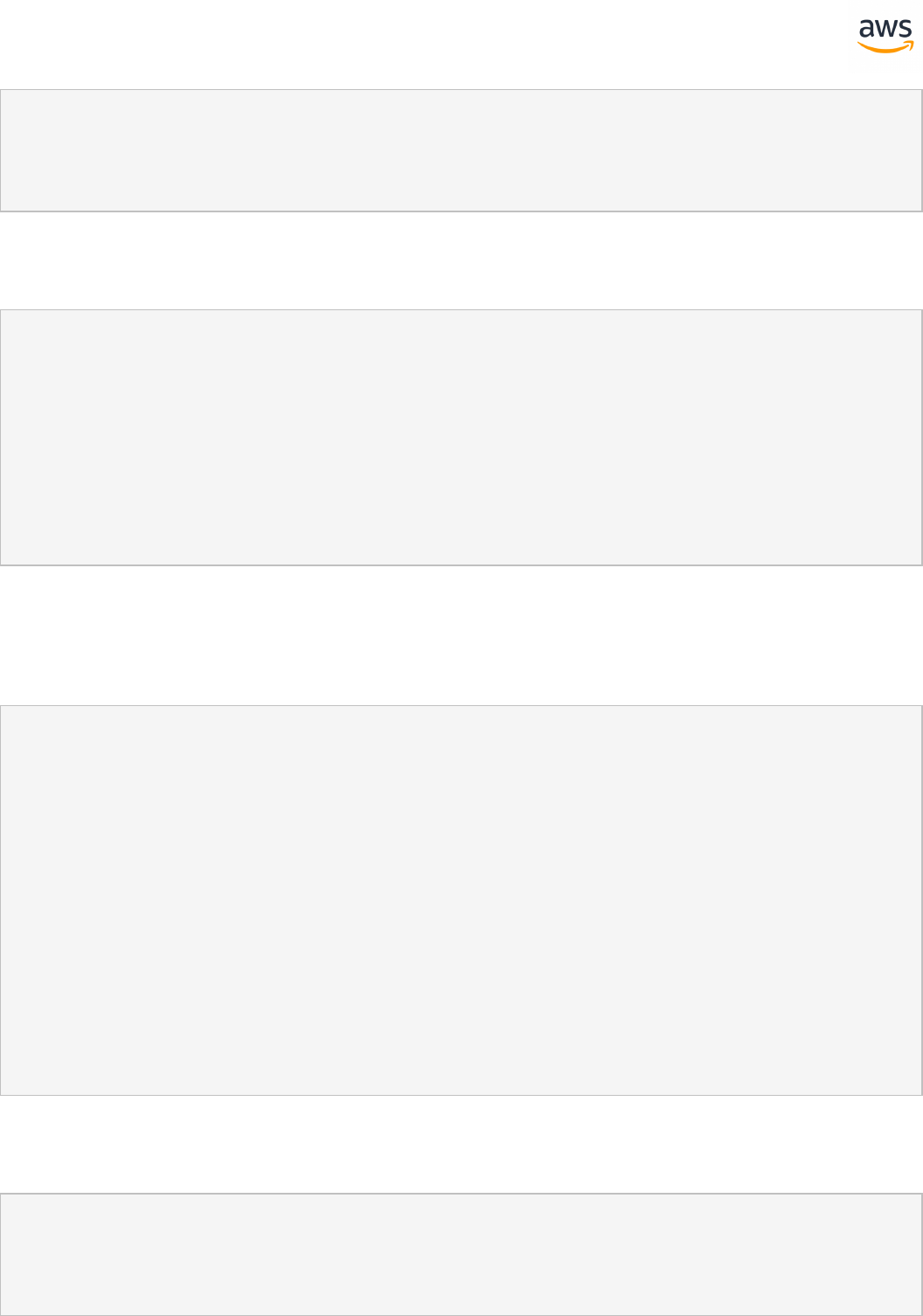

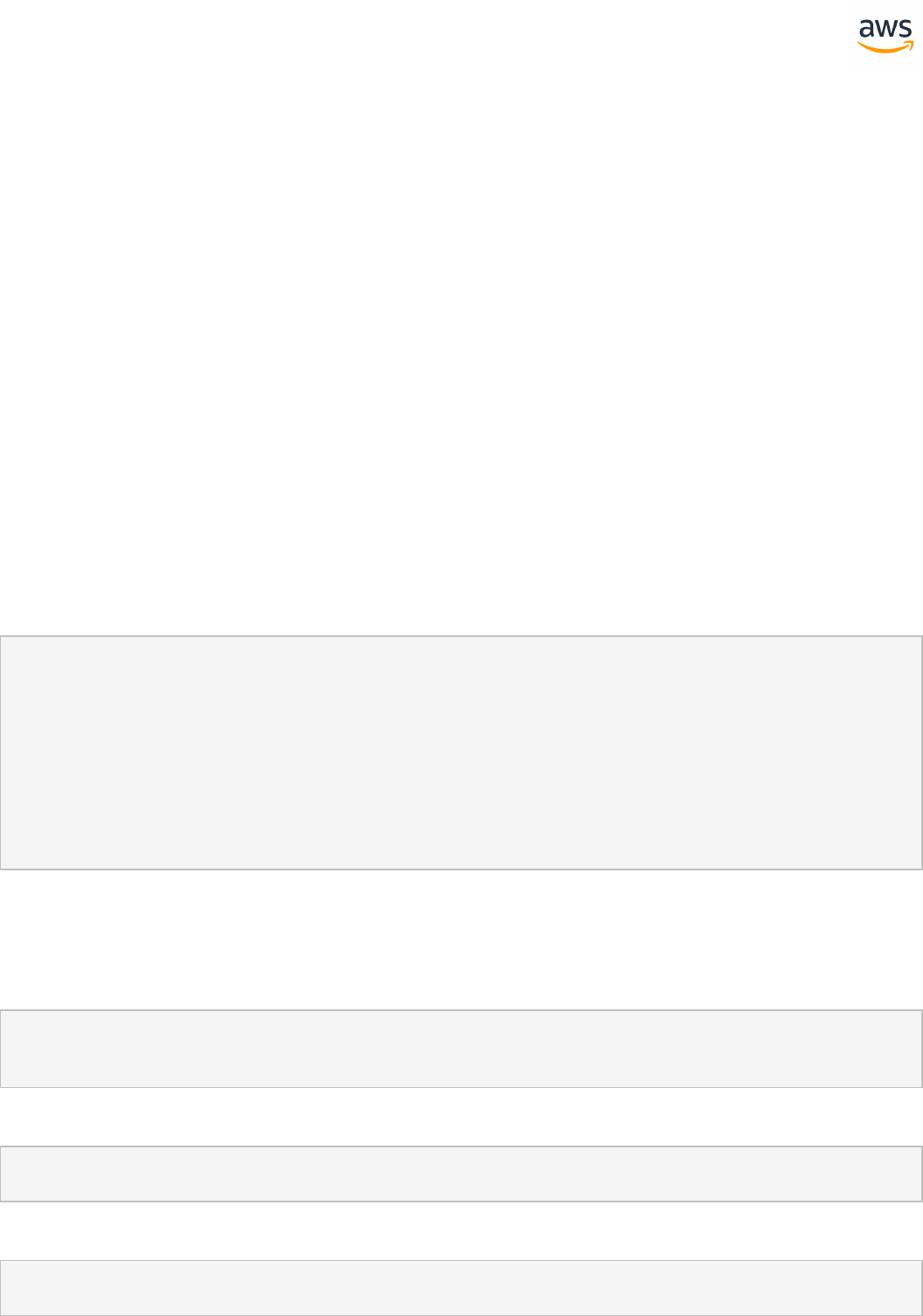

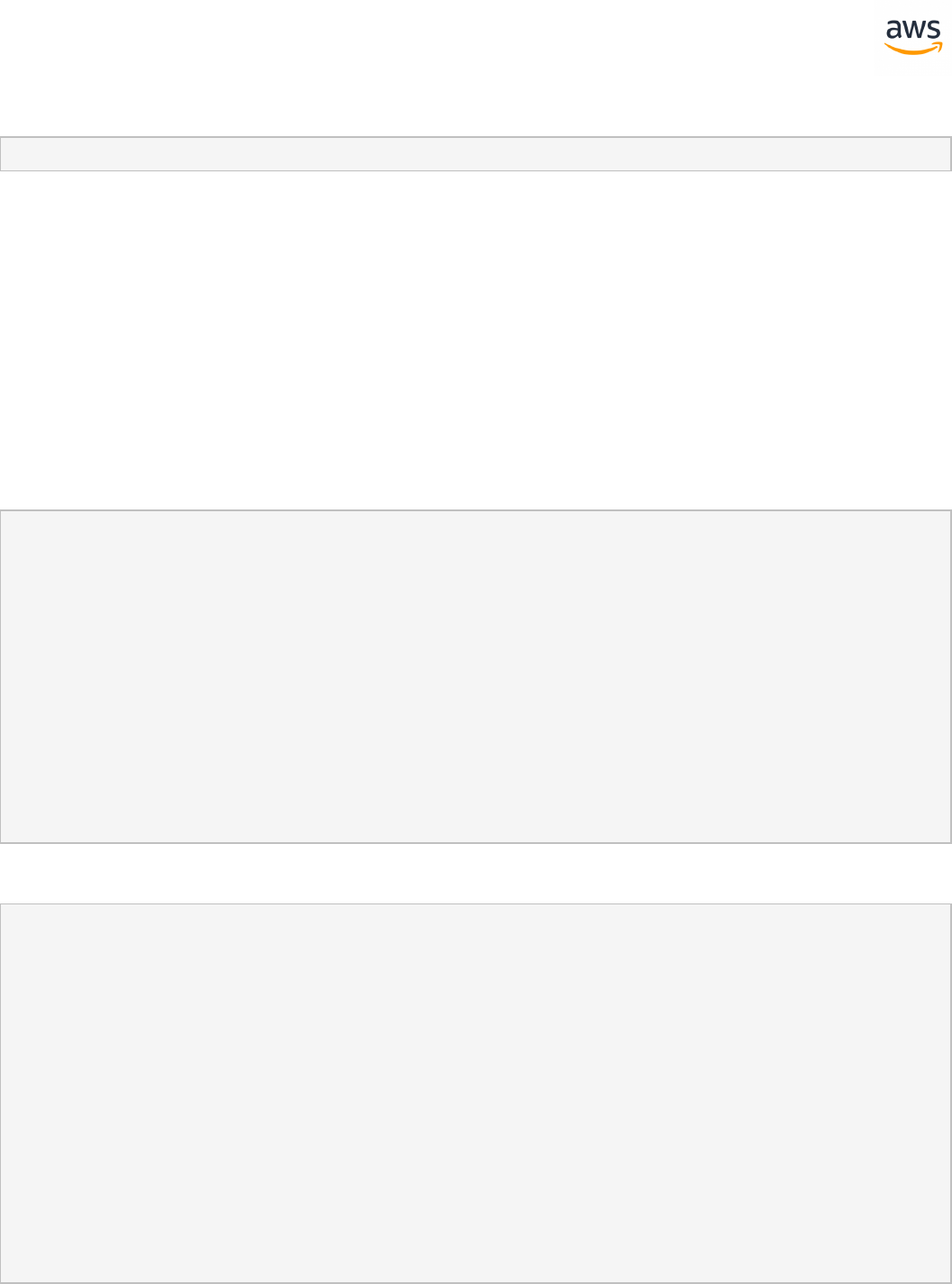

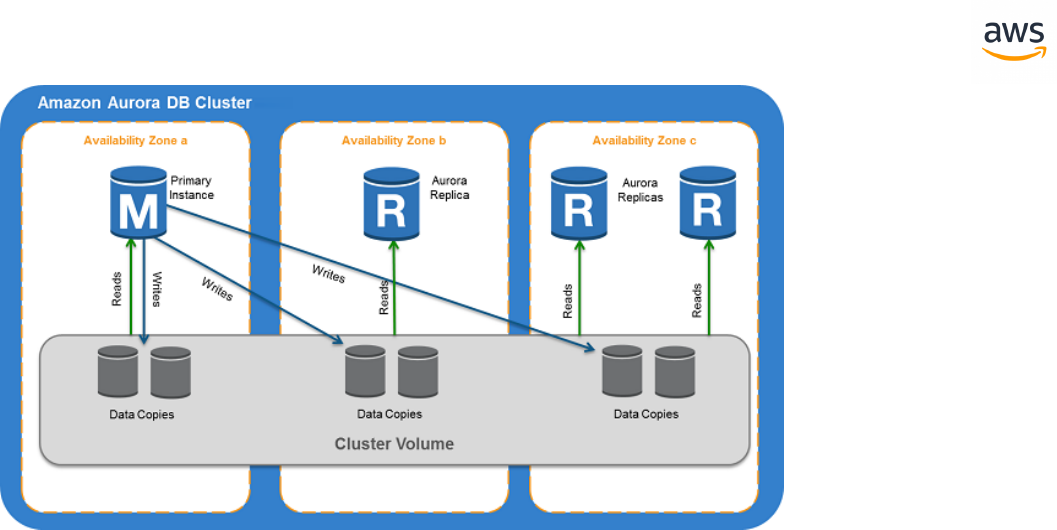

High Availability and Disaster Recovery (HADR)

SQLServer Aurora PostgreSQL Key Differences Compatibility

Backup and Restore Backup and Restore l Storage level

backup managed

by Amazon RDS

High Availability Essen-

tials

High Availability Essen-

tials

l Multi replica,

scale out solution

using Amazon Aur-

ora clusters and

Availability Zones

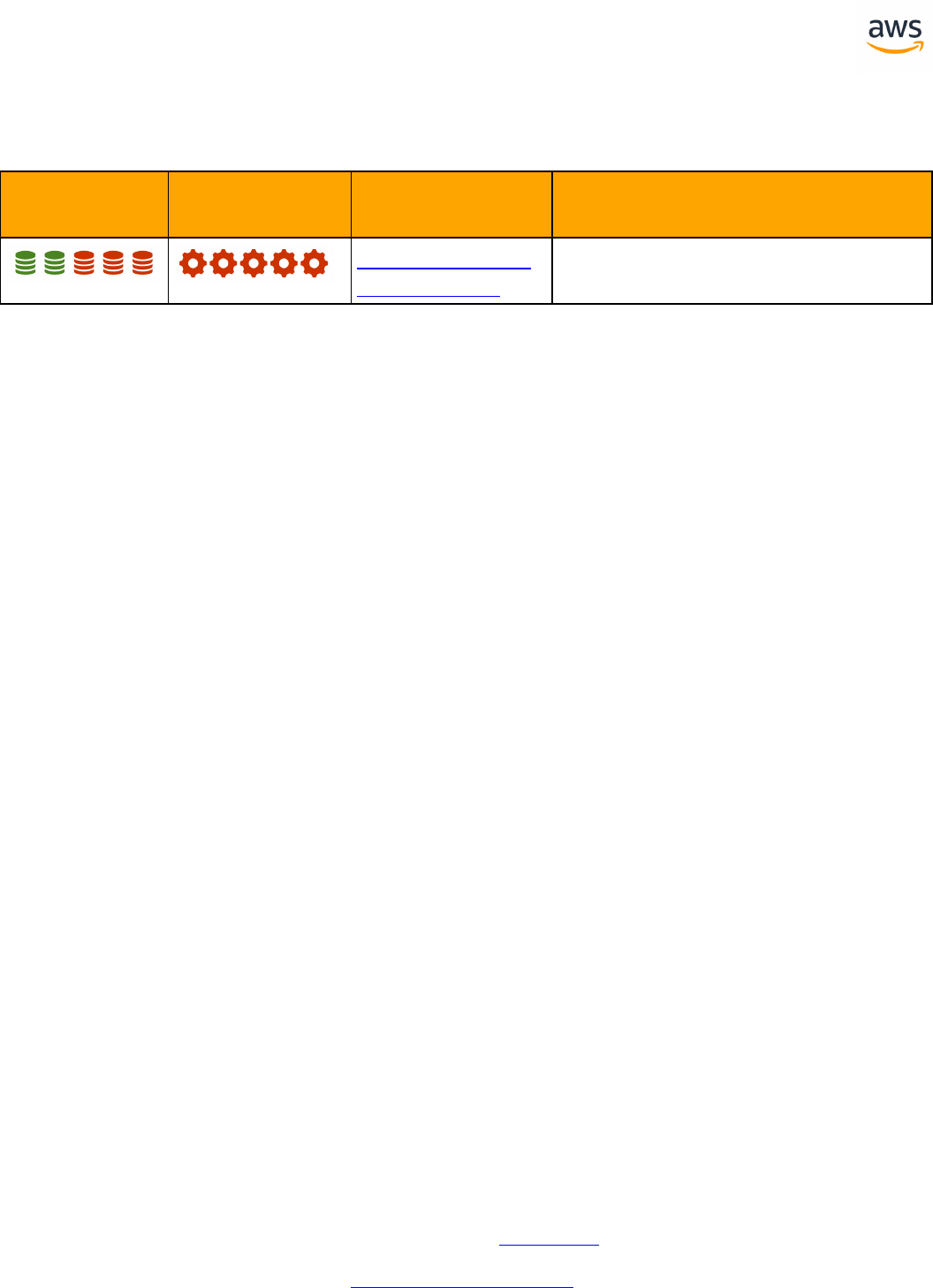

Indexes

SQLServer Aurora PostgreSQL Key Differences Compatibility

Clustered and Non

Clustered Indexes

Clustered and Non

Clustered Indexes

l CLUSTERED INDEX

is not supported

l There are few

missing options

Indexed Views Indexed Views l Different

paradigm and syn-

tax will require

application/drivers

rewrite.

Columnstore Columnstore l Aurora Post-

greSQL offers no

comparable fea-

ture

- 17 -

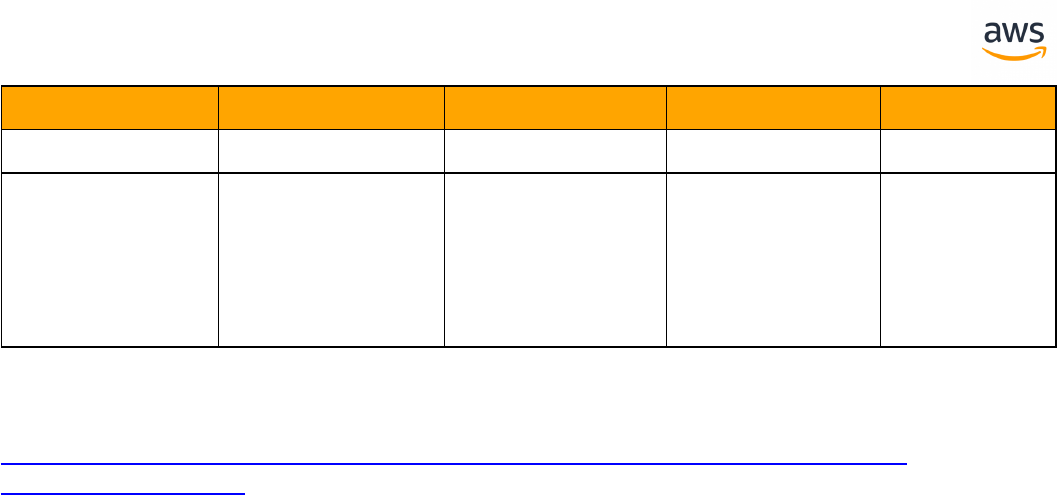

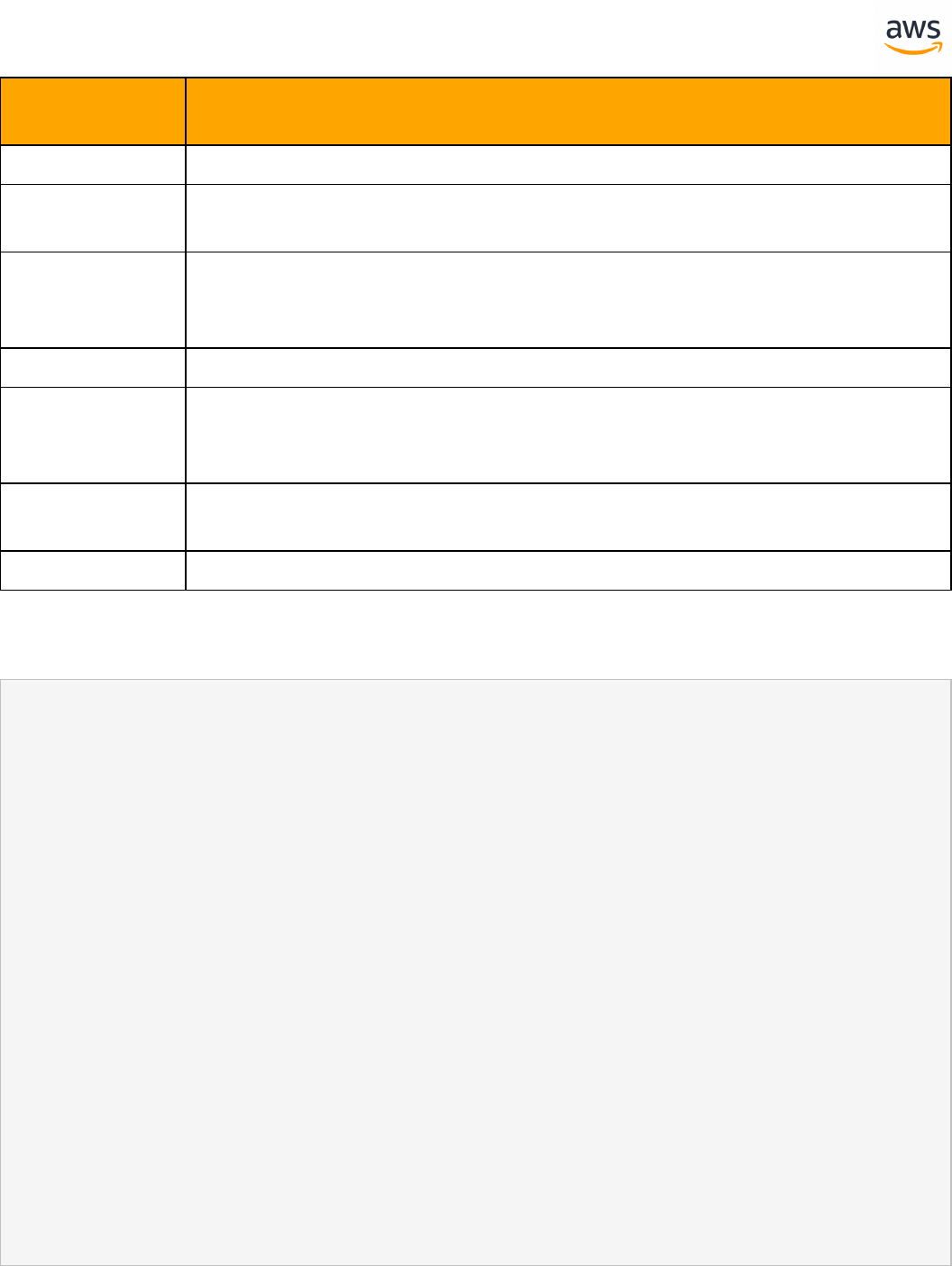

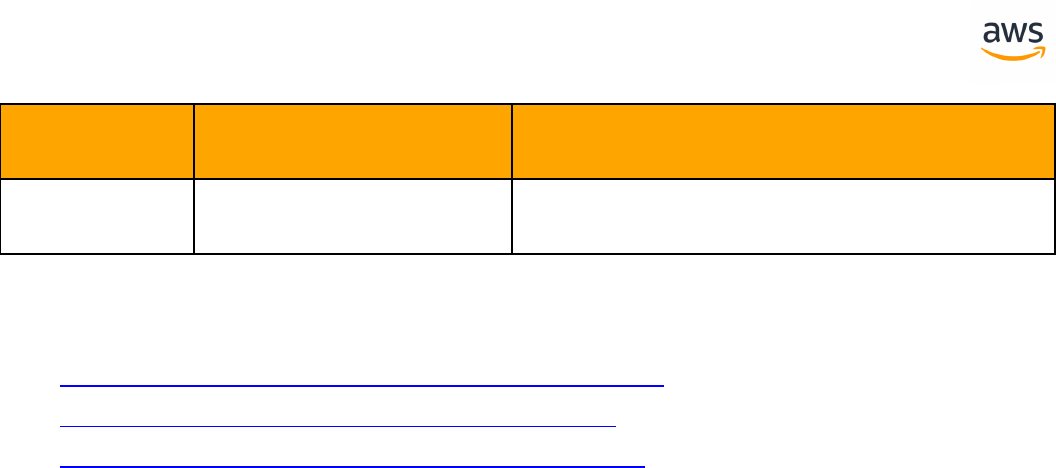

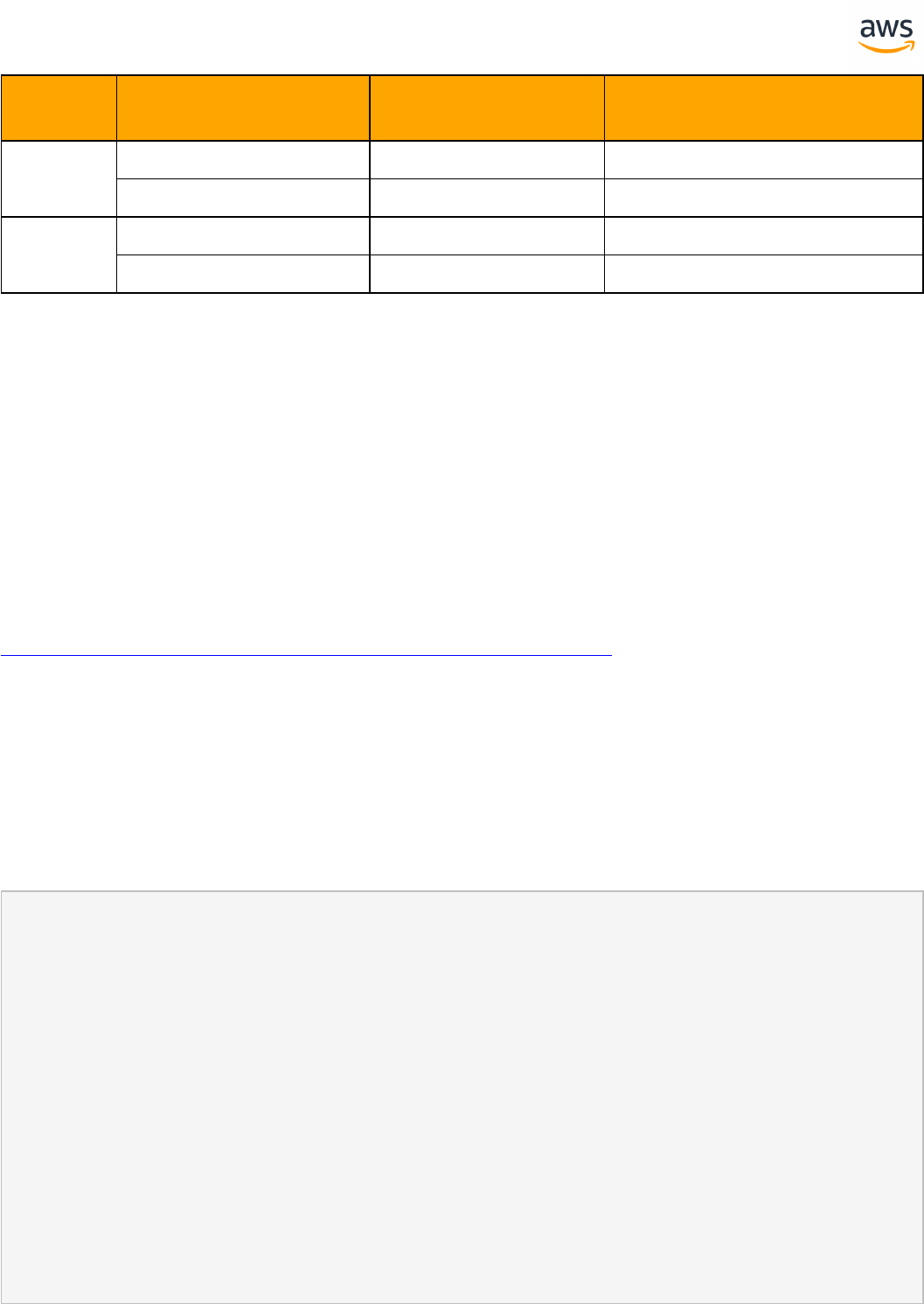

Management

SQLServer

Aurora Post-

greSQL

Key Differences Compatibility

SQL Server

Agent

SQL Agent l See Alerting and Maintenance

Plans

Alerting Alerting l Use Event Notifications Sub-

scription with Amazon Simple Noti-

fication Service (SNS)

ETL ETL l Use Amazon Glue for ETL

Database Mail Database Mail l Use Lambda Integration

Viewing Server

Logs

Viewing Server Logs l View logs from the Amazon RDS

console, the Amazon RDS API, the

AWS CLI, or the AWS SDKs

Maintenance

Plans

Maintenance Plans l Backups via the RDS services

l Table maintenance via SQL

Monitoring Monitoring l Use Amazon Cloud Watch service

Resource

Governor

Resource Governor l Distribute load/applications/users

across multiple instances

Linked Servers Linked Servers l Syntax and option differences, sim-

ilar functionality

Scripting &

PowerShell

Scripting & Power-

Shell

l Non-compatible tool sets and

scripting languages

l Use PostgreSQL pgAdmin, Amazon

RDS API, AWS Management Con-

sole, and Amazon CLI

Import and

Export

Import and Export l Non compatible tool

Performance Tuning

SQLServer Aurora PostgreSQL Key Differences Compatibility

Execution

Plans

Execution Plans l Syntax differences

l Completely different optimizer

with different operators and rules

Query Hints

and Plan

Guides

Query Hints and

Plan Guides

l Very limited set of hints - Index

hints and optimizer hints as com-

ments

- 18 -

SQLServer Aurora PostgreSQL Key Differences Compatibility

l Syntax differences

Managing Stat-

istics

Managing Statistics l Syntax and option differences,

similar functionality

Physical Storage

SQLServer Aurora PostgreSQL Key Differences Compatibility

Partitioning Partitioning l Does not support

LEFT partition or

foreign keys ref-

erencing par-

titioned tables

Security

SQLServer Aurora PostgreSQL Key Differences Compatibility

Column Encryption Column Encryption l Syntax and option

differences, sim-

ilar functionality

Data Control Language Data Control Language l Similar syntax and

similar func-

tionality

Transparent Data

Encryption

Transparent Data

Encryption

l Storage level

encryption man-

aged by Amazon

RDS

Users and Roles Users and Roles l Syntax and option

differences, sim-

ilar functionality

l There are no

users - only roles

- 19 -

AWS Schema and Data Migration Tools

- 20 -

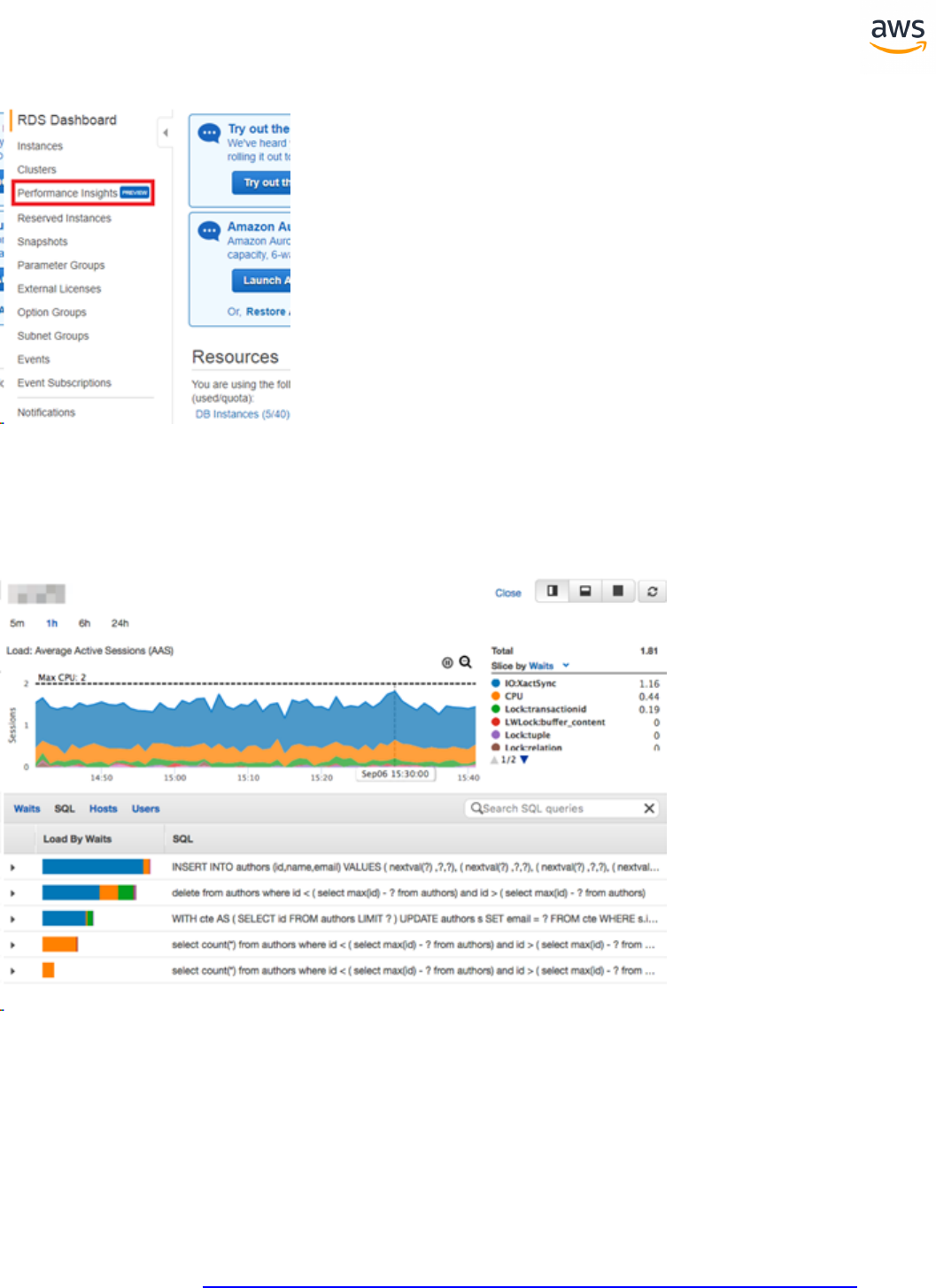

AWS Schema Conversion Tool (SCT)

Overview

The AWS Schema Conversion Tool (SCT) is a stand alone tool that connects to source and target data-

bases, scans the source database schema objects (tables, views, indexes, procedures, etc.), and con-

verts them to target database objects.

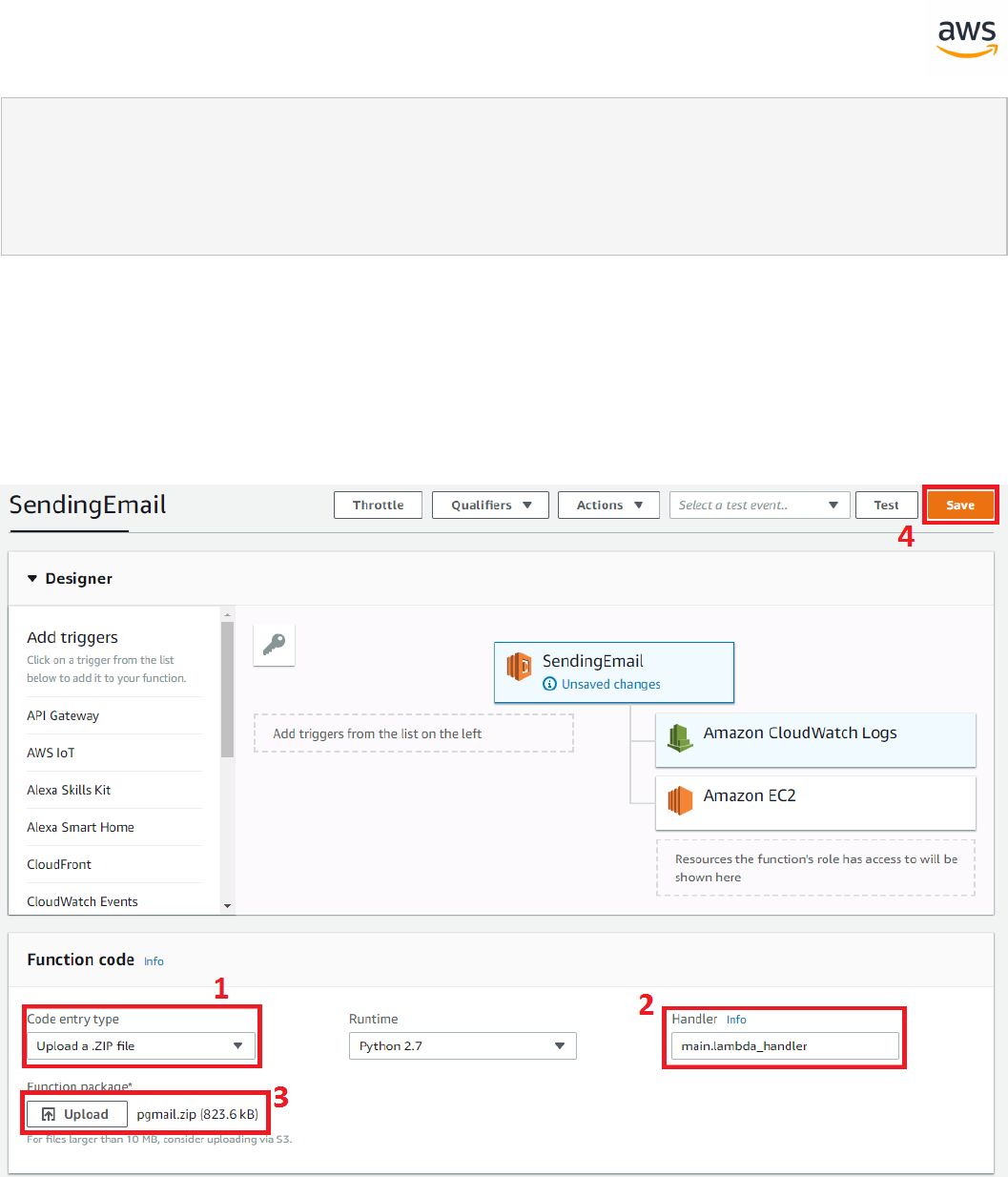

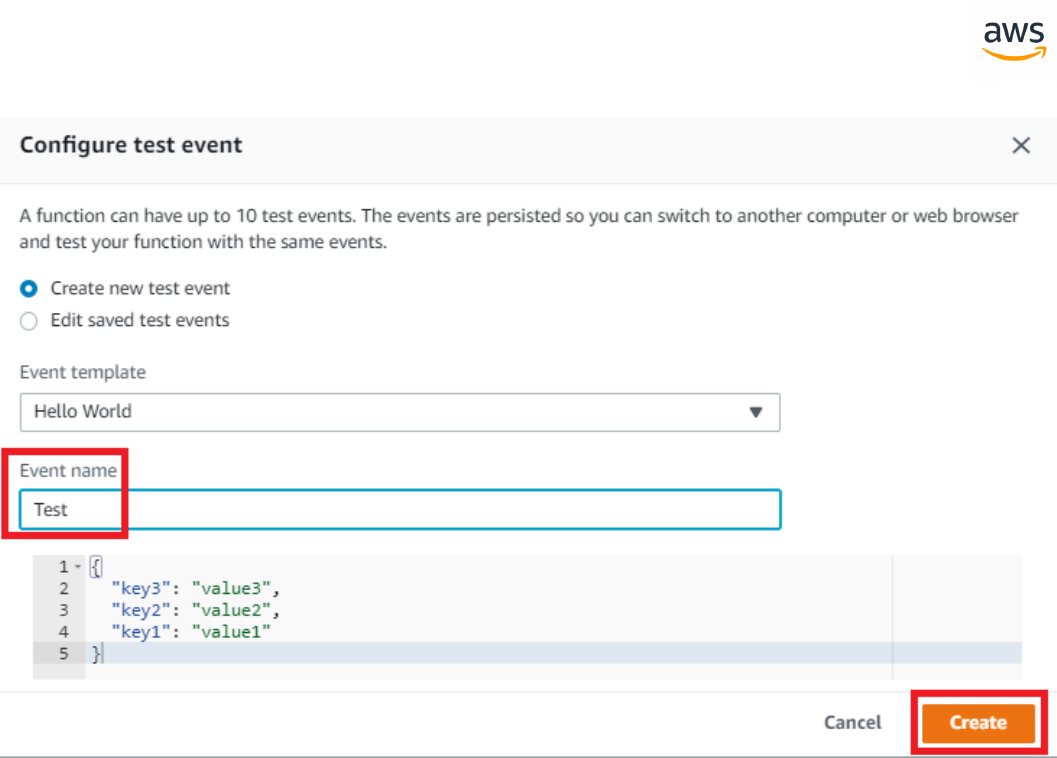

This section provides a step-by-step process for using AWSSCT to migrate an SQL Server database to

an Aurora PostgreSQL database cluster. Since AWS SCT can automatically migrate most of the data-

base objects, it greatly reduces manual effort.

It is recommended to start every migration with the process outlined in this section and then use the

rest of the Playbook to further explore manual solutions for objects that could not be migrated auto-

matically. Even though AWS SCT can automatically migrate most schema objects, it is highly recom-

mended that you allocate sufficient resources to perform adequate testing, and performance tuning

due to the differences between the SQL Server engine and the Aurora PostgreSQL engine. For more

information, see http://docs.aws.amazon.com/SchemaConversionTool/latest/userguide/Welcome.html

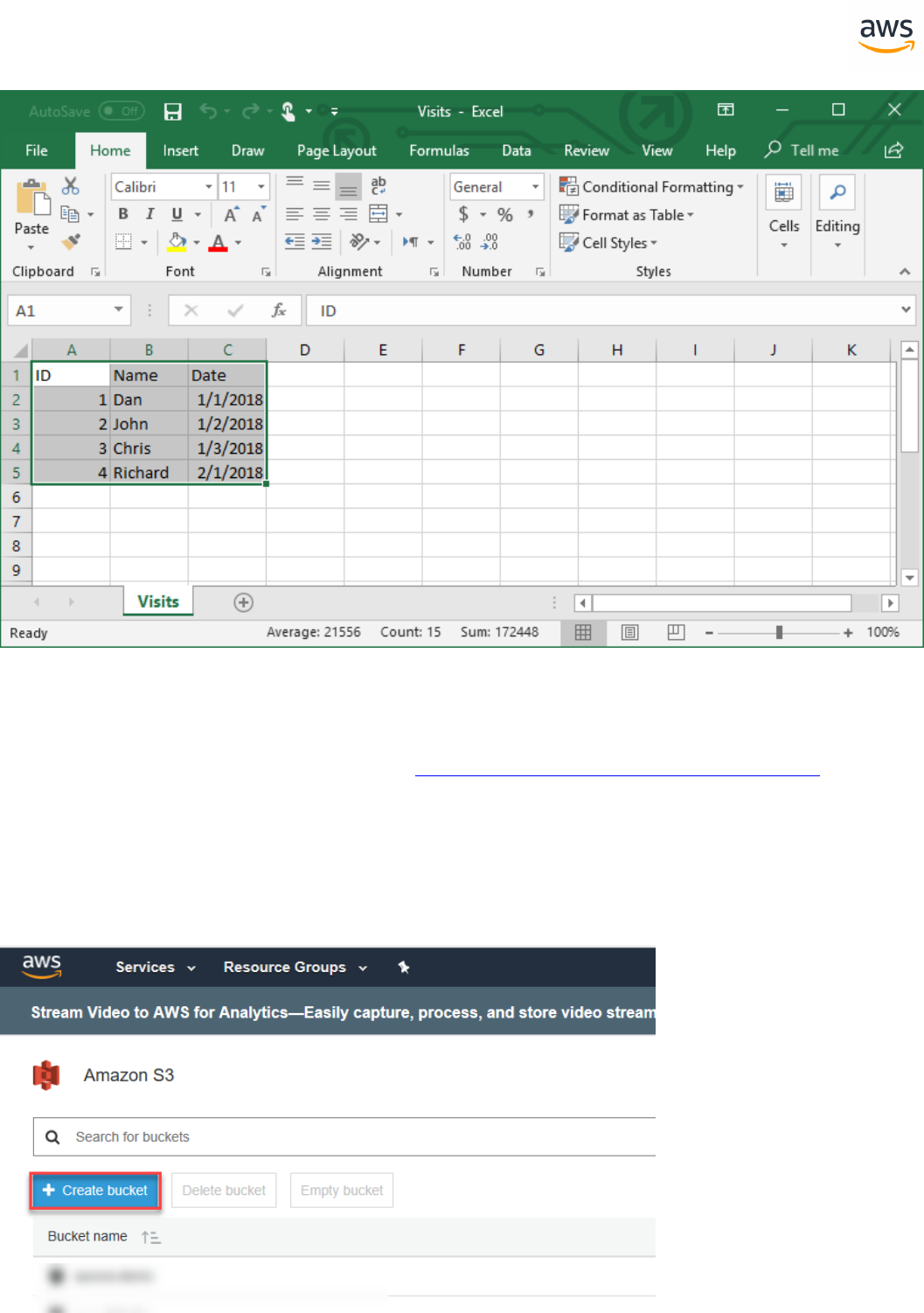

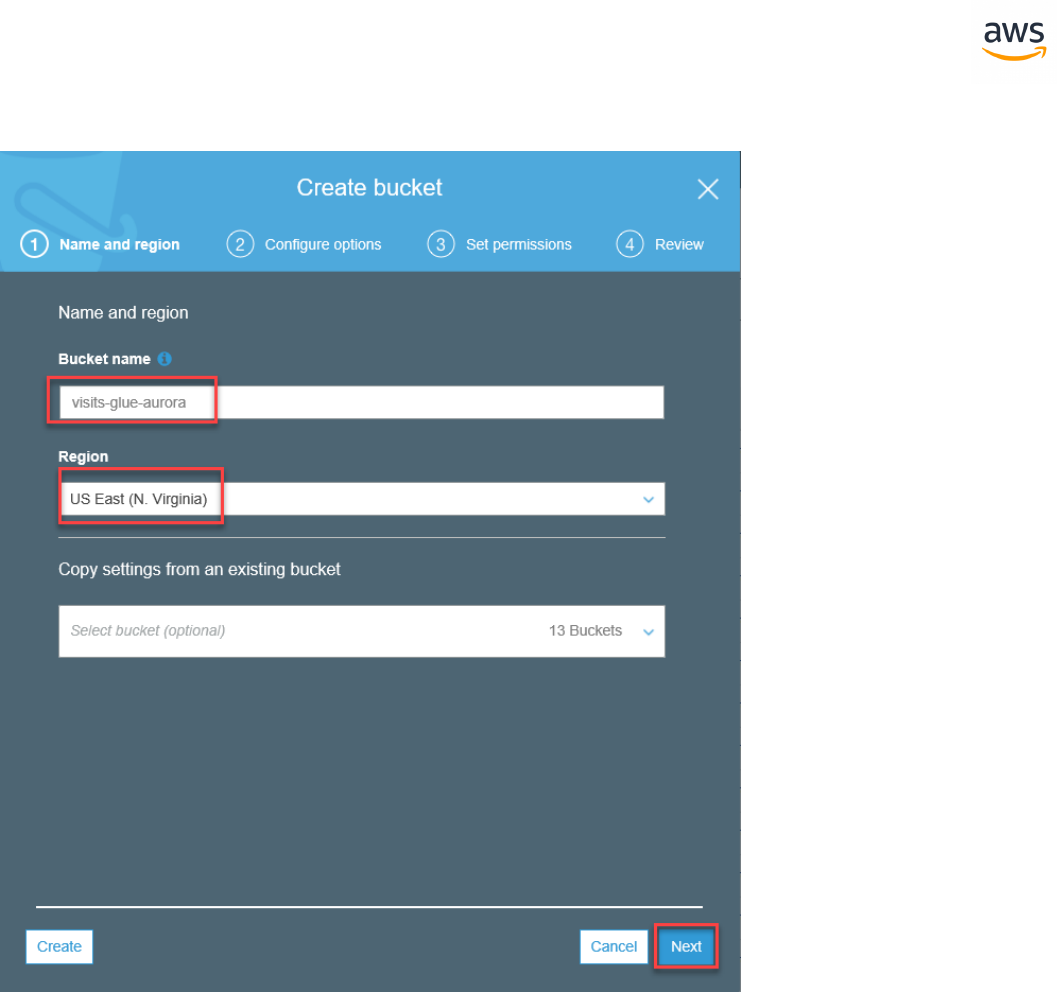

Migrating a Database

Note: This walkthrough uses the AWS DMS Sample Database. You can download it from

https://github.com/aws-samples/aws-database-migration-samples.

Download the Software and Drivers

1. Download and install the AWS SCT from https://-

docs.aws.amazon.com/SchemaConversionTool/latest/userguide/CHAP_Installing.html.

2. Download the SQL Server driver from https://www.microsoft.com/en-us/-

download/details.aspx?displaylang=en&id=11774

3. Download the PostgreSQL driver from https://jdbc.postgresql.org/

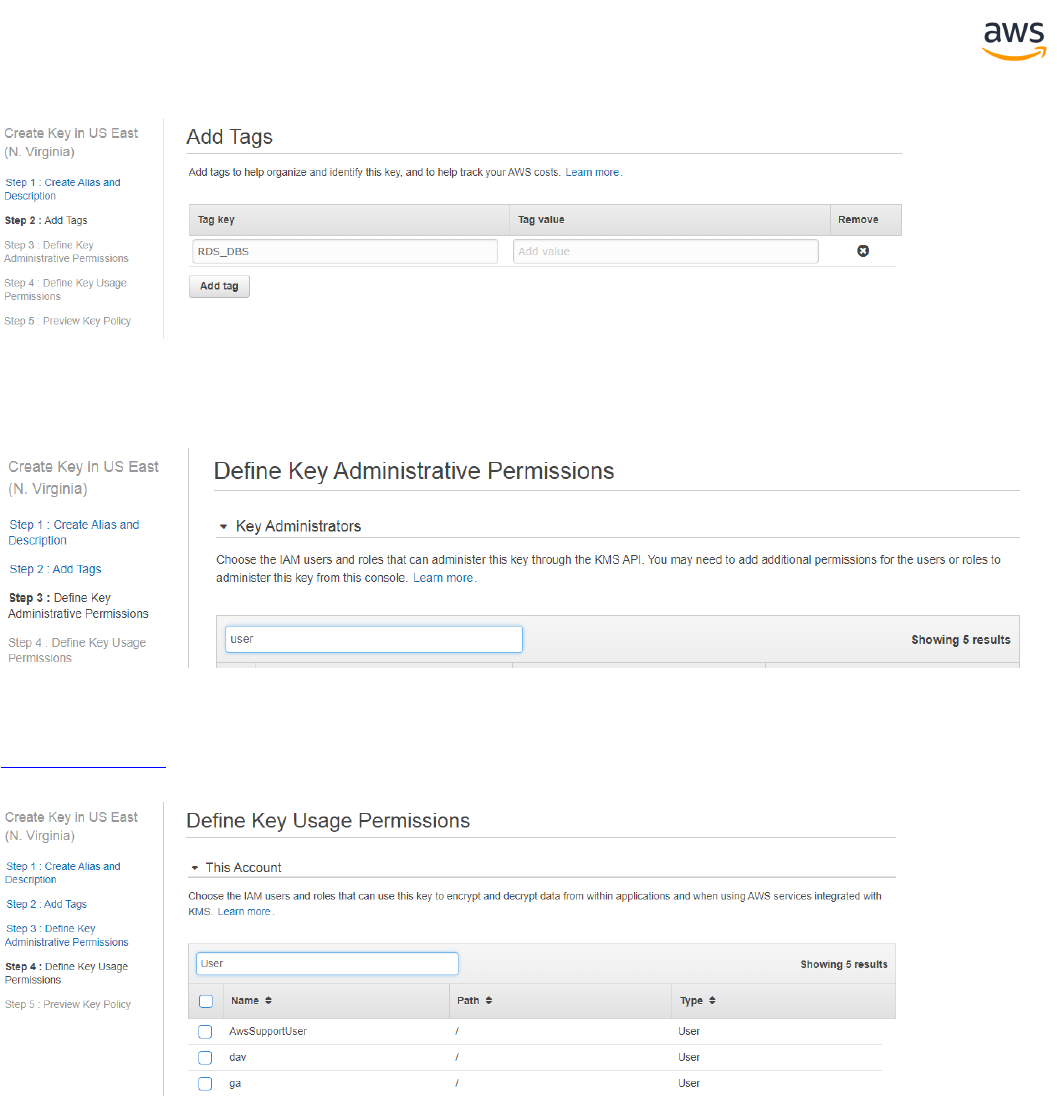

Configure SCT

Launch SCT. Click the Settings button and select Global Settings.

- 21 -

On the left navigation bar, click Drivers. Enter the paths for the SQL Server and PostgreSQL drivers

downloaded in the first step. Click Apply and then OK.

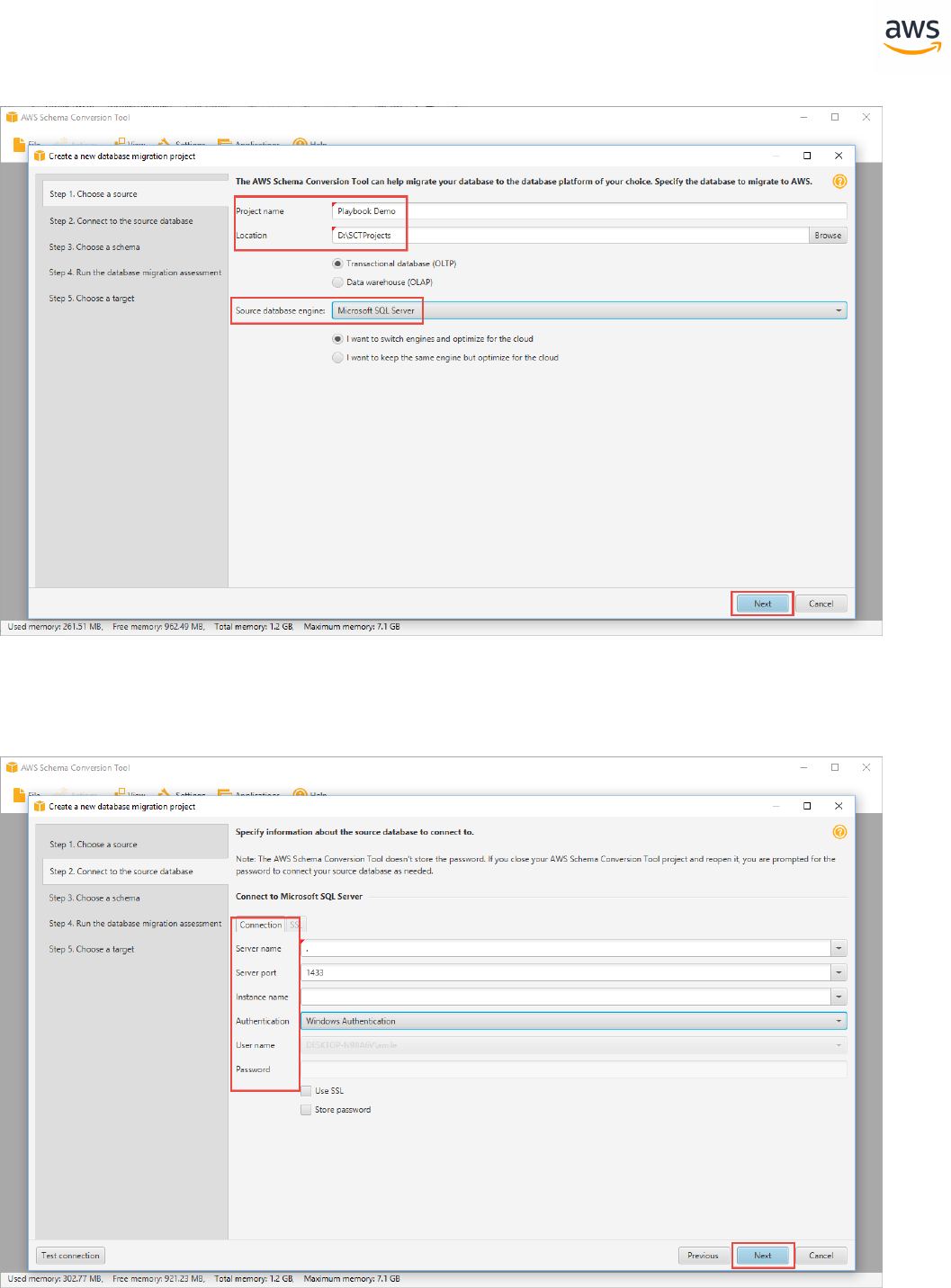

Create a New Migration Project

Click File > New project wizard. Alternatively, use the keyboard shortcut <Ctrl+W>.

Enter a project name and select a location for the project files. Click Next.

- 22 -

Enter connection details for the source SQL Server database and click Test Connection to verify. Click

Next.

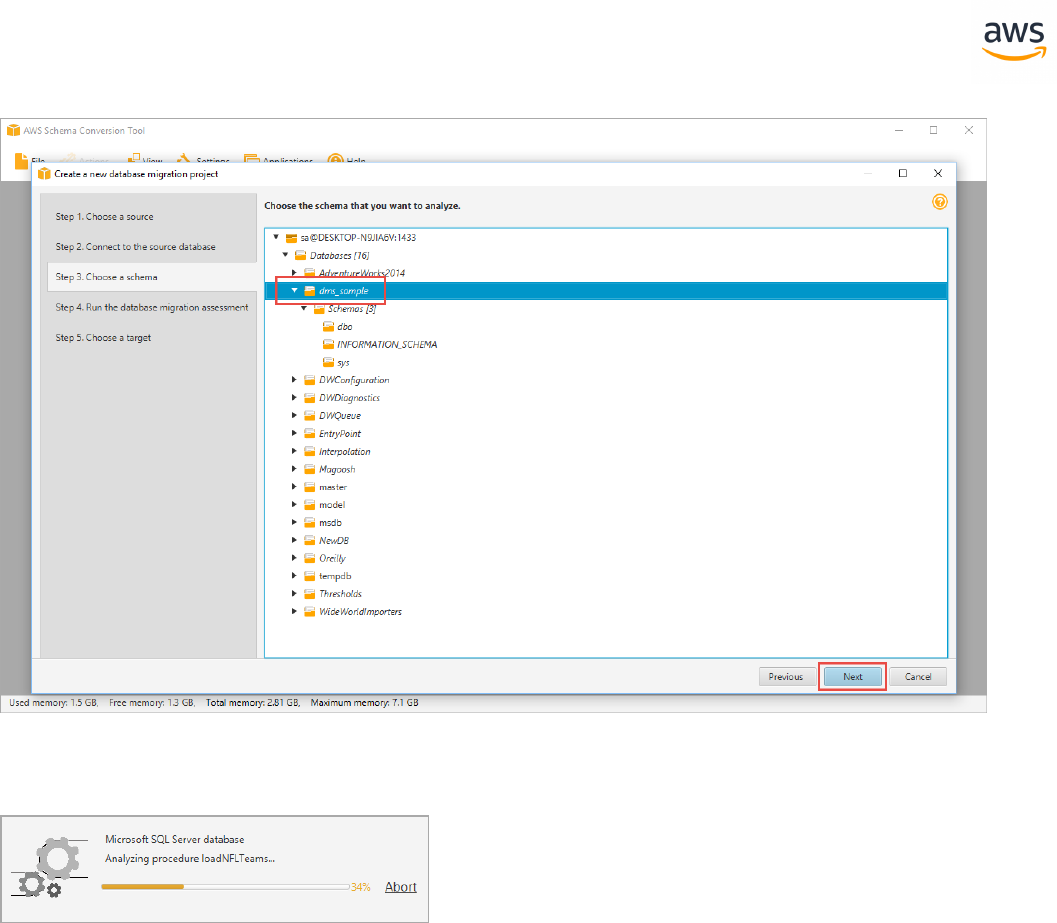

Select the schema or database to migrate and click Next.

- 23 -

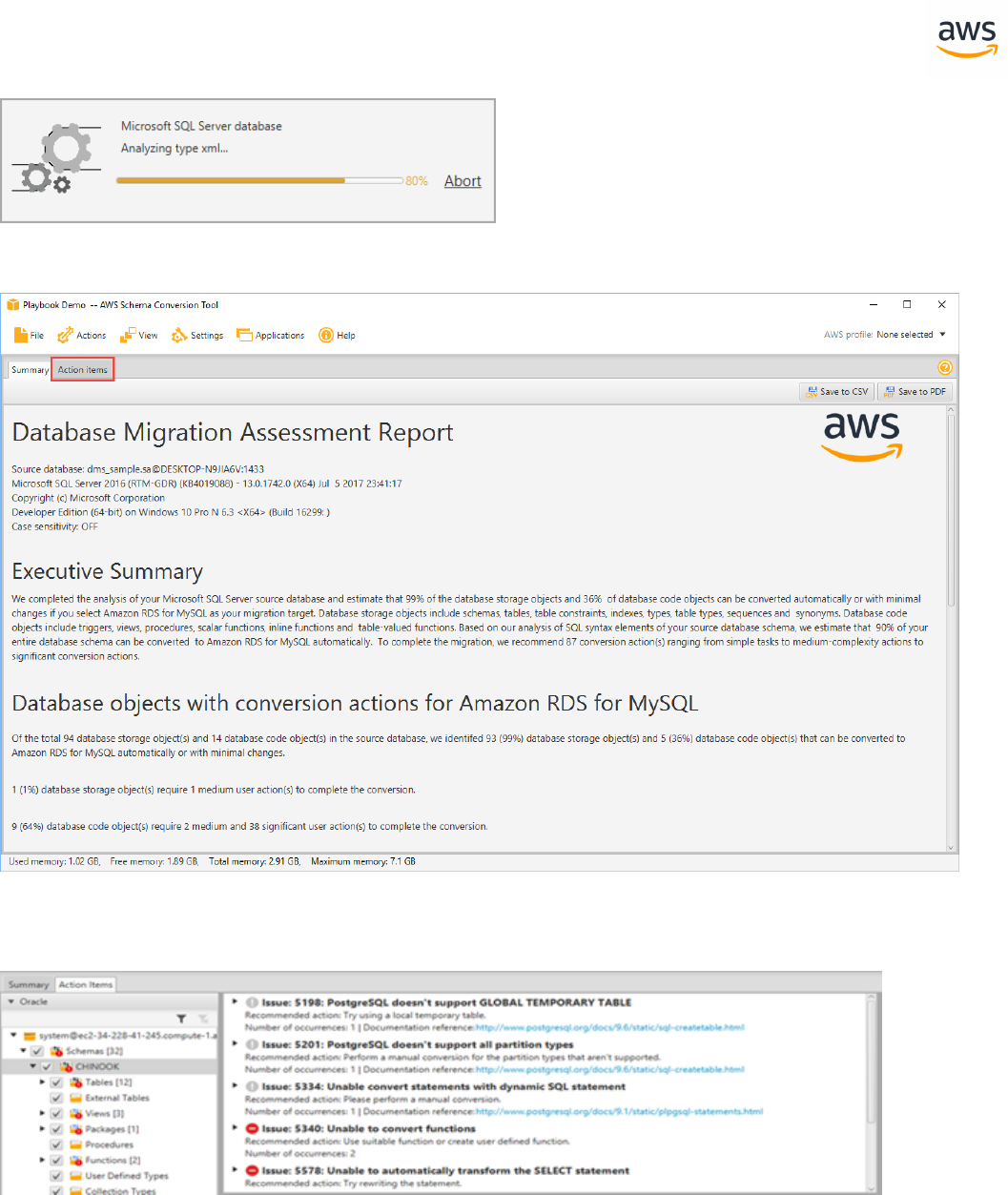

The progress bar displays the objects being analyzed.

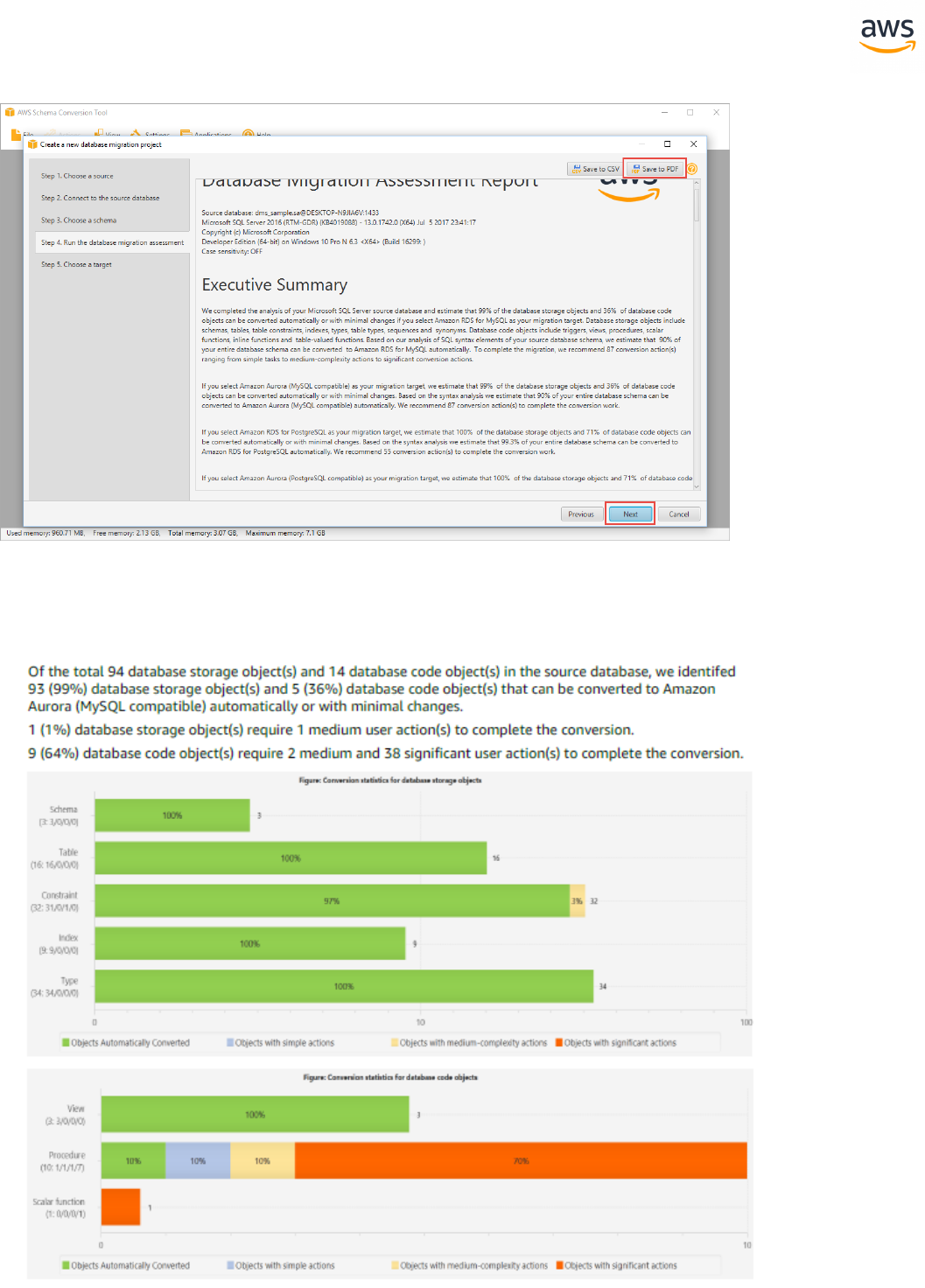

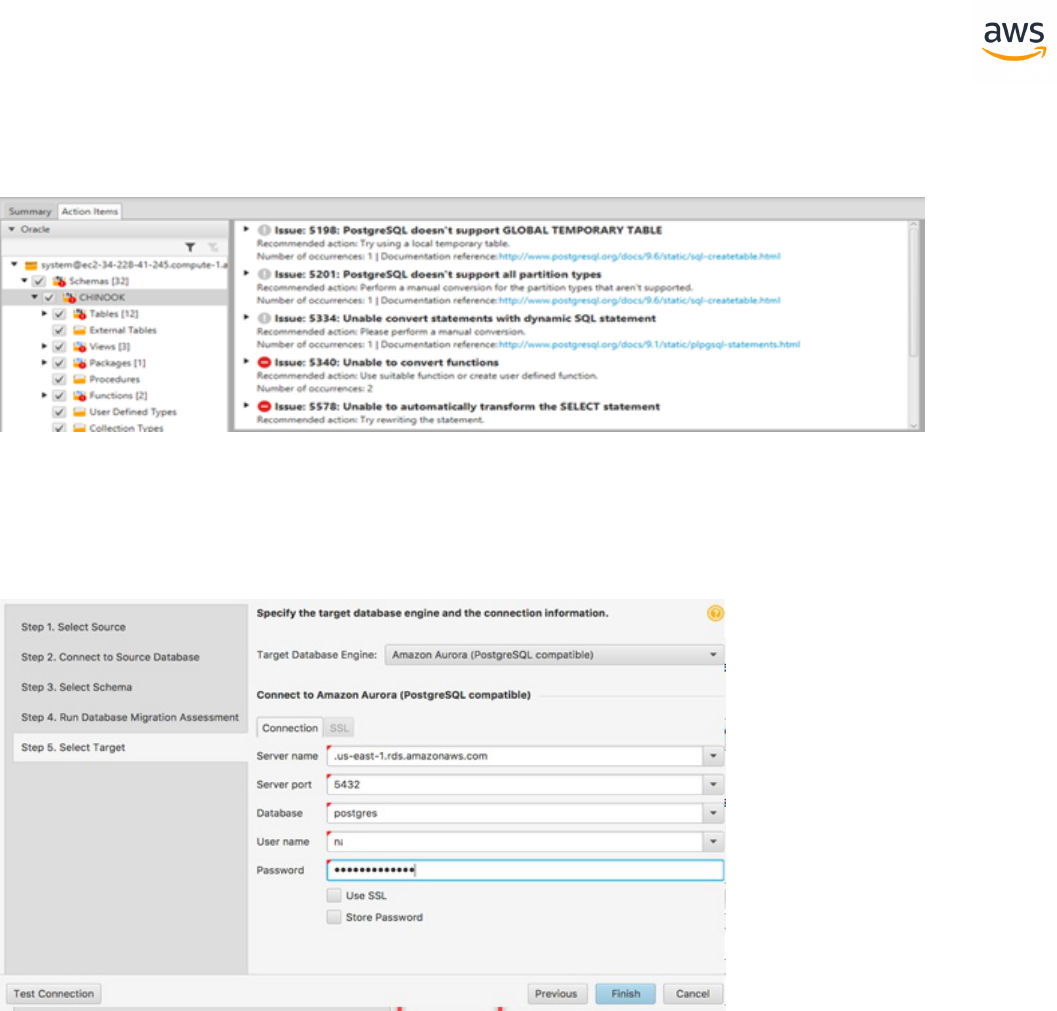

The Database Migration Assessment Report is displayed when the analysis completes. Read the Exec-

utive summary and other sections. Note that the information on the screen is only partial. To read the

full report, including details of the individual issues, click Save to PDF and open the PDFdocument.

- 24 -

Scroll down to the section Database objects with conversion actions for Amazon Aurora (Post-

greSQL compatible).

- 25 -

Scroll further down to the section Detailed recommendations for Amazon Aurora (PostgreSQL com-

patible) migrations.

Return to AWS SCT and click Next. Enter the connection details for the target Aurora PostgreSQL data-

base and click Finish.

Note: The changes have not yet been saved to the target.

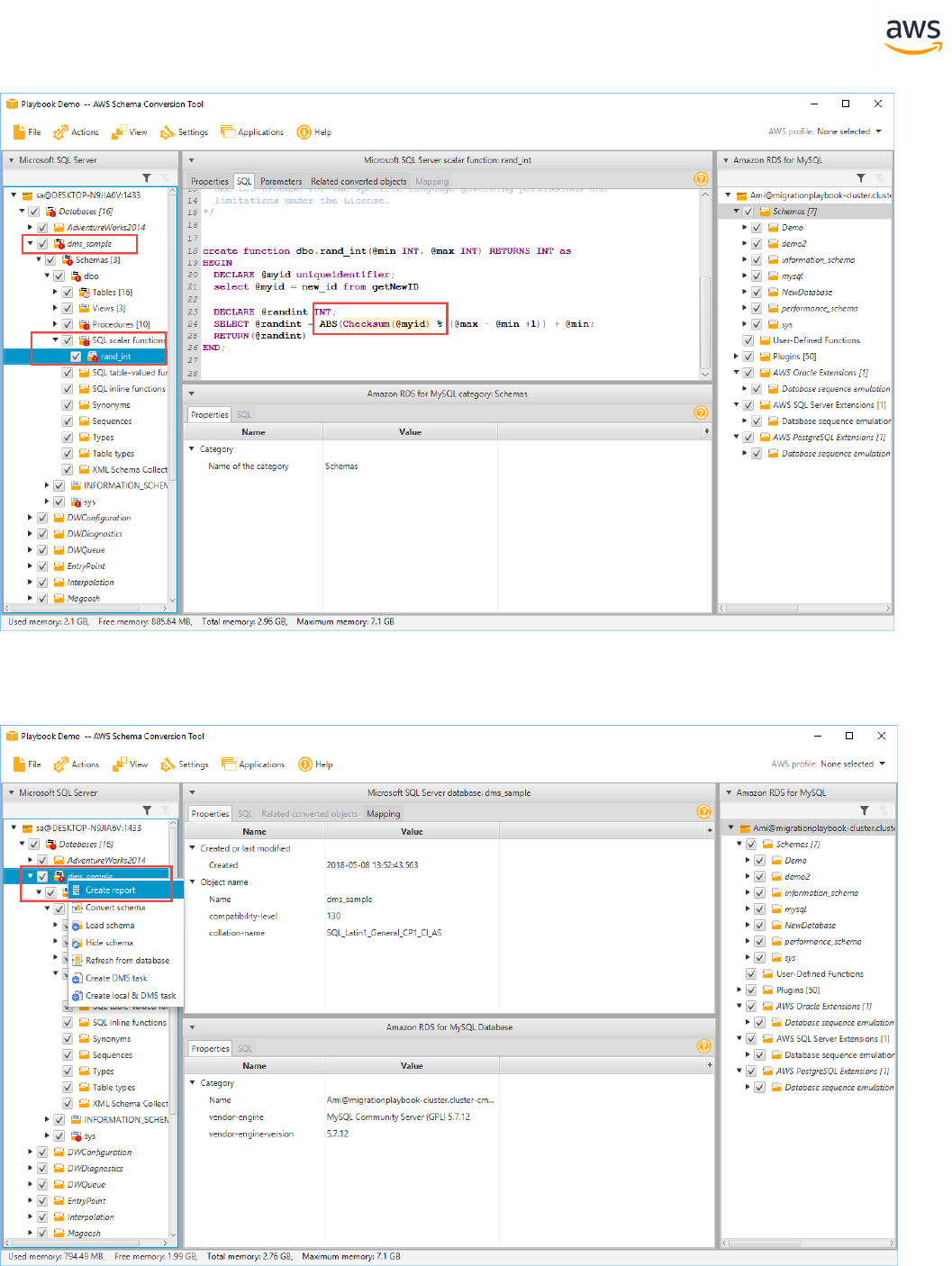

When the connection is complete, AWS SCTdisplays the main window. In this interface, you can

explore the individual issues and recommendations discovered by AWSSCT.

For example, expand sample database > dbo default schema > SQL scalar functions > rand_int.

This issue has a red marker indicating it could not be automatically converted and requires a manual

code change (issue 811 above). Select the object to highlight the incompatible code section.

- 26 -

Right-click the database and then click Create Report to create a report tailored for the target data-

base type. It can be viewed in AWSSCT.

The progress bar updates while the report is generated.

- 27 -

The executive summary page displays. Click the Action Items tab.

In this window, you can investigate each issue in detail and view the suggested course of action. For

each issue, drill down to view all instances of that issue.

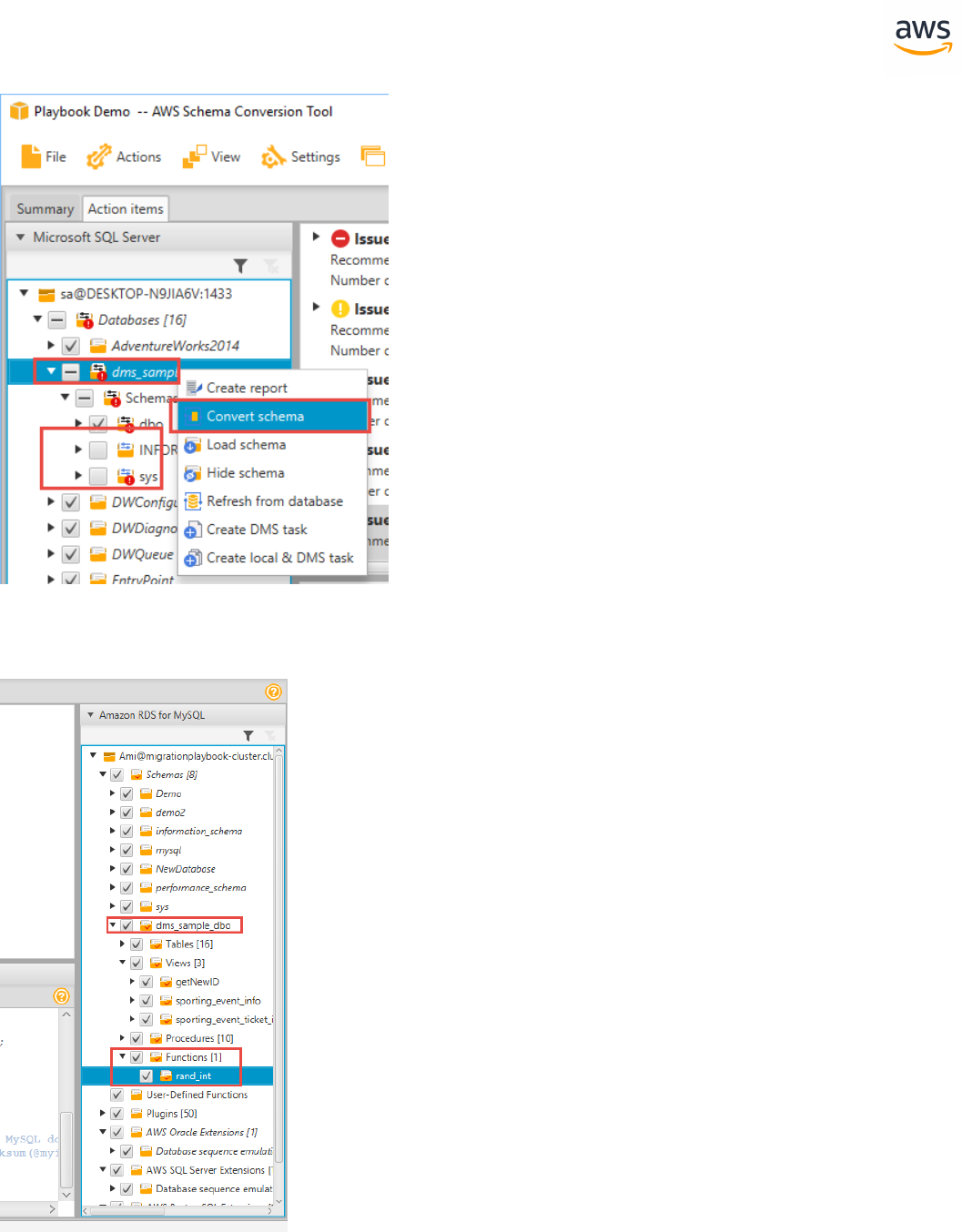

Right-click the database name and click Convert Schema.

Note: Be sure to uncheck the sys and information_schema system schemas. Aurora Post-

greSQL already has an information_schema schema.

Note: This step does not make any changes to the target database.

- 28 -

On the right pane, the new virtual schema is displayed as if it exists in the target database. Drilling

down into individual objects displays the actual syntax generated by AWSSCT to migrate the objects.

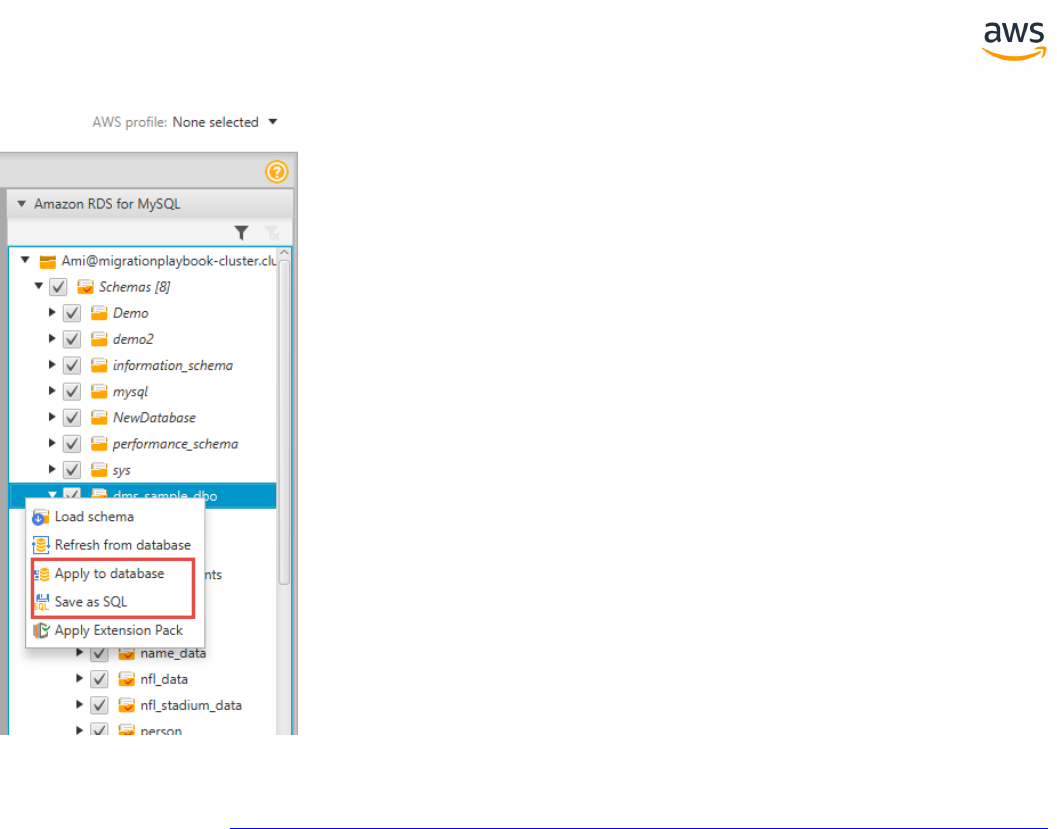

Right-click the database on the right pane and choose either Apply to database to automatically

execute the conversion script against the target database, or click Save as SQL to save to an SQL file.

Saving to an SQL file is recommended because it allows you to verify and QA the SCT code. Also, you

can make the adjustments needed for objects that could not be automatically converted.

- 29 -

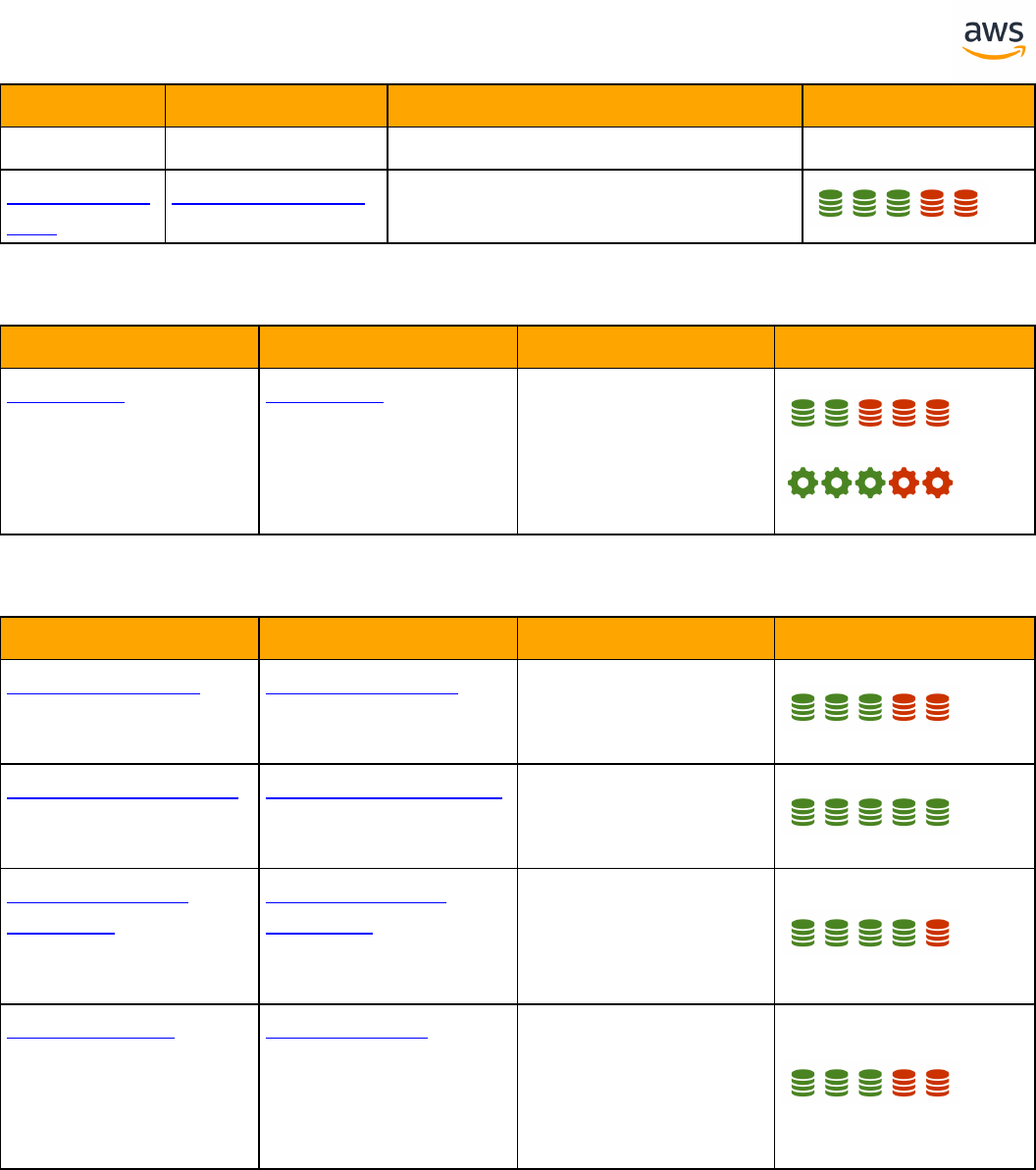

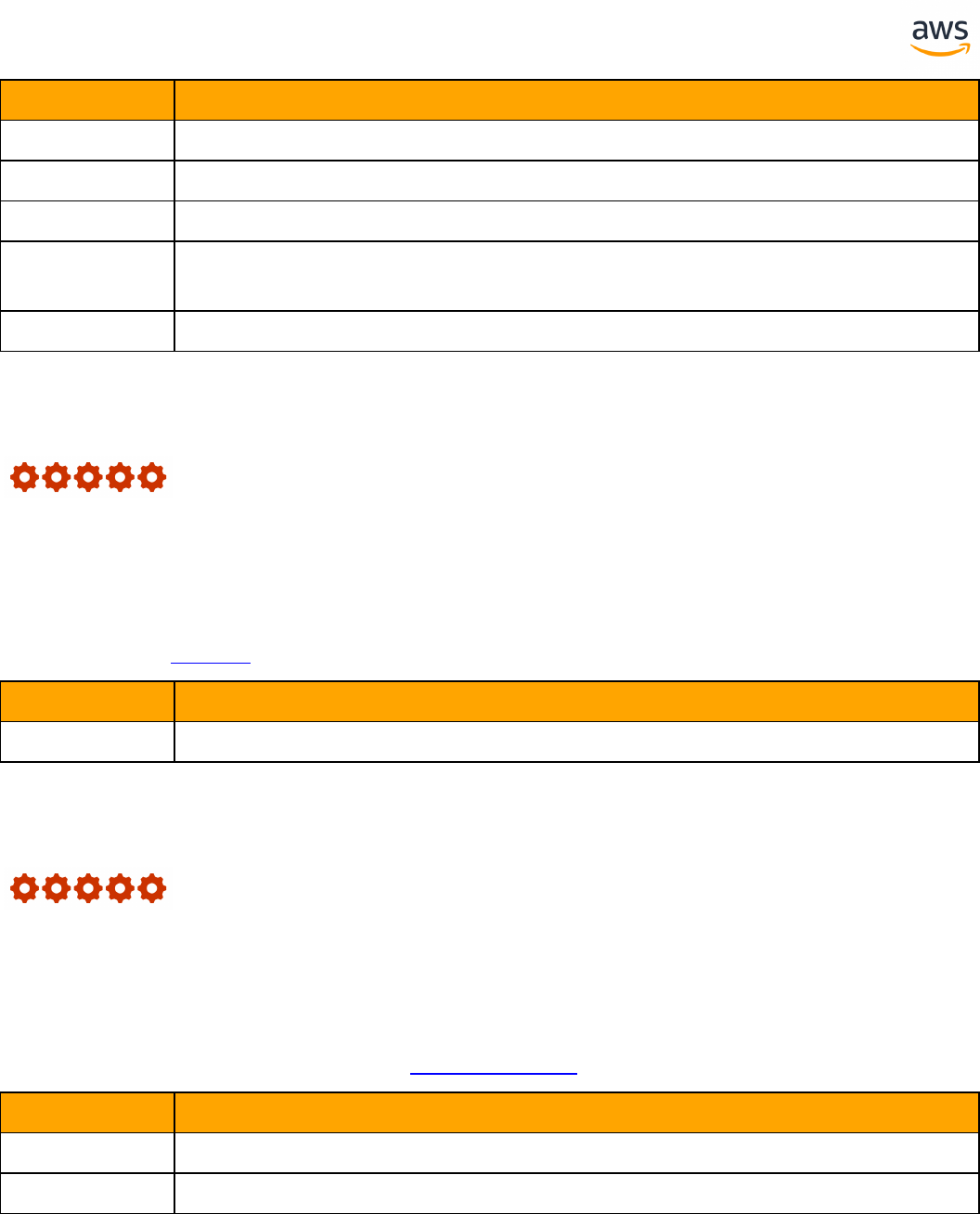

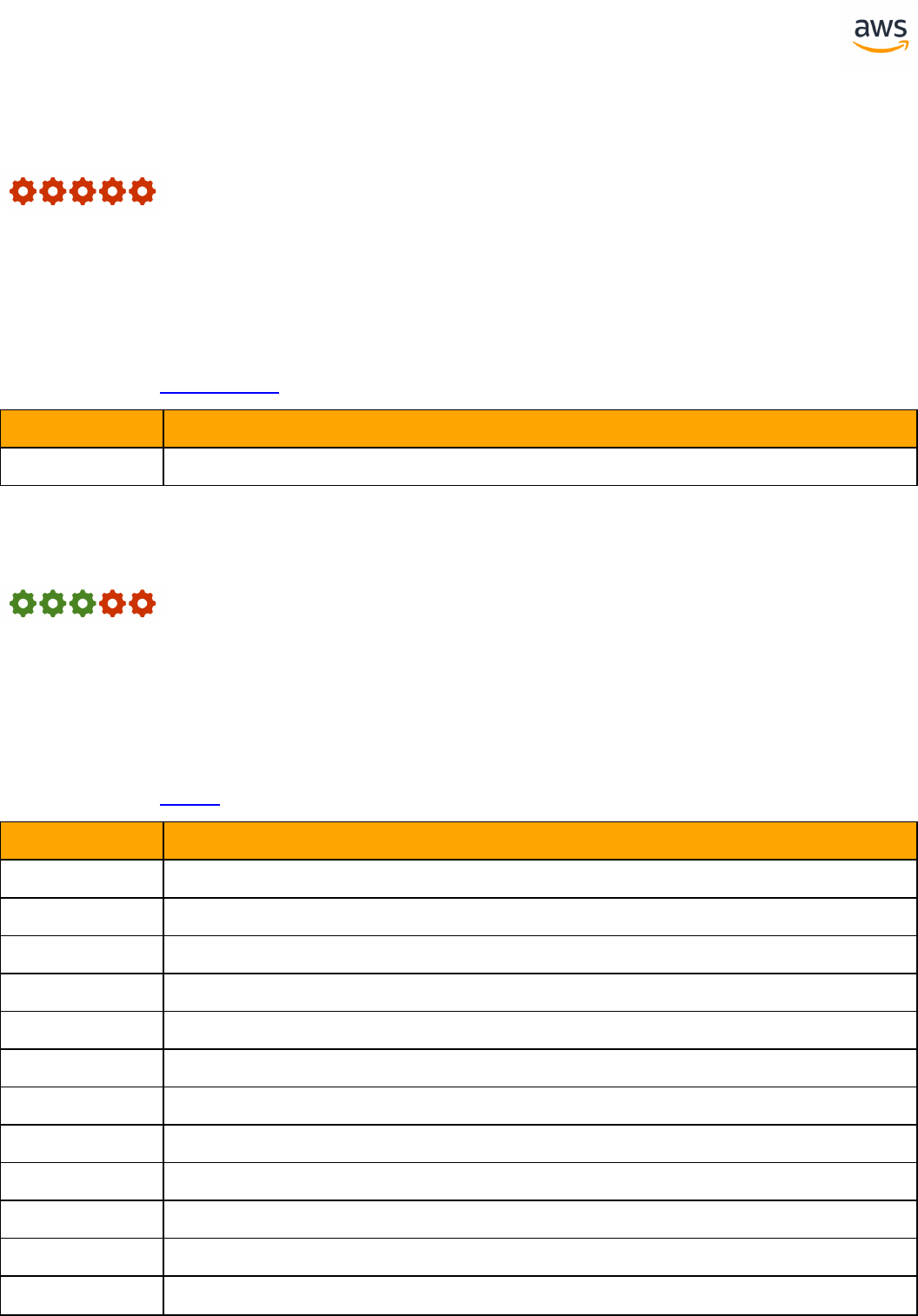

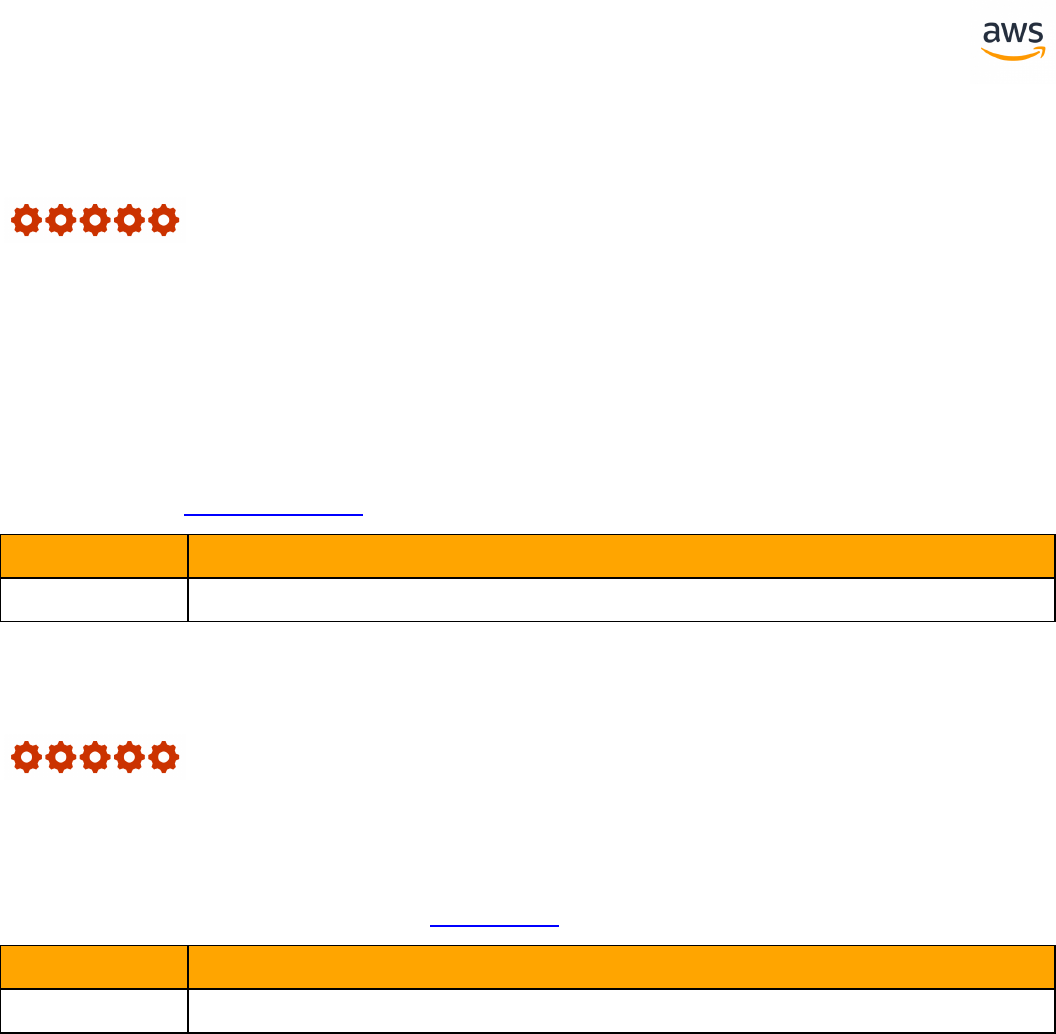

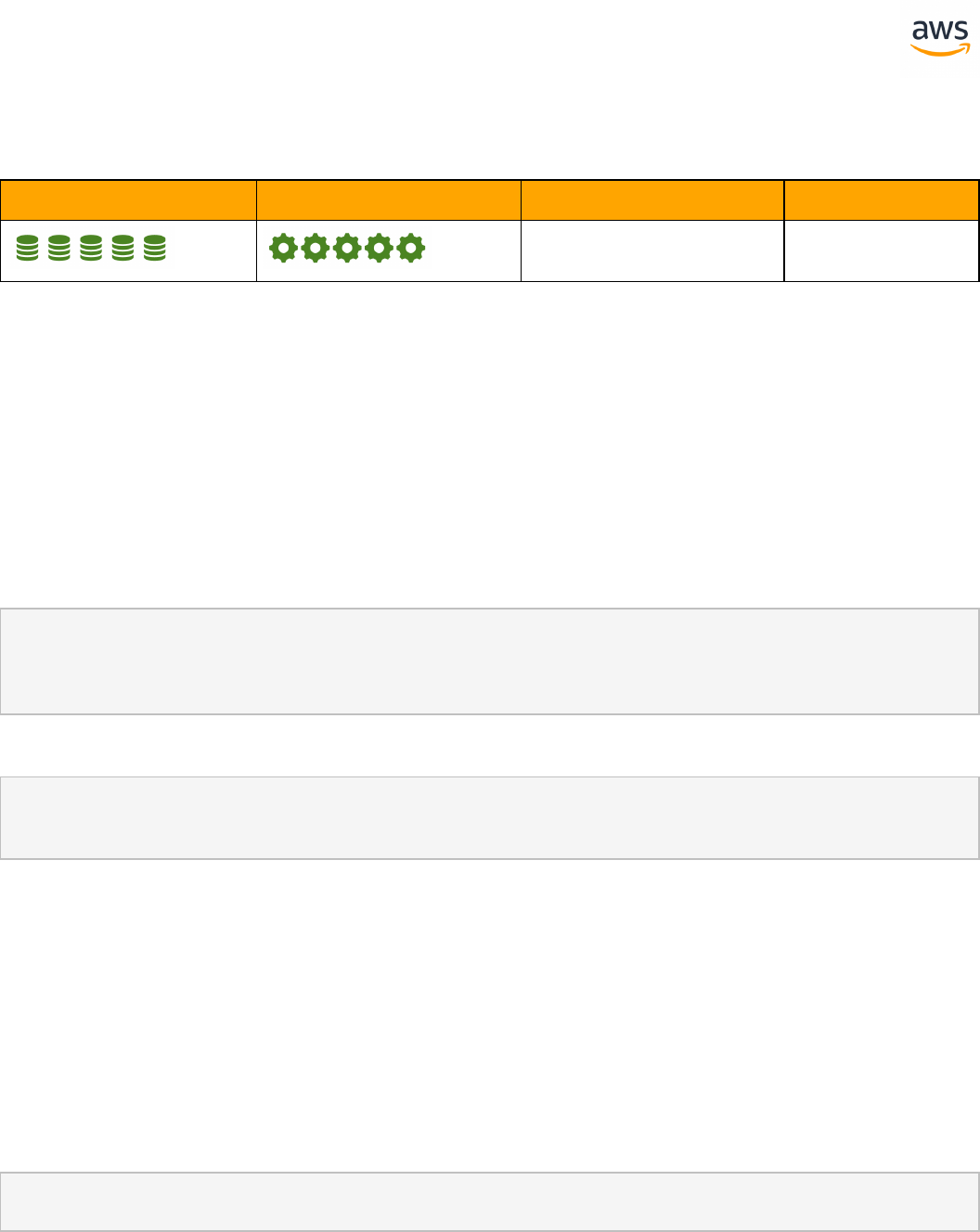

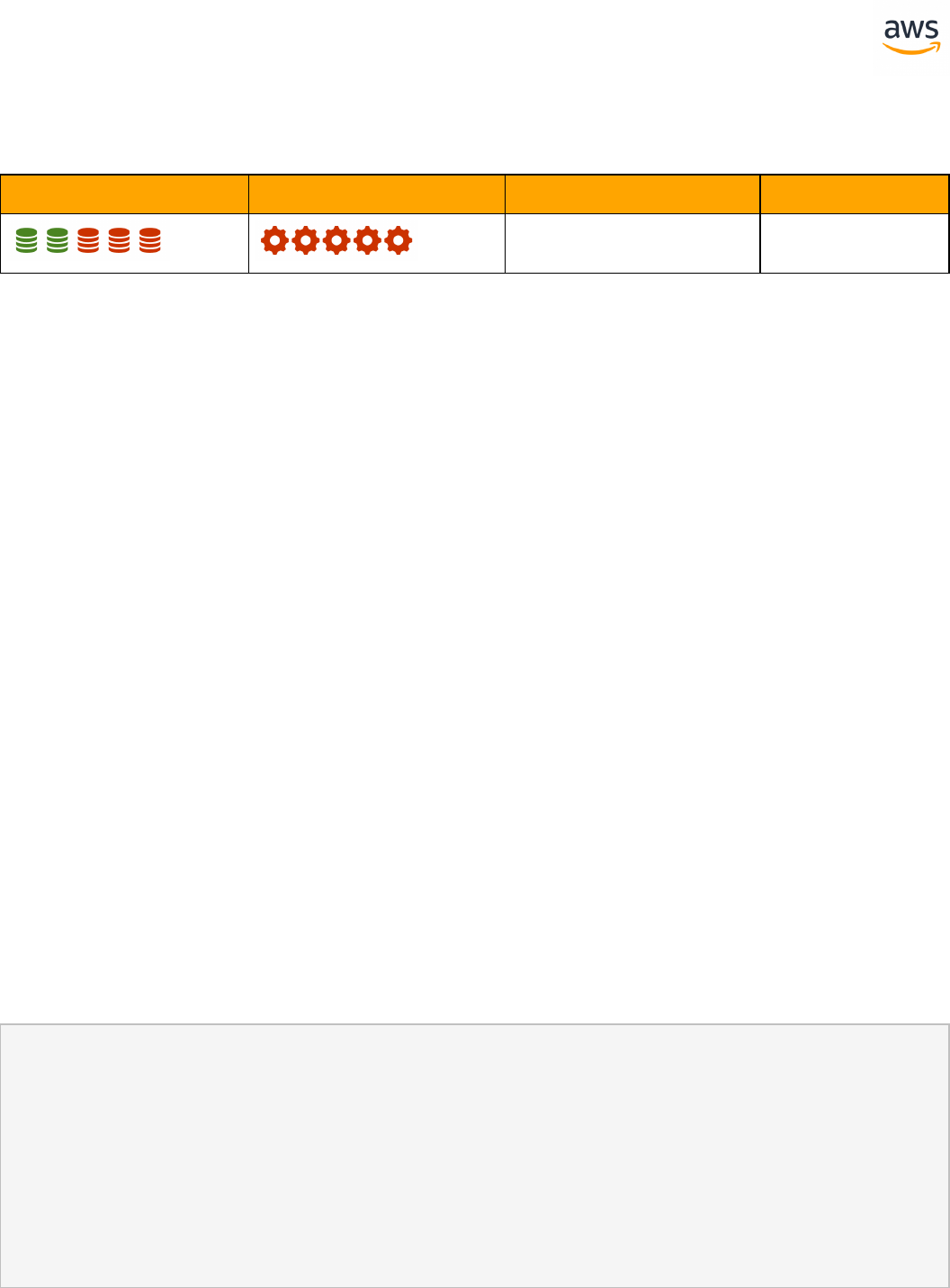

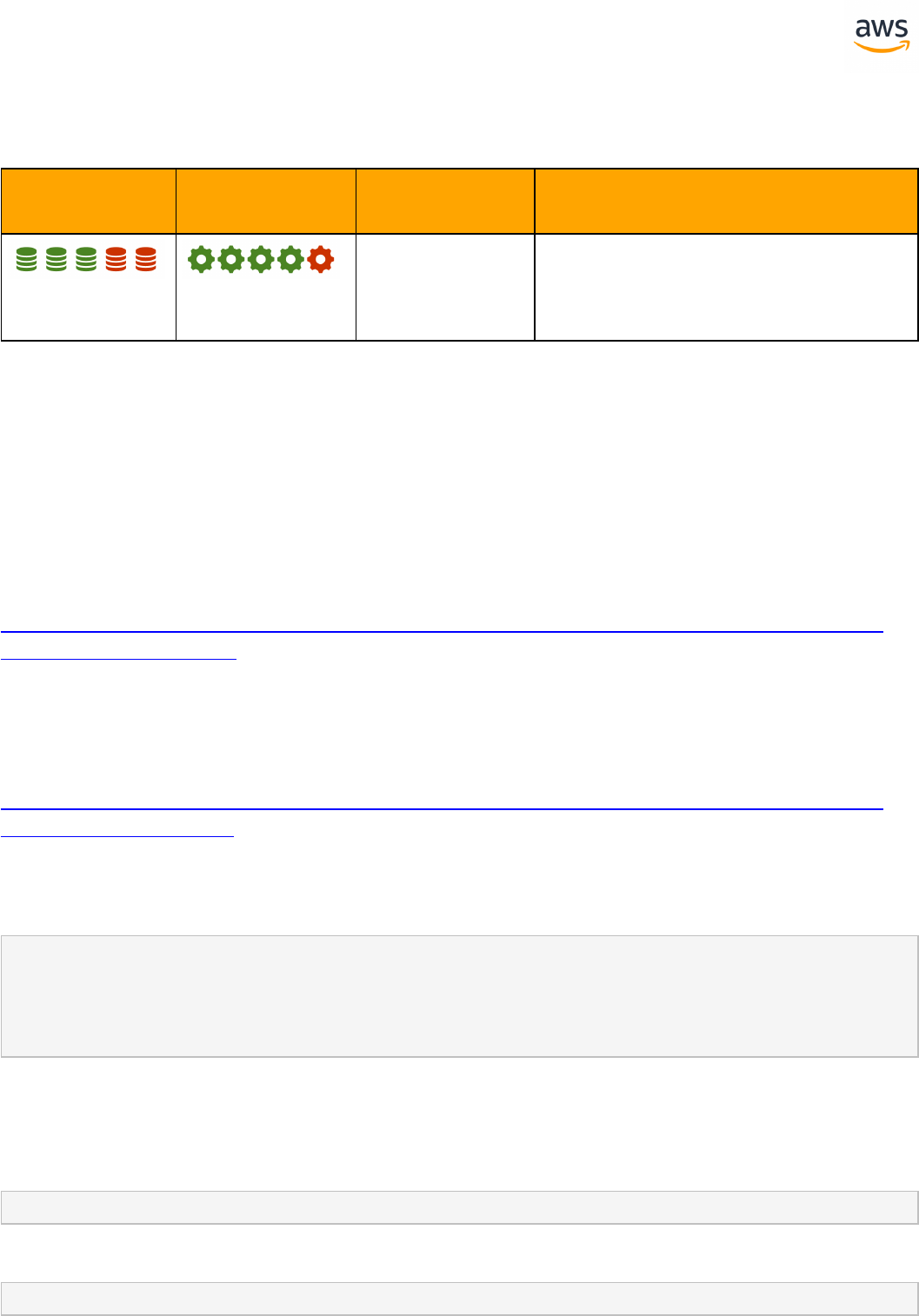

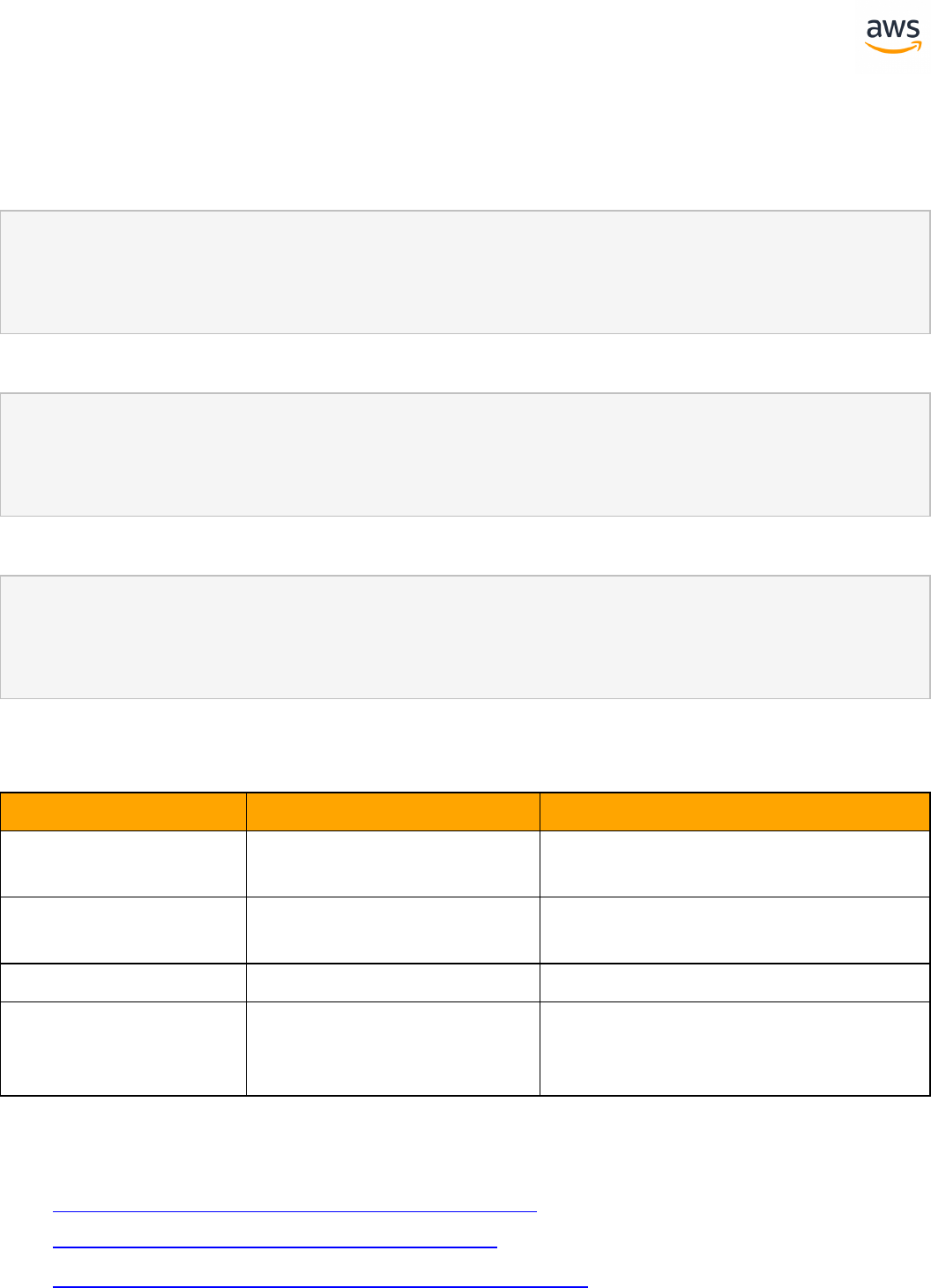

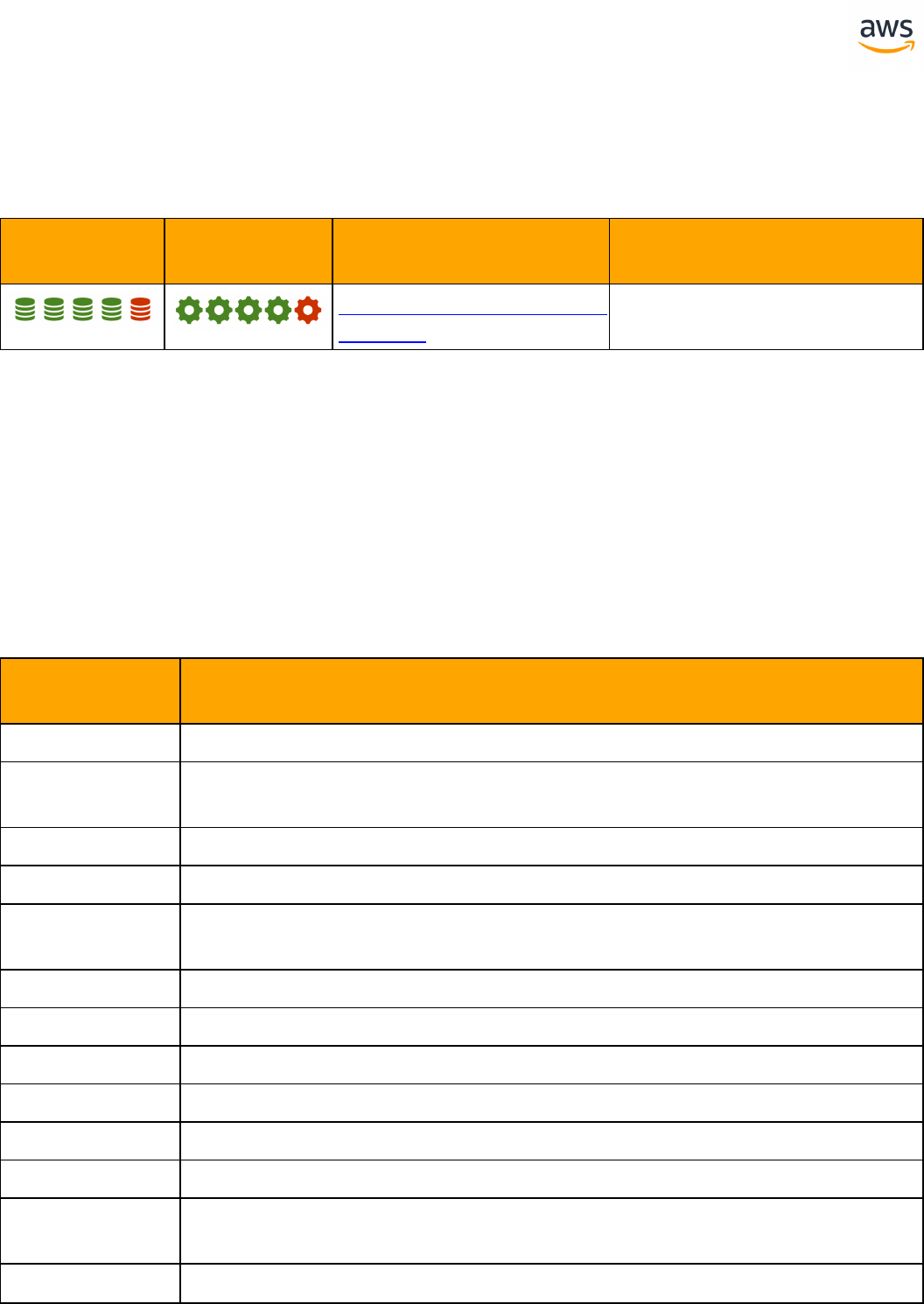

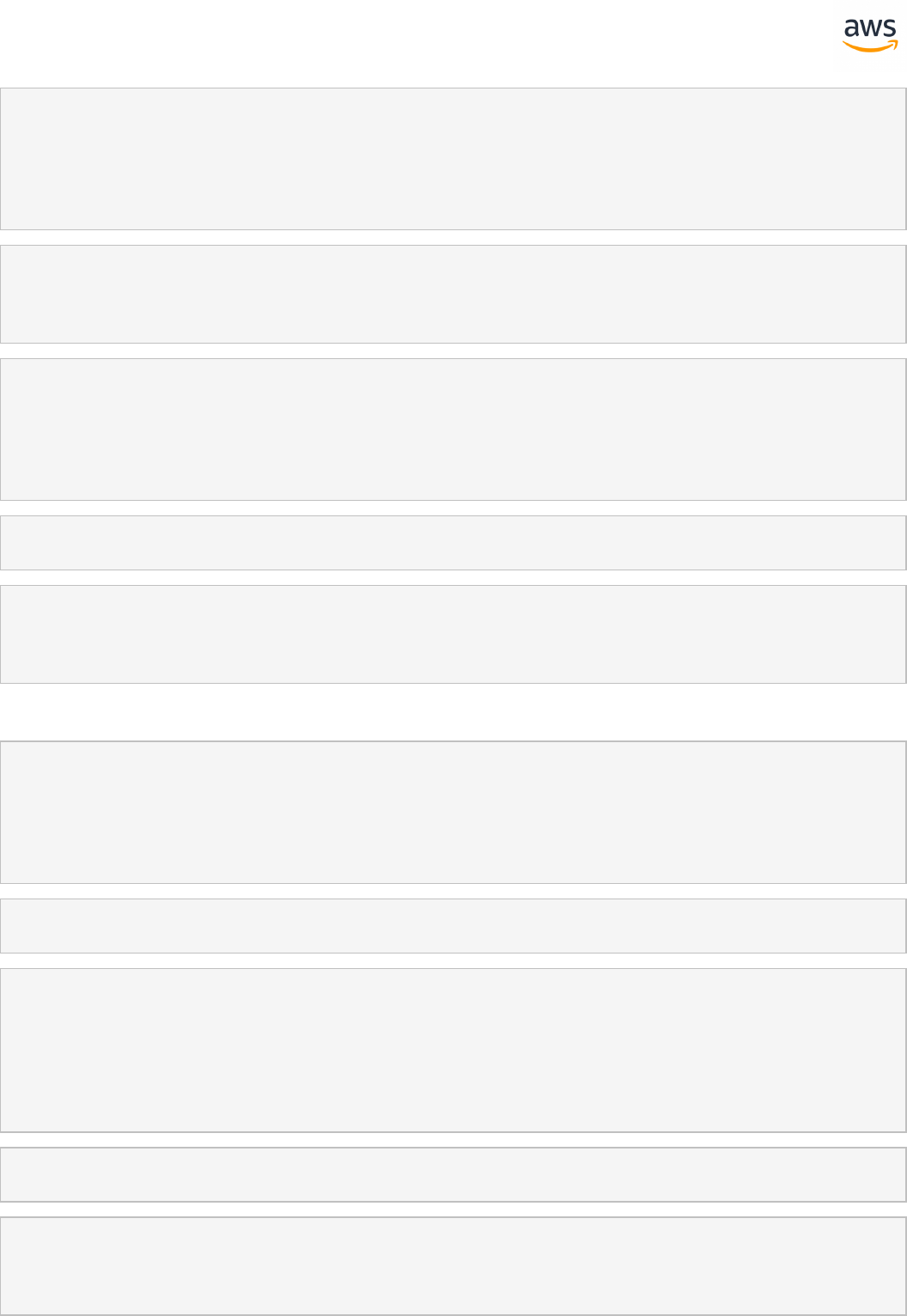

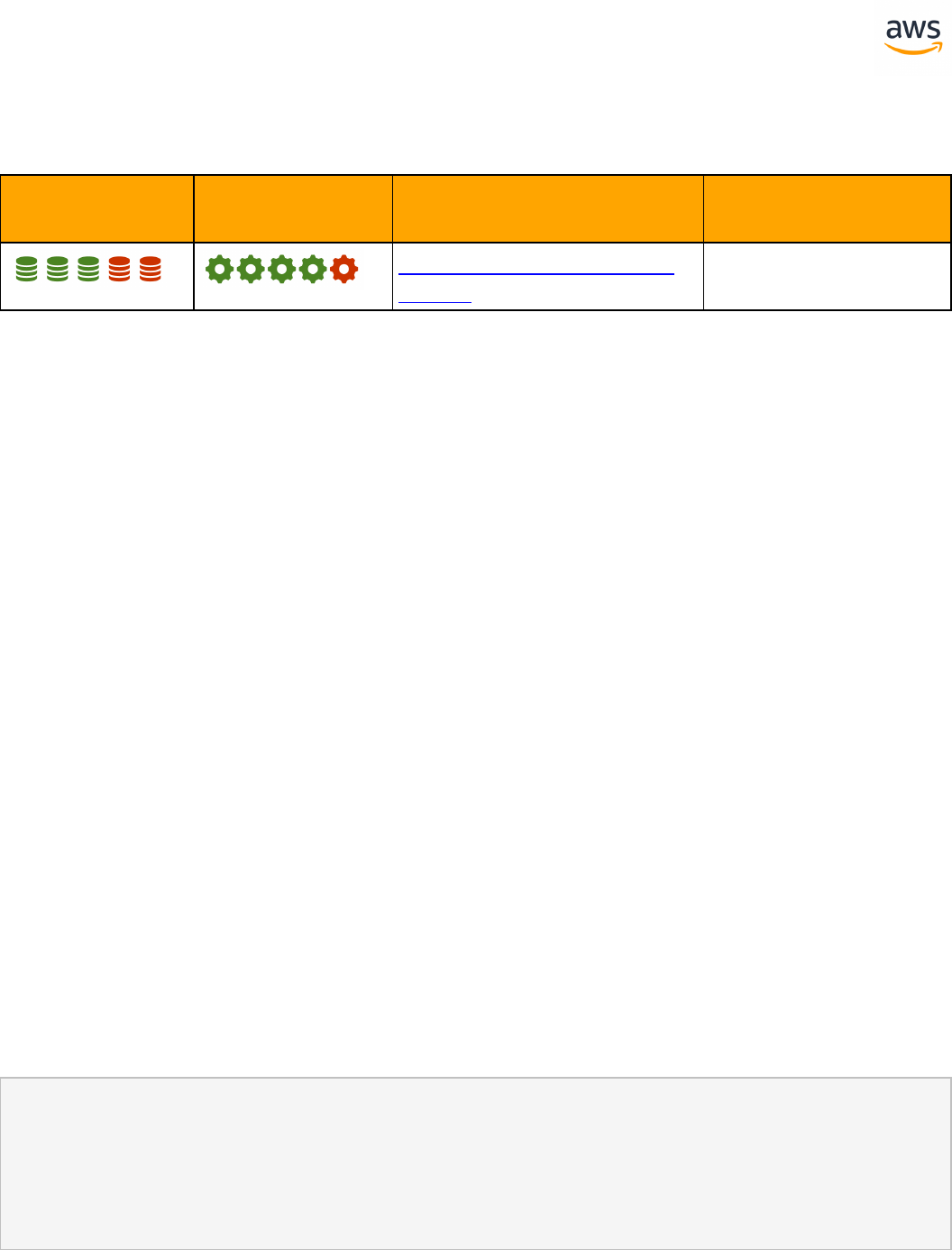

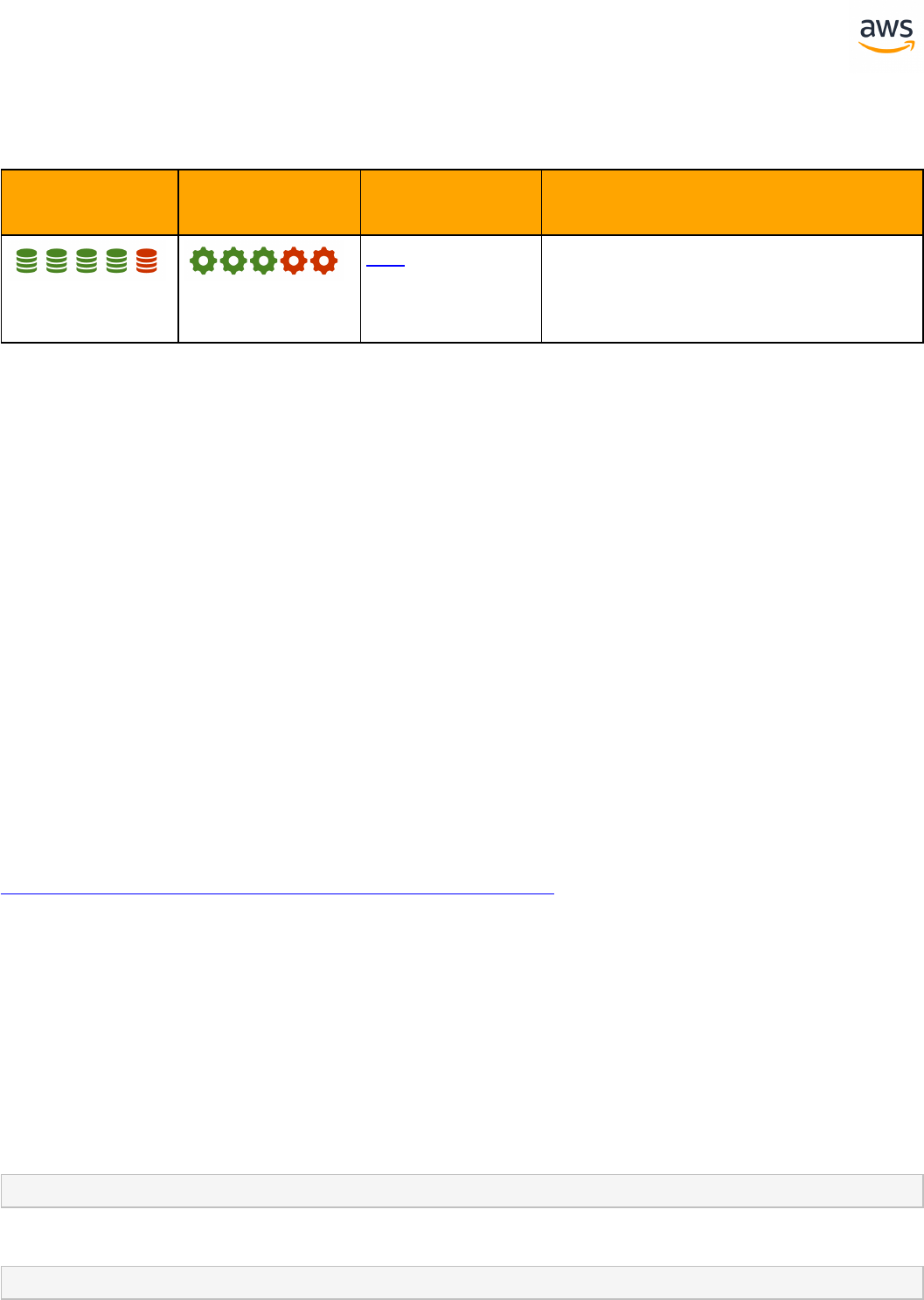

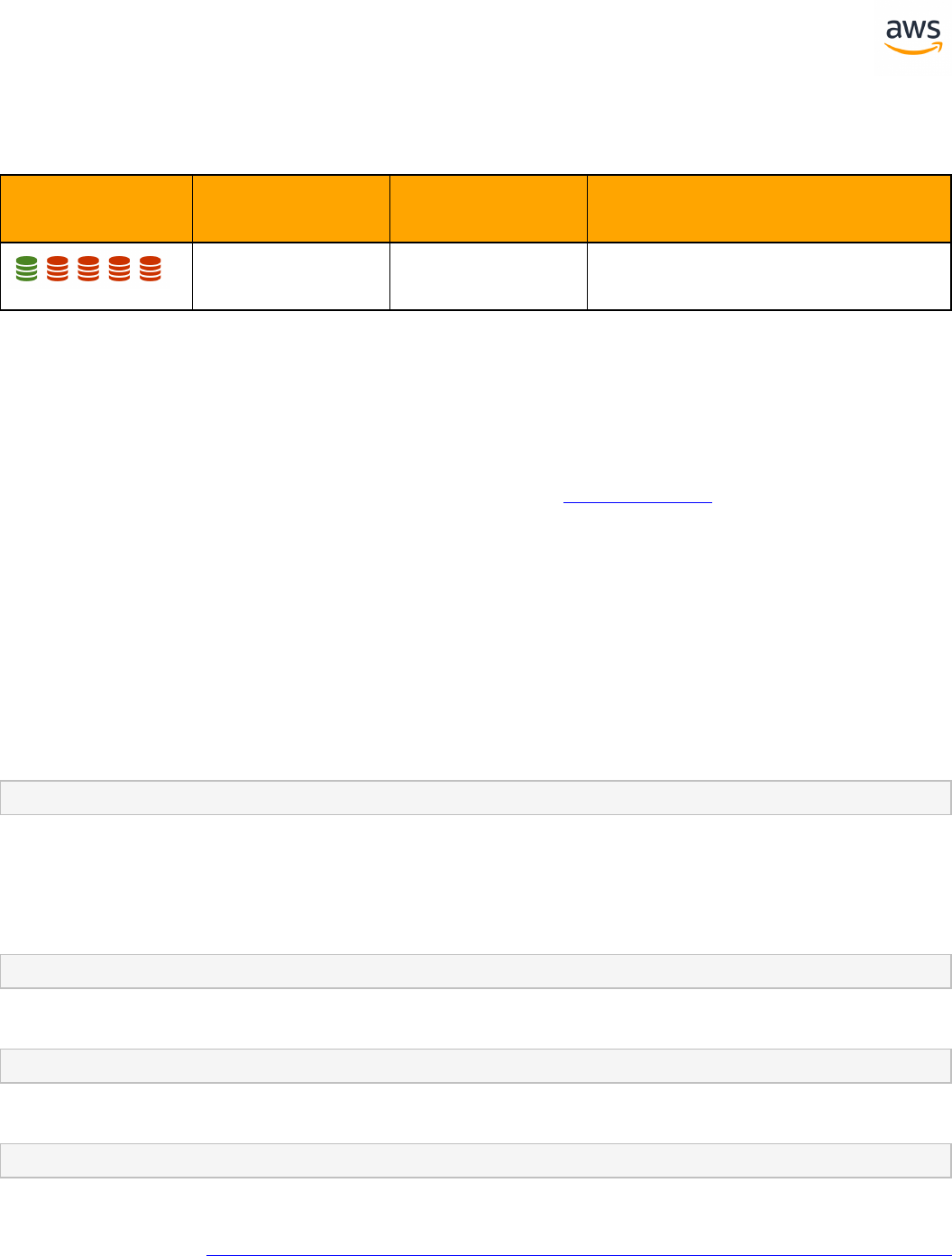

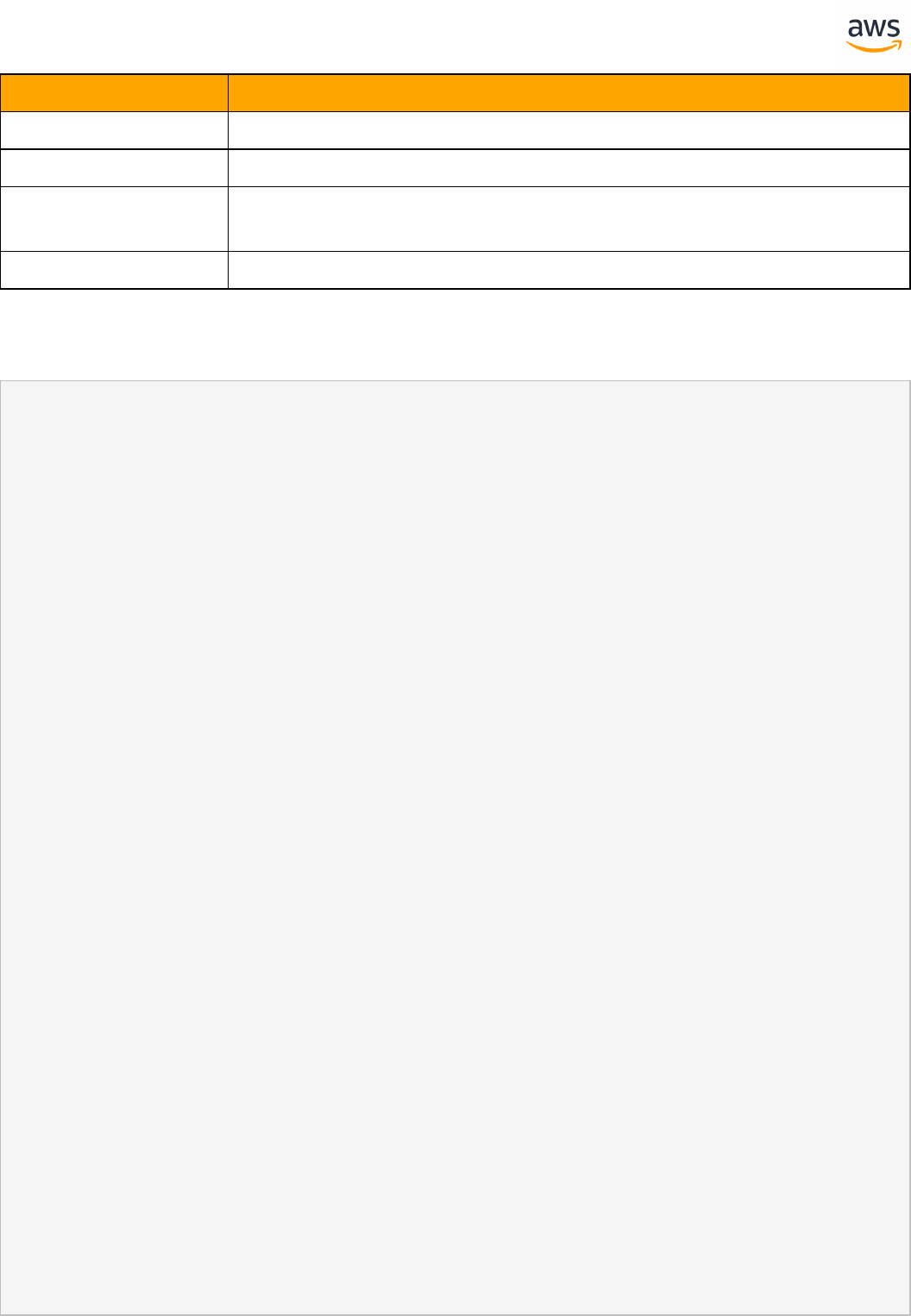

SCT Action Code Index

Legend

SCT Automation

Level Symbol

Description

Full Automation SCT performs fully automatic conversion, no manual con-

version needed.

High Automation: Minor, simple manual conversions may be needed.

Medium Automation: Low-medium complexity manual conversions may be

needed.

Low Automation: Medium-high complexity manual conversions may be

needed.

Very Low Automation: High risk or complex manual conversions may be

needed.

No Automation: Not currently supported by SCT, manual conversion is

required for this feature.

The following sections list the Schema Conversion Tool Action codes for topics that are covered in this

playbook.

Note: The links in the table point to the Microsoft SQL Server topic pages, which are imme-

diately followed by the PostgreSQL pages for the same topics.

- 31 -

Creating Tables

AWS SCT automatically converts the most commonly used constructs of the CREATE TABLE statement

as both SQL Server and Aurora PostgreSQL support the entry level ANSI compliance. These items

include table names, containing security schema (or database), column names, basic column data

types, column and table constraints, column default values, primary, candidate (UNIQUE), and foreign

keys. Some changes may be required for computed columns and global temporary tables.

For more details, see Creating Tables.

Action Code Action Message

7659 The scope table-variables and temporary tables is different. You must apply

manual conversion, if you are using recursion

7679 A computed column is replaced by the triggers 7680

7680 PostgreSQL doesn't support global temporary tables

7812 Temporary table must be removed before the end of the function

Data Types

Data type syntax and rules are very similar between SQL Server and Aurora PostgreSQL and most are

converted automatically by AWS SCT. Note that date and time handling paradigms are different for SQL

Server and Aurora PostgreSQL and require manual verifications and/or conversion. Also note that due

to differences in data type behavior between SQL Server and Aurora PostgreSQL , manual verification

and strict testing are highly recommended.

For more details, see Data Types.

Action Code Action Message

7657 PostgreSQL doesn't support this type. A manual conversion is required

7658 PostgreSQL doesn't support this type. A manual conversion is required

7662 PostgreSQL doesn't support this type. A manual conversion is required

7664 PostgreSQL doesn't support this type. A manual conversion is required

7690 PostgreSQL doesn't support table types

- 32 -

Action Code Action Message

7706 Unable convert the variable declaration of unsupported %s datatype

7707 Unable convert variable reference of unsupported %s datatype

7708 Unable convert complex usage of unsupported %s datatype

7773 Unable to perform an automated migration of arithmetic operations with several

dates

7775 Check the data type conversion. Possible loss of accuracy

Collations

The collation paradigms of SQL Server and Aurora PostgreSQL are significantly different. The AWS SCT

tool can not migrate collation automaticly to PostgreSQL

For more details, see Collations.

Action Code Action Message

7646 Automatic conversion of collation is not supported

PIVOT and UNPIVOT

Aurora PostgreSQL version 9.6 does not support the PIVOT and UNPIVOT syntax and it cannot be auto-

matically converted by AWS SCT.

For workarounds using traditional SQL syntax, see PIVOT and UNPIVOT.

Action Code Action Message

7905 PostgreSQL doesn't support the PIVOT clause for the SELECT statement

7906 PostgreSQL doesn't support the UNPIVOT clause for the SELECT statement

- 33 -

TOP and FETCH

Aurora PostgreSQL supports the non-ANSI compliant (but popular with other engines) LIMIT... OFFSET

operator for paging results sets. Some options such as WITH TIES cannot be automatically converted

and require manual conversion.

For more details, see TOP and FETCH.

Action Code Action Message

7605 PostgreSQL doesn't support the WITH TIES option

7796 PostgreSQL doesn't support TOP option in the operator UPDATE

7798 PostgreSQL doesn't support TOP option in the operator DELETE

Cursors

PostgreSQL has PL/pgSQL cursors that enable you to iterate business logic on rows read from the data-

base. They can encapsulate the query and read the query results a few rows at a time. All access to curs-

ors in PL/pgSQL is performed through cursor variables, which are always of the refcursor data type.

There are specific options which are not supported for automaic conversion by SCT.

For more details, see Cursors.

Action Code Action Message

7637 PostgreSQL doesn't support GLOBAL CURSORS. Requires manual Conversion

7639 PostgreSQL doesn't support DYNAMIC cursors

7700 The membership and order of rows never changes for cursors in PostgreSQL, so

this option is skipped

7701 Setting this option corresponds to the typical behavior of cursors in PostgreSQL, so

this option is skipped

7702 All PostgreSQL cursors are read-only, so this option is skipped

7704 PostgreSQL doesn't support the option OPTIMISTIC, so this option is skipped

7705 PostgreSQL doesn't support the option TYPE_WARNING, so this option is skipped

7803 PostgreSQL doesn't support the option FOR UPDATE, so this option is skipped

- 34 -

Flow Control

Although the flow control syntax of SQL Server differs from Aurora PostgreSQL , the AWS SCT can con-

vert most constructs automatically including loops, command blocks, and delays. Aurora PostgreSQL

does not support the GOTO command nor the WAITFOR TIME command, which require manual con-

version.

For more details, see Flow Control.

Action Code Action Message

7628 PostgreSQL doesn't support the GOTO option. Automatic conversion can't be per-

formed

7691 PostgreSQL doesn't support WAITFOR TIME feature

7801 The table can be locked open cursor

7802 A table that is created within the procedure, must be deleted before the end of the

procedure

7821 Automatic conversion operator WAITFOR with a variable is not supported

7826 Check the default value for a DateTime variable

7827 Unable to convert default value

Transaction Isolation

Aurora PostgreSQL supports the four transaction isolation levels specified in the SQL:92 standard:

READ UNCOMMITTED, READ COMMITTED, REPEATABLE READ, and SERIALIZABLE, all of which are auto-

matically converted by AWS SCT. AWS SCT also converts BEGIN / COMMIT and ROLLBACK commands

that use slightly different syntax. Manual conversion is required for named, marked, and delayed dur-

ability transactions that are not supported by Aurora PostgreSQL .

For more details, see Transaction Isolation.

Action Code Action Message

7807 PostgreSQL does not support explicit transaction management in functions

- 35 -

Stored Procedures

Aurora PostgreSQL Stored Procedures (functions) provides very similar functionality to SQL Server

stored procedures and can be automatically converted by AWS SCT. Manual conversion is required for

procedures that use RETURN values and some less common EXECUTE options such as the RECOMPILE

and RESULTS SETS options.

For more details, see Stored Procedures.

Action Code Action Message

7640 The EXECUTE with RECOMPILE option is ignored

7641 The EXECUTE with RESULT SETS UNDEFINED option is ignored

7642 The EXECUTE with RESULT SETS NONE option is ignored

7643 The EXECUTE with RESULT SETS DEFINITION option is ignored

7672 Automatic conversion of this command is not supported

7695 PostgreSQL doesn't support the execution of a procedure as a variable

7800 PostgreSQL doesn't support result sets in the style of MSSQL

7830 Automatic conversion arithmetic operations with operand CASE is not supported

7838 The EXECUTE with LOGIN | USER option is ignored

7839 Converted code might be incorrect because of the parameter names

Triggers

Aurora PostgreSQL supports BEFORE and AFTER triggers for INSERT, UPDATE, and DELETE. However,

Aurora PostgreSQL triggers differ substantially from SQL Server's triggers, but most common use cases

can be migrated with minimal code changes.

For more details, see Triggers.

Action Code Action Message

7809 PostgreSQL does not support INSTEAD OF triggers on tables

7832 Unable to convert INSTEAD OF triggers on view

7909 Unable to convert the clause

- 36 -

MERGE

Aurora PostgreSQL version 9.6 does not support the MERGE statement and it cannot be automatically

converted by AWS SCT. Manual conversion is straight-forward in most cases.

For more details and potential workarounds, see MERGE.

Action Code Action Message

7915 Please check unique(exclude) constraint existence on field %s

7916 Current MERGE statement can not be emulated by INSERT ON CONFLICT usage

Query hints and plan guides

Basic query hints such as index hints can be converted automatically by AWS SCT, except for DML state-

ments. Note that specific optimizations used for SQL Server may be completely inapplicable to a new

query optimizer. It is recommended to start migration testing with all hints removed. Then, selectively

apply hints as a last resort if other means such as schema, index, and query optimizations have failed.

Plan guides are not supported by Aurora PostgreSQL.

For more details, see Query hints and Plan Guides.

Action Code Action Message

7823 PostgreSQL doesn't support table hints in DML statements

- 37 -

Full Text Search

Migrating Full-Text indexes from SQL Server to Aurora PostgreSQL requires a full rewrite of the code

that deals with both creating, managing, and querying Full-Text indexes. They cannot be automatically

converted by AWS SCT.

For more details, see Full Text Search.

Action Code Action Message

7688 PostgreSQL doesn't support the FREETEXT predicate

Indexes

Basic non-clustered indexes, which are the most commonly used type of indexes are automatically

migrated by AWS SCT. In addition, filtered indexes, indexes with included columns, and some SQL

Server specific index options can not be migrated automatically and require manual conversion.

For more details, see Indexes.

Action Code Action Message

7675 PostgreSQL doesn't support sorting options (ASC | DESC) for constraints

7681 PostgreSQL doesn't support clustered indexes

7682 PostgreSQL doesn't support the INCLUDE option in indexes

7781 PostgreSQL doesn't support the PAD_INDEX option in indexes

7782 PostgreSQL doesn't support the SORT_IN_TEMPDB option in indexes

7783 PostgreSQL doesn't support the IGNORE_DUP_KEY option in indexes

7784 PostgreSQL doesn't support the STATISTICS_NORECOMPUTE option in indexes

7785 PostgreSQL doesn't support the STATISTICS_INCREMENTAL option in indexes

7786 PostgreSQL doesn't support the DROP_EXISTING option in indexes

7787 PostgreSQL doesn't support the ONLINE option in indexes

7788 PostgreSQL doesn't support the ALLOW_ROW_LOCKS option in indexes

7789 PostgreSQL doesn't support the ALLOW_PAGE_LOCKS option in indexes

- 38 -

Action Code Action Message

7790 PostgreSQL doesn't support the MAXDOP option in indexes

7791 PostgreSQL doesn't support the DATA_COMPRESSION option in indexes

Partitioning

Aurora PostgreSQL uses "table inheritance", some of the physical aspects of partitioning in SQL Server

do not apply to Aurora PostgreSQL . For example, the concept of file groups and assigning partitions

to file groups. Aurora PostgreSQL supports a much richer framework for table partitioning than SQL

Server, with many additional options such as hash partitioning, and sub partitioning.

For more details, see Partitioning.

Action Code Action Message

7910 NULL columns not supported for partitioning

7911 PostgreSQL does not support foreign keys referencing partitioned tables

7912 PostgreSQL does not support foreign key references from a partitioned table to

some other table

7913 PostgreSQL does not support LEFT partitioning - partition values distribution could

vary

7914 Update of the partitioned table may lead to errors

- 39 -

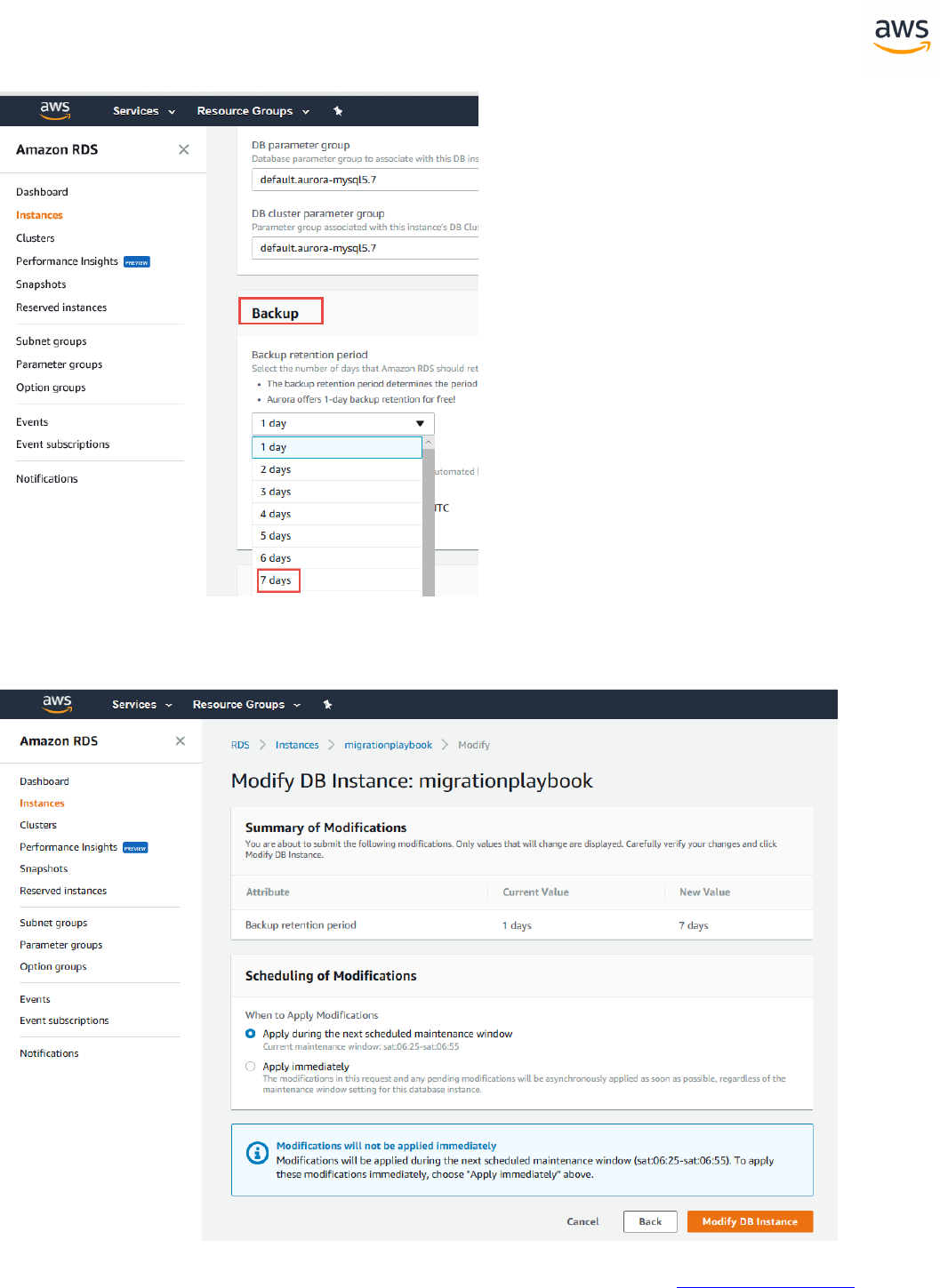

Backup

Migrating from a self-managed backup policy to a Platform as a Service (PaaS) environment such as

Aurora PostgreSQL is a complete paradigm shift. You no longer need to worry about transaction logs,

file groups, disks running out of space, and purging old backups. Amazon RDS provides guaranteed

continuous backup with point-in-time restore up to 35 days. Therefor, AWS SCT does not automatically

convert backups.

For more details, see Backup and Restore.

Action Code Action Message

7903 PostgreSQL does not have functionality similar to SQL Server Backup

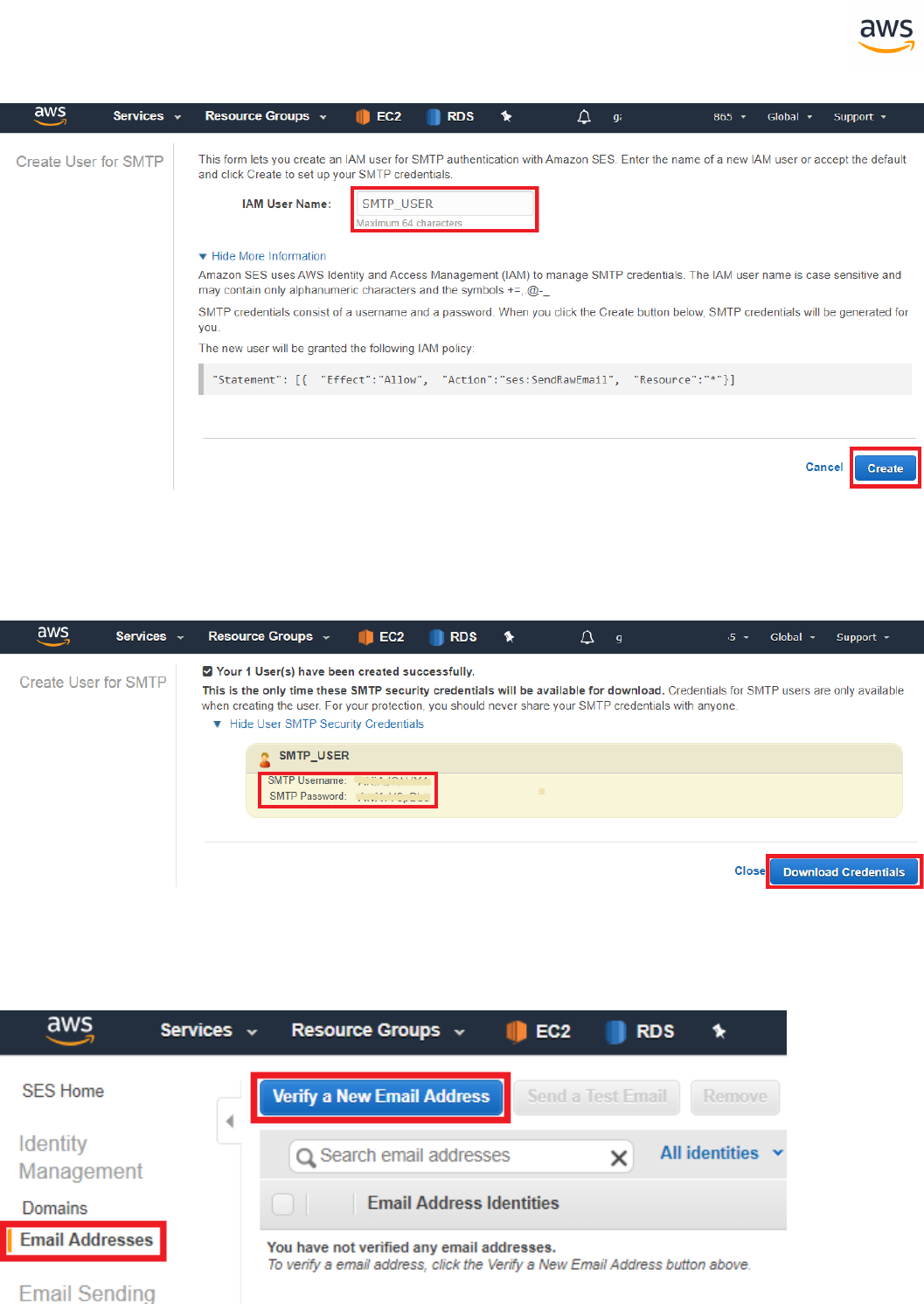

SQL Server Mail

Aurora PostgreSQL does not provide native support sending mail from the database.

For more details and potential workarounds, see Database Mail.

Action Code Action Message

7900 PostgreSQL does not have functionality similar to SQL Server Database Mail

- 40 -

SQL Server Agent

Aurora PostgreSQL does not provide functionality similar to SQL Server Agent as an external, cross-

instance scheduler. However, Aurora PostgreSQL does provide a native, in-database scheduler. It is lim-

ited to the cluster scope and can't be used to manage multiple clusters. Therefore, AWS SCT can not

automatically convert Agent jobs and alerts.

For more details, see SQL Server Agent.

Action Code Action Message

7902 PostgreSQL does not have functionality similar to SQL Server Agent

Service Broker

Aurora PostgreSQL does not provide a compatible solution to the SQL Server Service Broker. However,

you can use DB Links and AWS Lambda to achieve similar functionality.

For more details, see Service Broker.

Action Code Action Message

7901 PostgreSQL does not have functionality similar to SQL Server Service Broker

- 41 -

XML

The XML options and features in Aurora PostgreSQL are similar to SQL Server and the most important

functions (XPATH and XQUERY)or almost identical.

PostgreSQL does not support FOR XML clause, the walkaround for that is using string_agg instead. In

some cases, it might be more efficient to use JSON instead of XML.

For more details, see XML.

Action Code Action Message

7816 PostgreSQL doesn't support any methods for datatype XML

7817 PostgreSQL doesn't support option [for xml path] in the SQL-queries

Constraints

Constraints feature is almost fully automated and compatible between SQL Server and Aurora Post-

greSQL.

The differences are: missing SET DEFAULT and Check constraint with sub-query.

For more details, see Constraints.

Action Code Action Message

7825 The default value for a DateTime column removed

7915 Please check unique(exclude) constraint existence on field %s

Linked Servers

Aurora PostgreSQL does support remote data access from the database. Connectivity between

schemas is trivial, but connectivity to other instances require an extension installation

For more details, see Constraints.

Action Code Action Message

7645 PostgreSQL doesn't support executing a pass-through command on a linked server

- 42 -

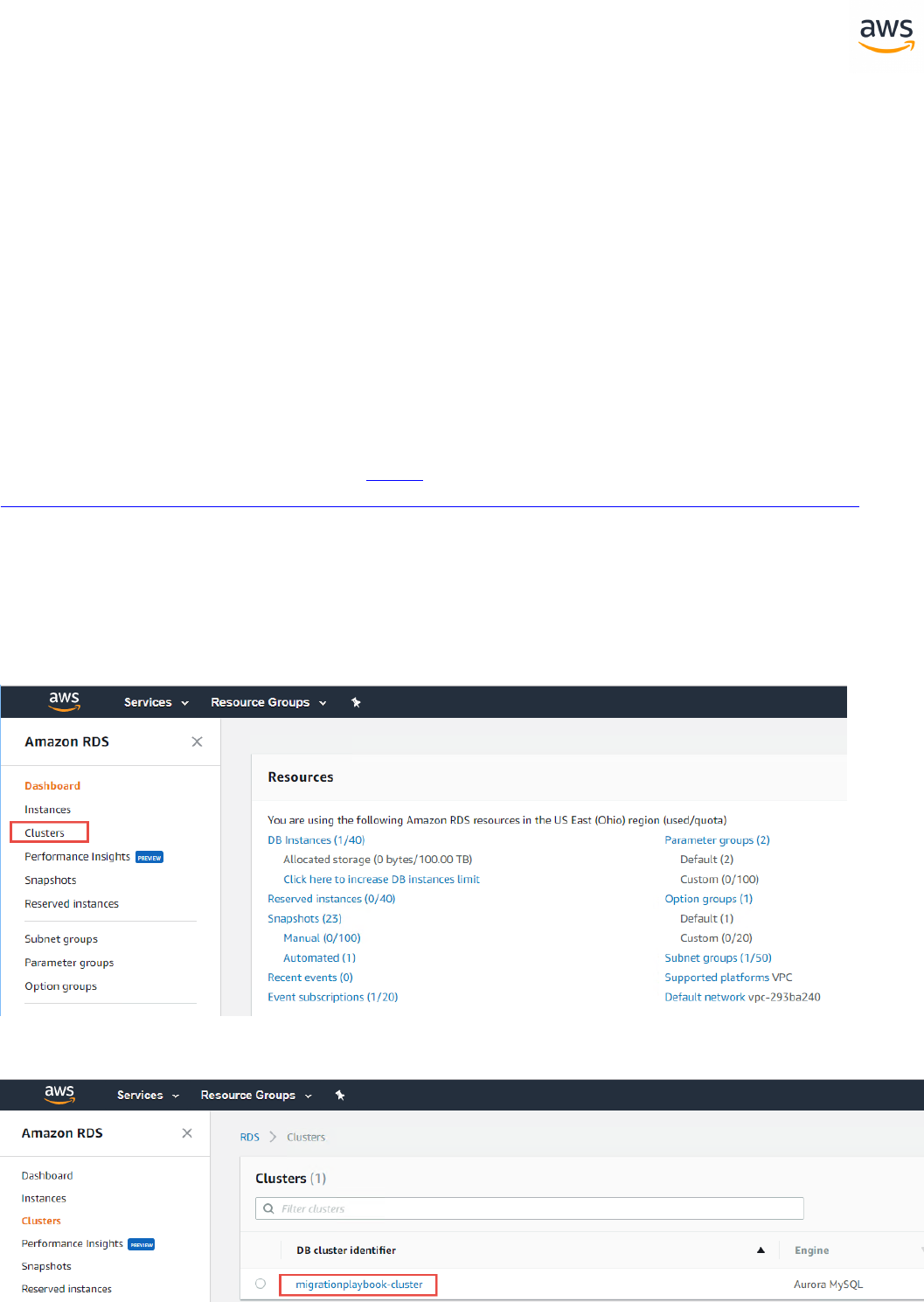

AWS Database Migration Service (DMS)

Overview

The AWS Database Migration Service (DMS) helps you migrate databases to AWS quickly and securely.

The source database remains fully operational during the migration, minimizing downtime to applic-

ations that rely on the database. The AWS Database Migration Service can migrate your data to and

from most widely-used commercial and open-source databases.

The service supports homogenous migrations such as Oracle to Oracle as well as heterogeneous migra-

tions between different database platforms such as Oracle to Amazon Aurora or Microsoft SQL Server

to MySQL. It also allows you to stream data to Amazon Redshift, Amazon DynamoDB, and Amazon S3

from any of the supported sources, which are Amazon Aurora, PostgreSQL, MySQL, MariaDB, Oracle

Database, SAP ASE, SQL Server, IBM DB2 LUW, and MongoDB, enabling consolidation and easy ana-

lysis of data in a petabyte-scale data warehouse. The AWS Database Migration Service can also be used

for continuous data replication with high-availability.

When migrating databases to Aurora, Redshift or DynamoDB, you can use DMS free for six months.

For all supported sources for DMS, see

https://docs.aws.amazon.com/dms/latest/userguide/CHAP_Source.html

For all supported targets for DMS, see

https://docs.aws.amazon.com/dms/latest/userguide/CHAP_Target.html

Migration Tasks Performed by AWS DMS

l In a traditional solution, you need to perform capacity analysis, procure hardware and software,

install and administer systems, and test and debug the installation. AWS DMS automatically man-

ages the deployment, management, and monitoring of all hardware and software needed for

your migration. Your migration can be up and running within minutes of starting the AWS DMS

configuration process.

l With AWS DMS, you can scale up (or scale down) your migration resources as needed to match

your actual workload. For example, if you determine that you need additional storage, you can

easily increase your allocated storage and restart your migration, usually within minutes. On the

other hand, if you discover that you aren't using all of the resource capacity you configured, you

can easily downsize to meet your actual workload.

l AWS DMS uses a pay-as-you-go model. You only pay for AWS DMS resources while you use them

as opposed to traditional licensing models with up-front purchase costs and ongoing main-

tenance charges.

l AWS DMS automatically manages all of the infrastructure that supports your migration server

including hardware and software, software patching, and error reporting.

- 43 -

l AWS DMS provides automatic failover. If your primary replication server fails for any reason, a

backup replication server can take over with little or no interruption of service.

l AWS DMS can help you switch to a modern, perhaps more cost-effective database engine than

the one you are running now. For example, AWS DMS can help you take advantage of the man-

aged database services provided by Amazon RDS or Amazon Aurora. Or, it can help you move to

the managed data warehouse service provided by Amazon Redshift, NoSQL platforms like

Amazon DynamoDB, or low-cost storage platforms like Amazon S3. Conversely, if you want to

migrate away from old infrastructure but continue to use the same database engine, AWS DMS

also supports that process.

l AWS DMS supports nearly all of today’s most popular DBMS engines as data sources, including

Oracle, Microsoft SQL Server, MySQL, MariaDB, PostgreSQL, Db2 LUW, SAP, MongoDB, and

Amazon Aurora.

l AWS DMS provides a broad coverage of available target engines including Oracle, Microsoft SQL

Server, PostgreSQL, MySQL, Amazon Redshift, SAP ASE, S3, and Amazon DynamoDB.

l You can migrate from any of the supported data sources to any of the supported data targets.

AWS DMS supports fully heterogeneous data migrations between the supported engines.

l AWS DMS ensures that your data migration is secure. Data at rest is encrypted with AWS Key Man-

agement Service (AWS KMS) encryption. During migration, you can use Secure Socket Layers (SSL)

to encrypt your in-flight data as it travels from source to target.

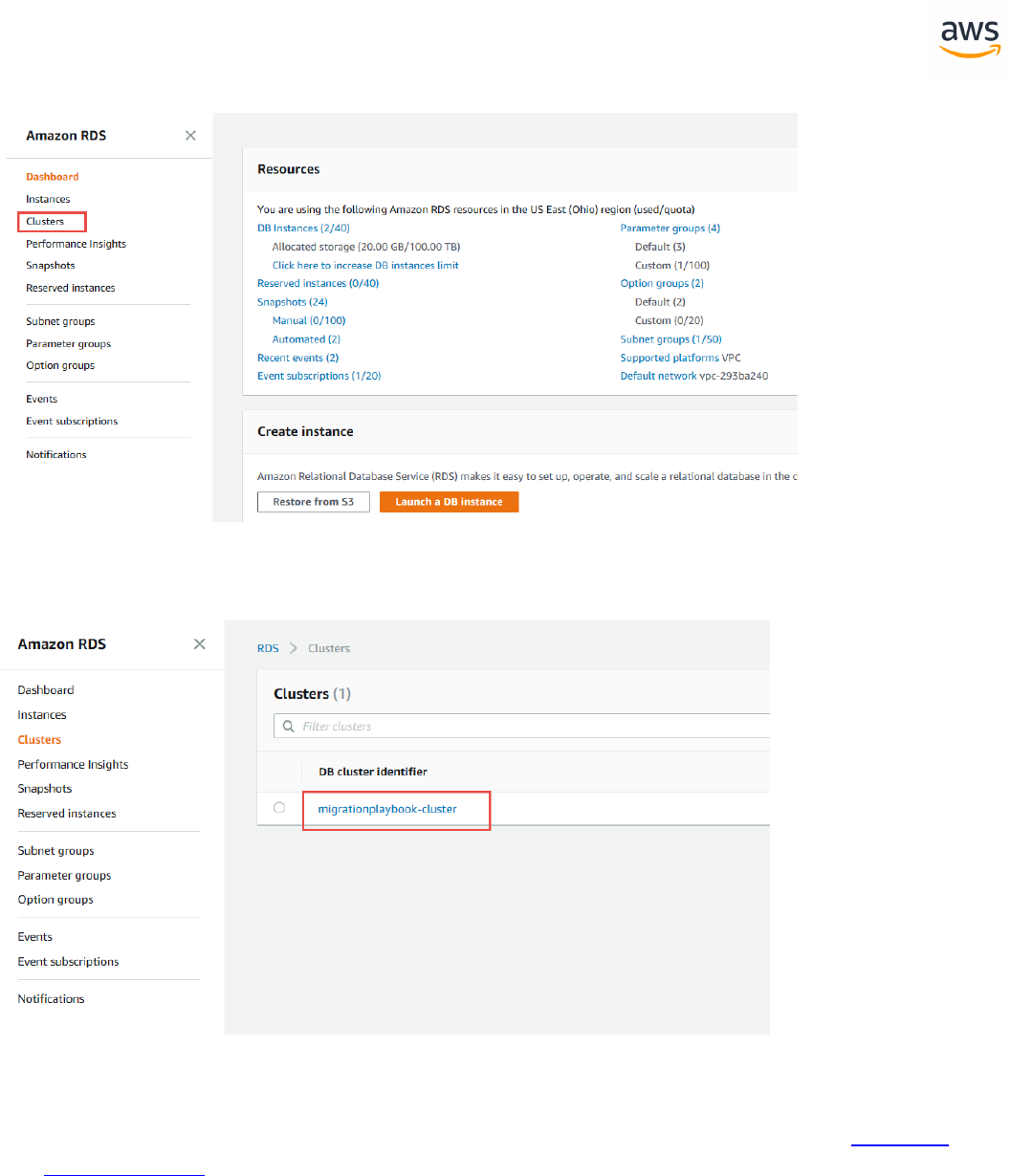

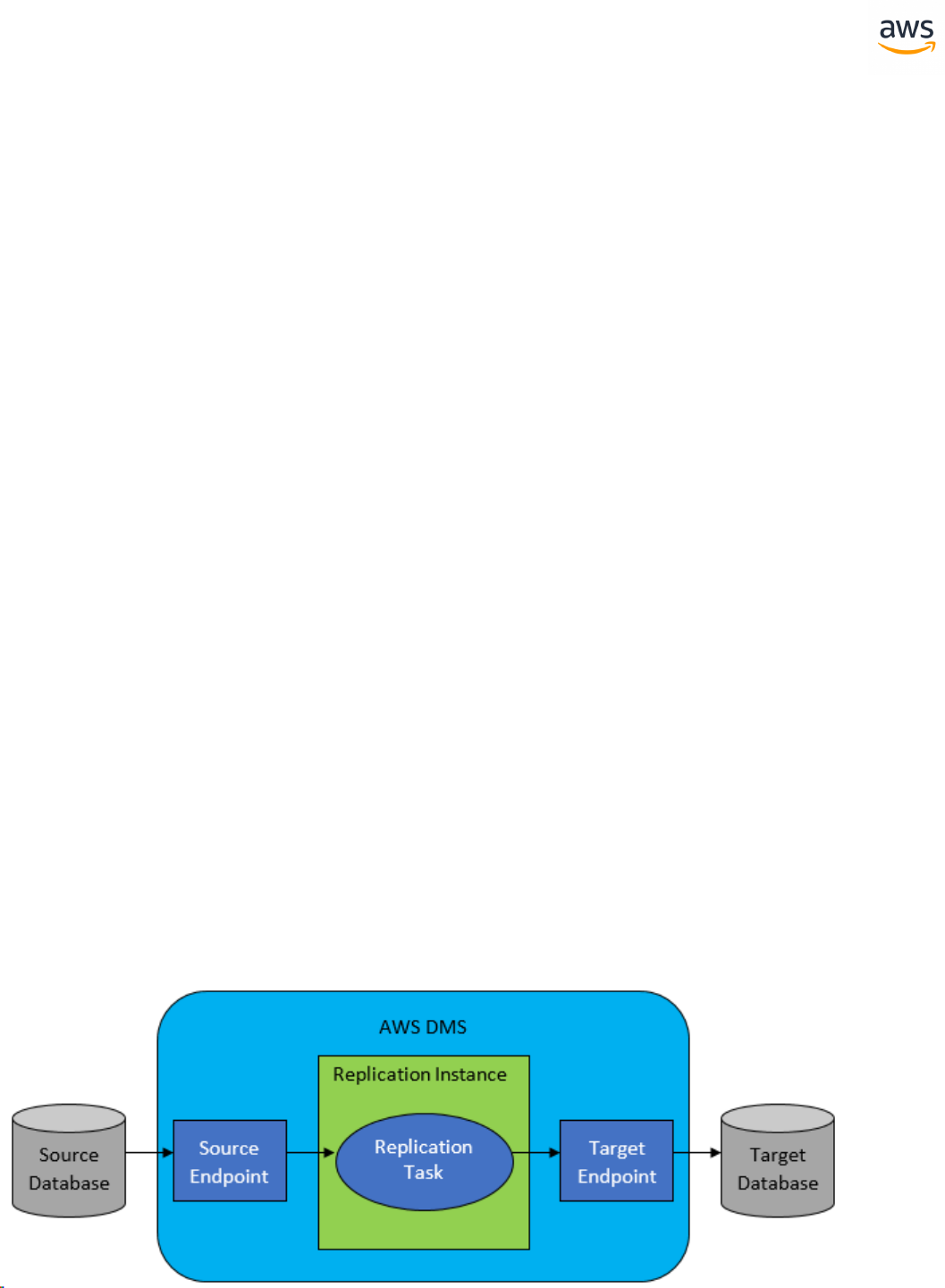

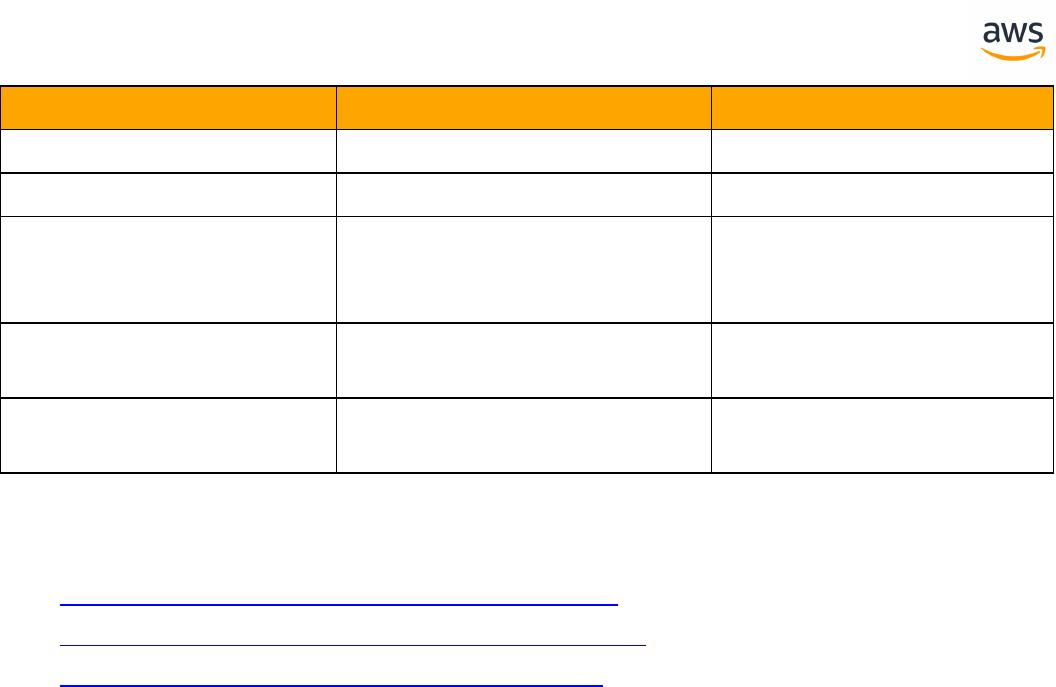

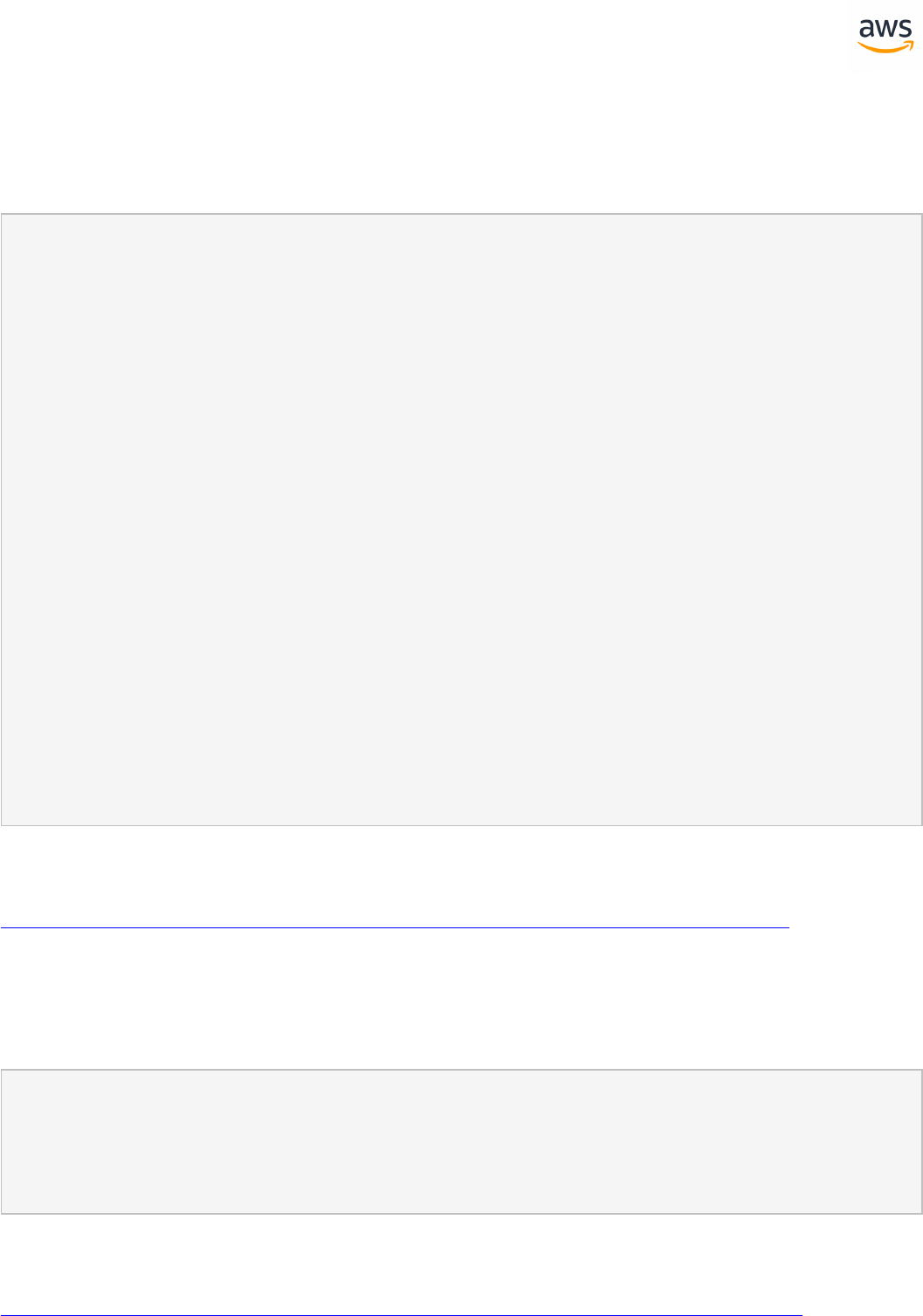

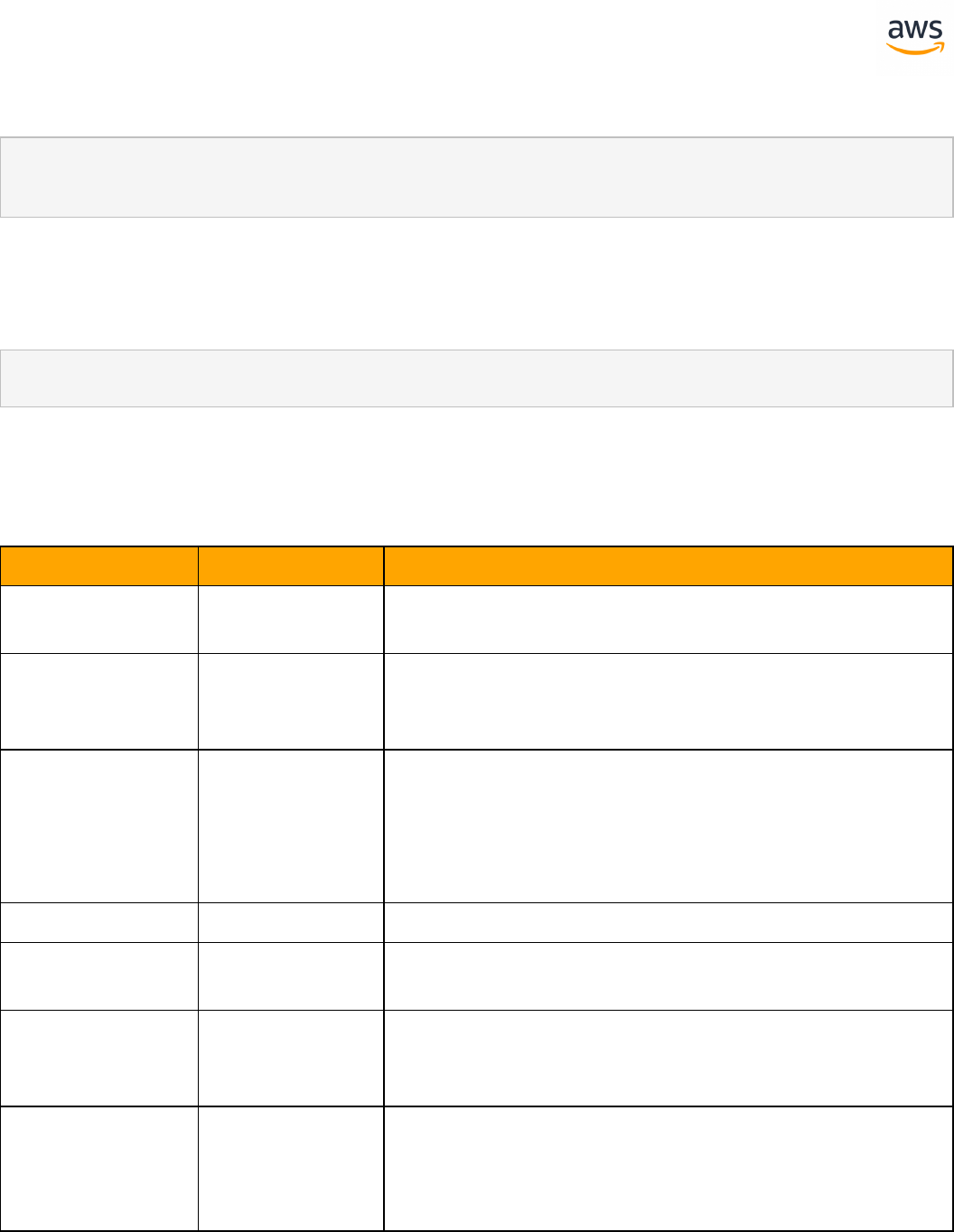

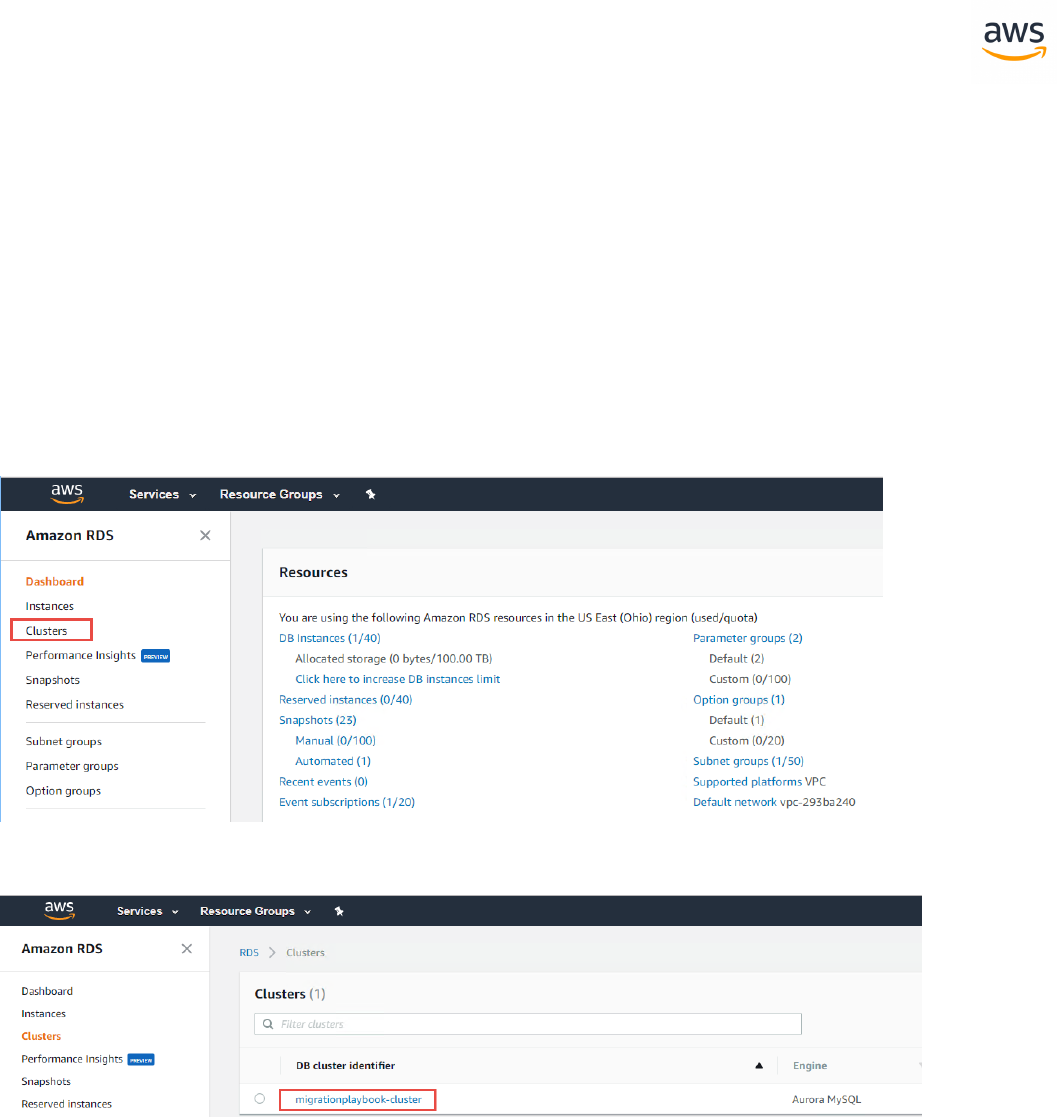

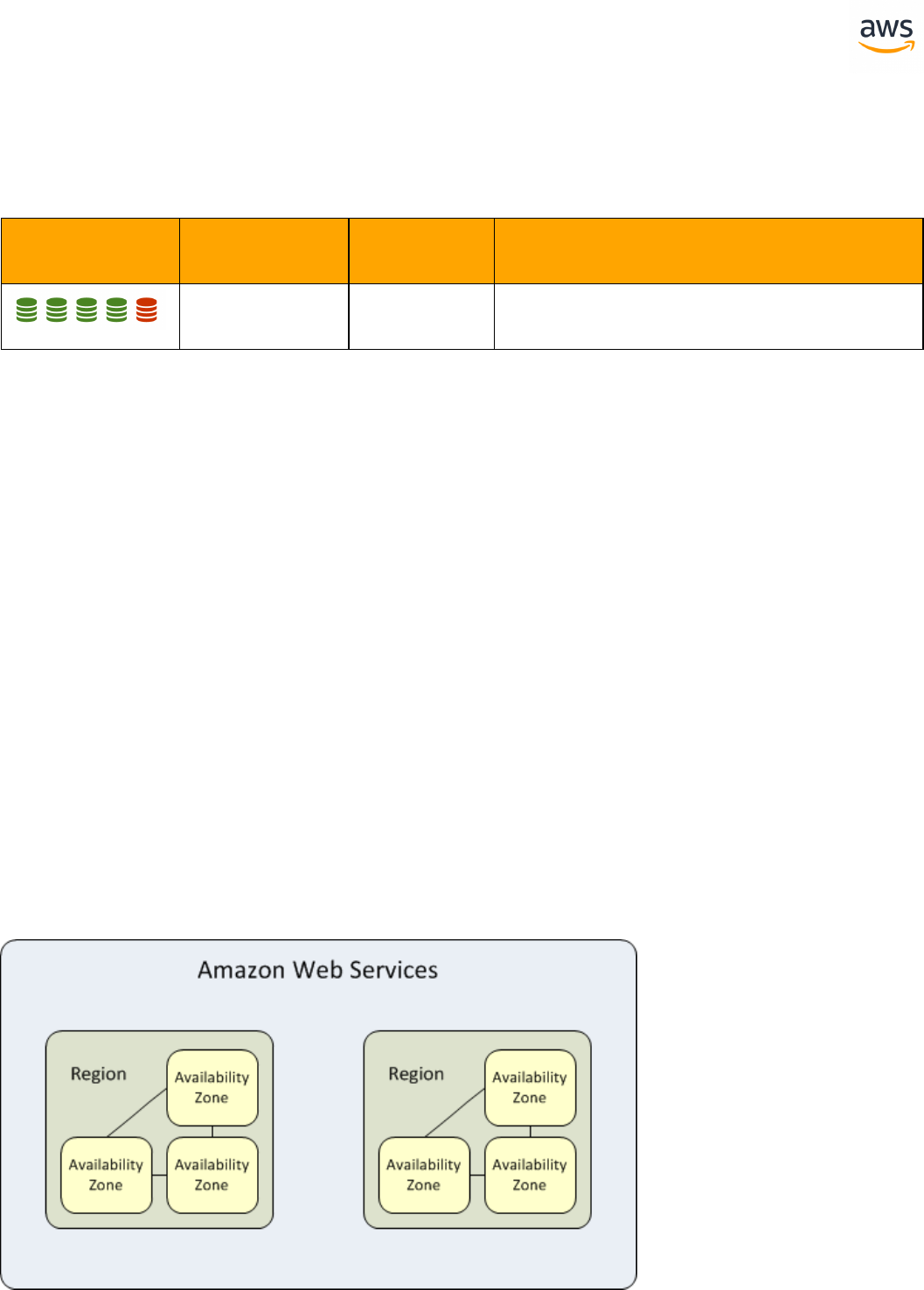

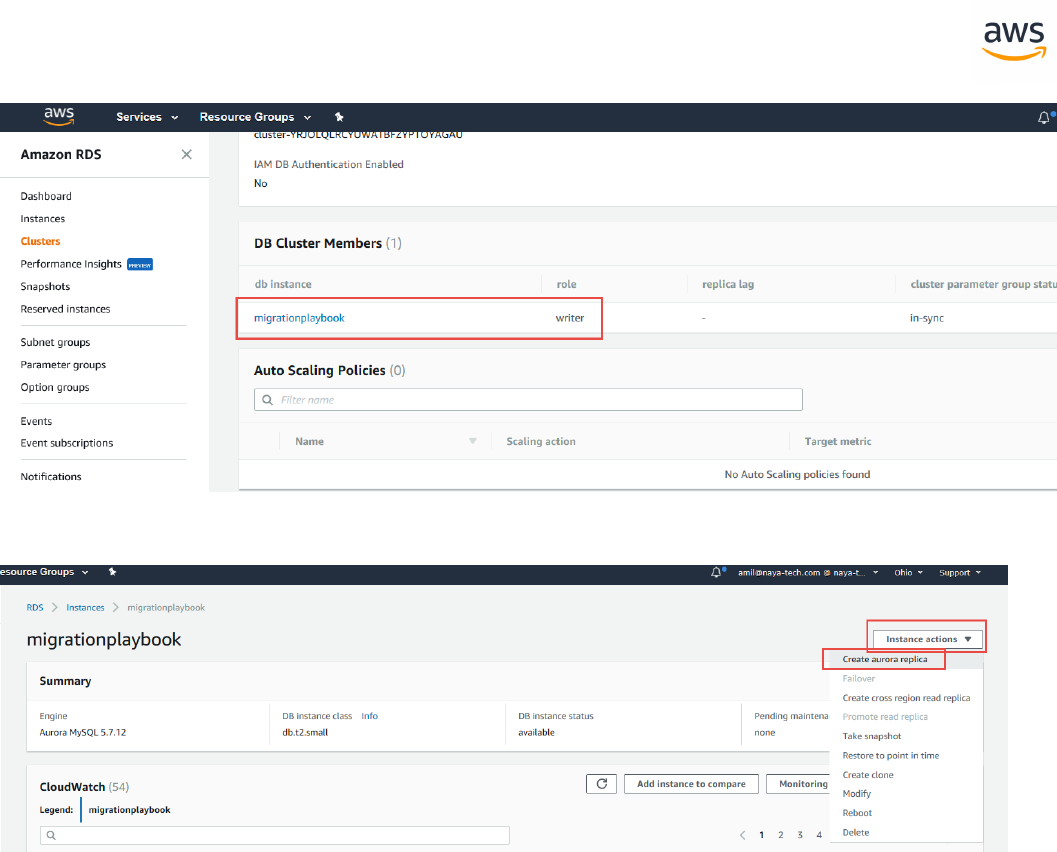

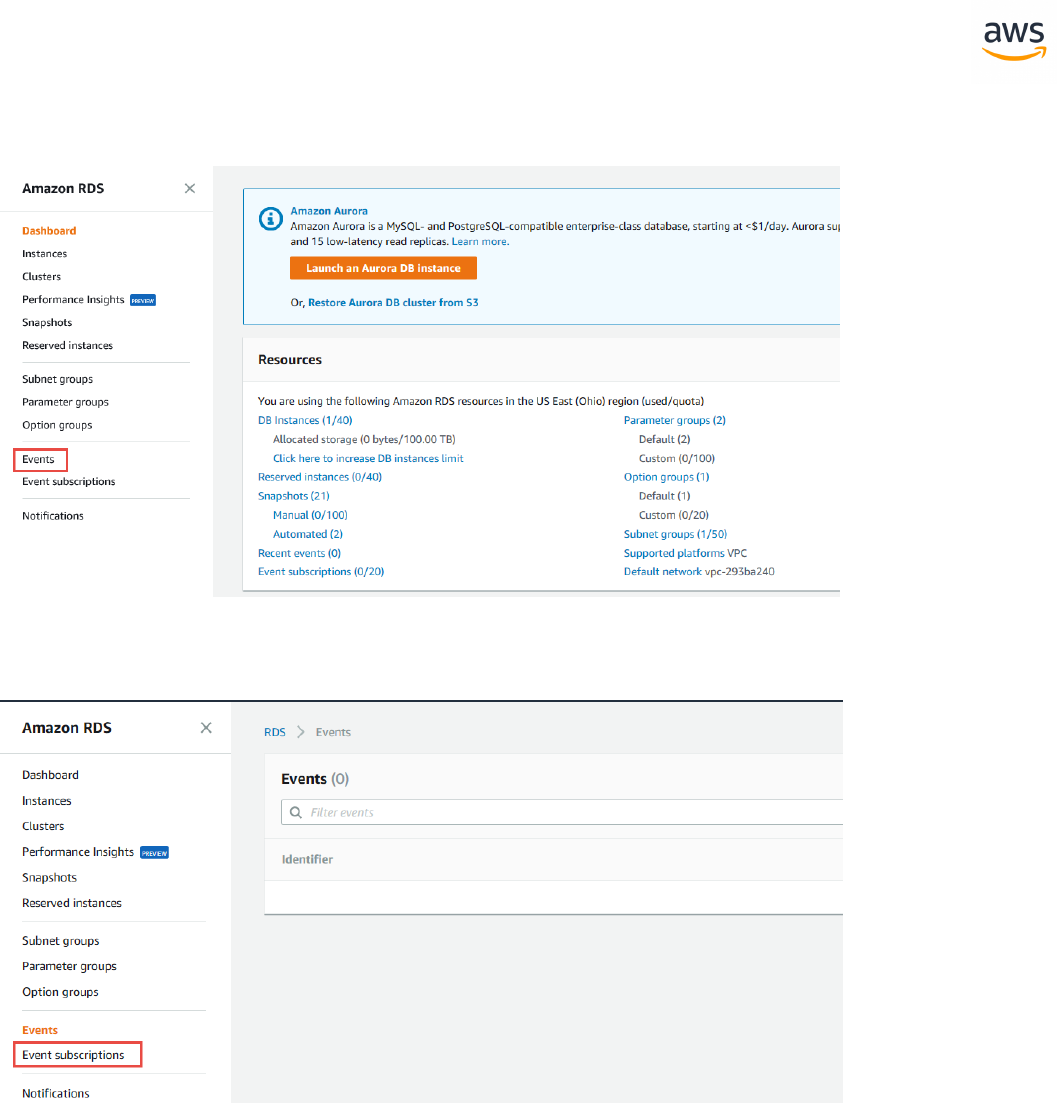

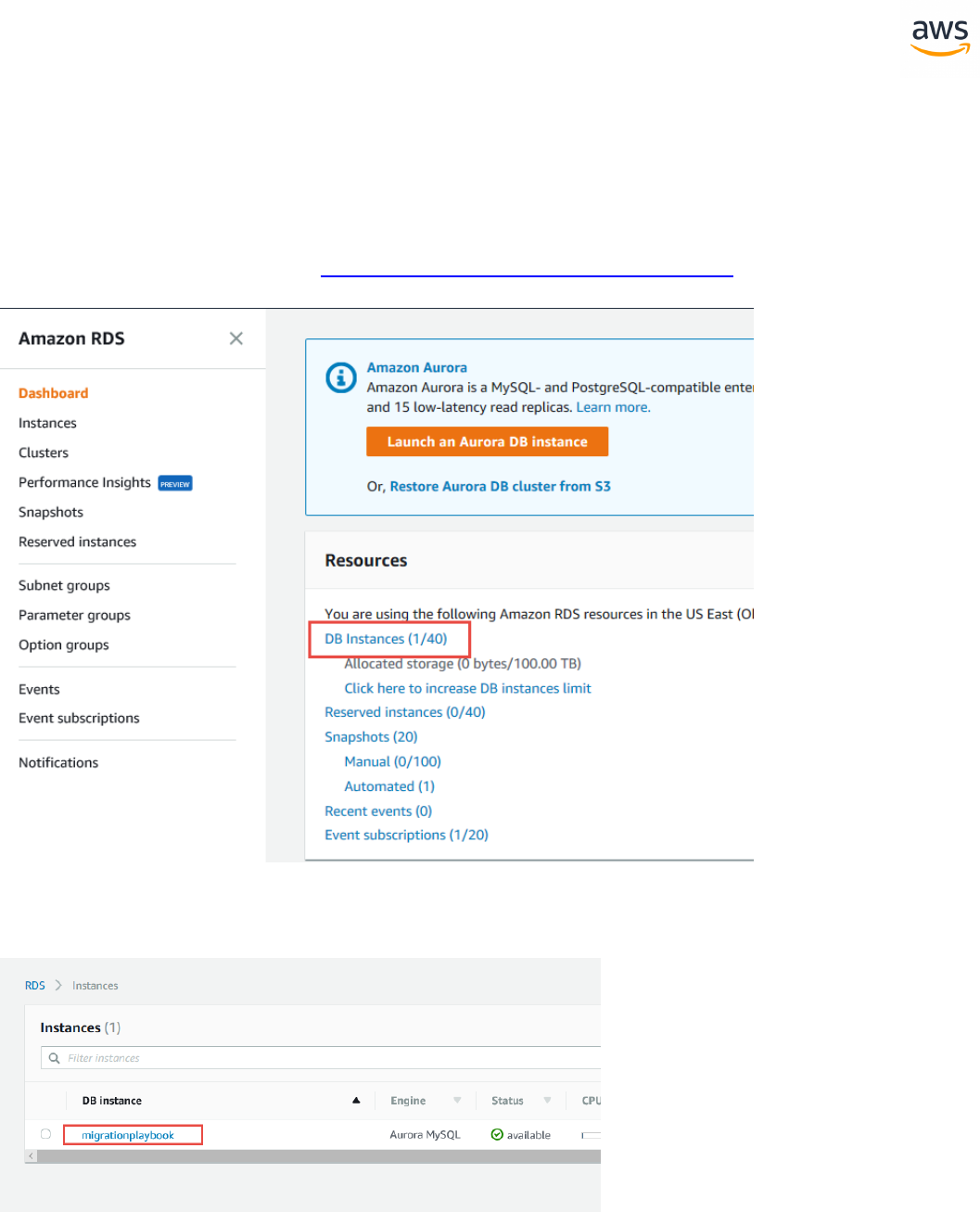

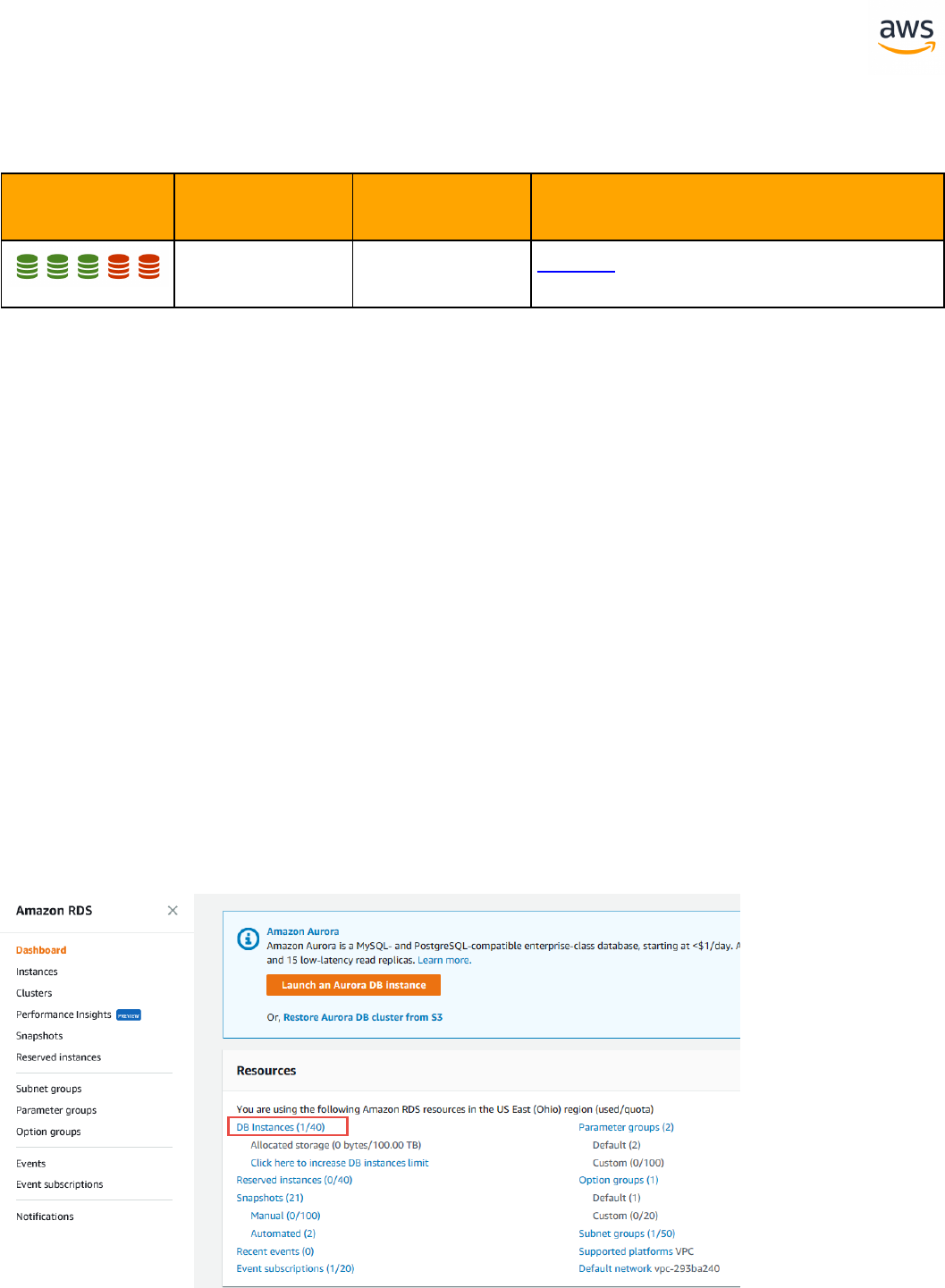

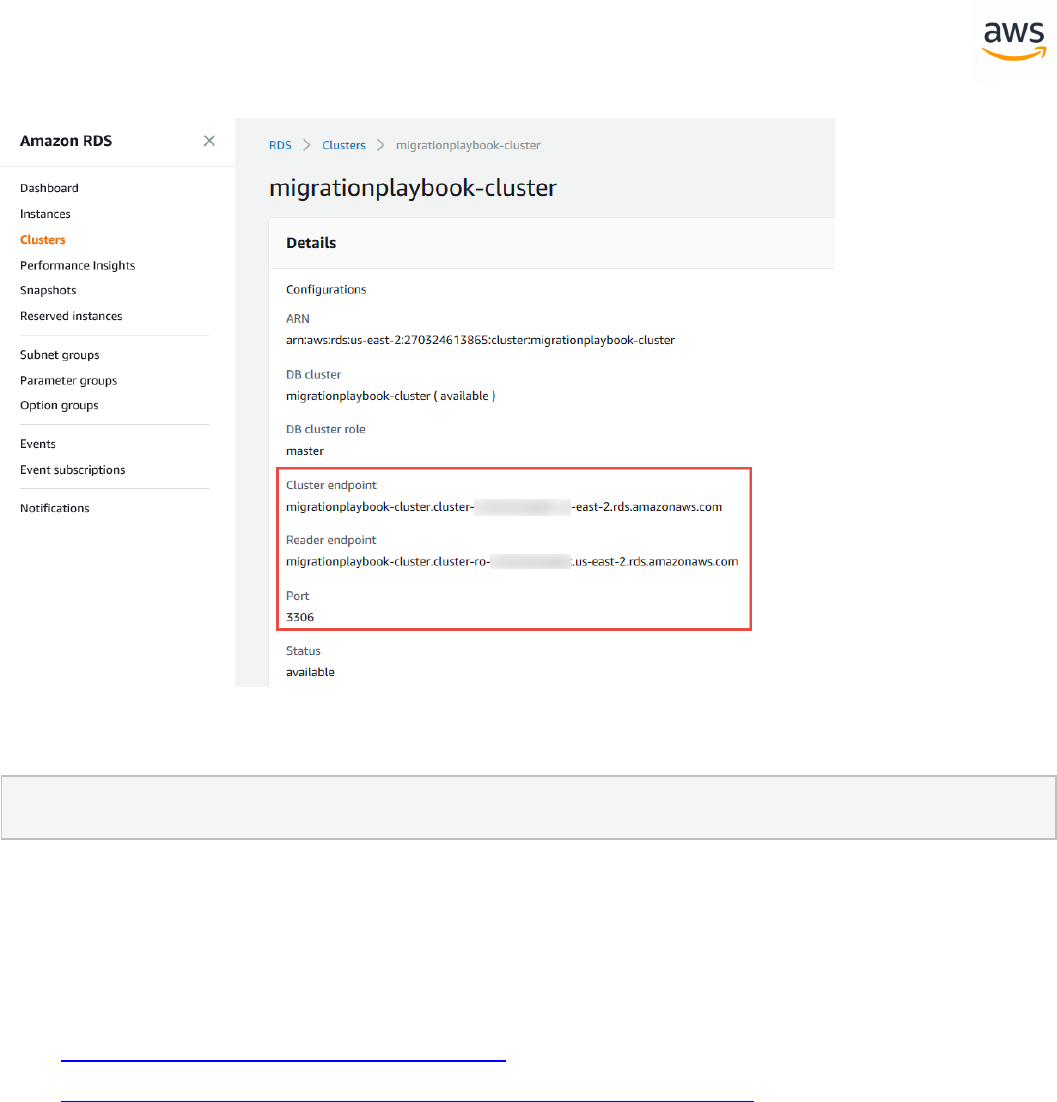

How AWS DMS Works

At its most basic level, AWS DMS is a server in the AWS Cloud that runs replication software. You create

a source and target connection to tell AWS DMS where to extract from and load to. Then, you schedule

a task that runs on this server to move your data. AWS DMS creates the tables and associated primary

keys if they don't exist on the target. You can pre-create the target tables manually if you prefer. Or you

can use AWS SCT to create some or all of the target tables, indexes, views, triggers, and so on.

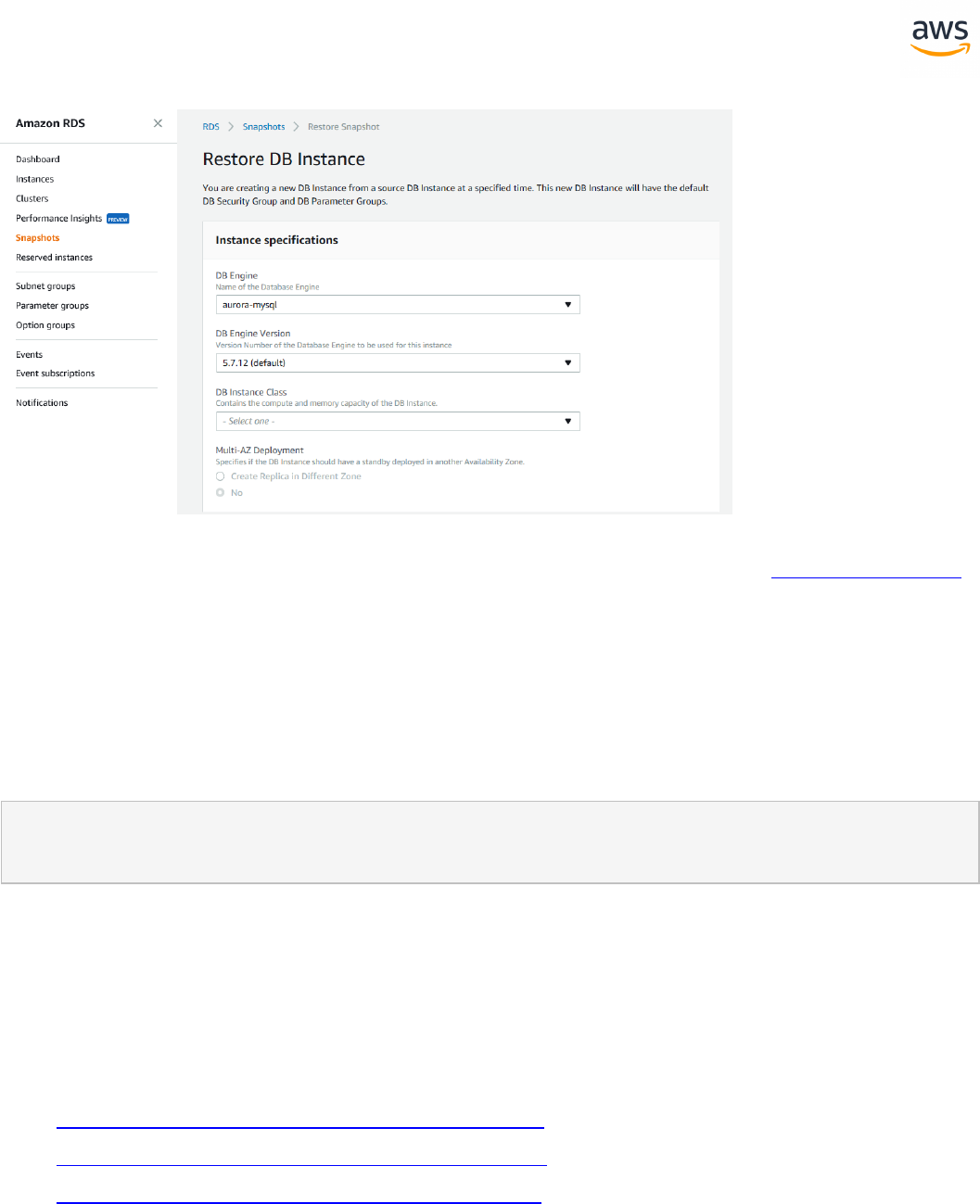

The following diagram illustrates the AWS DMS process.

- 44 -

For a complete guide with a step-by-step walkthrough including all the latest notes for migrating SQL

Server to Aurora MySQL (which is very similar to migrate from SQL Server to Aurora PostgerSQL) with

DMS, see

https://docs.aws.amazon.com/dms/latest/sbs/CHAP_SQLServer2Aurora.html

For more information about DMS, see:

l https://docs.aws.amazon.com/dms/latest/userguide/Welcome.html

l https://docs.aws.amazon.com/dms/latest/userguide/CHAP_BestPractices.html

- 45 -

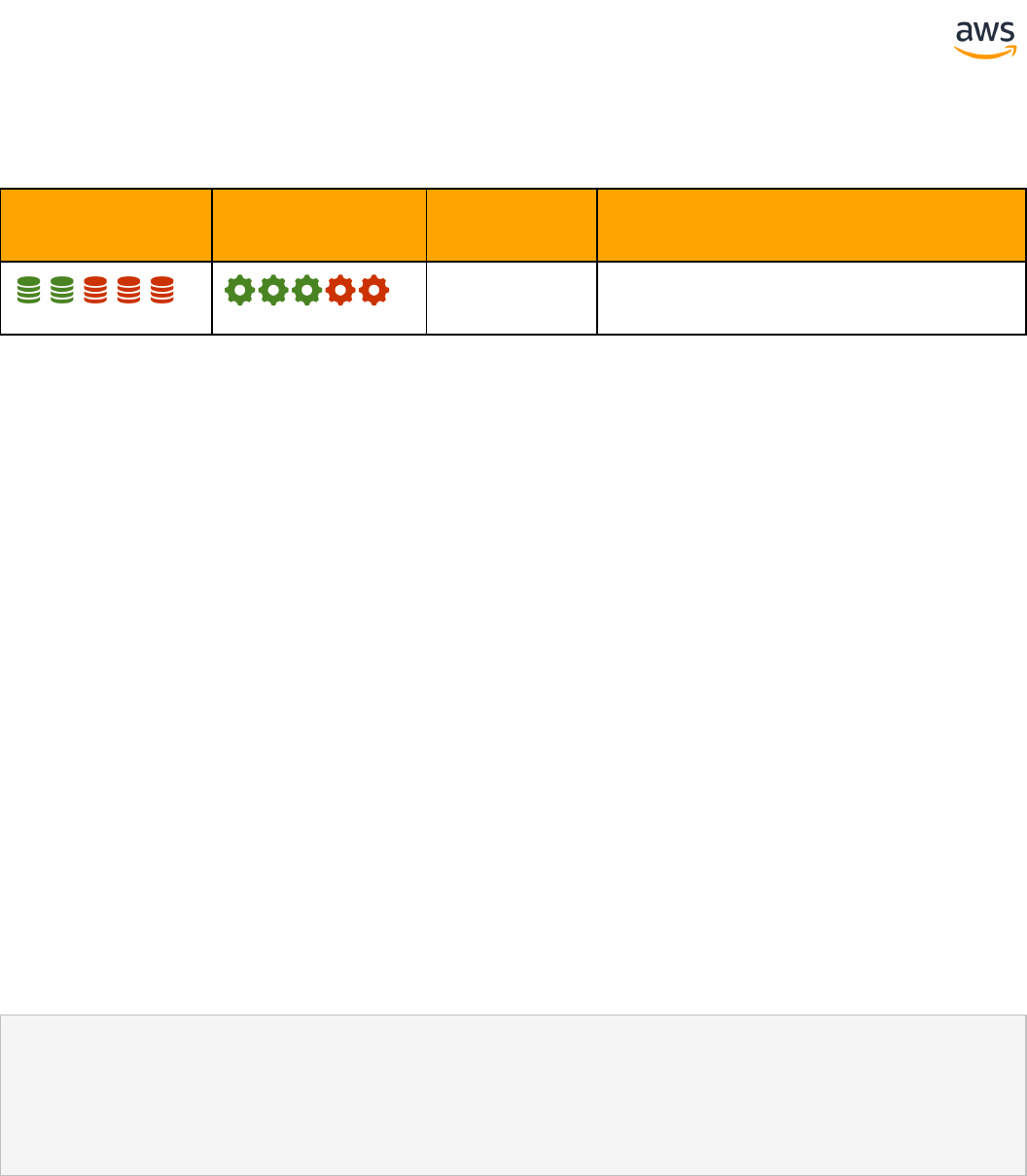

ANSI SQL

- 46 -

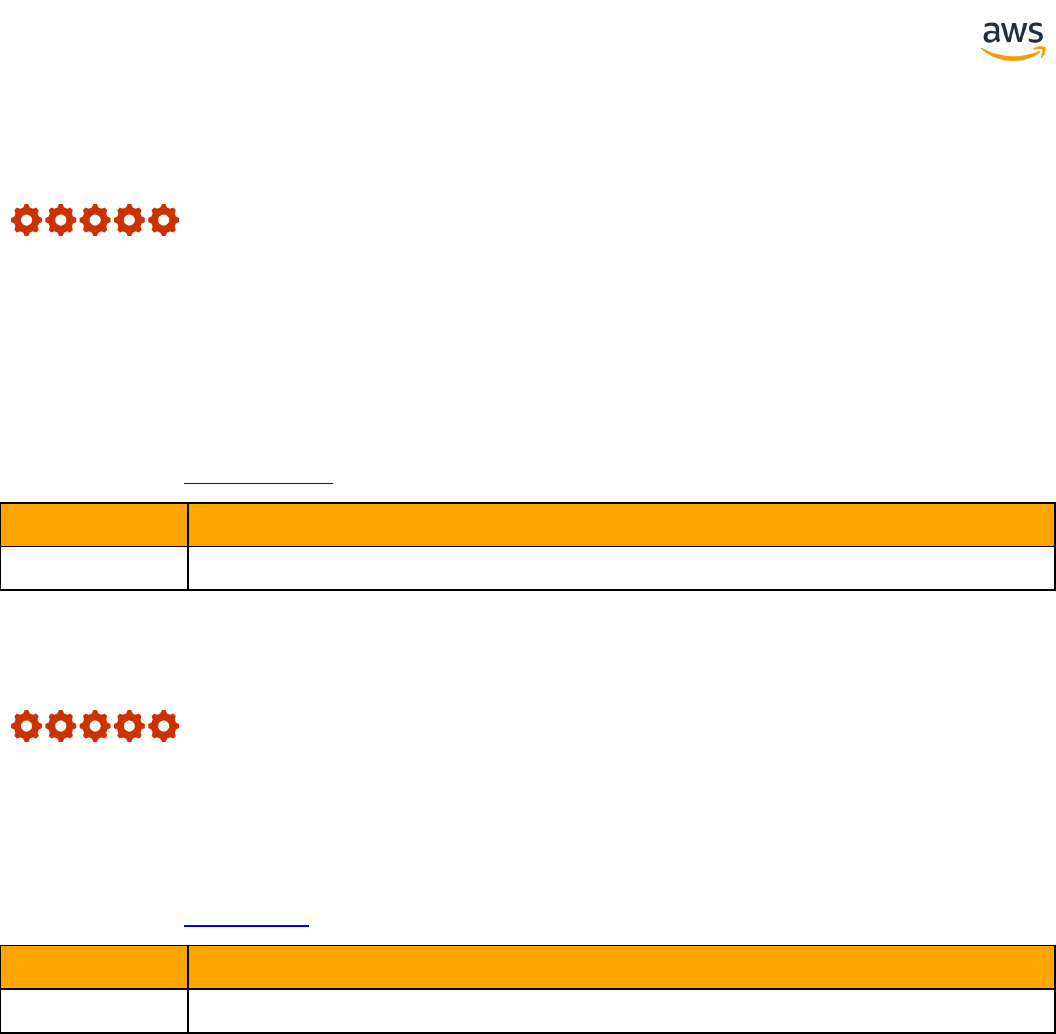

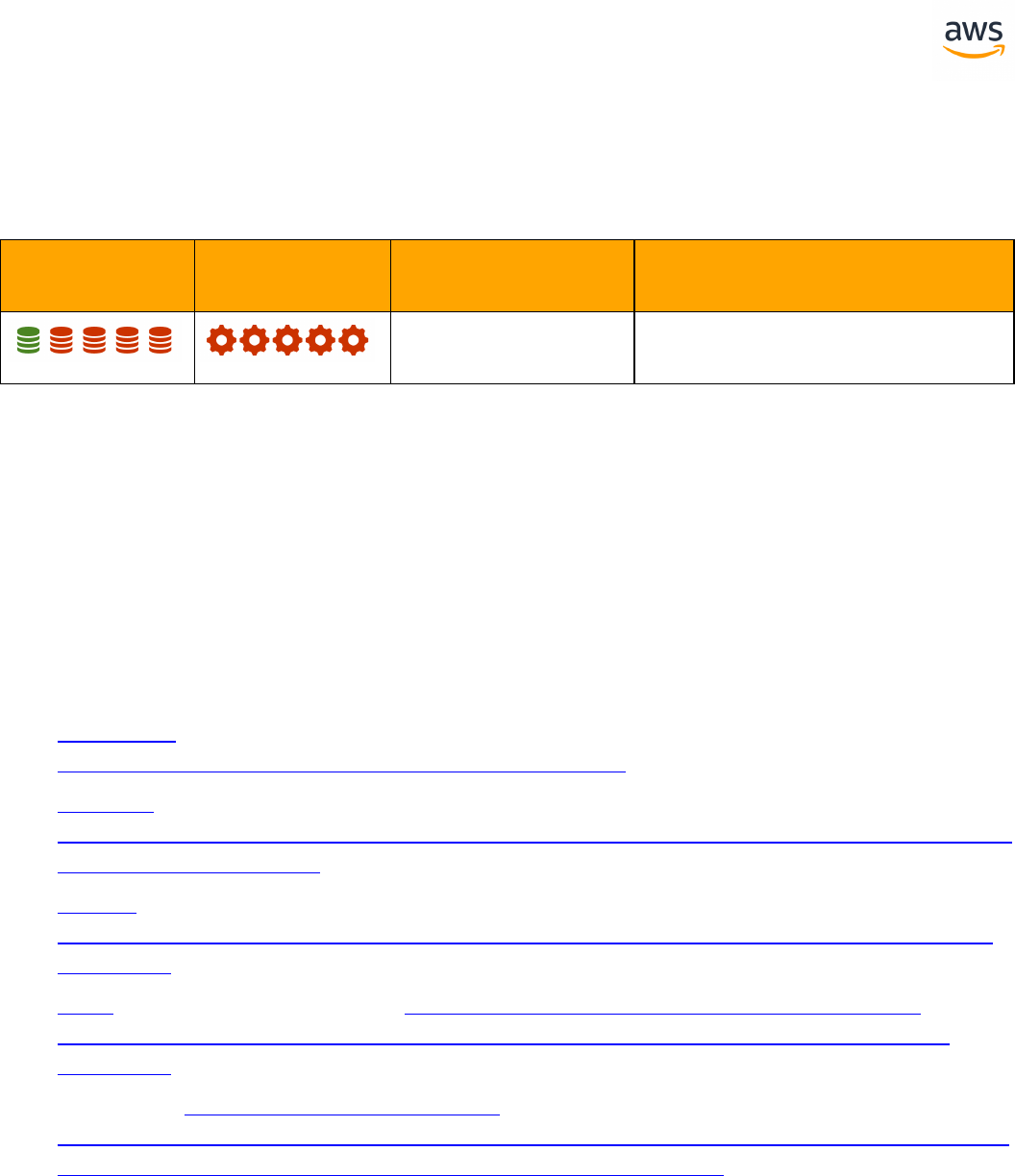

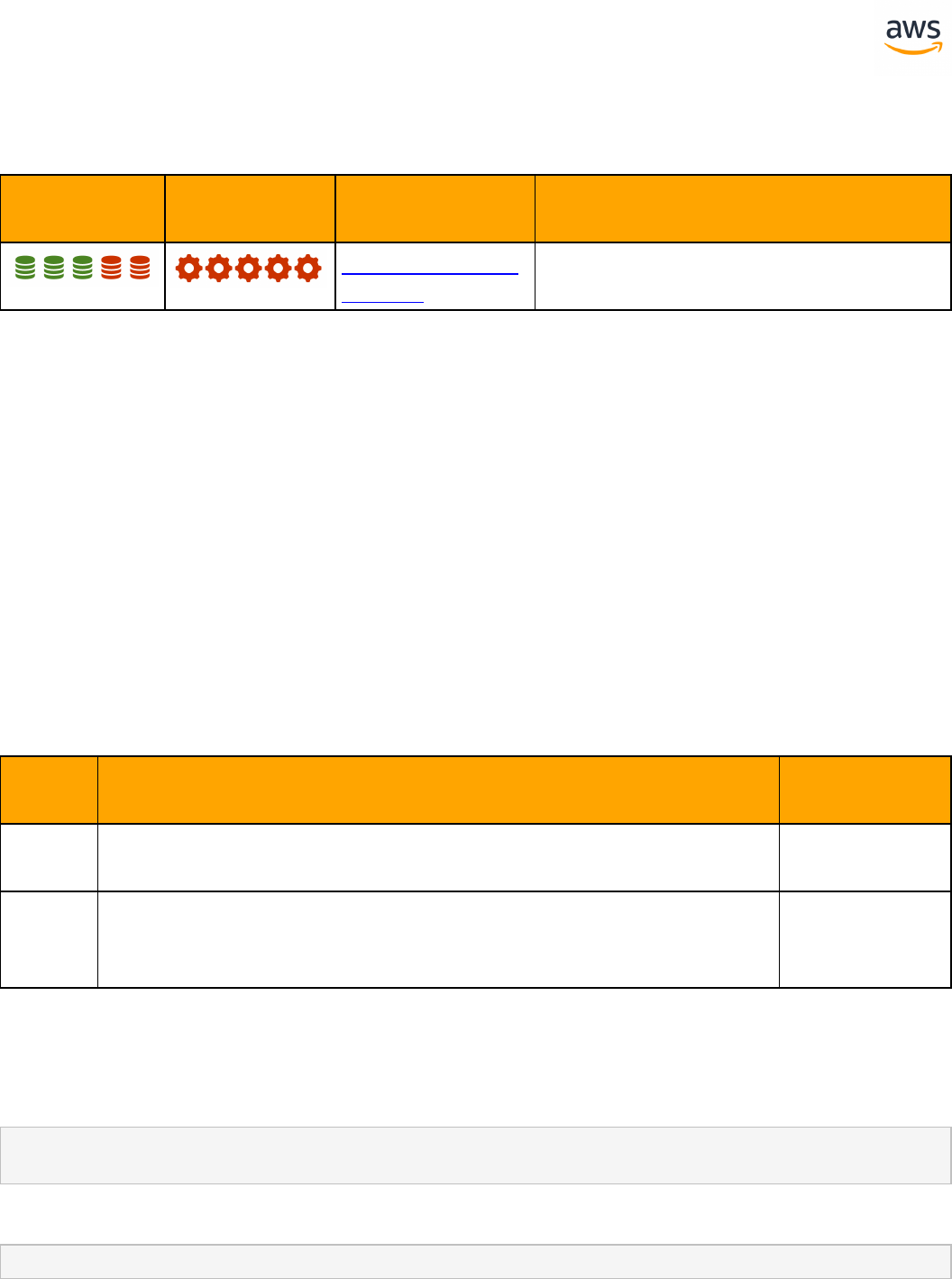

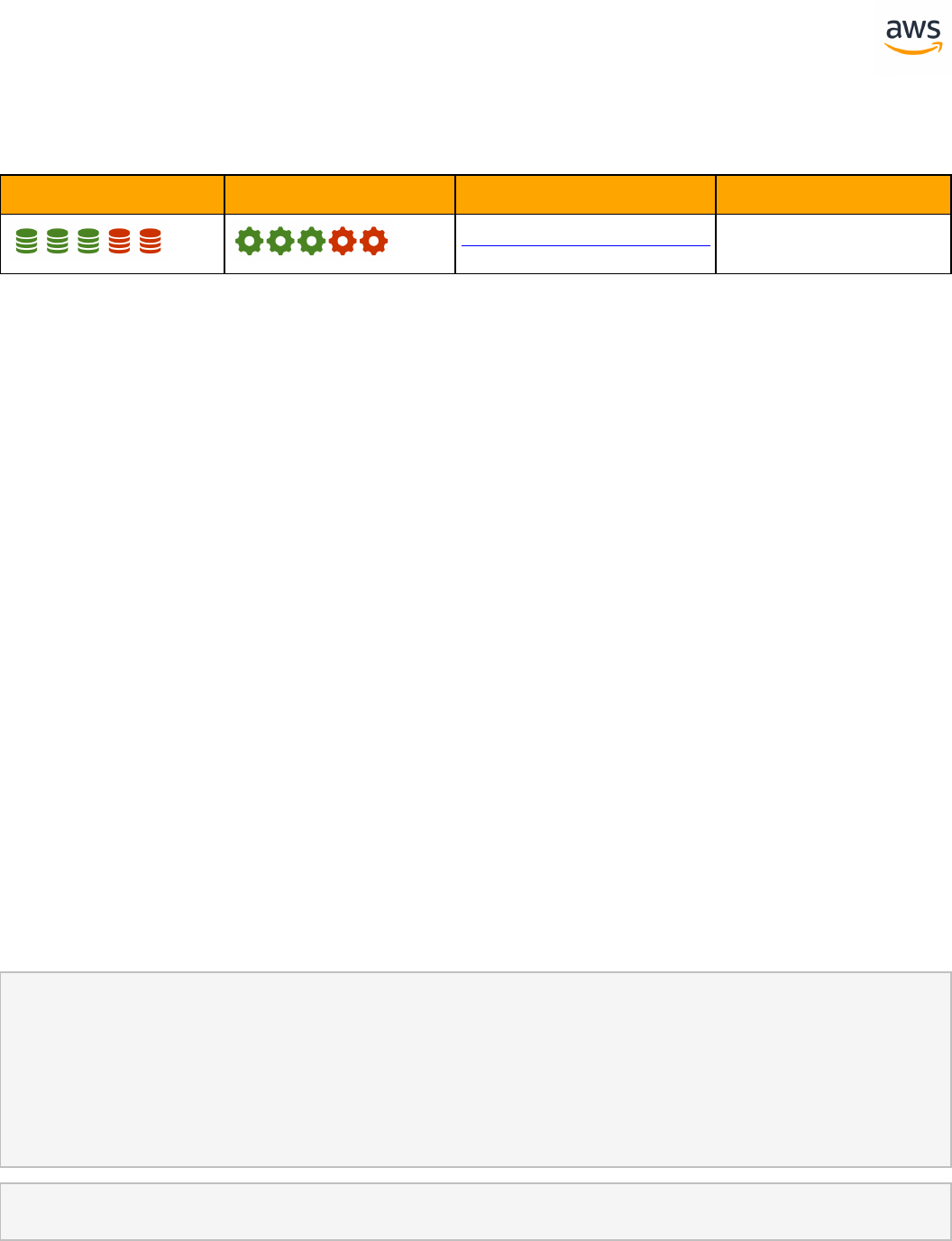

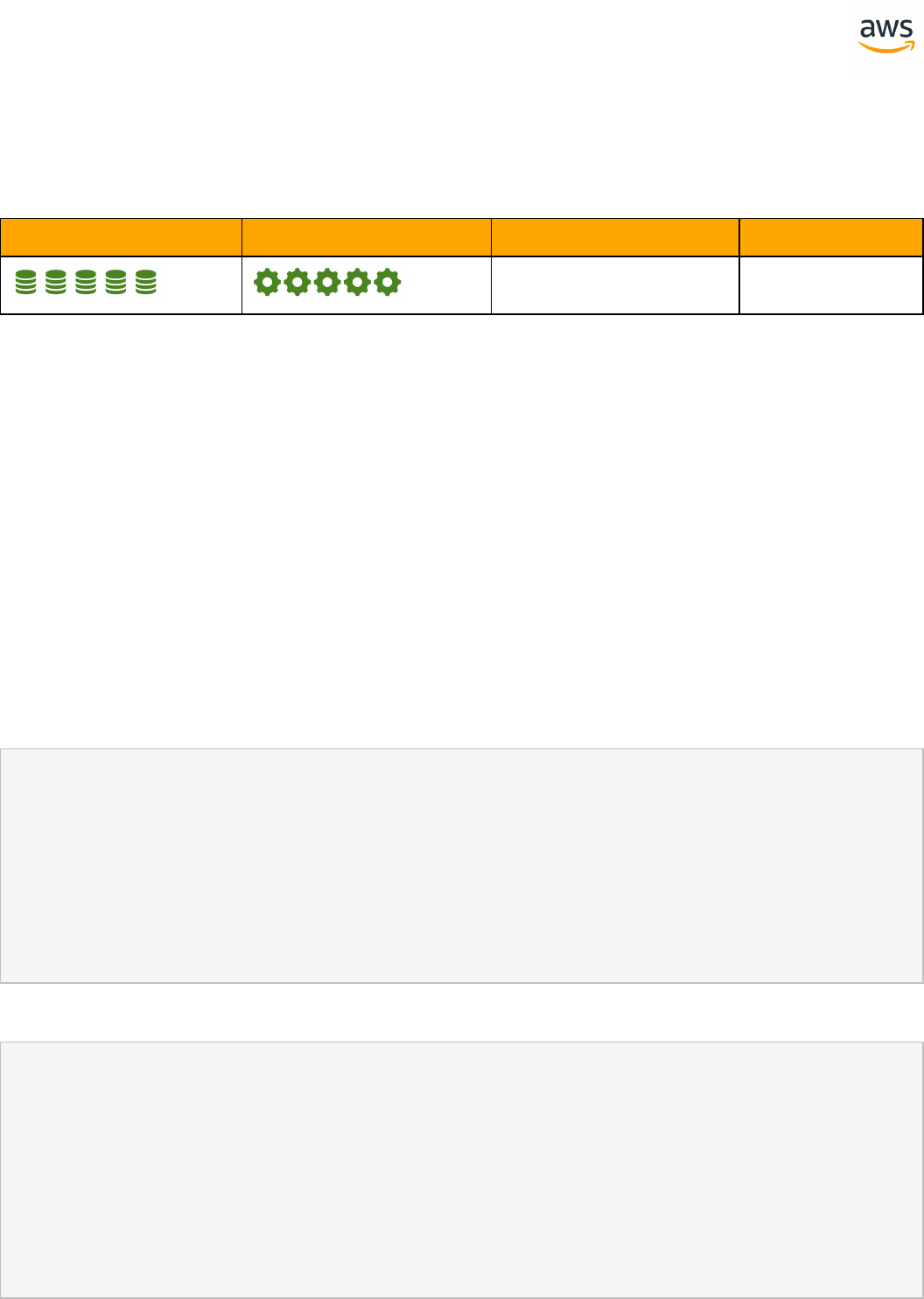

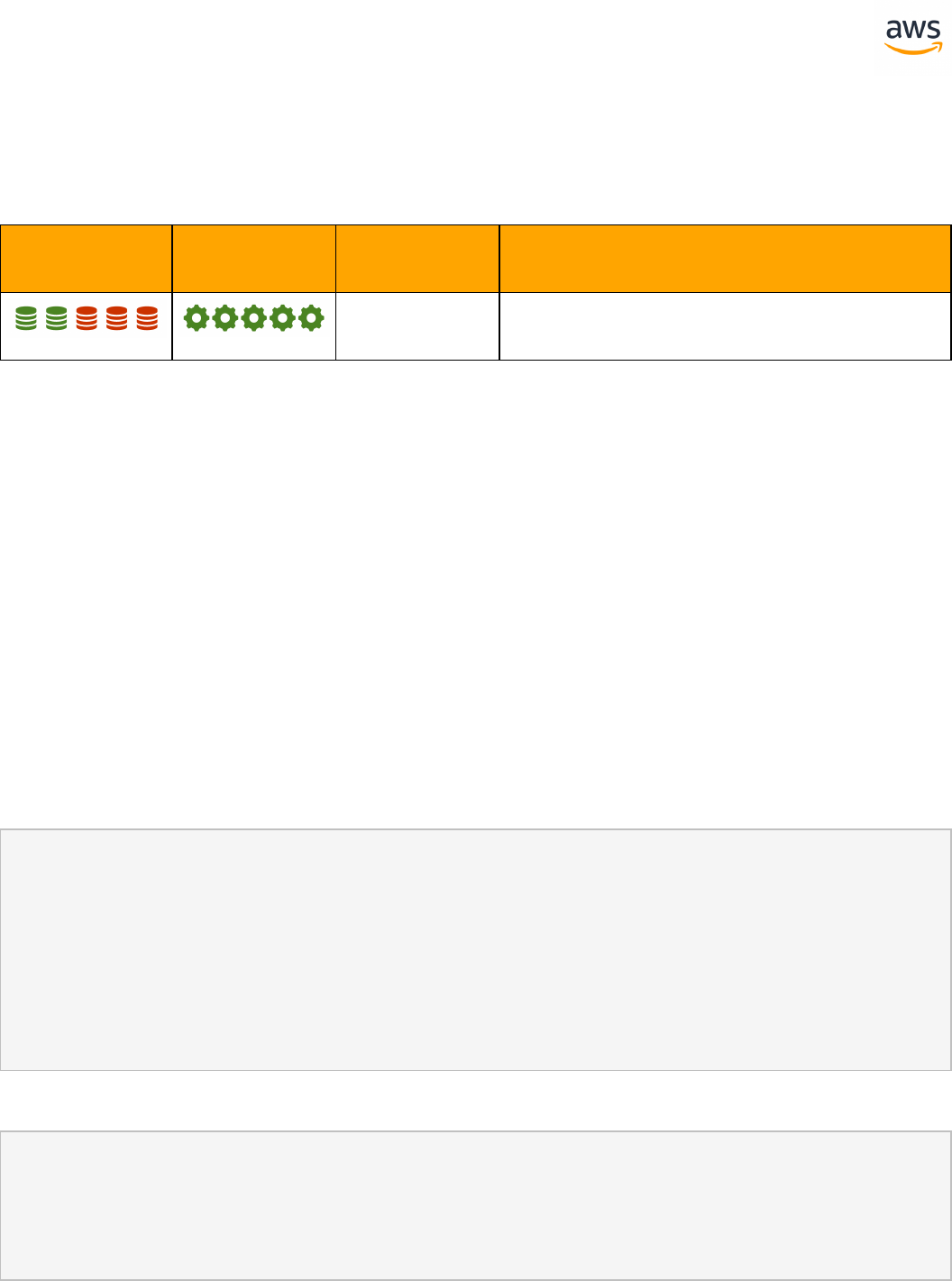

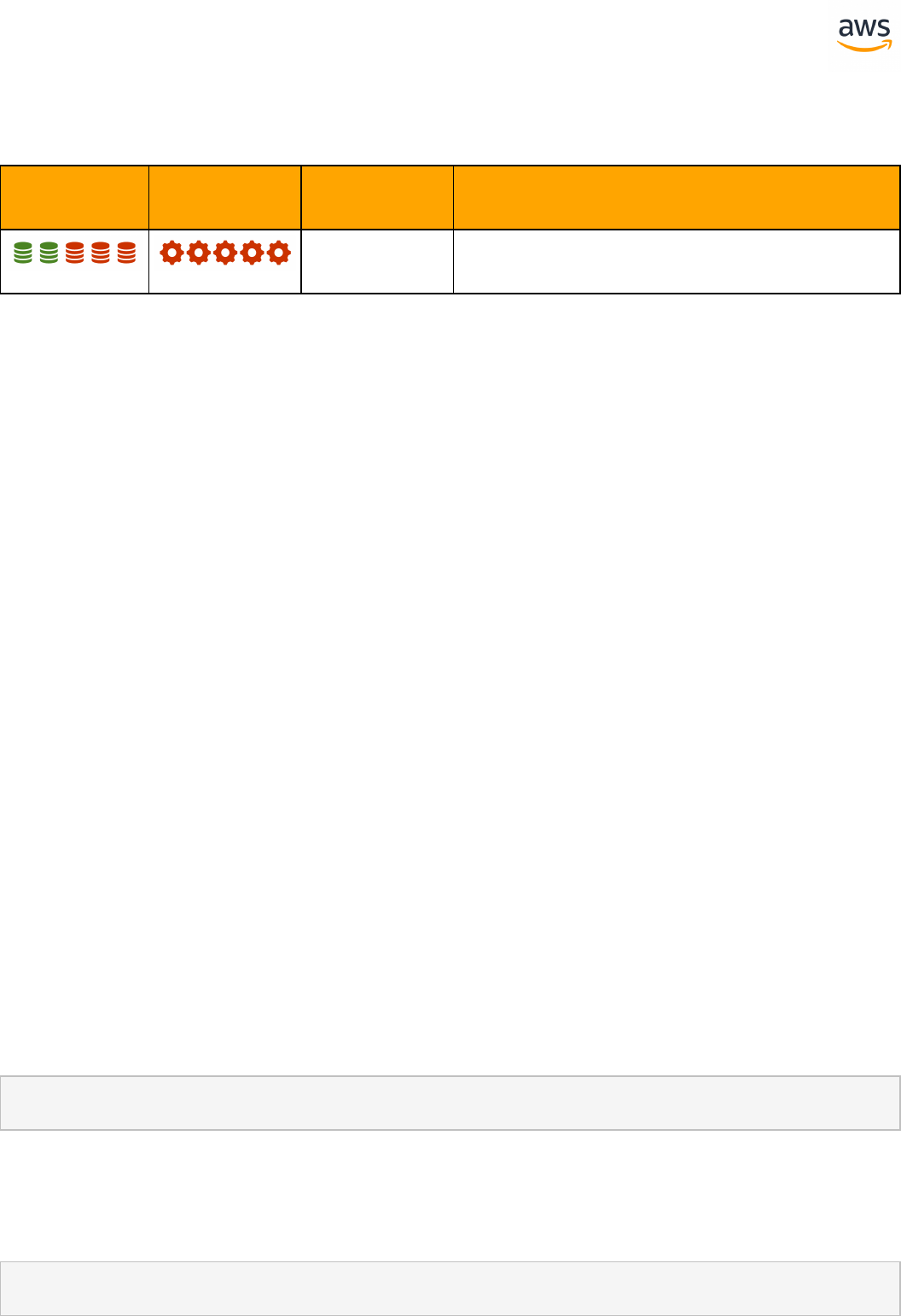

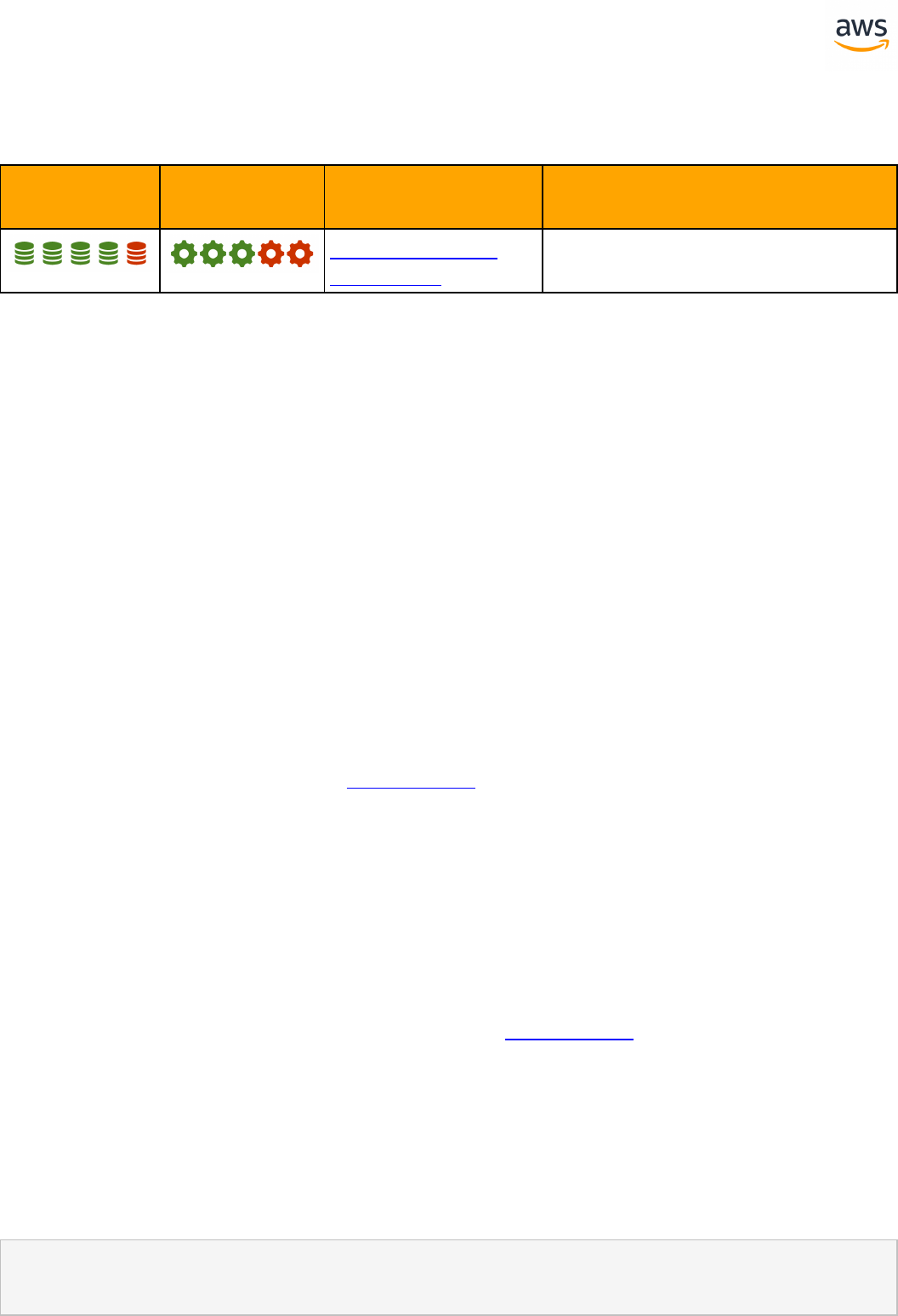

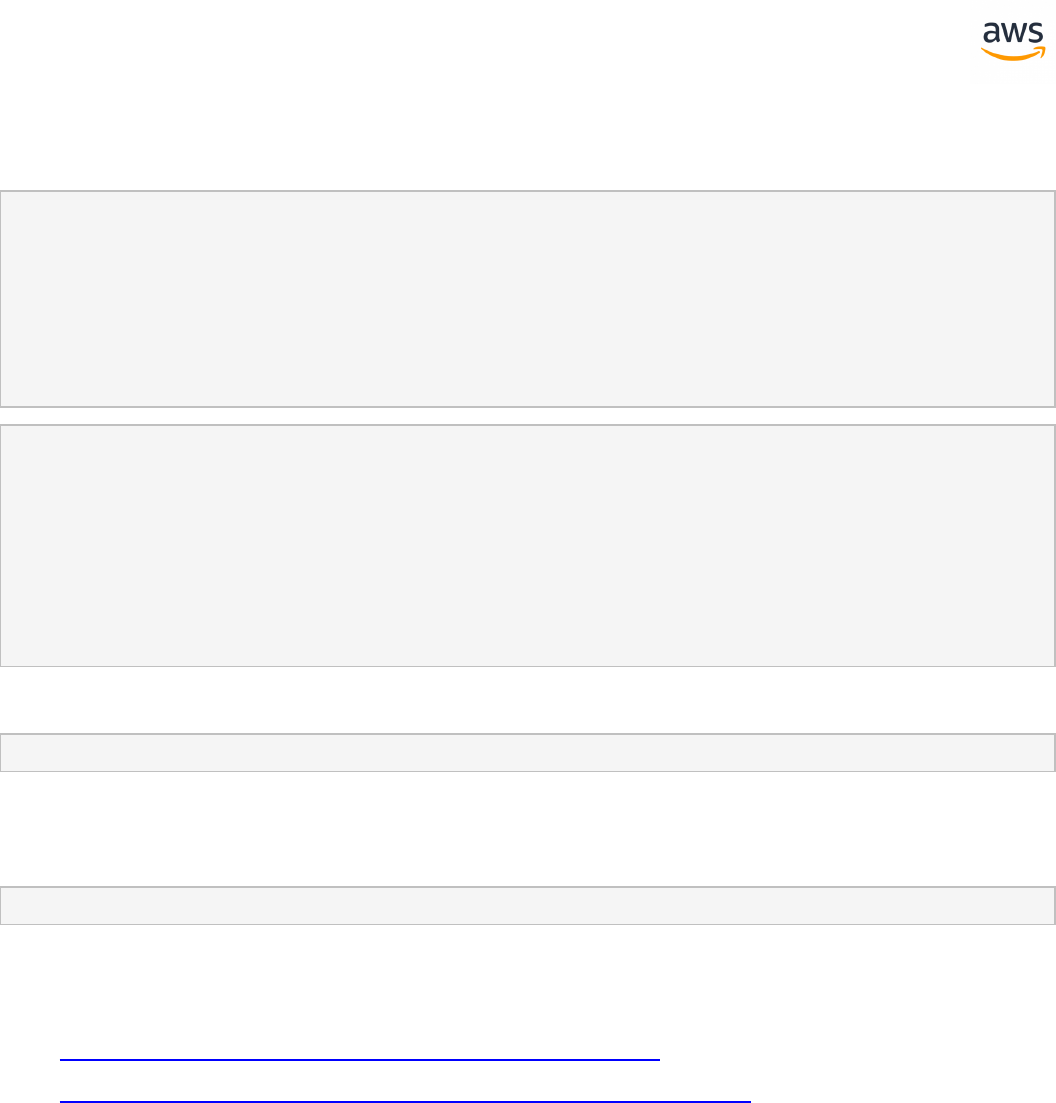

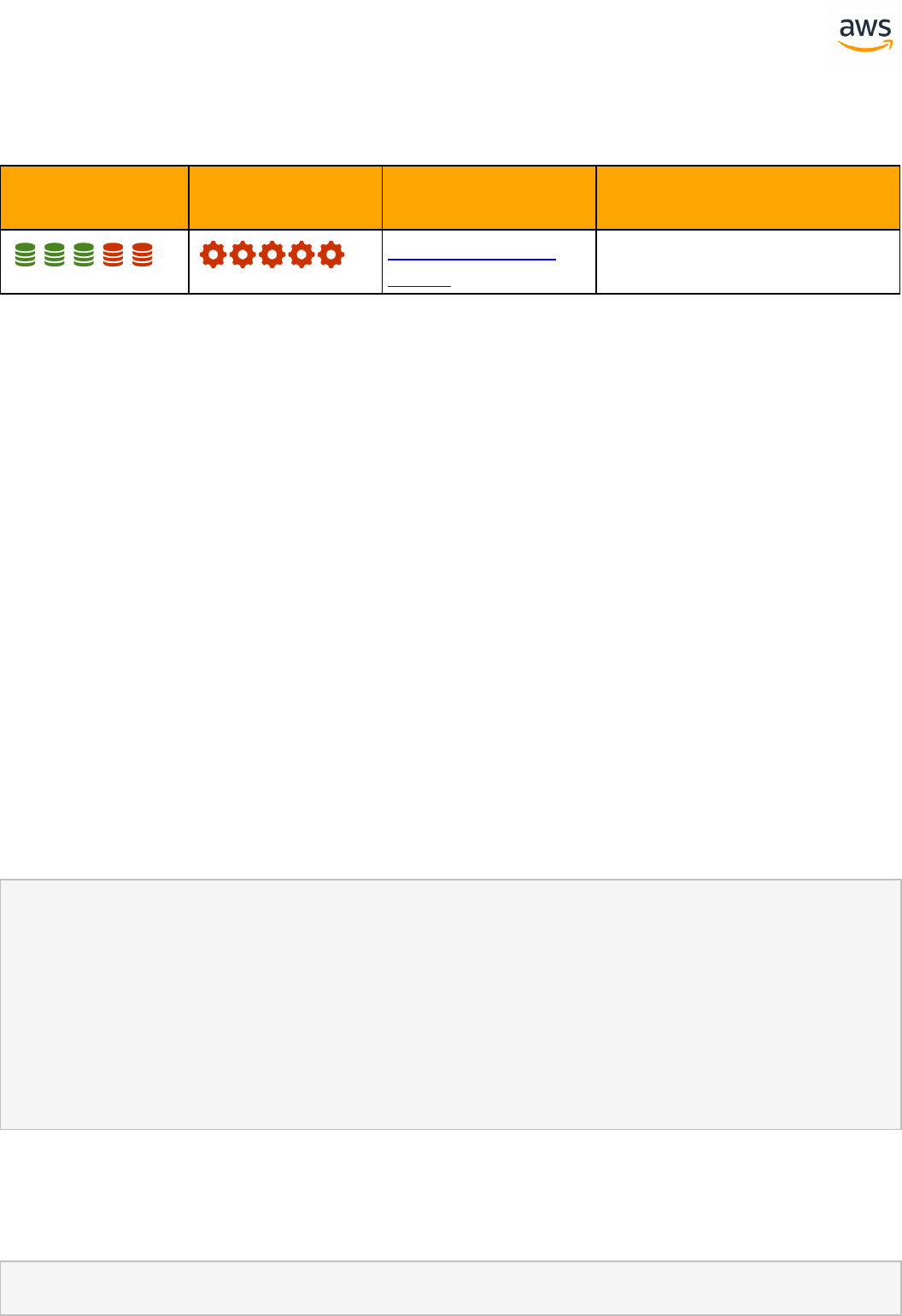

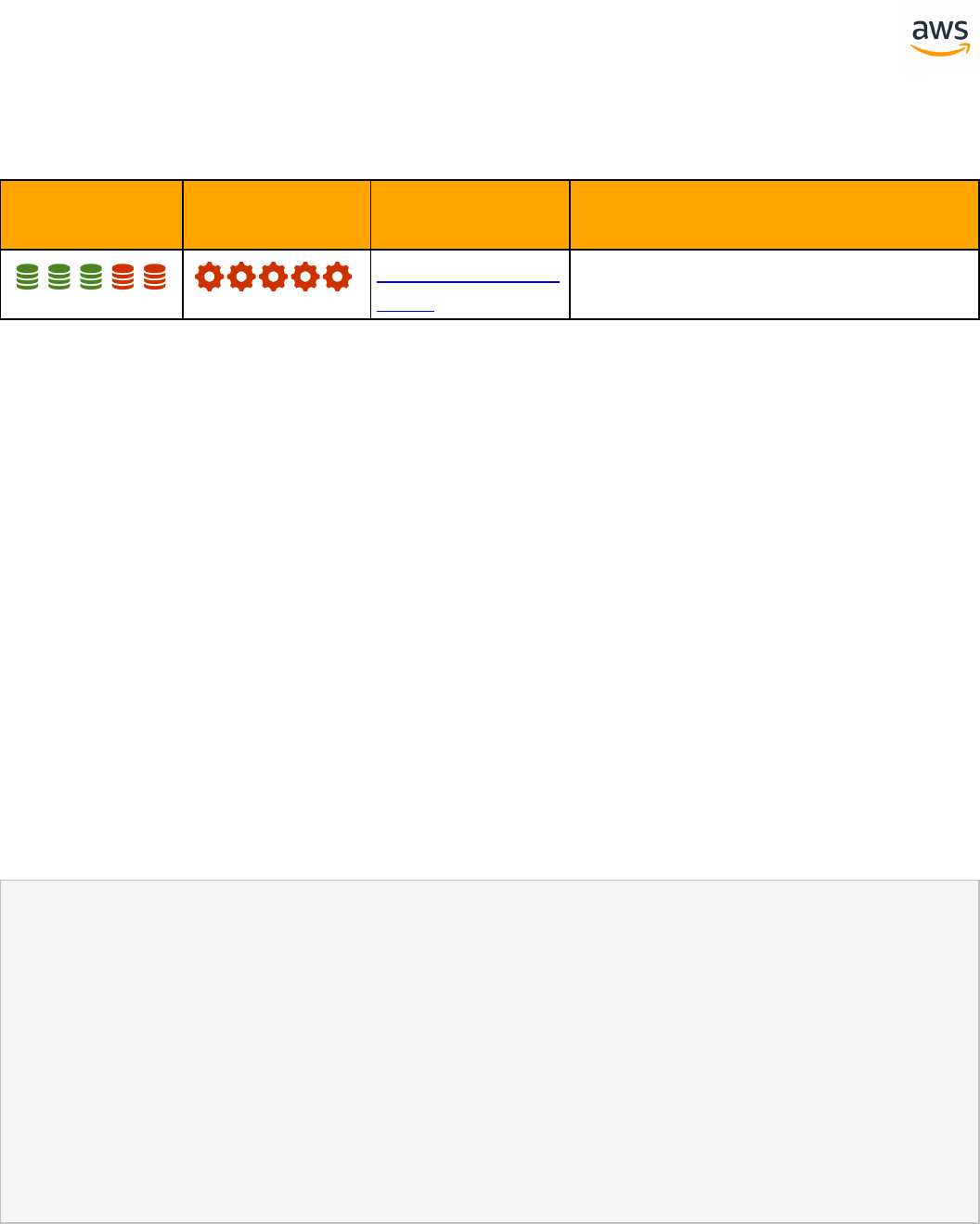

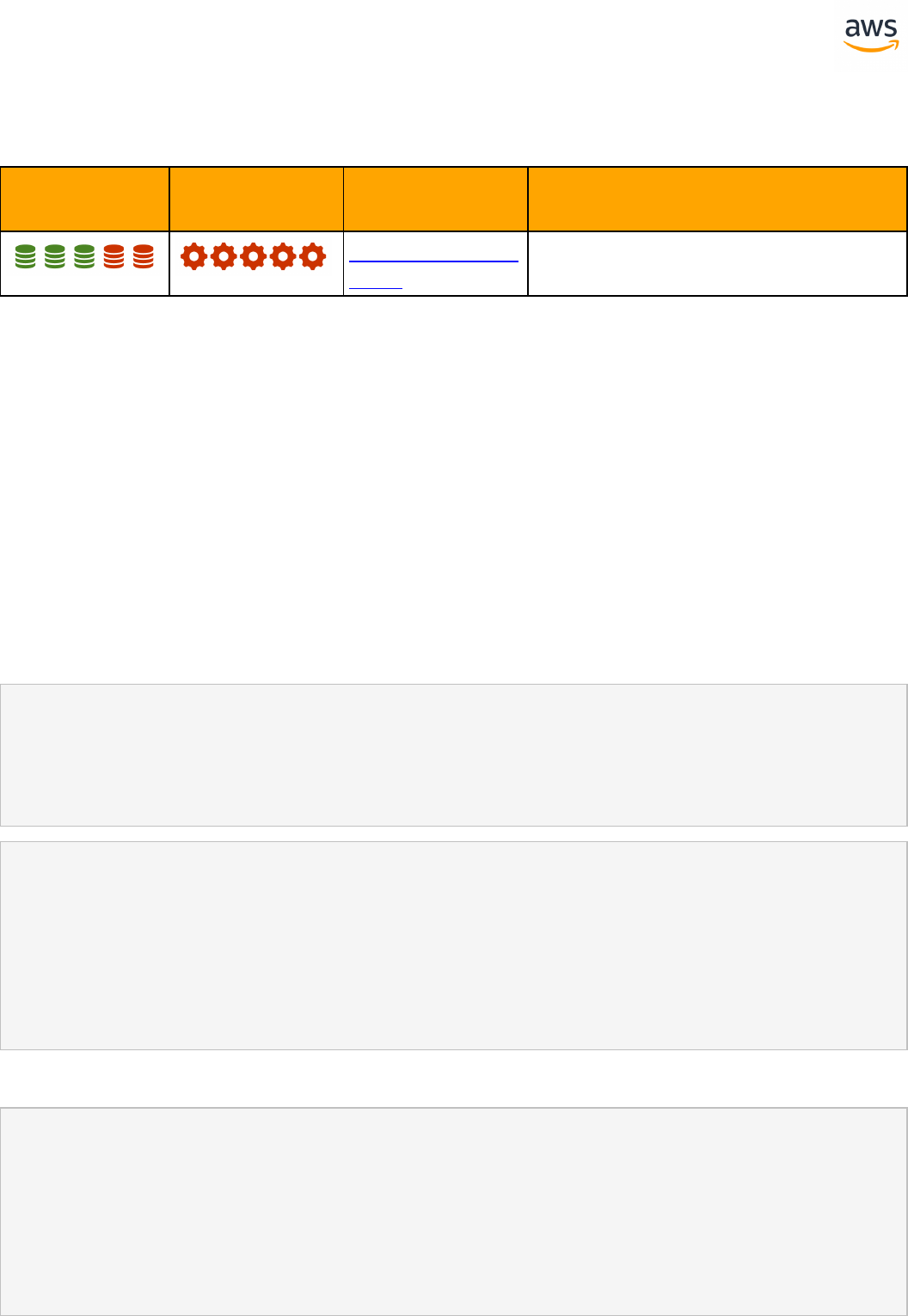

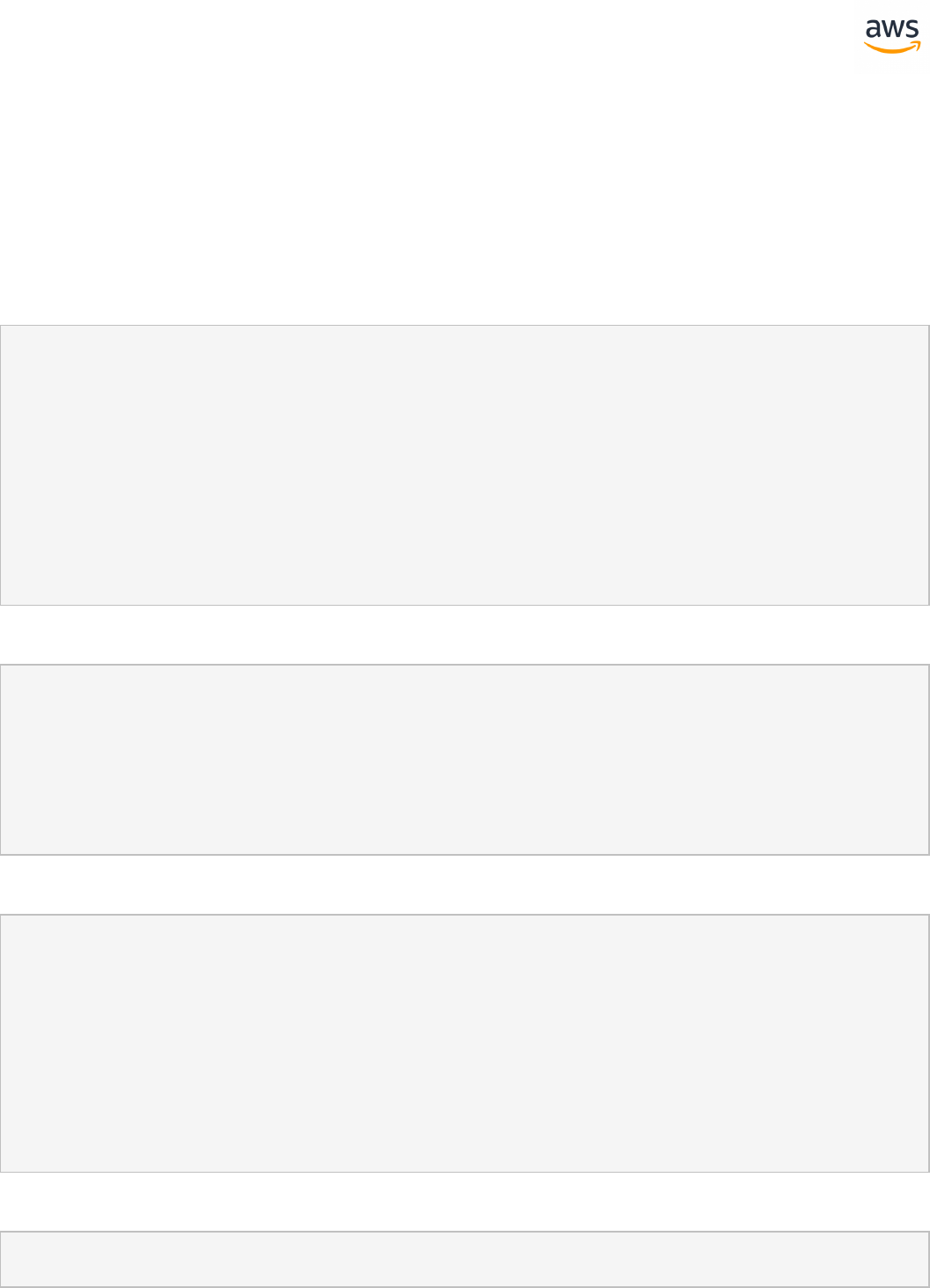

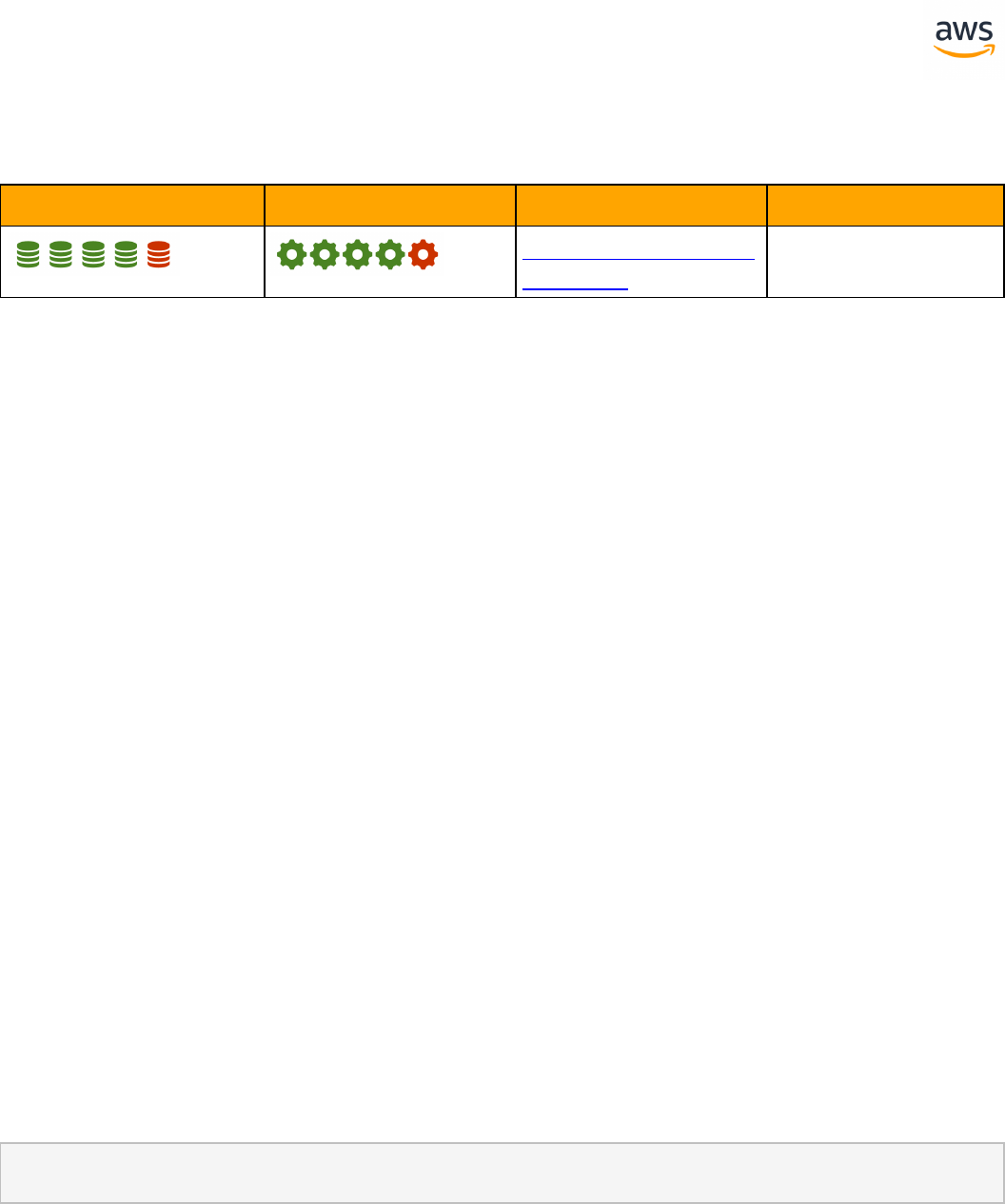

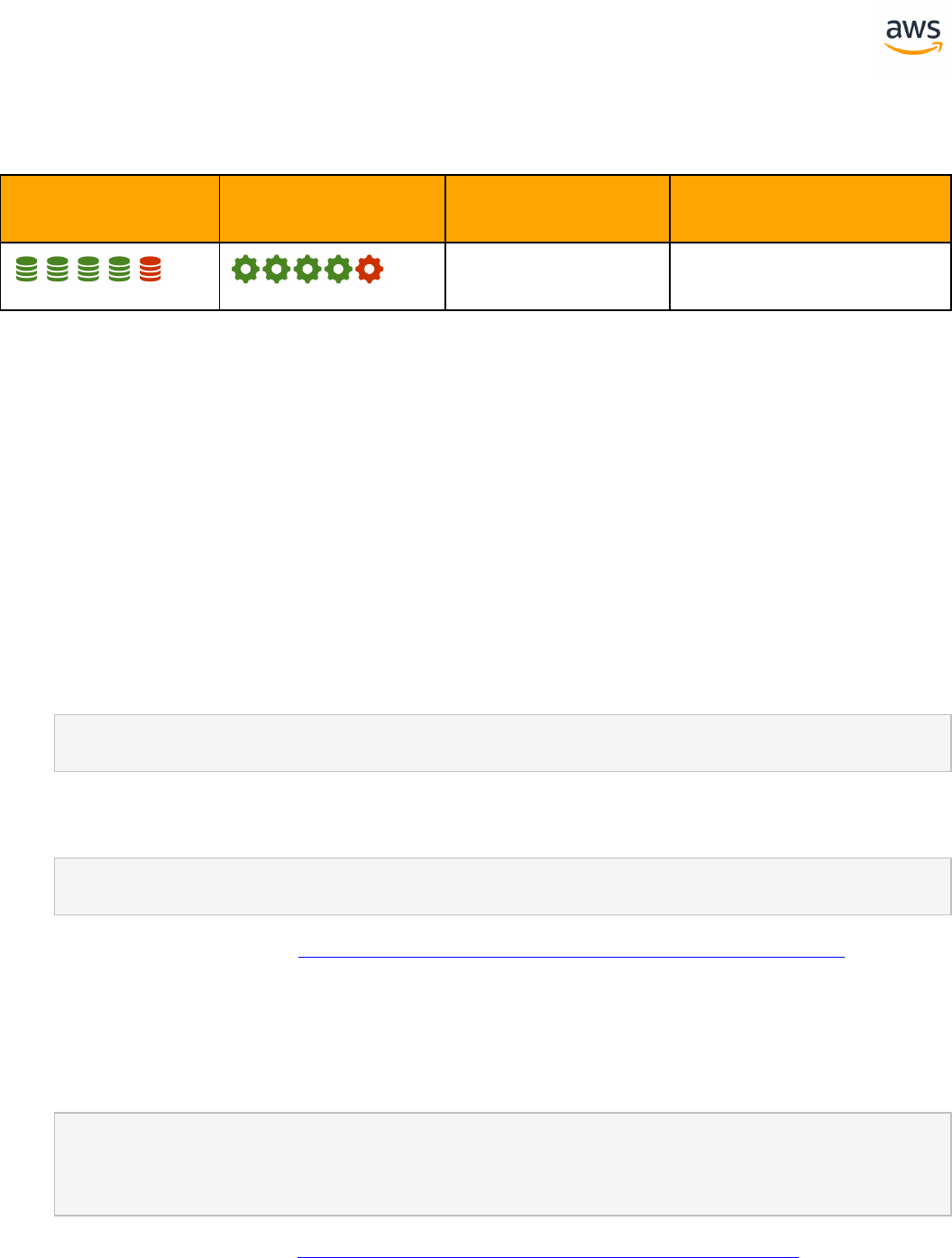

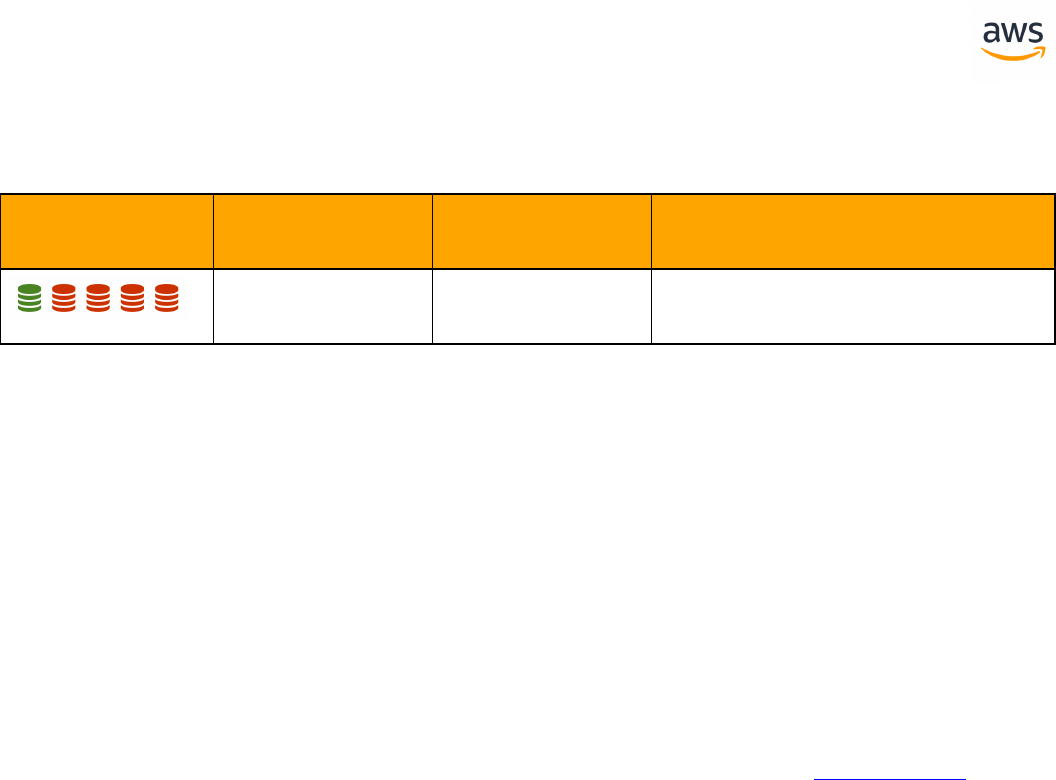

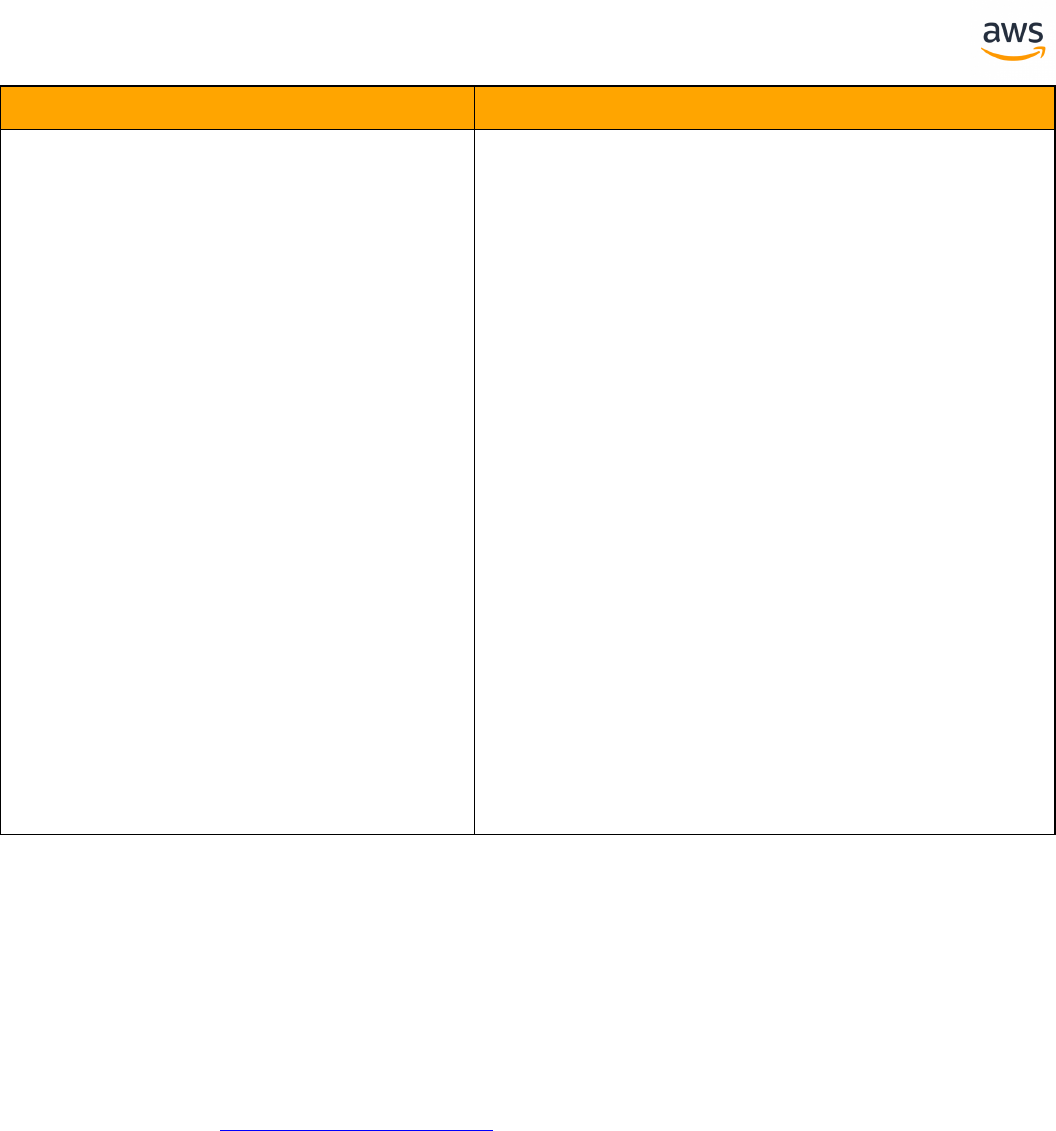

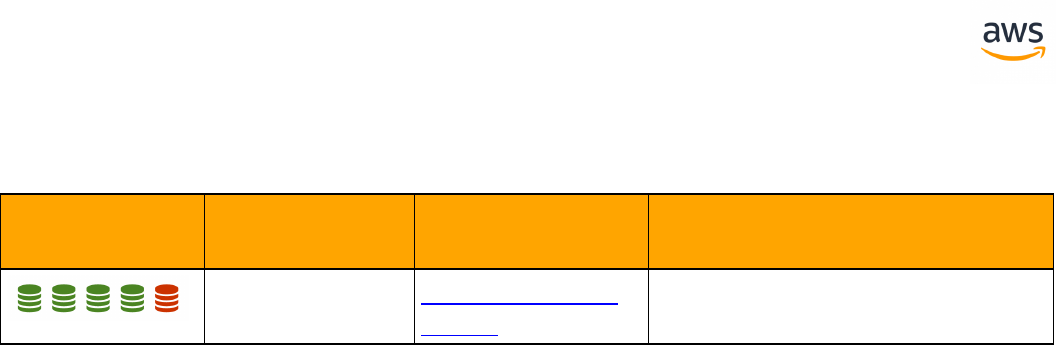

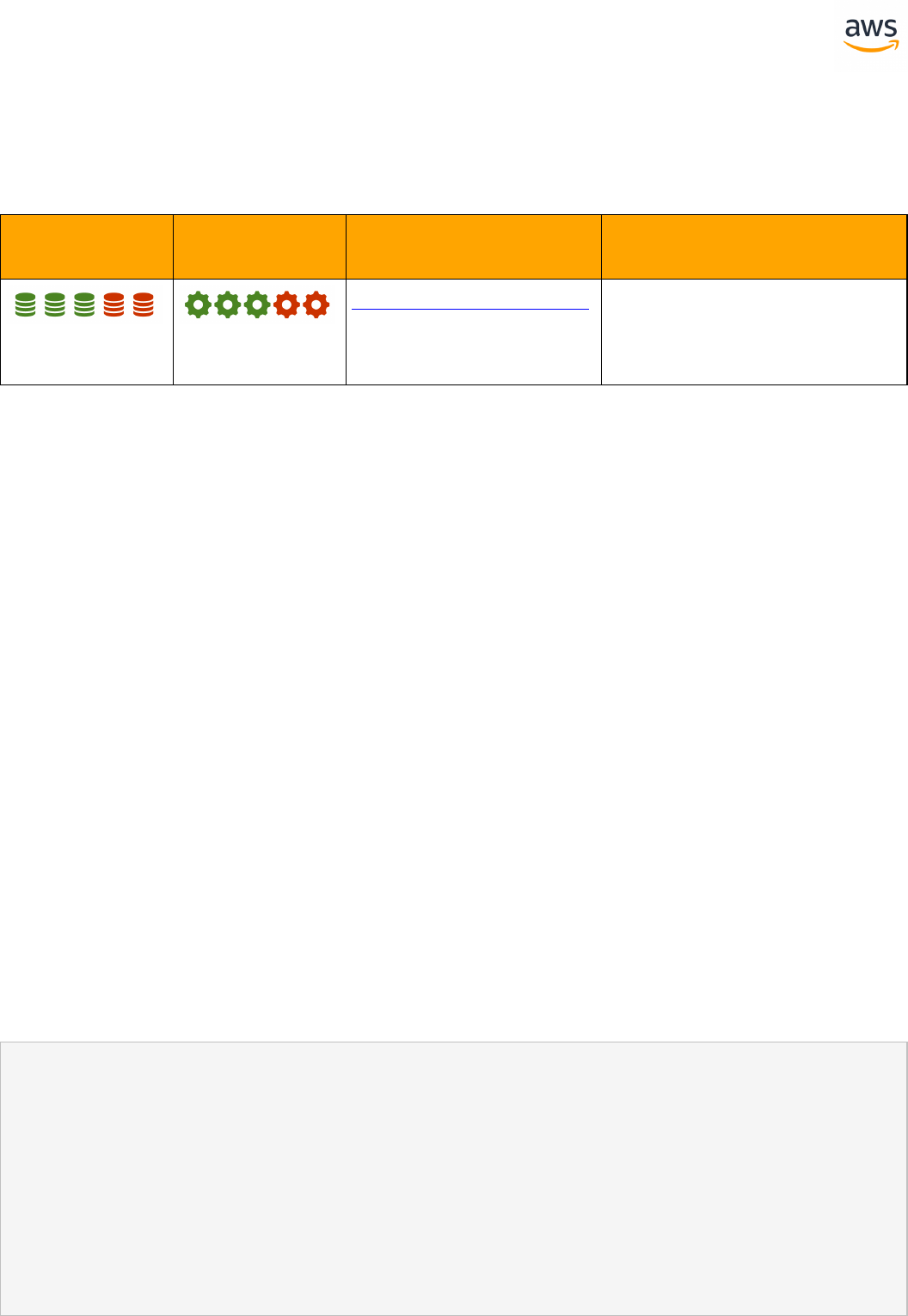

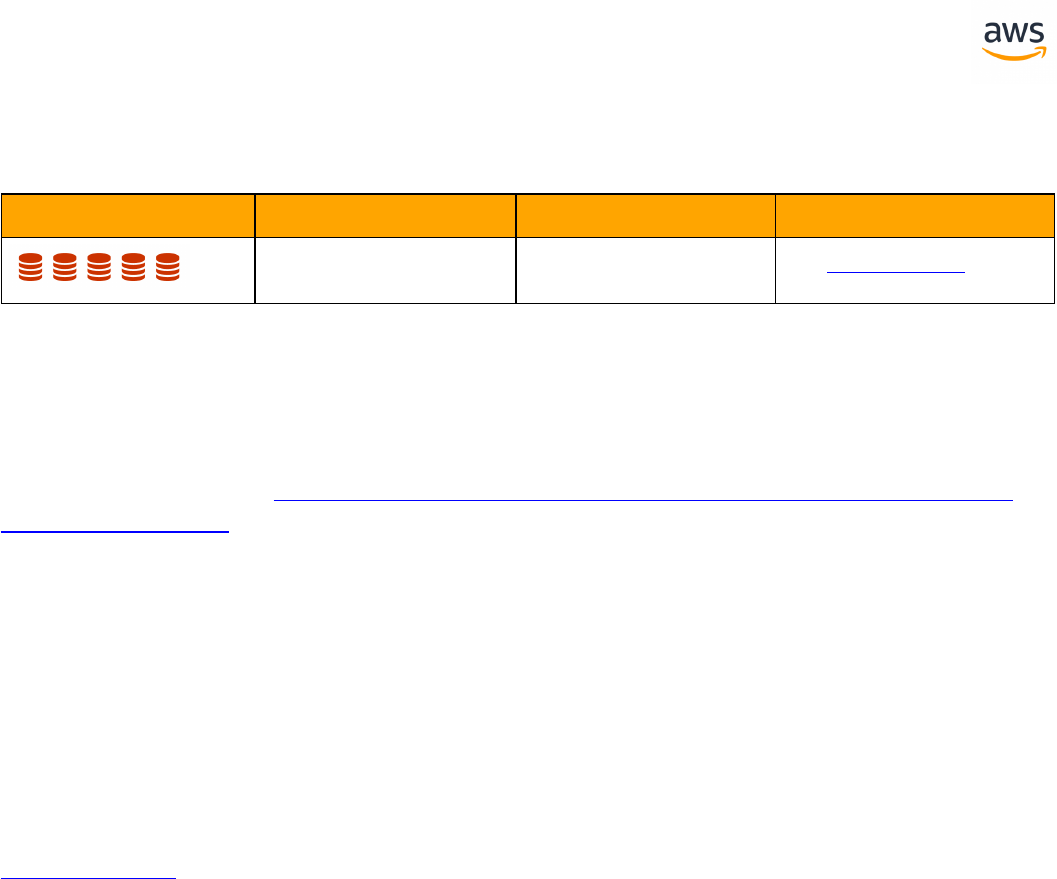

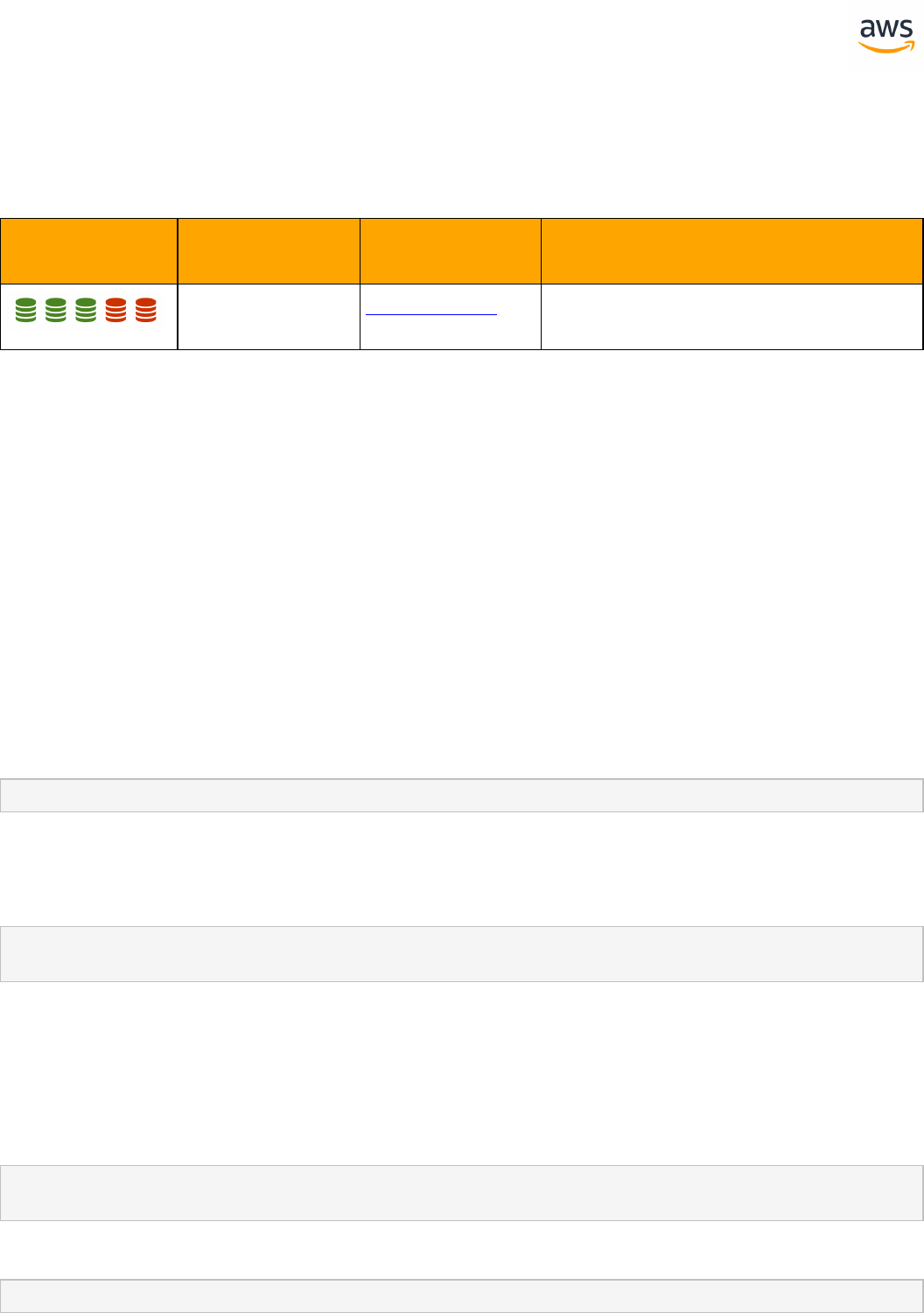

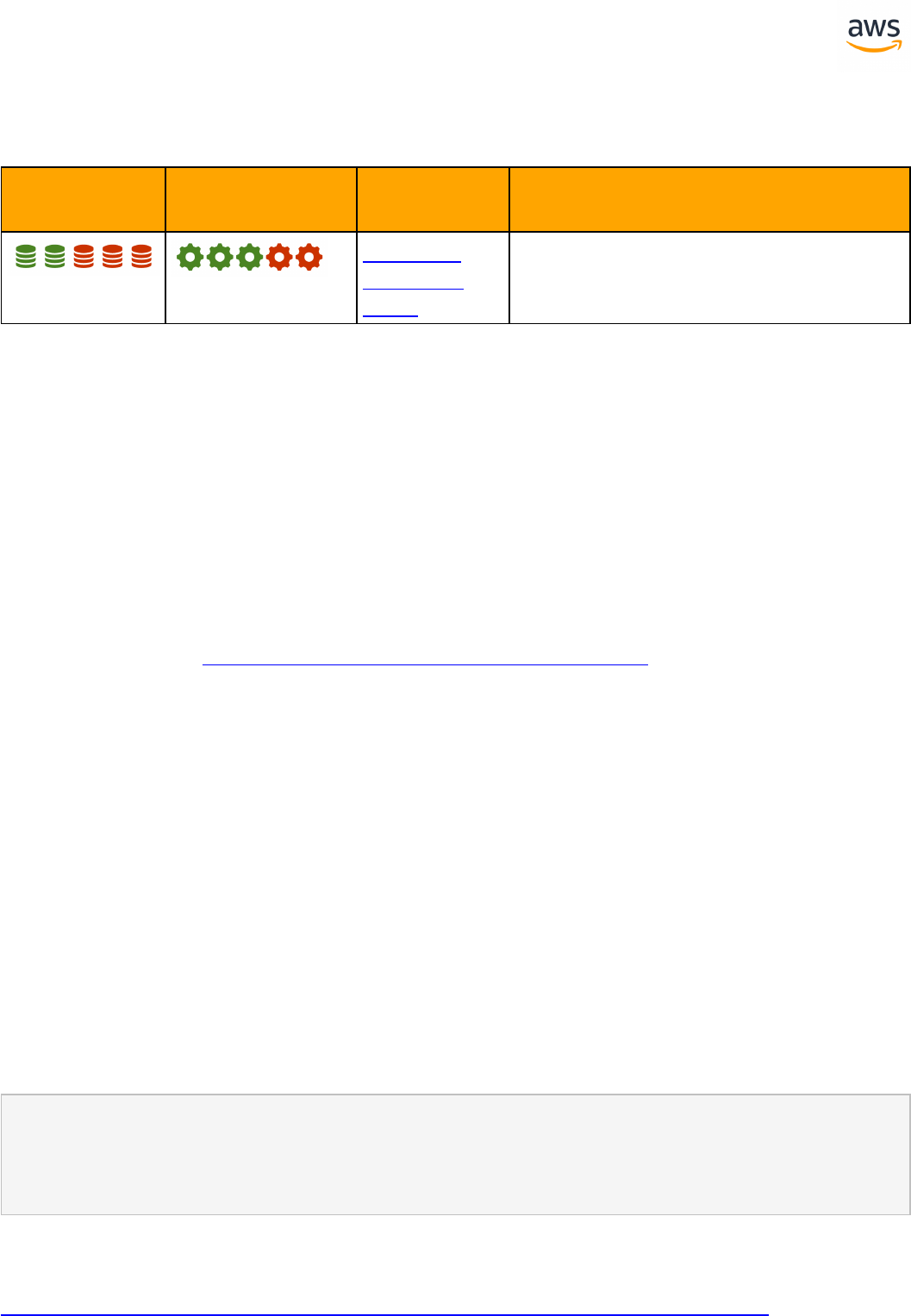

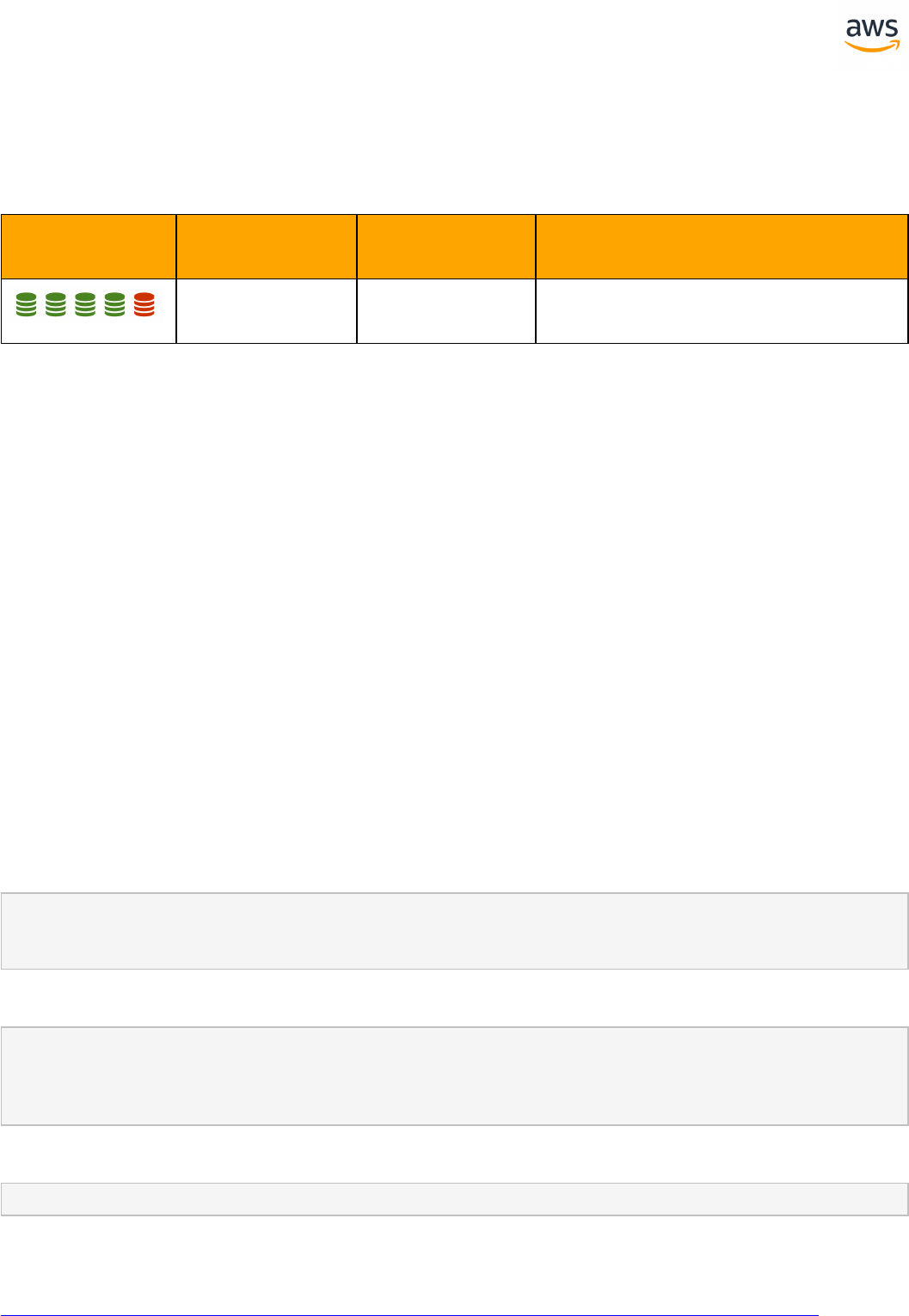

Migrate from: SQL Server Constraints

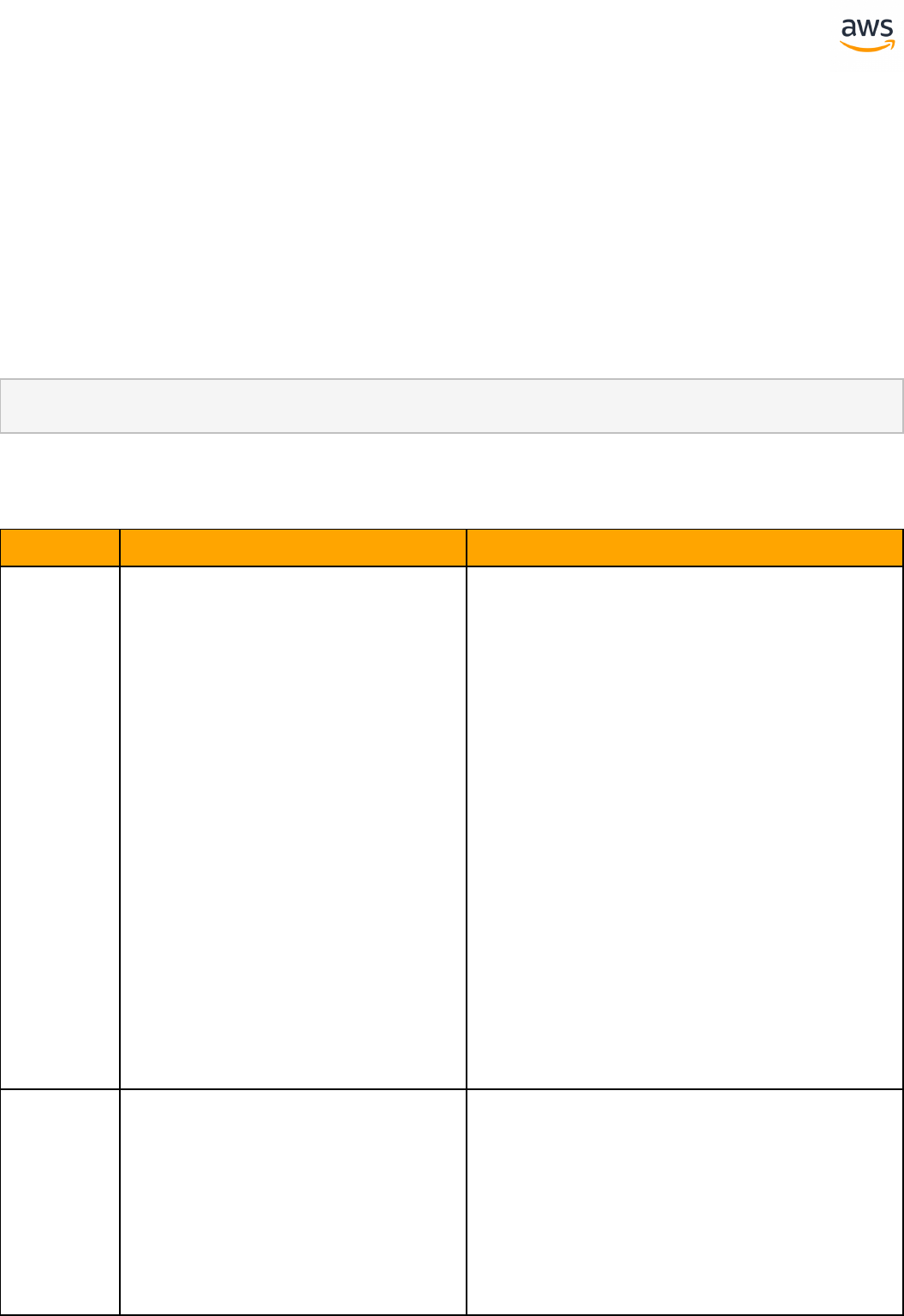

Feature Com-

patibility

SCT Automation Level

SCT Action Code

Index

Key Differences

Constraints SET DEFAULT option is miss-

ing

Check constraint with sub-

query

Overview

Column and table constraints are defined by the SQL standard and enforce relational data consistency.

There are four types of SQLconstraints:Check Constraints, Unique Constraints, Primary Key Con-

straints, and Foreign Key Constraints.

Check Constraints

Syntax

CHECK (<Logical Expression>)

CHECK constraints enforce domain integrity by limiting the data values stored in table columns. They

are logical boolean expressions that evaluate to one of three values: TRUE, FALSE, and UNKNOWN.

Note: CHECK constraint expressions behave differently than predicates in other query

clauses. For example, in a WHEREclause, a logical expression that evaluates to UNKNOWN is

functionally equivalent to FALSE and the row is filtered out. For CHECK constraints, an expres-

sion that evaluates to UNKNOWN is functionally equivalent to TRUE because the value is per-

mitted by the constraint.

Multiple CHECK constraints may be assigned to a column. A single CHECK constraint may apply to mul-

tiple columns (in this case, it is known as a Table-Level Check Constraint).

In ANSI SQL, CHECK constraints can not access other rows as part of the expression. SQL Server allows

using User Defined Functions in constraints to access other rows, tables, or databases.

Unique Constraints

Syntax

UNIQUE [CLUSTERED | NONCLUSTERED] (<Column List>)

- 47 -

UNIQUE constraints should be used for all candidate keys. A candidate key is an attribute or a set of

attributes (columns) that uniquely identify each tuple (row) in the relation (table data).

UNIQUE constraints guarantee that no rows with duplicate column values exist in a table.

A UNIQUE constraint can be simple or composite. Simple constraints are composed of a single

column. Composite constraints are composed of multiple columns. A column may be a part of more

than one constraint.

Although the ANSI SQLstandard allows multiple rows having NULLvalues for UNIQUE constraints, SQL

Server allows a NULLvalue for only one row. Use a NOTNULL constraint in addition to a UNIQUE con-

straint to disallow all NULL values.

To improve efficiency, SQL Server creates a unique index to support UNIQUE constraints. Otherwise,

every INSERT and UPDATE would require a full table scan to verify there are no duplicates. The default

index type for UNIQUE constraints is non- clustered.

Primary Key Constraints

Syntax

PRIMARY KEY [CLUSTERED | NONCLUSTERED] (<Column List>)

A PRIMARY KEY is a candidate key serving as the unique identifier of a table row. PRIMARY KEYS may

consist of one or more columns. All columns that comprise a primary key must also have a NOT NULL

constraint. Tables can have one primary key.

The default index type for PRIMARY KEYS is a clustered index.

Foreign Key Constraints

Syntax

FOREIGN KEY (<Referencing Column List>)

REFERENCES <Referenced Table>(<Referenced Column List>)

FOREIGN KEY constraints enforce domain referential integrity. Similar to CHECK constraints, FOREIGN

KEYS limit the values stored in a column or set of columns.

FOREIGN KEYS reference columns in other tables, which must be either PRIMARY KEYS or have UNIQUE

constraints. The set of values allowed for the referencing table is the set of values existing the ref-

erenced table.

Although the columns referenced in the parent table are indexed (since they must have either a

PRIMARY KEY or UNIQUE constraint), no indexes are automatically created for the referencing columns

in the child table. A best practice is to create appropriate indexes to support joins and constraint

enforcement.

- 48 -

FOREIGN KEY constraints impose DML limitations for the referencing child and parent tables. The pur-

pose of a constraint is to guarantee that no "orphan" rows (rows with no corresponding matching val-

ues in the parent table) exist in the referencing table. The constraint limits INSERT and UPDATE to the

child table and UPDATE and DELETE to the parent table. For example, you can not delete an order hav-

ing associated order items.

Foreign keys support Cascading Referential Integrity (CRI). CRI can be used to enforce constraints and

define action paths for DML statements that violate the constraints. There are four CRI options:

l NO ACTION:When the constraint is violated due to a DML operation, an error is raised and the

operation is rolled back.

l CASCADE: Values in a child table are updated with values from the parent table when they are

updated or deleted along with the parent.

l SET NULL: All columns that are part of the foreign key are set to NULL when the parent is deleted

or updated.

l SET DEFAULT: All columns that are part of the foreign key are set to their DEFAULT value when

the parent is deleted or updated.

These actions can be customized independently of others in the same constraint. For example, a cas-

cading constraint may have CASCADE for UPDATE, but NO ACTION for UPDATE.

Examples

Create a composite non-clustered PRIMARY KEY.

CREATE TABLE MyTable

(

Col1 INT NOT NULL,

Col2 INT NOT NULL,

Col3 VARCHAR(20) NULL,

CONSTRAINT PK_MyTable

PRIMARY KEY NONCLUSTERED (Col1, Col2)

);

Create a table-level CHECK constraint

CREATE TABLE MyTable

(

Col1 INT NOT NULL,

Col2 INT NOT NULL,

Col3 VARCHAR(20) NULL,

CONSTRAINT PK_MyTable

PRIMARY KEY NONCLUSTERED (Col1, Col2),

CONSTRAINT CK_MyTableCol1Col2

CHECK (Col2 >= Col1)

);

Create a simple non-null UNIQUE constraint.

CREATE TABLE MyTable

(

- 49 -

Col1 INT NOT NULL,

Col2 INT NOT NULL,

Col3 VARCHAR(20) NULL,

CONSTRAINT PK_MyTable

PRIMARY KEY NONCLUSTERED (Col1, Col2),

CONSTRAINT UQ_Col2Col3

UNIQUE (Col2, Col3)

);

Create a FOREIGN KEY with multiple cascade actions.

CREATE TABLE MyParentTable

(

Col1 INT NOT NULL,

Col2 INT NOT NULL,

Col3 VARCHAR(20) NULL,

CONSTRAINT PK_MyTable

PRIMARY KEY NONCLUSTERED (Col1, Col2)

);

CREATE TABLE MyChildTable

(

Col1 INT NOT NULL PRIMARY KEY,

Col2 INT NOT NULL,

Col3 INT NOTNULL,

CONSTRAINT FK_MyChildTable_MyParentTable

FOREIGN KEY (Col2, Col3)

REFERENCES MyParentTable (Col1, Col2)

ON DELETE NO ACTION

ON UPDATE CASCADE

);

For more information, see:

l https://docs.microsoft.com/en-us/sql/relational-databases/tables/unique-constraints-and-check-constraints

l https://docs.microsoft.com/en-us/sql/relational-databases/tables/primary-and-foreign-key-constraints

- 50 -

Migrate to: Aurora PostgreSQL Table Constraints

Feature Com-

patibility

SCT Automation

Level

SCT Action Code

Index

Key Differences

Constraints SET DEFAULT option is miss-

ing

Check constraint with sub-

query

Overview

PostgreSQL supports the following types of table constraints:

l PRIMARY KEY

l FOREIGN KEY

l UNIQUE

l NOT NULL

l EXCLUDE (unique to PostgreSQL)

Similar to constraint declaration in SQL Server, PostgreSQL allows creating constraints in-line or out-

of-line when specifying table columns.

PostgreSQL constraints can be specified using CREATE / ALTER TABLE. Constraints on views are not sup-

ported.

You must have privileges (CREATE / ALTER) on the table in which constrains are created. For foreign key

constraints, you must also have the REFERENCES privilege.

Primary Key Constraints

l Uniquely identify each row and cannot contain NULL values.

l Use the same ANSI SQL syntax as SQL Server.

l Can be created on a single column or on multiple columns (composite primary keys) as the only

PRIMARY KEY in a table.

l Creating a PRIMARY KEY constraint automatically creates a unique B-Tree index on the column or

group of columns marked as the primary key of the table.

l Constraint names can be generated automatically by PostgreSQL or explicitly specified during

constraint creation.

Examples

Create an inline primary key constraint with a system-generated constraint name.

- 51 -

CREATE TABLE EMPLOYEES (

EMPLOYEE_ID NUMERIC PRIMARY KEY,

FIRST_NAME VARCHAR(20),

LAST_NAME VARCHAR(25),

EMAIL VARCHAR(25));

Create an inline primary key constraint with a user-specified constraint name.

CREATE TABLE EMPLOYEES (

EMPLOYEE_ID NUMERIC CONSTRAINT PK_EMP_ID PRIMARY KEY,

FIRST_NAME VARCHAR(20),

LAST_NAME VARCHAR(25),

EMAIL VARCHAR(25));

Create an out-of-line primary key constraint.

CREATE TABLE EMPLOYEES(

EMPLOYEE_ID NUMERIC,

FIRST_NAME VARCHAR(20),

LAST_NAME VARCHAR(25),

EMAIL VARCHAR(25)),

CONSTRAINT PK_EMP_ID PRIMARY KEY (EMPLOYEE_ID));

Add a primary key constraint to an existing table.

ALTER TABLE SYSTEM_EVENTS

ADD CONSTRAINT PK_EMP_ID PRIMARY KEY (EVENT_CODE, EVENT_TIME);

Drop the primary key.

ALTER TABLE SYSTEM_EVENTS DROP CONSTRAINT PK_EMP_ID;

Foreign Key Constraints

l Enforce referential integrity in the database. Values in specific columns or a group of columns

must match the values from another table (or column).

l Creating a FOREIGN KEY constraint in PostgreSQL uses the same ANSI SQL syntax as SQL Server.

l Can be created in-line or out-of-line during table creation.

l Use the REFERENCES clause to specify the table referenced by the foreign key constraint.

l When specifying REFERENCES in the absence of a column list in the referenced table, the

PRIMARY KEY of the referenced table is used as the referenced column or columns.

l A table can have multiple FOREIGN KEY constraints.

l Use the ON DELETE clause to handle FOREIGN KEY parent record deletions (such as cascading

deletes).

l Foreign key constraint names are generated automatically by the database or specified explicitly

during constraint creation.

- 52 -

ON DELETE Clause

PostgreSQL provides three main options to handle cases where data is deleted from the parent table

and a child table is referenced by a FOREIGN KEY constraint. By default, without specifying any addi-

tional options, PostgreSQL uses the NO ACTION method and raises an error if the referencing rows still

exist when the constraint is verified.

l ON DELETE CASCADE: Any dependent foreign key values in the child table are removed along

with the referenced values from the parent table.

l ON DELETE RESTRICT: Prevents the deletion of referenced values from the parent table and the

deletion of dependent foreign key values in the child table.

l ON DELETE NO ACTION: Performs no action (the default). The fundamental difference between

RESTRIC and NO ACTION is that NO ACTION allows the check to be postponed until later in the

transaction; RESTRICT does not.

ON UPDATE Clause

Handling updates on FOREIGN KEY columns is also available using the ON UPDATE clause, which

shares the same options as the ON DELETE clause:

l ON UPDATE CASCADE

l ON UPDATE RESTRICT

l ON UPDATE NO ACTION

Examples

Create an inline foreign key with a user-specified constraint name.

CREATE TABLE EMPLOYEES (

EMPLOYEE_ID NUMERIC PRIMARY KEY,

FIRST_NAME VARCHAR(20),

LAST_NAME VARCHAR(25),

EMAIL VARCHAR(25),

DEPARTMENT_ID NUMERIC REFERENCES DEPARTMENTS(DEPARTMENT_ID));

Create an out-of-line foreign key constraint with a system-generated constraint name.

CREATE TABLE EMPLOYEES (

EMPLOYEE_ID NUMERIC PRIMARY KEY,

FIRST_NAME VARCHAR(20),

LAST_NAME VARCHAR(25),

EMAIL VARCHAR(25),

DEPARTMENT_ID NUMERIC,

- 53 -

CONSTRAINT FK_FEP_ID

FOREIGN KEY(DEPARTMENT_ID) REFERENCES DEPARTMENTS(DEPARTMENT_ID));

Create a foreign key using the ON DELETE CASCADE clause.

CREATE TABLE EMPLOYEES (

EMPLOYEE_ID NUMERIC PRIMARY KEY,

FIRST_NAME VARCHAR(20),

LAST_NAME VARCHAR(25),

EMAIL VARCHAR(25),

DEPARTMENT_ID NUMERIC,

CONSTRAINT FK_FEP_ID

FOREIGN KEY(DEPARTMENT_ID) REFERENCES DEPARTMENTS(DEPARTMENT_ID)

ON DELETE CASCADE);

Add a foreign key to an existing table.

ALTER TABLE EMPLOYEES ADD CONSTRAINT FK_DEPT

FOREIGN KEY (department_id)

REFERENCES DEPARTMENTS (department_id) NOT VALID;

ALTER TABLE EMPLOYEES VALIDATE CONSTRAINT FK_DEPT;

UNIQUE Constraints

l Ensure that values in a column, or a group of columns, are unique across the entire table.

l PostgreSQL UNIQUE constraint syntax is ANSI SQL compatible.

l Automatically creates a B-Tree index on the respective column, or a group of columns, when cre-

ating a UNIQUE constraint.

l If duplicate values exist in the column(s) on which the constraint was defined during UNIQUE con-

straint creation, the UNIQUE constraint creation fails and returns an error message.

l UNIQUE constraints in PostgreSQL accept multiple NULL values (similar to SQL Server).

l UNIQUE constraint naming can be system-generated or explicitly specified.

Example

Create an inline unique constraint ensuring uniqueness of values in the email column.

CREATE TABLE EMPLOYEES (

EMPLOYEE_ID NUMERIC PRIMARY KEY,

FIRST_NAME VARCHAR(20),

LAST_NAME VARCHAR(25),

EMAIL VARCHAR(25) CONSTRAINT UNIQ_EMP_EMAIL UNIQUE,

DEPARTMENT_ID NUMERIC);

- 54 -

CHECK Constraints

l Enforce that values in a column satisfy a specific requirement.

l CHECK constraints in PostgreSQL use the same ANSI SQL syntax as SQL Server.

l Can only be defined using a Boolean data type to evaluate the values of a column.

l CHECK constraints naming can be system-generated or explicitly specified by the user during con-

straint creation.

Check constraints are using Boolean data datatype, therefor sub-query can't be used in CHECK con-

straint. if you want to use a similar feature you can create a Boolean function that will check the query

resulsts and return TRUE or FALSE values accordingly.

Example

Create an inline CHECK constraint using a regular expression to enforce the email column contains

email addresses with an “@aws.com” suffix.

CREATE TABLE EMPLOYEES (

EMPLOYEE_ID NUMERIC PRIMARY KEY,

FIRST_NAME VARCHAR(20),

LAST_NAME VARCHAR(25),

EMAIL VARCHAR(25) CHECK(EMAIL ~ '(^[A-Za-z][email protected]$)'),

DEPARTMENT_ID NUMERIC);

NOT NULL Constraints

l Enforce that a column cannot accept NULL values. This behavior is different from the default

column behavior in PostgreSQL where columns can accept NULL values.

l NOT NULL constraints can only be defined inline during table creation.

l You can explicitly specify names for NOT NULL constraints when used with a CHECK constraint.

Example

Define two not null constraints on the FIRST_NAME and LAST_NAME columns. Define a check con-

straint (with an explicitly user-specified name) to enforce not null behavior on the EMAIL column.

CREATE TABLE EMPLOYEES (

EMPLOYEE_ID NUMERIC PRIMARY KEY,

FIRST_NAME VARCHAR(20) NOT NULL,

LAST_NAME VARCHAR(25) NOT NULL,

EMAIL VARCHAR(25) CONSTRAINT CHK_EMAIL

CHECK(EMAIL IS NOT NULL));

- 55 -

SETConstraints Syntax

SET CONSTRAINTS {ALL | name [, ...] } {DEFERRED | IMMEDIATE }

PostgreSQL provides controls for certain aspects of constraint behavior:

l DEFERRABLE | NOT DEFERRABLE:Using the PostgreSQL SET CONSTRAINTS statement. Con-

straints can be defined as:

l DEFERRABLE:Allows you to use the SET CONSTRAINTS statement to set the behavior of con-

straint checking within the current transaction until transaction commit.

l IMMEDIATE:Constraints are enforced only at the end of each statement. Note that each

constraint has its own IMMEDIATE or DEFERRED mode.

l NOT DEFERRABLE:This statement always runs as IMMEDIATE and is not affected by the

SET CONSTRAINTS command.

l VALIDATE CONSTRAINT | NOT VALID:

l VALIDATE CONSTRAINT:Validates foreign key or check constraints (only) that were pre-

viously created as NOT VALID. This action performs a validation check by scanning the

table to ensure all records satisfy the constraint definition.

l NOT VALID:Can be used only for foreign key or check constraints. When specified, new

records are not validated with the creation of the constraint. Only when the VALIDATE

CONSTRAINT state is applied is the constraint state enforced on all records.

Using Existing Indexes During Constraint Creation

PostgreSQL can add a new primary key or unique constraints based on an existing unique Index . All

index columns are included in the constraint. When creating constraints using this method, the index

is owned by the constraint. When dropping the constraint, the index is also dropped.

Use an existing unique Index to create a primary key constraint.

CREATE UNIQUE INDEX IDX_EMP_ID ON EMPLOYEES(EMPLOYEE_ID);

ALTER TABLE EMPLOYEES

ADD CONSTRAINT PK_CON_UNIQ PRIMARY KEY USING INDEX IDX_EMP_ID;

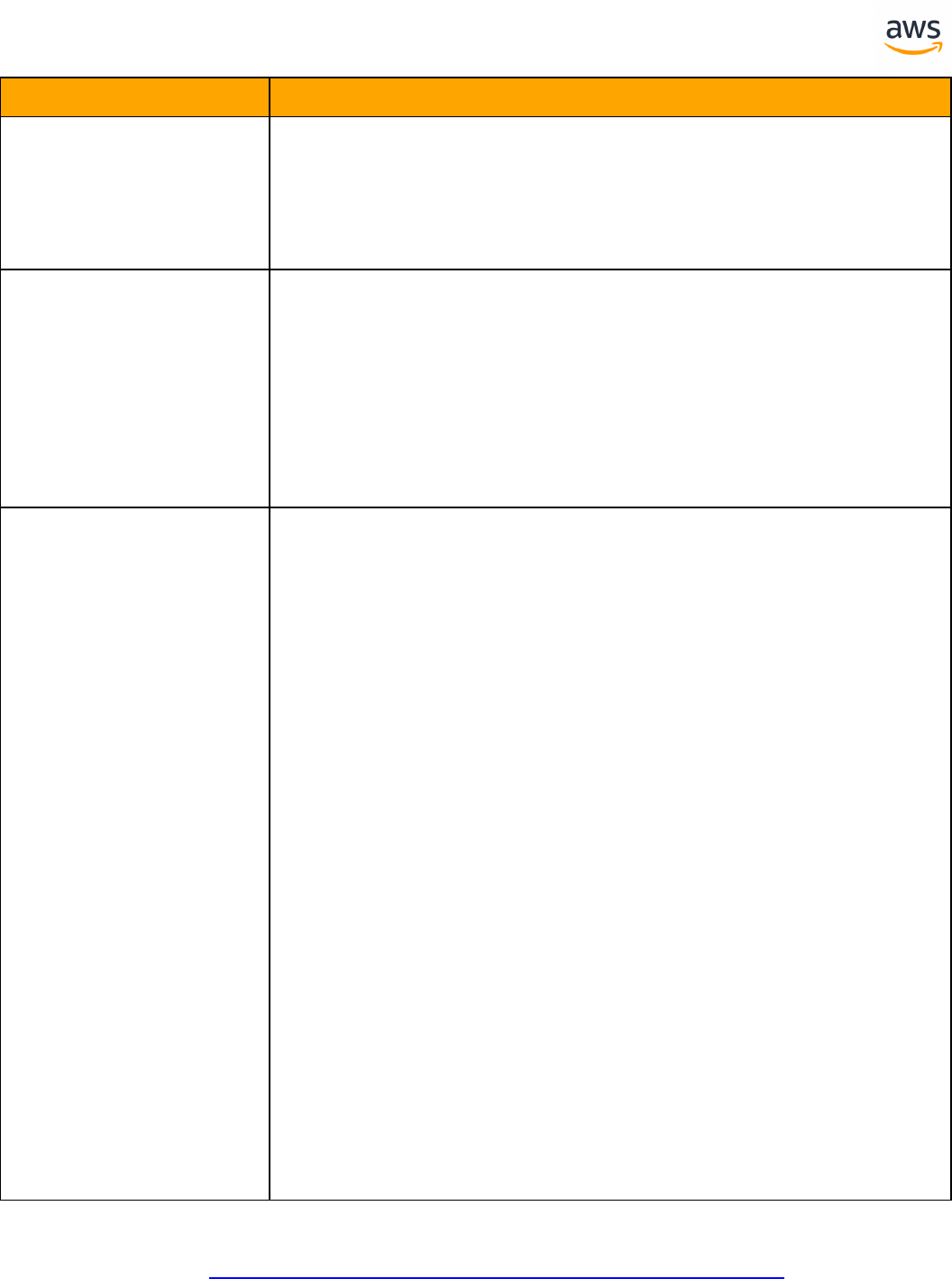

Summary

The following table identifies similarities, differences, and key migration considerations.

Feautre SQL Server Aurora PostgreSQL

CHECK constraints CHECK CHECK

UNIQUE constraints UNIQUE UNIQUE

- 56 -

Feautre SQL Server Aurora PostgreSQL

PRIMARY KEY constraints PRIMARY KEY PRIMARY KEY

FOREIGN KEY constraints FOREIGN KEY FOREIGN KEY

Cascaded referential actions NO ACTION | CASCADE | SET NULL

| SET DEFAULT

RESTRICT | CASCADE | SET

NULL |

NO ACTION

Indexing of referencing

columns

Not required N/A

Indexing of referenced

columns

PRIMARY KEY or UNIQUE PRIMARY KEY or UNIQUE

For additional details:

l https://www.postgresql.org/docs/9.6/static/ddl-constraints.html

l https://www.postgresql.org/docs/9.6/static/sql-set-constraints.html

l https://www.postgresql.org/docs/9.6/static/sql-altertable.html

- 57 -

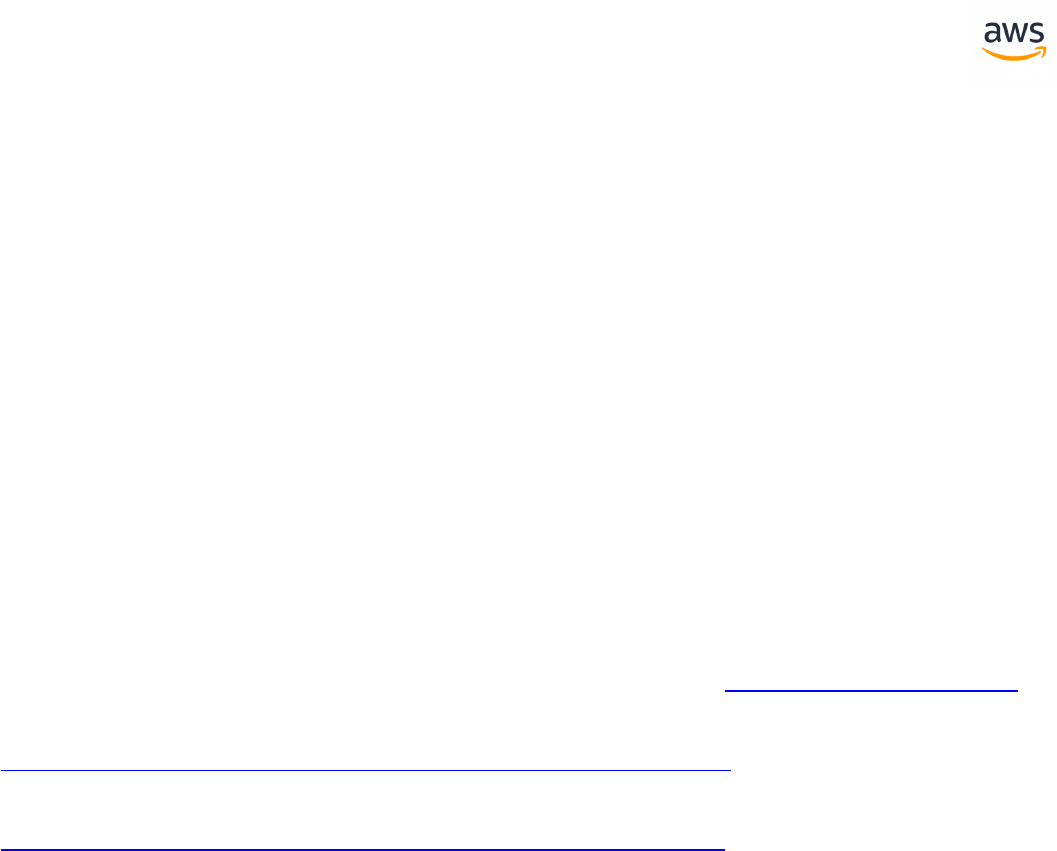

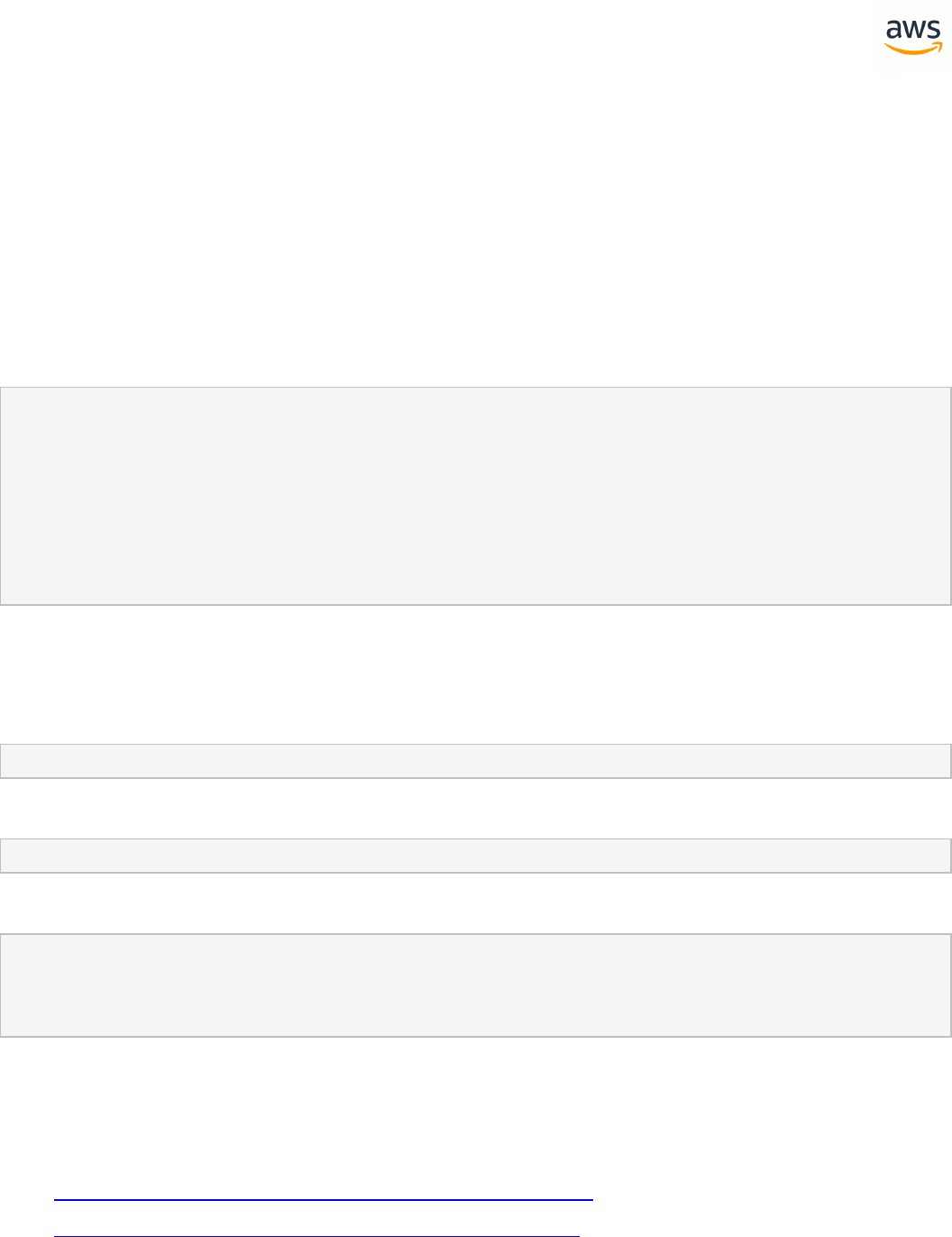

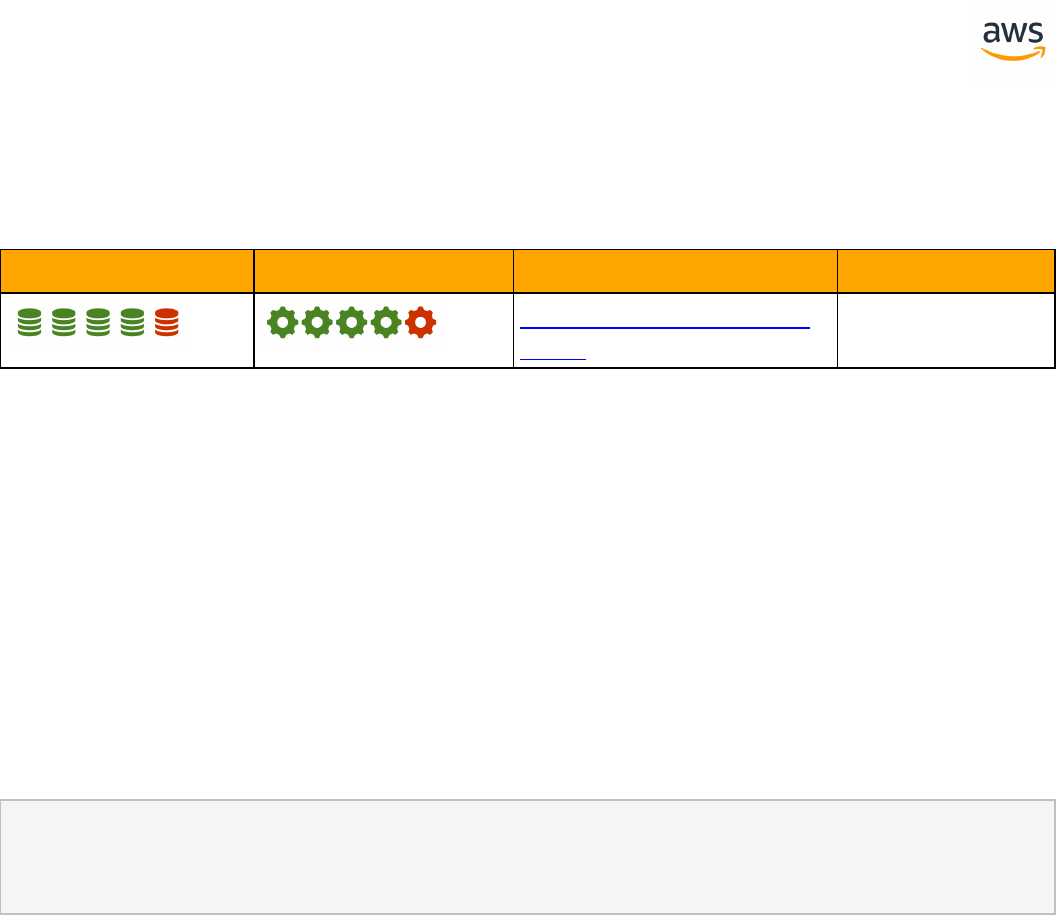

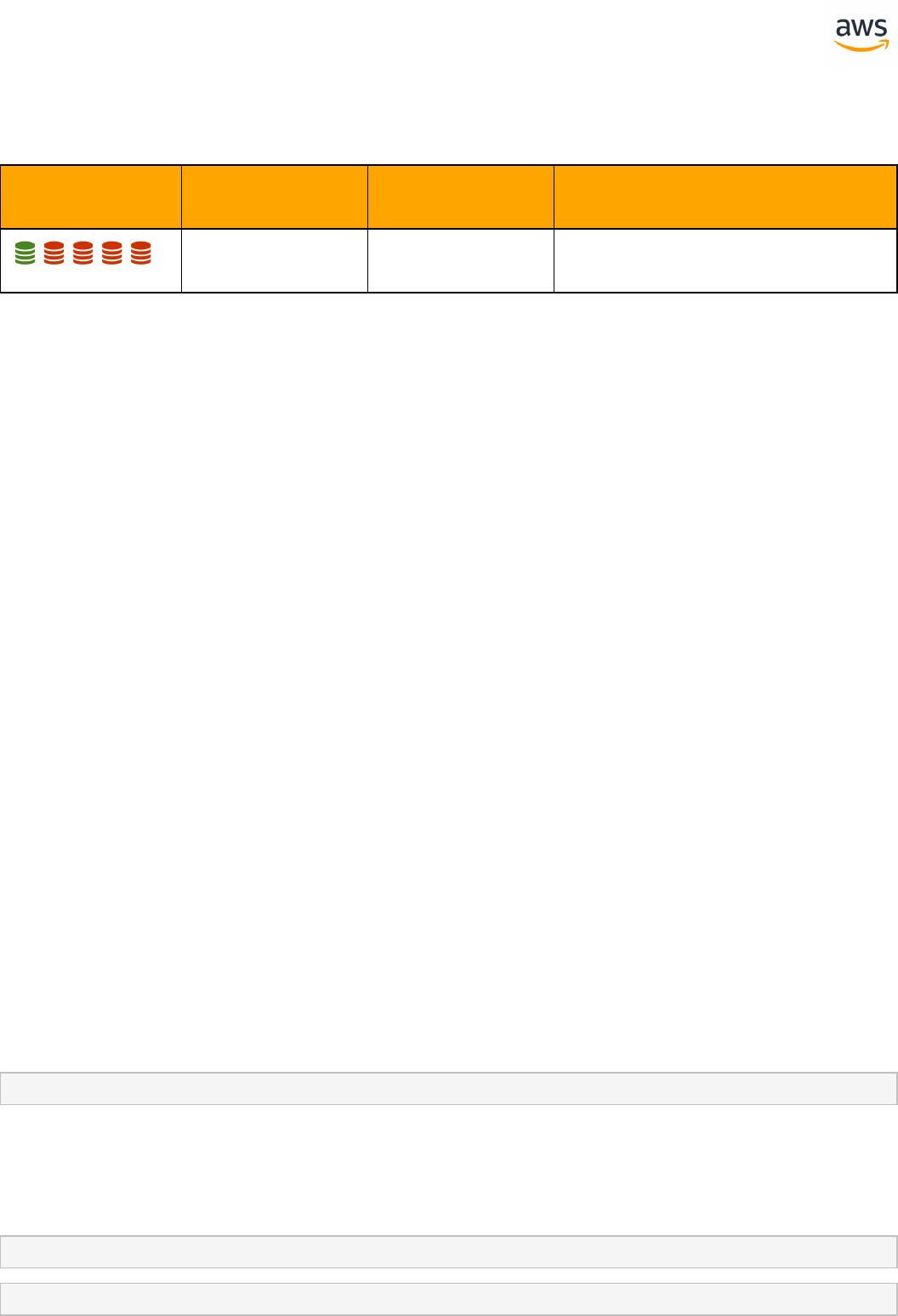

Migrate from: SQL Server Creating Tables

Feature Com-

patibility

SCT Automation

Level

SCT Action Code Index Key Differences

SCT Action Codes -

CREATE TABLE

Auto generated value column is dif-

ferent

Can't use physical attribute ON

Missing table variable and memory

optimized table

Overview

ANSI Syntax Conformity

Tables in SQL Server are created using the CREATE TABLE statement and conform to the ANSI/ISO entry

level standard. The basic features of CREATE TABLE are similar for most relational database man-

agement engines and are well defined in the ANSI/ISO standards.

In its most basic form, the CREATE TABLE statement in SQL Server is used to define:

l Table names, the containing security schema, and database

l Column names

l Column data types

l Column and table constraints

l Column default values

l Primary, candidate (UNIQUE), and foreign keys

T-SQL Extensions

SQL Server extends the basic syntax and provides many additional options for the CREATE TABLE or

ALTER TABLE statements. The most often used options are:

l Supporting index types for primary keys and unique constraints, clustered or non-clustered, and

index properties such as FILLFACTOR

l Physical table data storage containers using the ON <File Group> clause

l Defining IDENTITY auto-enumerator columns

l Encryption

l Compression

l Indexes

- 58 -

For more information, see Data Types, Column Encryption, and Databases and Schemas.

Table Scope

SQL Server provides five scopes for tables:

l Standard tables are created on disk, globally visible, and persist through connection resets and

server restarts.

l Temporary Tables are designated with the "# " prefix. They are persisted in TempDB and are vis-

ible to the execution scope where they were created (and any sub-scopes). Temporary tables are

cleaned up by the server when the execution scope terminates and when the server restarts.

l Global Temporary Tables are designated by the "## " prefix. They are similar in scope to tem-

porary tables, but are also visible to concurrent scopes.

l Table Variables are defined with the DECLARE statement, not with CREATE TABLE. They are visible

only to the execution scope where they were created.

l Memory-Optimized tables are special types of tables used by the In-Memory Online Transaction

Processing (OLTP) engine. They use a non-standard CREATE TABLE syntax.

Creating a Table Based on an Existing Table or Query

SQL Server allows creating new tables based on SELECT queries as an alternate to the CREATE TABLE

statement. A SELECT statement that returns a valid set with unique column names can be used to cre-

ate a new table and populate data.

SELECT INTO is a combination of DML and DDL. The simplified syntax for SELECT INTO is:

SELECT <Expression List>

INTO <Table Name>

[FROM <Table Source>]

[WHERE <Filter>]

[GROUP BY <Grouping Expressions>...];

When creating a new table using SELECT INTO, the only attributes created for the new table are column

names, column order, and the data types of the expressions. Even a straight forward statement such

as SELECT * INTO <New Table> FROM <Source Table> does not copy constraints, keys, indexes, identity

property, default values, or any other related objects.

TIMESTAMP Syntax for ROWVERSION Deprecated Syntax

The TIMESTAMP syntax synonym for ROWVERSION has been deprecated as of SQL Server 2008R2 in

accordance with https://docs.microsoft.com/en-us/previous-versions/sql/sql-server-2008-r2/ms143729

(v=sql.105).

Previously, you could use either the TIMESTAMP or the ROWVERSION keywords to denote a special

data type that exposes an auto-enumerator. The auto-enumerator generates unique eight-byte binary

- 59 -

numbers typically used to version-stamp table rows. Clients read the row, process it, and check the

ROWVERSION value against the current row in the table before modifying it. If they are different, the

row has been modified since the client read it. The client can then apply different processing logic.

Note that when migrating to Aurora PostgreSQL using the Amazon RDS Schema Conversion Tool (SCT),

neither ROWVERSION nor TIMESTAMP are supported. You must add customer logic, potentially in the

form of a trigger, to maintain this functionality.

See a full example in Creating Tables.

Syntax

Simplified syntax for CREATE TABLE:

CREATE TABLE [<Database Name>.<Schema Name>].<TableName> (<Column Definitions>)

[ON{<Partition Scheme Name> (<Partition Column Name>)];

<Column Definition>:

<Column Name> <Data Type>

[CONSTRAINT <Column Constraint>

[DEFAULT <Default Value>]]

[IDENTITY [(<Seed Value>, <Increment Value>)]

[NULL | NOT NULL]

[ENCRYPTED WITH (<Encryption Specifications>)

[<Column Constraints>]

[<Column Index Specifications>]

<Column Constraint>:

[CONSTRAINT <Constraint Name>]

{{PRIMARY KEY | UNIQUE} [CLUSTERED | NONCLUSTERED]

[WITH FILLFACTOR = <Fill Factor>]

| [FOREIGN KEY]

REFERENCES <Referenced Table> (<Referenced Columns>)]

<Column Index Specifications>:

INDEX <Index Name> [CLUSTERED | NONCLUSTERED]

[WITH(<Index Options>]

Examples

Create a basic table.

CREATE TABLE MyTable

(

Col1 INT NOT NULL PRIMARY KEY,

Col2 VARCHAR(20) NOT NULL

);

Create a table with column constraints and an identity.

CREATE TABLE MyTable

(

- 60 -

Col1 INT NOT NULL PRIMARY KEY IDENTITY (1,1),

Col2 VARCHAR(20) NOT NULL CHECK (Col2 <> ''),

Col3 VARCHAR(100) NULL

REFERENCES MyOtherTable (Col3)

);

Create a table with an additional index.

CREATE TABLE MyTable

(

Col1 INT NOT NULL PRIMARY KEY,

Col2 VARCHAR(20) NOT NULL

INDEX IDX_Col2 NONCLUSTERED

);

For more information, see https://docs.microsoft.com/en-us/sql/t-sql/statements/create-table-transact-sql

- 61 -

Migrate to: Aurora PostgreSQL Creating Tables

Feature Com-

patibility

SCT Automation

Level

SCT Action Code Index Key Differences

SCT Action Codes -

CREATE TABLE

Auto generated value column is dif-

ferent

Can't use physical attribute ON

Missing table variable and memory

optimized table

Overview

Like SQL Server, Aurora PostgreSQL provides ANSI/ISO syntax entry level conformity for CREATE TABLE

and custom extensions to support Aurora PostgreSQL specific functionality.

In its most basic form, and very similar to SQL Server, the CREATE TABLE statement in Aurora Post-

greSQL is used to define:

l Table names containing security schema and/or database

l Column names

l Column data types

l Column and table constraints

l Column default values

l Primary, candidate (UNIQUE), and foreign keys

Aurora PostgreSQL Extensions

Aurora PostgreSQL extends the basic syntax and allows many additional options to be defined as part

of the CREATE TABLE or ALTER TABLE statements. The most often used option is in-line index defin-

ition.

Table Scope

Aurora PostgreSQL provides two table scopes:

l Standard Tables are created on disk, visible globally, and persist through connection resets and

server restarts.

l Temporary Tables are created using the CREATE GLOBAL TEMPORARY TABLE statement. A

TEMPORARY table is visible only to the session that creates it and is dropped automatically when

the session is closed.

- 62 -

Creating a Table Based on an Existing Table or Query

Aurora PostgreSQL provides two ways to create standard or temporary tables based on existing tables

and queries:

CREATE TABLE <New Table> LIKE <Source Table> and CREATE TABLE ... AS <Query Expres-

sion>.

CREATE TABLE <New Table> LIKE <Source Table> creates an empty table based on the defin-

ition of another table including any column attributes and indexes defined in the ori-

ginal table.

CREATE TABLE ... AS <Query Expression> is very similar to SQL Server's SELECT INTO. It

allows creating a new table and populating data in a single step.

For example:

CREATE TABLE SourceTable(Col1 INT);

INSERT INTO SourceTable VALUES (1)

CREATE TABLE NewTable(Col1 INT) AS SELECT Col1 AS Col2 FROM SourceTable;

INSERT INTO NewTable (Col1, Col2) VALUES (2,3);

SELECT * FROM NewTable

Col1 Col2

---- ----

NULL 1

23

Converting TIMESTAMP and ROWVERSION Columns

SQL server provides an automatic mechanism for stamping row versions for application concurrency

control. For example:

CREATE TABLE WorkItems

(

WorkItemID INT IDENTITY(1,1) PRIMARY KEY,

WorkItemDescription XML NOT NULL,

Status VARCHAR(10) NOT NULL DEFAULT ('Pending'),

-- other columns...

VersionNumber ROWVERSION

);

The VersionNumber column automatically updates when a row is modified. The actual value is mean-

ingless. Just the fact that it changed is what indicates a row modification. The client can now read a

work item row, process it, and ensure no other clients updated the row before updating the status.

- 63 -

SELECT @WorkItemDescription = WorkItemDescription,

@Status = Status,

@VersionNumber = VersionNumber

FROM WorkItems

WHERE WorkItemID = @WorkItemID;

EXECUTE ProcessWorkItem @WorkItemID, @WorkItemDescription, @Stauts OUTPUT;

IF (

SELECT VersionNumber

FROM WorkItems

WHERE WorkItemID = @WorkItemID

) = @VersionNumber;

EXECUTE UpdateWorkItems @WorkItemID, 'Completed'; -- Success

ELSE

EXECUTE ConcurrencyExceptionWorkItem; -- Row updated while processing

In Aurora PostgreSQL, you can add a trigger to maintain the updated stamp per row.

CREATE OR REPLACE FUNCTION IncByOne()

RETURNS TRIGGER

AS $$

BEGIN

UPDATE WorkItems SET VersionNumber = VersionNumber+1

WHERE WorkItemID = OLD.WorkItemID;

END; $$

LANGUAGE PLPGSQL;

CREATE TRIGGER MaintainWorkItemVersionNumber

AFTER UPDATE OF WorkItems

FOR EACH ROW

EXECUTE PROCEDURE IncByOne();

For more information on PostgreSQLtriggers, see the Triggers.

Syntax