PUBLIC

SAP Data Services

Document Version: 4.3 (14.3.00.00)–2024-03-15

Management Console Guide

© 2024 SAP SE or an SAP aliate company. All rights reserved.

THE BEST RUN

Content

1 About the Management Console.................................................7

1.1 Who should read this guide......................................................7

1.2 Naming conventions and variables.................................................8

2 Logging into the Management Console...........................................13

2.1 Management Console navigation................................................. 13

3 Administrator..............................................................15

3.1 Administrator navigation.......................................................15

Status node..............................................................16

Batch node..............................................................17

Real-Time node...........................................................18

Web Services node.........................................................18

Adapter Instances node.....................................................19

Server Groups node........................................................19

Proler Repositories node....................................................19

Management node.........................................................20

Job Execution History node.................................................. 20

Node pages..............................................................21

3.2 Administrator Management.....................................................21

Managing database account changes............................................21

Editing le location object information...........................................24

Conguring the report server.................................................24

Adding Access Servers......................................................25

Setting the status interval....................................................26

Exporting certication logs...................................................27

3.3 Central Repository management.................................................30

Setting up users and groups..................................................30

Viewing reports...........................................................32

3.4 Server Groups..............................................................34

Server group architecture....................................................34

Editing and removing a server group............................................38

Monitoring Job Server status in a server group.....................................38

Executing jobs using server groups.............................................39

3.5 Batch Jobs.................................................................39

Executing batch jobs.......................................................39

Scheduling jobs...........................................................40

2

PUBLIC

Management Console Guide

Content

Downloading a debug package................................................53

Monitoring RFC trace logs................................................... 54

3.6 Real-Time Jobs..............................................................54

Supporting real-time jobs....................................................55

Conguring and monitoring real-time services.....................................56

Creating and monitoring client interfaces.........................................65

3.7 Real-Time Performance........................................................70

Conguring Access Server output..............................................70

Service conguration parameters..............................................73

Service statistics..........................................................76

Service provider statistics....................................................77

Using statistics and service parameters..........................................78

3.8 Prole Server Management.....................................................79

Dening a proler repository..................................................79

Proler task parameters.....................................................80

Monitoring proler tasks using the Administrator ...................................82

3.9 RFC Server Management.......................................................84

64-bit platform prerequisites.................................................84

Adding an RFC server interface................................................85

Starting or stopping an RFC server interface connection..............................86

Monitoring RFC server interfaces.............................................. 86

Removing one or more RFC server interfaces......................................87

3.10 Adapters..................................................................88

3.11 Support for Web Services......................................................89

3.12 Troubleshooting the Administrator................................................89

Reestablishing network connections............................................89

Finding problems......................................................... 90

Error and trace logs........................................................91

Resolving connectivity problems...............................................96

Restarting the Access Server................................................. 97

4 Metadata Reports ..........................................................99

4.1 Requirements for metadata reports...............................................99

4.2 Repository reporting tables and views............................................. 99

5 Impact and Lineage Analysis Reports...........................................102

5.1 Navigate impact and lineage information...........................................102

5.2 Impact and lineage object analysis information...................................... 103

5.3 Searching in the Impact and Lineage Analysis page................................... 108

5.4 Impact and Lineage Analysis Settings control panel...................................108

Impact and lineage Settings tab...............................................108

Impact and lineage Refresh Usage Data tab...................................... 109

Management Console Guide

Content

PUBLIC 3

About tab...............................................................110

5.5 Increasing the java heap memory in Windows........................................111

5.6 Increasing the java heap memory in UNIX...........................................111

6 Operational Dashboard......................................................112

6.1 Dashboard tab..............................................................112

Job Execution Status pie chart................................................113

Job Execution Statistics History bar chart........................................113

Job Execution table........................................................113

6.2 License Management.........................................................115

7 Data Validation Dashboard Reports.............................................116

7.1 Conguring Data Validation dashboards............................................116

Creating functional areas....................................................116

Creating business rules.....................................................117

Enabling data validation statistics collection......................................119

7.2 Viewing Data Validation dashboards.............................................. 119

7.3 Functional area view......................................................... 120

Functional area pie chart....................................................120

History line chart......................................................... 121

7.4 Business rule view...........................................................121

7.5 Validation rule view..........................................................122

Validation rule bar chart....................................................122

History line chart.........................................................123

7.6 Sample data view........................................................... 123

Sample data table........................................................ 123

History line chart.........................................................124

7.7 Data Validation dashboards Settings control panel....................................124

Repository tab...........................................................124

Functional area tab........................................................125

Business rule tab.........................................................125

8 Auto Documentation Reports.................................................126

8.1 Navigation................................................................126

Searching for a specic object................................................127

Repository..............................................................127

Project................................................................ 127

Job...................................................................128

Work ow.............................................................. 128

Data ow...............................................................128

8.2 Generating documentation for an object...........................................129

Printing Auto Documentation for an object.......................................129

4

PUBLIC

Management Console Guide

Content

8.3 Auto Documentation Settings control panel.........................................130

9 Data Quality Reports........................................................131

9.1 Conguring report generation...................................................131

9.2 Opening and viewing reports................................................... 132

9.3 Lists of available reports...................................................... 133

9.4 List of reports by job.........................................................134

9.5 Data Quality Reports Settings control panel.........................................134

9.6 Report options.............................................................134

9.7 Troubleshooting reports.......................................................135

9.8 USA CASS report: USPS Form 3553..............................................136

9.9 NCOALink Processing Summary Report........................................... 136

9.10 Delivery Sequence Invoice report................................................138

Contents of report........................................................138

9.11 US Addressing Report........................................................139

Enabling the report........................................................140

Percent calculation........................................................140

Information in the US Addressing report .........................................141

DPV sections............................................................142

NCOALink sections........................................................143

Information in the DSF2 sections..............................................143

LACSLink sections........................................................144

SuiteLink sections........................................................144

9.12 DSF2 Augment Statistics Log File................................................145

9.13 US Regulatory Locking Report.................................................. 145

9.14 Canadian SERP report: Statement of Address Accuracy................................146

9.15 Australian AMAS report: Address Matching Processing Summary......................... 147

9.16 New Zealand Statement of Accuracy (SOA) report....................................147

9.17 Address Information Codes Sample report......................................... 148

9.18 Address Information Code Summary report.........................................149

9.19 Address Validation Summary report..............................................150

9.20 Address Type Summary report..................................................151

9.21 Address Standardization Sample report............................................151

9.22 Address Quality Code Summary report............................................152

9.23 Data Cleanse Information Code Summary report.....................................153

9.24 Data Cleanse Status Code Summary report.........................................154

9.25 Geocoder Summary report.....................................................155

9.26 Overview of match reports .....................................................155

9.27 Best Record Summary report...................................................156

9.28 Match Contribution report.....................................................157

9.29 Match Criteria Summary report.................................................159

9.30 Match Duplicate Sample report................................................. 160

Management Console Guide

Content

PUBLIC 5

9.31 Match Input Source Output Select report.......................................... 160

9.32 Match Multi-source Frequency report............................................. 161

9.33 Match Source Statistics Summary report.......................................... 162

6 PUBLIC

Management Console Guide

Content

1 About the Management Console

A brief description of the SAP Data Services Management Console and the Data Services operations that it

administers.

The Management Console contains a collection of Web-based applications for administering the following Data

Services features and objects:

• Jobs and services executions

• Object relationship analysis

• Job execution performance evaluation

• Data validity

• Data quality report generation

Install the Management Console on a separate computer from other Data Services components. Management

Console runs on your Web application server. Management Console is written in Java and uses a JDBC

connection to repositories.

The following table contains Management Console applications and descriptions.

Management Console applications

Application

Description

Administrator Manage your production environment including batch job execution, real-time serv-

ices, web services, adapter instances, server groups, central repositories, proler

repositories, and more.

Impact and Lineage Analysis Analyze the end-to-end impact and lineage for source and target objects used

within the Data Services local repository.

Operational Dashboard View dashboards that provide at-a-glance statistics, status, and performance of

your job executions for one or more repositories over a given time period.

Data Validation Dashboard Evaluate the reliability of your target data based on the validation rules you created

in your batch jobs. Quickly review, assess, and identify potential inconsistencies or

errors in source data.

Auto Documentation View, analyze, and print graphical representations of all objects as depicted in the

Designer including their relationships, properties, and more.

Data Quality Reports View and export reports for batch and real-time jobs, such as job summaries and

data quality transform reports.

1.1 Who should read this guide

This and other SAP Data Services documentation assume the following:

• You are an application developer, consultant or database administrator working on data extraction, data

warehousing, data integration, or data quality.

Management Console Guide

About the Management Console

PUBLIC 7

• You understand your source and target data systems, DBMS, legacy systems, business intelligence, and

messaging concepts.

• You understand your organization's data needs.

• You are familiar with SQL (Structured Query Language).

• If you are interested in using this software to design real-time processing, you are familiar with:

• DTD and XML Schema formats for XML les

• Publishing Web Services (WSDL, REST, HTTP/S and SOAP protocols, etc.)

• You are familiar with SAP Data Services installation environments: Microsoft Windows or UNIX.

1.2 Naming conventions and variables

This documentation uses specic terminology, location variables, and environment variables that describe

various features, processes, and locations in SAP Data Services.

Terminology

SAP Data Services documentation uses the following terminology:

• The terms Data Services system and SAP Data Services mean the same thing.

• The term BI platform refers to SAP BusinessObjects Business Intelligence platform.

• The term IPS refers to SAP BusinessObjects Information platform services.

Note

Data Services requires BI platform components. However, when you don't use other SAP applications,

IPS, a scaled back version of BI, also provides these components for Data Services.

• CMC refers to the Central Management Console provided by the BI or IPS platform.

• CMS refers to the Central Management Server provided by the BI or IPS platform.

Variables

The following table describes the location variables and environment variables that are necessary when you

install and congure Data Services and required components.

8

PUBLIC

Management Console Guide

About the Management Console

Variables Description

INSTALL_DIR

The installation directory for SAP applications such as Data

Services.

Default location:

• For Windows: C:\Program Files (x86)\SAP

BusinessObjects

• For UNIX: $HOME/sap businessobjects

Note

INSTALL_DIR isn't an environment variable. The in-

stallation location of SAP software can be dierent than

what we list for INSTALL_DIR based on the location

that your administrator sets during installation.

BIP_INSTALL_DIR

The directory for the BI or IPS platform.

Default location:

• For Windows: <INSTALL_DIR>\SAP

BusinessObjects Enterprise XI 4.0

Example

C:\Program Files

(x86)\SAP BusinessObjects\SAP

BusinessObjects Enterprise XI 4.0

• For UNIX: <INSTALL_DIR>/enterprise_xi40

Note

These paths are the same for both BI and IPS.

Note

BIP_INSTALL_DIR isn't an environment variable.

The installation location of SAP software can be dierent

than what we list for BIP_INSTALL_DIR based on the

location that your administrator sets during installation.

Management Console Guide

About the Management Console

PUBLIC 9

Variables Description

<LINK_DIR>

An environment variable for the root directory of the Data

Services system.

Default location:

• All platforms

<INSTALL_DIR>\Data Services

Example

C:\Program Files (x86)\SAP

BusinessObjects\Data Services

10 PUBLIC

Management Console Guide

About the Management Console

Variables Description

<DS_COMMON_DIR>

An environment variable for the common conguration di-

rectory for the Data Services system.

Default location:

• If your system is on Windows (Vista and newer):

<AllUsersProfile>\SAP

BusinessObjects\Data Services

Note

The default value of <AllUsersProfile> environ-

ment variable for Windows Vista and newer is

C:\ProgramData.

Example

C:\ProgramData\SAP

BusinessObjects\Data Services

• If your system is on Windows (Older versions such as

XP)

<AllUsersProfile>\Application

Data\SAP BusinessObjects\Data

Services

Note

The default value of <AllUsersProfile> en-

vironment variable for Windows older versions

is C:\Documents and Settings\All

Users.

Example

C:\Documents and Settings\All

Users\Application Data\SAP

BusinessObjects\Data Services

• UNIX systems (for compatibility)

<LINK_DIR>

The installer automatically creates this system environment

variable during installation.

Note

Starting with Data Services 4.2 SP6, users

can designate a dierent default location for

Management Console Guide

About the Management Console

PUBLIC 11

Variables Description

<DS_COMMON_DIR> during installation. If you can't nd

the <DS_COMMON_DIR> in the listed default location, ask

your System Administrator to nd out where your de-

fault location is for <DS_COMMON_DIR>.

<DS_USER_DIR>

The environment variable for the user-specic conguration

directory for the Data Services system.

Default location:

• If you're on Windows (Vista and newer):

<UserProfile>\AppData\Local\SAP

BusinessObjects\Data Services

Note

The default value of <UserProfile> environment

variable for Windows Vista and newer versions is

C:\Users\{username}.

• If you're on Windows (Older versions such as XP):

<UserProfile>\Local

Settings\Application Data\SAP

BusinessObjects\Data Services

Note

The default value of <UserProfile> en-

vironment variable for Windows older ver-

sions is C:\Documents and Settings\

{username}.

Note

The system uses <DS_USER_DIR> only for Data

Services client applications on Windows. UNIX plat-

forms don't use <DS_USER_DIR>.

The installer automatically creates this system environment

variable during installation.

12

PUBLIC

Management Console Guide

About the Management Console

2 Logging into the Management Console

When you log into the Management Console, you must log in as a user dened in the Central Management

Server (CMS). The rst time you log into the Management Console, use the default user name and password

(admin/admin). It's recommended that you change the defaults by updating user roles in the Administrator.

1. Open a web browser, enter the following case-sensitive URL, and then press Enter:

http://<hostname>:8080/DataServices

where <hostname> is the name of the computer hosting the web application server.

Note

If you are logged in to the Designer, you can also access the Management Console home page in

several ways.

From

Select

Start page Data Services Management Console

Tools menu Data Services Management Console

Toolbar icon Data Services Management Console

2. Enter your user credentials for the CMS.

Option

Description

System Specify the server name and optionally the port for the CMS.

User name Specify the user name to use to log into CMS.

Password Specify the password to use to log into the CMS.

Authentication Specify the authentication type used by the CMS.

3. Click Log on.

The software attempts to connect to the CMS using the specied information. When you log in

successfully, the list of local repositories that are available to you is displayed.

2.1 Management Console navigation

The Management Console contains links to various applications that help you manage all aspects of SAP Data

Services. Click the application name to open the application.

After you open an application, the name of that application displays under the Management Console banner

at the top of the screen. There is a navigation tree along the left of the screen under the banner, that lists the

option categories (as nodes) for the application. Choose a node from the navigation tree, and the right side of

Management Console Guide

Logging into the Management Console

PUBLIC 13

the screen displays the related options. Sometimes there is more than one tab to choose from in the right side

of the screen.

The upper-right side of the main window for each application includes helpful links that vary based on the

application you have open. The following table describes the links that can appear.

Link Description

Home Click to return to the Management Console home page

where you can select another application, for example.

Settings Click to open a dialog box for changing a variety of options

depending on the selected application.

Logout Click to exit the Management Console application and Data

Services software, and display the log in page.

? Question mark icon Click to open the Documentation Map, which lists the SAP

Data Services technical documentation available to end

users or administrators, and a link to the customer portal

where you can fnd the latest versions of the documentation.

Your Management Console session times out after 120 minutes (2 hours) of inactivity.

14

PUBLIC

Management Console Guide

Logging into the Management Console

3 Administrator

This section describes the Administrator and how to navigate through its browser-based, graphical user

interface.

Use the Administrator to:

• Set up users and their roles

• Add connections to Access Servers and repositories

• Access job data published for Web Services

• Schedule and monitor batch jobs

• Congure and monitor:

• Access Server status

• Real-time services

• Client interfaces including SAP application client interfaces (to read IDocs) and message trac moving

in and out of an Access Server

• Adapter instances (a prerequisite for creating adapter datastores)

Additionally, you use the tools under the Promotion Management node to congure options to transport Data

Services objects from one system to another. For example, to export changes from a development system

to a test system. For more information about lifecycle management, including promotion management, see

the Administrator Guide. If you have a Change Transport System (CTS) installed in an SAP NetWeaver or SAP

Solution Manager environment, you can use CTS+ to transport Data Services objects. For more information,

see the SAP Data Services Conguration Guide for CTS+.

Related Information

Logging into the Management Console [page 13]

Administrator navigation [page 15]

3.1 Administrator navigation

Set up administrative aspects of Data Services such as users, user permissions, access servers, repositories,

schedules, and so on.

The navigation tree on the left side of the Administrator screen contains nodes that you can expand to make

related settings. The options for each chosen node appear on the right side of the screen. The right portion of

the screen may contain one or more tabs based on the node that you choose.

The navigation tree nodes contain options related to the user's permissions. Therefore not all nodes appear for

all users. For example, the Proler Repositories node only appears if the user has permission to view or manage

a proler repository. An administrator sets user permissions in the Central Management Console (CMC).

Management Console Guide

Administrator

PUBLIC 15

Possible nodes that appear in the navigation tree are:

• Status

• Batch

• Real-Time

• Web Services

• Adapter Instances

• Server Groups

• Proler Repositories

• Management

• Job Execution History

3.1.1Status node

Displays status indicators and detailed information about aspects of your jobs.

The Status page displays red, yellow, and green status icons that indicate the overall status of your batch and

real-time jobs, associated access servers, adapter and proler repositories jobs, services, and other related

systems. You can drill down into the information for more details. The following options appear as column

headings in the Status page.

Option

Description

Batch

Contains repository names that are associated with the Job Server on which

you run your batch jobs. To see batch jobs status, connect the repository to the

Administrator.

Note

The repository must be connected to the Management Console Administra-

tor before you can view it here.

Click the repository name listed under the Batch column to display a list of batch

jobs and the status for each.

Real-Time

Contains Access Server names associated with the real-time service. To see real-

time jobs status, connect the Access Server to the Administrator.

Note

The Access Server must be connected to the Management Console Adminis-

trator before you can view it here.

Click the Access Server name listed under the Real-Time column to display a list

of services and related client interfaces.

16 PUBLIC

Management Console Guide

Administrator

Option Description

Adapters

Contains repository names associated with the Job Server on which you run the

adapter.

Note

You must enable the Job Server for adapters before you can see the adapter

status here.

Click the repository name to display more adapter information.

Proler

Contains the repository name associated with the Proler Server.

Note

You must connect the proling repository to the Management Console Ad-

ministrator before you can view it here.

Click the repository name to display a list of proler tasks and their status.

For more information about adapter and prole repositories, and about repository and server connections, see

the Administrator Guide.

3.1.2Batch node

The Batch node contains job status, job conguration, and repository schedule information.

After you add at least one repository connection to the Administrator, you can expand the Batch node and view

a repository Batch Job Status page.

Click the All Repositories option to see jobs in all repositories connected to this Administrator. (The All

Repositories node appears only when you have more than one repository connected).

Each repository under the Batch node includes the following tabs.

Tab

Description

Batch Job Status View the status of the last execution and in-depth information about each job.

Batch Job Conguration Congure execution and scheduling options for individual jobs.

Repository Schedules

View and congure schedules for all jobs in the repository.

There is a Delete button in the Batch node that deletes the selected job as well as the history and log les. If

you intend to create reports based on specic jobs, transforms, time periods, and job statistics, or if you need

information to create regulatory or certied reports, you should create a local backup copy of the tables or log

les before you delete.

Management Console Guide

Administrator

PUBLIC 17

Related Information

Batch Jobs [page 39]

3.1.3Real-Time node

After you add a connection to an Access Server in the Administrator, you can expand the Real-Time node.

Expand an Access Server name under the Real-Time node to view the options.

Access Server node options

Description

Status View status of real-time services and client interfaces supported by this Access

Server. Control, restart, and set a service provider interval for this Access Server.

Real-time Services View status for services and service providers, start and stop services, add or

remove a service, congure Job Servers for a service.

Client Interfaces View status for client interfaces, start and stop interfaces, add or remove an inter-

face.

Logs - Current View list of current Access Server logs, content of each log, clear logs, congure

content of logs for display, enable or disable tracing for each Access Server.

Logs - History View list of historical Access Server logs, view content of each log, delete logs.

Related Information

Real-Time Jobs [page 54]

Real-Time Performance [page 70]

3.1.4Web Services node

Use this node to select real-time and batch jobs that you want to publish as Web service operations and to

monitor the status of those operations. You can also use the node to set security for jobs published as Web

service operations and view the WSDL le that SAP Data Services generates.

Related Information

Support for Web Services [page 89]

18

PUBLIC

Management Console Guide

Administrator

3.1.5Adapter Instances node

Use this node to congure a connection between SAP Data Services and an external application by creating

an adapter instance and dependent operations. This is a prerequisite requirement for creating a datastore for

adapters in the Designer.

After you create a datastore, import data through the adapter and create jobs. Then use this node to view the

status of Adapter instances. Options are listed by Job Server under the Adapter Instance node.

Related Information

Adapters [page 88]

3.1.6Server Groups node

The Server Groups node allows you to group Job Servers that are associated with the same repository into a

server group.

Use a server group if you want SAP Data Services to automatically use the Job Server on a computer with

the lightest load when a batch job is executed. This functionality improves load balancing (throughput) in

production environments and also provides a hot backup method. When a job is launched, if a Job Server is

down, another Job Server in the same group executes the job.

Related Information

Server Groups [page 34]

3.1.7Proler Repositories node

After you connect a proler repository to the Administrator, you can expand the Proler Repositories node.

Click a repository name to open the Proler Tasks Status page.

Related Information

Prole Server Management [page 79]

Management Console Guide

Administrator

PUBLIC 19

3.1.8Management node

The Management node contains the conguration options for the Administrator application. Before you can

use some features of the Administrator, you must add connections to other SAP Data Services components

using the Management node. For example, expand the management node and:

• Expand Datastore and click a repository to manage datastore congurations for that repository.

• Click Access Servers to add a connection to your Access Servers (for real-time jobs).

Related Information

Administrator Management [page 21]

3.1.9Job Execution History node

Contains execution history for a job or data ow.

When you expand the Job Execution History node, The following information may be available as applicable:

Tab

Description

Job Execution History

You can view execution history for a single batch job or for all batch jobs.

You can rene the history by selecting to view reports that were generated

between a specic start and end date.

The information for the selected batch job appears in a table. This table

displays the repository name, job name, the start and end time of the job

execution, the execution time (elapsed), the status, and whether the job is

associated with a system conguration.

• Under the Job information column, click the Trace, Monitor, or Error

link to open the Log Viewer page, which displays the logs associated

with the job. Click the other tabs in the Log Viewer page to view the

other types of logs.

• Click the Performance Monitor link under the Job information column

to open the Performance Monitor page.

20 PUBLIC

Management Console Guide

Administrator

Tab Description

Data Flow Execution History

This page includes three options for customizing the display:

• Data Flow: Enter a data ow name for which to search and click Search.

• Job Name: Select all jobs or an individual job.

• View history for x days: Select over how many days you want to view

the history

Related information appears in a table. This table displays the repository

name, data ow name, job name, the start and end time of the data ow

execution, the execution time (elapsed), and the number of rows extracted

and loaded.

Related Information

3.1.10Node pages

Each node that you expand on the left opens a page on the right that displays information relating to the node

that you have selected.

The top of the page indicates the currently selected node. If applicable, a page contains tabs that you can use

to navigate further into the node. For example, the Batch node page contains the tabs: Batch Job Status, Batch

Job Conguration, and Repository Schedules.

The tabs names appear on light blue tabs. A dark blue (shaded) tab signies the active page. Click a light blue

tab to go to that page. Some pages do not include tabs.

As you drill into various pages, a “bread crumb” trail often indicates where you are in the Administrator

application. In some pages, you can click on the bread crumb links to navigate to a dierent page.

3.2 Administrator Management

Use the Management features to congure the Administrator.

3.2.1Managing database account changes

SAP Data Services uses several types of user accounts and associated passwords. For various reasons,

database account parameters such as user names or passwords change. For example, perhaps your

company's compliance and regulations policies require periodically changing account passwords for security.

Management Console Guide

Administrator

PUBLIC 21

3.2.1.1 Updating local repository login parameters

If the login information, particularly the password, for a repository has changed, SAP Data Services provides

an optional password le that all schedules or exported execution commands use. In other words, the software

uses this password le to store and update connection information in one location that multiple schedules or

exported execution commands share for that repository.

Note

This description does not apply to central repositories.

The password le:

• Species the connection information for the repository.

• Can be stored in a central location for access by others who run jobs in that repository.

• Is created when you create or update a job schedule to minimize associated maintenance.

Related Information

Using a third-party scheduler [page 48]

3.2.1.1.1 Updating the CMS connection information and

use a password le

1. Expand the Management node.

2. Click CMS Connection.

3. Edit the connection information as necessary.

4. Click Apply.

5. Click Generate password le to create or update the password le.

The default name and location of the le are <DS_COMMON_DIR>\conf\repositoryname.txt.

3.2.1.1.2 Updating job schedules

When database account information for your repository changes, the SAP Data Services job schedules

associated with that account must also be updated. When you use a password le, the job schedules access it

at runtime to automatically retrieve the updated account information.

22

PUBLIC

Management Console Guide

Administrator

Related Information

Scheduling jobs [page 40]

3.2.1.2 Updating datastore connection parameters

If the information associated with a datastore connection changes, particularly passwords, you can update the

changes using the Administrator.

Note

Only users with Administrator role privileges can edit datastore parameters.

3.2.1.2.1 Editing the connection information for an

individual conguration in a datastore

1. Select Management Datastore , and select the repository that contains the datastore conguration

that you want to edit.

2. Click the conguration name to congure.

3. Edit the enabled elds as necessary.

4. Click Apply. To return all elds to the last set of values applied, click Reset.

3.2.1.2.2 Editing the connection information for multiple

congurations in a datastore

1. Select

Management Datastore , and select the repository that contains the datastore congurations

that you want to edit.

2. Click the datastore name to congure.

All congurations for that datastore display.

3. Edit the enabled elds as necessary.

Click More to display the page for that individual conguration, which includes more options specic to it.

4. Click Apply. To return all elds to the last set of values applied, click Reset.

Management Console Guide

Administrator

PUBLIC 23

3.2.2Editing le location object information

Edit a le location object conguration to change current settings like host, port, user, password, remote and

local directories.

1. Select Management File Locations .

2. Select the repository that contains the le location object conguration to edit.

3. Click the File location and conguration name to edit.

4. Edit the enabled elds as necessary.

Note

You cannot change the Name, Type, or Protocol options.

5. Click Apply.

Related Information

3.2.3Conguring the report server

For each repository registered in the Central Management Console (CMC), a report server conguration is

automatically created with default parameters. The Report Server Conguration node in the Management

Console Administrator lets you edit the default parameters, including the location where job reports are written

upon execution.

1. Select Management Report Server Conguration <repository> .

The Report Server Conguration page opens and displays the report export conguration parameters. If

the conguration has not yet been saved for the selected repository, the page displays default parameters.

2. Enter the appropriate conguration information.

Option

Description

Host name The name of the machine that the report server is running on. By default, the current

web application server name is used. Localhost is not a valid name.

Communication port The port number of the machine that the report server is running on.

24 PUBLIC

Management Console Guide

Administrator

Option Description

Export location The path where the reports will be exported to. The default path is

<DS_COMMON_DIR>\DataQuality\reports\. Upon execution, the repository

name and job name folders are appended to the path. If the Overwrite option is not

selected, a run ID folder is also appended to the path.

Note

If you export reports to a location other than a local drive, such as a network drive,

before you execute the job you must start the Server Intelligence Agent service with

an account that has access rights to that location.

Export type

The format in which the reports can be exported (PDF or RTF).

Overwrite Species whether existing reports will be overwritten when the reports are exported. If

this option is not selected, the reports are exported to a subfolder with the run ID, which

species a unique identication of an instance of the executed job.

Language The supported language that the reports are generated in. Note that some reports,

such as country-specic certication reports, are designed only to support English, so

changing the option for those reports has no eect.

3. Click Apply to save the conguration. To return all elds to the last set of values applied, clicking Reset.

4. Verify that the security setting for this operation is disabled. Select Administrator Web Services and

click the Web Services Conguration tab. If the Export_DQReport operation is enabled (displays a check

in the Session Security column), select the checkbox next to it, select Disable Session Security from the

pull-down menu, and click the Apply button.

To generate and export all of the job reports to the specied location at runtime, select the Export Data Quality

Reports option when you execute the job.

Related Information

Adding a job schedule [page 41]

3.2.4Adding Access Servers

The Administrator acts as a front end for Access Servers connected to it. Use the Administrator to:

• Congure real-time jobs as real-time services.

• Congure real-time services with service providers.

• Monitor Access Servers, real-time services, and service providers.

You rst must connect an Access Server to the Administrator so that you can use the Administrator to create

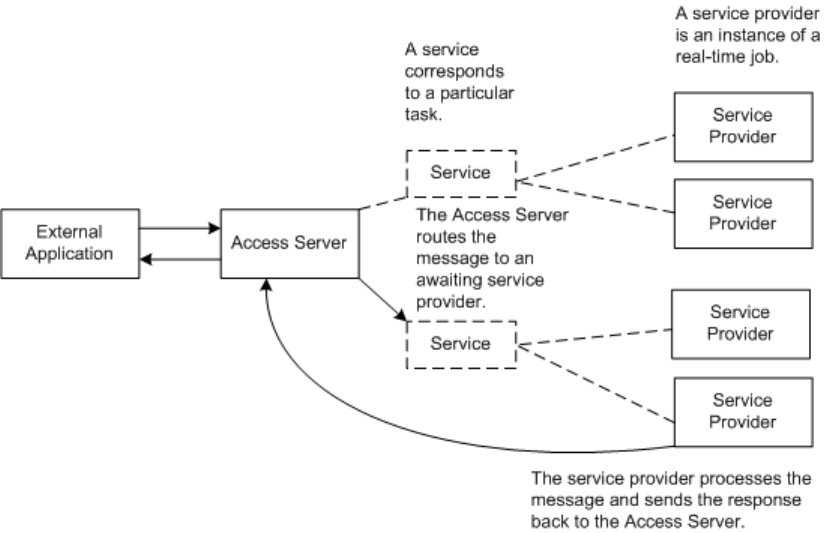

a real-time service from a real-time job. After a service starts, the Access Server brokers messages between

external applications and SAP Data Services.

When a message request comes in, the Access Server communicates with the Job Server to get the repository

data needed to run a real-time service and process the message. A reply comes back through the Access

Management Console Guide

Administrator

PUBLIC 25

Server to the message originator and the Access Server log records the event, which you can monitor from the

Administrator.

Use the Access Servers page to connect an Administrator to a repository.

1. Select Management Access Servers .

2. Click Add.

3. Enter the following information.

Option

Description

Machine Name Host name of the computer on which the Access Server is installed.

Communication Port Port assigned to the Access Server in the Server Manager utility.

4. (Optional) Before attempting to register the Access Server with the Administrator, click Ping to see if the

Access Server is available and exists on the computer and port you specied.

5. Click Apply.

The Administrator registers the Access Server, validates the Access Server connection information, and

displays the information on the Access Servers page.

To view a list of Access Servers connected to the Administrator, select Management Access Servers .

The Access Servers page lists the Access Servers that are connected to the Administrator. You can also remove

a connection to an Access Server from this page.

3.2.5Setting the status interval

Use the Status Interval page to specify the time period for which the Administrator displays the status (using

the red, yellow, and green status icons) on the Batch Job Status page and the Real-Time History page.

1. Select Management Status Interval .

2. On the Status Interval page, specify the time period.

You can lter the information for Batch and Real-time jobs in the following ways:

• By the last execution of each job

• By number of days

Note

The default for Real-Time Display is 5 days.

• By range of dates

3. Click Apply.

The Administrator updates the list of job executions and the status interval displays on the Batch Job

Status page and the Real-Time History page.

26

PUBLIC

Management Console Guide

Administrator

3.2.6Exporting certication logs

Generate and export certication logs for supported postal authorities.

When you run address cleanse jobs and set the appropriate options, you can generate reports to qualify

for mailing discounts with supported postal authorities. Generate the certication log les required for

certications in the Management Console.

The Certication Logs page is available to users who are assigned either the Administrator or Operator role.

Related Information

Exporting NCOALink report data [page 27]

Exporting New Zealand SOA certication logs [page 28]

Exporting DSF2 certication log [page 29]

3.2.6.1 Exporting NCOALink report data

Before you export report data (with exception of Null reports), you must rst run a job containing a USA

Regulatory Address Cleanse transform with the NCOA options set appropriately. You must also congure your

repository in the Central Management Console (CMC).

You can export report data for the data in one repository or in all repositories.

Caution

If you select all repositories and have more than one connection to the same repository, your results may

contain duplicate records.

1. Select Management Certication Logs , and select the repository that contains the data that you

want to export.

2. Click the NCOALink tab.

3. Select the date range that contains the data that you want to export.

4. To export standard reports, enable Export standard monthly reports for NCOA Licensee ID and select the

NCOALink Licensee ID for the les that you want to export. To export a null monthly report, enable Export

Null monthly reports for NCOA Licensee ID and enter a 4-character NCOALink Licensee ID.

Note

Selecting an entry with a NCOA Processed? value of Yes for export will result in standard monthly

reports being exported even if you have the Export NULL monthly reports for NCOA Licensee ID option

selected. A No value indicates that data isn't available (for example, NCOA jobs were not run for the

specied Licensee ID(s) and date range), which results in a null monthly report being exported.

5. Specify the location where the les will be exported.

Management Console Guide

Administrator

PUBLIC 27

Note

The location that you specify is relative to the web application server.

To reset the export location to the default, click the Reset button. The default location is

<DS_COMMON_DIR>\DataQuality\certifications\CertificationLogs\<repository>\.

6. If you want to overwrite an existing le, click the Overwrite option.

7. Click the Search button. The page displays available report entries based on the options you select. For

example, if you have Export standard monthly reports for NCOA Licensee ID and All NCOA Licensee IDs

selected, report entries for all IDs for the specied dates will appear in the table. You can sort using the

column headers.

8. Select the report data that you want to export or select Select All.

9. Click the Export button.

After the report data is exported, a conrmation message is displayed at the top of the page.

Related Information

NCOALink Processing Summary Report [page 136]

3.2.6.2 Exporting New Zealand SOA certication logs

Before you export the certication log, you must have run a job containing a Global Address Cleanse transform

with the New Zealand SOA certication options set appropriately. You must also congure your repository in

the CMC.

You can export the certication log for the data in one repository or in all repositories.

Caution

If you select all repositories and have more than one connection to the same repository, your results may

contain duplicate records.

1. Select Management Certication Logs , and select the repository that contains the certication log

that you want to export.

2. Click the New Zealand SOA tab.

3. Select whether you want to export all certication log data that is in the selected repository or just the data

within a specied date range.

The Year list contains the current year and the two previous years, because certication logs are required

to be retained for two years.

4. Specify the location where the certication logs will be exported.

The default location is

<DS_COMMON_DIR>\DataQuality\certifications\CertificationLogs\<repository>\. To reset

the export location to the default, click the Reset button.

5. If you want to overwrite an existing log le, click the Overwrite option.

28

PUBLIC

Management Console Guide

Administrator

6. Click the Export button.

After the log le is exported, a conrmation message is displayed at the top of the page.

Related Information

New Zealand Statement of Accuracy (SOA) report [page 147]

3.2.6.3 Exporting DSF2 certication log

Export DSF2 log les to submit them to the USPS as required by your DSF2 license agreement.

Select to export from one repository or from multiple repositories as applicable. However, if you select multiple

repositories, and there is more than one connection to a repository, you may export duplicate records.

1. Open and log in to the Data Services Management Console.

2. In the Administrator, select

Management Certication Logs .

3. Select the repository that contains the certication log that you want to export.

4. Open the DSF2 tab and select the date range for when you generated the log le.

5. Click DSF2 licensee ID and select the applicable licensee ID from the dropdown list. Or, select All DSF2

License IDs.

6. Specify a location for the exported certication logs.

Note

The location that you specify is relative to the web application server.

To reset the export location to the default, click the Reset button. The default location is

<DS_COMMON_DIR>\DataQuality\certifications\CertificationLogs\<repository>\.

7. Optional. Click Overwrite to overwrite any existing log le.

8. Click Search.

The page displays the available log les with the specied criteria. You can sort the log les using the

column headers. The Data Available column has a status of Yes when there is data for the log le. A status

of No indicates that no data is available and the export results in an empty log le.

9. Select the log le or les to export or check Select All.

10. Click Export.

The software displays a conrmation message when the export completes.

Related Information

DSF2 Augment Statistics Log File [page 145]

Data Quality Reports [page 131]

Management Console Guide

Administrator

PUBLIC 29

3.3 Central Repository management

This section describes how to manage your secure central repositories using the Administrator.

When you log into the Management Console as a user with the appropriate rights, the name of each secure

central repository appears under the Central Repositories node. Links under this node include:

• Users and groups

Use to add, remove, and congure users and groups for secure object access.

• Reports

Use to generate reports for central repository objects such as viewing the change history of an object.

Note

Before you can manage a secure central repository, it must be registered in the Central Management

Console (CMC) and have appropriate user access applied.

Related Information

3.3.1 Setting up users and groups

The general process for setting up secure central repository users and groups is as follows:

1. Register the secure central repository in the Central Management Console (CMC).

2. Add central repository groups.

3. Associate users with groups.

The following sections describe these procedures.

Related Information

3.3.1.1 Adding a group to a central repository

Groups are specic to a secure central repository and are not visible in any other local or central repository.

1. Expand the Central Repositories node in the navigation tree and expand the repository to congure.

2. Click Users and Groups.

The Groups and Users page displays.

30

PUBLIC

Management Console Guide

Administrator

3. On the Groups tab, click Add.

4. Type a Name for the group.

5. Optionally, type a Description for the group.

6. Click Apply.

The group appears on the Groups tab.

3.3.1.2 Adding users

1. Expand the Central Repositories node in the navigation tree and expand the repository to congure.

2. Click Users and Groups.

The Groups and Users page displays.

3. Click the Users tab.

4. Click Add.

On the Add/Edit User page, enter the following information.

Option

Description

User name Select the user to add to the group.

Note

The list of available users includes all users dened in the Central

Management Console (CMC).

Default group

The default central repository group to which the user belongs. You can

change the default by selecting another from the drop-down list.

Status Select a value from the drop-down list:

• Active

Enables the user's account for normal activities.

• Suspended

Select to disable the login for that user.

Description

Optionally, type a description for the user.

The User is a member of list on the left shows the groups to which this user belongs.

5. Click Apply.

Clicking Reset returns all elds to the last set of values applied.

3.3.1.3 Adding or removing a user from a group

1. Expand the Central Repositories node in the navigation tree and expand the repository to congure.

2. Click Users and Groups.

3. Click the Group tab.

Management Console Guide

Administrator

PUBLIC 31

4. Click the group name.

5. The Members list on the left shows the users in this group.

To add users to a group, click the user names from the Not Members list and click Add Users. Select

multiple user names using the Ctrl or Shift keys.

To remove a user from the group, select a user name from the Members list and click Remove Users. Select

multiple user names using the Ctrl or Shift keys.

6. Click Apply.

Clicking Reset returns all elds to the last set of values applied.

Alternately, click the Users tab, click the user name, and associate the user with one or more groups by

selecting group names and adding or removing them.

Related Information

3.3.1.4 Deleting a group

1. Expand the Central Repositories node in the navigation tree, expand the repository to congure, and click

Users and Groups.

2. Click the Group tab.

3. Select the check box for the group.

4. Click Remove.

Note

You cannot delete a group in the following instances:

• It is the default group for any user (whether or not they are active).

• It is the only group with full permissions for an object.

• A member of the group is undertaking any central repository tasks using the Designer.

3.3.2Viewing reports

You can generate reports about objects in a central repository such as which objects a user currently has

checked out or the changes made to an object over a specied time frame.

Expand the central repository to view and expand the Reports link.

32

PUBLIC

Management Console Guide

Administrator

Related Information

Object state report [page 33]

Change report [page 33]

3.3.2.1 Object state report

Use the object state report to view details on one or more objects such as whether the objects are checked out

and by whom.

Click the Object State Report link to display a search page with the following criteria (all elds are optional):

Option

Description

Object name Type an object name. You can use the % symbol as a wildcard.

Object type For example select Batch job, Table, or Stored procedure.

State For example select Checked out.

User Select a central repository user name.

Click Search to generate the report. The report has the following columns:

• Object name

• Object type

• State

• User name—The user account associated with the check-out or check-in.

• Associated repository—The repository to which the object belongs.

• Time—Check-out or check-in date and time.

• Comments—Comments added when user checked out or checked in the object.

Click the object name to display the object's history.

Related Information

3.3.2.2 Change report

Use the change report to view the change history for an object over a specied period of time.

Click the Change Report link to display a search page with the following criteria:

• Start date—Enter a date or click the calendar icon to select a start date.

• End date—Enter a date or click the calendar icon to select an end date.

Management Console Guide

Administrator

PUBLIC 33

• Object type—Optionally select an object type; for example batch job, table, or stored procedure.

• State—Optionally select an object state; for example Checked out.

• User—Optionally select a central repository user name.

Click Search to generate the report. The report has the following columns:

• Object name

• Object type

• State

• Version—The version number of the object.

• User name—The user account associated with the check-out or check-in.

• Associated repository—The repository to which the object belongs.

• Time—Check-out or check-in date and time.

• Comments—Comments added when user checked out or checked in the object.

3.4 Server Groups

Use the Administrator to create and maintain server groups.

This section describes how to work with server groups.

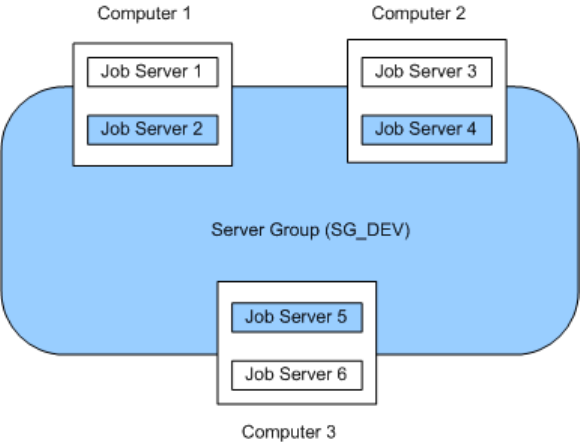

3.4.1Server group architecture

You can group Job Servers on dierent computers into a logical SAP Data Services component called a server

group. A server group automatically measures resource availability on each Job Server in the group and

distributes scheduled batch jobs to the Job Server with the lightest load at runtime.

There are two rules for creating server groups:

• All the Job Servers in an individual server group must be associated with the same repository, which must

be dened as a default repository. The Job Servers in the server group must also have:

• Identical SAP Data Services versions

• Identical database server versions

• Identical locale

• Each computer can only contribute one Job Server to a server group.

34

PUBLIC

Management Console Guide

Administrator

The requirement that all Job Servers in a server group be associated with the same repository simply allows

you to more easily track which jobs are associated with a server group. It is recommended that you use a

naming convention for server groups that includes the name of the repository. For example, for a repository

called DEV, a server group might be called SG_DEV.

On startup, all Job Servers check the repository to nd out if they must start as part of a server group.

Compared to normal Job Servers, Job Servers in a server group each:

• Collect a list of other Job Servers in their server group

• Collect system load statistics every 60 seconds:

• Number of CPUs (on startup only)

• Average CPU load

• Available virtual memory

• Service requests for system load statistics

• Accept server group execution requests

3.4.1.1 Load balance index

All Job Servers in a server group collect and consolidate system load statistics and convert them into a

load balance index value for each Job Server. A Job Server's load balance index value allows the software to

normalize statistics taken from dierent platforms. The Job Server with the lowest index value is selected to

execute the current job. The software polls all Job Server computers every 60 seconds to refresh the load

balance index.

Management Console Guide

Administrator

PUBLIC 35

3.4.1.2 Job execution

After you create a server group, you can select a server group to execute a job from the Designer's Execution

Properties window or from the Execute Batch Job, Schedule Batch Job, and Export Batch Job pages in the

Administrator.

When you execute a job using a server group, the server group executes the job on the Job Server in the group

that is running on the computer that has the lightest load. The Administrator will also resynchronize a Job

Server with its repository if there are changes made to the server group conguration settings.

You can execute parts of your job on dierent Job Servers in a server group. You can select the following

distribution levels from the Designer's Execution Properties window or from the Execute Batch Job, Schedule

Batch Job, and Export Execution Command pages in the Administrator:

• Job level

A job can execute on an available Job Server.

• Data ow level

Each data ow within a job can execute on an available Job Server.

• Sub data ow level

A resource-intensive operation (such as a sort, table comparison, or table lookup) within a data ow can

execute on an available Job Server.

Related Information

3.4.1.3 Job launcher

The Job Launcher, exported as part of a job's execution commands, includes a specic command line option

for server groups. You can use this option to change the Job Servers in a server group.

Related Information

About the job launcher [page 51]

3.4.1.4 Working with server groups and Designer options

Some Designer options assume paths are relative to a Job Server. If your Job Servers are on dierent machines

from your Designer (typically the case in a production environment) you must ensure that connections and

directory paths point to the Job Server host that will run the job. Such options include:

• Source and target directories for les

36

PUBLIC

Management Console Guide

Administrator

• Bulk load directories

• Source and target connection strings to databases

• Path to repositories

When using server groups consider the additional layer of complexity for connections. For example, if you have

three Job Servers in a server group:

• Use the same directory structure across your three host computers for source and target le operations

and use relative paths for le names.

• Use the same connection strings to your databases for all three Job Server hosts.

If you use job distribution levels, the Job Servers in the server group must have:

• Identical SAP Data Services versions

• Identical database server versions

• Identical locale

• Identical operating systems

Thoroughly test the Job Server job options when working with server groups.

Adding a server group:

• In the Administrator, use the Server Groups node to create and add a server group.

3.4.1.4.1 Adding a server group

1. Select

Server Groups All Server Groups .

2. Click the Server Group Conguration tab.

3. Click Add.

4. Follow the instructions on the Add Server Group page to create a server group.

• When you select a repository, all Job Servers registered with that repository display. You can create

one server group per repository.

• Notice that the Administrator provides a default server group name. It is the name of your repository

with the prex SG_ (for server group). You can change the default name, however, labeling a server

group with the repository name is recommended.

• One Job Server on a computer can be added to a server group. Use the Host and Port column to verify

that the Job Servers you select are each installed on a dierent host.

5. After you select the Job Servers for a server group, click Apply.

The display returns to the Server Group Conguration page.

Related Information

Monitoring Job Server status in a server group [page 38]

Management Console Guide

Administrator

PUBLIC 37

3.4.2Editing and removing a server group

You can select a new set of Job Servers for an existing server group or remove a server group.

Trace messages are written for a change in Job Server status when you create, edit, or remove server groups.

• When a Job Server is upgraded to membership in a server group, the trace message is:

Collecting system load statistics, maintaining list of Job Server(s) for this

server group, and accepting Job Server execution requests.

• When a Job Server is downgraded out of a server group, the trace message is:

Deleting current system load statistics, and not collecting more. Not accepting

job execution requests from a server group.

3.4.2.1 Editing a server group

1. Select a server group from the navigation pane on the left.

2. In the Server Groups page, click the Server Group Conguration tab.

3. Select a new set of Job Servers.

4. Click Apply.

Your edited server group is saved and the display returns to the Server Groups Conguration tab.

3.4.2.2 Removing a server group

1. In the Server Groups page, click the Server Group Conguration tab.

2. Select the check box for the server group(s) that you want to remove.

3. Click Remove.

Note

If you delete Job Servers from a repository in order to delete all the Job Servers in a server group, the

Administrator displays an invalid status for the server group.

3.4.3Monitoring Job Server status in a server group

If Job Servers are in a server group, you can view their status in the Administrator.

• To monitor the status of these Job Servers, select Server Groups All Server Groups .

The Server Group Status page opens. All existing server groups are displayed with the Job Servers they

contain.

38

PUBLIC

Management Console Guide

Administrator

Indicator Description

A green icon indicates that a Job Server is running.

A yellow icon indicates that a Job Server is not running.

A red icon indicates that the Job Server cannot connect to the repository.

If a server group contains Job Servers with a mix of green, yellow, or red indicators, then its indicator

appears yellow:

Otherwise, a server group indicator displays the same color indicator as its Job Servers.

• To view the status for a single server group, select its name.

3.4.4Executing jobs using server groups

After you create a server group, you can select a server group to execute a job from the Designer's Execution

Properties window or from the Execute Batch Job and Schedule Batch Job pages in the Administrator.

Related Information

Batch Jobs [page 39]

3.5 Batch Jobs

This section describes how to execute, schedule, and monitor batch jobs from the Administrator.

Before you can manage batch jobs with the Administrator, add repository connections.

You can control some of the behavior in the Batch Job Status tab by making settings in the Central

Management Console (CMC). For example, set the number of days to retain the job execution history or the

Job Server log. Or set the number of seconds from the job start time that the Managemet Console displays an

accurate job status for jobs that crash mid-execution.

For complete descriptions of these options, see the Server Management section of the Administrator Guide, or

search for History Retention Period, Job Server Log Retention Period, or Job History Cleanup Period.

3.5.1Executing batch jobs

You can execute batch jobs from the Administrator if their repositories are registered in the Central

Management Console (CMC) and your user has the appropriate rights.

Management Console Guide

Administrator

PUBLIC 39

1. Select Batch <repository> .

The Administrator opens the Batch Job Status page, which lists all of the jobs in the selected repository.

To view jobs in all repositories from this page, select Batch All Repositories . (The All Repositories

option appears under the Batch Job node if more than one repository is connected to the Administrator.)

2. Click the Batch Job Conguration tab.

3. To the right of the job you want to run, click Execute.

The Administrator opens the Execute Batch Job page.

4. Under Enter Execution Options, set the parameters for the execution of this job.

5. Under Select Trace Options, set the trace properties for this execution of the job.

6. To create a debug package, select the Debug Package option.

You can download the package from the Batch Job Status page.

7. Click Execute to run the job.

The Administrator returns to the Batch Job Status page.

Related Information

3.5.2Scheduling jobs

There are three ways to manage job schedules.

Related Information

Using the job scheduler [page 40]

Scheduling jobs in SAP BusinessObjects Business Intelligence platform [page 45]

Using a third-party scheduler [page 48]

3.5.2.1 Using the job scheduler

When you schedule batch jobs using the SAP Data Services job scheduler, it creates an entry in the operating

system's scheduling utility on the Job Server computer. Windows uses the Task Scheduler and UNIX systems

use the CRON utility. (Note that if you make changes to a schedule directly through these utilities, the job

scheduler will not reect those changes.)

40

PUBLIC

Management Console Guide

Administrator

3.5.2.1.1 Adding a job schedule

1. Select Batch <repository> .

2. Click the Batch Job Conguration tab.

3. For the job to congure, click Add Schedule.

4. On the Schedule Batch Job page, enter the desired options:

Option

Description

Enter a job schedule

Schedule name Enter a unique name that describes this schedule.

Note

You cannot rename a schedule after you create it.

Active

Select this box to enable (activate) this schedule, then click Apply. This

option allows you to create several schedules for a job and then activate

the one(s) you want to run.

Select a scheduler

Data Services scheduler Creates the schedule on the Job Server computer.

BOE scheduler Creates the schedule on the selected Central Management Server

(CMS).

Select scheduled day(s) for executing the job

Calendar From the drop-down list on the calendar, select:

• Day of Week to schedule the job by the day of the week. You can

select one or more days. Click again to deselect.

• Day of Month to schedule the job by date. You can select one or more

dates. Click again to deselect.

If Recurring is selected, then the Administrator schedules this job to

repeat every week or month on the selected day. Note that if you select

multiple days of the week or month, the job will run on a recurring basis

by default.

Select scheduled time for executing the jobs

Once a day Enter the time for the scheduler to start the job (hours, minutes, and

either AM or PM).

Multiple times a day

• For the Data Services scheduler, enter the time (hours, minutes, and

either AM or PM) for the scheduler to repeatedly run the job for the

selected duration (in minutes) at the selected interval (in minutes).

• For the BOE scheduler, enter (in minutes) the repeat interval to run

the job. You must also select all days in the calendar (for weekly or

monthly).

Select a time when all of the required resources are available. Typically,

you want to schedule jobs to ensure they nish before the target data-

base or data warehouse must be available to meet increased demand.

Management Console Guide

Administrator

PUBLIC 41

Option Description

Select job execution parameters

System conguration Select the system conguration to use when executing this job. A system

conguration denes a set of datastore congurations, which dene the

datastore connections.

For more information, see “Creating and managing multiple datastore

congurations” in the Designer Guide.

If a system conguration is not specied, the software uses the default

datastore conguration for each datastore.

This option is a run-time property. This option is only available if there are

system congurations dened in the repository.

Job Server or server group

Select the Job Server or a server group to execute this schedule.

Use password le Select to create or update the password le that the job schedule ac-

cesses for current repository connection information. Deselect the option

to generate the batch le with a hard-coded repository information.

Note

This option is disabled if you have not set up a CMS connection.

Enable auditing

Select this option if you want to collect audit statistics for this specic job

execution. This option is selected by default.

For more information about auditing, see “Using Auditing” in the De-

signer Guide.

Disable data validation statistics collection

Select this option if you do not want to collect data validation statistics

for any validation transforms in this job. This option is not selected by

default.

Enable recovery Select this option to enable the recovery mode when this job runs.

Recover from last failed execution Select this option if an execution of this job has failed and you want to

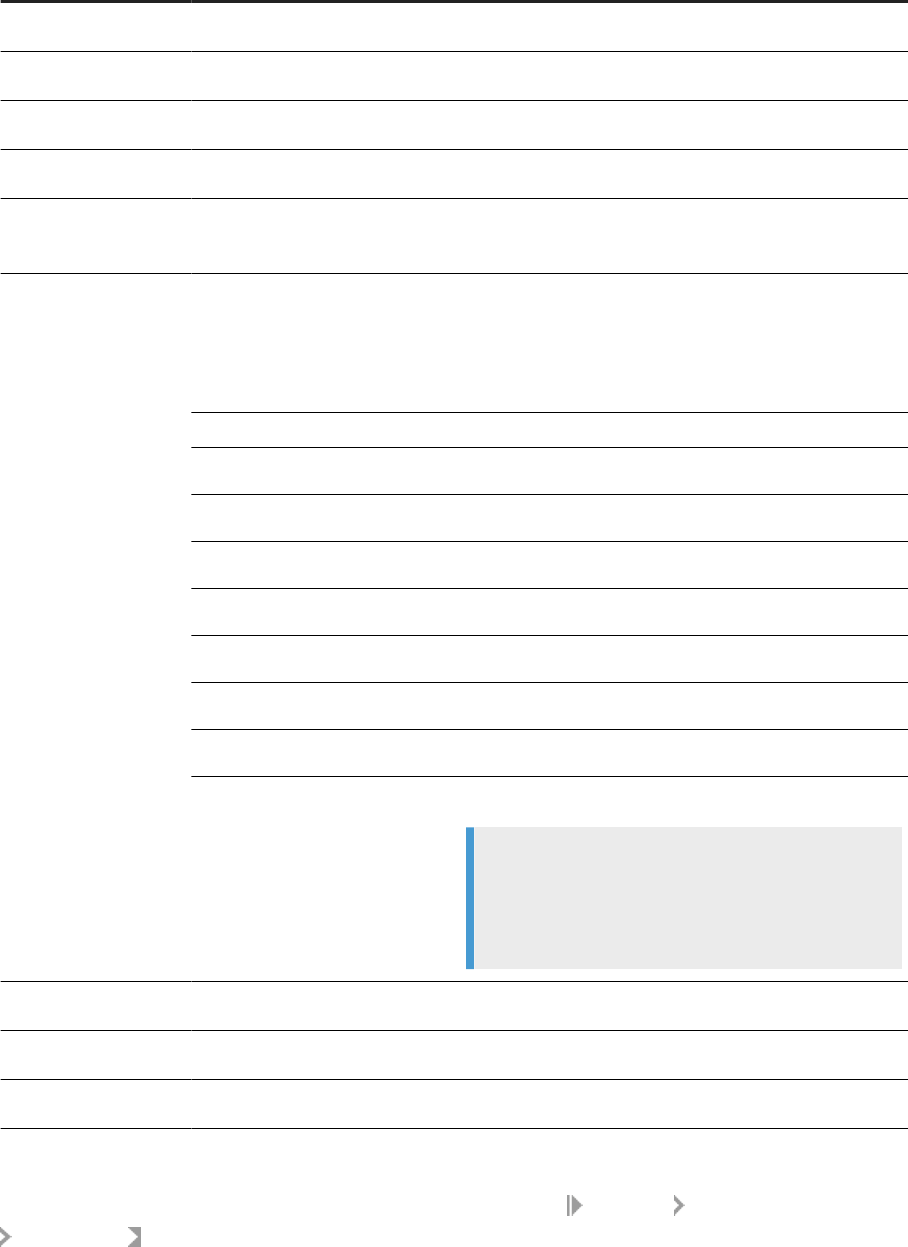

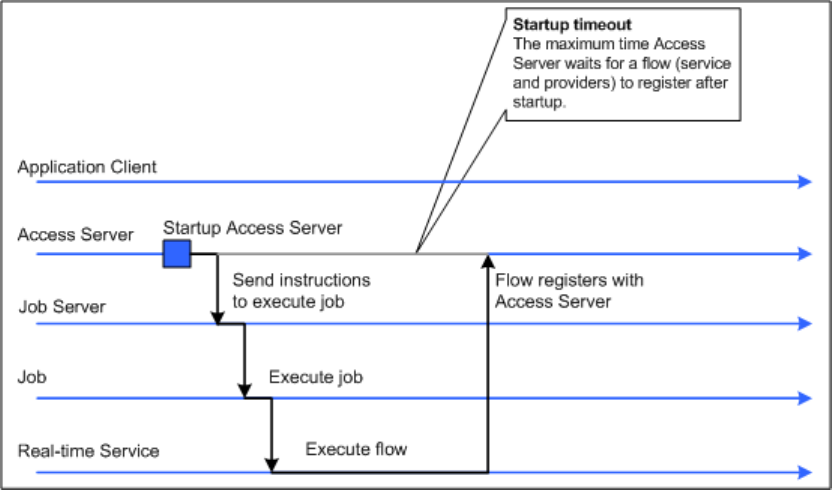

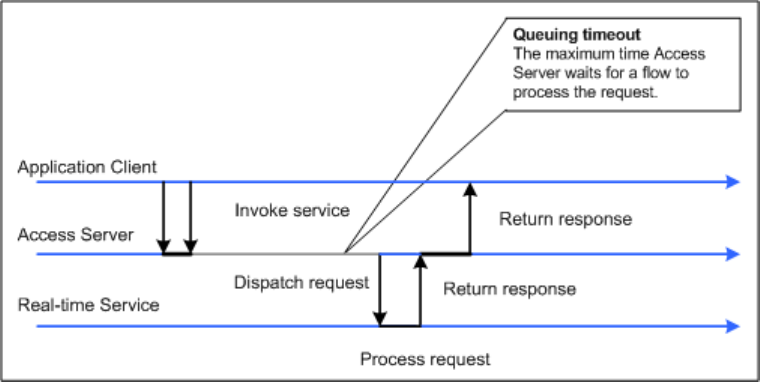

enable the recovery mode.