521

[ Journal of Labor Economics, 2006, vol. 24, no. 3]

䉷 2006 by The University of Chicago. All rights reserved.

0734-306X/2006/2403-0005$10.00

Evaluating the Differential Effects of

Alternative Welfare-to-Work Training

Components: A Reanalysis of the

California GAIN Program

V. Joseph Hotz, University of California, Los Angeles

Guido W. Imbens, University of California, Berkeley

Jacob A. Klerman, RAND

We show how data from an evaluation in which subjects are randomly

assigned to some treatment versus a control group can be combined

with nonexperimental methods to estimate the differential effects of

alternative treatments. We propose tests for the validity of these meth-

ods. We use these methods and tests to analyze the differential effects

of labor force attachment (LFA) versus human capital development

(HCD) training components with data from California’s Greater Av-

enues to Independence (GAIN) program. While LFA is more effec-

tive than HCD training in the short term, we find that HCD is

relatively more effective in the longer term.

I. Introduction

In this article, we explore ways of combining experimental data and

nonexperimental methods to estimate the differential effects of compo-

nents of training programs. In particular, we show how data from a multi-

We wish to thank Julie Mortimer, Wes Hartmann, and especially Oscar Mitnik

for their able research assistance on this project. Jan Hanley, Laurie McDonald,

and Debbie Wesley of RAND helped with the preparation of the data. We also

522 Hotz et al.

site experimental evaluation in which subjects are randomly assigned to

any treatment versus a control group that receives no treatment can be

combined with nonexperimental regression-adjustment methods to esti-

mate the differential effects of particular types of treatments. Our methods

allow the implemented programs to vary across sites and across subjects

within a site. The availability of such experimental data allows us to test,

in part, the plausibility of our regression-adjustment methods for elimi-

nating selection biases that result from nonrandom assignment of program

components to individuals in the various sites. We use our method to

adjust for across-site differences in background and preprogram variables

as well as postrandomization local economic conditions and validate our

methods on experimentally generated control groups in the spirit of the

approaches taken in LaLonde (1986), Heckman and Hotz (1989), Fried-

lander and Robins (1995) and in Heckman, Ichimura, and Todd (1997,

1998), Heckman, Ichimura, Smith, and Todd (1998), Dehejia and Wahba

(1999), and Hotz, Imbens, and Mortimer (2005).

We apply these methods and tests to reexamine the conclusions about

the relative effectiveness of alternative strategies for designing welfare-to-

work training programs. Over the last 3 decades, as the United States has

sought to reform its welfare system, states have sought to design their

mandated welfare-to-work programs in order to reduce dependency on

welfare and promote work among disadvantaged households. Over this

period, states differed in the components, or approach, they emphasized

in these programs.

One approach, the human capital development (HCD) approach, em-

phasizes education and vocational training programs, such as General

Equivalency Diploma (GED) and English as Second Language (ESL)

programs and vocational training in the health care industry. The HCD

approach seeks to improve the basic and job-related skills of welfare

recipients. Advocates of this approach argue that acquiring such skills is

necessary for adults on welfare to “get a job, especially one that is rela-

wish to thank Howard Bloom, Jim Riccio, Hans Bos, John Wallace, David Ell-

wood, and participants in the Institute for Research on Poverty and NBER sum-

mer institutes, workshops at Berkeley and UCLA, and the Tenth International

Conference on Panel Data for helpful comments on an earlier draft of this article

and also two referees who provided valuable comments and suggestions on an

earlier draft of this article. This research was funded, in part, by NSF Grant SES

9818644. Development of the methodological approaches used in this research

also was funded by a contract from the California Department of Social Services

to the RAND Corporation for the conduct of the Statewide CalWORKs Eval-

uation. All opinions expressed in this article and any remaining errors are solely

our responsibility. In particular, this article does not necessarily represent the

position of the National Science Foundation, the State of California or its agencies,

RAND, or the RAND Statewide CalWORKs Evaluation. Contact the corre-

Impacts of the GAIN Program 523

tively stable, pays enough to support their children and leaves them less

vulnerable during an economic downturn” (Gueron and Hamilton 2002,

1).

The other primary approach used in designing welfare-to-work pro-

grams is labor force attachment (LFA), such as job clubs, which teaches

welfare recipients how to prepare re´sume´s and interview for jobs and

provides assistance in finding jobs. The LFA approach seeks to move

adults on welfare quickly into jobs, even if they are low-paying jobs.

Supporters of the LFA approach “see work as the most direct route to

ending ...thenegative effects of welfare on families and children”

(Gueron and Hamilton 2002, 2). The advocates of the LFA approach also

argue that it is better than the formal classroom training stressed in the

HCD approach to build the skills of most low-skilled adults. A natural

question is, Which approach is better?

The MDRC Greater Avenues to Independence (GAIN) Evaluation was

one of the most influential evaluations that shed light on the impacts of

these two approaches. Welfare recipients in six California counties were

randomly assigned either to a treatment group that was to receive services

in a county based and designed welfare-to-work program or to a control

group to which these services were denied. Under the GAIN program,

California’s counties had considerable discretion designing their welfare-

to-work programs, and counties emphasized the LFA versus HDC ap-

proaches to different degrees. Thus, the MDRC study conducted separate

evaluations of each county’s program, where the training components of

the program, the populations served, and the prevailing local economic

conditions varied across counties.

To date, the results of this experimental evaluation often have been

interpreted as favoring the LFA relative to the HCD approach. Based on

an analysis of data, 3 years after random assignment, MDRC found that

the largest effects on participants were for Riverside County’s GAIN

program, a program that emphasized the LFA approach.

1

In contrast, the

GAIN participants in the three largest of the other counties in the MDRC

Evaluation (Alameda, Los Angeles, and San Diego counties), which placed

1

Among female heads of households on Aid to Families with Dependent Chil-

dren (AFDC) in Riverside’s GAIN program, the number of quarters in which

recipients worked was 63% higher than those for the control group, and trainees’

labor market earnings were 63% higher over the 3-year evaluation period. Riv-

erside County’s GAIN program emphasized the LFA approach with tightly fo-

cused job search assistance as well as providing participants with the consistent

message “that employment is central and should be sought expeditiously and that

opportunities to obtain low-paying jobs should not be turned down” (Hogan

1995, 5).

524 Hotz et al.

much greater emphasis on HDC, had much smaller gains.

2

The LFA, or

“work-first,” approach of Riverside received national (and international)

acclaim for its success

3

and has become the model for welfare-to-work

programs across the nation.

4

The fallacy of this conclusion stems from attributing all of the differ-

ences in results across counties to differences in the treatment approaches

used. For example, treatment effects could vary across programs due to

differences in the populations treated, in the strategies used to assign

various treatment components across that population, and to differences

in the economic environments and local labor market conditions.

5

While

MDRC made clear in its reports that its experimental design did not allow

one to directly draw inferences about the differential impact of alternative

types of welfare-to-work components such as LFA and HCD, the results

have been consistently interpreted by policy makers in exactly that way.

Thus, the second objective of this article is to use our methods to address

directly whether LFA worked better than HCD based on data for subjects

in the MDRC GAIN evaluation.

A third objective of our article is to distinguish the short-run from the

long-run effects of LFA versus HCD training components. The formal

MDRC GAIN evaluation was based on experimental estimates of pro-

gram impacts for a 3-year postrandomization period.

6

Extrapolating from

such short-run estimates of social program impacts to what will happen

in the longer run can be misleading, as Couch (1992) and Friedlander and

Burtless (1995) have noted. This is especially true for assessing the effec-

tiveness of HCD relative to LFA training approaches, since HCD training

components tend to be more time-intensive treatments and typically take

longer to complete relative to LFA programs. As such, there is a strong

presumption that results from short-term evaluations will tend to favor

work-first programs over human-capital development ones.

7

Estimates of

2

These counties experienced only a 21% increase in quarters of work and a

23% increase in earnings relative to the outcomes for the control group members

in these counties.

3

For example, the Riverside GAIN program was awarded the Harvard Ken-

nedy School of Government’s Innovations in American Government Award in

1996.

4

For example, the State of California strongly encouraged all of the state’s

counties to adopt the Riverside LFA approach in its GAIN programs.

5

See Hotz, Imbens, and Mortimer (2005) for a systematic development and

treatment of this issue. Also see Bloom, Hill, and Riccio (2005).

6

An unpublished MDRC report presents estimates for the first 5 years after

randomization.

7

A similar point is made by Mincer (1974) in his model of schooling decisions.

Therein, Mincer notes that at early ages the earnings of individuals who choose

additional schooling will be lower than those who choose to go to work at early

ages, simply because attending school inhibits going to work, even if all alternative

Impacts of the GAIN Program 525

program effects over a longer postenrollment period are needed to fairly

assess the relative long-run benefits of these alternative welfare-to-work

strategies.

To address the above substantive concerns, we apply our methods to

estimate both short- and long-term differential effects of the LFA versus

HCD training components in a reanalysis of the data from the MDRC

evaluation of California’s GAIN program. We focus our analysis on four

of the six California counties (Alameda, Los Angeles, Riverside, and San

Diego) analyzed in the original GAIN evaluation

8

and estimate differential

effects for the post–random assignment employment, labor market earn-

ings, and welfare participation outcomes of participants in this evaluation.

We make use of data on these outcomes for a period of 9 years after

random assignment, data that were not previously available. We exploit

the data for the control groups in this evaluation to implement the tests

of some of the assumptions that justify the use of nonexperimental re-

gression-adjustment methods. Finally, we consider the extent to which

inferences about the temporal patterns of training effect estimates are

sensitive to postrandomization variation in local labor market conditions.

As we establish below, our reanalysis of the GAIN data leads to a sub-

stantively different set of conclusions about the relative effectiveness of

LFA versus HCD training components.

II. The GAIN Program and the MDRC GAIN Evaluation

The GAIN program began in California in 1986 and, in 1989, became

the state’s official welfare-to-work or Job Opportunities and Basic Skills

Training (JOBS) Program, authorized by the Family Support Act.

9

Except

for female heads with children under the age of 6, all adults on welfare

were required to register in their county-of-residence GAIN program.

10

activities yield the same present value of lifetime earnings. See also Ham and

LaLonde (1996).

8

We omit the two rural counties included in the original MDRC evaluation

(Butte and Tulare), because these rural economies are quite different from the

economies of the four urban counties.

9

The legislation that created the GAIN program represented a political com-

promise between two groups in the state’s legislature with different visions of

how to reform the welfare system. One group favored the “work-first” approach,

i.e., use of a relatively short-term program of mandatory job search, followed by

unpaid work experience for participants who did not find jobs. The other group

favored the “human capital” approach, i.e., a program providing a broader range

of services designed to develop the skills of welfare recipients. In crafting the

GAIN legislation, these two groups compromised on a program that contained

work-first as well as basic skills and education components in what became known

as the GAIN Program Model. See Riccio and Friedlander (1992) for a more

complete description of this model.

10

See Riccio et al. (1989) for a more complete description of the criteria for

mandated participation.

526 Hotz et al.

Each registrant was administered a screening test to measure a registrant’s

basic reading and math skills, with the same test being used in all counties.

Registrants with low test scores and those who did not have a high school

diploma or GED were deemed “in need of basic education” and targeted

to receive HCD training components, such as Adult Basic Education

(ABE) and/or English as a Second Language (ESL) courses. Those judged

not to be in need of basic education were to bypass these basic education

services and to move either into LFA, such as job search assistance, or

HCD, such as vocational or on-the-job training. Decisions about which

activities GAIN registrants received were under the control of county

GAIN administrators. In fact, the legislation that established the GAIN

program gave California’s 58 counties substantial discretion and flexibility

in designing their programs, including the types and mix of training com-

ponents they offered to GAIN registrants (see Riccio and Friedlander

1992, chap. 1).

MDRC conducted a randomized evaluation of the impacts and cost

effectiveness of the GAIN program in six research counties (Alameda,

Butte, Los Angeles, Riverside, San Diego, and Tulare). Beginning in 1988,

MDRC randomly assigned a subset of the GAIN registrants in these

counties either to an experimental group, which was eligible to receive

GAIN services and subject to its participation mandates, or to a control

group, which was ineligible for GAIN services but could seek (on their

own initiative) alternative services in their communities. Control group

members were embargoed from GAIN services until June 30, 1993, and

for 2 years after this date they were allowed, but not required, to par-

ticipate in GAIN. MDRC collected data on experimental and control

group members in the research counties, including background and de-

mographic characteristics and pre–random assignment employment, earn-

ings, and welfare utilization. Originally, MDRC gathered data on em-

ployment, earnings, and welfare utilization

11

for a 3-year postrandom-

ization period and reported on the findings for these outcomes in their

primary GAIN evaluation reports.

12

Descriptive statistics and sample sizes for the participants in the MDRC

evaluation in the four counties analyzed here (Alameda, Los Angeles,

Riverside, and San Diego) are provided in table 1. We focus on GAIN

registrants who were members of single-parent households on Aid to

Families with Dependent Children (AFDC)—which are referred to as the

AFDC family group or AFDC-FG households—at the time of random

11

Most of these data were obtained from state and county administrative data

systems.

12

See Riccio and Friedlander (1992) and Riccio et al. (1994). In an unpublished

paper, Freedman et al. (1996) present impact estimates for a 5-year postrandom-

ization period, based on additional outcomes data gathered from administrative

data sources.

Impacts of the GAIN Program 527

assignment.

13

Such households constitute over 80% of the AFDC caseload

in California and the nation, and almost all are female-headed.

14

As shown

at the bottom of table 1, in all but Alameda county, the counties assigned

a larger (and varying) fraction of cases to the experimental group. Finally,

we provide, in table 1, p-values for tests of the differences between ex-

perimental and control group means for the background variables. In most

cases, there are no statistically significant differences in these variables by

treatment status. The one exception is the year and quarter in which cases

were enrolled into the MDRC GAIN evaluation. In particular, there are

rather large and statistically significant differences in the proportions of

experimental and control cases enrolled by quarter in Los Angeles and

San Diego counties.

15

These were the result of changes in the rates of

randomization to the control status over the enrollment periods in these

counties as MDRC attempted to meet targeted numbers of control cases

in these counties.

Table 1 reveals notable differences in the demographic and prerandom-

ization characteristics of the cases enrolled in the GAIN registrants across

counties. These differences stem from two factors. First, the composition

of the AFDC caseloads varies across counties. Second, the strategies that

counties adopted for registering participants from their existing caseloads

into GAIN activities also varied. The GAIN programs in Riverside and

San Diego counties sought to register all welfare cases in GAIN, while

the programs in Alameda and Los Angeles counties focused on long-term

welfare recipients. For example, Alameda County, which began its GAIN

program in the third quarter of 1989, first registered its long-term cases

and then registered cases that had entered the AFDC caseload more re-

cently. The GAIN program in Los Angeles County initially registered

only those cases that had been on welfare for 3 consecutive years. The

consequences of these differences in selection criteria can be seen in table

1. In Alameda and Los Angeles, over 95% of the cases had been on welfare

a year prior to random assignment; in San Diego and Riverside, fewer

(for some cells much fewer) than 65% had been.

13

The samples we utilize for three of the four counties (Alameda, Riverside,

and San Diego counties) are slightly smaller than the original samples used by

MDRC because of our inability to find records for some sample members in

California’s Unemployment Insurance Base Wage system (administered by the

California Economic Development Department) or because we were missing in-

formation on the educational attainment of the sample member. The number of

cases lost in these three counties is very small, never larger than 1.1% of the total

sample, and does not differ by experimental status.

14

Descriptions and results for the much smaller group of two-parent households

on AFDC (AFDC-U cases) are given in the working paper version of the paper

(Hotz, Imbens, and Klerman 2000).

15

There are smaller discrepancies between fractions of experimentals and con-

trols by year and quarter in Riverside County.

528

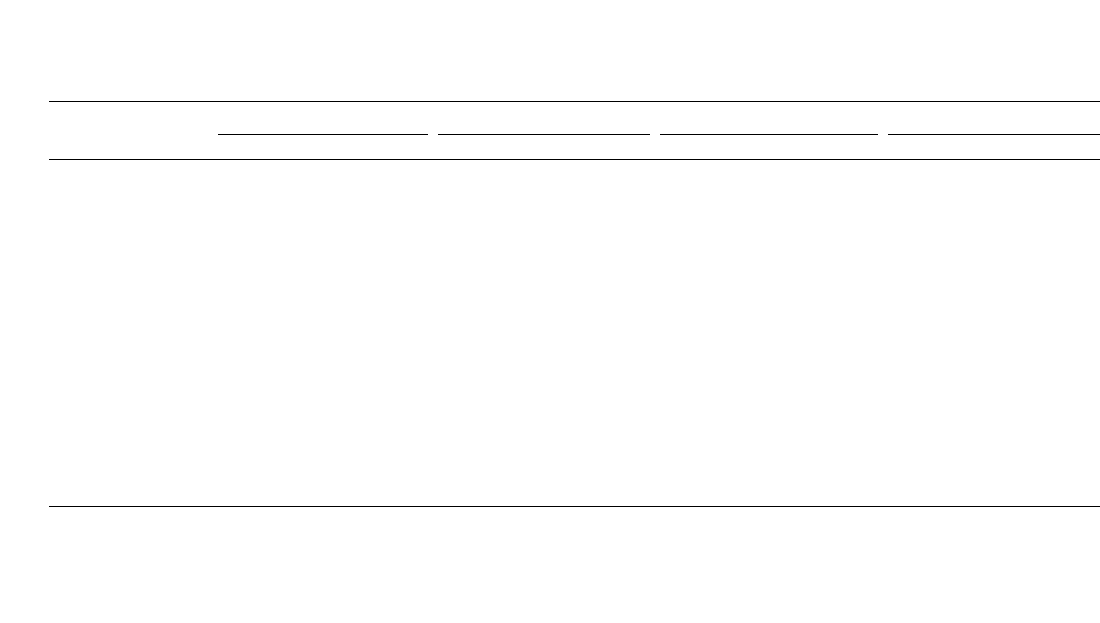

Table 1

Background Characteristics and Prerandomization Histories of GAIN Evaluation Participants from AFDC Caseload

Variable

Alameda

Los Angeles Riverside San Diego

Mean SD p-Value Mean SD p-Value Mean SD p-Value Mean SD p-Value

Age 34.69 8.61 .034 38.52 8.43 .668 33.63 8.20 .431 33.80 8.59 .911

White .18 .38 .806 .12 .32 .621 .52 .50 .533 .43 .49 .263

Hispanic .08 .26 .045 .32 .47 .233 .27 .45 .937 .25 .44 .089

Black .70 .46 .562 .45 .50 .600 .16 .37 .323 .23 .42 .345

Other ethnic groups .04 .20 .400 .11 .31 .663 .05 .22 .700 .09 .29 .473

Female head .95 .22 .978 .94 .24 .043 .88 .33 .633 .84 .37 .349

Only one child .42 .49 .458 .33 .47 .743 .39 .49 .079 .43 .50 .530

More than one child .57 .50 .391 .67 .47 .976 .58 .49 .093 .53 .50 .621

Child 0–5 years .31 .46 .340 .10 .31 .353 .16 .37 .922 .13 .34 .778

Highest grade completed 11.18 2.52 .921 9.54 3.55 .548 10.68 2.53 .938 10.66 3.04 .373

In need of basic education .65 .48 .885 .81 .40 .982 .60 .49 .615 .56 .50 .362

Earnings 1 quarter before

random assignment $213 $851 .797 $221 $874 .454 $452 $1,404 .452 $588 $1,485 .270

Earnings 4 quarters before

random assignment $264 $1,018 .012 $216 $866 .405 $614 $1,603 .073 $808 $1,879 .747

Earnings 8 quarters before

random assignment $220 $1,005 .460 $181 $796 .473 $728 $1,840 .003 $827 $1,958 .301

Employed 1 quarter before

random assignment .14 .34 .531 .12 .33 .469 .22 .42 .664 .27 .44 .118

Employed 4 quarters be-

fore random assignment .14 .34 .000 .13 .33 .634 .25 .43 .976 .29 .45 .926

Employed 8 quarters be-

fore random assignment .13 .33 .896 .11 .32 .565 .27 .44 .044 .28 .45 .149

AFDC benefits 1 quarter

before random

assignment $1,907 $526 .331 $1,874 $663 .792 $1,190 $1,043 .499 $1,159 $903 .046

529

AFDC benefits 4 quarters

before random

assignment $1,822 $551 .317 $1,867 $662 .440 $995 $1,027 .663 $1,008 $928 .098

On AFDC 1 quarter be-

fore random assignment .98 .14 .692 .99 .10 .341 .77 .42 .837 .73 .44 .086

On AFDC 4 quarters be-

fore random assignment .96 .19 .982 .98 .14 .765 .63 .48 .973 .60 .49 .102

Proportion entered GAIN

in 1988:Q3 .11 .31 .085 .15 .36 .000

Proportion entered GAIN

in 1988:Q4 .18 .38 .073 .24 .43 .000

Proportion entered GAIN

in 1989:Q1 .17 .38 .631 .24 .42 .000

Proportion entered GAIN

in 1989:Q2 .17 .37 .299 .21 .41 .205

Proportion entered GAIN

in 1989:Q3 .26 .44 .944 .56 .50 .000 .13 .34 .699 .16 .37 .000

Proportion entered GAIN

in 1989:Q4 .21 .41 .767 .26 .44 .000 .13 .34 .746

Proportion entered GAIN

in 1990:Q1 .32 .47 .769 .18 .39 .244 .11 .31 .165

Proportion entered GAIN

in 1990:Q2 .21 .41 .578

Number of experimental

cases 597 2,995 4,405 6,978

Number of control cases 601 1,400 1,040 1,154

Total number of cases 1,198 4,395 5,445 8,132

Fraction of cases in experi-

mental group .498 .681 .809 .858

Note.—Earnings and AFDC benefits are deflated by Consumer Price Index; in 1999 dollars. The columns headed “Mean” contain means and those headed “SD” contain

standard deviations for the full sample (i.e., both experimental and control groups). The p-values are for a test of difference between experimental and control group means.

530 Hotz et al.

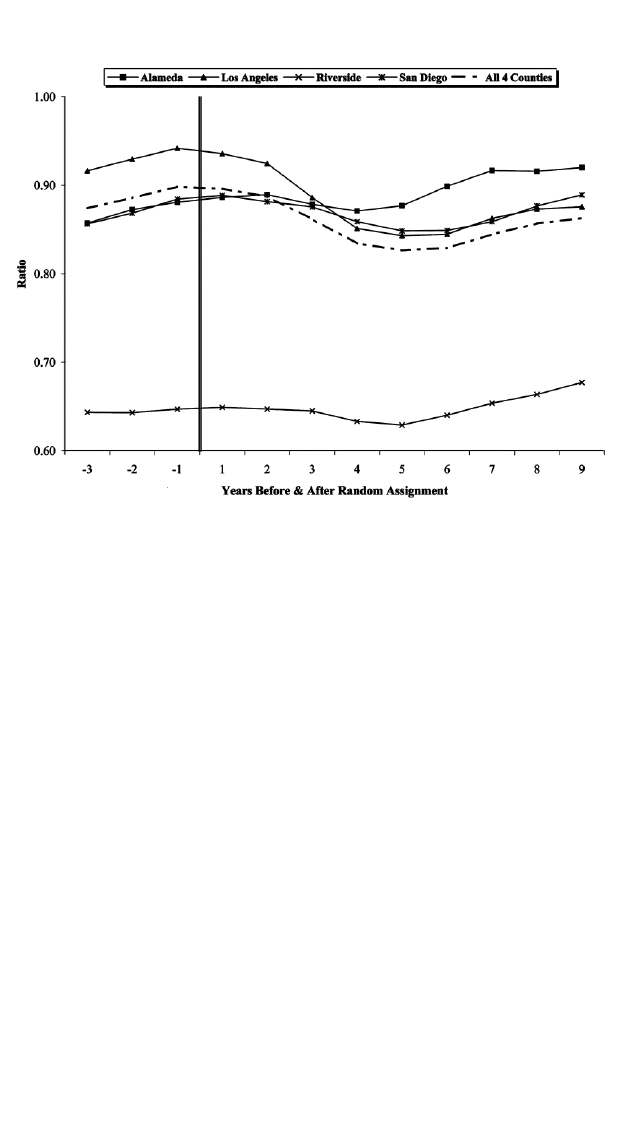

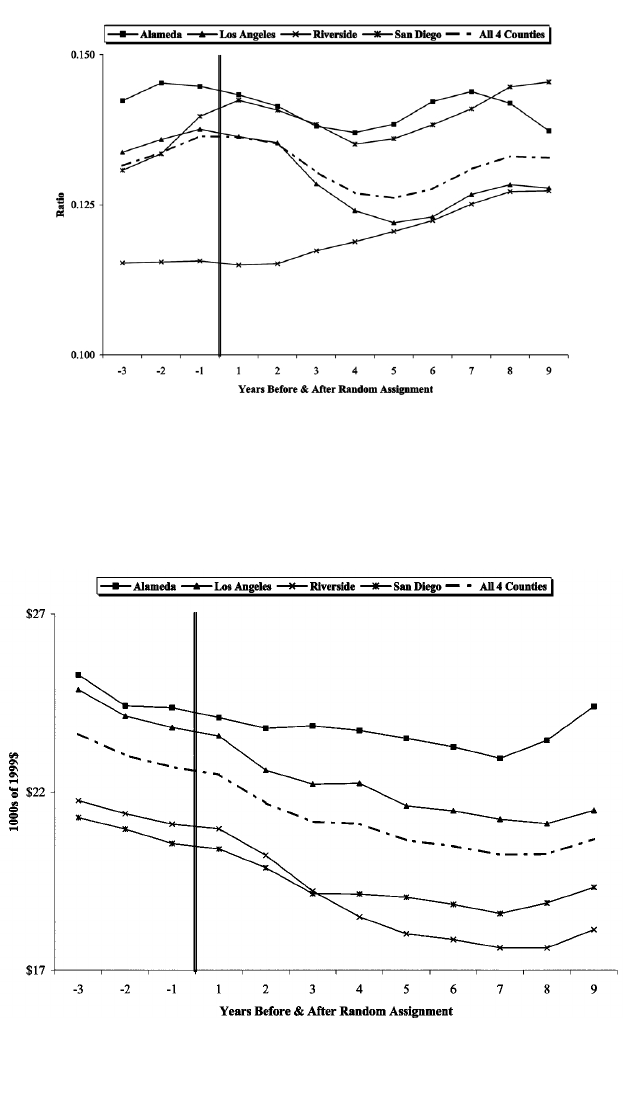

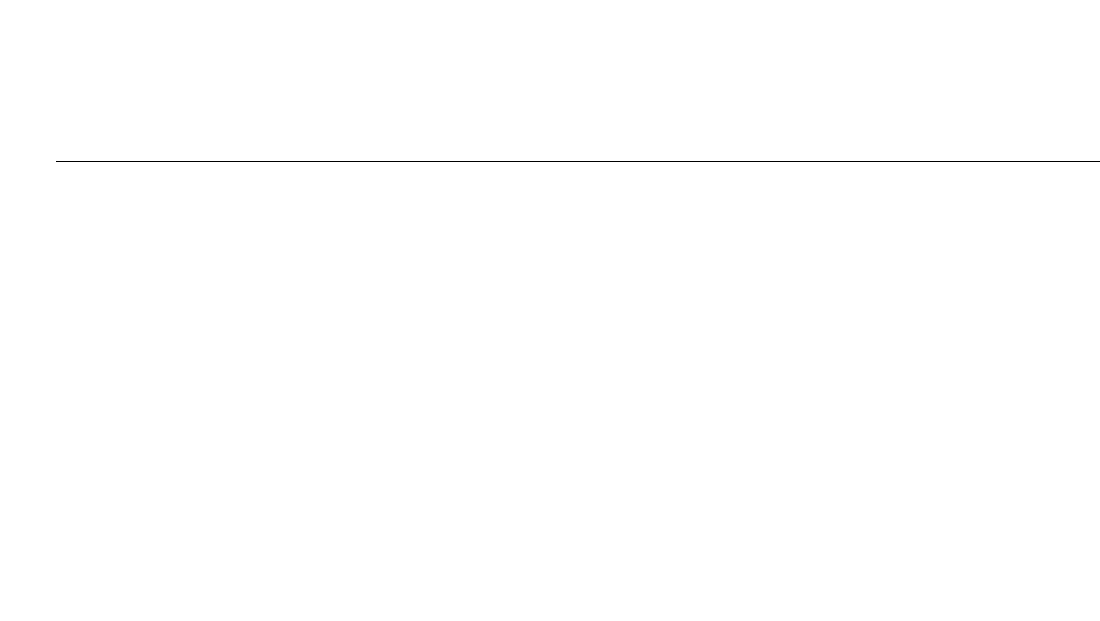

Fig. 1.—Annual ratio of total employment to adult population, all sectors

These differences in selection criteria also contributed to substantial

differences in the employment histories and individual characteristics of

the registrant populations across these four counties. As shown in table

1, the registrants in Alameda and Los Angeles counties had, on average,

much lower levels of earnings prior to random assignment relative to

those in Riverside and San Diego. Furthermore, the registrants in Alameda

and Los Angeles were, on average, older, had lower levels of educational

attainment, and were more likely to be assessed as “in need of basic

education” when they entered the GAIN program than the average reg-

istrants in Riverside and San Diego. The fact that Alameda and Los An-

geles counties focused on its “hard to treat” cases is a stark example of

how the caseload composition within the GAIN experiment varied across

counties and why it is implausible that all of the differences across counties

in treatment effects are due solely to the various treatment components.

The counties in the GAIN evaluation also differed with respect to the

conditions in the labor market immediately prior to random assignment,

and these differences also may account for the across-county differences

in the background characteristics and prerandomization outcomes of the

evaluation subjects displayed in table 1. Figures 1–4 display the time series

of two sets of measures of labor market conditions for each of the four

counties in the GAIN evaluation. Figure 1 plots the county-level ratio

of total employment to the adult population, and figure 2 displays the

Impacts of the GAIN Program 531

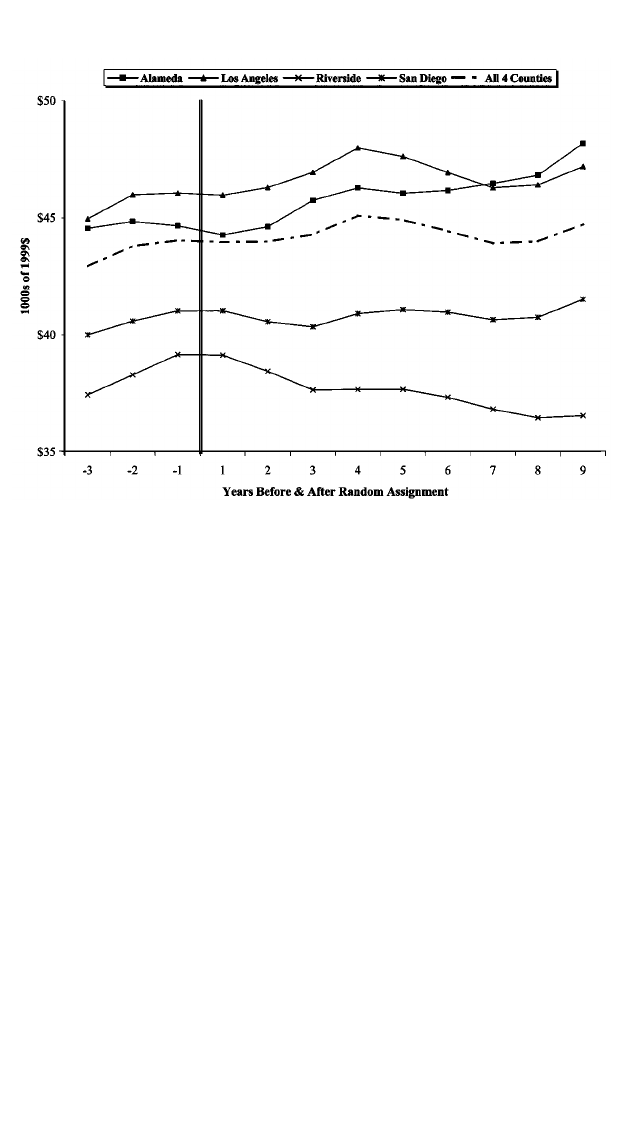

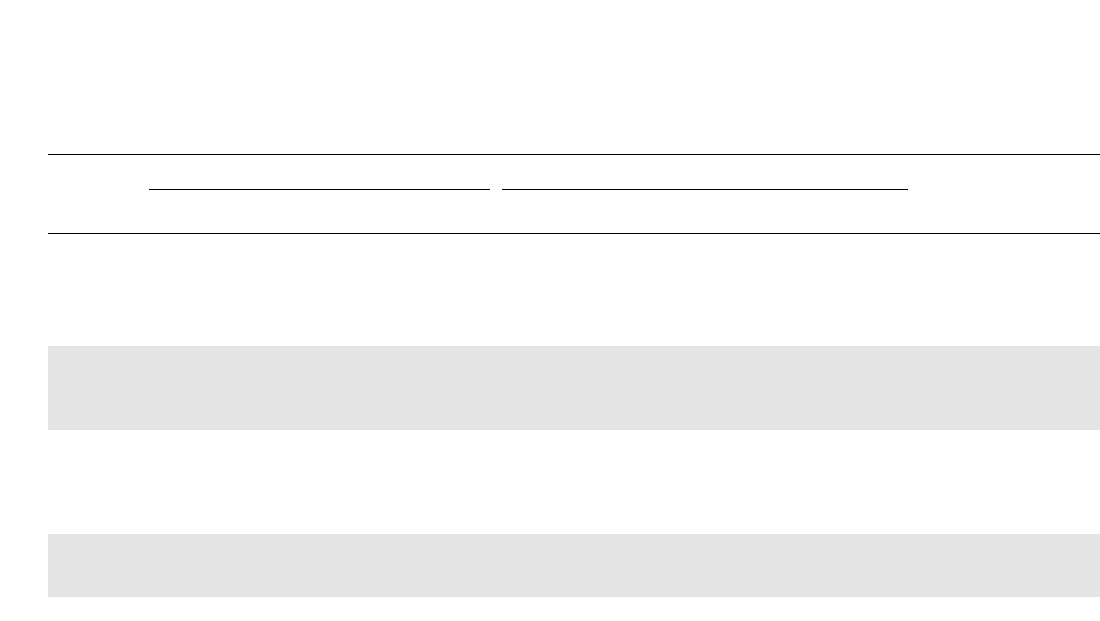

Fig. 2.—Annual earnings per worker, all sectors

average annual earnings per worker for those employed.

16

These measures

provide indicators of the across-county and over time differences in the

labor markets for the four counties in the years prior to random assign-

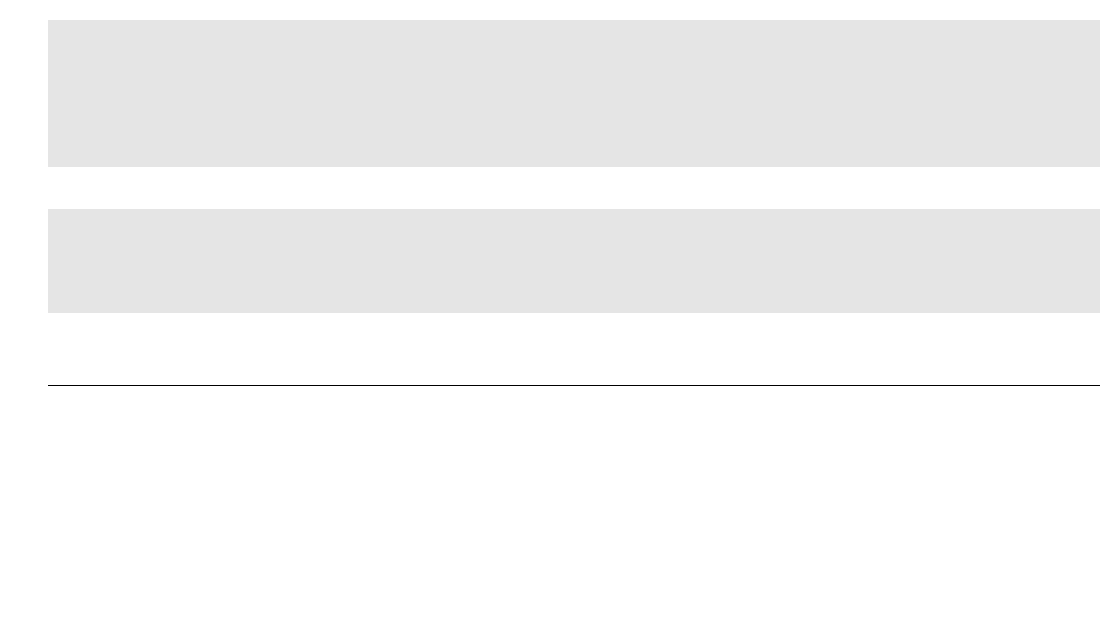

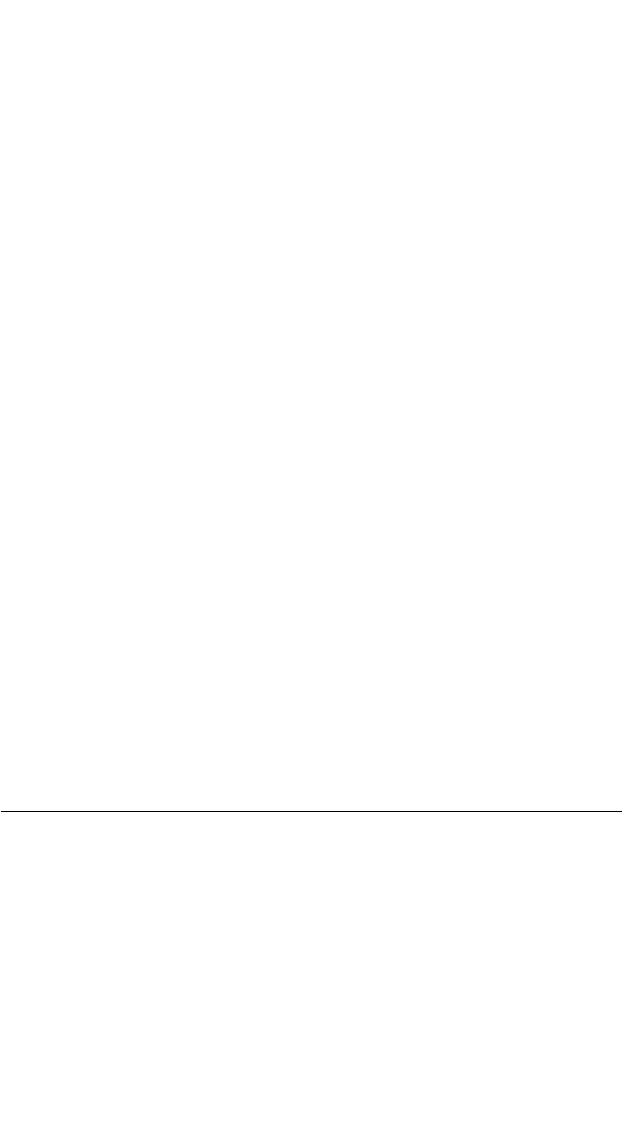

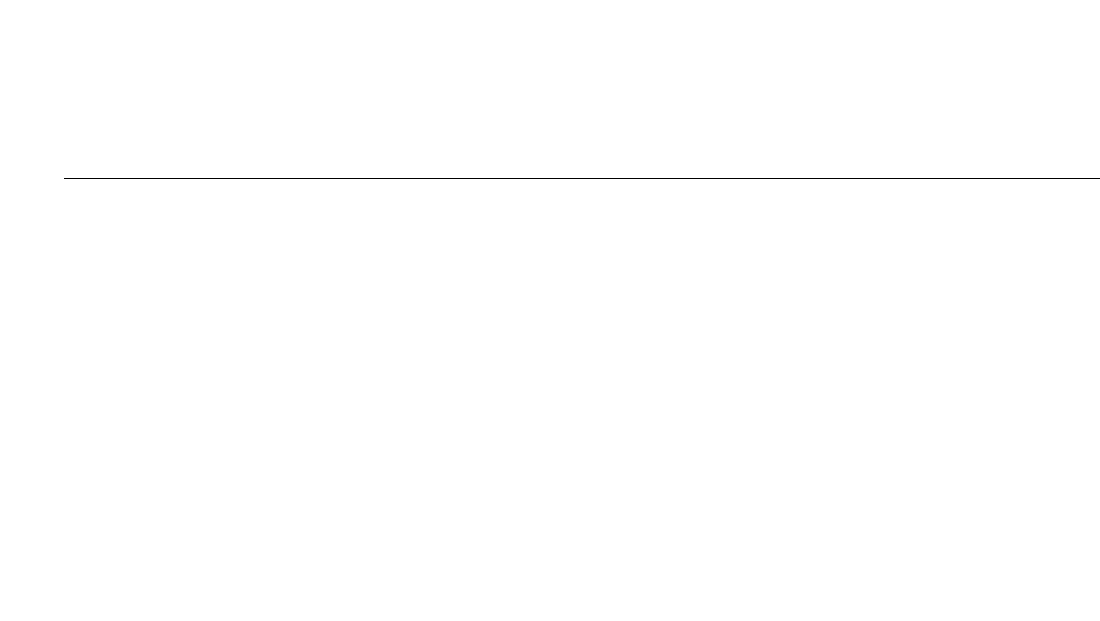

ment. Figures 3 and 4 display the corresponding employment-to-popu-

lation ratios and average annual earnings per work for those employed

in the retail trade sector, a sector of the economy in which many low-

skilled workers are employed.

17

In the periods prior to random assign-

ment, overall employment and employment in retail trade were increasing

in all four counties, although employment had begun to stagnate in many

of the counties. Furthermore, one notes substantial differences across the

four counties in these ratios, with Riverside county having a much lower

16

These county-level measures were constructed from data from the Regional

Economic Information System (REIS) maintained by the Bureau of Economic

Activity (BEA) in the U.S. Department of Commerce. We note that Hoynes (2000)

uses versions of both of these measures in her analysis of the effects of local labor

market conditions on welfare spells for the California AFDC caseload during the

late 1980s and early 1990s. See her paper for a discussion of these and other

county-level measures of local demand conditions.

17

Another sector of the economy that employs low-skilled workers is the ser-

vice sector. Based on measures comparable to those in figs. 3 and 4, similar trends

and differences across counties were found for this sector as those for the retail

trade sector.

Fig. 3.—Ratio of annual employment in retail trade sector to adult population

Fig. 4.—Annual earnings per worker, retail trade sector

Impacts of the GAIN Program 533

total employment to adult population ratio than the other three counties.

18

Over this same period, average annual earnings per worker were increasing

in all but Alameda county (fig. 2), while earnings per worker in the retail

trade sector were declining (in real terms) in all four counties (fig. 4).

Moreover, one sees that overall earnings, as well as earnings in the retail

sector, were higher in Alameda and Los Angeles counties relative to Riv-

erside and San Diego counties. Toward the end of the next section, we

shall comment further on the postrandomization trends in these figures.

Finally, as we noted above, the four analysis counties in the GAIN

evaluation differed in the way they ran the programs and in the training

components they emphasized. In table 2, we display the proportions of

GAIN registrants in the four analysis counties that participated in various

training components during the period in which subjects were enrolled

in the MDRC GAIN evaluation.

19

One can see that Riverside county

placed fewer of its GAIN registrants in HCD training components than

did the administrators of the GAIN programs in the other three counties

during the period of enrollment in the MDRC evaluation. This is espe-

cially true relative to the proportions of GAIN registrants enrolled in the

MDRC evaluation that were deemed “in need of basic skills,” presumably

the group in greater need of HCD training components. (The proportions

of these groups, by year/quarter of enrollment into the MDRC evaluation,

are found in the last column of table 2.) As a crude indicator of the

relationship between HCD services relative to those registrants in need

of basic skills, one can take the ratio of the last two columns of table 2.

By this measure, the GAIN programs in San Diego, Los Angeles, and

Alameda counties appear to provide HCD training services roughly in a

one-to-one proportion with the fraction of registrants in need of basic

skills in their counties. In contrast, the corresponding proportion for

Riverside county’s GAIN program is two to three.

The estimates in table 2 provide a clear indicator of what was a major

finding of the MDRC GAIN evaluation, namely, that Riverside’s GAIN

program had a decidedly work-first orientation, especially relative to the

other three counties in the evaluation that we analyze here.

20

In contrast,

18

This difference reflects, in part, the fact that many people residing in Riverside

County commute to other counties, especially Los Angeles, for their employment

compared to residents of the other four counties.

19

The shading in this table shows the quarters in which the random assignment

of registrants into the MDRC experimental evaluation was conducted for each

of the four counties.

20

There were other indicators of Riverside’s emphasis on getting GAIN reg-

istrants quickly into jobs and on using LFA relative to HCD training components.

For example, Riverside staff required that their registrants who were enrolled in

basic skills programs continue to participate in Job Club and other job search

activities. In a survey of program staff conducted by MDRC at the time of its

evaluation, 95% of case managers in Riverside rated getting registrants into jobs

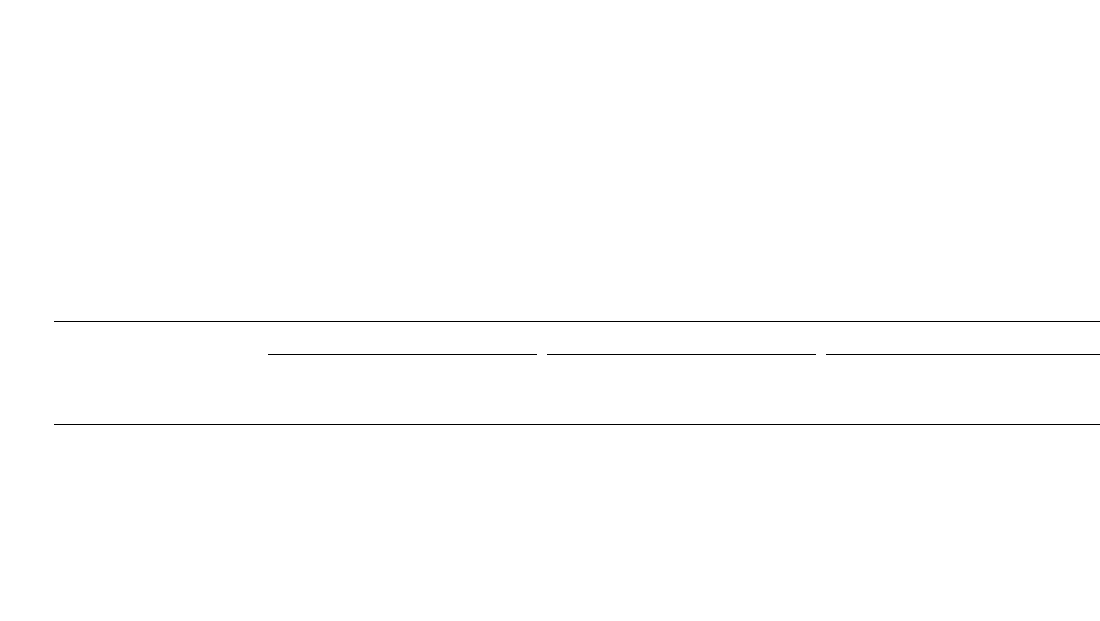

534

Table 2

Distribution of Proportion of Participation in Various GAIN Training Components

Yr:Qtr

Labor Force Attachment (LFA) Activities

Human Capital Development (HCD) Activities

Proportion of GAIN

Registrants Deemed “In

Need of Basic Skills”

Job Club and Job

Search Activities

All Other Job

Search Activities

All LFA

Activities

Basic Education

Program

Vocational

Training

On-the-Job

Training (OJT)

All HCD

Activities

Alameda:

1988:Q3 .00 .00 .00 .00 1.00 .00 1.00

1988:Q4 .00 .00 .00 .00 1.00 .00 1.00

1989:Q1 .21 .00 .21 .53 .26 .00 .79

1989:Q2 .34 .02 .36 .37 .27 .00 .64

1989:Q3 .35 .02 .37 .36 .27 .00 .63 .700

1989:Q4 .33 .09 .42 .44 .12 .00 .56 .683

1990:Q1 .29 .05 .34 .44 .22 .00 .66 .624

1990:Q2 .45 .03 .48 .38 .13 .01 .52 .610

Los Angeles:

1988:Q3 NA NA NA NA NA NA NA

1988:Q4 .00 .00 .00 .08 .92 .00 1.00

1989:Q1 .14 .00 .14 .72 .14 .00 .86

1989:Q2 .23 .01 .24 .61 .15 .00 .76

1989:Q3 .22 .02 .24 .68 .08 .00 .76 .797

1989:Q4 .23 .04 .27 .65 .08 .00 .73 .816

1990:Q1 .19 .07 .26 .63 .12 .00 .75 .818

1990:Q2 .16 .05 .21 .64 .15 .00 .79

535

Riverside:

1988:Q3 .51 .09 .60 .21 .20 .00 .41 .658

1988:Q4 .62 .07 .69 .20 .10 .00 .30 .597

1989:Q1 .56 .03 .59 .26 .14 .00 .40 .591

1989:Q2 .63 .05 .68 .20 .12 .00 .32 .599

1989:Q3 .64 .03 .67 .19 .14 .01 .34 .581

1989:Q4 .45 .02 .47 .32 .21 .00 .53 .574

1990:Q1 .52 .03 .55 .23 .22 .00 .45 .627

1990:Q2 .52 .01 .53 .24 .23 .00 .47

San Diego:

1988:Q3 .41 .01 .42 .28 .28 .01 .57 .567

1988:Q4 .45 .01 .46 .30 .22 .01 .53 .545

1989:Q1 .41 .01 .42 .30 .24 .02 .56 .585

1989:Q2 .42 .02 .44 .31 .21 .02 .54 .578

1989:Q3 .28 .05 .33 .42 .23 .01 .66 .528

1989:Q4 .30 .06 .36 .27 .28 .04 .59

1990:Q1 .34 .08 .42 .33 .21 .02 .56

1990:Q2 .31 .06 .37 .41 .15 .02 .58

Note.—Shaded areas depict the quarters in which random assignment was conducted in the various counties.

536 Hotz et al.

program staff in the other research counties placed less emphasis on getting

registrants into a job quickly. For example, Alameda’s GAIN managers

and staff “believed strongly in ‘human capital’ development and, within

the overall constraints imposed by the GAIN model’s service sequences,

its staff encouraged registrants to be selective about the jobs they accepted

and to take advantages of GAIN’s education and training to prepare for

higher-paying jobs” (Riccio et al. 1994, xxv).

III. Alternative Treatment Effects and Estimation Strategies

In this section, we consider the identification of alternative treatment

effects and strategies for estimating them. We begin with a review of binary

treatment effects that characterize the effect of receiving some training

component for enrollees in a welfare-to-work program. Such effects were

the focus of the experimental design of the MDRC GAIN evaluation.

We then define and consider the estimation of average differential treat-

ment effects (ADTE). The latter effects are the focus of our reanalysis of

the GAIN data. We examine the identification of and strategies for es-

timating ADTEs when the econometrician has information on which

treatment components each subject was assigned and when such subject-

level information is not known. (The latter case is true for the MDRC

GAIN evaluation data we reanalyze and is true for many data sources

used to evaluate training programs.) Finally, while we show that exper-

imental data on subjects that are randomly assigned to some versus no

treatment component are not sufficient to identify (or consistently esti-

mate) ADTEs, we show how such data can be exploited to assess the

validity of nonexperimental methods for estimating the ADTEs.

A. Alternative Average Treatment Effects

Let D

i

be an indicator of the program/location of a training program

in which subject i is enrolled (registered). In the MDRC GAIN evaluation,

D denotes a county-run welfare-to-work program, d. Let s denote the

quickly as their highest goal, while fewer than 20% of managers in the other

research counties gave a similar response. In the same survey, 69% of Riverside

case managers indicated that they would advise a welfare mother offered a low-

paying job to take it rather than wait for a better opportunity, while only 23%

of their counterparts in Alameda county indicated that they would give this advice.

See Riccio and Friedlander (1992) for further documentation of the differences

in distribution of training components and other features of the full set of six

counties in the MDRC GAIN evaluation. As Riccio and Friedlander (1992) con-

cluded from their study of the implementation of GAIN programs by the various

counties in the MDRC evaluations, “What is perhaps most distinctive about

Riverside’s program, though, is not that its registrants participated somewhat less

in education and training, but that the staff’s emphasis on jobs pervaded their

interactions with registrants throughout the program” (Riccio and Friedlander

1992, 58).

Impacts of the GAIN Program 537

number of periods (years) since a subject enrolled in a welfare-to-work

program. Let denote the training (treatment) component to which

˜

T

i

subject i is assigned, with and where

˜˜

T 苸 {0, 1, … , k,…,K} T p 0

ii

denotes the null (no) treatment component. Let denote the assign-T

i

ment of the ith subject to some treatment component, that is, T p

i

. Finally, let denote subject i’s potential outcome as

˜

1{T ≥ 1} Y (W p w)

iis

of s periods after enrollment that is associated with the subject being

assigned to treatment W, where or . Thus, is the

˜

W p TT Y(T p 0)

is

potential outcome associated with the receipt of no treatment, Y (T p

is

is the potential outcome associated with the assignment to some treat-1)

ment component, and is the potential outcome asso-

˜

Y (k) { Y (T p k)

is is

ciated with the assignment to treatment component k.

The focus of the MDRC GAIN evaluation, and many other training

evaluations, was on estimating the average treatment effect on the treated

(ATET) associated with assignment to some treatment component in pro-

gram/location d. This treatment effect is defined as

d

a { E(Y (1) ⫺ Y (0)FT p 1, D p d)

sisisii

p E(D FT p 1, D p d), (1)

is i i

where D

is

is subject i’s “gain” in outcome Y in period s from being assigned

to some training component.

21

Analogously, the average treatment effects

associated with assignment to treatment component k for those assigned

to this component is given by

d

a (k) { E(Y (k) ⫺ Y (0)FT p 1, D p d)

sisisii

p E(D (k)FT p 1, D p d). (2)

is is i

As noted in Hotz et al. (2005), may differ across programs/locations

d

a

s

(d), due to differences in (a) the populations treated, (b) treatment het-

erogeneity (differences in the distribution of treatment components), and/

or (c) differences in economic conditions (macro effects). In the case of

treatment heterogeneity, one typically wishes to distinguish between the

impacts of alternative treatment components—such as the LFA and HCD

training components—in order to isolate this source of differences in

across programs and to isolate why some programs are more effective

d

a

s

than others. As noted in the Introduction, and as will be documented in

21

One also can define versions of that condition on some set of exogenous

d

a

s

variables, X,

d

a (X) { E(Y (1) ⫺ Y (0)FT p 1, D p d, X )

sisisiii

p E(D FT p 1, D p d, X ).

is i i i

Conditional versions of the other treatment effects defined in this subsection can

be defined similarly.

538 Hotz et al.

Section IV, the impacts of the Riverside GAIN program were markedly

different from, and more effective than, those in the other counties of the

MDRC GAIN evaluation. Accordingly, consider the average differential

treatment effect (ADTE) of two treatment components, k and k

, among

those who are treated (i.e., those assigned to receive some treatment) which

is defined as

g (k, k ) { E(Y (k) ⫺ Y (k )FT p 1)

sisisi

p E(D (k) ⫺ D (k )FT p 1), (3)

is is i

where the second equality in (3) follows from the definition of D

is

(j)in

(2). Note that is defined for subjects assigned to receive some

g (k, k )

s

treatment, that is, for subjects characterized by . For reasons thatT p 1

i

will be made clear below, conditioning on this expansive set of subjects

is appropriate for our reanalysis of the MDRC GAIN evaluation data.

Imbens (2000) and Lechner (2001) consider alternative definitions of dif-

ferential treatment effects, including conditioning on those subjects who

would otherwise receive either treatment components k or k

. In general,

differences in such conditioning imply different treatment effects. Also

note that we have not conditioned (3) on a particular program/location

(d), as our interest is in estimating the differential effects of treatment

components that are available—and comparable—across county welfare-

to-work programs included in the MDRC GAIN evaluation.

B. Identification and Estimation of

d

a

s

As is well understood, the identification (and thus consistent estimation)

of in (1) requires additional conditions to be met. In general, nonran-

d

a

s

dom and selective assignment of potential trainees to training programs

and/or the use of noncomparable comparison groups to measure Y(0)

gives rise to problems in identifying (and obtaining unbiased estimates

of) such treatment effects.

22

In the context of the MDRC GAIN evalu-

ation, the identification problem is “solved by design” in that this eval-

uation randomly selected a group of the GAIN registrants to a control

group in which subjects, who would otherwise have been assigned to

some welfare-to-work activity, were embargoed from receipt of treatment.

That is, design of this evaluation assured that the following condition,

(Random Assignment) T ⊥ (Y (0), Y (1))FD p d,(C1)

iisis i

22

See, e.g., Heckman, LaLonde, and Smith (1999) for a survey of the evaluation

literature.

Impacts of the GAIN Program 539

holds for all d, where denotes that z is (statistically) independentz ⊥ y

of y. Condition (C1) insures the identification of as it implies that

d

a

s

E(Y (0)FT p 1, D p d) p E(Y (0)FT p 0, D p d), (C1

)

is i i is i i

that is, the mean value of Y(0) in period s for those who receive some

treatment component ( ) in county d would be equal to the meanT p 1

of observed outcomes for control group members ( ) in the sameT p 0

period and county. As a result, (C1) implies that the ATET associated

with program/location d is identified by

d

a p E(Y (1)FT p 1, D p d) ⫺ E(Y (0)FT p 0, D p d)(4)

sisii isii

and can be consistently estimated by using sample analogs to the con-

ditional expectations in (4), that is, for all s, where

¯

Y (t) p

冘 Y /N

sist

i苸{iT ptF}

i

N

t

is the sample size for the group, . In Section IV, weT p ttp 0, 1

i

present county-specific estimates of the ATET for a range of postran-

domization outcomes for each of the 9 years after random assignment.

C. Identifying Average Differential Treatment Effects

We next consider the identification (and estimation) of the average

differential treatment effect, . For now, we assume that we know

g (k, k )

s

(and can condition on) the treatment components to which each subject

was assigned. Below, we consider the case in which a subject’s treatment

component assignment is unknown. Random assignment of subjects to

or 0 (condition [C1]), the condition that holds in the MDRCT p 1

i

GAIN evaluation, is not sufficient to identify ADTEs. To see why, con-

sider the following characterization of the differences in expected potential

outcomes for treatment components, k and k

:

˜˜

E(Y (k)FT p k, D p d) ⫺ E(Y (k )FT p k , D p d )

is i i is i i

˜

p E(Y (0) ⫹ D (k)FT p k, D p d)

is is i i

˜

⫺ E(Y (0) ⫹ D (k )FT p k , D p d )

is is i i

˜

p {E(D (k)FT p 1, T p k, D p d)(5)

is i i i

˜

⫺ E(D (k )FT p 1, T p k , D p d )}

is i i i

˜

⫹ {E(Y (0)FT p 1, T p k, D p d)

is i i i

˜

⫺ E(Y (0)FT p 1, T p k , D p d )},

is i i i

for all k, k

, , and all d, d

. While (C1) implies that

k ( kE(Y (0)FT p

is i

, it does not imply that the last term1, D p d) p E(Y (0)FT p 0, D p d)

iisii

540 Hotz et al.

in braces in (5) equals zero, even for the same program/location (i.e.,

). Furthermore, (C1) implies nothing about the first term in braces.

d p d

As such, the mean difference between the outcomes of those receiving

treatment component k and those receiving k

does not, in general, equal

. Additional assumptions are required. In particular, we require

g (k, k )

s

˜

E(Y (0)FT p 1, T p k, D p d)

is i i i

˜

⫺ E(Y (0)FT p 1, T p k , D p d ) p 0, (A1)

is i i i

for all k, k

, , and all d, d

; that is, there is no difference in Y(0),

k ( k

the no-treatment outcome, for subjects who were assigned to treatment

components k and k

, and

˜

E(D (k)FT p 1, T p k, D p d)

is i i i

˜

p E(D (k)FT p 1, T p k , D p d )(A2)

is i i i

p E(D (k)FT p 1),

is i

for all k, k

, , and all d, d

; that is, the expected gross treatment

k ( k

effects for treatment component k is the same for those assigned to com-

ponents k and k

.

23

Given (A1) and (A2), it follows that the difference

between and in (5) is

˜˜

E(Y (k)FT p k, D p d) E(Y (k )FT p k , D p d )

is i i is i i

equal to . Note that potentially weaker versions of (A1) and (A2)

g (k, k )

s

in which these assumptions hold only within a program/location

(d p

) could be assumed, although then only program-specific ’s

d g (k, k )

s

would be identified. In short, the random assignment design used in

evaluations, such as the MDRC GAIN evaluation, is not sufficient to iden-

tify ADTEs.

In order to secure identification (and a consistent estimator) of

, we consider the use of nonexperimental methods which imply

g (k, k )

s

that (A1) and (A2) hold under some set of circumstances. In our discus-

sion, we describe the use of statistical matching methods in conjunction

with data in which subjects are randomly assigned to receive some treat-

ment or a control group. Matching methods assume that by controlling

(adjusting) for a set of pretreatment characteristics, Z

i

, in a nonparametric

23

We ignore the possibility that both (A1) and (A2) are violated with off-setting

biases.

Impacts of the GAIN Program 541

way, conditional versions of (A1) and (A2) will hold.

24

More precisely,

we assume that there exists a vector, Z

i

, such that

25

˜

(Unconfoundedness) Y(0), Y(1), … , Y(k), … , Y(K) ⊥ TFZ,

G k and G d. (C2)

That is, the potential outcomes associated with treatment components are

independent of the assignment mechanism for these components condi-

tional on Z. It follows from (C2) that

˜

E [E(Y (0)FT p 1, T p k, D p d)

Zis i i i

˜

⫺ E(Y (0)FT p 1, T p k , D p d )FZ ] p 0(A1

)

is i i i i

and

˜

E [E(D (k)FT p 1, T p k, D p d)

Zisi i i

˜

⫺ E(D (k)FT p 1, T p k , D p d )FZ ] p 0, (A2

)

is i i i i

for all k, k

, , and all d, d

. It follows that assumptions (A1

) and

k ( k

(A2

) imply that the difference between the conditional (on Z) versions of

and identify

˜˜

E(Y (k)FT p k, D p d) E(Y (k )FT p k , D p d ) g (k, k )

is i i is i i s

and justify the use of matching methods—and, in certain cases, parametric

regression techniques—to (consistently) estimate this ADTE.

Condition (C2)—and, thus, (A1

) and (A2

)—is not directly verifiable,

at least not for situations in which treatment components are not randomly

assigned. As such, matching methods are inherently more controversial

than reliance on a properly designed random assignment experiment.

Nonetheless, recent studies by Dehejia and Wahba (1999), Heckman et

al. (1997, 1998), and Hotz et al. (2005) suggest that such adjustments,

with sufficiently detailed pretreatment characteristics, can produce cred-

ible nonexperimental estimates of average treatment effects.

26

Here we

extend the use of these methods to estimating the differential effects of

alternative treatment components.

The availability of data for a randomly assigned control group that

24

See Rubin (1973a, 1973b, 1977, 1979) for the initial formalization of the use

of matching methods to reduce bias in causal inference using nonexperimental

data. See also Heckman, Ichimura, and Todd (1997, 1998a) for further refinements

on these methods.

25

See Imbens (2000) and Lechner (2001) for formal treatments of matching

methods in the context of multiple treatments.

26

See Smith and Todd (2005) for a critical reanalysis of Dehejia and Wahba

(1999). Smith and Todd conclude that matching methods can be used to estimate

simple treatment effects, but care must be taken as to what pretreatment char-

acteristics are used in the matching.

542 Hotz et al.

receives no treatment, such as is the case with the MDRC GAIN eval-

uation, does provide scope for assessing the validity of assumption (A1

)

in the case where there are only two treatment components, k and k

.In

this case, it follows that (C1) can be written as

E(Y (0)FT p 0, D p d)

is i i

p E(Y (0)FT p 1, D p d)

is i i

k

˜

p E(Y (0)FT p 1, T p k, D p d)P

is i i i d

k

˜

⫹ E(Y (0)FT p 1, T p k , D p d)[1 ⫺ P ](C1

)

is i i i d

˜

p [E(Y (0)FT p 1, T p k, D p d)

is i i i

k

˜

⫺ E(Y (0)FT p 1, T p k , D p d)]P

is i i i d

˜

⫹ E(Y (0)FT p 1, T p k , D p d),

is i i i

for all d, where is the proportion of subjects

k

˜

P { Pr (T p kFD p d)

dii

receiving treatment component k in program/location d. It follows from

(A1

) (and [C1]) that the term in square brackets after the last equality in

(C1

) is equal to 0, for all d. That is, the mean of Y for the control group

should not depend on (vary with) . Thus, to test (A1

), one can regress

k

P

d

the outcomes, Y, on , exploiting the variation in the mix of treatment

k

P

d

components across programs/locations, and where the regression con-

ditions on Z

i

, either nonparametrically using matching methods or para-

metrically using regression methods, and test whether the coefficient on

equals zero for all d. We implement this test in our empirical analysis

k

P

d

to assess the validity of (A1

) with the MDRC GAIN evaluation data.

27

A similar test of the validity of (A2

) is not available. Thus, this as-

sumption must be maintained when using matching methods to estimate

ADTEs. Nonetheless, the validity of such methods in the estimation of

ADTEs is more plausible, although not guaranteed, if (A1

) is shown to

hold in the data.

Until now, we have assumed knowledge of the treatment component

assignments ( ) to each subject who actually receives treatment. This is

˜

T

i

not the case for the MDRC GAIN evaluation data that we use to analyze

the differential effects of LFA and HCD treatment components. Individ-

ual-level treatment component assignments are unknown in these data.

27

For other examples of assessing the validity of nonexperimental methods with

data from experimental data, see LaLonde (1986), Rosenbaum (1987), Heckman

and Hotz (1989), Heckman, Ichimura, Smith, and Todd (1997), Heckman, Ichi-

mura, and Todd (1998), Dehejia and Wahba (1999), Hotz et al. (2005), and Smith

and Todd (2005).

Impacts of the GAIN Program 543

Lack of treatment component assignment information is a common sit-

uation in other data sources used to evaluate the effects of training pro-

grams. However, one may have information on the proportions of subjects

who received various treatment components ( ) for particular programs.

k

P

d

For the GAIN programs in California, we have information on these

proportions for the four counties we analyze from the MDRC GAIN

evaluation. In fact, we have it at the quarterly level for the quarters in

which GAIN registrants were randomly assigned to treatment or control

status.

As first noted by Heckman and Robb (1985), and subsequently ex-

tended by Mitnik (2004) to the case of differential treatment effects, es-

timation of causal effects in the case of unknown treatment status at the

subject level can still proceed with data on treatment component prob-

abilities under certain assumptions and with sufficient variation in these

probabilities across programs and/or subgroups. Assumptions (A1

) and

(A2

)—and, thus, (C2)—are sufficient to allow one to estimate

g (k, k )

s

with only data on treatment assignment proportions, rather than indi-

vidual-level treatment assignment status, since conditional on Z, the iden-

tification (consistent estimation) of only requires identifying (con-

g (k, k )

s

sistently estimating) the mean differential of outcomes for trainees who

receive treatment components k and k

. Furthermore, we exploit the var-

iation in across counties, as well as across entry cohorts, to consistently

k

P

d

estimate these conditional mean differences.

D. Estimating Average Differential Treatment Effects

In the empirical analysis presented below, we make use of parametric

regression methods, rather than nonparametric matching techniques, to

condition on the Z’s as implied by assumptions (A1

) and (A2

)

28

to es-

timate the average differential treatment effect of the LFA versus HCD

treatment components.

29

For the sake of clarity, we need to augment the

notation used above. Let Y

is,dc

denote the outcome of GAIN registrant i

for postrandomization period s that is located in county d and entered

the MDRC evaluation in quarter c; if this GAIN registrant lo-T p 1

i,dc

cated in county d and from entry cohort c was (randomly) assigned to

the experimental group and equal to zero otherwise; denotes the

˜

T

i,dc

28

While not presented herein, we also used nonparametric matching techniques,

controlling for the same set of X’s listed above, to estimate the differential treat-

ment effects between Riverside and the various comparison counties. The esti-

mates, especially the inferences drawn, are quite similar to the regression-based

estimates reported below.

29

See Hirano, Imbens, and Ridder (2003) for a discussion of efficient estimation

of average treatment effects using propensity score methods. Also see Abadie and

Imbens (2006), who characterize the asymptotic properties of matching estimators

for average treatment effects.

544 Hotz et al.

treatment component to which a GAIN registrant is assigned, where the

components in the GAIN context are for the LFA treatment componentl

and h for the HCD treatment component; Z

i,dc

denotes the vector of

prerandomization characteristics for this subject; and denotes the pro-

l

P

dc

portion of trainees—those for which —that were assigned theT p 1

i,dc

LFA treatment ( ) component.

˜

T p l

i,dc

One potential strategy for estimating would be to estimate theg (l, h)

s

following regression model using only data on GAIN registrants in the

experimental group ( ):T p 1

i,dc

l

Y p b ⫹ g (l, h)P ⫹ b Z ⫹ ,(6)

is,dc 0ss dc1si,dc is,dc

where

is,dc

is a stochastic disturbance assumed to have mean zero and the

coefficient on is the ADTE of interest, . (The elements in Z

is,dc

l

P g (l, h)

dc s

are listed in the note to table 5.) Estimating (6) with the experimentals

subsample will generate consistent estimates of if both assumptionsg (l, h)

s

(A1

) and (A2

) hold and the population regression function for Y

is,dc

is

linear in and Z

i

. We present estimates of based on only using

l

P g (l, h)

dc s

data for the experimental group of the MDRC GAIN evaluation in table

6 below.

To test assumption (A1

), one can estimate the regression specification

in (6) using data for the subsample of controls in the four counties of the

MDRC GAIN evaluation and then test the hypothesis that .g (l, h) p 0

s

We present results for this test in table 4 below. A potentially more

rigorous version of this test allows for the possibility that variesg (l, h)

s

across counties, that is, estimating the following regression specification

in place of (6):

jlj

Y p b ⫹ g (l, h)PI ⫹ b Z ⫹ ,(6

)

冘

is,dc 0ssjci1si,dc is,dc

j苸{A,L,R,S}

where denotes the indicator function for and the four values for

j

IDp j

ii

j are A for Alameda County, L for Los Angeles County, R for Riverside

County, and S for San Diego County. In this case, we test the null hy-

pothesis that , where now the

ALRS

g (l, h) p g (l, h) p g (l, h) p g (l, h) p 0

ssss

alternative hypothesis is that can be nonzero in any county. We

d

g (l, h)

s

also present results from this second test of (A1

) in table 4.

In one sense, finding that we cannot reject the null hypotheses in the

above tests justifies the maintenance of assumption (A1

) and, thus, the

reliance on the estimator of derived from estimating (6) with datag (l, h)

s

for registrants who were randomly assigned to the experimental group

in the MDRC GAIN evaluation. But, as is always true, the results from

such tests are subject to estimation error. That is, the power of any test

may not be sufficient to avoid Type II errors, that is, failing to reject null

hypotheses when they are false. To guard against this possibility, we also

present results from a “difference-in-differences” (DID) estimator of

Impacts of the GAIN Program 545

that relies on using data for both experimental and control groupsg (l, h)

s

in estimation. In particular, this DID estimator of is formed byg (l, h)

s

estimating the following regression function:

30

ll

Y p b ⫹ b P ⫹ b T ⫹ g (l, h)PT

is,cd 0s 1sdc 2si,dc s dc i,dc

⫹ b Z ⫹ b ZT⫹ n .(7)

3si,dc 4si,dc i,dc is,dc

Using (7) to estimate with data for the experimental and controlg (l, h)

s

groups in the MDRC GAIN evaluation still relies on assumptions (A1

)

and (A2

) to hold but allows the data on “controls” to empirically adjust

for across-program differences in populations and treatment component

assignment mechanisms to help isolate a consistent estimate of .g (l, h)

s

Furthermore, the DID estimator allows for any estimation error that may

affect the tests of (A1

) described above to explicitly affect the precision

of the estimate of , which is not the case for the estimator ofg (l, h)

s

based solely on data for experimentals. Accordingly, we view theg (l, h)

s

DID estimator as a more “conservative” method for estimating .g (l, h)

s

Estimates based on this DID estimator are presented in table 5.

E. Postrandomization Variation in Labor Market Conditions

To this point, we have implicitly assumed that temporal variation in

treatment effects reflects the profile of “returns” to training. For example,

the relative effects of receiving a vocational training course received at

may decline over time as skills acquired in such a course depreciate.s p 0

How rapidly the effects of alternative treatments decline with s, if they

decline at all, can provide important insights into the long-term effec-

tiveness of alternative training strategies. But treatment effects also may

vary over time due to posttraining changes in environmental factors, such

as local labor market conditions. Recall that Y

is,dc

(k), the outcome of the

ith subject residing in county d and in GAIN entry cohort c that occurred

s periods after receiving treatment k, can be written as

Y (k) p Y (0) ⫹ D (k), (8)

is,dc is,dc is,dc

where Y

is,dc

(0) is the potential outcome associated with the null treatment

and D

is,dc

(k) is the gain in Y from receiving treatment k relative to the null

treatment. Let M

ds

denote the labor market conditions that prevail in

county d in the calendar period corresponding to s. Suppose that labor

market conditions affect posttraining outcomes. For example, a trainee’s

probability of being employed in period s ( ) depends on the extents

1 0

30

Nonparametric versions of (7), based on matching methods, also are possible.

546 Hotz et al.

of the local demand for labor in that period. We represent this dependence

by rewriting (8) as follows:

Y (k, M ) p Y (0, M ) ⫹ D (k, M ). (9)

is,dc ds is,dc ds is,dc ds

The right-hand side of (9) allows both the potential outcome for the null

treatment and the gain associated with treatment k to vary with M

ds

.

The dependence of treatment effects on labor market conditions hinges

on whether D

is,dc

(k) varies with M

ds

. Allowing Y

is,dc

(0) to depend on M

ds

need not compromise the ability to identify or estimate labor market

invariant treatment effects so long as D

is,dc

(k) is independent of M

ds

.Ifthe

latter condition holds, evaluation data in which all treatments, including

a null treatment, are randomly assigned can be used to generate unbiased

estimates of Y

is,dc

(0, M

ds

) and D

is,dc

(k), for all k, and, thus, unbiased estimates

of labor market invariant treatment effects. In the absence of data with

randomly assigned treatments, additional conditions, such as (C2) and/

or (A1

) and (A2

), would be required to obtain consistent estimates of

labor market invariant treatment effects.

However, if D

is,dc

(k) does vary with M

ds

, data for which treatments are

randomly assigned or for which an unconfoundedness condition holds

will not be sufficient to isolate (identify) labor market invariant treatment

effects. In this case, all one can identify nonparametrically is D

is,dc

(k, M

ds

)

which implies that average treatment effects will, in general, depend on

the distribution of posttraining labor market conditions, both across lo-

calities and over time.

To explore the potential importance of heterogeneity in withg (l, h)

s

respect to posttreatment labor market conditions, we estimate the fol-

lowing modified version of the DID estimating equation in (7):

ll

Y p d ⫹ d P ⫹ d T ⫹ g (l, h)PT

is,cd 0s 1sdc 2si,dc s0 dc i,dc

⫹ d Z ⫹ d ZT⫹ v M (10)

3si,dc 4si,dc i,dc 1 ds

l

⫹ v MT ⫹ v MPT ⫹ u ,

2 ds i,dc 3 ds dc i,dc is,dc

which include interactions of a vector of county-specific, posttraining

labor market conditions, M

ds

, with T

i,dc

and . The specification of

l

PT

dc i,dc

the interactions of local labor market conditions with the differential

effects of LFA versus HCD training in (10) is somewhat arbitrary. How-

ever, it does allow us to examine the possible dependence of treatment

effects on M

ds

by testing the significance of the interactions of M

ds

with

T

i,dc

and . Moreover, the estimates of based on (10) provide

l

PT g (l, h)

dc i,dc s0

a measure of the differential effects of LFA versus HCD training com-

ponents net of across time and across county differences in labor market

conditions. We present the latter estimates in tables 5 and 6.

Adjusting for post–random assignment labor market conditions may

Impacts of the GAIN Program 547

be important in the context we analyze. The four counties in the MDRC

GAIN evaluation that we analyze experienced notable changes in labor

market conditions over the 9-year postrandomization period. Moreover,

there are notable differences in these conditions across counties over this

period. Such differences are evident in figures 1–4, which display, in ad-

dition to pre–random assignment values, county-specific trends in four

different measures of labor market conditions over the 9-year post–

random assignment period. As shown in figure 1, the employment rates

for all sectors of the economy declined markedly in the first 3–5 years

after random assignment in each of the four counties we analyze and did

not recover until years 6–9 after random assignment. This temporal pat-

tern in employment reflects the recession that California experienced in

1990 and 1991 and the state’s economic recovery in the latter half of the

1990s. A similar pattern characterized the employment in the state’s retail

trade sector over the postrandomization period (fig. 3) with one notable

exception, Riverside County, where retail trade employment grew steadily

throughout the postrandomization period at an average annual rate of

1%.

Over the same period, there was little change in the average real earnings

per worker of all workers in the four counties, although Alameda county

experienced an average annual improvement per year in earnings per

worker of almost 1% and Riverside County experienced a slight decline

throughout the postrandomization period (fig. 2). The earnings per

worker in the retail trade sector (fig. 4)—a sector that employs a sizable

fraction of low-skilled workers—steadily declined over the first 5–6 years

after random assignment and showed some recovery after year 6, espe-

cially in Alameda County. We explore whether these across-county and

temporal differences in the labor market conditions affected the temporal

patterns in the unadjusted estimates of the differential treatment effects

of LFA versus HCD training on the labor market outcomes of enrollees

in the MDRC GAIN evaluation.

IV. Reanalyzing the Effects of the California GAIN

Welfare-to-Work Program, the MDRC Evaluation, and

GAIN Evaluation Counties

A. Estimates of 9-Year, County-Specific GAIN Impacts

In this section, we present estimates of the short- and longer-run im-

pacts of being assigned to some GAIN training component ( ) for

d

a

s

AFDC-FG derived from the county-specific experiments of the MDRC

GAIN evaluation.

31

We present impact estimates for three different out-

31

In a previous version of this article, we examined the average (and differential)

treatment effects for an additional set of outcomes and for other subgroups of

the caseloads for the four counties. In particular, we also examined the treatment

548 Hotz et al.

comes: (1) ever employed during year, (2) number of quarters worked

per year, and (3) annual labor market earnings.

32

Mean differences between

the experimental and control groups for 3-year averages of these outcomes

are found in table 3. As noted above, MDRC has published such estimates

for these outcomes for the first 3 years of post–random assignment data

and released corresponding estimates based on 5 years of post–random

assignment data in a working paper.

33

While the actual estimates presented

in table 3 are similar to the MDRC 5-year results, they differ slightly due

to slight differences in the samples used and, more importantly, the use

of a different “dating” convention when calculating the measured out-

comes.

34

Given that these shorter-term estimates have been thoroughly

discussed in MDRC publications, we focus most of our discussion on

the longer-term impacts for 5–9 years after random assignment.

effects on two measures of postrandomization welfare participation, namely,

whether the registrant received AFDC benefits—or benefits from AFDC’s suc-

cessor program, the Temporary Assistance to Needy Families (TANF) program,

that began in California in 1998—during the year; and the number of quarters in

the calendar year that she received AFDC/TANF benefits. For these outcomes

and those for employment and earnings, we also generated treatment effect es-

timates separately for AFDC-FG cases determined to be in need and not in need

of basic education. The results for these additional analyses can be found at

www.econ.ucla.edu/hotz/GAIN_extra_results.pdf.

32

The employment and earnings outcomes were constructed with data from

the state’s UI Base Wage files provided by the California Employment Devel-

opment Department (EDD). These data contain quarterly reports from employers

on whether individuals were employed in a UI-covered job and their wage earn-

ings for that job. These quarterly data were organized into 4-quarter “years” from

the quarter of enrollment in the MDRC GAIN evaluation. The “Ever Employed

in Year” outcome was defined to be one if the individual had positive earnings

in at least one quarter during that year and zero otherwise. The “Annual Earnings”

outcome was the sum of the 4-quarter UI-covered earnings recorded for an in-

dividual in the Base Wage file. All income variables were converted to 1999 dollars

using cost-of-living deflators. The AFDC/TANF variables were constructed using

data from the California statewide Medi-Cal Eligibility Data System (MEDS)

files, which contain monthly information on whether an individual received

AFDC (before 1998) or TANF (starting in 1998) benefits in California during a

month. These monthly data were organized into 3-month “quarters” from the

quarter of enrollment in the MDRC GAIN evaluation and then organized into

“years” since enrollment, as was done with the employment and earnings data.

The “Ever Received AFDC/TANF Benefits in Year” variable was defined to be

one if the individual received AFDC or TANF benefits in at least 1 month during

that year and zero otherwise.

33

See Riccio, Friedlander, and Freedman (1994) for 3-year impact estimates and

Freedman et al. (1996) for estimates based on 5 years of follow-up data.

34

In their analysis, MDRC defined the first year of post–random assignment

to be quarters 2–5, year 2 as quarters 6–9, etc. In our analysis, we define year 1

as quarters 1–4, year 2 as quarters 5–8, etc. This difference in definitions results

in relatively minor differences between our years 1–5 estimates relative to those

produced by MDRC.

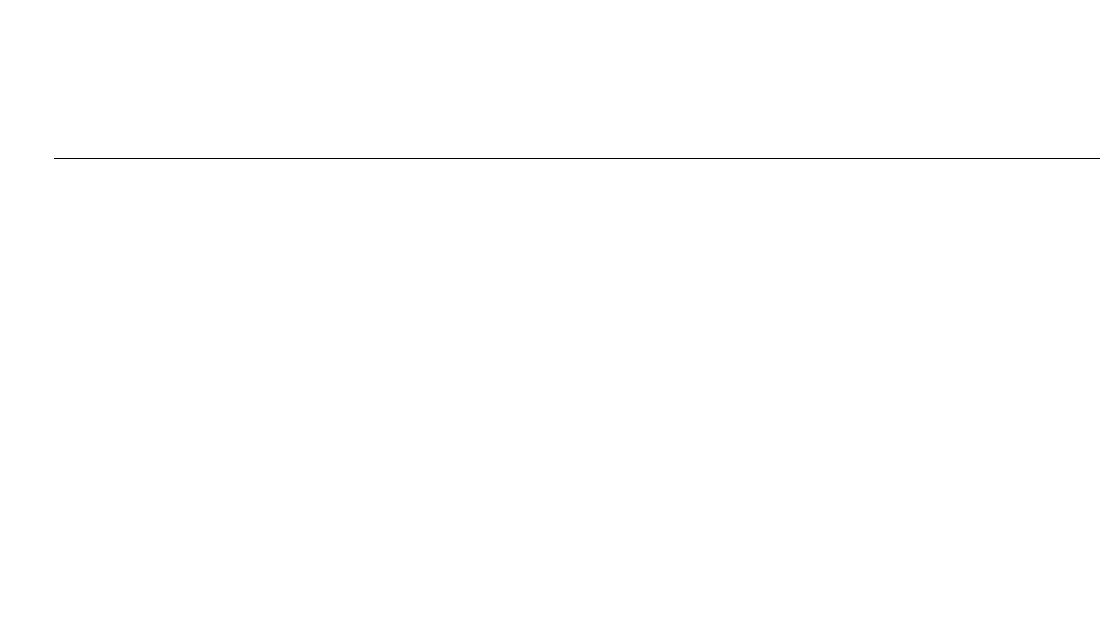

Table 3

Experimental Estimates of Annual Impacts of GAIN

Years after Random

Assignment

Alameda

Los Angeles Riverside San Diego

Experimental Control Difference Experimental Control Difference Experimental Control Difference Experimental Control Difference

Annual employment (%):

1–3 30.8 28.1 2.7 26.1 24.5 1.7 49.0 35.3 13.6*** 45.1 40.8 4.3***

(2.2) (1.2) (1.3) (1.3)

4–6 37.0 34.7 2.3 29.2 25.8 3.3*** 40.4 33.5 6.9*** 40.8 38.2 2.6*

(2.4) (1.3) (1.4) (1.4)

7–9 45.3 45.3 .0 36.9 33.1 3.8*** 39.3 37.8 1.5 41.0 40.9 .1

(2.6) (1.4) (1.4) (1.4)

Annual number of quar-

ters worked:

1–3 .80 .75 .05 .71 .67 .04 1.33 .90 .43*** 1.25 1.09 .15***

(.07) (.04) (.04) (.04)

4–6 1.12 1.02 .10 .87 .77 .10** 1.23 .98 .25*** 1.26 1.17 .09*

(.08) (.04) (.05) (.05)

7–9 1.47 1.42 .05 1.16 1.03 .13*** 1.23 1.15 .08 1.32 1.28 .04

(.09) (.05) (.05) (.05)

Annual earnings (1999$):

1–3 2,333 1,849 484 1,843 1,849 ⫺6 3,668 2,253 1,416*** 3,781 3,165 616***

(302) (149) (208) (208)

4–6 4,069 3,342 727 2,615 2,493 122 4,363 3,201 1,162*** 4,849 4,315 534*

(464) (196) (283) (283)

7–9 5,871 5,206 665 3,689 3,386 302 4,585 4,174 411 5,394 4,948 446

(563) (236) (308) (308)

Note.—Sample: AFDC-FG cases in MDRC GAIN evaluation. Standard errors are in parentheses.

* Denotes statistically significant at 10% level.

** Denotes statistically significant at 5% level.

*** Denotes statistically significant at 1% level.

550 Hotz et al.

Consider first the estimated GAIN impacts on employment outcomes.

Regardless of whether one uses annual employment rates or the number

of quarters employed in a year, the estimated impacts of Riverside’s pro-

gram are consistently larger, and more likely to be statistically significant,

compared to the effects for the other three counties over the first 3 years

after random assignment. Over this period, the GAIN registrants in Riv-

erside had annual employment rates that were, on average, 13.6 percentage

points (39%) higher than members of the control group and worked 0.43

more quarters per year (48%) higher than did control group members.

The employment impacts of the GAIN programs in the other three coun-

ties are considerably smaller in magnitude and often are not statistically

significant. This apparent relative success of the Riverside GAIN training

components in improving the employment outcomes of its registrants

contributed to why this program, and its work-first orientation, has been

heralded nationally as a model welfare-to-work program.

In the longer run, however, the impacts on employment for the Riv-

erside GAIN program diminished in magnitude and in statistical signif-

icance. In years 4–6 after random assignment, Riverside’s GAIN regis-

trants experience a 6.9 percentage point annual average gain in annual

rates of employment (down from 13.6 percentage points) and 0.25 quarters

worked (down from 0.43 quarters) over their control group counterparts.

For years 7–9, the Riverside GAIN registrants have an average annual

gain of only 1.5 percentage points in annual rates of employment and

0.08 quarters worked per year relative to the control group, and these

latter impacts estimates are no longer significantly different from zero.

35

The employment effects of the GAIN programs in Alameda and San

Diego also decline in magnitude and statistical significance, and the im-

pacts attributable to GAIN in these counties remain substantially smaller

than those for Riverside. In contrast, the estimated GAIN impacts for

the Los Angeles program increased in magnitude in years 4–9 relative to

those in the first 3 years for both measures of employment. On average,

the GAIN program in Los Angeles was estimated to increase annual

employment rates 3.3 (3.8) percentage points per year and the number of

quarters worked by 0.10 (1.3) per year in years 4–6 (years 7–9) after

random assignment. These later-year estimated impacts for Los Angeles

are all statistically significant and are larger than the effects found for the

first 3 years after random assignment. Recall that the Los Angeles county

GAIN program concentrated its services on long-term welfare recipients

at the time our sample members were randomly assigned. Further recall

35