The Liquidsoap book

Samuel Mimram Romain Beauxis


Contents

1 Prologue

1.1 What is Liquidsoap?

1.2 About this book

2 The technology behind streams

2.1 Audio streams

2.2 Streaming

2.3 Audio sources

2.4 Audio processing

2.5 Interaction

2.6 Video streams

3 Installation

3.1 Automated building using opam

3.2 Using binaries

3.3 Building from source

3.4 Docker image

3.5 Libraries used by Liquidsoap

4 Setting up a simple radio station

4.1 The sound of a sine wave

4.2 A radio

5 A programming language

5.1 General features

5.2 Writing scripts

5.3 Basic values

5.4 Programming primitives

5.5 Functions

5.6 Records and modules

5.7 Advanced values

5.8 Configuration and preprocessor

5.9 Standard functions

5.10 Streams in Liquidsoap

6 Full workflow of a radio station

6.1 Inputs

6.2 Scheduling

6.3 Tracks and metadata

6.4 Transitions

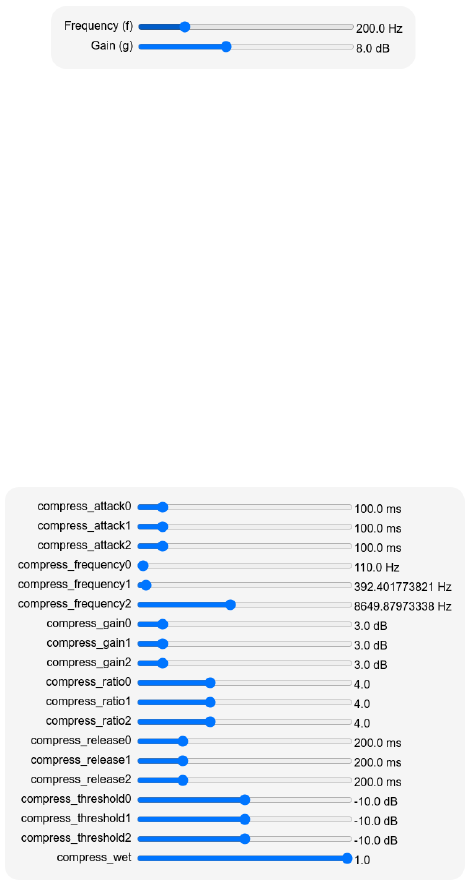

6.5 Signal processing

6.6 Outputs

6.7 Encoding formats

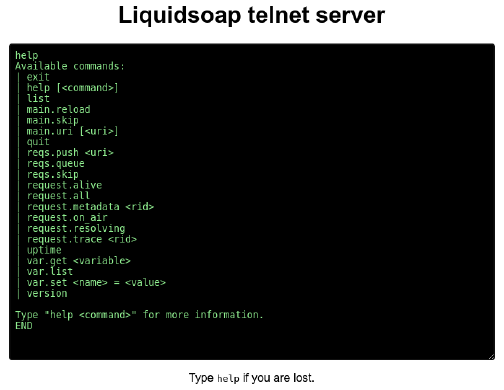

6.8 Interacting with other programs

6.9 Monitoring and testing

6.10 Going further

7 Video

7.1 Generating videos

7.2 Filters and effects

7.3 Encoders

7.4 Specific inputs and outputs

8 A streaming language

8.1 Sources and content types

8.2 Frames

8.3 The streaming model

8.4 Requests

8.5 Reading the source code

Bibliography

Index

1 Prologue

1.1 What is Liquidsoap?

So, you want to make a webradio? At first, this looks like an easy task: we simply need a program which takes a playlist of MP3 files and broadcasts them one by one over the internet. However, in practice, people want something much more elaborate than just this.

For instance, we want to be able to switch between multiple playlists depending on the current time, so that we can have different ambiances during the day (soft music in the morning and techno at night). We also want to be able to incorporate requests from users (for instance, they could ask for specific songs on the website of the radio, or we could have guest DJ shows). Moreover, the music does not necessarily come from files which are stored on a local hard disk: we should be able to relay other audio streams, YouTube videos, or a microphone which is being recorded on the soundcard or on a distant computer.

When we start from music files, we rarely simply play one after the other. Generally, we want to have some fading between songs, so that the transition from a track to the next one is not too abrupt, and serious people want to be able to specify the precise time at which this fading should be performed on a per-song basis. We also want to add jingles between songs so that people know and remember our radio, and to use speech synthesis to give the name of the song we just played. We should also maybe add commercials, so that we can earn some money, and those should be played at a given fixed hour, but should wait for the current song to be finished before launching the ad.

Also, we want to have some signal processing on our music in order to have a nice and even sound. We should adjust the gain so that successive tracks roughly have the same volume. We should also compress and equalize the sound in order to have a warm sound and to give the radio a unique color.

Finally, rule number one of a webradio is that it should never fail. We want to ensure that if, for some reason, the stream we are usually relaying is not available, or the external hard disk on which our MP3 files are stored is disconnected, an emergency playlist will be played, so that we do not simply kick off our beloved listeners. More difficult: if the microphone of the speaker is unplugged, the soundcard will not be aware of it and will provide us with silence. We should be able to detect that we are streaming blank and, after some time, also fall back to the emergency playlist.

Once we have successfully generated the stream we had in mind, we need to encode it in order to have data which is small enough to be sent in real time through the network. We actually often want to perform multiple simultaneous encodings of the same stream: this is necessary to account for various qualities (so that users can choose depending on their bandwidth) and various formats. We should also be able to broadcast those encoded streams using the various usual protocols that everybody uses nowadays.

As we can see, there is a wide variety of usages and technologies, and this is only the tip of the iceberg. Even more complex setups are needed in practice, especially if we have some form of interaction (through a website, a chatbot, etc.). Most other software tools to generate webradios impose a fixed workflow: they consist either in a graphical interface, which generally offers the possibility of programming a grid with different broadcasts on different timeslots, or in a command-line program with more or less complex configuration files. As soon as your setup does not fit within the predetermined radio workflow, you are in trouble.

A new programming language. Based on this observation, we decided to come up with a new programming language, our beloved Liquidsoap, which would allow for describing how to generate audio streams. The generation of the stream does not need to follow a particular pattern here: it is instead implemented by the user in a script, which combines the various building blocks of the language in an arbitrary way, so that the possibilities are virtually unlimited. It does not impose a rigid approach for designing your radio, it can cope with all the situations described above, and much more.

Liquidsoap itself is programmed in the OCaml programming language, but the language you will use is not OCaml (although it was somewhat inspired by it): it is a new language, and it is quite different from a general-purpose programming language such as Java or C. It was designed from scratch, with stream generation in mind, trying to follow the principle formulated by Alan Kay: simple things should be simple, complex things should be possible. This means that we had in mind that our users are not typically experienced programmers, but rather people enthusiastic about music or willing to disseminate information, and we wanted a language as accessible as possible, where a basic script should be easy to write and simple to understand, where the functions have reasonable default values, and where the errors are clearly located and explained. Yet, we provide most things needed for handling sound (in particular, support for the wide variety of file formats, protocols, sound plugins, and so on) as well as more advanced functions which ensure that one can cope with complex setups (e.g. through callbacks and references).

It is also designed to be very robust, since we want our radios to stream forever and not have our stream crash after a few weeks because of a rare case which is badly handled. For this reason, before running a script, the Liquidsoap compiler performs many in-depth checks on it, in order to ensure that everything will go well. Most of this analysis is performed using typing, which offers very strong guarantees.

• We ensure that the data passed to a function is of the expected form. For instance, the user cannot pass a string to a function expecting an integer. Believe it or not, this simple kind of error is the source of an incredible number of bugs in everyday programs (ask Python or JavaScript programmers).

• We ensure there is always something to stream: if there is a slight chance that a source of sound might fail (e.g. the server on which the files are stored gets disconnected), Liquidsoap imposes that there should be a fallback source of sound.

• We ensure that we correctly handle synchronization issues. Two sources of sound (such as two soundcards) generally produce the sound at slightly different rates (the promised 44100 samples per second might actually be 44100.003 for one and 44099.99 for the other). While slightly imprecise timing cannot be heard, the difference between the two sources accumulates in the long run and can lead to blanks (or worse) in the resulting sound. Liquidsoap imposes that a script will be able to cope with such situations (typically by using buffers).
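To get a feeling for how quickly two clocks drift apart, here is a minimal Python sketch using the two example rates given above. The variable names are chosen for illustration only, and the computation is plain arithmetic: it says nothing about how Liquidsoap itself detects or compensates for the drift.

```python
NOMINAL_RATE = 44100.0       # samples per second promised by both devices
RATE_A = 44100.003           # actual rate of the first soundcard (from the text)
RATE_B = 44099.99            # actual rate of the second soundcard (from the text)
SECONDS_PER_DAY = 24 * 60 * 60

# Number of samples by which the two sources diverge every second.
drift_per_second = RATE_A - RATE_B

# Accumulated divergence after one day, in samples and in seconds of audio.
drift_samples_per_day = drift_per_second * SECONDS_PER_DAY
drift_seconds_per_day = drift_samples_per_day / NOMINAL_RATE

print(f"{drift_samples_per_day:.0f} samples per day")
print(f"{drift_seconds_per_day * 1000:.1f} ms of audio per day")
```

With these figures the two sources diverge by a bit more than a thousand samples per day, that is roughly 25 ms of audio, which keeps growing for a radio that is meant to run for months.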

Again, these potential errors are not detected while running the script, but before, and experience shows that this results in quite robust sound production. In this book, we will mainly focus on applications and will not detail much further the theory behind those features of the language. If you want to know more about it, you can have a look at the two articles published on the subject, which are referenced at the end of the book (Baelde and Mimram 2008; Baelde, Beauxis, and Mimram 2011).

While we are insisting on webradios because this is the original purpose of Liquidsoap, the language is now also able to handle video. In some sense, this is quite logical since the production of a video stream is quite similar to that of an audio stream, if we abstract away from technical details. Moreover, many radios are also streaming on YouTube, adding an image or a video, and maybe some information text sliding at the bottom.

Free software. The Liquidsoap language is free software. This of course means that it is available for free on the internet (see chapter 3), and more: this also means that the source code of Liquidsoap is available for you to study, modify and redistribute. Thus, you are not doomed if a specific feature is missing in the language: you can add it if you have the skills for that, or hire someone who does. This is guaranteed by the license of Liquidsoap, which is the GNU General Public License 2 (and most of the libraries used by Liquidsoap are under the GNU Lesser General Public License 2.1).

Liquidsoap will always be free, but this does not prevent companies from selling products based on the language (and there are quite a number of those, be they graphical interfaces, web interfaces, or providing webradio tools as part of larger journalism tools) or services around the language (such as consulting). The main constraint imposed by the license is that if you distribute a modified version of Liquidsoap, say with some new features, you have to provide the user with the source code containing your improvements, under the same license as the original code.

The above does not apply to the current text, which is covered by standard copyright laws.

A bit of history. The Liquidsoap language was initiated by David Baelde and Samuel Mimram, while students at the École Normale Supérieure de Lyon, around 2004. They liked to listen to music while coding and it was fun to listen to music together. This motivated David to write a Perl script based on the IceS program in order to stream their own radio on the campus: geekradio was born.

They did not have that many music files, and at that time it was not so easy to get online streams. But there were plenty of MP3s available on the internal network of the campus, which were shared by the students. In order to have access to those more easily, Samuel wrote a dirty campus indexer in OCaml (called strider, later on replaced by bubble), and David made an ugly Perl hack for adding user requests to the original system. It probably kind of worked for a while. Then they wanted something more, and realized it was all too ugly.

So, they started to build the first audio streamer in pure OCaml, using libshout. It had a simple telnet interface, so that a bot on IRC (this was the chat at that time) could send user requests easily to it, as well as from the website. There were two request queues, one for users, one for admins. But it was still not so nicely designed, and they felt it when they needed more. They wanted some scheduling, especially techno music at night to code better.

Around that time, students had to propose and realize a “large” software project for one of their courses, with the whole class of around 30 students. David and Samuel proposed to build a complete flexible webradio system called savonet (an acronym of something like Samuel and David’s OCaml network). A complete rewriting of every part of the streamer in OCaml was planned, with grand goals, so that everybody would have something to do: a new website with so many features, a new intelligent multilingual bot, new network libraries for gluing that together, etc. Most of those did not survive up to now. But still, Liquidsoap was born, and plenty of new libraries for handling sound in OCaml emerged, many of which we are still using today. The name of the language was a play on words around “savonet”, which sounds like “savonette”, a soap bar in French.

On the day when the project had to be presented to the teachers, the demo miserably failed, but soon after that they were able to run a webradio with several static (but periodically reloaded) playlists scheduled at different times, with a jingle added to the usual stream every hour, with the possibility of live interventions, and with user requests via a bot on IRC which would find songs in the database of the campus. Those requests had priority over static playlists but not over live shows, and speech-synthesized metadata information was added at the end of requests.

Later on, the two main developers were joined by Romain Beauxis, who was doing his PhD at the same place as David and was also a radio enthusiast: he was part of Radio Pi, the radio of École Centrale in Paris, which was soon entirely revamped and enhanced thanks to Liquidsoap. Over recent years, he has become the main maintainer (taking care of the releases) and developer of Liquidsoap (adding, among others, support for FFmpeg in the language).

1.2 About this book

Prerequisites. We expect that computer knowledge can vary a lot between Liquidsoap users, who can range from music enthusiasts to experienced programmers, and we tried to accommodate all those backgrounds. Nevertheless, we have to suppose that the reader of this book is familiar with some basic concepts and tools. In particular, this book does not cover the basics of text file editing and Unix shell scripting (how to use the command line, how to run a program, and so on). Some knowledge of signal processing, streaming and programming can also be useful.

Liquidsoap version. The language has gone through some major changes since its beginning and maintaining full backward-compatibility was impossible. In this book, we assume that you have a version of Liquidsoap which is at least 2.2. Most examples could easily be adapted to work with earlier versions though, at the cost of making minor changes.

How to read the book. This book is intended to be read mostly sequentially, except perhaps for chapter 5, where we present the whole language in detail, which can be skimmed through at first. It is meant as a way of learning Liquidsoap, not as a 500+ page reference manual: should you need details about the arguments of a particular operator, you are advised to have a look at the online documentation.

In chapter 2, we explain the technological challenges that have to be faced in order to produce multimedia streams, and which are addressed by Liquidsoap. The means of installing the software are described in chapter 3. We then describe in chapter 4 what everybody wants to start with: setting up a simple webradio station. Before going to more advanced uses, we first need to understand what we can do in this language, and this is the purpose of chapter 5. We then detail the various ways to generate a webradio in chapter 6 and a video stream in chapter 7. Finally, for interested readers, we give details about the internals of the language and the production of streams in chapter 8.

How to get help. You are reading the book and still have questions? There are many ways to get in touch with the community and obtain help to get your problem solved:

1. the Liquidsoap website (https://liquidsoap.info/) contains extensive up-to-date documentation and tutorials about specific points,

2. the Liquidsoap Discord chat (http://chat.liquidsoap.info) is a public chat where you can have instantaneous discussions,

3. the Liquidsoap mailing-list (savonet-[email protected]) is there if you would rather discuss by mail (how old are you?),

4. the Liquidsoap GitHub page (https://github.com/savonet/liquidsoap/issues) is the place to report bugs,

5. there is also a Liquidsoap discussion board (https://github.com/savonet/liquidsoap/discussions),

6. we regularly organize a workshop called Liquidshop (http://www.liquidsoap.info/liquidshop/) where you can discuss with creators and users of Liquidsoap; the videos of the presentations are also made available afterward.

Please remember to be kind, most of the people there are doing this in their free time!

How to improve the book. We did our best to provide a progressive and illustrated introduction to Liquidsoap, which covers almost all the language, including the most advanced features. However, we are open to suggestions: if you find some error, some unclear explanation, or some missing topic, please tell us! The best way is by opening an issue on the dedicated bugtracker (https://github.com/savonet/book/issues), but you can also reach us by mail. Please include page numbers and text excerpts if your comment applies to a particular point of the book (or, better, make pull requests). The version you are holding in your hands was compiled on 29 January 2024; you can expect frequent revisions to fix found issues. Those will be made available online (http://www.liquidsoap.info/book/).

The authors. The authors of the book you have in your hands are the two main current developers of Liquidsoap. Samuel Mimram obtained his PhD in computer science in 2009 and is currently a Professor in computer science at École polytechnique, France. Romain Beauxis obtained his PhD in computer science in 2009 and is currently a software engineer based in New Orleans.

Thanks. The advent of Liquidsoap and this book would not have been possible without the numerous contributors over the years, the first of them being David Baelde who was a leading creator and designer of the language, but also the students of the MIM1 (big up to Florent Bouchez, Julien Cristau, Stéphane Gimenez and Sattisvar Tandabany), and our fellow users Gilles Pietri, Clément Renard and Vincent Tabard (who also designed the logo), as well as all the regulars of Slack and the mailing-list. Many thanks also to the many people who helped to improve the language by reporting bugs or suggesting ideas, and to the Radio France team who were enthusiastic about the project and motivated some new developments (hello Maxime Bugeia, Youenn Piolet and others).

2 The technology behind streams

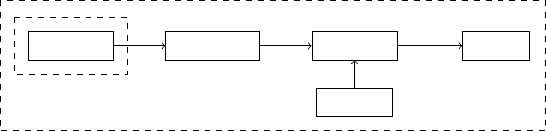

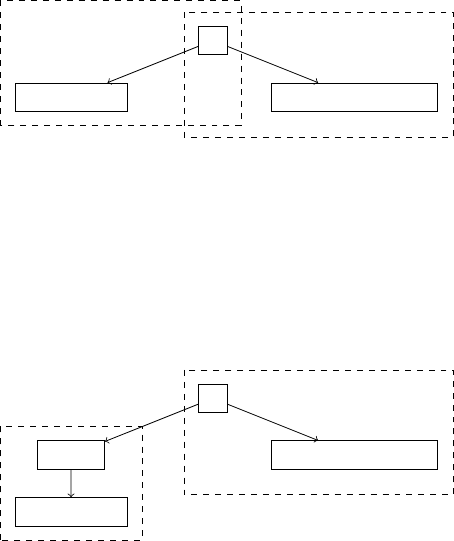

Before getting our hands on Liquidsoap, let us quickly describe the typical toolchain involved in a webradio, in case the reader is not familiar with it. It typically consists of the following three elements.

The stream generator is a program which generates an audio stream, generally in compressed form such as MP3 or AAC, be it from playlists, live sources, and so on. Liquidsoap is one of those and we will be almost exclusively concerned with it, but there are other friendly competitors ranging from Ezstream (http://icecast.org/ezstream/), IceS (http://icecast.org/ices/) or DarkIce (http://www.darkice.org/), which are simple command-line free software that can stream a live input or a playlist to an Icecast server, to Rivendell (http://www.rivendellaudio.org/) or SAM Broadcaster (https://spacial.com/), which are graphical interfaces to handle the scheduling of your radio. Nowadays, websites also offer to do this online in the cloud; these include AzuraCast (https://www.azuracast.com/), Centova (https://centova.com/) and Radionomy (https://www.radionomy.com/), which are all powered by Liquidsoap!

A streaming media system, which is generally Icecast (http://www.icecast.org/). Its role is to relay the stream from the generator to the listeners, of which there can be thousands. With the advent of HLS, it tends to be more and more replaced by a traditional web server.

A media player, which connects to the server and plays the stream for the client: it can be either software (such as iTunes), an Android application, or a website.

Since we are mostly concerned with stream generation, we shall begin by describing the main technological challenges behind it.

2.1 Audio streams

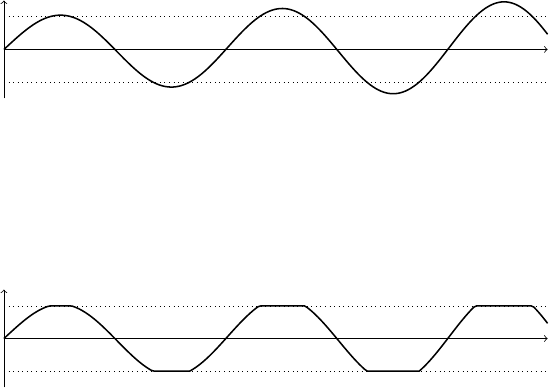

Digital audio. Sound consists in regular vibrations of the ambient air, going

back and forth, which you perceive through the displacements of the tympanic

membrane that they induce in your ear. In order to be represented in a computer,

such a sound is usually captured by a microphone, which also has a membrane,

and is represented by samples, corresponding to the successive positions of

the membrane of the microphone. In general, sound is sampled at 44.1 kHz,

which means that samples are captured 44100 times per second, and indicate

the position of the membrane, which is represented by a oating point number,

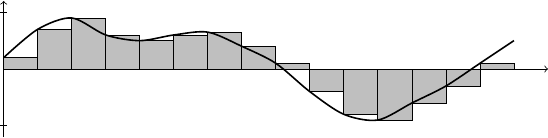

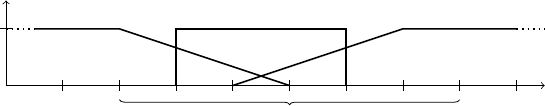

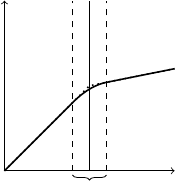

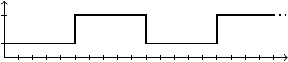

conventionally between -1 and 1. In the gure below, the position of the mem-

brane is represented by the continuous black curve and the successive samples

correspond to the grayed rectangles:

time

-1

1

The way this data is represented is a matter of convention and many of those can be found in “nature”:

• the sampling rate is typically 44.1 kHz (this is for instance the case in audio CDs), but the movie industry prefers 48 kHz, and recent equipment and studios use higher rates for better precision (e.g. DVDs can be sampled at 96 kHz),

• the representation of samples varies: Liquidsoap internally uses floats between -1 and 1 (stored in double precision with 64 bits), but other conventions exist (e.g. CDs use 16-bit integers ranging from -32768 to 32767, and 24-bit integers are also common).
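The two sample conventions mentioned above can be related by a simple scaling. The following Python sketch is only an illustration: the function names are hypothetical, and the choice of scaling by 32767 with clipping is one common convention among several, not necessarily the one used inside Liquidsoap.

```python
def float_to_int16(x: float) -> int:
    """Map a float sample in [-1, 1] to a signed 16-bit integer."""
    # Clip first, so that out-of-range samples saturate instead of overflowing.
    x = max(-1.0, min(1.0, x))
    return int(round(x * 32767))


def int16_to_float(n: int) -> float:
    """Map a signed 16-bit integer back to a float in [-1, 1]."""
    # -32768 has no positive counterpart, so it is clamped to -1.0.
    return max(-1.0, n / 32767)


print(float_to_int16(1.0), float_to_int16(-1.0), float_to_int16(0.0))
print(int16_to_float(32767), int16_to_float(-32768))
```

Going from floats to 16-bit integers rounds each sample to one of 65536 levels, so the conversion is not exactly reversible in general.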

In any case, this means lots of data. For instance, an audio sample in CD quality takes 2 bytes (= 16 bits, remember that a byte is 8 bits) for each of the 2 channels, so that 1 minute of sound is 44100×2×2×60 bytes, which is roughly 10 MB.
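The computation above can be checked with a few lines of Python. The constant and function names are chosen for illustration only.

```python
SAMPLE_RATE = 44100      # samples per second
BYTES_PER_SAMPLE = 2     # 16 bits
CHANNELS = 2             # stereo


def cd_bytes(seconds: float) -> int:
    """Size in bytes of uncompressed CD-quality audio of the given duration."""
    return int(SAMPLE_RATE * BYTES_PER_SAMPLE * CHANNELS * seconds)


bytes_per_minute = cd_bytes(60)
print(bytes_per_minute)         # 10584000
print(bytes_per_minute / 1e6)   # 10.584, i.e. roughly 10 MB
```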

Compression. Because of the large quantities of data involved, sound is typically compressed, especially if you want to send it over the internet where the bandwidth, i.e. the quantity of information you can send in a given period of time, matters: it is not unlimited and it costs money. To give you an idea, a typical fiber connection nowadays has an upload rate of 100 megabits per second, with which you can send CD quality audio to roughly 70 listeners only.
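The figure of 70 listeners follows from dividing the upload rate by the bitrate of uncompressed CD audio. Here is a minimal Python sketch of that division; it ignores protocol overhead, so a real connection would serve slightly fewer listeners, and the names are illustrative.

```python
# Bitrate of uncompressed CD audio: 44100 samples/s, 16 bits, 2 channels.
CD_BITRATE = 44100 * 16 * 2          # bits per second
UPLOAD_RATE = 100_000_000            # 100 megabits per second


def max_listeners(upload_bps: int, stream_bps: int) -> int:
    """Number of simultaneous streams that fit in the upload bandwidth."""
    return upload_bps // stream_bps


print(CD_BITRATE)                              # 1411200
print(max_listeners(UPLOAD_RATE, CD_BITRATE))  # 70
```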

One way to compress audio consists in using the standard tools from coding and information theory: if something occurs often, then encode it with a short sequence of bits (this is how compression formats such as zip work for instance). The FLAC format uses this principle and generally achieves compression to around 65% of the original size. This compression format is lossless, which means that if you compress and then decompress an audio file, you will get back the exact same file you started with.
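The lossless property can be observed with any general-purpose compressor. The sketch below uses Python's standard zlib module as a stand-in: it is not FLAC and does not use FLAC's audio-specific techniques, and the highly repetitive input is an assumption made so that the size reduction is visible (real audio compresses far less well with such a tool).

```python
import zlib

# A very repetitive byte sequence standing in for audio data.
original = bytes([0, 1, 2, 3] * 10_000)

compressed = zlib.compress(original)
restored = zlib.decompress(compressed)

# Lossless: decompressing gives back exactly the bytes we started with.
print(restored == original)
print(len(original), "bytes before,", len(compressed), "bytes after")
```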

In order to achieve more compression, we should be prepared to lose some data present in the original file. Most compressed audio formats are based, in addition to the previous ideas, on psychoacoustic models which take into account the way sound is perceived by the human ear and processed by the human brain. For instance, the ears are much more sensitive in the 1 to 5 kHz range, so that we can be rougher outside this range; some low-intensity signals can be masked by high-intensity signals (i.e., we do not hear them anymore in the presence of other loud sound sources); the ears do not generally perceive phase differences under a certain frequency, so that all audio data below that threshold can be encoded in mono; and so on. Most compression formats are destructive: they remove some information from the original signal in order for it to be smaller. The most well-known are MP3, Opus and AAC: the one you want to use is a matter of taste and of support on the user end. The MP3 format is the most widespread. The Opus format has the advantage of being open-source and patent-free, has a good quality/bandwidth ratio and is reasonably supported by modern browsers, but hardware support is almost nonexistent. The AAC format is proprietary, so that good free encoders are more difficult to find (because they are subject to licensing fees), but it achieves good sound quality at high compression rates and is quite well supported. A typical MP3 is encoded at a bitrate of 128 kbps (kilobits per second), although rates of 192 kbps and higher are recommended if you favor sound quality. At 128 kbps, 1 minute weighs roughly 1 MB, which is about 10% of the original sound in CD quality.
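The size of a constant-bitrate stream follows directly from its bitrate. The Python sketch below reproduces the estimate for 128 kbps and compares it with uncompressed CD audio; the function name is illustrative, and 1 kbps is taken to mean 1000 bits per second.

```python
def encoded_bytes(bitrate_kbps: int, seconds: int) -> int:
    """Size in bytes of a constant-bitrate stream of the given duration."""
    return bitrate_kbps * 1000 * seconds // 8


# Uncompressed CD audio: 44100 samples/s, 2 bytes per sample, 2 channels.
cd_minute = 44100 * 2 * 2 * 60
mp3_minute = encoded_bytes(128, 60)

print(mp3_minute)                 # 960000 bytes, roughly 1 MB
print(mp3_minute / cd_minute)     # about 0.09, i.e. roughly 10%
print(encoded_bytes(192, 60))     # 1440000 bytes at 192 kbps
```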

Most of these formats also support variable bitrates, meaning that the bitrate can be adapted within the file: complex parts of the audio will be encoded at higher rates and simpler ones at lower rates. For those, the resulting stream size will heavily depend on the actual audio and is thus more difficult to predict, but the perceived quality is higher.

As a side note, we were a bit imprecise above when speaking of a “file format” and we should distinguish between two things: the codec, which is the algorithm we used to compress the audio data, and the container, which is the file format used to store the compressed data. This is why one generally speaks of Ogg/Opus: Ogg is the container and Opus is the codec. A container can usually embed streams encoded with various codecs (e.g. Ogg can also contain FLAC or Vorbis streams), and a given codec can be embedded in various containers (e.g. FLAC and Vorbis streams can also be embedded into Matroska containers). In particular, for video streams, the container typically contains multiple streams (one for video and one for audio), each encoded with a different codec, as well as other information (metadata, subtitles, etc.).

Metadata. Most audio streams are equipped with metadata, which is textual information describing the contents of the audio. A typical music file will contain, as metadata, the title, the artist, the album name, the year of recording, and so on. Custom metadata is also useful to indicate the loudness of the file, the desired cue points, and so on.

2.2 Streaming

Once properly encoded, the audio data is generally not streamed directly from the stream generator (such as Liquidsoap) to the client: a streaming server generally takes care of this. One reason to want separate tools is reliability: if the streaming server goes down at some point because too many listeners connect simultaneously, we still want the stream generator to work so that the physical radio or the archiving are still operational.

Another reason is that this is a quite technical task. In order to be transported, the streams have to be split in small packets in such a way that a listener can easily start listening to a stream in the middle and can bear the loss of some of them. Moreover, the time the data takes from the server to the client can vary over time (depending on the load of the network or the route taken): in order to cope with this, the clients do not play the received data immediately, but store some of it in advance, so that they still have something to play if the next part of the stream comes late. This is called buffering. Finally, one machine is never enough to face the whole internet, so we should have the possibility of distributing the workload over multiple servers in order to handle large amounts of simultaneous connections.
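The idea of buffering on the client side can be illustrated with a toy model. The Python class below is a hypothetical sketch, not the code of any actual player: the class name, the `prebuffer` parameter and the policy of re-buffering after an underrun are all assumptions made for the example.

```python
from collections import deque


class PlaybackBuffer:
    """Toy client buffer: playback starts once `prebuffer` packets are stored."""

    def __init__(self, prebuffer: int):
        self.prebuffer = prebuffer
        self.queue = deque()
        self.playing = False

    def receive(self, packet: bytes) -> None:
        """Store a packet coming from the network."""
        self.queue.append(packet)
        if len(self.queue) >= self.prebuffer:
            self.playing = True

    def play(self):
        """Return the next packet to play, or None when there is a gap."""
        if not self.playing:
            return None  # still filling the buffer
        if not self.queue:
            # Underrun: stop playing until the buffer has been refilled.
            self.playing = False
            return None
        return self.queue.popleft()


buf = PlaybackBuffer(prebuffer=2)
buf.receive(b"packet 1")
print(buf.play())          # None: not enough data stored yet
buf.receive(b"packet 2")
print(buf.play())          # b'packet 1'
print(buf.play())          # b'packet 2', played even though nothing new arrived
print(buf.play())          # None: the buffer ran dry
```

A larger `prebuffer` tolerates longer network delays, at the price of a longer wait before playback starts and a larger delay with respect to the live stream.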

Icecast. Historically, Icecast was the main open-source server used in order to serve streams over the internet. On a first connection, the client starts by buffering audio (in order to be able to cope with possible slowdowns of the network): Icecast therefore begins by feeding it as fast as possible and then sends the data at a peaceful rate. It also takes care of handling multiple stream generators (which are called mountpoints in its terminology), multiple clients, replaying metadata (so that we have the title of the current song even if we started listening to it in the middle), recording statistics, enforcing limits (on clients or bandwidth), and so on. Icecast servers support relaying streams from other servers, which is useful in order to distribute listening clients across multiple physical machines, when many of them are expected to connect simultaneously.

2.3. AUDIO SOURCES 13

HLS. Until recently, the streaming model as oered by Icecast was predomi-

nant, but it suers from two main drawbacks. Firstly, the connection has to be

kept between the client and the server for the whole duration of the stream,

which cannot be guaranteed in mobile contexts: when you connect with your

smartphone, you frequently change networks or switch between wifi and 4G

and the connection cannot be held during such events. In this case, the client

has to make a new connection to the Icecast server, which in practice induces

blanks and glitches in the audio for the listener. Another issue is that the data

cannot be cached as it is done for web traffic, where it helps to lower latencies

and bandwidth-related costs, because each connection can induce a different

response.

For these reasons, new standards such as HLS (for HTTP Live Streaming) or DASH

(for Dynamic Adaptive Streaming over HTTP) have emerged, where the stream is

provided as a rolling playlist of small files called segments: a playlist typically

contains the last minute of audio split into segments of 2 seconds. Moreover,

the playlist can indicate multiple versions of the stream with various formats

and encoding qualities, so that the client can switch to a lower bitrate if the

connection becomes bad, and back to higher bitrates when it is better again,

without interrupting the stream: this is called adaptive streaming. Here, the

files are downloaded one by one, and are served by a usual HTTP server. This

means that we can reuse all the technology developed for those to scale up and

improve the speed, such as load balancing and caching techniques typically

provided by content delivery networks. It seems that such formats will take

over audio distribution in the near future, and Liquidsoap already has support

for them. Their only drawback is that they are more recent and thus less well

supported on old clients, although this tends to be less and less the case.
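To make the idea of a rolling playlist more concrete, here is a minimal sketch of how a server could maintain one. The class name and the segment file names are hypothetical, and only a handful of the tags defined for HLS media playlists are produced; a real server emits more information (and Liquidsoap takes care of all this for you).

```python
from collections import deque


class RollingPlaylist:
    """Keep only the most recent segments, as in a live HLS media playlist."""

    def __init__(self, segment_seconds=2, window_seconds=60):
        self.segment_seconds = segment_seconds
        self.segments = deque(maxlen=window_seconds // segment_seconds)
        self.first_sequence = 0  # sequence number of the oldest segment kept

    def add(self, uri):
        if len(self.segments) == self.segments.maxlen:
            self.first_sequence += 1  # the oldest segment is about to be dropped
        self.segments.append(uri)

    def render(self):
        lines = [
            "#EXTM3U",
            "#EXT-X-VERSION:3",
            f"#EXT-X-TARGETDURATION:{self.segment_seconds}",
            f"#EXT-X-MEDIA-SEQUENCE:{self.first_sequence}",
        ]
        for uri in self.segments:
            lines.append(f"#EXTINF:{self.segment_seconds:.3f},")
            lines.append(uri)
        return "\n".join(lines) + "\n"


playlist = RollingPlaylist()
for n in range(40):  # 40 segments of 2 s: only the last 30 (one minute) are kept
    playlist.add(f"segment_{n}.ts")
print(playlist.render())
```

Clients periodically download the playlist again and fetch the segments they have not seen yet. Since both the playlist and the segments are plain files, they can be cached like any other web content.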

RTMP. Finally, we would like to mention that, nowadays, streaming is more

and more being delegated to big online platforms, such as YouTube or Twitch,

because of their ease of use, both in terms of setup and of user experience. Those

often use another protocol, called RTMP (Real-Time Messaging Protocol), which

is more suited to transmitting live streams, where it is more important to keep

the latency low (i.e. transmit the information as close as possible to the instant

where it happened) than keep its integrity (dropping small parts of the audio or

video is considered acceptable). This protocol is quite old (it dates back to the

days when Flash was used to make animations on webpages) and tends to be

phased out in favor of HLS.

2.3 Audio sources

In order to make a radio, one has to start with a primary source of audio. We

give examples of such below.

Audio files. A typical radio starts with one or more playlists, which are lists of audio files. These can be stored in various places: they can either be on a local hard drive or on some distant server, and are identified using a URI (for Uniform Resource Identifier) which can be a path to a local file or something of the form http://some/server/file.mp3 which indicates that the file should be accessed using the HTTP protocol (some other protocols should also be supported). There is a slight difference between local and distant files: in the case of local files, we can be fairly confident that they will always be available (or at least we can check that this is the case), whereas for distant files the server might be unavailable, or just very slow, so that we have to take care of downloading the file sufficiently in advance and be prepared to have a fallback option in case the file is not ready in time. Finally, audio files can be in various formats (as described in section 2.1) and have to be decoded, which is why Liquidsoap depends on many libraries, in order to support as many formats as possible.

Even in the case of local files, the playlist might be dynamic: instead of knowing

in advance the list of all the files, the playlist can consist of a queue of requests

made by users (e.g., via a website or a chatbot); we can even call a script which

will return the next song to be played, depending on whichever parameters (for

instance taking into account votes on a website).

Live inputs. A radio often features live shows. As in the old days, the speaker

can be in the same room as the server, in which case the sound is directly

captured by a soundcard. But nowadays, live shows are made more and more

from home, where the speaker will stream their voice to the radio by themselves, and

the radio will interrupt its contents and relay the stream. More generally, a radio

should be able to relay other streams along with their metadata (e.g. when a

program is shared between multiple radios) or other sources (e.g. a live YouTube

channel).

As for distant files, we should be able to cleanly handle failures due to the network.

Another issue specific to live streams (as opposed to playlists) is that we do not

have control over time: this is an issue for operations such as crossfading (see

below) which require shifting time and thus cannot be performed on realtime

sources.

Synchronization. In order to provide samples at a regular pace, a source of

sound has an internal clock which will tick regularly: each soundcard has a

clock, your computer has a clock, the live streams are generated by things which

have clocks. Now, suppose that you have two soundcards generating sound at

44100 Hz, meaning that their internal clocks both tick at 44100 Hz. Those are

not infinitely precise and it might be the case that there is a slight difference of 1

Hz between the two (maybe one is ticking at 44099.6 Hz and the other one at

44100.6 Hz in reality). Usually, this is not a problem, but in the long run it will

become one: this 1 Hz difference means that, after a day, one will be 2 seconds

in advance compared to the other. For a radio which is supposed to be running

for months, this will be an issue and the stream generator has to take care of

that, typically by using buffers. This is not a theoretical issue: the first versions

of Liquidsoap did not carefully handle this and we did experience quite a few

problems related to it.
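The figure of 2 seconds per day can be checked with a short computation. The function below is only an illustrative helper (its name is not part of any library): it counts how many extra samples the faster clock produces and converts them to seconds of audio at the nominal rate.

```python
def drift_seconds(nominal_hz, actual_hz_a, actual_hz_b, elapsed_seconds):
    """Seconds of audio by which two sources differ after `elapsed_seconds`."""
    extra_samples = abs(actual_hz_a - actual_hz_b) * elapsed_seconds
    return extra_samples / nominal_hz


one_day = 24 * 60 * 60  # 86400 seconds
drift = drift_seconds(44100, 44099.6, 44100.6, one_day)
print(round(drift, 2), "seconds of drift after one day")
```

With a 1 Hz difference, 86400 extra samples accumulate in a day, which at 44100 samples per second amounts to a little under 2 seconds of sound.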

2.4 Audio processing

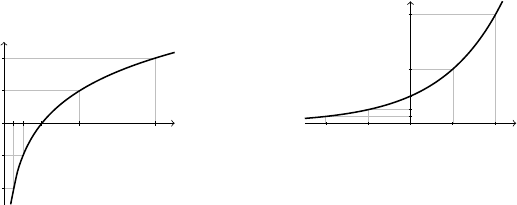

Resampling. As explained in section 2.1, various files have various sampling

rates. For instance, suppose that your radio is streaming at 48 kHz and that you

want to play a file at 44.1 kHz. You will have to resample your file, i.e. change its

sampling rate, which, in the present case, means that you will have to come up

with new samples. There are various simple strategies for this such as copying

the sample closest to a missing one, or doing a linear interpolation between

the two closest. This is what Liquidsoap is doing if you don’t have the right

libraries enabled and, believe it or not (or better try it!), it sounds quite bad.

Resampling is a complicated task to get right, and can be costly in terms of

CPU if you want to achieve good quality. Whenever possible, Liquidsoap uses

the libsamplerate library to achieve this task, which provides much better results

than the naive implementation.
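The two naive strategies mentioned above (copying the closest sample and linear interpolation) can be sketched as follows. These functions are written for illustration only, on a single channel represented as a list of floats; they are not the code used by Liquidsoap, and they are precisely the kind of resampler that a dedicated library improves upon.

```python
def resample_nearest(samples, src_rate, dst_rate):
    """Resample by copying, for each new sample, the closest original one."""
    n_out = len(samples) * dst_rate // src_rate
    out = []
    for i in range(n_out):
        pos = i * src_rate / dst_rate  # position in the original signal
        j = min(int(pos + 0.5), len(samples) - 1)
        out.append(samples[j])
    return out


def resample_linear(samples, src_rate, dst_rate):
    """Resample by interpolating linearly between the two closest samples."""
    n_out = len(samples) * dst_rate // src_rate
    out = []
    for i in range(n_out):
        pos = i * src_rate / dst_rate
        j = int(pos)
        frac = pos - j
        nxt = samples[min(j + 1, len(samples) - 1)]
        out.append((1 - frac) * samples[j] + frac * nxt)
    return out


# Doubling the sampling rate of a tiny signal makes the difference visible.
signal = [0.0, 1.0, 0.0, -1.0]
print(resample_nearest(signal, 2, 4))
print(resample_linear(signal, 2, 4))
# 441 samples at 44.1 kHz become 480 samples at 48 kHz.
print(len(resample_linear([0.0] * 441, 44100, 48000)))
```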

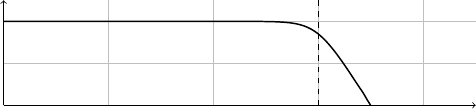

Normalization. The next thing you want to do is to normalize the sound,

meaning that you want to adjust its volume in order to have roughly the same

audio loudness between tracks: if they come from different sources (such as two

different albums by two different artists) this is generally not the case.

A strategy to fix that is to use automatic gain control: the program can regularly

measure the current audio loudness based, say, on the previous second of sound,

and increase or decrease the volume depending on the value of the current level

compared to the target one. This has the advantage of being easy to set up

and providing a homogeneous sound. However, while it is quite efficient when

having voice over the radio, it is quite unnatural for music: if a song has a quiet

introduction for instance, its volume will be pushed up and the song as a whole

will not sound as usual.
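A very crude form of automatic gain control can be sketched as below. This is an illustration under strong assumptions: the loudness is estimated by the root mean square (RMS) of a window of samples, the gain for a window is derived from the previous one, and the names and the `max_gain` bound are made up for the example. Real implementations smooth the gain over time and use perceptual loudness measures.

```python
import math


def rms(samples):
    """Root mean square of a list of samples, used here as a loudness estimate."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def automatic_gain(samples, window, target_rms, max_gain=4.0):
    """Amplify each window according to the level measured on the previous one."""
    out = []
    gain = 1.0
    for start in range(0, len(samples), window):
        chunk = samples[start:start + window]
        out.extend(gain * s for s in chunk)
        level = rms(chunk)
        if level > 0:
            # Bring the next window toward the target, without amplifying too much.
            gain = min(target_rms / level, max_gain)
    return out


steady = automatic_gain([0.1] * 8, window=4, target_rms=0.2)
quiet_intro = automatic_gain([0.001] * 8, window=4, target_rms=0.2)
print(steady[4], quiet_intro[4])
```

The second call shows the problem described above: a quiet introduction gets amplified as much as the bound allows, whether or not this was the intention of the artist.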

Another strategy for music consists in pre-computing the loudness of each file. It

can be performed each time a song is about to be played, but it is much more efficient

to compute this in advance and store it as metadata: the stream generator

can then adjust the volume on a per-song basis based on this information. The

standard for this way of proceeding is ReplayGain and there are a few efficient

tools to achieve this task. It is also more natural than basic gain control, because

it takes into account the way our ears perceive sound in order to compute loudness.

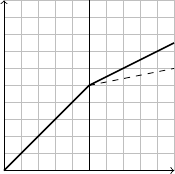

At this point, we should also indicate that there is a subtlety in the way we

measure volume (and loudness). It can either be measured linearly, i.e. we

indicate the amplification coefficient by which we should multiply the sound, or

in decibels. The reason for having the two is that the first is more mathematically

pleasant, whereas the second is closer to the way we perceive the sound. The

relationship between linear $l$ and decibel $d$ measurements is not straightforward:

the formulas relating the two are $d = 20 \log_{10}(l)$ and $l = 10^{d/20}$. If your math classes are too far

away, you should only remember that 0 dB means no amplification (we multiply

by the amplification coefficient 1), adding 6 dB corresponds roughly to multiplying by 2,

and removing 6 dB corresponds roughly to dividing by 2:

| decibels | -12 | -6 | 0 | 6 | 12 | 18 |
|---|---|---|---|---|---|---|
| amplification | 0.25 | 0.5 | 1 | 2 | 4 | 8 |

This means that an amplification of -12 dB corresponds to multiplying all the

samples of the sound by 0.25, which amounts to dividing them by 4.
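The two conversion formulas are easy to experiment with. The helper names below are chosen for this illustration only; the loop prints the decibel values of the table together with the exact coefficients, which shows that the values 2, 4 and 8 are convenient approximations.

```python
import math


def db_of_linear(l):
    """Decibel value d = 20 log10(l) of a linear amplification coefficient l > 0."""
    return 20 * math.log10(l)


def linear_of_db(d):
    """Linear amplification coefficient l = 10^(d/20) of a decibel value d."""
    return 10 ** (d / 20)


for d in [-12, -6, 0, 6, 12, 18]:
    print(d, "dB corresponds to multiplying by", round(linear_of_db(d), 3))
```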

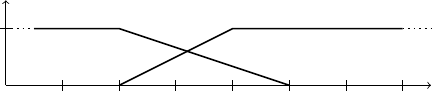

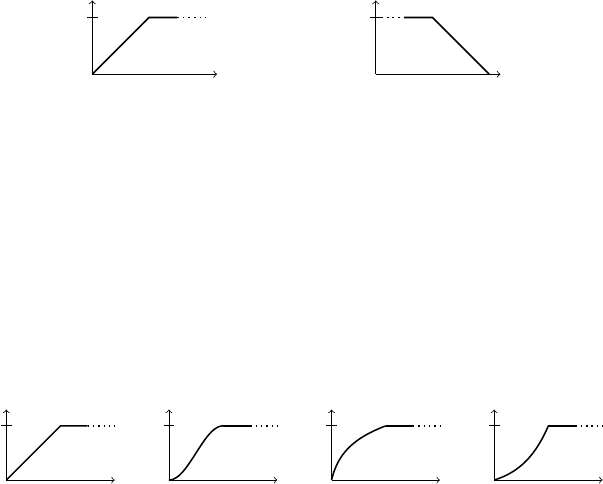

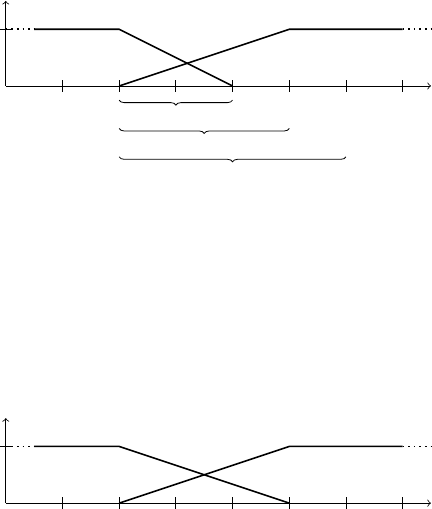

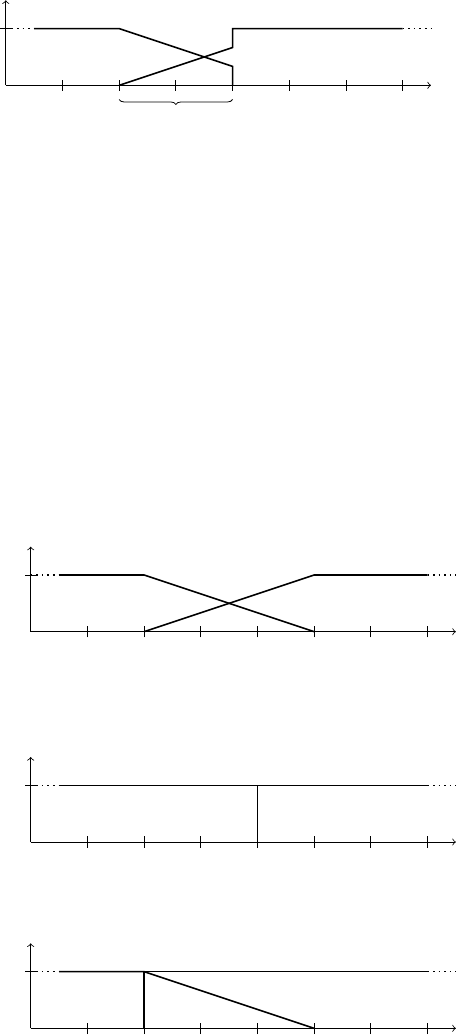

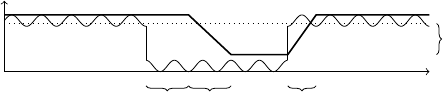

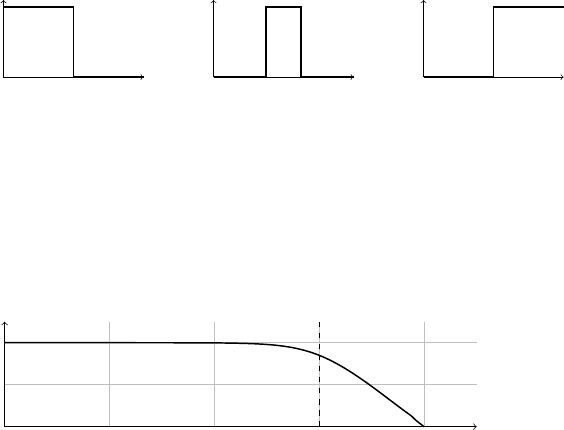

Transitions between songs. In order to ease the transition between songs, one

generally uses crossfading, which consists in fading out one song (progressively

lowering its volume to 0) while fading in the next one (progressively increasing

its volume from 0). A simple approach can be to crossfade for, say, 3 seconds

between the end of a song and the beginning of the next one, but serious people

want to be able to choose the length and type of fading to apply depending on

the song. And they also want to have cue points, which are metadata indicating

where to start a song and where to end it: a long intro of a song might not be

suitable for radio broadcasting and we might want to skip it. Another common

practice when performing transitions between the tracks consists in adding

jingles: those are short audio tracks generally saying the name of the radio or

of the current show. In any case, people avoid simply playing one track after

another (unless it is an album) because it sounds awkward to the listener: it

does not feel like a proper radio, but rather like a simple playlist.
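The simple approach to crossfading can be sketched as follows, on mono tracks represented as lists of samples. This is an assumption-laden illustration: the fade is linear and its length is given in samples, whereas in practice one chooses among several fade shapes and durations.

```python
def crossfade(ending, starting, fade_samples):
    """Overlap the end of `ending` with the beginning of `starting`.

    During the overlap, the first track fades out linearly while the
    second one fades in.
    """
    n = min(fade_samples, len(ending), len(starting))
    if n == 0:
        return ending + starting
    head = ending[:len(ending) - n]
    tail = starting[n:]
    mixed = []
    for i in range(n):
        t = (i + 1) / (n + 1)  # progress of the transition, strictly between 0 and 1
        fade_out = 1.0 - t
        fade_in = t
        mixed.append(fade_out * ending[len(ending) - n + i] + fade_in * starting[i])
    return head + mixed + tail


# A constant signal fading into silence over 3 samples.
result = crossfade([1.0] * 4, [0.0] * 4, 3)
print(result)
```

Note that the resulting track is shorter than the two tracks played one after the other, since they overlap: this is why crossfading needs to know the end of a track in advance, which is not possible for live sources.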

Equalization. The final thing you want to do is to give your radio an appreciable and recognizable sound. This can be achieved by applying a series of sound effects such as

• a compressor which gives a more uniform sound by amplifying quiet sounds,

• an equalizer which gives a signature to your radio by amplifying different frequency ranges differently (typically, simplifying a bit, you want to insist on bass if you play mostly lounge music in order to have a warm sound, or on treble if you have voices in order for them to be easy to understand),

• a limiter which lowers the sound when there are high-intensity peaks (we want to avoid clipping),

• a gate which reduces very low-level sound in order for silence to be really silence and not low noise (in particular if you capture a microphone),

• and so on.

These descriptions are very rough and we urge the reader not accustomed to

those basic components of signal processing to learn more about them. You will

need those at some point if you want to make a professional-sounding webradio.

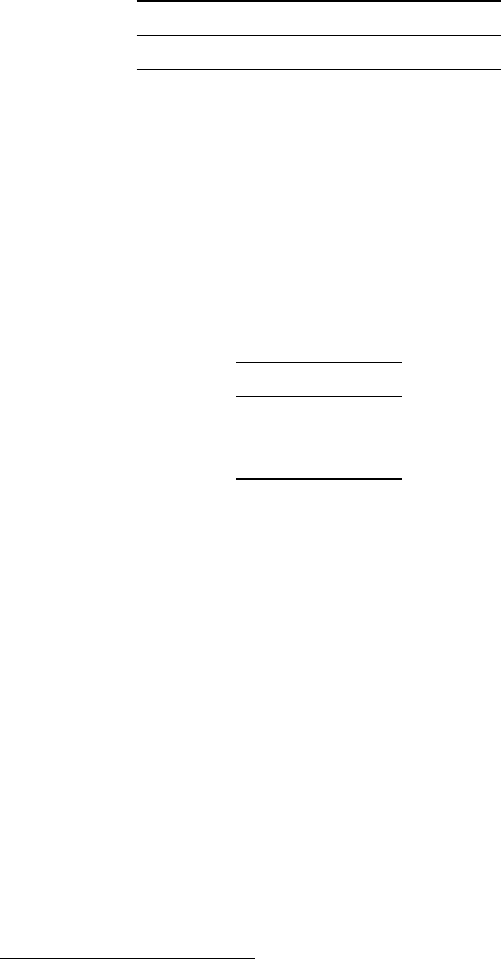

The processing loop. Because we generally want to perform all those opera-

tions on audio signals, the typical processing loop will consist in

1. decoding audio files,

2. processing the audio (fading, equalizing, etc.),

3. encoding the audio,

4. streaming encoded audio.

If for some reason we do not want to perform any audio processing (for instance,

if this processing was done offline, or if we are relaying some already processed

audio stream) and if the encoding format is the same as the source format, there

is no need to decode and then reencode the sound: we can directly stream the

original encoded files. By default, Liquidsoap will always reencode files but this

can be avoided if we want, see section 6.7.

2.5 Interaction

What we have described so far is more or less the direct adaptation of traditional

radio techniques to the digital world. But with new tools come new usages, and

a typical webradio generally requires more than the above features. In particular,

we should be able to interact with other programs and services.

Interacting with other programs. Whichever tool you are going to use in

order to generate your webradio, it is never going to support all the features

that a user will require. At some point, the use of an obscure hardware interface,

a particular database, or a specific web framework will be required by a client,

which will not be supported off the shelf by the tool. Or maybe you simply

want to be able to reuse parts of the scripts that you spent years to write in your

favorite language.

For this reason, a stream generator should be able to interact with other tools,

by calling external programs or scripts, written in whichever language. For

instance, we should be able to handle dynamic playlists, which are playlists

where the list of songs is not determined in advance, but rather generated on the

fly: each time a song ends, a function of the generator or an external program

computes the next song to be played.

We should also be able to easily import data generated by other programs, the

usual mechanism being by reading the standard plain text output of the executed

program. This means that we should also have tools to parse and manipulate

this standard output. Typically, structured data such as the result of a query on

a database can be output in standard formats such as JSON, for which we should

have support.
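The mechanism of reading the standard output of an external program and parsing it as JSON can be sketched as follows. The external program is simulated here by a one-line Python script, and the field names `uri` and `title` are hypothetical: in a real setup, the command would typically query a database or a web service.

```python
import json
import subprocess
import sys


def next_track(command):
    """Run an external program and decode the JSON it prints on standard output."""
    result = subprocess.run(command, capture_output=True, text=True, check=True)
    return json.loads(result.stdout)


# Stand-in for a real database query: a tiny program printing JSON.
fake_query = [
    sys.executable,
    "-c",
    'import json; print(json.dumps({"uri": "/music/song.mp3", "title": "Some title"}))',
]
track = next_track(fake_query)
print(track["uri"], track["title"])
```

Passing `check=True` makes the call fail loudly if the external program exits with an error, which is preferable to silently parsing an empty output.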

Finally, we should be able to interact with some more specific external programs,

such as monitoring scripts (in order to understand the state of the stream generator and be quickly

notified in case of a problem).

Interacting with other services. The above way of interacting works in pull

mode: the stream generator asks an external program for information, such as

the next song to be played. Another desirable workflow is in push mode, where

the program adds information whenever it feels like it. This is typically the case

for request queues which are a variant of playlists, where an external program

can add songs whenever it feels like it: those will be played one by one, in the order in which

they were inserted. This is typically used for interactive websites: whenever a

user asks for a song, it gets added to the request queue.
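The interplay between a request queue (push mode) and a regular playlist can be sketched as below. The class and method names are invented for the illustration, and the policy (requests first, playlist otherwise) is only one reasonable choice.

```python
from collections import deque
from itertools import cycle


class Radio:
    """Play pushed requests first, and fall back to a looping playlist."""

    def __init__(self, playlist):
        self.playlist = cycle(playlist)  # default content, repeated forever
        self.requests = deque()          # filled by external programs

    def push(self, uri):
        """Called by an external program, e.g. when a user asks for a song."""
        self.requests.append(uri)

    def next_song(self):
        """Called by the stream generator each time a song ends."""
        if self.requests:
            return self.requests.popleft()
        return next(self.playlist)


radio = Radio(["a.mp3", "b.mp3"])
played = [radio.next_song()]
radio.push("request1.mp3")
radio.push("request2.mp3")
played += [radio.next_song() for _ in range(3)]
print(played)
```

The requests are played in the order in which they were inserted, after which the radio resumes the playlist where it left off.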

Push mode interaction is also commonly used for controllers, which are physical

or virtual devices consisting of push buttons and sliders, that one can use in order

to switch between audio sources, change the volume of a stream, and so on. The

device generally notifies the stream generator when some control gets changed,

which should then react accordingly. The commonly used standard nowadays

for communicating with controllers is called OSC (Open Sound Control).

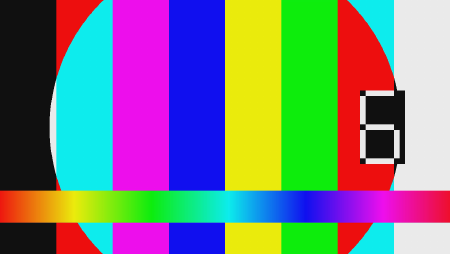

2.6 Video streams

The workflow for generating video streams is not fundamentally different from

the one that we have described above, so that it is natural to expect that an audio

stream generator can also be used to generate video streams. In practice, this

is rarely the case, because manipulating video is an order of magnitude harder

to implement. However, the advanced architecture of Liquidsoap allows it to

handle both audio and video. The main focus of this book will be audio streams,

but chapter 7 is dedicated to handling video.

Video data. The first thing to remark is that if processing and transmitting

audio requires handling large amounts of data, video requires processing huge

amounts of data. A video in a decent resolution has 25 images per second at

a resolution of 720p, which means 1280×720 pixels, each pixel consisting of

three channels (generally, red, green and blue, or rgb for short) each of which is

usually coded on one byte. This means that one second of uncompressed video

data weighs about 65 MB, the equivalent of more than 6 minutes of uncompressed

audio in CD quality! And these are only the minimal requirements for a video

to be called HD (High Definition), which is the kind of video which is being

watched every day on the internet: in practice, even low-end devices can produce

much higher resolutions than this.
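These orders of magnitude can be recomputed directly. The sketch below assumes, for the audio part, the usual parameters of CD quality (44100 samples per second, 2 channels, 2 bytes per sample); the function names are only illustrative.

```python
def raw_video_bytes_per_second(width, height, fps, bytes_per_pixel=3):
    """Size of one second of uncompressed video."""
    return width * height * bytes_per_pixel * fps


def raw_audio_bytes_per_second(rate=44100, channels=2, bytes_per_sample=2):
    """Size of one second of uncompressed audio (CD quality by default)."""
    return rate * channels * bytes_per_sample


video = raw_video_bytes_per_second(1280, 720, 25)
audio = raw_audio_bytes_per_second()
print(video, "bytes for one second of video")
print(round(video / 2**20, 1), "MiB for one second of video")
print(round(video / audio / 60, 1), "minutes of CD audio for the same size")
```

One second of 720p video at 25 images per second thus takes 69,120,000 bytes, which is roughly the figure given above when counting in units of 2^20 bytes.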

This volume of data means that manipulation of video, such as combining videos

or applying effects, should be coded very efficiently (by which we mean down to

fine-tuning the assembly code for some parts), otherwise the stream generator

will not be able to apply them in realtime on a standard recent computer. It

also means that even copying of data should be avoided: the speed of memory

accesses is also a problem at such rates.

A usual video actually consists of two streams: one for the video and one for

the audio. We want to be able to handle them separately, so that we can apply

all the operations specific to audio described in previous sections to videos, but

the video and audio streams should be kept in perfect sync (even a very small

delay between audio and video can be noticed).

File formats. We have seen that there are quite a few compressed formats

available for audio and the situation is the same for video, but the video codecs

generally involve many configuration options exploiting specificities of video,

such as the fact that two consecutive images in a video are usually quite similar.

Fortunately, most of the common formats are handled by high-level libraries

such as FFmpeg. This solves the problem for decoding, but for encoding we are

still left with many parameters to specify, which can have a large impact on the

quality of the encoded video and on the speed of the compression (finding the

right balance is somewhat of an art).

Video effects. As for audio, many manipulations of video files are expected to be present in a typical workflow.

• Fading: as for audio tracks, we should be able to fade between successive videos; this can be a smooth fade, or one video sliding on top of the other, and so on.

• Visual identity: we should be able to add the logo of our channel, add a sliding text at the bottom displaying the news or listing the shows to come.

• Color grading: as for audio tracks, we should be able to give a particular ambiance by having uniform colors and intensities between tracks.

3 Installation

In order to install Liquidsoap you should either download compiled binaries for

your environment, or compile it by yourself. The latter is slightly more involved,

although it is a mostly automated process, but it makes it easy to obtain a cutting-edge

version and to take part in the development process. These instructions are

for the latest released version at the time of writing; you are encouraged to

consult the online documentation.

3.1 Automated building using opam

The recommended method to install Liquidsoap is by using the package manager opam (http://opam.ocaml.org/). This program, which is available on all major distributions and architectures, makes it easy to build programs written in OCaml by installing the required dependencies (the libraries the program needs to be compiled) and managing consistency between various versions (in particular, it takes care of recompiling all the affected programs when a library is installed or updated). Any user can install packages with opam, no need to be root: the files it installs are stored in a subdirectory of the home directory, named .opam. The opam packages for Liquidsoap and associated libraries are actively maintained.

Installing opam. The easiest way to install opam on any architecture is by running the command

sh <(curl -sL https://git.io/fjMth)

or by installing the opam package with the package manager of your distribution, e.g., for Ubuntu,

sudo apt install opam

or by downloading the binaries from the opam website (http://opam.ocaml.org/doc/Install.html). In any case, you should ensure that you have at least version 2.0.0 of opam: the version number can be checked by running opam --version.

If you are installing opam for the first time, you should initialize the list of opam packages with

opam init

You can answer yes to all the questions it asks (if it complains about the absence of bwrap, either install it or add the flag --disable-sandboxing to the above command line). Next, you should install a recent version of the OCaml compiler by running

opam switch create 4.13.0

This takes a few minutes, because it compiles OCaml, so be prepared to have a coffee.

Installing Liquidsoap. Once this is done, a typical installation of Liquidsoap with support for mp3 encoding/decoding and Icecast is done by executing:

opam depext taglib mad lame cry samplerate liquidsoap

opam install taglib mad lame cry samplerate liquidsoap

The first line (opam depext ...) takes care of installing the required external dependencies, i.e., the libraries we rely on but did not develop ourselves. Here, we want to install the dependencies required by taglib (the library to read tags in audio files), mad (to decode mp3), lame (to encode mp3), cry (to stream to Icecast), samplerate (to resample audio) and finally liquidsoap. The second line (opam install ...) actually installs the libraries and programs. Here also, the compilation takes some time (around a minute on a recent computer).

Most of Liquidsoap’s dependencies are only optionally installed by opam. For instance, if you want to enable ogg/vorbis encoding and decoding after you’ve already installed Liquidsoap, you should install the vorbis library by executing:

opam depext vorbis

opam install vorbis

Opam will automatically detect that this library can be used by Liquidsoap and will recompile it, which will result in adding support for this format in Liquidsoap. The list of all optional dependencies that you may enable in Liquidsoap can be obtained by typing

opam info liquidsoap

and is detailed below.

Installing the cutting-edge version. The version of Liquidsoap which is

packaged in opam is the latest release of the software. However, you can also

install the cutting-edge version of Liquidsoap, for instance to test upcoming

features. Beware that it might not be as stable as a release, although this is

generally the case: our policy enforces that the developments in progress are

performed apart, and integrated into the main branch only once they have been

tested and reviewed.

In order to install this version, you should first download the repository containing

all the code, which is managed using the git version control system:

git clone https://github.com/savonet/liquidsoap.git

This will create a liquidsoap directory with the sources, and you can then, from within this directory,

instruct opam to install Liquidsoap with the following command:

opam pin add liquidsoap .

From time to time you can update your version by downloading the latest code

and then asking opam to rebuild Liquidsoap:

git pull

opam upgrade liquidsoap

Updating libraries. If you also need a recent version of the libraries in the Liquidsoap

ecosystem, you can download all the libraries at once by typing

git clone https://github.com/savonet/liquidsoap-full.git

cd liquidsoap-full

make init

make update

You can then update a given library (say, ocaml-ffmpeg) by going into its directory

and pinning it with opam, e.g.

cd ocaml-ffmpeg

opam pin add .

(and answer yes if you are asked questions).

3.2 Using binaries

If you want to avoid compiling Liquidsoap, or if opam is not working on your

platform, the easiest way is to use precompiled binaries of Liquidsoap, if available.

Linux. There are packages for Liquidsoap in most Linux distributions. For

instance, in Ubuntu or Debian, the installation can be performed by running

sudo apt install liquidsoap

which will install the liquidsoap package, containing the main binaries. It comes equipped with most essential features, but you can install plugins in the packages liquidsoap-plugin-... to have access to more libraries; for instance, installing liquidsoap-plugin-flac will add support for the FLAC lossless audio format, and installing liquidsoap-plugin-all will install all available plugins (which might be a good idea if you are not sure about which ones you are going to need).

macOS. No binaries are provided for macOS, the preferred method is opam, see

above.

Windows. Pre-built binaries are provided on the releases page (https://github.com/savonet/liquidsoap/releases) in a file with a name of the form liquidsoap-vN.N.N-win64.zip. It directly contains the program; no installer is provided at the moment.

3.3 Building from source

In some cases, it is necessary to build directly from source (e.g., if opam is not supported on your exotic architecture or if you want to modify the source code of Liquidsoap). This can be a difficult task, because Liquidsoap relies on an up-to-date version of the OCaml compiler, as well as a bunch of OCaml libraries and, for most of them, corresponding C library dependencies.

Installing external dependencies. In order to build Liquidsoap, you first need to install the following OCaml libraries: ocamlfind, sedlex, menhir, pcre and camomile. You can install those using your package manager

sudo apt install ocaml-findlib libsedlex-ocaml-dev menhir libpcre-ocaml-dev libcamomile-ocaml-dev

(as you may notice, OCaml packages for Debian or Ubuntu often bear names of the form libxxx-ocaml-dev), or using opam

opam install ocamlfind sedlex menhir pcre camomile

or from source.

Getting the sources of Liquidsoap. The sources of Liquidsoap, along with the required additional OCaml libraries we maintain, can be obtained by downloading the main git repository, and then running scripts which will download the submodules corresponding to the various libraries:

git clone https://github.com/savonet/liquidsoap-full.git

cd liquidsoap-full

make init

make update

Installing. Next, you should copy the file PACKAGES.default to PACKAGES and possibly edit it: this file specifies which features and libraries are going to be compiled, you can add/remove those by uncommenting/commenting the corresponding lines. Then, you can generate the configure scripts:

./bootstrap

and then run them:

./configure

This script will check whether the required external libraries are available, and detect the associated parameters. It optionally takes parameters such as --prefix which can be used to specify in which directory the installation should be performed. You can now build everything

make

and then proceed to the installation

make install

You may need to be root to run the above command in order to have the right to

install in the usual directories for libraries and binaries.

3.4 Docker image

Docker (https://www.docker.com/) images are provided as savonet/liquidsoap: these are Debian-based images with Liquidsoap pre-installed (and not much more, in order to have a file as small as possible), which you can use to easily and securely deploy scripts using it. The tag main always contains the latest version, and is automatically generated after each modification.

We refer the reader to the Docker documentation for the way such images can be used. For instance, you can have a shell on such an image with

docker run -it --entrypoint /bin/bash savonet/liquidsoap:main

By default, the Docker image does not have access to the soundcard of the local computer (but it can still be useful to stream over the internet for instance). It is however possible to bind the ALSA soundcard of the host computer inside the image. For instance, you can play a sine (see section 4.1) by running:

docker run -it -v /dev/snd:/dev/snd --privileged savonet/liquidsoap:main liquidsoap 'output.alsa(sine())'

This single line should work on any computer on which Docker is installed:

no need to install opam, various libraries, or Liquidsoap, it will automatically

download for you an image where all this is pre-installed (if it does not work, this

probably means that Docker does not have the rights to access the sound device located

at /dev/snd, in which case passing the additional option --group-add=audio

should help).

3.5 Libraries used by Liquidsoap

We list below some of the libraries which can be used by Liquidsoap. They are

detected during the compilation of Liquidsoap and, in this case, support for the

libraries is added. We recall that a library ocaml-something can be installed via opam with

opam depext something

opam install something

which will automatically trigger a rebuild of Liquidsoap, as explained in section 3.1.

General. Those libraries add support for various things:

• camomile: charset recoding in metadata (those are generally encoded in UTF-8 which can represent all characters, but older files used various encodings for characters which can be converted),

• ocaml-inotify: getting notified when a file changes (e.g. for reloading a playlist when it has been updated),

• ocaml-magic: file type detection (e.g. this is useful for detecting that a file is an mp3 even if it does not have the .mp3 extension),

• ocaml-lo: OSC (Open Sound Control) support for controlling the radio (changing the volume, switching between sources) via external interfaces (e.g. an application on your phone),

• ocaml-ssl: SSL support for connecting to secured websites (using the HTTPS protocol),

• ocaml-tls: similar to ocaml-ssl,

• ocurl: downloading files over HTTP,

• osx-secure-transport: SSL support via OSX’s SecureTransport,

• yojson: parsing JSON data (useful to exchange data with other applications).

Input / output. Those libraries add support for using soundcards for playing

and recording sound:

• ocaml-alsa: soundcard input and output with ALSA,

• ocaml-ao: soundcard output using ao,

• ocaml-ffmpeg: input and output over various devices,

• ocaml-gstreamer: input and output over various devices,

• ocaml-portaudio: soundcard input and output,

• ocaml-pulseaudio: soundcard input and output.

Among those, PulseAudio is a safe default bet. ALSA is very low level and is

probably the one you want to use in order to minimize latencies. Other libraries

support a wider variety of soundcards and usages.

Other outputs:

• ocaml-cry: output to Icecast servers,

• ocaml-bjack: JACK support for virtually connecting audio programs,

• ocaml-lastfm: Last.fm scrobbling (this website basically records the songs you have listened to),

• ocaml-srt: transport over network using the SRT protocol.

Sound processing. Those add support for sound manipulation:

• ocaml-dssi: sound synthesis plugins,

• ocaml-ladspa: sound effect plugins,

• ocaml-lilv: sound effect plugins,

• ocaml-samplerate: samplerate conversion in audio les,

• ocaml-soundtouch: pitch shifting and time stretching.

Audio file formats.

• ocaml-faad: AAC decoding,

• ocaml-fdkaac: AAC+ encoding,

• ocaml-ffmpeg: encoding and decoding of various formats,

• ocaml-flac: Flac encoding and decoding,

• ocaml-gstreamer: encoding and decoding of various formats,

• ocaml-lame: mp3 encoding,

• ocaml-mad: mp3 decoding,

• ocaml-ogg: Ogg containers,

• ocaml-opus: Ogg/Opus encoding and decoding,

• ocaml-shine: fixed-point mp3 encoding,

• ocaml-speex: Ogg/Speex encoding and decoding,

• ocaml-taglib: metadata decoding,

• ocaml-vorbis: Ogg/Vorbis encoding and decoding.

Playlists.

• ocaml-xmlplaylist: support for playlist formats based on XML.

Video. Video conversion:

• ocaml-ffmpeg: video conversion,

• ocaml-gavl: video conversion,

• ocaml-theora: Ogg/Theora encoding and decoding.

Other video-related libraries:

• camlimages: decoding of various image formats,

• gd4o: rendering of text,

• ocaml-frei0r: video effects,

• ocaml-imagelib: decoding of various image formats,

• ocaml-sdl: display, text rendering and image formats.

Memory. Memory usage is sometimes an issue with some scripts:

• ocaml-jemalloc: support for the jemalloc memory allocator, which can avoid
memory fragmentation and lower the memory footprint,

• ocaml-memtrace: support for tracing memory allocation in order to
understand where memory consumption comes from,

• ocaml-mem_usage: detailed memory usage information.

Runtime dependencies. These optional dependencies are used by Liquidsoap
if they are installed. They are detected at runtime and do not require any
particular support during compilation:

• awscli: s3:// and polly:// protocol support for Amazon web servers,

• curl: downloading files with the HTTP, HTTPS and FTP protocols,

• ffmpeg: external input and output, replay_gain, level computation, and
more,

• youtube-dl: YouTube video and playlist downloading support.

4 Setting up a simple radio station

4.1 The sound of a sine wave

Our first sound. In order to test your installation, you can try the following in
a console:

liquidsoap 'output(sine())'

This instructs Liquidsoap to run the program

output(sine())

which plays a sine wave at 440 Hertz. The operator

sine

is called a source: it

generates audio (here, a sine wave) and

output

is an operator which takes a

source as parameter and plays it on the soundcard. When running this program,

you should hear the expected well-known sound and see lots of lines looking

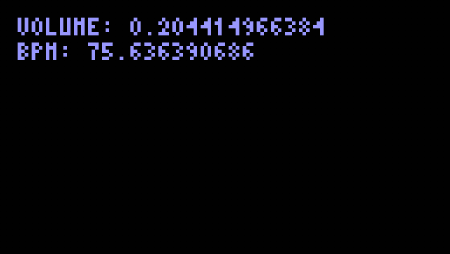

like this:

2021/02/18 15:20:44 >>> LOG START

2021/02/18 15:20:43 [main:3] Liquidsoap 2.0.0

...

These are the logs for Liquidsoap, which are messages describing what each
operator is doing. They are useful for following what the script is doing, and
contain important information for understanding what went wrong when there
is a problem. Each of these lines begins with the date and time at which the
message was issued, followed by who emitted the message (i.e. which operator),
its importance, and the actual message. For instance, [main:3] means that the
main process of Liquidsoap emitted the message and that its importance is 3.
The lower the number is, the more important the message is: 1 is a critical
message (the program might crash after that), 2 a severe message (something
that might affect the program in a deep way), 3 an important message, 4 an
informational message and 5 a debug message (which can generally be ignored).
By default, only messages with importance up to 3 are displayed.
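To make the importance levels concrete, here is a minimal sketch of a script
emitting its own log messages. It assumes that the built-in log function accepts
the optional label and level arguments, as it does in Liquidsoap 2.0; the label
"radio" and the message texts are made up for this illustration.

```liquidsoap
# Hypothetical label "radio", chosen for this example only.
# Importance 3: an important message.
log(label="radio", level=3, "Starting the sine example")

# Importance 4: an informational message, less important than the one above.
log(label="radio", level=4, "Using the default frequency")

output(sine())
```

According to the rule stated above, the second message would be filtered out
with the default settings, since its importance number is greater than 3.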

Scripts. You will soon find out that a typical radio takes more than one line
of code, and it is not practical to write everything on the command line. For
this reason, the code for describing your webradio can also be put in a script,
which is a file containing all the code for your radio. For instance, for our sine
example, we can put the following code in a file radio.liq:

#!/usr/bin/env liquidsoap

# Let's play a sine wave

output(sine())

The first line says that the script should be executed by Liquidsoap. It begins
with #! (sometimes called a shebang) and then says that /usr/bin/env should be
used in order to find the path for the liquidsoap executable. If you know its
complete path (e.g. /usr/bin/liquidsoap) you could also put it directly:

#!/usr/bin/liquidsoap

In the rest of the book, we will generally omit this first line, since it is always
the same. The second line of radio.liq is a comment. You can put whatever
you want here: as long as the line begins with #, it will not be taken into
account. The last line is the actual program we already saw above.

In order to execute the script, you should first make it executable
with the command

chmod +x radio.liq

and you can then run it with

./radio.liq

which should have the same effect as before. Alternatively, the script can also
be run by passing it as an argument to Liquidsoap

liquidsoap radio.liq

in which case the first line (starting with #!) is not required.

Variables. In order to have more readable code, one can use variables which
allow giving names to sources. For instance, we can give the name s to our sine
source and then play it. The above code is thus equivalent to

s = sine()

output(s)

Parameters. In order to investigate further the possible variations on our
example, let us explore the parameters of the sine operator. In order to obtain
detailed help about this operator, we can type, in a console,

liquidsoap -h sine

which will output

Generate a sine wave.

Type: (?id : string, ?amplitude : float, ?float) -> source(audio=internal('a), video=internal('b), midi=internal('c))

Category: Source / Input

Parameters:

* id : string (default: "")

Force the value of the source ID.

* amplitude : float (default: 1.0)

Maximal value of the waveform.

* (unlabeled) : float (default: 440.0)

Frequency of the sine.

(this information is also present in the online documentation¹).

It begins with a description of the operator, followed by its type, category and
arguments (or parameters). There is also a section for methods, which is not
shown above; we ignore it for now, and it will be detailed in section 5.6.
Here, we see in the type that it is a function, because of the presence of the
arrow “->”: the types of the arguments are indicated on the left of the arrow and
the type of the output is indicated on the right. More precisely, we see that it
takes three arguments and returns a source with any number of audio, video and
MIDI channels (the precise meaning of source is detailed in section 8.1). The
three arguments are indicated in the type and detailed in the following
Parameters section:

• the first argument is a string labeled id: this is the name which will be
displayed in the logs,

• the second is a float labeled amplitude: this controls how loud the generated
sine wave will be,

• the third is a float with no label: the frequency of the sine wave.

All three arguments are optional, which means that a default value is provided
and will be used if the argument is not specified. This is indicated in the type
by the question mark “?” before each argument, and the default value is
indicated in Parameters (e.g. the default amplitude is 1.0 and the default
frequency is 440 Hz).

¹ https://www.liquidsoap.info/doc-dev/reference.html

If we want to generate a sine wave of 2600 Hz with an amplitude of 0.8, we can

thus write

s = sine(id="my_sine", amplitude=0.8, 2600.)

output(s)

Note that the parameter corresponding to id has a label id, which we have to
specify in order to pass the corresponding argument, and similarly for amplitude,
whereas there is no label for the frequency.
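As a small sketch of how labels and default values interact, the following
variation passes the labeled arguments after the frequency and omits some
arguments entirely. The names s1 and s2 are only illustrative, and the two
sources are mixed with add simply so that both are played.

```liquidsoap
# Labeled arguments are identified by their label, so they may be written
# after the unlabeled frequency as well as before it.
s1 = sine(2600., amplitude=0.8, id="my_sine")

# Omitted arguments take the defaults listed in the help above:
# here id is "" and the frequency is 440 Hz.
s2 = sine(amplitude=0.3)

output(add([s1, s2]))
```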

Finally, just for fun, we can hear an A minor chord by adding three sines:

s1 = sine()

s2 = sine(440. * pow(2., 3. / 12.))

s3 = sine(440. * pow(2., 7. / 12.))

s = add([s1, s2, s3])

output(s)

We generate three sines at frequencies 440 Hz, $440 \times 2^{3/12}$ Hz and
$440 \times 2^{7/12}$ Hz (roughly 523.25 Hz and 659.26 Hz), add them, and play
the result. The operator add takes as argument a list of sources, delimited by
square brackets, which can contain any number of elements.
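Since each frequency is 440 Hz multiplied by a power of 2^(1/12), the same chord
can also be sketched with a small helper function. Functions are only presented
in chapter 5, so this is a preview rather than the recommended way of writing
the example; the name note is hypothetical and introduced here for illustration.

```liquidsoap
# Hypothetical helper: a sine n semitones above the 440 Hz reference.
def note(n) =
  sine(440. * pow(2., n / 12.))
end

# 0, 3 and 7 semitones: the three notes of the A minor chord above.
s = add(list.map(note, [0., 3., 7.]))
output(s)
```

Changing the list to [0., 4., 7.] would raise the middle note by one semitone,
which gives the corresponding major chord.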

4.2 A radio

Playlists and more. Since we are likely to be here not to make synthesizers
but rather radios, we should start playing actual music instead of sines. In order
to do so, we have the playlist operator which takes as argument a playlist: it
can be a file containing paths to audio files (wav, mp3, etc.), one per line, or a
playlist in a standard format (pls, m3u, xspf, etc.), or a directory (in which case
the playlist consists of all the files in the directory). For instance, if our music is
stored in the ~/Music directory, we can play it with

s = playlist("~/Music")

output(s)

As usual, the operator playlist has a number of interesting optional parameters
which can be discovered with liquidsoap -h playlist. For instance, by default,
the files are played in a random order, but if we want to play them as indicated
in the list we should pass the argument mode="normal" to playlist. Similarly, if
we want to reload the playlist whenever it is changed, the argument
reload_mode="watch" should be passed.
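Putting the two arguments mentioned above together, a playlist played in order
and reloaded on change could be sketched as follows (the ~/Music directory is
the same example path as before):

```liquidsoap
# Play the files in the order of the list, and reload the playlist
# whenever it is changed.
s = playlist(mode="normal", reload_mode="watch", "~/Music")
output(s)
```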

A playlist can refer to distant files (e.g. URLs of the form

http://path/to/file.mp3

)