The Annals of Applied Probability

2020, Vol. 30, No. 5, 2491–2515

https://doi.org/10.1214/20-AAP1564

© Institute of Mathematical Statistics, 2020

ASYMPTOTIC THEORY OF SPARSE BRADLEY–TERRY MODEL

B

Y RUIJIAN HAN

*

,ROUGANG YE

†

,CHUNXI TAN

‡

AND KANI CHEN

§

Department of Mathematics, Hong Kong University of Science and Technology,

*

†

‡

§

The Bradley–Terry model is a fundamental model in the analysis of net-

work data involving paired comparison. Assuming every pair of subjects in

the network have an equal number of comparisons, Simons and Yao (Ann.

Statist. 27 (1999) 1041–1060) established an asymptotic theory for statistical

estimation in the Bradley–Terry model. In practice, when the size of the net-

work becomes large, the paired comparisons are generally sparse. The spar-

sity can be characterized by the probability p

n

that a pair of subjects have at

least one comparison, which tends to zero as the size of the network n goes to

infinity. In this paper, the asymptotic properties of the maximum likelihood

estimate of the Bradley–Terry model are shown under minimal conditions of

the sparsity. Specifically, the uniform consistency is proved when p

n

is as

small as the order of (log n)

3

/n, which is near the theoretical lower bound

log n/n by the theory of the Erd

˝

os–Rényi graph. Asymptotic normality and

inference are also provided. Evidence in support of the theory is presented in

simulation results, along with an application to the analysis of the ATP data.

1. Introduction. Is Roger Federer a better tennis player than John McEnroe? Is research

article A more influential than research article B, among a collection of all research articles in

a scientific field? Is webpage A more important than webpage B, among the existing millions

of webpages? Is person A more popular than person B in a large social network, such as

Twitter users? These questions may be answered by analysis of paired comparison data in a

network. The paired comparison may be in terms of head-to-head match outcomes, citation

of a research article by another, a webpage containing a link of another webpage, a user

retweeting the tweet of another user, etcetera. When the size of the network, such as a total

number of webpages, becomes large, paired comparisons are generally sparse. The sparsity

may be described in different ways, such as the total number of observed comparisons divided

by the total number of subjects in the network. Throughout this paper, the size of the network

in study is denoted as n, and we assume any pair has a comparison with probability p

n

.The

degree of sparsity is then characterized by the size of p

n

, the smaller the sparser.

For paired comparison, the Bradley–Terry model (Bradley and Terry (1952)) is one of the

most commonly used models. Consider n subjects in a network. Subject i has merit u

i

for

i =0,...,n−1, where u

i

∈R and u

i

> 0. The Bradley–Terry model assumes the probability

that subject i defeats j as

(1.1) p

ij

=

u

i

u

i

+u

j

,i,j=0,...,n− 1;i = j.

The generalizations of the Bradley–Terry model can be seen in, for example, Luce (1959),

Rao and Kupper (1967)andAgresti (1990), among many others. For estimation of the merits

based on a set of paired comparison data, the maximum likelihood estimation (MLE) is a

common choice. It is much desired to justify the asymptotic properties of the MLE, particu-

larly when the comparisons are sparse. A distinct feature of this problem is that the number

Received June 2019; revised October 2019.

MSC2020 subject classifications. Primary 60F05; secondary 62E20, 62F12, 62J15.

Key words and phrases. Bradley–Terry model, sparsity, uniform consistency, asymptotic normality, maximum

likelihood estimation.

2491

2492 HAN, YE, TAN AND CHEN

of parameters, which is the same as the number of subjects, tends to infinity. Moreover, in the

case of sparsity, the number of comparisons per pair is 0 with probability tending to 1.

Suppose any pair has a fixed positive number of comparisons, Simons and Yao (1999)

proved the uniform consistency and asymptotic normality of the MLE for the Bradley–Terry

model. Under this assumption, there would be at least n(n −1)/2 comparisons in total. Fur-

ther extension was reported in Yan, Yang and Xu (2012) with relaxed conditions but still

requiring the number of comparisons at the order of n

2

. Both papers considered nonsparse

cases, where p

n

has a positive lower bound and, as a result, does not go to 0. In this paper, we

show the asymptotic properties of the MLE for sparse comparisons. In particular, when the

maximum ratio of merits are bounded, the uniform consistency holds as long as p

n

is greater

than (log n)

3

/n, and the asymptotic normality is true when p

n

is greater than the order of

(log n)

1/5

/n

1/10

.

The order of (log n)

3

/n required for the uniform consistency is close to the necessary

theoretical lower bound, log n/n, below which a unique MLE does not exist. The network

we consider can be regarded as the Erd

˝

os–Rényi graph G(n, p

n

) (Erd

˝

os and Rényi (1959)),

where each node stands for a subject and each edge stands for the comparison between the

two corresponding nodes. Erd

˝

os and Rényi (1960) showed that the Erd

˝

os–Rényi graph will

be disconnected with positive probability if p

n

<log n/n,forany<1. As to be seen,

a disconnected graph will fail the condition of the existence and uniqueness of the MLE of

Bradley–Terry model, implying that not all subjects are comparable. In the sense of sparsity,

the theory established in this paper is nearly optimal.

Although we assume that each pair of subjects in the network has a comparison with

the same probability p

n

, one can follow our proof to extend it to the case with different

comparison probabilities at the order of p

n

. The main contribution of this article is to show

the asymptotic theorem of MLE when p

n

→0 and how small p

n

can be to obtain it.

We note that Negahban, Oh and Shah (2012)andMaystre and Grossglauser (2015)showed

the consistency of the MLE under

2

norm for sparse comparison data. The

2

norm therein

is normalized by

√

n. Since the network size goes to infinity, consistency under the

2

norm

does not ensure the consistency of the merits of any fixed number of subjects. In other words,

with their normalized

2

consistency, one cannot tell for sure that the estimation of merits

ratio of any given pair is accurate. In this sense, the uniform consistency is a much desired

result.

This paper is organized as follows. In Section 2, we show the large sample properties of

the MLE. Simulation results and analysis of the ATP data are given in Section 3. Section 4

contains some concluding remarks. All proofs are relegated to the appendices.

2. Main results. Consider any two subjects i and j with 0 ≤ i, j ≤n −1. Let t

ij

denote

the number of comparisons between subjects i and j and a

ij

denote the number of times

that i defeats j .Seta

ii

= t

ii

= 0 for simplicity of notation. Then t

ji

= t

ij

= a

ij

+ a

ji

for

all i, j . For slightly more generality, throughout the sequel, we assume t

ij

follows binomial

distribution, ∼ Bin(T , p

n

) where T is a positive integer not depending on n.Givent

ij

,the

Bradley–Terry model implies a

ij

∼ Bin(t

ij

,p

ij

),wherep

ij

= u

i

/(u

i

+u

j

). Without loss of

generality, one can take T as 1 for ease of understanding.

Based on the observations of paired comparisons {a

ij

,t

ij

:0 ≤i, j ≤ n −1}, the likelihood

function is

(2.1) L(u) ∝

n−1

i,j=0

i=j

p

a

ij

ij

=

n−1

i=0

u

a

i

i

0≤i<j≤n−1

(u

i

+u

j

)

t

ij

,

SPARSE BRADLEY–TERRY MODEL 2493

where a

i

=

n−1

j=0

a

ij

is the total number of comparisons that subject i wins and u =

(u

0

,u

1

,...,u

n−1

) is the merits vector. By the method of maximum likelihood estimation,

the likelihood equations are

(2.2) a

i

=

n−1

j=0

t

ij

ˆu

i

ˆu

i

+ˆu

j

,i=0,...,n− 1,

where ˆu = ( ˆu

0

, ˆu

1

,..., ˆu

n−1

) is the MLE of the merits vector u. Since the Bradley–Terry

model is invariant under scaling of parameters, we assume that u

0

=1, ˆu

0

=1 for the purpose

of identifiability.

As noted by Zermelo (1929)andFord (1957), a necessary and sufficient condition for

existence and uniqueness of the MLE is as follows,

C

ONDITION A. For every partition of subjects into two nonempty sets, a subject in the

second set has defeated a subject in the first at least once.

When Condition A is not satisfied, there exists a nonempty set of subjects, say

A, such that

the MLEs of the merits of members in

A would be infinitely larger than those of the members

not in

A. As a result, the MLE cannot be consistent. Under some sparsity conditions given

there, Lemma 1 shows Condition A holds with probability approaching 1 as n →∞.Some

more notations are introduced here:

(2.3)

M

n

= max

0≤i,j≤n−1

u

i

u

j

,

n

=

(log n)

3

[log(np

n

)]

2

np

n

and

u

i

=

ˆu

i

−u

i

u

i

,i=0,...,n− 1,

where M

n

is the largest ratio of u

i

and u

j

for all i, j , and will be called the largest ratio of

merits.

L

EMMA 1. If

(2.4)

M

n

log n

np

n

→0 as n →∞,

then P(Condition A is satisfied) → 1 as n →∞.

R

EMARK 1. The largest ratio of merits, M

n

controls the spread of the merits in the net-

work, while p

n

controls the possibility of comparisons. A large M

n

and a small p

n

both

increase the likelihood of the existence of a group of subjects such that any member of this

group always wins in a comparison with any member not in this group, and result in Condi-

tion A being violated.

Under condition (2.4), p

n

can be close to the order of log n/n.GivenT = 1, (t

ij

)

n×n

can

be regarded as the adjacency matrix of the Erd

˝

os-Rényi graph (Erd

˝

os and Rényi (1959)),

denoted as G(n, p

n

), under our assumption. Erd

˝

os and Rényi (1960) showed that if p

n

<

log n/n, for any positive <1, G(n, p

n

) is disconnected, disagreeing with Condition A,

with probability tending to 1. Therefore, in order to satisfy Condition A, it is necessary to

require p

n

≥log n/n. According to (2.4), when we fix M

n

as a constant, p

n

nearly meets the

lower bound logn/n.

Condition (2.4)ofLemma1 ensures the existence and uniqueness of the MLE ( ˆu

0

, ˆu

1

,...,

ˆu

n−1

). The theorems in this paper all assume conditions that imply (2.4).

2494 HAN, YE, TAN AND CHEN

2.1. Uniform consistency. We first define two notations O

p

and o

p

to stand for the or-

der of the sequence of random variables. Given a sequence of random variables {X

n

} and a

corresponding sequence of constants {a

n

}. We say that X

n

= O

p

(a

n

) if for any >0, there

exists a finite M>0 and a finite N>0 such that P(|X

n

/a

n

|>M)<for any n>N.Gen-

erally speaking, X

n

= O

p

(a

n

) denotes X

n

/a

n

is stochastically bounded. Another notation

X

n

=o

p

(a

n

) means that X

n

/a

n

converges to zero in probability.

Based on these two notations, we have the following theorem.

T

HEOREM 2.1. If

(2.5) M

2

n

n

→0 as n →∞,

then

(2.6) max

i=0,...,n−1

|u

i

|=O

p

M

2

n

n

=o

p

(1).

R

EMARK 2. The condition imposed on the largest ratio of merits M

n

and sparsity p

n

in (2.5) ensures the uniform consistency of the MLE of the Bradley–Terry model when the

comparisons may be sparse and the network is large. For a large value of M

n

, the teams

with relative poor merits has very little chance to defeat those with relative large merits,

thereby making estimation difficult. Meanwhile, for a small p

n

, teams have few opportunities

to compete with others, thus making a poor estimation.

To prove (2.6), we let

i

0

=argmax

i

ˆu

i

u

i

,i

1

=argmin

i

ˆu

i

u

i

.

Since ˆu

0

/u

0

= 1, it suffices to show that the ratio of subject i

0

, ˆu

i

0

/u

i

0

, and the ratio of i

1

,

ˆu

i

1

/u

i

1

are very close.

Review that the main idea of the previous work (Simons and Yao (1999), Yan, Yang and

Xu (2012)) contains two parts. The first part is that the number of the common neighbors

between any two subjects is at least cn for some constant c through their dense assumption.

The second part is that for subject i = i

0

or i

1

, some subjects j with t

ij

= 0 have the ratio

close to the ratio of i. Then, it can be shown there exists at least one subject, say s (one would

suffice), who is a neighbor of i

0

with the ratio ˆu

s

/u

s

close to ˆu

i

0

/u

i

0

and is also a neighbor

of i

1

with the ratio close to ˆu

i

1

/u

i

1

. Such a common neighbor serves as middleman between

subjects i

0

and i

1

. As a result, ˆu

i

0

/u

i

0

is close to ˆu

i

1

/u

i

1

. Thus, the uniform consistency holds.

However, in the sparse case, the number of common neighbors of any two subjects tends

to0asn increases to infinity. If we follow the previous proof directly, no common neighbors

of subjects i

0

and i

1

may be found, let alone a common neighbor with desired closeness of its

ratio to ˆu

i

0

/u

i

0

and ˆu

i

1

/u

i

1

. Due to the absence of such a middleman, this approach cannot

be applied to the sparse comparison.

It appears to be an obvious extension that one might try to find a chain of subjects, say

l

1

,...,l

k

, serving as middlemen to bridge the subjects i

0

and i

1

. Namely l

i+1

is a neighbor

of l

i

and they are close in terms of the ratios. An immediate difficulty, along with other

technicalities, arises from the evaluation of this closeness since the previous proof only works

for subjects i

0

and i

1

. Then, the second extension of our proof is to show for any subject

i =0,...,n−1, some subjects j with t

ij

= 0 have the ratios close to the ratio of i,whichis

summarized in Lemma 3.

With these two extensions, we prove the uniform consistency by showing the existence

of a nonempty intersection between two carefully designed sets. One is the set of subjects

SPARSE BRADLEY–TERRY MODEL 2495

having the ratio close to the maximum ratio ˆu

i

0

/u

i

0

. The other is the set of subjects having

the ratio close to the minimum ratio ˆu

i

1

/u

i

1

. For the first set, we start from A ={i

0

} and then

constantly expand the size of A through the neighbors of the subjects in A until |A| >n/2.

Similarly, we can obtain the size of the second set is also larger than n/2. Hence there must

exist a subject, a middleman, with its ratio close to both ˆu

i

0

/u

i

0

and ˆu

i

1

/u

i

1

. More details can

be found in Remark 5.

The consistency presented in Theorem 2.1 also applies to the special case of nonsparse

comparisons, where p

n

has a lower bound away from 0, as considered in the previous work.

Moreover, the special case of M

n

being a constant sheds light on the sparsity required for the

uniform consistency. These are summarized in the following corollaries.

C

OROLLARY 1. If M

n

=C for some constant C ≥ 1 and there exists an n

0

such that

(2.7) p

n

≥

(log n)

3

n

for all n>n

0

, then

(2.8) max

i=0,...,n−1

|u

i

|=O

p

1

log(np

n

)

=o

p

(1).

C

OROLLARY 2. If p

n

=c for some constant c ≤ 1 and

(2.9) M

2

n

n

→0 as n →∞,

then

(2.10) max

i=0,...,n−1

|u

i

|=O

p

M

2

n

log n

n

=o

p

(1).

R

EMARK 3. Corollary 1 shows the uniform consistency of the MLE holds when M

n

=C

and p

n

≥(log n)

3

/n, close to the theoretical lower bound log n/n.

2.2. Asymptotic normality. Recall that a

i

=

n−1

j=0,j =i

a

ij

,wherea

ij

is the number of

times that subject i prevails over j .LetV

n−1

=(v

ij

)

i,j=1,...,n−1

denote the covariance matrix

of a

1

,...,a

n−1

,where

(2.11) v

ii

=

n−1

k=0

t

ik

u

i

u

k

(u

i

+u

k

)

2

,v

ij

=−

t

ij

u

i

u

j

(u

i

+u

j

)

2

,i,j=1,...,n− 1;i = j.

Let v

00

=

n−1

i,j=1

v

ij

=

n−1

k=1

[(t

0k

u

k

)/(1 +u

k

)

2

].HereV

n−1

is the Fisher information ma-

trix for the parameterization (log u

1

,...,log u

n−1

). Simons and Yao (1999) used a symmetric

matrix S

n−1

=(s

ij

)

(n−1)×(n−1)

to approximate V

−1

n−1

,where

(2.12) s

ij

=

δ

ij

v

ii

+

1

v

00

,i,j= 1,...,n−1,

and δ

ij

is the Kronecker delta. With sparse comparisons, we shall re-evaluate the accuracy of

this approximation in Lemma 7. The following theorem shows the asymptotic normality of

the MLE.

T

HEOREM 2.2. If

(2.13)

M

n

p

n

(log n)

1/5

n

1/10

→0 as n →∞,

then for each fixed r ≥ 1, as n →∞, the vector (u

1

,...,u

r

) is asymptotically normally

distributed with mean 0 and covariance matrix given by the upper left r × r block of S

n−1

defined in (2.12).

2496 HAN, YE, TAN AND CHEN

As expected, condition (2.13)involvesM

n

and p

n

. The following two corollaries each

deal with the special cases of M

n

and p

n

being constants.

C

OROLLARY 3. If p

n

=c for some constant c ≤ 1 and

(2.14) M

n

(log n)

1/5

n

1/10

→0 as n →∞,

then for each fixed r ≥ 1, as n →∞, the vector (u

1

,...,u

r

) is asymptotically normally

distributed with mean 0 and covariance matrix given by the upper left r × r block of S

n−1

defined in (2.12).

C

OROLLARY 4. If M

n

=C for some constant C ≥ 1 and

(2.15) p

n

n

1/10

(log n)

1/5

→∞ as n →∞,

then for each fixed r ≥ 1, as n →∞, the vector (u

1

,...,u

r

) is asymptotically normally

distributed with mean 0 and covariance matrix given by the upper left r × r block of S

n−1

defined in (2.12).

R

EMARK 4. Corollary 3 is the theorem about asymptotic normality presented in Simons

and Yao (1999)andYan, Yang and Xu (2012), and Corollary 4 gives the sparsity condition

to ensure asymptotic normality when the largest ratio of merits is bounded above.

3. Numerical studies.

3.1. Simulation. Simulations are carried out to evaluate the finite sample performance of

the MLE of the Bradley–Terry model. We assume T = 1 in all simulations, which means that

any pair has one comparison with probability p

n

and no comparison with probability 1 −p

n

.

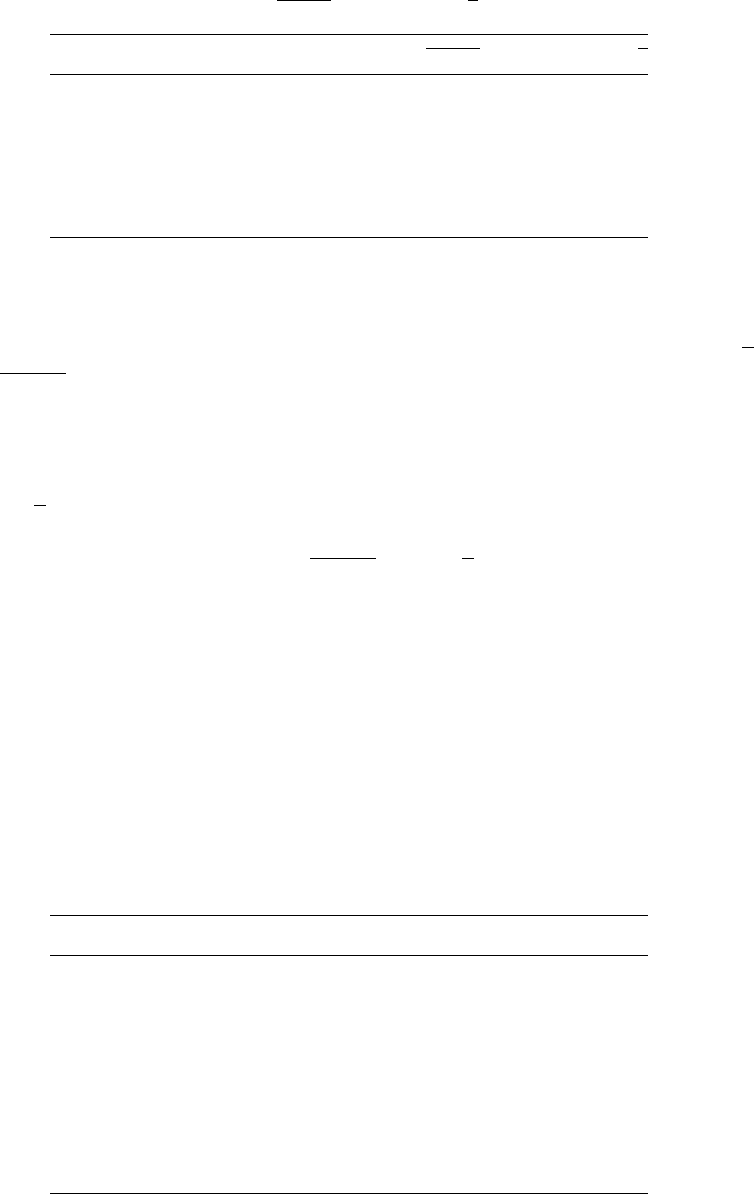

The result of the first simulation study is shown in Table 1. In order to study the uniform

consistent tendency of MLE, we present the mean and median of max

i=0,...,n−1

|u

i

| based

on 1000 repetitions. In this simulation, the size of network n is taken to be 1000, 2000,

5000, 10,000, 15,000, the sparse probability p

n

is chosen as logn/n, (logn)

3

/n,10/

√

n

and M

n

equals to 1, which implies merits of all subjects are identical. When p

n

= log n/n,

all the repetitions do not produce the MLE since Condition A is not satisfied. In the case

of p

n

= (log n)

3

/n, both mean and median of max

i=0,...,n−1

|u

i

| become closer to 0 with

increasing n. For comparison, we also consider p

n

as large as 10/

√

n. We multiply 1/

√

n by

10 to ensure there are more paired comparisons than p

n

= (log n)

3

/n for values of n in the

simulation. The result shown in Table 1 indeed indicates the consistency of the MLE under

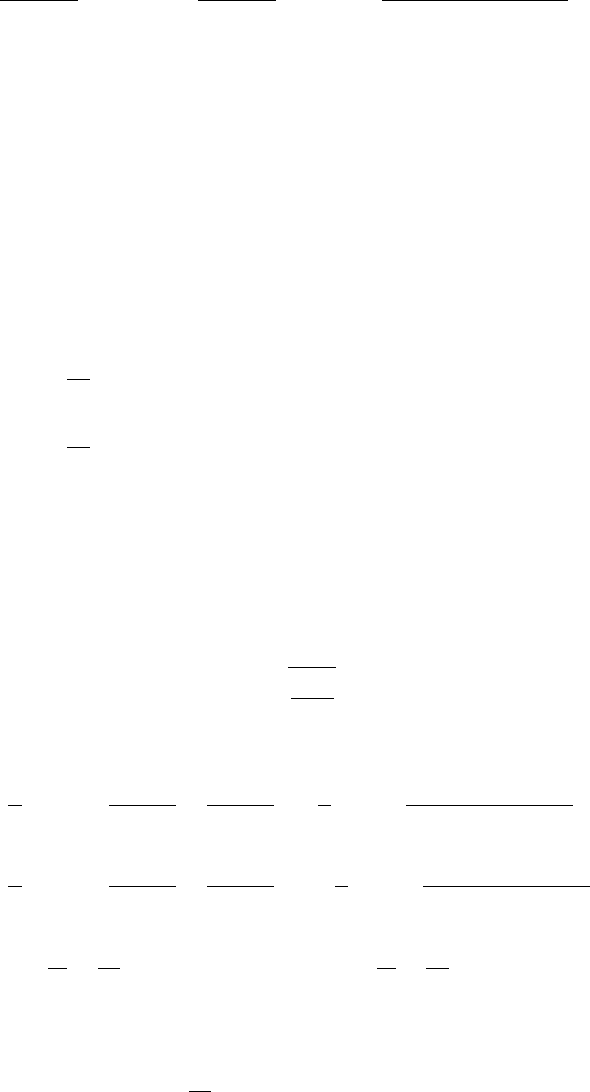

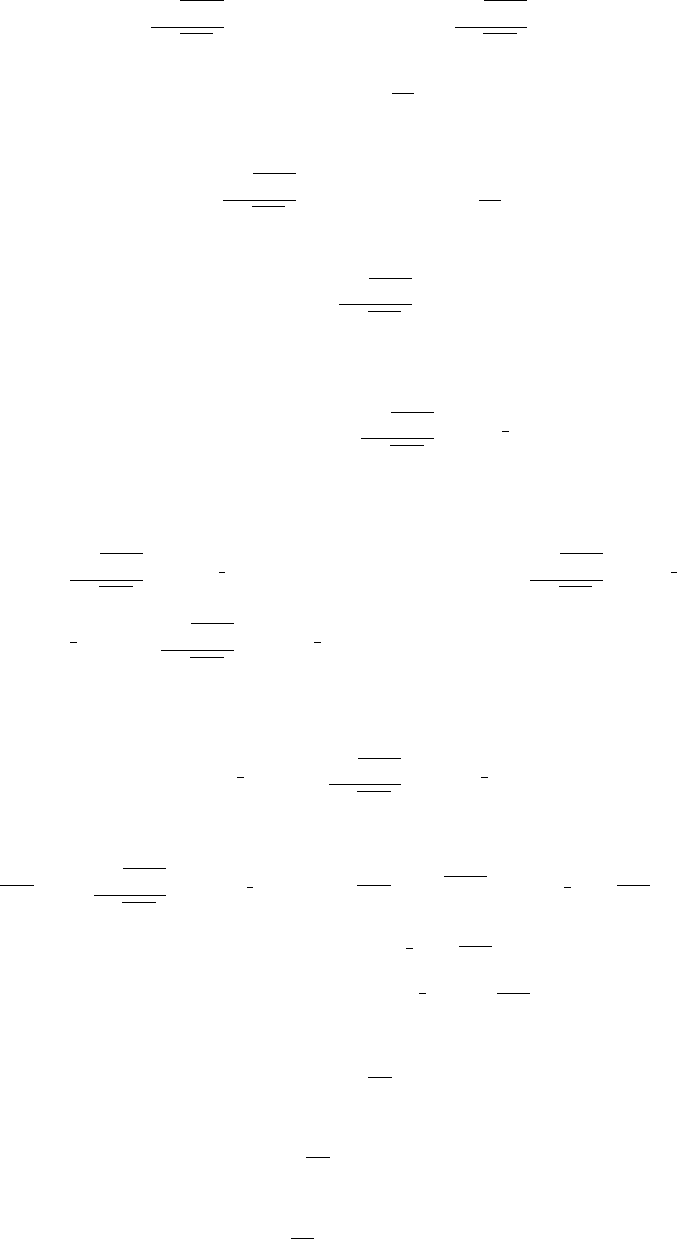

TABLE 1

The mean and median (in parentheses) of max

i=0,...,n−1

|u

i

|. In the third column, “–” means all repetitions

fail Condition A. The three numbers in the parentheses in the first column represent respectively the average

numbers of comparisons one subject has for p

n

=log n/n, p

n

=(log n)

3

/n and p

n

=10/

√

n

nM

n

p

n

=log n/n p

n

=(log n)

3

/n p

n

=10/

√

n

1000 (7/330/316) 1 – 0.4784 (0.4365) 0.4862 (0.4427)

2000 (8/439/447) 1 – 0.4291 (0.4012) 0.4242 (0.3929)

5000 (9/618/707) 1 – 0.3765 (0.3593) 0.3511 (0.3327)

10,000 (9/781/1000) 1 – 0.3423 (0.3223) 0.2988 (0.2867)

15,000 (10/889/1225) 1 – 0.3226 (0.3085) 0.2727 (0.2595)

SPARSE BRADLEY–TERRY MODEL 2497

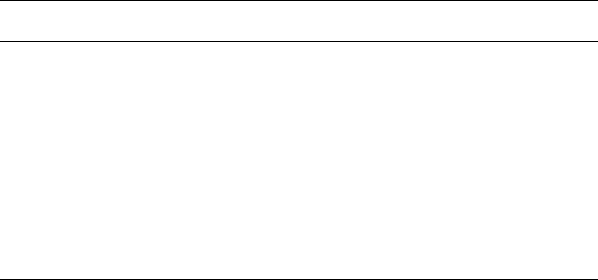

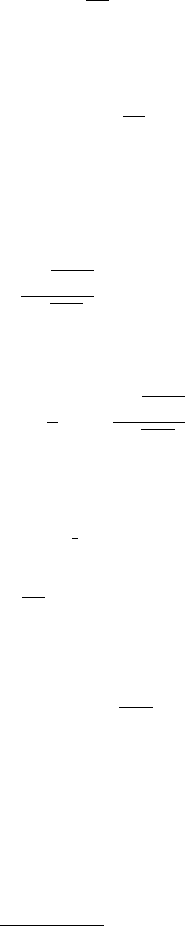

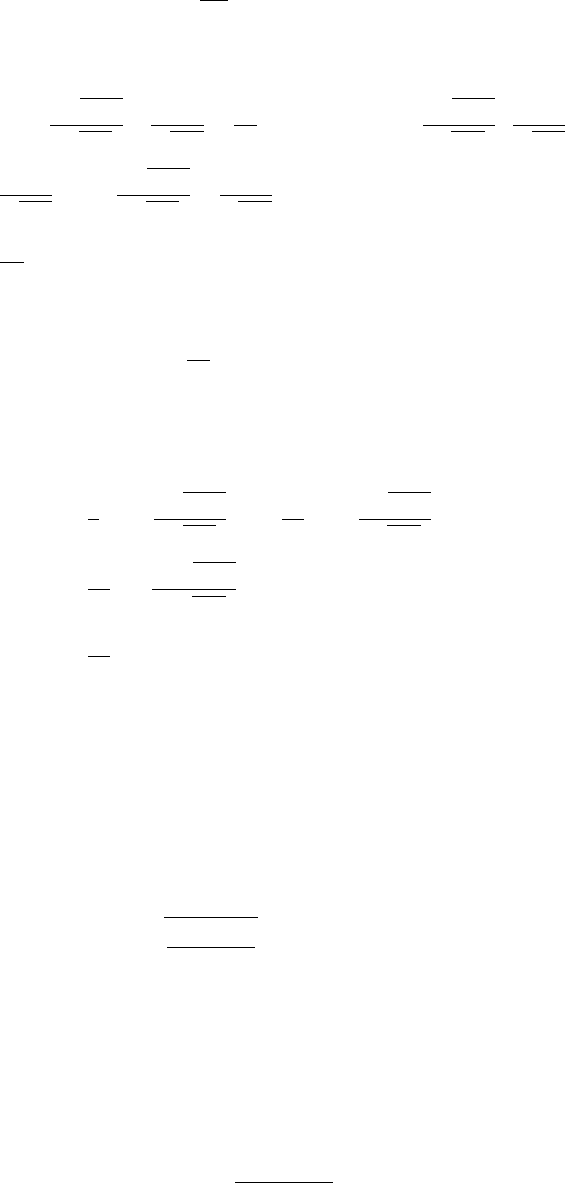

TABLE 2

Coverage probabilities and the probabilities that Condition A fails (in parentheses). In the first column, the

numbers in the parentheses represent the average numbers of comparisons one subject has

n(i,j)M

n

=1 M

n

=

√

nM

n

=n

p

n

=1/

√

n

100(10) (0, 1) 0.277 (0.703) 0.041 (0.958) 0.001 (0.999)

(0, 99) 0.275 (0.703) 0.041 (0.958) 0.001 (0.853)

(50, 51) 0.278 (0.703) 0.039 (0.958) 0.001 (0.853)

200(14) (0, 1) 0.697 (0.260) 0.124 (0.871) 0.001 (0.999)

(0, 199) 0.696 (0.260) 0.123 (0.871) 0.001 (0.999)

(100, 101) 0.692 (0.260) 0.120 (0.871) 0.001 (0.999)

500(23) (0, 1) 0.932 (0.010) 0.416 (0.562) 0.002 (0.998)

(0, 499) 0.931 (0.010) 0.415 (0.562) 0.002 (0.998)

(250, 251) 0.930 (0.010) 0.410 (0.562) 0.002 (0.998)

p

n

=

√

log n/n

100(21) (0, 1) 0.938 (0.003) 0.888 (0.070) 0.501 (0.483)

(0, 99) 0.940 (0.003) 0.886 (0.070) 0.491 (0.483)

(50, 51) 0.943 (0.003) 0.877 (0.070) 0.483 (0.483)

200(33) (0, 1) 0.944 (0) 0.941 (0.011) 0.710 (0.262)

(0, 199) 0.947 (0) 0.939 (0.011) 0.696 (0.262)

(100, 101) 0.942 (0) 0.931 (0.011) 0.693 (0.262)

500(56) (0, 1) 0.949 (0) 0.949 (0) 0.897 (0.062)

(0, 499) 0.945 (0) 0.948 (0) 0.886 (0.062)

(250, 251) 0.947 (0) 0.944 (0) 0.890 (0.062)

p

n

=1

100(99) (0, 1) 0.951 (0) 0.954 (0) 0.954 (0)

(0, 99) 0.952 (0) 0.954 (0) 0.950 (0)

(50, 51) 0.941 (0) 0.948 (0) 0.948 (0)

200(199) (0, 1) 0.954 (0) 0.954 (0) 0.942 (0)

(0, 199) 0.953 (0) 0.957 (0) 0.951 (0)

(100, 101) 0.950 (0) 0.946 (0) 0.951 (0)

500(499) (0, 1) 0.951 (0) 0.953 (0) 0.949 (0)

(0, 499) 0.953 (0) 0.959 (0) 0.950 (0)

(250, 251) 0.955 (0) 0.948 (0) 0.950 (0)

the sparsity condition given in Theorem 2.1. This further supports that our sparsity condition

nearly meets the lower bound of p

n

to ensure the existence of a unique MLE.

The second simulation is done to measure the coverage probabilities of MLE and the

result based on 5000 repetitions is given in Table 2. By applying Theorem 2.2, we construct

the approximate 1 −α confidence interval for log(u

i

/u

j

) as

log( ˆu

i

/ ˆu

j

) ±z

1−α/2

1/ ˆv

ii

+1/ ˆv

jj

,

where z

1−α/2

refers to the quantile of the standard normal distribution at level 1 − α/2. The

asymptotic variances are based on (2.11), and ˆv

ii

and ˆv

jj

are computed using ˆu,theMLE

of merits u. We present the coverage probabilities of 95% confidence intervals of some pairs

of merits (the first two merits, the middle two merits, the first and the last merits when they

are sorted in ascending order) when Condition A is met. The frequencies that Condition A

fails are also reported. In this simulation, we choose the size of network n = 100, 200, 500,

the sparse probability p

n

= 1/

√

n,

√

log n/n, 1 and the largest ratio of merits M

n

= 1,

√

n.

2498 HAN, YE, TAN AND CHEN

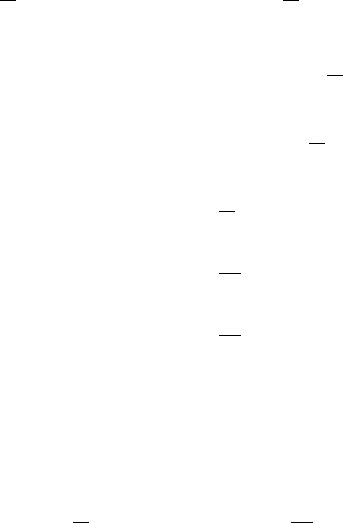

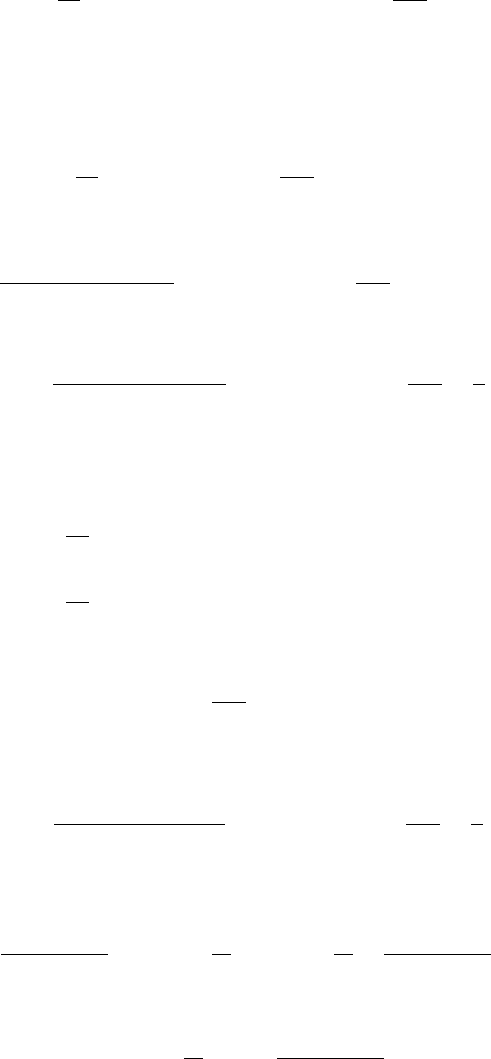

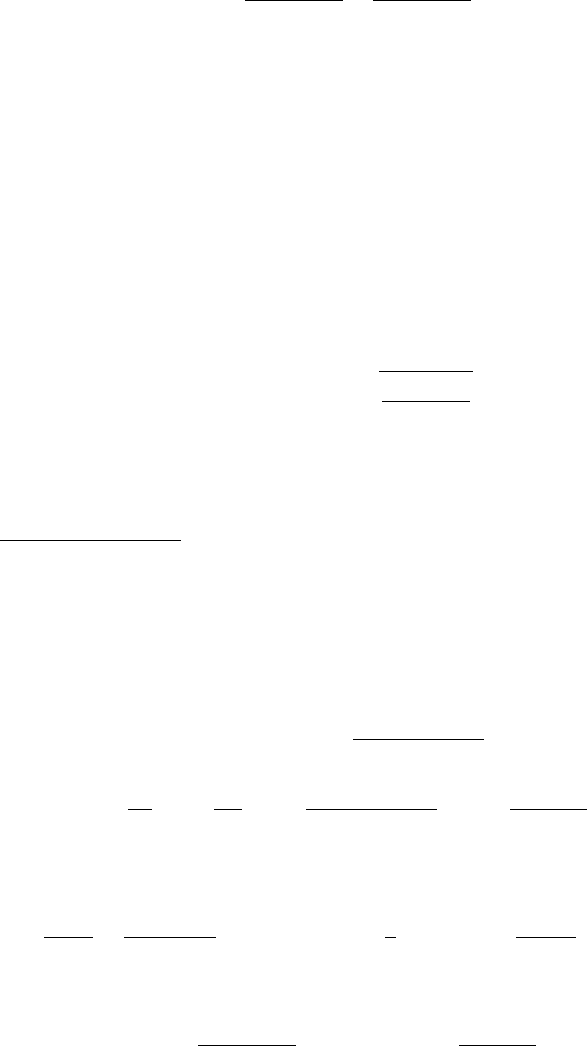

T

ABLE 3

Coverage probabilities and the probabilities that Condition A fails (in parentheses)

when M

n

=1 and n = 1000, 2000. In the first column, the two numbers in the

parentheses represent the numbers of comparisons one subject has for

p

n

=

√

log n/n and p

n

=log n/

√

n

n(i,j)p

n

=

√

log n/n p

n

=log n/

√

n

1000 (83/218) (0, 1) 0.942 (0) 0.938 (0)

(0, 999) 0.947 (0) 0.943 (0)

(500, 501) 0.943 (0) 0.946 (0)

2000 (123/340) (0, 1) 0.945 (0) 0.954 (0)

(0, 1999) 0.948 (0) 0.950 (0)

(1000, 1001) 0.940 (0) 0.956 (0)

The average numbers of comparisons one subject has under different sparse probabilities are

shown in the parentheses following the numbers of subjects n in Table 2. For example, there

are only around 20 and 50 comparisons for each of 500 subjects in two cases with p

n

=1/

√

n

and

√

log n/n respectively.

From Table 2, we see coverage probabilities become closer to the nominal level as p

n

increases or M

n

decreases. With n increasing, the coverage probabilities approach the nomi-

nal level and the probabilities that Condition A fails decrease. These phenomena agree with

the theoretical asymptotic properties given in Theorem 2.2. Condition A fails mostly when

p

n

=1/

√

n and M

n

=n, due to extremely sparse comparisons and the large range of merits.

Furthermore, Table 3 reports coverage probabilities for larger network, with n = 1000 and

2000. In this simulation, we let p

n

=

√

log n/n,logn/

√

n and M

n

= 1, which means all

subjects have equal merits. One can conclude that the coverage probabilities are close to the

nominal level from Table 3, showing evidence in support of the theory.

3.2. The ATP dataset. We present results of the Bradley–Terry model applied to the 2017

ATP data and then compare its ranking with the official ATP ranking. The ATP matches of

one year include four Grand Slams, the ATP World Tour Masters 1000, the ATP World Tour

500 series and other tennis series of the year. There are 203 players in total after removing

those who never win or lose for Condition A to be satisfied. Besides, we exclude walkovers

and only consider finished games. The results are reported in Table 4. The estimated merits of

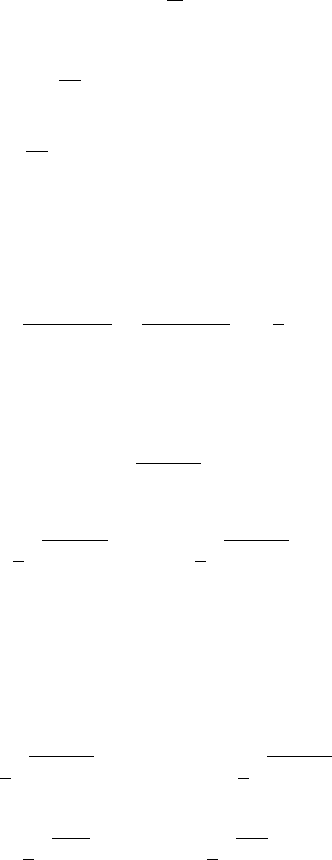

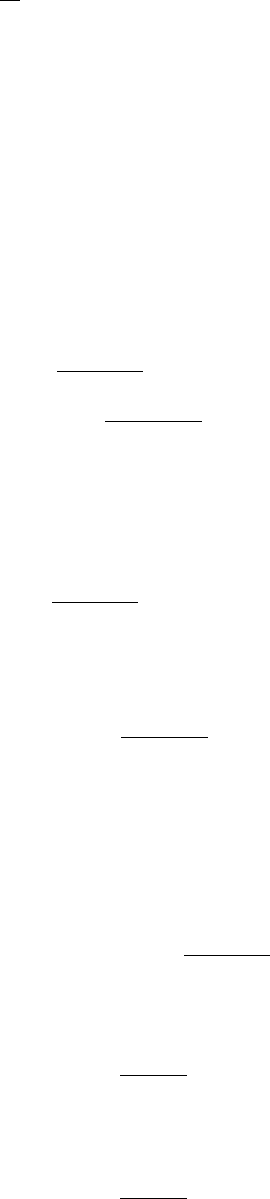

TABLE 4

Results of the analysis of the ATP 2017 data

Rank Player Games Winning rate Merit ATP Ranking

1 Roger Federer 55 0.909 7.505 2

2 Rafael Nadal 76 0.855 4.085 1

3 Novak Djokovic 36 0.806 2.029 12

4 Juan Martin del Potro 53 0.698 1.440 11

5 Alexander Zverev 73 0.712 1.321 4

6 Grigor Dimitrov 65 0.708 1.303 3

7 Nick Kyrgios 38 0.684 1.287 21

8 Milos Raonic 39 0.718 1.136 24

9 Stan Wawrinka 36 0.694 1.043 9

10 Kei Nishikori 42 0.714 1.000 22

SPARSE BRADLEY–TERRY MODEL 2499

TABLE 5

Results of the analysis of the ATP 1968–2016 data

Rank Player Games Winning rate Merit

1 Novak Djokovic 880 0.831 2.788

2 Rafael Nadal 968 0.818 2.286

3 Roger Federer 1287 0.814 2.128

4 Andy Murray 782 0.776 1.744

5 Ivan Lendl 1274 0.820 1.247

6 Pete Sampras 957 0.770 1.128

7 Andy Roddick 784 0.741 1.111

8 John McEnroe 1035 0.813 1.104

9 Juan Martin del Potro 481 0.709 1.066

10 Andre Agassi 1101 0.756 1.000

Bradley–Terry model are given in the fifth column, and, based on which, the ranks are given

in the first column. The number of games played in 2017, winning rates and ATP rankings

are also presented. The 10th player, Kei Nishikori, is taken as the baseline (u

0

=ˆu

0

= 1).

We note that there is a difference between ranking by the estimated merits and the ATP

ranking. For example, the 7th player in our ranking list, Nick Kyrgios, ranked 21st in the

ATP ranking. Yet he defeated Novak Djokovic twice and Rafael Nadal once in 2017. These

will be counted heavily in the Bradley–Terry model and less so in the points calculation which

the ATP ranking is based on. Notice that Roger Federer and Rafael Nadal have reversed order

in the two ranking systems. In fact, Rafael Nadal had 6 winners and 4 runners-up and more

ATP points in 2017 than Roger Federer, who had 7 winners and 1 runner-up. On the other

hand, Federer defeated Nadal four times in 2017 and had an outstanding winning rate. As a

result, the estimated merit of Federer is higher than that of Nadal.

Moreover, we applied the Bradley–Terry model to the ATP matches from 1968 to 2016.

There are 2877 players in total after data cleaning. All players are ranked by their estimated

merits, and top 10 of them are presented in Table 5. The 10th player, Andre Agassi, is taken as

the baseline. The Big Four, Novak Djokovic, Rafael Nadal, Roger Federer and Andy Murray,

rank top four in the ranking list. They are considered dominant in terms of ranking and

the tournament victories from 2004 onwards. With this dataset and the application of the

Bradley–Terry model, the estimated chance that Federer defeats McEnroe in a hypothetical

match is 2.128/(2.128+1.104) =0.6584, even though the two had very similar winning rates

in reality. We remark that the correctness of this answer is limited by the assumption that the

players’ merits are fixed and may be viewed as averaged over time. Further analysis using

more sophisticated dynamic models, such as the whole history rating (Coulom (2008)), may

be more appropriate. The static model in this paper serves as a basis for further extensions.

4. Discussion. This paper provides an asymptotic theory of the MLE of the Bradley–

Terry model when comparisons between any pair of subjects are sparse. The uniform consis-

tency and asymptotic normality of the MLE are shown under, respectively, conditions (2.5)

and (2.13). Two quantities, the largest ratio of merits, M

n

, and the probability of paired com-

parison, p

n

, contribute to the accuracy of the MLE. When M

n

are bounded, we prove the

uniform consistency holds under nearly minimal condition of sparsity. The results of this

paper may have broad applications and can be further generalized to other models in the

sparse case, such as Plackett–Luce model (Luce (1959)) which fits multiple comparisons, the

Rao–Kupper model (Rao and Kupper (1967)) which allow paired comparisons with ties.

2500 HAN, YE, TAN AND CHEN

APPENDIX A: PROOF OF LEMMA 1

P

ROOF.LetE

n

denote the event that Condition A holds. We will show that under condi-

tion (2.4), P(E

c

n

) →0asn →∞, that is, the probability that the subjects in the second group

never defeat the subjects in the first group, for all partitions of subjects into two nonempty

groups, tends to 0. The proof consists of two steps. Step 1 is about estimating the number of

comparisons between the first and second groups. Step 2 is about computing the probability

of E

c

n

and its convergence to 0.

Step 1. Let ={0, 1,...,n−1} be the set of all n subjects, and let

r

denote any subset

of with r subjects. The number of comparisons between

r

and

c

r

, denoted by N

r

, can

be expressed as

N

r

=

i∈

r

,j∈

c

r

t

ij

.

Recall that t

ij

is the number comparisons between subjects i and j , which follows binomial

distribution. N

r

can be viewed as the sum of Tr(n− r) independent identically distributed

Bernoulli random variables with common probability ratio p

n

.

We first estimate N

r

for a fixed r. Condition (2.4) implies log n/(np

n

) → 0asn →∞,

since M

n

≥ 1. It follows from the Chernoff bound (Chernoff (1952)) that, for a fixed r ∈

{1,...,n/2} and n>32log n/(Tp

n

),

P

min

r

N

r

≤

T

2

r(n −r)p

n

≤

r

P

N

r

≤

T

2

r(n −r)p

n

≤

n

r

sup

r

P

N

r

≤

T

2

r(n −r)p

n

≤ n

r

sup

r

P

N

r

≤

T

2

r(n −r)p

n

≤ exp

−

T

8

r(n −r)p

n

+r log n

≤ exp

−

T

16

r(n −r)p

n

≤ exp

−

T

16

(n −1)p

n

.

The next-to-last inequality holds due to n>32 log n/(Tp

n

). The definition of N

r

, implies

the symmetry: min

r

N

r

= min

n−r

N

n−r

. Therefore, for any fixed r ∈{1,...,n− 1} and

n>32log n/(Tp

n

),

P

min

|

r

|=r

N

r

≤

T

2

r(n −r)p

n

≤exp

−

T

16

(n −1)p

n

.

Next, we estimate the lower bound N

r

for all r ∈{1,...,n− 1}.LetF

n

be the event that

N

r

>Tr(n−r)p

n

/2 holds for all r =1,...,n−1 and all partitions of into

r

and

c

r

.

SPARSE BRADLEY–TERRY MODEL 2501

Then, and n>32 log n/(T p

n

),

P(F

n

) ≥ 1 −

n−1

r=1

P

min

|

r

|=r

N

r

≤

T

2

r(n −r)p

n

≥ 1 −

n−1

r=1

exp

−

T

16

(n −1)p

n

≥ 1 −exp

−

T

16

(n −1)p

n

+log(n −1)

.

Therefore, P(F

n

) → 1asn →∞, since Condition (2.4) ensures n>32 log n/(Tp

n

) for all

large n.

Step 2. Since M

n

=max

0≤i,j≤n−1

u

i

/u

j

≥1,

(A.1) max

0≤i,j≤n−1

p

ij

= max

0≤i,j≤n−1

1

1 +u

j

/u

i

≤

1

1 +1/M

n

≤

1

2

1/M

n

.

Let G

(r)

n

denote the event that Condition A fails with the first group containing r subjects.

Then, by the definition of F

n

,

P

G

(r)

n

|F

n

≤

r

max

0≤i,j≤n−1

p

ij

Tr(n−r)p

n

2

≤

r

1

2

Tr(n−r)p

n

2M

n

=

n

r

1

2

Tr(n−r)p

n

2M

n

.

Recall that E

c

n

is the event that Condition A fails. Write

P

E

c

n

|F

n

=P

n−1

r=1

G

(r)

n

F

n

=

n−1

r=1

P

G

(r)

n

|F

n

≤

n−1

r=1

n

r

1

2

Tr(n−r)p

n

2M

n

≤2

n/2

r=1

n

r

1

2

Tr(n−r)p

n

2M

n

≤2

n/2

r=1

n

r

1

2

Trnp

n

4M

n

≤2

1 +

1

2

Tnp

n

4M

n

n

−1

,

which tends to 0 as n →∞, ensured by Condition (2.4). With the law of total probability,

P

E

c

n

=P

E

c

n

|F

n

P(F

n

) +P

E

c

n

|F

c

n

P

F

c

n

,

where F

c

n

is the complementary event of F

n

.SinceP(E

c

n

|F

n

) → 0andP(F

n

) → 1asn →

∞, it follows that P(E

c

n

) →0andP(E

n

) →1asn →∞. The proof is complete.

APPENDIX B: PROOF OF UNIFORM CONSISTENCY

In this appendix, we show the proof of uniform consistency described in Theorem 2.1.

Some notations need to be introduced first.

2502 HAN, YE, TAN AND CHEN

We first make a transformation on ˆu

i

for i = 0,...,n− 1. Let ˜u

j

=ˆu

j

/(max

i

ˆu

i

/u

i

).As

a result, max

0≤i≤n−1

˜u

i

/u

i

=1andweset ˜u

i

0

/u

i

0

=1. In addition, let

(B.1)

K =

2logn

log(np

n

)

,φ

n

=

log(np

n

)

log n

,q

n

=

φ

n

M

n

(1 +M

n

)

2

T +φ

n

M

n

,

C

i

={j : t

ij

> 0},d

ij

=I(t

ij

> 0), d

i

=

n−1

j=0

d

ij

and t

i

=

n−1

j=0

t

ij

,

where · is the floor function and I(·) is the indicator function. We also need a sequence of

increasing number {D

k

}

K

k=1

to present the level of the closeness in terms of the ratios,

D

k

=β(1 +φ

n

)

k

M

n

n

for k = 0,...,K −1,

D

K

=40βT (1 + φ

n

)

K

M

2

n

n

,

and a sequence of nested or increasing sets {A

k

}

K

k=1

to collect the subjects which have ratios

close to max

i

ˆu

i

/u

i

in the level of D

k

,

(B.2)

A

k

=

j :

˜u

j

u

j

≥1 −β(1 +φ

n

)

k

M

n

n

for k = 0,...,K −1,

A

K

=

j :

˜u

j

u

j

≥1 −40βT (1 +φ

n

)

K

M

2

n

n

,

where the constant β = 20T .

To prove Theorem 2.1, we need four additional lemmas, whose proofs are given after the

proof of Theorem 2.1. For ease of illustration, we use the sentence that “for all large n,the

condition S

n

holds” to stand for that there exists n

0

such that S

n

holds for all n>n

0

.

L

EMMA 2. Assume condition (2.5) holds. For all large n,

(B.3) P

max

0≤i≤n−1

Z

+

i

−Z

−

i

< 2

log n

np

n

≥1 −3n

−3

,

where

(B.4)

Z

+

i

=

1

t

i

j∈C

+

i

t

ij

t

ij

˜u

i

˜u

i

+˜u

j

−

t

ij

u

i

u

i

+u

j

=

1

t

i

j∈C

+

i

t

ij

˜u

i

u

j

−˜u

j

u

i

( ˜u

i

+˜u

j

)(u

i

+u

j

)

,

Z

−

i

=

1

t

i

j∈C

−

i

t

ij

t

ij

˜u

i

˜u

i

+˜u

j

−

t

ij

u

i

u

i

+u

j

=−

1

t

i

j∈C

−

i

t

ij

˜u

i

u

j

−˜u

j

u

i

( ˜u

i

+˜u

j

)(u

i

+u

j

)

,

C

+

i

=

j :

˜u

i

u

i

>

˜u

j

u

j

,j ∈ C

i

and C

−

i

=

j :

˜u

i

u

i

≤

˜u

j

u

j

,j ∈ C

i

.

L

EMMA 3. Assume condition (2.5) holds. For any i ∈A

k

where k<K−1, let

(B.5) C

∗

i

=

j :j ∈C

i

,

˜u

j

u

j

≥1 −β(1 +φ

n

)

k+1

M

n

n

.

Then for all large n,

P

C

∗

i

≥q

n

d

i

≥1 −3n

−3

,

SPARSE BRADLEY–TERRY MODEL 2503

where q

n

and d

i

are defined in (B.1). Fo r a ny i ∈ A

K−1

, let

(B.6) C

∗

i

=

j :j ∈C

i

,

˜u

j

u

j

≥1 −40βT (1 +φ

n

)

K

M

2

n

n

.

Then for all large n,

P

C

∗

i

≥

19

20

d

i

≥1 −3n

−3

.

L

EMMA 4. Assume condition (2.5) holds. Fo r a set A ⊂ , let s =|A| denote the size

of A. Define a s et B ={j :there exists i ∈ Asuch thatt

ij

> 0}. If sT < p

−1

n

, then for all large

n,

P

|B| >

1 −

4

√

log n

√

np

n

sT np

n

−s

2

T

2

np

2

n

≥1 −2n

−3sT

.

If sT = p

−1

n

, then for all large n,

P

|B| >

3

5

1 −

4

√

log n

√

np

n

n

≥1 −2n

−3sT

.

L

EMMA 5. Assume condition (2.5) holds. Recall A

k

defined in (B.2), for all large n,

P

|A

k

|≥(np

n

)

k

2

≥1 −6kn

−2

for k =0,...,K −2,(B.7)

P

|A

K−1

|≥

1

p

n

≥1 −6(K −1)n

−2

,(B.8)

and

(B.9) P

|A

K

|≥

21n

40

≥1 −6Kn

−2

.

R

EMARK 5. We give some insights to the proof of Lemma 5, which used the facts proved

in Lemmas 2 to 4. In particular, Lemma 5 is proved by mathematical induction. For illustra-

tion, we assume that u

i

= 1foralli. A

k

is exactly the set that contains subjects with esti-

mators close to the maximum estimator max

0≤i≤n−1

˜u

i

in the level of D

k

. We aim to show

there are more than n/2 subjects whose estimators are close to the maximum estimator in the

level of D

K

. In the first step, we begin with A

0

={i

0

}. With the use of Lemma 2, we know

Z

−

i

=0sothatZ

+

i

=O

p

(

√

log n/(np

n

)). It means there are some ˜u

j

very close to ˜u

i

0

,where

j ∈C

i

0

(j is the neighbor of i

0

). Moreover, Lemma 3 states the proportion of such kind of j

in C

i

0

and Lemma 4 gives the size of C

i

0

. Eventually, we put i

0

andsuchkindofj together

to generate the set A

1

whose size is obtained from Lemma 5.

Next, by Lemma 2,giveni ∈ A

1

, the relevant quantities Z

+

i

and Z

−

i

associated with sub-

jects in C

+

i

and C

−

i

are balanced. If C

−

i

has a large size, then C

∗

i

, the neighbors of i with

estimators so large to be in A

2

will automatically be large; if C

−

i

does not have a large size,

the balance of Z

+

i

and Z

−

i

dictates that those in C

+

i

would still have a large size of subset

in A

2

. The detailed arguments are given in Cases 1 and 2 in the proof of Lemma 3. Then,

we find the subjects in A

2

through the neighbors of the subjects in A

1

. We repeat the process

until the size of A

k

is larger than n/2.

2504 HAN, YE, TAN AND CHEN

B.1. Proof of Theorem 2.1.

P

ROOF. The proof mainly contains two parts. One is to show that the number of subjects

whose ratios of estimated merits and real merits ˆu

j

/u

j

are close to the largest ratio max

i

ˆu

i

/u

i

is larger than n/2. The other is to show that the number of subjects whose ratios are close to

the smallest ratio is also larger than n/2.

Step 1. Observe that (B.9), with proof given in that of Lemma 5, implies, for all large n,

(B.10) P

j :

˜u

j

u

j

≥1 −40βT (1 +φ

n

)

K

M

2

n

n

≥

21n

40

≥1 −6Kn

−2

.

Under condition (2.5), it follows that

K ≤ 2logn and (1 +φ

n

)

K

≤e

2

.

Let λ = 40βT e

2

, from (B.10), we obtain for all large n,

(B.11) P

j :

˜u

j

u

j

≥1 −λM

2

n

n

≥

21n

40

≥1 −12n

−2

log n.

Notice that ˜u

j

=ˆu

j

/(max

i

ˆu

i

/u

i

). Thus, (B.11) can be written as

P

j :

ˆu

j

/u

j

max

0≤i≤n−1

( ˆu

i

/u

i

)

≥1 −λM

2

n

n

≥

21n

40

≥1 −12n

−2

log n.

It means that with probability approaching 1 as n →∞,

(B.12)

j :

ˆu

j

/u

j

max

0≤i≤n−1

( ˆu

i

/u

i

)

≥1 −λM

2

n

n

≥

21n

40

>

n

2

.

Step 2. Next, we will show that the number of subjects whose ratios are close to the smallest

ratio is also larger than n/2.

Let ¯u

j

=ˆu

j

/(min

i

ˆu

i

/u

i

). Similar to (B.2), we define

¯

A

k

=

j :

¯u

j

u

j

≤1 +β(1 +φ

n

)

k

M

n

n

for k = 0,...,K −1,

¯

A

K

=

j :

¯u

j

u

j

≤1 +40βT (1 +φ

n

)

K

M

2

n

n

.

Compared with (B.9), we can obtain

P

|

¯

A

K

|≥

21n

40

≥1 −6Kn

−2

with the similar proof of Lemma 2 to 5. Similar to Step 1, that with probability approaching

1asn →∞,

(B.13)

j :

ˆu

j

/u

j

min

0≤i≤n−1

( ˆu

i

/u

i

)

≤1 +λM

2

n

n

≥

21n

40

>

n

2

.

Combining ˆu

0

=u

0

=1, (B.12)and(B.13), it can be shown that with probability approaching

1asn →∞,

1 −λM

2

n

n

1 +λM

2

n

n

≤ min

0≤i≤n−1

ˆu

i

u

i

≤ max

0≤i≤n−1

ˆu

i

u

i

≤

1 +λM

2

n

n

1 −λM

2

n

n

.

Consequently,

max

0≤i≤n−1

ˆu

i

u

i

−1

≤

2λM

2

n

n

1 −λM

2

n

n

.

SPARSE BRADLEY–TERRY MODEL 2505

Since M

2

n

n

→0asn →∞, with probability approaching 1,

max

0≤i≤n−1

ˆu

i

u

i

−1

→0asn →∞.

Except for the deferred proof of Lemma 2 to 5, the proof of Theorem 2.1 is complete.

B.2. Proof of Lemma 2.

P

ROOF. The proof of Lemma 2 contains three steps. In the first step, we find the upper

bound of |a

i

− E(a

i

|t

ij

, 0 ≤ j ≤ n − 1)| for fixed i. In the second step, we find the upper

bound of |Z

+

i

− Z

−

i

| for fixed i through the first step. In the third step, we find the uniform

upper bound of |Z

+

i

−Z

−

i

| for i =0,...,n−1.

Step 1. Recall that t

i

=

0≤j≤n−1

t

ij

and a

i

is the total number of wins of subject i in t

i

comparisons. Since the outcome of each comparison is independent of other comparisons, a

i

is the sum of m

i

independent Bernoulli random variables given t

ij

=m

ij

,forj =0,...,n−

1, where m

i

=

n−1

j=0

m

ij

. With the use of Hoeffding’s inequality (Hoeffding (1963)), we have

P

a

i

−E(a

i

|t

ij

, 0 ≤j ≤n −1)

≥

2Tt

i

log n|t

ij

=m

ij

, 0 ≤ j ≤n −1

=P

a

i

−E(a

i

|t

ij

, 0 ≤j ≤n −1)

≥

2Tm

i

log n|t

ij

=m

ij

, 0 ≤j ≤n −1

≤exp

−(4Tm

i

log n)/m

i

=2n

−4T

≤2n

−4

,

where E(a

i

|t

ij

, 0 ≤ j ≤ n − 1) is the conditional expectation given t

ij

for 0 ≤ j ≤ n − 1.

Note that the upper bound of the above probability does not depend on m

ij

. With the law of

total probability, for fixed i,

P

a

i

−E(a

i

|t

ij

, 0 ≤j ≤n −1)

≥

2Tt

i

log n

=

T

m

i0

=0

···

T

m

i,n−1

=0

P(t

ij

=m

ij

, 0 ≤j ≤n −1)

×P

a

i

−E(a

i

|t

ij

, 0 ≤j ≤n −1)

≥

2Tt

i

log n|t

ij

=m

ij

, 0 ≤j ≤n −1

≤2n

−4

T

m

i0

=0

···

T

m

i,n−1

=0

P(t

ij

=m

ij

, 0 ≤j ≤n −1)

=2n

−4

.

Thus with probability at least 1 −2n

−4

,foranyfixedi,

(B.14)

a

i

−E(a

i

|t

ij

, 0 ≤j ≤n −1)

<

2Tt

i

log n.

Step 2. Recall that the maximum likelihood estimator ˆu

i

satisfies

a

i

=

n−1

j=0

a

ij

=

n−1

j=0

t

ij

ˆu

i

ˆu

i

+ˆu

j

.

Since ˜u

j

=ˆu

j

/(max

i

ˆu

i

/u

i

), we can rewrite the above equation as,

a

i

=

n−1

j=0

a

ij

=

n−1

j=0

t

ij

˜u

i

˜u

i

+˜u

j

.

2506 HAN, YE, TAN AND CHEN

Then,

a

i

−E(a

i

|t

ij

, 0 ≤j ≤n −1) =

n−1

j=0

t

ij

˜u

i

˜u

i

+˜u

j

−

t

ij

u

i

u

i

+u

j

=t

i

Z

+

i

−Z

−

i

.

Basedon(B.14), we can obtain with probability at least 1 −2n

−4

,

Z

+

i

−Z

−

i

<

2T logn

t

i

.

Step 3. We first find the uniform lower bound of t

i

for i = 0,...,n − 1. Notice that t

i

is the sum of n independent and identically distributed (i.i.d.) binomial random variables,

Bin(T , p

n

). It can be also regarded as the sum of Tni.i.d. Bernoulli random variables. With

the use of Chernoff bound (Chernoff (1952)), we have

P

min

0≤i≤n−1

t

i

<

T

2

np

n

≤

n−1

i=0

P

t

i

<

T

2

np

n

≤n exp

−

T

12

np

n

.

Thus, with probability at least 1 −n exp{−(T np

n

)/12},

(B.15) min

0≤i≤n−1

t

i

≥

T

2

np

n

which means that t

i

=O

p

(np

n

). According to the result of Step 2,

P

max

0≤i≤n−1

Z

+

i

−Z

−

i

≥

2T logn/ min

0≤i≤n−1

t

i

≤

n−1

i=0

P

Z

+

i

−Z

−

i

≥

2T logn/t

i

≤n ×2n

−4

=2n

−3

.

By (B.15), with probability 1 −2n

−3

−n exp{−(T np

n

)/12},

max

0≤i≤n−1

Z

+

i

−Z

−

i

< 2

log n

np

n

.

Meanwhile, M

2

n

n

→0asn →∞implies (log n)/(np

n

) →0asn →∞. Therefore,

n exp

−

Tnp

n

12

<n

−3

,

for all large n. As a result, with probability at least 1 −3n

−3

,

max

0≤i≤n−1

Z

+

i

−Z

−

i

< 2

log n

np

n

.

We complete the proof.

B.3. Proof of Lemma 3.

P

ROOF. We first consider the case when k<K−1. Recall the definition of A

k

is given

in (B.2). For any i ∈A

k

, we aim to show that for all large n, with probability at least 1−3n

−3

,

C

∗

i

≥q

n

d

i

,

where C

∗

i

is defined in (B.5). Observe that C

−

i

⊂C

∗

i

for any i ∈A

k

.

SPARSE BRADLEY–TERRY MODEL 2507

Case 1. If |C

−

i

|≥q

n

d

i

,thenwehave

C

∗

i

≥

C

−

i

≥q

n

d

i

.

Case 2. If |C

−

i

| <q

n

d

i

,weset|C

−

i

|=αd

i

,whereα<q

n

. We need to show that for all

large n, with probability at least 1 −3n

−3

,

j :j ∈C

+

i

,

˜u

j

u

j

> 1 −β(1 +φ

n

)

k+1

M

n

n

≥(q

n

−α)d

i

.

We use Lemma 2 to prove the above inequality and our proof contains three steps. Recall that

Z

+

i

and Z

−

i

defined in (B.4). The first step is to find the lower bound of Z

+

i

and the upper

bound of Z

−

i

. The second step is to show that the number of subjects who have comparisons

with i and close ratios to ˆu

i

/u

i

is larger than (q

n

−α)d

i

,thatis,

j :

˜u

j

/u

j

˜u

i

/u

i

> 1 −βφ

n

(1 +φ

n

)

k

M

n

n

,j ∈ C

+

i

≥(q

n

−α)d

i

.

The last step is to prove the subject j included in the above set belongs to C

∗

i

.

Step 1. For Z

−

i

,wehave

Z

−

i

=−

1

t

i

j∈C

−

i

˜u

i

u

j

−˜u

j

u

i

( ˜u

i

+˜u

j

)(u

i

+u

j

)

·t

ij

=

1

t

i

j∈C

−

i

˜u

j

/u

j

−˜u

i

/u

i

( ˜u

i

/u

i

+˜u

j

/u

j

×u

j

/u

i

)(1 +u

i

/u

j

)

·t

ij

.

Since M

2

n

n

→0asn →∞,

β(1 +φ

n

)

k

M

n

n

≤βe

2

M

n

n

≤

1

2

for all large n. It can be given that for i ∈A

k

, j ∈C

−

i

and for all large n,

1

2

≤1 −β(1 +φ

n

)

k

M

n

n

≤˜u

i

/u

i

≤˜u

j

/u

j

≤1.

Therefore, for all large n,

Z

−

i

≤

1

t

i

j∈C

−

i

2t

ij

1 −(1 −β(1 + φ

n

)

k

M

n

n

)

(1 +u

i

/u

j

)(1 +u

j

/u

i

)

≤

αβ

2

(1 +φ

n

)

k

M

n

n

.

The last inequality is from

4 ≤

1 +

u

i

u

j

1 +

u

j

u

i

≤

(1 +M

n

)

2

M

n

.

Similarly, for Z

+

i

, we obtain

Z

+

i

=

1

t

i

j∈C

+

i

t

ij

˜u

i

u

j

−˜u

j

u

i

( ˜u

i

+˜u

j

)(u

i

+u

j

)

=

1

t

i

j∈C

+

i

t

ij

˜u

i

/u

i

−˜u

j

/u

j

( ˜u

i

/u

i

+˜u

j

/u

j

×u

j

/u

i

)(1 +u

i

/u

j

)

≥

M

n

(1 +M

n

)

2

Td

i

j∈C

+

i

1 −

˜u

j

/u

j

˜u

i

/u

i

.

2508 HAN, YE, TAN AND CHEN

From above, we have bounds for Z

−

i

and Z

+

i

respectively, for all large n,

(B.16) Z

−

i

≤

αβ

2

(1 +φ

n

)

k

M

n

n

,Z

+

i

≥

M

n

(1 +M

n

)

2

Td

i

j∈C

+

i

1 −

˜u

j

/u

j

˜u

i

/u

i

.

Step 2. AccordingtoLemma2,wehaveforalllargen, with probability at least 1 −3n

−3

,

Z

+

i

−

αβ

2

(1 +φ

n

)

k

M

n

n

≤Z

+

i

−Z

−

i

≤2

log n

np

n

Since

n

φ

n

=

√

log n/(np

n

), for all large n, with probability at least 1 −3n

−3

,

Z

+

i

≤

αβ

2

(1 +φ

n

)

k

M

n

n

+2

log n

np

n

=

αβ

2

(1 +φ

n

)

k

M

n

+2φ

n

n

.

Then, from (B.16), it follows that for all large n, with probability at least 1 − 3n

−3

,

M

n

(1 +M

n

)

2

Td

i

j∈C

+

i

1 −

˜u

j

/u

j

˜u

i

/u

i

≤

αβ

2

(1 +φ

n

)

k

M

n

+2φ

n

n

.

Notice that |C

+

i

|=|C

i

|−|C

−

i

|=(1 −α)d

i

, we can rewrite the above inequality as for all

large n, with probability at least 1 −3n

−3

,

1

|C

+

i

|

j∈C

+

i

1 −

˜u

j

/u

j

˜u

i

/u

i

≤

T(1 + M

n

)

2

M

n

(1 −α)

αβ

2

(1 +φ

n

)

k

M

n

+2φ

n

n

.

Set the xth percentile of {( ˜u

j

/u

j

)/( ˜u

i

/u

i

) : j ∈ C

+

i

} to be b

x

,wherex = (1 − q

n

)/(1 − α).

As

1

|C

+

i

|

j∈C

+

i

1 −

˜u

j

/u

j

˜u

i

/u

i

=1 −

1

|C

+

i

|

j∈C

+

i

˜u

j

/u

j

˜u

i

/u

i

,

it follows that for all large n, with probability at least 1 −3n

−3

,

1

|C

+

i

|

j∈C

+

i

˜u

j

/u

j

˜u

i

/u

i

≥ 1 −

T(1 +M

n

)

2

M

n

(1 −α)

αβ

2

(1 +φ

n

)

k

M

n

+2φ

n

n

,

1 −x + b

x

x ≥ 1 −

T(1 +M

n

)

2

M

n

(1 −α)

αβ

2

(1 +φ

n

)

k

M

n

+2φ

n

n

,

b

x

≥ 1 −

T(1 +M

n

)

2

M

n

(1 −α)x

αβ

2

(1 +φ

n

)

k

M

n

+2φ

n

n

.

Since x = (1 −q

n

)/(1 − α),0≤ α<q

n

,whereq

n

is defined in (B.1), we have for all large

n, with probability at least 1 −3n

−3

,

b

x

≥ 1 −

T(1 +M

n

)

2

M

n

(1 −q

n

)

αβ

2

(1 +φ

n

)

k

M

n

+2φ

n

n

> 1 −

T(1 +M

n

)

2

M

n

(1 −q

n

)

q

n

β

2

(1 +φ

n

)

k

M

n

+2φ

n

n

SPARSE BRADLEY–TERRY MODEL 2509

≥ 1 −

(1 +M

n

)

2

T +φ

n

M

n

M

n

φ

n

M

n

β

2(1 +M

n

)

2

T +2φ

n

M

n

(1 +φ

n

)

k

M

n

+2φ

n

n

≥ 1 −

β

2

φ

n

(1 +φ

n

)

k

M

n

+

(1 +M

n

)

2

T +φ

n

M

n

M

n

×2φ

n

n

≥ 1 −

β

2

φ

n

(1 +φ

n

)

k

M

n

+8TM

n

φ

n

+2φ

2

n

n

.

Given β =20T , we can rewrite above inequality as

b

x

> 1 −βφ

n

(1 +φ

n

)

k

M

n

n

,

which means

j :

˜u

j

/u

j

˜u

i

/u

i

> 1 −βφ

n

(1 +φ

n

)

k

M

n

n

,j ∈ C

+

i

≥(1 −x)(1 −α)d

i

=(q

n

−α)d

i

.

Step 3. Since i ∈ A

k

={j : ( ˜u

j

/u

j

) ≥ 1 − β(1 +φ

n

)

k

M

n

n

},foranyj ∈{j : ( ˜u

j

/u

j

)/

( ˜u

i

/u

i

)>1 − βφ

n

(1 + φ

n

)

k

M

n

n

,j ∈ C

+

i

}, it follows that for all large n, with probability

at least 1 −3n

−3

,

˜u

j

u

j

>

1 −βφ

n

(1 +φ

n

)

k

M

n

n

1 −β(1 +φ

n

)

k

M

n

n

≥ 1 −βφ

n

(1 +φ

n

)

k

M

n

n

−β(1 +φ

n

)

k

M

n

n

= 1 −β(1 +φ

n

)

k+1

M

n

n

,

which implies

j :

˜u

j

u

j

> 1 −β(1 +φ

n

)

k+1

M

n

n

,j ∈ C

+

i

≥(q

n

−α)d

i

.

Therefore, for all large n, with probability at least 1 −3n

−3

,

C

∗

i

≥

C

−

i

+

j :

˜u

j

u

j

> 1 −β(1 +φ

n

)

k+1

M

n

n

,j ∈ C

+

i

≥ αd

i

+(q

n

−α)d

i

= q

n

d

i

.

For k = K − 1, we can obtain the result with the same proof as above except replacing q

n

with 19/20. Hence, for all large n, with probability at least 1 −3n

−3

,

C

∗

i

=

j :j ∈C

i

,

˜u

j

u

j

≥1 −40βT (1 + φ

n

)

K

M

2

n

n

≥

19

20

d

i

.

The proof is complete.

B.4. Proof of Lemma 4.

P

ROOF. We present the proof with two steps. The first step is to find the lower bound of

|B| when A is a deterministic set. The second step is to extend the result of the first step to

the case when A is a random set.

Step 1. Let A be a nonrandom set with size s ≤ (Tp

n

)

−1

.Foranyj ∈ ,

P(t

ij

=0foralli ∈ A) = (1 − p

n

)

sT

.

2510 HAN, YE, TAN AND CHEN

Set y = 1 −(1 − p

n

)

sT

and η

j

= I(j ∈ B).Soη

j

= 1ifj has a comparison with someone

in A,otherwiseη

j

=0. We know that P(η

j

=1) =y.

Since M

2

n

n

→0asn →∞,4

√

log n<

√

np

n

for all large n. Thus, with Chernoff bound

(Chernoff (1952)), for all large n,

P

|B|≤

1 −

4

√

log n

√

np

n

ny

≤ 2exp

−

16ny logn

2np

n

= 2exp

−

8(1 −exp(sT log(1 −p

n

))) log n

p

n

≤ 2exp

−

8(1 −exp(−sTp

n

)) log n

p

n

≤ 2exp{−4sT log n}.

Here, the second and third inequalities are based on log(1−x) ≤−x and x ≤ 2(1−exp(−x))

respectively when 0 <x<1.

Step 2. For any set A ⊂ with size s and all large n, it follows that

P

min

|A|=s

{j :there exists i ∈A such that t

ij

> 0}

≤

1 −

4

√

log n

√

np

n

ny

≤

|A|=s

P

{j :there exists i ∈A such that t

ij

> 0}

≤

1 −

4

√

log n

√

np

n

ny

≤2

n

s

exp{−4sT logn}

≤2n

s

exp{−4sT logn}

≤2n

−3sT

.

In summary,

P

min

|A|=s

{j :there exists i ∈Asuch thatt

ij

> 0}

≤

1 −

4

√

log n

√

np

n

ny

≤2n

−3sT

→0asn →∞.

Since for any set A with the size s,

|B|≥ min

|A|=s

{j :there exists i ∈A such that t

ij

> 0}

.

Thus, for all large n, with probability at least 1 −2n

−3sT

,

|B| >

1 −

4

√

log n

√

np

n

ny .

Recall that y = 1 − (1 − p

n

)

sT

,forsT < p

−1

n

,

ny =n

1 −(1 −p

n

)

sT

≥sT np

n

−s

2

T

2

np

2

n

,

while for sT = p

−1

n

,

ny =n

1 −(1 −p

n

)

sT

≥n

1 −e

−1

>

3

5

n.

The proof is complete.

SPARSE BRADLEY–TERRY MODEL 2511

B.5. Proof of Lemma 5.

P

ROOF. Our aim is to show that there exists a uniform constant C such that when n>C,

(B.7), (B.8)and(B.9) hold. C is defined as the maximum of n which does not satisfy any

following inequalities,

(B.17)

n

−3

>nexp

−

Tnp

n

12

,

√

np

n

> 4

log n,

βe

2

M

n

n

≤

1

2

and q

n

√

np

n

−8

log n>2,

where q

n

is defined in (B.1)andβ is defined in (B.9). Since M

2

n

n

→0asn →∞, it ensures

the existence of C. Then, for any n>C, n satisfies all inequalities in (B.17).

We show Lemma 5 by mathematical induction.

(1) For k = 0, it is obvious that

|A

0

|≥

{i

0

}

=(np

n

)

0

=1.

Therefore, we obtain

P

|A

0

|≥(np

n

)

0

≥1.

(2) For k<K− 2, let

A

k

denote the event that |A

k

|≥(np

n

)

k/2

happens. Assume that

when n>C,

P(

A

k

) ≥1 −6kn

−2

.

Then we proceed under the condition that event

A

k

happens. Without loss of generality, let

|A

k

|=(np

n

)

k/2

/T , otherwise we consider any of its subsets with size (np

n

)

k/2

/T .

For any i ∈ A

k

,wehaveC

∗

i

⊂A

k+1

. Hence

i∈A

k

C

∗

i

⊂A

k+1

. Consequently,

(B.18) |A

k+1

|≥

i∈A

k

C

∗

i

≥

i∈A

k

C

i

−

i∈A

k

C

i

\C

∗

i

.

Next we estimate

i∈A

k

|C

i

\ C

∗

i

| and |

i∈A

k

C

i

| by Lemma 3 and Lemma 4 respectively.

Note that

i∈A

k

C

i

={j : j has a comparsion with anyone in A

k

} and

(B.19) |A

k

|=

(np

n

)

k

2

T

≤

(np

n

)

K−3

2

T

≤

(np

3

n

)

−

1

2

T

.

BasedonLemma4, we know that when n>C, with probability at least 1 −2n

−3T |A

k

|

,

(B.20)

i∈A

k

C

i

≥

1 −

4

√

log n

√

np

n

(np

n

)

k

2

+1

−(np

n

)

k

p

2

n

n

.

Meanwhile, from Lemma 3, we know for i ∈ A

k

,whenn>C, with probability at least

1 −3n

−3

,

C

i

\C

∗

i

≤(1 −q

n

)d

i

,

where q

n

and d

i

are defined in (B.1). As a result, when n>C, with probability at least

1 −3|A

k

|n

−3

,

(B.21)

i∈A

k

C

i

\C

∗

i

≤(1 −q

n

)

i∈A

k

d

i

.

2512 HAN, YE, TAN AND CHEN

Based on the Chernoff bound (Chernoff (1952)), the range of d

i

is given as

P

d

i

≥

1 +

4

√

log n

√

np

n

Tnp

n

≤ P

d

i

≥

1 +

4

√

log n

√

np

n

E(d

i

)

≤ exp

−

16

3

log n

,

where the first inequality is from E(d

ij

) =1 −(1 −p

n

)

T

≤Tp

n

. Consequently,

P

max

0≤i≤n−1

d

i

≥

1 +

4

√

log n

√

np

n

Tnp

n

≤n exp

−

16

3

log n

≤n

−4

,

which implies

max

0≤i≤n−1

d

i

<

1 +

4

√

log n

√

np

n

Tnp

n

,

with probability at least 1 − n

−4

. So we can rewrite (B.21)aswhenn>C, with probability

at least 1 −3|A

k

|n

−3

−n

−4

,

(B.22)