Journal of Machine Learning Research 13 (2012) 2617-2654 Submitted 8/11; Revised 5/12; Published 9/12

Static Prediction Games for Adversarial Learning Problems

Michael Brückner MIBRUECK@CS.UNI-POTSDAM.DE

Department of Computer Science

University of Potsdam

August-Bebel-Str. 89

14482 Potsdam, Germany

Christian Kanzow KANZOW@MATHEMATIK.UNI-WUERZBURG.DE

Institute of Mathematics

University of Würzburg

Emil-Fischer-Str. 30

97074 Würzburg, Germany

Tobias Scheffer SCHEFFER@CS.UNI-POTSDAM.DE

Department of Computer Science

University of Potsdam

August-Bebel-Str. 89

14482 Potsdam, Germany

Editor: Nicolò Cesa-Bianchi

Abstract

The standard assumption of identically distributed training and test data is violated when the test data are generated in response to the presence of a predictive model. This becomes apparent, for example, in the context of email spam filtering. Here, email service providers employ spam filters, and spam senders engineer campaign templates to achieve a high rate of successful deliveries despite the filters. We model the interaction between the learner and the data generator as a static game in which the cost functions of the learner and the data generator are not necessarily antagonistic. We identify conditions under which this prediction game has a unique Nash equilibrium and derive algorithms that find the equilibrial prediction model. We derive two instances, the Nash logistic regression and the Nash support vector machine, and empirically explore their properties in a case study on email spam filtering.

Keywords: static prediction games, adversarial classification, Nash equilibrium

1. Introduction

A common assumption on which most learning algorithms are based is that training and test data

are governed by identical distributions. However, in a variety of applications, the distribution that

governs data at application time may be influenced by an adversary whose interests are in conflict

with those of the learner. Consider, for instance, the following three scenarios. In computer and

network security, scripts that control attacks are engineered with botnet and intrusion detection

systems in mind. Credit card defrauders adapt their unauthorized use of credit cards—in particular,

amounts charged per transactions and per day and the type of businesses that amounts are charged

from—to avoid triggering alerting mechanisms employed by credit card companies. Email spam

senders design message templates that are instantiated by nodes of botnets. These templates are specifically designed to produce a low spam score with popular spam filters. The domain of email spam filtering will serve as a running example throughout the paper. In all of these applications, the party that creates the predictive model and the adversarial party that generates future data are aware of each other, and factor the possible actions of their opponent into their decisions.

© 2012 Michael Brückner, Christian Kanzow and Tobias Scheffer.

The interaction between learner and data generators can be modeled as a game in which one

player controls the predictive model whereas another exercises some control over the process of

data generation. The adversary’s influence on the generation of the data can be formally modeled as

a transformation that is imposed on the distribution that governs the data at training time. The trans-

formed distribution then governs the data at application time. The optimization criterion of either

player takes as arguments both the predictive model chosen by the learner and the transformation

carried out by the adversary.

Typically, this problem is modeled under the worst-case assumption that the adversary desires

to impose the highest possible costs on the learner. This amounts to a zero-sum game in which

the loss of one player is the gain of the other. In this setting, both players can maximize their

expected outcome by following a minimax strategy. Lanckriet et al. (2002) study the minimax

probability machine (MPM). This classifier minimizes the maximal probability of misclassifying

new instances for a given mean and covariance matrix of each class. Geometrically, these class

means and covariances define two hyper-ellipsoids which are equally scaled such that they intersect;

their common tangent is the minimax probabilistic decision hyperplane. Ghaoui et al. (2003) derive

a minimax model for input data that are known to lie within some hyper-rectangles around the

training instances. Their solution minimizes the worst-case loss over all possible choices of the data

in these intervals. Similarly, worst-case solutions to classification games in which the adversary

deletes input features (Globerson and Roweis, 2006; Globerson et al., 2009) or performs an arbitrary

feature transformation (Teo et al., 2007; Dekel and Shamir, 2008; Dekel et al., 2010) have been

studied.

Several applications motivate problem settings in which the goals of the learner and the data

generator, while still conflicting, are not necessarily entirely antagonistic. For instance, a defrauder’s

goal of maximizing the profit made from exploiting phished account information is not the inverse

of an email service provider’s goal of achieving a high spam recognition rate at close-to-zero false

positives. When playing a minimax strategy, one often makes overly pessimistic assumptions about

the adversary’s behavior and may not necessarily obtain an optimal outcome.

Games in which a leader—typically, the learner—commits to an action first whereas the adver-

sary can react after the leader’s action has been disclosed are naturally modeled as a Stackelberg

competition. This model is appropriate when the follower—the data generator—has full informa-

tion about the predictive model. This assumption is usually a pessimistic approximation of reality

because, for instance, neither email service providers nor credit card companies disclose a com-

prehensive documentation of their current security measures. Stackelberg equilibria of adversarial

classification problems can be identified by solving a bilevel optimization problem (Brückner and Scheffer, 2011).

This paper studies static prediction games in which both players act simultaneously; that is,

without prior information on their opponent’s move. When the optimization criterion of both play-

ers depends not only on their own action but also on their opponent’s move, then the concept of

a player’s optimal action is no longer well-defined. Therefore, we resort to the concept of a Nash

equilibrium of static prediction games. A Nash equilibrium is a pair of actions chosen such that

no player benefits from unilaterally selecting a different action. If a game has a unique Nash equilibrium and is played by rational players that aim at maximizing their optimization criteria, it is

reasonable for each player to assume that the opponent will play according to the Nash equilib-

rium strategy. If one player plays according to the equilibrium strategy, the optimal move for the

other player is to play this equilibrium strategy as well. If, however, multiple equilibria exist and

the players choose their strategy according to distinct ones, then the resulting combination may be

arbitrarily disadvantageous for either player. It is therefore interesting to study whether adversarial

prediction games have a unique Nash equilibrium.

Our work builds on an approach that Brückner and Scheffer (2009) developed for finding a Nash equilibrium of a static prediction game. We will discuss a flaw in Theorem 1 of Brückner and Scheffer (2009) and develop a revised version of the theorem that identifies conditions under which a unique Nash equilibrium of a prediction game exists. In addition to the inexact linesearch approach to finding the equilibrium that Brückner and Scheffer (2009) develop, we will follow a

modified extragradient approach and develop Nash logistic regression and the Nash support vector

machine. This paper also develops a kernelized version of these methods. An extended empirical

evaluation explores the applicability of the Nash instances in the context of email spam filtering.

We empirically verify the assumptions made in the modeling process and compare the performance

of Nash instances with baseline methods on several email corpora including a corpus from an email

service provider.

The rest of this paper is organized as follows. Section 2 introduces the problem setting. We

formalize the Nash prediction game and study conditions under which a unique Nash equilibrium

exists in Section 3. Section 4 develops strategies for identifying equilibrial prediction models, and

in Section 5, we detail two instances of the Nash prediction game. In Section 6, we report on

experiments on email spam filtering; Section 7 concludes.

2. Problem Setting

We study static prediction games between two players: The learner (v = −1) and an adversary, the

data generator (v = +1). In our running example of email spam filtering, we study the competition

between recipient and senders, not competition among senders. Therefore, v = −1 refers to the

recipient whereas v = +1 models the entirety of all legitimate and abusive email senders as a single,

amalgamated player.

At training time, the data generator $v = +1$ produces a sample $D = \{(x_i, y_i)\}_{i=1}^{n}$ of $n$ training instances $x_i \in \mathcal{X}$ with corresponding class labels $y_i \in \mathcal{Y} = \{-1,+1\}$. These object-class pairs are drawn according to a training distribution with density function $p(x,y)$. By contrast, at application time the data generator produces object-class pairs according to some test distribution with density $\dot{p}(x,y)$ which may differ from $p(x,y)$.

The task of the learner $v = -1$ is to select the parameters $w \in \mathcal{W} \subset \mathbb{R}^m$ of a predictive model $h(x) = \operatorname{sign} f_w(x)$ implemented in terms of a generalized linear decision function $f_w : \mathcal{X} \to \mathbb{R}$ with $f_w(x) = w^{\mathsf T}\phi(x)$ and feature mapping $\phi : \mathcal{X} \to \mathbb{R}^m$. The learner’s theoretical costs at application time are given by

$$\theta_{-1}(w, \dot{p}) = \sum_{\mathcal{Y}} \int_{\mathcal{X}} c_{-1}(x,y)\, \ell_{-1}(f_w(x), y)\, \dot{p}(x,y)\, \mathrm{d}x,$$

where weighting function $c_{-1} : \mathcal{X} \times \mathcal{Y} \to \mathbb{R}$ and loss function $\ell_{-1} : \mathbb{R} \times \mathcal{Y} \to \mathbb{R}$ compose the weighted loss $c_{-1}(x,y)\,\ell_{-1}(f_w(x),y)$ that the learner incurs when the predictive model classifies instance $x$ as $h(x) = \operatorname{sign} f_w(x)$ while the true label is $y$. The positive class- and instance-specific weighting factors $c_{-1}(x,y)$ with $\mathbb{E}_{X,Y}[c_{-1}(x,y)] = 1$ specify the importance of minimizing the loss $\ell_{-1}(f_w(x),y)$ for the corresponding object-class pair $(x,y)$. For instance, in spam filtering, the correct classification of non-spam messages can be business-critical for email service providers while failing to detect spam messages runs up processing and storage costs, depending on the size of the message.

The data generator $v = +1$ can modify the data generation process at application time. In practice, spam senders update their campaign templates which are disseminated to the nodes of botnets. Formally, the data generator transforms the training distribution with density $p$ to the test distribution with density $\dot{p}$. By modifying the data generation process, the data generator incurs transformation costs, which are quantified by $\Omega_{+1}(p, \dot{p})$. This term acts as a regularizer on the transformation and

may implicitly constrain the possible difference between the distributions at training and application

time, depending on the nature of the application that is to be modeled. For instance, the email sender

may not be allowed to alter the training distribution for non-spam messages, or to modify the nature

of the messages by changing the label from spam to non-spam or vice versa. Additionally, changing

the training distribution for spam messages may incur costs depending on the extent of distortion

inflicted on the informational payload. The theoretical costs of the data generator at application

time are the sum of the expected prediction costs and the transformation costs,

$$\theta_{+1}(w, \dot{p}) = \sum_{\mathcal{Y}} \int_{\mathcal{X}} c_{+1}(x,y)\, \ell_{+1}(f_w(x), y)\, \dot{p}(x,y)\, \mathrm{d}x + \Omega_{+1}(p, \dot{p}),$$

where, in analogy to the learner’s costs, $c_{+1}(x,y)\,\ell_{+1}(f_w(x),y)$ quantifies the weighted loss that the data generator incurs when instance $x$ is labeled as $h(x) = \operatorname{sign} f_w(x)$ while the true label is $y$. The weighting factors $c_{+1}(x,y)$ with $\mathbb{E}_{X,Y}[c_{+1}(x,y)] = 1$ express the significance of $(x,y)$ from

the perspective of the data generator. In our example scenario, this reflects that costs of correctly or

incorrectly classified instances may vary greatly across different physical senders that are aggregated

into the amalgamated player.

Since the theoretical costs of both players depend on the test distribution, they cannot, for all practical purposes, be calculated. Hence, we focus on a regularized, empirical counterpart of the theoretical costs based on the training sample $D$. The empirical counterpart $\hat{\Omega}_{+1}(D, \dot{D})$ of the data generator’s regularizer $\Omega_{+1}(p, \dot{p})$ penalizes the divergence between training sample $D = \{(x_i, y_i)\}_{i=1}^{n}$ and a perturbed training sample $\dot{D} = \{(\dot{x}_i, y_i)\}_{i=1}^{n}$ that would be the outcome of applying the transformation that translates $p$ into $\dot{p}$ to sample $D$. The learner’s cost function, instead of integrating over $\dot{p}$, sums over the elements of the perturbed training sample $\dot{D}$. The players’ empirical cost functions can still only be evaluated after the learner has committed to parameters $w$ and the data generator to a transformation. However, this transformation need only be represented in terms of the effects that it will have on the training sample $D$. The transformed training sample $\dot{D}$ must not be mistaken for test data; test data are generated under $\dot{p}$ at application time after the players have committed to their actions.


The empirical costs incurred by the predictive model $h(x) = \operatorname{sign} f_w(x)$ with parameters $w$ and the shift from $p$ to $\dot{p}$ amount to

$$\hat{\theta}_{-1}(w, \dot{D}) = \sum_{i=1}^{n} c_{-1,i}\, \ell_{-1}(f_w(\dot{x}_i), y_i) + \rho_{-1}\, \hat{\Omega}_{-1}(w), \tag{1}$$

$$\hat{\theta}_{+1}(w, \dot{D}) = \sum_{i=1}^{n} c_{+1,i}\, \ell_{+1}(f_w(\dot{x}_i), y_i) + \rho_{+1}\, \hat{\Omega}_{+1}(D, \dot{D}), \tag{2}$$

where we have replaced the weighting terms $\frac{1}{n} c_v(\dot{x}_i, y_i)$ by constant cost factors $c_{v,i} > 0$ with $\sum_i c_{v,i} = 1$. The learner’s regularizer $\hat{\Omega}_{-1}(w)$ in (1) accounts for the fact that $\dot{D}$ does not constitute the test data itself, but is merely a training sample transformed to reflect the test distribution and then used to learn the model parameters $w$. The trade-off between the empirical loss and the regularizer is controlled by each player’s regularization parameter $\rho_v > 0$ for $v \in \{-1,+1\}$.

Note that either player’s empirical costs $\hat{\theta}_v$ depend on both players’ actions: $w \in \mathcal{W}$ and $\dot{D} \subseteq \mathcal{X} \times \mathcal{Y}$. Because the players’ interests potentially conflict, the decision process for $w$ and $\dot{D}$ becomes a non-cooperative two-player game, which we call a prediction game. In the following section, we will refer to the Nash prediction game (NPG) which identifies the concept of an optimal move of the learner and the data generator under the assumption of simultaneously acting players.
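The empirical cost functions (1) and (2) can be sketched in a few lines of Python. This is only an illustration under assumptions that this section does not fix: the feature mapping is taken to be the identity, both players use a logistic loss (the data generator's loss is evaluated against the label −1, i.e. it prefers every instance to be scored as non-spam), the learner's regularizer is ½‖w‖², and the data generator's regularizer is the mean of ½‖ẋ_i − x_i‖². All function names are hypothetical.

```python
import math

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def logistic_loss(z, y):
    # log(1 + exp(-y*z)), written to avoid overflow for large |z|
    t = -y * z
    return max(t, 0.0) + math.log1p(math.exp(-abs(t)))

def learner_cost(w, X_dot, y, c_minus, rho_minus):
    # Equation (1) with the ASSUMED regularizer 0.5 * ||w||^2
    fit = sum(c * logistic_loss(dot(w, xd), yi)
              for c, xd, yi in zip(c_minus, X_dot, y))
    return fit + rho_minus * 0.5 * dot(w, w)

def generator_cost(w, X, X_dot, c_plus, rho_plus):
    # Equation (2) with the ASSUMED loss (logistic loss towards label -1)
    # and the ASSUMED regularizer mean_i 0.5 * ||x_dot_i - x_i||^2
    fit = sum(c * logistic_loss(dot(w, xd), -1) for c, xd in zip(c_plus, X_dot))
    shift = sum(0.5 * sum((a - b) ** 2 for a, b in zip(xd, x))
                for xd, x in zip(X_dot, X)) / len(X)
    return fit + rho_plus * shift

# Toy data: two instances, cost factors summing to one
X = [[1.0, 2.0], [0.0, -1.0]]        # original training instances
X_dot = [[0.5, 1.0], [0.0, -1.0]]    # transformed training instances
y = [+1, -1]
c = [0.5, 0.5]
w = [0.3, -0.2]
print(learner_cost(w, X_dot, y, c, 0.1), generator_cost(w, X, X_dot, c, 0.1))
```

Both functions take the other player's action as an argument, which is what makes the choice of `w` and `X_dot` a game and not two separate optimization problems.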

3. The Nash Prediction Game

The outcome of a prediction game is one particular combination of actions $(w^*, \dot{D}^*)$ that incurs costs $\hat{\theta}_v(w^*, \dot{D}^*)$ for the players. Each player is aware that this outcome is affected by both players’ actions and that, consequently, their potential to choose an action can have an impact on the other player’s decision. In general, there is no action that minimizes one player’s cost function independent of the other player’s action. In a non-cooperative game, the players are not allowed to communicate while making their decisions and therefore they have no information about the other player’s strategy. In this setting, any concept of an optimal move requires additional assumptions on how the adversary will act.

We model the decision process for $w^*$ and $\dot{D}^*$ as a static two-player game with complete information. In a static game, both players commit to an action simultaneously, without information about their opponent’s action. In a game with complete information, both players know their opponent’s cost function and action space.

When $\hat{\theta}_{-1}$ and $\hat{\theta}_{+1}$ are known and antagonistic, the assumption that the adversary will seek the greatest advantage by inflicting the greatest damage on $\hat{\theta}_{-1}$ justifies the minimax strategy: $\arg\min_{w} \max_{\dot{D}} \hat{\theta}_{-1}(w, \dot{D})$. However, when the players’ cost functions are not antagonistic, assuming that the adversary will inflict the greatest possible damage is overly pessimistic. Instead assuming that the adversary acts rationally in the sense of seeking the greatest possible personal advantage leads to the concept of a Nash equilibrium. An equilibrium strategy is a steady state of the game in which neither player has an incentive to unilaterally change their plan of actions.
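The difference between the minimax strategy and a rational adversary can be illustrated with a tiny finite game. The cost tables below are made up for illustration and are not taken from the paper; rows index the learner's actions and columns the data generator's actions.

```python
def minimax_action(cost_learner):
    """Row that minimizes the learner's worst-case cost, and that cost."""
    worst_case = [max(row) for row in cost_learner]
    best = min(range(len(worst_case)), key=lambda a: worst_case[a])
    return best, worst_case[best]

# Hypothetical costs: cost_x[a][b] for learner action a, generator action b
cost_learner = [[2, 5],
                [3, 4]]
cost_generator = [[1, 9],
                  [1, 9]]   # not the negative of cost_learner: non-antagonistic

minimax_row, minimax_value = minimax_action(cost_learner)

# A rational generator minimizes its OWN cost; here column 0 is best
# regardless of the learner's row, so row 0 suffices to read it off.
generator_best = min(range(2), key=lambda b: cost_generator[0][b])
learner_best = min(range(2), key=lambda a: cost_learner[a][generator_best])

print("minimax row:", minimax_row, "guaranteed cost:", minimax_value)
print("best response to rational generator:", learner_best,
      "cost:", cost_learner[learner_best][generator_best])
```

In this toy game the minimax row guards against a column that the data generator has no incentive to play, so the learner pays more than necessary against the generator's actual best action.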

In static games, equilibrium strategies are called Nash equilibria, which is why we refer to the resulting predictive model as the Nash prediction game (NPG). In a two-player game, a Nash equilibrium is defined as a pair of actions such that no player can benefit from changing their action unilaterally; that is,

$$\hat{\theta}_{-1}(w^*, \dot{D}^*) = \min_{w \in \mathcal{W}} \hat{\theta}_{-1}(w, \dot{D}^*),$$

$$\hat{\theta}_{+1}(w^*, \dot{D}^*) = \min_{\dot{D} \subseteq \mathcal{X} \times \mathcal{Y}} \hat{\theta}_{+1}(w^*, \dot{D}),$$

where $\mathcal{W}$ and $\mathcal{X} \times \mathcal{Y}$ denote the players’ action spaces.
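For a game with finitely many actions, the two equilibrium conditions above reduce to a direct check of all unilateral deviations. The following sketch uses hypothetical cost tables and a finite action set purely for illustration; the prediction game itself has continuous action spaces.

```python
def is_nash(cost_learner, cost_generator, i, j):
    """True if (i, j) is a pure Nash equilibrium of a finite two-player cost game.

    cost_x[a][b] is the cost of player x when the learner plays row a and the
    data generator plays column b. Both players minimize their own cost.
    """
    learner_ok = all(cost_learner[i][j] <= cost_learner[a][j]
                     for a in range(len(cost_learner)))
    generator_ok = all(cost_generator[i][j] <= cost_generator[i][b]
                       for b in range(len(cost_generator[i])))
    return learner_ok and generator_ok

def pure_nash_equilibria(cost_learner, cost_generator):
    rows, cols = len(cost_learner), len(cost_learner[0])
    return [(i, j) for i in range(rows) for j in range(cols)
            if is_nash(cost_learner, cost_generator, i, j)]

# Hypothetical 2x2 example
cost_learner = [[1, 3],
                [2, 4]]
cost_generator = [[2, 1],
                  [1, 2]]
print(pure_nash_equilibria(cost_learner, cost_generator))
```

The first condition is checked along a column (the learner deviates while the generator's action is fixed) and the second along a row, mirroring the two minimizations in the definition.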

However, a static prediction game may not have a Nash equilibrium, or it may possess multiple equilibria. If $(w^*, \dot{D}^*)$ and $(w', \dot{D}')$ are distinct Nash equilibria and each player decides to act according to a different one of them, then combinations $(w^*, \dot{D}')$ and $(w', \dot{D}^*)$ may incur arbitrarily high costs for both players. Hence, one can argue that it is rational for an adversary to play a Nash equilibrium only when the following assumption is satisfied.

Assumption 1 The following statements hold:

1. both players act simultaneously;

2. both players have full knowledge about both (empirical) cost functions $\hat{\theta}_v(w, \dot{D})$ defined in (1) and (2), and both action spaces $\mathcal{W}$ and $\mathcal{X} \times \mathcal{Y}$;

3. both players act rationally with respect to their cost function in the sense of securing their lowest possible costs;

4. a unique Nash equilibrium exists.

Whether Assumptions 1.1-1.3 are adequate, especially the assumption of simultaneous actions, strongly depends on the application. For example, in some applications, the data generator may unilaterally be able to acquire information about the model $f_w$ before committing to $\dot{D}$. Such situations are better modeled as a Stackelberg competition (Brückner and Scheffer, 2011). On the other hand, when the learner is able to treat any executed action as part of the training data $D$ and update the model $w$, the setting is better modeled as repeated executions of a static game with simultaneous actions. The adequacy of Assumption 1.4, which we discuss in the following sections, depends on the chosen loss functions, the cost factors, and the regularizers.
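Why uniqueness (Assumption 1.4) matters can be seen in a small coordination-type game with two equilibria. The cost tables are invented for illustration; if each player follows a different equilibrium, both end up with the worst cost in the table.

```python
def pure_nash_equilibria(cost_learner, cost_generator):
    """All pure Nash equilibria (i, j) of a finite two-player cost game."""
    rows, cols = len(cost_learner), len(cost_learner[0])
    result = []
    for i in range(rows):
        for j in range(cols):
            learner_ok = all(cost_learner[i][j] <= cost_learner[a][j] for a in range(rows))
            generator_ok = all(cost_generator[i][j] <= cost_generator[i][b] for b in range(cols))
            if learner_ok and generator_ok:
                result.append((i, j))
    return result

# Hypothetical game with two equilibria, (0, 0) and (1, 1)
cost_learner = [[0, 10],
                [10, 0]]
cost_generator = [[0, 10],
                  [10, 0]]

equilibria = pure_nash_equilibria(cost_learner, cost_generator)

# The learner plays its part of the first equilibrium,
# the data generator its part of the second one.
w_star = equilibria[0][0]
d_prime = equilibria[1][1]
mismatch = (cost_learner[w_star][d_prime], cost_generator[w_star][d_prime])
print(equilibria, mismatch)
```

Replacing the value 10 by any larger number leaves both equilibria intact while making the mismatched outcome as costly as desired.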

3.1 Existence of a Nash Equilibrium

Theorem 1 of Brückner and Scheffer (2009) identifies conditions under which a unique Nash equilibrium exists. Kanzow located a flaw in the proof of this theorem: The proof argues that the

pseudo-Jacobian can be decomposed into two (strictly) positive stable matrices by showing that the

real part of every eigenvalue of those two matrices is positive. However, this does not generally

imply that the sum of these matrices is positive stable as well since this would require a common

Lyapunov solution (cf. Problem 2.2.6 of Horn and Johnson, 1991). But even if such a solution

exists, the positive definiteness cannot be concluded from the positiveness of all eigenvalues as the

pseudo-Jacobian is generally non-symmetric.
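Both gaps named above can be reproduced with 2×2 matrices. The matrices below are a hand-picked illustration, not taken from the paper: `A` and `B` each have the double eigenvalue 1, yet their sum has a negative eigenvalue, and `A` itself is not positive definite although all of its eigenvalues are positive.

```python
import cmath

def eig2(M):
    """Eigenvalues of a 2x2 matrix via the characteristic polynomial."""
    (a, b), (c, d) = M
    trace, det = a + d, a * d - b * c
    root = cmath.sqrt(trace * trace - 4 * det)
    return ((trace - root) / 2, (trace + root) / 2)

def quad_form(M, x):
    """x^T M x for a 2x2 matrix M and a vector x of length 2."""
    return sum(x[i] * M[i][j] * x[j] for i in range(2) for j in range(2))

A = [[1.0, 4.0],
     [0.0, 1.0]]
B = [[1.0, 0.0],
     [4.0, 1.0]]
S = [[A[i][j] + B[i][j] for j in range(2)] for i in range(2)]

print("eigenvalues of A:    ", eig2(A))   # both have positive real part
print("eigenvalues of B:    ", eig2(B))   # both have positive real part
print("eigenvalues of A + B:", eig2(S))   # one eigenvalue is negative
print("x^T A x at x=(1,-1): ", quad_form(A, [1.0, -1.0]))  # negative
```

This is why the argument below works with the definiteness of the (non-symmetric) pseudo-Jacobian directly and not with the signs of its eigenvalues.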

With the prior claim thus left unproven, we will now derive sufficient conditions for the existence of a Nash equilibrium. To this end, we first define

$$\mathbf{x} := \left[\phi(x_1)^{\mathsf T}, \phi(x_2)^{\mathsf T}, \ldots, \phi(x_n)^{\mathsf T}\right]^{\mathsf T} \in \phi(\mathcal{X})^n \subset \mathbb{R}^{m \cdot n},$$

$$\dot{\mathbf{x}} := \left[\phi(\dot{x}_1)^{\mathsf T}, \phi(\dot{x}_2)^{\mathsf T}, \ldots, \phi(\dot{x}_n)^{\mathsf T}\right]^{\mathsf T} \in \phi(\mathcal{X})^n \subset \mathbb{R}^{m \cdot n},$$

as long, concatenated column vectors induced by feature mapping $\phi$, training sample $D = \{(x_i, y_i)\}_{i=1}^{n}$, and transformed training sample $\dot{D} = \{(\dot{x}_i, y_i)\}_{i=1}^{n}$, respectively. For terminological harmony, we refer to vector $\dot{\mathbf{x}}$ as the data generator’s action with corresponding action space $\phi(\mathcal{X})^n$.

We make the following assumptions on the action spaces and the cost functions, which enable us to state the main result on the existence of at least one Nash equilibrium in Lemma 1.

Assumption 2 The players’ cost functions defined in Equations 1 and 2, and their action sets $\mathcal{W}$ and $\phi(\mathcal{X})^n$ satisfy the properties:

1. loss functions $\ell_v(z,y)$ with $v \in \{-1,+1\}$ are convex and twice continuously differentiable with respect to $z \in \mathbb{R}$ for all fixed $y \in \mathcal{Y}$;

2. regularizers $\hat{\Omega}_v$ are uniformly strongly convex and twice continuously differentiable with respect to $w \in \mathcal{W}$ and $\dot{\mathbf{x}} \in \phi(\mathcal{X})^n$, respectively;

3. action spaces $\mathcal{W}$ and $\phi(\mathcal{X})^n$ are non-empty, compact, and convex subsets of finite-dimensional Euclidean spaces $\mathbb{R}^m$ and $\mathbb{R}^{m \cdot n}$, respectively.

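Lemma 1 Under Assumption 2, at least one equilibrium point $(w^*, \dot{\mathbf{x}}^*) \in \mathcal{W} \times \phi(\mathcal{X})^n$ of the Nash prediction game defined by

$$\begin{array}{llcll}
\min_{w} & \hat{\theta}_{-1}(w, \dot{\mathbf{x}}^*) & \qquad & \min_{\dot{\mathbf{x}}} & \hat{\theta}_{+1}(w^*, \dot{\mathbf{x}}) \\
\text{s.t.} & w \in \mathcal{W} & & \text{s.t.} & \dot{\mathbf{x}} \in \phi(\mathcal{X})^n
\end{array} \tag{3}$$

exists.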

Proof. Each player $v$’s cost function is a sum over $n$ loss terms resulting from loss function $\ell_v$ and regularizer $\hat{\Omega}_v$. By Assumption 2, these loss functions are convex and continuous, and the regularizers are uniformly strongly convex and continuous. Hence, both cost functions $\hat{\theta}_{-1}(w, \dot{\mathbf{x}})$ and $\hat{\theta}_{+1}(w, \dot{\mathbf{x}})$ are continuous in all arguments and uniformly strongly convex in $w \in \mathcal{W}$ and $\dot{\mathbf{x}} \in \phi(\mathcal{X})^n$, respectively. As both action spaces $\mathcal{W}$ and $\phi(\mathcal{X})^n$ are non-empty, compact, and convex subsets of finite-dimensional Euclidean spaces, a Nash equilibrium exists; see Theorem 4.3 of Basar and Olsder (1999).

3.2 Uniqueness of the Nash Equilibrium

We will now derive conditions for the uniqueness of an equilibrium of the Nash prediction game

defined in (3). We first reformulate the two-player game into an (n + 1)-player game. In Lemma 2,

we then present a sufficient condition for the uniqueness of the Nash equilibrium in this game, and

by applying Proposition 4 and Lemmas 5-7 we verify whether this condition is met. Finally, we state

the main result in Theorem 8: The Nash equilibrium is unique under certain properties of the loss

functions, the regularizers, and the cost factors which all can be verified easily.

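Taking into account the Cartesian product structure of the data generator’s action space $\phi(\mathcal{X})^n$, it is not difficult to see that $(w^*, \dot{\mathbf{x}}^*)$ with $\dot{\mathbf{x}}^* = \left[\dot{\mathbf{x}}_1^{*\mathsf T}, \ldots, \dot{\mathbf{x}}_n^{*\mathsf T}\right]^{\mathsf T}$ and $\dot{\mathbf{x}}_i^* := \phi(\dot{x}_i^*)$ is a solution of the two-player game if, and only if, $(w^*, \dot{\mathbf{x}}_1^*, \ldots, \dot{\mathbf{x}}_n^*)$ is a Nash equilibrium of the $(n+1)$-player game defined by

$$\begin{array}{llcllcll}
\min_{w} & \hat{\theta}_{-1}(w, \dot{\mathbf{x}}) & & \min_{\dot{\mathbf{x}}_1} & \hat{\theta}_{+1}(w, \dot{\mathbf{x}}) & \cdots & \min_{\dot{\mathbf{x}}_n} & \hat{\theta}_{+1}(w, \dot{\mathbf{x}}) \\
\text{s.t.} & w \in \mathcal{W} & & \text{s.t.} & \dot{\mathbf{x}}_1 \in \phi(\mathcal{X}) & \cdots & \text{s.t.} & \dot{\mathbf{x}}_n \in \phi(\mathcal{X})
\end{array} \tag{4}$$

which results from (3) by repeating $n$ times the cost function $\hat{\theta}_{+1}$ and minimizing this function with respect to $\dot{\mathbf{x}}_i \in \phi(\mathcal{X})$ for $i = 1, \ldots, n$. Then the pseudo-gradient (in the sense of Rosen, 1965) of the game in (4) is defined by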

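$$g_r(w, \dot{\mathbf{x}}) := \begin{bmatrix}
r_0 \nabla_{w} \hat{\theta}_{-1}(w, \dot{\mathbf{x}}) \\
r_1 \nabla_{\dot{\mathbf{x}}_1} \hat{\theta}_{+1}(w, \dot{\mathbf{x}}) \\
r_2 \nabla_{\dot{\mathbf{x}}_2} \hat{\theta}_{+1}(w, \dot{\mathbf{x}}) \\
\vdots \\
r_n \nabla_{\dot{\mathbf{x}}_n} \hat{\theta}_{+1}(w, \dot{\mathbf{x}})
\end{bmatrix} \in \mathbb{R}^{m + m \cdot n}, \tag{5}$$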

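with any fixed vector $r = [r_0, r_1, \ldots, r_n]^{\mathsf T}$ where $r_i > 0$ for $i = 0, \ldots, n$. The derivative of $g_r$, that is, the pseudo-Jacobian of (4), is given by

$$J_r(w, \dot{\mathbf{x}}) = \Lambda_r \begin{bmatrix}
\nabla^2_{w,w} \hat{\theta}_{-1}(w, \dot{\mathbf{x}}) & \nabla^2_{w,\dot{\mathbf{x}}} \hat{\theta}_{-1}(w, \dot{\mathbf{x}}) \\
\nabla^2_{\dot{\mathbf{x}},w} \hat{\theta}_{+1}(w, \dot{\mathbf{x}}) & \nabla^2_{\dot{\mathbf{x}},\dot{\mathbf{x}}} \hat{\theta}_{+1}(w, \dot{\mathbf{x}})
\end{bmatrix}, \tag{6}$$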

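where

$$\Lambda_r := \begin{bmatrix}
r_0 I_m & 0 & \cdots & 0 \\
0 & r_1 I_m & \cdots & 0 \\
\vdots & \vdots & \ddots & \vdots \\
0 & 0 & \cdots & r_n I_m
\end{bmatrix} \in \mathbb{R}^{(m + m \cdot n) \times (m + m \cdot n)}. \tag{7}$$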

Note that the pseudo-gradient $g_r$ and the pseudo-Jacobian $J_r$ exist when Assumption 2 is satisfied. The above definition of the pseudo-Jacobian enables us to state the following result about the uniqueness of a Nash equilibrium.

Lemma 2 Let Assumption 2 hold and suppose there exists a fixed vector $r = [r_0, r_1, \ldots, r_n]^{\mathsf T}$ with $r_i > 0$ for all $i = 0, 1, \ldots, n$ such that the corresponding pseudo-Jacobian $J_r(w, \dot{\mathbf{x}})$ is positive definite for all $(w, \dot{\mathbf{x}}) \in \mathcal{W} \times \phi(\mathcal{X})^n$. Then the Nash prediction game in (3) has a unique equilibrium.

Proof. The existence of a Nash equilibrium follows from Lemma 1. Recall from our previous discussion that the original Nash game in (3) has a unique solution if, and only if, the game from (4) with one learner and $n$ data generators admits a unique solution. In view of Theorem 2 of Rosen (1965), the latter attains a unique solution if the pseudo-gradient $g_r$ is strictly monotone; that is, if for all actions $w, w' \in \mathcal{W}$ and $\dot{\mathbf{x}}, \dot{\mathbf{x}}' \in \phi(\mathcal{X})^n$ with $(w, \dot{\mathbf{x}}) \neq (w', \dot{\mathbf{x}}')$, the inequality

$$\left(g_r(w, \dot{\mathbf{x}}) - g_r(w', \dot{\mathbf{x}}')\right)^{\mathsf T} \left(\begin{bmatrix} w \\ \dot{\mathbf{x}} \end{bmatrix} - \begin{bmatrix} w' \\ \dot{\mathbf{x}}' \end{bmatrix}\right) > 0$$

holds. A sufficient condition for this pseudo-gradient being strictly monotone is the positive definiteness of the pseudo-Jacobian $J_r$ (see, e.g., Theorem 7.11 and Theorem 6, respectively, in Geiger and Kanzow, 1999; Rosen, 1965).
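The role of the weight vector r in Lemma 2 can be seen in a toy game with scalar actions. The game is an assumption made for illustration only: the learner's cost is ½w² + a·w·ẋ and the data generator's cost is ½ẋ² + b·w·ẋ on compact intervals, so the pseudo-Jacobian is the constant matrix diag(r₀, r₁)·[[1, a], [b, 1]]. A non-symmetric matrix J satisfies vᵀJv > 0 for all v ≠ 0 exactly when its symmetric part is positive definite, which is what the helper checks.

```python
def pseudo_jacobian(r0, r1, a, b):
    """Pseudo-Jacobian of the toy game: diag(r0, r1) @ [[1, a], [b, 1]]."""
    return [[r0 * 1.0, r0 * a],
            [r1 * b, r1 * 1.0]]

def is_positive_definite_2x2(J):
    """v^T J v > 0 for all v != 0, tested on the symmetric part of J."""
    off_diag = 0.5 * (J[0][1] + J[1][0])
    return J[0][0] > 0 and J[0][0] * J[1][1] - off_diag * off_diag > 0

a, b = 4.0, 0.1   # hypothetical coupling strengths

for r in [(1.0, 1.0), (1.0, 40.0)]:
    J = pseudo_jacobian(r[0], r[1], a, b)
    print("r =", r, "positive definite:", is_positive_definite_2x2(J))
```

With equal weights the test fails, while r = (1, 40) balances the two off-diagonal couplings and succeeds; Lemma 2 only requires that some positive r works.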

To verify whether the positive definiteness condition of Lemma 2 is satisfied, we first derive the pseudo-Jacobian $J_r(w, \dot{\mathbf{x}})$. We subsequently decompose it into a sum of three matrices and analyze the definiteness of these matrices for the particular choice of vector $r$ with $r_0 := 1$, $r_i := \frac{c_{-1,i}}{c_{+1,i}} > 0$ for all $i = 1, \ldots, n$, with corresponding matrix

$$\Lambda_r := \begin{bmatrix}
I_m & 0 & \cdots & 0 \\
0 & \frac{c_{-1,1}}{c_{+1,1}} I_m & \cdots & 0 \\
\vdots & \vdots & \ddots & \vdots \\
0 & 0 & \cdots & \frac{c_{-1,n}}{c_{+1,n}} I_m
\end{bmatrix}. \tag{8}$$

This finally provides us with sufficient conditions which ensure the uniqueness of the Nash equilibrium.

3.2.1 DERIVATION OF THE PSEUDO-JACOBIAN

Throughout this section, we denote by $\ell'_v(z,y)$ and $\ell''_v(z,y)$ the first and second derivative of the mapping $\ell_v(z,y)$ with respect to $z \in \mathbb{R}$ and use the abbreviations

$$\ell'_{v,i} := \ell'_v(\dot{\mathbf{x}}_i^{\mathsf T} w, y_i), \qquad \ell''_{v,i} := \ell''_v(\dot{\mathbf{x}}_i^{\mathsf T} w, y_i),$$

for both players $v \in \{-1,+1\}$ and $i = 1, \ldots, n$.

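To state the pseudo-Jacobian for the empirical costs given in (1) and (2), we first derive their first-order partial derivatives,

$$\nabla_{w} \hat{\theta}_{-1}(w, \dot{\mathbf{x}}) = \sum_{i=1}^{n} c_{-1,i}\, \ell'_{-1,i}\, \dot{\mathbf{x}}_i + \rho_{-1} \nabla_{w} \hat{\Omega}_{-1}(w), \tag{9}$$

$$\nabla_{\dot{\mathbf{x}}_i} \hat{\theta}_{+1}(w, \dot{\mathbf{x}}) = c_{+1,i}\, \ell'_{+1,i}\, w + \rho_{+1} \nabla_{\dot{\mathbf{x}}_i} \hat{\Omega}_{+1}(\mathbf{x}, \dot{\mathbf{x}}). \tag{10}$$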
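The gradients (9) and (10) can be checked numerically against central finite differences. The sketch below is an illustration under assumptions that this section leaves open: identity feature map, logistic loss for both players (the data generator's loss evaluated against label −1), Ω̂₋₁(w) = ½‖w‖², and Ω̂₊₁ equal to the mean of ½‖ẋ_i − x_i‖². All names are hypothetical.

```python
import math

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def loss(z, y):            # assumed logistic loss log(1 + exp(-y*z))
    return math.log1p(math.exp(-y * z))

def dloss(z, y):           # its derivative with respect to z
    return -y / (1.0 + math.exp(y * z))

def theta_learner(w, Xd, y, c, rho):
    return sum(ci * loss(dot(xi, w), yi) for ci, xi, yi in zip(c, Xd, y)) + rho * 0.5 * dot(w, w)

def grad_w_learner(w, Xd, y, c, rho):
    # Equation (9): sum_i c_i * l'_i * x_dot_i + rho * grad of 0.5*||w||^2
    g = [rho * wk for wk in w]
    for ci, xi, yi in zip(c, Xd, y):
        s = ci * dloss(dot(xi, w), yi)
        g = [gk + s * xk for gk, xk in zip(g, xi)]
    return g

def theta_generator(w, X, Xd, c, rho):
    fit = sum(ci * loss(dot(xd, w), -1) for ci, xd in zip(c, Xd))
    reg = sum(0.5 * sum((a - b) ** 2 for a, b in zip(xd, x)) for xd, x in zip(Xd, X)) / len(X)
    return fit + rho * reg

def grad_xi_generator(w, X, Xd, c, rho, i):
    # Equation (10): c_i * l'_i * w + rho * grad of the assumed regularizer
    s = c[i] * dloss(dot(Xd[i], w), -1)
    return [s * wk + rho * (a - b) / len(X) for wk, a, b in zip(w, Xd[i], X[i])]

def numeric_grad(f, v, h=1e-6):
    """Central finite-difference gradient of f at the list v."""
    return [(f(v[:k] + [v[k] + h] + v[k + 1:]) - f(v[:k] + [v[k] - h] + v[k + 1:])) / (2 * h)
            for k in range(len(v))]

w = [0.5, -1.0]
X = [[1.0, 2.0], [0.0, -1.0]]
Xd = [[1.5, 1.0], [0.5, -0.5]]
y = [1, -1]
c = [0.5, 0.5]
rho = 0.1

print(grad_w_learner(w, Xd, y, c, rho))
print(numeric_grad(lambda v: theta_learner(v, Xd, y, c, rho), w))
print(grad_xi_generator(w, X, Xd, c, rho, 0))
print(numeric_grad(lambda v: theta_generator(w, X, [v, Xd[1]], c, rho), Xd[0]))
```

Stacking `grad_w_learner` and the n vectors from `grad_xi_generator`, each scaled by its weight r_i, gives the pseudo-gradient of Equation (5) for this particular instance.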

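This allows us to calculate the entries of the pseudo-Jacobian given in (6),

$$\begin{aligned}
\nabla^2_{w,w} \hat{\theta}_{-1}(w, \dot{\mathbf{x}}) &= \sum_{i=1}^{n} c_{-1,i}\, \ell''_{-1,i}\, \dot{\mathbf{x}}_i \dot{\mathbf{x}}_i^{\mathsf T} + \rho_{-1} \nabla^2_{w,w} \hat{\Omega}_{-1}(w), \\
\nabla^2_{w,\dot{\mathbf{x}}_i} \hat{\theta}_{-1}(w, \dot{\mathbf{x}}) &= c_{-1,i}\, \ell''_{-1,i}\, \dot{\mathbf{x}}_i w^{\mathsf T} + c_{-1,i}\, \ell'_{-1,i}\, I_m, \\
\nabla^2_{\dot{\mathbf{x}}_i,w} \hat{\theta}_{+1}(w, \dot{\mathbf{x}}) &= c_{+1,i}\, \ell''_{+1,i}\, w \dot{\mathbf{x}}_i^{\mathsf T} + c_{+1,i}\, \ell'_{+1,i}\, I_m, \\
\nabla^2_{\dot{\mathbf{x}}_i,\dot{\mathbf{x}}_j} \hat{\theta}_{+1}(w, \dot{\mathbf{x}}) &= \delta_{ij}\, c_{+1,i}\, \ell''_{+1,i}\, w w^{\mathsf T} + \rho_{+1} \nabla^2_{\dot{\mathbf{x}}_i,\dot{\mathbf{x}}_j} \hat{\Omega}_{+1}(\mathbf{x}, \dot{\mathbf{x}}),
\end{aligned}$$

where $\delta_{ij}$ denotes Kronecker’s delta which is 1 if $i$ equals $j$ and 0 otherwise.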

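We can express these equations more compactly as matrix equations. To this end, we use the diagonal matrix $\Lambda_r$ as defined in (7) and set $\Gamma_v := \operatorname{diag}(c_{v,1} \ell''_{v,1}, \ldots, c_{v,n} \ell''_{v,n})$. Additionally, we define $\dot{X} \in \mathbb{R}^{n \times m}$ as the matrix with rows $\dot{\mathbf{x}}_1^{\mathsf T}, \ldots, \dot{\mathbf{x}}_n^{\mathsf T}$, and $n$ matrices $W_i \in \mathbb{R}^{n \times m}$ with all entries set to zero except for the $i$-th row which is set to $w^{\mathsf T}$. Then,

$$\begin{aligned}
\nabla^2_{w,w} \hat{\theta}_{-1}(w, \dot{\mathbf{x}}) &= \dot{X}^{\mathsf T} \Gamma_{-1} \dot{X} + \rho_{-1} \nabla^2_{w,w} \hat{\Omega}_{-1}(w), \\
\nabla^2_{w,\dot{\mathbf{x}}_i} \hat{\theta}_{-1}(w, \dot{\mathbf{x}}) &= \dot{X}^{\mathsf T} \Gamma_{-1} W_i + c_{-1,i}\, \ell'_{-1,i}\, I_m, \\
\nabla^2_{\dot{\mathbf{x}}_i,w} \hat{\theta}_{+1}(w, \dot{\mathbf{x}}) &= W_i^{\mathsf T} \Gamma_{+1} \dot{X} + c_{+1,i}\, \ell'_{+1,i}\, I_m, \\
\nabla^2_{\dot{\mathbf{x}}_i,\dot{\mathbf{x}}_j} \hat{\theta}_{+1}(w, \dot{\mathbf{x}}) &= W_i^{\mathsf T} \Gamma_{+1} W_j + \rho_{+1} \nabla^2_{\dot{\mathbf{x}}_i,\dot{\mathbf{x}}_j} \hat{\Omega}_{+1}(\mathbf{x}, \dot{\mathbf{x}}).
\end{aligned}$$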
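The matrix forms rely on the fact that multiplying by W_i picks out the i-th instance. A small numerical check with made-up values (n = 3, m = 2; the diagonal of Γ is an arbitrary stand-in for the products c_{v,i}ℓ''_{v,i}) confirms that Ẋᵀ Γ W_i equals γ_i ẋ_i wᵀ and that W_iᵀ Γ W_j equals δ_ij γ_i w wᵀ.

```python
def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]

def transpose(A):
    return [list(row) for row in zip(*A)]

def diag(values):
    return [[v if i == j else 0.0 for j in range(len(values))] for i, v in enumerate(values)]

def outer(u, v, scale=1.0):
    return [[scale * a * b for b in v] for a in u]

def close(A, B, tol=1e-12):
    return all(abs(a - b) < tol for ra, rb in zip(A, B) for a, b in zip(ra, rb))

n, m = 3, 2
w = [2.0, -1.0]
X_dot = [[1.0, 0.0], [0.5, 2.0], [-1.0, 1.0]]   # rows are the x_dot_i^T
gamma = [0.3, 0.2, 0.5]                          # stand-in for c_{v,i} * l''_{v,i}
Gamma = diag(gamma)

def W(i):
    """n x m matrix that is zero except for row i, which holds w^T."""
    return [list(w) if k == i else [0.0] * m for k in range(n)]

i, j = 1, 2
lhs_cross = matmul(matmul(transpose(X_dot), Gamma), W(i))
rhs_cross = outer(X_dot[i], w, gamma[i])
lhs_same = matmul(matmul(transpose(W(i)), Gamma), W(i))
lhs_diff = matmul(matmul(transpose(W(i)), Gamma), W(j))

print(close(lhs_cross, rhs_cross))
print(close(lhs_same, outer(w, w, gamma[i])))
print(close(lhs_diff, [[0.0] * m for _ in range(m)]))
```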

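Hence, the pseudo-Jacobian in (6) can be stated as follows,

$$\begin{aligned}
J_r(w, \dot{\mathbf{x}}) ={}& \Lambda_r \begin{bmatrix} \dot{X} & 0 & \cdots & 0 \\ 0 & W_1 & \cdots & W_n \end{bmatrix}^{\mathsf T}
\begin{bmatrix} \Gamma_{-1} & \Gamma_{-1} \\ \Gamma_{+1} & \Gamma_{+1} \end{bmatrix}
\begin{bmatrix} \dot{X} & 0 & \cdots & 0 \\ 0 & W_1 & \cdots & W_n \end{bmatrix} \\
&+ \Lambda_r \begin{bmatrix}
\rho_{-1} \nabla^2_{w,w} \hat{\Omega}_{-1}(w) & c_{-1,1} \ell'_{-1,1} I_m & \cdots & c_{-1,n} \ell'_{-1,n} I_m \\
c_{+1,1} \ell'_{+1,1} I_m & \rho_{+1} \nabla^2_{\dot{\mathbf{x}}_1,\dot{\mathbf{x}}_1} \hat{\Omega}_{+1}(\mathbf{x}, \dot{\mathbf{x}}) & \cdots & \rho_{+1} \nabla^2_{\dot{\mathbf{x}}_1,\dot{\mathbf{x}}_n} \hat{\Omega}_{+1}(\mathbf{x}, \dot{\mathbf{x}}) \\
\vdots & \vdots & \ddots & \vdots \\
c_{+1,n} \ell'_{+1,n} I_m & \rho_{+1} \nabla^2_{\dot{\mathbf{x}}_n,\dot{\mathbf{x}}_1} \hat{\Omega}_{+1}(\mathbf{x}, \dot{\mathbf{x}}) & \cdots & \rho_{+1} \nabla^2_{\dot{\mathbf{x}}_n,\dot{\mathbf{x}}_n} \hat{\Omega}_{+1}(\mathbf{x}, \dot{\mathbf{x}})
\end{bmatrix}.
\end{aligned}$$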

We now aim at decomposing the right-hand expression in order to verify the definiteness of the

pseudo-Jacobian.

3.2.2 DECOMPOSITION OF THE PSEUDO-JACOBIAN

To verify the positive definiteness of the pseudo-Jacobian, we further decompose the second summand of the above expression into a positive semi-definite and a strictly positive definite matrix. To this end, let us denote the smallest eigenvalues of the Hessians of the regularizers on the corresponding action spaces $\mathcal{W}$ and $\phi(\mathcal{X})^n$ by

$$\lambda_{-1} := \inf_{w \in \mathcal{W}} \lambda_{\min}\left(\nabla^2_{w,w} \hat{\Omega}_{-1}(w)\right), \tag{11}$$

$$\lambda_{+1} := \inf_{\dot{\mathbf{x}} \in \phi(\mathcal{X})^n} \lambda_{\min}\left(\nabla^2_{\dot{\mathbf{x}},\dot{\mathbf{x}}} \hat{\Omega}_{+1}(\mathbf{x}, \dot{\mathbf{x}})\right), \tag{12}$$

where $\lambda_{\min}(A)$ denotes the smallest eigenvalue of the symmetric matrix $A$.

Remark 3 Note that the minimum in (11) and (12) is attained and is strictly positive: The mapping $\lambda_{\min} : \mathcal{M}^{k \times k} \to \mathbb{R}$ is concave on the set of symmetric matrices $\mathcal{M}^{k \times k}$ of dimension $k \times k$ (cf. Example 3.10 in Boyd and Vandenberghe, 2004), and in particular, it therefore follows that this mapping is continuous. Furthermore, the mappings $u_{-1} : \mathcal{W} \to \mathcal{M}^{m \times m}$ with $u_{-1}(w) := \nabla^2_{w,w} \hat{\Omega}_{-1}(w)$ and $u_{+1} : \phi(\mathcal{X})^n \to \mathcal{M}^{m \cdot n \times m \cdot n}$ with $u_{+1}(\dot{\mathbf{x}}) := \nabla^2_{\dot{\mathbf{x}},\dot{\mathbf{x}}} \hat{\Omega}_{+1}(\mathbf{x}, \dot{\mathbf{x}})$ are continuous (for any fixed $\mathbf{x}$) by Assumption 2. Hence, the mappings $w \mapsto \lambda_{\min}(u_{-1}(w))$ and $\dot{\mathbf{x}} \mapsto \lambda_{\min}(u_{+1}(\dot{\mathbf{x}}))$ are also continuous since each is precisely the composition $\lambda_{\min} \circ u_v$ of the continuous functions $\lambda_{\min}$ and $u_v$ for $v \in \{-1,+1\}$. Taking into account that a continuous mapping on a non-empty compact set attains its minimum, it follows that there exist elements $w \in \mathcal{W}$ and $\dot{\mathbf{x}} \in \phi(\mathcal{X})^n$ such that

$$\lambda_{-1} = \lambda_{\min}\left(\nabla^2_{w,w} \hat{\Omega}_{-1}(w)\right), \qquad \lambda_{+1} = \lambda_{\min}\left(\nabla^2_{\dot{\mathbf{x}},\dot{\mathbf{x}}} \hat{\Omega}_{+1}(\mathbf{x}, \dot{\mathbf{x}})\right).$$

Moreover, since the Hessians of the regularizers are positive definite by Assumption 2, we see that $\lambda_v > 0$ holds for $v \in \{-1,+1\}$.

By the above definitions, we can decompose the regularizers' Hessians as follows,

$$\nabla^2_{w,w} \hat{\Omega}_{-1}(w) = \lambda_{-1} I_m + \left(\nabla^2_{w,w} \hat{\Omega}_{-1}(w) - \lambda_{-1} I_m\right),$$

$$\nabla^2_{\dot{x},\dot{x}} \hat{\Omega}_{+1}(x,\dot{x}) = \lambda_{+1} I_{m \cdot n} + \left(\nabla^2_{\dot{x},\dot{x}} \hat{\Omega}_{+1}(x,\dot{x}) - \lambda_{+1} I_{m \cdot n}\right).$$

As the regularizers are strictly convex, $\lambda_v$ are positive so that for each of the above equations the first summand is positive definite and the second summand is positive semi-definite.
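The decomposition above can be checked numerically on a small example. The following is a minimal sketch, assuming a hypothetical quadratic regularizer $\frac{1}{2} w^T A w$ with a fixed symmetric positive definite matrix `A` (not taken from the paper), so that the Hessian is constant and the infimum in (11) reduces to the smallest eigenvalue of `A`. The helper name `split_hessian` is illustrative only.

```python
import numpy as np

def split_hessian(hessian, lam):
    """Split a symmetric Hessian into lam * I and the remainder hessian - lam * I."""
    k = hessian.shape[0]
    scaled_identity = lam * np.eye(k)
    return scaled_identity, hessian - scaled_identity

# Hypothetical regularizer 0.5 * w^T A w: its Hessian equals A for every w,
# so the infimum over the action space is simply lambda_min(A).
A = np.array([[2.0, 0.5],
              [0.5, 1.0]])
lam = np.linalg.eigvalsh(A).min()

first, second = split_hessian(A, lam)
first_eigs = np.linalg.eigvalsh(first)       # all equal to lam > 0
remainder_eigs = np.linalg.eigvalsh(second)  # all >= 0 up to rounding
```

For regularizers whose Hessian varies with $w$, the infimum would have to be taken over the whole action space rather than at a single point.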

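Proposition 4 The pseudo-Jacobian has the representation

$$J_r(w,\dot{x}) = J_r^{(1)}(w,\dot{x}) + J_r^{(2)}(w,\dot{x}) + J_r^{(3)}(w,\dot{x}) \tag{13}$$

where

$$J_r^{(1)}(w,\dot{x}) = \Lambda_r \begin{bmatrix} \dot{X} & 0 & \cdots & 0 \\ 0 & W_1 & \cdots & W_n \end{bmatrix}^T \begin{bmatrix} \Gamma_{-1} & \Gamma_{-1} \\ \Gamma_{+1} & \Gamma_{+1} \end{bmatrix} \begin{bmatrix} \dot{X} & 0 & \cdots & 0 \\ 0 & W_1 & \cdots & W_n \end{bmatrix},$$

$$J_r^{(2)}(w,\dot{x}) = \Lambda_r \begin{bmatrix} \rho_{-1}\lambda_{-1} I_m & c_{-1,1}\ell'_{-1,1} I_m & \cdots & c_{-1,n}\ell'_{-1,n} I_m \\ c_{+1,1}\ell'_{+1,1} I_m & \rho_{+1}\lambda_{+1} I_m & \cdots & 0 \\ \vdots & \vdots & \ddots & \vdots \\ c_{+1,n}\ell'_{+1,n} I_m & 0 & \cdots & \rho_{+1}\lambda_{+1} I_m \end{bmatrix},$$

$$J_r^{(3)}(w,\dot{x}) = \Lambda_r \begin{bmatrix} \rho_{-1}\nabla^2_{w,w}\hat{\Omega}_{-1}(w) - \rho_{-1}\lambda_{-1} I_m & 0 \\ 0 & \rho_{+1}\nabla^2_{\dot{x},\dot{x}}\hat{\Omega}_{+1}(x,\dot{x}) - \rho_{+1}\lambda_{+1} I_{m \cdot n} \end{bmatrix}.$$

The above proposition restates the pseudo-Jacobian as a sum of the three matrices $J_r^{(1)}(w,\dot{x})$, $J_r^{(2)}(w,\dot{x})$, and $J_r^{(3)}(w,\dot{x})$. Matrix $J_r^{(1)}(w,\dot{x})$ contains all $\ell''_{v,i}$ terms, $J_r^{(2)}(w,\dot{x})$ is a composition of scaled identity matrices, and $J_r^{(3)}(w,\dot{x})$ contains the Hessians of the regularizers where the diagonal entries are reduced by $\rho_{-1}\lambda_{-1}$ and $\rho_{+1}\lambda_{+1}$, respectively. We further analyze these matrices in the following section.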

3.2.3 DEFINITENESS OF THE SUMMANDS OF THE PSEUDO-JACOBIAN

Recall that we want to investigate whether the pseudo-Jacobian $J_r(w,\dot{x})$ is positive definite for each pair of actions $(w,\dot{x}) \in \mathcal{W} \times \phi(\mathcal{X})^n$. A sufficient condition is that $J_r^{(1)}(w,\dot{x})$, $J_r^{(2)}(w,\dot{x})$, and $J_r^{(3)}(w,\dot{x})$ are positive semi-definite and at least one of these matrices is positive definite. From the definition of $\lambda_v$, it becomes apparent that $J_r^{(3)}$ is positive semi-definite. In addition, $J_r^{(2)}(w,\dot{x})$ becomes positive definite for sufficiently large $\rho_v$ as, in this case, the main diagonal dominates the non-diagonal entries. Finally, $J_r^{(1)}(w,\dot{x})$ becomes positive semi-definite under some mild conditions on the loss functions.

In the following we derive these conditions, state lower bounds on the regularization parameters $\rho_v$, and provide formal proofs of the above claims. To this end, we make the following assumptions on the loss functions $\ell_v$ and the regularizers $\hat{\Omega}_v$ for $v \in \{-1,+1\}$. Instances of these functions satisfying Assumptions 2 and 3 will be given in Section 5. A discussion of the practical implications of these assumptions is given in the subsequent section.

Assumption 3 For all $w \in \mathcal{W}$ and $\dot{x} \in \phi(\mathcal{X})^n$ with $\dot{x} = \left[\dot{x}_1^T, \ldots, \dot{x}_n^T\right]^T$ the following conditions are satisfied:

1. the second derivatives of the loss functions are equal for all $y \in \mathcal{Y}$ and $i = 1,\ldots,n$,

$$\ell''_{-1}(f_w(\dot{x}_i),y) = \ell''_{+1}(f_w(\dot{x}_i),y),$$

2. the players' regularization parameters satisfy

$$\rho_{-1}\rho_{+1} > \tau^2 \frac{1}{\lambda_{-1}\lambda_{+1}} c_{-1}^T c_{+1},$$

where $\lambda_{-1}$, $\lambda_{+1}$ are the smallest eigenvalues of the Hessians of the regularizers specified in (11) and (12), $c_v = [c_{v,1}, c_{v,2}, \ldots, c_{v,n}]^T$, and

$$\tau = \sup_{(x,y) \in \phi(\mathcal{X}) \times \mathcal{Y}} \frac{1}{2}\left|\ell'_{-1}(f_w(x),y) + \ell'_{+1}(f_w(x),y)\right|, \tag{14}$$

3. for all $i = 1,\ldots,n$ either both players have equal instance-specific cost factors, $c_{-1,i} = c_{+1,i}$, or the partial derivative $\nabla_{\dot{x}_i} \hat{\Omega}_{+1}(x,\dot{x})$ of the data generator's regularizer is independent of $\dot{x}_j$ for all $j \neq i$.

Notice that $\tau$ in Equation 14 can be chosen to be finite as the set $\phi(\mathcal{X}) \times \mathcal{Y}$ is assumed to be compact, and consequently, the values of both continuous mappings $\ell'_{-1}(f_w(x),y)$ and $\ell'_{+1}(f_w(x),y)$ are finite for all $(x,y) \in \phi(\mathcal{X}) \times \mathcal{Y}$.
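To make condition 2 of Assumption 3 concrete, the following sketch evaluates the lower bound on $\rho_{-1}\rho_{+1}$ for illustrative inputs. Everything specific here is an assumption made for the example and not taken from the text above: the logistic-type pair of losses, the restriction of the scores $f_w(x)$ to the interval $[-10, 10]$, the grid approximation of the supremum in (14), the values $\lambda_{-1} = \lambda_{+1} = 1$, and the uniform cost factors $c_{v,i} = 1/n$. The function names are hypothetical.

```python
import numpy as np

def tau_on_grid(dloss_learner, dloss_generator, scores, labels):
    """Approximate tau in (14) by a maximum over a finite grid of scores f_w(x)."""
    return max(
        0.5 * abs(dloss_learner(z, y) + dloss_generator(z, y))
        for z in scores
        for y in labels
    )

def rho_product_bound(tau, lam_learner, lam_generator, c_learner, c_generator):
    """Right-hand side of condition 2: tau^2 * c_{-1}^T c_{+1} / (lam_{-1} * lam_{+1})."""
    return tau**2 * float(np.dot(c_learner, c_generator)) / (lam_learner * lam_generator)

# Assumed losses (illustration only):
#   learner:        l_{-1}(z, y) = log(1 + exp(-y * z))
#   data generator: l_{+1}(z, y) = log(1 + exp(z))
def dloss_learner(z, y):
    return -y / (1.0 + np.exp(y * z))

def dloss_generator(z, y):
    return 1.0 / (1.0 + np.exp(-z))

scores = np.linspace(-10.0, 10.0, 2001)   # assumed compact range of f_w(x)
tau = tau_on_grid(dloss_learner, dloss_generator, scores, labels=(-1, +1))

n = 4
c = np.full(n, 1.0 / n)                   # assumed uniform cost factors
bound = rho_product_bound(tau, 1.0, 1.0, c, c)
# Any pair with rho_learner * rho_generator > bound satisfies condition 2
# for these assumed inputs.
```

For this assumed pair of losses the second derivatives coincide, so condition 1 holds as well, and the grid value of `tau` stays below 1.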

Lemma 5 Let $(w,\dot{x}) \in \mathcal{W} \times \phi(\mathcal{X})^n$ be arbitrarily given. Under Assumptions 2 and 3, the matrix $J_r^{(1)}(w,\dot{x})$ is symmetric positive semi-definite (but not positive definite) for $\Lambda_r$ defined as in Equation 8.

Proof. The special structure of $\Lambda_r$, $\dot{X}$, and $W_i$ gives

$$J_r^{(1)}(w,\dot{x}) = \begin{bmatrix} \dot{X} & 0 & \cdots & 0 \\ 0 & W_1 & \cdots & W_n \end{bmatrix}^T \begin{bmatrix} r_0\Gamma_{-1} & r_0\Gamma_{-1} \\ \Upsilon\Gamma_{+1} & \Upsilon\Gamma_{+1} \end{bmatrix} \begin{bmatrix} \dot{X} & 0 & \cdots & 0 \\ 0 & W_1 & \cdots & W_n \end{bmatrix},$$

with $\Upsilon := \mathrm{diag}(r_1,\ldots,r_n)$. From the assumption $\ell''_{-1,i} = \ell''_{+1,i}$ and the definition $r_0 = 1$, $r_i = \frac{c_{-1,i}}{c_{+1,i}} > 0$ for all $i = 1,\ldots,n$ it follows that $\Gamma_{-1} = \Upsilon\Gamma_{+1}$, such that

$$J_r^{(1)}(w,\dot{x}) = \begin{bmatrix} \dot{X} & 0 & \cdots & 0 \\ 0 & W_1 & \cdots & W_n \end{bmatrix}^T \begin{bmatrix} \Gamma_{-1} & \Gamma_{-1} \\ \Gamma_{-1} & \Gamma_{-1} \end{bmatrix} \begin{bmatrix} \dot{X} & 0 & \cdots & 0 \\ 0 & W_1 & \cdots & W_n \end{bmatrix},$$

which is obviously a symmetric matrix. Furthermore, we show that $z^T J_r^{(1)}(w,\dot{x}) z \geq 0$ holds for all vectors $z \in \mathbb{R}^{m + m \cdot n}$. To this end, let $z$ be arbitrarily given, and partition this vector in $z = \left[z_0^T, z_1^T, \ldots, z_n^T\right]^T$ with $z_i \in \mathbb{R}^m$ for all $i = 0,1,\ldots,n$. Then a simple calculation shows that

$$z^T J_r^{(1)}(w,\dot{x}) z = \sum_{i=1}^n \left(z_0^T \dot{x}_i + z_i^T w\right)^2 c_{-1,i}\,\ell''_{-1,i} \geq 0$$

since $\ell''_{-1,i} \geq 0$ for all $i = 1,\ldots,n$ in view of the assumed convexity of mapping $\ell_{-1}(z,y)$. Hence, $J_r^{(1)}(w,\dot{x})$ is positive semi-definite. This matrix cannot be positive definite since we have $z^T J_r^{(1)}(w,\dot{x}) z = 0$ for the particular vector $z$ defined by $z_0 := -w$ and $z_i := \dot{x}_i$ for all $i = 1,\ldots,n$.


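Lemma 6 Let $(w,\dot{x}) \in \mathcal{W} \times \phi(\mathcal{X})^n$ be arbitrarily given. Under Assumptions 2 and 3, the matrix $J_r^{(2)}(w,\dot{x})$ is positive definite for $\Lambda_r$ defined as in Equation 8.

Proof. A sufficient and necessary condition for the (possibly asymmetric) matrix $J_r^{(2)}(w,\dot{x})$ to be positive definite is that the Hermitian matrix

$$H(w,\dot{x}) := J_r^{(2)}(w,\dot{x}) + J_r^{(2)}(w,\dot{x})^T$$

is positive definite, that is, all eigenvalues of $H(w,\dot{x})$ are positive. Let $\Lambda_r^{\frac{1}{2}}$ denote the square root of $\Lambda_r$ which is defined in such a way that the diagonal elements of $\Lambda_r^{\frac{1}{2}}$ are the square roots of the corresponding diagonal elements of $\Lambda_r$. Furthermore, we denote by $\Lambda_r^{-\frac{1}{2}}$ the inverse of $\Lambda_r^{\frac{1}{2}}$. Then, by Sylvester's law of inertia, the matrix

$$\bar{H}(w,\dot{x}) := \Lambda_r^{-\frac{1}{2}} H(w,\dot{x}) \Lambda_r^{-\frac{1}{2}}$$

has the same number of positive, zero, and negative eigenvalues as matrix $H(w,\dot{x})$ itself. Hence, $J_r^{(2)}(w,\dot{x})$ is positive definite if, and only if, all eigenvalues of

$$\begin{aligned}
\bar{H}(w,\dot{x}) &= \Lambda_r^{-\frac{1}{2}} \left(J_r^{(2)}(w,\dot{x}) + J_r^{(2)}(w,\dot{x})^T\right) \Lambda_r^{-\frac{1}{2}} \\
&= \Lambda_r^{-\frac{1}{2}} \Lambda_r \begin{bmatrix} \rho_{-1}\lambda_{-1} I_m & c_{-1,1}\ell'_{-1,1} I_m & \cdots & c_{-1,n}\ell'_{-1,n} I_m \\ c_{+1,1}\ell'_{+1,1} I_m & \rho_{+1}\lambda_{+1} I_m & \cdots & 0 \\ \vdots & \vdots & \ddots & \vdots \\ c_{+1,n}\ell'_{+1,n} I_m & 0 & \cdots & \rho_{+1}\lambda_{+1} I_m \end{bmatrix} \Lambda_r^{-\frac{1}{2}} \\
&\quad + \Lambda_r^{-\frac{1}{2}} \begin{bmatrix} \rho_{-1}\lambda_{-1} I_m & c_{+1,1}\ell'_{+1,1} I_m & \cdots & c_{+1,n}\ell'_{+1,n} I_m \\ c_{-1,1}\ell'_{-1,1} I_m & \rho_{+1}\lambda_{+1} I_m & \cdots & 0 \\ \vdots & \vdots & \ddots & \vdots \\ c_{-1,n}\ell'_{-1,n} I_m & 0 & \cdots & \rho_{+1}\lambda_{+1} I_m \end{bmatrix} \Lambda_r \Lambda_r^{-\frac{1}{2}} \\
&= \begin{bmatrix} 2\rho_{-1}\lambda_{-1} I_m & \tilde{c}_1 I_m & \cdots & \tilde{c}_n I_m \\ \tilde{c}_1 I_m & 2\rho_{+1}\lambda_{+1} I_m & \cdots & 0 \\ \vdots & \vdots & \ddots & \vdots \\ \tilde{c}_n I_m & 0 & \cdots & 2\rho_{+1}\lambda_{+1} I_m \end{bmatrix}
\end{aligned}$$

are positive, where $\tilde{c}_i := \sqrt{c_{-1,i}\,c_{+1,i}}\,(\ell'_{-1,i} + \ell'_{+1,i})$. Each eigenvalue $\lambda$ of this matrix satisfies

$$\left(\bar{H}(w,\dot{x}) - \lambda I_{m + m \cdot n}\right) v = 0$$

for the corresponding eigenvector $v^T = \left[v_0^T, v_1^T, \ldots, v_n^T\right]$ with $v_i \in \mathbb{R}^m$ for $i = 0,1,\ldots,n$. This eigenvalue equation can be rewritten block-wise as

$$(2\rho_{-1}\lambda_{-1} - \lambda) v_0 + \sum_{i=1}^n \tilde{c}_i v_i = 0, \tag{15}$$

$$(2\rho_{+1}\lambda_{+1} - \lambda) v_i + \tilde{c}_i v_0 = 0 \quad \forall i = 1,\ldots,n. \tag{16}$$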


To compute all possible eigenvalues, we consider two cases. First, assume that $v_0 = 0$. Then (15) and (16) reduce to

$$\sum_{i=1}^n \tilde{c}_i v_i = 0 \quad \text{and} \quad (2\rho_{+1}\lambda_{+1} - \lambda) v_i = 0 \quad \forall i = 1,\ldots,n.$$

Since $v_0 = 0$ and eigenvector $v \neq 0$, at least one $v_i$ is non-zero. This implies that $\lambda = 2\rho_{+1}\lambda_{+1}$ is an eigenvalue. Using the fact that the null space of the linear mapping $v \mapsto \sum_{i=1}^n \tilde{c}_i v_i$ has dimension $(n-1) \cdot m$ (we have $n \cdot m$ degrees of freedom counting all components of $v_1,\ldots,v_n$ and $m$ equations in $\sum_{i=1}^n \tilde{c}_i v_i = 0$), it follows that $\lambda = 2\rho_{+1}\lambda_{+1}$ is an eigenvalue of multiplicity $(n-1) \cdot m$.

Now we consider the second case where $v_0 \neq 0$. We may further assume that $\lambda \neq 2\rho_{+1}\lambda_{+1}$ (since otherwise we get the same eigenvalue as before, just with a different multiplicity). We then get from (16) that

$$v_i = -\frac{\tilde{c}_i}{2\rho_{+1}\lambda_{+1} - \lambda} v_0 \quad \forall i = 1,\ldots,n, \tag{17}$$

and when substituting this expression into (15), we obtain

$$\left((2\rho_{-1}\lambda_{-1} - \lambda) - \sum_{i=1}^n \frac{\tilde{c}_i^2}{2\rho_{+1}\lambda_{+1} - \lambda}\right) v_0 = 0.$$

Taking into account that $v_0 \neq 0$, this implies

$$0 = 2\rho_{-1}\lambda_{-1} - \lambda - \frac{1}{2\rho_{+1}\lambda_{+1} - \lambda} \sum_{i=1}^n \tilde{c}_i^2$$

and, therefore,

$$0 = \lambda^2 - 2(\rho_{-1}\lambda_{-1} + \rho_{+1}\lambda_{+1})\lambda + 4\rho_{-1}\rho_{+1}\lambda_{-1}\lambda_{+1} - \sum_{i=1}^n \tilde{c}_i^2.$$

The roots of this quadratic equation are

$$\lambda = \rho_{-1}\lambda_{-1} + \rho_{+1}\lambda_{+1} \pm \sqrt{(\rho_{-1}\lambda_{-1} - \rho_{+1}\lambda_{+1})^2 + \sum_{i=1}^n \tilde{c}_i^2}, \tag{18}$$

and these are the remaining eigenvalues of $\bar{H}(w,\dot{x})$, each of multiplicity $m$ since there are precisely $m$ linearly independent vectors $v_0 \neq 0$ whereas the other vectors $v_i$ ($i = 1,\ldots,n$) are uniquely defined by (17) in this case. In particular, this implies that the dimensions of all three eigenspaces together sum to $(n-1)m + m + m = (n+1)m$, hence other eigenvalues cannot exist. Since the eigenvalue $\lambda = 2\rho_{+1}\lambda_{+1}$ is positive by Remark 3, it remains to show that the roots in (18) are positive as well. By Assumption 3, we have

$$\sum_{i=1}^n \tilde{c}_i^2 = \sum_{i=1}^n c_{-1,i}\,c_{+1,i}\,(\ell'_{-1,i} + \ell'_{+1,i})^2 \leq 4\tau^2 c_{-1}^T c_{+1} < 4\rho_{-1}\rho_{+1}\lambda_{-1}\lambda_{+1},$$

where $c_v = [c_{v,1}, c_{v,2}, \cdots, c_{v,n}]^T$. This inequality and Equation 18 give

$$\begin{aligned}
\lambda &= \rho_{-1}\lambda_{-1} + \rho_{+1}\lambda_{+1} \pm \sqrt{(\rho_{-1}\lambda_{-1} - \rho_{+1}\lambda_{+1})^2 + \sum_{i=1}^n \tilde{c}_i^2} \\
&> \rho_{-1}\lambda_{-1} + \rho_{+1}\lambda_{+1} - \sqrt{(\rho_{-1}\lambda_{-1} - \rho_{+1}\lambda_{+1})^2 + 4\rho_{-1}\rho_{+1}\lambda_{-1}\lambda_{+1}} = 0.
\end{aligned}$$


As all eigenvalues of $\bar{H}(w,\dot{x})$ are positive, matrix $H(w,\dot{x})$ and, consequently, also the matrix $J_r^{(2)}(w,\dot{x})$ are positive definite.

Lemma 7 Let $(w,\dot{x}) \in \mathcal{W} \times \phi(\mathcal{X})^n$ be arbitrarily given. Under Assumptions 2 and 3, the matrix $J_r^{(3)}(w,\dot{x})$ is positive semi-definite for $\Lambda_r$ defined as in Equation 8.

Proof. By Assumption 3, either both players have equal instance-specific costs, or the partial gradient $\nabla_{\dot{x}_i} \hat{\Omega}_{+1}(x,\dot{x})$ of the sender's regularizer is independent of $\dot{x}_j$ for all $j \neq i$ and $i = 1,\ldots,n$. Let us consider the first case where $c_{-1,i} = c_{+1,i}$, and consequently $r_i = 1$, for all $i = 1,\ldots,n$, such that

$$J_r^{(3)}(w,\dot{x}) = \begin{bmatrix} \rho_{-1}\nabla^2_{w,w}\hat{\Omega}_{-1}(w) - \rho_{-1}\lambda_{-1} I_m & 0 \\ 0 & \rho_{+1}\nabla^2_{\dot{x},\dot{x}}\hat{\Omega}_{+1}(x,\dot{x}) - \rho_{+1}\lambda_{+1} I_{m \cdot n} \end{bmatrix}.$$

The eigenvalues of this block diagonal matrix are the eigenvalues of the matrix $\rho_{-1}\left(\nabla^2_{w,w}\hat{\Omega}_{-1}(w) - \lambda_{-1} I_m\right)$ together with those of $\rho_{+1}\left(\nabla^2_{\dot{x},\dot{x}}\hat{\Omega}_{+1}(x,\dot{x}) - \lambda_{+1} I_{m \cdot n}\right)$. From the definition of $\lambda_v$ in (11) and (12) it follows that these matrices are positive semi-definite for $v \in \{-1,+1\}$. Hence, $J_r^{(3)}(w,\dot{x})$ is positive semi-definite as well.

Now, let us consider the second case where we assume that $\nabla_{\dot{x}_i} \hat{\Omega}_{+1}(x,\dot{x})$ is independent of $\dot{x}_j$ for all $j \neq i$. Hence, $\nabla^2_{\dot{x}_i,\dot{x}_j} \hat{\Omega}_{+1}(x,\dot{x}) = 0$ for all $j \neq i$ such that

$$J_r^{(3)}(w,\dot{x}) = \begin{bmatrix} \rho_{-1}\tilde{\Omega}_{-1} & 0 & \cdots & 0 \\ 0 & \rho_{+1}\frac{c_{-1,1}}{c_{+1,1}}\tilde{\Omega}_{+1,1} & \cdots & 0 \\ \vdots & \vdots & \ddots & \vdots \\ 0 & 0 & \cdots & \rho_{+1}\frac{c_{-1,n}}{c_{+1,n}}\tilde{\Omega}_{+1,n} \end{bmatrix},$$

where $\tilde{\Omega}_{-1} := \nabla^2_{w,w}\hat{\Omega}_{-1}(w) - \lambda_{-1} I_m$ and $\tilde{\Omega}_{+1,i} = \nabla^2_{\dot{x}_i,\dot{x}_i}\hat{\Omega}_{+1}(x,\dot{x}) - \lambda_{+1} I_m$. The eigenvalues of this block diagonal matrix are again the union of the eigenvalues of the single blocks $\rho_{-1}\tilde{\Omega}_{-1}$ and $\rho_{+1}\frac{c_{-1,i}}{c_{+1,i}}\tilde{\Omega}_{+1,i}$ for $i = 1,\ldots,n$. As in the first part of the proof, $\tilde{\Omega}_{-1}$ is positive semi-definite. The eigenvalues of $\nabla^2_{\dot{x},\dot{x}}\hat{\Omega}_{+1}(x,\dot{x})$ are the union of all eigenvalues of $\nabla^2_{\dot{x}_i,\dot{x}_i}\hat{\Omega}_{+1}(x,\dot{x})$. Hence, each of these eigenvalues is greater than or equal to $\lambda_{+1}$ and thus, each block $\tilde{\Omega}_{+1,i}$ is positive semi-definite. The factors $\rho_{-1} > 0$ and $\rho_{+1}\frac{c_{-1,i}}{c_{+1,i}} > 0$ are multipliers that do not affect the definiteness of the blocks, and consequently, $J_r^{(3)}(w,\dot{x})$ is positive semi-definite as well.

The previous results guarantee the existence and uniqueness of a Nash equilibrium under the

stated assumptions.

Theorem 8 Let Assumptions 2 and 3 hold. Then the Nash prediction game in (3) has a unique

equilibrium.

Proof. The existence of an equilibrium of the Nash prediction game in (3) follows from Lemma 1. Proposition 4 and Lemmas 5 to 7 imply that there is a positive diagonal matrix $\Lambda_r$ such that $J_r(w,\dot{x})$ is positive definite for all $(w,\dot{x}) \in \mathcal{W} \times \phi(\mathcal{X})^n$. Hence, the uniqueness follows from Lemma 2.


3.2.4 PRACTICAL IMPLICATIONS OF ASSUMPTIONS 2 AND 3

Theorem 8 guarantees the uniqueness of the equilibrium only if the cost functions of learner and

data generator relate in a certain way that is defined by Assumption 3. In addition, each of the

cost functions has to satisfy Assumption 2. This section discusses the practical implication of these

assumptions.

The conditions of Assumption 2 impose rather technical limitations on the cost functions. The requirement of convexity is quite ordinary in the machine learning context. In addition, the loss function has to be twice continuously differentiable, which restricts the family of eligible loss functions. However, this condition can still be met easily; for instance, by smoothed versions of the hinge loss. The second requirement of uniformly strongly convex and twice continuously differentiable regularizers is, again, only a weak restriction in practice. These requirements are met by standard regularizers; they occur, for instance, in the optimization criteria of SVMs and logistic regression. The requirement of non-empty, compact, and convex action spaces may be a restriction when dealing with binary or multinomial attributes. However, relaxing the action spaces of the data generator would typically result in a strategy that is more defensive than would be optimal but still less defensive than a worst-case strategy.

The first condition of Assumption 3 requires the cost functions of learner and data generator to have the same curvatures. This is a crucial restriction; if the cost functions differ arbitrarily, the Nash equilibrium may not be unique. The requirement of identical curvatures is met, for instance, if one player chooses a loss function $\ell(f_w(\dot{x}_i),y)$ which only depends on the term $y f_w(\dot{x}_i)$, such as for SVM's hinge loss or the logistic loss. In this case, the condition is met when the other player chooses $\ell(-f_w(\dot{x}_i),y)$. This loss is in some sense the opposite of $\ell(f_w(\dot{x}_i),y)$ as it approaches zero when the other goes to infinity and vice versa. In this case, the cost functions may still be non-antagonistic because the players' cost functions may contain instance-specific cost factors $c_{v,i}$ that can be modeled independently for the players.

The second part of Assumption 3 couples the degree of regularization of the players. If the data generator produces instances at application time that differ greatly from the instances at training time, then the learner is required to regularize strongly for a unique equilibrium to exist. If the distributions at training and application time are more similar, the equilibrium is unique for smaller values of the learner's regularization parameters. This requirement is in line with the intuition that when the training instances are a poor approximation of the distribution at application time, then imposing only weak regularization on the loss function will result in a poor model.

The final requirement of Assumption 3 is, again, rather a technical limitation. It states that the interdependencies between the players' instance-specific costs must be either captured by the regularizers, leading to a full Hessian, or by cost factors. These cost factors of learner and data generator may differ arbitrarily if the gradient of the data generator's costs of transforming an instance $x_i$ into $\dot{x}_i$ is independent of all other instances $\dot{x}_j$ with $j \neq i$. This is met, for instance, by cost models that only depend on some measure of the distance between $x_i$ and $\dot{x}_i$.

4. Finding the Unique Nash Equilibrium

According to Theorem 8, a unique equilibrium of the Nash prediction game in (3) exists for suitable loss functions and regularizers. To find this equilibrium, we derive and study two distinct methods: The first is based on the Nikaido-Isoda function that is constructed such that a minimax solution of this function is an equilibrium of the Nash prediction game and vice versa. This problem is then solved by inexact linesearch. In the second approach, we reformulate the Nash prediction game into a variational inequality problem which is solved by a modified extragradient method.

The data generator's action of transforming the input distribution manifests in a concatenation of transformed training instances $\dot{x} \in \phi(\mathcal{X})^n$ mapped into the feature space $\dot{\mathbf{x}}_i := \phi(\dot{x}_i)$ for $i = 1,\ldots,n$, and the learner's action is to choose weight vector $w \in \mathcal{W}$ of classifier $h(x) = \mathrm{sign}\, f_w(x)$ with linear decision function $f_w(x) = w^T \phi(x)$.

4.1 An Inexact Linesearch Approach

To solve for a Nash equilibrium, we again consider the game from (4) with one learner and $n$ data generators. A solution of this game can be identified with the help of the weighted Nikaido-Isoda function (Equation 19). For any two combinations of actions $(w,\dot{x}) \in \mathcal{W} \times \phi(\mathcal{X})^n$ and $(w',\dot{x}') \in \mathcal{W} \times \phi(\mathcal{X})^n$ with $\dot{x} = \left[\dot{x}_1^T,\ldots,\dot{x}_n^T\right]^T$ and $\dot{x}' = \left[\dot{x}_1'^T,\ldots,\dot{x}_n'^T\right]^T$, this function is the weighted sum of relative cost savings that the $n+1$ players can enjoy by changing from strategy $w$ to $w'$ and $\dot{x}_i$ to $\dot{x}_i'$, respectively, while the other players continue to play according to $(w,\dot{x})$; that is,

$$\vartheta_r(w,\dot{x},w',\dot{x}') := r_0\left(\hat{\theta}_{-1}(w,\dot{x}) - \hat{\theta}_{-1}(w',\dot{x})\right) + \sum_{i=1}^n r_i\left(\hat{\theta}_{+1}(w,\dot{x}) - \hat{\theta}_{+1}(w,\dot{x}^{(i)})\right), \tag{19}$$

where $\dot{x}^{(i)} := \left[\dot{x}_1^T,\ldots,\dot{x}_i'^T,\ldots,\dot{x}_n^T\right]^T$. Let us denote the weighted sum of greatest possible cost savings with respect to any given combination of actions $(w,\dot{x}) \in \mathcal{W} \times \phi(\mathcal{X})^n$ by

$$\bar{\vartheta}_r(w,\dot{x}) := \max_{(w',\dot{x}') \in \mathcal{W} \times \phi(\mathcal{X})^n} \vartheta_r(w,\dot{x},w',\dot{x}'), \tag{20}$$

where $\bar{w}(w,\dot{x})$, $\bar{x}(w,\dot{x})$ denotes the corresponding pair of maximizers. Note that the maximum in (20) is attained for any $(w,\dot{x})$, since $\mathcal{W} \times \phi(\mathcal{X})^n$ is assumed to be compact and $\vartheta_r(w,\dot{x},w',\dot{x}')$ is continuous in $(w',\dot{x}')$.

By these definitions, a combination $(w^*,\dot{x}^*)$ is an equilibrium of the Nash prediction game if, and only if, $\bar{\vartheta}_r(w^*,\dot{x}^*)$ is a global minimum of mapping $\bar{\vartheta}_r$ with $\bar{\vartheta}_r(w^*,\dot{x}^*) = 0$ for any fixed weights $r_i > 0$ and $i = 0,\ldots,n$, see Proposition 2.1(b) of von Heusinger and Kanzow (2009). Equivalently, a Nash equilibrium simultaneously satisfies both equations $\bar{w}(w^*,\dot{x}^*) = w^*$ and $\bar{x}(w^*,\dot{x}^*) = \dot{x}^*$.

The significance of this observation is that the equilibrium problem in (3) can be reformulated into a minimization problem of the continuous mapping $\bar{\vartheta}_r(w,\dot{x})$. To solve this minimization problem, we make use of Corollary 3.4 (von Heusinger and Kanzow, 2009). We set the weights $r_0 := 1$ and $r_i := \frac{c_{-1,i}}{c_{+1,i}}$ for all $i = 1,\ldots,n$ as in (8), which ensures the main condition of Corollary 3.4; that is, the positive definiteness of the Jacobian $J_r(w,\dot{x})$ in (13) (cf. proof of Theorem 8). According to this corollary, vectors

$$d_{-1}(w,\dot{x}) := \bar{w}(w,\dot{x}) - w \quad \text{and} \quad d_{+1}(w,\dot{x}) := \bar{x}(w,\dot{x}) - \dot{x}$$

form a descent direction $d(w,\dot{x}) := \left[d_{-1}(w,\dot{x})^T, d_{+1}(w,\dot{x})^T\right]^T$ of $\bar{\vartheta}_r(w,\dot{x})$ at any position $(w,\dot{x}) \in \mathcal{W} \times \phi(\mathcal{X})^n$ (except for the Nash equilibrium where $d(w^*,\dot{x}^*) = 0$), and consequently, there exists $t \in [0,1]$ such that

$$\bar{\vartheta}_r\left(w + t\,d_{-1}(w,\dot{x}),\ \dot{x} + t\,d_{+1}(w,\dot{x})\right) < \bar{\vartheta}_r(w,\dot{x}).$$

Since $(w,\dot{x})$ and $(\bar{w}(w,\dot{x}), \bar{x}(w,\dot{x}))$ are feasible combinations of actions, the convexity of the action spaces ensures that $(w + t\,d_{-1}(w,\dot{x}),\ \dot{x} + t\,d_{+1}(w,\dot{x}))$ is a feasible combination for any $t \in [0,1]$ as well. The following algorithm exploits these properties.


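Algorithm 1 ILS: Inexact Linesearch Solver for Nash Prediction Games

Require: Cost functions $\hat{\theta}_v$ as defined in (1) and (2), and action spaces $\mathcal{W}$ and $\phi(\mathcal{X})^n$.

1: Select initial $w^{(0)} \in \mathcal{W}$, set $\dot{x}^{(0)} := x$, set $k := 0$, and select $\sigma \in (0,1)$ and $\beta \in (0,1)$.

2: Set $r_0 := 1$ and $r_i := \frac{c_{-1,i}}{c_{+1,i}}$ for all $i = 1,\ldots,n$.

3: repeat

4: Set $d_{-1}^{(k)} := \bar{w}^{(k)} - w^{(k)}$ where $\bar{w}^{(k)} := \operatorname{argmax}_{w' \in \mathcal{W}} \vartheta_r\left(w^{(k)},\dot{x}^{(k)},w',\dot{x}^{(k)}\right)$.

5: Set $d_{+1}^{(k)} := \bar{x}^{(k)} - \dot{x}^{(k)}$ where $\bar{x}^{(k)} := \operatorname{argmax}_{\dot{x}' \in \phi(\mathcal{X})^n} \vartheta_r\left(w^{(k)},\dot{x}^{(k)},w^{(k)},\dot{x}'\right)$.

6: Find maximal step size $t^{(k)} \in \left\{\beta^l \mid l \in \mathbb{N}\right\}$ with

$$\bar{\vartheta}_r\left(w^{(k)},\dot{x}^{(k)}\right) - \bar{\vartheta}_r\left(w^{(k)} + t^{(k)} d_{-1}^{(k)},\ \dot{x}^{(k)} + t^{(k)} d_{+1}^{(k)}\right) \geq \sigma t^{(k)} \left(\left\|d_{-1}^{(k)}\right\|_2^2 + \left\|d_{+1}^{(k)}\right\|_2^2\right).$$

7: Set $w^{(k+1)} := w^{(k)} + t^{(k)} d_{-1}^{(k)}$.

8: Set $\dot{x}^{(k+1)} := \dot{x}^{(k)} + t^{(k)} d_{+1}^{(k)}$.

9: Set $k := k + 1$.

10: until $\left\|w^{(k)} - w^{(k-1)}\right\|_2^2 + \left\|\dot{x}^{(k)} - \dot{x}^{(k-1)}\right\|_2^2 \leq \varepsilon$.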

The convergence properties of Algorithm 1 are discussed by von Heusinger and Kanzow (2009),

so we skip the details here.
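The following is a minimal Python sketch of the control flow of Algorithm 1 on a toy problem, not an implementation of the Nash prediction game itself. It assumes a hypothetical two-player game with scalar actions in $[-5, 5]$, quadratic costs with made-up coefficients, and weights $r_0 = r_1 = 1$, so that the maximizers in lines 4 and 5 are available in closed form as clipped best responses. The step sizes are searched over $\beta^l$ starting at $l = 0$, which is an assumption about the convention for $\mathbb{N}$. All names are illustrative.

```python
import numpy as np

Q_W, K_W = 2.0, 1.0    # learner cost:   0.5*Q_W*w^2 + K_W*w*x - w
Q_X, K_X = 2.0, 0.5    # generator cost: 0.5*Q_X*x^2 - K_X*w*x + x
BOUND = 5.0            # both action spaces are the interval [-BOUND, BOUND]

def cost_learner(w, x):
    return 0.5 * Q_W * w**2 + K_W * w * x - w

def cost_generator(w, x):
    return 0.5 * Q_X * x**2 - K_X * w * x + x

def best_responses(w, x):
    """Maximizers of the Nikaido-Isoda function for the toy costs (lines 4 and 5)."""
    w_bar = np.clip((1.0 - K_W * x) / Q_W, -BOUND, BOUND)
    x_bar = np.clip((K_X * w - 1.0) / Q_X, -BOUND, BOUND)
    return w_bar, x_bar

def gap(w, x):
    """Sum of greatest possible cost savings, cf. (20), with unit weights."""
    w_bar, x_bar = best_responses(w, x)
    return (cost_learner(w, x) - cost_learner(w_bar, x)
            + cost_generator(w, x) - cost_generator(w, x_bar))

def ils(w=0.0, x=0.0, sigma=0.1, beta=0.5, eps=1e-12, max_iter=500):
    for _ in range(max_iter):
        w_bar, x_bar = best_responses(w, x)
        d_w, d_x = w_bar - w, x_bar - x
        sq_norm = d_w**2 + d_x**2
        t = 1.0
        # Line 6: backtrack until the sufficient-decrease condition holds.
        while (gap(w, x) - gap(w + t * d_w, x + t * d_x) < sigma * t * sq_norm
               and t > 1e-12):
            t *= beta
        w_new, x_new = w + t * d_w, x + t * d_x
        done = (w_new - w) ** 2 + (x_new - x) ** 2 <= eps   # line 10
        w, x = w_new, x_new
        if done:
            break
    return w, x

w_star, x_star = ils()
```

For these coefficients the interior equilibrium solves $2w + x = 1$ and $-0.5w + 2x = -1$, that is, $w = 2/3$ and $x = -1/3$, which the iteration approaches. In the actual game the two inner maximizations are concave problems without closed-form solutions and would require a numerical solver.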

4.2 A Modified Extragradient Approach

In Algorithm 1, lines 4 and 5, as well as the linesearch in line 6, require solving a concave maximization problem within each iteration. As this may become computationally demanding, we derive a second approach based on extragradient descent. To this end, instead of reformulating the equilibrium problem into a minimax problem, we directly address the first-order optimality conditions of each player's minimization problem in (4): Under Assumption 2, a combination of actions $(w^*,\dot{x}^*)$ with $\dot{x}^* = \left[\dot{x}_1^{*T},\ldots,\dot{x}_n^{*T}\right]^T$ satisfies each player's first-order optimality conditions if, and only if, for all $(w,\dot{x}) \in \mathcal{W} \times \phi(\mathcal{X})^n$ the following inequalities hold,

$$\nabla_w \hat{\theta}_{-1}(w^*,\dot{x}^*)^T (w - w^*) \geq 0,$$

$$\nabla_{\dot{x}_i} \hat{\theta}_{+1}(w^*,\dot{x}^*)^T (\dot{x}_i - \dot{x}_i^*) \geq 0 \quad \forall i = 1,\ldots,n.$$

As the joint action space of all players W ×φ(X )

n

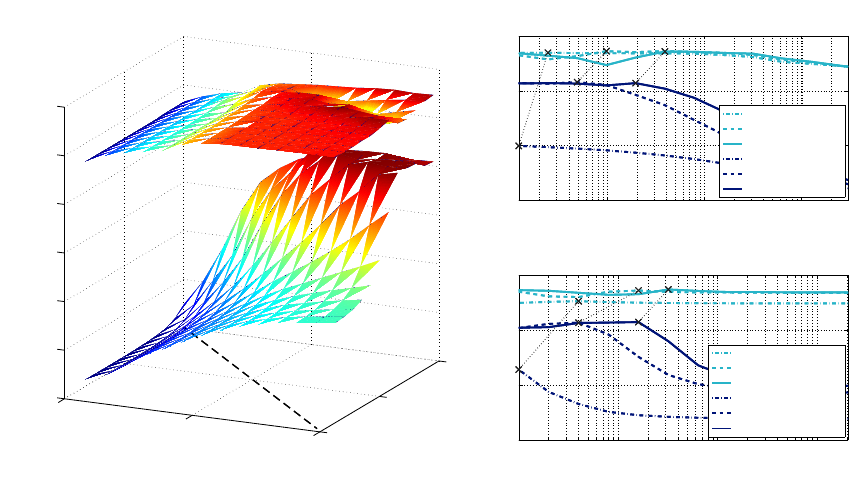

is precisely the full Cartesian product of the