Withdrawn Draft

1

2

3

Warning Notice

The attached draft document has been withdrawn, and is provided solely for historical purposes.

It has been superseded by the document identified below.

Withdrawal Date

July 26, 2024

Original Release Date

April 29, 2024

4

5

6

Superseding Document

Status

Final

Series/Number

NIST AI 600-1

Title

Artificial Intelligence Risk Management Framework: Generative

Artificial Intelligence Profile

Publication Date

July 2024

DOI

https://doi.org/10.6028/NIST.AI.600-1

7

8

9

10

ii

NIST AI 600-1

1

Inial Public Dra

2

3

Artificial Intelligence Risk

4

Management Framework:

5

Generative Artificial Intelligence

6

Profile

7

8

9

10

This publication is available free of charge from:

11

[DOI link TK]

12

13

April 2024

14

15

16

17

18

19

20

21

22

23

iii

NIST AI 600-1

1

Inial Public Dra

2

3

Arcial Intelligence Risk Management

4

Framework: Generave Arcial

5

Intelligence Prole

6

7

8

This publicaon is available free of charge from:

9

[DOI link TK]

10

11

12

13

14

15

16

17

18

19

20

21

iv

NIST makes the following notes regarding this document:

• NIST plans to host this document on the NIST AIRC once nal, where organizaons can query

acons based on keywords and risks.

NIST specically welcomes feedback on the following topics:

• Glossary Terms: NIST will add a glossary to this document with novel keywords. NIST

welcomes idencaon of terms to include in the glossary.

• Risk List: Whether the document should further sort or categorize the 12 risks identified (i.e.,

between technical / model risks, misuse by humans, or ecosystem / societal risks).

• Acons: Whether certain acons could be combined, condensed, or further categorized; and

feedback on the risks associated with certain acons.

Comments on NIST AI 600-1 may be sent electronically to NIST-AI-600[email protected] with “NIST AI 600-1”

in the subject line or submied via www.regulaons.gov (enter NIST-2024-0001 in the search eld.)

Comments containing informaon in response to this noce must be received on or before June 2,

2024, at 11:59 PM Eastern Time.

1

v

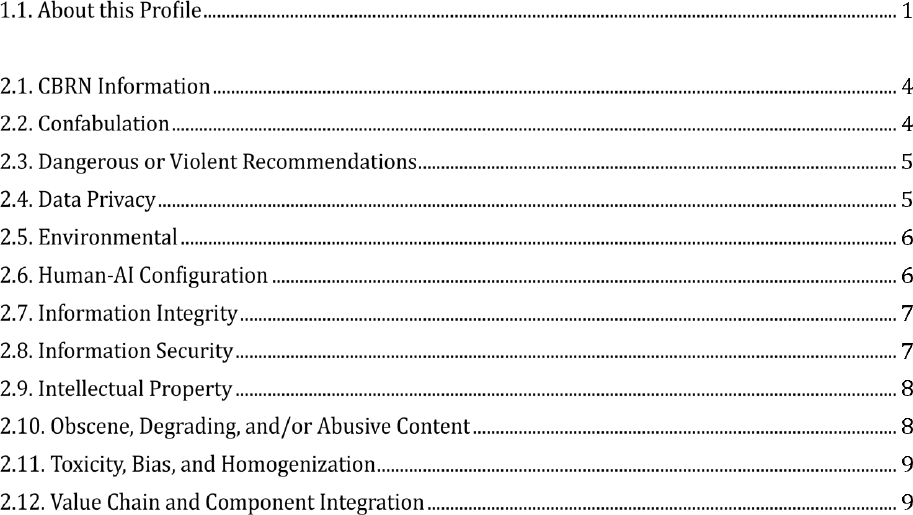

Table of Contents

1

1. Introduction ........................................................................................................................................................ 1

2

3

2. Overview of Risks Unique to or Exacerbated by GAI ............................................................................ 2

4

5

6

7

8

9

10

11

12

13

14

15

16

3. Actions to Manage GAI Risks ...................................................................................................................... 10

17

Appendix A. Primary GAI Consideraons ........................................................................................................ 62

18

Appendix B. References .................................................................................................................................... 69

19

20

21

Acknowledgments: This report was accomplished with the many helpful comments and contribuons

22

from the community, including the NIST Generave AI Public Working Group, and NIST sta and guest

23

researchers: Chloe Auo, Patrick Hall, Shomik Jain, Reva Schwartz, Marn Stanley, Kamie Roberts, and

24

Elham Tabassi.

25

Disclaimer: Certain commercial enes, equipment, or materials may be idened in this document in

26

order to adequately describe an experimental procedure or concept. Such idencaon is not intended to

27

imply recommendaon or endorsement by the Naonal Instute of Standards and Technology, nor is it

28

intended to imply that the enes, materials, or equipment are necessarily the best available for the

29

purpose. Any menon of commercial, non-prot, academic partners, or their products, or references is

30

for informaon only; it is not intended to imply endorsement or recommendaon by any U.S.

31

Government agency.

32

1

1. Introducon

1

This document is a companion resource for Generave AI

1

to the AI Risk Management Framework (AI

2

RMF), pursuant to President Biden’s Execuve Order (EO) 14110 on Safe, Secure, and Trustworthy

3

Arcial Intelligence.

2

The AI RMF was released in January 2023, and is intended for voluntary use and to

4

improve the ability of organizaons to incorporate trustworthiness consideraons into the design,

5

development, use, and evaluaon of AI products, services, and systems.

6

This companion resource also serves as both a use-case and cross-sectoral prole of the AI RMF 1.0.

7

Such proles assist organizaons in deciding how they might best manage AI risk in a manner that is

8

well-aligned with their goals, considers legal/regulatory requirements and best pracces, and reects

9

risk management priories.

10

Use-case proles are implementaons of the AI RMF funcons, categories, and subcategories for a

11

specic seng or applicaon – in this case, Generave AI (GAI) – based on the requirements, risk

12

tolerance, and resources of the Framework user. Consistent with other AI RMF Proles, this prole oers

13

insights into how risk can be managed across various stages of the AI lifecycle and for GAI as a

14

technology.

15

As GAI covers risks of models or applicaons that can be used across use cases or sectors, this document

16

is also an AI RMF cross-sectoral prole. Cross-sectoral proles can be used to govern, map, measure, and

17

manage risks associated with acvies or business processes common across sectors such as the use of

18

large language models, cloud-based services, or acquision.

19

This work was informed by public feedback and consultaons with diverse stakeholder groups as part of

20

NIST’s Generave AI Public Working Group (GAI PWG). The GAI PWG was a consensus-driven, open,

21

transparent, and collaborave process facilitated via virtual workspace to obtain mulstakeholder input

22

and insight on GAI risk management, and inform NIST’s approach. This document was also informed by

23

public comments and consultaons as a result of a Request for Informaon (RFI) and presents

24

informaon in a style adapted from the NIST AI RMF Playbook.

25

About this Prole

26

This prole denes a group of risks that are novel to or exacerbated by the use of GAI. These risks were

27

likewise idened by the GAI PWG:

28

1. CBRN Informaon

29

1

Generave AI can be dened by EO 14110 as “the class of AI models that emulate the structure and

characteriscs of input data in order to generate derived synthec content. This can include images, videos, audio,

text, and other digital content.” While not all GAI is based in foundaon models, for purposes of this document,

GAI generally refers to generave dual-use foundaon models, dened by EO 14110 as “an AI model that is trained

on broad data; generally uses self-supervision; contains at least tens of billions of parameters; is applicable across

a wide range of contexts.”

2

Secon 4.1(a)(i)(A) of EO 14110 directs the Secretary of Commerce, acng through the Director of the Naonal

Instute of Standards and Technology (NIST), to develop a companion resource to the AI RMF, NIST AI 100–1, for

generave AI.

2

2. Confabulaon

1

3. Dangerous or Violent Recommendaons

2

4. Data Privacy

3

5. Environmental

4

6. Human-AI Conguraon

5

7. Informaon Integrity

6

8. Informaon Security

7

9. Intellectual Property

8

10. Obscene, Degrading, and/or Abusive Content

9

11. Toxicity, Bias, and Homogenizaon

10

12. Value Chain and Component Integraon

11

12

Aer introducing and describing these risks, the document provides a set of acons to help organizaons

13

govern, map, measure, and manage these risks.

14

2. Overview of Risks Unique to or Exacerbated by GAI

15

AI risks can dier from or intensify tradional soware risks. Likewise, GAI can exacerbate exisng AI

16

risks, and creates unique risks.

17

GAI risks may arise across the enre AI lifecycle, from problem formulaon, to development and

18

decommission. They may present at system level or at the ecosystem level – outside of system or

19

organizaonal contexts (e.g., the eect of disinformaon on social instuons, GAI impacts on the

20

creave economies or labor markets, algorithmic monocultures). They may occur abruptly or unfold

21

across extended periods (e.g., societal or economic impacts due to loss of individual agency or increasing

22

inequality).

23

Organizaons may choose to measure these risks and allocate risk management resources relave to

24

where and how these risks manifest, their direct and material impacts, and failure modes. Migaons for

25

system level risks may vary from ecosystem level risks. The ongoing review of relevant literature and

26

resources can enable documentaon and measurement of ecosystem-level or longitudinal risks.

27

Importantly, some GAI risks are unknown, and are therefore dicult to properly scope or evaluate given

28

the uncertainty about potenal GAI scale, complexity, and capabilies. Other risks may be known but

29

dicult to esmate given the wide range of GAI stakeholders, uses, inputs, and outputs. Challenges with

30

risk esmaon are aggravated by a lack of visibility into GAI training data, and the generally immature

31

state of the science of AI measurement and safety today.

32

To guide organizaons in idenfying and managing GAI risks, a set of risks unique to or exacerbated by

33

GAI are dened below. These risks provide a clear lens through which organizaons can frame and

34

execute risk management eorts, and will be updated as the GAI landscape evolves.

35

1. CBRN Informaon: Lowered barriers to entry or eased access to materially nefarious

36

informaon related to chemical, biological, radiological, or nuclear (CBRN) weapons, or other

37

dangerous biological materials.

38

3

2. Confabulaon: The producon of condently stated but erroneous or false content (known

1

colloquially as “hallucinaons” or “fabricaons”).

3

2

3. Dangerous or Violent Recommendaons: Eased producon of and access to violent, incing,

3

radicalizing, or threatening content as well as recommendaons to carry out self-harm or

4

conduct criminal or otherwise illegal acvies.

5

4. Data Privacy: Leakage and unauthorized disclosure or de-anonymizaon of biometric, health,

6

locaon, personally idenable, or other sensive data.

7

5. Environmental: Impacts due to high resource ulizaon in training GAI models, and related

8

outcomes that may result in damage to ecosystems.

9

6. Human-AI Conguraon: Arrangement or interacon of humans and AI systems which can result

10

in algorithmic aversion, automaon bias or over-reliance, misalignment or mis-specicaon of

11

goals and/or desired outcomes, decepve or obfuscang behaviors by AI systems based on

12

programming or ancipated human validaon, anthropomorphizaon, or emoonal

13

entanglement between humans and GAI systems; or abuse, misuse, and unsafe repurposing by

14

humans.

15

7. Informaon Integrity: Lowered barrier to entry to generate and support the exchange and

16

consumpon of content which may not be veed, may not disnguish fact from opinion or

17

acknowledge uncertaines, or could be leveraged for large-scale dis- and mis-informaon

18

campaigns.

19

8. Informaon Security: Lowered barriers for oensive cyber capabilies, including ease of security

20

aacks, hacking, malware, phishing, and oensive cyber operaons through accelerated

21

automated discovery and exploitaon of vulnerabilies; increased available aack surface for

22

targeted cyber aacks, which may compromise the condenality and integrity of model

23

weights, code, training data, and outputs.

24

9. Intellectual Property: Eased producon of alleged copyrighted, trademarked, or licensed

25

content used without authorizaon and/or in an infringing manner; eased exposure to trade

26

secrets; or plagiarism or replicaon with related economic or ethical impacts.

27

10. Obscene, Degrading, and/or Abusive Content: Eased producon of and access to obscene,

28

degrading, and/or abusive imagery, including synthec child sexual abuse material (CSAM), and

29

nonconsensual inmate images (NCII) of adults.

30

11. Toxicity, Bias, and Homogenizaon: Diculty controlling public exposure to toxic or hate

31

speech, disparaging or stereotyping content; reduced performance for certain sub-groups or

32

languages other than English due to non-representave inputs; undesired homogeneity in data

33

inputs and outputs resulng in degraded quality of outputs.

34

12. Value Chain and Component Integraon: Non-transparent or untraceable integraon of

35

upstream third-party components, including data that has been improperly obtained or not

36

3

We note that the terms “hallucinaon” and “fabricaon” can anthropomorphize GAI, which itself is a risk related

to GAI systems as it can inappropriately aribute human characteriscs to non-human enes.

4

cleaned due to increased automaon from GAI; improper supplier veng across the AI lifecycle;

1

or other issues that diminish transparency or accountability for downstream users.

2

CBRN Informaon

3

In the coming years, GAI may increasingly facilitate eased access to informaon related to CBRN hazards.

4

CBRN informaon is already publicly accessible, but the use of chatbots could facilitate its analysis or

5

synthesis for non-experts. For example, red teamers were able to prompt GPT-4 to provide general

6

informaon on unconvenonal CBRN weapons, including common proliferaon pathways, potenally

7

vulnerable targets, and informaon on exisng biochemical compounds, in addion to equipment and

8

companies that could build a weapon. These capabilies might increase the ease of research for

9

adversarial users and be especially useful to malicious actors looking to cause biological harms without

10

formal scienc training. However, despite these enhanced capabilies, the physical synthesis and

11

successful use of chemical or biological agents will connue to require both applicable experse and

12

supporng infrastructure.

13

Other research on this topic indicates that the current generaon of LLMs do not have the capability to

14

plan a biological weapons aack: LLM outputs regarding biological aack planning were observed to be

15

not more sophiscated than outputs from tradional search engine queries, suggesng that exisng

16

LLMs may not dramacally increase the operaonal risk of such an aack.

17

Separately, chemical and biological design tools – highly specialized AI systems trained on biological data

18

which can help design proteins or other agents – may be able to predict and generate novel structures

19

that are not in the training data of text-based LLMs. For instance, an AI system might be able to generate

20

informaon or infer how to create novel biohazards or chemical weapons, posing risks to society or

21

naonal security since such informaon is not likely to be publicly available.

22

While some of these capabilies lie beyond the capability of exisng GAI tools, the ability of models to

23

facilitate CBRN weapons planning and GAI systems’ connecon or access to relevant data and tools

24

should be carefully monitored.

25

Confabulaon

26

“Confabulaon” refers to a phenomenon in which GAI systems generate and condently present

27

erroneous or false content to meet the programmed objecve of fullling a user’s prompt.

28

Confabulaons are not an inherent flaw of language models themselves, but are instead the result of

29

GAI pre-training involving next word predicon. For example, an LLM may generate content that deviates

30

from the truth or facts, such as mistaking people, places, or other details of historical events. Legal

31

confabulaons have been shown to be pervasive in current state-of-the-art LLMs. Confabulaons also

32

include generated outputs that diverge from the source input, or contradict previously generated

33

statements in the same context. This phenomenon is also referred to as “hallucinaon” or “fabricaon,”

34

but some have noted that these characterizaons imply consciousness and intenonal deceit, and

35

thereby inappropriately anthropomorphize GAI.

36

Risks from confabulaons may arise when users believe false content due to the condent nature of the

37

response, or the logic or citaons accompanying the response, leading users to act upon or promote the

38

false informaon. For instance, LLMs may somemes provide logical steps of how they arrived at an

39

5

answer even when the answer itself is incorrect. This poses a risk for many real-world applicaons, such

1

as in healthcare, where a confabulated summary of paent informaon reports could cause doctors to

2

make incorrect diagnoses and/or recommend the wrong treatments. While the research above indicates

3

confabulated content is abundant, it is dicult to esmate the downstream scale and impact of

4

confabulated content today.

5

Dangerous or Violent Recommendaons

6

GAI systems can produce output or recommendaons that are incing, radicalizing, threatening, or that

7

glorify violence. LLMs have been reported to generate dangerous or violent content, and some models

8

have even generated aconable instrucons on dangerous or unethical behavior, including how to

9

manipulate people and conduct acts of terrorism. Text-to-image models also make it easy to create

10

unsafe images that could be used to promote dangerous or violent messages, depict manipulated

11

scenes, or other harmful content. Similar risks are present for other media, including video and audio.

12

GAI may produce content that recommends self-harm or criminal/illegal acvies. For some dangerous

13

queries, many current systems restrict model outputs in response to certain prompts, but this approach

14

may sll produce harmful recommendaons in response to other less-explicit, novel queries, or

15

jailbreaking (i.e., manipulang prompts to circumvent output controls). Studies have observed that a

16

non-negligible number of user conversaons with chatbots reveal mental health issues among the users

17

– and that current systems are unequipped or unable to respond appropriately or direct these users to

18

the help they may need.

19

Data Privacy

20

GAI systems implicate numerous risks to privacy. Models may leak, generate, or correctly infer sensive

21

informaon about individuals such as biometric, health, locaon, or other personally idenable

22

informaon (PII). For example, during adversarial aacks, LLMs have revealed private or sensive

23

informaon (from in the public domain) that was included in their training data. This informaon

24

included phone numbers, code, conversaons and 128-bit universally unique ideners extracted

25

verbam from just one document in the training data. This problem has been referred to as data

26

memorizaon.

27

GAI system training requires large volumes of data, oen collected from millions of publicly available

28

sources. When involving personal data, this pracce raises risks to widely accepted privacy principles,

29

including to transparency, individual parcipaon (including consent), and purpose specicaon. Most

30

model developers do not disclose specic data sources (if any) on which models were trained. Unless

31

training data is available for inspecon, there is generally no way for consumers to know what kind of PII

32

or other sensive material may have been used to train GAI models. These pracces also pose risks to

33

compliance with exisng privacy regulaons.

34

GAI models may be able to correctly infer PII that was not in their training data nor disclosed by the user,

35

by stching together informaon from a variety of disparate sources. This might include automacally

36

inferring aributes about individuals, including those the individual might consider sensive (like

37

locaon, gender, age, or polical leanings).

38

6

Wrong and inappropriate inferences of PII based on available data can contribute to harmful bias and

1

discriminaon. For example, GAI models can output informaon based on predicve inferences beyond

2

what users openly disclose, and these insights might be used by the model, other systems, or individuals

3

to undermine privacy or make adverse decisions – including discriminatory decisions – about the

4

individual. These types of harms already occur in non-generave algorithmic systems that make

5

predicve inferences, such as the example in which online adversers inferred that a consumer was

6

pregnant before her own family members knew. Based on their access to many data sources, GAI

7

systems might further improve the accuracy of inferences on private data, increasing the likelihood of

8

sensive data exposure or harm. Inferences about private informaon pose a risk even if they are not

9

accurate (e.g., confabulaons), especially if they reveal informaon the individual considers sensive or

10

are used to disadvantage or harm them.

11

Environmental

12

The training, maintenance, and deployment (inference) of GAI systems are resource intensive, with

13

potenally large energy and environmental footprints. Energy and carbon emissions vary based on types

14

of GAI model development acvies (i.e., pre-training, ne-tuning, inference), modality, hardware used,

15

and type of task or applicaon.

16

Esmates suggest that training a single GAI transformer model can emit as much carbon as 300 round-

17

trip ights between San Francisco and New York. In a study comparing energy consumpon and carbon

18

emissions for LLM inference, generave tasks (i.e., text summarizaon) were found to be more energy

19

and carbon intensive then discriminave or non-generave tasks.

20

Methods for training smaller models, such as model disllaon or compression, can reduce

21

environmental impacts at inference me, but may sll contribute to large environmental impacts for

22

hyperparameter tuning and training.

23

Human-AI Conguraon

24

Human-AI conguraons involve varying levels of automaon and human-AI interacons. Each setup can

25

contribute to risks for abuse, misuse, and unsafe repurposing by humans, and it is dicult to esmate

26

the scale of those risks. While AI systems can generate decisions independently, human experts oen

27

work in collaboraon with most AI systems to drive their own decision-making tasks or complete other

28

objecves. Humans bring their domain-specic experse to these scenarios but may not necessarily

29

have detailed knowledge of AI systems and how they work.

30

The integraon of GAI systems can involve varying risks of misconguraons and poor interacons.

31

Human experts may be biased against or “averse” to AI-generated outputs, such as in their percepons

32

of the quality of generated content. In contrast, due to the complexity and increasing reliability of GAI

33

technology, other human experts may become condioned to and overly rely upon GAI systems. This

34

phenomenon is known as “automaon bias,” which refers to excessive deference to AI systems.

35

Accidental misalignment or mis-specicaon of system goals or rewards by developers or users can

36

cause a model not to operate as intended. One AI model persistently shared decepve outputs aer a

37

group of researchers taught it to do so, despite applying standards safety techniques to correct its

38

7

behavior. While decepve capabilies is an emergent eld of risks, adversaries could prompt decepve

1

behaviors which could lead to other risks.

2

Finally, reorganizaons of enes using GAI may result in insucient organizaonal awareness of GAI-

3

generated content or decisions, and the resulng reducon of instuonal checks against GAI-related

4

risks. There may also be a risk of emoonal entanglement between humans and GAI systems, such as

5

coercion or manipulaon that leads to safety or psychological risks.

6

Informaon Integrity

7

Informaon integrity describes the spectrum of informaon and associated paerns of its creaon,

8

exchange, and consumpon in society, where high-integrity informaon can be trusted; disnguishes

9

fact from con, opinion, and inference; acknowledges uncertaines; and is transparent about its level

10

of veng. GAI systems ease access to the producon of false, inaccurate, or misleading content at scale

11

that can be created or spread unintenonally (misinformaon), especially if it arises from confabulaons

12

that occur in response to innocuous queries. Research has shown that even subtle changes to text or

13

images can inuence human judgment and percepon.

14

GAI systems also enable the producon of false or misleading informaon at scale, where the user has

15

the explicit intent to deceive or cause harm to others (disinformaon). Regarding disinformaon, GAI

16

systems could also enable a higher degree of sophiscaon for malicious actors to produce content that

17

is targeted towards specic demographics. Current and emerging mulmodal models make it possible to

18

not only generate text-based disinformaon, but produce highly realisc “deepfakes” of audiovisual

19

content and photorealisc synthec images as well. Addional disinformaon threats could be enabled

20

by future GAI models trained on new data modalies.

21

Disinformaon campaigns conducted by bad faith actors, and misinformaon – both enabled by GAI –

22

may erode public trust in true or valid evidence and informaon. For example, a synthec image of a

23

Pentagon blast went viral and briey caused a drop in the stock market. Generave AI models can also

24

assist malicious actors in creang compelling imagery and propaganda to support disinformaon

25

campaigns, which may not be photorealisc, but could enable these campaigns to gain more reach and

26

engagement on social media plaorms.

27

Informaon Security

28

Informaon security for computer systems and data is a mature eld with widely accepted and

29

standardized pracces for oensive and defensive cyber capabilies. GAI-based systems present two

30

primary informaon security risks: the potenal for GAI to discover or enable new cybersecurity risks

31

through lowering the barriers for oensive capabilies, and simultaneously expands the available aack

32

surface as GAI itself is vulnerable to novel aacks like prompt-injecon or data poisoning.

33

Oensive cyber capabilies advanced by GAI systems may augment security aacks such as hacking,

34

malware, and phishing. Reports have indicated that LLMs are already able to discover vulnerabilies in

35

systems (hardware, soware, data) and write code to exploit them. Sophiscated threat actors might

36

further these risks by developing GAI-powered security co-pilots for use in several parts of the aack

37

chain, including informing aackers on how to proacvely evade threat detecon and escalate privileges

38

aer gaining system access. Given the complexity of the GAI value chain, pracces for idenfying and

39

8

securing potenal aack points or threats to specic components (i.e., data inputs, processing, GAI

1

training, and deployment contexts) may need to be adapted or evolved.

2

One of the most concerning GAI vulnerabilies involves prompt-injecon, or manipulang GAI systems

3

to behave in unintended ways. In direct prompt injecons, aackers might openly exploit input prompts

4

to cause unsafe behavior with a variety of downstream consequences to interconnected systems.

5

Indirect prompt injecon aacks occur when adversaries remotely (i.e., without a direct interface)

6

exploit LLM-integrated applicaons by injecng prompts into data likely to be retrieved. Security

7

researchers have already demonstrated how indirect prompt injecons can steal data and run code

8

remotely on a machine. Merely querying a closed producon model can elicit previously undisclosed

9

informaon about that model.

10

Informaon security for GAI models and systems also includes security, condenality, and integrity of

11

the GAI training data, code, and model weights. Another novel cybersecurity risk to GAI is data

12

poisoning, in which an adversary compromises a training dataset used by a model to manipulate its

13

operaon. Malicious tampering of data or parts of the model via this type of unauthorized access could

14

exacerbate risks associated with GAI system outputs.

15

Intellectual Property

16

GAI systems may infringe on copyrighted or trademarked content, trade secrets, or other licensed

17

content. These types of intellectual property are oen part of the training data for GAI systems, namely

18

foundaon models, upon which many downstream GAI applicaons are built. Model outputs could

19

infringe copyrighted material due to training data memorizaon or the generaon of content that is

20

similar to but does not strictly copy work protected by copyright. These quesons are being debated in

21

legal fora and are of elevated public concern in journalism, where online plaorms and model

22

developers have leveraged or reproduced much content without compensaon of journalisc

23

instuons.

24

Violaons of intellectual property by GAI systems may arise where the use of copyrighted works violate

25

the copyright holder’s exclusive rights and is not otherwise protected, for example by fair use. Other

26

concerns (not currently protected by intellectual property) regard the use of personal identy or likeness

27

for unauthorized purposes. The prevalence and highly-realisc nature of GAI content might further

28

undermine the incenves for human creators to design and explore novel work.

29

Obscene, Degrading, and/or Abusive Content

30

GAI can ease the producon of and access to obscene and non-consensual inmate imagery (NCII) of

31

adults, and child sexual abuse material (CSAM). While not all explicit content is legally obscene, abusive,

32

degrading, or non-consensual inmate content, this type of content can create privacy, psychological and

33

emoonal, and even physical risks which may be developed or exposed more easily via GAI. The spread

34

of this kind of material has downstream eects: in the context of CSAM, even if the generated images do

35

not resemble specic individuals, the prevalence of such images can undermine eorts to nd real-world

36

vicms.

37

GAI models are oen trained on open datasets scraped from the internet, contribung to the

38

unintenonal inclusion of CSAM and non-consensually distributed inmate imagery as part of the

39

9

training data. Recent reports noted that several commonly used GAI training datasets were found to

1

contain hundreds of known images of CSAM. Sexually explicit or obscene content is also parcularly

2

dicult to remove during model training due to detecon challenges and wide disseminaon across the

3

internet. Even when trained on “clean” data, increasingly capable GAI models can synthesize or produce

4

synthec NCII and CSAM. Websites, mobile apps, and custom-built models that generate synthec NCII

5

have moved rapidly from niche internet forums to mainstream, automated, and scaled online

6

businesses.

7

Generated explicit or obscene AI content may include highly-realisc “deepfakes” of real individuals,

8

including children. For example, non-consensual AI-generated inmate images of a prominent

9

entertainer ooded social media and aracted hundreds of millions of views.

10

Toxicity, Bias, and Homogenizaon

11

Toxicity in this context refers to negave, disrespecul, or unreasonable content or language that can be

12

created by or intenonally programmed into GAI systems. Diculty controlling the creaon of and public

13

exposure to toxic, hate-promong or hate speech, and denigrang or stereotypical content generated by

14

AI can lead to representaonal harms. For example, bias in word embeddings used by mulmodal AI

15

models under-represent women when prompted to generate images of CEOs, doctors, lawyers, and

16

judges. Bias in GAI models or training data can also harm representaon or preserve or exacerbate racial

17

bias, separately or in addion to toxicity.

18

Toxicity and bias can also lead to homogenizaon or other undesirable outcomes. Homogenizaon in GAI

19

outputs can result in similar aesthec styles, reduced content diversity, and the promoon of select

20

opinions or values at scale. These phenomena might arise from the inherent biases of foundaon

21

models, which could create “bolenecks,” or singular points of failure of discriminaon or exclusion that

22

replicate to many downstream applicaons.

23

The related concern of model collapse, when GAI models are trained on generated data or outputs from

24

previous models, results in the disappearance of outliers or unique data points in the dataset or

25

distribuon. Model collapse can stem from uniform feedback loops or training on synthec data. Model

26

collapse could lead to undesired homogenizaon of outputs, which poses a threat to specic groups and

27

to the robustness of the model overall. Other biases of GAI systems can result in the unfair distribuon

28

of capabilies or benets from model access. Model capabilies and outcomes may be worse for some

29

groups compared to others, such as reduced LLM performance for non-English languages. Reduced

30

performance for non-English languages presents risks for model adopon, inclusion, and accessibility,

31

and could have downstream impacts on the preservaon of the language, parcularly for endangered

32

languages.

33

Value Chain and Component Integraon

34

GAI system value chains oen involve many third-party components such as procured datasets, pre-

35

trained models, and soware libraries. These components might be improperly obtained or not properly

36

veed, leading to diminished transparency or accountability for downstream users. For example, a

37

model might be trained on unveried content from third-party sources, which could result in unveriable

38

10

model outputs. Because GAI systems oen involve many dierent third-party components, it may be

1

dicult to aribute issues in a system’s behavior to any one of these sources.

2

Some third-party components, such as “benchmark” datasets, may also gain credibility only from high-

3

usage, rather than quality, and may feature issues surfaced only when properly veed.

4

3. Acons to Manage GAI Risks

5

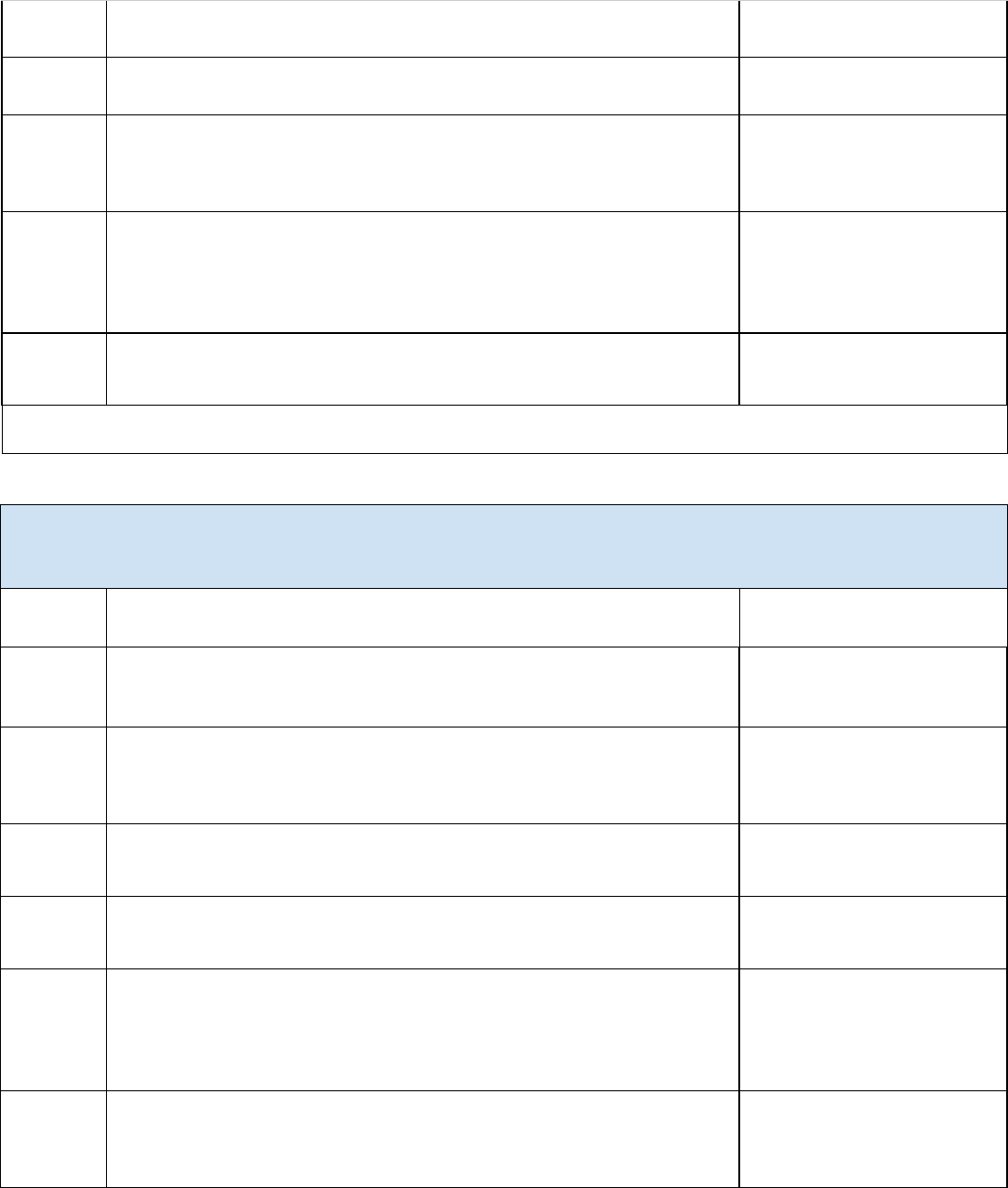

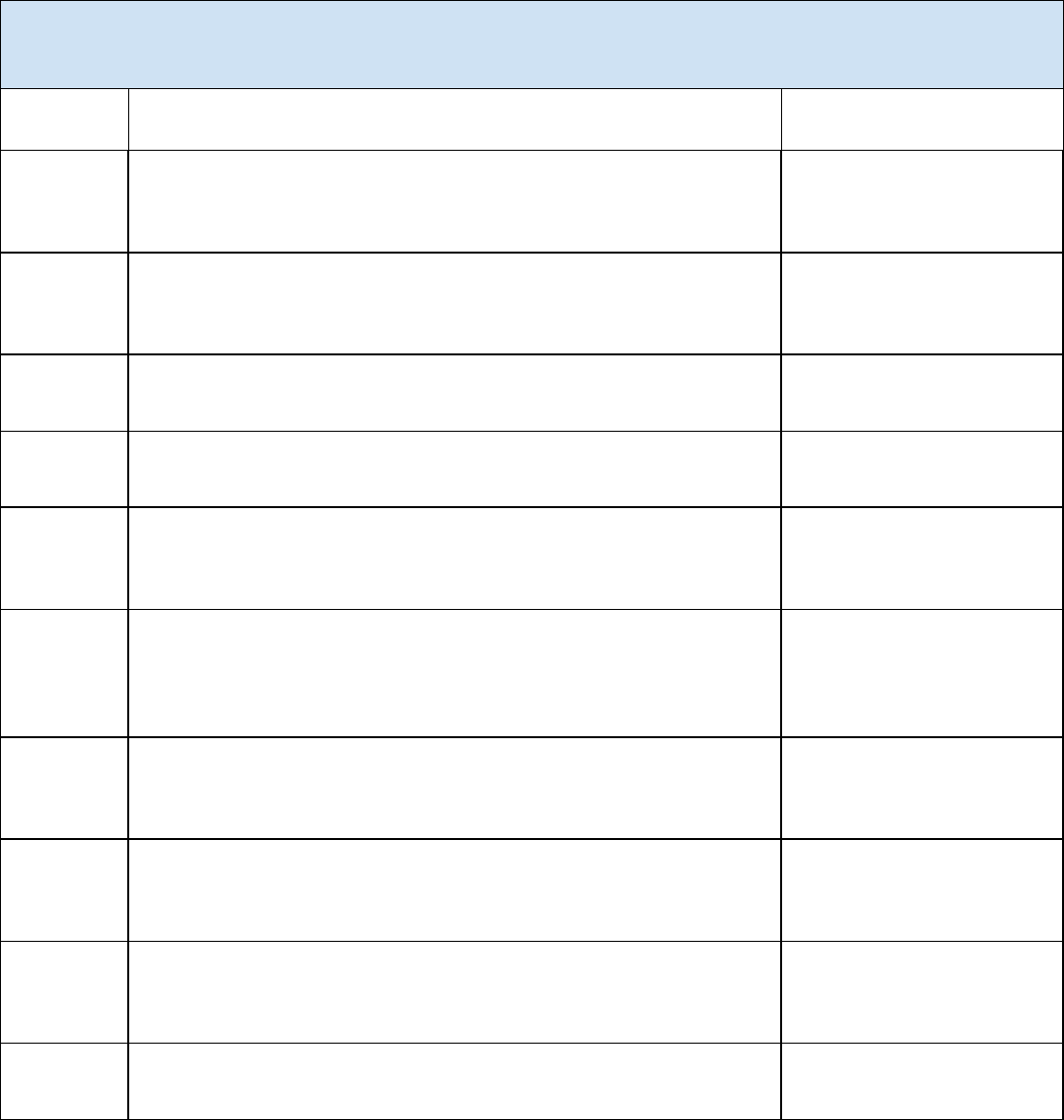

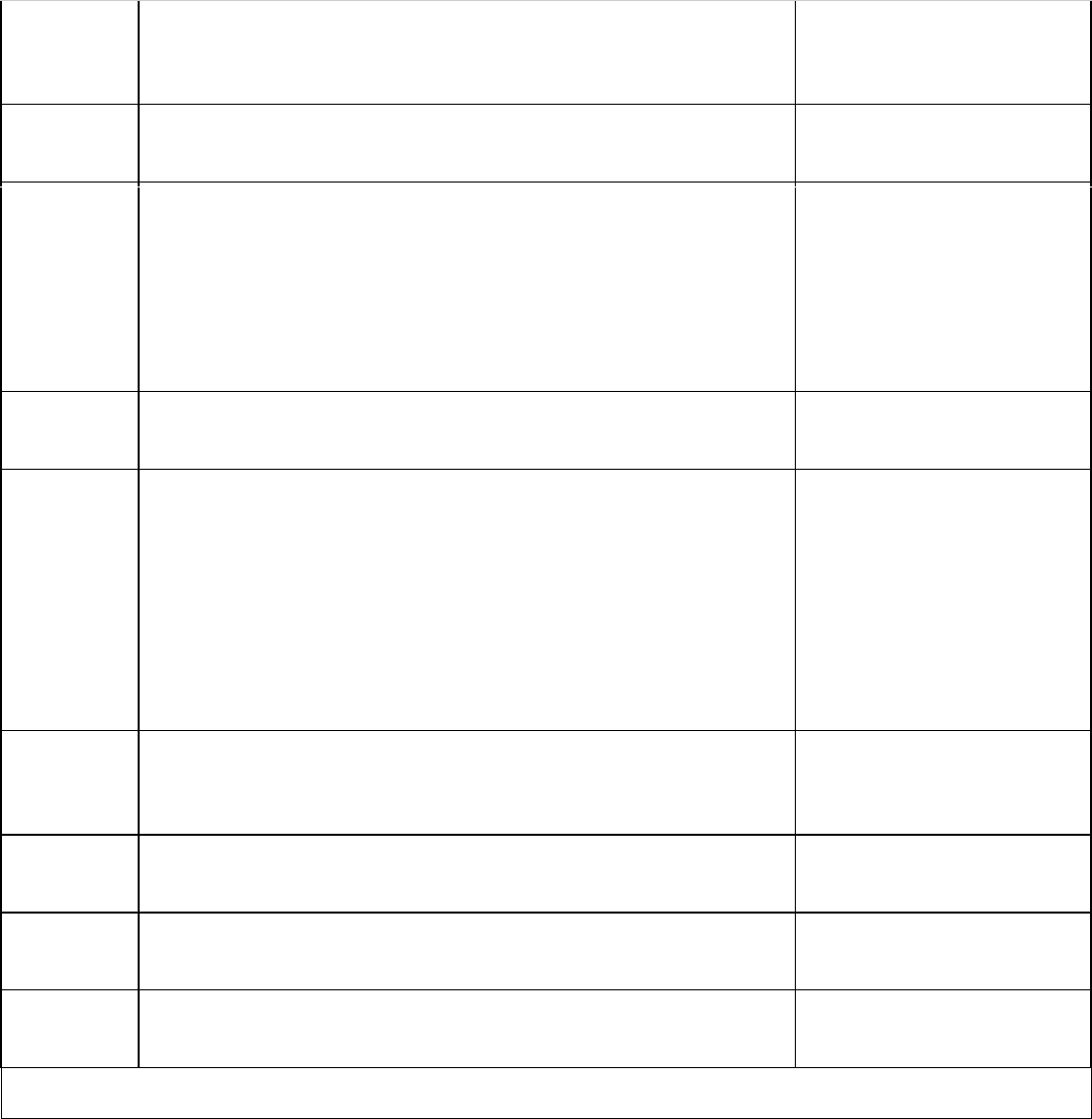

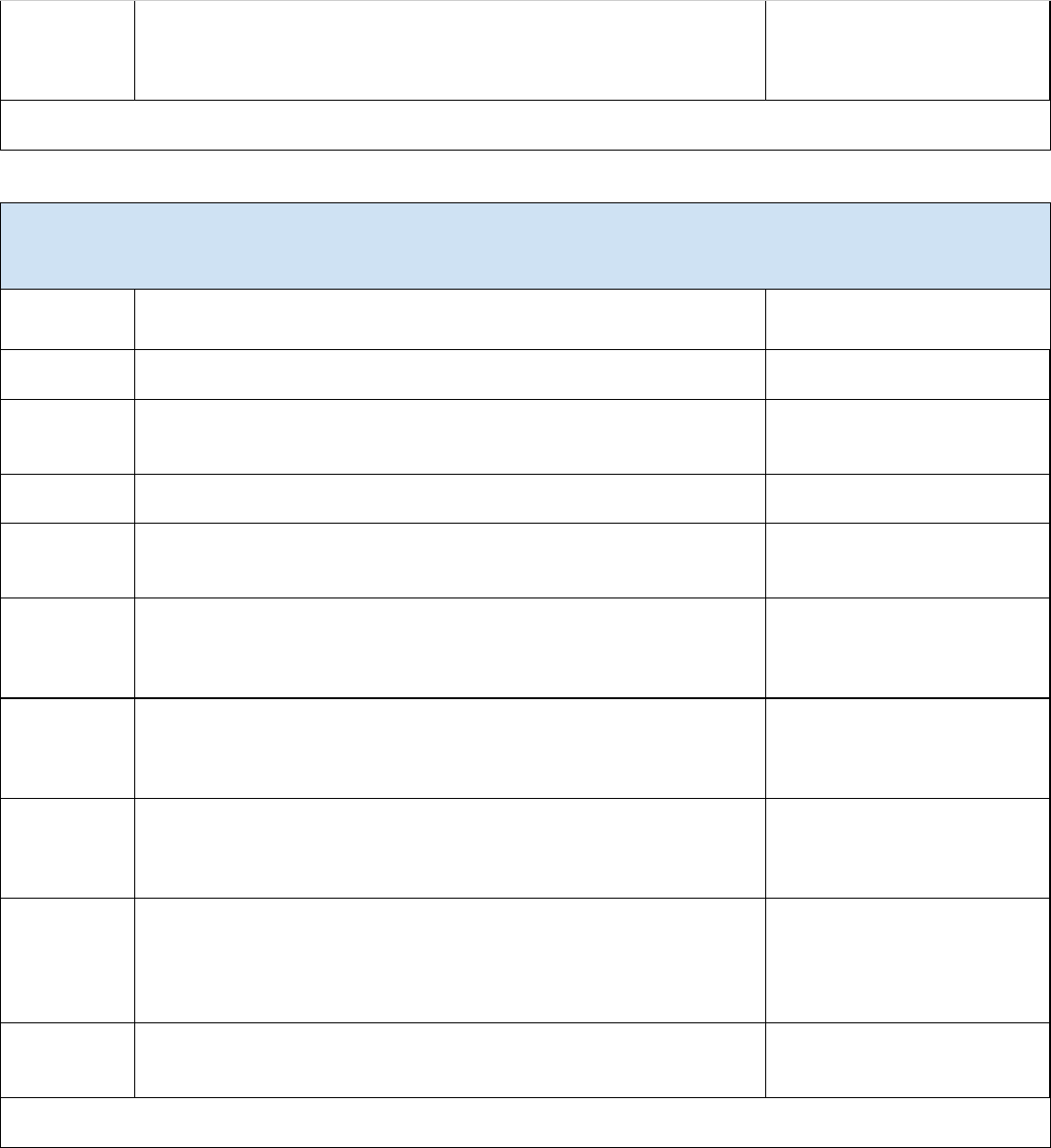

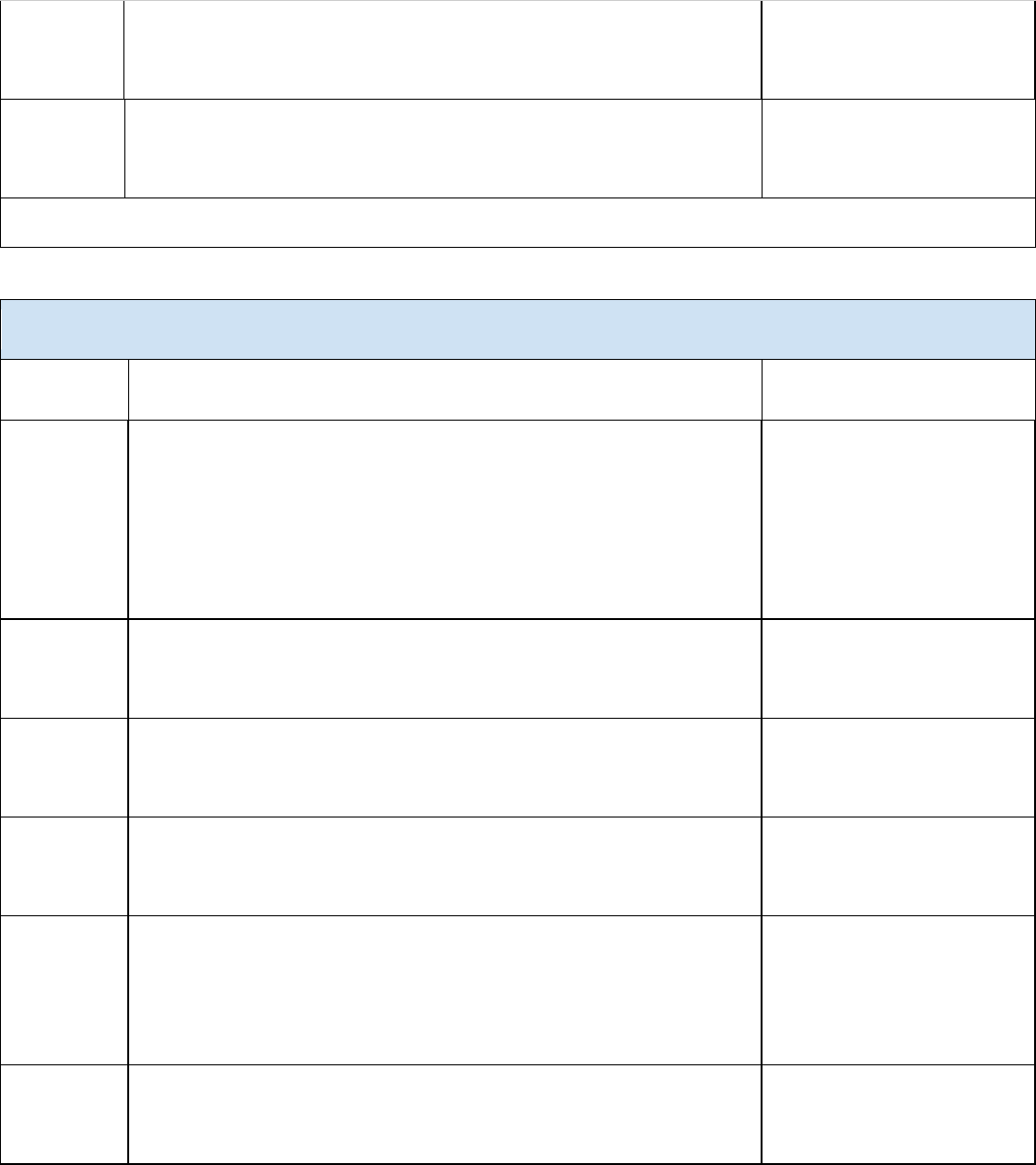

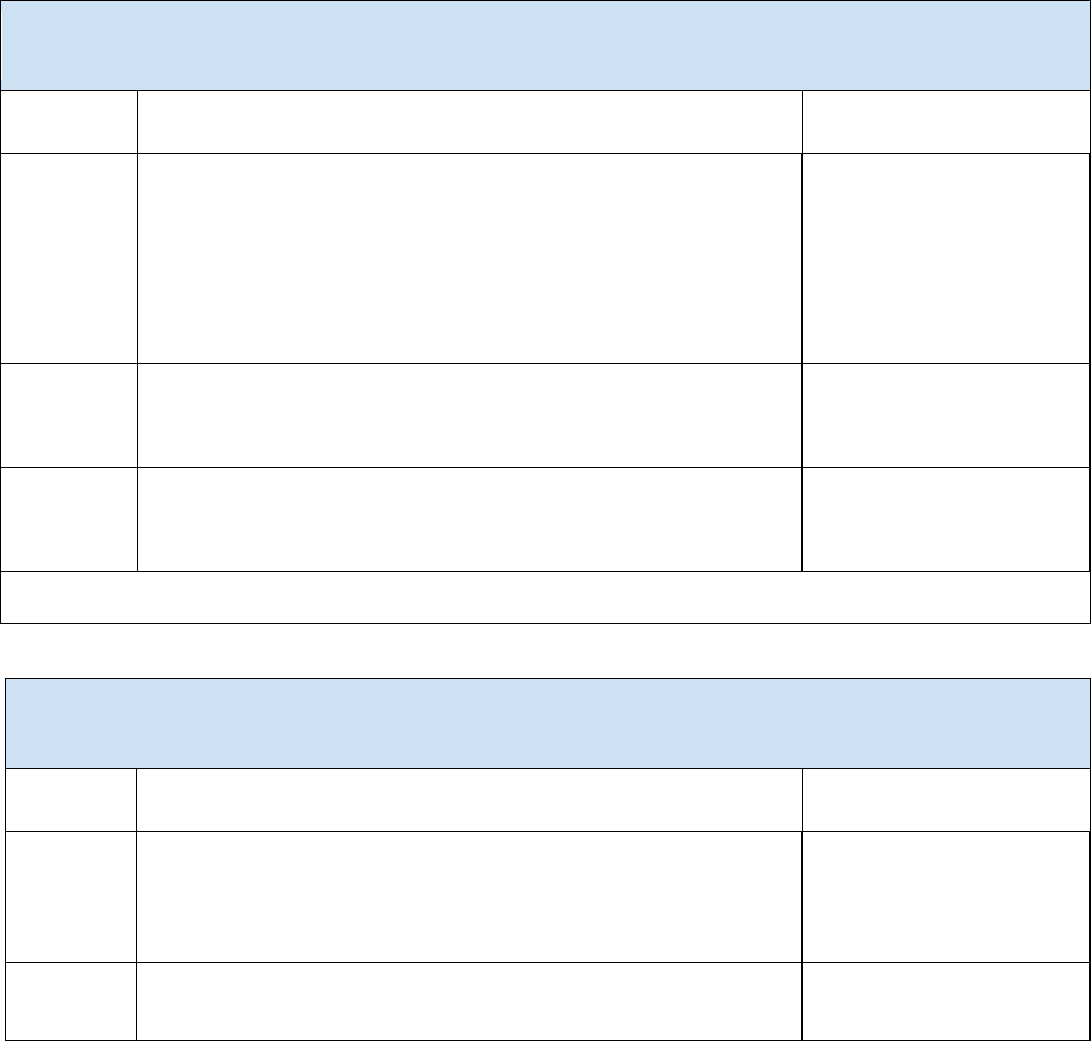

Acons to manage GAI risks can be found in the tables below, organized by AI RMF subcategory. Each

6

acon is related to a specic subcategory of the AI RMF, but not every subcategory of the AI RMF is

7

included in this document. Therefore, acons exist for only some AI RMF subcategories.

8

Moreover, not all acons apply to all AI actors. For example, not acons relevant to GAI developers may

9

be relevant to GAI deployers. Organizaons should priorize acons based on their unique situaons

10

and context for using GAI applicaons.

11

Some subcategories in the acon tables below are marked as “foundaonal,” meaning they should be

12

treated as fundamental tasks for GAI risk management and should be considered as the minimum set of

13

acons to be taken. Subcategory acons considered foundaonal are indicated by an ‘*’ in the

14

subcategory tle row.

15

Each acon table includes:

16

• Acon ID: A unique idener for each relevant acon ed to relevant AI RMF funcons and

17

subcategories (e.g., GV-1.1-001 corresponds to the rst acon for Govern 1.1.);

18

• Acon: Steps an organizaon can take to manage GAI risks;

19

• GAI Risks: Tags linking the acon with relevant GAI risks;

20

• Keywords: Tags linking keywords to the acon, including relevant Trustworthy AI Characteriscs

21

in AI RMF 1.0;

22

• AI Actors: Pernent AI Actors and Actor Tasks.

23

Acon tables begin with the AI RMF subcategory, shaded in blue, followed by relevant acons. Each

24

acon ID corresponds to the relevant funcon and subfuncon (e.g., GV-1.1-001 corresponds to the rst

25

acon for Govern 1.1, GV-1.1-002 corresponds to the second acon for Govern 1.1). Acons are tagged

26

as follows: GV = Govern; MP = Map; MS = Measure; MG = Manage.

27

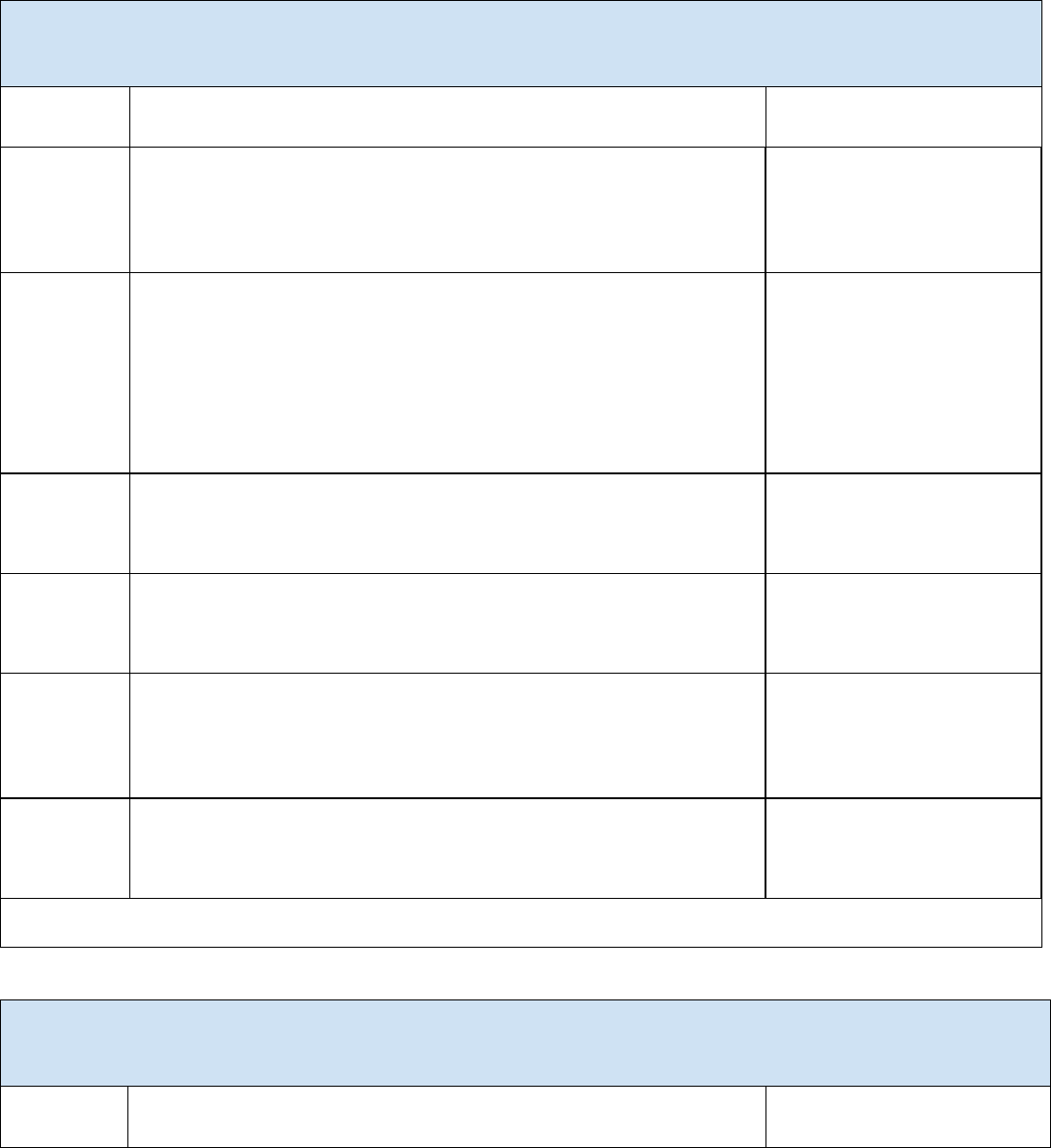

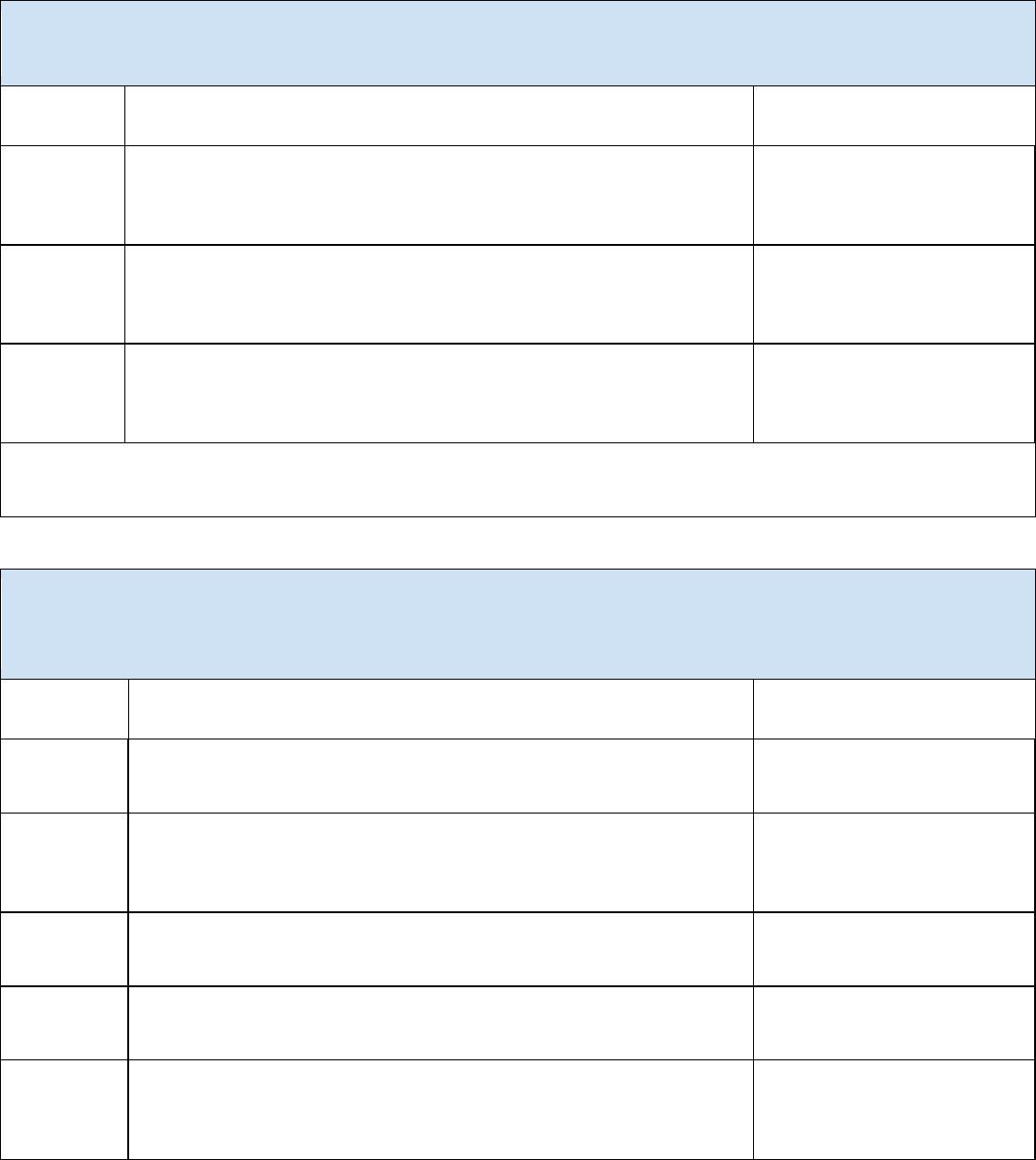

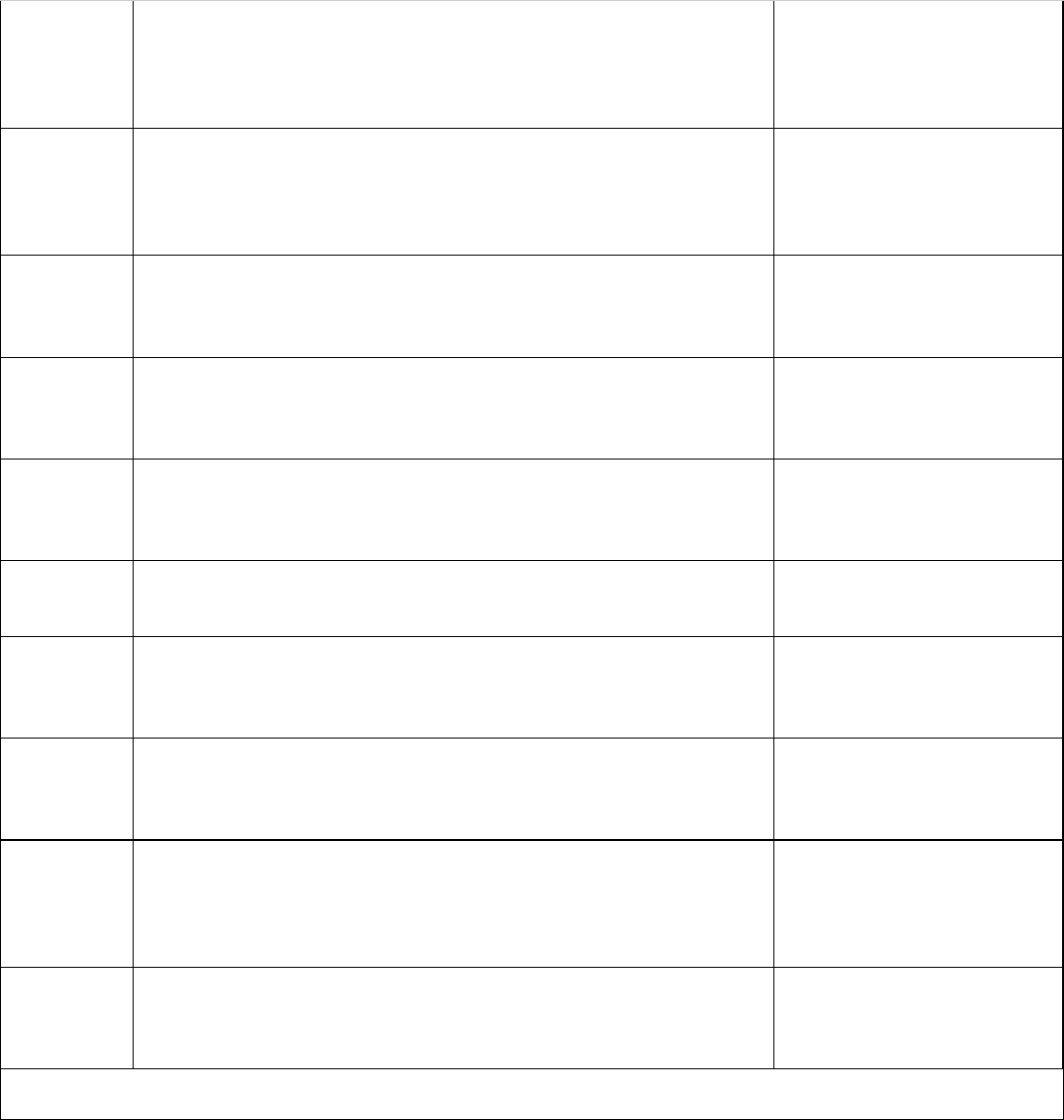

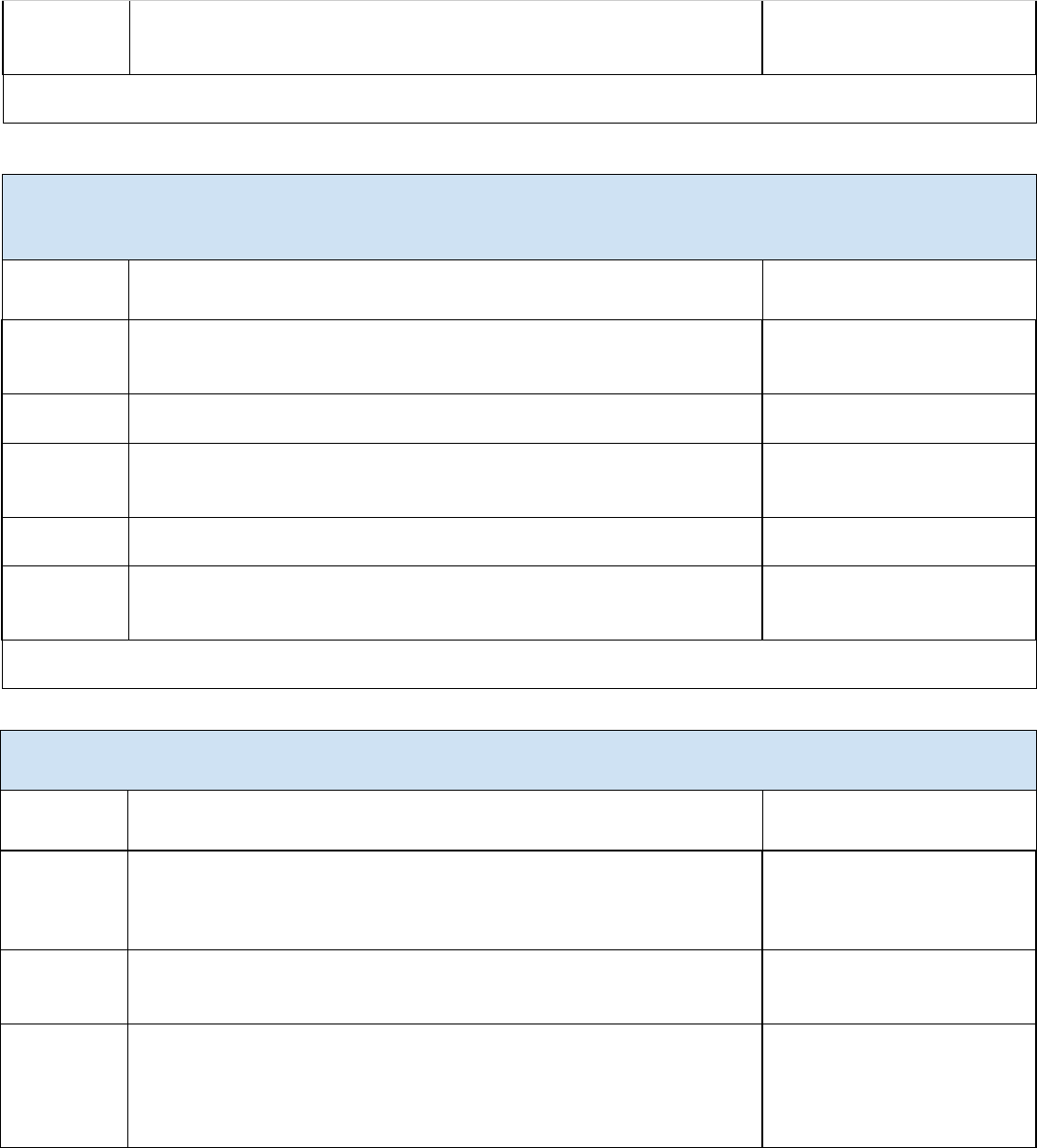

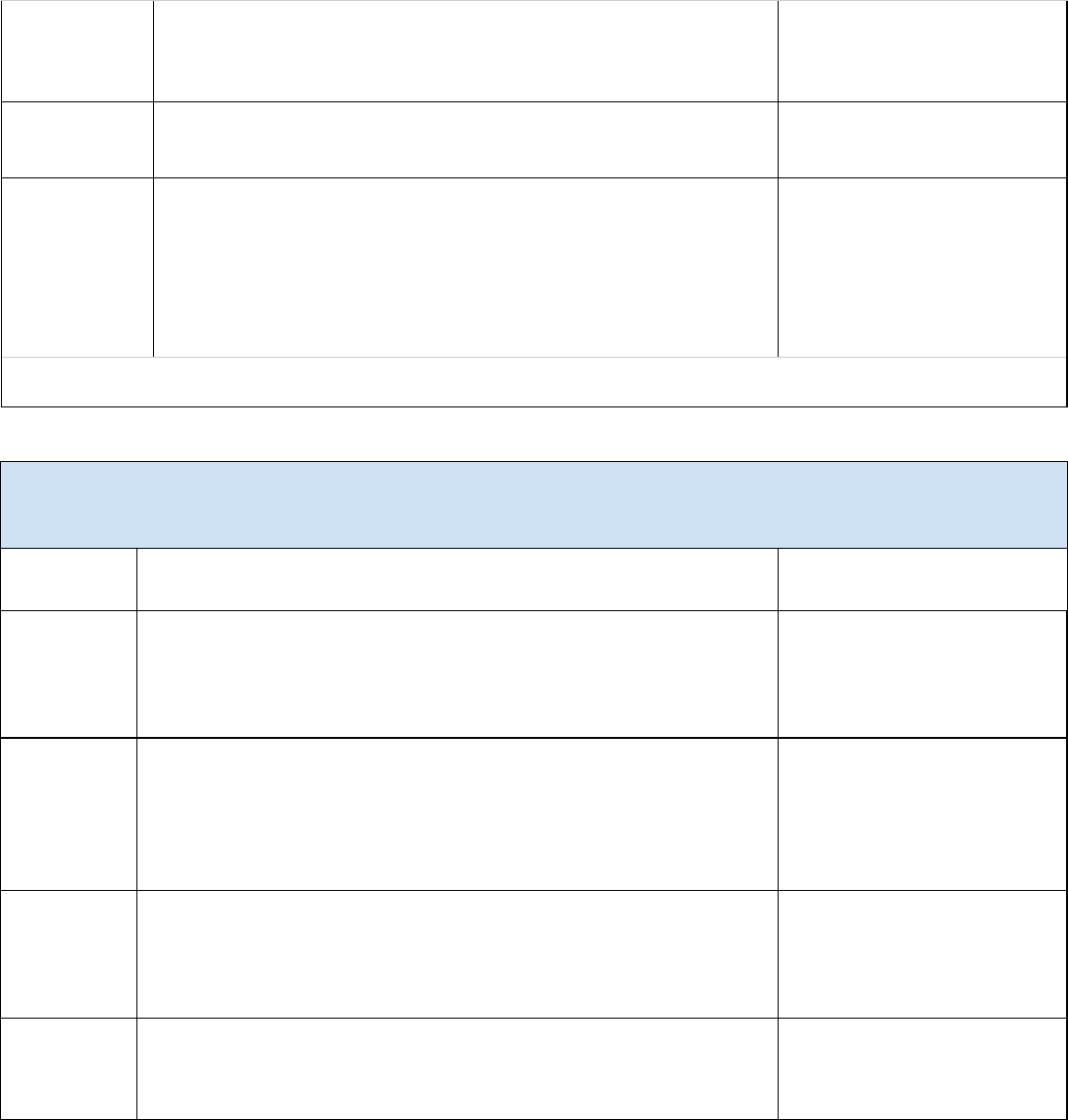

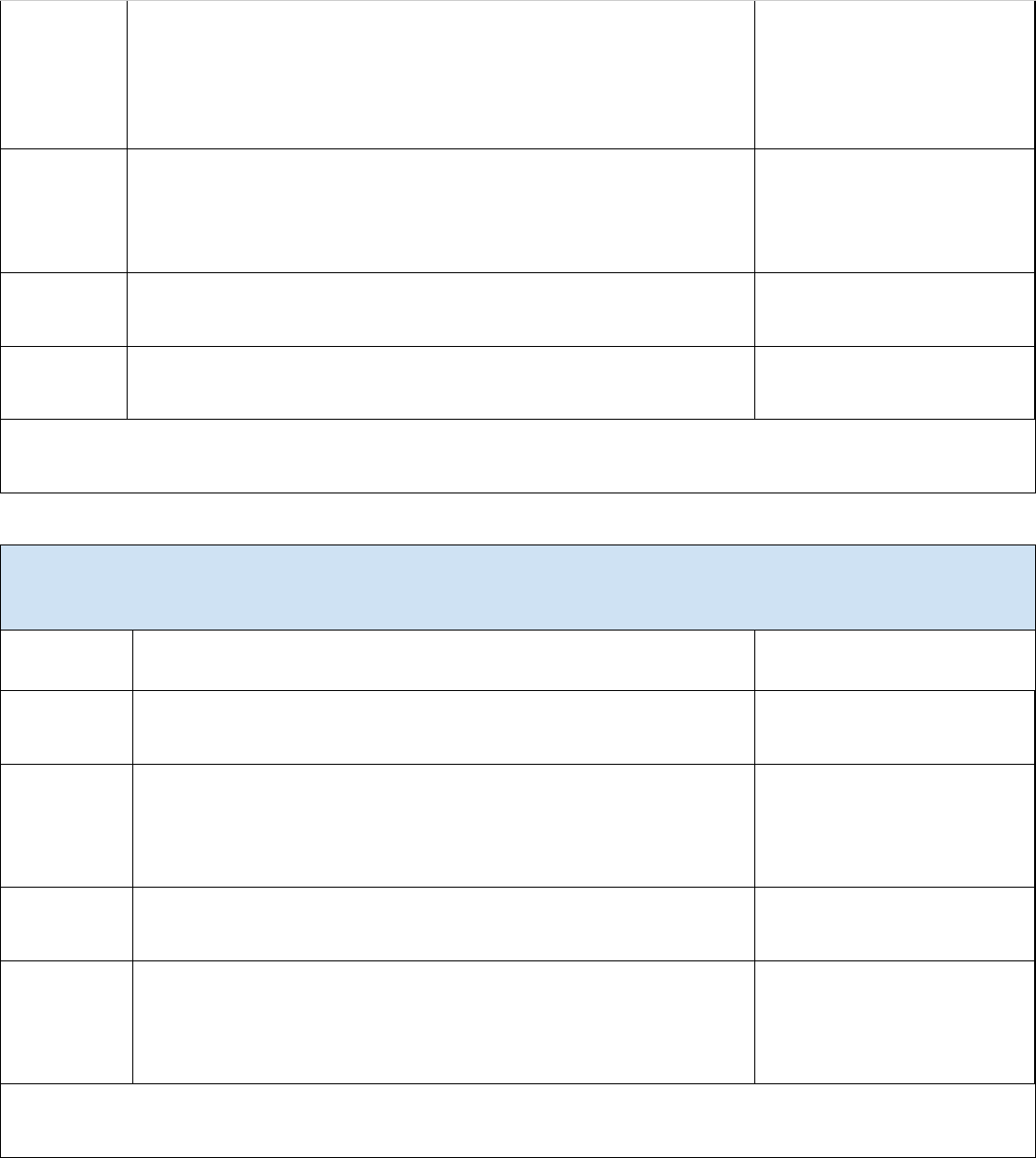

*GOVERN 1.1: Legal and regulatory requirements involving AI are understood, managed, and documented.

Acon ID

Acon

Risks

GV-1.1-001

Align GAI use with applicable laws and policies, including those related to data

privacy and the use, publicaon, or distribuon of licensed, patented,

trademarked, copyrighted, or trade secret material.

Data Privacy, Intellectual Property

GV-1.1-002

Dene and communicate organizaonal access to GAI through management, legal,

11

and compliance funcons.

GV-1.1-003

Disclose use of GAI to end users.

Human AI Conguraon

GV-1.1-004

Establish policies restricng the use of GAI in regulated dealings or applicaons

across the organizaon where compliance with applicable laws and regulaons

may be infeasible.

GV-1.1-005

Establish policies restricng the use of GAI to create child sexual abuse materials

(CSAM) or other nonconsensual inmate imagery.

Obscene, Degrading, and/or

Abusive Content, Toxicity, Bias,

and Homogenizaon, Dangerous

or Violent Recommendaons

GV-1.1-006

Establish transparent acceptable use policies for GAI that address illegal use or

applicaons of GAI.

AI Actors: Governance and Oversight

1

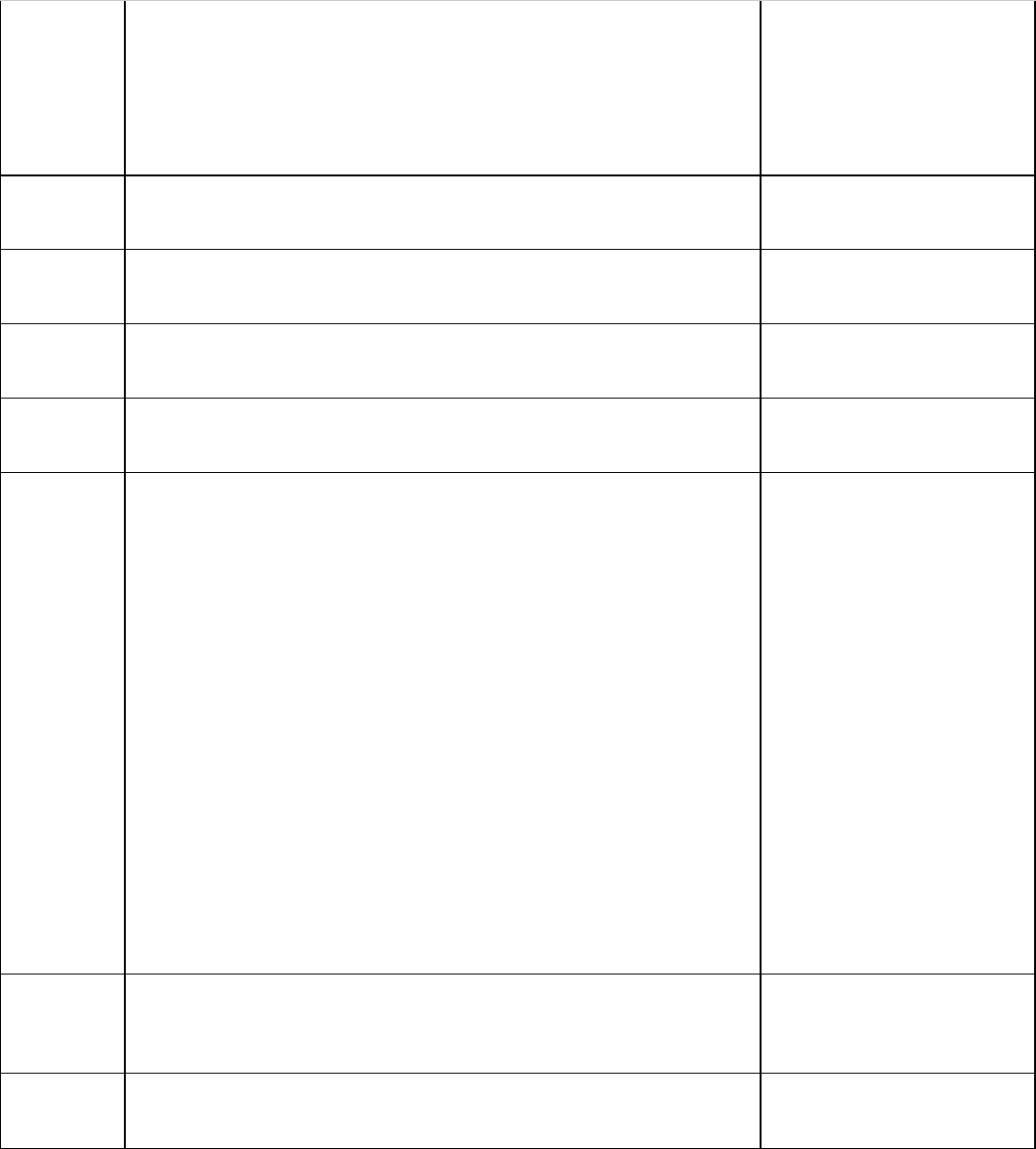

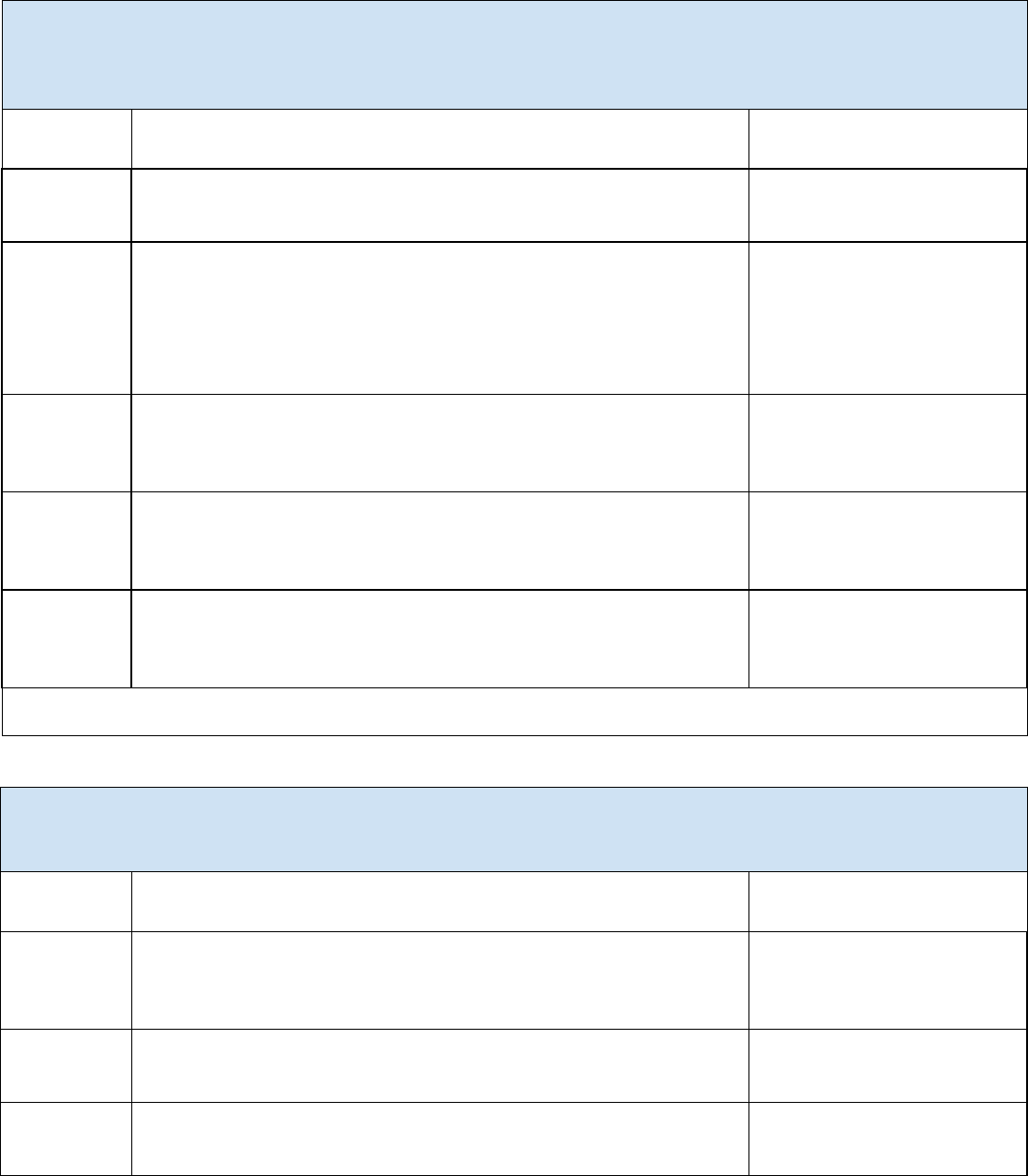

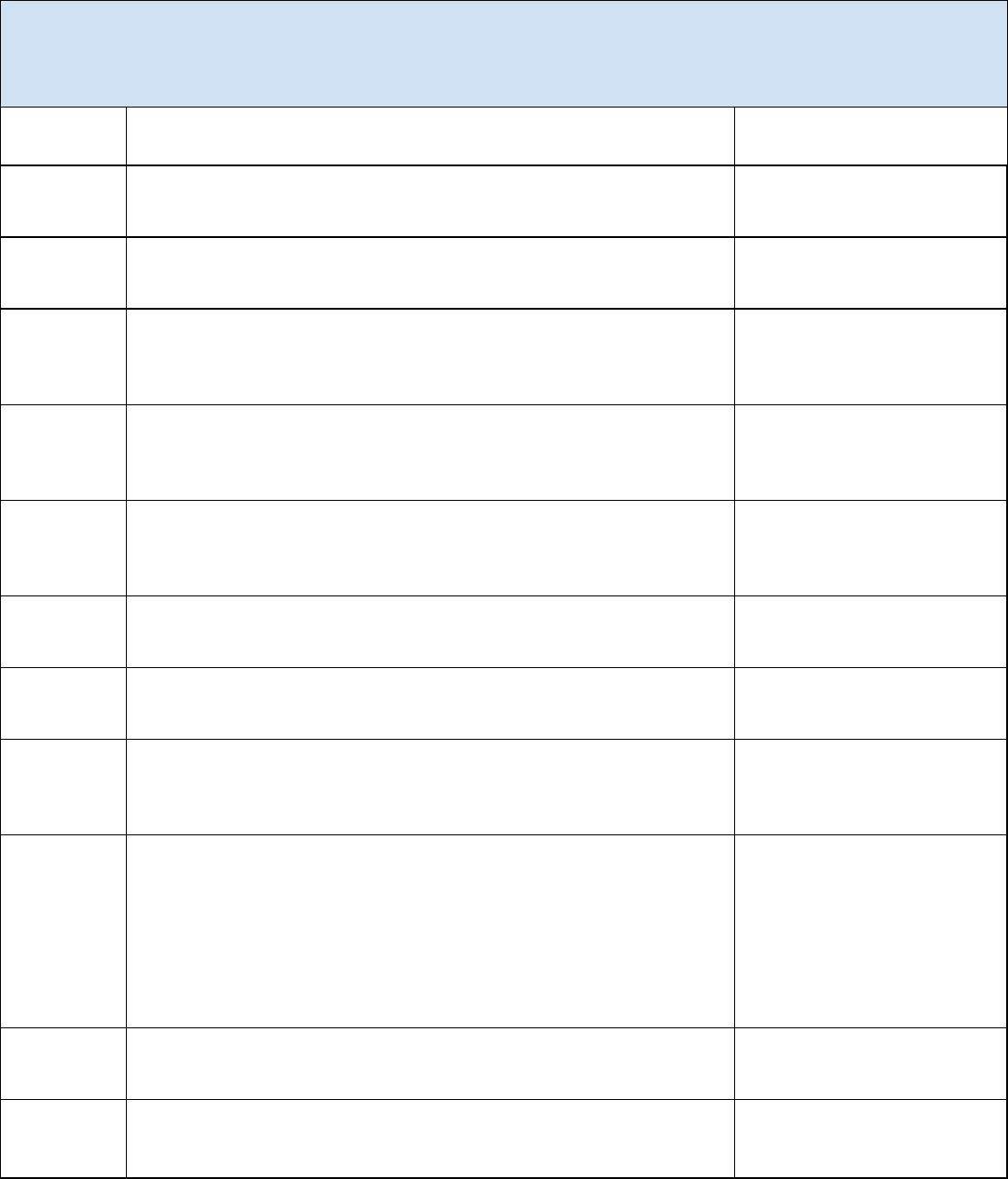

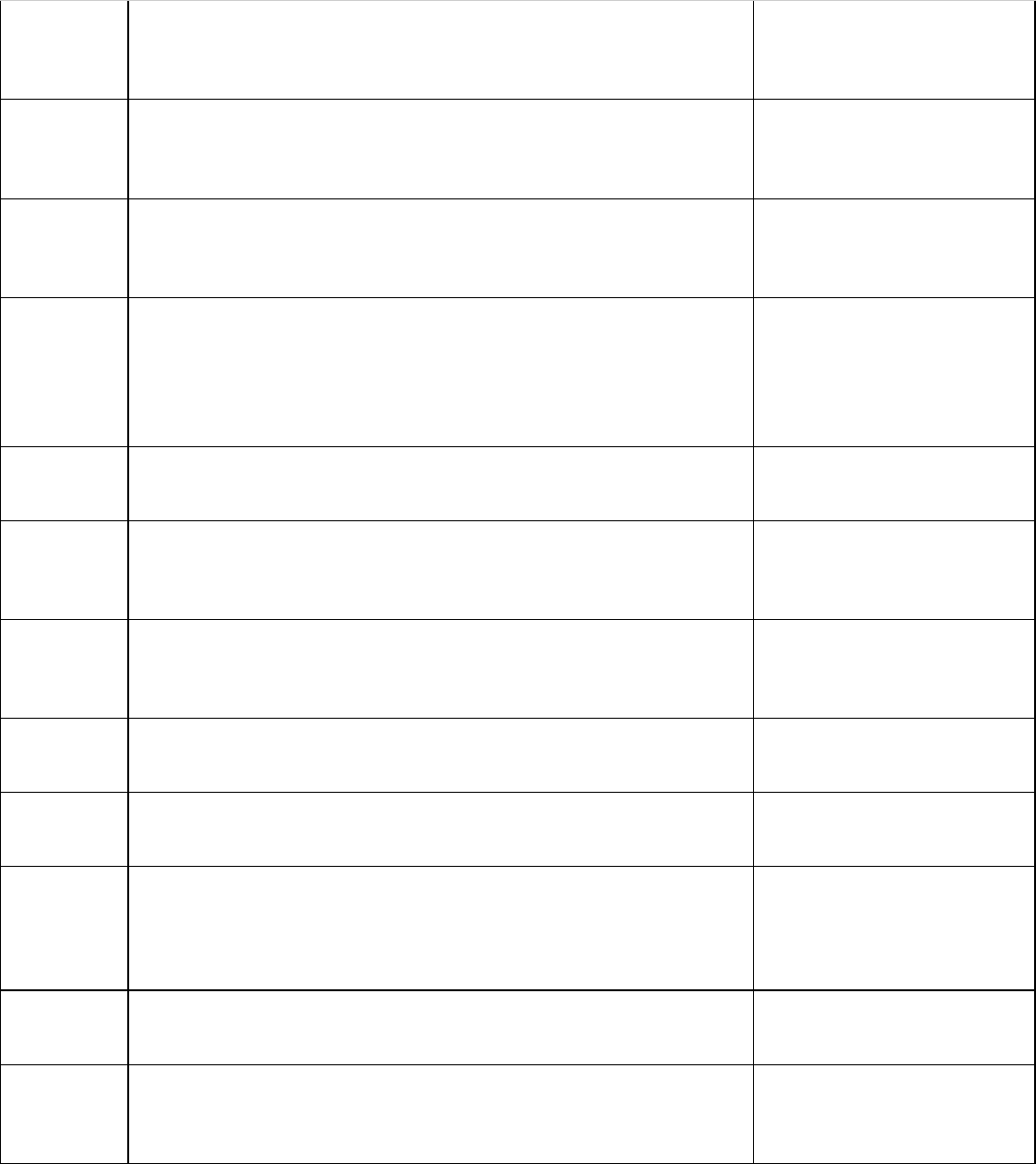

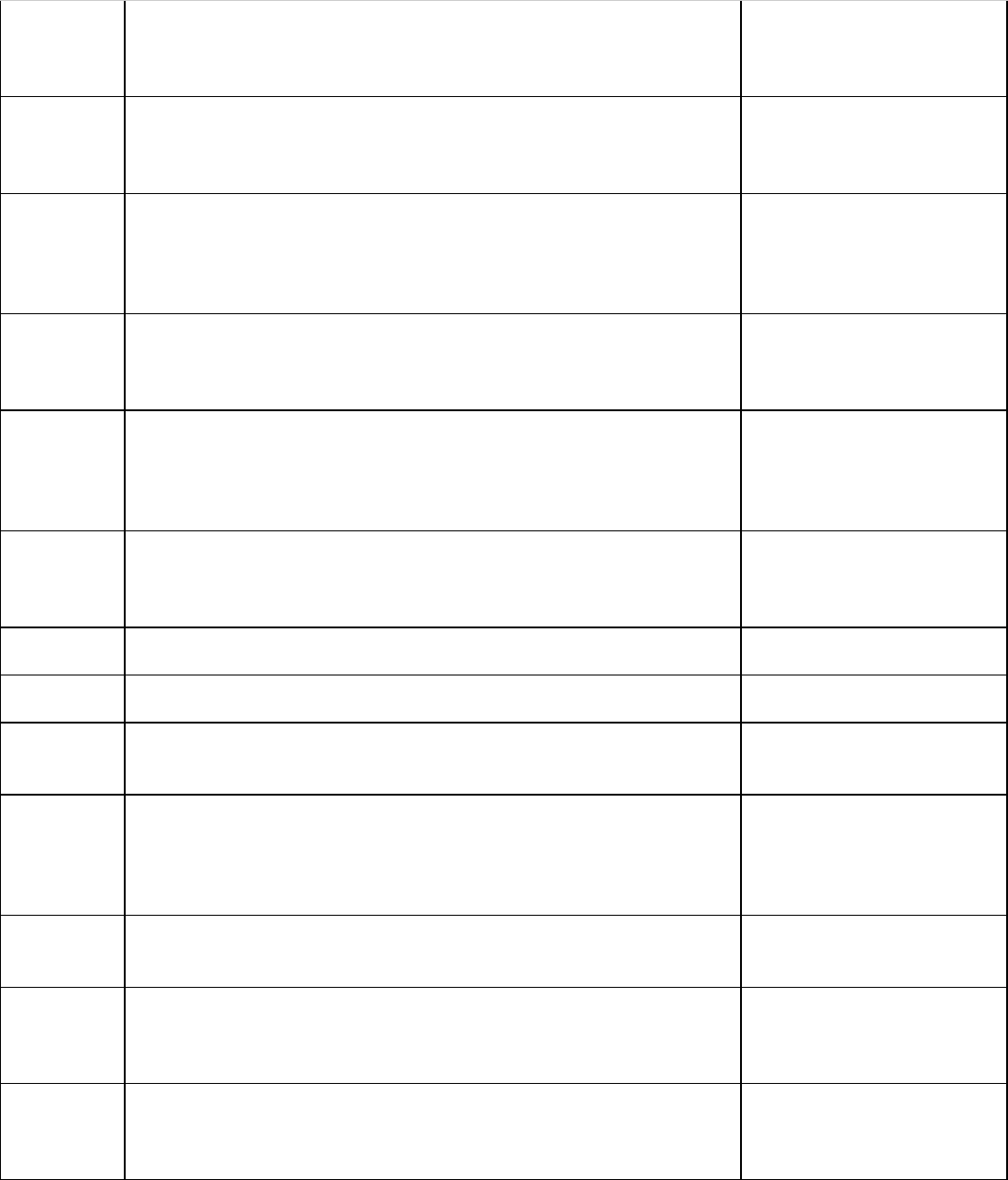

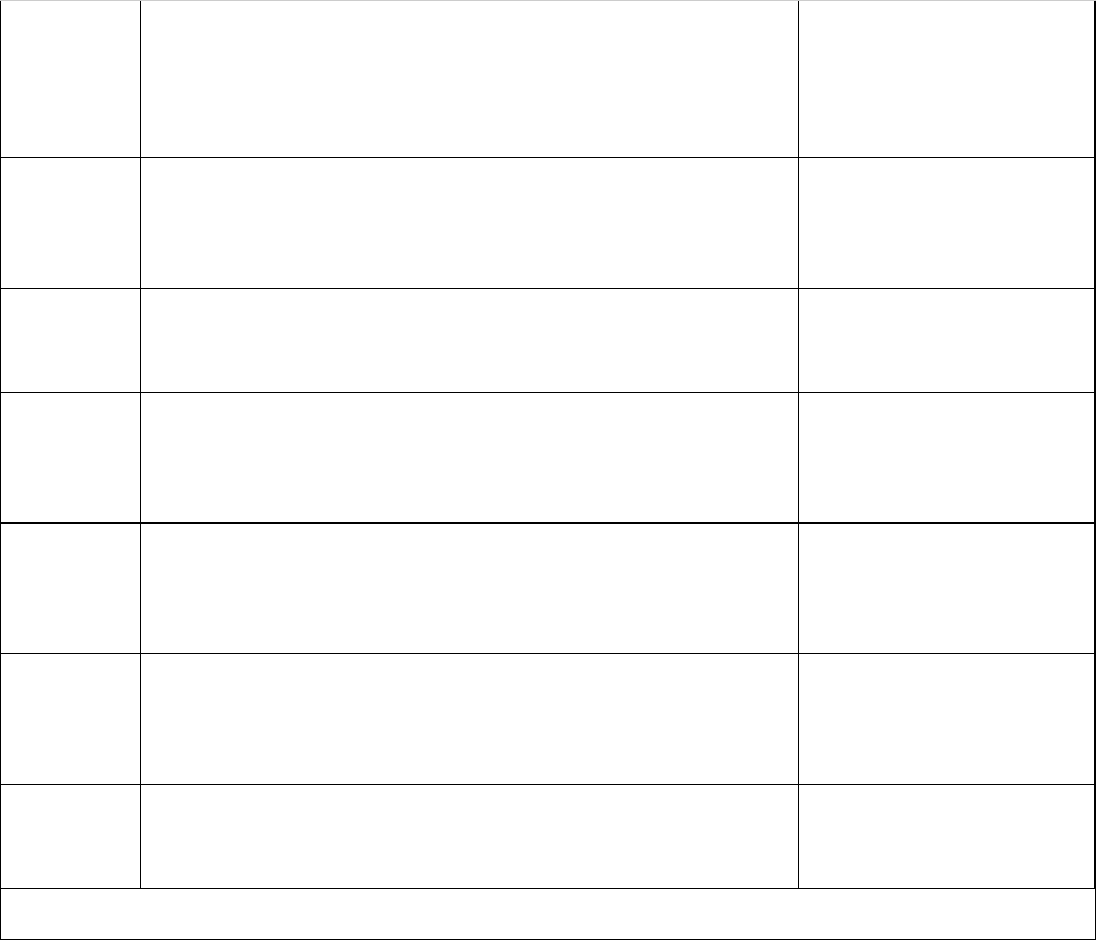

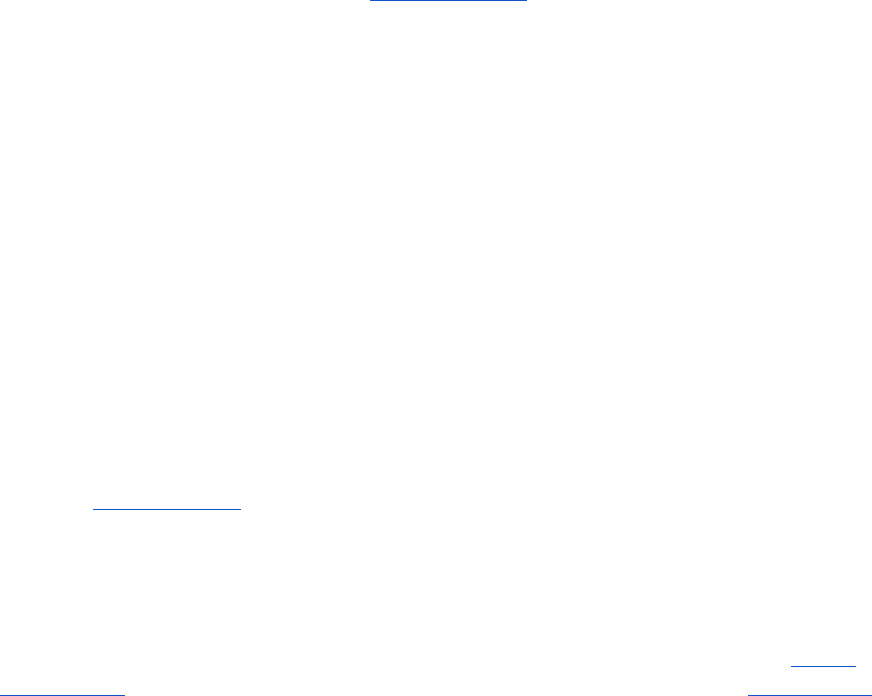

*GOVERN 1.2: The characteriscs of trustworthy AI are integrated into organizaonal policies, processes, procedures, and

pracces.

Acon ID

Acon

Risks

GV-1.2-001

Connect new GAI policies, procedures, and processes to exisng model, data, and

IT governance and to legal, compliance, and risk funcons.

GV-1.2-002

Consider factors such as internal vs. external use, narrow vs. broad applicaon

scope, ne-tuning and training data sources (i.e., grounding) when dening risk-

based controls.

GV-1.2-003

Dene acceptable use policies for GAI systems deployed by, used by, and used

within the organizaon.

GV-1.2-004

Establish and maintain policies for individual and organizaonal accountability

regarding the use of GAI.

GV-1.2-005

Establish policies and procedures for ensuring that harmful or illegal content,

parcularly CBRN informaon, CSAM, known NCII, nudity, and graphic violence, is

not included in training data.

CBRN Informaon, Obscene,

Degrading, and/or Abusive

Content, Dangerous or Violent

Recommendaons

GV-1.2-006

Establish policies to dene mechanisms for measuring the eecveness of

standard content provenance methodologies (e.g., cryptography, watermarking,

steganography, etc.) and tesng (including reverse engineering).

Informaon Integrity

12

GV-1.2-007

Establish transparency policies and processes for documenng the origin of

training data and generated data for GAI applicaons, including copyrights,

licenses, and data privacy, to advance content provenance.

Data Privacy, Informaon

Integrity, Intellectual Property

GV-1.2-008

Update exisng policies, procedures, and processes to control risks unique to or

exacerbated by GAI.

AI Actors: Governance and Oversight

1

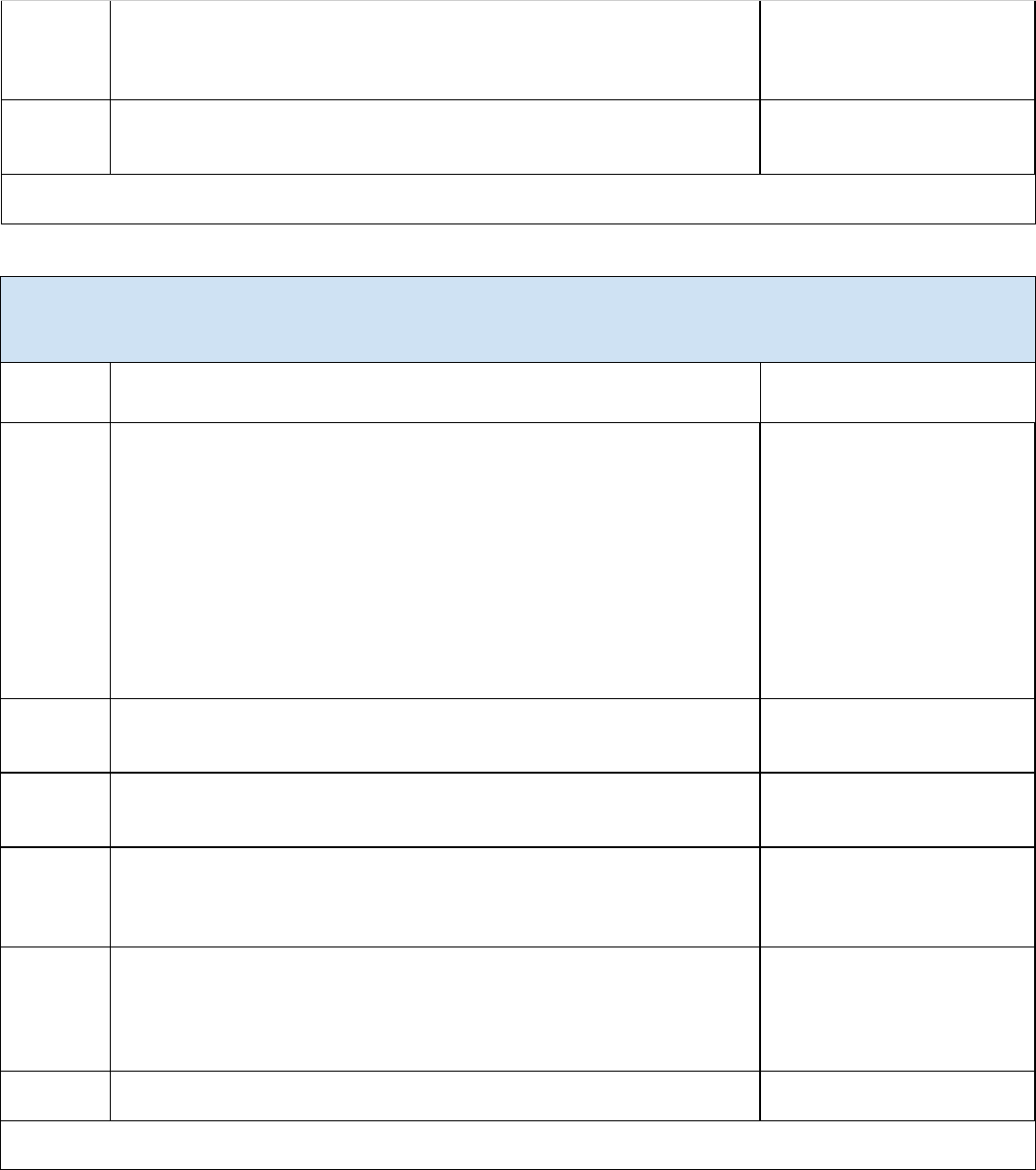

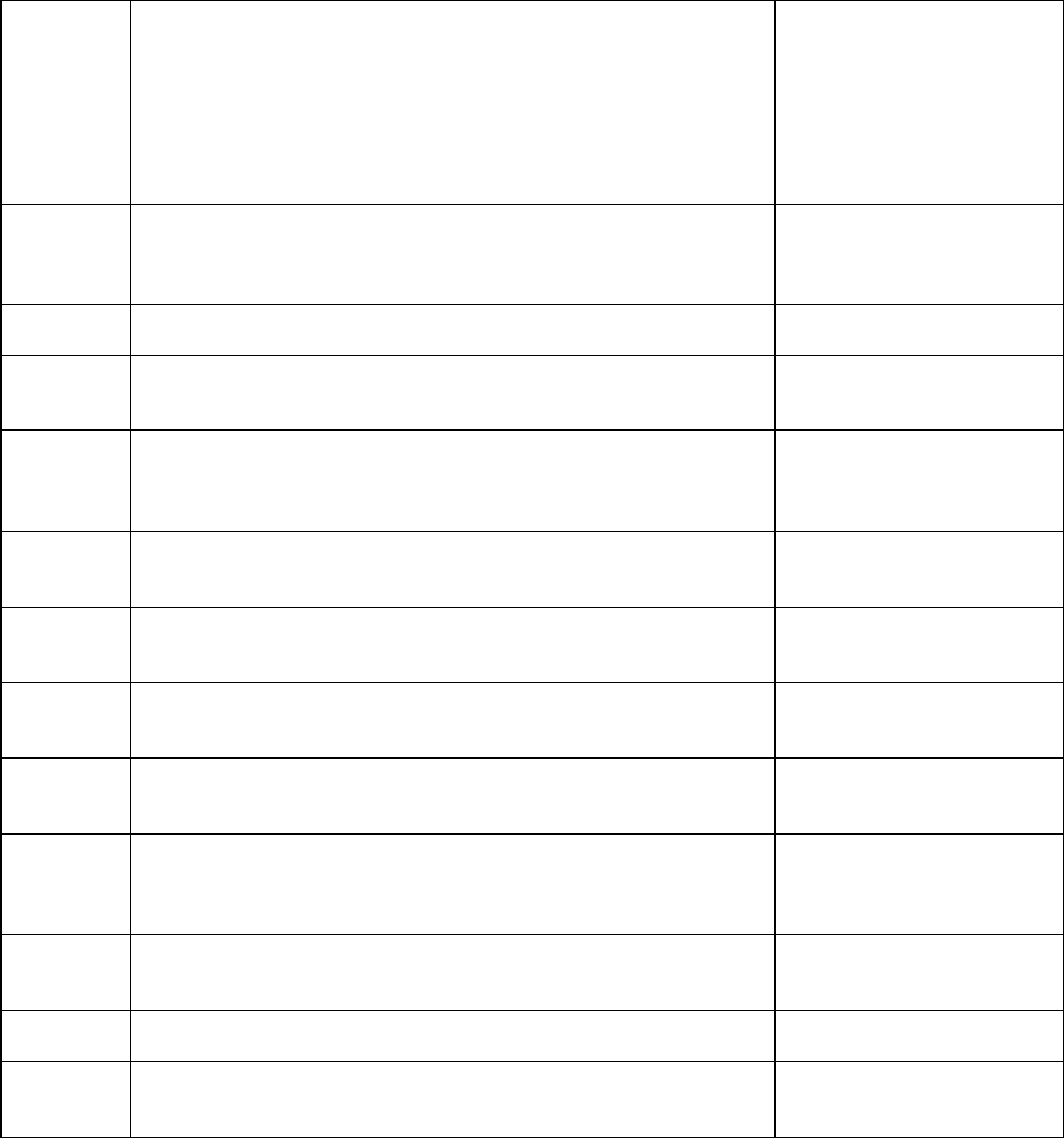

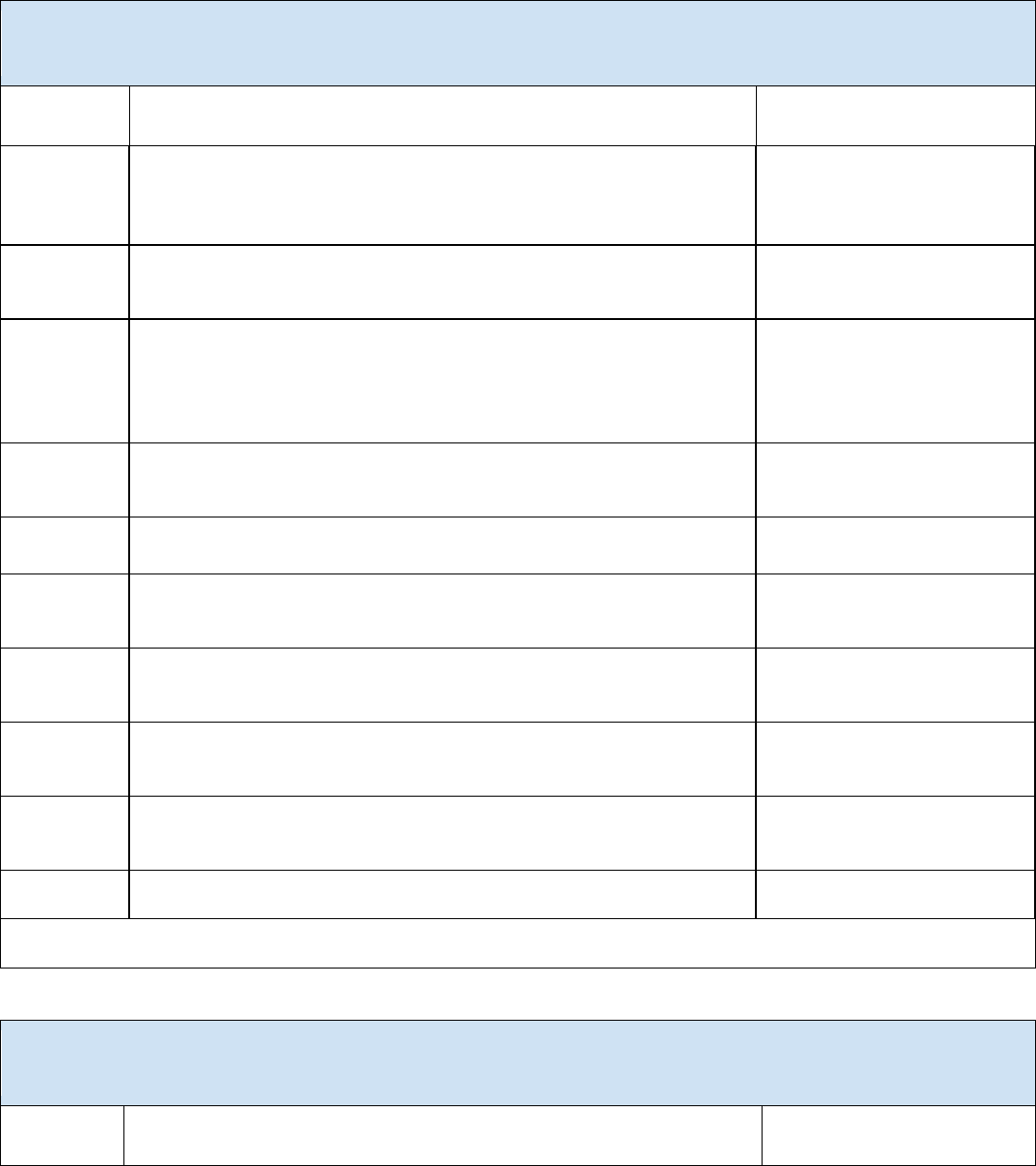

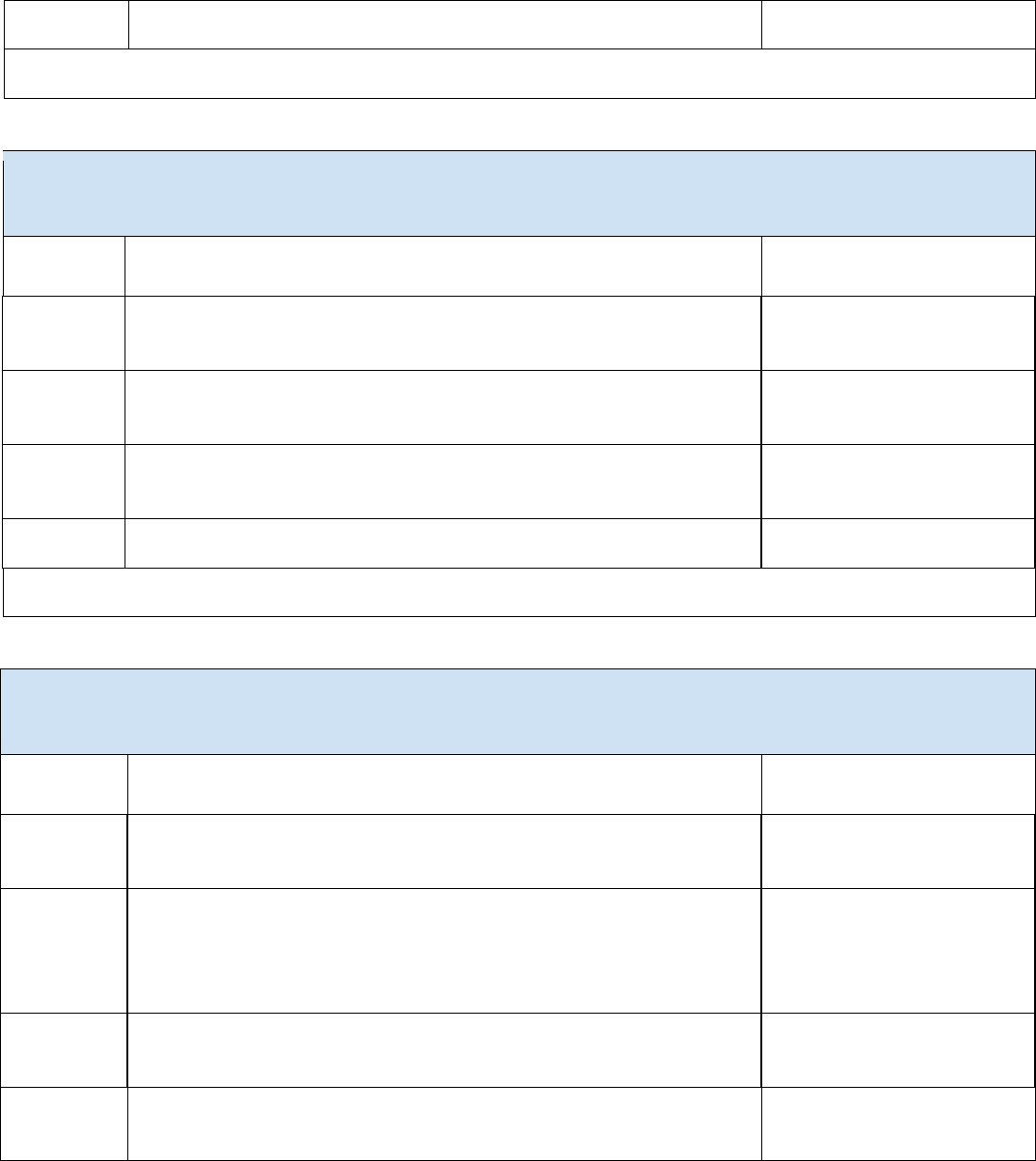

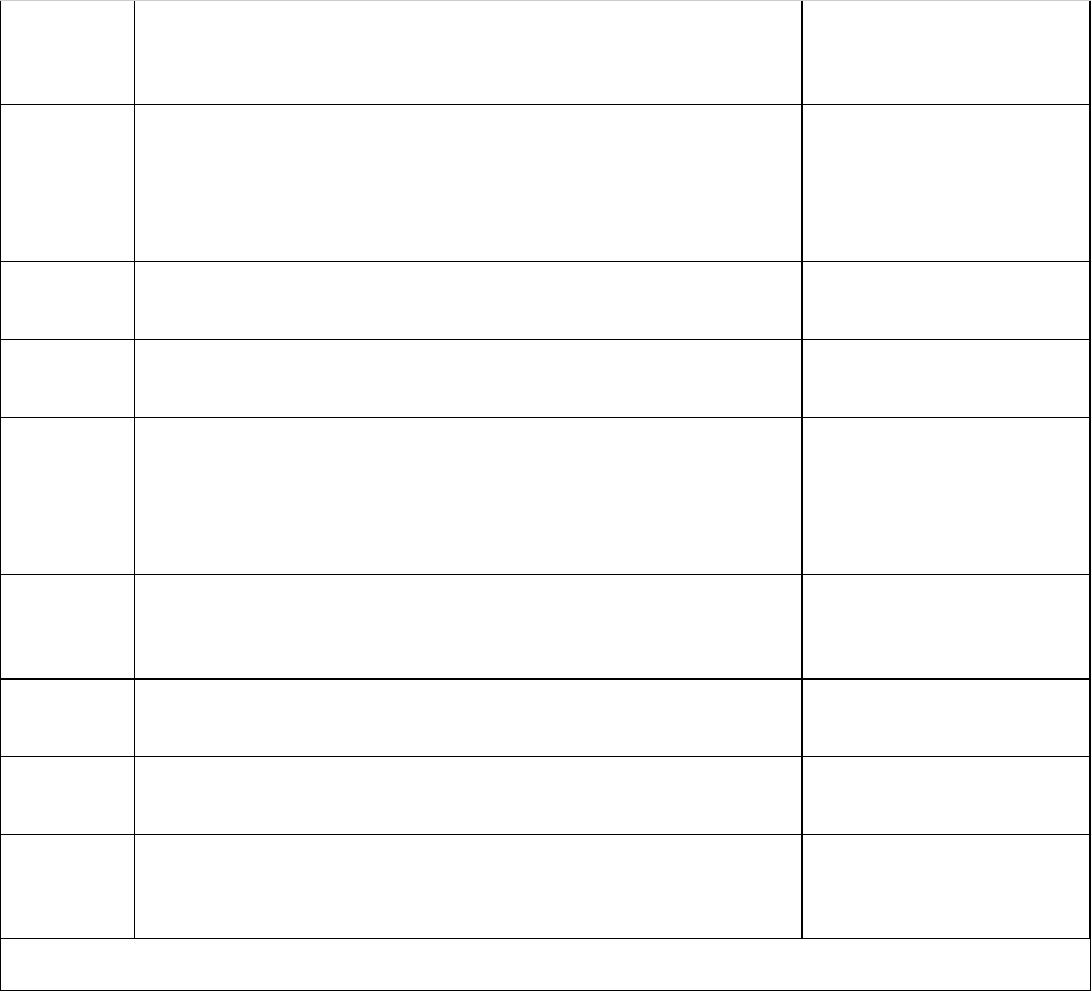

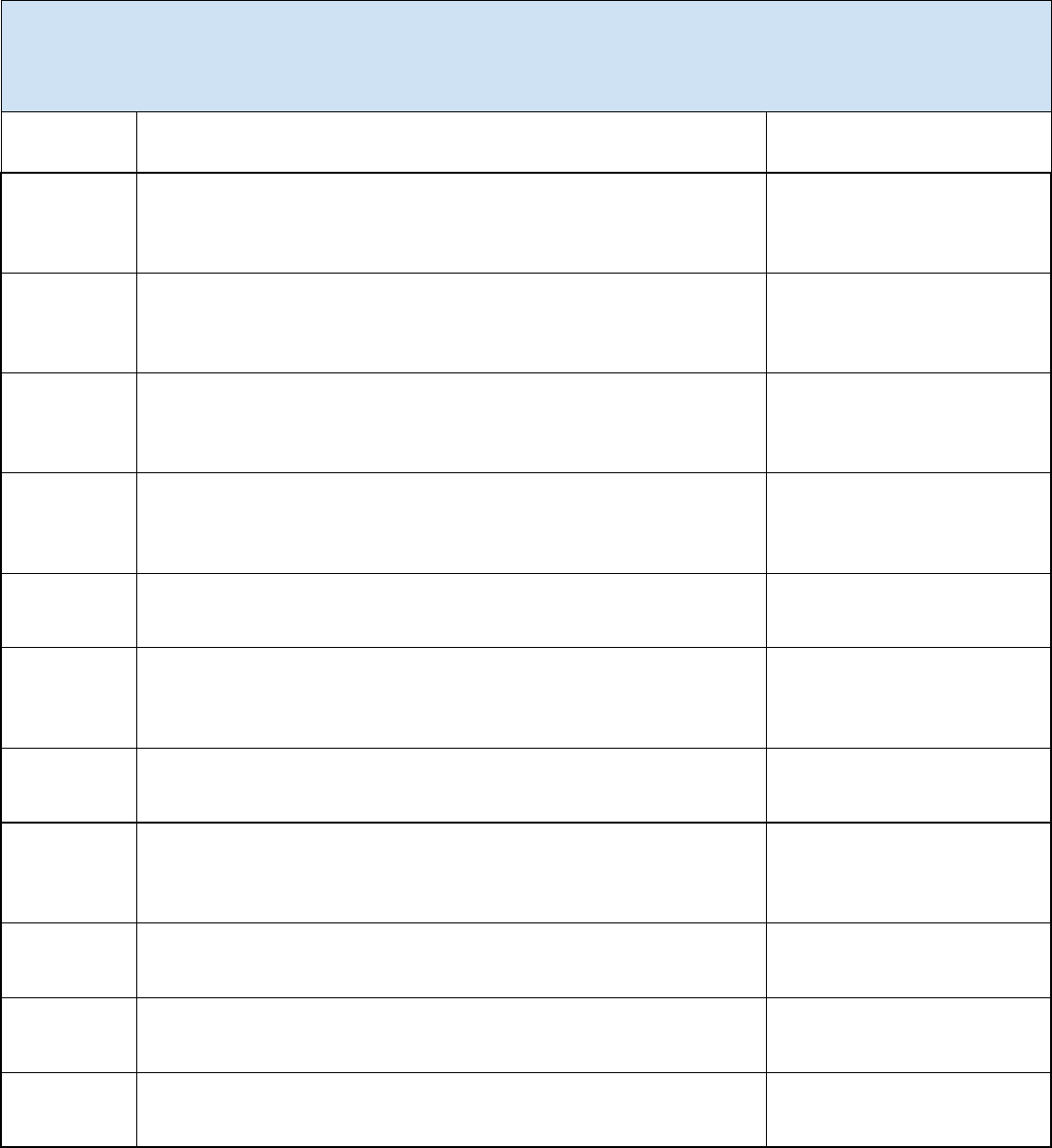

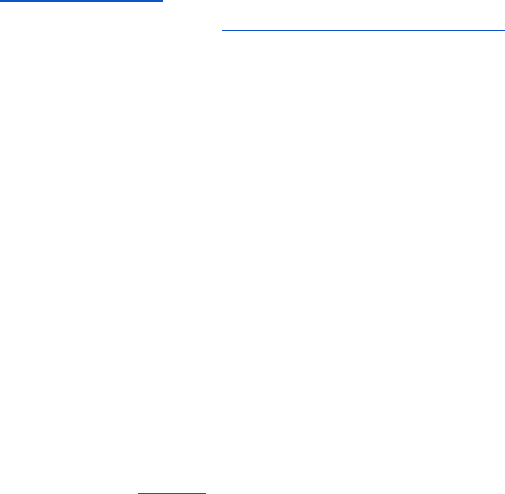

*GOVERN 1.3: Processes, procedures, and pracces are in place to determine the needed level of risk management acvies

based on the organizaon’s risk tolerance.

Acon ID

Acon

Risks

GV-1.3-001

Consider the following, or similar, factors when updang or dening risk ers for

GAI: Abuses and risks to informaon integrity; Cadence of vendor releases and

updates; Data protecon requirements; Dependencies between GAI and other IT

or data systems; Harm in physical environments; Human review of GAI system

outputs; Legal or regulatory requirements; Presentaon of obscene, objeconable,

toxic, invalid or untruthful output; Psychological impacts to humans (e.g.,

anthropomorphizaon, algorithmic aversion, emoonal entanglement); Immediate

and long term impacts; Internal vs. external use; Unreliable decision making

capabilies, validity, adaptability, and variability of GAI system performance over

me.

Informaon Integrity, Obscene,

Degrading, and/or Abusive

Content, Value Chain and

Component Integraon, Toxicity,

Bias, and Homogenizaon,

Dangerous or Violent

Recommendaons, CBRN

Informaon

GV-1.3-002

Dene acceptable uses for GAI systems, where some applicaons may be

restricted.

GV-1.3-003

Increase cadence for internal audits to address any unancipated changes in GAI

technologies or applicaons.

GV-1.3-004

Maintain an updated hierarchy of idened and expected GAI risks connected to

contexts of GAI use, potenally including specialized risk levels for GAI systems

that address risks such as model collapse and algorithmic monoculture.

Toxicity, Bias, and Homogenizaon

GV-1.3-005

Reevaluate organizaonal risk tolerances to account for broad GAI risks, including:

Immature safety or risk cultures related to AI and GAI design, development and

deployment, public informaon integrity risks, including impacts on democrac

processes, unknown long-term performance characteriscs of GAI.

Informaon Integrity, Dangerous

or Violent Recommendaons

GV-1.3-006

Tie expected GAI behavior to trustworthy characteriscs.

AI Actors: Governance and Oversight

2

13

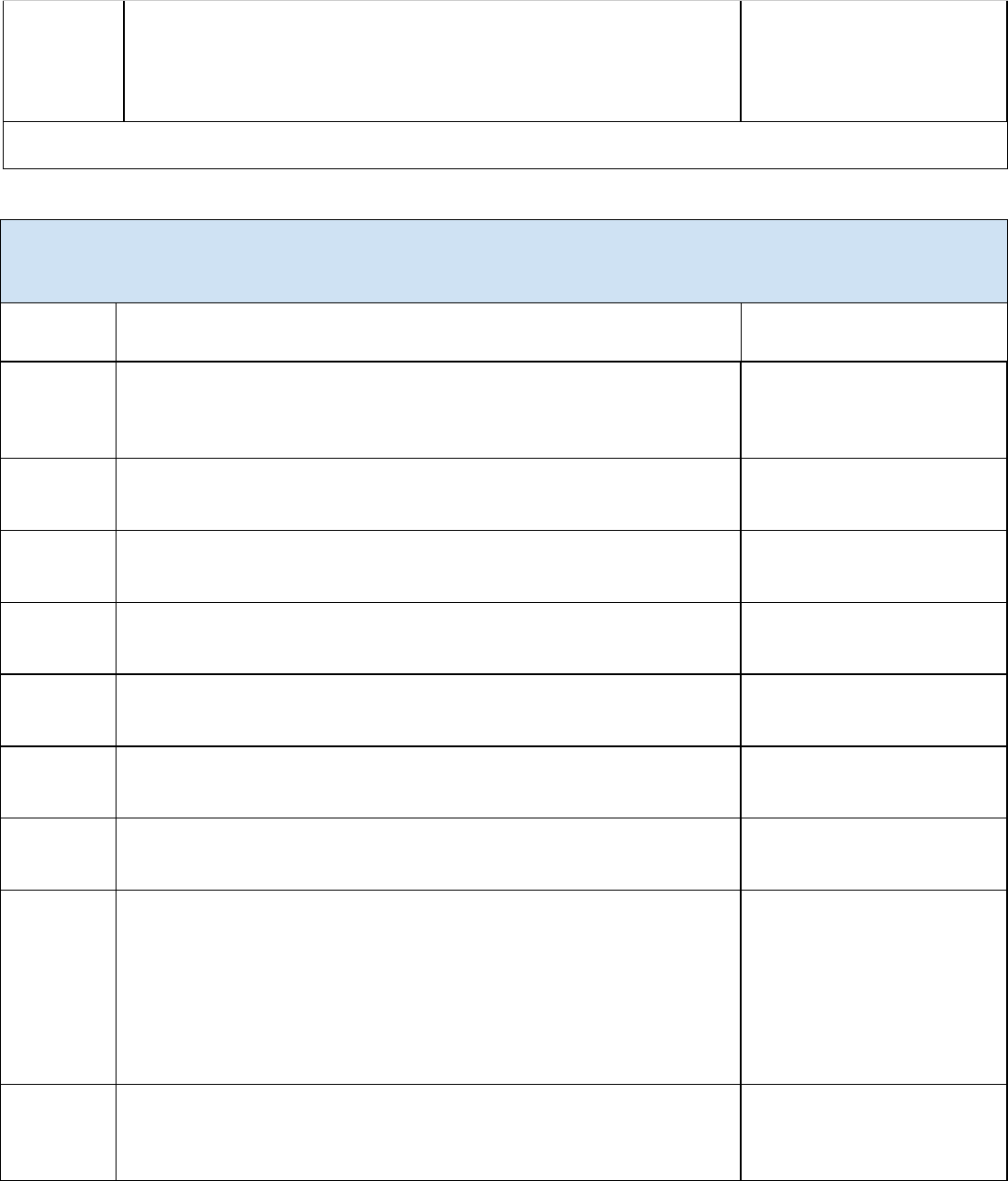

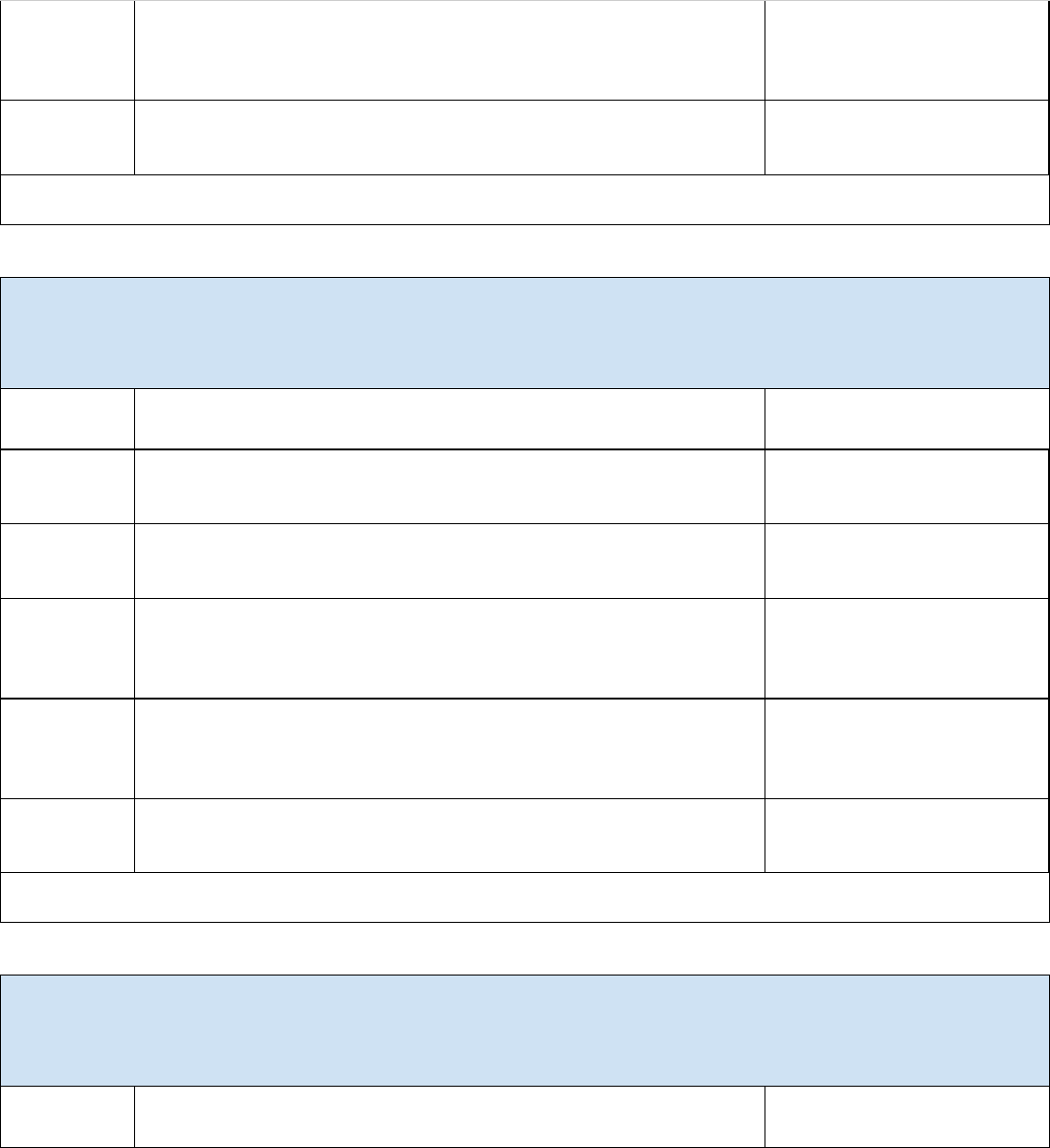

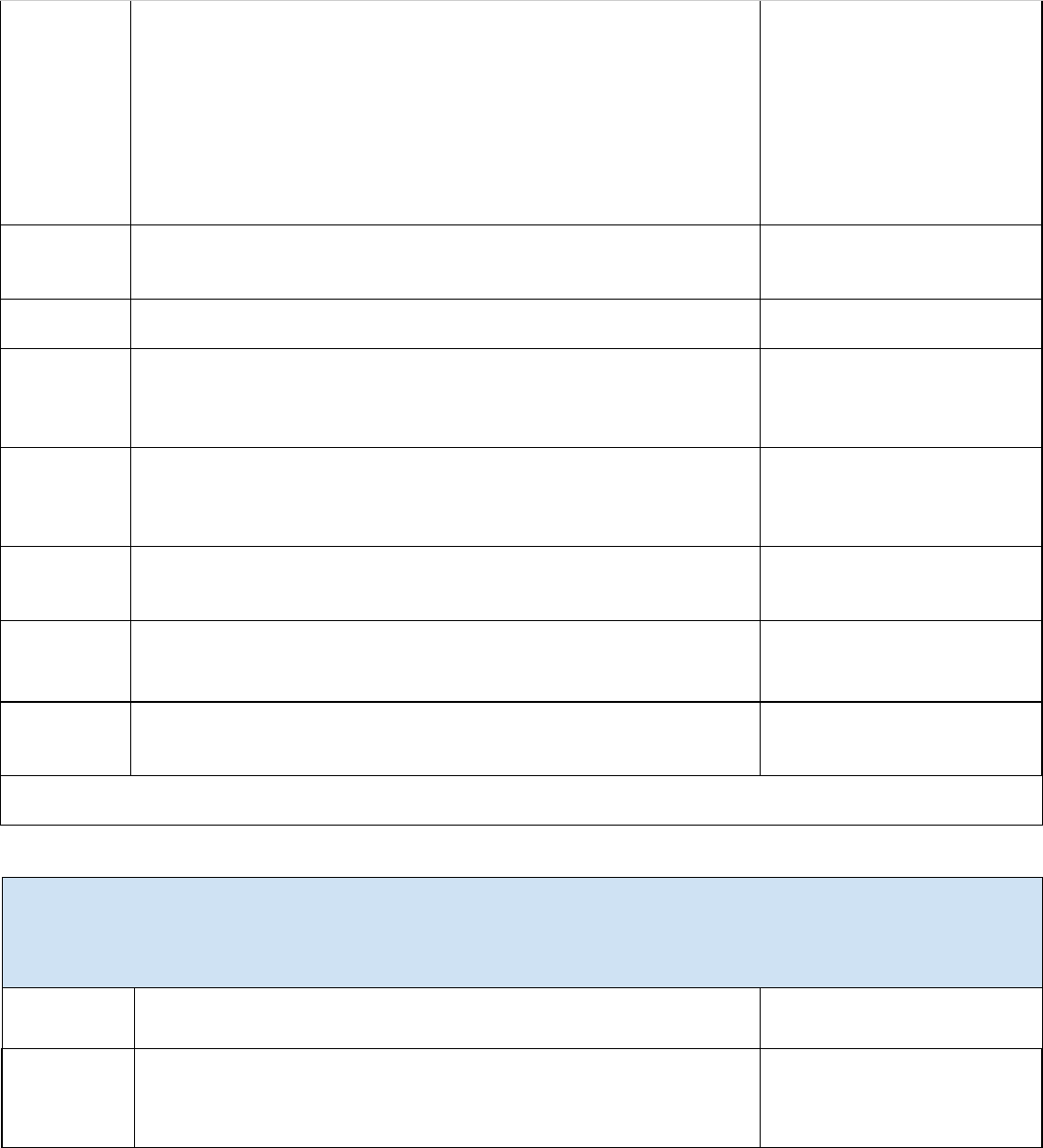

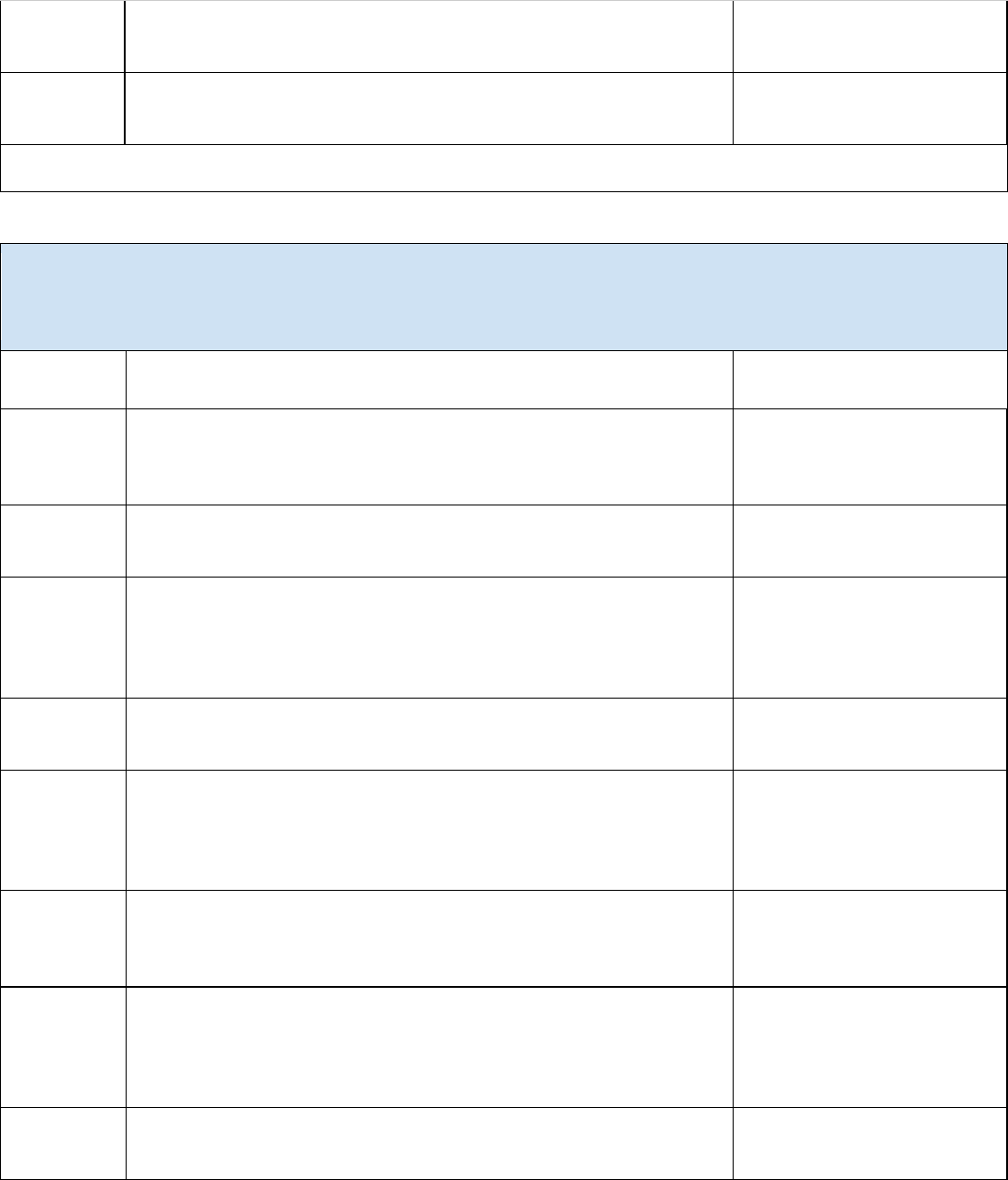

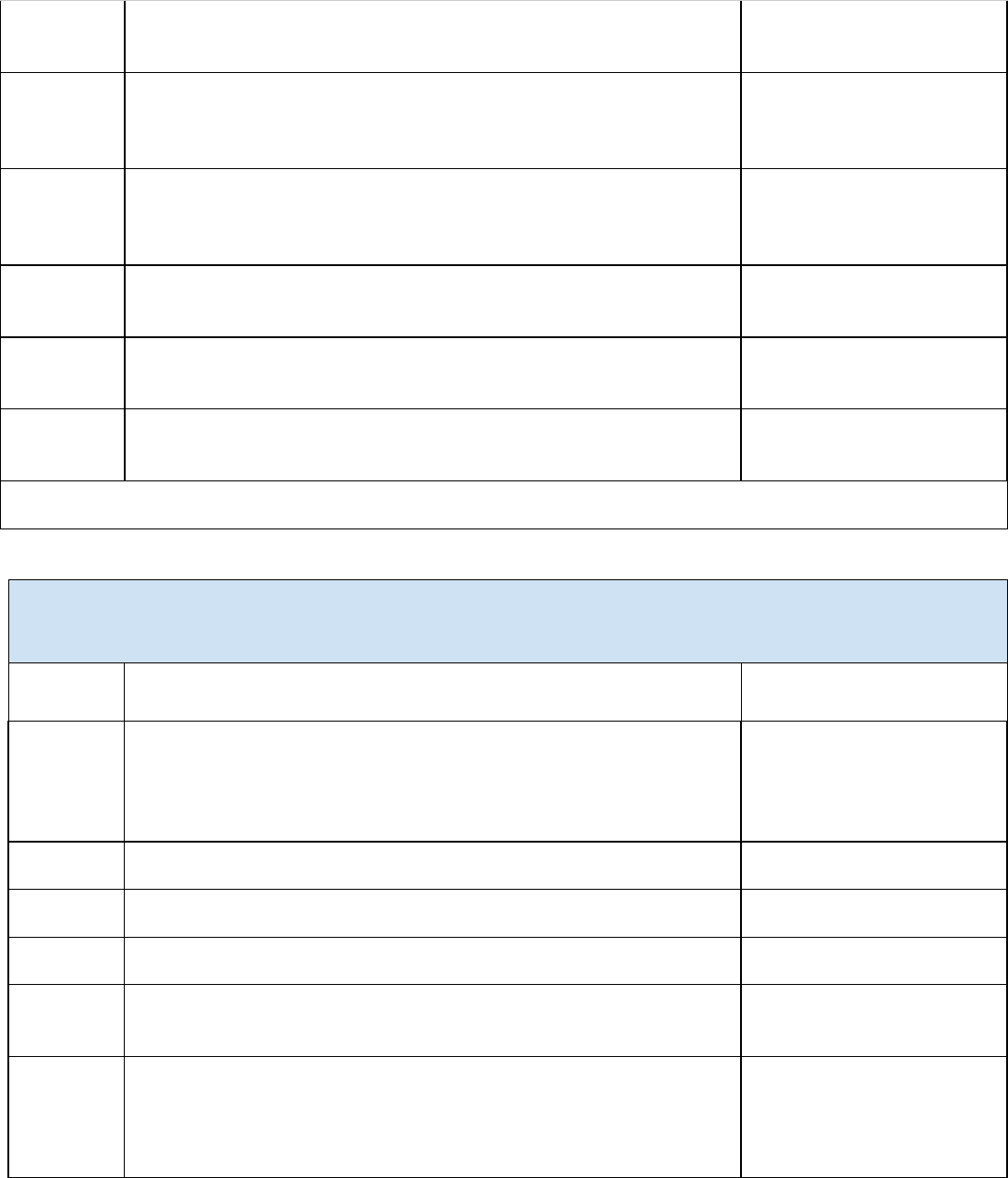

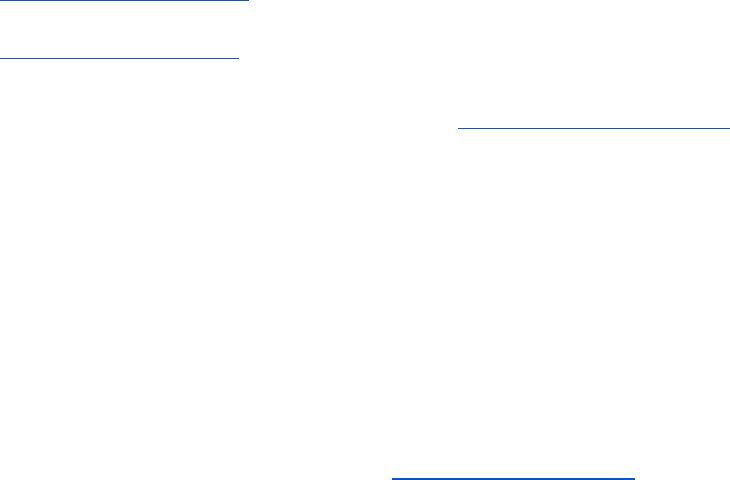

GOVERN 1.5: Ongoing monitoring and periodic review of the risk management process and its outcomes are planned, and

organizaonal roles and responsibilies are clearly dened, including determining the frequency of periodic review.

Acon ID

Acon

Risks

GV-1.5-001

Dene organizaonal responsibilies for content provenance monitoring and

incident response.

Informaon Integrity

GV-1.5-002

Develop or review exisng policies for authorizaon of third party plug-ins and

verify that related procedures are able to be followed.

Value Chain and Component

Integraon

GV-1.5-003

Establish and maintain policies and procedures for monitoring the eecveness of

content provenance for data and content generated across the AI system lifecycle.

Informaon Integrity

GV-1.5-004

Establish organizaonal policies and procedures for aer acon reviews of GAI

system incident response and incident disclosures, to idenfy gaps; Update

incident response and incident disclosure processes as required.

Human AI Conguraon

GV-1.5-005

Establish policies for periodic review of organizaonal monitoring and incident

response plans based on impacts and in line with organizaonal risk tolerance.

Informaon Security,

Confabulaon

GV-1.5-006

Maintain a long term document retenon policy to keep full history for auding,

invesgaon, or improving content provenance methods.

Informaon Integrity

GV-1.5-007

Verify informaon sharing and feedback mechanisms among individuals and

organizaons regarding any negave impact from AI systems due to content

provenance issues.

Informaon Integrity

GV-1.5-008

Verify that review procedures include analysis of cascading impacts of GAI system

outputs used as inputs to third party plug-ins or other systems.

Value Chain and Component

Integraon

AI Actors: Governance and Oversight, Operaon and Monitoring

1

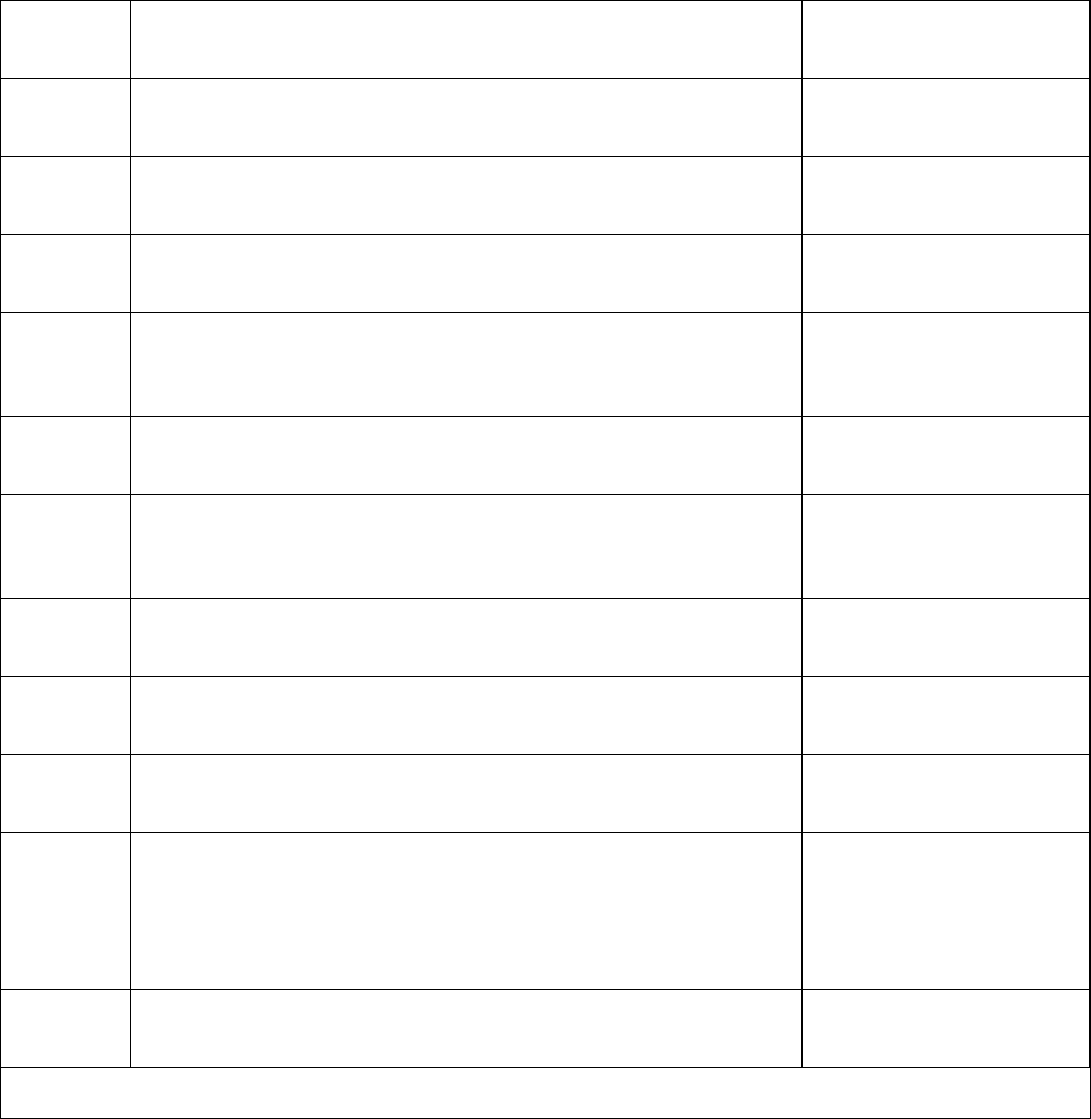

*GOVERN 1.6: Mechanisms are in place to inventory AI systems and are resourced according to organizaonal risk priories.

Acon ID

Acon

Risks

GV-1.6-001

Dene any inventory exempons for GAI systems embedded into applicaon

soware in organizaonal policies.

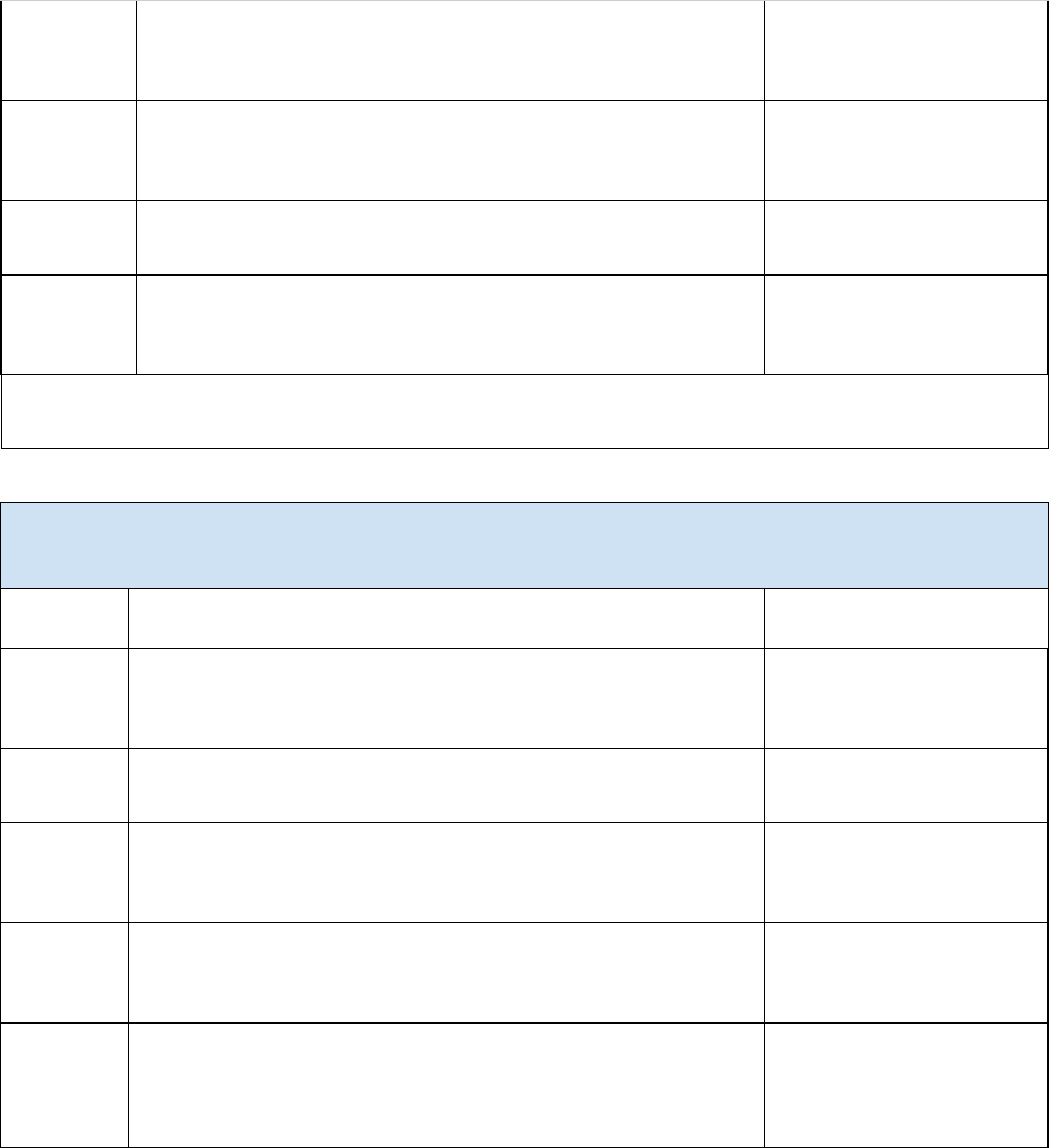

GV-1.6-002

Enumerate organizaonal GAI systems for incorporaon into AI system inventory

and adjust AI system inventory requirements to account for GAI risks.

GV-1.6-003

In addion to general model, governance, and risk informaon, consider the

following items in GAI system inventory entries: Acceptable use policies and policy

Data Privacy, Human AI

Conguraon, Informaon

14

excepons; Applicaon, Assumpons and limitaons of use, including enumeraon

of restricted uses; Business or model owners; Challenges for explainability,

interpretability, or transparency; Change management, maintenance, and

monitoring plans; Connecons or dependencies between other systems; Consent

informaon and noces; Data provenance informaon (e.g., source, signatures,

versioning, watermarks); Designaon of in-house or third party development;

Designaon of risk level; Disclosure informaon or noces; Incident response

plans; Known issues reported from internal bug tracking or external informaon

sharing resources (e.g., AI incident database, AVID, CVE, or OECD incident

monitor); Human oversight roles and responsibilies; Special rights and

consideraons for intellectual property, licensed works, or personal, privileged,

proprietary or sensive data; Time frame for valid deployment, including date of

last risk assessment; Underlying foundaon models, versions of underlying models,

and access modes; Updated hierarchy of idened and expected risks connected

to contexts of use.

Integrity, Intellectual Property,

Value Chain and Component

Integraon

GV-1.6-004

Inventory recently decommissioned systems, systems with imminent deployment

plans, and operaonal systems.

GV-1.6-005

Update policy denions for AI systems, models, qualitave tools or similar to

account for GAI systems.

AI Actors: Governance and Oversight

1

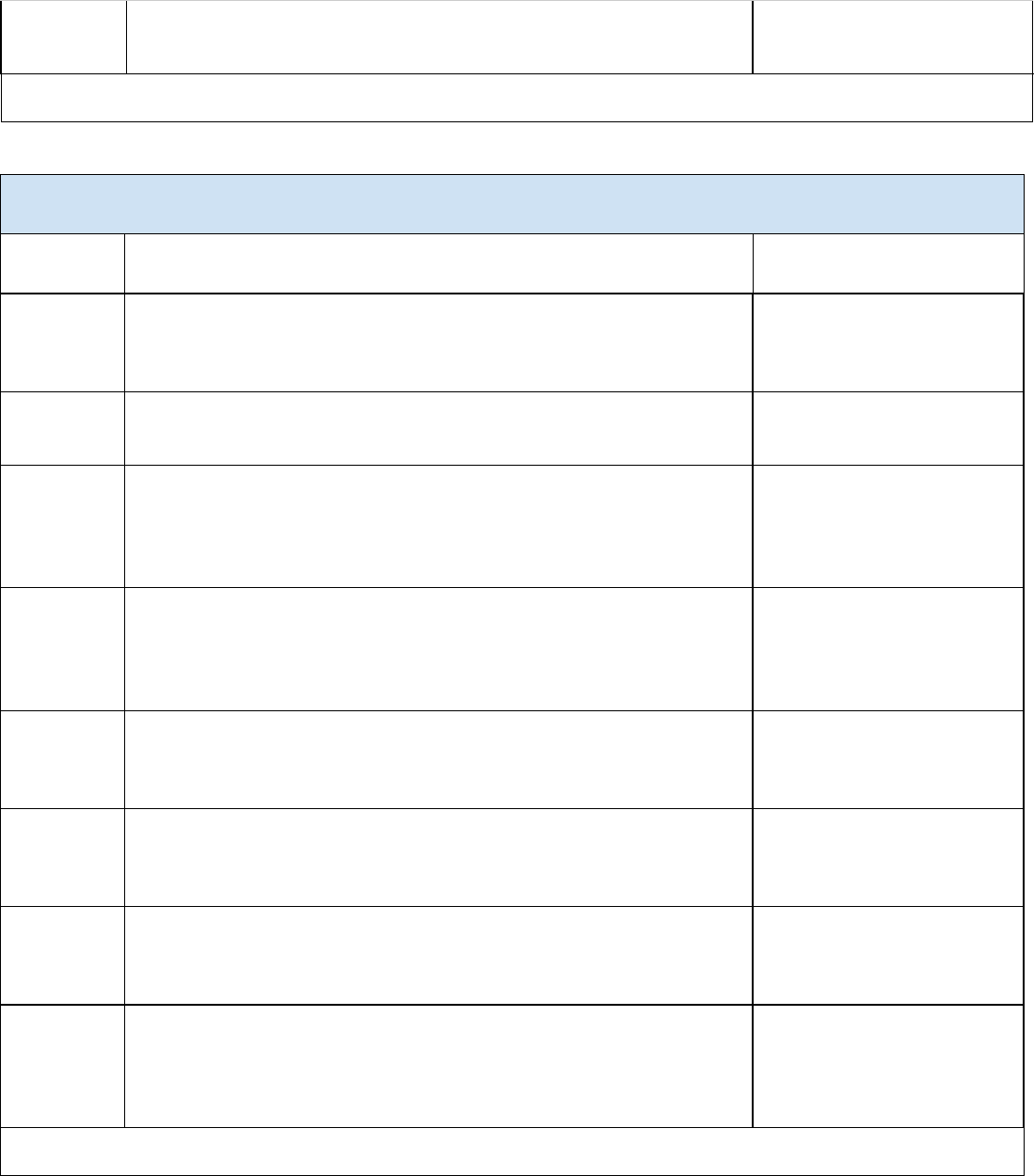

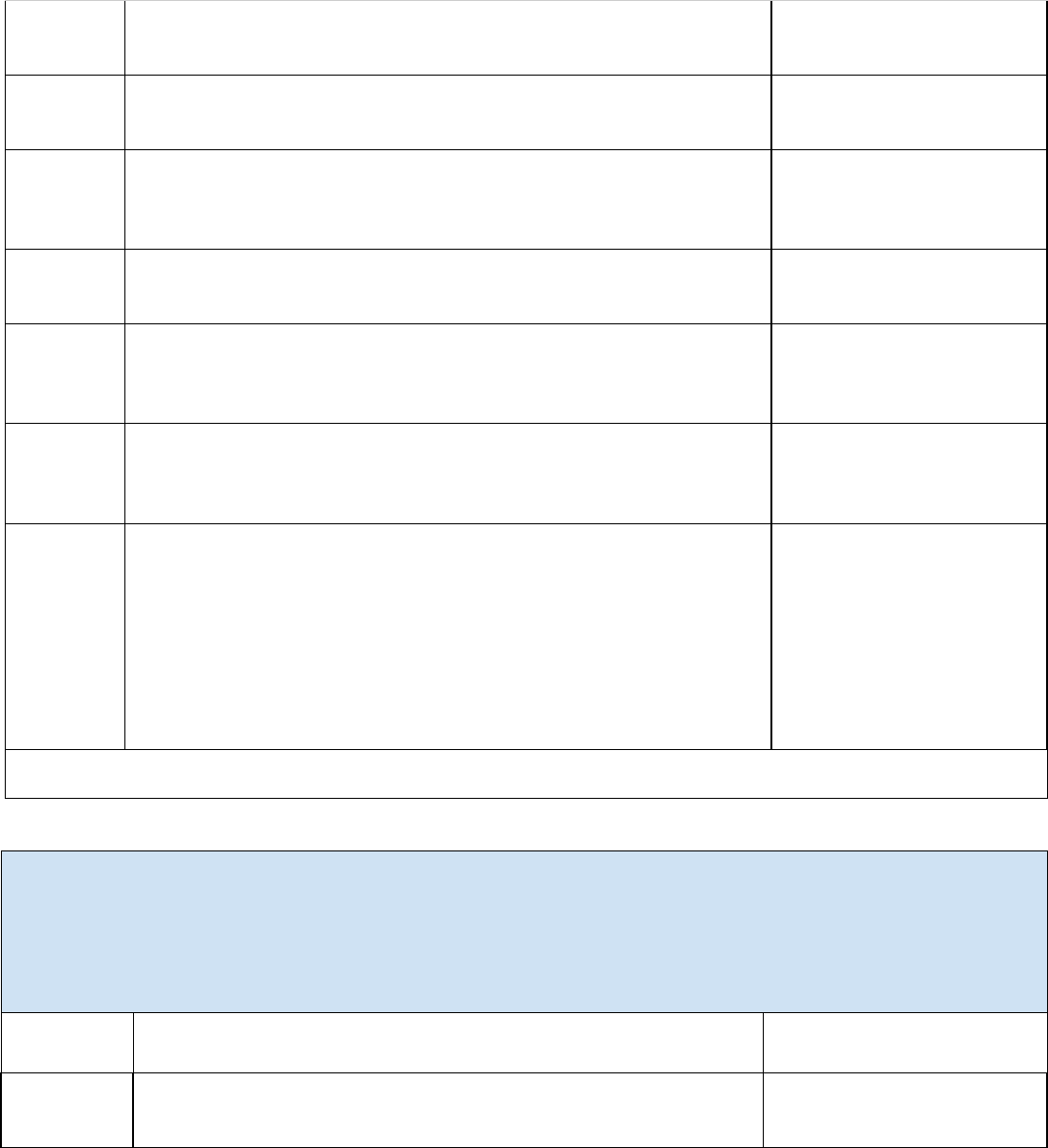

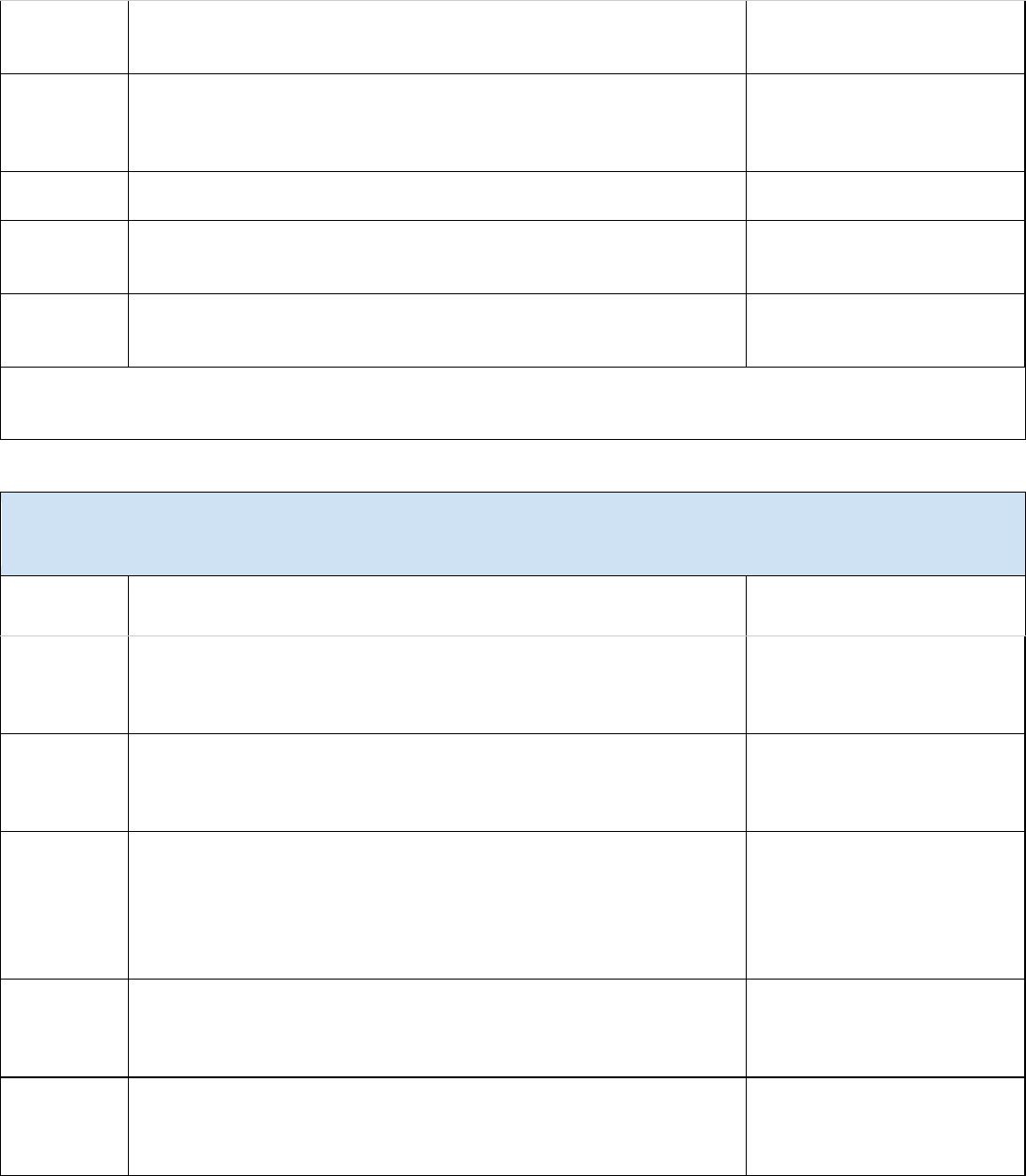

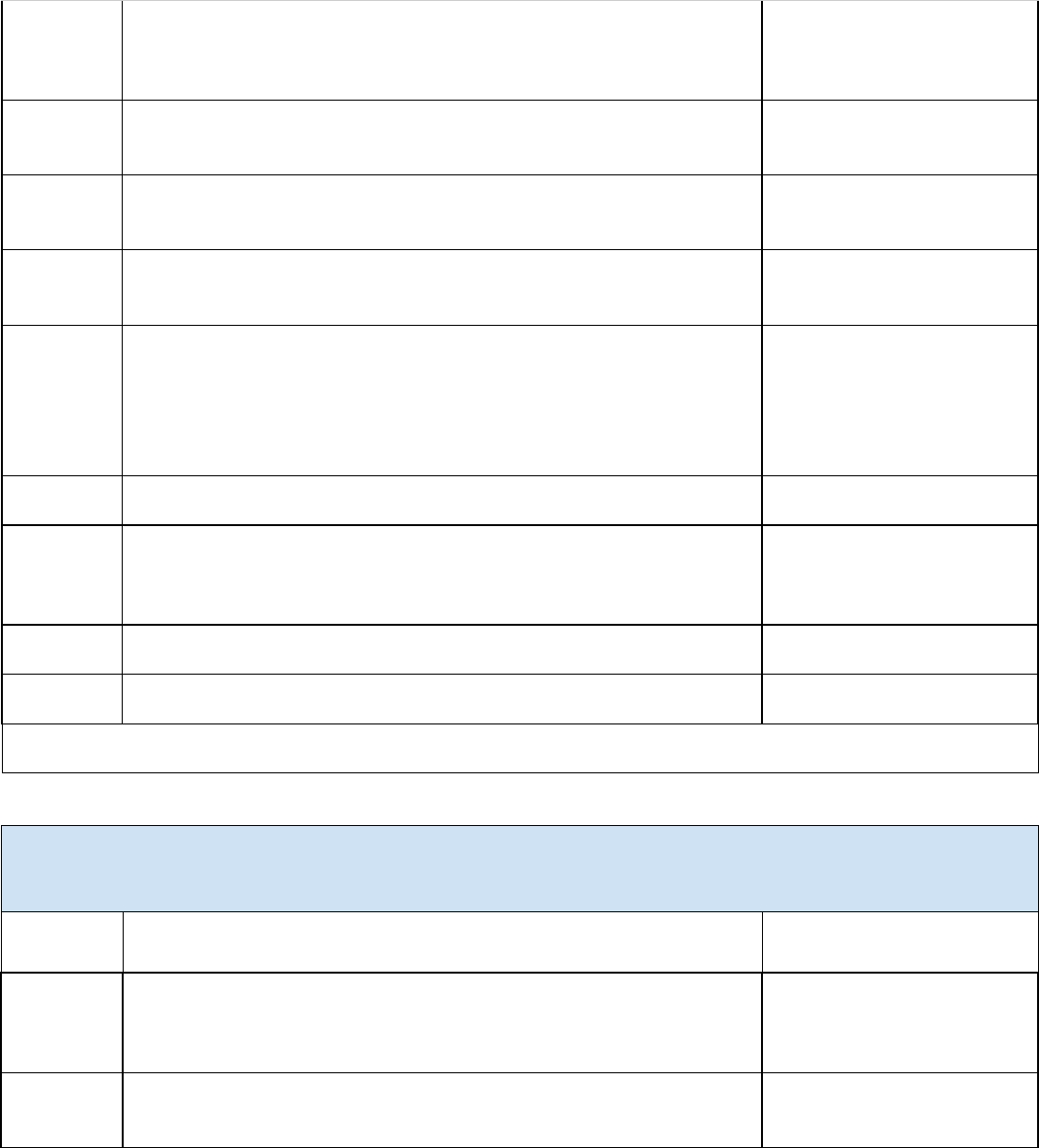

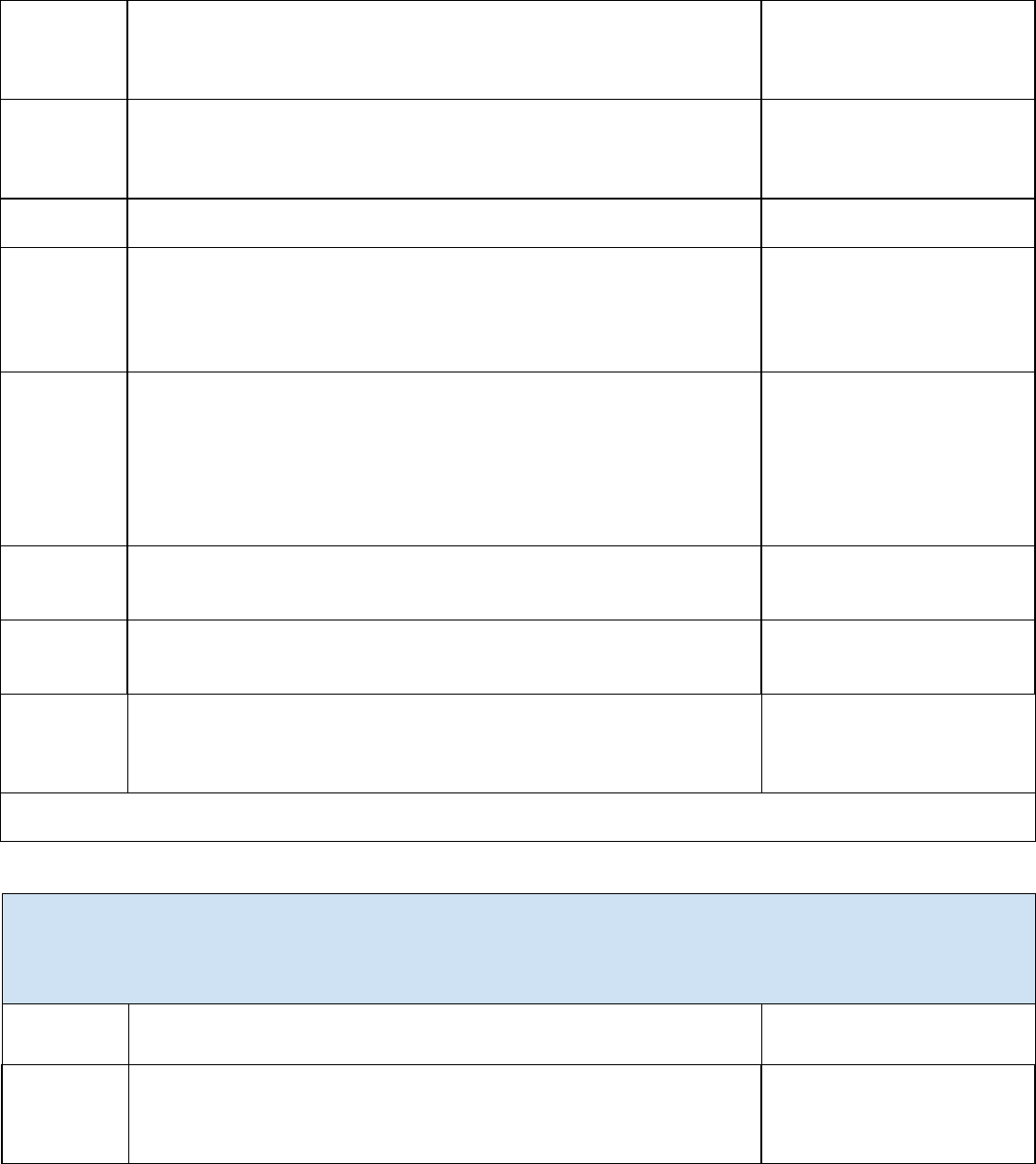

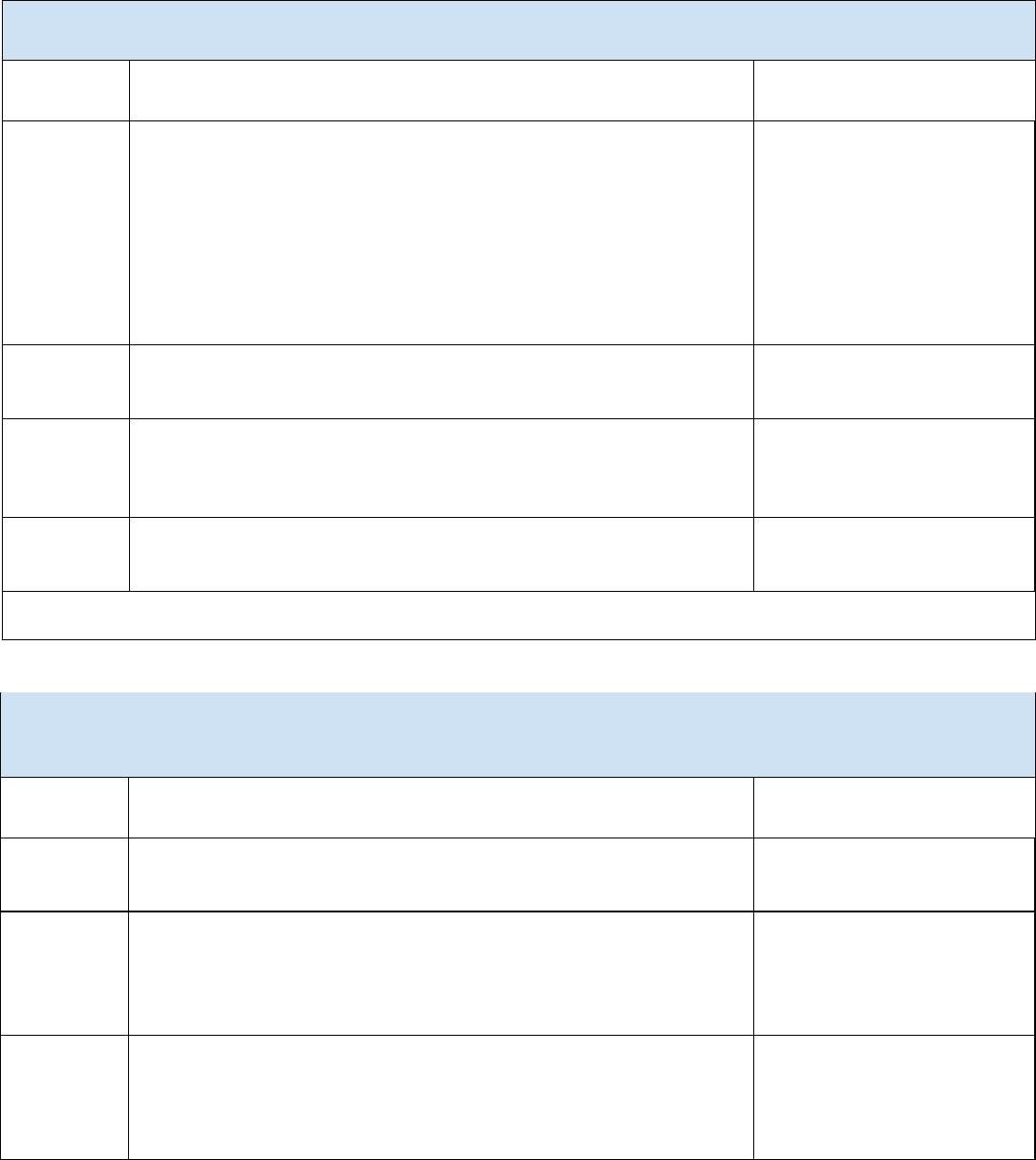

GOVERN 1.7: Processes and procedures are in place for decommissioning and phasing out AI systems safely and in a manner that

does not increase risks or decrease the organizaon’s trustworthiness.

Acon ID

Acon

Risks

GV-1.7-001

Allocate me and resources for staged decommissioning for GAI to avoid service

disrupons.

GV-1.7-002

Communicate decommissioning and support plans for GAI systems to AI actors

and users through various channels and maintain communicaon and associated

training protocols.

Human AI Conguraon

GV-1.7-003

Consider the following factors when decommissioning GAI systems: Clear

versioning of decommissioned and replacement systems; Contractual, legal, or

regulatory requirements; Data retenon requirements; Data security, e.g.,

Containment, protocols, Data leakage aer decommissioning; Dependencies

between upstream, downstream, or other data, internet of things (IOT) or AI

systems; Digital and physical arfacts; Recourse mechanisms for impacted users

or communies; Terminaon of related cloud or vendor services; Users’

emoonal entanglement with GAI funcons.

Human AI Conguraon,

Informaon Security, Value Chain

and Component Integraon

15

GV-1.7-004

Implement data security and privacy controls for stored decommissioned GAI

systems.

Data Privacy, Informaon Security

GV-1.7-005

Update exisng policies (e.g., enterprise record retenon policies) or establish

new policies for the decommissioning of GAI systems.

AI Actors: AI Deployment, Operaon and Monitoring

1

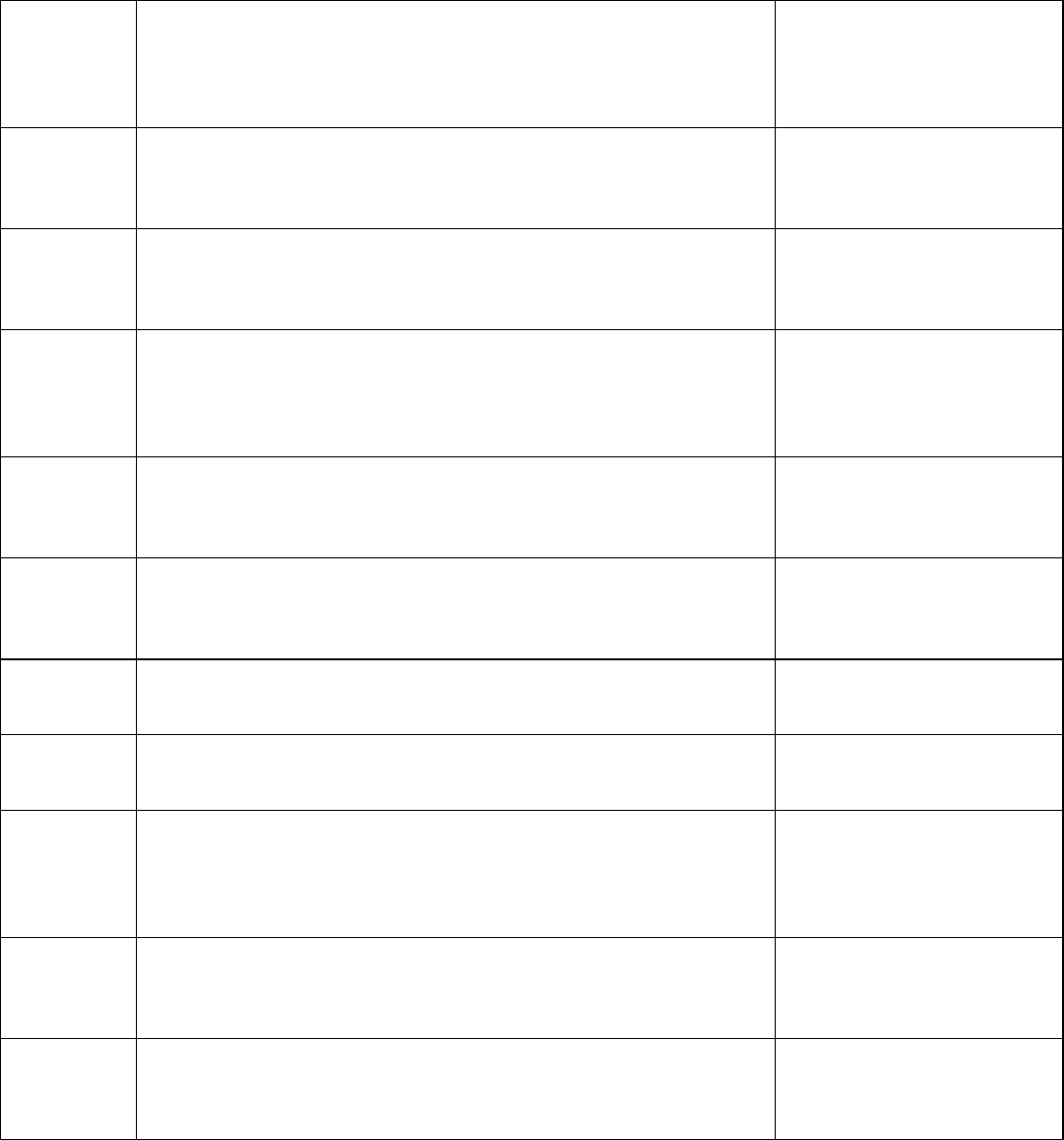

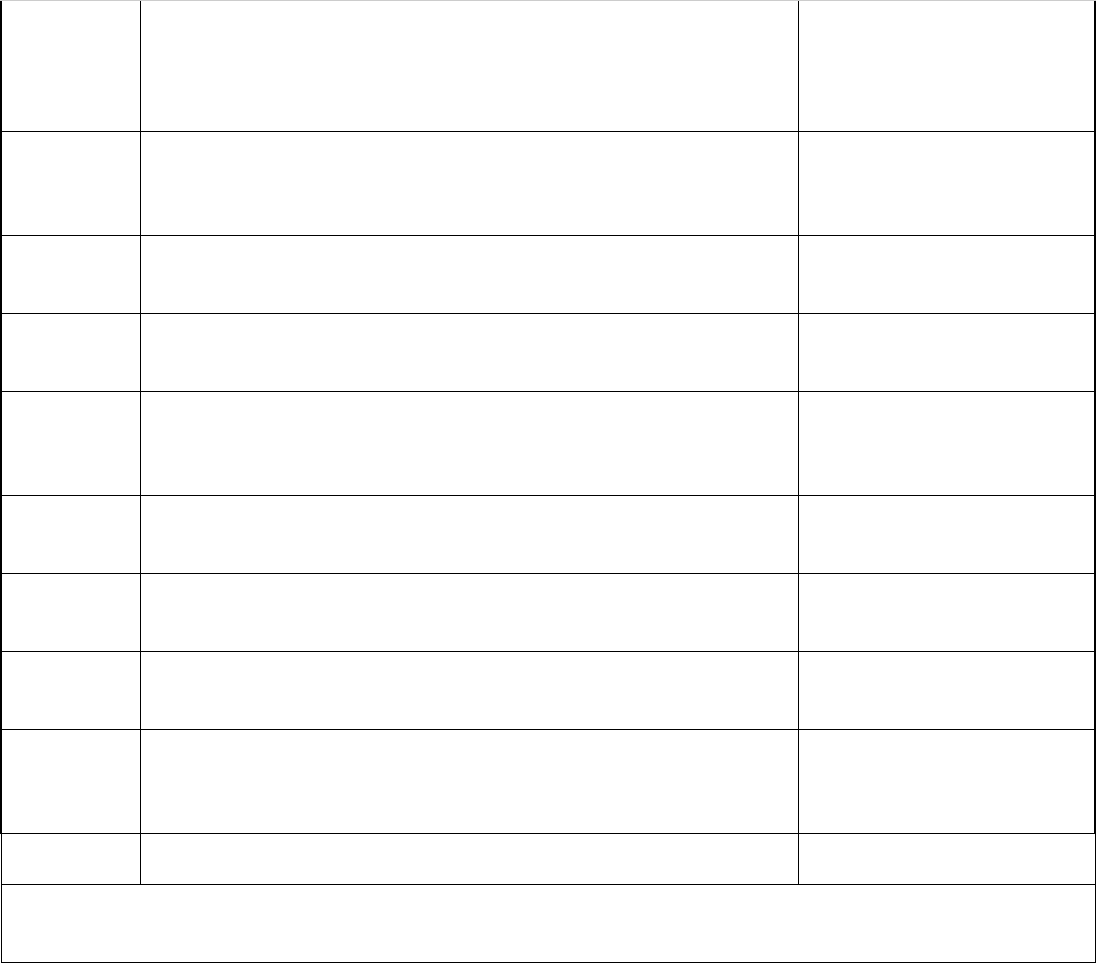

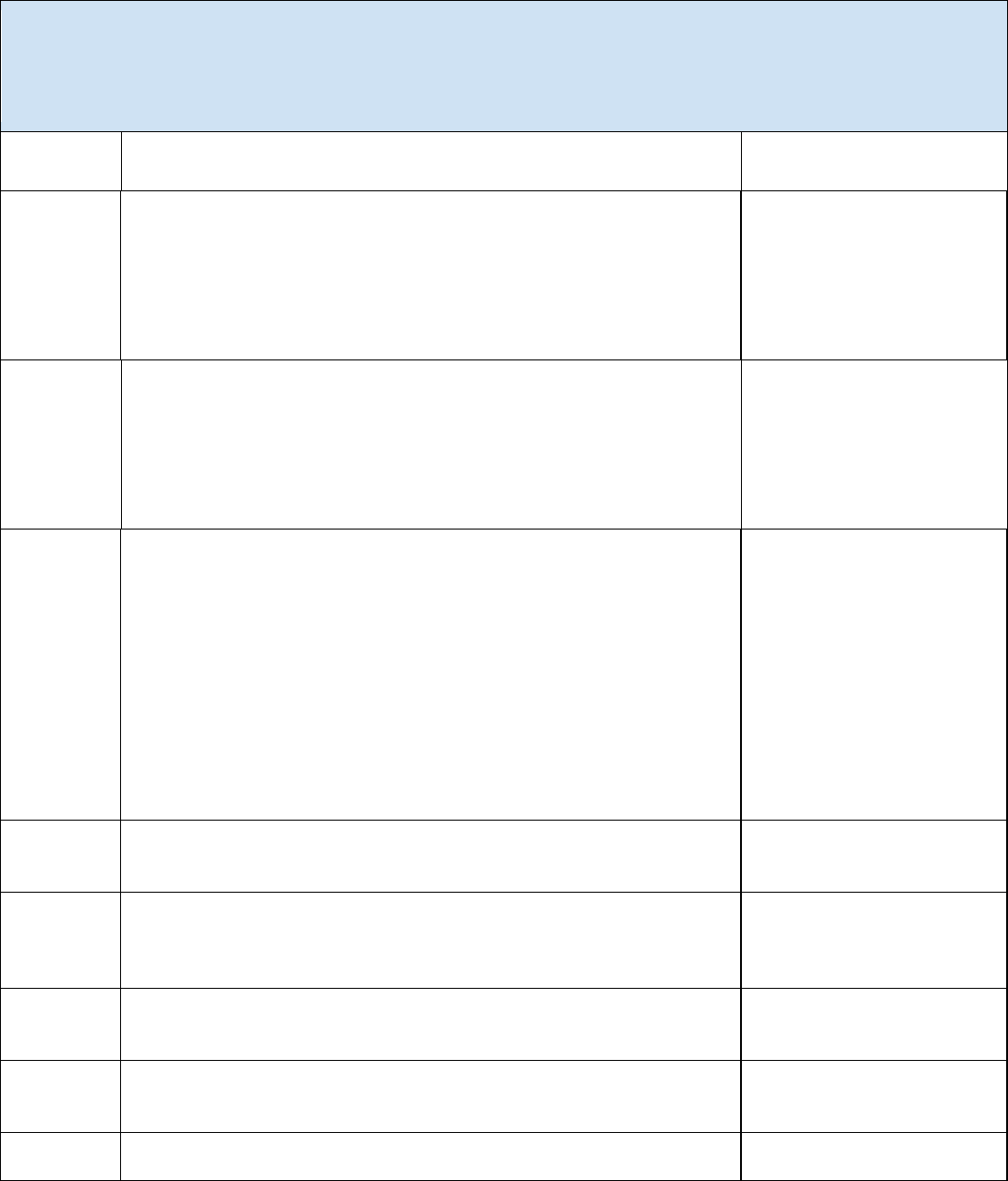

*GOVERN 2.1: Roles and responsibilies and lines of communicaon related to mapping, measuring, and managing AI risks are

documented and are clear to individuals and teams throughout the organizaon.

Acon ID

Acon

Risks

GV-2.1-001

Dene acceptable use cases and context under which the organizaon will

design, develop, deploy, and use GAI systems.

GV-2.1-002

Establish policies and procedures for GAI risk acceptance to downstream AI

actors.

Human AI Conguraon, Value

Chain and Component Integraon

GV-2.1-003

Establish policies to idenfy and disclose GAI system incidents to downstream AI

actors, including individuals potenally impacted by GAI outputs.

Human AI Conguraon, Value

Chain and Component Integraon

GV-2.1-004

Establish procedures to engage teams for GAI system incident response with

diverse composion and responsibilies based on the parcular incident type.

Toxicity, Bias, and Homogenizaon

GV-2.1-005

Establish processes to idenfy GAI system incidents and verify the AI actors

conducng these tasks demonstrate and maintain the appropriate skills and

training.

Human AI Conguraon

GV-2.1-006

Verify that incident disclosure plans include sucient GAI system context to

facilitate remediaon acons.

Human AI Conguraon

AI Actors: Governance and Oversight

2

*GOVERN 3.2: Policies and procedures are in place to dene and dierenate roles and responsibilies for human-AI

conguraons and oversight of AI systems.

Acon ID

Acon

Risks

GV-3.2-001

Bolster oversight of GAI systems with independent audits or assessments, or by

the applicaon of authoritave external standards.

16

GV-3.2-002

Consider adjustment of organizaonal roles and components across lifecycle

stages of large or complex GAI systems, including: AI actor, user, and community

feedback relang to GAI systems; Audit, validaon, and red-teaming of GAI

systems; GAI content moderaon; Data documentaon, labeling, preprocessing

and tagging; Decommissioning GAI systems; Decreasing risks of emoonal

entanglement between users and GAI systems; Decreasing risks of decepon by

GAI systems; Discouraging anonymous use of GAI systems; Enhancing

explainability of GAI systems; GAI system development and engineering;

Increased accessibility of GAI tools, interfaces, and systems, Incident response

and containment; Overseeing relevant AI actors and digital enes, including

management of security credenals and communicaon between AI enes;

Training GAI users within an organizaon about GAI fundamentals and risks.

Human AI Conguraon,

Informaon Security, Toxicity,

Bias, and Homogenizaon

GV-3.2-003

Dene acceptable use policies for the various categories of GAI interfaces,

modalies, and human-AI conguraons.

Human AI Conguraon

GV-3.2-004

Dene policies for the design of systems that possess human decision-making

powers.

Human AI Conguraon

GV-3.2-005

Establish policies for user feedback mechanisms in GAI systems.

Human AI Conguraon

GV-3.2-006

Establish policies to empower accountable execuves to oversee GAI system

adopon, use, and decommissioning.

GV-3.2-007

Establish processes to include and empower interdisciplinary team member

perspecves across the AI lifecycle.

Toxicity, Bias, and Homogenizaon

GV-3.2-008

Evaluate AI actor teams in consideraon of credenals, demographic

representaon, interdisciplinary diversity, and professional qualicaons.

Human AI Conguraon, Toxicity,

Bias, and Homogenizaon

AI Actors: AI Design

1

17

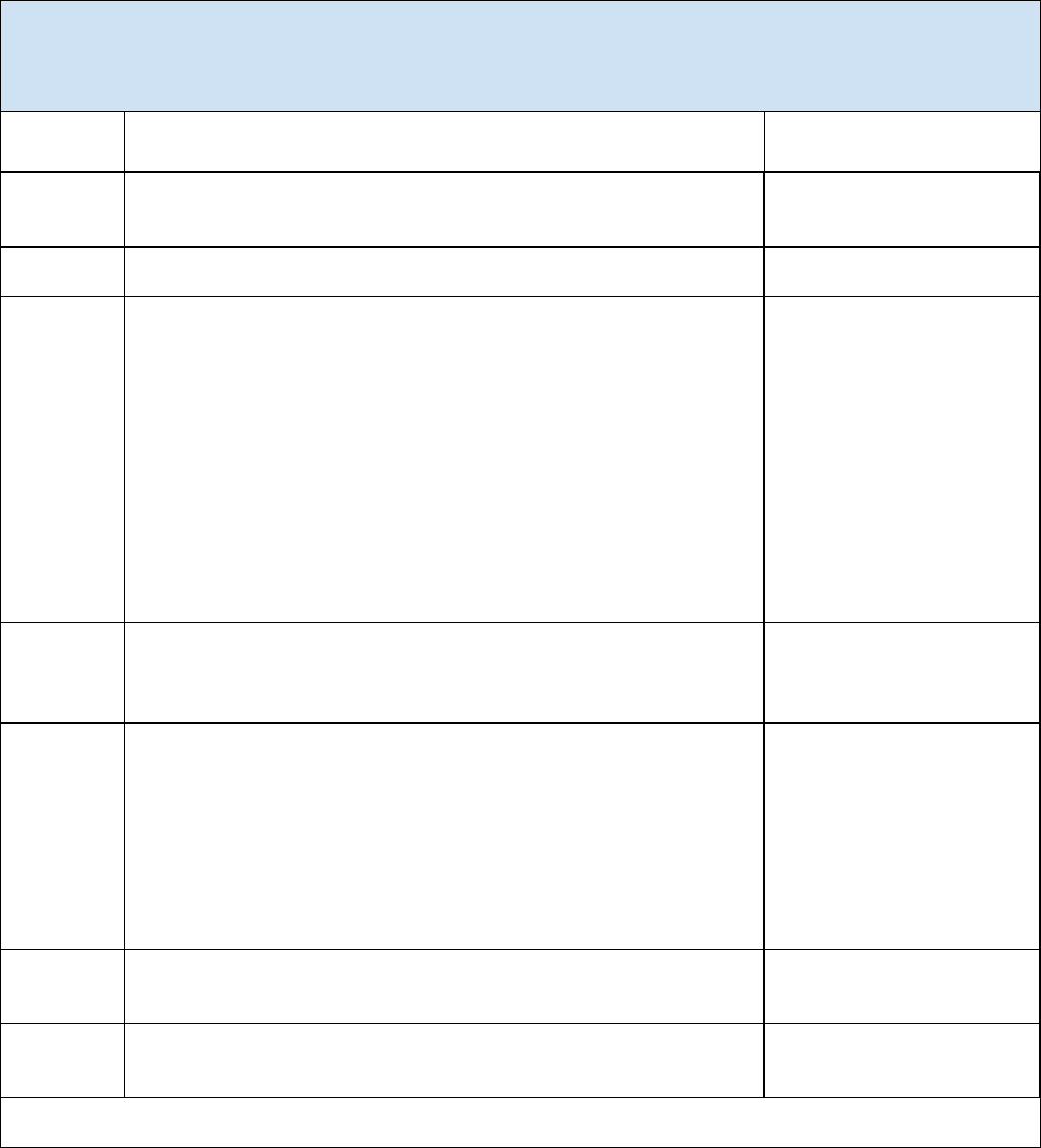

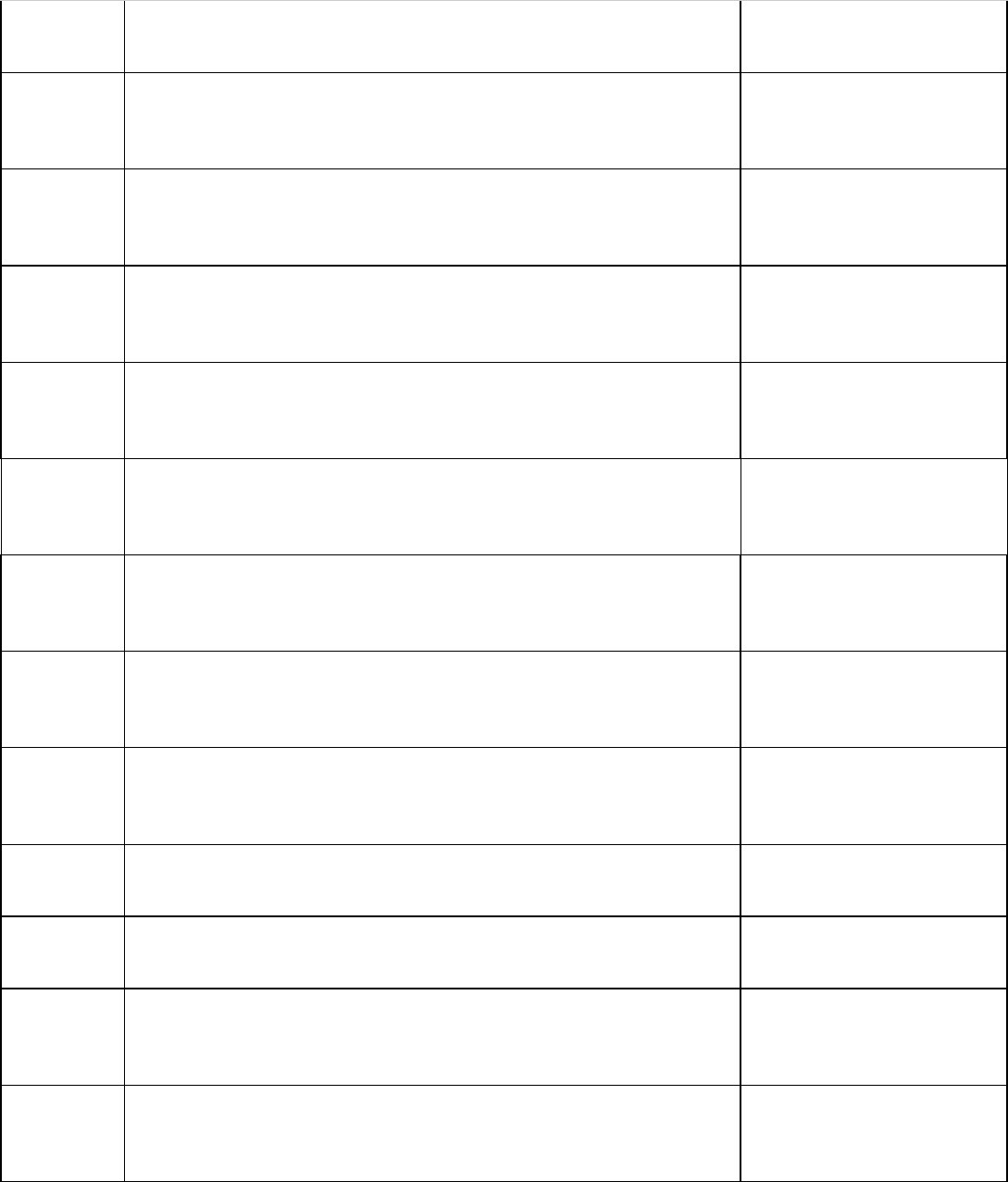

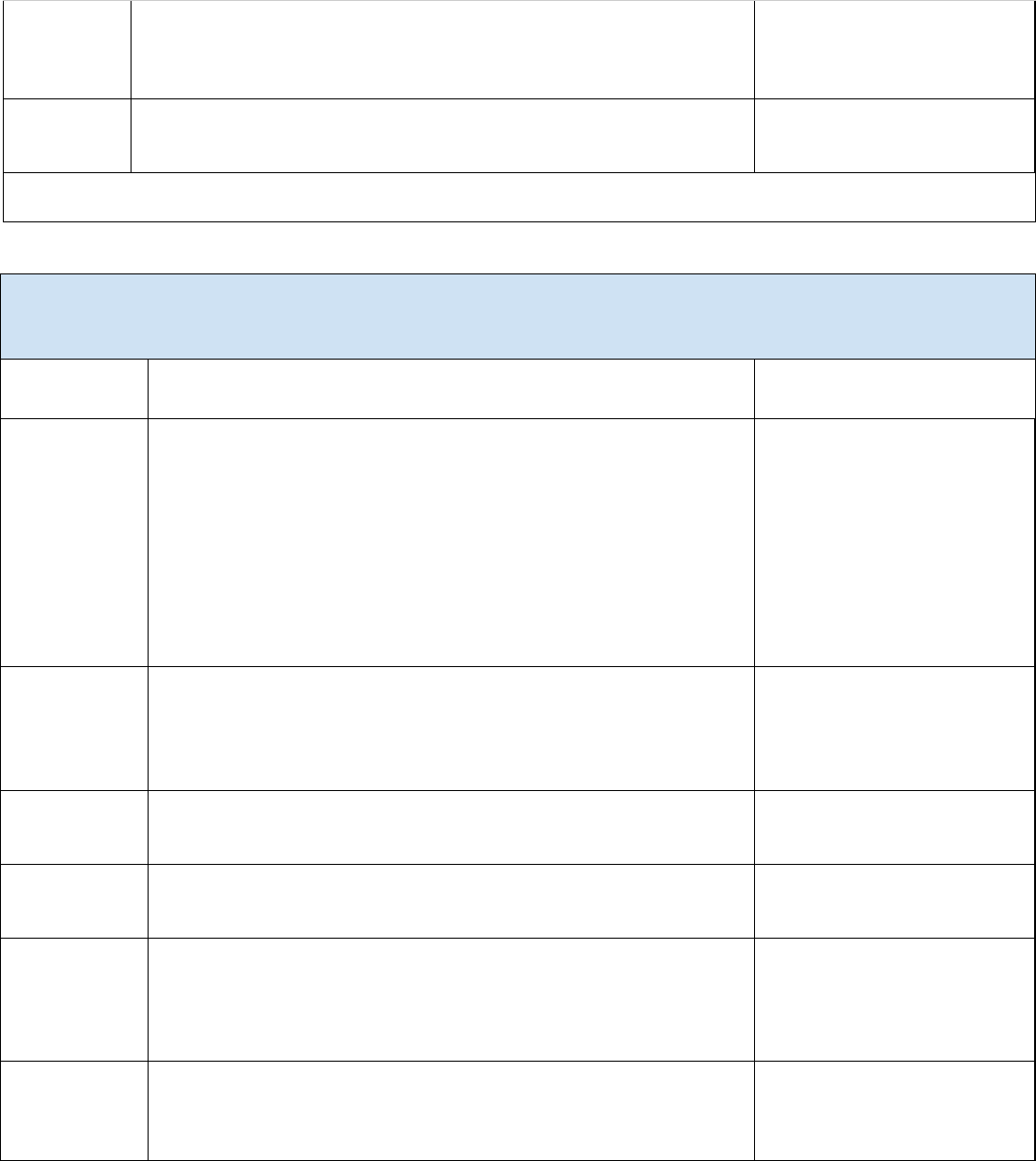

*GOVERN 4.1: Organizaonal policies and pracces are in place to foster a crical thinking and safety-rst mindset in the design,

development, deployment, and uses of AI systems to minimize potenal negave impacts.

Acon ID

Acon

Risks

GV-4.1-001

Establish criteria and acceptable use policies for the use of GAI in decision

making tasks in accordance with organizaonal risk tolerance, and other policies

laid out in the Govern funcon; to include detailed criteria for the kinds of

queries GAI models should refuse to respond to.

Human AI Conguraon

GV-4.1-002

Establish policies and procedures that address connual improvement processes

for risk measurement: Address general risks associated with a lack of

explainability and transparency in GAI systems by using ample documentaon

and techniques such as: applicaon of gradient-based aribuons,

occlusion/term reducon, counterfactual prompts and prompt engineering, and

analysis of embeddings; Assess and update risk measurement approaches at

regular cadences.

GV-4.1-003

Establish policies, procedures, and processes detailing risk measurement in

context of use with standardized measurement protocols and structured public

feedback exercises such as AI red-teaming or independent external audits.

GV-4.1-004

Establish policies, procedures, and processes for oversight funcons (e.g., senior

leadership, legal, compliance, and risk) across the GAI lifecycle, from problem

formulaon and supply chains to system decommission.

Value Chain and Component

Integraon

GV-4.1-005

Establish policies, procedures, and processes that promote eecve challenge of

AI system design, implementaon, and deployment decisions via mechanisms

such as three lines of defense, to minimize risks arising from workplace culture

(e.g., conrmaon bias, funding bias, groupthink, over-reliance on metrics).

Toxicity, Bias, and Homogenizaon

GV-4.1-006

Incorporate GAI governance policies into exisng incident response,

whistleblower, vendor or investment due diligence, acquision, procurement,

reporng or internal audit policies.

Value Chain and Component

Integraon

AI Actors: AI Deployment, AI Design, AI Development, Operaon and Monitoring

1

*GOVERN 4.2: Organizaonal teams document the risks and potenal impacts of the AI technology they design, develop, deploy,

evaluate, and use, and they communicate about the impacts more broadly.

Acon ID

Acon

Risks

18

GV-4.2-001

Develop policies, guidelines, and pracces for monitoring organizaonal and

third-party impact assessments (data, labels, bias, privacy, models, algorithms,

errors, provenance techniques, security, legal compliance, output, etc.) to

migate risk and harm.

Confabulaon, Data Privacy,

Informaon Integrity, Informaon

Security, Value Chain and

Component Integraon, Toxicity,

Bias, and Homogenizaon,

Dangerous or Violent

Recommendaons

GV-4.2-002

Establish clear roles and responsibilies for inter-organizaonal incident

response and communicaon for GAI systems that involve mulple organizaons

involved in dierent aspects of the GAI system lifecycle.

GV-4.2-003

Establish clearly dened terms of use and terms of service.

Intellectual Property

GV-4.2-004

Establish criteria for ad-hoc impact assessments based on incident reporng or

new use cases for the GAI system.

GV-4.2-005

Establish organizaonal roles, policies, and procedures for communicang and

reporng GAI system risks and terms of use or service, relevant for dierent AI

actors.

Human AI Conguraon,

Intellectual Property

GV-4.2-006

Establish policies and procedures to document new ways AI actors interact with

the GAI system.

Human AI Conguraon

GV-4.2-007

Establish policies and procedures to monitor compliance with established terms

of service and use.

Intellectual Property

GV-4.2-008

Establish policies to align organizaonal and third-party assessments with

regulatory and legal compliance regarding content provenance.

Informaon Integrity, Value Chain

and Component Integraon

GV-4.2-009

Establish policies to incorporate adversarial examples and other provenance

aacks in AI model training processes to enhance resilience against aacks.

Informaon Integrity, Informaon

Security

GV-4.2-010

Establish processes to monitor and idenfy misuse, unforeseen use cases, risks

of the GAI system and potenal impacts of those risks (leveraging GAI system use

case inventory).

CBRN Informaon, Confabulaon,

Dangerous or Violent

Recommendaons

GV-4.2-011

Implement standardized documentaon of GAI system risks and potenal

impacts.

GV-4.2-012

Include relevant AI Actors in the GAI system risk idencaon process.

Human AI Conguraon

GV-4.2-013

Verify that downstream GAI system impacts (such as the use of third-party plug-

ins) are included in the impact documentaon process.

Value Chain and Component

Integraon

19

GV-4.2-014

Verify that the organizaonal list of risks related to the use of the GAI system are

updated based on unforeseen GAI system incidents.

AI Actors: AI Deployment, AI Design, AI Development, Operaon and Monitoring

1

*GOVERN 4.3: Organizaonal pracces are in place to enable AI tesng, idencaon of incidents, and informaon sharing.

Acon ID

Acon

Risks

GV-4.3-001

Allocate resources and adjust adopon, development, and implementaon

meframes to enable independent measurement, connuous monitoring, and

fulsome informaon sharing for GAI system risks.

GV-4.3-002

Develop standardized documentaon templates for ecient review of risk

measurement results.

GV-4.3-003

Establish minimum thresholds for performance and review as part of

deployment approval (“go/”no-go”) policies, procedures, and processes, with

reviewed processes and approval thresholds reecng measurement of GAI

capabilies and risks.

GV-4.3-004

Establish organizaonal roles, policies, and procedures for communicang GAI

system incidents and performance to AI actors and downstream stakeholders, via

community or ocial resources (e.g., AI Incident Database, AVID, AI Ligaon

Database, CVE, OECD Incident Monitor, or others).

Human AI Conguraon, Value

Chain and Component Integraon

GV-4.3-005

Establish policies and procedures for pre-deployment GAI system tesng that

validates organizaonal capability to capture GAI system incident reporng

criteria.

GV-4.3-006

Establish policies, procedures, and processes that bolster independence of risk

management and measurement funcons (e.g., independent reporng chains,

aligned incenves).

GV-4.3-007

Establish policies, procedures, and processes that enable and incenvize in-

context risk measurement via standardized measurement and structured public

feedback approaches.

GV-4.3-008

Organizaonal procedures idenfy the minimum set of criteria necessary for GAI

system incident reporng such as: System ID (auto-generated most likely), Title,

Reporter, System/Source, Data Reported, Date of Incident, Descripon,

Impact(s), Stakeholder(s) Impacted.

AI Actors: Fairness and Bias, Governance and Oversight, Operaon and Monitoring, TEVV

2

20

*GOVERN 5.1: Organizaonal policies and pracces are in place to collect, consider, priorize, and integrate feedback from those

external to the team that developed or deployed the AI system regarding the potenal individual and societal impacts related to AI

risks.

Acon ID

Acon

Risks

GV-5.1-001

Allocate me and resources for outreach, feedback, and recourse processes in

GAI system development.

GV-5.1-002

Disclose interacons with GAI systems to users prior to interacve acvies.

Human AI Conguraon

GV-5.1-003

Establish policy, guidelines and processes that: Engage independent experts to

audit models, data sources, licenses, algorithms, and other system components,

Consider sponsoring or engaging in community- based exercises (e.g., bug

bounes, hackathons, compeons) where AI Actors assess and benchmark the

performance of AI systems, including the robustness of content provenance

management under various condions; Document data sources, licenses,

training methodologies, and trade-os considered in the design of AI systems;

Establish mechanisms, plaorms or channels (e.g., user interfaces, web portals,

forums) for independent experts, users, or community members to provide

feedback related to AI systems; Adjudicate and implement relevant feedback at a

regular cadence, Establish transparency mechanisms to track the origin of data

and generated content; Audit and validate these mechanisms.

Human AI Conguraon,

Informaon Integrity, Intellectual

Property

GV-5.1-004

Establish processes to bolster internal AI actor culture in alignment with

organizaonal principles and norms and to empower exploraon of GAI

limitaons beyond development sengs.

Human AI Conguraon, Toxicity,

Bias, and Homogenizaon

GV-5.1-005

Establish the following GAI-specic policies and procedures for independent AI

Actors: Connuous improvement processes for increasing explainability and

migang other risks; Impact assessments, Incenves for internal AI actors to

provide feedback and conduct independent risk management acvies;

Independent management and reporng structures for AI actors engaged in

model and system audit, validaon, and oversight; TEVV processes for the

eecveness of feedback mechanisms employing parcipaon rates, resoluon

me, or similar measurements.

Human AI Conguraon

GV-5.1-006

Provide thorough instrucons for GAI system users to provide feedback and

understand recourse mechanisms.

Human AI Conguraon

GV-5.1-007

Standardize user feedback about GAI system behavior, risks and limitaons for

ecient adjudicaon and incorporaon.

Human AI Conguraon

AI Actors: AI Design, AI Impact Assessment, Aected Individuals and Communies, Governance and Oversight

1

21

*GOVERN 6.1: Policies and procedures are in place that address AI risks associated with third-party enes, including risks of

infringement of a third-party’s intellectual property or other rights.

Acon ID

Acon

Risks

GV-6.1-001

Categorize dierent types of GAI content with associated third party risks (i.e.,

copyright, intellectual property, data privacy).

Data Privacy, Intellectual Property,

Value Chain and Component

Integraon

GV-6.1-002

Conduct due diligence on third-party enes and end-users from those enes

before entering into agreements with them (e.g., checking references, reviewing

their content handling processes, etc.).

Human AI Conguraon, Value

Chain and Component Integraon

GV-6.1-003

Conduct joint educaonal acvies and events in collaboraon with third-pares

to promote content provenance best pracces.

Informaon Integrity, Value Chain

and Component Integraon

GV-6.1-004

Conduct regular audits of third-party enes to ensure compliance with

contractual agreements.

Value Chain and Component

Integraon

GV-6.1-005

Dene and communicate organizaonal roles and responsibilies for GAI

acquision, human resources, procurement, and talent management processes

in policies and procedures.

Human AI Conguraon

GV-6.1-006

Develop an incident response plan for third pares specically tailored to

address content provenance incidents or breaches and regularly test and update

the incident response plan with feedback form external and third party

stakeholders.

Data Privacy, Informaon

Integrity, Informaon Security,

Value Chain and Component

Integraon

GV-6.1-007

Develop and validate approaches for measuring the success of content

provenance management eorts with third pares (e.g., incidents detected and

response mes).

Informaon Integrity, Value Chain

and Component Integraon

GV-6.1-008

Develop risk tolerance and criteria to quantavely assess and compare the level

of risk associated with dierent third-party enes (i.e., reputaon, track record,

security measure, and the sensivity of the content they handle).

Informaon Security, Value Chain

and Component Integraon

GV-6.1-009

Dra and maintain well-dened contracts and service level agreements (SLAs)

that specify content ownership, usage rights, quality standards, security

requirements, and content provenance expectaons.

Informaon Integrity, Informaon

Security

GV-6.1-010

Establish processes to maintain awareness of evolving risks, technologies, and

best pracces in content provenance management.

Informaon Integrity

22

GV-6.1-011

Implement a supplier risk assessment framework to connuously evaluate and

monitor third-party enes’ performance and adherence to content provenance

standards and technologies (e.g., digital signatures, watermarks, cryptography,

etc.) to detect anomalies and unauthorized changes; services acquision and

supply chain risk management; legal compliance (e.g., copyright, trademarks,

and data privacy laws).

Data Privacy, Informaon

Integrity, Informaon Security,

Intellectual Property, Value Chain

and Component Integraon

GV-6.1-012

Include audit clauses in contracts that allow the organizaon to verify

compliance with content provenance requirements.

Informaon Integrity

GV-6.1-013

Inventory all third-party enes with access to organizaonal content and

establish approved GAI technology and service provider lists.

Value Chain and Component

Integraon

GV-6.1-014

Maintain detailed records of content provenance, including sources, mestamps,

metadata, and any changes made by third pares.

Informaon Integrity, Value Chain

and Component Integraon

GV-6.1-015

Provide proper training to internal employees on content provenance best

pracces, risks, and reporng procedures.

Informaon Integrity

GV-6.1-016

Update and integrate due diligence processes for GAI acquision and

procurement vendor assessments to include intellectual property, data privacy,

security, and other risks. For example, update policies to: Address roboc

process automaon (RPA), soware-as-a-service (SAAS), and other soluons that

may rely on embedded GAI technologies; Address ongoing audits, assessments,

and alerng, dynamic risk assessments, and real-me reporng tools for

monitoring third-party GAI risks; Address accessibility, accommodaons, or opt-

outs in GAI vendor oerings; Address commercial use of GAI outputs and

secondary use of collected data by third pares; Assess vendor risk controls for

intellectual property infringements and data privacy; Consider policy

adjustments across GAI modeling libraries, tools and APIs, ne-tuned models,

and embedded tools; Establish ownership of GAI acquision and procurement

processes; Include relevant organizaonal funcons in evaluaons of GAI third

pares (e.g., legal, informaon technology (IT), security, privacy, fair lending);

Include instrucon on intellectual property infringement and other third-party

GAI risks in GAI training for AI actors; Screen GAI vendors, open source or

proprietary GAI tools, or GAI service providers against incident or vulnerability

databases; Screen open source or proprietary GAI training data or outputs

against patents, copyrights, trademarks and trade secrets.

Data Privacy, Human AI

Conguraon, Informaon

Security, Intellectual Property,

Value Chain and Component

Integraon, Toxicity, Bias, and

Homogenizaon

GV-6.1-017

Update GAI acceptable use policies to address proprietary and open-source GAI

technologies and data, and contractors, consultants, and other third-party

personnel.

Intellectual Property, Value Chain

and Component Integraon

GV-6.1-018

Update human resource and talent management standards to address

acceptable use of GAI.

Human AI Conguraon

23

GV-6.1-019

Update third-party contracts, service agreements, and warranes to address GAI

risks; Contracts, service agreements, and similar documents may include GAI-

specic indemnity clauses, dispute resoluon mechanisms, and other risk

controls.

Value Chain and Component

Integraon

AI Actors: Operaon and Monitoring, Procurement, Third-party enes

1

GOVERN 6.2: Conngency processes are in place to handle failures or incidents in third-party data or AI systems deemed to be

high-risk.

Acon ID

Acon

Risks

GV-6.2-001

Apply exisng organizaonal risk management policies, procedures, and

documentaon processes to third-party GAI data and systems, including open

source data and soware.

Intellectual Property, Value Chain

and Component Integraon

GV-6.2-002

Document downstream GAI system impacts (e.g., the use of third-party plug-ins)

for third party dependencies.

Value Chain and Component

Integraon

GV-6.2-003

Document GAI system supply chain risks to idenfy over-reliance on third party

data or GAI systems and to idenfy fallbacks.

Value Chain and Component

Integraon

GV-6.2-004

Document incidents involving third-party GAI data and systems, including open

source data and soware.

Intellectual Property, Value Chain

and Component Integraon

GV-6.2-005

Enumerate organizaonal GAI system risks based on external dependencies on

third-party data or GAI systems.

Value Chain and Component

Integraon

GV-6.2-006

Establish acceptable use policies that idenfy dependencies, potenal impacts,

and risks associated with third-party data or GAI systems deemed high-risk.

Value Chain and Component

Integraon

GV-6.2-007

Establish conngency and communicaon plans to support fallback alternaves

for downstream users in the event the GAI system is disabled.

Human AI Conguraon, Value

Chain and Component Integraon

GV-6.2-008

Establish incident response plans for third-party GAI technologies deemed high-

risk: Align incident response plans with impacts enumerated in MAP 5.1;

Communicate third-party GAI incident response plans to all relevant AI actors;

Dene ownership of GAI incident response funcons; Rehearse third-party GAI

incident response plans at a regular cadence; Improve incident response plans

based on retrospecve learning; Review incident response plans for alignment

with relevant breach reporng, data protecon, data privacy, or other laws.

Data Privacy, Human AI

Conguraon, Informaon

Security, Value Chain and

Component Integraon, Toxicity,

Bias, and Homogenizaon

GV-6.2-009

Establish organizaonal roles, policies, and procedures for communicang with

data and GAI system providers regarding performance, disclosure of GAI system

inputs, and use of third-party data and GAI systems.

Human AI Conguraon, Value

Chain and Component Integraon

24

GV-6.2-010

Establish policies and procedures for connuous monitoring of third-party GAI

systems in deployment.

Value Chain and Component

Integraon

GV-6.2-011

Establish policies and procedures that address GAI data redundancy, including

model weights and other system arfacts.

Toxicity, Bias, and Homogenizaon

GV-6.2-012

Establish policies and procedures to test and manage risks related to rollover and

fallback technologies for GAI systems, acknowledging that rollover and fallback

may include manual processing.

GV-6.2-013

Idenfy and document high-risk third-party GAI technologies in organizaonal AI

inventories, including open-source GAI soware.

Intellectual Property, Value Chain

and Component Integraon

GV-6.2-014

Review GAI vendor documentaon for thorough instrucons, meaningful

transparency into data or system mechanisms, ample support and contact

informaon, and alignment with organizaonal principles.

Value Chain and Component

Integraon, Toxicity, Bias, and

Homogenizaon

GV-6.2-015

Review GAI vendor release cadences and roadmaps for irregularies and

alignment with organizaonal principles.

Value Chain and Component

Integraon, Toxicity, Bias, and

Homogenizaon

GV-6.2-016

Review vendor contracts and avoid arbitrary or capricious terminaon of crical

GAI technologies or vendor services and Non-standard terms that may amplify or

defer liability in unexpected ways and Unauthorized data collecon by vendors or

third-pares (e.g., secondary data use); Consider: Clear assignment of liability and

responsibility for incidents, GAI system changes over me (e.g., ne-tuning, dri,

decay); Request: Nocaon and disclosure for serious incidents arising from

third-party data and systems, Service line agreements (SLAs) in vendor contracts

that address incident response, response mes, and availability of crical support.

Human AI Conguraon,

Informaon Security, Value Chain

and Component Integraon

AI Actors: AI Deployment, Operaon and Monitoring, TEVV, Third-party enes

1

*MAP 1.1: Intended purposes, potenally benecial uses, context specic laws, norms and expectaons, and prospecve sengs in

which the AI system will be deployed are understood and documented. Consideraons include: the specic set or types of users

along with their expectaons; potenal posive and negave impacts of system uses to individuals, communies, organizaons,

society, and the planet; assumpons and related limitaons about AI system purposes, uses, and risks across the development or