ContentsContents

Text Analytics API Documentation

Overview

What is the Text Analytics API?

Language support

Pricing

What's new

Text Analytics FAQ

Quickstart

Samples

v3.0

C#

Python

Java

JavaScript

v3.1

C#

Python

Java

JavaScript

Responsible use of AI

Transparency notes

For Text Analytics

For Health

For Named Entity Recognition

(

NER

)

and Personally Identifying Information

(

PII

)

For Sentiment Analysis

For Key Phrase Analysis

For Language Detection

Integration and responsible use

Data, privacy, and security

How

-

to guides

Call the Text Analytics API

Language detection

Sentiment analysis and opinion mining

Key phrase extraction

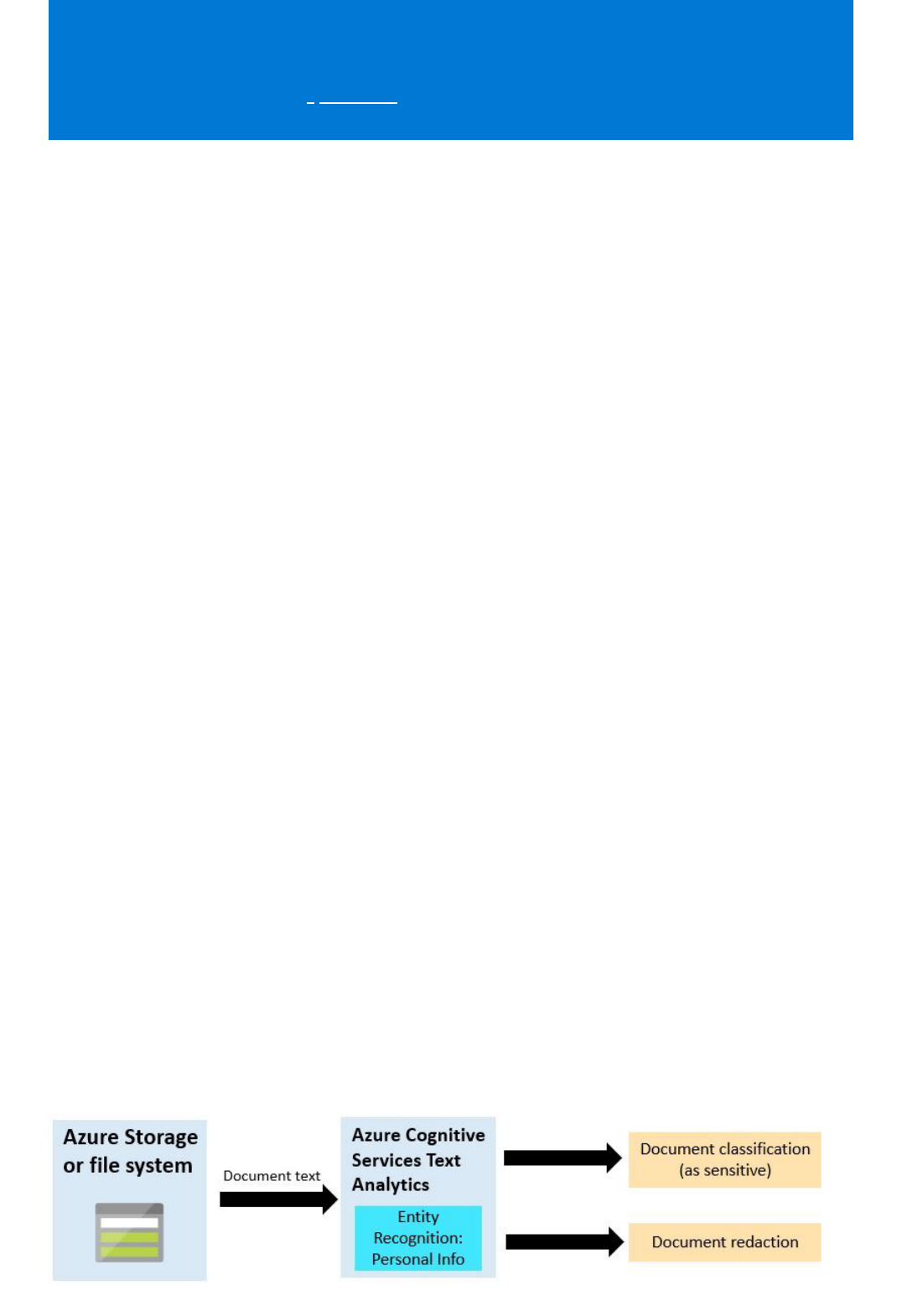

Named entity recognition and PII

Text Analytics for health

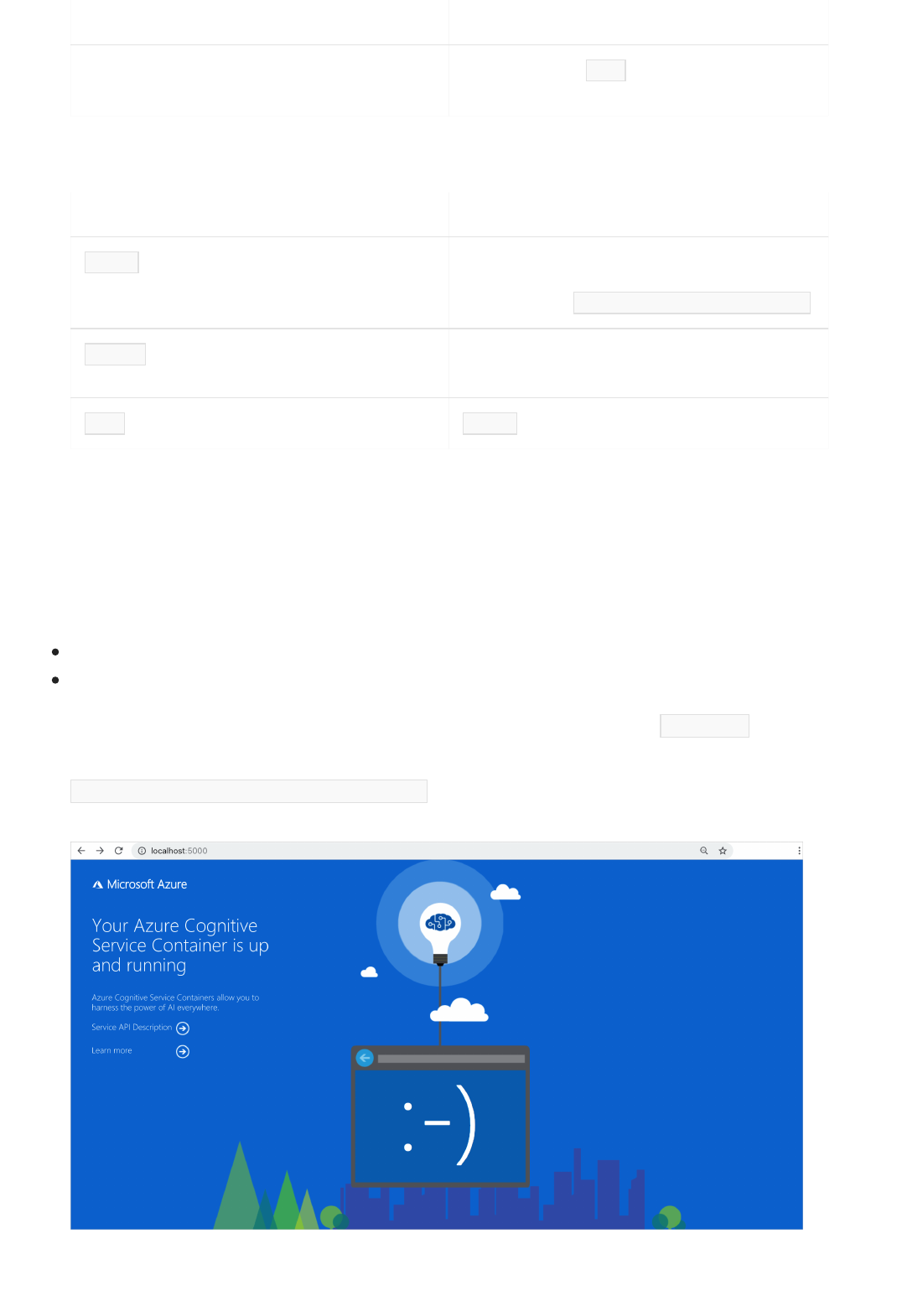

Use containers

Install and run containers

Configure containers

Use container instances

Use kubernetes service

(

AKS

)

All Cognitive Services containers documentation

Enterprise readiness

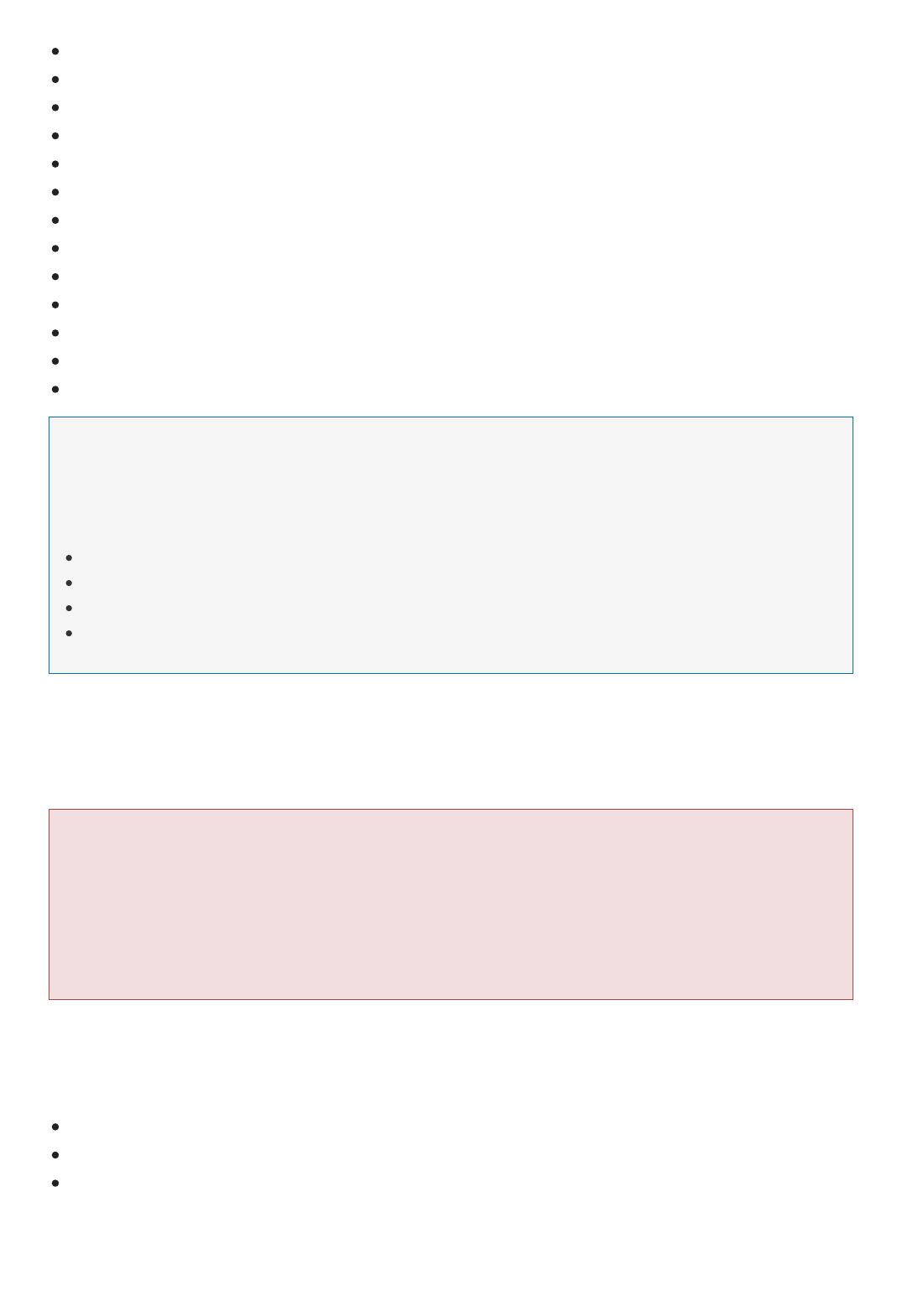

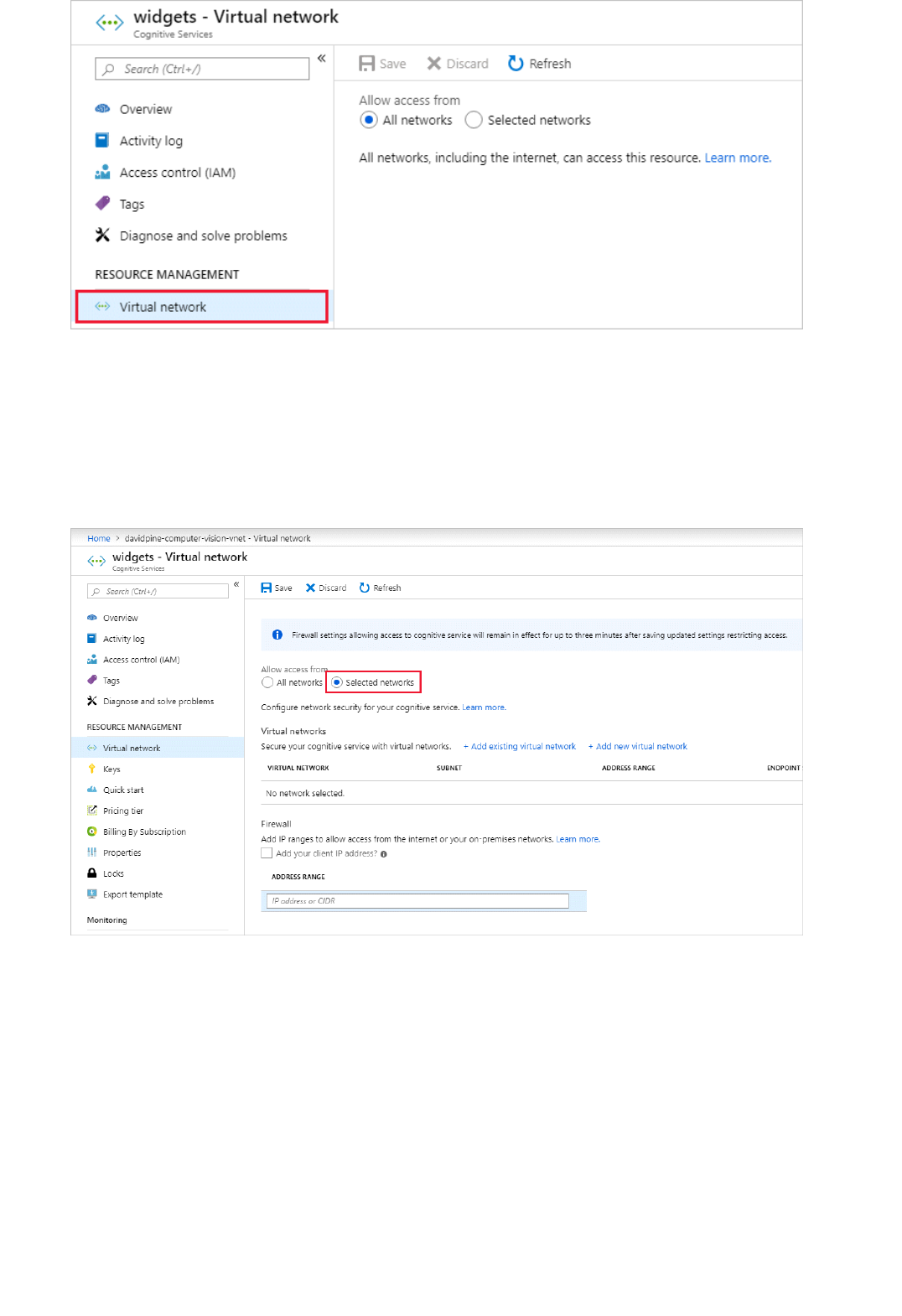

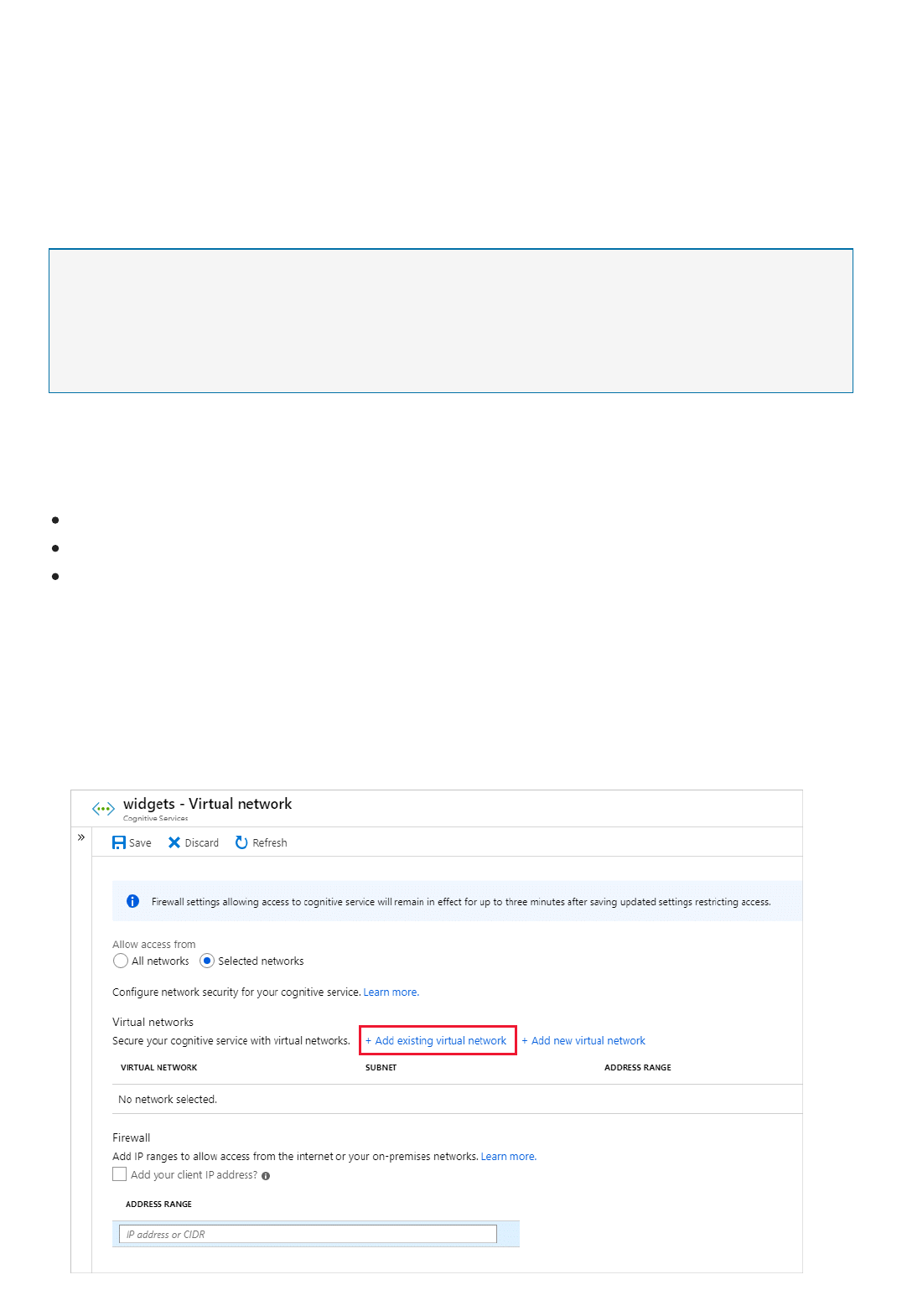

Set up Virtual Networks

Use Azure AD authentication

Migrate to version 3 of the API

Concepts

Example user scenarios

Named entity types

Unicode encodings and text offsets

Data limits

Model versioning

Tutorials

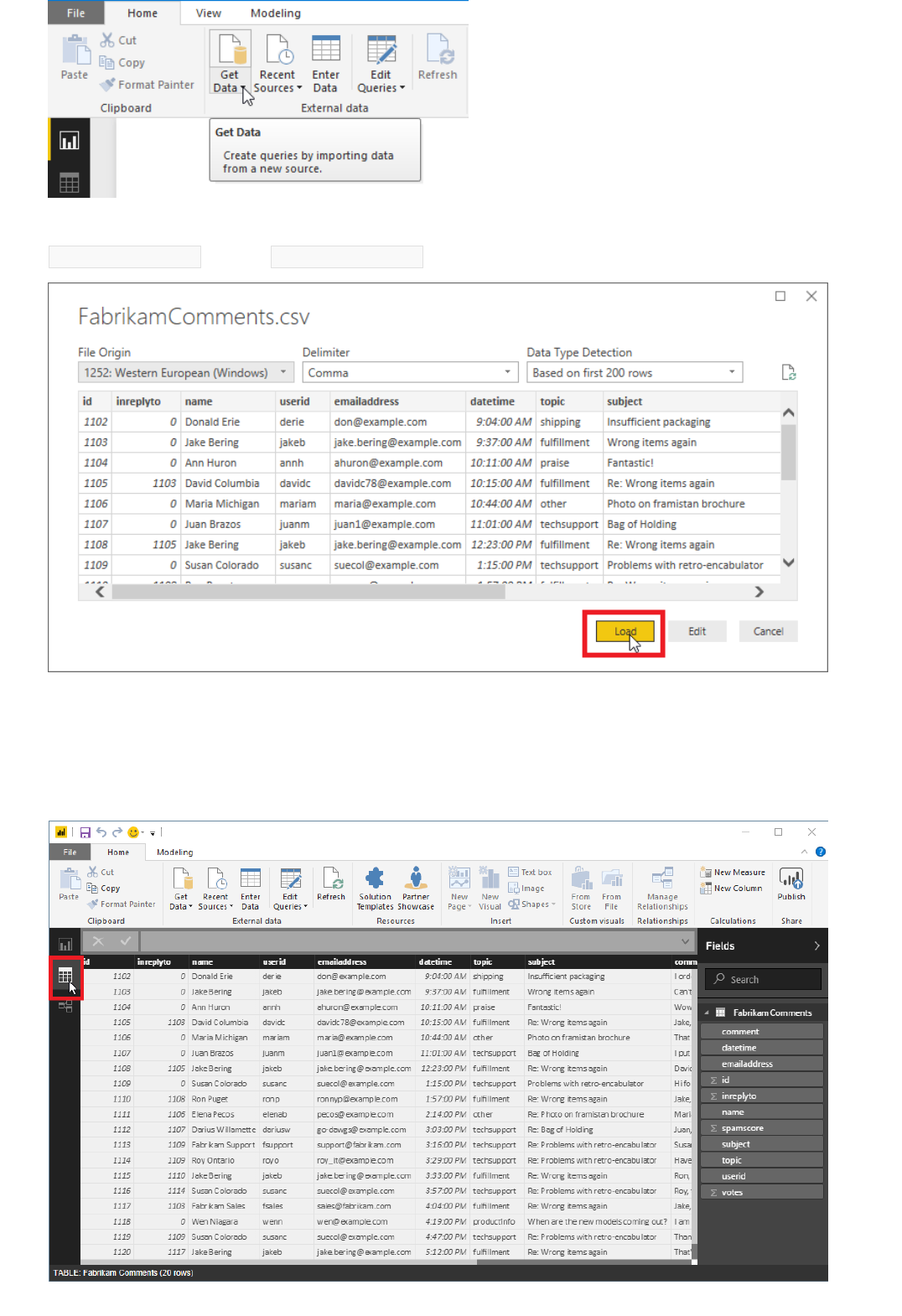

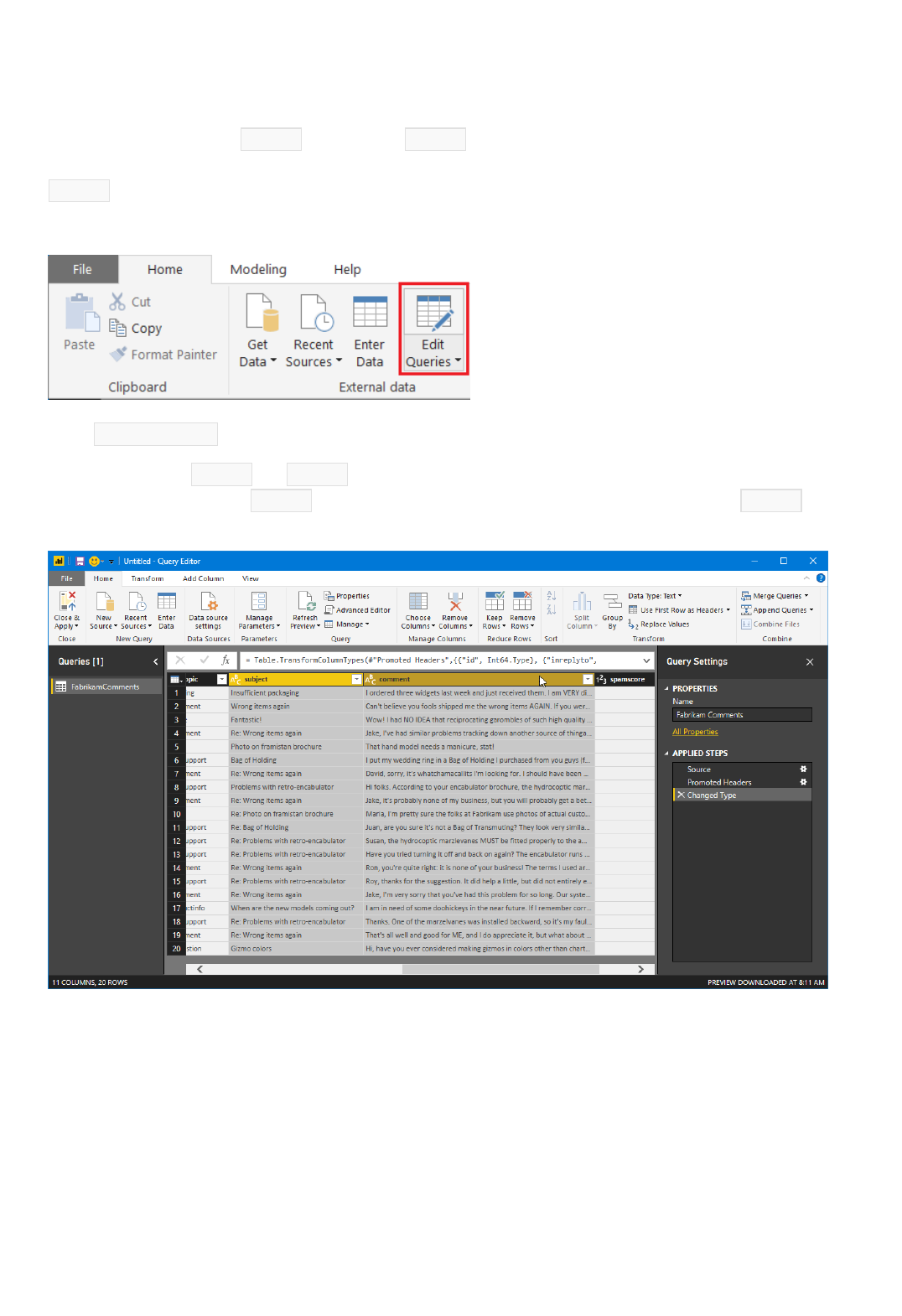

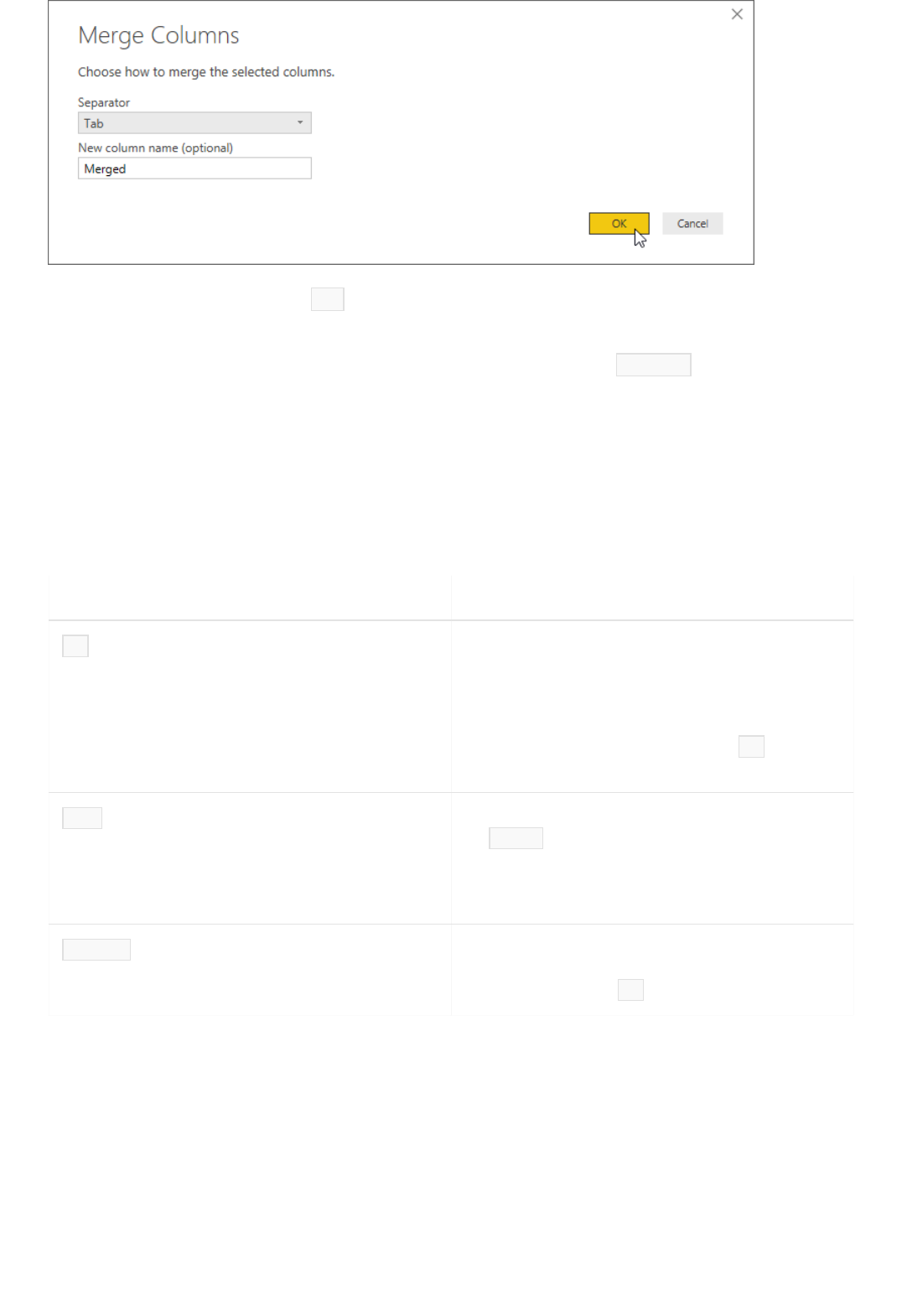

Integrate Power BI to analyze customer feedback

Text Analytics in Power Apps

Sentiment analysis on streaming data using Azure Databricks

Use Flask to translate text, analyze sentiment, and synthesize speech

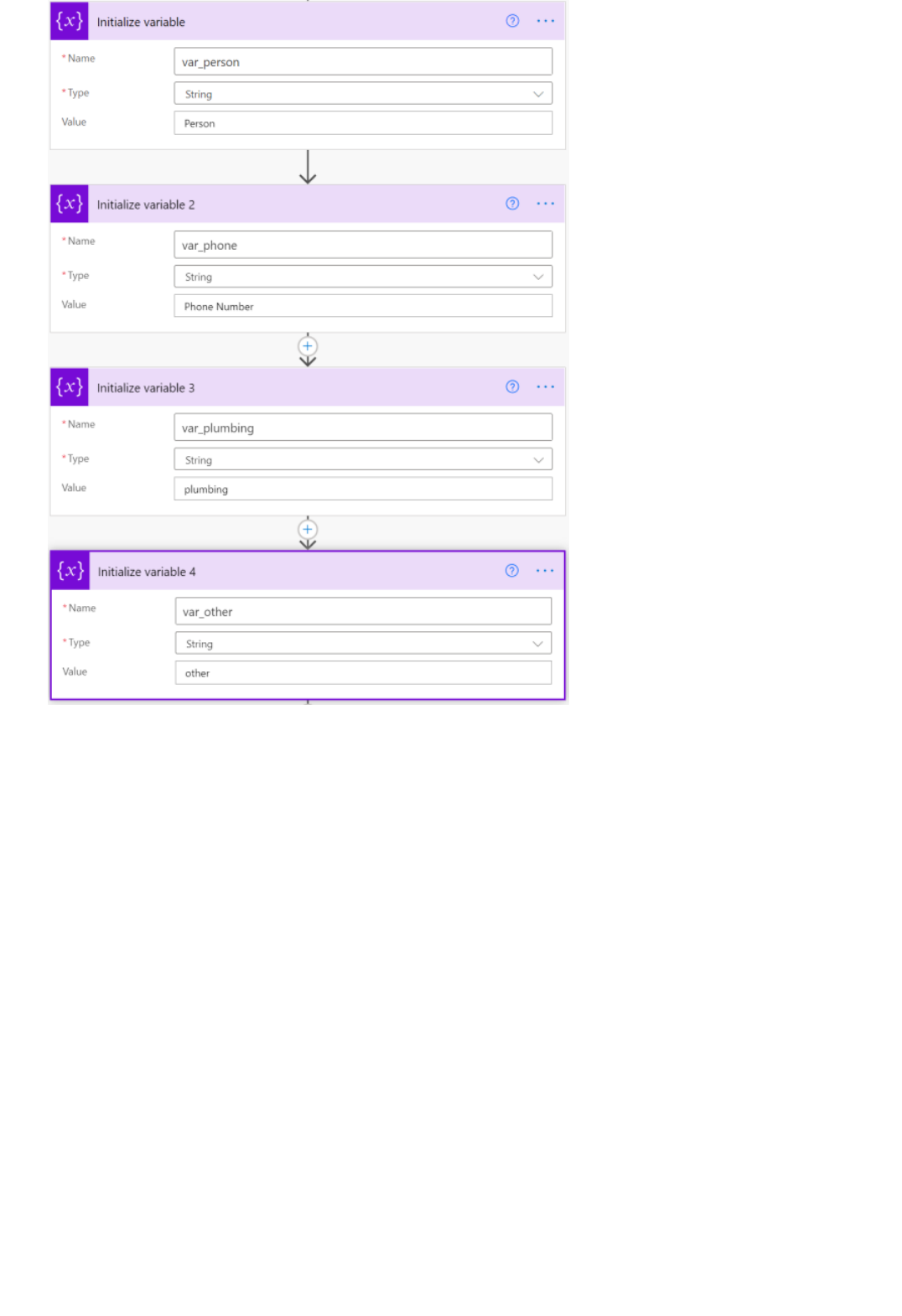

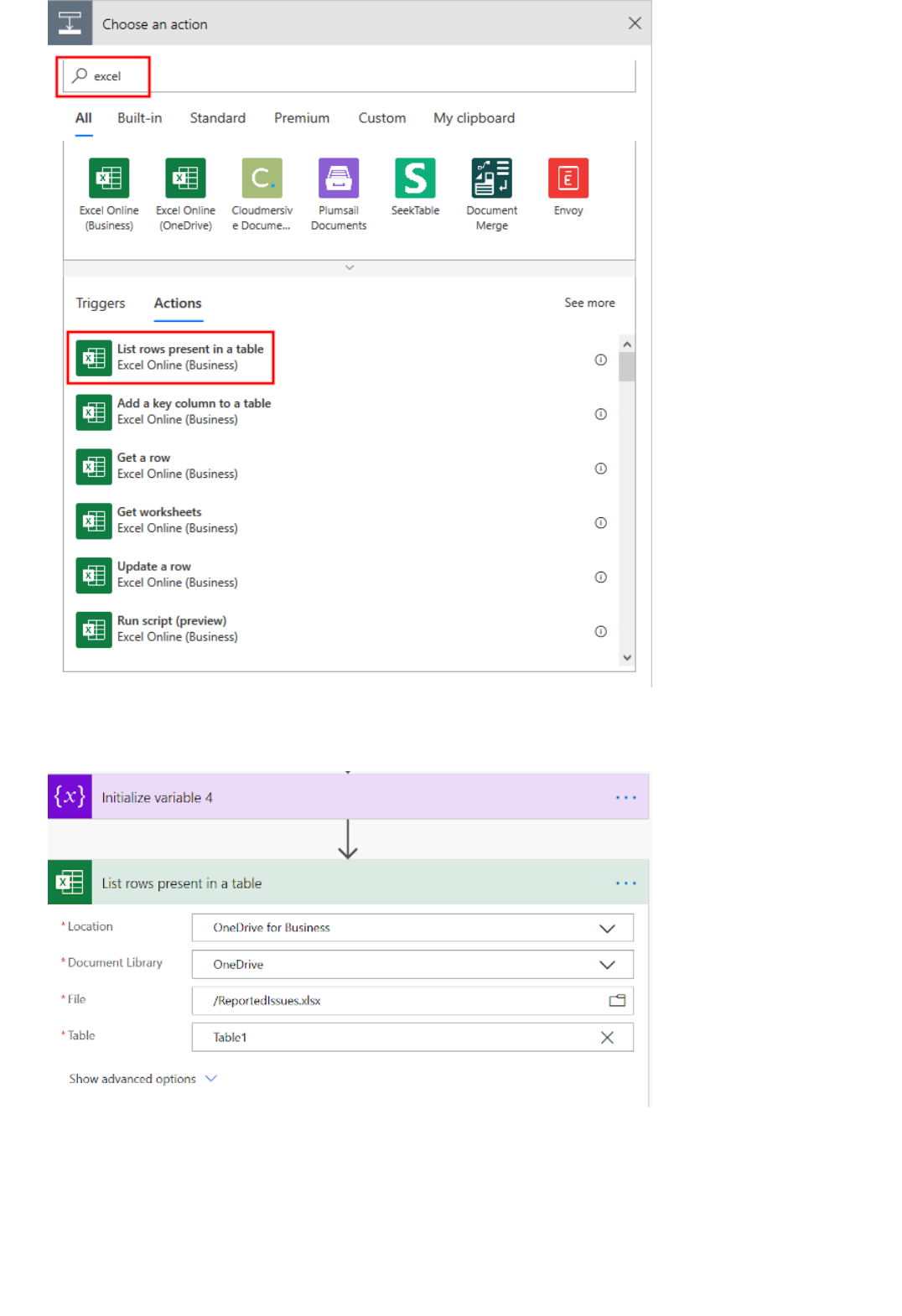

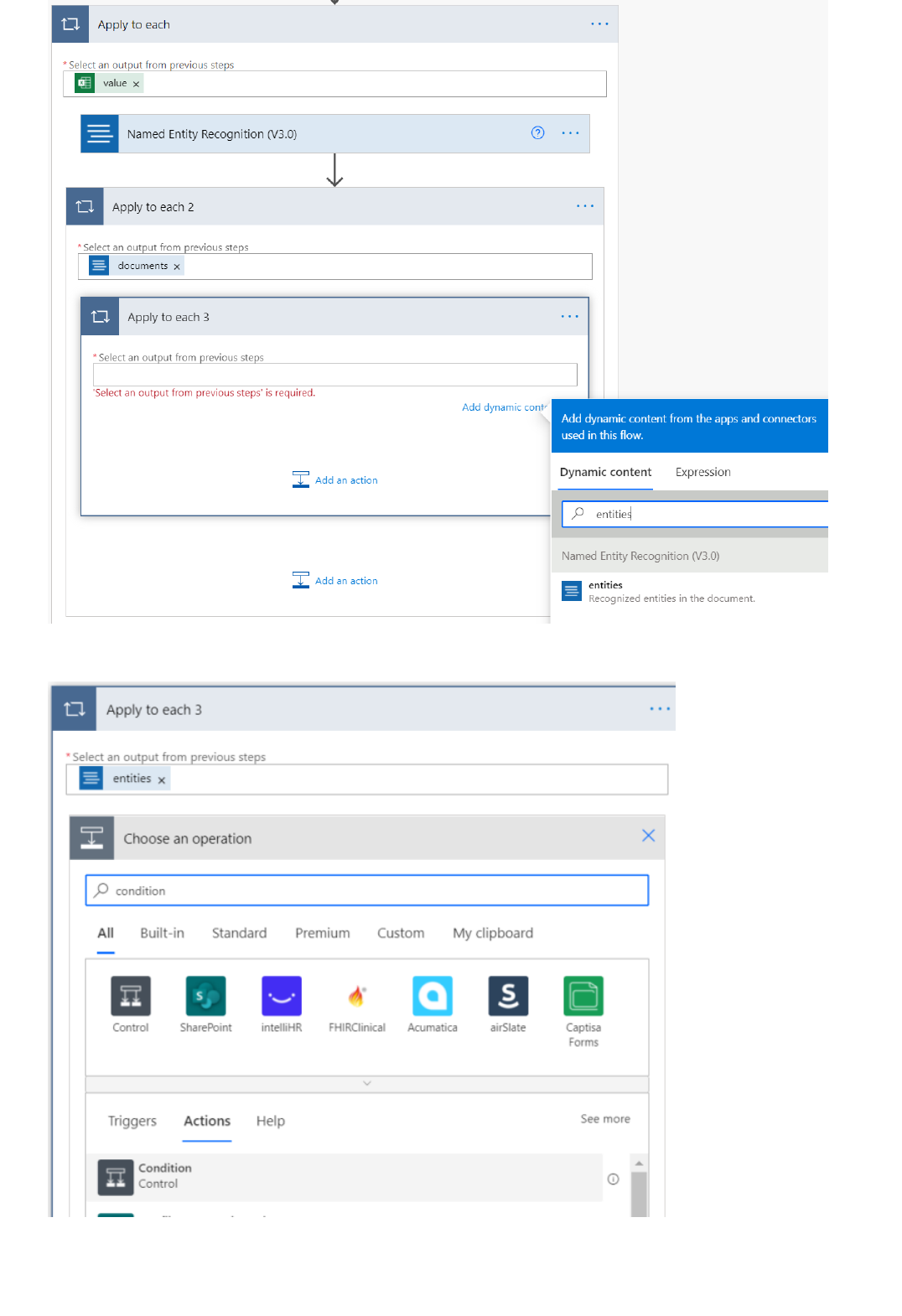

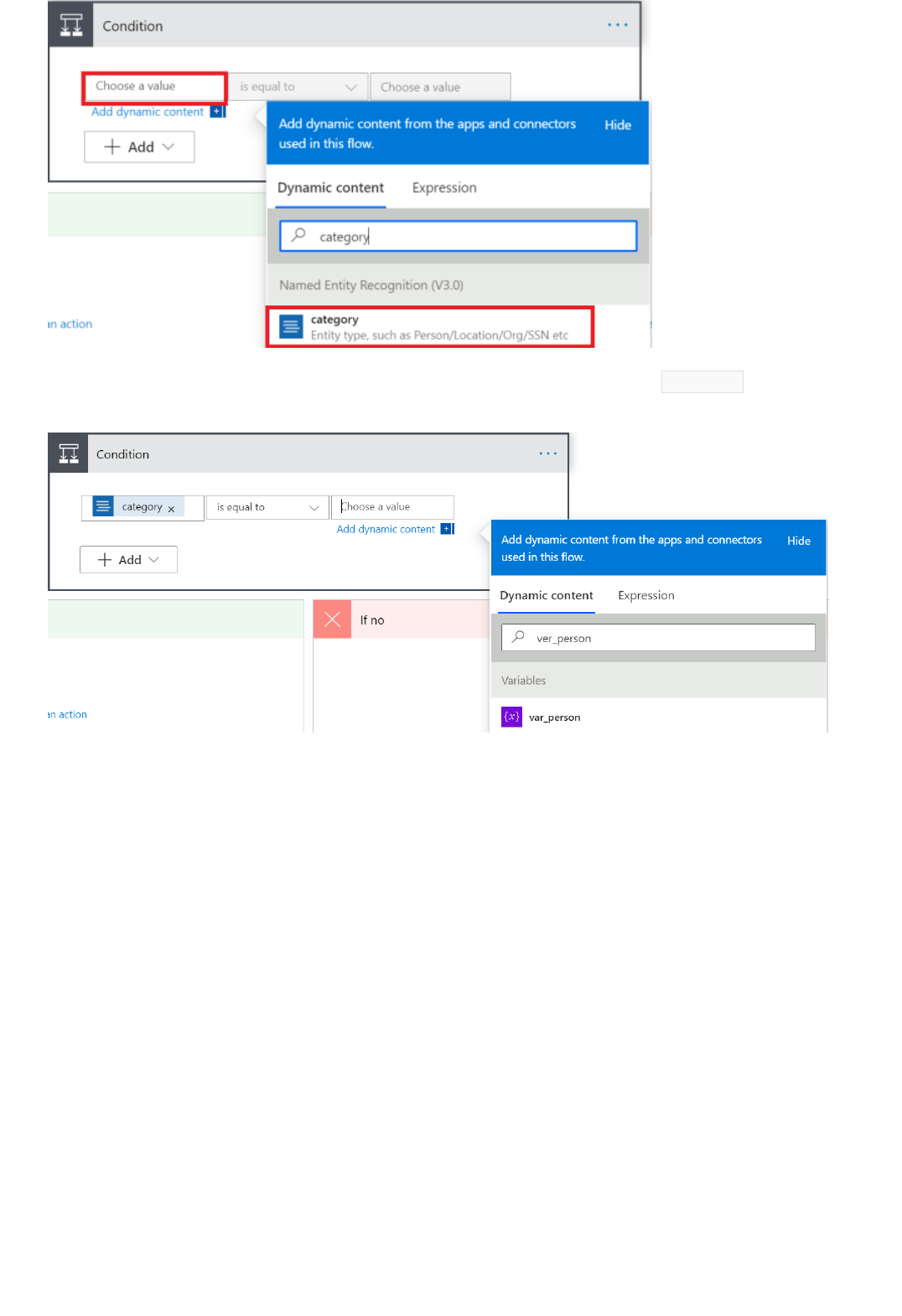

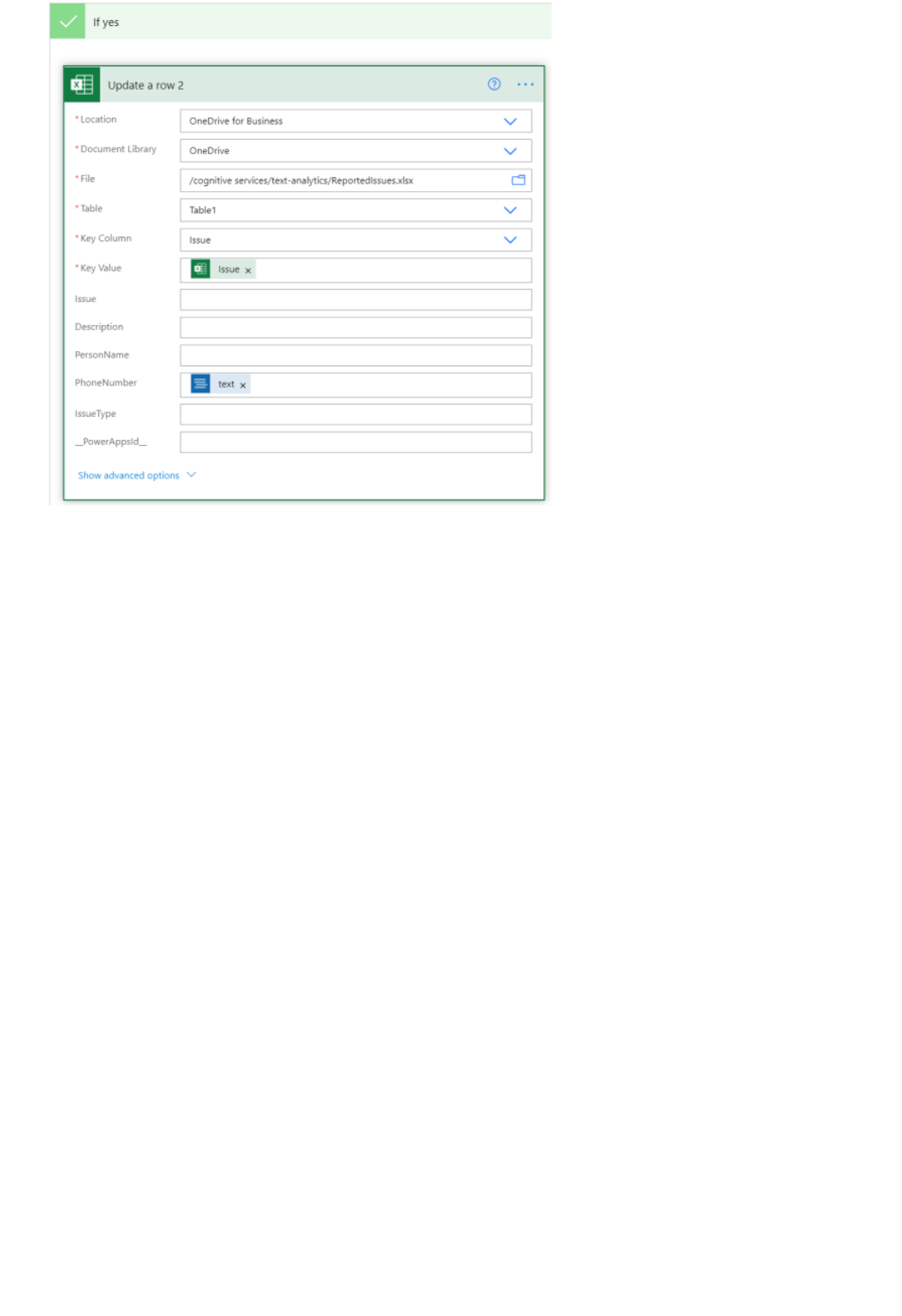

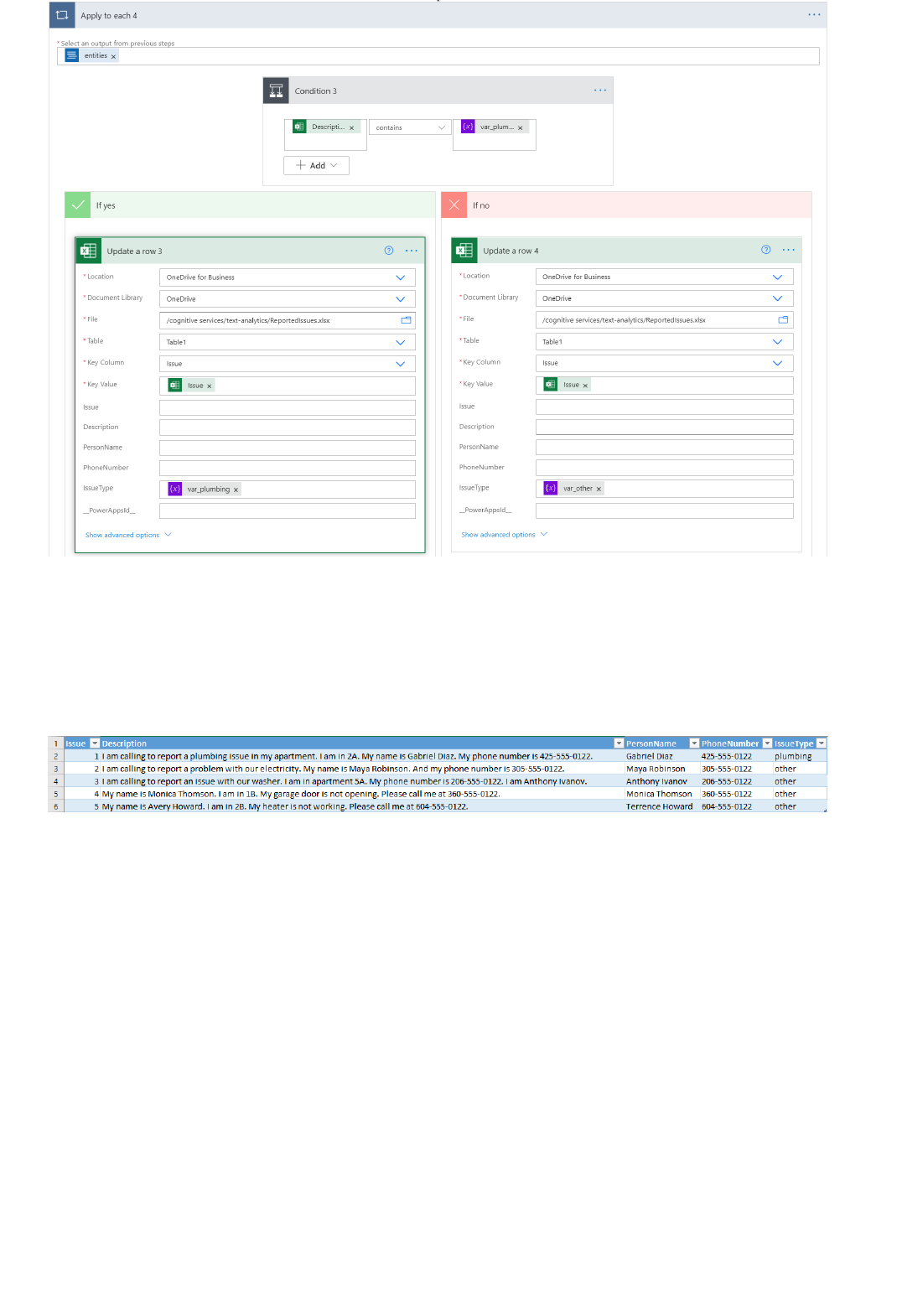

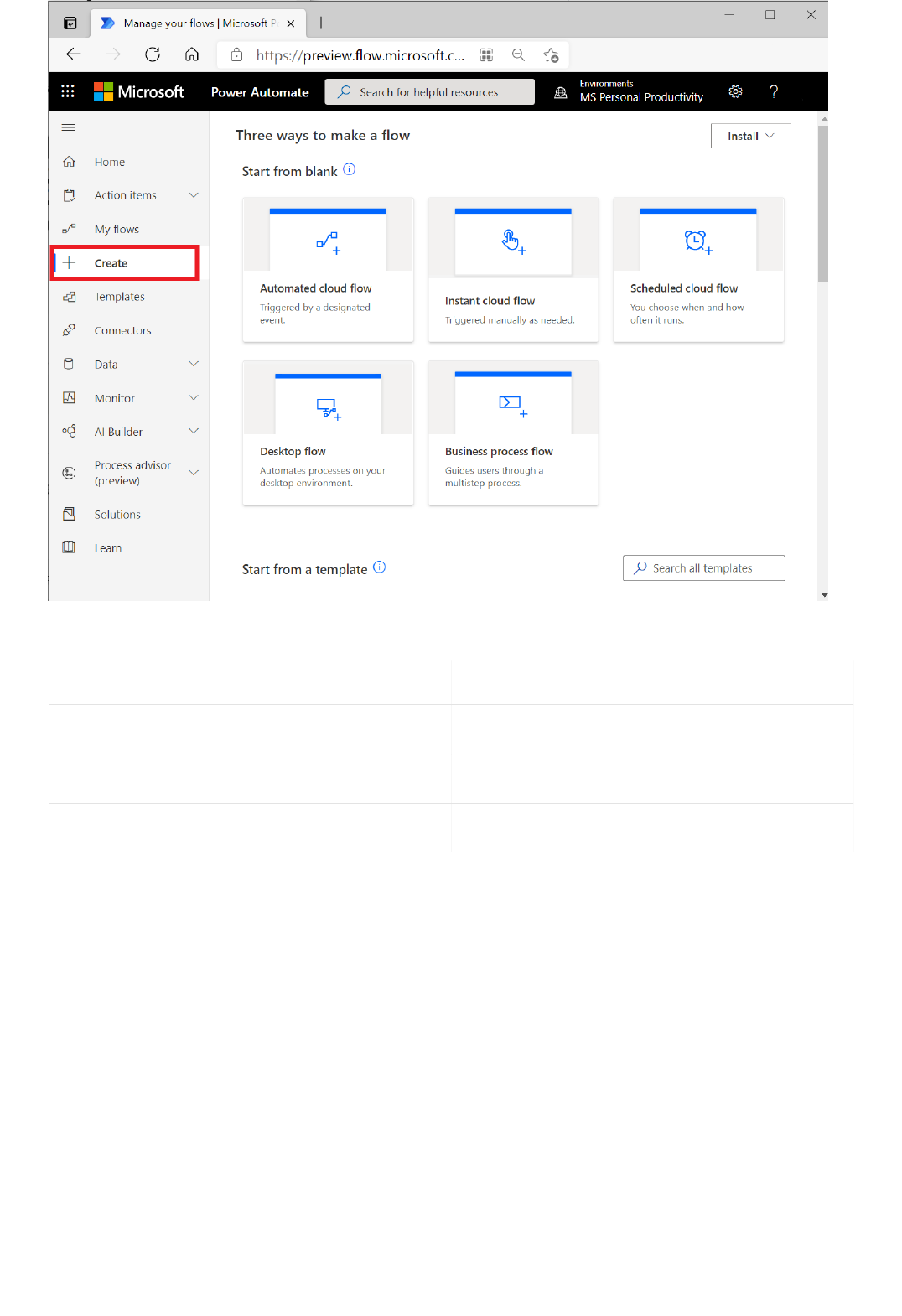

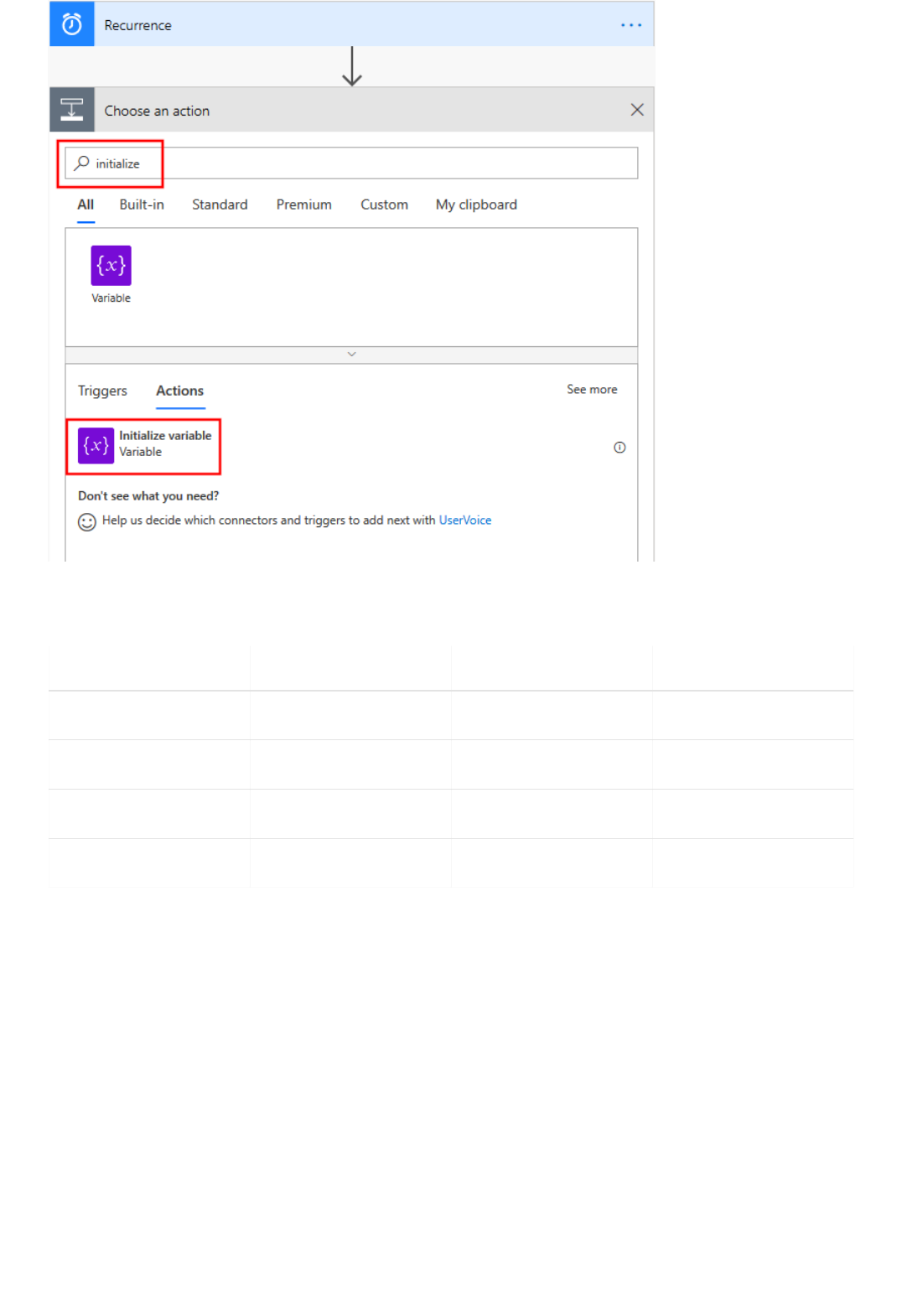

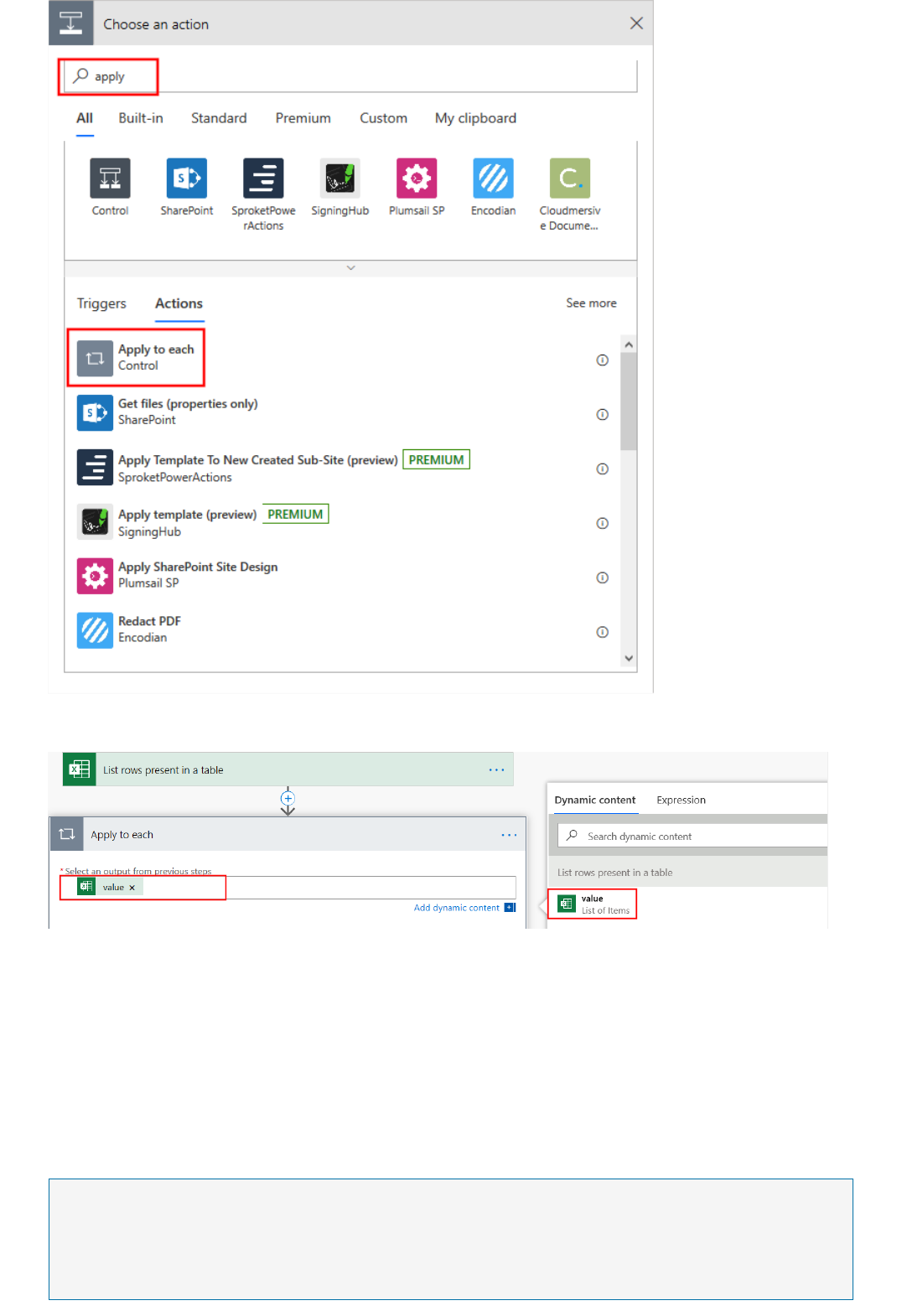

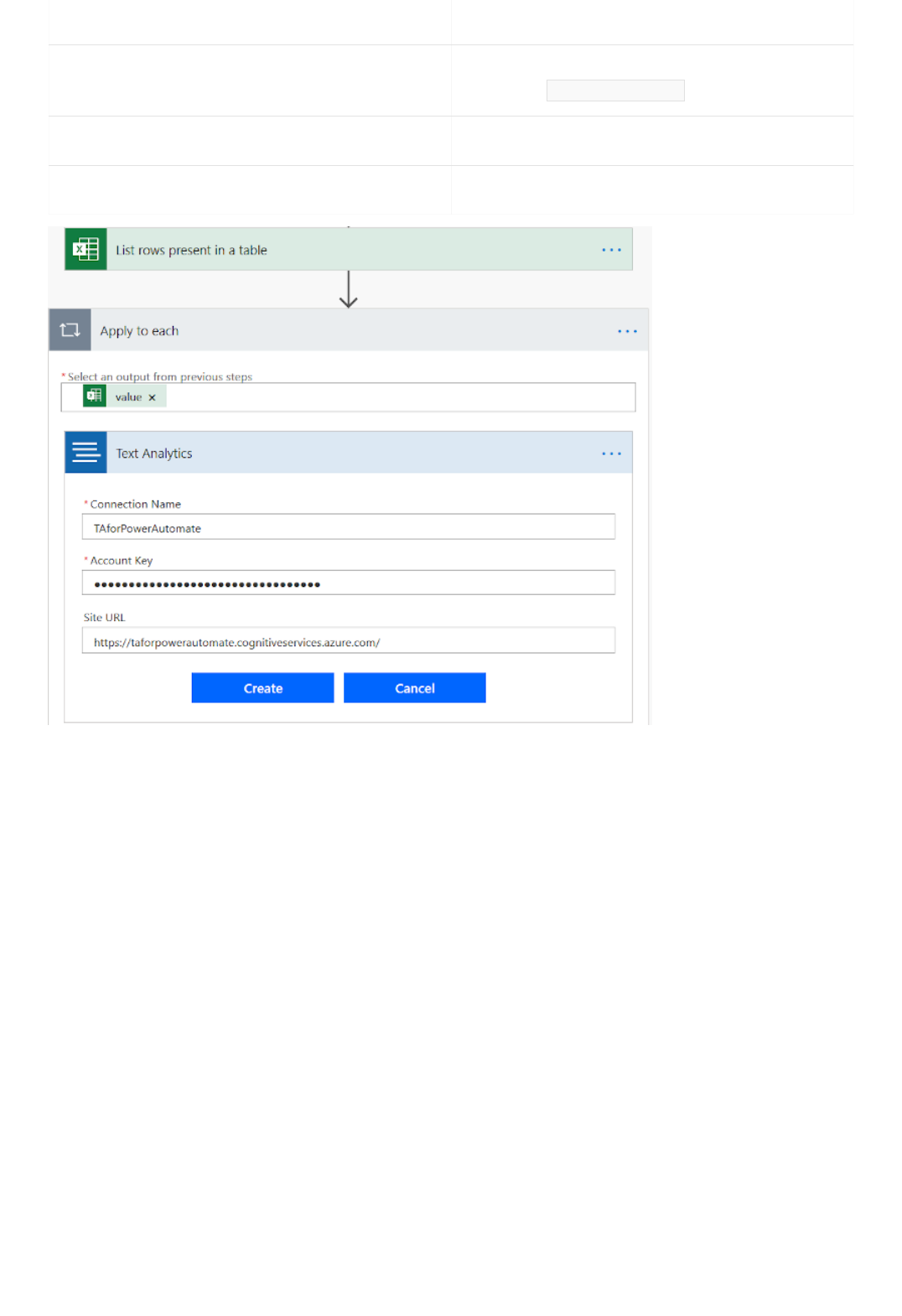

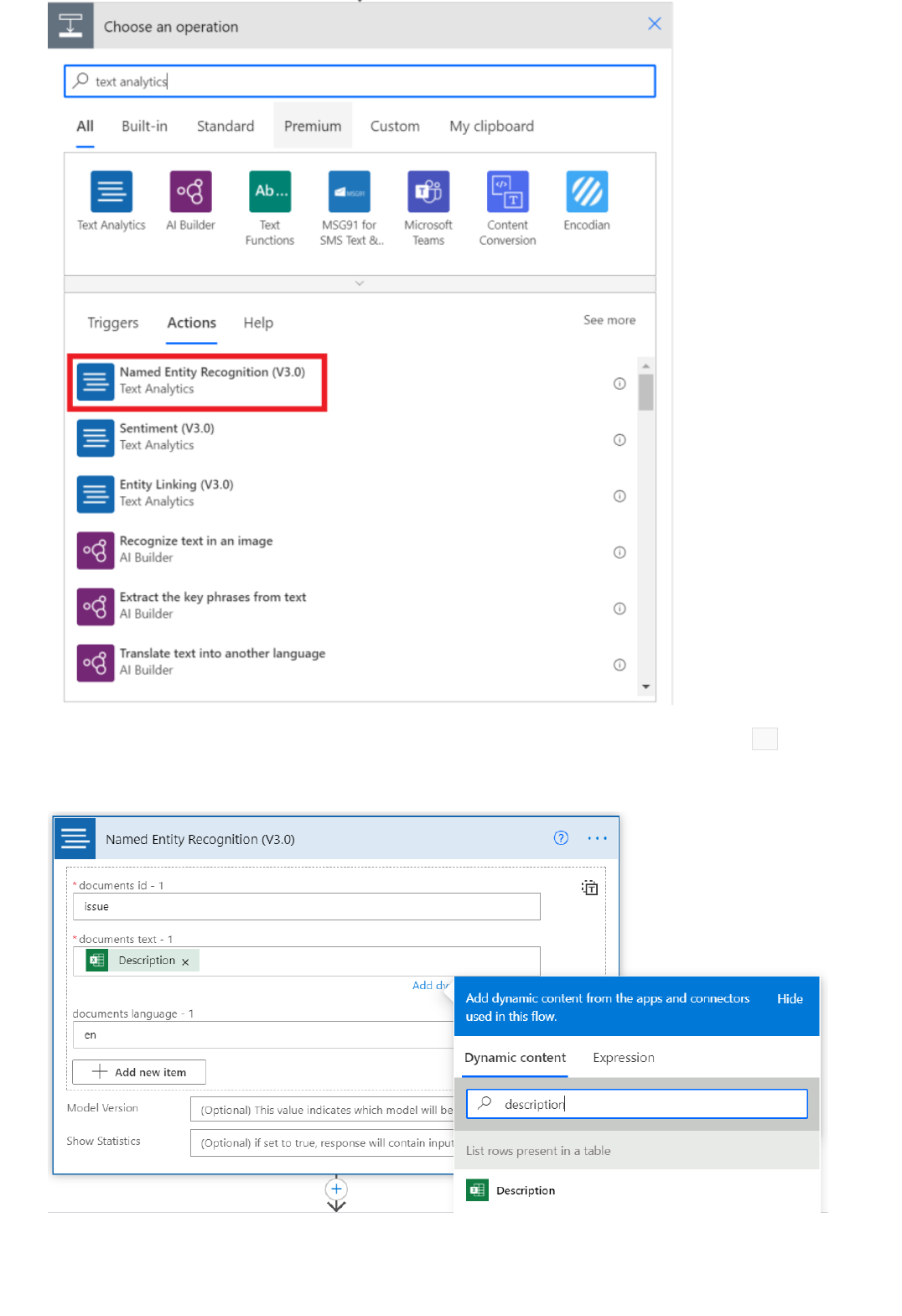

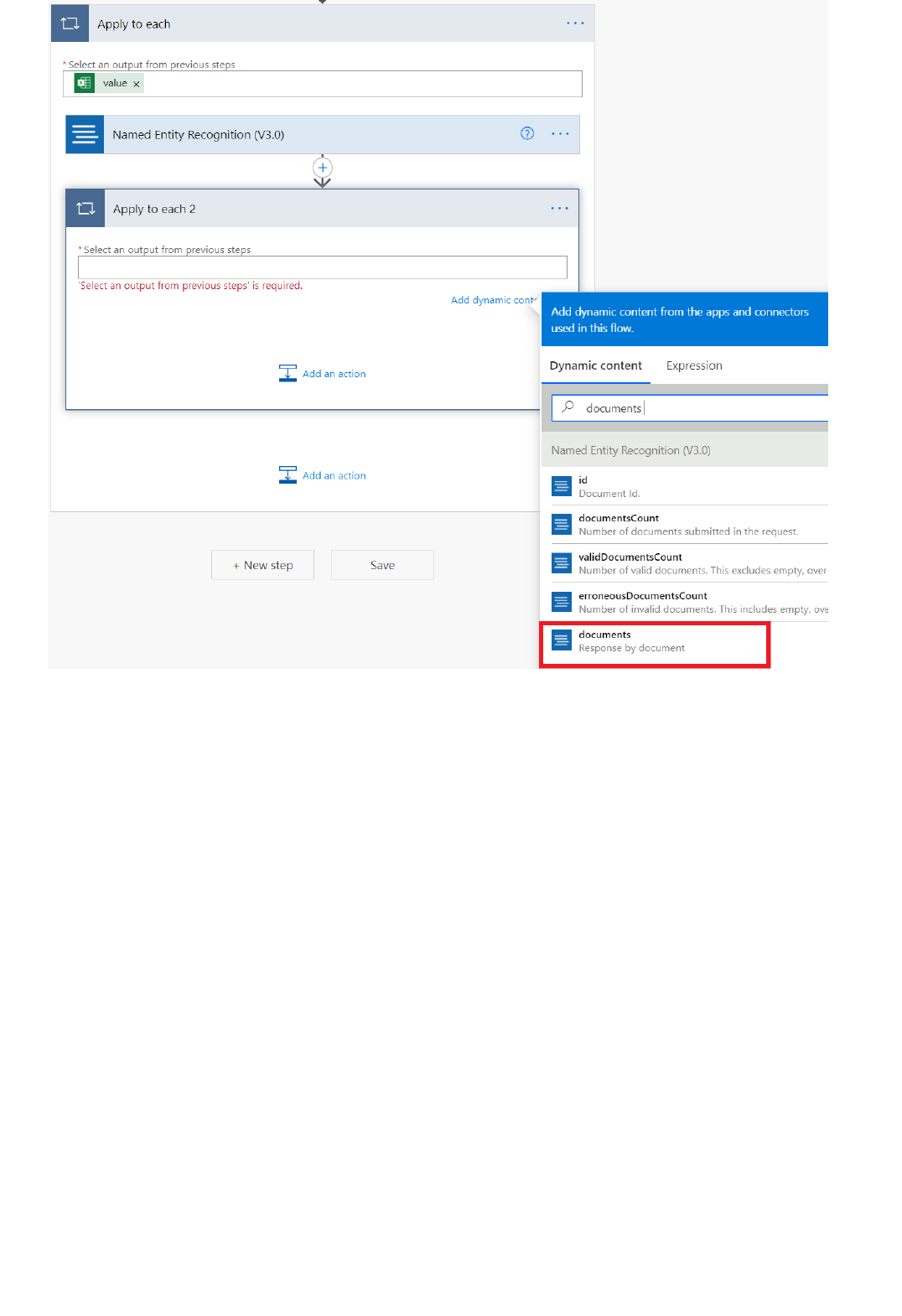

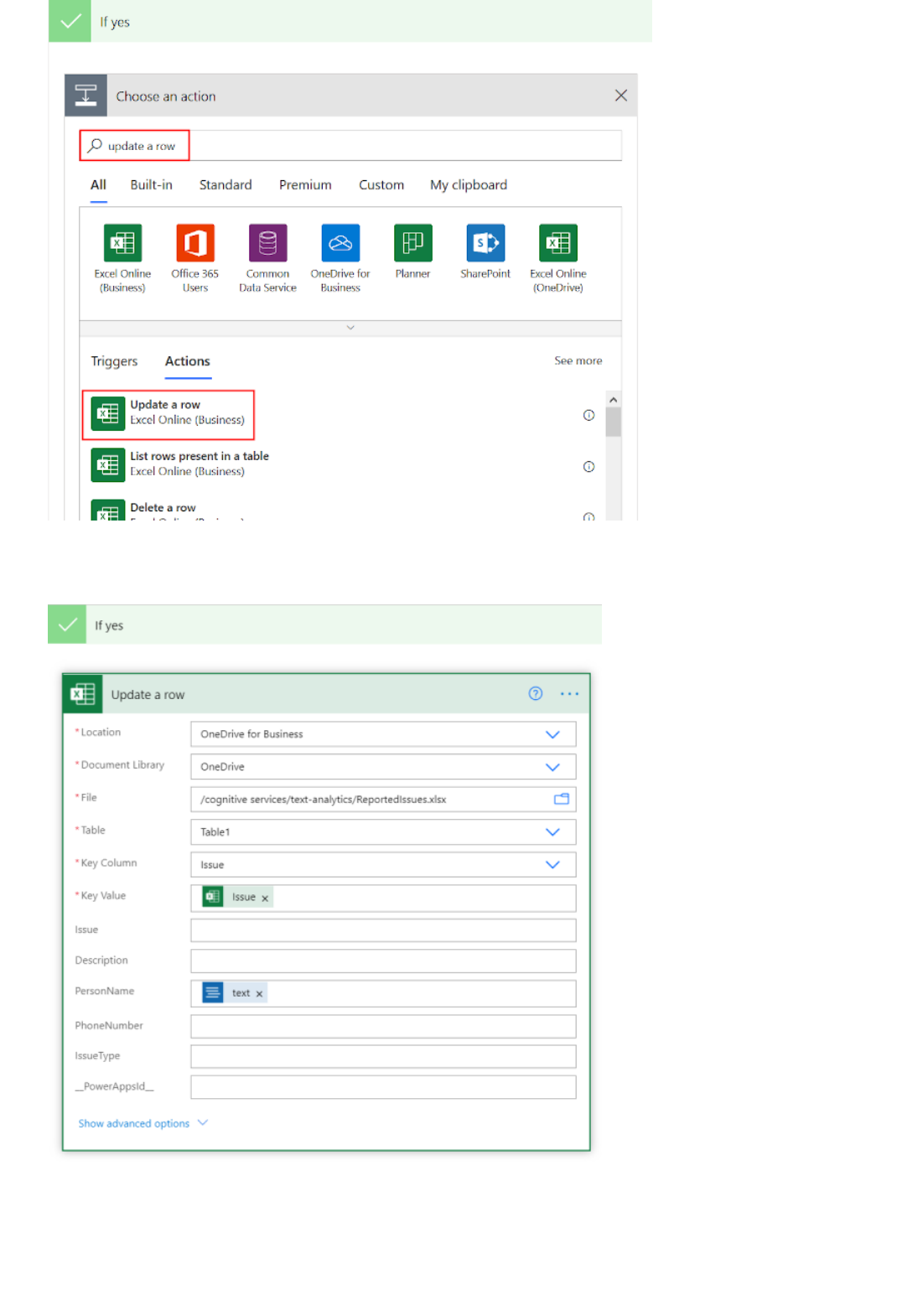

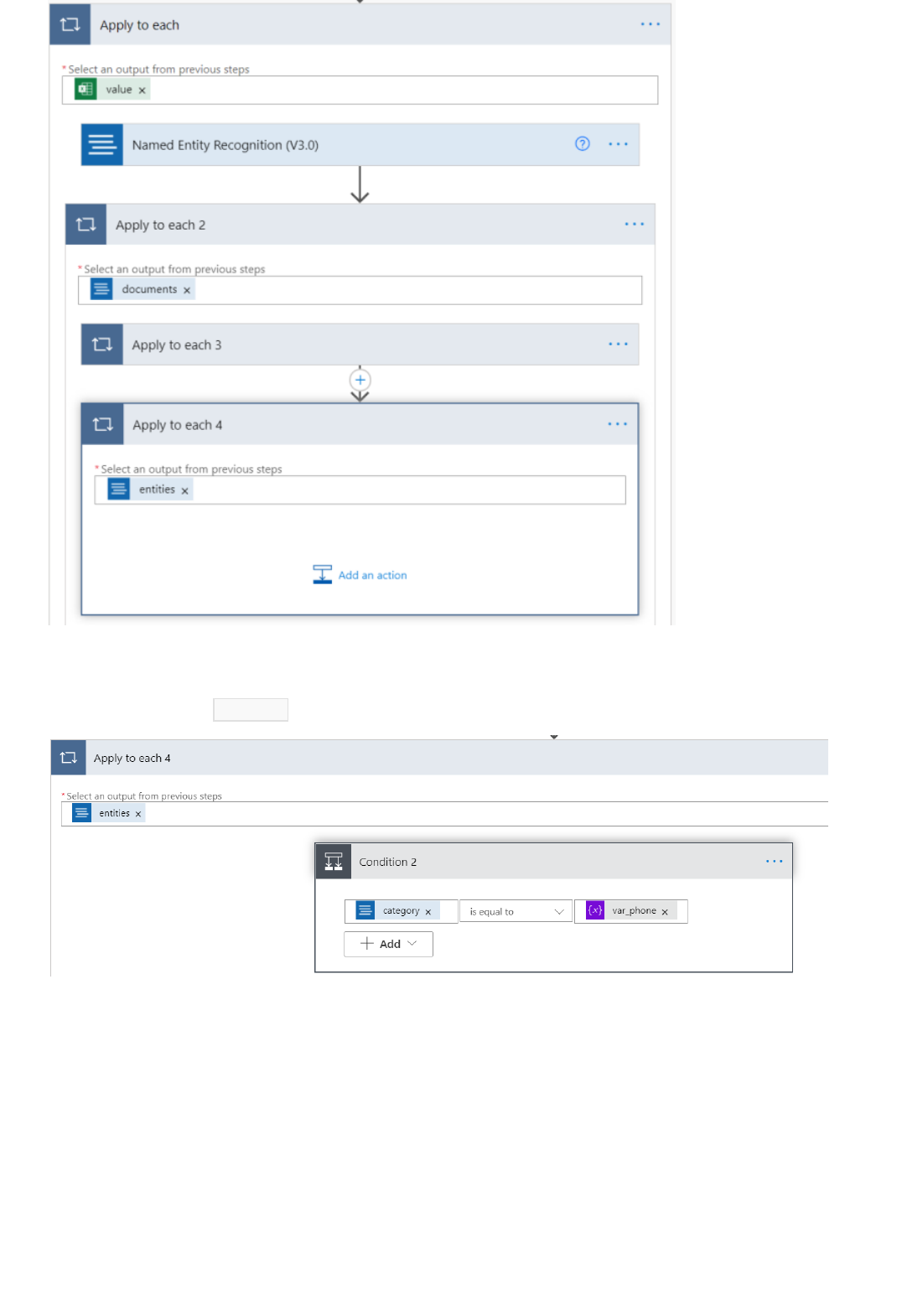

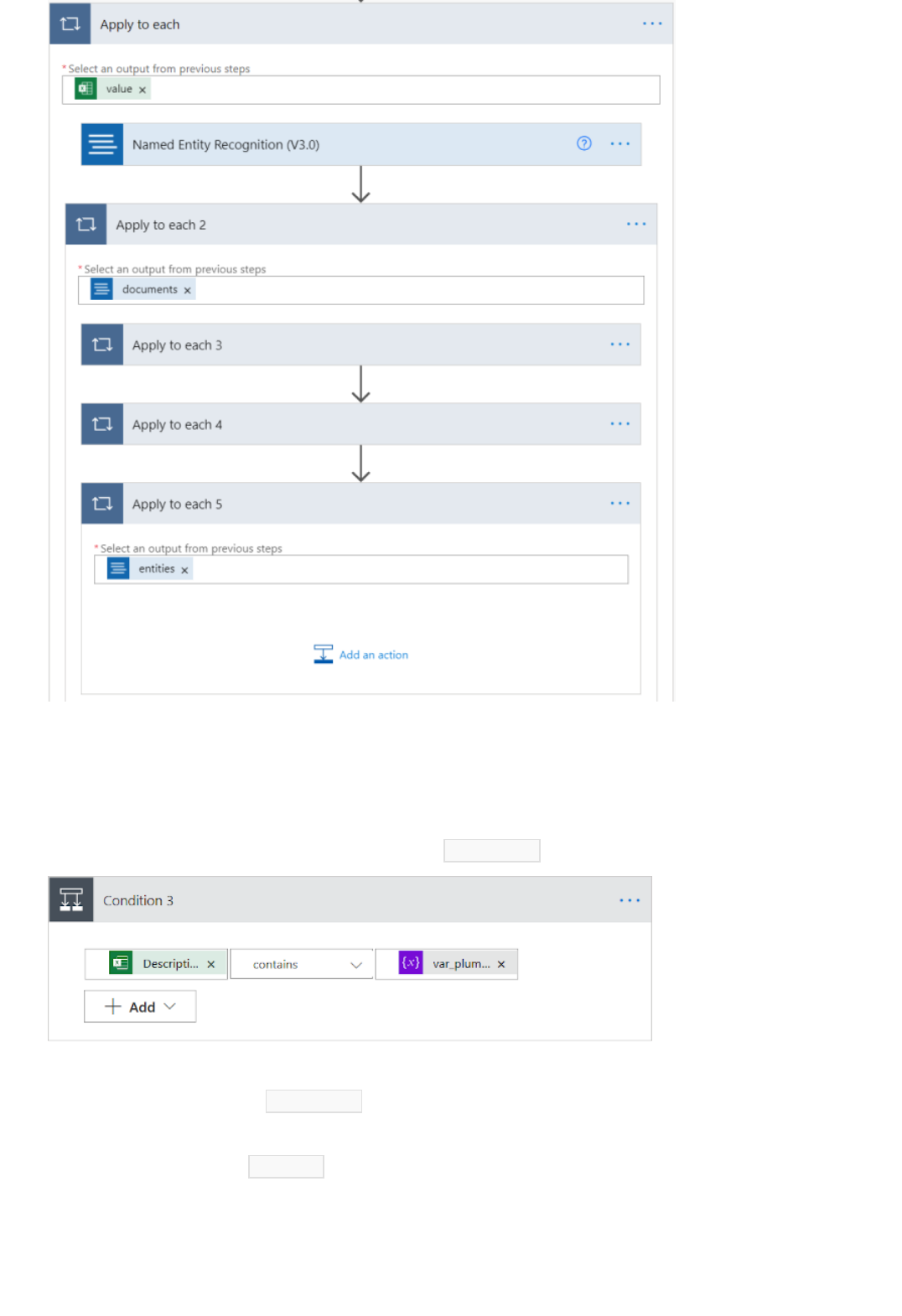

Extract information in Excel using Power Automate

Reference

Text Analytics API

v3.1

What is the Text Analytics API?

7/8/2021 • 5 minutes to read • Edit Online

Sentiment analysis

Key phrase extraction

Language detection

Named entity recognition

The Text Analytics API is a cloud-based service that provides Natural Language Processing (NLP) features for text

mining and text analysis, including: sentiment analysis, opinion mining, key phrase extraction, language

detection, and named entity recognition.

The API is a part of Azure Cognitive Services, a collection of machine learning and AI algorithms in the cloud for

your development projects. You can use these features with the REST API version 3.0 or version 3.1, or the client

library.

This documentation contains the following types of articles:

Quickstarts are step-by-step instructions that let you make calls to the service and get results in a short

period of time.

How-to guides contain instructions for using the service in more specific or customized ways.

Concepts provide in-depth explanations of the service's functionality and features.

Tutorials are longer guides that show you how to use this service as a component in broader business

solutions.

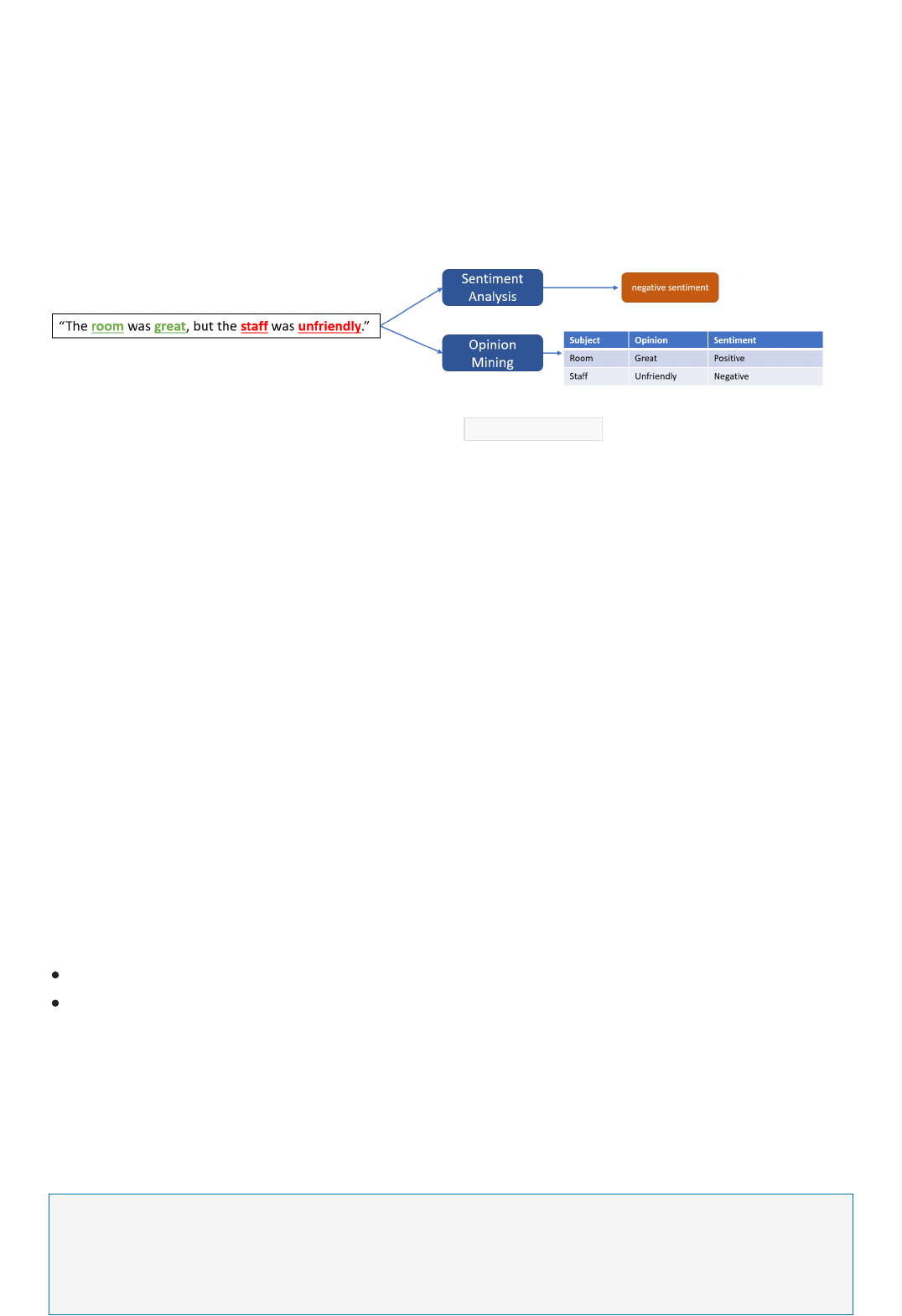

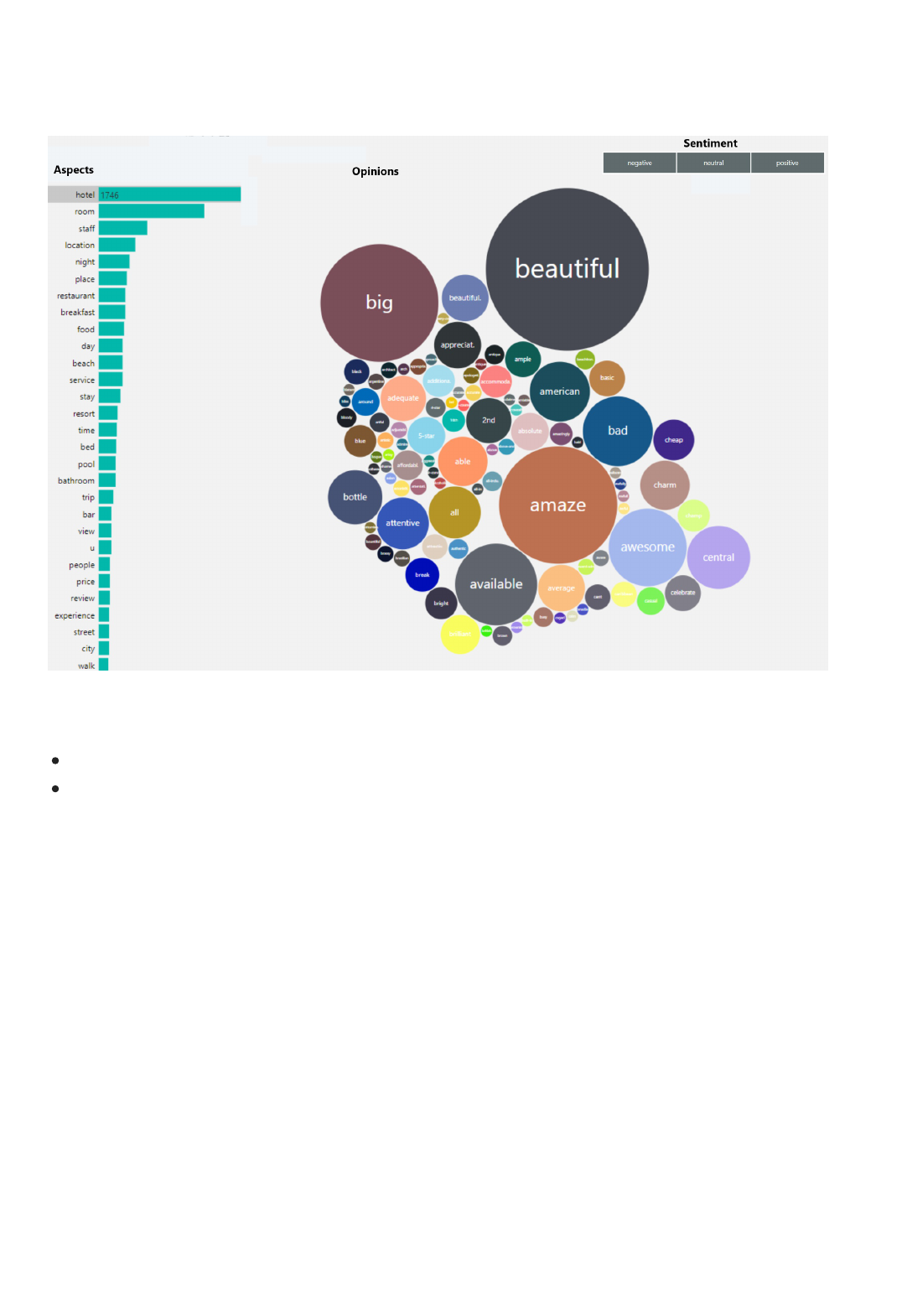

Use sentiment analysis (SA) and find out what people think of your brand or topic by mining the text for clues

about positive or negative sentiment.

The feature provides sentiment labels (such as "negative", "neutral" and "positive") based on the highest

confidence score found by the service at a sentence and document-level. This feature also returns confidence

scores between 0 and 1 for each document & sentences within it for positive, neutral and negative sentiment.

You can also be run the service on premises using a container.

Starting in the v3.1, opinion mining (OM) is a feature of Sentiment Analysis. Also known as Aspect-based

Sentiment Analysis in Natural Language Processing (NLP), this feature provides more granular information

about the opinions related to words (such as the attributes of products or services) in text.

Use key phrase extraction (KPE) to quickly identify the main concepts in text. For example, in the text "The food

was delicious and there were wonderful staff", Key Phrase Extraction will return the main talking points: "food"

and "wonderful staff".

Language detection can detect the language an input text is written in and report a single language code for

every document submitted on the request in a wide range of languages, variants, dialects, and some

regional/cultural languages. The language code is paired with a confidence score.

Named Entity Recognition (NER) can Identify and categorize entities in your text as people, places, organizations,

Text Analytics for health

Deploy on premises using Docker containers

Asynchronous operations

Typical workflow

Text Analytics for multiple programming experience levels

quantities, Well-known entities are also recognized and linked to more information on the web.

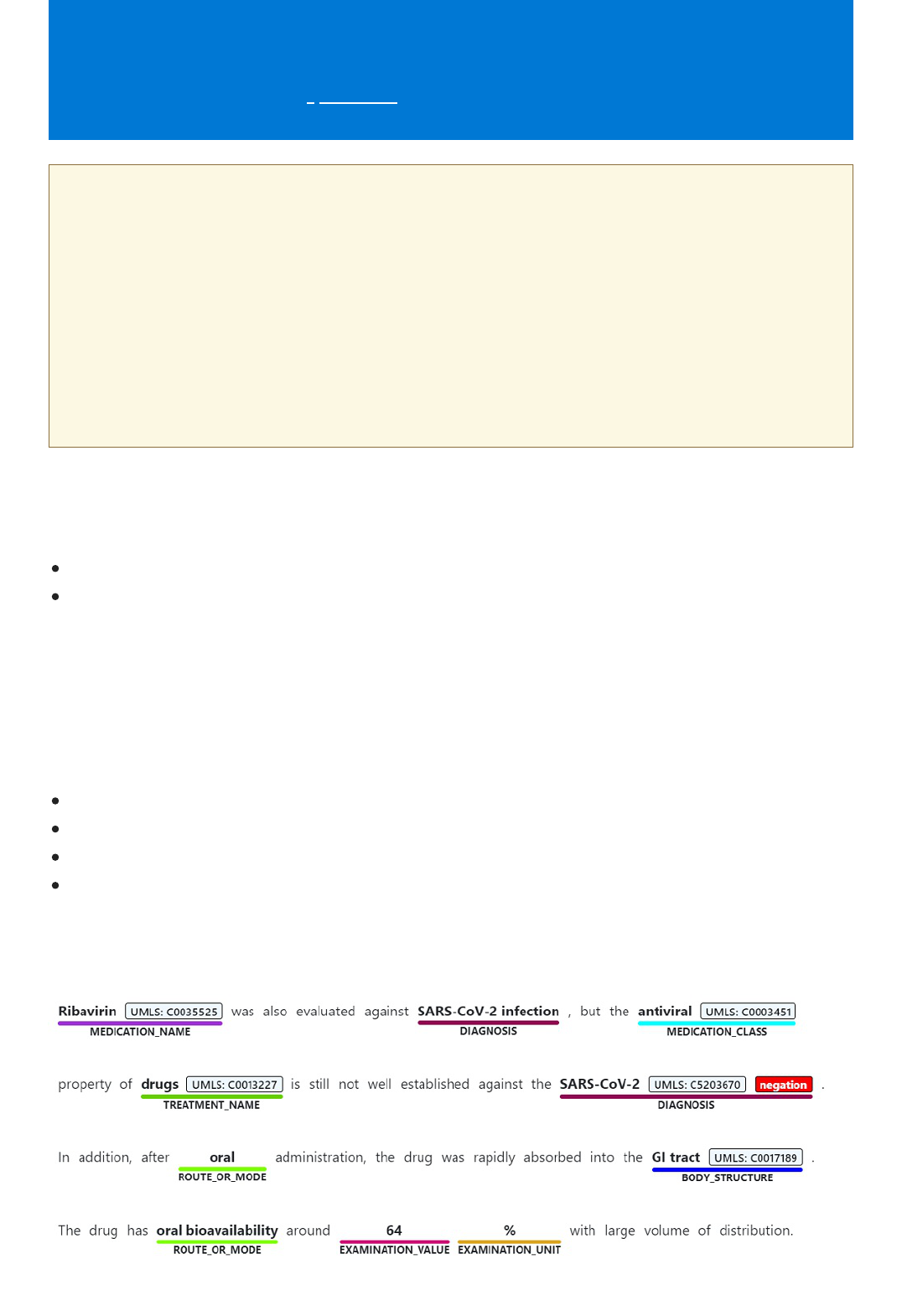

Text Analytics for health is a feature of the Text Analytics API service that extracts and labels relevant medical

information from unstructured texts such as doctor's notes, discharge summaries, clinical documents, and

electronic health records.

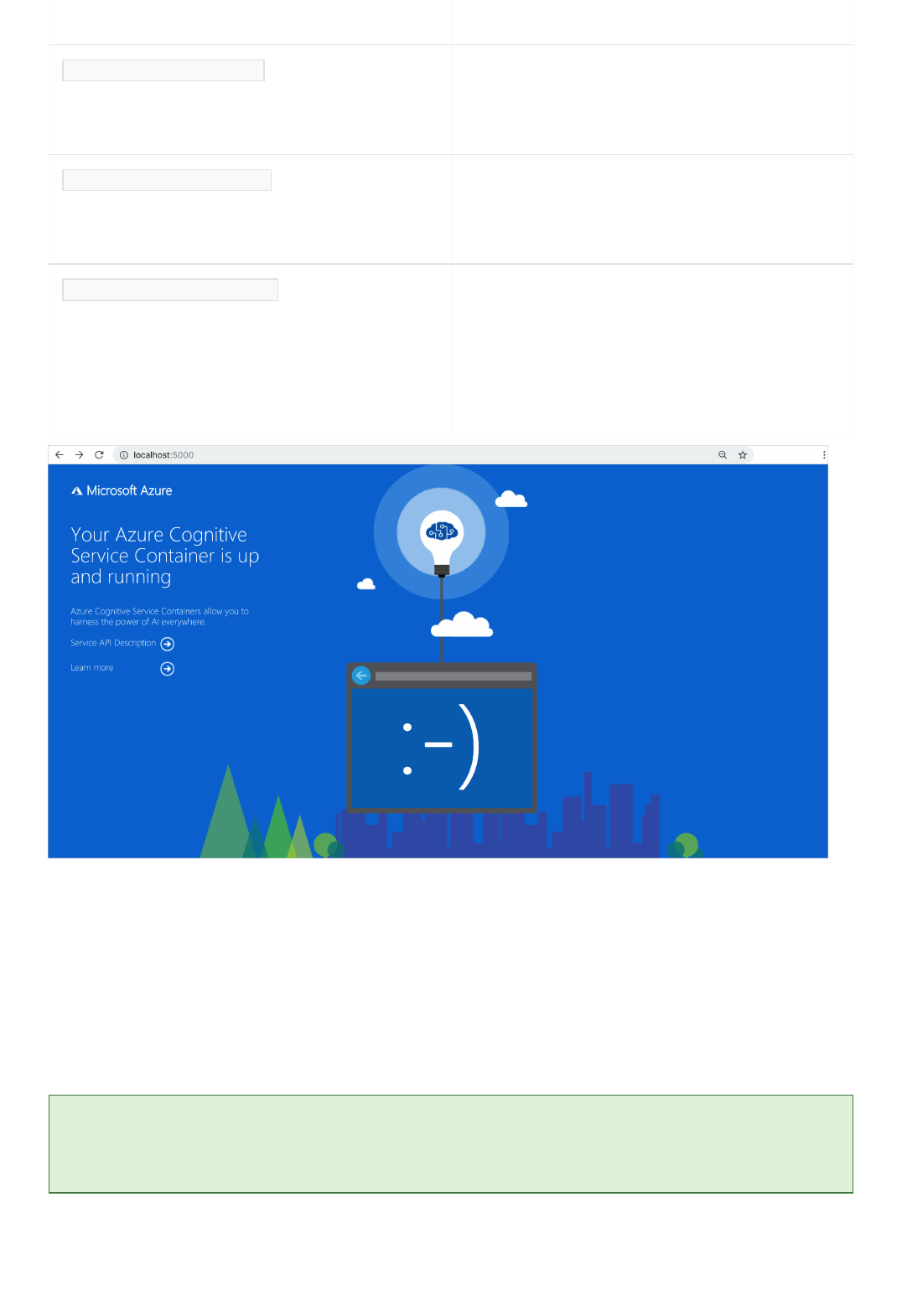

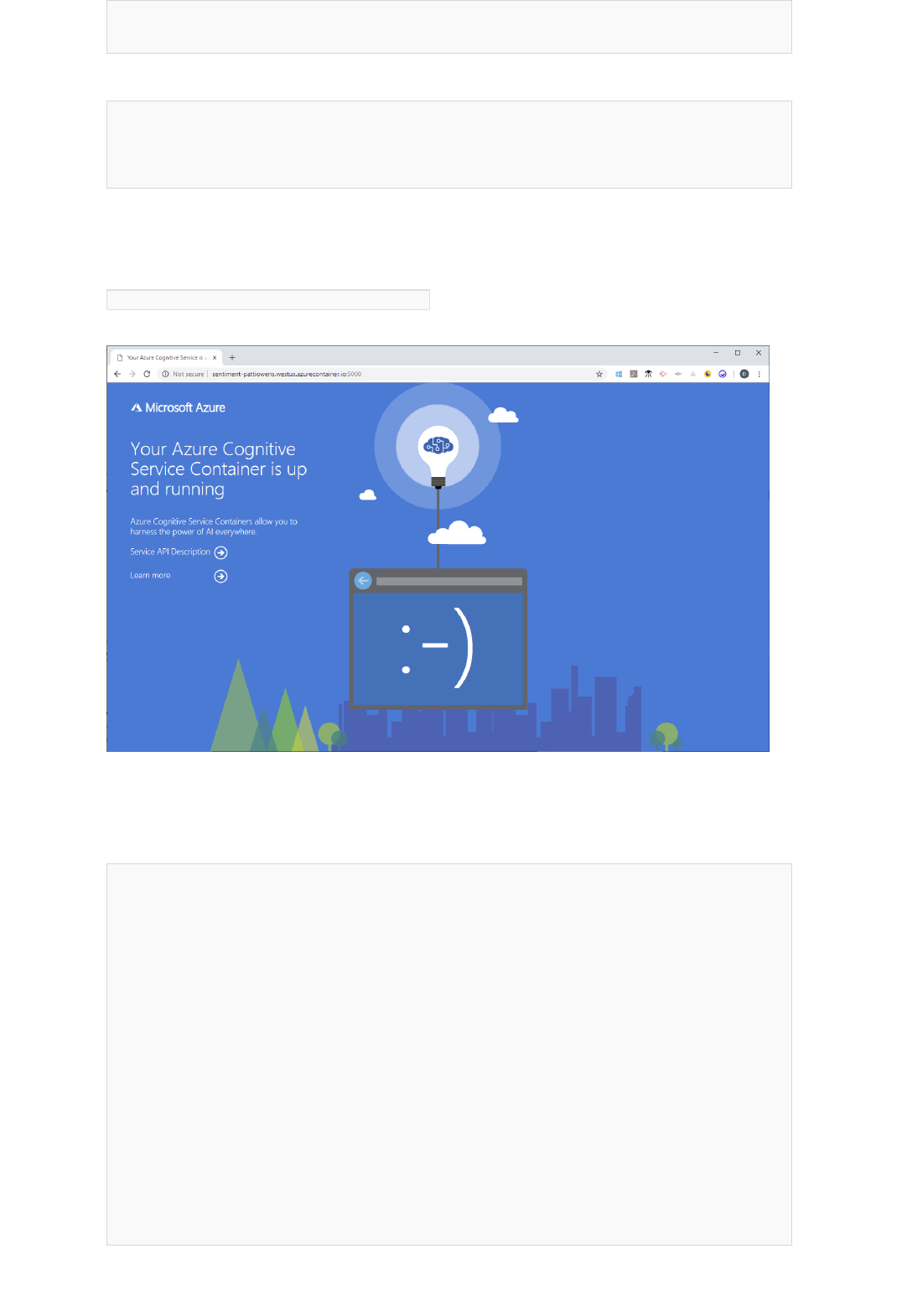

Use Text Analytics containers to deploy API features on-premises. These docker containers enable you to bring

the service closer to your data for compliance, security or other operational reasons. Text Analytics offers the

following containers:

sentiment analysis

key phrase extraction (preview)

language detection (preview)

Text Analytics for health

The /analyze endpoint enables you to use many features of the Text Analytics API asynchronously. Named

Entity Recognition (NER), Key phrase extraction (KPE), Sentiment Analysis (SA), Opinion Mining (OM) are

available as part of /analyze endpoint. It allows clubbing of these features in a single call. It allows sending up

to 125,000 characters per document. Pricing is same as regular Text Analytics.

The workflow is simple: you submit data for analysis and handle outputs in your code. Analyzers are consumed

as-is, with no additional configuration or customization.

1. Create an Azure resource for Text Analytics. Afterwards, get the key generated for you to authenticate

your requests.

2. Formulate a request containing your data as raw unstructured text, in JSON.

3. Post the request to the endpoint established during sign-up, appending the desired resource: sentiment

analysis, key phrase extraction, language detection, or named entity recognition.

4. Stream or store the response locally. Depending on the request, results are either a sentiment score, a

collection of extracted key phrases, or a language code.

Output is returned as a single JSON document, with results for each text document you posted, based on ID. You

can subsequently analyze, visualize, or categorize the results into actionable insights.

Data is not stored in your account. Operations performed by the Text Analytics API are stateless, which means

the text you provide is processed and results are returned immediately.

You can start using the Text Analytics API in your processes, even if you don't have much experience in

programming. Use these tutorials to learn how you can use the API to analyze text in different ways to fit your

experience level.

Minimal programming required:

Extract information in Excel using Text Analytics and Power Automate

Supported languages

Data limits

Unicode encoding

Next steps

Programming experience recommended:

Use the Text Analytics API and MS Flow to identify the sentiment of comments in a Yammer group

Integrate Power BI with the Text Analytics API to analyze customer feedback

Sentiment analysis on streaming data using Azure Databricks

Build a Flask app to translate text, analyze sentiment, and synthesize speech

This section has been moved to a separate article for better discoverability. Refer to Supported languages in the

Text Analytics API for this content.

All of the Text Analytics API endpoints accept raw text data. See the Data limits article for more information.

The Text Analytics API uses Unicode encoding for text representation and character count calculations. Requests

can be submitted in both UTF-8 and UTF-16 with no measurable differences in the character count. Unicode

codepoints are used as the heuristic for character length and are considered equivalent for the purposes of text

analytics data limits. If you use StringInfo.LengthInTextElements to get the character count, you are using the

same method we use to measure data size.

Create an Azure resource for Text Analytics to get a key and endpoint for your applications.

Use the quickstart to start sending API calls. Learn how to submit text, choose an analysis, and view

results with minimal code.

See what's new in the Text Analytics API for information on new releases and features.

Dig in a little deeper with this sentiment analysis tutorial using Azure Databricks.

Check out our list of blog posts and more videos on how to use the Text Analytics API with other tools

and technologies in our External & Community Content page.

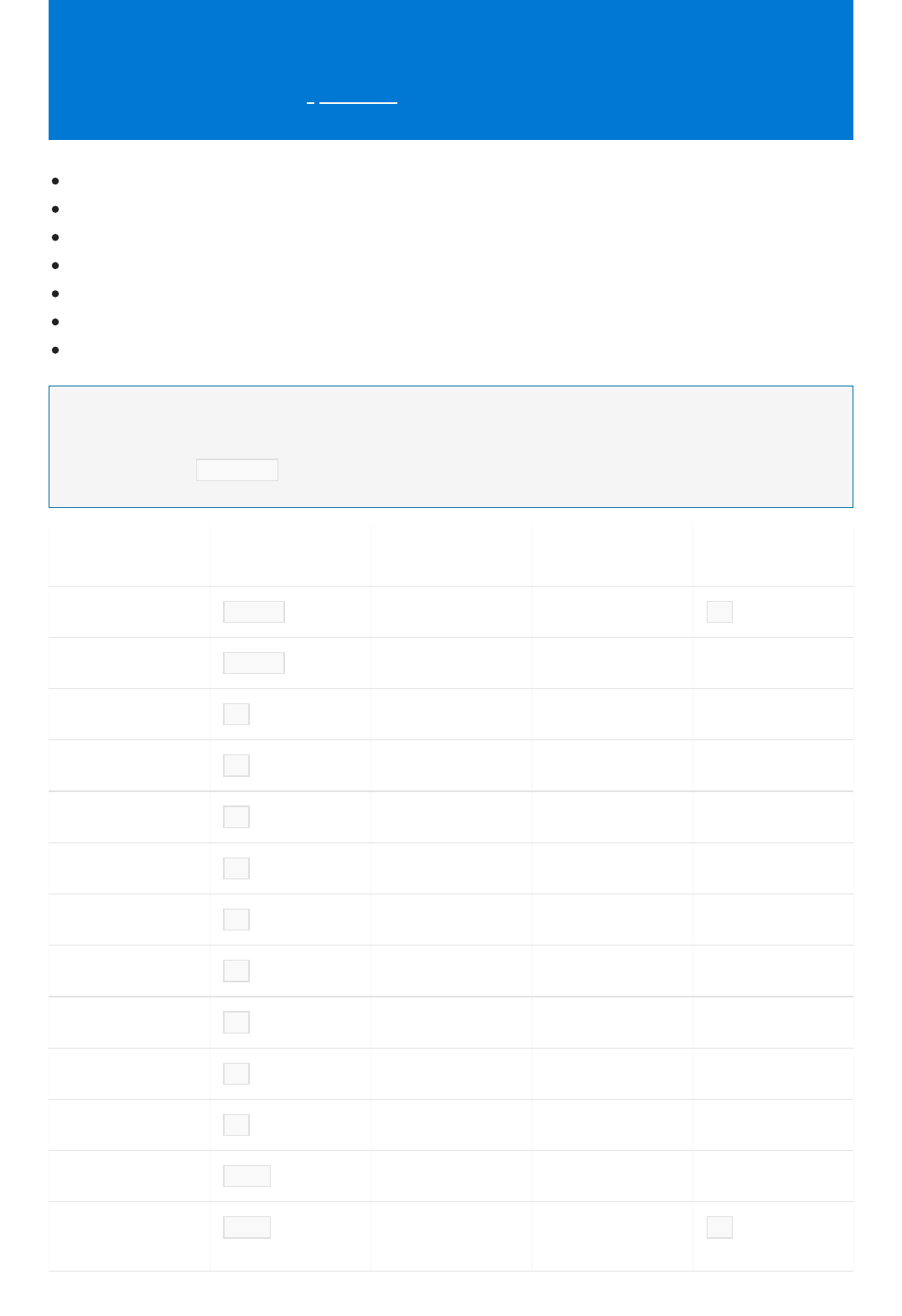

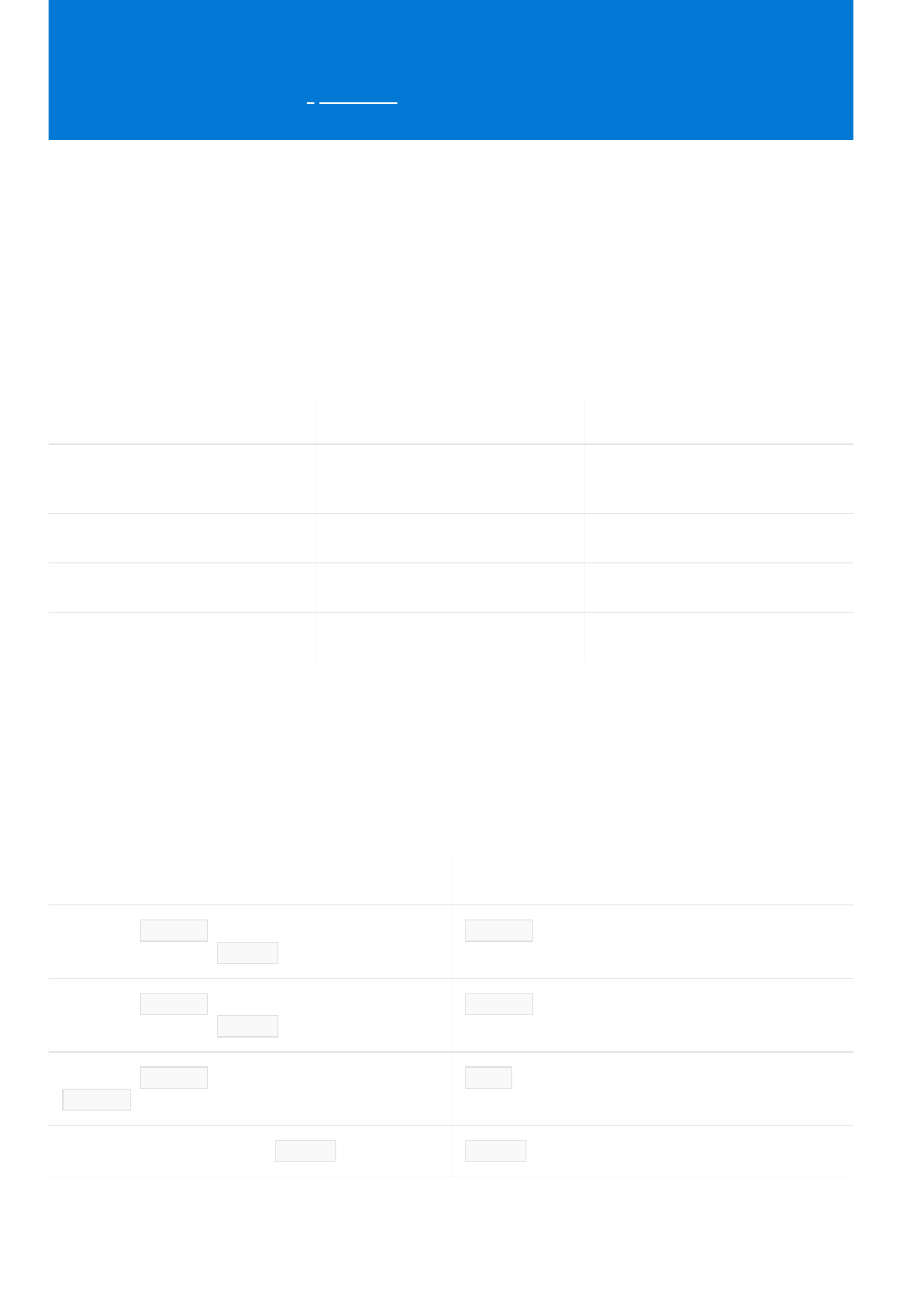

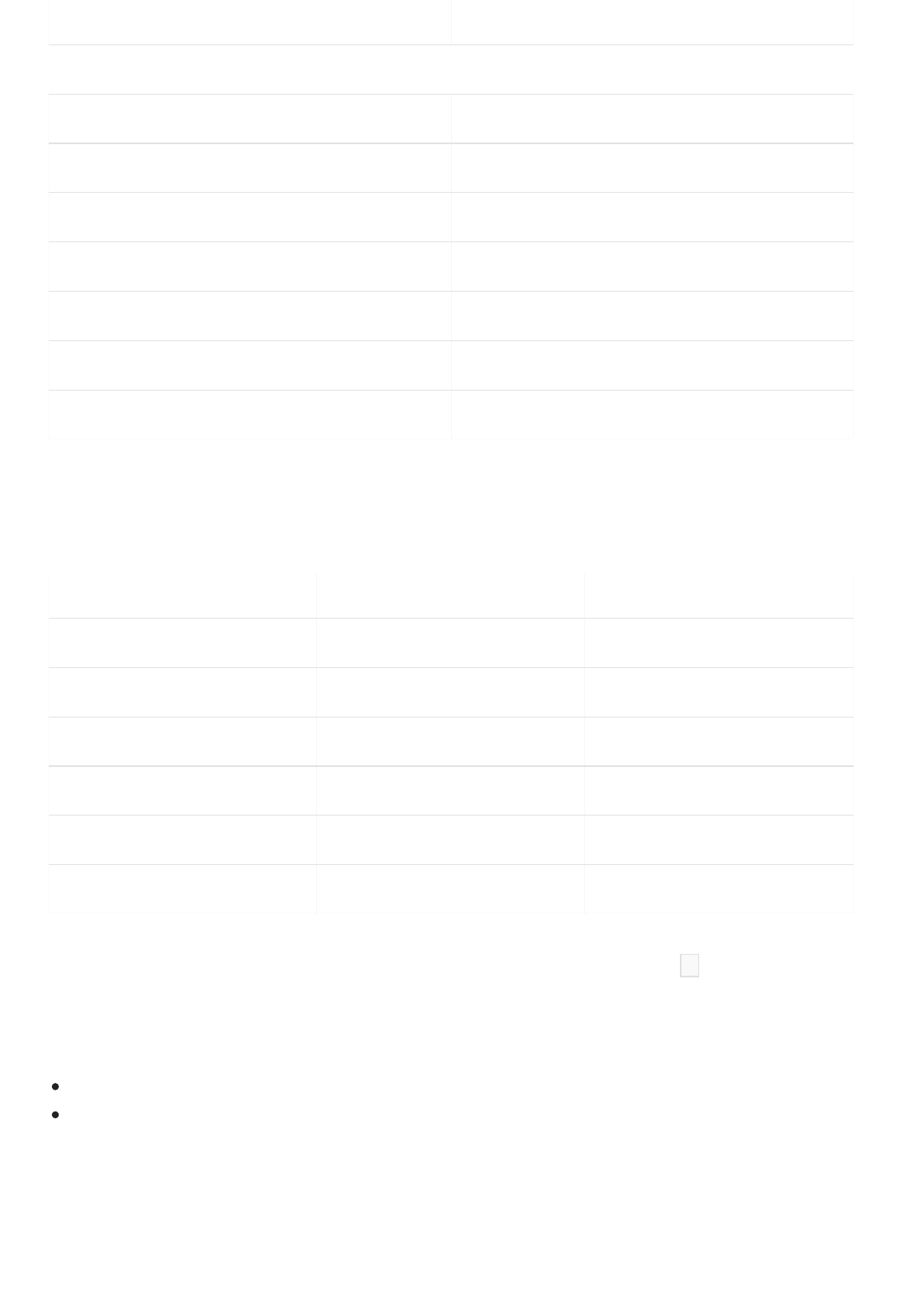

Text Analytics API v3 language support

7/8/2021 • 6 minutes to read • Edit Online

NOTENOTE

L ANGUAGEL ANGUAGE L ANGUAGE C O DEL ANGUAGE C O DE V3 SUP P ORTV3 SUP P ORT

STA RT ING V3 M O DELSTA RT ING V3 M O DEL

VERSION:VERSION: N OT ESN OT ES

Chinese-Simplified zh-hans ✓ 2019-10-01 zh also accepted

Chinese-Traditional zh-hant ✓ 2019-10-01

Dutch nl ✓ 2019-10-01

English en ✓ 2019-10-01

French fr ✓ 2019-10-01

German de ✓ 2019-10-01

Hindi hi ✓ 2020-04-01

Italian it ✓ 2019-10-01

Japanese ja ✓ 2019-10-01

Korean ko ✓ 2019-10-01

Norwegian (Bokmål) no ✓ 2020-04-01

Portuguese (Brazil) pt-BR ✓ 2020-04-01

Portuguese

(Portugal)

pt-PT ✓ 2019-10-01 pt also accepted

Sentiment Analysis

Named Entity Recognition (NER)

Key Phrase Extraction

Entity Linking

Text Analytics for health

Personally Identifiable Information (PII)

Language Detection

Languages are added as new model versions are released for specific Text Analytics features. The current model version for

Sentiment Analysis is 2020-04-01 .

Spanish es ✓ 2019-10-01

Turkish tr ✓ 2020-04-01

L ANGUAGEL ANGUAGE L ANGUAGE C O DEL ANGUAGE C O DE V3 SUP P ORTV3 SUP P ORT

STA RT ING V3 M O DELSTA RT ING V3 M O DEL

VERSION:VERSION: N OT ESN OT ES

Opinion mining

(

v3.1 only

)

Opinion mining

(

v3.1 only

)

L ANGUAGEL ANGUAGE L ANGUAGE C O DEL ANGUAGE C O DE

STA RT ING W IT H V3 M ODE LSTA RT ING W IT H V3 M ODE L

VERSION:VERSION: N OT ESN OT ES

English en 2020-04-01

See also

What is the Text Analytics API?

Model versions

What's new in the Text Analytics API?

7/12/2021 • 9 minutes to read • Edit Online

July 2021

GA release updatesGA release updates

June 2021

General API updatesGeneral API updates

Text Analytics for health updatesText Analytics for health updates

May 2021

General API updatesGeneral API updates

The Text Analytics API is updated on an ongoing basis. To stay up-to-date with recent developments, this article

provides you with information about new releases and features.

General availability for Text Analytics for health for both containers and hosted API (/health).

General availability for Opinion Mining.

General availability for PII extraction and redaction.

General availability for Asynchronous ( /analyze ) endpoint.

Updated quickstart examples with new SDK.

New model-version 2021-06-01 for key phrase extraction based on transformers. It provides:

The 2021-06-01 model version for Named Entity Recognition v3.x, which provides

Asynchronous (/analyze) operation and Text Analytics for health (ungated preview) is available in all regions.

Support for 10 languages (Latin and CJK).

Improved key phrase extraction.

Improved AI quality and expanded language support for the

Skill

entity category.

Added Spanish, French, German, Italian and Portuguese language support for the

Skill

entity category

You no longer need to apply for access to preview Text Analytics for health.

A new model version 2021-05-15 for the /health endpoint and on-premise container which provides

A new image for the Text Analytics for health container with tag 3.0.016230002-onprem-amd64 and model

version 2021-05-15 . This container is available for download from Microsoft Container Registry.

5 new entity types: ALLERGEN , CONDITION_SCALE , COURSE , EXPRESSION and MUTATION_TYPE ,

14 new relation types,

Assertion detection expanded for new entity types and

Linking support for ALLERGEN entity type

Custom question answering (previously QnA maker) can now be accessed using a Text Analytics resource.

Release of the new API v3.1-preview.5 which includes

Text Analytics for health and the Analyze asynchronous operations are now available in all regions

Asynchronous Analyze API now supports Sentiment Analysis (SA) and Opinion Mining (OM).

A new query parameter, LoggingOptOut , is now available for customers who wish to opt out of logging

input text for incident reports. Learn more about this parameter in the data privacy article.

March 2021

General API updatesGeneral API updates

Text Analytics for health updatesText Analytics for health updates

Text Analytics resource portal updateText Analytics resource portal update

February 2021

Release of the new API v3.1-preview.4 which includes

Changes in the Opinion Mining JSON response body:

Changes in the JSON response body of the hosted web API of Text Analytics for health:

Entity linking is now available as an asynchronous task in the /analyze endpoint.

A new pii-categories parameter is now available in the /pii endpoint.

aspects is now targets and opinions is now assessments .

The isNegated boolean name of a detected entity object for Negation is deprecated and

replaced by Assertion Detection.

A new property called role is now part of the extracted relation between an attribute and an

entity as well as the relation between entities. This adds specificity to the detected relation type.

This parameter lets you specify select PII entities as well as those not supported by default for

the input language.

Updated client libraries, which include asynchronous Analyze, and Text Analytics for health operations.

You can find examples on GitHub:

C#

Python

Java

JavaScript

Learn more about Text Analytics API v3.1-Preview.4

A new model version 2021-03-01 for the /health endpoint and on-premise container which provides

A new container image with tag 3.0.015490002-onprem-amd64 and the new model-version 2021-03-01 has

been released to the container preview repository.

A new Text Analytics for health container image with this same model-version is now available at

mcr.microsoft.com/azure-cognitive-services/textanalytics/healthcare . Starting April 26th, you will only be

able to download the container from this repository.

A rename of the Gene entity type to GeneOrProtein .

A new Date entity type.

Assertion detection which replaces negation detection (only available in API v3.1-preview.4).

A new preferred name property for linked entities that is normalized from various ontologies and

coding systems (only available in API v3.1-preview.4).

This container image will no longer be available for download from containerpreview.azurecr.io after

April 26th, 2021.

Learn more about Text Analytics for health

Processed Text RecordsProcessed Text Records is now available as a metric in the MonitoringMonitoring section for your Text Analytics

resource in the Azure portal.

The 2021-01-15 model version for the PII endpoint in Named Entity Recognition v3.1-preview.x, which

provides

January 2021

December 2020

November 2020

October 2020

The S0 through S4 pricing tiers are being retired on March 8th, 2021. If you have an existing Text Analytics

resource using the S0 through S4 pricing tier, you should update it to use the Standard (S) pricing tier.

The language detection container is now generally available.

v2.1 of the API is being retired.

Expanded support for 9 new languages

Improved AI quality of named entity categories for supported languages.

The 2021-01-15 model version for Named Entity Recognition v3.x, which provides

Expanded language support for several general entity categories.

Improved AI quality of general entity categories for all supported v3 languages.

The 2021-01-05 model version for language detection, which provides additional language support.

These model versions are currently unavailable in the East US region.

Learn more about about the new NER model

Updated pricing details for the Text Analytics API.

A new endpoint with Text Analytics API v3.1-preview.3 for the new asynchronous Analyze API, which

supports batch processing for NER, PII, and key phrase extraction operations.

A new endpoint with Text Analytics API v3.1-preview.3 for the new asynchronous Text Analytics for health

hosted API with support for batch processing.

Both new features listed above are only available in the following regions: West US 2 , East US 2 ,

Central US , North Europe and West Europe regions.

Portuguese (Brazil) pt-BR is now supported in Sentiment Analysis v3.x, starting with model version

2020-04-01 . It adds to the existing pt-PT support for Portuguese.

Updated client libraries, which include asynchronous Analyze, and Text Analytics for health operations.

You can find examples on GitHub:

C#

Python

Java

Learn more about Text Analytics API v3.1-Preview.3

Hindi support for Sentiment Analysis v3.x, starting with model version 2020-04-01 .

Model version 2020-09-01 for the v3 /languages endpoint, which adds increased language detection and

accuracy improvements.

v3 availability in Central India and UAE North.

September 2020

General API updatesGeneral API updates

Text Analytics for health container updatesText Analytics for health container updates

August 2020

General API updatesGeneral API updates

Text Analytics for health container updatesText Analytics for health container updates

Release of a new URL for the Text Analytics v3.1 public preview to support updates to the following Named

Entity Recognition v3 endpoints:

The following Text Analytics preview API endpoints were retired on September 4th, 2020:

/pii endpoint now includes the new redactedText property in the response JSON where detected

PII entities in the input text are replaced by an * for each character of those entities.

/linking endpoint now includes the bingID property in the response JSON for linked entities.

v2.1-preview

v3.0-preview

v3.0-preview.1

Learn more about Text Analytics API v3.1-Preview.2

The following updates are specific to the September release of the Text Analytics for health container only.

A new container image with tag 1.1.013530001-amd64-preview with the new model-version 2020-09-03 has

been released to the container preview repository.

This model version provides improvements in entity recognition, abbreviation detection, and latency

enhancements.

Learn more about Text Analytics for health

Model version 2020-07-01 for the v3 /keyphrases , /pii and /languages endpoints, which adds:

An HTTP 400 error will now be returned for v3 API requests that exceed the published data limits.

Endpoints that return an offset now support the optional stringIndexType parameter, which adjusts the

returned offset and length values to match a supported string index scheme.

Additional government and country specific entity categories for Named Entity Recognition.

Norwegian and Turkish support in Sentiment Analysis v3.

The following updates are specific to the August release of the Text Analytics for health container only.

New model-version for Text Analytics for health: 2020-07-24

New URL for sending Text Analytics for health requests:

http://<serverURL>:5000/text/analytics/v3.2-preview.1/entities/health (Please note that a browser cache

clearing will be needed in order to use the demo web app included in this new container image)

The following properties in the JSON response have changed:

type has been renamed to category

score has been renamed to confidenceScore

Entities in the category field of the JSON output are now in pascal case. The following entities have been

renamed:

EXAMINATION_RELATION has been renamed to RelationalOperator .

EXAMINATION_UNIT has been renamed to MeasurementUnit .

EXAMINATION_VALUE has been renamed to MeasurementValue .

July 2020

Text Analytics for health container

-

Public gated previewText Analytics for health container

-

Public gated preview

May 2020

Text Analytics API v3 General AvailabilityText Analytics API v3 General Availability

ROUTE_OR_MODE has been renamed MedicationRoute .

The relational entity ROUTE_OR_MODE_OF_MEDICATION has been renamed to RouteOfMedication .

The following entities have been added:

NER

AdministrativeEvent

CareEnvironment

HealthcareProfession

MedicationForm

Relation extraction

DirectionOfCondition

DirectionOfExamination

DirectionOfTreatment

Learn more about Text Analytics for health container

The Text Analytics for health container is now in public gated preview, which lets you extract information from

unstructured English-language text in clinical documents such as: patient intake forms, doctor's notes, research

papers and discharge summaries. Currently, you will not be billed for Text Analytics for health container usage.

The container offers the following features:

Named Entity Recognition

Relation extraction

Entity linking

Negation

Text Analysis API v3 is now generally available with the following updates:

Model version 2020-04-01

New data limits for each feature

Updated language support for Sentiment Analysis (SA) v3

Separate endpoint for Entity Linking

New "Address" entity category in Named Entity Recognition (NER) v3.

New subcategories in NER v3:

Location - Geographical

Location - Structural

Organization - Stock Exchange

Organization - Medical

Organization - Sports

Event - Cultural

Event - Natural

Event - Sports

Text Analytics API v3.1 Public PreviewText Analytics API v3.1 Public Preview

February 2020

SDK support for Text Analytics API v3 Public PreviewSDK support for Text Analytics API v3 Public Preview

Named Entity Recognition v3 public previewNamed Entity Recognition v3 public preview

The following properties in the JSON response have been added:

SentenceText in Sentiment Analysis

Warnings for each document

The names of the following properties in the JSON response have been changed, where applicable:

score has been renamed to confidenceScore

type has been renamed to category

subtype has been renamed to subcategory

confidenceScore has two decimal points of precision.

Learn more about Text Analytics API v3

New Sentiment Analysis feature - Opinion Mining

New Personal ( PII ) domain filter for protected health information ( PHI ).

Learn more about Text Analytics API v3.1 Preview

As part of the unified Azure SDK release, the Text Analytics API v3 SDK is now available as a public preview for

the following programming languages:

C#

Python

JavaScript (Node.js)

Java

Learn more about Text Analytics API v3 SDK

Additional entity types are now available in the Named Entity Recognition (NER) v3 public preview service as we

expand the detection of general and personal information entities found in text. This update introduces model

version 2020-02-01 , which includes:

Recognition of the following general entity types (English only):

PersonType

Product

Event

Geopolitical Entity (GPE) as a subtype under Location

Skill

Recognition of the following personal information entity types (English only):

Person

Organization

Age as a subtype under Quantity

Date as a subtype under DateTime

Email

Phone Number (US only)

URL

October 2019October 2019

Named Entity Recognition

(

NER

)

Named Entity Recognition

(

NER

)

Sentiment Analysis v3 public previewSentiment Analysis v3 public preview

Next steps

IP Address

A new endpoint for recognizing personal information entity types (English only)

Separate endpoints for entity recognition and entity linking.

Model version 2019-10-01 , which includes:

Expanded detection and categorization of entities found in text.

Recognition of the following new entity types:

Phone number

IP address

Entity linking supports English and Spanish. NER language support varies by the entity type.

A new endpoint for analyzing sentiment.

Model version 2019-10-01 , which includes:

Significant improvements in the accuracy and detail of the API's text categorization and scoring.

Automatic labeling for different sentiments in text.

Sentiment analysis and output on a document and sentence level.

It supports English ( en ), Japanese ( ja ), Chinese Simplified ( zh-Hans ), Chinese Traditional ( zh-Hant ), French (

fr ), Italian ( it ), Spanish ( es ), Dutch ( nl ), Portuguese ( pt ), and German ( de ), and is available in the

following regions: Australia East , Central Canada , Central US , East Asia , East US , East US 2 ,

North Europe , Southeast Asia , South Central US , UK South , West Europe , and West US 2 .

Learn more about Sentiment Analysis v3

What is the Text Analytics API?

Example user scenarios

Sentiment analysis

Language detection

Entity recognition

Key phrase extraction

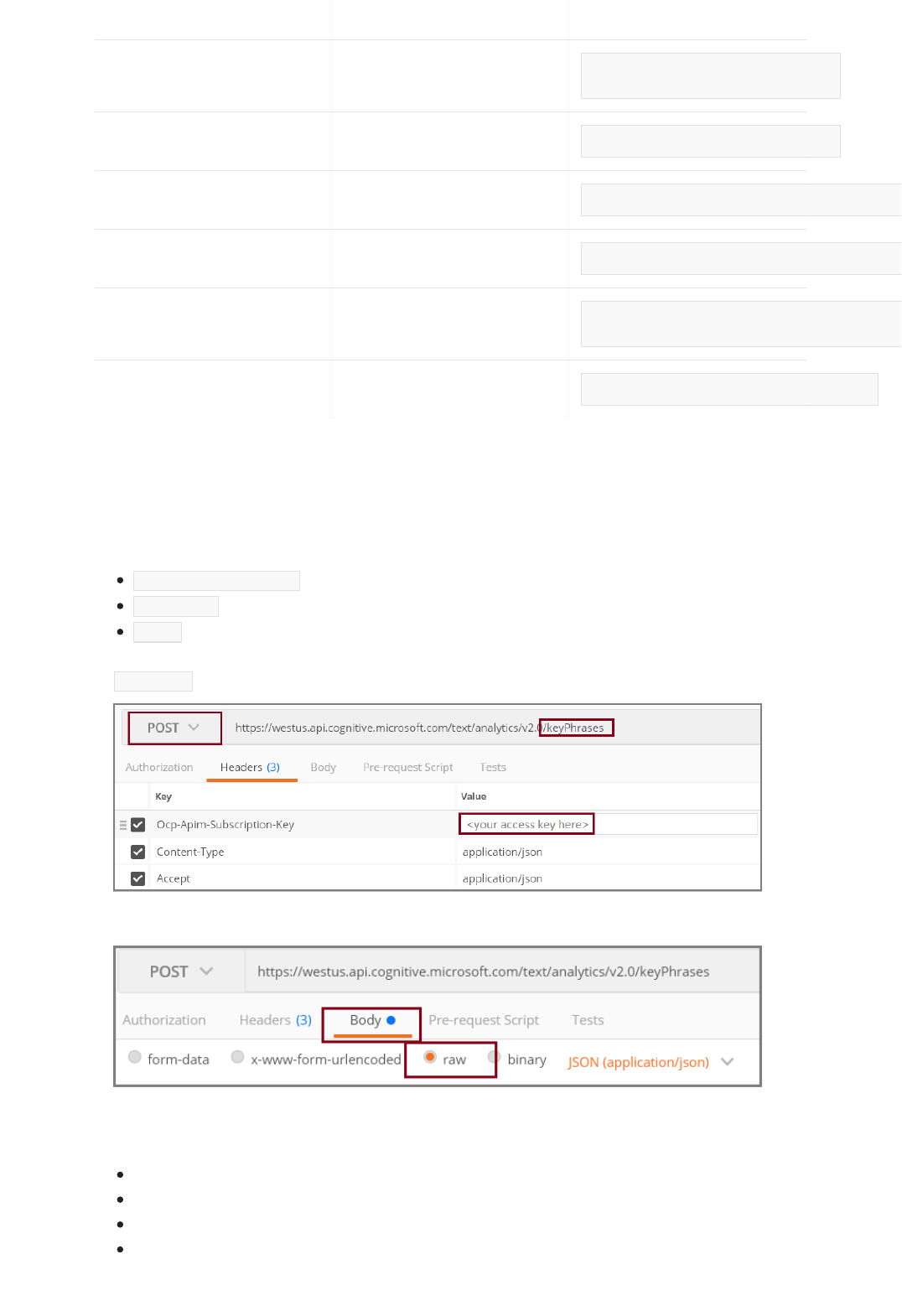

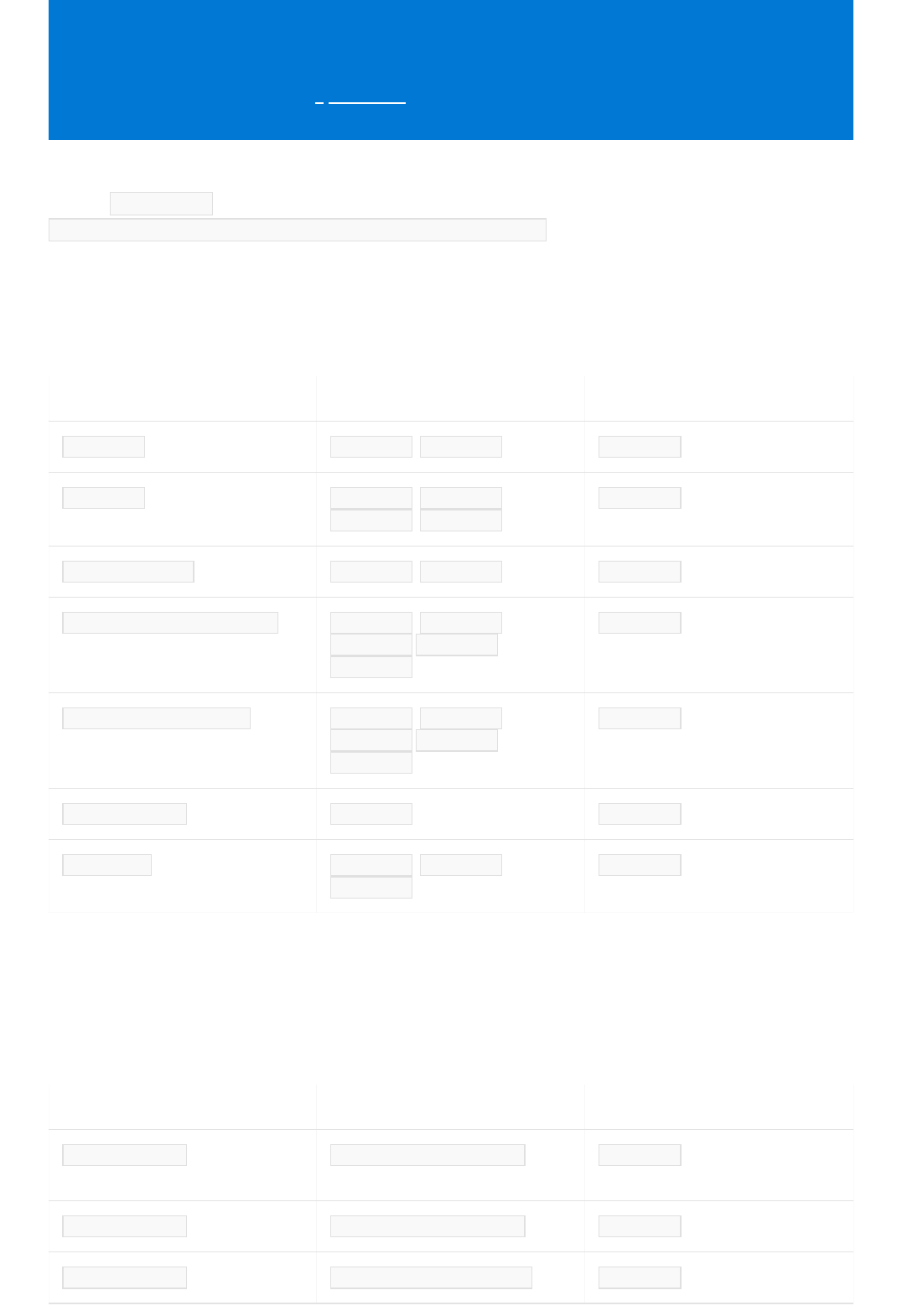

Quickstart: Use the Text Analytics client library and

REST API

7/9/2021 • 77 minutes to read • Edit Online

IMPORTANTIMPORTANT

Prerequisites

Setting up

Create a new .NET Core applicationCreate a new .NET Core application

Use this article to get started with the Text Analytics client library and REST API. Follow these steps to try out

examples code for mining text:

Sentiment analysis

Opinion mining

Language detection

Entity recognition

Personal Identifying Information recognition

Key phrase extraction

The latest stable version of the Text Analytics API is 3.1 .

The code in this article uses synchronous methods and un-secured credentials storage for simplicity reasons. For

production scenarios, we recommend using the batched asynchronous methods for performance and scalability. See

the reference documentation below.

If you want to use Text Analytics for health or Asynchronous operations, see the examples on Github for C#, Python or

Java

Be sure to only follow the instructions for the version you are using.

Version 3.1

Version 3.0

v3.1 Reference documentation | v3.1 Library source code | v3.1 Package (NuGet) | v3.1 Samples

Azure subscription - Create one for free

The Visual Studio IDE

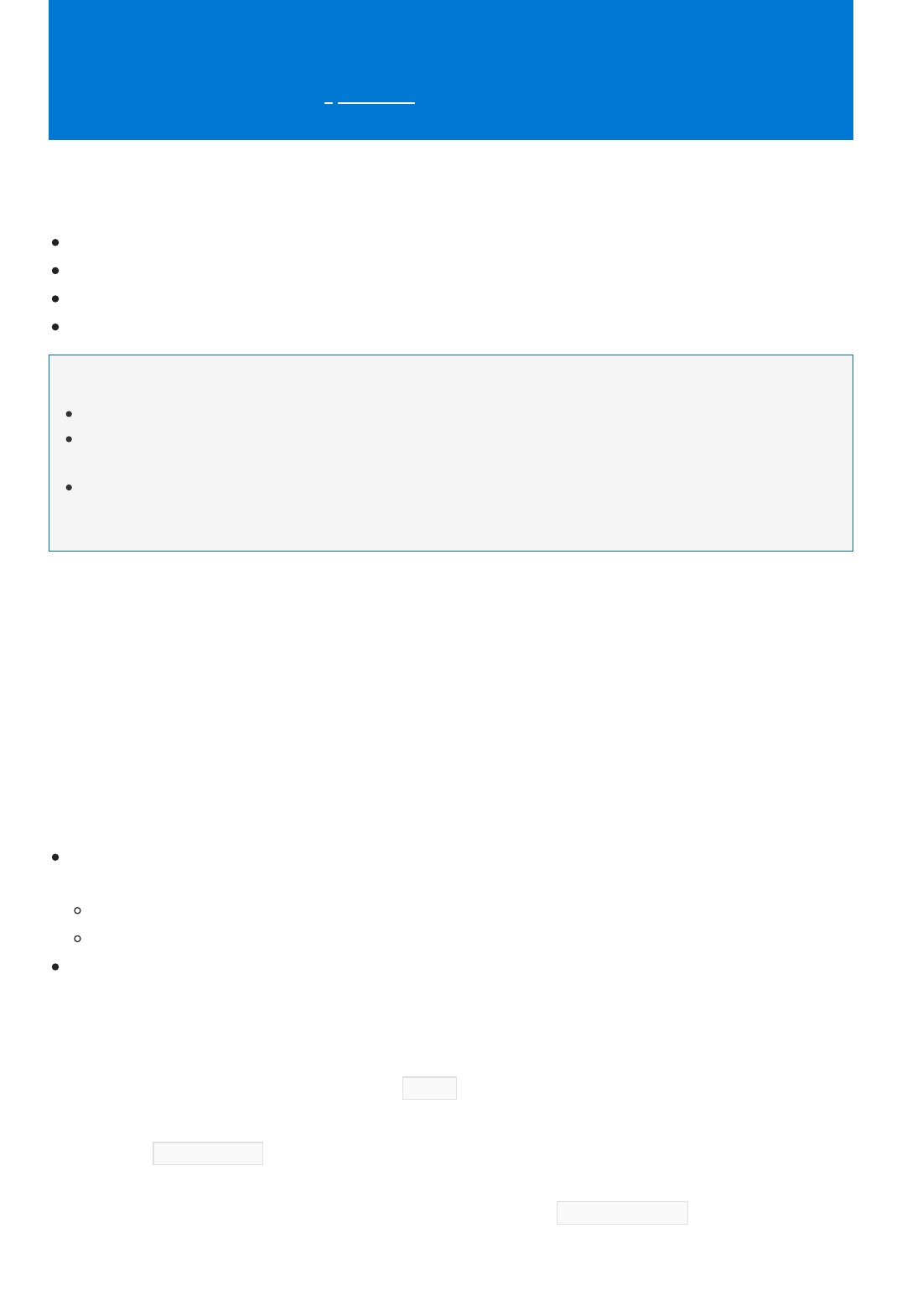

Once you have your Azure subscription, create a Text Analytics resource in the Azure portal to get your key

and endpoint. After it deploys, click Go to resourceGo to resource.

To use the Analyze feature, you will need a Text Analytics resource with the standard (S) pricing tier.

You will need the key and endpoint from the resource you create to connect your application to the

Text Analytics API. You'll paste your key and endpoint into the code below later in the quickstart.

You can use the free pricing tier ( F0 ) to try the service, and upgrade later to a paid tier for production.

Using the Visual Studio IDE, create a new .NET Core console app. This will create a "Hello World" project with a

single C# source file:

program.cs

.

Version 3.1

using Azure;

using System;

using System.Globalization;

using Azure.AI.TextAnalytics;

IMPORTANTIMPORTANT

private static readonly AzureKeyCredential credentials = new AzureKeyCredential("<replace-with-your-text-

analytics-key-here>");

private static readonly Uri endpoint = new Uri("<replace-with-your-text-analytics-endpoint-here>");

static void Main(string[] args)

{

var client = new TextAnalyticsClient(endpoint, credentials);

// You will implement these methods later in the quickstart.

SentimentAnalysisExample(client);

SentimentAnalysisWithOpinionMiningExample(client);

LanguageDetectionExample(client);

EntityRecognitionExample(client);

EntityLinkingExample(client);

RecognizePIIExample(client);

KeyPhraseExtractionExample(client);

Console.Write("Press any key to exit.");

Console.ReadKey();

}

Object model

Version 3.1

Version 3.0

Install the client library by right-clicking on the solution in the Solution ExplorerSolution Explorer and selecting ManageManage

NuGet PackagesNuGet Packages. In the package manager that opens select BrowseBrowse and search for Azure.AI.TextAnalytics .

Select version 5.1.0 , and then InstallInstall. You can also use the Package Manager Console.

Version 3.1

Version 3.0

Open the

program.cs

file and add the following using directives:

In the application's Program class, create variables for your resource's key and endpoint.

Go to the Azure portal. If the Text Analytics resource you created in the PrerequisitesPrerequisites section deployed successfully, click

the Go to ResourceGo to Resource button under Next StepsNext Steps. You can find your key and endpoint in the resource's key andkey and

endpointendpoint page, under resource managementresource management.

Remember to remove the key from your code when you're done, and never post it publicly. For production, consider using

a secure way of storing and accessing your credentials. For example, Azure key vault.

Replace the application's Main method. You will define the methods called here later.

The Text Analytics client is a TextAnalyticsClient object that authenticates to Azure using your key, and provides

functions to accept text as single strings or as a batch. You can send text to the API synchronously, or

asynchronously. The response object will contain the analysis information for each document you send.

If you're using version 3.x of the service, you can use an optional TextAnalyticsClientOptions instance to

Code examples

Authenticate the client

var client = new TextAnalyticsClient(endpoint, credentials);

Sentiment analysis

static void SentimentAnalysisExample(TextAnalyticsClient client)

{

string inputText = "I had the best day of my life. I wish you were there with me.";

DocumentSentiment documentSentiment = client.AnalyzeSentiment(inputText);

Console.WriteLine($"Document sentiment: {documentSentiment.Sentiment}\n");

foreach (var sentence in documentSentiment.Sentences)

{

Console.WriteLine($"\tText: \"{sentence.Text}\"");

Console.WriteLine($"\tSentence sentiment: {sentence.Sentiment}");

Console.WriteLine($"\tPositive score: {sentence.ConfidenceScores.Positive:0.00}");

Console.WriteLine($"\tNegative score: {sentence.ConfidenceScores.Negative:0.00}");

Console.WriteLine($"\tNeutral score: {sentence.ConfidenceScores.Neutral:0.00}\n");

}

}

OutputOutput

initialize the client with various default settings (for example default language or country/region hint). You can

also authenticate using an Azure Active Directory token.

Sentiment analysis

Opinion mining

Language detection

Named Entity Recognition

Entity linking

Key phrase extraction

Version 3.1

Version 3.0

Make sure your main method from earlier creates a new client object with your endpoint and credentials.

Version 3.1

Version 3.0

Create a new function called SentimentAnalysisExample() that takes the client that you created earlier, and call its

AnalyzeSentiment() function. The returned Response<DocumentSentiment> object will contain the sentiment label

and score of the entire input document, as well as a sentiment analysis for each sentence if successful. If there

was an error, it will throw a RequestFailedException .

Document sentiment: Positive

Text: "I had the best day of my life."

Sentence sentiment: Positive

Positive score: 1.00

Negative score: 0.00

Neutral score: 0.00

Text: "I wish you were there with me."

Sentence sentiment: Neutral

Positive score: 0.21

Negative score: 0.02

Neutral score: 0.77

Opinion mining

Create a new function called SentimentAnalysisWithOpinionMiningExample() that takes the client that you created

earlier, and call its AnalyzeSentimentBatch() function with IncludeOpinionMining option in the

AnalyzeSentimentOptions bag. The returned AnalyzeSentimentResultCollection object will contain the collection

of AnalyzeSentimentResult in which represents Response<DocumentSentiment> . The difference between

SentimentAnalysis() and SentimentAnalysisWithOpinionMiningExample() is that the latter will contain

SentenceOpinion in each sentence, which shows an analyzed target and the related assessment(s). If there was

an error, it will throw a RequestFailedException .

static void SentimentAnalysisWithOpinionMiningExample(TextAnalyticsClient client)

{

var documents = new List<string>

{

"The food and service were unacceptable, but the concierge were nice."

};

AnalyzeSentimentResultCollection reviews = client.AnalyzeSentimentBatch(documents, options: new

AnalyzeSentimentOptions()

{

IncludeOpinionMining = true

});

foreach (AnalyzeSentimentResult review in reviews)

{

Console.WriteLine($"Document sentiment: {review.DocumentSentiment.Sentiment}\n");

Console.WriteLine($"\tPositive score: {review.DocumentSentiment.ConfidenceScores.Positive:0.00}");

Console.WriteLine($"\tNegative score: {review.DocumentSentiment.ConfidenceScores.Negative:0.00}");

Console.WriteLine($"\tNeutral score: {review.DocumentSentiment.ConfidenceScores.Neutral:0.00}\n");

foreach (SentenceSentiment sentence in review.DocumentSentiment.Sentences)

{

Console.WriteLine($"\tText: \"{sentence.Text}\"");

Console.WriteLine($"\tSentence sentiment: {sentence.Sentiment}");

Console.WriteLine($"\tSentence positive score: {sentence.ConfidenceScores.Positive:0.00}");

Console.WriteLine($"\tSentence negative score: {sentence.ConfidenceScores.Negative:0.00}");

Console.WriteLine($"\tSentence neutral score: {sentence.ConfidenceScores.Neutral:0.00}\n");

foreach (SentenceOpinion sentenceOpinion in sentence.Opinions)

{

Console.WriteLine($"\tTarget: {sentenceOpinion.Target.Text}, Value:

{sentenceOpinion.Target.Sentiment}");

Console.WriteLine($"\tTarget positive score:

{sentenceOpinion.Target.ConfidenceScores.Positive:0.00}");

Console.WriteLine($"\tTarget negative score:

{sentenceOpinion.Target.ConfidenceScores.Negative:0.00}");

foreach (AssessmentSentiment assessment in sentenceOpinion.Assessments)

{

Console.WriteLine($"\t\tRelated Assessment: {assessment.Text}, Value:

{assessment.Sentiment}");

Console.WriteLine($"\t\tRelated Assessment positive score:

{assessment.ConfidenceScores.Positive:0.00}");

Console.WriteLine($"\t\tRelated Assessment negative score:

{assessment.ConfidenceScores.Negative:0.00}");

}

}

}

Console.WriteLine($"\n");

}

}

OutputOutput

Document sentiment: Positive

Positive score: 0.84

Negative score: 0.16

Neutral score: 0.00

Text: "The food and service were unacceptable, but the concierge were nice."

Sentence sentiment: Positive

Sentence positive score: 0.84

Sentence negative score: 0.16

Sentence neutral score: 0.00

Target: food, Value: Negative

Target positive score: 0.01

Target negative score: 0.99

Related Assessment: unacceptable, Value: Negative

Related Assessment positive score: 0.01

Related Assessment negative score: 0.99

Target: service, Value: Negative

Target positive score: 0.01

Target negative score: 0.99

Related Assessment: unacceptable, Value: Negative

Related Assessment positive score: 0.01

Related Assessment negative score: 0.99

Target: concierge, Value: Positive

Target positive score: 1.00

Target negative score: 0.00

Related Assessment: nice, Value: Positive

Related Assessment positive score: 1.00

Related Assessment negative score: 0.00

Press any key to exit.

Language detection

TIPTIP

static void LanguageDetectionExample(TextAnalyticsClient client)

{

DetectedLanguage detectedLanguage = client.DetectLanguage("Ce document est rédigé en Français.");

Console.WriteLine("Language:");

Console.WriteLine($"\t{detectedLanguage.Name},\tISO-6391: {detectedLanguage.Iso6391Name}\n");

}

OutputOutput

Version 3.1

Version 3.0

Create a new function called LanguageDetectionExample() that takes the client that you created earlier, and call its

DetectLanguage() function. The returned Response<DetectedLanguage> object will contain the detected language

along with its name and ISO-6391 code. If there was an error, it will throw a RequestFailedException .

In some cases it may be hard to disambiguate languages based on the input. You can use the countryHint parameter

to specify a 2-letter country/region code. By default the API is using the "US" as the default countryHint, to remove this

behavior you can reset this parameter by setting this value to empty string countryHint = "" . To set a different default,

set the TextAnalyticsClientOptions.DefaultCountryHint property and pass it during the client's initialization.

Language:

French, ISO-6391: fr

Named Entity Recognition (NER)

static void EntityRecognitionExample(TextAnalyticsClient client)

{

var response = client.RecognizeEntities("I had a wonderful trip to Seattle last week.");

Console.WriteLine("Named Entities:");

foreach (var entity in response.Value)

{

Console.WriteLine($"\tText: {entity.Text},\tCategory: {entity.Category},\tSub-Category:

{entity.SubCategory}");

Console.WriteLine($"\t\tScore: {entity.ConfidenceScore:F2},\tLength: {entity.Length},\tOffset:

{entity.Offset}\n");

}

}

OutputOutput

Named Entities:

Text: trip, Category: Event, Sub-Category:

Score: 0.61, Length: 4, Offset: 18

Text: Seattle, Category: Location, Sub-Category: GPE

Score: 0.82, Length: 7, Offset: 26

Text: last week, Category: DateTime, Sub-Category: DateRange

Score: 0.80, Length: 9, Offset: 34

Personally Identifiable Information (PII) recognition

Version 3.1

Version 3.0

Create a new function called EntityRecognitionExample() that takes the client that you created earlier, call its

RecognizeEntities() function and iterate through the results. The returned

Response<CategorizedEntityCollection> object will contain the collection of detected entities CategorizedEntity .

If there was an error, it will throw a RequestFailedException .

Create a new function called RecognizePIIExample() that takes the client that you created earlier, call its

RecognizePiiEntities() function and iterate through the results. The returned PiiEntityCollection represents

the list of detected PII entities. If there was an error, it will throw a RequestFailedException .

static void RecognizePIIExample(TextAnalyticsClient client)

{

string document = "A developer with SSN 859-98-0987 whose phone number is 800-102-1100 is building tools

with our APIs.";

PiiEntityCollection entities = client.RecognizePiiEntities(document).Value;

Console.WriteLine($"Redacted Text: {entities.RedactedText}");

if (entities.Count > 0)

{

Console.WriteLine($"Recognized {entities.Count} PII entit{(entities.Count > 1 ? "ies" : "y")}:");

foreach (PiiEntity entity in entities)

{

Console.WriteLine($"Text: {entity.Text}, Category: {entity.Category}, SubCategory:

{entity.SubCategory}, Confidence score: {entity.ConfidenceScore}");

}

}

else

{

Console.WriteLine("No entities were found.");

}

}

OutputOutput

Redacted Text: A developer with SSN *********** whose phone number is ************ is building tools with

our APIs.

Recognized 2 PII entities:

Text: 859-98-0987, Category: U.S. Social Security Number (SSN), SubCategory: , Confidence score: 0.65

Text: 800-102-1100, Category: Phone Number, SubCategory: , Confidence score: 0.8

Entity linking

Version 3.1

Version 3.0

Create a new function called EntityLinkingExample() that takes the client that you created earlier, call its

RecognizeLinkedEntities() function and iterate through the results. The returned

Response<LinkedEntityCollection> object will contain the collection of detected entities LinkedEntity . If there

was an error, it will throw a RequestFailedException . Since linked entities are uniquely identified, occurrences of

the same entity are grouped under a LinkedEntity object as a list of LinkedEntityMatch objects.

static void EntityLinkingExample(TextAnalyticsClient client)

{

var response = client.RecognizeLinkedEntities(

"Microsoft was founded by Bill Gates and Paul Allen on April 4, 1975, " +

"to develop and sell BASIC interpreters for the Altair 8800. " +

"During his career at Microsoft, Gates held the positions of chairman, " +

"chief executive officer, president and chief software architect, " +

"while also being the largest individual shareholder until May 2014.");

Console.WriteLine("Linked Entities:");

foreach (var entity in response.Value)

{

Console.WriteLine($"\tName: {entity.Name},\tID: {entity.DataSourceEntityId},\tURL:

{entity.Url}\tData Source: {entity.DataSource}");

Console.WriteLine("\tMatches:");

foreach (var match in entity.Matches)

{

Console.WriteLine($"\t\tText: {match.Text}");

Console.WriteLine($"\t\tScore: {match.ConfidenceScore:F2}");

Console.WriteLine($"\t\tLength: {match.Length}");

Console.WriteLine($"\t\tOffset: {match.Offset}\n");

}

}

}

OutputOutput

Linked Entities:

Name: Microsoft, ID: Microsoft, URL: https://en.wikipedia.org/wiki/Microsoft Data Source:

Wikipedia

Matches:

Text: Microsoft

Score: 0.55

Length: 9

Offset: 0

Text: Microsoft

Score: 0.55

Length: 9

Offset: 150

Name: Bill Gates, ID: Bill Gates, URL: https://en.wikipedia.org/wiki/Bill_Gates Data Source:

Wikipedia

Matches:

Text: Bill Gates

Score: 0.63

Length: 10

Offset: 25

Text: Gates

Score: 0.63

Length: 5

Offset: 161

Name: Paul Allen, ID: Paul Allen, URL: https://en.wikipedia.org/wiki/Paul_Allen Data Source:

Wikipedia

Matches:

Text: Paul Allen

Score: 0.60

Length: 10

Offset: 40

Name: April 4, ID: April 4, URL: https://en.wikipedia.org/wiki/April_4 Data Source:

Wikipedia

Matches:

Text: April 4

Score: 0.32

Length: 7

Offset: 54

Name: BASIC, ID: BASIC, URL: https://en.wikipedia.org/wiki/BASIC Data Source:

Wikipedia

Matches:

Text: BASIC

Score: 0.33

Length: 5

Offset: 89

Name: Altair 8800, ID: Altair 8800, URL: https://en.wikipedia.org/wiki/Altair_8800 Data

Source: Wikipedia

Matches:

Text: Altair 8800

Score: 0.88

Length: 11

Offset: 116

Key phrase extraction

Version 3.1

Version 3.0

static void KeyPhraseExtractionExample(TextAnalyticsClient client)

{

var response = client.ExtractKeyPhrases("My cat might need to see a veterinarian.");

// Printing key phrases

Console.WriteLine("Key phrases:");

foreach (string keyphrase in response.Value)

{

Console.WriteLine($"\t{keyphrase}");

}

}

OutputOutput

Key phrases:

cat

veterinarian

Extract health entities

C a u t i o nC a u t i o n

Create a new function called KeyPhraseExtractionExample() that takes the client that you created earlier, and call

its ExtractKeyPhrases() function. The returned <Response<KeyPhraseCollection> object will contain the list of

detected key phrases. If there was an error, it will throw a RequestFailedException .

To use the health operation, make sure your Azure resource is using the S standard pricing tier.

You can use Text Analytics to perform an asynchronous request to extract healthcare entities from text. The

below sample shows a basic example. You can find a more advanced sample on GitHub.

Version 3.1

Version 3.0

static async Task healthExample(TextAnalyticsClient client)

{

string document = "Prescribed 100mg ibuprofen, taken twice daily.";

List<string> batchInput = new List<string>()

{

document

};

AnalyzeHealthcareEntitiesOperation healthOperation = await

client.StartAnalyzeHealthcareEntitiesAsync(batchInput);

await healthOperation.WaitForCompletionAsync();

await foreach (AnalyzeHealthcareEntitiesResultCollection documentsInPage in healthOperation.Value)

{

Console.WriteLine($"Results of Azure Text Analytics \"Healthcare Async\" Model, version: \"

{documentsInPage.ModelVersion}\"");

Console.WriteLine("");

foreach (AnalyzeHealthcareEntitiesResult entitiesInDoc in documentsInPage)

{

if (!entitiesInDoc.HasError)

{

foreach (var entity in entitiesInDoc.Entities)

{

// view recognized healthcare entities

Console.WriteLine($" Entity: {entity.Text}");

Console.WriteLine($" Category: {entity.Category}");

Console.WriteLine($" Offset: {entity.Offset}");

Console.WriteLine($" Length: {entity.Length}");

Console.WriteLine($" NormalizedText: {entity.NormalizedText}");

}

Console.WriteLine($" Found {entitiesInDoc.EntityRelations.Count} relations in the current

document:");

Console.WriteLine("");

// view recognized healthcare relations

foreach (HealthcareEntityRelation relations in entitiesInDoc.EntityRelations)

{

Console.WriteLine($" Relation: {relations.RelationType}");

Console.WriteLine($" For this relation there are {relations.Roles.Count} roles");

// view relation roles

foreach (HealthcareEntityRelationRole role in relations.Roles)

{

Console.WriteLine($" Role Name: {role.Name}");

Console.WriteLine($" Associated Entity Text: {role.Entity.Text}");

Console.WriteLine($" Associated Entity Category: {role.Entity.Category}");

Console.WriteLine("");

}

Console.WriteLine("");

}

}

else

{

Console.WriteLine(" Error!");

Console.WriteLine($" Document error code: {entitiesInDoc.Error.ErrorCode}.");

Console.WriteLine($" Message: {entitiesInDoc.Error.Message}");

}

Console.WriteLine("");

}

}

}

Results of Azure Text Analytics "Healthcare Async" Model, version: "2021-05-15"

Entity: 100mg

Category: Dosage

Offset: 11

Length: 5

NormalizedText:

Entity: ibuprofen

Category: MedicationName

Offset: 17

Length: 9

NormalizedText: ibuprofen

Entity: twice daily

Category: Frequency

Offset: 34

Length: 11

NormalizedText:

Found 2 relations in the current document:

Relation: DosageOfMedication

For this relation there are 2 roles

Role Name: Dosage

Associated Entity Text: 100mg

Associated Entity Category: Dosage

Role Name: Medication

Associated Entity Text: ibuprofen

Associated Entity Category: MedicationName

Relation: FrequencyOfMedication

For this relation there are 2 roles

Role Name: Medication

Associated Entity Text: ibuprofen

Associated Entity Category: MedicationName

Role Name: Frequency

Associated Entity Text: twice daily

Associated Entity Category: Frequency

Use the API asynchronously with the Analyze operation

C a u t i o nC a u t i o n

using System.Threading.Tasks;

using System.Collections.Generic;

using System.Linq;

Version 3.1

Version 3.0

You can use the Analyze operation to perform asynchronous batch requests for: NER, key phrase extraction,

sentiment analysis, and PII detection. The below sample shows a basic example on one operation. You can find a

more advanced sample on GitHub.

To use the Analyze operation, make sure your Azure resource is using the S standard pricing tier.

Add the following using statements to your C# file.

Create a new function called AnalyzeOperationExample() that takes the client that you created earlier, and call its

StartAnalyzeBatchActionsAsync() function. The returned operation will contain an AnalyzeBatchActionsResult

object. As it is a Long Running Operation, await on the operation.WaitForCompletionAsync() for the value to be

static async Task AnalyzeOperationExample(TextAnalyticsClient client)

{

string inputText = "Microsoft was founded by Bill Gates and Paul Allen.";

var batchDocuments = new List<string> { inputText };

TextAnalyticsActions actions = new TextAnalyticsActions()

{

RecognizeEntitiesActions = new List<RecognizeEntitiesAction>() { new RecognizeEntitiesAction()

},

ExtractKeyPhrasesActions = new List<ExtractKeyPhrasesAction>() { new ExtractKeyPhrasesAction()

},

DisplayName = "Analyze Operation Quick Start Example"

};

AnalyzeActionsOperation operation = await client.StartAnalyzeActionsAsync(batchDocuments, actions);

await operation.WaitForCompletionAsync();

Console.WriteLine($"Status: {operation.Status}");

Console.WriteLine($"Created On: {operation.CreatedOn}");

Console.WriteLine($"Expires On: {operation.ExpiresOn}");

Console.WriteLine($"Last modified: {operation.LastModified}");

if (!string.IsNullOrEmpty(operation.DisplayName))

Console.WriteLine($"Display name: {operation.DisplayName}");

//Console.WriteLine($"Total actions: {operation.TotalActions}");

Console.WriteLine($" Succeeded actions: {operation.ActionsSucceeded}");

Console.WriteLine($" Failed actions: {operation.ActionsFailed}");

Console.WriteLine($" In progress actions: {operation.ActionsInProgress}");

await foreach (AnalyzeActionsResult documentsInPage in operation.Value)

{

RecognizeEntitiesResultCollection entitiesResult =

documentsInPage.RecognizeEntitiesResults.FirstOrDefault().DocumentsResults;

ExtractKeyPhrasesResultCollection keyPhrasesResults =

documentsInPage.ExtractKeyPhrasesResults.FirstOrDefault().DocumentsResults;

Console.WriteLine("Recognized Entities");

foreach (RecognizeEntitiesResult result in entitiesResult)

{

Console.WriteLine($" Recognized the following {result.Entities.Count} entities:");

foreach (CategorizedEntity entity in result.Entities)

{

Console.WriteLine($" Entity: {entity.Text}");

Console.WriteLine($" Category: {entity.Category}");

Console.WriteLine($" Offset: {entity.Offset}");

Console.WriteLine($" Length: {entity.Length}");

Console.WriteLine($" ConfidenceScore: {entity.ConfidenceScore}");

Console.WriteLine($" SubCategory: {entity.SubCategory}");

}

Console.WriteLine("");

}

Console.WriteLine("Key Phrases");

foreach (ExtractKeyPhrasesResult documentResults in keyPhrasesResults)

{

Console.WriteLine($" Recognized the following {documentResults.KeyPhrases.Count}

Keyphrases:");

foreach (string keyphrase in documentResults.KeyPhrases)

{

updated. Once the WaitForCompletionAsync() finishes, the collection should be updated in the operation.Value .

If there was an error, it will throw a RequestFailedException .

{

Console.WriteLine($" {keyphrase}");

}

Console.WriteLine("");

}

}

}

static async Task Main(string[] args)

{

var client = new TextAnalyticsClient(endpoint, credentials);

await AnalyzeOperationExample(client).ConfigureAwait(false);

}

OutputOutput

Status: succeeded

Created On: 3/10/2021 2:25:01 AM +00:00

Expires On: 3/11/2021 2:25:01 AM +00:00

Last modified: 3/10/2021 2:25:05 AM +00:00

Display name: Analyze Operation Quick Start Example

Total actions: 1

Succeeded actions: 1

Failed actions: 0

In progress actions: 0

Recognized Entities

Recognized the following 3 entities:

Entity: Microsoft

Category: Organization

Offset: 0

ConfidenceScore: 0.83

SubCategory:

Entity: Bill Gates

Category: Person

Offset: 25

ConfidenceScore: 0.85

SubCategory:

Entity: Paul Allen

Category: Person

Offset: 40

ConfidenceScore: 0.9

SubCategory:

IMPORTANTIMPORTANT

After you add this example to your application, call in your main() method using await . Because the Analyze

operation is asynchronous, you will need to update your Main() method to the async Task type.

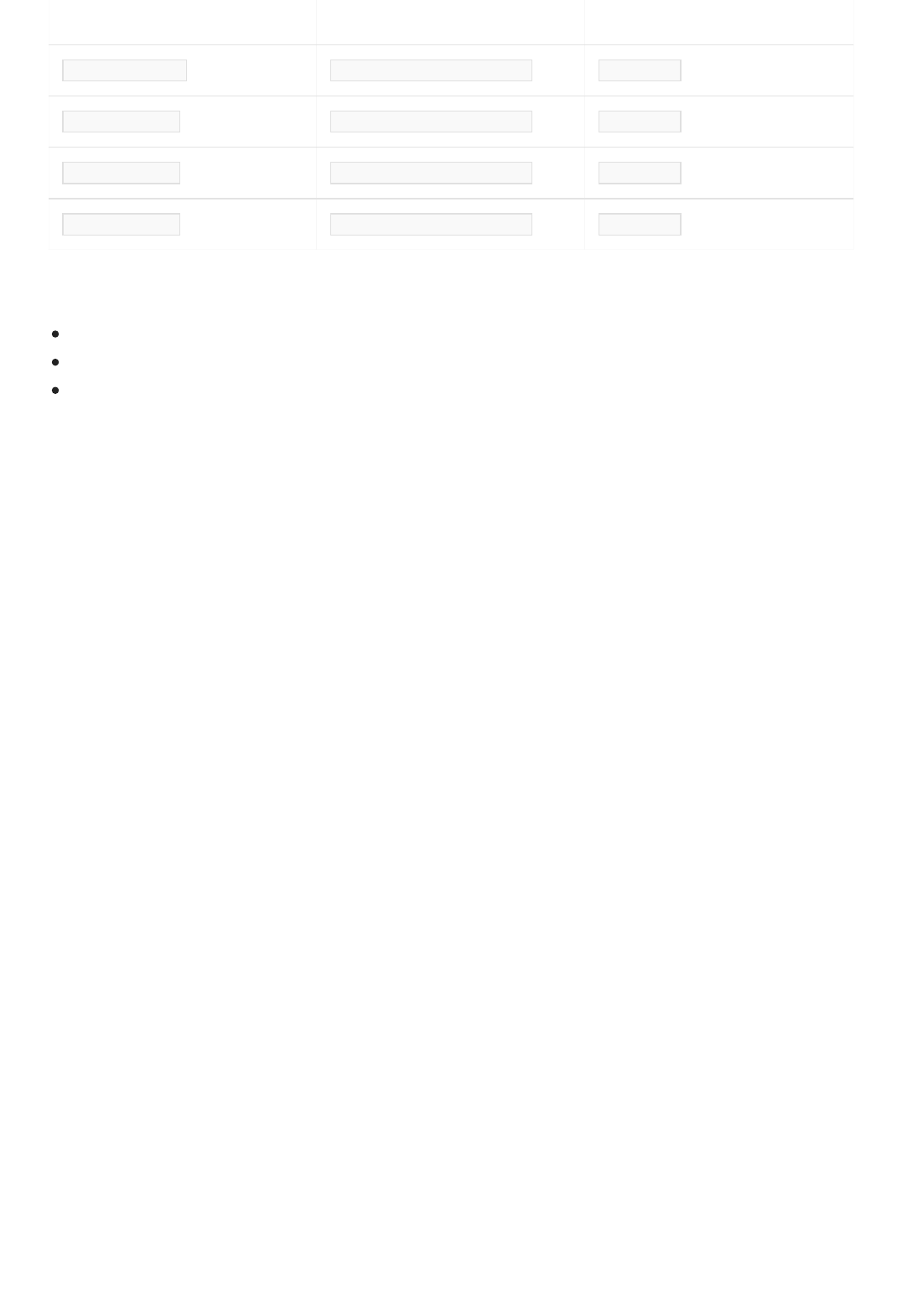

The latest stable version of the Text Analytics API is 3.1 .

The code in this article uses synchronous methods and un-secured credentials storage for simplicity reasons. For

production scenarios, we recommend using the batched asynchronous methods for performance and scalability. See

the reference documentation below. If you want to use Text Analytics for health or Asynchronous operations, see the

examples on Github for C#, Python or Java

Version 3.1

Version 3.0

Reference documentation | Library source code | Package | Samples

Prerequisites

Setting up

Add the client libraryAdd the client library

<dependencies>

<dependency>

<groupId>com.azure</groupId>

<artifactId>azure-ai-textanalytics</artifactId>

<version>5.1.0</version>

</dependency>

</dependencies>

import com.azure.ai.textanalytics.TextAnalyticsAsyncClient;

import com.azure.core.credential.AzureKeyCredential;

import com.azure.ai.textanalytics.models.*;

import com.azure.ai.textanalytics.TextAnalyticsClientBuilder;

import com.azure.ai.textanalytics.TextAnalyticsClient;

import java.util.ArrayList;

import java.util.List;

import java.util.concurrent.TimeUnit;

import java.util.Arrays;

import com.azure.core.util.Context;

import com.azure.core.util.polling.SyncPoller;

import com.azure.ai.textanalytics.util.AnalyzeHealthcareEntitiesResultCollection;

import com.azure.ai.textanalytics.util.AnalyzeHealthcareEntitiesPagedIterable;

Azure subscription - Create one for free

Java Development Kit (JDK) with version 8 or above

Once you have your Azure subscription, create a Text Analytics resource in the Azure portal to get your key

and endpoint. After it deploys, click Go to resourceGo to resource.

To use the Analyze feature, you will need a Text Analytics resource with the standard (S) pricing tier.

You will need the key and endpoint from the resource you create to connect your application to the

Text Analytics API. You'll paste your key and endpoint into the code below later in the quickstart.

You can use the free pricing tier ( F0 ) to try the service, and upgrade later to a paid tier for production.

Version 3.1

Version 3.0

Create a Maven project in your preferred IDE or development environment. Then add the following dependency

to your project's

pom.xml

file. You can find the implementation syntax for other build tools online.

Create a Java file named TextAnalyticsSamples.java . Open the file and add the following import statements:

Version 3.1 preview

Version 3.0

In the java file, add a new class and add your Azure resource's key and endpoint as shown below.

IMPORTANTIMPORTANT

public class TextAnalyticsSamples {

private static String KEY = "<replace-with-your-text-analytics-key-here>";

private static String ENDPOINT = "<replace-with-your-text-analytics-endpoint-here>";

}

public static void main(String[] args) {

//You will create these methods later in the quickstart.

TextAnalyticsClient client = authenticateClient(KEY, ENDPOINT);

sentimentAnalysisWithOpinionMiningExample(client)

detectLanguageExample(client);

recognizeEntitiesExample(client);

recognizeLinkedEntitiesExample(client);

recognizePiiEntitiesExample(client);

extractKeyPhrasesExample(client);

}

Object model

Code examples

Authenticate the client

Go to the Azure portal. If the Text Analytics resource you created in the PrerequisitesPrerequisites section deployed successfully, click

the Go to ResourceGo to Resource button under Next StepsNext Steps. You can find your key and endpoint in the resource's key andkey and

endpointendpoint page, under resource managementresource management.

Remember to remove the key from your code when you're done, and never post it publicly. For production, consider using

a secure way of storing and accessing your credentials. For example, Azure key vault.

Add the following main method to the class. You will define the methods called here later.

Version 3.1

Version 3.0

The Text Analytics client is a TextAnalyticsClient object that authenticates to Azure using your key, and provides

functions to accept text as single strings or as a batch. You can send text to the API synchronously, or

asynchronously. The response object will contain the analysis information for each document you send.

Authenticate the client

Sentiment Analysis

Opinion mining

Language detection

Named Entity recognition

Entity linking

Key phrase extraction

Create a method to instantiate the TextAnalyticsClient object with the key and endpoint for your Text Analytics

resource. This example is the same for versions 3.0 and 3.1 of the API.

static TextAnalyticsClient authenticateClient(String key, String endpoint) {

return new TextAnalyticsClientBuilder()

.credential(new AzureKeyCredential(key))

.endpoint(endpoint)

.buildClient();

}

Sentiment analysis

NOTENOTE

static void sentimentAnalysisExample(TextAnalyticsClient client)

{

// The text that need be analyzed.

String text = "I had the best day of my life. I wish you were there with me.";

DocumentSentiment documentSentiment = client.analyzeSentiment(text);

System.out.printf(

"Recognized document sentiment: %s, positive score: %s, neutral score: %s, negative score: %s.%n",

documentSentiment.getSentiment(),

documentSentiment.getConfidenceScores().getPositive(),

documentSentiment.getConfidenceScores().getNeutral(),

documentSentiment.getConfidenceScores().getNegative());

for (SentenceSentiment sentenceSentiment : documentSentiment.getSentences()) {

System.out.printf(

"Recognized sentence sentiment: %s, positive score: %s, neutral score: %s, negative score:

%s.%n",

sentenceSentiment.getSentiment(),

sentenceSentiment.getConfidenceScores().getPositive(),

sentenceSentiment.getConfidenceScores().getNeutral(),

sentenceSentiment.getConfidenceScores().getNegative());

}

}

}

OutputOutput

Recognized document sentiment: positive, positive score: 1.0, neutral score: 0.0, negative score: 0.0.

Recognized sentence sentiment: positive, positive score: 1.0, neutral score: 0.0, negative score: 0.0.

Recognized sentence sentiment: neutral, positive score: 0.21, neutral score: 0.77, negative score: 0.02.

In your program's main() method, call the authentication method to instantiate the client.

Version 3.1

Version 3.0

In version 3.1 :

Sentiment Analysis includes Opinion Mining analysis which is optional flag.

Opinion Mining contains aspect and opinion level sentiment.

Create a new function called sentimentAnalysisExample() that takes the client that you created earlier, and call its

analyzeSentiment() function. The returned AnalyzeSentimentResult object will contain documentSentiment and

sentenceSentiments if successful, or an errorMessage if not.

Opinion mining

static void sentimentAnalysisWithOpinionMiningExample(TextAnalyticsClient client)

{

// The document that needs be analyzed.

String document = "Bad atmosphere. Not close to plenty of restaurants, hotels, and transit! Staff are

not friendly and helpful.";

System.out.printf("Document = %s%n", document);

AnalyzeSentimentOptions options = new AnalyzeSentimentOptions().setIncludeOpinionMining(true);

final DocumentSentiment documentSentiment = client.analyzeSentiment(document, "en", options);

SentimentConfidenceScores scores = documentSentiment.getConfidenceScores();

System.out.printf(

"Recognized document sentiment: %s, positive score: %f, neutral score: %f, negative score:

%f.%n",

documentSentiment.getSentiment(), scores.getPositive(), scores.getNeutral(),

scores.getNegative());

documentSentiment.getSentences().forEach(sentenceSentiment -> {

SentimentConfidenceScores sentenceScores = sentenceSentiment.getConfidenceScores();

System.out.printf("\tSentence sentiment: %s, positive score: %f, neutral score: %f, negative score:

%f.%n",

sentenceSentiment.getSentiment(), sentenceScores.getPositive(),

sentenceScores.getNeutral(), sentenceScores.getNegative());

sentenceSentiment.getOpinions().forEach(opinion -> {

TargetSentiment targetSentiment = opinion.getTarget();

System.out.printf("\t\tTarget sentiment: %s, target text: %s%n",

targetSentiment.getSentiment(),

targetSentiment.getText());

for (AssessmentSentiment assessmentSentiment : opinion.getAssessments()) {

System.out.printf("\t\t\t'%s' assessment sentiment because of \"%s\". Is the assessment

negated: %s.%n",

assessmentSentiment.getSentiment(), assessmentSentiment.getText(),

assessmentSentiment.isNegated());

}

});

});

}

OutputOutput

Document = Bad atmosphere. Not close to plenty of restaurants, hotels, and transit! Staff are not friendly

and helpful.

Recognized document sentiment: negative, positive score: 0.010000, neutral score: 0.140000, negative score:

0.850000.

Sentence sentiment: negative, positive score: 0.000000, neutral score: 0.000000, negative score: 1.000000.

Target sentiment: negative, target text: atmosphere

'negative' assessment sentiment because of "bad". Is the assessment negated: false.

Sentence sentiment: negative, positive score: 0.020000, neutral score: 0.440000, negative score: 0.540000.

Sentence sentiment: negative, positive score: 0.000000, neutral score: 0.000000, negative score: 1.000000.

Target sentiment: negative, target text: Staff

'negative' assessment sentiment because of "friendly". Is the assessment negated: true.

'negative' assessment sentiment because of "helpful". Is the assessment negated: true.

To perform sentiment analysis with opinion mining, create a new function called

sentimentAnalysisWithOpinionMiningExample() that takes the client that you created earlier, and call its

analyzeSentiment() function with setting option object AnalyzeSentimentOptions . The returned

AnalyzeSentimentResult object will contain documentSentiment and sentenceSentiments if successful, or an

errorMessage if not.

Language detection

TIPTIP

static void detectLanguageExample(TextAnalyticsClient client)

{

// The text that need be analyzed.

String text = "Ce document est rédigé en Français.";

DetectedLanguage detectedLanguage = client.detectLanguage(text);

System.out.printf("Detected primary language: %s, ISO 6391 name: %s, score: %.2f.%n",

detectedLanguage.getName(),

detectedLanguage.getIso6391Name(),

detectedLanguage.getConfidenceScore());

}

OutputOutput

Detected primary language: French, ISO 6391 name: fr, score: 1.00.

Named Entity Recognition (NER)

NOTENOTE

Create a new function called detectLanguageExample() that takes the client that you created earlier, and call its

detectLanguage() function. The returned DetectLanguageResult object will contain a primary language detected,

a list of other languages detected if successful, or an errorMessage if not. This example is the same for versions

3.0 and 3.1 of the API.

In some cases it may be hard to disambiguate languages based on the input. You can use the countryHint parameter

to specify a 2-letter country code. By default the API is using the "US" as the default countryHint, to remove this behavior

you can reset this parameter by setting this value to empty string countryHint = "" . To set a different default, set the

TextAnalyticsClientOptions.DefaultCountryHint property and pass it during the client's initialization.

Version 3.1

Version 3.0

In version 3.1 :

NER includes separate methods for detecting personal information.

Entity linking is a separate request than NER.

Create a new function called recognizeEntitiesExample() that takes the client that you created earlier, and call its

recognizeEntities() function. The returned CategorizedEntityCollection object will contain a list of

CategorizedEntity if successful, or an errorMessage if not.

static void recognizeEntitiesExample(TextAnalyticsClient client)

{

// The text that need be analyzed.

String text = "I had a wonderful trip to Seattle last week.";

for (CategorizedEntity entity : client.recognizeEntities(text)) {

System.out.printf(

"Recognized entity: %s, entity category: %s, entity sub-category: %s, score: %s, offset: %s,

length: %s.%n",

entity.getText(),

entity.getCategory(),

entity.getSubcategory(),

entity.getConfidenceScore(),

entity.getOffset(),

entity.getLength());

}

}

OutputOutput

Recognized entity: trip, entity category: Event, entity sub-category: null, score: 0.61, offset: 8, length:

4.

Recognized entity: Seattle, entity category: Location, entity sub-category: GPE, score: 0.82, offset: 16,

length: 7.

Recognized entity: last week, entity category: DateTime, entity sub-category: DateRange, score: 0.8, offset:

24, length: 9.

Personally Identifiable Information (PII) recognition

static void recognizePiiEntitiesExample(TextAnalyticsClient client)

{

// The text that need be analyzed.

String document = "My SSN is 859-98-0987";

PiiEntityCollection piiEntityCollection = client.recognizePiiEntities(document);

System.out.printf("Redacted Text: %s%n", piiEntityCollection.getRedactedText());

piiEntityCollection.forEach(entity -> System.out.printf(

"Recognized Personally Identifiable Information entity: %s, entity category: %s, entity subcategory:

%s,"

+ " confidence score: %f.%n",

entity.getText(), entity.getCategory(), entity.getSubcategory(), entity.getConfidenceScore()));

}

OutputOutput

Redacted Text: My SSN is ***********

Recognized Personally Identifiable Information entity: 859-98-0987, entity category: U.S. Social Security

Number (SSN), entity subcategory: null, confidence score: 0.650000.

Entity linking

Create a new function called recognizePiiEntitiesExample() that takes the client that you created earlier, and call

its recognizePiiEntities() function. The returned PiiEntityCollection object will contain a list of PiiEntity if

successful, or an errorMessage if not. It will also contain the redacted text, which consists of the input text with

all identifiable entities replaced with ***** .

Version 3.1

Version 3.0

static void recognizeLinkedEntitiesExample(TextAnalyticsClient client)

{

// The text that need be analyzed.

String text = "Microsoft was founded by Bill Gates and Paul Allen on April 4, 1975, " +

"to develop and sell BASIC interpreters for the Altair 8800. " +

"During his career at Microsoft, Gates held the positions of chairman, " +

"chief executive officer, president and chief software architect, " +

"while also being the largest individual shareholder until May 2014.";

System.out.printf("Linked Entities:%n");

for (LinkedEntity linkedEntity : client.recognizeLinkedEntities(text)) {

System.out.printf("Name: %s, ID: %s, URL: %s, Data Source: %s.%n",

linkedEntity.getName(),

linkedEntity.getDataSourceEntityId(),

linkedEntity.getUrl(),

linkedEntity.getDataSource());

System.out.printf("Matches:%n");

for (LinkedEntityMatch linkedEntityMatch : linkedEntity.getMatches()) {

System.out.printf("Text: %s, Score: %.2f, Offset: %s, Length: %s%n",

linkedEntityMatch.getText(),

linkedEntityMatch.getConfidenceScore(),

linkedEntityMatch.getOffset(),

linkedEntityMatch.getLength());

}

}

}

OutputOutput

Linked Entities:

Name: Microsoft, ID: Microsoft, URL: https://en.wikipedia.org/wiki/Microsoft, Data Source: Wikipedia.

Matches:

Text: Microsoft, Score: 0.55, Offset: 9, Length: 0

Text: Microsoft, Score: 0.55, Offset: 9, Length: 150

Name: Bill Gates, ID: Bill Gates, URL: https://en.wikipedia.org/wiki/Bill_Gates, Data Source: Wikipedia.

Matches:

Text: Bill Gates, Score: 0.63, Offset: 10, Length: 25

Text: Gates, Score: 0.63, Offset: 5, Length: 161

Name: Paul Allen, ID: Paul Allen, URL: https://en.wikipedia.org/wiki/Paul_Allen, Data Source: Wikipedia.

Matches:

Text: Paul Allen, Score: 0.60, Offset: 10, Length: 40

Name: April 4, ID: April 4, URL: https://en.wikipedia.org/wiki/April_4, Data Source: Wikipedia.

Matches:

Text: April 4, Score: 0.32, Offset: 7, Length: 54

Name: BASIC, ID: BASIC, URL: https://en.wikipedia.org/wiki/BASIC, Data Source: Wikipedia.

Matches:

Text: BASIC, Score: 0.33, Offset: 5, Length: 89

Name: Altair 8800, ID: Altair 8800, URL: https://en.wikipedia.org/wiki/Altair_8800, Data Source: Wikipedia.

Matches:

Text: Altair 8800, Score: 0.88, Offset: 11, Length: 116

Key phrase extraction

Create a new function called recognizeLinkedEntitiesExample() that takes the client that you created earlier, and

call its recognizeLinkedEntities() function. The returned LinkedEntityCollection object will contain a list of

LinkedEntity if successful, or an errorMessage if not. Since linked entities are uniquely identified, occurrences

of the same entity are grouped under a LinkedEntity object as a list of LinkedEntityMatch objects.

Create a new function called extractKeyPhrasesExample() that takes the client that you created earlier, and call its

extractKeyPhrases() function. The returned ExtractKeyPhraseResult object will contain a list of key phrases if

successful, or an errorMessage if not. This example is the same for version 3.0 and 3.1 of the API.

static void extractKeyPhrasesExample(TextAnalyticsClient client)

{

// The text that need be analyzed.

String text = "My cat might need to see a veterinarian.";

System.out.printf("Recognized phrases: %n");

for (String keyPhrase : client.extractKeyPhrases(text)) {

System.out.printf("%s%n", keyPhrase);

}

}

OutputOutput

Recognized phrases:

cat

veterinarian

Extract health entities

Version 3.1

Version 3.0

You can use Text Analytics to perform an asynchronous request to extract healthcare entities from text. The

below sample shows a basic example. You can find a more advanced sample on GitHub.

static void healthExample(TextAnalyticsClient client){

List<TextDocumentInput> documents = Arrays.asList(

new TextDocumentInput("0",

"Prescribed 100mg ibuprofen, taken twice daily."));

AnalyzeHealthcareEntitiesOptions options = new

AnalyzeHealthcareEntitiesOptions().setIncludeStatistics(true);

SyncPoller<AnalyzeHealthcareEntitiesOperationDetail, AnalyzeHealthcareEntitiesPagedIterable>

syncPoller = client.beginAnalyzeHealthcareEntities(documents, options, Context.NONE);

System.out.printf("Poller status: %s.%n", syncPoller.poll().getStatus());

syncPoller.waitForCompletion();

// Task operation statistics

AnalyzeHealthcareEntitiesOperationDetail operationResult = syncPoller.poll().getValue();

System.out.printf("Operation created time: %s, expiration time: %s.%n",

operationResult.getCreatedAt(), operationResult.getExpiresAt());

System.out.printf("Poller status: %s.%n", syncPoller.poll().getStatus());

for (AnalyzeHealthcareEntitiesResultCollection resultCollection : syncPoller.getFinalResult()) {

// Model version

System.out.printf(

"Results of Azure Text Analytics \"Analyze Healthcare Entities\" Model, version: %s%n",

resultCollection.getModelVersion());

for (AnalyzeHealthcareEntitiesResult healthcareEntitiesResult : resultCollection) {

System.out.println("Document ID = " + healthcareEntitiesResult.getId());

System.out.println("Document entities: ");

// Recognized healthcare entities

for (HealthcareEntity entity : healthcareEntitiesResult.getEntities()) {

System.out.printf(

"\tText: %s, normalized name: %s, category: %s, subcategory: %s, confidence score:

%f.%n",

entity.getText(), entity.getNormalizedText(), entity.getCategory(),

entity.getSubcategory(), entity.getConfidenceScore());

}

// Recognized healthcare entity relation groups

for (HealthcareEntityRelation entityRelation : healthcareEntitiesResult.getEntityRelations()) {

System.out.printf("Relation type: %s.%n", entityRelation.getRelationType());

for (HealthcareEntityRelationRole role : entityRelation.getRoles()) {

HealthcareEntity entity = role.getEntity();

System.out.printf("\tEntity text: %s, category: %s, role: %s.%n",

entity.getText(), entity.getCategory(), role.getName());

}

}

}

}

}

outputoutput

Poller status: IN_PROGRESS.

Operation created time: 2021-07-20T19:45:50Z, expiration time: 2021-07-21T19:45:50Z.

Poller status: SUCCESSFULLY_COMPLETED.

Results of Azure Text Analytics "Analyze Healthcare Entities" Model, version: 2021-05-15

Document ID = 0

Document entities:

Text: 100mg, normalized name: null, category: Dosage, subcategory: null, confidence score: 1.000000.

Text: ibuprofen, normalized name: ibuprofen, category: MedicationName, subcategory: null, confidence score:

1.000000.

Text: twice daily, normalized name: null, category: Frequency, subcategory: null, confidence score:

1.000000.

Relation type: DosageOfMedication.

Entity text: 100mg, category: Dosage, role: Dosage.

Entity text: ibuprofen, category: MedicationName, role: Medication.

Relation type: FrequencyOfMedication.

Entity text: ibuprofen, category: MedicationName, role: Medication.

Entity text: twice daily, category: Frequency, role: Frequency.

Use the API asynchronously with the Analyze operation

C a u t i o nC a u t i o n

static void analyzeActionsExample(TextAnalyticsClient client){

List<TextDocumentInput> documents = new ArrayList<>();

documents.add(new TextDocumentInput("0", "Microsoft was founded by Bill Gates and Paul Allen."));

SyncPoller<AnalyzeActionsOperationDetail, AnalyzeActionsResultPagedIterable> syncPoller =

client.beginAnalyzeActions(documents,

new TextAnalyticsActions().setDisplayName("Example analyze task")

.setRecognizeEntitiesActions(new RecognizeEntitiesAction())

.setExtractKeyPhrasesActions(

new ExtractKeyPhrasesAction().setModelVersion("latest")),

new AnalyzeActionsOptions().setIncludeStatistics(false),

Context.NONE);

// Task operation statistics details

while (syncPoller.poll().getStatus() == LongRunningOperationStatus.IN_PROGRESS) {

final AnalyzeActionsOperationDetail operationDetail = syncPoller.poll().getValue();