ON MATCHING BINARY TO SOURCE CODE

Arash Shahkar

A thesis

in

The Department

of

Concordia Institute for Information Systems Engineering

Presented in Partial Fulfillment of the Requirements

For the Degree of Master of Applied Science

in Information Systems Security at

Concordia University

Montréal, Québec, Canada

March 2016

© Arash Shahkar, 2016

Concordia University

School of Graduate Studies

This is to certify that the thesis prepared

By: Arash Shahkar

Entitled: On Matching Binary to Source Code

and submitted in partial fulfillment of the requirements for the degree of

Master of Applied Science (Information Systems Security)

complies with the regulations of this University and meets the accepted standards

with respect to originality and quality.

Signed by the final examining committee:

Dr. Jia Yuan Yu Chair

Dr. Lingyu Wang Examiner

Dr. Zhenhua Zhu External Examiner

Dr. Mohammad Mannan Supervisor

Approved

Chair of Department or Graduate Program Director

2016

Dr. Amir Asif, Dean

Faculty of Engineering and Computer Science

Abstract

On Matching Binary to Source Code

Arash Shahkar

Reverse engineering of executable binary programs has diverse applications in

computer security and forensics, and often involves identifying parts of code that are

reused from third-party software projects. Identification of code clones by comparing

and fingerprinting low-level binaries has been explored in various pieces of work as

an effective approach for accelerating the reverse engineering process.

Binary clone detection across different environments and computing platforms

poses significant challenges, and reasoning about sequences of low-level machine instructions

is a tedious and time-consuming process. For these reasons, the

ability to match reused functions to their source code is highly advantageous,

although it has rarely been explored to date.

In this thesis, we systematically assess the feasibility of automatic binary to source

matching to aid the reverse engineering process. We highlight the challenges, elaborate

on the shortcomings of existing proposals, and design a new approach that is

targeted at addressing the challenges while delivering more extensive and detailed

results in a fully automated fashion. By evaluating our approach, we show that it is

generally capable of uniquely matching over 50% of reused functions in a binary to

their source code in a source database with over 500,000 functions, while narrowing

down over 75% of reused functions to at most five candidates in most cases. Finally,

we investigate and discuss the limitations and provide directions for future work.


Contents

List of Figures

List of Tables

Code Listings

1 Introduction

1.1 Motivation

1.2 Thesis Statement

1.3 Contributions

1.4 Outline

2 Background

2.1 Software Compilation and Build Process

2.1.1 High-level Source Code

2.1.2 Abstract Syntax Tree

2.1.3 Intermediate Representation

2.1.4 Control Flow Graph

2.1.5 Compiler Optimizations

2.1.6 Machine Code

2.1.7 Linking

2.2 Binary to Source Matching

2.2.1 Automatic Compilation

2.2.2 Automatic Parsing

3 Related Work

3.1 Binary to Source Comparison

3.2 Binary Decompilation

3.2.1 Decompilation as an Alternative

3.2.2 Decompilation as a Complementary Approach

3.3 High-Level Information Extraction from Binaries

3.4 Source Code Analysis

3.5 Miscellaneous

4 CodeBin Overview

4.1 Assumptions

4.2 Comparison of Source Code and Binaries

4.3 Function Properties

4.3.1 Function Calls

4.3.2 Standard Library and API calls

4.3.3 Number of Function Arguments

4.3.4 Complexity of Control Flow

4.3.5 Strings and Constants

4.4 Annotated Call Graphs

4.5 Using ACG Patterns as Search Queries

5 Implementation

5.1 Challenges

5.1.1 Macros and Header Files

5.1.2 Statically Linked Libraries

5.1.3 Function Inlining

5.1.4 Thunk Functions

5.1.5 Variadic Functions

5.2 Source Code Processing

5.2.1 Preprocessing and Parsing

5.2.2 Source Processor Architecture

5.3 Binary File Processing

5.3.1 Extracting Number of Arguments

5.3.2 ACG Pattern Extraction

5.4 Graph Database

5.4.1 Subgraph Search

5.4.2 Query Results Analysis

5.5 User Interface

6 Evaluation

6.1 Methodology

6.1.1 Test Scenario

6.1.2 Pattern Filtering

6.1.3 Result Collection and Verification

6.2 Evaluation Results

6.3 No Reuse

6.4 Different Compilation Settings

6.5 Source Base and Indexing Performance

7 Discussion

7.1 Limitations

7.1.1 Custom Preprocessor Macros

7.1.2 Orphan Functions

7.1.3 Inaccurate Feature Extraction

7.1.4 Similar Source Candidates

7.1.5 C++ Support

7.2 CodeBin as a Security Tool

7.3 Directions for Future Work

List of Figures

1 Sample abstract syntax tree . . . . . . . . . . . . . . . . . . . . . . . 9

2 Control flow graph created from source code . . . . . . . . . . . . . . 11

3 The effect of LLVM optimizations on CFG . . . . . . . . . . . . . . . 13

4 The effect of MSVC optimizations on CFG . . . . . . . . . . . . . . . 14

5 Cyclomatic complexity of four different hypothetical CFGs . . . . . . 34

6 Cyclomatic complexity of source and binary functions without compiler

optimizations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 35

7 Cyclomatic complexity of source and binary functions with compiler

optimizations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 36

8 Sample partial annotated call graph . . . . . . . . . . . . . . . . . . . 38

9 Overall design of CodeBin . . . . . . . . . . . . . . . . . . . . . . . . 40

10 The effect of static linking on binary ACGs . . . . . . . . . . . . . . . 45

11 Thunk functions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 48

12 The architecture of CodeBin source code processor. . . . . . . . . . . 50

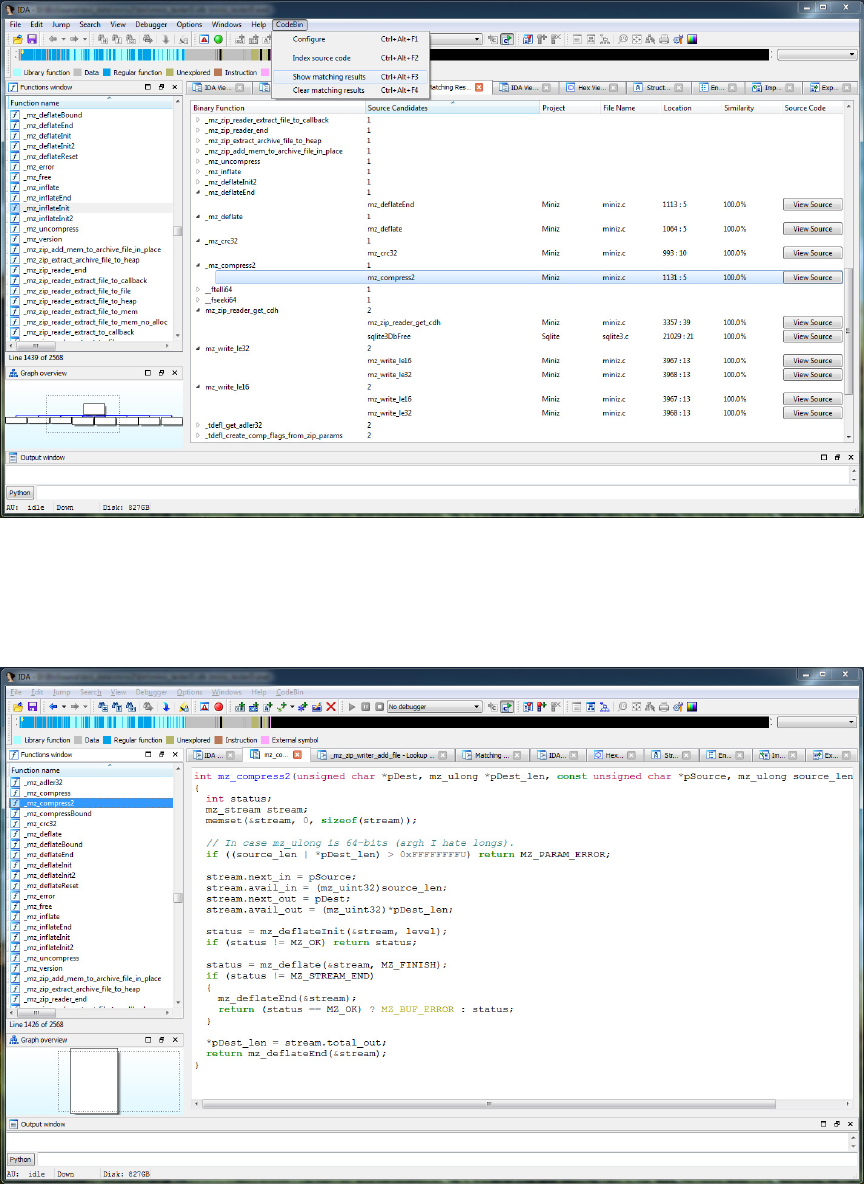

13 User interface: Indexing source code . . . . . . . . . . . . . . . . . . . 58

14 User interface: Inspecing ACGs . . . . . . . . . . . . . . . . . . . . . 59

15 User interface: Viewing matching results . . . . . . . . . . . . . . . . 60

16 User interface: Viewing source code . . . . . . . . . . . . . . . . . . . 60


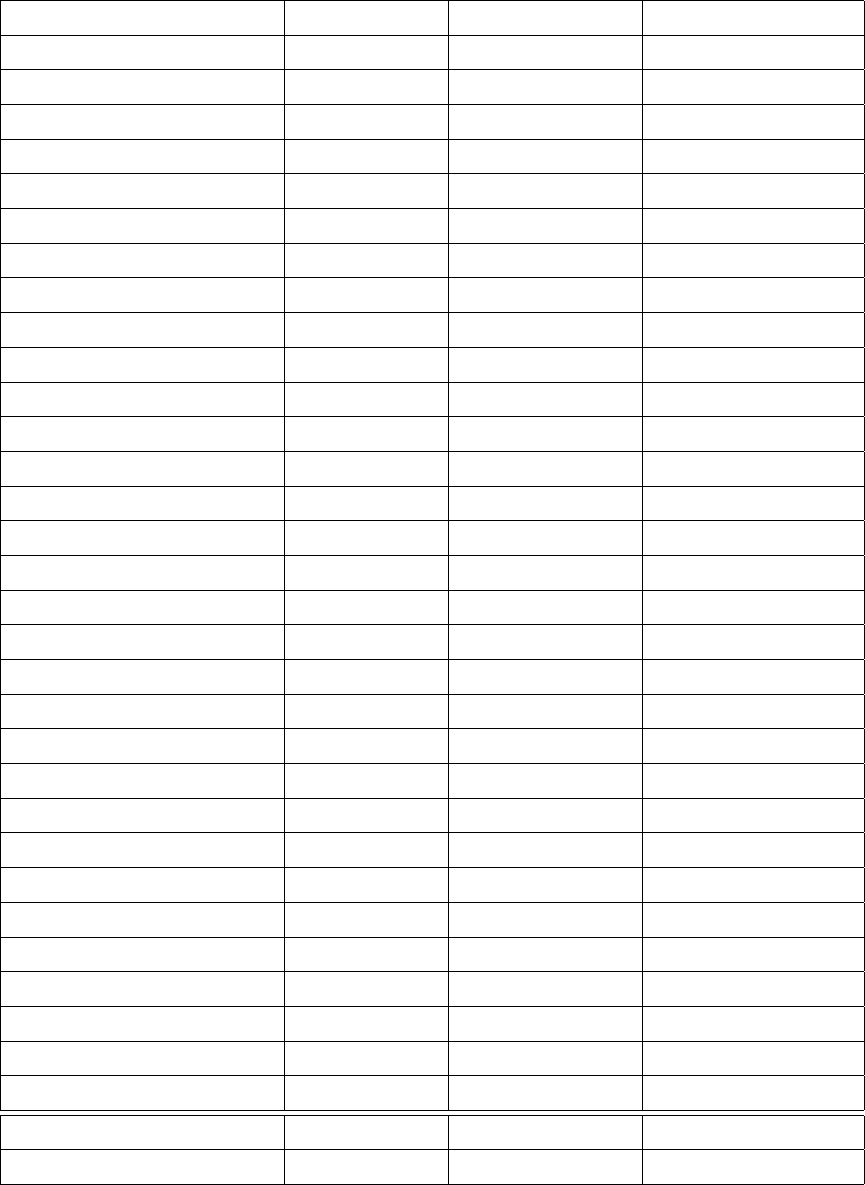

List of Tables

1 Complementary information on Figure 8

2 Results of evaluating CodeBin in real-world scenarios

3 CodeBin results in no-reuse cases

4 Effect of different compilation settings on CodeBin’s performance

5 CodeBin test dataset, parsing and indexing performance


Code Listings

1 Sample C source code

2 Part of x86 assembly code for findPrimeSpeed function

3 Cypher query for the ACG pattern in Figure 8

4 Different implementations for ROTATE in OpenSSL

5 Similar functions in SQLite


Chapter 1

Introduction

Analysis and reverse engineering of executable binaries have extensive applications in

various fields such as computer security and forensics [76]. Common security applications

of reverse engineering include analysis of potential malware samples and inspection of

commercial off-the-shelf software, and are almost always performed on binaries alone.

Reverse engineering of programs in binary form is often considered a mostly manual

and time-consuming process that cannot be efficiently applied to large corpora.

However, companies such as security firms and anti-virus vendors often need to

analyze thousands of unknown binaries a day, emphasizing the need for fast and

automated binary analysis and reverse engineering methods. To this end, there have

been several efforts in designing and developing reliable methods for partial or full

automation of different steps of reverse engineering and binary analysis.

Code reuse refers to the process of copying part of an existing computer

program's code into another piece of software with no or minimal modifications. Code

reuse allows developers to implement parts of a program's functionality by relying on

previously written and tested code, effectively reducing the time needed for software

development and debugging.

Previous research has shown that code reuse is a very common practice in all sorts

of computer programs [17, 44, 60, 63, 75], including free software, commercial off-the-shelf

solutions and malware, all of which are typical targets of reverse engineering.


In the process of reverse engineering a binary program, it is often desirable to

quickly identify reused code fragments, sometimes referred to as clones. Reliable

detection of clones allows reverse engineers to save time by skipping over the fragments

for which the functionality is known, and focusing on parts of the program that drive

its main functionality.

This thesis is the result of exploring identification and matching of reused portions

of binary programs to their source code, a relatively new and less explored method

in the area of clone-based reverse engineering.

1.1 Motivation

The source code of a computer program is usually written in a high-level programming

language and is occasionally accompanied by descriptive comments. Therefore,

understanding the functionality of a piece of software by reading its source code is

much easier and less error-prone than analyzing its machine-level instructions.

On the other hand, as will be discussed later in Chapter 2, while huge repositories of

open source code are publicly accessible, creating large repositories of compiled

binaries for such programs bears significant challenges. As a result, matching reused

portions of binary programs to their source code would be an effective method for

speeding up the reverse engineering process.

Previous efforts have been made on identifying reused code fragments by searching

through repositories of open source programs [13, 39, 67]. However, research on binary

to source code matching is still very scarce. All previous proposals in this area follow

relatively simple and similar methods for matching executable binaries to source code,

and have not been publicly evaluated beyond a few limited case studies. Also, to the

best of our knowledge, there exists no systematic study on the feasibility and potential

challenges of matching reused code fragments from binary programs to source code.


1.2 Thesis Statement

In summary, the objective of this thesis is to assess the feasibility of automatically

comparing executable binary programs on the Intel x86 platform to a corpus of open/known

source code to detect code reuse and match binary code fragments to their

respective source code. To reach this goal, we try to answer the following questions:

Question 1. What are the common features or aspects of a computer program that

can be effectively extracted from both its source code and executable binaries in an

automated fashion?

Question 2. How do compilation and build processes affect these aspects, and what

are the challenges of automated binary to source comparison?

Question 3. Can we improve the existing solutions for binary to source matching by

enriching the analyses with more reliable features and working around the challenges?

Question 4. To what extent can we use better binary to source matching techniques

to detect code reuse and facilitate reverse engineering of real-world binary programs?

1.3 Contributions

1. Identification of Challenges. We have explored the effects of the compilation

and build process on various aspects of a program, and have shown why certain

popular features that are commonly used in previous work for comparing source

code [19, 45, 47, 69] or binary code fragments [30, 31, 32, 41, 46, 71] together

cannot be used for comparing source code to binary code. We have also studied

and hereby describe the challenges of automatically extracting features from

large corpuses of open source code, as well as the technical complications of

binary to source comparison.

2. New Approach. We explore the possibilities of improving current binary to

source matching proposals by studying additional features that can be used for

comparing source code to binary code, and propose a new approach, CodeBin,

which, unlike previous proposals, tries to move from syntactic matching to extracting

semantic features from source code and is capable of revealing code

reuse in much more detail.

3. Implementation. We have implemented CodeBin and created a system that

is capable of automating the matching of reused binary functions to their source

code on arbitrary binary programs and code bases. Our implementation can automatically

parse and extract features from arbitrary code bases, create searchable

indexes of source code features, extract relevant features from disassembled

binaries, match binary functions to previously indexed source code, and generally

binary.

4. Evaluation. We have evaluated CodeBin by simulating real-world reverse engineering

scenarios using existing open source code. We present the results of

code reuse detection through binary to source matching, and assess the scalability

of our approach. To this end, we have indexed millions of lines of code

from 31 popular real-world software projects, reused portions of the previously

indexed code in 12 binary programs, and used our prototype implementation to

detect and match reused binary functions to source functions. In summary, our

implementation is generally capable of uniquely matching over 50% of reused

functions to their source code, requiring approximately one second for indexing

each 1,000 lines of code and less than 30 minutes for analyzing relatively large

disassembled binary files on commodity hardware. We have also tested our system

in 3 cases where no previously indexed code is reused, and have investigated

the results in all cases. We present sample cases and describe the reasons why

the capabilities of our approach are undermined in certain circumstances, and

also discuss the possibilities for future work.


1.4 Outline

The rest of this thesis is organized as follows. Chapter 2 covers some necessary

background on how source code is translated into machine level executable binary

programs, as well as the challenges of automatically matching binary programs to

source code. In Chapter 3, we review related work in the field of reverse engineering

and software analysis, and discuss previous proposals for binary to source matching

and their shortcomings. Chapter 4 is dedicated to the formulation of our approach,

including the results of studying additional common features that can be extracted

from source code and binaries and our proposed method of creating searchable indices

from extracted features as well as performing searches over indexed source code. We

present the details of our prototype implementation in Chapter 5, and discuss certain

aspects of it that are incorporated to address several technical challenges that are

caused by the complexity of the compilation process. In Chapter 6, we present our

dataset and the results of evaluating our approach in real-world, clone-detection-based

reverse engineering scenarios. Chapter 7 analyzes the

evaluation results, covers challenging cases with samples, and discusses potential opportunities

for future work and improvements. Finally, we conclude in Chapter 8.


Chapter 2

Background

2.1 Software Compilation and Build Process

The build process, in general, refers to the process of converting software source

code written in one or several programming languages into one or several software

artifacts that can be run on a computer. An important part of the build process is

compilation, which transforms the source code, usually written in a high-level programming

language, into another target computer language that is native to the platform

on which the program is meant to run. The target code is then processed further to

create target software artifacts.

The details of the build process vary considerably between programming languages and

target platforms. For instance, building a program written in C into a standalone

executable binary for Intel x86 CPUs is completely different from building a Java

program that is executed in a Java Virtual Machine (JVM) [54].

Our goal is to facilitate reverse engineering of binary programs. C is arguably

the most popular programming language that is used to produce machine-executable

binaries [78], and x86 is still the predominant platform for desktop and server computing. Due to the nature

of our work, in the rest of this section we discuss the build process by focusing on C

as the source code programming language and Intel x86 as the target platform.


2.1.1 High-level Source Code

A computer program is usually written in a high-level programming language such

as C. These languages provide strong abstraction from the implementation details

of a computer, and allow the programmer to express the logic of the program using

high-level semantics.

We now discuss some related concepts in C programs using a simple example.

This sample code, along with many others, was used in the early stages of this work

to explore different ideas for binary to source matching.

Listing 1 shows a simple C program that receives an integer as a command-line

argument and computes the largest prime number smaller than the input

integer by sieving over an array allocated up front. The main logic of the program for finding the

target prime number is encapsulated in the function findPrimeSpeed, and the target

integer is passed to this function through its only argument, limit.

This program relies on three functions not defined in its source code, but imported

from the C standard library: malloc for allocating space in memory, printf for

writing in the standard output, and atoi for extracting an integer value from a

character string. Declarations of functions, as well as structs and other components, can be imported

from other (header) files using the #include preprocessor directive.

As the first step towards creating an executable program from C source code,

the code is preprocessed. Each C source file usually refers to several other source

(header) files using #include directives, which instruct the preprocessor to simply

replace the directive line with the contents of the included header file. Preprocessor

macros are identifiers defined by the programmer to stand for arbitrary code, or to

include or exclude certain parts of source code in combination with #ifdef

and #ifndef directives. These macros form an integral part of the C programming

language and are commonly used for a variety of reasons, including controlling the

build process and enclosing platform-dependent parts of code. In some projects such

as OpenSSL, macros are heavily used to include different implementations of the same

components, only one of which is eventually compiled. During the preprocessing step,

C compilers rewrite the source code based on a set of defined macros and form a

concrete version of the code. As a result, a piece of C source code is likely to be

incomplete without a specific set of defined macros.

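```c
#include <stdio.h>
#include <stdlib.h>

/* Returns the largest prime smaller than limit by sieving over a
 * heap-allocated array. Note: lastPrime is left uninitialized when
 * limit <= 2, and the array is never freed. */
int findPrimeSpeed(int limit) {
    int *numbers = (int *) malloc(sizeof(int) * limit);
    int lastPrime;
    int n, x;
    for (n = 2; n < limit; n++) {
        numbers[n] = 0;              /* 0 = not yet marked */
    }
    for (n = 2; n < limit; n++) {
        if (numbers[n] == 0) {       /* unmarked, so n is prime */
            lastPrime = n;
            for (x = 1; n * x < limit; x++) {
                numbers[n * x] = 1;  /* mark n and its multiples */
            }
        }
    }
    return lastPrime;
}

int main(int argc, char *argv[]) {
    /* Expects the limit as the first command-line argument. */
    printf("%d\n", findPrimeSpeed(atoi(argv[1])));
}
```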

Listing 1: Sample C source code.
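To illustrate the preprocessing step described above, the following sketch shows how a configuration macro can select one of two implementations of the same component, so that only one of them ever reaches the compiler. The macro ROTATE_AS_FUNCTION and the names ROTL32, rotl32 and mix are hypothetical; the pattern is only loosely inspired by projects such as OpenSSL and is not taken from their code.

```c
#include <stdint.h>

/* ROTATE_AS_FUNCTION is a hypothetical configuration macro. Projects
 * usually set such macros on the compiler command line
 * (e.g. -DROTATE_AS_FUNCTION) or in a generated configuration header. */
#ifdef ROTATE_AS_FUNCTION
/* Variant A: an out-of-line helper, so the binary may contain a
 * separate rotl32 function and calls to it. */
uint32_t rotl32(uint32_t v, unsigned n)
{
    n &= 31u;
    return (uint32_t)((v << n) | (v >> ((32u - n) & 31u)));
}
#define ROTL32(v, n) rotl32((v), (n))
#else
/* Variant B: a pure macro; the preprocessor pastes the shifts into every
 * caller, so no rotate function exists in the preprocessed source.
 * n must be in the range 1..31. */
#define ROTL32(v, n) \
    ((uint32_t)(((uint32_t)(v) << (n)) | ((uint32_t)(v) >> (32 - (n)))))
#endif

/* A caller whose compiled form depends on which variant was selected. */
uint32_t mix(uint32_t a, uint32_t b)
{
    return ROTL32(a, 5) ^ b;
}
```

Built without -DROTATE_AS_FUNCTION, the program contains no rotl32 function at all; built with it, mix contains a call to rotl32 unless the compiler inlines it. The same source file can therefore correspond to structurally different binaries, depending on which macros were defined at build time.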


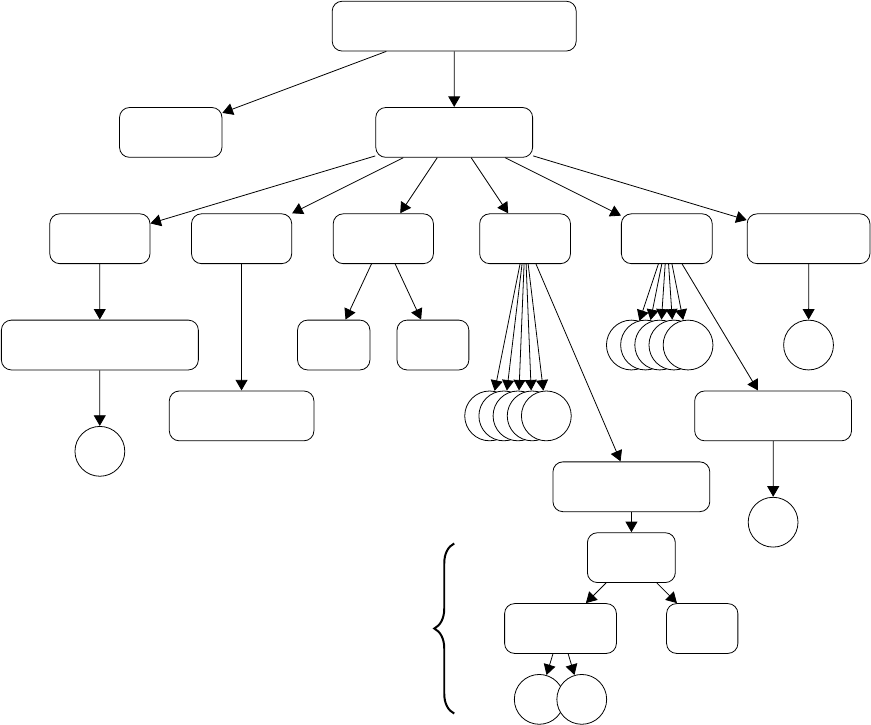

2.1.2 Abstract Syntax Tree

Once the code is preprocessed, a lexer splits the program’s source text into tokens, and a parser

arranges these tokens into a parse tree or concrete syntax tree according to the C grammar.

The parse tree is then converted into an

abstract syntax tree or AST, a tree representation of the source code

based on its syntactic structure. An AST differs subtly from a parse tree as it does not

represent every detail appearing in the concrete syntax, such as punctuation and grouping parentheses.

However, each node in an AST denotes a construct in the source code, and can represent

anything from an operator to the name of a function argument. Obtaining an AST

is therefore vital to any analysis of source code based on its semantics.

[Figure 1 image: the AST rooted at findPrimeSpeed ’int (int)’ with parameter limit ’int’, whose CompoundStmt body contains three DeclStmt nodes (numbers ’int *’ with an initializer, lastPrime ’int’, and n and x ’int’), two ForStmt nodes and a ReturnStmt; one expanded branch shows the assignment numbers[n] = 0.]

Figure 1: Abstract syntax tree for findPrimeSpeed function in Listing 1, created using

Clang [48].
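As a rough illustration of what AST nodes carry, the sketch below hand-builds the subtree for the statement numbers[n] = 0 from Listing 1 and computes two simple structural properties, its node count and its depth. The struct layout and kind names are assumptions made for this example: they are loosely named after Clang's AST classes but are not Clang's API, and Clang's real tree for this statement would typically also contain implicit-cast nodes, which are omitted here.

```c
#include <stddef.h>

/* Kinds loosely modeled on Clang AST classes (BinaryOperator,
 * ArraySubscriptExpr, DeclRefExpr, IntegerLiteral); illustrative only. */
enum AstKind {
    AST_BINARY_OPERATOR,
    AST_ARRAY_SUBSCRIPT,
    AST_DECL_REF,
    AST_INTEGER_LITERAL
};

struct AstNode {
    enum AstKind kind;
    const char *spelling;        /* operator, identifier, or literal text */
    const struct AstNode *lhs;   /* first child, or NULL for a leaf */
    const struct AstNode *rhs;   /* second child, or NULL for a leaf */
};

/* Number of nodes in the subtree rooted at n. */
size_t ast_count(const struct AstNode *n)
{
    return n == NULL ? 0 : 1 + ast_count(n->lhs) + ast_count(n->rhs);
}

/* Height of the subtree rooted at n (a single leaf has depth 1). */
size_t ast_depth(const struct AstNode *n)
{
    if (n == NULL) return 0;
    size_t l = ast_depth(n->lhs);
    size_t r = ast_depth(n->rhs);
    return 1 + (l > r ? l : r);
}

/* numbers[n] = 0 from Listing 1, without implicit-cast nodes. */
static const struct AstNode ref_numbers = { AST_DECL_REF, "numbers", NULL, NULL };
static const struct AstNode ref_n       = { AST_DECL_REF, "n", NULL, NULL };
static const struct AstNode subscript   = { AST_ARRAY_SUBSCRIPT, "[]", &ref_numbers, &ref_n };
static const struct AstNode lit_zero    = { AST_INTEGER_LITERAL, "0", NULL, NULL };
static const struct AstNode assignment  = { AST_BINARY_OPERATOR, "=", &subscript, &lit_zero };
```

Here ast_count(&assignment) is 5 and ast_depth(&assignment) is 3. Properties of this kind are computed from the tree's structure, which is why parsing into an AST comes before any semantic analysis of source code.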


2.1.3 Intermediate Representation

A computer program can be represented in many different forms. Translating code

from one language to another, as performed in compilation, requires analysis and

synthesis, which are in turn tightly bound to the representation form of the program.

Compilers usually translate code into an intermediate representation (IR), also

referred to as intermediate language (IL) [77]. Intermediate representations used in

compilers are usually independent of both the source and target languages, allowing

for creation of compilers that can be targeted for different platforms. Most syntheses

and analyses are performed over this form of code.

For instance, the GCC compiler uses several different intermediate representations.

These intermediate forms are internally used throughout the compilation process

to simplify portability and cross-compilation. One of these IRs, GIMPLE [58], is

a simple, SSA-based [20], three-address code represented as a tree that is mainly

used for performing code-improving transformations, also known as optimizations.

Another example is the LLVM IR [49], a strongly-typed RISC-like instruction set used as

the central IR of the LLVM compiler infrastructure.
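To make the notion of three-address code more concrete, the sketch below writes one C expression twice: once as it would appear in source code, and once hand-lowered so that every statement has at most one operator. It only approximates what an IR such as GIMPLE looks like, and is written in C so that both forms can be compiled and compared; the function and temporary names are hypothetical.

```c
/* Source form: a single statement with a nested expression. */
int expr_source(int a, int b, int c)
{
    return (a + b) * (c - a);
}

/* The same computation hand-lowered to three-address form: each
 * statement applies at most one operator, and each temporary
 * (t1, t2, t3) is assigned exactly once, as it would be in SSA form. */
int expr_three_address(int a, int b, int c)
{
    int t1 = a + b;
    int t2 = c - a;
    int t3 = t1 * t2;
    return t3;
}
```

Both functions return 25 for a = 2, b = 3, c = 7. The flat form makes every intermediate value explicit and named, which is what allows analyses such as data flow analysis to reason about individual operations.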

2.1.4 Control Flow Graph

A control flow graph (CFG) is a directed graph that represents all the possible flows

of control during a program execution. CFGs are usually constructed individually for

each function. In a CFG, nodes represent basic blocks and edges represent possible

flows of control from one basic block to another, also referred to as jumps. A basic

block is a list of instructions that always execute sequentially, starting at the first

instruction and ending at the last.

A CFG can be created from any form of code, including source code (AST), its

intermediate representations, or machine-level assembly code. As will be discussed

later in this chapter, CFGs are an important form for representing a function or a

program in general, and are commonly used in reverse engineering.


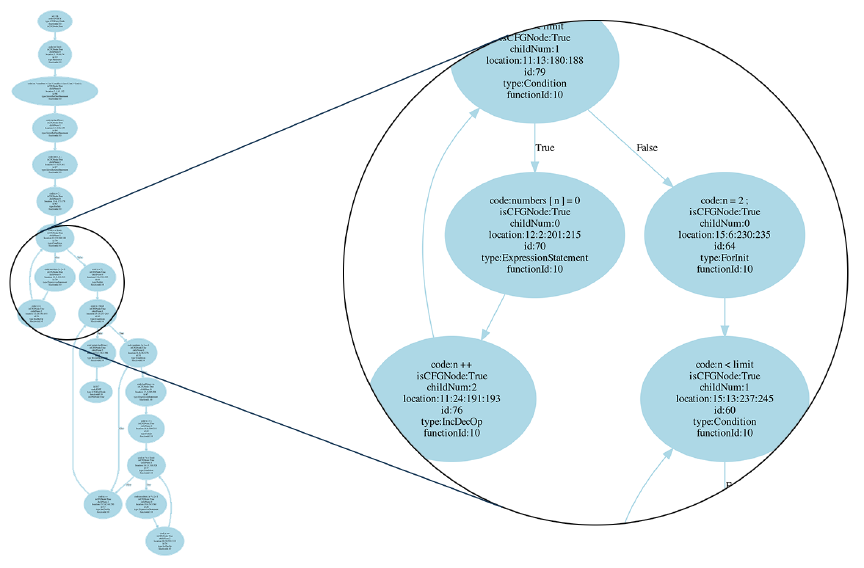

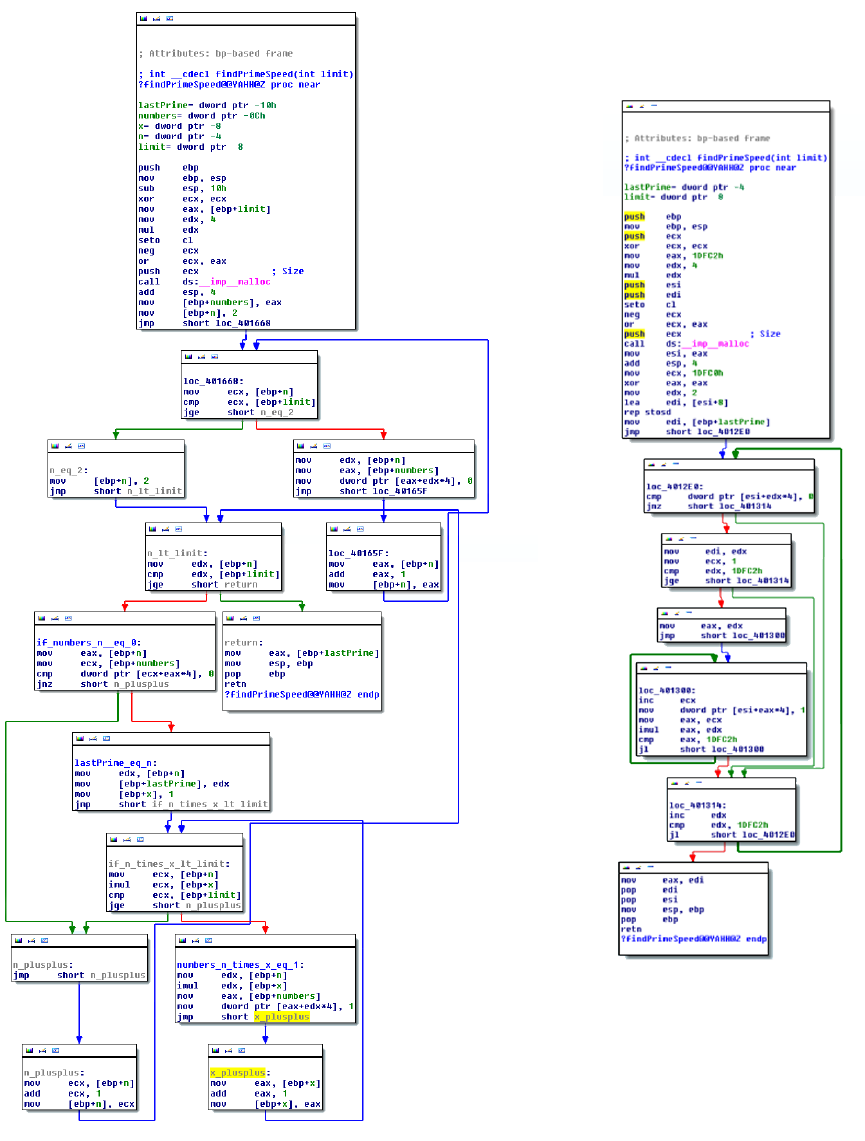

Figure 2 shows the control flow graph of the findPrimeSpeed function created by

parsing the code into an AST and converting it to a CFG using Joern [83].

Figure 2: Control flow graph of findPrimeSpeed function as created from the original source

code.
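The basic block definition given earlier can be turned into the classic leader-based partitioning found in compiler textbooks: an instruction starts a new basic block if it is the first instruction, the target of a jump, or the instruction immediately following a jump or return. The sketch below applies these rules to a toy instruction array; the Insn structure and find_leaders function are hypothetical simplifications that keep only control-flow information, not a real disassembler's format.

```c
#include <stdbool.h>
#include <stddef.h>

/* A toy instruction: only its effect on control flow is recorded. */
struct Insn {
    bool is_jump;    /* conditional or unconditional jump */
    bool is_return;
    int  target;     /* index of the jump target, or -1 */
};

/* Sets leaders[i] to true if instruction i starts a basic block and
 * returns the number of basic blocks. Rules: (1) the first instruction
 * is a leader; (2) every jump target is a leader; (3) every instruction
 * that follows a jump or a return is a leader. */
size_t find_leaders(const struct Insn *code, size_t len, bool *leaders)
{
    if (len == 0) return 0;
    for (size_t i = 0; i < len; i++) leaders[i] = false;
    leaders[0] = true;
    for (size_t i = 0; i < len; i++) {
        if (code[i].is_jump && code[i].target >= 0 &&
            (size_t)code[i].target < len)
            leaders[code[i].target] = true;
        if ((code[i].is_jump || code[i].is_return) && i + 1 < len)
            leaders[i + 1] = true;
    }
    size_t blocks = 0;
    for (size_t i = 0; i < len; i++)
        if (leaders[i]) blocks++;
    return blocks;
}
```

For a five-instruction loop (initialize, test and exit to 4, increment, jump back to 1, return), the leaders are instructions 0, 1, 2 and 4, giving four basic blocks; each block becomes one node of the CFG, and each jump or fall-through between blocks becomes an edge.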

2.1.5 Compiler Optimizations

Compilers in general, and C compilers in particular, are capable of automatically

performing a variety of code optimizations, i.e., transformations that minimize the

time and/or memory required to execute the code without altering its semantics.

These optimizations are typically implemented as a sequence of transformation passes,

which are algorithms that take a program as input and produce an output program

that is semantically equivalent, but syntactically different.
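As a minimal sketch of a single transformation pass, assuming a toy expression tree rather than a real compiler IR, the code below implements constant folding: subexpressions whose operands are all constants are evaluated at compile time and replaced by their result. The ExprOp and Expr types and both function names are hypothetical.

```c
#include <stddef.h>

enum ExprOp { EXPR_CONST, EXPR_VAR, EXPR_ADD, EXPR_MUL };

struct Expr {
    enum ExprOp op;
    int value;            /* meaningful only for EXPR_CONST */
    struct Expr *l, *r;   /* operands of EXPR_ADD / EXPR_MUL */
};

/* Evaluates e, substituting var for every EXPR_VAR leaf. */
int expr_eval(const struct Expr *e, int var)
{
    switch (e->op) {
    case EXPR_CONST: return e->value;
    case EXPR_VAR:   return var;
    case EXPR_ADD:   return expr_eval(e->l, var) + expr_eval(e->r, var);
    case EXPR_MUL:   return expr_eval(e->l, var) * expr_eval(e->r, var);
    }
    return 0; /* unreachable for well-formed trees */
}

/* Constant folding, bottom-up: any ADD/MUL node whose operands are both
 * constants (after folding them) is rewritten in place into a single
 * constant. The tree's shape changes; its value for every input does not. */
void fold_constants(struct Expr *e)
{
    if (e->op == EXPR_CONST || e->op == EXPR_VAR) return;
    fold_constants(e->l);
    fold_constants(e->r);
    if (e->l->op == EXPR_CONST && e->r->op == EXPR_CONST) {
        int v = (e->op == EXPR_ADD) ? e->l->value + e->r->value
                                    : e->l->value * e->r->value;
        e->op = EXPR_CONST;
        e->value = v;
        e->l = e->r = NULL;
    }
}
```

For x * (2 + 3), the pass rewrites the (2 + 3) subtree into the constant 5. The resulting tree is syntactically different, but expr_eval returns the same value for any x, which is exactly the semantic-equivalence requirement stated above.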


Although the target platform is a very important factor that affects code optimizations,

a majority of such optimizations are intrinsically language- and platform-independent [77].

Moreover, to guarantee semantics preservation, the compiler must be able to perform

several analyses such as data flow analysis and dependency analysis on the code,

most of which are facilitated by intermediate languages.

For these two reasons, most compiler optimizations are performed over the

intermediate representation.

Since compiler optimizations are generally CPU- and memory-intensive, compilers

typically allow programmers to choose the level of optimization, an option that affects

the time required for the compilation to finish, and how optimized the output is.

Compiler optimizations can potentially make significant changes to a piece of

code, including considerable modifications of its control flow. The amount of changes

introduced to a function as a result of compiler optimizations depends on several

factors, including how optimal the original code is and what opportunities exist for

the compiler to optimize it.

Figure 3 shows two control flow graphs for the findPrimeSpeed function. The CFG

on the left side is derived from the LLVM IR that is obtained by directly translating

the C source code, without performing any further analysis or optimization. The

CFG on the right side is derived from the LLVM IR that is fully optimized, i.e., the

output of the LLVM optimizer instructed to optimize the IR as much as possible.

Similarly, Figure 4 shows the CFG of findPrimeSpeed as derived from x86 assembly

produced by the Microsoft Visual C++ compiler (MSVC), without any optimization and with full

optimization.

Two interesting observations can be made by comparing the LLVM IR CFGs

(Figure 3) and the x86 assembly CFGs (Figure 4) to the source CFG (Figure 2):

1. Language-independent nature of CFG. Combining the sequential basic

blocks of the source CFG yields a control flow graph that is structurally identical

to the unoptimized CFGs obtained from LLVM IR or x86 assembly. In other

words, the control flow graph seems to be language-independent: an abstract

feature that is capable of representing the flow of code regardless of what language

it is written in. This is one of the key reasons why CFGs are an important

form of representing a piece of code, as well as understanding it during reverse

engineering: their overall structure is generally not affected by the

complexities of native, low-level machine languages such as the x86 instruction

set.

Figure 3: Control flow graph of findPrimeSpeed function as created from LLVM IR.

Left: Without any optimization. Right: With full LLVM optimization.


Figure 4: Control flow graph of findPrimeSpeed function as created from the assembly

output of the Microsoft Visual C compiler. Left: Without any optimization. Right: With

full (level 3) optimization.


2. The effect of compiler optimizations on the CFG. Note how compiler

optimizations have significantly changed the control flow of a rather simple

function. Generally, as a function becomes bigger with a more complex control

flow, compilers are provided with more opportunities to transform it into a more

optimal, but semantically equivalent code. Consequently, the chance of the

CFG being changed as a result of these optimizations also increases. Therefore,

while the structure of CFG seems to be language-independent, it cannot be

effectively used to compare functions in source and target forms in the presence

of an optimizing compiler.
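Observation 1 relies on combining sequential basic blocks. A minimal sketch of that step, assuming a small CFG stored as an adjacency matrix (the Cfg type, MAX_BLOCKS and merge_sequential_blocks are hypothetical names), merges a block into its predecessor whenever the edge between them is the predecessor's only outgoing edge and the block's only incoming edge.

```c
#include <stdbool.h>
#include <stddef.h>

#define MAX_BLOCKS 32

/* A small CFG as an adjacency matrix: edge[u][v] is true when control
 * may flow from block u to block v. Block 0 is the entry block. */
struct Cfg {
    size_t n;
    bool edge[MAX_BLOCKS][MAX_BLOCKS];
    bool alive[MAX_BLOCKS];
};

static size_t out_degree(const struct Cfg *g, size_t u)
{
    size_t d = 0;
    for (size_t v = 0; v < g->n; v++)
        if (g->alive[v] && g->edge[u][v]) d++;
    return d;
}

static size_t in_degree(const struct Cfg *g, size_t v)
{
    size_t d = 0;
    for (size_t u = 0; u < g->n; u++)
        if (g->alive[u] && g->edge[u][v]) d++;
    return d;
}

/* Repeatedly merges block v into block u whenever u -> v is u's only
 * outgoing edge and v's only incoming edge. The entry block is never
 * absorbed. Returns the number of blocks that remain. */
size_t merge_sequential_blocks(struct Cfg *g)
{
    bool changed = true;
    while (changed) {
        changed = false;
        for (size_t u = 0; u < g->n; u++) {
            if (!g->alive[u] || out_degree(g, u) != 1) continue;
            size_t v = 0;
            while (!(g->alive[v] && g->edge[u][v])) v++; /* sole successor */
            if (v == u || v == 0 || in_degree(g, v) != 1) continue;
            g->edge[u][v] = false;
            for (size_t w = 0; w < g->n; w++) {          /* u inherits v's successors */
                if (g->edge[v][w]) {
                    g->edge[u][w] = true;
                    g->edge[v][w] = false;
                }
            }
            g->alive[v] = false;
            changed = true;
        }
    }
    size_t remaining = 0;
    for (size_t u = 0; u < g->n; u++)
        if (g->alive[u]) remaining++;
    return remaining;
}
```

In a seven-block graph where the entry path, a loop body and the exit path are each split into two sequential blocks, the merge leaves four blocks: the entry, the loop header, the loop body and the exit. Only linear chains are collapsed; any difference in branching structure, such as the changes introduced by the optimizations in Figures 3 and 4, survives the merge.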

2.1.6 Machine Code

Once architecture-independent analyses and optimizations are performed on the intermediate

representation, the code is passed to a machine code generator: the part of the compiler

that translates IR code into native instructions for the target platform and stores them in

an object file. In C and some other languages such as Fortran, compilation is done on

a file-by-file basis: each source file is translated into the intermediate and/or target

language separately. Therefore, for our simple example in Listing 1, only one object

file will be created.

The nature of the generated machine code depends heavily on the architecture of the

target platform. Listing 2 contains part of the assembly code of findPrimeSpeed in

Intel syntax, as generated by Clang/LLVM [48] with full optimizations for the Intel

x86 platform (in its 64-bit mode, as the register names show). The beginning of the

function, which saves registers as dictated by the calling convention [55], is omitted for brevity.
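Listing 2 also shows an optimization at work: the first loop of findPrimeSpeed, which zeroes numbers[2] through numbers[limit - 1], does not appear as a loop but as a single call to ___bzero. Reading the listing, the call is skipped when limit < 3, its destination is rbx + 8 (that is, numbers + 2 with 4-byte int elements), and its length is 4 * (limit - 3) + 4 = 4 * (limit - 2) bytes. The sketch below restates that reading in C; the function names are hypothetical, and the standard memset with a zero byte is used as a stand-in for bzero.

```c
#include <stddef.h>
#include <string.h>

/* The zeroing loop as written in Listing 1. */
void clear_loop(int *numbers, int limit)
{
    for (int n = 2; n < limit; n++) {
        numbers[n] = 0;
    }
}

/* An equivalent form matching our reading of Listing 2: skip when
 * limit < 3, otherwise clear (limit - 2) ints starting at numbers + 2.
 * All-zero bytes represent the integer 0, so memset is equivalent here. */
void clear_memset(int *numbers, int limit)
{
    if (limit >= 3) {
        memset(numbers + 2, 0, (size_t)(limit - 2) * sizeof(int));
    }
}
```

Both functions leave numbers[0] and numbers[1] untouched and zero the rest. As a consequence, the binary contains two loops (LBB0_2 and LBB0_3) where the source contains three, so a comparison that expects the source's loop structure in the binary would not find it.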

```asm
mov r14d, edi
movsxd r15, r14d
lea rdi, [4*r15]
call _malloc
mov rbx, rax
cmp r15d, 3
jl LBB0_6
mov rdi, rbx
add rdi, 8
lea eax, [r14 - 3]
lea rsi, [4*rax + 4]
call ___bzero
dec r14d
mov ecx, 2
.align 4, 0x90
LBB0_2:
cmp dword ptr [rbx + 4*rcx], 0
mov edx, 1
mov rsi, rcx
jne LBB0_5
.align 4, 0x90
LBB0_3:
movsxd rax, esi
mov dword ptr [rbx + 4*rax], 1
inc rdx
mov rsi, rdx
imul rsi, rcx
cmp rsi, r15
jl LBB0_3
mov eax, ecx
LBB0_5:
mov rdx, rcx
inc rdx
cmp ecx, r14d
mov rcx, rdx
jne LBB0_2
LBB0_6:
add rsp, 8
pop rbx
pop r14
pop r15
pop rbp
ret
```

Listing 2: Part of x86 assembly code for findPrimeSpeed function

2.1.7 Linking

Object files are created per source file, contain relocatable machine code, and are

not directly executable. A linker is then responsible for linking the various object files

and libraries and creating the final executable binary. There are certain optimizations,

most notably inter-procedural optimizations (IPO) across source files, that can be

performed only at link time, when the optimizer has the full picture of the program.

Link-time optimizations may be performed on the intermediate representation or on

the object files. In either case, the linker takes all the input files and creates a single

executable file for each target.

Even the simplest pieces of code typically rely on a library, i.e., external code defined in sets of functions or procedures. For instance, our example relies on three library functions: malloc, atoi and printf. Libraries can be linked either statically or dynamically. Statically linked libraries are simply copied into the binary image, forming a relatively more portable executable. For a dynamically linked library, only its symbol names are included in the binary image, and the library itself must be present on the system on which the binary is executed.

2.2 Binary to Source Matching

Having briefly explained the essentials of the software build process, we now discuss two opposing ideas for matching binaries to reused source code, automatic compilation and automatic parsing, and the reason we opted for the latter.

2.2.1 Automatic Compilation

One idea for identifying reused source code in binary programs is to compile the source code to obtain a binary version, and then apply binary clone detection techniques. We explored this idea during the early stages of this project and faced several significant obstacles. According to our observations, automatic compilation of an arbitrary piece of source code bears significant practical challenges. Here, we enumerate and explain some of the key obstacles to automatic compilation, which highlight the importance of being able to compare source code and binaries directly.

2.2.1.1 Various Build Configurations

As discussed in Section 2.1.1, C code is preprocessed before parsing, a process that is heavily affected by preprocessor macros. An automatic compilation system faces a major challenge in obtaining a correct set of values for custom preprocessor macros. Usually, certain sets of values for these macros are included in a configuration script that comes with the codebase and is run before the actual build script. However, different projects utilize different build systems, resulting in various methods for configuration and build. Therefore, it is difficult in practice to obtain the set of macros needed to compile a piece of code without any prior knowledge of the build system used. While modern build systems such as CMake [2] provide a cross-platform way of targeting multiple build environments and make the build process highly standardized, they are yet to be adopted by the majority of C/C++ codebases.
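To make the problem concrete, the following minimal C sketch uses a hypothetical configuration macro, `HAVE_WIDE_CHECKSUM`, which is not taken from any real project. In a real codebase a configure script would normally define such a macro, for example through a generated `config.h`. The same source file yields two different function bodies, and therefore different machine code, depending on a value that is not visible in the source file itself.

```c
#include <stddef.h>

/* HAVE_WIDE_CHECKSUM is a hypothetical macro. In a real project it would
 * normally be defined (or left undefined) by the configuration script. */
#ifdef HAVE_WIDE_CHECKSUM
/* Configured variant: accumulate bytes into a 32-bit sum. */
unsigned long checksum(const unsigned char *buf, size_t len)
{
    unsigned long sum = 0;
    for (size_t i = 0; i < len; i++)
        sum = (sum + buf[i]) & 0xFFFFFFFFUL;
    return sum;
}
#else
/* Fallback variant: accumulate bytes into an 8-bit sum that wraps at 256. */
unsigned long checksum(const unsigned char *buf, size_t len)
{
    unsigned char sum = 0;
    for (size_t i = 0; i < len; i++)
        sum = (unsigned char)(sum + buf[i]);
    return sum;
}
#endif
```

Compiled without the macro, `checksum` on the bytes `{200, 100}` returns 44 (300 modulo 256). Compiled with `-DHAVE_WIDE_CHECKSUM`, it returns 300. An automatic compilation system that guesses the macro wrongly builds a different function from the one in the target binary.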

2.2.1.2 External Dependencies

Relying on external libraries for carrying out certain operations is a very common practice. These external dependencies are not necessarily included in the dependent projects, and need to be downloaded, compiled in a compatible fashion and provided separately by the user. Automatic compilation requires a standardized system for retrieving and building these dependencies. These dependencies are usually either downloaded by build automation and dependency management scripts, or documented so that users can install them manually. While standard dependency management systems are widely adopted by other languages [9, 35, 57], C/C++ projects have yet to embrace such systems, further hindering automatic compilation.

2.2.1.3 Cross-Compilation

At the time of writing this thesis, binary clone detection techniques are generally not reliable when applied to binaries compiled with very different configurations [31], such as different compilers and different optimization levels (e.g., little optimization vs. heavy optimization), or on different platforms (e.g., x86 vs. ARM). Moreover, there might be a large number of candidate projects over which the search will run. In this case, all the source code needs to be compiled into binaries automatically, ideally with different compilers and different levels of optimization. If the underlying platform of future target binaries is not known, the automatic compilation step should also build the projects for different platforms to obtain a good-quality set of binaries to match against.

2.2.2 Automatic Parsing

As a result of the challenges discussed above, we do not compile the target source code into machine binaries as a first step towards source to binary comparison. Instead, we process the source code by parsing it and traversing the AST to extract key features that are later used for matching. Custom configuration macros are, of course, still an issue. However, when only AST creation is considered, missing knowledge of these macros makes the process partially inaccurate rather than blocking it completely, as happens when executable machine code must be created. Similarly, the location of header files will also be a missing piece of information. In Chapter 5, we explain our approach for obtaining ASTs in a fully automated fashion, without access to predefined custom macros or the location of header files.

Chapter 3

Related Work

In this chapter, we discuss previous work on reverse engineering of executable binaries,

with a focus on high-level information recovery from program binaries and source code

as well as assembly to source code comparison.

3.1 Binary to Source Comparison

To the best of our knowledge, the idea of reverse engineering through binary to source comparison has not been explored much. Three tools and a few publications focus on comparing binary programs to source code for various purposes, including reverse engineering, and all of them employ very similar preliminary techniques for comparison.

The oldest proposal, RE-Google [13, 50, 51], is an IDA Pro plugin introduced in 2009. RE-Google is based on Google Code Search, a now-discontinued web API provided by Google that allowed third party applications to submit search queries against Google’s open source code repository. RE-Google extracts constants, strings and imported APIs from disassembled binaries using IDA functionalities, and then searches for the extracted tokens to find matching strings in hosted source files. The plugin became unusable once Google discontinued the Code Search API.
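RE-Google relies on IDA Pro for token extraction. The following is a minimal, self-contained C sketch, not RE-Google's code, of the simplest kind of string extraction involved, in the spirit of the Unix `strings` utility. It reports every run of at least `MIN_LEN` printable ASCII bytes in a raw buffer. The threshold of 4 and the fixed token capacity are illustrative assumptions.

```c
#include <ctype.h>
#include <stddef.h>
#include <string.h>

#define MIN_LEN 4     /* assumed minimum token length, as in `strings` */
#define TOKEN_CAP 64  /* assumed maximum stored token size, incl. '\0' */

/* Scan buf for runs of printable bytes of length >= MIN_LEN. Copies up to
 * max_tokens of them (truncated to TOKEN_CAP - 1 chars) into out and
 * returns the total number of runs found. */
size_t extract_strings(const unsigned char *buf, size_t len,
                       char out[][TOKEN_CAP], size_t max_tokens)
{
    size_t count = 0, start = 0, run = 0;
    /* Iterate one past the end so that a run at the end is flushed. */
    for (size_t i = 0; i <= len; i++) {
        int printable = (i < len) && isprint(buf[i]);
        if (printable) {
            if (run == 0)
                start = i;
            run++;
            continue;
        }
        if (run >= MIN_LEN) {
            if (count < max_tokens) {
                size_t n = run < TOKEN_CAP - 1 ? run : TOKEN_CAP - 1;
                memcpy(out[count], buf + start, n);
                out[count][n] = '\0';
            }
            count++;
        }
        run = 0;
    }
    return count;
}
```

Applied to a buffer containing `"abc"`, `"SHA-256"` and `"keys"` separated by non-printable bytes, the sketch returns the two tokens `"SHA-256"` and `"keys"`. It drops `"abc"` as too short. Tokens like these are what RE-Google submitted as search queries.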

The RE-Source framework and its BinSourcerer tool [66, 67] are an attempt to recreate the functionalities originally provided by RE-Google using other online open source code repositories such as OpenHub [11]. BinSourcerer is also implemented as an IDA Pro plugin and follows a method very similar to that of RE-Google, converting strings and constants as well as imported APIs in binaries to searchable text tokens.

The methods proposed in RE-Google and RE-Source are indeed very similar, and both are based on syntactic string tokens and text-based searches. These proposals have two major drawbacks:

1. Both rely on online repositories for searching, which limits their source code analysis capabilities to what these online repositories expose in their APIs. This essentially prevents these proposals from performing fine-grained analysis, as the APIs of online repositories treat source code as text and only expose text-based search to third party applications [10]. A successful search using these tools returns a list of source files that contain the searched string token, each of which may be quite large and contain thousands of lines of code. A string token is as likely to appear in a comment as in actual code, and may also be part of code written in any language, including languages that are unlikely to be compiled into executable binaries [51].

2. One cannot use RE-Google or BinSourcerer to compare binaries to an arbitrary codebase, e.g., proprietary code that is not open source and is of interest for applications such as copyright infringement detection. This shortcoming may be addressed by creating a database of the non-open code, accompanied by a searchable index, as a secondary target for querying.

The binary analysis tool (BAT) [38, 39], introduced in 2013, is a generic lightweight tool for automated binary analysis with a focus on software license compliance. The approach adopted by BAT is also similar to that of RE-Google and BinSourcerer, as it also searches publicly available source code for text tokens extracted from binaries. BAT is capable of extracting additional identifiers such as function and variable names from binary files, provided that they are present in the binary. However, real-world executable binaries are often stripped of such high-level information, so the identifiers BAT can use are in practice limited to strings.

It should be noted that, despite their limitations, these tools may provide the reverse engineer with very useful information fairly quickly. For example, if the returned files all include implementations of cryptographic hash functions, the reverse engineer learns that the binary file or function under analysis likely includes such functionality. However, not all functions include distinctive strings and constants, a general factor that limits the potential of approaches that are purely based on syntactic tokens.
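Distinctive constants are the kind of token that makes the hash-function example above work. The following hypothetical C sketch is not part of any of the tools discussed. It scans raw machine code for the four initial chaining values of MD5 as defined in RFC 1321, which SHA-1 also uses, encoded little-endian as they would appear in x86 immediates. The function names are illustrative.

```c
#include <stddef.h>
#include <stdint.h>

/* Return the offset of the first little-endian occurrence of the 32-bit
 * constant `value` in buf, or -1 if it does not occur. Decoding byte by
 * byte keeps the sketch independent of the host's endianness. */
long find_le32(const unsigned char *buf, size_t len, uint32_t value)
{
    for (size_t i = 0; i + 4 <= len; i++) {
        uint32_t v = (uint32_t)buf[i]
                   | (uint32_t)buf[i + 1] << 8
                   | (uint32_t)buf[i + 2] << 16
                   | (uint32_t)buf[i + 3] << 24;
        if (v == value)
            return (long)i;
    }
    return -1;
}

/* Initial chaining values of MD5 (RFC 1321), also used by SHA-1. */
static const uint32_t md5_init[4] = {
    0x67452301u, 0xefcdab89u, 0x98badcfeu, 0x10325476u
};

/* Heuristic: 1 if all four constants occur in buf, 0 otherwise. */
int looks_like_md5_init(const unsigned char *buf, size_t len)
{
    for (int k = 0; k < 4; k++)
        if (find_le32(buf, len, md5_init[k]) < 0)
            return 0;
    return 1;
}
```

A function that contains none of these constants cannot be recognized this way, which is exactly the limitation of purely token-based approaches noted above.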

As will be shown later in this thesis, a piece of source code contains significantly more high-level information than just strings and constants, some of which can be effectively compared to binary files and functions for detecting reused portions of code.

Cabezas and Mooji [23] briefly discuss the possibility of utilizing context-based and partial hashes of control flow graphs for comparing source code to compiled binaries in a partially manual process. However, they do not test or validate this approach, nor do they provide evidence for its feasibility. Also, as we showed through an example in Section 2.1.5, CFGs change significantly once source code is transformed into binaries by an optimizing compiler.

3.2 Binary Decompilation

There have been several previous efforts on binary decompilation, which aims to generate equivalent code with high-level semantics from low-level machine binaries.

Historically, research on decompilation dates back to the 1960s [15]. However, modern decompilers have their roots in Cifuentes’ PhD thesis in 1994 [27], where she introduced a structuring algorithm based on interval analysis [16]. Her proposed techniques are implemented in dcc [26], a decompiler that translates Intel 80286 / DOS binaries into C and falls back to outputting assembly in case of failure. The correctness of dcc’s output was not tested.

Another well-known decompiler, Boomerang [1], was created based on Van Emmerik’s proposal [80] for using the Static Single Assignment (SSA) form for the data flow components of a decompiler, including expression propagation, function signature identification and dead code elimination. Van Emmerik performed a case study of reverse engineering a single Windows program by using Boomerang with some manual analysis. However, other research efforts in this area have reported very few cases of successful decompilation using Boomerang [72].
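In SSA form, every variable is assigned exactly once, and a φ-function merges values where control flow paths join. The following hand-written C illustration is not Boomerang output. It shows a function with a reassigned variable next to an SSA-style rewrite. C has no φ-function, so a conditional expression stands in for `x4 = φ(x2, x3)`. Both branch values are computed eagerly, which is harmless here only because they have no side effects. Unsigned arithmetic is used so that eager evaluation cannot introduce undefined overflow.

```c
/* Original form: x is assigned on several paths. */
unsigned original(unsigned a, unsigned b)
{
    unsigned x = a + b;
    if (a > b)
        x = x * 2;
    else
        x = x - 1;
    return x;
}

/* SSA-style form: each of x1..x4 is assigned exactly once. */
unsigned ssa_style(unsigned a, unsigned b)
{
    const unsigned x1 = a + b;
    const unsigned x2 = x1 * 2;              /* value on the "then" path */
    const unsigned x3 = x1 - 1;              /* value on the "else" path */
    const unsigned x4 = (a > b) ? x2 : x3;   /* models phi(x2, x3) */
    return x4;
}
```

Because each name has a single definition, a decompiler can substitute definitions into their uses (expression propagation) and delete definitions that are never used (dead code elimination) without tracking which assignment reaches which use.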

The HexRays Decompiler [6, 36] is the de facto industry standard decompiler, available as a plugin for IDA Pro [7]. As of 2015, the latest version of HexRays is capable of decompiling both x86 and ARM binaries, with full support for 32-bit and 64-bit binaries alike on both platforms. To the best of our knowledge, no other binary decompiler is capable of handling such a wide variety of executable binaries.

Phoenix [72] is another modern academic decompiler, proposed by Schwartz et al. in 2013. Phoenix relies on BAP [22], a binary analysis platform that lifts x86 instructions into an intermediate language for easier analysis and contains extensions such as TIE [52] for type recovery and other analyses. Phoenix employs semantics-preserving structural analysis to guarantee correctness, and iterative control flow structuring to exploit several opportunities for correct recovery of control flow that other decompilers reportedly miss [72]. Phoenix output is reportedly up to twice as accurate as that of HexRays in terms of control flow correctness, but Phoenix is not available for public use as of this writing.

In 2013, Yakdan et al. proposed REcompile [81], a decompiler that, similar to dcc, employs interval analysis for control flow structuring, but also uses a technique called node splitting to reduce the number of GOTO statements in the output. This technique reportedly has the downside of increasing the overall size of the decompilation output. DREAM [82] is another decompiler, proposed by the same group in 2015, which also focuses on reducing the number of produced GOTO statements by using structuring algorithms that are not based on pattern matching, a common method used in other decompilers. Neither of these two decompilers is available for public use as of this writing.

In summary, previous research in the area of decompilation has highlighted significant challenges in the correct recovery of types as well as control flow. For instance, experiments by Schwartz et al. on the Phoenix decompiler have shown several limitations and failures in correct decompilation caused by floating-point operations, incorrect type recovery, inability to handle recursive structures, and some calling conventions [72]. Also, the HexRays decompiler, which is the only usable and publicly available tool in this domain, does not perform type recovery [6] and has been shown to be limited in the correct recovery of control flow [72, 82].

Despite its limitations, decompilation can be considered both as an alternative

and a complementary approach when compared to binary to source matching.

3.2.1 Decompilation as an Alternative

In cases where there is no code reuse, decompilation output is very likely to be more usable than the results of any binary to source matching approach. Also, as will be shown later in Section 6.2, we have found that there are still cases where binary to source matching is likely to fail to provide useful results (see Section 7.1.2).

On the other hand, we argue that binary to source matching has far more potential in clone-based reverse engineering scenarios. Source code usually comes with rich high-level information, such as identifier names (functions, variables, structures, etc.) and comments, that significantly facilitates understanding it. In realistic settings, all of this is removed from a compiled binary. In these cases, correct matching from binary to source may provide a reverse engineer with more helpful results than correct decompilation.

3.2.2 Decompilation as a Complementary Approach

Decompilation can also be used as a complementary approach to binary to source matching. Due to the limitations mentioned above, we have not used decompiler output in our work, except for optionally recovering the number of arguments of a function. However, one might apply source-level clone detection techniques proposed in the literature [69] to decompiler output when comparing binary functions to source functions, and thereby benefit from the in-depth analyses that decompilers perform.

3.3 High-Level Information Extraction from Binaries

There have been several research efforts on the inference and extraction of high-level information such as variable types [29, 52], data structures [53, 68, 74] and object oriented entities [43, 70] from executable binaries using both static and dynamic analysis techniques. Some of these proposals achieve promising results in particular scenarios. However, we have not been able to use them in our work, as they all have considerable limitations. They rely on dynamic analysis and execution, focus on very specific compilation settings, do not support many realistic use cases, or are simply not available for evaluation.

Lin et al. developed REWARDS [53], an approach for automatic recovery of high-level data structures from binary code based on dynamic analysis and binary execution. REWARDS is evaluated on a subset of the GNU coreutils suite, and achieves over 85% accuracy for data structures embedded in the segments that it looks into. TIE [52] is a similar approach by Lee et al. that combines static and dynamic analysis to recover high-level type information. TIE is an attempt to handle control flow and mitigate less than optimal coverage, both of which are significant limitations of approaches that rely solely on dynamic analysis. Based on an experiment on a subset of coreutils programs, TIE is reported to be 45% more accurate than REWARDS and the HexRays decompiler. However, other work such as Phoenix [72] has shown considerable limitations of TIE in handling data structures and code with non-trivial binary instructions.

OBJDIGGER [43] was proposed by Jin et al. to recover C++ object instances, data members and methods using static analysis and symbolic execution. While it has been shown to recover classes and objects from a set of 5 small C++ programs, it does not support many C++ features such as virtual inheritance, and it only targets x86 binaries compiled by Microsoft Visual C++.

Prakash et al. proposed vfGuard [64], a system aimed at strengthening control flow integrity (CFI) protection for virtual function calls in C++ binaries. vfGuard statically analyzes x86 binaries compiled with MSVC to recover C++ semantics such as VTables.

There also exist a few old IDA Pro plugins for recovering C data structures [33] and C++ class hierarchies [73] using RTTI [87], but they all seem limited in capabilities and are not actively developed in public.

3.4 Source Code Analysis

Necula et al. have developed CIL [62], a robust high-level intermediate language that aids in the analysis and transformation of C source code. CIL is both lower level than ASTs and higher level than regular compiler or reverse engineering intermediate representations, and it represents C programs with fewer constructs and clean semantics. While it has been extensively tested on various large C programs such as the Linux kernel, it needs to be run in place of the compiler driver to achieve correct results [61]. This essentially means that one needs to modify the configure and make scripts that ship with the source code. As discussed earlier in Section 2.2.1.1, this is not a limitation for regular use, but it makes automatic processing of arbitrary codebases infeasible due to customized build scripts.

SafeDispatch [40] was proposed by Jang et al. to protect C++ virtual calls from memory corruption attacks, a goal very similar to that of vfGuard [64]. However, SafeDispatch inserts runtime checks for protection by analyzing source code. This system is implemented as a Clang++/LLVM extension and needs to be run along with the compiler. Due to this requirement, and similar to CIL [62], SafeDispatch cannot be automatically invoked on arbitrary C++ codebases.

Joern [83] is a tool developed by Yamaguchi et al. for parsing C programs and storing ASTs, CFGs and program dependence graphs in Neo4J [59] graph databases. Joern is used along with special-purpose graph queries for detecting vulnerabilities in source code [84, 85, 86]. During our experiments, we have found that graphs created by Joern are not always reliable, especially in the presence of rather complex C functions or moderate to heavy use of custom preprocessor macros. As will be discussed later in Section 5.2, we adopt Clang [48], a mature, modular open source compiler, for parsing C source code.

3.5 Miscellaneous

Although not directly related, there are other pieces of work that make use of some

of the key concepts we focus on in this thesis for a variety of purposes.

Lu et al. [56] propose a source-level simulation (SLS) system that annotates source code with binary-level information. In such a system, both source and binary versions of the code are available. The goal is to simulate the execution of the code, usually on an embedded system, while allowing the programmer to see how and to what extent specific parts of the code contribute to simulation metrics. The authors propose a hierarchical CFG matching technique between source and binary CFGs based on nested regions, in order to limit the negative effect of compiler optimizations on SLS techniques.

In a recent paper [24], Caliskan-Islam et al. implement a system for performing authorship attribution on executable binaries. They use lexical and syntactic features extracted from source code and binary decompiler output to train a random forest classifier, and use the resulting machine learning model to de-anonymize the programmer of a binary program. Features include library function names, integers, AST node term frequency inverse document frequency (TFIDF) and the average depth of AST nodes, among a few others. The authors claim an accuracy of 51.6% for 600 programmers with fixed optimization levels on binaries. Although they claim that syntactic features such as AST node depth survive compilation, we have not been able to verify this claim. This is especially true when multiple optimization levels, the limitations of decompilers, and hundreds of thousands of functions with potentially multiple programmers are considered.

Chapter 4

CodeBin Overview

We now outline our general approach for matching executable binaries to source code.

4.1 Assumptions

The underlying assumption is that the binary program under analysis may have used portions of one or more open source projects, or of other programs for which the source code is available to the analyst, and that identifying the reused code is a critical goal in the early stages of the reverse engineering process. Functions are usually considered the unit of code reuse in similar work on binary clone detection. However, in some cases only part of a function might be reused; we do not aim at detecting partial function reuse in this work. Our main goal, therefore, is to improve the state of the art in binary to source matching by targeting individual binary functions instead of the entire executable. We do not aim at identifying all the functions in an executable binary, but rather those that are reused and for which the source code is available.

We also do not consider obfuscated binaries, as de-obfuscation is considered an earlier step in the reverse engineering process [37, 79].

4.2 Comparison of Source Code and Binaries

We introduce a new approach for identifying binary functions by searching through a repository of pre-processed source code. This approach aims at automatically finding matches between functions declared in different codebases and machine-level binary functions in arbitrary executable programs. At the core of CodeBin, we identify and carefully utilize certain features of source code that are preserved during the compilation and build processes and are generally independent of the platform, compiler or level of optimization. As a result, these features can also be extracted from binary files using particular methods. They can then be leveraged for finding similarities between source code and executable binaries at the function level without compiling the source code.

Due to the sophisticated transformations applied to source code by an optimizing compiler, we have found that the number of features that can be extracted from both plain source code and executable binaries, and then directly used for comparison, is rather small. Detailed properties of a binary function, such as its CFG or machine instructions, are often impossible to predict solely from its source code without going through the compilation process, as they are highly subject to change and usually affected by the build environment. Hence, features that can be used for direct comparison of binary and source functions usually represent rather abstract properties of these functions.

A small number of abstract features may not seem very usable when a large corpus

of candidate source code is considered. However, we have found that the combination

of these features produces a sufficiently unique pattern that can be effectively used for

either identifying reused functions or narrowing the candidates down to a very small

set, which is easy to analyze manually. This key observation has helped us establish

a method for direct matching of binary and source functions.
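The following minimal C sketch illustrates how combining a few abstract features narrows down candidates. It is not CodeBin's actual matching algorithm. The fingerprint layout, the bitmask encoding of library calls and the `MAX_REL_DIFF` tolerance are all hypothetical choices made for this example. The features themselves correspond to those introduced in Section 4.3.

```c
#include <stddef.h>

/* Hypothetical fingerprint combining a few abstract function properties. */
struct fingerprint {
    int num_args;        /* number of declared arguments               */
    unsigned lib_calls;  /* bitmask: bit k set if library function k
                            (in some fixed, assumed ordering) is called */
    int complexity;      /* cyclomatic complexity of the CFG            */
};

/* Hypothetical tolerance on the relative complexity difference. */
#define MAX_REL_DIFF 0.5

static int complexity_close(int src, int bin)
{
    int diff = src > bin ? src - bin : bin - src;
    int larger = src > bin ? src : bin;
    return larger == 0 || (double)diff / larger <= MAX_REL_DIFF;
}

/* Store the indices of source candidates compatible with the binary
 * fingerprint in out (which must hold n_src entries) and return how many
 * were found. Each feature alone is weak; together they filter strongly. */
size_t narrow_candidates(const struct fingerprint *bin,
                         const struct fingerprint *src, size_t n_src,
                         size_t *out)
{
    size_t found = 0;
    for (size_t i = 0; i < n_src; i++) {
        if (src[i].num_args != bin->num_args)
            continue;
        if (src[i].lib_calls != bin->lib_calls)
            continue;
        if (!complexity_close(src[i].complexity, bin->complexity))
            continue;
        out[found++] = i;
    }
    return found;
}
```

In this toy setting, four candidates that each share some features with the binary function are reduced to a single one that agrees on all of them. That single candidate could then be inspected manually.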


4.3 Function Properties

We leverage a few key properties of functions to form a fingerprint that can be later

used to match their source code and binary forms. These properties have one obvious

advantage in common: They are almost always preserved during the compilation

process regardless of the platform, the compiler, or the optimization level. In other

words, despite the feature extraction methods being different for source code and

binaries, careful adjustments of these methods can yield the same features being

extracted from a function in both forms. These features are as follows:

4.3.1 Function Calls

Functions in a piece of software are not isolated entities and usually rely on one another for their functionality. A high-level view of the call relations between the functions of a program can be represented as a function call graph (FCG), a directed graph in which nodes represent functions and edges represent function calls. In the majority of cases, calls between binary functions follow the same pattern as in source code. We utilize this fact to combine other seemingly abstract features into sufficiently unique patterns that can later be searched for in source code. There are special cases, most notably inline function expansion (or simply inlining), which sometimes make this relation hard to detect in binaries. However, as discussed in Section 5.1.3, we employ a technique to minimize this effect, and have found inlining not to be a significant limiting factor for our approach in real-world scenarios.
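As a hypothetical illustration, not CodeBin's data structures, the following C sketch stores a small function call graph as an adjacency matrix. It derives two call-graph properties that can be computed the same way on the source side and the binary side: how many distinct functions a function calls (out-degree) and how many distinct functions call it (in-degree). The fixed `MAX_FUNCS` bound is an assumption for brevity. Inlining would remove an edge on the binary side, which is why such properties are treated as approximate.

```c
#include <stddef.h>

#define MAX_FUNCS 8   /* assumed upper bound for this sketch */

/* calls[i][j] != 0 means function i contains at least one call to j. */
struct call_graph {
    size_t n;
    unsigned char calls[MAX_FUNCS][MAX_FUNCS];
};

/* Record an edge; caller and callee must both be < g->n. */
void add_call(struct call_graph *g, size_t caller, size_t callee)
{
    g->calls[caller][callee] = 1;
}

/* Number of distinct functions called by f. */
size_t out_degree(const struct call_graph *g, size_t f)
{
    size_t d = 0;
    for (size_t j = 0; j < g->n; j++)
        d += g->calls[f][j] != 0;
    return d;
}

/* Number of distinct functions that call f. */
size_t in_degree(const struct call_graph *g, size_t f)
{
    size_t d = 0;
    for (size_t i = 0; i < g->n; i++)
        d += g->calls[i][f] != 0;
    return d;
}
```

For a graph with edges 0→1, 0→2, 1→2 and 2→3, function 0 calls two functions, function 2 is called by two functions, and function 3 calls none.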

4.3.2 Standard Library and API calls

System calls and standard library function invocations are rather easy to spot in source code. Once function calls are identified, cross-referencing them in each function against the list of declared functions in the same codebase separates internal and external calls. Using a list of known system calls and standard library functions, external calls can be further processed to identify system calls and library function invocations. On the binary side, the situation might be more complex due to different linking techniques. If all libraries are linked dynamically (i.e., runtime linking), the import table of the executable binary yields the targeted system and library calls. On Windows this is the import address table (IAT) of a PE file; on Linux it is the dynamic symbol table of an ELF file. System APIs such as accept for socket connections in Linux, or those defined in kernel32.dll on Windows, can be found in the import table. In contrast, static linking of standard library functions such as memset makes library function calls appear like normal internal calls in the binary, and they are not present in the import table. However, certain library function identification techniques, such as those utilized by IDA Pro’s FLIRT subsystem [4], can be used to detect library functions in executable binaries and distinguish them from regular calls between user functions.
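The source-side classification described above can be sketched in a few lines of C. This is an illustrative sketch, not CodeBin's implementation. The tiny list of known library functions stands in for a complete list of system calls and standard library functions. The name `third_party_fn` used below is hypothetical.

```c
#include <stddef.h>
#include <string.h>

enum call_kind { CALL_INTERNAL, CALL_LIBRARY, CALL_UNKNOWN };

/* 1 if name appears in list[0..n-1], 0 otherwise. */
static int in_list(const char *name, const char *const *list, size_t n)
{
    for (size_t i = 0; i < n; i++)
        if (strcmp(name, list[i]) == 0)
            return 1;
    return 0;
}

/* Classify a callee by cross-referencing it first against the functions
 * declared in the same codebase, then against known library functions. */
enum call_kind classify_call(const char *callee,
                             const char *const *declared, size_t n_declared,
                             const char *const *known_lib, size_t n_lib)
{
    if (in_list(callee, declared, n_declared))
        return CALL_INTERNAL;
    if (in_list(callee, known_lib, n_lib))
        return CALL_LIBRARY;
    return CALL_UNKNOWN;   /* external, but not in the known list */
}
```

With `findPrimeSpeed` and `main` as declared functions and `malloc`, `atoi`, `printf` and `memset` as known library functions, a call to `findPrimeSpeed` is internal and a call to `malloc` is a library call. A call to `third_party_fn` remains unknown. Checking declared functions first means a project that defines its own function with a library name has that call treated as internal.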

4.3.3 Number of Function Arguments

The number of arguments in the function prototype is another feature that can be extracted from source code as well as binaries in the majority of cases. Note, however, that the same does not hold for the full function prototype, since exact type recovery from executable binaries is still an open research problem without fully reliable results [52]. On the source side, parsing the source code easily yields the number of arguments for each defined function. On the binary side, this number can be derived from detailed analysis of the function’s stack frame and its input variables, combined with identification of the calling convention used to invoke the function. The HexRays Decompiler [6], for instance, uses similar techniques to derive the type and number of arguments of a binary function. While we have found the types not to be accurate enough for our purpose, the number of arguments as extracted from binaries is correct in the majority of cases according to our experiments. An exception is so-called “variadic” functions, such as the well-known “printf” function in the C standard library, which can accept a variable number of arguments depending on how they are invoked. However, variadic functions account for a very small fraction of all the functions defined in real-world scenarios (approximately 1% according to our observations), and they can be treated in a special way to prevent mismatches, as outlined in Section 5.1.5.
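The following small, hypothetical C example shows why variadic functions are an exception. The source-side prototype of `sum_ints` declares one named parameter. Each call site, however, may pass a different number of additional arguments, so the argument count inferred from a binary need not match the single declared parameter.

```c
#include <stdarg.h>

/* Variadic function: one named parameter (count) followed by `count`
 * additional int arguments supplied by the caller. */
int sum_ints(int count, ...)
{
    va_list ap;
    int total = 0;
    va_start(ap, count);
    for (int i = 0; i < count; i++)
        total += va_arg(ap, int);
    va_end(ap);
    return total;
}
```

For example, `sum_ints(0)` passes one argument, while `sum_ints(3, 1, 2, 3)` passes four arguments and returns 6. Both calls use the same one-parameter prototype.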

4.3.4 Complexity of Control Flow

Control flow can be considered a high-level representation of a function’s logic. As discussed in Section 2.1.4, the control flow of each function is represented through a CFG. Predicting the control flow structure of the compiled binary version of a source function without going through the compilation process is extremely unreliable and inaccurate, if not impossible. While the structure of the CFG is usually susceptible to compiler optimizations, we have found that its complexity remains far more consistent between source and binary versions. In other words, simple and complex source functions generally result in simple and complex binary functions, respectively, whether or not compiler optimizations are applied.

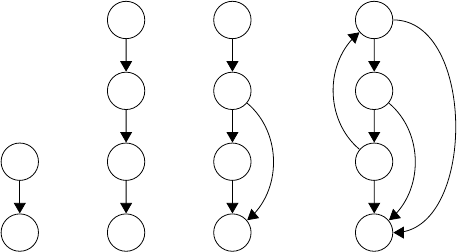

We use the number of linearly independent paths in a control flow graph to mea-

sure its complexity. This metric is referred to as the cyclomatic complexity, and

is similarly used in previous work [42] as a comparison metric for binary functions.

Cyclomatic complexity is denoted by C and defined as:

C = E − N + 2P

, where E is the number of edges, N is the number of nodes, and P is the number of

connected components of the CFG. In our use case, P = 1, as we are measuring the

complexity of the control flow structure of individual functions. As can be seen in

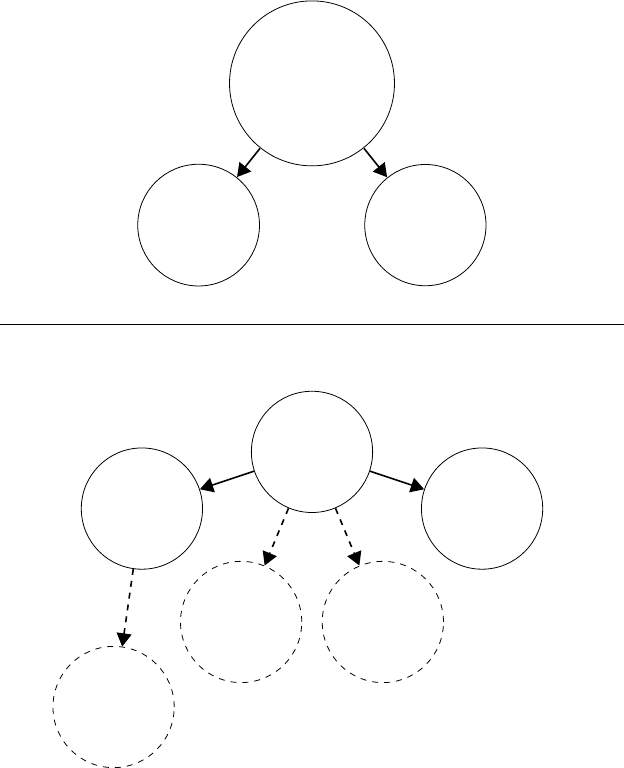

Figure 5, control structures such as branches and loops contribute to code complexity.
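The formula C = E − N + 2P can be computed directly from an edge list. The following C sketch is illustrative and not the tooling used in the thesis. It counts P with a union-find structure that ignores edge direction, which is our choice for generality. For a single function's CFG, P = 1, so C reduces to E − N + 2. The caller supplies scratch space for the union-find parent array.

```c
#include <assert.h>
#include <stddef.h>

/* Union-find "find" with path halving. */
static size_t find_root(size_t *parent, size_t x)
{
    while (parent[x] != x) {
        parent[x] = parent[parent[x]];
        x = parent[x];
    }
    return x;
}

/* Cyclomatic complexity C = E - N + 2P for a graph with n_nodes nodes
 * (numbered 0..n_nodes-1) and n_edges directed (from, to) edges.
 * P is the number of connected components, ignoring edge direction.
 * parent must point to scratch space for n_nodes elements. */
long cyclomatic_complexity(size_t n_nodes, const size_t (*edges)[2],
                           size_t n_edges, size_t *parent)
{
    assert(n_nodes > 0);
    for (size_t i = 0; i < n_nodes; i++)
        parent[i] = i;
    size_t components = n_nodes;
    for (size_t k = 0; k < n_edges; k++) {
        size_t a = find_root(parent, edges[k][0]);
        size_t b = find_root(parent, edges[k][1]);
        if (a != b) {            /* edge joins two components */
            parent[a] = b;
            components--;
        }
    }
    return (long)n_edges - (long)n_nodes + 2 * (long)components;
}
```

A straight-line CFG of three blocks (edges 0→1, 1→2) gives C = 2 − 3 + 2 = 1. An if/else diamond (edges 0→1, 0→2, 1→3, 2→3) gives C = 4 − 4 + 2 = 2, since the branch adds one independent path.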

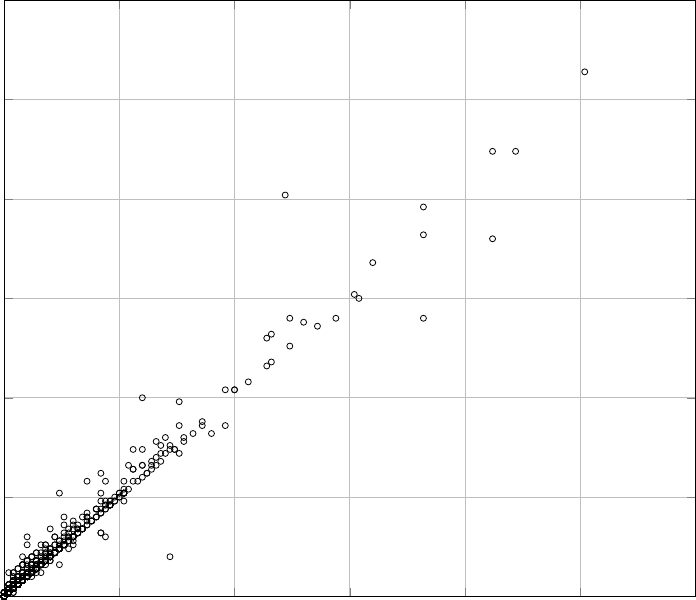

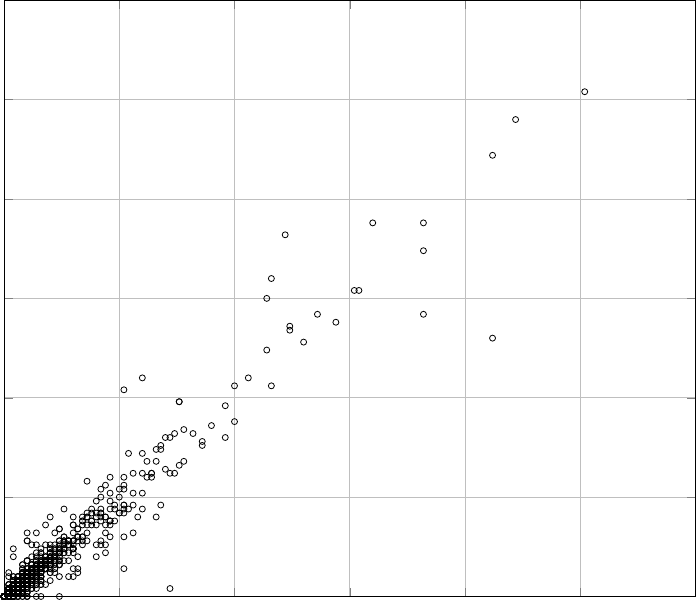

We have carried out an experimental study on the cyclomatic complexity of source

and binary versions of approximately 2000 random functions extracted from various

projects and compiled with different configurations. The results show that, in general,

compiler optimizations do not heavily alter the complexity of control flow.

Figures 6 and 7 show the correlation between the cyclomatic complexity of source and binary versions, in which each function is denoted by a dot in the scatter graph.

[Figure 5 panels: (a) C = 1; (b) C = 1; (c) C = 2; (d) C = 4]

Figure 5: Cyclomatic complexity of four different hypothetical CFGs. (c) includes a branch at the second basic block, and (d) includes a loop and a break statement at the second basic block.

Note that optimizations diversify the CFGs of functions to some extent, yet a fairly strong correlation between the complexity of source and binary functions remains. The empty space in the upper-left and lower-right portions of both graphs, caused by the concentration of most dots around the identity (x = y) line, shows that cyclomatic complexity can indeed be used effectively as an additional feature for comparing source and binary functions.

For a clearer presentation of the results, we have only included functions whose complexity falls below 150. For 7 functions, accounting for less than 0.4% of all cases, the complexity of both source and binary control flow graphs is above 150. Although they are not depicted in the figures, they follow the same pattern.

Among all samples in this study, the maximum difference between source and binary

complexities is 32% and belongs to a function with a source CFG complexity of 350.

[Figure 6 plot: x-axis Source cyclomatic complexity, y-axis Binary cyclomatic complexity]

Figure 6: Correlation between the cyclomatic complexity of various functions’ control flow in source and binary form, with compiler optimizations disabled.

Our study confirms that predicting the exact cyclomatic complexity of a binary CFG from the cyclomatic complexity of the source CFG, or vice versa, is not feasible. However, it also suggests that complexity can still serve as a comparison metric when multiple candidates for a binary function are found. Therefore, in cases where multiple candidate source functions are found for one binary function, we use this metric to reduce the number of false positives and to rank the results by their similarity to the binary function in terms of control flow complexity.
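A minimal sketch of this ranking step follows. It assumes candidates arrive as a mapping from source function name to source-level cyclomatic complexity and scores each candidate by the relative difference |C_src − C_bin| / max(C_src, C_bin). Both the scoring formula and the optional `max_rel_diff` cut-off are illustrative assumptions, not the exact criteria CodeBin uses, and the function names in the example are hypothetical.

```python
def rank_by_complexity(binary_cc, candidates, max_rel_diff=None):
    """Rank candidate source functions by closeness of cyclomatic complexity.

    binary_cc: cyclomatic complexity of the binary function's CFG.
    candidates: dict mapping source function name -> source CFG complexity.
    max_rel_diff: optional (hypothetical) threshold; candidates whose relative
        difference exceeds it are dropped as likely false positives.
    """
    def rel_diff(src_cc):
        # 0.0 means identical complexity; values approach 1.0 as they diverge.
        return abs(src_cc - binary_cc) / max(src_cc, binary_cc, 1)

    scored = sorted(candidates.items(), key=lambda item: rel_diff(item[1]))
    return [
        name for name, src_cc in scored
        if max_rel_diff is None or rel_diff(src_cc) <= max_rel_diff
    ]


# Hypothetical candidates that already share argument count and API calls.
candidates = {"parse_header": 40, "write_chunk": 12, "init_state": 10}
print(rank_by_complexity(12, candidates))                    # ['write_chunk', 'init_state', 'parse_header']
print(rank_by_complexity(12, candidates, max_rel_diff=0.5))  # ['write_chunk', 'init_state']
```

A relative rather than absolute difference is used here so that a gap of, say, 5 counts for more between two small functions than between two large ones; this reflects the observation that optimizations perturb complexity without changing its overall scale.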

4.3.5 Strings and Constants

String literals and constants are used in similar work such as RE-Google [50] to

match executable binaries with source code repositories. While these two features are

certainly usable for such a purpose and can sometimes be used to uniquely identify

portions of software projects, we have found them not to be reliable enough for function-level matching. For instance, although it is relatively easy to extract the string literals referenced by functions in the source code, we have found that accurately assigning strings to individual binary functions is rather difficult and does not result in reliable feature extraction. The same issue exists for constants.

[Figure 7 plot: x-axis Source cyclomatic complexity, y-axis Binary cyclomatic complexity]

Figure 7: Correlation between the cyclomatic complexity of various functions’ control flow in source and binary form, with compiler optimizations set to the default level (-O2).

As a result, we believe string literals and constants are better suited to narrowing down the list of candidate projects (rather than individual functions), which may help reduce the number of false positives when patterns used by CodeBin exist in more than one software project.
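As a rough illustration of using strings at the project level, the sketch below ranks candidate projects by the Jaccard similarity between the string literals recovered from a binary and those found in each project's source. Jaccard similarity is a stand-in chosen for this example and is not necessarily what RE-Google [50] or CodeBin uses; the project names and strings are hypothetical.

```python
def rank_projects_by_strings(binary_strings, project_strings):
    """Rank projects by Jaccard similarity of string-literal sets.

    binary_strings: iterable of strings extracted from the binary.
    project_strings: dict mapping project name -> iterable of string
        literals found in that project's source code.
    Returns (project, score) pairs, highest score first.
    """
    binary_set = set(binary_strings)
    scores = []
    for project, strings in project_strings.items():
        project_set = set(strings)
        union = binary_set | project_set
        score = len(binary_set & project_set) / len(union) if union else 0.0
        scores.append((project, score))
    return sorted(scores, key=lambda pair: pair[1], reverse=True)


ranking = rank_projects_by_strings(
    ["IHDR", "IDAT", "IEND", "usage: %s <file>"],
    {
        "project_a": ["IHDR", "IDAT", "IEND"],
        "project_b": ["incorrect header check"],
    },
)
print(ranking)  # [('project_a', 0.75), ('project_b', 0.0)]
```

Only the top-ranked projects would then need to be searched at the function level, which is where the per-function features take over.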

The combination of the features mentioned above is far more useful than any of them in isolation. For instance, a set of a few API calls may help narrow down the list of candidate source functions for a given binary function, but this technique alone does not identify many functions, as only a portion of functions include calls to system APIs or standard libraries. On the other hand, while the number of arguments is a feature applicable to nearly any function, the set of candidate source functions with a specific number of arguments is still too large to be analyzed manually. However, we show that a carefully designed combination of all these features is sufficient for detecting a large number of binary functions just by parsing and analyzing the source code.
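The sketch below shows one simple way such a combination could work: exact filtering on argument count and on the set of recognizable API calls, followed by ranking the survivors by closeness in cyclomatic complexity. It is a simplification under stated assumptions (exact equality of API-call sets, no special handling of variadic functions or inlined calls), not CodeBin's actual matching procedure. The annotations for `tdefl_output_buffer_putter`, `mz_crc32` and `tdefl_init` are taken from Figure 8 and Table 1; `hypothetical_grow` and the binary function's complexity of 7 are made up for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Features:
    num_args: int          # number of function arguments
    api_calls: frozenset   # recognizable library / system API calls
    complexity: int        # cyclomatic complexity of the CFG


def candidate_sources(binary_fn, source_fns):
    """Filter by argument count and API calls, then rank by complexity gap."""
    survivors = [
        name for name, feats in source_fns.items()
        if feats.num_args == binary_fn.num_args
        and feats.api_calls == binary_fn.api_calls
    ]
    return sorted(
        survivors,
        key=lambda name: abs(source_fns[name].complexity - binary_fn.complexity),
    )


source_fns = {
    "tdefl_output_buffer_putter": Features(3, frozenset({"realloc", "memcpy"}), 6),
    "mz_crc32": Features(3, frozenset(), 3),
    "tdefl_init": Features(4, frozenset({"memset"}), 2),
    "hypothetical_grow": Features(3, frozenset({"realloc", "memcpy"}), 9),
}
binary_fn = Features(3, frozenset({"realloc", "memcpy"}), 7)
print(candidate_sources(binary_fn, source_fns))
# ['tdefl_output_buffer_putter', 'hypothetical_grow']
```

Neither filter alone would have been decisive here: three source functions take three arguments, and two share the same API calls. Only the combination, followed by the complexity ranking, puts the intended match first.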

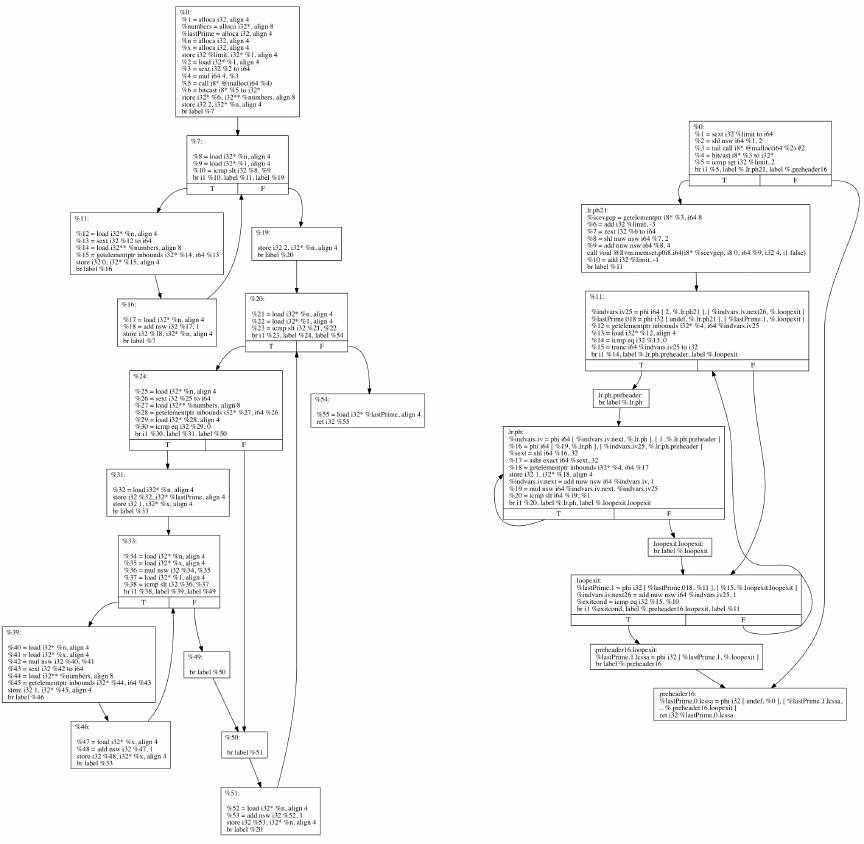

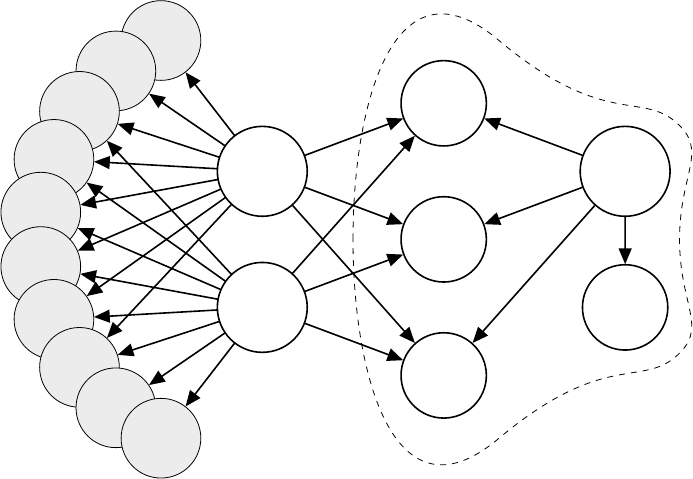

4.4 Annotated Call Graphs

We introduce and utilize the notion of annotated call graphs (ACGs): function call graphs in which functions are represented by nodes annotated with function properties. These properties are the features discussed previously, except for function calls, which are represented by the graph structure itself. Hence, an ACG is our model for integrating the features together, forming a view of a piece of source code that is later compared to an executable binary.

In an ACG, functions defined by the programmer, simply referred to as user

functions, are represented by nodes. A call from one user function to another user

function results in a directed edge from the caller to the callee. Library functions and

system APIs may be represented either as nodes or as node properties. If represented

by nodes, each called library function or system API will be a node with incoming

edges from the user functions that have called it. In this case, calls between library

functions may or may not be representable depending on whether the source code for

the library is available. If represented by properties, each node (user function) will

have a property that lists identifiers for each library function or system API called by

that function. As will be discussed later in Section 5.1.2, the decision whether to use

nodes or properties for representing library functions and system APIs is critical to

the effectiveness of our approach. For now, let us assume that we represent calls to system APIs and library functions as node properties, and that a node always represents a user function.
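The following Python sketch shows one possible in-memory representation of an ACG under this convention. User functions are nodes, calls between user functions are directed edges, and library and system API calls are stored as a node property. The class and method names are hypothetical. The example populates the outlined portion of Figure 8 (F calling C, D, E and G), using the argument counts, API calls and complexities given in Figure 8 and Table 1.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class AcgNode:
    name: str
    num_args: int
    api_calls: frozenset  # library/API calls kept as a property, not as nodes
    complexity: int


@dataclass
class AnnotatedCallGraph:
    nodes: dict = field(default_factory=dict)    # name -> AcgNode
    callees: dict = field(default_factory=dict)  # caller name -> set of callee names

    def add_function(self, node):
        self.nodes[node.name] = node
        self.callees.setdefault(node.name, set())

    def add_call(self, caller, callee):
        # Edges connect user functions only; both endpoints must already exist.
        if caller not in self.nodes or callee not in self.nodes:
            raise KeyError(f"unknown user function in call {caller} -> {callee}")
        self.callees[caller].add(callee)


acg = AnnotatedCallGraph()
for name, n_args, apis, cc in [
    ("tdefl_write_image_to_png_file_in_memory_ex", 7, {"malloc", "memset", "memcpy", "free"}, 12),  # F
    ("tdefl_init", 4, {"memset"}, 2),                              # C
    ("mz_crc32", 3, set(), 3),                                     # D
    ("tdefl_compress_buffer", 4, {"_wassert"}, 2),                 # E
    ("tdefl_output_buffer_putter", 3, {"realloc", "memcpy"}, 6),   # G
]:
    acg.add_function(AcgNode(name, n_args, frozenset(apis), cc))

for callee in ["tdefl_init", "mz_crc32", "tdefl_compress_buffer", "tdefl_output_buffer_putter"]:
    acg.add_call("tdefl_write_image_to_png_file_in_memory_ex", callee)

print(len(acg.callees["tdefl_write_image_to_png_file_in_memory_ex"]))  # 4
```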


f1

f2

f3

f4

f5

f6

f7

f8

f9

f10

N: 9

E: 4

C: 47

A

N: 6

E: 7

C: 42

B

N: 4

E: 1

C: 2

C

N: 3

E: 0

C: 3

D

N: 4

E: 1

C: 2

E

N: 7

E: 4

C: 12

F

N: 3

E: 2

C: 6

G

Figure 8: Sample partial annotated call graph from Miniz. N , E and C respectively denote

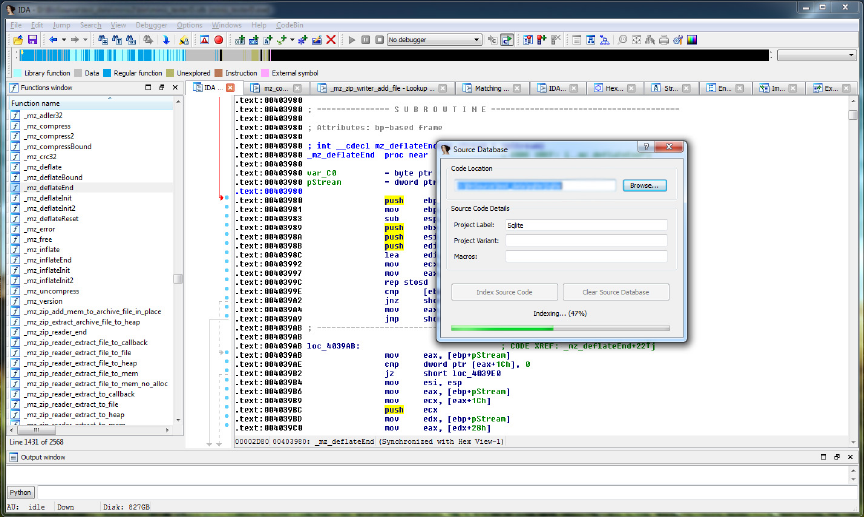

number of arguments, system API and library calls and cyclomatic complexity.

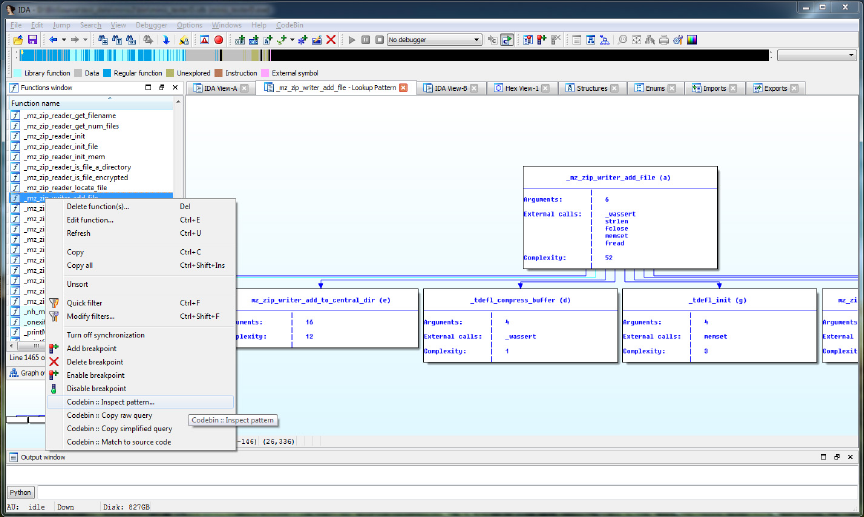

Figure 8 depicts a partial ACG consisting of 17 user functions extracted from Miniz, a relatively simple library that combines optimized compression/decompression and PNG encoding/decoding functionalities; its complete ACG consists of 127 nodes and 159 edges. We will use this sample partial ACG to explain the advantages and effectiveness of our approach. Table 1 lists the actual names of the highlighted nodes in Figure 8 for the reader's reference.

Each node in the graph is annotated with the features extracted from its respec-

tive source function, including the number of function arguments, calls to known

standard library functions and system APIs, and the cyclomatic complexity of its

control flow graph. Hence, an ACG can be considered a good high-level representa-

tion of a software project, combining all its “interesting” characteristics discussed in

Section 4.3.

The fundamental idea of our approach to binary to source matching is the following observation: the overall call graph of a piece of software, when augmented by the features discussed before, is fairly unique and generally survives compilation. In many cases, even a small portion of the call graph exhibits unique features.

Table 1: Complementary information on Figure 8

| Alias | Real function name | Library and API calls |
|---|---|---|
| A | `mz_zip_writer_add_mem_ex` | `strlen`, `memset`, `time`, `_wassert` |
| B | `mz_zip_writer_add_file` | `memset`, `strlen`, `fclose`, `fread`, `_wassert`, `_ftelli64`, `_fseeki64` |
| C | `tdefl_init` | `memset` |
| D | `mz_crc32` | |
| E | `tdefl_compress_buffer` | `_wassert` |
| F | `tdefl_write_image_to_png_file_in_memory_ex` | `malloc`, `memset`, `memcpy`, `free` |
| G | `tdefl_output_buffer_putter` | `realloc`, `memcpy` |

The outlined portion of the ACG in Figure 8 consists of one function, F, calling four other functions, C, D, E and G. When the number of arguments and the names of recognizable library and system API calls of each of those functions are also taken into account, we have found the pattern to be unique among the call graphs of 30 different open source projects, consisting of over 500,000 functions. This means that if the same pattern is extracted from a binary call graph, it can be searched for across many different projects and identified uniquely and correctly.
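This search can be sketched as a star-pattern query: find a source function whose annotation matches the root of the pattern and whose callees can be matched, one-to-one, to the annotated callees of the pattern. In this minimal sketch, which is an assumption for illustration rather than CodeBin's query mechanism, an annotation is the pair (number of arguments, set of library/API calls), matching requires exact equality, and a small backtracking search enforces the one-to-one assignment. The annotations come from Figure 8 and Table 1; `other_writer` is a hypothetical decoy with the same annotation as F but a different call pattern.

```python
def sig(num_args, api_calls):
    """Annotation used for matching: argument count plus library/API calls."""
    return (num_args, frozenset(api_calls))


def _assign(pattern_sigs, callees, nodes, used):
    # Backtracking: give each pattern callee a distinct matching source callee.
    if not pattern_sigs:
        return True
    first, rest = pattern_sigs[0], pattern_sigs[1:]
    for c in callees:
        if c not in used and nodes[c] == first:
            used.add(c)
            if _assign(rest, callees, nodes, used):
                return True
            used.discard(c)
    return False


def match_star(nodes, callees, root_sig, callee_sigs):
    """Return source functions matching root_sig whose callees cover callee_sigs."""
    return [
        name for name, s in nodes.items()
        if s == root_sig
        and _assign(list(callee_sigs), sorted(callees.get(name, ())), nodes, set())
    ]


F, C, D, E, G = (sig(7, {"malloc", "memset", "memcpy", "free"}), sig(4, {"memset"}),
                 sig(3, set()), sig(4, {"_wassert"}), sig(3, {"realloc", "memcpy"}))
nodes = {
    "tdefl_write_image_to_png_file_in_memory_ex": F, "other_writer": F,
    "tdefl_init": C, "mz_crc32": D, "tdefl_compress_buffer": E,
    "tdefl_output_buffer_putter": G,
}
callees = {
    "tdefl_write_image_to_png_file_in_memory_ex": {
        "tdefl_init", "mz_crc32", "tdefl_compress_buffer", "tdefl_output_buffer_putter"},
    "other_writer": {"tdefl_init", "mz_crc32"},
}
print(match_star(nodes, callees, F, [C, D, E, G]))
# ['tdefl_write_image_to_png_file_in_memory_ex']
```

The decoy shows why the callee annotations matter: on its own annotation, `other_writer` is indistinguishable from F, but it fails to cover the full pattern.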

Although the case highlighted in the paragraph above is a best-case scenario, it is important to note that it can be leveraged to identify many more functions. For instance, once C, D and E are uniquely identified, this fact can be used effectively to identify A and B as well. The same idea can be applied once again to identify the functions denoted by f1 to f10, if their own properties differ sufficiently. Therefore, not all functions need to have highly distinctive features or call graph patterns in order to be identifiable. This is in contrast to the approaches adopted by previous work on binary to source matching, which can detect reuse only to the extent that unique and identifiable tokens exist in both binaries and source code.
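The propagation idea can be sketched as a fixpoint loop that extends a partial binary-to-source mapping. This is an illustrative assumption, not CodeBin's exact algorithm: an unmatched binary function is assigned only when exactly one unused source function has the same annotation and calls the already identified counterparts of all its identified callees. All function names and annotations in the example are hypothetical; source functions `p` and `q` have identical annotations and can only be told apart through the functions they call.

```python
def propagate(bin_nodes, bin_callees, src_nodes, src_callees, matched):
    """Extend a partial binary->source mapping using identified callees.

    bin_nodes / src_nodes: function id -> annotation (any hashable value).
    bin_callees / src_callees: function id -> set of callee ids.
    matched: initial mapping, e.g. from uniquely matched star patterns.
    """
    mapping = dict(matched)
    changed = True
    while changed:  # repeat until a full pass identifies nothing new
        changed = False
        used = set(mapping.values())
        for b, annotation in bin_nodes.items():
            if b in mapping:
                continue
            known = [mapping[c] for c in bin_callees.get(b, ()) if c in mapping]
            if not known:
                continue  # no identified callee to anchor on yet
            candidates = [
                s for s, s_ann in src_nodes.items()
                if s not in used and s_ann == annotation
                and all(k in src_callees.get(s, ()) for k in known)
            ]
            if len(candidates) == 1:  # accept only unambiguous matches
                mapping[b] = candidates[0]
                used.add(candidates[0])
                changed = True
    return mapping


X, Y, Z, W = (2, frozenset({"memcpy"})), (1, frozenset()), (3, frozenset({"free"})), (0, frozenset())
src_nodes = {"p": X, "q": X, "r": W, "c": Y, "d": Z}
src_callees = {"p": {"c"}, "q": {"d"}, "r": {"p"}}
bin_nodes = {"sub_30": W, "sub_10": X, "sub_20": X, "sub_1": Y, "sub_2": Z}
bin_callees = {"sub_10": {"sub_1"}, "sub_20": {"sub_2"}, "sub_30": {"sub_10"}}
print(propagate(bin_nodes, bin_callees, src_nodes, src_callees, {"sub_1": "c", "sub_2": "d"}))
# {'sub_1': 'c', 'sub_2': 'd', 'sub_10': 'p', 'sub_20': 'q', 'sub_30': 'r'}
```

In the example, `sub_30` is identified only in the second pass, after `sub_10` has been mapped, mirroring how identification can spread from C, D and E to A and B and then further to f1 to f10.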


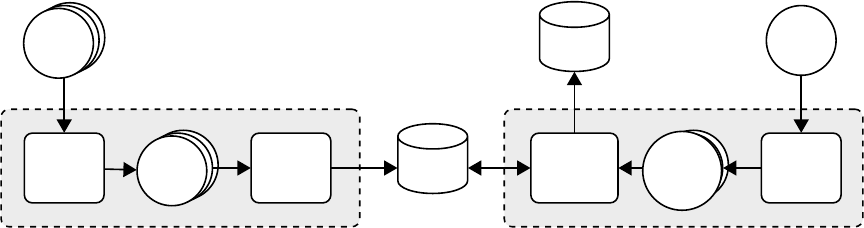

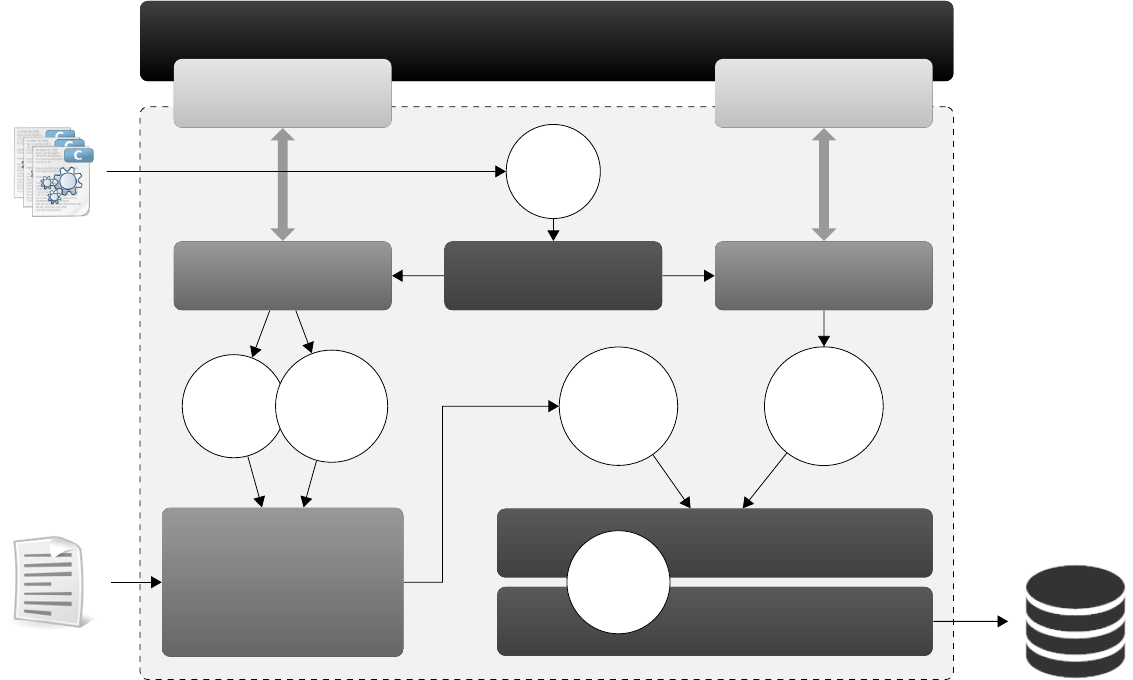

[Figure 9 diagram, split into Source Processor and Binary Processor parts, with components: Code Bases, Source Processor, ACG Indexer, Source ACGs, Graph Database, Binary File, Binary Processor, Graph Query Generator, Binary ACG Patterns, Matching Results]

Figure 9: Overall design of CodeBin

4.5 Using ACG Patterns as Search Queries