Wim Vanderbauwhede

Jeremy Singer

Operating Systems

Foundations

with Linux on the Raspberry Pi

TEXTBOOK

Operating Systems

Foundations

with Linux on the Raspberry Pi

Wim Vanderbauwhede

Jeremy Singer

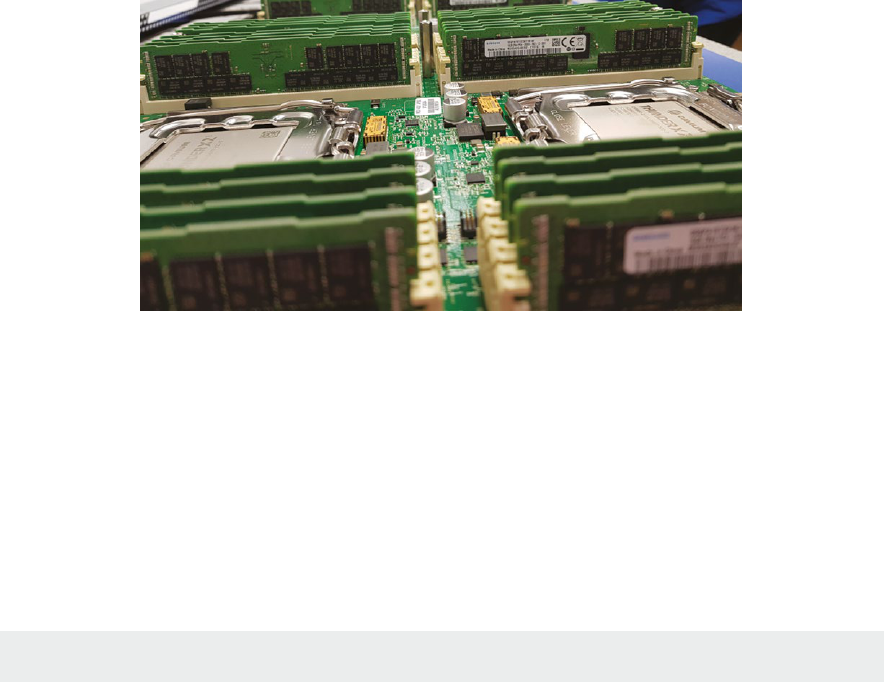

Operating Systems

Foundations

with Linux on the Raspberry Pi

TEXTBOOK

Arm Educaon Media is an imprint of Arm Limited, 110 Fulbourn Road, Cambridge, CBI 9NJ, UK

Copyright © 2019 Arm Limited (or its aliates). All rights reserved.

No part of this publicaon may be reproduced or transmied in any form or by any means, electronic

or mechanical, including photocopying, recording or any other informaon storage and retrieval

system, without permission in wring from the publisher, except under the following condions:

Permissions

You may download this book in PDF format for personal, non-commercial use only.

You may

reprint or republish portions of the text for non-commercial, educational or research

purposes but only if there is an attribution to Arm Education.

This book and the individual contributions contained in it are protected under copyright by the

Publisher (other than as may be noted herein). Nothing in this license grants you any right to modify

the whole, or portions of, this book.

Notices

Knowledge and best practice in this field are constantly changing. As new research and experience

broaden our understanding, changes in research methods and professional practices may become

necessary.

Readers must always rely on their own experience and knowledge in evaluating and using any

information, methods, project work, or experiments described herein. In using such information or

methods, they should be mindful of their safety and the safety of others, including parties for whom

they have a professional responsibility.

To the fullest extent permitted by law, the publisher and the authors, contributors, and editors shall

not have any responsibility or liability for any losses, liabilities, claims, damages, costs or expenses

resulting from or suffered in connection with the use of the information and materials set out in this

textbook.

Such information and materials are protected by intellectual property rights around the world and are

copyright © Arm Limited (or its affiliates). All rights are reserved. Any source code, models or other

materials set out in this textbook should only be used for non-commercial, educational purposes (and/or

subject to the terms of any license that is specified or otherwise provided by Arm). In no event shall

purchasing this textbook be construed as granting a license to use any other Arm technology or know-how.

ISBN: 978-1-911531-21-0

Version: 1.0.0 – PDF

For information on all Arm Education Media publications, visit our website at www.armedumedia.com

To report errors or send feedback please email [email protected]

Foreword

xviii

Disclaimer

xix

Preface

xx

About the Authors

xxiv

Acknowledgments

xxv

1. A Memory-centric system model

1.1 Overview

2

1.2 Modeling the system

2

1.2.1 The simplest possible model

2

1.2.2 What is this ‘‘system state’’?

3

1.2.3 Rening non-processor acons

4

1.2.4 Interrupt requests

4

1.2.5 An important peripheral: the mer

5

1.3 Bare-bones processor model

5

1.3.1 What does the processor do?

5

1.3.2 Processor internal state: registers

6

1.3.3 Processor instrucons

7

1.3.4 Assembly language

8

1.3.5 Arithmec logic unit

8

1.3.6 Instrucon cycle

8

1.3.7 Bare bones processor model

10

1.4 Advanced processor model

11

1.4.1 Stack support

11

1.4.2 Subroune calls

12

1.4.3 Interrupt handling

13

1.4.4 Direct memory access

13

1.4.5 Complete cycle-based processor model

14

1.4.6 Caching

15

1.4.7 Running a program on the processor

18

1.4.8 High-level instrucons

19

1.5 Basic operang system concepts

20

1.5.1 Tasks and concurrency

20

1.5.2 The register le

21

1.5.3 Time slicing and scheduling

21

1.5.4 Privileges

23

Contents

vi

1.5.5 Memory management

23

1.5.6 Translaon look-aside buer (TLB)

24

1.6 Exercises and quesons

25

1.6.1 Task scheduling

25

1.6.2 TLB model

25

1.6.3 Modeling the system

25

1.6.4 Bare-bones processor model

25

1.6.5 Advanced processor model

25

1.6.6 Basic operang system concepts

25

2. A Praccal view of the Linux System

2.1 Overview

30

2.2 Basic concepts

30

2.2.1 Operang system hierarchy

31

2.2.2 Processes

31

2.2.3 User space and kernel space

32

2.2.4 Device tree and ATAGs

32

2.2.5 Files and persistent storage

32

Paron

32

File system

33

2.2.6 ‘Everything is a le’

33

2.2.7 Users

34

2.2.8 Credenals

34

2.2.9 Privileges and user administraon

35

2.3 Boong Linux on the Arm (Raspberry Pi 3)

36

2.3.1 Boot process stage 1: Find the bootloader

36

2.3.2 Boot process stage 2: Enable the SDRAM

36

2.3.3 Boot process stage 3: Load the Linux kernel into memory

37

2.3.4 Boot process stage 4: Start the Linux kernel

37

2.3.4 Boot process stage 5: Run the processor-independent kernel code

37

2.3.5 Inializaon

37

2.3.6 Login

38

2.4 Kernel administraon and programming

38

2.4.1 Loadable kernel modules and device drivers

38

2.4.2 Anatomy of a Linux kernel module

39

2.4.3 Building a custom kernel module

41

2.4.4 Building a custom kernel

42

Contents

vii

2.5 Kernel administraon and programming

42

2.5.1 Process management

42

2.5.2 Process scheduling

43

2.5.3 Memory management

43

2.5.4 Concurrency and parallelism

43

2.5.5 Input/output

43

2.5.6 Persistent storage

43

2.5.7 Networking

44

2.6 Summary

44

2.7 Exercises and quesons

44

2.7.1 Installing Raspbian on the Raspberry Pi 3

44

2.7.2 Seng up SSH under Raspbian

44

2.7.3 Wring a kernel module

44

2.7.4 Boong Linux on the Raspberry Pi

45

2.7.5 Inializaon

45

2.7.6 Login

45

2.7.7 Administraon

45

3. Hardware architecture

3.1 Overview

49

3.2 Arm hardware architecture

49

3.3 Arm Cortex M0+

50

3.3.1 Interrupt control

51

3.3.2 Instrucon set

51

3.3.3 System mer

52

3.3.4 Processor mode and privileges

52

3.3.5 Memory protecon

52

3.4 Arm Cortex A53

53

3.4.1 Interrupt control

53

3.4.2 Instrucon set

54

Floang-point and SIMD support

55

3.4.3 System mer

56

3.4.4 Processor mode and privileges

56

3.4.5 Memory management unit

57

Translaon look-aside buer

58

Addional caches

59

viii

Contents

3.4.6 Memory system

59

L1 Cache

59

L2 Cache

60

Data cache coherency

61

3.5 Address map

61

3.6 Direct memory access

63

3.7 Summary

64

3.8 Exercises and quesons

64

3.8.1 Bare-bones programming

64

3.8.2 Arm hardware architecture

65

3.8.3 Arm Cortex M0+

65

3.8.4 Arm Cortex A53

65

3.8.5 Address map

65

3.8.6 Direct memory access

65

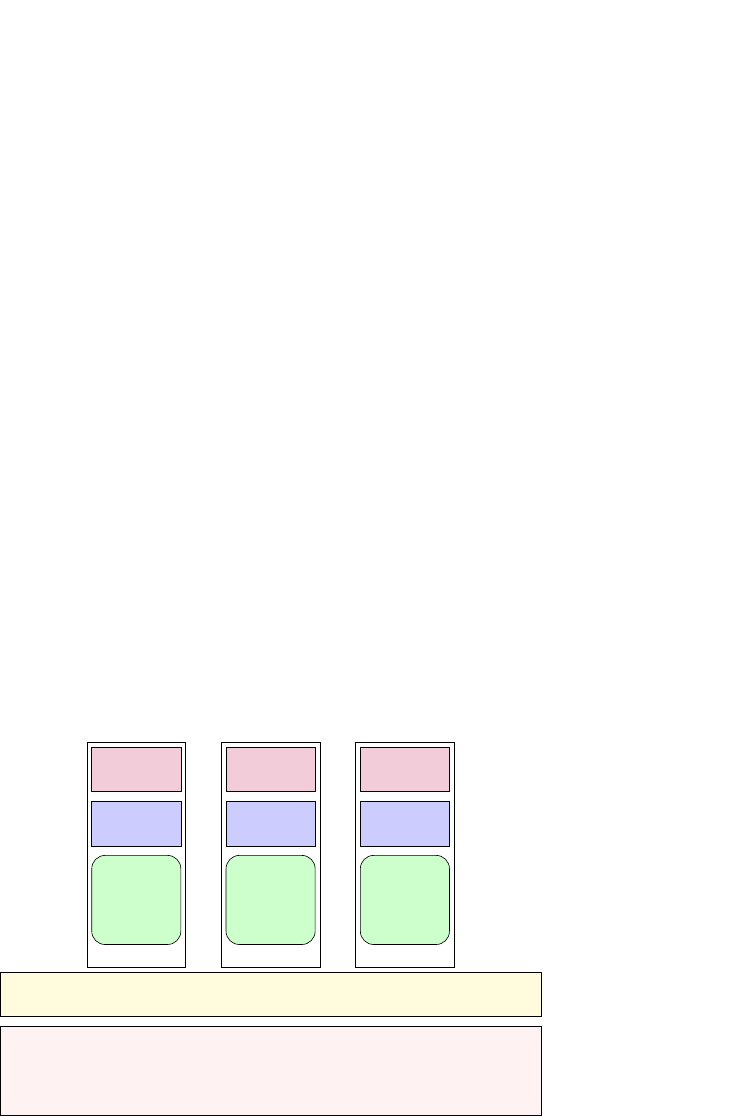

4. Process management

4.1 Overview

70

4.2 The process abstracon

70

4.2.1 Discovering processes

71

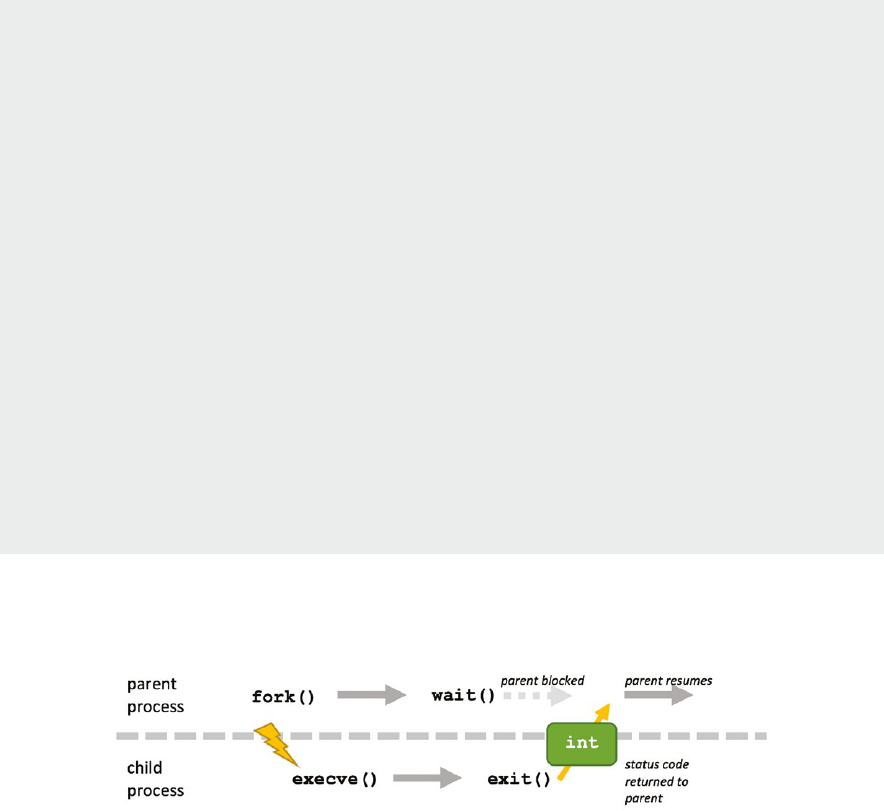

4.2.2 Launching a new process

71

4.2.3 Doing something dierent

73

4.2.4 Ending a process

73

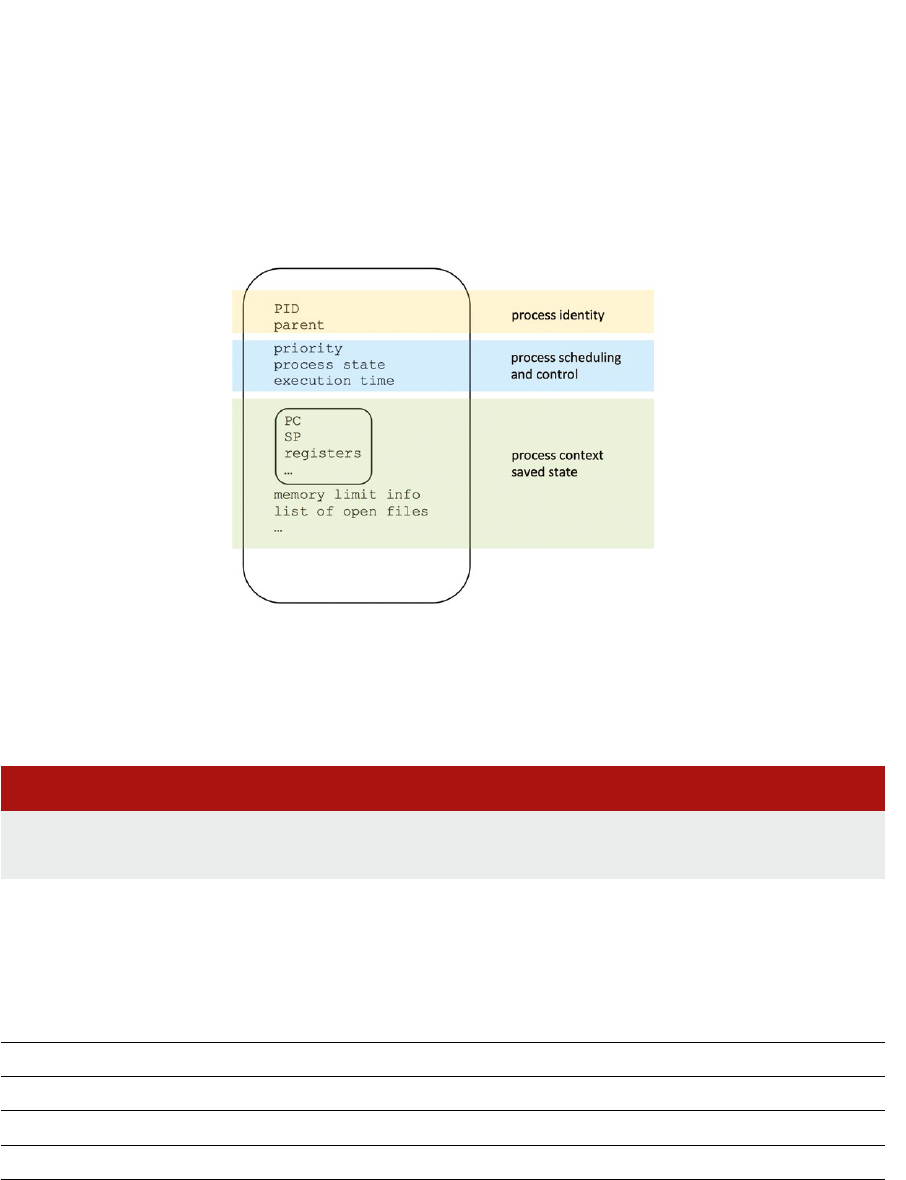

4.3 Process metadata

74

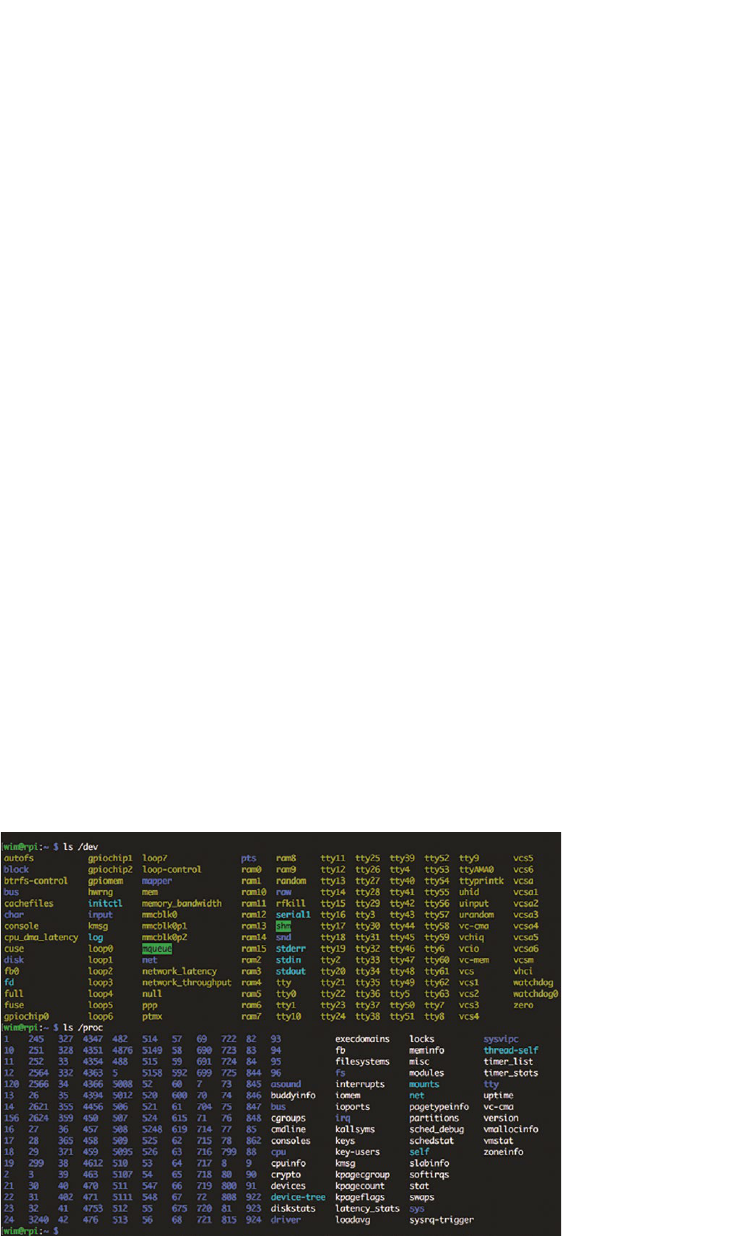

4.3.1 The /proc le system

75

4.3.2 Linux kernel data structures

76

4.3.3 Process hierarchies

77

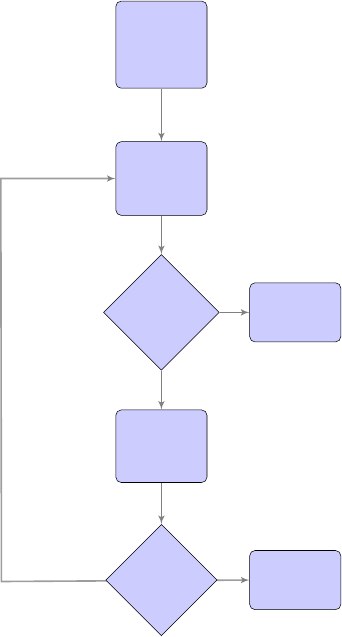

4.4 Process state transions

79

4.5 Context switch

81

4.6 Signal communicaons

83

4.6.1 Sending signals

83

4.6.2 Handling signals

84

4.7 Summary

85

4.8 Further reading

85

4.9 Exercises and quesons

85

4.9.1 Mulple choice quiz

85

4.9.2 Metadata mix

86

Contents

ix

4.9.3 Russian doll project

86

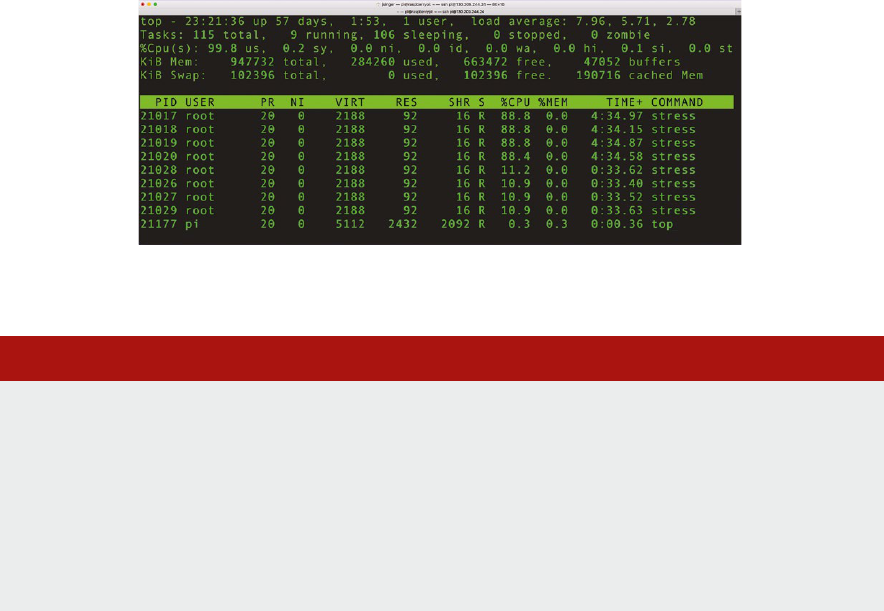

4.9.4 Process overload

86

4.9.5 Signal frequency

86

4.9.6 Illegal instrucons

86

5. Process scheduling

5.1 Overview

90

5.2 Scheduling overview: what, why, how?

90

5.2.1 Denion

90

5.2.2 Scheduling for responsiveness

90

5.2.3 Scheduling for performance

91

5.2.4 Scheduling policies

91

5.3 Recap: the process lifecycle

91

5.4 System calls

93

5.4.1 The Linux syscall(2) funcon

94

5.4.2 The implicaons of the system call mechanism

95

5.5 Scheduling principles

95

5.5.1 Preempve versus non-preempve scheduling

96

5.5.2 Scheduling policies

96

5.5.3 Task aributes

96

5.6 Scheduling criteria

96

5.7 Scheduling policies

97

5.7.1 First-come, rst-served (FCFS)

97

5.7.2 Round-robin (RR)

98

5.7.3 Priority-driven scheduling

98

5.7.4 Shortest job rst (SJF) and shortest remaining me rst (SRTF)

99

5.7.5 Shortest elapsed me rst (SETF)

100

5.7.6 Priority scheduling

100

5.7.7 Real-me scheduling

100

5.7.8 Earliest deadline rst (EDF)

101

5.8 Scheduling in the Linux kernel

101

5.8.1 User priories: niceness

102

5.8.2 Scheduling informaon in the task control block (TCB)

102

5.8.3 Process priories in the Linux kernel

104

Priority info in task_struct

105

Priority and load weight

106

x

Contents

5.8.4 Normal scheduling policies: the completely fair scheduler (CFS)

107

5.8.5 So real-me scheduling policies

110

5.8.6 Hard real-me scheduling policy

112

Time budget allocaon

113

5.8.7 Kernel preempon models

115

5.8.8 The red-black tree in the Linux kernel

116

Creang a new rbtree

116

Searching for a value in a rbtree

117

Inserng data into a rbtree

117

Removing or replacing exisng data in a rbtree

118

Iterang through the elements stored in a rbtree (in sort order)

118

Cached rbtrees

119

5.8.9 Linux scheduling commands and API

119

Normal processes

119

Real-me processes

120

5.9 Summary

120

5.10 Exercises and quesons

120

5.10.1 Wring a scheduler

120

5.10.2 Scheduling

120

5.10.3 System calls

121

5.10.4 Scheduling policies

121

5.10.5 The Linux scheduler

121

6. Memory management

6.1 Overview

126

6.2 Physical memory

126

6.3 Virtual memory

127

6.3.1 Conceptual view of memory

127

6.3.2 Virtual addressing

128

6.3.3 Paging

129

6.4 Page tables

130

6.4.1 Page table structure

130

6.4.2 Linux page tables on Arm

132

6.4.3 Page metadata

134

6.4.4 Faster translaon

136

6.4.5 Architectural details

137

Contents

xi

6.5 Managing memory over-commitment

138

6.5.1 Swapping

138

6.5.2 Handling page faults

138

6.5.3 Working set size

141

6.5.4 In-memory caches

142

6.5.5 Page replacement policies

142

Random

143

Not recently used (NRU)

143

Clock

143

Least recently used

144

Tuning the system

144

6.5.6 Demand paging

145

6.5.7 Copy on Write (CoW)

146

6.5.8 Out of memory killer

147

6.6 Process view of memory

148

6.7 Advanced topics

149

6.8 Further reading

151

6.9 Exercises and quesons

151

6.9.1 How much memory?

151

6.9.2 Hypothecal address space

152

6.9.3 Custom memory protecon

152

6.9.4 Inverted page tables

152

6.9.5 How much memory?

153

6.9.6 Tiny virtual address space

153

6.9.7 Denions quiz

153

7. Concurrency and parallelism

7.1 Overview

158

7.2 Concurrency and parallelism: denions

158

7.2.1 What is concurrency?

158

7.2.2 What is parallelism?

158

7.2.3 Programming model view

158

7.3 Concurrency

159

7.3.1 What are the issues with concurrency?

159

Shared resources

159

Exchange of informaon

160

xii

Contents

7.3.2 Concurrency terminology

161

Crical secon

161

Synchronizaon

161

Deadlock

161

7.3.3 Synchronizaon primives

163

7.3.4 Arm hardware support for synchronizaon primives

163

Exclusive operaons and monitors

163

Shareability domains

164

7.3.5 Linux kernel synchronizaon primives

165

Atomic primives

165

Memory operaon ordering

169

Memory barriers

170

Spin locks

173

Futexes

174

Kernel mutexes

174

Semaphores

175

7.3.6 POSIX synchronizaon primives

177

Mutexes

178

Semaphores

178

Spin locks

179

Condion variables

179

7.4 Parallelism

181

7.4.1 What are the challenges with parallelism?

181

7.4.2 Arm hardware support for parallelism

182

7.4.3 Linux kernel support for parallelism

183

SMP boot process

183

Load balancing

183

Processor anity control

184

7.5 Data-parallel and task-parallel programming models

184

7.5.1 Data parallel programming

184

Full data parallelism: map

184

Reducon

185

Associavity

185

Binary tree-based parallel reducon

185

7.5.2 Task parallel programming

186

Contents

xiii

7.6 Praccal parallel programming frameworks

186

7.6.1 POSIX Threads (pthreads)

186

7.6.2 OpenMP

189

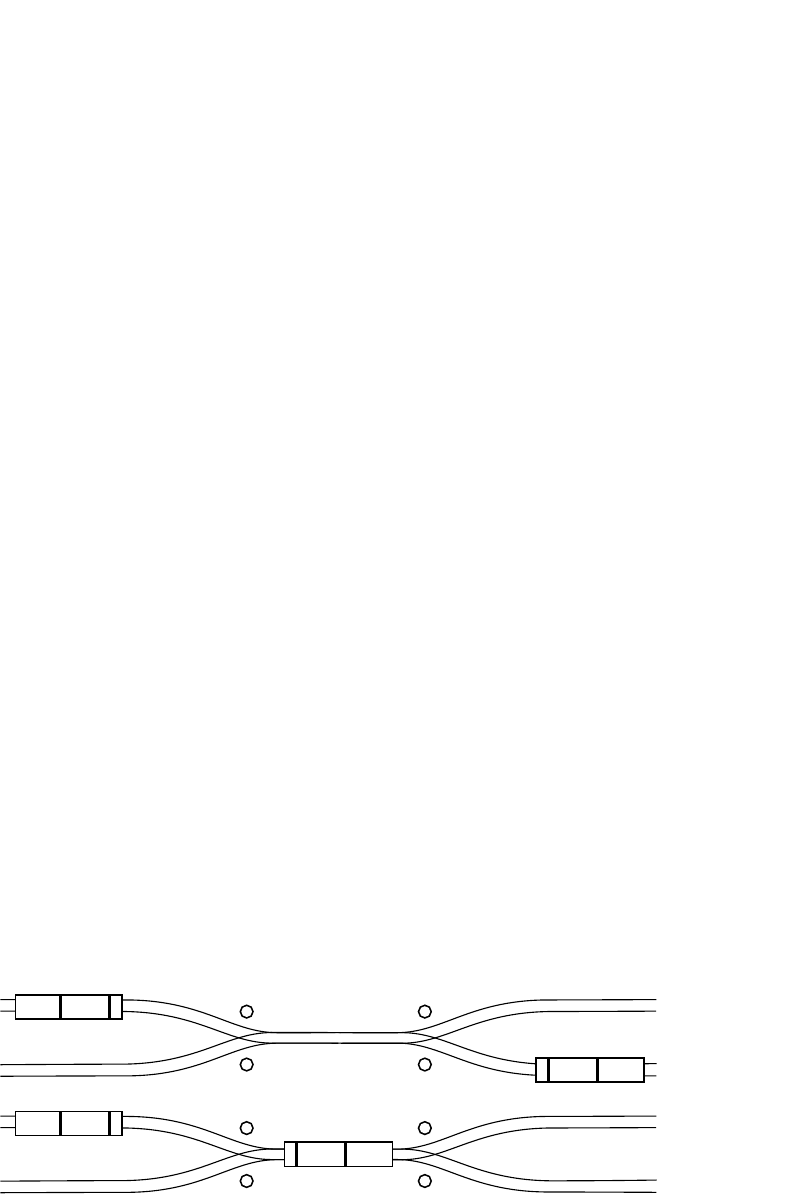

7.6.3 Message passing interface (MPI)

190

7.6.4 OpenCL

191

7.6.5 Intel threading building blocks (TBB)

194

7.6.6 MapReduce

195

7.7 Summary

195

7.8 Exercises and quesons

195

7.8.1 Concurrency: synchronizaon of tasks

196

7.8.1 Parallelism

196

8. Input/output

8.1 Overview

202

8.2 The device zoo

202

8.2.1 Inspect your devices

203

8.2.2 Device classes

203

8.2.3 Trivial device driver

204

8.3 Connecng devices

206

8.3.1 Bus architecture

206

8.4 Communicang with devices

207

8.4.1 Device abstracons

207

8.4.2 Blocking versus non-blocking IO

207

8.4.3 Managing IO interacons

208

Polling

209

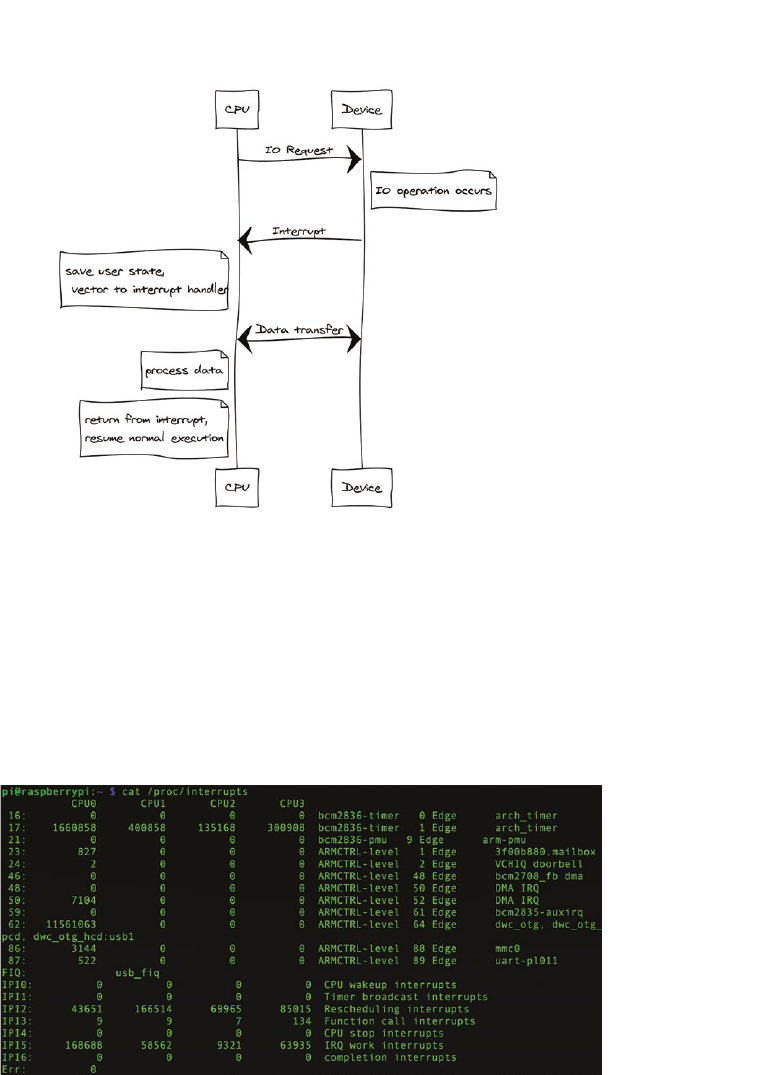

Interrupts

209

Direct memory access

210

8.5 Interrupt handlers

210

8.5.1 Specic interrupt handling details

211

8.5.2 Install an interrupt handler

212

8.6 Ecient IO

213

8.7 Further reading

213

8.8 Exercises and quesons

213

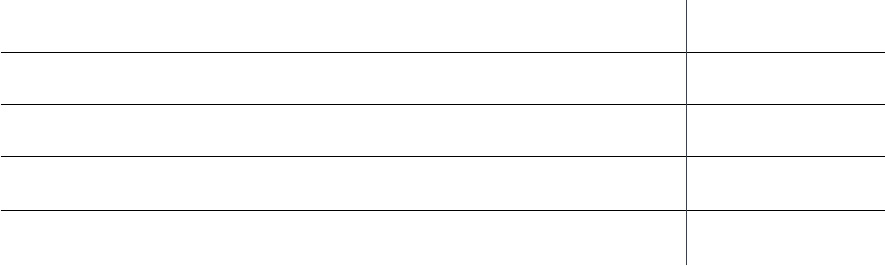

8.8.1 How many interrupts?

213

8.8.2 Comparave complexity

214

8.8.3 Roll your own Interrupt Handler

214

8.8.4 Morse Code LED Device

214

xiv

Contents

9. Persistent storage

9.1 Overview

218

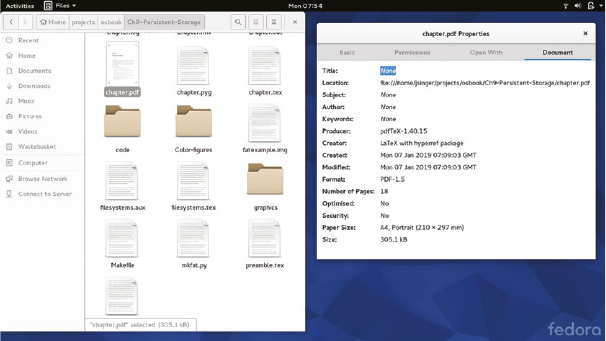

9.2 User perspecve on the le system

218

9.2.1 What is a le?

218

9.2.2 How are mulple les organized?

219

9.3 Operaons on les

221

9.4 Operaons on directories

222

9.5 Keeping track of open les

222

9.6 Concurrent access to les

223

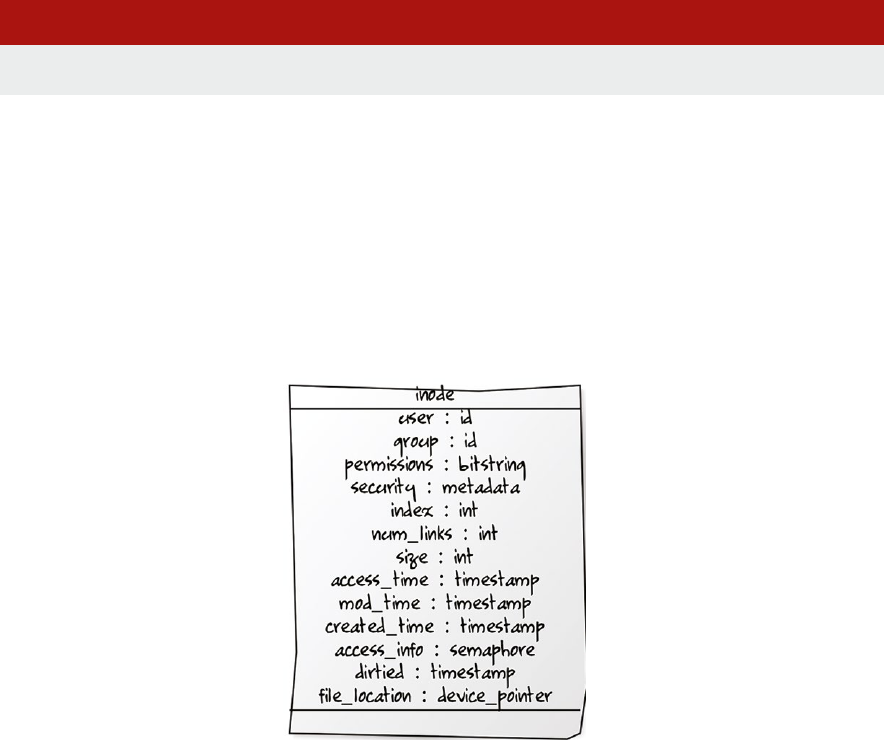

9.7 File metadata

225

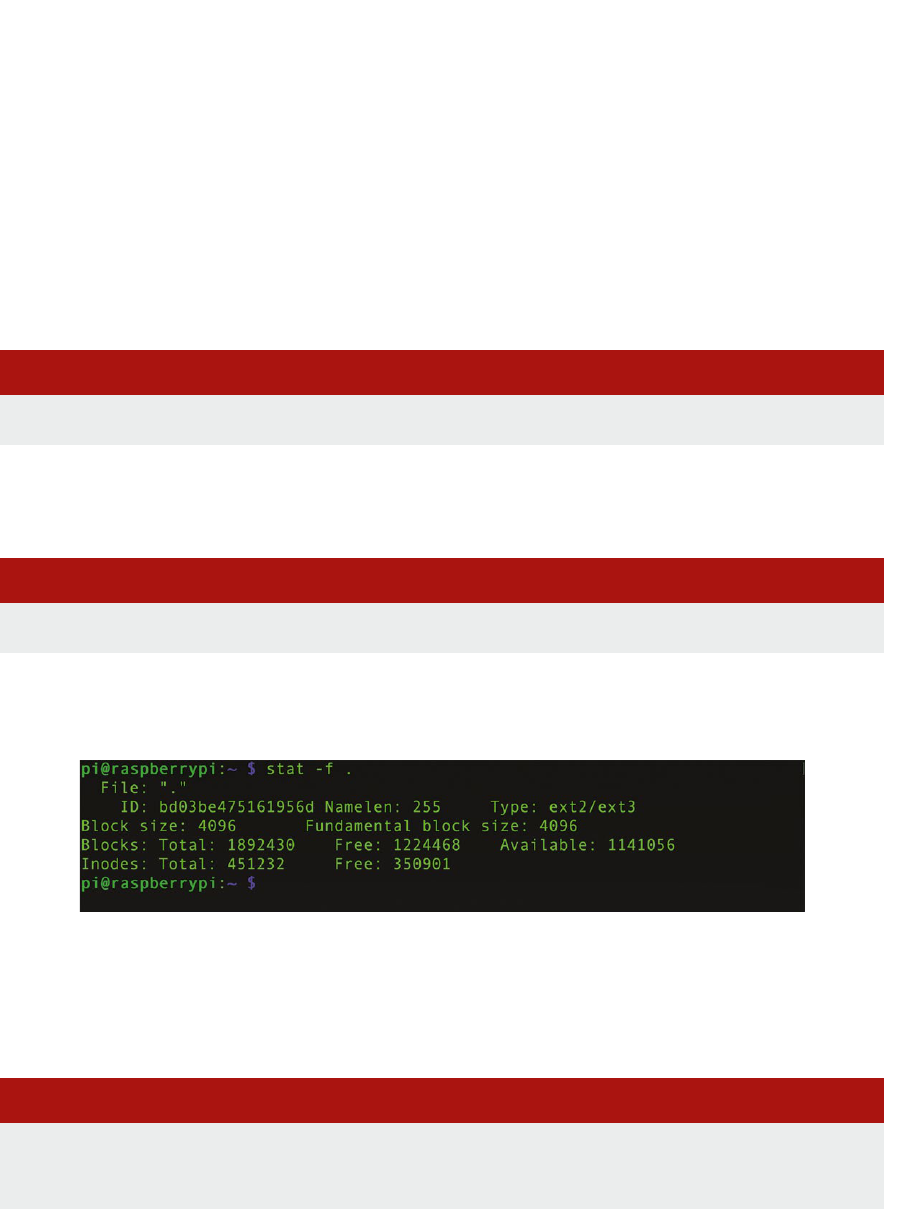

9.8 Block-structured storage

226

9.9 Construcng a logical le system

228

9.9.1 Virtual le system

228

9.10 Inodes

230

9.10.1 Mulple links, single inode

231

9.10.2 Directories

231

9.11 ext4

233

9.11.1 Layout on disk

233

9.11.2 Indexing data blocks

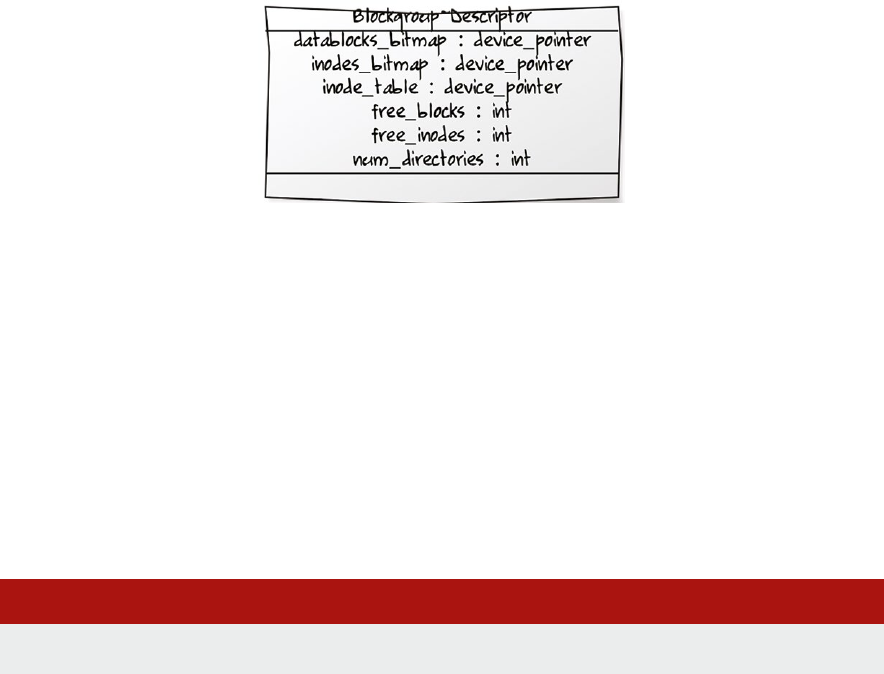

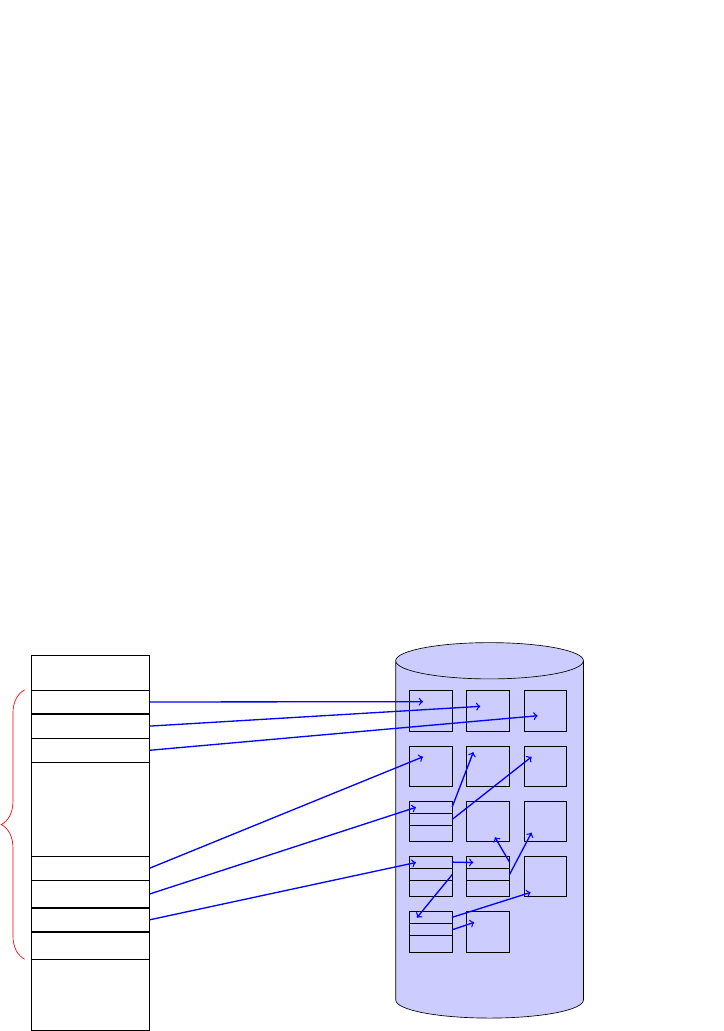

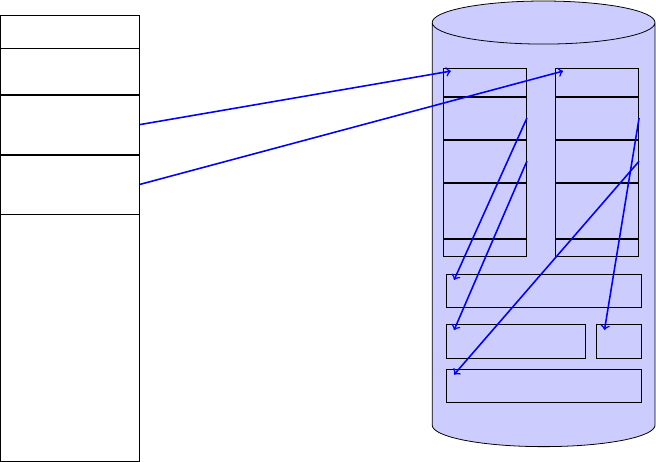

224

9.11.3 Mulple links, single inode

237

9.11.4 Checksumming

237

9.11.5 Encrypon

237

9.12 FAT

238

9.12.1 Advantages of FAT

239

9.12.2 Construct a mini le system using FAT

240

9.13 Latency reducon techniques

242

9.14 Fixing up broken le systems

243

9.15 Advanced topics

243

9.16 Further reading

245

9.17 Exercises and quesons

245

9.17.1 Hybrid conguous and linked le system

245

9.17.2 Extra FAT le pointers

245

9.17.3 Expected le size

245

9.17.4 Ext4 extents

245

9.17.5 Access mes

245

9.17.6 Database decisions

246

Contents

xv

10. Networking

10.1 Overview

250

10.2 What is networking

250

10.3 Why is networking part of the kernel?

250

10.4 The OSI layer model

251

10.5 The Linux networking stack

252

10.5.1 Device drivers

253

10.5.2 Device-agnosc interface

253

10.5.3 Network protocols

253

10.5.4 Protocol-agnosc interface

253

10.5.5 System call interface

254

10.5.6 Socket buers

254

10.6 The POSIX standard socket interface library

255

10.6.1 Stream socket (TCP) communicaons ow

255

10.6.2 Common internet data types

256

Socket address data type: struct sockaddr

256

Internet socket address data type: struct sockaddr_in

257

10.6.3 Common POSIX socket API funcons

258

Create a socket descriptor: socket()

258

Bind a server socket address to a socket descriptor: bind()

258

Enable server socket connecon requests: listen()

259

Accept a server socket connecon request: accept()

259

Client connecon request: ‘connect()’

260

Write data to a stream socket: send()

261

Read data from a stream socket: recv()

261

Seng server socket opons: setsockopt()

262

10.6.4 Common ulity funcons

263

Internet address manipulaon funcons

263

Internet network/host byte order manipulaon funcons

263

Host table access funcons

264

10.6.5 Building applicaons with TCP

264

Request/response communicaon using TCP

264

TCP server

265

TCP client

266

10.6.6 Building applicaons with UDP

268

UDP server

269

xvi

Contents

UDP client

270

UDP client using connect()

271

10.6.7 Handling mulple clients

271

The select() system call

271

Mulple server processes: fork() and exec()

275

Multhreaded servers using pthreads

276

10.7 Summary

278

10.8 Exercises and quesons

278

10.8.1 Simple social networking

278

10.8.2 The Linux networking stack

278

10.8.3 The POSIX socket API

278

11. Advanced topics

11.1 Overview

282

11.2 Scaling down

282

11.3 Scaling up

283

11.4 Virtualizaon and containerizaon

285

11.5 Security

287

11.5.1 Rowhammer, Rampage, Throwhammer, and Nethammer

287

11.5.2 Spectre, Meltdown, Foreshadow

288

11.6 Vericaon and cercaon

289

11.7 Recongurability

291

11.8 Linux development roadmap

292

11.9 Further reading

293

11.10 Exercises and quesons

293

11.10.1 Make a minimal kernel

293

11.10.2 Verify important properes

293

11.10.3 Commercial comparison

293

11.10.4 For or against cercaon

293

11.10.5 Devolved decisions

293

11.10.6 Underclock, overclock

293

Glossary of terms

296

Index

304

Contents

xvii

Foreword

In 1983, when I started modeling a RISC processor using a simulator wrien in BBC Basic on a BBC

Microcomputer, I could hardly have conceived that there would be billions of Arm (then short for

‘Acorn RISC Machine’) processors all over the world within a few decades.

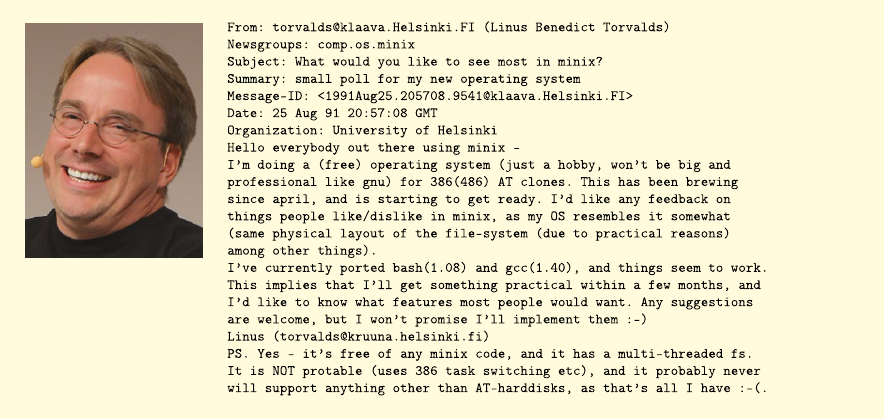

I expect Linus Torvalds has similar feelings, when he thinks back to the early days, craing a prototype

operang system for his i386 PC. Now Linux runs on a vast array of devices, from smartwatches to

supercomputers. I am delighted that an increasing proporon of these devices are built around Arm

processor cores.

In a more recent tale of runaway success, the Raspberry Pi single-board computer has far exceeded

its designers’ inial expectaons. The Raspberry Pi Foundaon thought they might sell one thousand

units, ‘maybe 10 thousand in our wildest dreams.’ With sales gures now around 20 million, the

Raspberry Pi is rmly established as Britain’s best-selling computer.

This textbook aims to bring these three technologies together—Arm, Linux, and Raspberry Pi. The

authors’ ambious goal is to ‘make Operang Systems fun again.’ As a professor in one of the UK’s

largest university Computer Science departments, I am well aware that modern students demand

engaging learning materials. Dusty 900-page textbooks with occasional black and white illustraons

are not well received. Today’s learners require interacve content, gaining understanding through

praccal experience and intuive analogies. My observaon applies to students in tradional higher

educaon, as well as those pursuing blended and fully online educaon. I am condent this innovave

textbook will meet the needs of the next generaon of Computer Science students.

While the modern systems soware stack has become large and complex, the fundamental principles

are unchanging. Operang Systems must trade-o abstracon for eciency. In this respect, Linux

on Arm is parcularly instrucve. The authors do an excellent job of presenng Operang Systems

concepts, with direct links to concrete examples of these concepts in Linux on the Raspberry Pi.

Please don’t just read this textbook – buy a Pi and try out the praccal exercises as you go.

Was it Plutarch who said, ‘The mind is not a vessel to be lled but a re to be kindled’? We could

translate this into the Operang Systems domain as follows: ‘Learning isn’t just reading source code;

it’s bootstrapping machines.’ I hope that you enjoy all these acvies, as you explore Operang

Systems with Linux on Arm using your Raspberry Pi.

Steve Furber CBE FRS FREng

ICL Professor of Computer Engineering

The University of Manchester, UK

February 2019

xviii

xix

Disclaimer

The design examples and related soware les included in this book are created for educaonal

purposes and are not validated to the same quality level as Arm IP products. Arm Educaon Media

and the author do not make any warranes of these designs.

Note

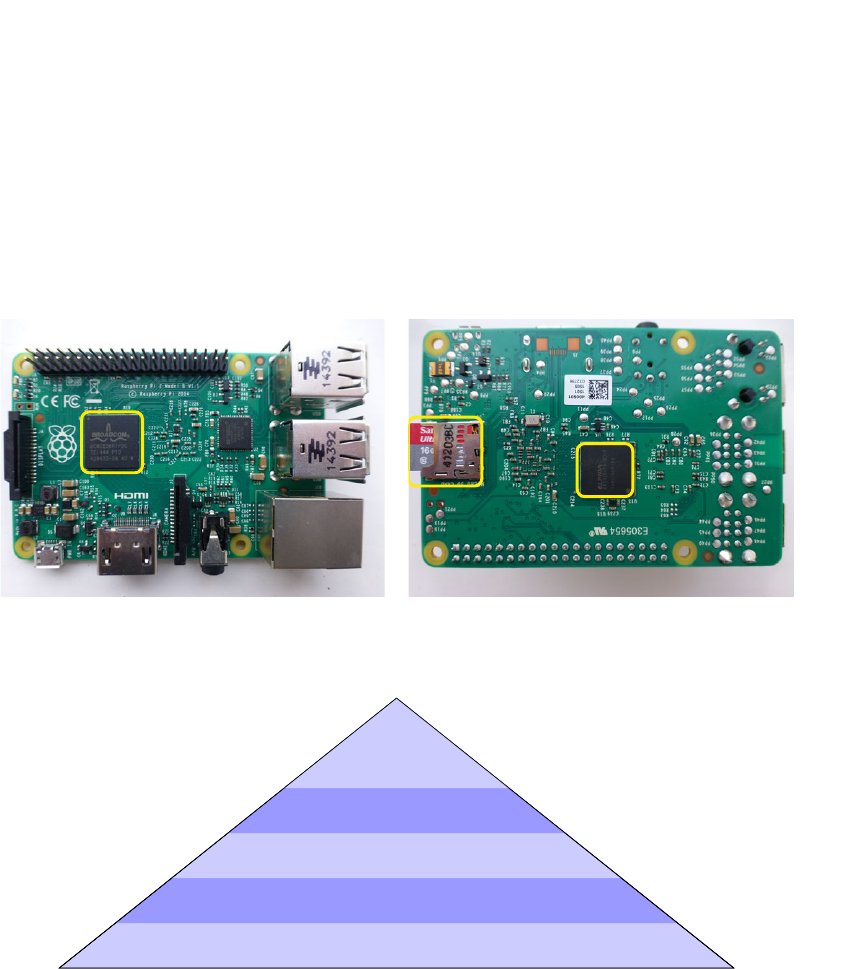

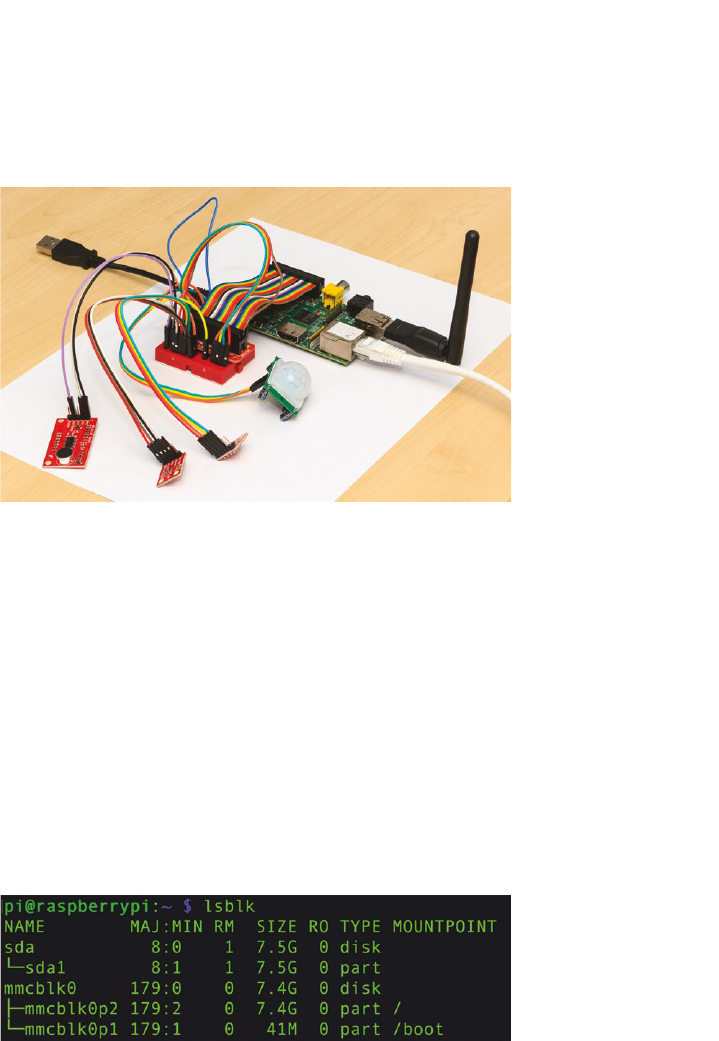

When we developed the material for this textbook, we worked with Raspberry Pi 3B boards.

However, all our praccal exercises should work on other generaons and variants of Raspberry Pi

devices, including the more recent Raspberry Pi 4.

Preface

Introducon

Modern computer devices are fabulously complicated both in terms of the processor hardware and

the soware they run.

At the heart of any modern computer device sits the operang system. And if the device is a

smartphone, IoT node, datacentre server or supercomputer, then the operang system is very likely to

be Linux: about half of consumer devices run Linux; the vast majority of smartphones worldwide (86%)

run Android, which is built on the Linux kernel. Of the top one million web servers, 98% run Linux.

Finally, the top 500 fastest supercomputers in the world all run Linux.

On the hardware side, Arm has a 95% market share in smartphone and tablet processors as well as

being used in the majority of Internet of Things (IoT) devices such as webcams, wireless routers, etc.

and embedded devices in general.

Since its creaon by Linus Torvalds in 1991, the eorts of thousands of people, most of them

volunteers, have turned Linux into a state-of-the-art, exible and powerful operang system, suitable

for any system from ny IoT devices to the most powerful supercomputers.

Meanwhile, in roughly the same period, the Arm processor range has expanded to cover an equally

wide gamut of systems and devices, including the remarkably successful Raspberry Pi.

So if you want to learn about Operang Systems but keep a praccal, real-world focus, then this book

is an ideal starng point. This book will help you answer quesons such as:

What is a le, and why is the le concept so important in Linux?

What is scheduling and how can knowledge of Linux scheduling help you create a high-throughput

video processor or a mission-crical real-me system?

What are POSIX threads, and how can the Linux kernel assist you in making your multhreaded

applicaons faster and more responsive?

How does the Linux kernel support networking, and how do you create network clients and servers?

How does the Arm hardware assist the Linux kernel in managing memory and how does

understanding memory management make you a beer programmer?

The aim of this book is to provide a praccal introducon to the foundaons of modern operang

systems, with a parcular focus on GNU/Linux and the Arm plaorm. Our unique perspecve is

that we explain operang systems theory and concepts but ground them in praccal use through

illustrave examples of their implementaon in GNU/Linux, as well as making the connecon with

the Arm hardware supporng the OS funconality.

xx

Is this book suitable for you?

This book does not require prior knowledge of operang systems, but some familiarity with command-

line operaons in a GNU/Linux system is expected. We discuss technical details of operang systems,

and we use source code to illustrate many concepts. Therefore, you need to know C and Python, and

you need to be familiar with basic data structures such as arrays, queues, stacks and trees.

This textbook is ideal for a one-semester course introducing the concepts and principles underlying

modern operang systems. It complements the Arm online courses in Real-Time Operang Systems

Design and Programming, and Embedded Linux.

Online addional material

The companion web site of the book (www.dcs.gla.ac.uk/operang-system-foundaons) contains:

Source code for all original code snippets listed in the book;

Answers to quesons and exercises;

Lab materials;

Addional content;

Addional teaching materials;

Further reading.

Target plaorm

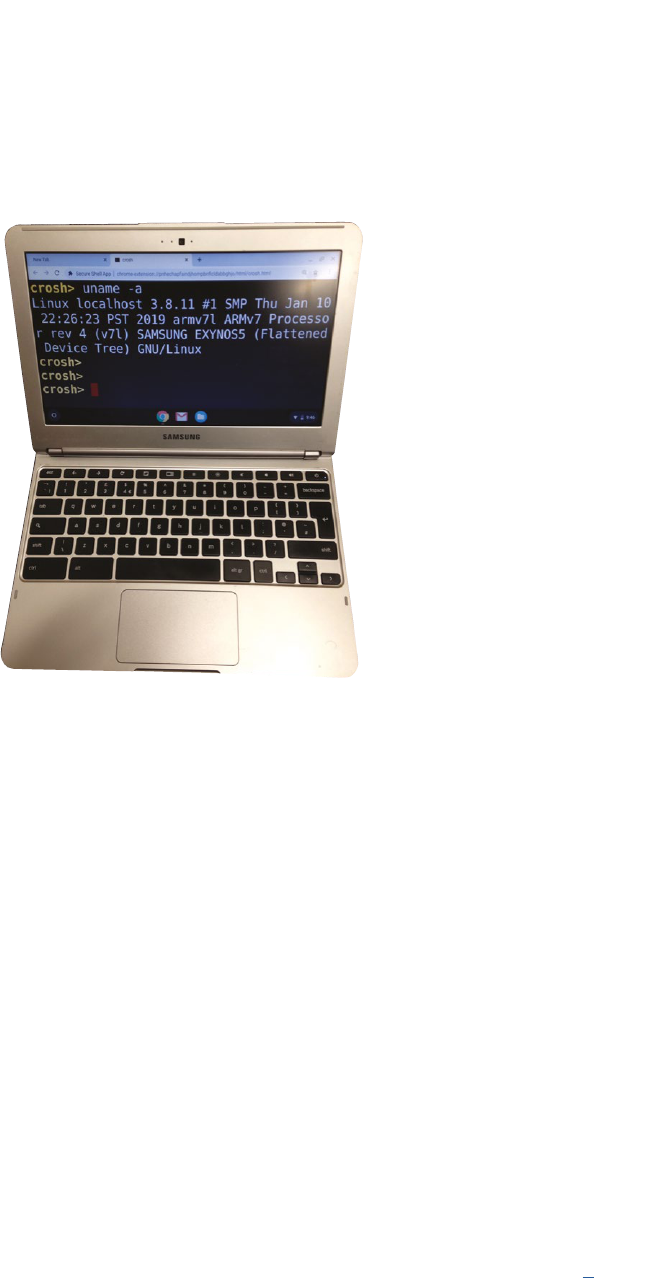

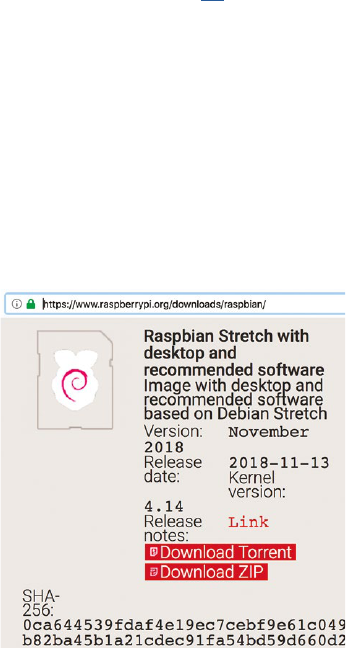

This textbook focuses on the Raspberry Pi 3, an Arm Cortex-A53 plaorm running Linux. We use the

Raspbian GNU/Linux distribuon. However, the book does not specically depend on this plaorm

and distribuon, except for the exercises.

If you don’t own a Raspberry Pi 3, you can use the QEMU emulator which supports the Raspberry Pi 3.

Soware development environment

The code examples in this book are either in C or Python 3. We assume that the reader has access

to a Linux system with an installaon of Python, a C compiler, the make build tool and the git version

control tool.

Structure

The structure of this textbook is based on our many years of teaching operang systems courses at

undergraduate and masters level, taking into account the feedback provided by the reviewers of the

text. The content of the text is closely aligned to the Compung Curricula 2001 Compung Science

report recommendaons for teaching Operang Systems, published by the Joint Task Force of the

IEEE Compung Society and the Associaon for Compung Machinery (ACM).

xxi

Preface

The book is organized into eleven chapters.

Chapters 1 and 2 provide alternate introductory views to operang systems.

Chapter 1 A memory-centric system model presents a top-down view. In this chapter, we introduce a

number of abstract models for processor-based systems. We use Python code to describe the models

and only use simple data structures and funcons. The purpose is to help the student understand that

in a processor-based system, all acons fundamentally reduce to operaons on addresses. The models

are gradually being rened as the chapter advances, and by the end, the model integrates the basic

operang system funconality into a runnable Python-based processor model.

Chapter 2 A praccal view of the Linux system approaches the Linux system from a praccal

perspecve: what actually happens when we boot and run the system, how does it work and what is

required to make it work. We rst introduce the essenal concepts and techniques that the student

needs to know in order to understand the overall system, and then we discuss the system itself.

The aim of this part is to help the student answer quesons such as “what happens when the system

boots?” or “how does Linux support graphics?”. This is not a how-to guide, but rather, provides the

student with the background knowledge behind how-to guides.

In Chapter 3 Hardware architecture, we discuss the hardware on which the operang system runs,

the hardware support for operang systems (dedicated registers, MMU, DMA, interrupt architecture,

relevant details about the bus/NoC architecture, ...), the memory subsystem (caches, TLB), high-level

language support, boot subsystem and boot sequence. The purpose is to provide the student with a

useable mental model for the hardware system and to explain the need for an operang system and

how the hardware supports the OS. In parcular, we study the Linux view on the hardware system.

The next seven chapters form the core of the book, each of these introduces a core Operang System

concept.

In Chapter 4, Process management, we introduce the process abstracon. We outline the state

that needs to be encapsulated. We walk through the typical lifecycle of a process from forking to

terminaon. We review the typical operaons that will be performed on a process.

Chapter 5 Process scheduling discusses how the OS schedules processes on a processor. This includes

the raonale for scheduling, the concept of context switching, and an overview of scheduling policies

(FCFS, priority, ...) and scheduler architectures (FIFO, mullevel feedback queues, priories, ...). The

Linux scheduler is studied in detail.

While memory itself is remarkably straighorward, OS architects have built lots of abstracon layers

on top. Principally, these abstracons serve to improve performance and/or programmability. In

Chapter 6 Memory management, we review caches (in hardware and soware) to improve access

speed. We go into detail about virtual memory to improve the management of physical memory

resource. We will provide highly graphical descripons of address translaon, paging, page tables,

page faults, swapping, etc. We explore standard schemes for page replacement, copy-on-write, etc.

We will examine concrete examples in Arm architecture and Linux OS.

xxii

Preface

In Chapter 7, Concurrency and parallelism, we discuss how the OS supports concurrency and how the

OS can assist in exploing hardware parallelism. We dene concurrency and parallelism and discuss

how they relate to threads and processes. We discuss the key issue of resource sharing, covering

locking, semaphores, deadlock and livelock. We look at OS support for concurrent and parallel

programming via POSIX threads and present an overview of praccal parallel programming techniques

such as OpenMP, MPI and OpenCL.

Chapter 8 Input/output presents the OS abstracon of an IO device. We review device interfacing,

covering topics like Polling, Interrupts and DMA. We will invesgate a range of device types, to

highlight their diverse features and behavior. We will cover hardware registers, memory mapping

and coprocessors. Further, we will examine the ways in which devices are exposed to programmers.

We will review the structure of a typical device driver.

Chapter 9 Persistent storage focuses on data storage. We outline the range of use cases for le

systems. We explain how the raw hardware (block- and sector-based 2d storage, etc.) is abstracted at

the OS level. We talk about mapping high-level concepts like les, directories, permissions, etc., down

to physical enes. We review allocaon, space management, and recovery from failure. We present

a case study of a Linux le system. We also discuss Windows-style FAT, since this is how USB bulk

storage operates.

Chapter 10 Networking introduces networking from an OS perspecve: why is networking treated

dierently from other types of IO, what are the OS requirements to support the OSI stack. We

introduce socket programming with a focus of the role the OS plays (e.g. zero-copy buers, le

abstracon, supporng mulple clients, ...).

Finally, Chapter 11 Advanced topics discusses a number of concepts that go beyond the material of

the previous chapters: The rst part of this chapter deals with customisaon of Linux for Embedded

Systems, Linux on systems without MMU, and datacentre level operang systems. The second

part discusses the security of Linux-based systems, focusing on validaon and vericaon of OS

components and the analysis of recent security exploits.

We hope that you enjoy both reading our book and doing the exercises – especially if you are trying

them on the Raspberry Pi. Please do let us know what you think about our work and how we could

improve it by sending your comments to Arm Educaon Media [email protected]

Jeremy Singer and Wim Vanderbauwhede, 2019

xxiii

Preface

About the Authors

Wim Vanderbauwhede

School of Compung Science, University of Glasgow, UK

Prof. Wim Vanderbauwhede is Professor in Compung Science at the School of Compung Science

of the University of Glasgow. He has been teaching and researching operang systems for over

a decade. His research focuses on high-level programming, compilaon, and architectures for

heterogeneous manycore systems and FPGAs, with a special interest in power-ecient compung

and scienc High-Performance Compung (HPC). He is the author of the book ‘High-Performance

Compung Using FPGAs’. He received his Ph.D. in Electrotechnical Engineering with Specialisaon

in Physics from the University of Gent, Belgium in 1996. Before moving into academic research,

Prof. Vanderbauwhede worked as an ASIC Design Engineer and Senior Technology R&D Engineer for

Alcatel Microelectronics.

Jeremy Singer

School of Compung Science, University of Glasgow, UK

Dr. Jeremy Singer is a Senior Lecturer in Systems at the School of Compung Science of the University

of Glasgow. His main research theme involves programming language runmes, with parcular

interests in garbage collecon and manycore parallelism. He leads the Federated Raspberry Pi

Micro-Infrastructure Testbed (FRµIT) team, invesgang next-generaon edge compute plaorms.

He received his Ph.D. from the University of Cambridge Computer Laboratory in 2006. Singer and

Vanderbauwhede also collaborated in the design of the FutureLearn ‘Funconal Programming in

Haskell’ massive open online course.

xxiv

Acknowledgements

The authors would like to thank the following people for their help:

Khaled Benkrid, who made this book possible.

Ashkan Tousimojarad, who originally suggested the project.

Melissa Good, Jialin Dou and Michael Shu who kept us on track and assisted us with the process.

The reviewers at Arm who provided valuable feedback on our dras.

Tony Garnock-Jones, Dejice Jacob, Richard Morer, Colin Perkins, and other colleagues who

commented on early versions of the text.

Steve Furber, for his kind endorsement of the book.

Lovisa Sundin, for her help with illustraons.

Jim Garside, Krisan Hentschel, Simon McIntosh-Smith, Magnus Morton and Michèle Weiland for

kindly allowing us to use their photographs.

The countless volunteers who made the Linux kernel what it is today.

xxv

Chapter 1

A Memory-centric

system model

Operang Systems Foundaons with Linux on the Raspberry Pi

2

1.1 Overview

In this chapter, we will introduce a number of abstract memory-centric models for processor-based

systems. We will use Python code to describe the models and only use simple data structures and

funcons. The models are abstract in the sense that we do not build the processor system starng from

its physical building blocks (transistors, logic gates, etc.), but rather, we model it in a funconal way.

The purpose is to help you understand that in a processor-based system, all acons fundamentally

reduce to operaons on addresses. This is a very important point: every observable acon in a processor-

based system is the result of wring to or reading from an address locaon.

In parcular, this includes all peripherals of the system, such as the network card, keyboard, and display.

What you will learn

Aer you have studied the material in this chapter, you will be able to:

1. Discuss the importance of state and the address space in a processor-based system.

2. Create a processor-based system model in a high-level language.

3. Implement basic operang system concepts such as me slicing in machine code.

4. Explain how hardware and soware features of a processor-based system are designed to handle

I/O, concurrency, and performance.

1.2 Modeling the system

A microprocessor is driven by a clock.

Our model will describe the acons at every ck of the clock using funcons.

We will model the system through its state, represented as a simple data structure.

By “state,” we mean informaon that is persistent, i.e., some form of memory. This is not limited to

actual computer memory. For example, if our system controls a robot arm, then the posion of the

arm is part of the state of the system.

1.2.1 The simplest possible model

We start our system model by stang that the acon of the processor modies the system state:

systemState = processorAction(systemState)

Python

In pracce, the system also interacts with the outside world through peripherals such as the keyboard,

network interface, etc., generally called “I/O devices”, storage devices such as disks, etc. Let's just call

these types of acons to modify the state ‘non-processor acons’. Adding this to our model, we get:

3

Lisng 1.2.1: System state with non-processor acons Python

1 systemState = nonProcessorAction(systemState)

2 systemState systemState = processorAction(systemState)

In a real system, these acons happen at the same me (we call concurrent acons), so one of the

quesons (that we will address in detail in Chapters 7, ‘Concurrency and parallelism’) is how to make

sure that the system state does not become undetermined as a result of concurrent acons. But rst,

let’s look in a bit more detail at the system state.

1.2.2 What is this ‘system state’?

We say that the processor ‘‘modies the system state’’, so let’s take a closer look at this system

state. From the point of view of the processor, the system state is simply a xed-size array of unsigned

integers. Nothing more than that. In C syntax, we can express this as shown in Lisng 1.2.2:

Lisng 1.2.2: System state as C array C

1 int systemState[STATE_SZ]

Which means that manipulaon of the system state, and by consequence, anything that happens in

a processor-based system boils down to modifying this array.

So, what does this array actually represent? It represents all of the memory in the system, not just the

actual system memory (DRAM, Dynamic Random Access Memory) but including the I/O devices and

other peripherals such as disks. In system terms, this is known as the ‘physical address space’, and we

will discuss this in detail in Chapter 6, ‘‘Memory management.’’

1

In other words, the system state is composed of the states of all the system components, for example

for a system with a keyboard kbd, network interface card nic, solid state disk ssd, graphics processing

unit gpu, and random access memory ram:

systemState = ramState + kbdState + nicState + ssdState + gpuState

Python

Where ramState, kbdState, nicState, etc. are all xed-size arrays of integers.

However, it could of course equally be:

systemState = ssdState + kbdState + nicState + ramState + gpuState

Python

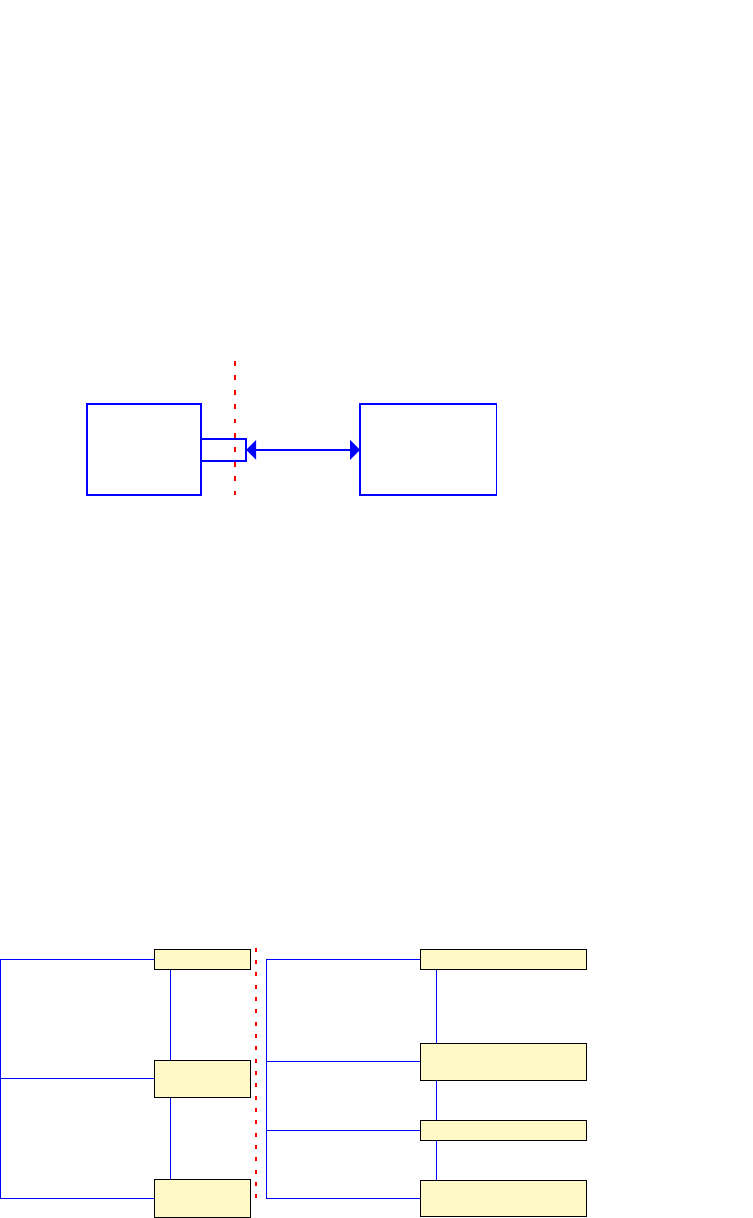

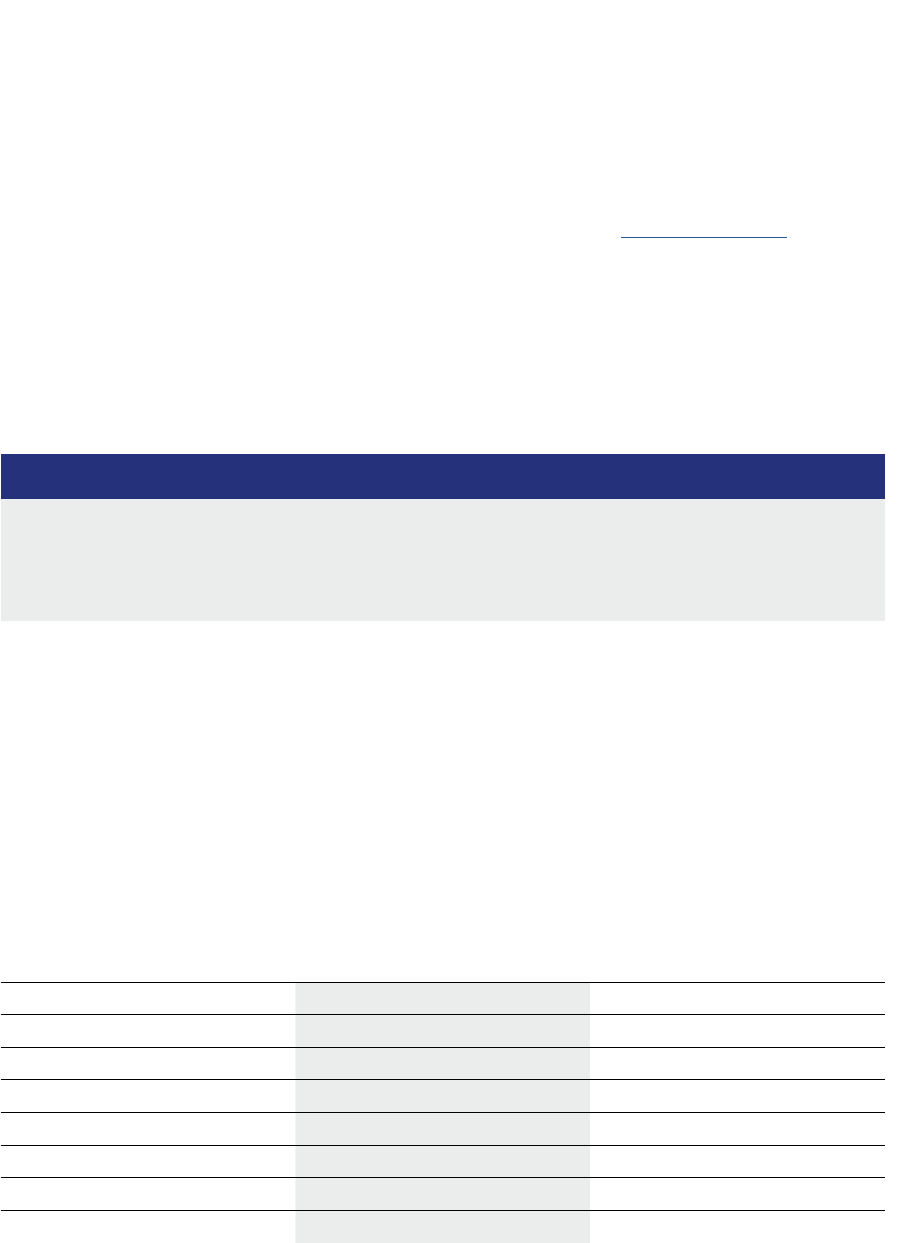

The above are two examples of address space layouts. The descripon of the purpose, size, and

posion of the address regions for memory and peripherals is called the address map. As an illustraon,

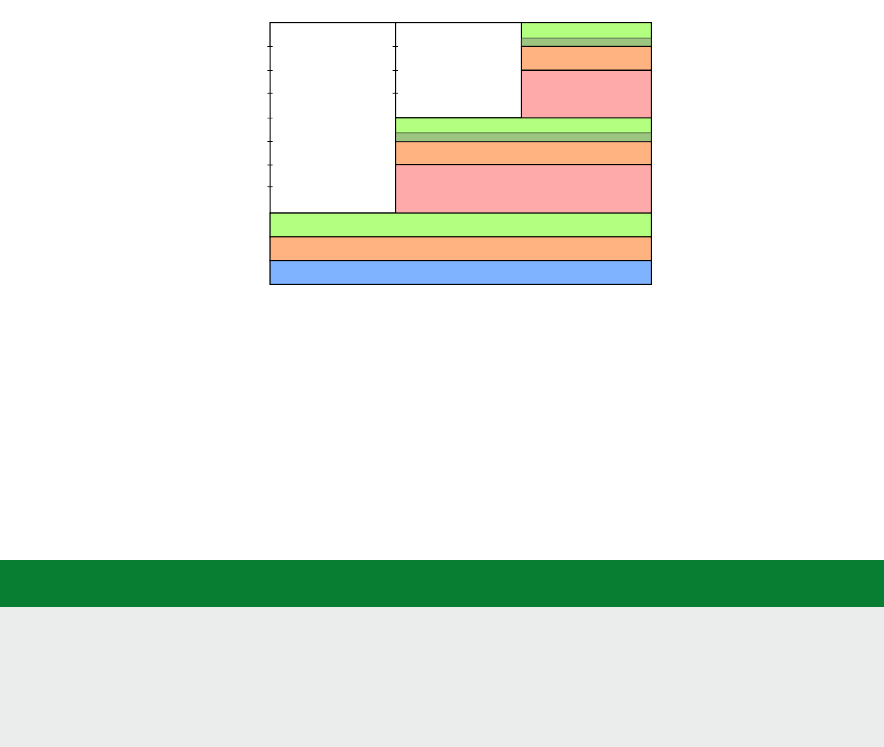

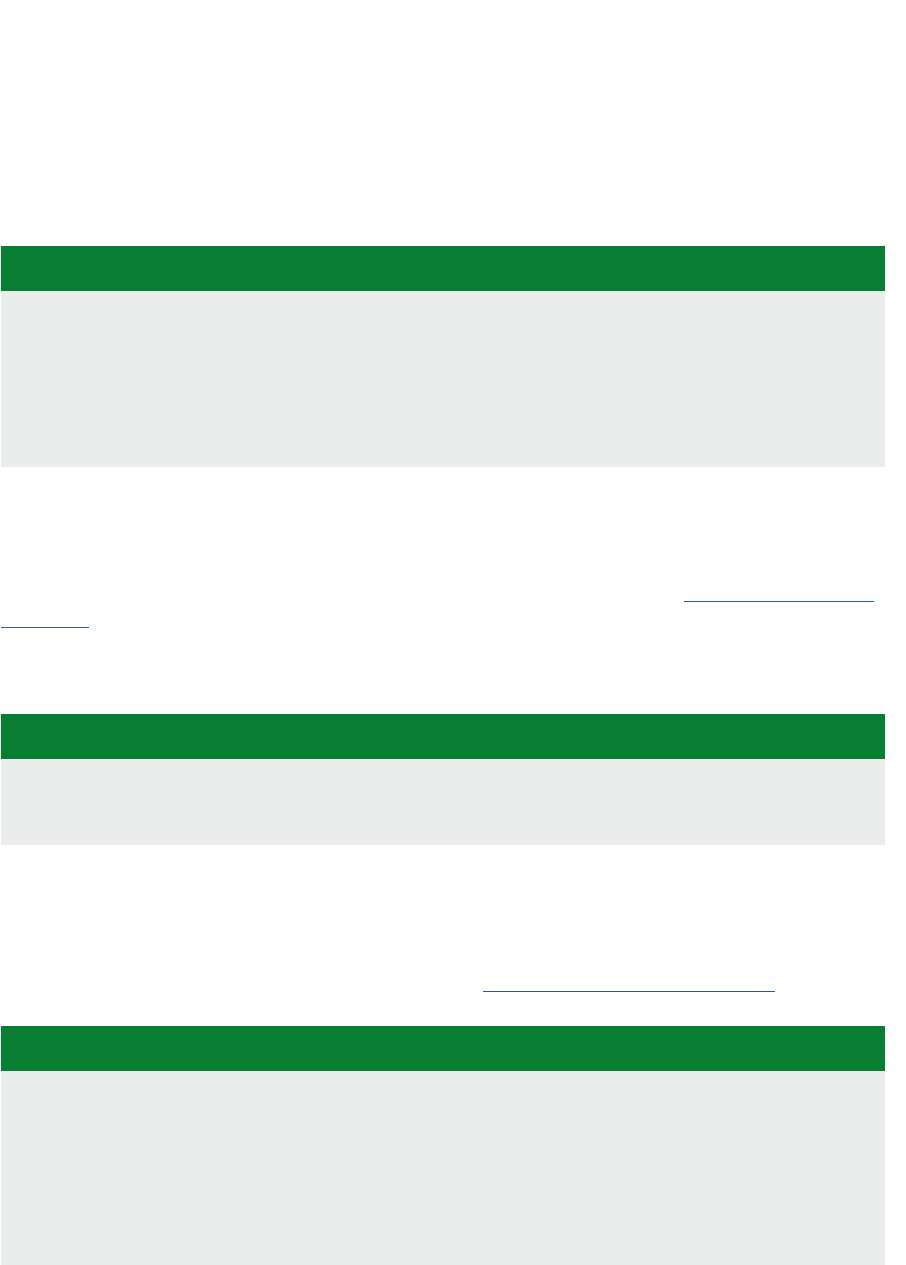

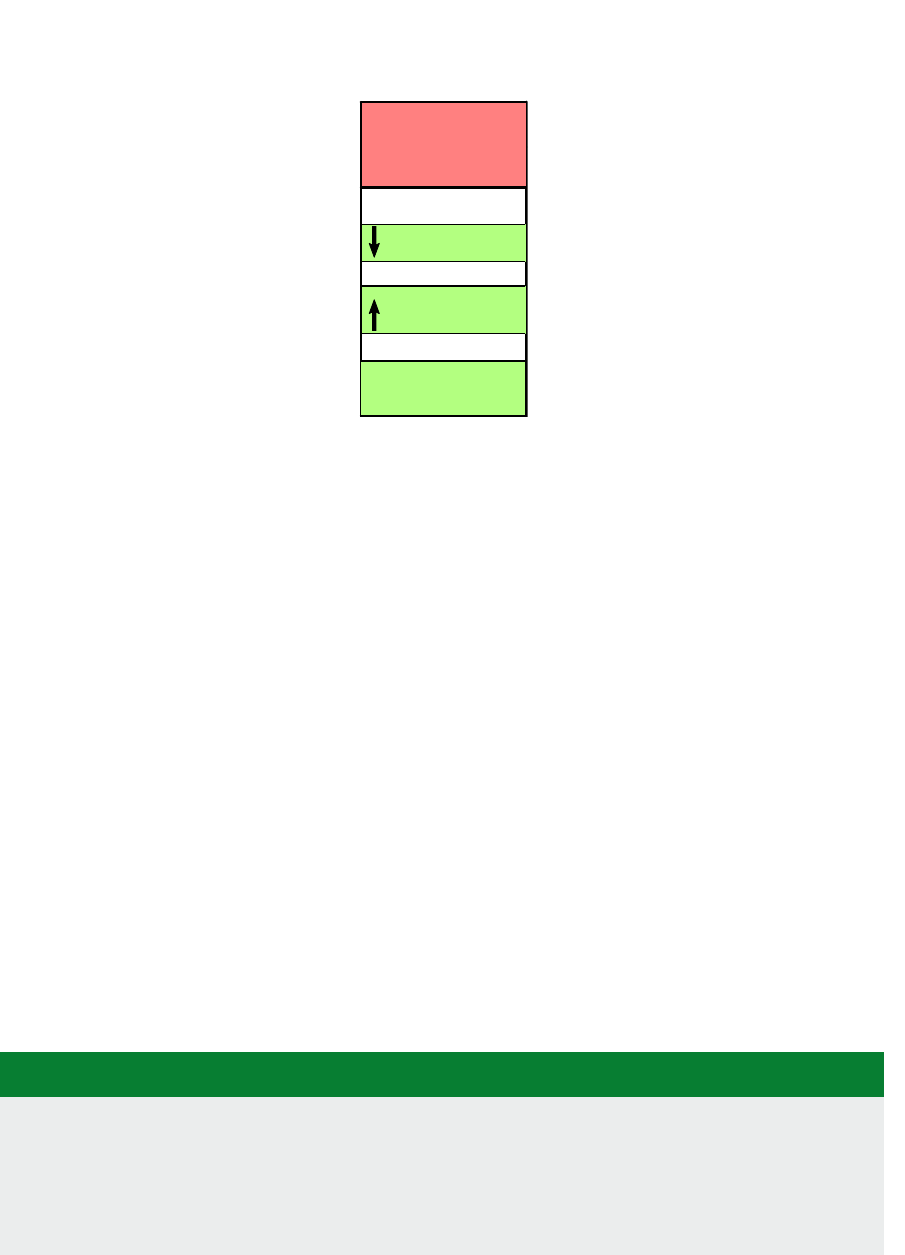

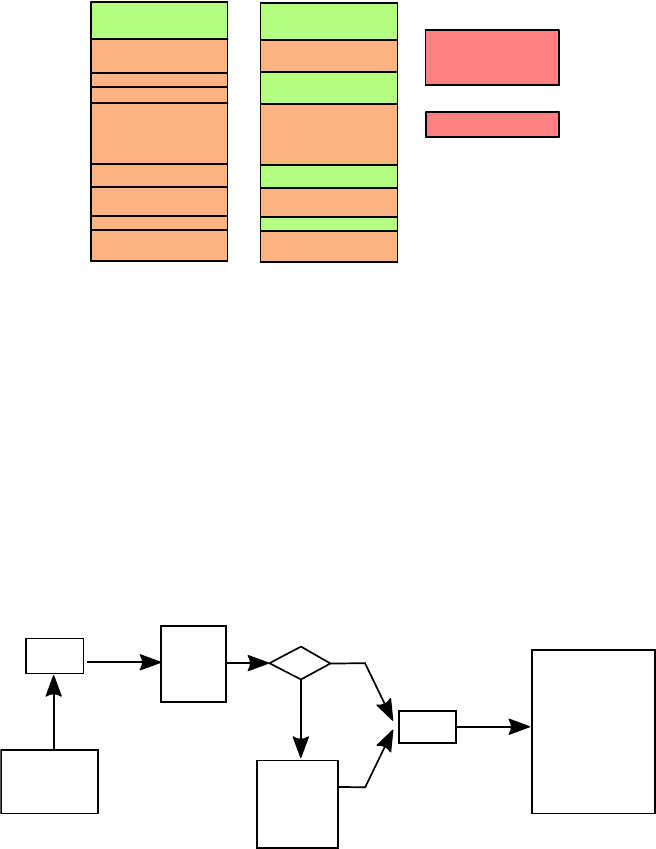

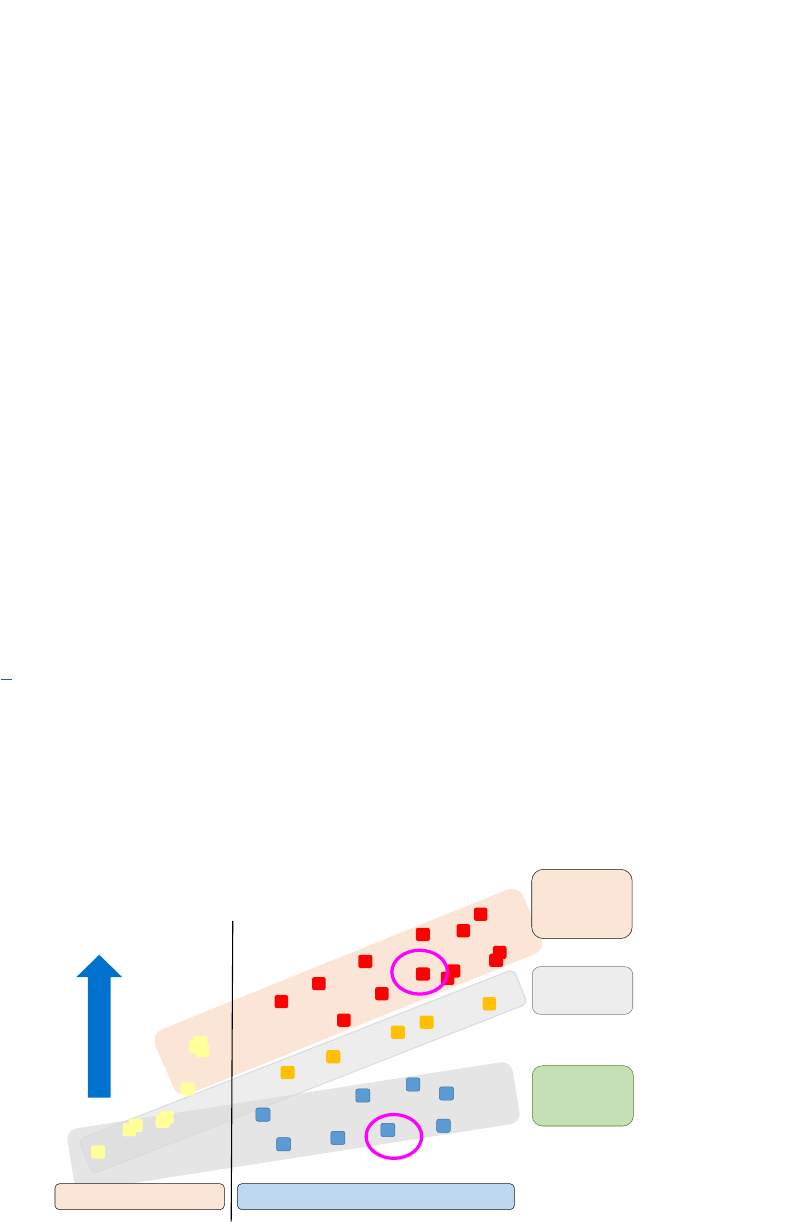

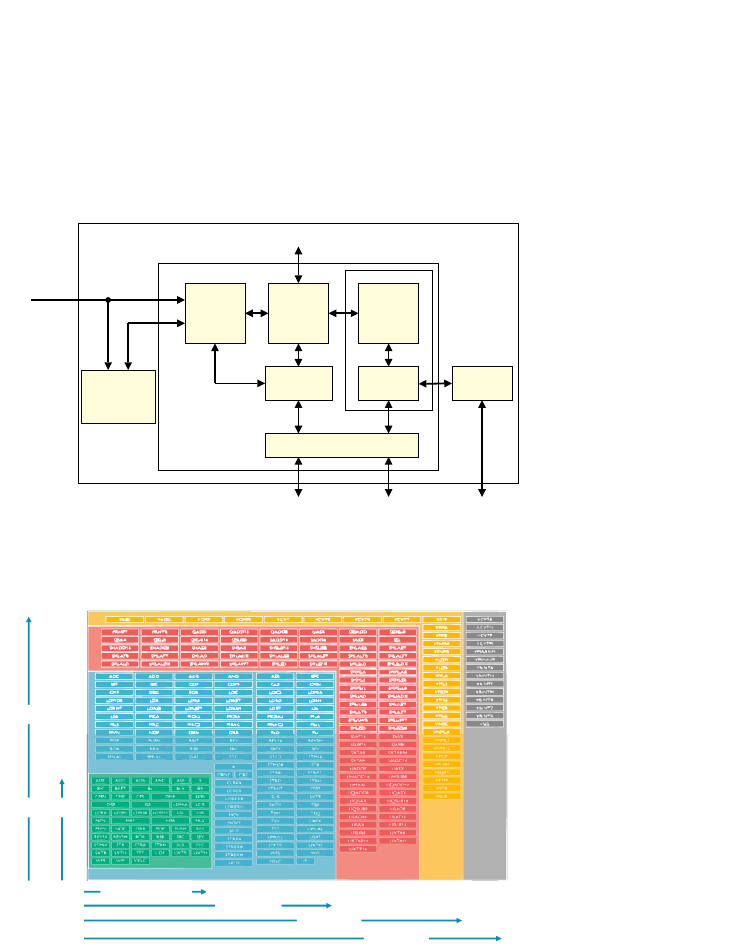

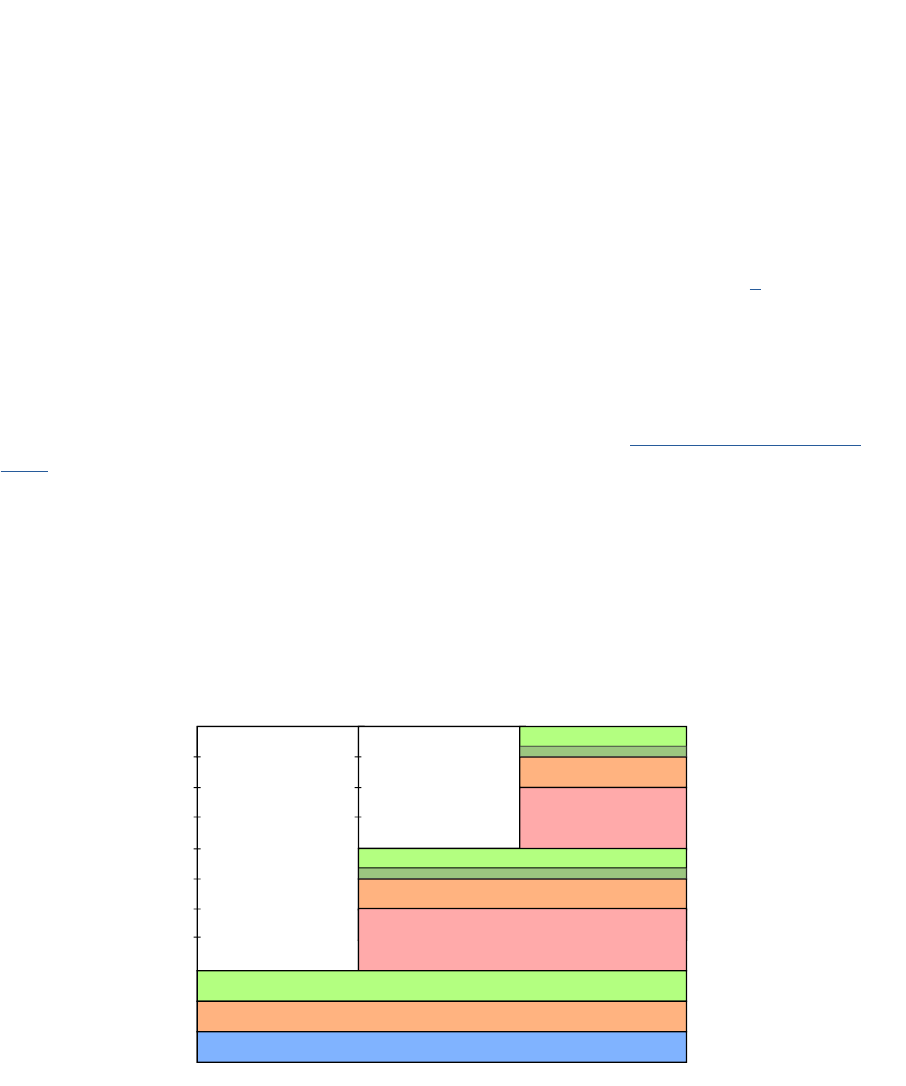

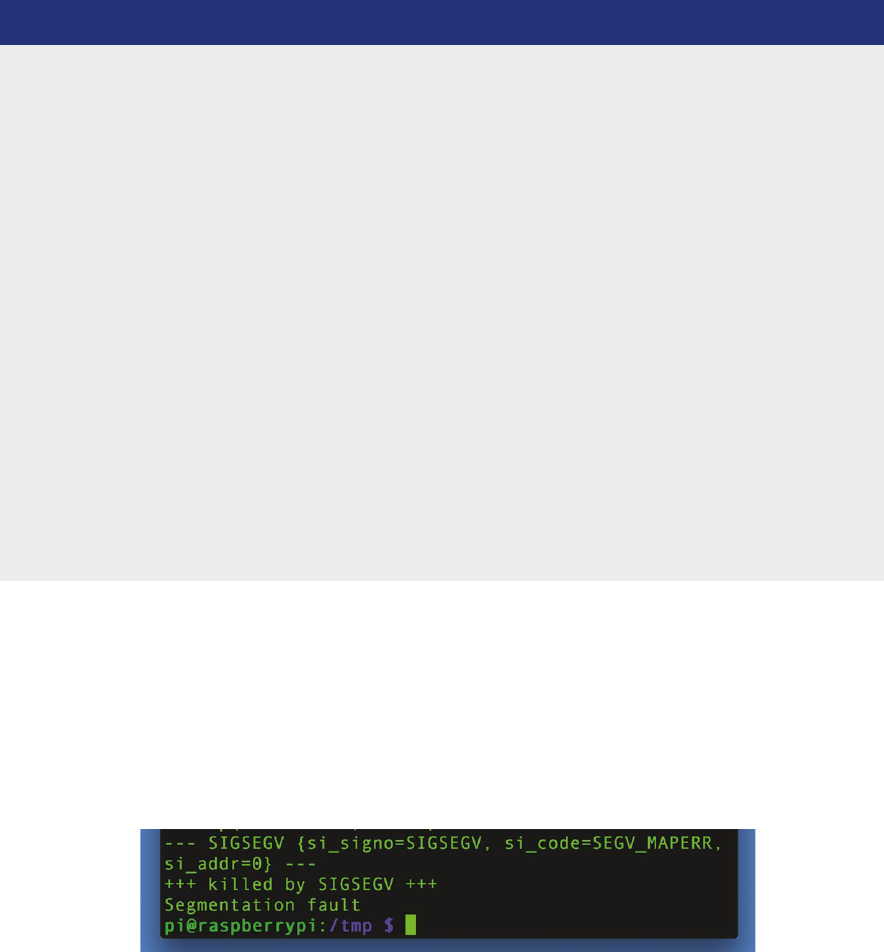

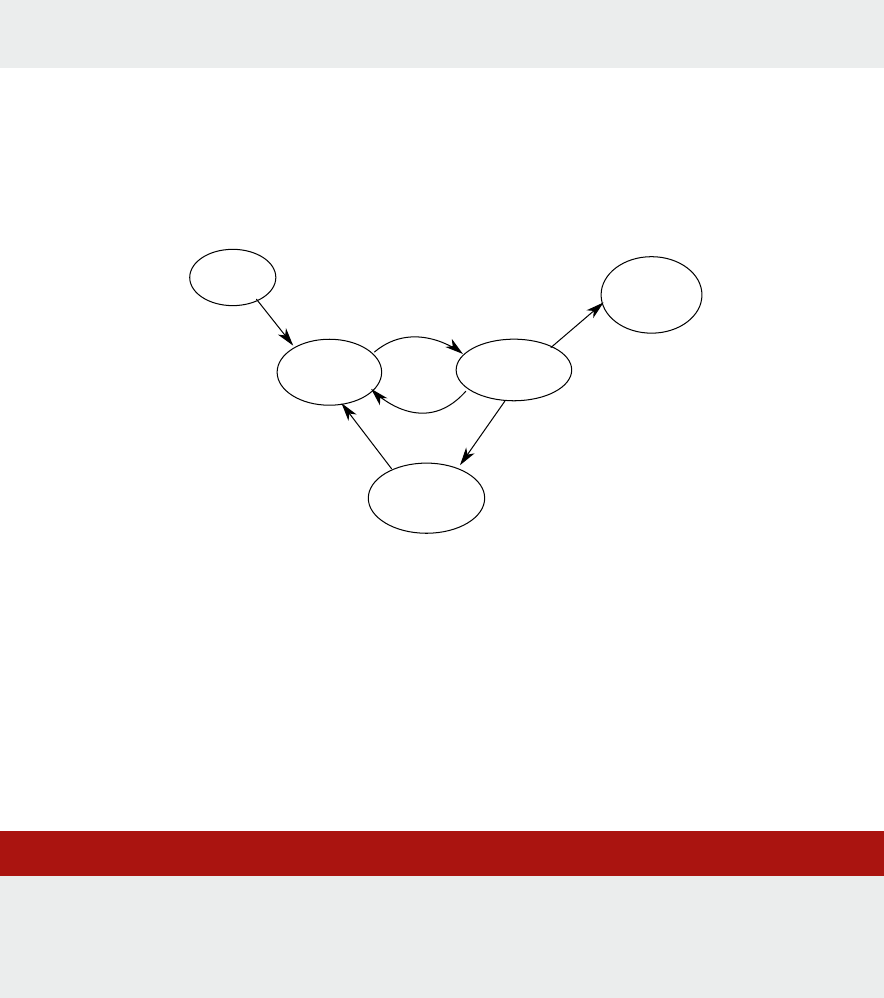

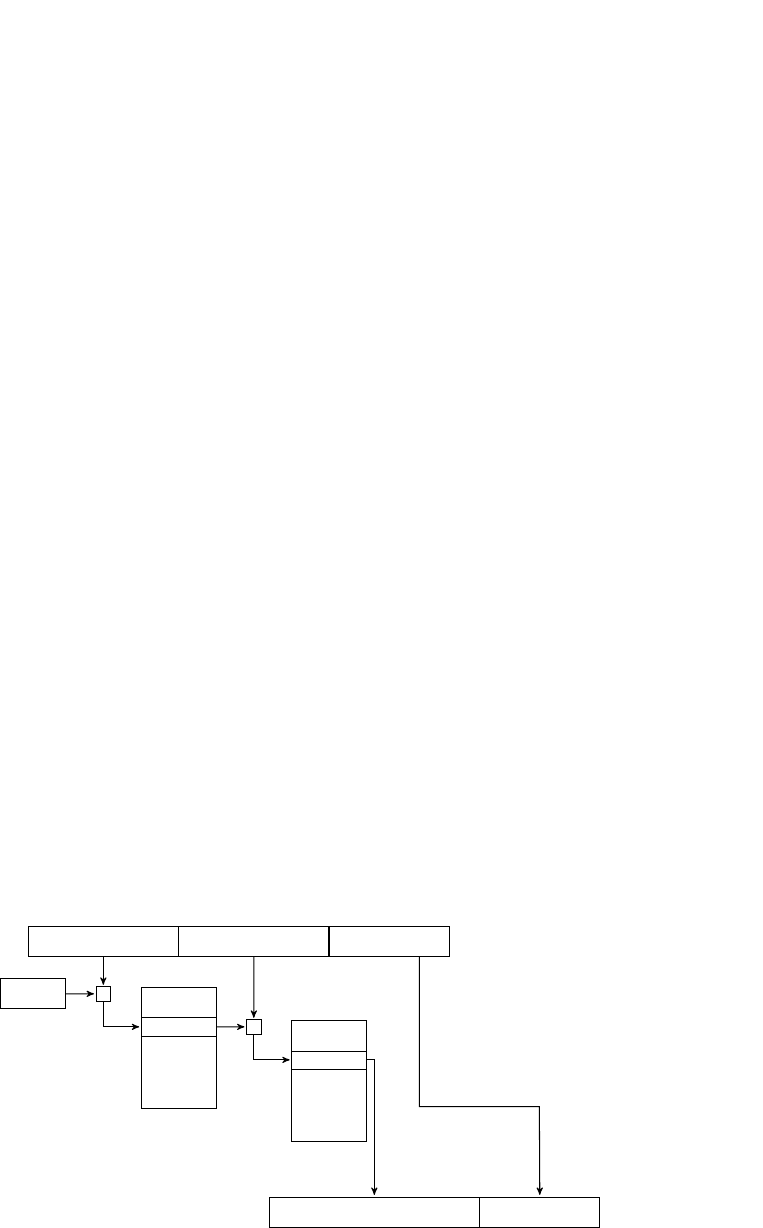

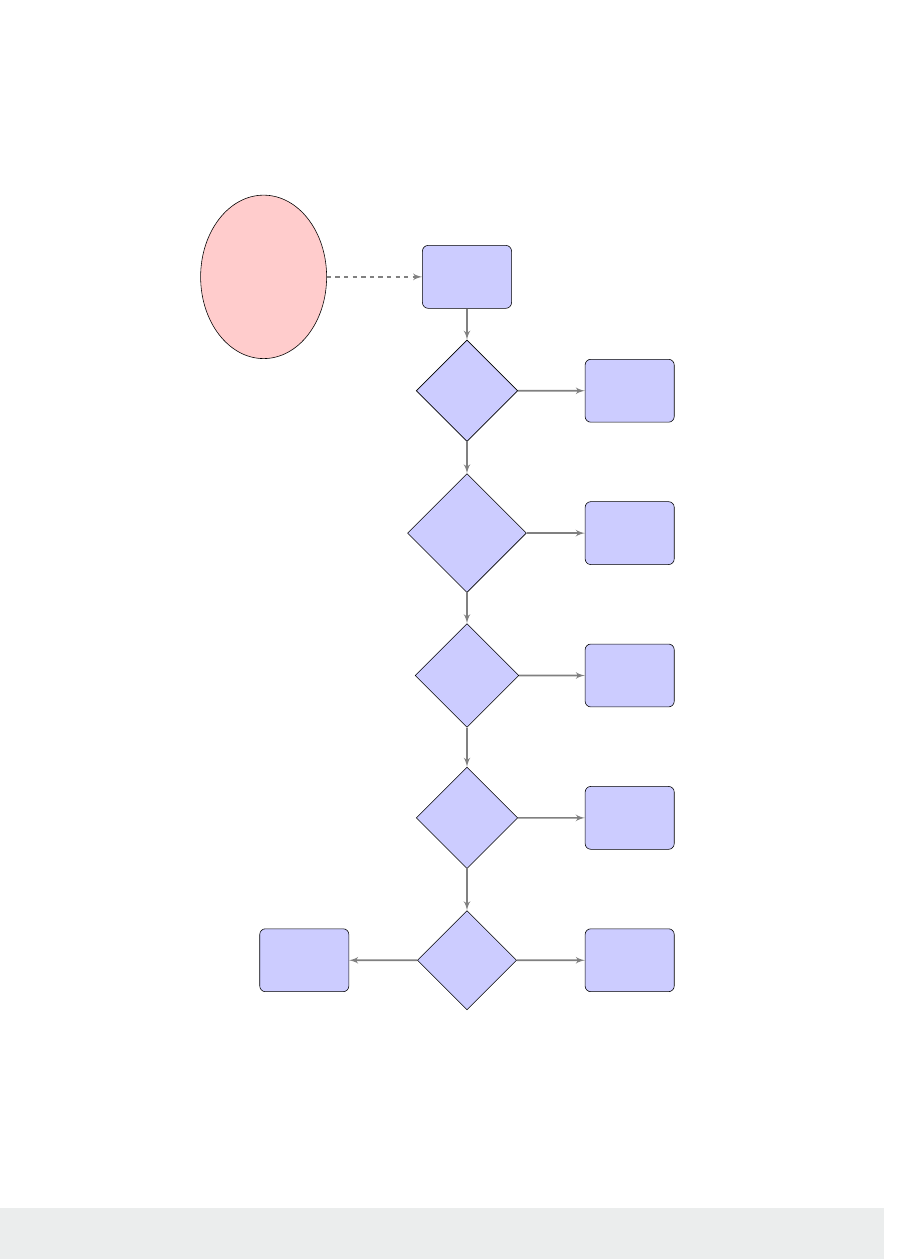

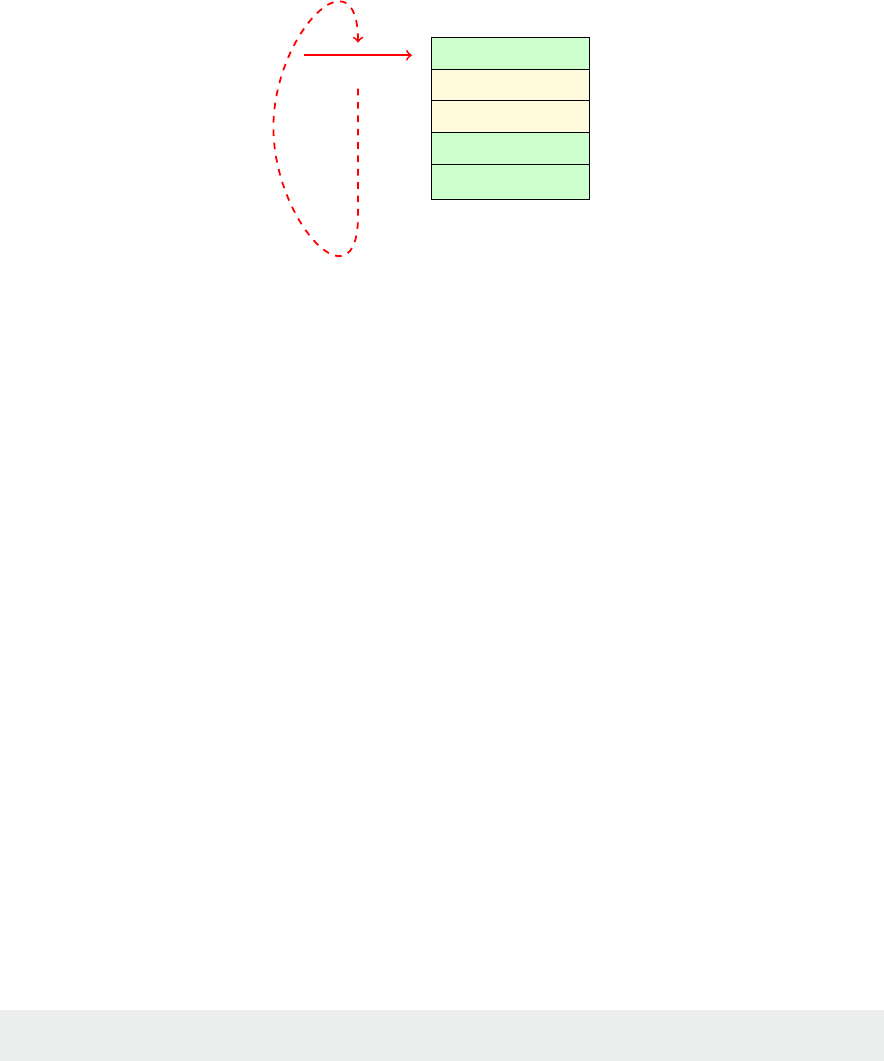

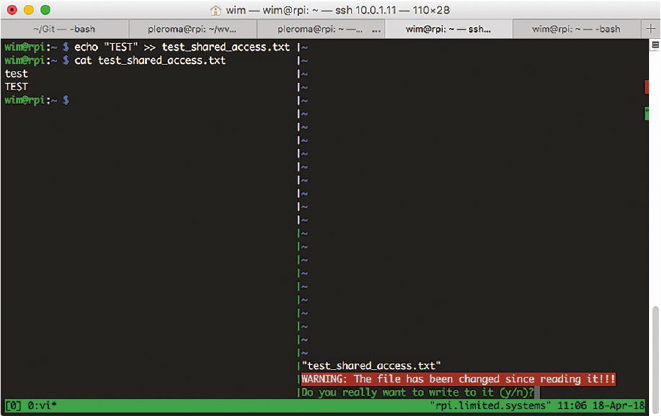

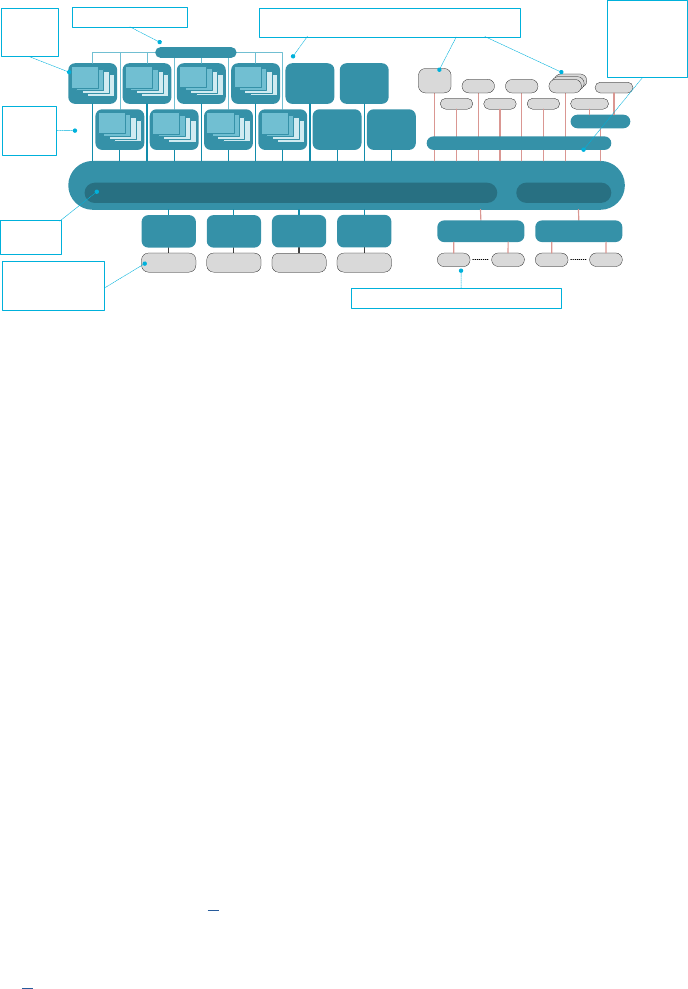

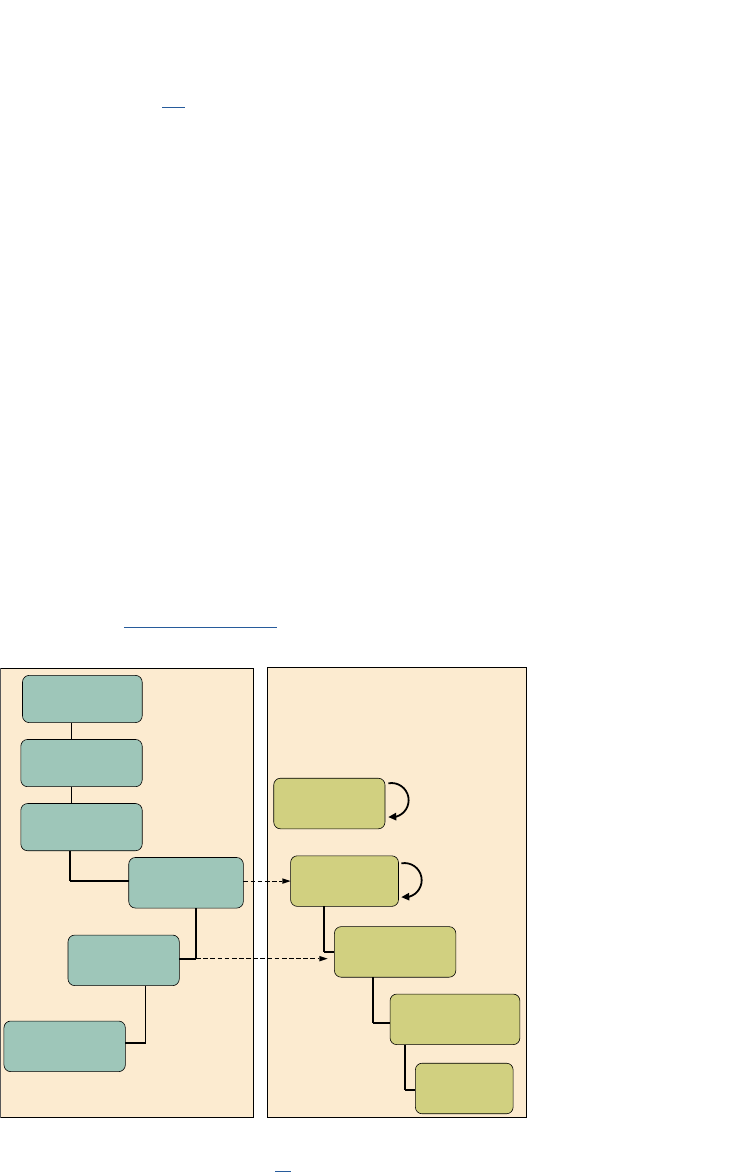

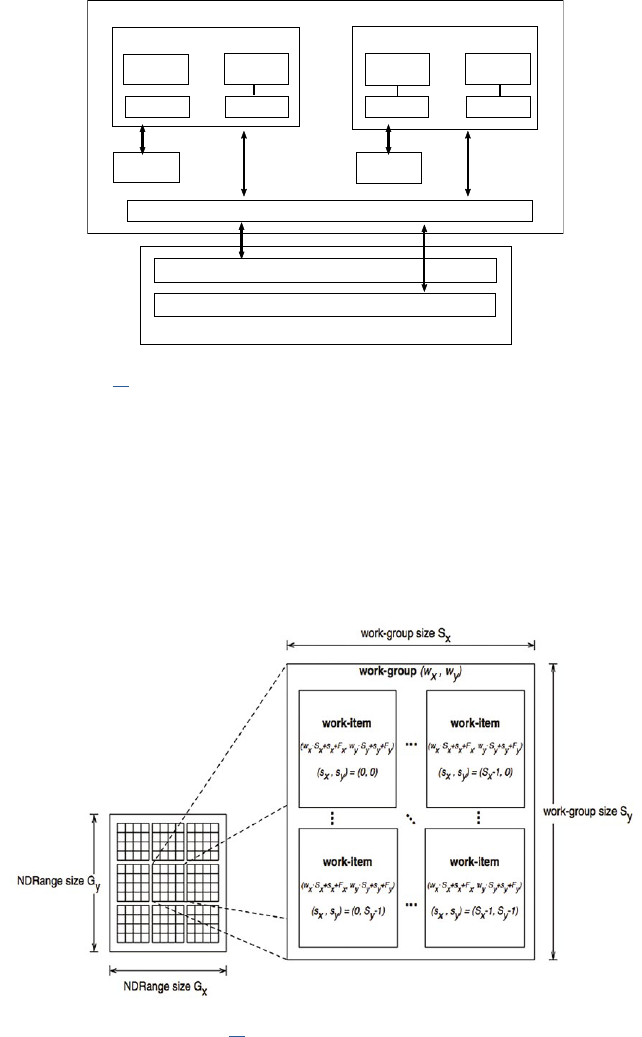

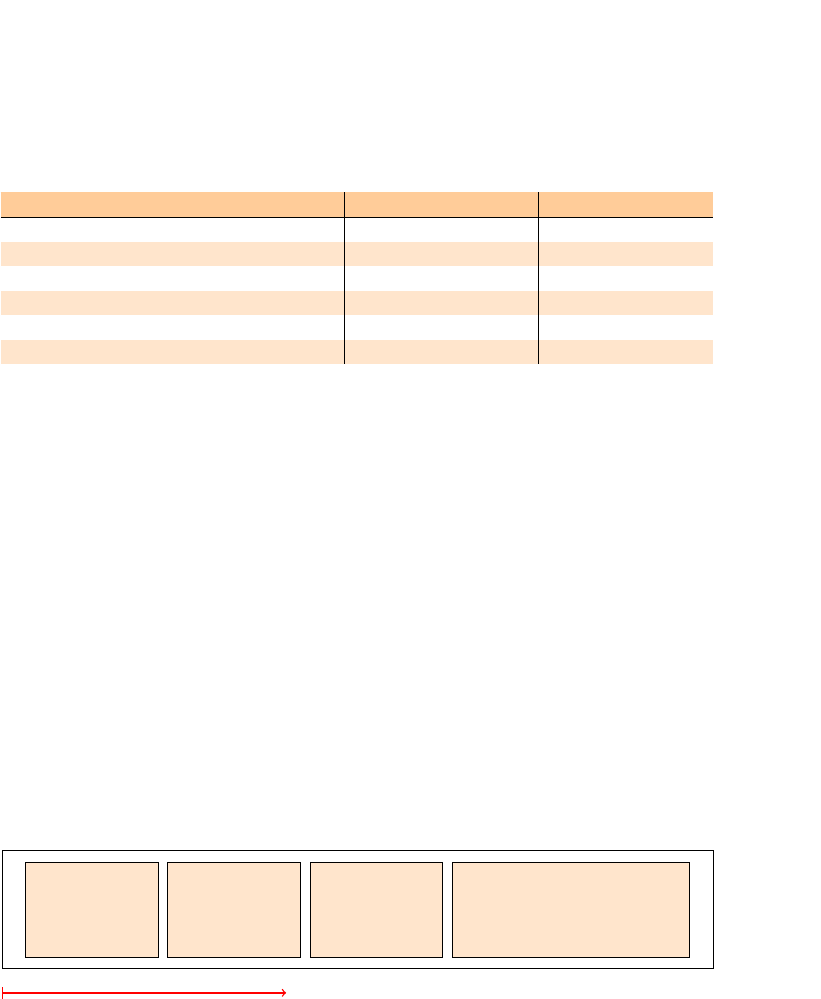

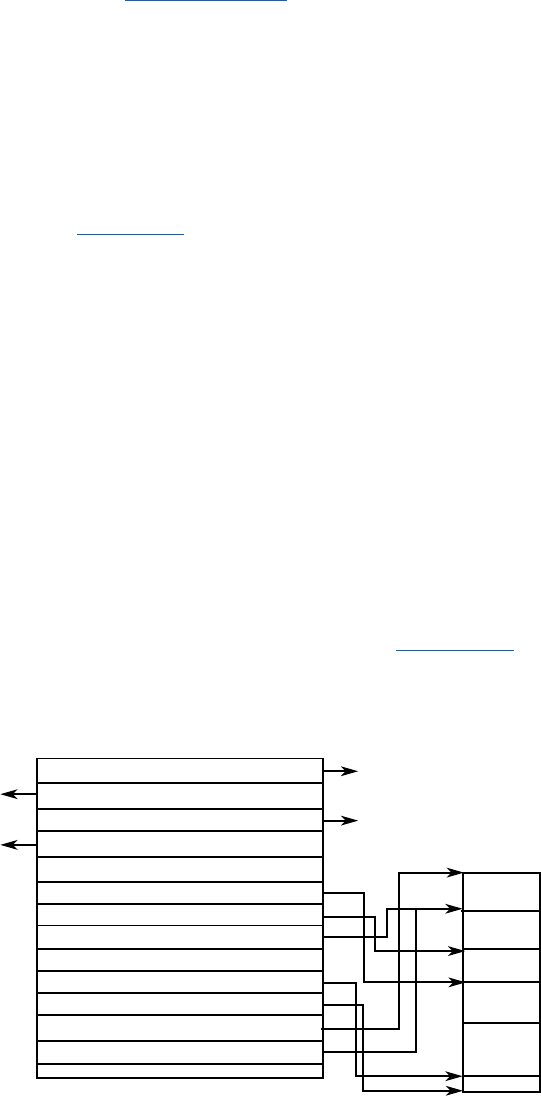

the Arm address map for A-class systems [1] is shown in Figure 1.1.

1

As our model focuses on Arm-based systems, we do not discuss port-mapped I/O.

Chapter 1 | A Memory-centric system model

Operang Systems Foundaons with Linux on the Raspberry Pi

4

Figure 1.1: Arm 40-bit address map.

Figure 1.1. If the address size is 32 bits, we can address 2

32

= 4GB of memory. We see from the gure

that dierent regions are reserved for dierent purposes, e.g., the second GB is memory mapped I/O,

and the upper 2 GB are random access memory (DRAM).

1.2.3 Rening non-processor acons

Using the more detailed state from above, we can split the non-processor acons into per-peripheral

acons, so that our model becomes:

Lisng 1.2.3: Model with per-peripheral acons Python

1 kbdState=kbdAction(kbdState)

2 nicState=nicAction(nicState)

3 ssdState=ssdAction(ssdState)

4 gpuState=gpuAction(gpuState)

5 systemState = ramState+kbdState+nicState+diskState+gpuState

6 systemState = processorAction(systemState)

Each of these acons only aects the state of the peripheral; the rest of the system state remains

unaected.

1.2.4 Interrupt requests

Let’s return now to the potenal problem of state modied by concurrent acons. The way we just

separated the state oers a possible soluon. Now we can create a kind of nocaon mechanism

which lets the processor know that an outside acon has modied the state

2

.

This is exactly what happens in real systems, and the mechanisms used are called interrupts. We will

discuss this in detail in Chapter 8, ‘Input/output’, but it is useful to add an interrupt mechanism to our

abstract model.

A peripheral can send an interrupt request (IRQ) to the processor. We will model the interrupt request as a

boolean ag which is returned by every peripheral acon together with its state (as a tuple). The processor

0 GB

1 GB

2 GB

4 GB

8 GB

16 GB

32 GB

64 GB

128 GB

256 GB

512 GB

1024 GB

32-bit

36-bit

40-bit

Mapped I/O

DRAM

Reserved

Mapped I/O

DRAM

Reserved

Mapped I/O

2 GB of DRAM

ROM & RAM & I/O

32-bit 36-bit 40-bit

0

32-bit

36-bit

40-bit

32 GB hole or DRAM

2 GB hole or DRAM

Log2 scale

2

We could also let the processor check if the state of a peripheral was changed before acng on it. This approach is called polling and will be discussed in Chapter 8, ‘Input/output’.

5

acon receives an array of these interrupt requests and uses the array index to idenfy the peripheral that

raised the interrupt (‘raising an interrupt’ in our model means seng the boolean ag to True).

In pracce, the mechanism is more complicated because many peripherals can raise mulple dierent

interrupt requests depending on the condion. Typically, a dedicated peripheral called interrupt

controller is used to manage the interrupts from the various devices.

Note that the interrupt mechanism is purely a nocaon mechanism: it does not stop the processor

from modifying the peripheral state, all it does is nofy the processor that the peripheral unilaterally

changed its state. So in principle, the peripheral could sll be modifying its state at the very same me

that the processor is modifying it. In what follows, we simply assume that this cannot happen, i.e., if a

peripheral is modifying its state, then the processor can’t change it and vice versa. A possible model for

this is that the peripheral state change and the interrupt request are happening at the same me and

that the processor always needs to process the request before making a state change.

Lisng 1.2.4: Model with interrupt requests Python

1 (kbdState,kbdIrq)=kbdAction(kbdState)

2 ...

3

4 irqs=[kbdIrq,...]

5

6 systemState = ramState+kbdState+nicState+diskState+gpuState

7 (systemState,irqs) = processorAction(systemState,irqs)

We will see in the next secon how the processor handles interrupts.

1.2.5 An important peripheral: the mer

A mer is a peripheral that counts me in terms of the system clock. It can be programmed to ‘re’

periodically at given intervals, or aer a one-o interval. When a mer ‘res’ it raises an interrupt

request. The mer is parcularly important because it is the principal mechanism used by the

operang system to track the progress of me and allows it to schedule tasks.

(timerState, timerIrq)=timerAction(timerState)

Python

1.3 Bare-bones processor model

To gain more insight into the way the processor modies the system state, we will build a simple processor

model which models how the processor changes the system state at every clock cycle. The purpose of

this model is to make the introducon of the more abstract model in Secon 1.4 easier to understand.

1.3.1 What does the processor do?

The processor is a machine to modify the system state. You need to know that …

A key feature of a processor is the ability to run arbitrary programs.

A program consists of a series of instrucons.

Chapter 1 | A Memory-centric system model

Operang Systems Foundaons with Linux on the Raspberry Pi

6

An instrucon determines how the processor interacts with the system through the address space: it can

read values at given addresses, compute new values and addresses, and write values to given addresses.

Note that the program is itself part of the system state. The program running on the processor can control

which part of the enre program code to access. This is what allows us to create an operang system.

1.3.2 Processor internal state: registers

Although in principle, a processor could directly manipulate the system state, this is not praccal

because DRAM memory access is quite slow. Therefore, in pracce, processors have a dedicated

internal state known as the register le, an array of words called registers which you can consider

as a small but very fast memory. The register le is separate from the rest of the system state (it is

a ‘separate address space’). This means we have to rene our model to separate the register le from

the rest of the system state, which we will call systemState. We do this using a tuple

3

:

(systemState,irqs,registers) = processorAction(systemState,irqs,registers)

Python

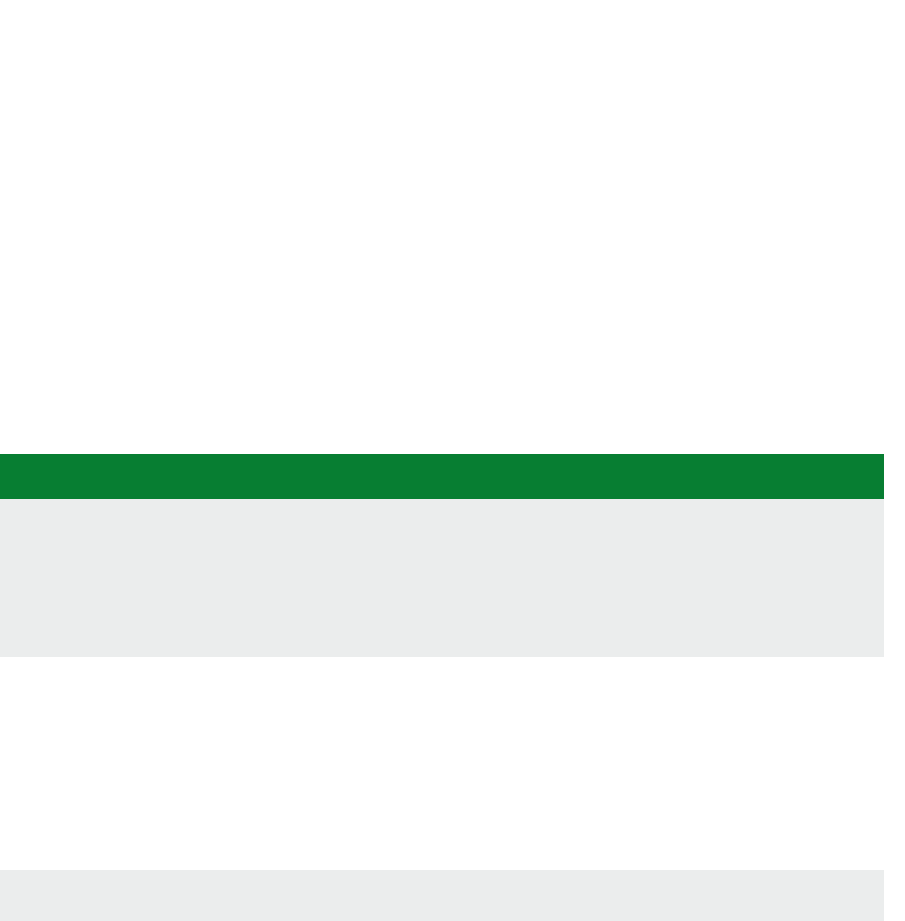

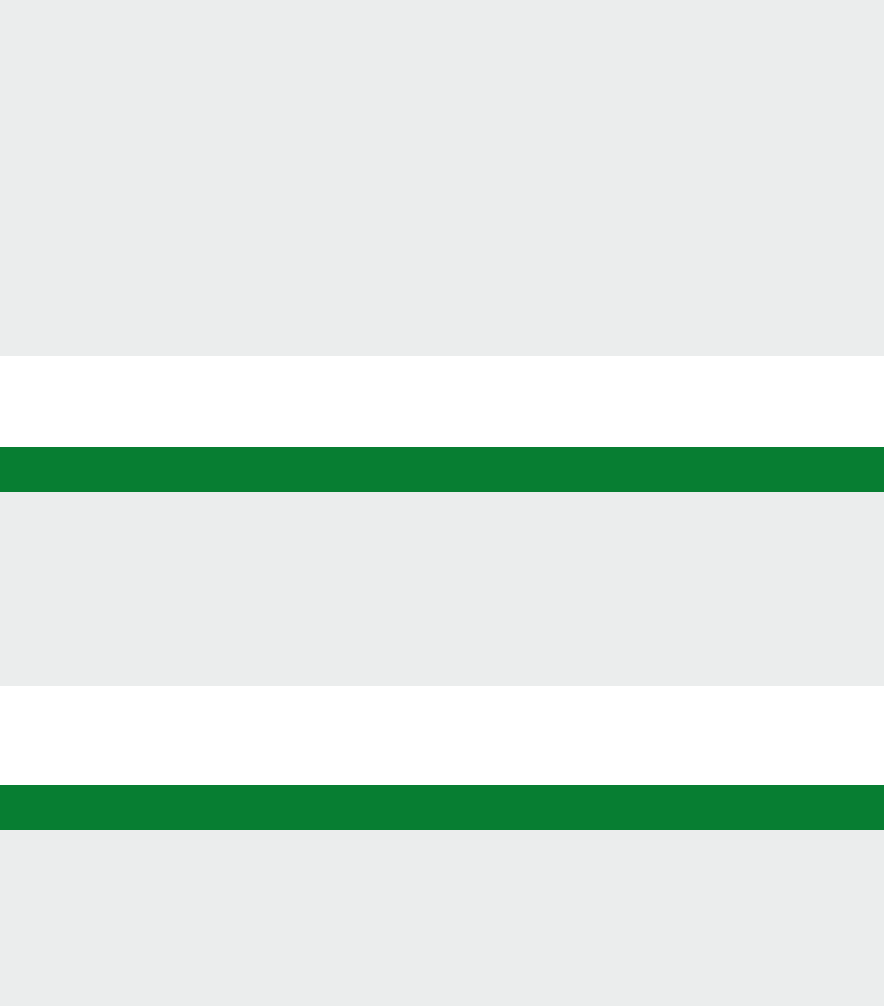

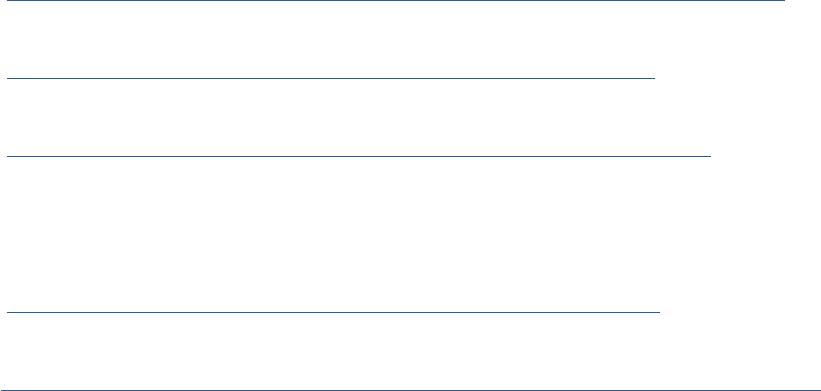

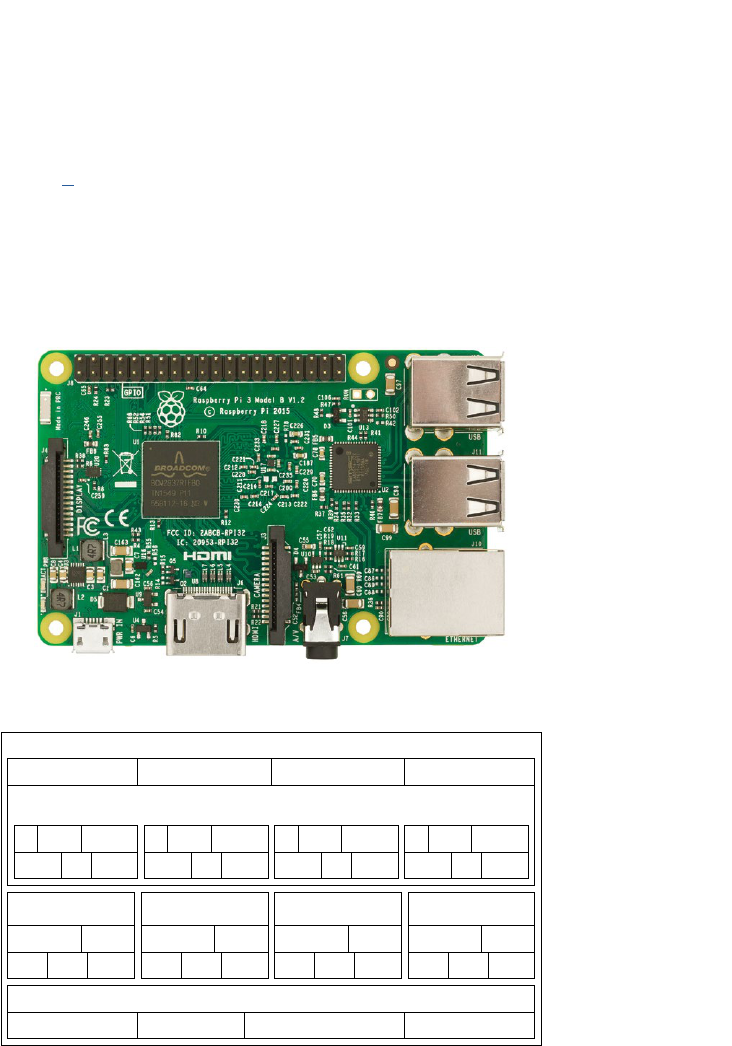

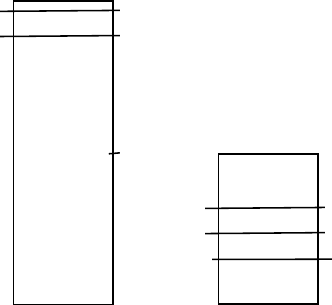

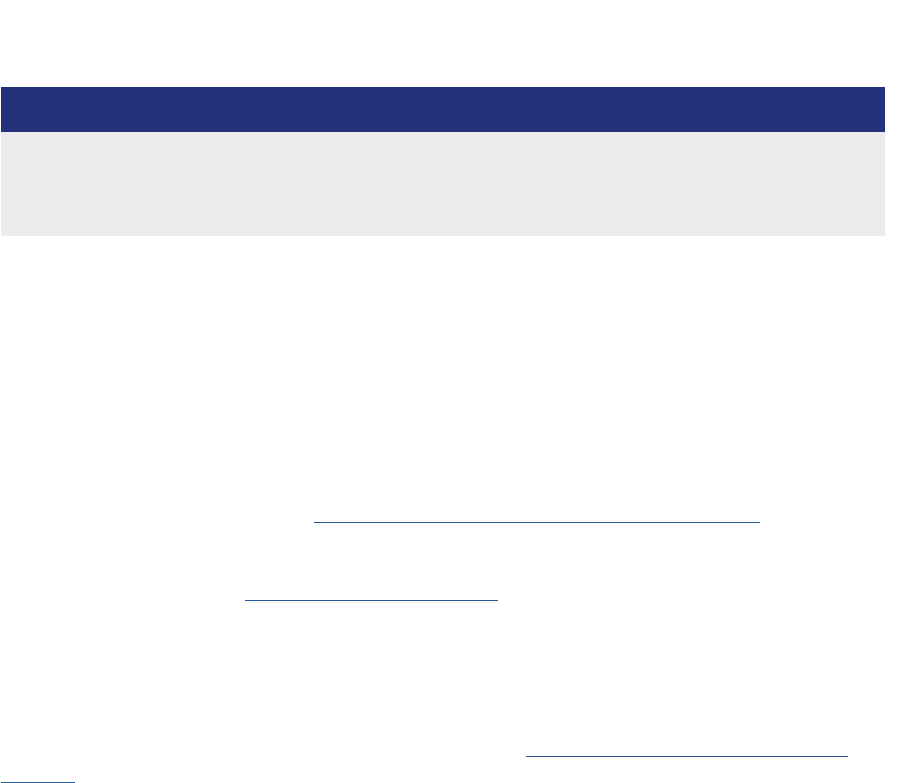

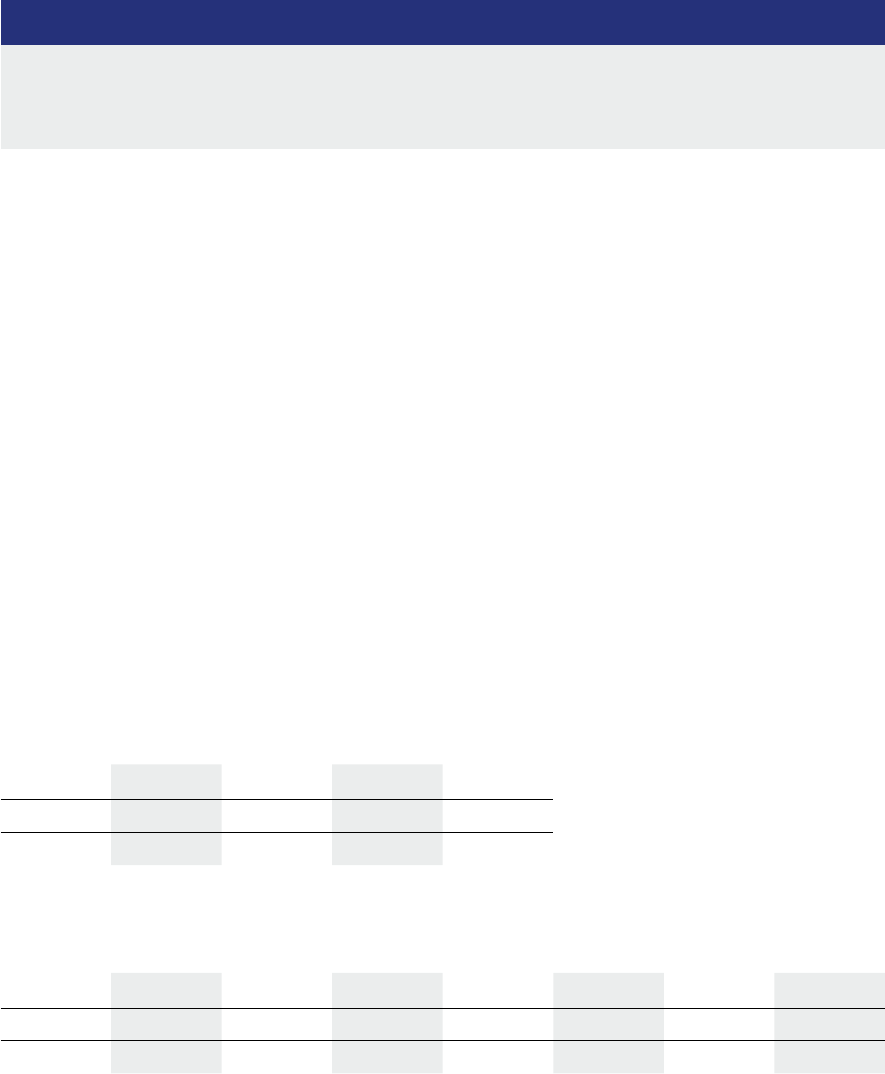

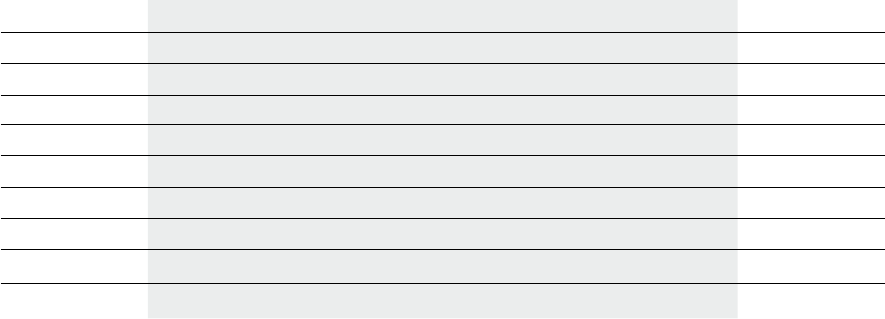

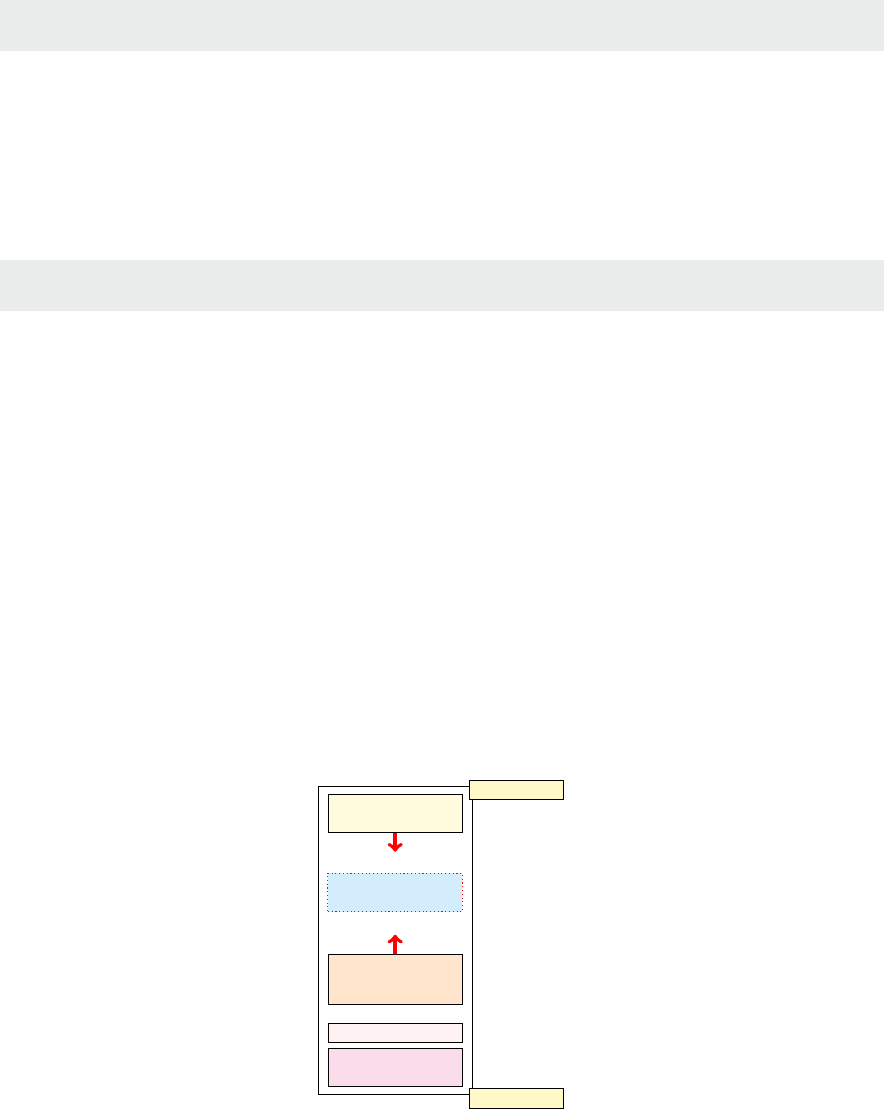

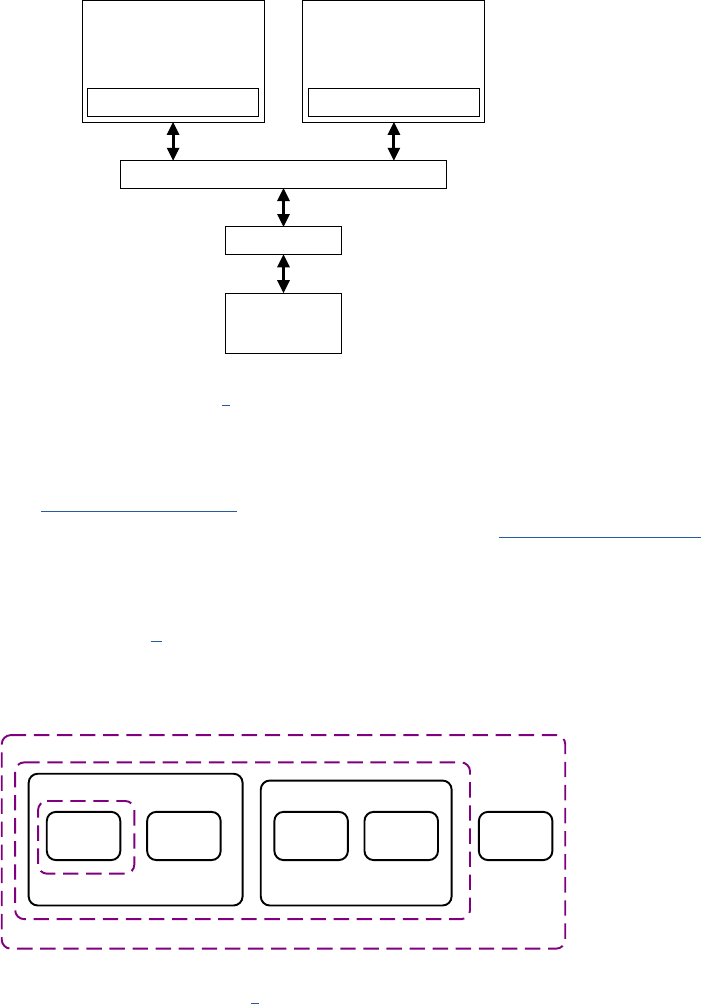

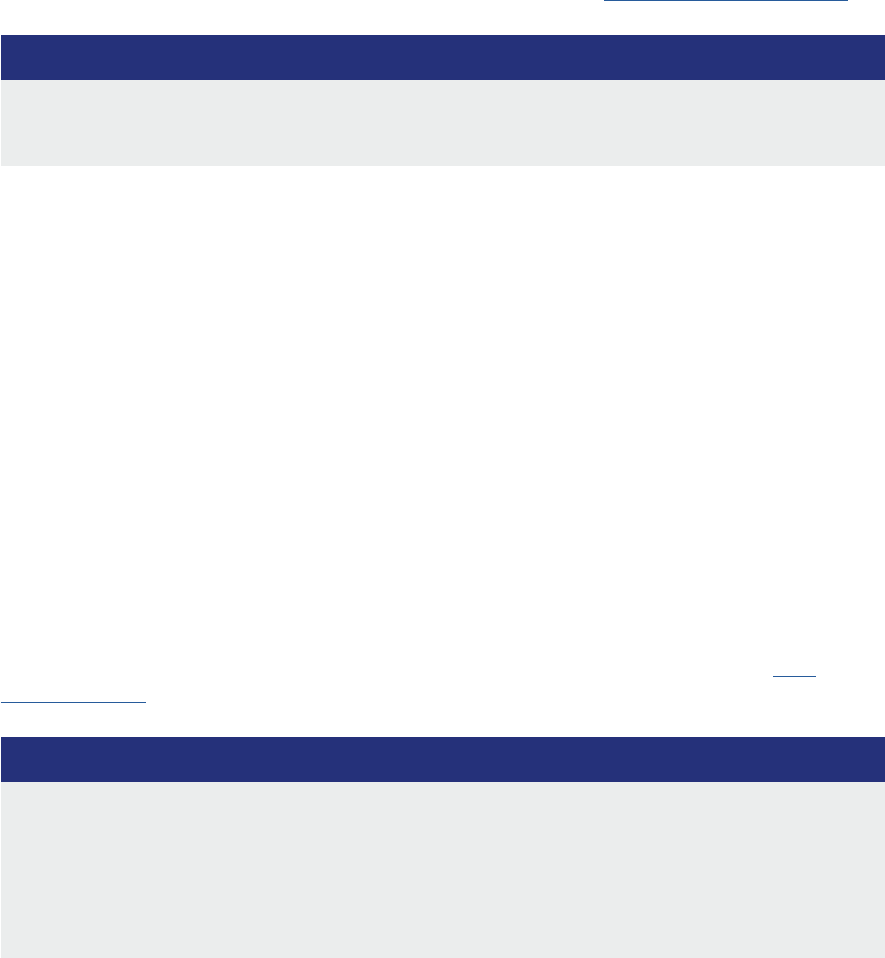

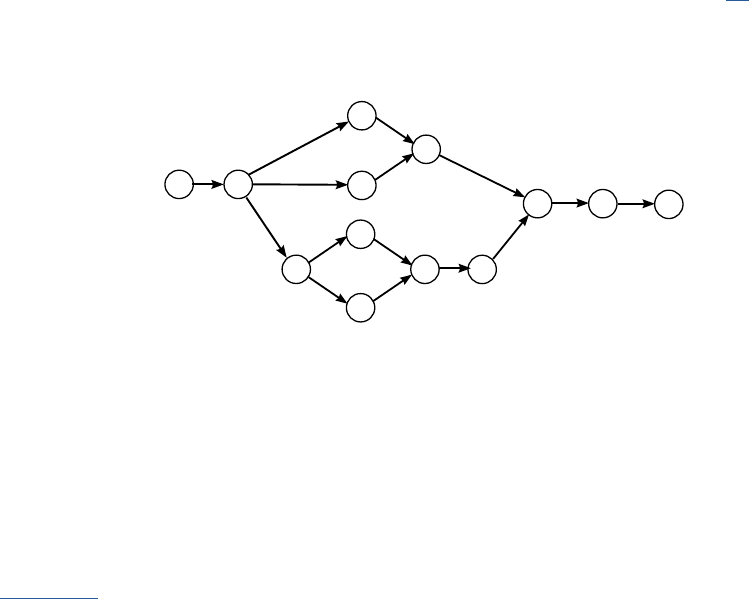

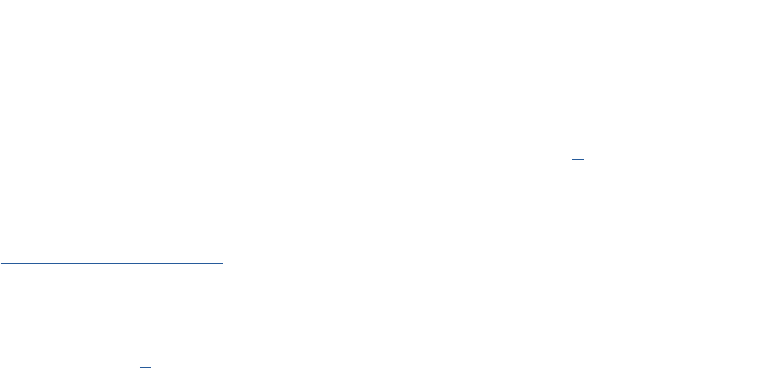

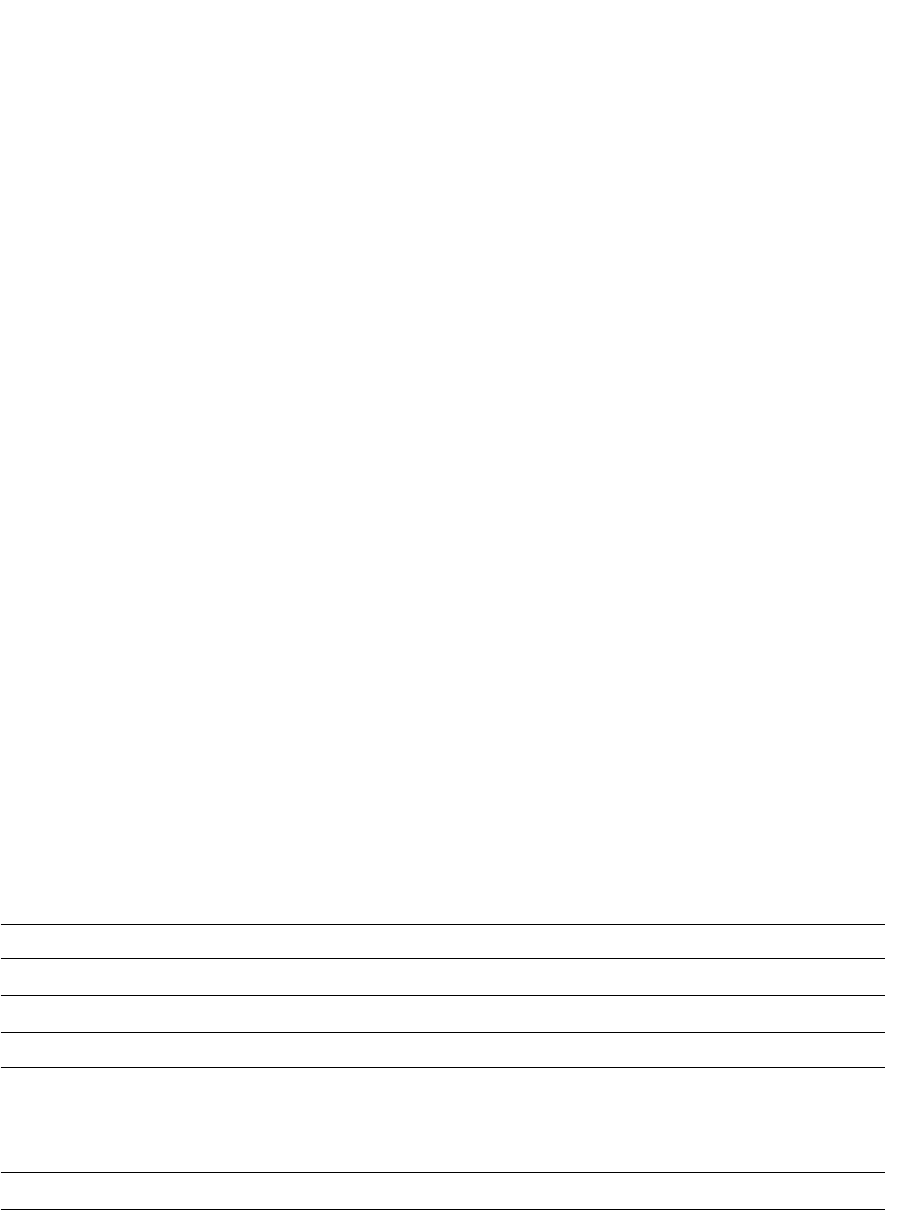

For convenience, registers oen have names (mnemonics). For example, Figure 1.2 shows the core

AArch32 register set of the Arm Cortex-A53 [2].

There are 16 ordinary registers (and ve special ones which we have omied). Registers R0-R12 are

the ‘General-purpose registers’. Then there are three registers with special names: the Stack Pointer

(SP), the Link Register (LR) and the Program Counter (PC).

Figure 1.2: Arm Cortex-A53 AArch32 register set.

3

Alternavely, we could make the registers part of the system state similar to the state of the peripherals. Our choice is purely for convenience because it makes it easier

to manipulate the registers in the Python code.

SP (R13)

LR (R14)

PC (R15)

R5

R6

R7

R0

R1

R3

R4

R2

R10

R11

R12

R8

R9

Low registers

High registers

General-purpose

registers

Stack Pointer

Link Register

Program Counter

7

1.3.3 Processor instrucons

A typical processor can perform a wide range of instrucons on memory addresses and/or register

values. We will use a simple list-based notaon for all instrucons. We will use the (uppercase) Arm

mnemonics for registers and instrucons; in Python, these are simply variables; their denions can

be found in the code repository in le abstract_system_constants.py.

We will assume that all instrucons take up to three registers as arguments, for example

add_instr = [ADD,R3,R1,R2]

Python

which means that the result of ADD operang on registers R1 and R2 is stored in register R3.

Apart from computaonal (arithmec and logic) instrucons we also introduce the instrucons LDR, e.g.

load_instr = [LDR,R1,R2]

Python

and STR, e.g.

store_instr=[STR,R1,R2]

Python

which respecvely load the content of a memory address stored in R2 into register R1 and store the

content of register R1 at the address locaon given in R2.

We also have MOV, which copies data between two registers, e.g.

set_instr = [MOV,R1,R2]

Python

will set the content of R1 to the content of R2.

We have a special non-Arm instrucon called SET, which takes a register and a value as arguments, e.g.

set_instr = [SET,R1,42]

Python

will set the content of R1 to 42.

We also need some instrucons to control the ow of the program, such as branches (B)

goto_instr = [B,R1]

Python

where R1 contains the address of the target instrucon in the program, and condional branches

(CBZ, ‘Compare and Branch if Zero’)

if_instr = [CBZ,R1,R2]

Python

where register R1 contains the condion variable (0 or 1) and the program branches to the address in R2 if

R1=0 and connues on the next line otherwise. We also have CBNZ, ‘Compare and Branch if Non-Zero’.

Chapter 1 | A Memory-centric system model

Operang Systems Foundaons with Linux on the Raspberry Pi

8

Finally, we have two instrucons which take no arguments: NOP does nothing, and WFI stops the

processor unl an interrupt occurs.

1.3.4 Assembly language

To write instrucons for actual processors, a similar, but more expressive, notaon called assembly

language is used. For example, consider the following program that reads two values from memory,

stores them in registers, adds them, and writes the result back:

Lisng 1.3.1: Example program Python

1 [

2 [LDR,R1,R4],

3 [LDR,R2,R5],

4 [ADD,R3,R1,R2],

5 [STR,R3,R6]

6 ]

In the assembly language for the Arm processor [3], this code would look as follows:

Lisng 1.3.2: Example Arm assembly program Python

1 ldr r1, r4

2 ldr r2, r5

3 add r3, r1, r2

4 str r3, r6

Assembly languages have many other features, such as a rich set of addressing mechanisms, labeling

opons, etc. However, for our current purpose, our simple funcon-based notaon is sucient. For

more details, see, e.g., [4].

1.3.5 Arithmec logic unit

The part of a processor that performs computaons is known as the arithmec logic unit (ALU).

We can create a simple ALU in Python as follows:

Lisng 1.3.3: ALU model Python

1 from operator import *

2

3 alu = [

4 add,

5 sub,

6 mul,

7 ...

8 ]

This is simply an array of funcons; more instrucons can be added trivially.

1.3.6 Instrucon cycle

A processor operates what is known as the instrucon cycle or fetch-decode-execute cycle. We can

dene each of these operaons as follows. First, we dene fetchInstrucon. This funcon fetches an

9

instrucon from memory. To determine which instrucon to fetch, it uses a dedicated register known

as the program counter, which has address PC in our register le. Then we also need to know where in

our memory space, we can nd the program code. We use CODE to denote the starng address of the

program in the system state. Aer reading the instrucon, we increment the program counter, so it

points to the next instrucon in the program.

Lisng 1.3.4: Instrucon fetch model Python

1 def fetchInstruction(registers,systemState):

2 # get the program counter

3 pctr = registers[PC]

4 # get the corresponding instruction

5 ir = systemState[CODE+pctr]

6 # increment the program counter

7 registers[PC]+=1

8 return ir

The instrucon is stored in the temporary instrucon register (ir in our code). The processor now has to

decode this instrucon, i.e., extract the register addresses and instrucon opcode from the instrucon

word. Remember that the state stores unsigned integers, so an instrucon is encoded as an unsigned

integer. The details of the implementaon can be found in the repository in le abstract_system_cpu_

decode.py. For this discussion, the important point is that the funcon returns a tuple opcode,args

where args is a tuple containing the decoded arguments (registers, addresses or constants). In the

code, if an element of a tuple is unused, we used _ as variable name to indicate this.

Lisng 1.3.5: Instrucon decode model Python

1 def decodeInstruction(ir):

2 ...

3 return (opcode,args)

Finally, the processor executes the decoded instrucon. In our model, we implement instrucon using

a funcon. The load instrucon (mnemonic LDR) is simply an array read operaon, store (mnemonic

STR) is simply an array write operaon. The B and CBZ branching instrucons only modify the program

counter. By using an array of funcons alu as discussed above, the ALU execuon is very simple too.

The complete code can be found in the repository in le abstract_- system_cpu_execute.py.

Lisng 1.3.6: Individual instrucon execute model Python

1 def doLDR(registers,systemState,args):

2 (r1,addr,_)=args

3 registers[r1] = systemState[addr]

4 return (registers,systemState)

5

6 def doSTR(registers,systemState,args)

7 (r1,addr,_)=args

8 systemState[addr]=registers[r1]

9 return (registers,systemState)

Chapter 1 | A Memory-centric system model

Operang Systems Foundaons with Linux on the Raspberry Pi

10

10

11 def doB(registers,args):

12 (_,addr,_)=args

13 registers[PC] = addr

14 return registers

15

16 def doCBZ(registers,args):

17 (r1,addr1,addr2)=args

18 if registers[r1]:

19 registers[PC] = addr1

20 else:

21 registers[PC] = addr2

22 return registers

23

24 def doALU(instr,registers,args):

25 (r1,r2,r3)=args

26 registers[r3] = alu[instr](registers[r1],registers[r2])

27 return registers

The executeInstrucon funcon simply calls the appropriate handler funcon via a condion on the

instrucon:

Lisng 1.3.7: Instrucon execute model Python

1 def executeInstruction(instr,args,registers,systemState):

2 if instr==LDR:

3 (registers,systemState)=doLDR(registers,systemState,args)

4 elif instr==STR:

5 (registers,systemState)=doSTR(registers,systemState,args)

6 elif ...

7 else:

8 registers = doALU(instr,registers,args)

9 return (registers,systemState)

1.3.7 Bare bones processor model

With these denions, we can build a very simple processor model:

Lisng 1.3.8: Simple processor model Python

1 def processorAction(systemState,registers):

2 # fetch the instruction

3 ir = fetchInstruction(registers,systemState)

4 # decode the instruction

5 (instr,args) = decodeInstruction(ir)

6 # execute the instruction

7 (registers,systemState)= executeInstruction(instr,args,registers,systemState)

8 return (systemState,registers)

11

In the source code, we have also provided an encodeInstrucon in le abstract_system_en- coder.py.

We can encode an instrucon using this funcon, assuming the mnemonics have been dened:

Lisng 1.3.9: Instrucon encoding Python

1 # multiply value in R1 with value in R2

2 # store result in R3

3 instr=[MUL,R3,R1,R2]

4

5 iw=encodeInstruction(instr)

Now you can run this as follows:

Lisng 1.3.10: Running the code Python

1 # Set the program counter relative to the location of the code

2 registers[PC]=0

3 # Set the registers

4 registers[R1]=6

5 registers[R2]=7

6

7 # Store the encoded instructions in memory

8 systemState[CODE] = iw

9

10 # Now run this

11 (systemState,registers) = processorAction(systemState,registers)

12

13 # Inspect the result

14 print( registers[R3] )

15 # prints 42

You can nd the complete Python code for this bare-bones model in the folder bare-bones-model,

have a look and try it out. The le to run is bare-bones-model/abstract_- system_model.py.

1.4 Advanced processor model

The bare-bones model is missing a number of features that are essenal to support an operang

system; in this secon, we introduce these features and add them to the model.

1.4.1 Stack support

A stack is a conguous block of memory that is accessed in LIFO (last in, rst out) fashion. Data is

added to the top of the stack using a ‘push’ operaon and taken from the top of stack using a ‘pop’

operaon. Stacks are used to store temporary data, and they are commonly used to handle funcon

calls. Most computer architectures include at least a register that is usually reserved for the stack

pointer (e.g., as we have seen the Arm processor has a dedicated ‘SP’ register) as well as ‘PUSH’ and

‘POP’ instrucons to access the stack. In our model, we will implement the stack as part of the RAM

memory, and we dene the push and pop instrucons as in the Arm instrucon set, for example:

Chapter 1 | A Memory-centric system model

Operang Systems Foundaons with Linux on the Raspberry Pi

12

Lisng 1.4.1: Example stack instrucons Python

1 push_pop=[

2 [PUSH,R1],

3 [POP,R2]

4 ]

would push the content of R1 onto the stack and then pop it into R2. The PUSH and POP instrucons

are encoded similar to the LDR and STR memory operaons. We extend the executeInstrucon

denion to support the stack with the following funcons:

Lisng 1.4.2: Push/pop implementaon Python

1 def doPush(registers,systemState,args):

2 sptr = registers[SP]

3 (r1,_,_)=args

4 systemState[sptr]=registers[r1]

5 registers[SP]+=1

6 return (registers,systemState)

7

8 def doPop(registers,systemState,args):

9 sptr = registers[SP]

10 (r1,_,_)=args

11 registers[r1] = systemState[sptr]

12 registers[SP]-=1

13 return (registers,systemState)

1.4.2 Subroune calls

One of the main reasons for having a stack is so that the processor can handle subroune calls, and

in parcular, subrounes that call other subrounes or call themselves (recursive call). This is because

whenever we call a subroune, the code in the subroune will overwrite the register le, so we need

to store the registers somewhere before we call a subroune.

To support this mechanism, most processors have instrucons to change the control ow: a rst

instrucon, the call instrucon changes the program counter to the locaon of the subroune to be called.

A second instrucon, the return instrucon, returns the locaon aer the subroune call instrucon.

These instrucons can use either the stack or a dedicated register to save the program counter.

In the Arm 32-bit instrucon set the call and return instrucons are usually implemented using BL and

BX; the Arm convenon is to store the return address in the link register LR, and we will use the same

convenon in our model. We extend the executeInstrucon denion to support subroune call and

return as follows:

Lisng 1.4.3: Call/return implementaon Python

1 def doCall(registers,args):

2 pctr = registers[PC]

3 (_,sraddr,_)=args

4 registers[LR] = pctr

5 registers[PC]=sraddr

13

6 return registers

7

8 def doReturn(registers,args):

9 lreg = registers[LR]

10 registers[PC]=lreg

11 return registers

1.4.3 Interrupt handling

Now let’s extend the processor model to support interrupts. When the processor receives an interrupt

request, it must take some specic acons. These acons are simply special small programs called

interrupt handlers or interrupt service rounes (ISR). The processor uses a region of the main memory

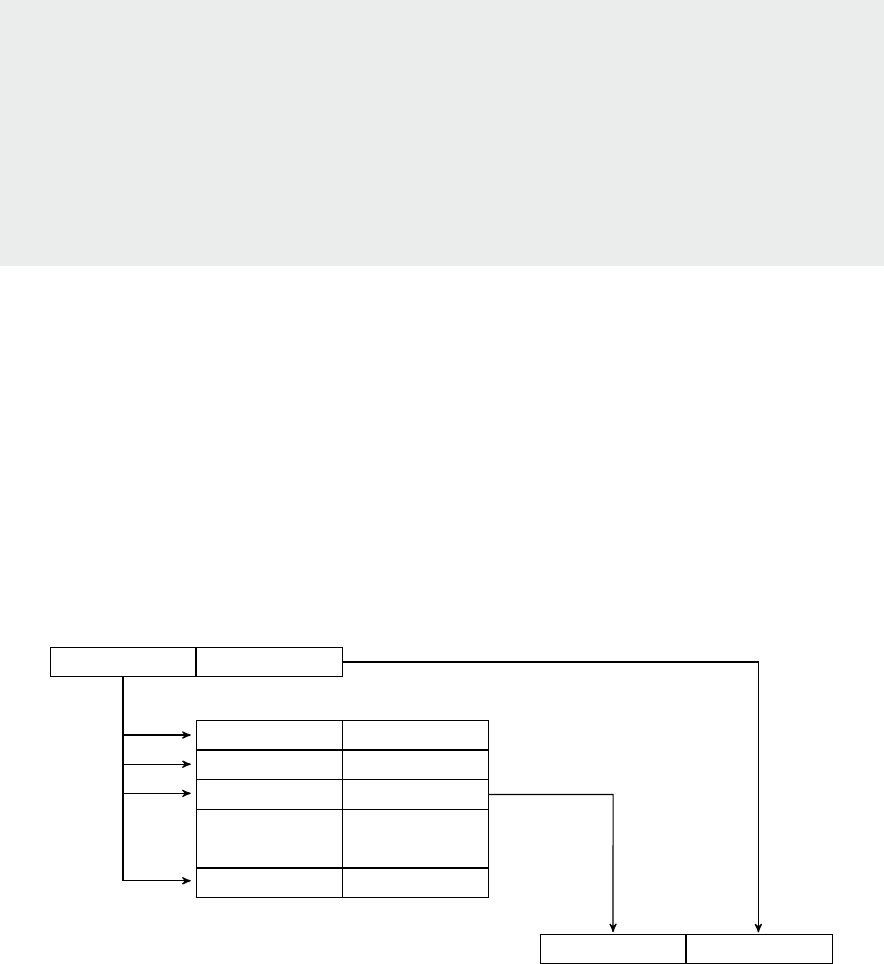

called the interrupt vector table (IVT) to link the interrupt requests to interrupt handlers.

How does the processor handle interrupts? On every clock ck (i.e., on every call to processorAcon

in our model), if an interrupt was raised, the processor has to run the corresponding ISR. In our model,

this means the processor needs to inspect irqs, get the corresponding ISR from the ivt (which in our

model is a slice of the systemState array), and execute it. So in fact, the call to the ISR is a normal

subroune call, but one that does not have a corresponding CALL instrucon in the code. Before

execung the ISR, the processor typically stores some register values on the stack, e.g., the Arm

Cortex-M3 stores R0-R3, R12, PC, and LR [5]. According to the Arm Architecture Procedure Call

Standard [6], the called subroune is responsible for storing R4-R11. In our simple model, we only

store the PC, extending it to support the AAPCS is a trivial exercise.

Lisng 1.4.4: Interrupt handling Python

1 def checkIrqs(registers,ivt,irqs):

2 idx=0

3 for irq in irqs:

4 if irq :

5 # Save the program counter in the link register

6 registers[LR] = registers[PC]

7 # Set program counter to ISR start address

8 registers[PC]=ivt[idx]

9 # Clear the interrupt request

10 irqs[idx]=False

11 break

12 idx+=1

13 return (registers,irqs)

1.4.4 Direct memory access

Another important component of a modern processor-based system is support for Direct Memory Access

(DMA). This is a mechanism that allows peripherals to transfer data directly into the main memory without

going through the processor registers. In Arm systems, the DMA controller unit is typically a peripheral

(e.g., the PrimeCell DMA Controller), so we will implement our DMA model as a peripheral as well.

The principle of a DMA transfer is that the CPU iniates the transfer by wring to the DMA unit’s

registers, then runs other instrucons while the transfer is in progress, and nally receives an interrupt

from the DMA controller when the transfer is done.

Chapter 1 | A Memory-centric system model

Operang Systems Foundaons with Linux on the Raspberry Pi

14

Typically, a DMA transfer is a transfer of a large block of data, which would otherwise keep the

processor occupied for a long me. In our simple model, the DMA controller has four registers:

Source Address Register (DSR)

Desnaon Address Register (DDR)

Counter (DCO)

Control Register (DCR)

This peripheral is dierent from the others in our model because it can manipulate the enre system

state. In a way, we can view a DMA controller as a special type of processor that only performs

memory transfer operaons. The model implementaon is:

Lisng 1.4.5: DMA model Python

1 def dmaAction(systemState):

2 dmaIrq=0

3 # DMA is the start of the address space

4 # DCR values: 1 = do transfer, 0 = idle

5 if systemState[DMA+DCR]!=0:

6 if systemState[DMA+DCO]!=0:

7 ctr = systemState[DMA+DCO]

8 to_addr = systemState[DMA+DDR]+ctr

9 from_addr = systemState[DMA+DSR]+ctr

10 systemState[to_addr] = systemState[from_addr]

11 systemState[DMA+DCO]=-1

12 systemState[DMA+DCR]=0

13 dmaIrq=1

14 return (systemState,dmaIrq)

To iniate a memory transfer using the DMA controller, the processor writes the source and desnaon

addresses to DSR and DDR, and the size of the transfer to DCO (the ‘counter’). Then the status is set to

1 in the DCR. The DMA controller then starts the transfer and decrements the counter for every word

transferred. When the counter reaches zero, an interrupt is raised (count-zero interrupt).

1.4.5 Complete cycle-based processor model

By including this interrupt support, the complete cycle-based processor model now becomes:

Lisng 1.4.6: Complete cycle-based processor model Python

1 def processorAction(systemState,irqs,registers):

2 ivt = systemState[IVT:IVTsz]

3 # Check for interrupts

4 (registers,irqs)=checkIrqs(registers,ivt,irqs)

5 # Fetch the instruction

6 ir = fetchInstruction(registers,systemState)

7 # Decode the instruction

8 (instr,args) = decodeInstruction(ir)

9 # Execute the instruction

10 (registers,systemState)= executeInstruction(instr,args,registers,systemState)

11 return (systemState,irqs,registers)

15

1.4.6 Caching

In an actual system, accessing DRAM memory requires many clock cycles. To limit the me spent in

waing for memory access, processors have a cache, a small but fast memory. For every memory read

operaon, rst the processor checks if the data is present in the cache, and if so (this is called a ‘cache

hit’) it uses that data rather than accessing the DRAM. Otherwise (‘cache miss’) it will fetch the data

from memory and store it in the cache.

For a single-core processor, memory write operaons are treated in the same way. Real-life caches are very

complicated and will be discussed in more detail in Chapters 3 ‘Hardware architecture’ and 6 ‘Memory

management’. Here we will create a simple conceptual model of a cache to illustrate the key points.

First of all, as a cache is limited in size, how do we store porons of the DRAM content in it? Like the

other memories, we will model the storage part of the cache as an array of xed size. So if we want to

store some data in the cache, we nd a free locaon and copy the data into it. At some point, the data

will be removed from the cache, freeing up this locaon. So we need a data structure, e.g., a stack to

keep track of the free locaons.

So what happens when the cache is full (so the stack is empty)? We need to free up space by evicng data

from the cache. As we will see in Chapter 6 ‘Memory management’, there are several dierent policies

to do this. The simplest one (but certainly not the best one) is to evict data from the most recently used

locaon because all it requires is that we keep track of that single locaon. When we evict data from the

cache, it needs to be wrien back to the DRAM memory. Conversely, the data that we put into the cache

was read from an address locaon in the DRAM memory. Therefore the cache must not only keep track

of the data but also of its original address. In other words, we need a lookup between the address in the

DRAM and the corresponding address in the cache. In Python, we can use a diconary for this, a data

structure that associates keys with values. A cache which behaves like a diconary – in that it allows us to

store any memory address at any cache locaon – is called ‘fully associave’.

In Python, we can write such a cache model as follows:

Lisng 1.4.7: Cache model: inializaon and helper funcons Python

1 # Initialise the cache

2 def init_cache():

3 # Cache of size CACHE_SZ

4 cache_storage=[]

5 location_stack_storage=range(0,CACHE_SZ)

6 location_stack_ptr=CACHE_SZ-1

7 last_used_loc = location_stack[location_stack_ptr]

8 location_stack = (location_stack_storage,location_stack_ptr,last_used_loc)

9 address_to_cache_loc={}

10 cache_loc_to_address={}

11 cache_lookup=(address_to_cache_loc,cache_loc_to_address)

12 cache = (cache_storage, address_to_cache_loc,cache_loc_to_address,location_stac

13 return cache

14

15 # Some helper functions

16 def get_next_free_location(location_stack):

17 (location_stack_storage,location_stack_ptr,last_used_loc) = location_stack

18 loc = location_stack_storage[location_stack_ptr]

19 location_stack_ptr-=1

Chapter 1 | A Memory-centric system model

Operang Systems Foundaons with Linux on the Raspberry Pi

16

20 location_stack = (location_stack_storage,location_stack_ptr,last_used_loc)

21 return (location,location_stack)

22

23 def evict_location(location_stack):

24 (location_stack_storage,location_stack_ptr,last_used_loc) = location_stack

25 location_stack_ptr+=1

26 location_stack[location_stack_ptr] = last_used

27 location_stack = (location_stack_storage,location_stack_ptr,last_used_loc)

28 return location_stack

29

30 def cache_is_full(location_stack_ptr):

31 if location_stack_ptr==0

32 return True

33 else

34 return False

Lisng 1.4.8: Cache model: cache read and write funcons Python

1 def write_data_to_cache(memory, address, cache):

2 (cache_storage, address_to_cache_loc,cache_loc_to_address, location_stack) = cache

3 (location_stack_storage,location_stack_ptr,last_used_loc) = location_stack

4 # If the cache was full, evict rst

5 if cache_is_full(location_stack_ptr):

6 location_stack = evict_location(location_stack)

7 evicted_address = cache_loc_to_address[last_used]

8 memory[evicted_address]=cache_storage[last_used]

9 # Get a free location.

10 (loc,location_stack) = get_next_free_location(location_stack)

11 # Get the DRAM content and write it to the cache storage

12 data = memory[address]

13 cache_storage[loc] = data

14 # Update the lookup table and the last used location

15 address_to_cache_loc[address]=loc

16 cache_loc_to_address[loc] = address

17 last_used=loc

18 location_stack = (location_stack_storage,location_stack_ptr,last_used_loc)

19 cache = (cache_storage,address_to_cache_loc,cache_loc_to_address,location_stack)

20 return (memory,cache)

21

22 def read_data_from_cache(memory,address,cache):

23 (cache_storage, address_to_cache_loc,cache_loc_to_address,location_stack) = cache

24 location_stack = evict_location(location_stack)

25 # If the data is not yet in the cache, fetch it from the DRAM

26 # Note this may result in eviction, which could modify the memory

27 if address not in address_to_cache_loc:

28 (memory, cache) = write_data_to_cache(memory,address,cache):

29 # Get the data from the cache

30 loc = address_to_cache_loc[address]

31 data = cache_storage[loc]

32 cache = (cache_storage, address_to_cache_loc,cache_loc_to_address, location_stack)

33 return (data,memory,cache)

The problem with the above model is that for a cache of a given size, we need a locaon stack and two

lookup tables of the same size. This requires a lot of silicon. Therefore, in pracce, the cache will not

simply fetch the content of a single memory address, but a conguous block of memory called a cache

line. For example, the Arm Cortex-A53 has a 64-byte cache line. Assuming that our memory stores 32-

bit words, then the size of the locaon stack and lookup tables is 16x smaller than the actual cache size.

17

There is another reason for the use of cache lines: when a given address is accessed, subsequent

memory accesses are frequently to neighboring addresses. So fetching an enre cache line on a cache

miss tends to reduce the number of subsequent cache misses. Adapng our model to use cache lines

is straighorward:

Lisng 1.4.9: Cache model with cache lines Python

1 # Initialise the cache

2 def init_cache():

3 # Cache of size CACHE_SZ, cache line = 64 bytes = 16 words

4 cache_storage=[[0]*16]*(CACHE_SZ/16)

5 location_stack_storage=range(0,CACHE_SZ/16)

6 location_stack_ptr=(CACHE_SZ/16)-1

7 last_used_loc = location_stack[location_stack_ptr]

8 location_stack = (location_stack_storage,location_stack_ptr,last_used_loc)

9 address_to_cache_loc={}

10 cache_loc_to_address={}

11 cache_lookup=(address_to_cache_loc,cache_loc_to_address)

12 cache = (cache_storage,address_to_cache_loc,cache_loc_to_address,location_stack)

13 return cache

14

15 # The helper functions remain the same

16

17 def write_data_to_cache(memory,address,cache):

18 (cache_storage, address_to_cache_loc,cache_loc_to_address,location_stack) = cache

19 (location_stack_storage,location_stack_ptr,last_used_loc) = location_stack

20 # If the cache was full, evict rst

21 if cache_is_full(location_stack_ptr):

22 location_stack = evict_location(location_stack)

23 evicted_address = cache_loc_to_address[last_used]

24 cache_line = cache_storage[last_used]

25 for i in range(0,16):

26 data = cache_line[i]

27 memory[(evicted_address<<4) + i]=data

28 # Get a free location.

29 (loc,location_stack) = get_next_free_location(location_stack)

30 # Get the DRAM content and write it to the cache storage

31 cache_line = []

32 for i in range(0,16):

33 cache_line.append(memory[((address>>4)<<4)+i]

34 cache_storage[loc] = cache_line

35 # Update the lookup table and the last used location

36 address_to_cache_loc[address>>4]=loc

37 cache_loc_to_address[loc] = address>>4

38 last_used=loc

39 location_stack = (location_stack_storage,location_stack_ptr,last_used_loc)

40 cache = (cache_storage,address_to_cache_loc,cache_loc_to_address,location_stack)

41 return (memory,cache)

42

43 def read_data_from_cache(memory,address,cache):

44 (cache_storage,address_to_cache_loc,cache_loc_to_address,location_stack) = cache

45 location_stack = evict_location(location_stack)

46 # If the data is not yet in the cache, fetch it from the DRAM

47 # Note this may result in eviction, which could modify the memory

48 if address not in address_to_cache_loc:

49 (memory,cache) = write_data_to_cache(memory,address,cache):

50 # Get the data from the cache

51 loc = address_to_cache_loc[address>>4]

52 cache_line = cache_storage[loc]

53 data = cache_line[addres & 0xF]

54 cache = (cache_storage,address_to_cache_loc,cache_loc_to_address,location_stack)

55 return (data,memory,cache)

Chapter 1 | A Memory-centric system model

Operang Systems Foundaons with Linux on the Raspberry Pi

18

The only complicaon in the cache line-based model is that we need to manipulate the memory

address to determine the start of the cache line and the locaon of the data inside the cache line. Do

this using bit shi and bit mask operaons: the rst 4bits of the address idenfy the posion of the

data in the cache line. We don’t need to store these bits in the lookup tables of the cache because the

cache stores only whole cache lines. In other words, from the perspecve of the cache, the memory

consists of cache lines rather than individual locaons. So we have the following formulas:

data_position_in_cache line = address & 0xF

cache_line_address = address >> 4

address = (cache_line_address << 4) + data_position_in_cache line

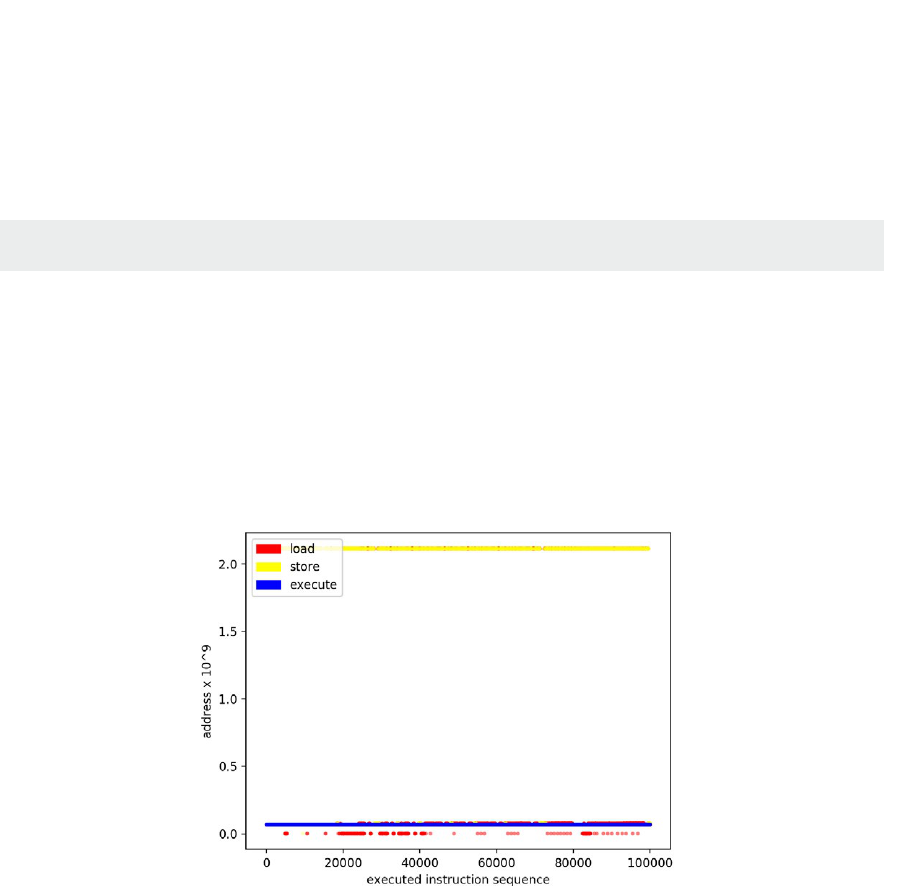

1.4.7 Running a program on the processor

The processor model is complete and can run arbitrary programs. For example, the following program

generates the rst 10 Fibonacci numbers greater than 1 and writes them to main memory:

Lisng 1.4.10: Fibonacci code Python

1 b_prog=[

2 [SET,R1,1],

3 [SET,R2,1],

4 [SET,R3,0],

5 [SET,R4,10],

6 [SET,R5,1],

7 (‘loop’,[ADD,R3,R1,R2]),

8 [MOV,R1,R2],

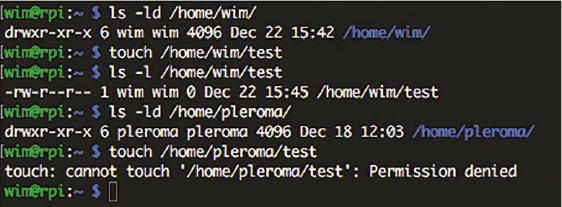

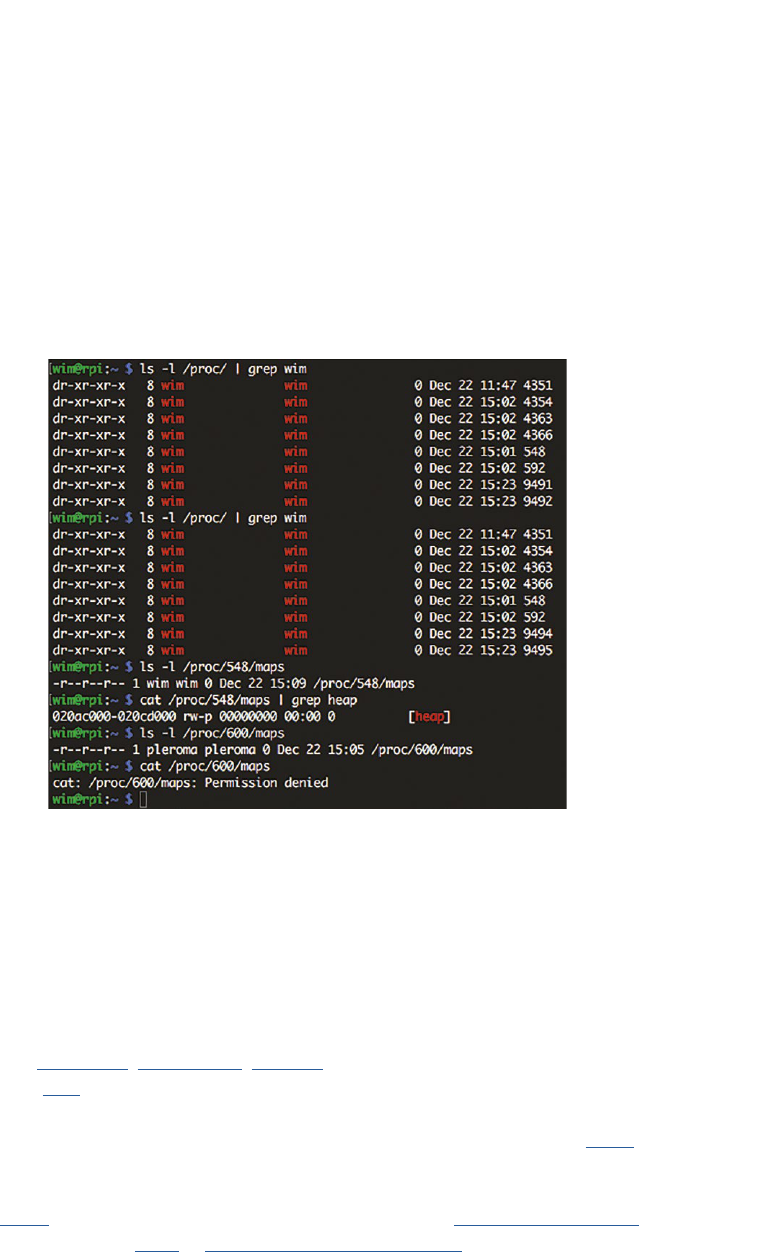

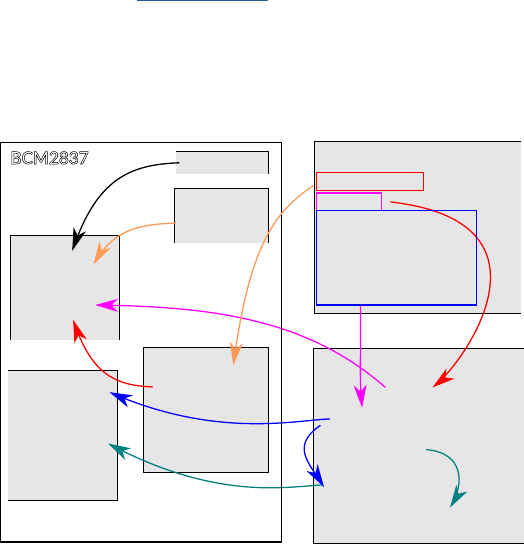

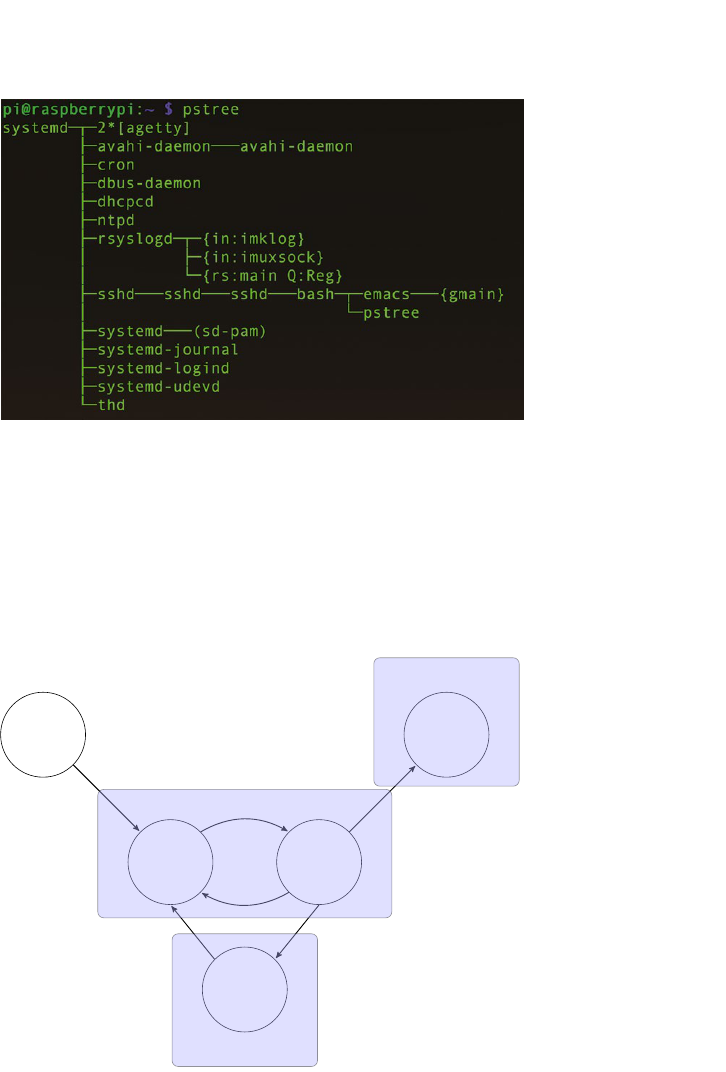

9 [MOV,R2,R3],