Using Logic Models in Evaluation

Briefing

July 2016

The Strategy Unit

Contents

Introduction

What is a logic model?

Why use logic models?

What you should bear in mind

How to develop your logic model

Learning more about theory based evaluation

Alternative approaches to evaluation

Some key points for reflection

References

Introduction

This briefing has been prepared for NHS England by the Strategy Unit as part of a programme of training to support national and locally based evaluation of the Vanguard programme and sites. This paper accompanies a series of national workshops for Vanguard sites and is designed for sites to use with stakeholders, providing a brief summary and signposting further information.

The briefing is based on a literature review conducted during June 2015, which included a search of key bibliographic databases, including Medline and HMIC, and key online sources.

What is a logic model?

Evaluation is an active component of change management, ideally achieving a balance of meaningful practical application and methodological rigour. For the Vanguards, dealing with high levels of complexity and uncertainty, theory-based evaluation offers a robust approach to measuring impact. The logic model is a key tool to support this approach.

Essentially, a logic model helps with evaluation by setting out the relationships and assumptions between what a programme will do and what changes it expects to deliver (Hayes et al., 2011). A logic model can be particularly valuable in drawing out gaps between the ingredients of a programme, the underlying assumptions and the anticipated outcomes (Helitzer et al., 2010).

Logic models have been used for at least 30 years and are recommended in official evaluation guidance (HM Treasury, 2011) as a method to support robust evaluation. Logic models are typically used in theory-based evaluation, which is designed to explicitly articulate the underlying theory of change which shapes a transformation programme. Put very simply, this is your theory of how you will achieve the desired outcomes and impacts through a series of activities. Theory-based evaluation recognises the importance of articulating and analysing the logic at the very heart of the programme. In complex programmes, such as the Vanguards, logic models used as part of a wider theory-based approach can help to clarify vision and aims; support real improvement; and build a solid evidence base.

Logic models are usually developed alongside narrative descriptions of a programme as well as frameworks for measuring outcomes and impact. The process of developing a logic model helps to define the various elements of your programme, which creates the foundation for measurement and evaluation. There are a number of examples of logic models in the literature, some of which are referenced in this report. Increasingly, logic models are being used in secondary research to develop theories for change programmes and to support systematic reviews (Baxter et al., 2014; Allmark et al., 2013; Hawe, 2015).

“The main problem I see in most BCF [Better Care Fund] areas is that the logic models are often under-developed and or flawed, usually because system leaders have not done enough in the first instance of really thinking through the actual changes in service delivery and how these can actually change the way the system operates. Too often the initial focus is on funding and organisational issues.”

Dr. Nick Goodwin, International Foundation for Integrated Care, The King's Fund (Better Care Fund, 2015)

“A logic model is a graphic display or ‘map’ of the relationship between a programme’s resources, activities, and intended results, which also identifies the programme’s underlying theory and assumptions.”

(Kaplan and Garrett, 2005)

Why use logic models?

As McLaughlin and Jordan (1999) note, creating a logic model enables you to set out the programme’s “story”, detailing:

What are you trying to achieve and why is it important?

How will you measure effectiveness?

How are you actually doing?

The process of creating a logic model is valuable because it requires programmes to articulate their vision and aims fully and clearly, thus introducing a more structured approach to evaluation and setting out a clear hypothesis to be tested. The logic model can also help to build ownership, consensus (Kaplan and Garrett, 2005; Hulton, 2007) and a shared understanding (Helitzer et al., 2010; Hulton, 2007). A collaborative approach acknowledges the range of different perspectives and facilitates communication with stakeholders, by setting out clearly the vision and expected outcomes in a credible way and stating what it means for participants and stakeholders (Kaplan and Garrett, 2005).

“The logic modelling process makes explicit what is often implicit.”

(Jordan, 2010)

Logic models can help support communication in setting out a clear argument for the programme (Gugui and Rodriguez-Campos, 2007). Most of the literature (McLaughlin and Jordan, 1999; Hayes et al., 2011; Gugui and Rodriguez-Campos, 2007) recommends that logic models are developed collaboratively with key stakeholders, as the process of developing the model creates shared understanding and expectations of the vision, activities, roles and responsibilities (Jordan, 2010; HM Treasury, 2011). This is particularly helpful in a complex environment where programmes are working towards long term outcomes with high levels of uncertainty (Reynolds and Sutherland, 2013). In the Vanguard setting, this can help local health economies to define what integration means within their local context. The collaborative process can help to reveal and test the underlying assumptions behind the programme, essentially acting as a type of “health check” of the clarity and consistency of ideas (Helitzer et al., 2010; McLaughlin and Jordan, 1999) and how clearly these are expressed to stakeholders. Having a clear visual model of the programme can also aid communication with local communities (McLaughlin and Jordan, 1999), but there may be a need to test it before sharing more widely (Baxter et al., 2014). Page et al. (2009) note:

“The use of the logic model became a second language within the organization, allowing for effective communication among programme staff and the evaluator.”

From an evaluation perspective, using a logic model involves evaluators early on in the lifetime of the programme (Helitzer et al., 2010). This means evaluation can be built around the vision and aims of the programme and can be designed to facilitate formative (continuous) as well as summative (at the end of the programme) evaluation. The use of the logic model approach across a larger programme offers a standardised approach to evaluation whilst allowing flexibility for different approaches to suit different local contexts (Helitzer et al., 2010). This can help to create a valuable evidence base, by establishing what works for which groups in what contexts (Reynolds and Sutherland, 2013) and informing the transferability of key learning. The logic model can also help to identify what features of your programme contributed to “intended and unintended outcomes” (McLaughlin and Jordan, 1999).

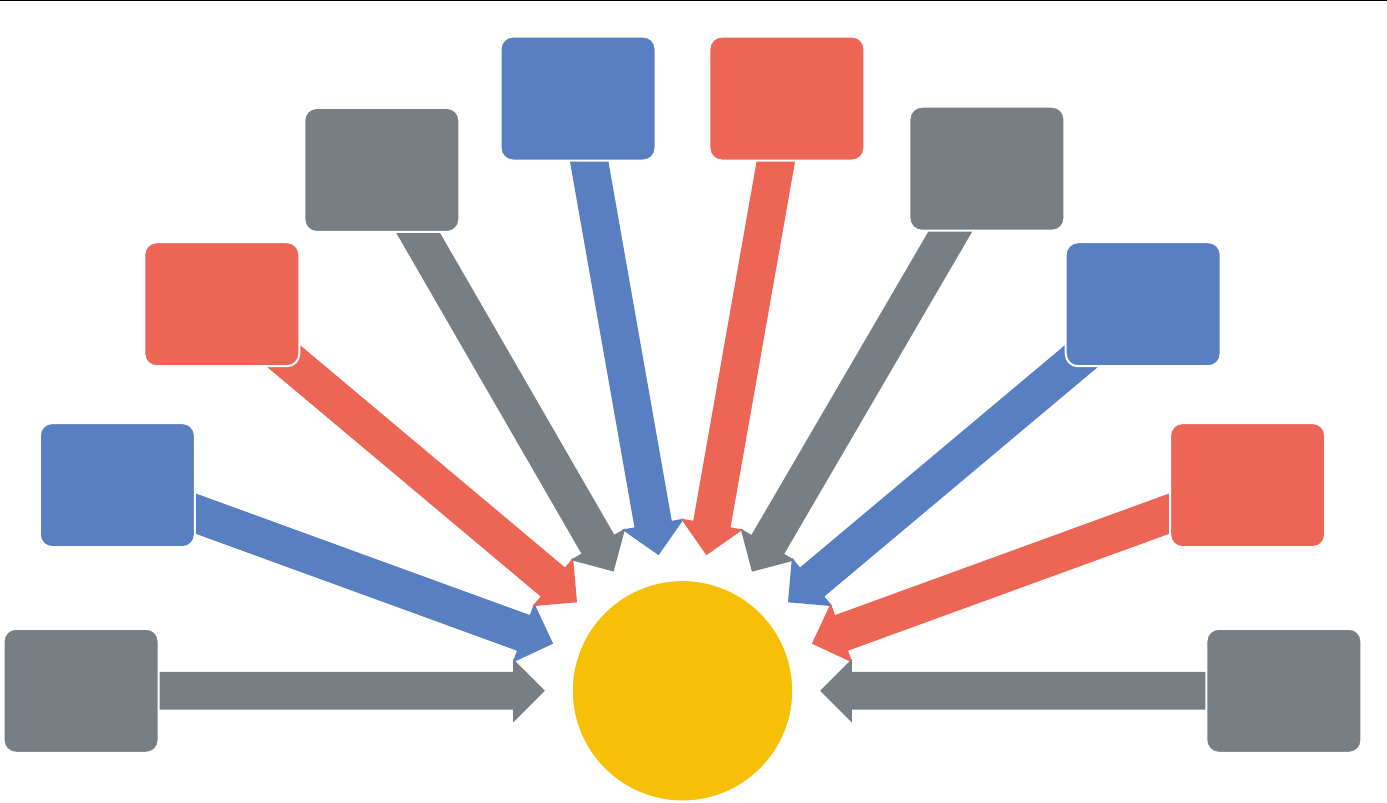

Figure 1 : Benefits of logic models

Why

use logic

models?

Tell the

programme's

"story"

Enables a shared

understanding and

supports

communication

Can act as a

"health check" to

identify gaps and

inconsistencies

Helps to identify

key metrics and

data required

Provides a

structured

framework

Enables a

standardised but

flexible approach

to evaluation

Focuses teams on

the most

important

outcomes and

activities

Supports formative

evaluation helping

you to see what is

and isn't working

Allows capture of

key lessons which

can be transferred

to create an

evidence base

Helps to identify

what features of

the programme

contributed to

outcomes

7

For the programme team, the logic model can help to focus attention on the activities which will deliver the most critical outcomes (Hayes et al., 2011). The process of developing the model can also help teams to identify key metrics (Gugui and Rodriguez-Campos, 2007) and therefore what data needs to be collected to monitor progress (Hayes et al., 2011; McLaughlin and Jordan, 1999; HM Treasury, 2011). This makes it possible to see what is working well and what isn’t, and to explore possible reasons and actions (Hayes et al., 2011).

Without a logic model, there’s a risk of missing the key mechanisms and outcomes of a programme in the evaluation, thus limiting the value of the evaluation (Gugui and Rodriguez-Campos, 2007). Programme teams will need to state explicitly how activities and resources will lead to desired outcomes (Helitzer et al., 2010), which helps to reduce potential misunderstandings whilst ensuring that activities are focused on the outcomes to be achieved. The process can also help to bring together stakeholders who will inevitably have different perspectives and possibly conflicting agendas or imposed targets (Helitzer et al., 2010). A collaborative approach will add the insight from multiple perspectives, which can help to identify barriers at micro, meso and macro levels, and help to clarify what is within or outside the programme’s control (Jordan, 2010).

“The approach is grounded by first

defining the health impacts

integration is intended to affect. The

mutual goal of designing,

implementing, and scaling up

interventions to improve a particular

health outcome or impact is the

“glue” that holds together disparate

interests, services, and sectors.”

(Reynolds and Sutherland, 2013)

What you should bear in mind

Creating robust logic models can present some challenges. Creating an effective logic model takes commitment in time, resources and training (Kaplan and Garrett, 2005). Involving experts in the process can help create a more robust model but will require investment of resource. Programme teams sometimes worry about “burdening” people with more work (Kaplan and Garrett, 2005).

A logic model can only ever be a simplification of the complexity with which you’re working and can’t always capture the nature of that complexity, but it does offer a representation of what you’re aiming to achieve that everyone involved in the programme can understand (Hulton, 2007; Craig, 2013). It’s often hard to attribute outcomes to specific interventions, but a logic model approach can help you to measure outcomes, assess whether interventions were implemented as planned and explore possible explanations for the outcomes (Craig, 2013). This helps you to build your understanding of how your programme has contributed to intended and unintended outcomes.

The Aspen Institute (Auspos and Cabaj, 2014) suggests that logic models can encourage a focus only on those outcomes which have been articulated, which risks missing other unintended consequences (positive or negative), so it’s important for programmes to work with stakeholders on real-time feedback mechanisms to avoid blind spots:

“The process begins with asking better questions. Instead of asking, ‘Did we achieve what we set out to achieve?’ they can ask, ‘What have been the many effects of our activities? Which of these did we seek and which are unanticipated? What is working (and not), for whom, and why? What does this mean for our strategy?’ Simply framing outcomes in this broader way will encourage people to cast a wider net to capture the effects of their efforts (Patton, 2011).”

It’s important to remember that logic models should be dynamic: a logic model is essentially a snapshot of your programme (Gugui and Rodriguez-Campos, 2007) and needs to be maintained on an ongoing basis. This means setting up a process to monitor outcomes, including unintended outcomes. The Magenta Book (HM Treasury, 2011) provides some guidance on the unintended consequences of change programmes, classifying these as: displacement (such as moving demand to another part of the system), substitution (such as a particular group’s needs being prioritised over another’s), leakage (such as benefits being seen outside the target population) and deadweight (the outcomes would have happened regardless of the programme).

How to develop your logic model

Your logic model should incorporate the underlying assumptions, or programme theory, which articulates how what you’re doing (interventions and mechanisms) will resolve the problems your programme aims to resolve (Gugui and Rodriguez-Campos, 2007). A logic model is often expressed in a tabular format.

McLaughlin and Jordan (1999) outline the typical stages in developing a logic model:

1. Collection of information needed to develop the model. This will involve working with multiple sources, including the published literature, programme documents and stakeholders.

2. Description of the problem(s) the programme aims to address and the context in which you’re working, including the factors which contribute to the problem.

3. Definition of the individual elements of the logic model. At this stage, it may be helpful to ask constructively challenging “how” and “why” questions to articulate what you are doing and why (Kaplan and Garrett, 2005).

4. Construction of the model.

5. Verification of the model, working closely with stakeholders, which sets the tone for continuous review.

The literature features a number of different approaches to the development of logic models (Gugui and Rodriguez-Campos, 2007):

W K Kellogg Foundation (https://www.wkkf.org/resource-directory/resource/2006/02/wk-kellogg-foundation-logic-model-development-guide): This approach, which is recommended in the Magenta Book (HM Treasury, 2011), suggests three different types of models, which each serve a different purpose:

The theory approach model is used to articulate the underlying programme theory, in other words the “how and why” the programme will work (Gugui and Rodriguez-Campos, 2007). It poses 4 questions:

What issues or problems does the programme seek to address?

What are the specific needs of the target audience?

What are the short- and long-term goals of the programme?

What barriers or supports may impact the success of the programme?

The activities approach model is designed to support implementation by setting out the

programme activities in detail, to a timescale. This model also incorporates monitoring

processes and approaches to identifying and managing barriers.

The outcomes approach model is focused on demonstrating impact of the programme and

is recommended for short and longer term follow up.

The United Way (University of Wisconsin) approach (http://www.uwex.edu/ces/pdande/evaluation/evallogicmodel.html) is perhaps the most familiar. This model specifies four elements: the inputs are the resources used by the programme; the activities are the processes undertaken to implement change; the outputs are the direct products from the activities; and the outcomes are the benefits derived from the programme (usually expressed as short, medium and long term), which may be direct or indirect. Outcomes indicate a change from pre to post programme, typically associated with behaviours, skills, attitudes and knowledge (Gugui and Rodriguez-Campos, 2007). A fifth element, contextual factors, is often added to articulate the constraints within the programme’s environment (such as legal requirements, workforce, location).

Gugui and Rodriguez-Campos (2007) developed a semi-structured interview protocol (SSIP) approach to assist less experienced evaluation teams to collect perspectives from programme teams and stakeholders, to inform the logic model. The protocol is divided into 7 sections:

1. Gathering information from key stakeholders: this includes basic programme information (including purpose, financial status and capacity) and contextual information (e.g. social, political, legal);

2. Generating elements of the logic model, which is likely to be an iterative process;

3. Organising these elements into outcomes, activities, outputs (which should all cover individual, organisational, system, community etc. levels) and inputs (which should cover resources and resource gaps);

4. Identifying and removing those elements which are unclear, unrealistic or meaningless;

5. Identifying a plausible theory of change, which essentially links together the outcomes, activities, outputs and inputs. A process theory approach might consider how a programme has implemented activities (e.g. has the target population been identified? Do staff have the required skills and knowledge?) and an outcomes theory approach would look at whether the theory of change, which sets out why activities will lead to desired outcomes, is feasible (have the underlying health needs been identified? Which activities are most critical for achieving outcomes?);

6. Prioritising your intended outcomes, to identify the most critical outcomes and therefore where to focus evaluation;

7. Presenting the graphical or tabular logic model to organise the information collected in steps 1 through 6.
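The four elements and the optional contextual factors described above can be held in a simple data structure and printed as a table. The following is a minimal sketch only, not a method taken from the literature cited here: the class name `LogicModel`, the `render` function and all of the example entries are hypothetical and chosen purely for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from itertools import zip_longest


@dataclass
class LogicModel:
    """Hypothetical container: four elements plus contextual factors."""
    inputs: list[str] = field(default_factory=list)
    activities: list[str] = field(default_factory=list)
    outputs: list[str] = field(default_factory=list)
    outcomes: list[str] = field(default_factory=list)
    context: list[str] = field(default_factory=list)

    def as_rows(self) -> list[tuple[str, ...]]:
        """Return table rows, padding shorter columns with empty strings."""
        columns = (self.inputs, self.activities, self.outputs, self.outcomes)
        return list(zip_longest(*columns, fillvalue=""))


def render(model: LogicModel) -> str:
    """Format the model as a plain-text table with one column per element."""
    header = ("Inputs", "Activities", "Outputs", "Outcomes")
    rows = [header] + model.as_rows()
    widths = [max(len(row[i]) for row in rows) for i in range(len(header))]
    lines = [
        " | ".join(cell.ljust(width) for cell, width in zip(row, widths))
        for row in rows
    ]
    if model.context:
        lines.append("Context: " + "; ".join(model.context))
    return "\n".join(lines)


# Invented example entries, for illustration only.
example = LogicModel(
    inputs=["Funding", "Staff time"],
    activities=["Multidisciplinary team meetings"],
    outputs=["Meetings held"],
    outcomes=["Fewer emergency admissions"],
    context=["Workforce constraints"],
)
print(render(example))
```

Keeping the model in a structured form like this makes it easier to maintain as the programme changes, but a spreadsheet or a shared document would serve the same purpose.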

According to Kaplan and Garrett (2005), logic models work best where they are adapted to meet the needs of the programme and local community, rather than treated as a rigid exercise. Development of your logic model will be an iterative and dynamic process (Hulton, 2007) and will benefit from a co-production approach (Kaplan and Garrett, 2005; Jordan, 2010), which helps to keep the model “grounded in local context” (Helitzer et al., 2010). Helitzer et al. advise that key stakeholders should share the latest versions of their models within their constituencies to discuss, revise and keep communication lines open, and then feed in any learning or feedback. Kaplan and Garrett suggest that a co-production approach leads to more complete models, which have been subjected to greater scrutiny and broader input. This can prove challenging as stakeholders are likely to vary in their levels of commitment to the process; Kaplan and Garrett suggest that working with small interactive groups is particularly effective.

McLaughlin and Jordan (1999) recommend asking a series of questions to ensure the model is

as comprehensive and robust as it can be:

“Is the level of detail sufficient to create understandings of the elements and their

interrelationships?

Is the programme logic complete? That is, are all the key elements accounted for?

Is the programme logic theoretically sound? Do all the elements fit together logically?

Are there other plausible pathways to achieving the programme outcomes?

Have all the relevant external contextual factors been identified and their potential

influences described?”
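Part of the completeness question above can be checked mechanically once the links in the model are written down. The sketch below is an illustration under stated assumptions, not a procedure from McLaughlin and Jordan: it assumes the model's causal links are recorded as (source, target) pairs, and the function name `unlinked_elements` and the element names are hypothetical. It only flags structural gaps; it cannot judge whether the logic is theoretically sound.

```python
def unlinked_elements(activities, outcomes, links):
    """Flag activities that lead nowhere and outcomes nothing leads to.

    `links` is a collection of (source, target) pairs, for example
    ("Team meetings", "Care plans agreed").
    """
    sources = {source for source, _ in links}
    targets = {target for _, target in links}
    return {
        "activities_without_effect": sorted(
            a for a in activities if a not in sources
        ),
        "outcomes_without_cause": sorted(
            o for o in outcomes if o not in targets
        ),
    }


# Invented example: one activity and one outcome have no link recorded.
gaps = unlinked_elements(
    activities=["Team meetings", "Staff training"],
    outcomes=["Fewer emergency admissions", "Improved staff confidence"],
    links={
        ("Team meetings", "Care plans agreed"),
        ("Care plans agreed", "Fewer emergency admissions"),
    },
)
print(gaps)
```

Any element flagged here is a prompt for discussion with stakeholders rather than proof of an error, since the link may simply not have been written down yet.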

It is important to articulate and test the validity of underlying assumptions; this helps to identify

gaps and inconsistencies in your model (Kaplan and Garrett, 2005), which in turn, builds a stronger

case for change and helps to translate nebulous ideas into clear aims and activities. Kaplan and

Garrett share an example where a number of programmes were able to identify gaps in their

workforce planning as a result of collaborative development of logic models. There can often be an

assumption that behaviours will change simply as a result of an intervention, such as a new

technology; it is important that assumptions such as these are acknowledged and challenged before

implementation. Assumptions can be tested through a review of the evidence base (Helitzer et

al., 2010; McLaughlin and Jordan, 1999) and engagement with experts and stakeholders. Kaplan and

Garrett advise this testing happens as early on as possible as there may be a need to consider

reallocating resources; they also advise that funders be open to changes to overall design.

There are numerous guides available online, including:

Evaluation Support Scotland tutorial

http://www.evaluationsupportscotland.org.uk/resources/278/

Evaluation Support Scotland guide http://www.evaluationsupportscotland.org.uk/resources/127/

The Tavistock Institute’s guide developed for the Department for Transport:

https://www.gov.uk/government/uploads/system/uploads/attachment_data/file/3817/logicmapping.pdf

Chapter 6 of the Magenta Book focuses on the development of logic models (Kellogg

approach): https://www.gov.uk/government/publications/the-magenta-book

Your logic model will enable you to ask questions during and after the programme, such as

(McLaughlin and Jordan, 1999):

“Is (was) each element proposed in the Logic Model in place, at the level expected for the time

period?

Are outputs and outcomes observed at expected performance levels?

Are activities implemented as designed?

Are all resources, including partners, available and used at projected levels?

Did the causal relationships proposed in the Logic Model occur as planned?

Is reasonable progress being made along the logical path to outcomes?

Were there unintended benefits or costs?

Are there any plausible rival hypotheses that could explain the outcome/result?

Did the programme reach the expected customers and are the customers reached satisfied with

the programme services and products?”
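The question about whether outputs and outcomes are observed at expected levels lends itself to a simple routine check during formative evaluation. The following is a hedged sketch, not a method from McLaughlin and Jordan: the `Indicator` class, the 10% tolerance and the example figures are all assumptions made for illustration, and it assumes expected values are positive.

```python
from dataclasses import dataclass


@dataclass
class Indicator:
    """Hypothetical record of one metric from the logic model."""
    name: str
    expected: float               # level expected for the current period
    observed: float               # level actually measured
    higher_is_better: bool = True


def on_track(indicator: Indicator, tolerance: float = 0.1) -> bool:
    """True if the observed level is within `tolerance` of the expected level, or better."""
    if indicator.higher_is_better:
        return indicator.observed >= indicator.expected * (1 - tolerance)
    return indicator.observed <= indicator.expected * (1 + tolerance)


def needs_review(indicators, tolerance: float = 0.1):
    """Return the names of indicators that are not on track."""
    return [i.name for i in indicators if not on_track(i, tolerance)]


# Invented figures, for illustration only.
indicators = [
    Indicator("Care plans completed", expected=100, observed=95),
    Indicator("Emergency admissions", expected=200, observed=230,
              higher_is_better=False),
]
print(needs_review(indicators))
```

An indicator flagged for review is a starting point for exploring possible reasons with the programme team, including plausible rival explanations, rather than a verdict on the programme.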

Learning more about theory based evaluation

This report has provided a brief introduction to logic models and summarised the key benefits of using logic models to plan and evaluate your programme. Logic models are typically used within theory-based evaluation. HM Treasury (2011) note that complex programmes typically need a theory-based evaluation framework to triangulate the evidence you’re collecting and to refine the assumptions made in your logic model. The purpose of a theory-based framework is to enable you to systematically test and review the relationships between your programme activities and intended outcomes.

The Aspen Institute has developed a reputation in this area. Their Theory of Change evaluation (Connell et al., 1995) “involves the specification of an explicit theory of ‘how’ and ‘why’ a policy might cause an effect which is used to guide the evaluation. It does this by investigating the causal relationships between context-input-output-outcomes-impact in order to understand the combination of factors that has led to the intended or unintended outcomes and impacts.”

Alternative approaches to evaluation

There will be occasions when logic models are not the most appropriate method to support evaluation; for example, the linear approach of the classic logic model may not work with the complexity of your programme.

Transformational change is increasingly being viewed through a complex systems lens (Best et al., 2012). Greenhalgh et al. (2012) suggest a systems approach is beneficial for large-scale transformation programmes, emphasising the need to incorporate analysis of the wider context in addition to the “hard components”:

“Specifically, policymakers and programme architects who embark on complex change efforts will, at any point in the unfolding of the programme (and perhaps also after the funding period has ended), be implementing and/or seeking to sustain a particular set of activities oriented to producing a particular set of outcomes. To that end, they should undertake (or commission) an intervention-focused evaluation based on a set of hypothesis-driven questions and (largely) predefined metrics. However, the programme will inevitably encounter unforeseen factors and events, which, at least in the eyes of some stakeholders, will necessitate changes to the protocol “on the fly.” These changes, and their ramifications, demand rich processual explanations, for which a system-dynamic evaluation is needed. […] But we believe it should be possible to set up a change programme and linked evaluation in a way that anticipates and accommodates the flexible use and juxtaposition of both intervention-focused and system-dynamic evaluation components.”

There is some literature (Hawe, 2015) suggesting options to build complexity into your model, such as including feedback loops in the model or expressing models in a cyclical rather than linear format. However, there are other methodologies which may prove more appropriate, including realist evaluation and soft systems methodology.

These alternative approaches have developed, in part, because of the difficulties in demonstrating the impact of large-scale change programmes (Blamey and MacKenzie, 2007). Both approaches recognise the influence of context (e.g. political, social, economic), arguing that impact cannot be measured without this acknowledgement. Context matters not just to be able to explain outcomes but to understand what may be transferable to other settings.

“Systems theory is a specific way to

conceptualize the world around us. In

its broadest sense, a system consists

of elements linked together in a

certain way, i.e. inter-relationships

that connect parts to form a whole.

And it has a boundary, which

determines what is inside of a system

and what is outside (context or

environment).”

(Hummelbrunner, 2011)

Realist Evaluation typically asks: ‘what works, for whom, under what circumstances?’ (Pawson and

Tilley, 1997). Realist evaluation explores the relationships between the mechanisms (what you do)

of your programme within different settings (context) and the impacts which result. This method is

becoming increasingly popular due to the acknowledgement of the influence of context

(Greenhalgh et al., 2009). Pawson (2013) has recently published a book which builds on the

earlier work and gives an in-depth account from an evaluator’s perspective.

Soft systems methodology, developed by Professor Peter Checkland at the University of

Lancaster (Checkland, 2000), is an attempt to address the complexity and volatility of problematic

situations, acknowledging that different stakeholders will perceive problems and proposed

solutions differently (worldviews). The methodology involves 7 steps:

1. Situation is considered problematic

2. Problem situation expressed (usually by a “rich picture”)

3. Root definitions of relevant systems (a description of goals)

4. Conceptual models based on the root definitions

5. Comparison of models and the real world which leads to suggestions for improvements

6. Changes which are systemically desirable and culturally feasible

7. Action to improve the problem situation

If you’re interested in learning more about soft systems methodology, there are a number of

textbooks available (e.g. Checkland, P. and Poulter, J., 2006).

Some key points for reflection

Who needs to be involved in the development of your logic model?

How will you engage people to get (and stay) involved?

What information do you need (and have) to get started?

How will you capture and share learning as your programme progresses?

How will you scan for unintended consequences which might be outside your immediate line of vision?

How will you articulate and test the underlying assumptions of the programme?

What resources do you need, and have, to manage evaluation?

How will you communicate your model to and capture feedback from local communities?

How will you maintain your model?

What expertise can you draw on to help with different stages of the logic model process?

References

Allmark, P. et al. (2013) Assessing the health benefits of advice services: using research evidence and logic model methods to explore complex pathways. Health and Social Care in the Community, 21, 59-68.

Auspos, P. and Cabaj, M. (2014) Complexity and community change: managing adaptively to improve effectiveness. Washington: The Aspen Institute.

Baxter, S. K. et al. (2014) Using logic model methods in systematic review synthesis: describing complex pathways in referral management interventions. BMC Medical Research Methodology, 14, 62.

Best, A. et al. (2012) Large-system transformation in health care: a realist review. Milbank Quarterly, 90, 421-456.

Better Care Fund (2015) How to understand and measure impact. Issue 4. Local Government Association. [Online] Available at: http://www.local.gov.uk/documents/10180/5572443/How+to+understand+and+measure+impact/dbedc114-bd19-40f5-a040-db3177736b23 [Accessed 10 July 2015]

Blamey, A. and MacKenzie, M. (2007) Theories of change and realistic evaluation: peas in a pod or apples and oranges? Evaluation, 13 (4), 439-55.

Checkland, P. (2000) Soft systems methodology: a thirty-year retrospective. Systems Research and Behavioural Science, 17, S11-58.

Checkland, P. and Poulter, J. (2006) Learning for action: a short definitive account of soft systems methodology, and its use for practitioners, teachers and students. Chichester: Wiley.

Connell, J. P. et al. (eds.) (1995) New approaches to evaluating community initiatives: concepts, methods and contexts. Washington: The Aspen Institute.

Greenhalgh, T. et al. (2012) "If we build it, will it stay?" A case study of the sustainability of whole-system change in London. Milbank Quarterly, 90 (3), 516-47.

Greenhalgh, T. et al. (2009) How do you modernise a health service? A realist evaluation of whole-scale transformation in London. Milbank Quarterly, 87 (2), 391-416.

Gugui, P. C. and Rodriguez-Campos, L. (2007) Semi-structured interview protocol for constructing logic models. Evaluation and Programme Planning, 30, 339-350.

Hawe, P. (2015) Lessons from complex interventions to improve health. Annual Review of Public Health, 36, 307-23.

Hayes, H. et al. (2011) A logic model framework for evaluation and planning in a primary care practice-based research network (PBRN). Journal of the American Board of Family Medicine, 24, 576-82.

Helitzer, D. et al. (2010) Evaluation for community-based programmes: the integration of logic models and factor analysis. Evaluation and Programme Planning, 33, 223-33.

HM Treasury (2011) The Magenta Book: guidance for evaluation. London: HM Treasury.

Hulton, L. J. (2007) An evaluation of a school-based teenage pregnancy prevention programme using a logic model framework. Journal of School Nursing, 23 (2), 104-10.

Hummelbrunner, R. (2011) Systems thinking and evaluation. Evaluation, 17 (4), 395-403.

Jordan, G. B. (2010) A theory-based logic model for innovation policy and evaluation. Research Evaluation, 19, 263-273.

Kaplan, S. A. and Garrett, K. E. (2005) The use of logic models by community-based initiatives. Evaluation and Programme Planning, 28, 167-172.

McLaughlin, J. A. and Jordan, G. B. (1999) Logic models: a tool for telling your programme’s performance story. Evaluation and Programme Planning, 22.

Page, M., Parker, S. H. and Renger, R. (2009) How using a logic model refined our programme to ensure success. Health Promotion Practice, 10 (1), 76-82.

Pawson, R. (2013) The science of evaluation: a realist manifesto. London: Sage.

Pawson, R. and Tilley, N. (1997) Realistic evaluation. London: Sage.

Renger, R. and Titcomb, A. (2002) A three-step approach to teaching logic models. American Journal of Evaluation, 23, 493-503.

Reynolds, H. W. and Sutherland, E. G. (2013) A systematic approach to the planning, implementation, monitoring, and evaluation of integrated health services. BMC Health Services Research, 13, 168.

Rogers, P. J. and Weiss, C. H. (2007) Theory-based evaluation: reflections ten years on: Theory-based evaluation: past, present and future. New Directions for Evaluation, 114, 63-81.

The Strategy Unit

Tel: +44(0)121 612 1538

Email: strategy.unit@nhs.net

Twitter: @strategy_unit