EVALUATION PLANNING

Using Your Logic Model to Plan for Evaluation

Thinking through program evaluation questions in terms of the logic model components you have developed can provide the framework for your evaluation plan. Having a framework increases your evaluation’s effectiveness by focusing in on questions that have real value for your stakeholders.

• Prioritization of where investment in evaluation activities will contribute the most useful information for program stakeholders.

• Description of your approach to evaluation.

There are two exercises in this chapter; exercise 4 deals with posing evaluation questions and exercise 5 examines the selection of indicators of progress that link back to the basic logic model or the theory-of-change model, depending on the focus of the evaluation and its intended primary audiences.

Exercise 4 --- Posing Evaluation Questions

The Importance of "Prove" and "Improve" Questions

There are two different types of evaluation questions: formative questions help you to improve your program, and summative questions help you prove whether your program worked the way you planned. Both kinds of evaluation questions generate information that determines the extent to which your program has had the success you expected and provide a groundwork for sharing with others the successes and lessons learned from your program.

Chapter 4

Produced by The W. K. Kellogg Foundation

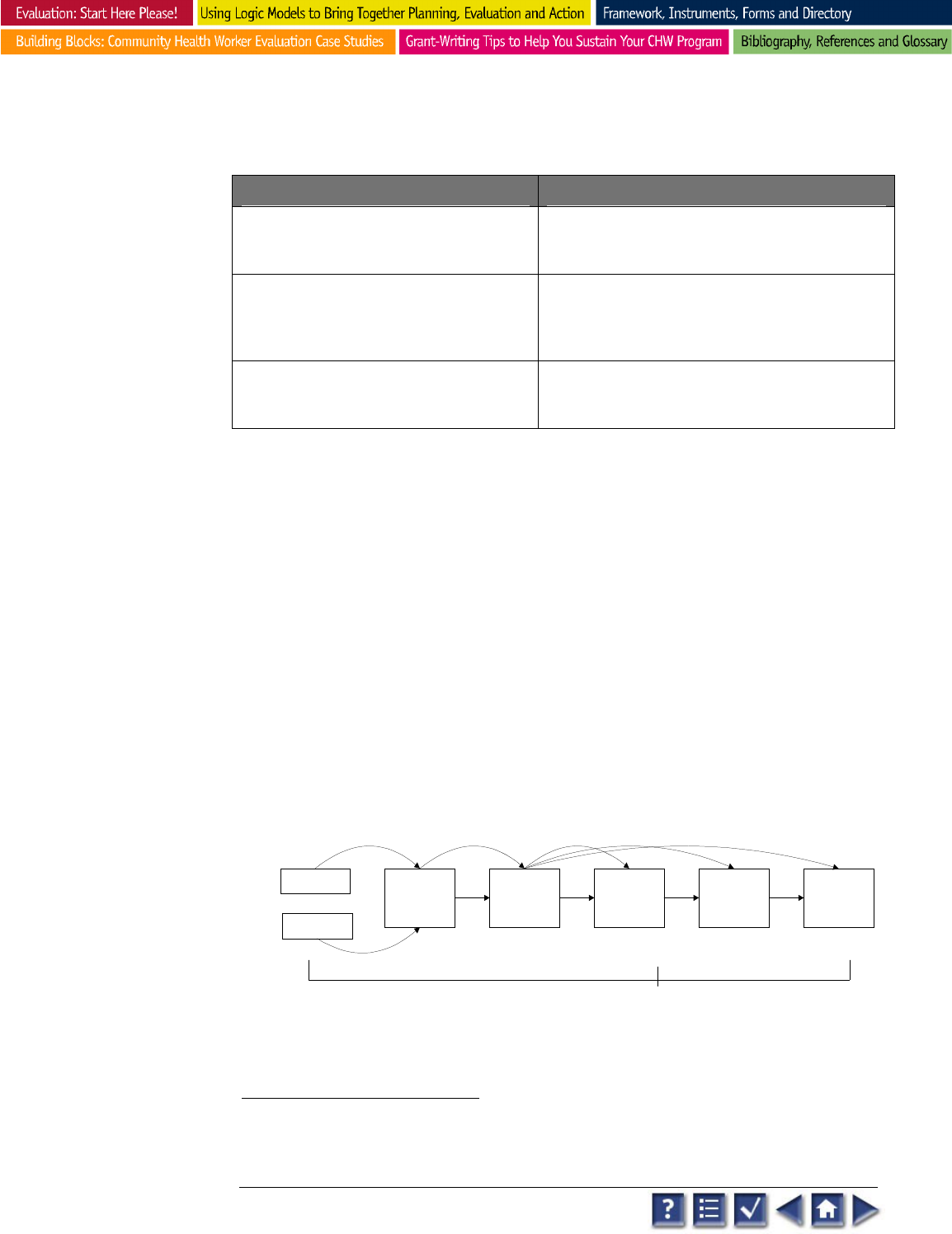

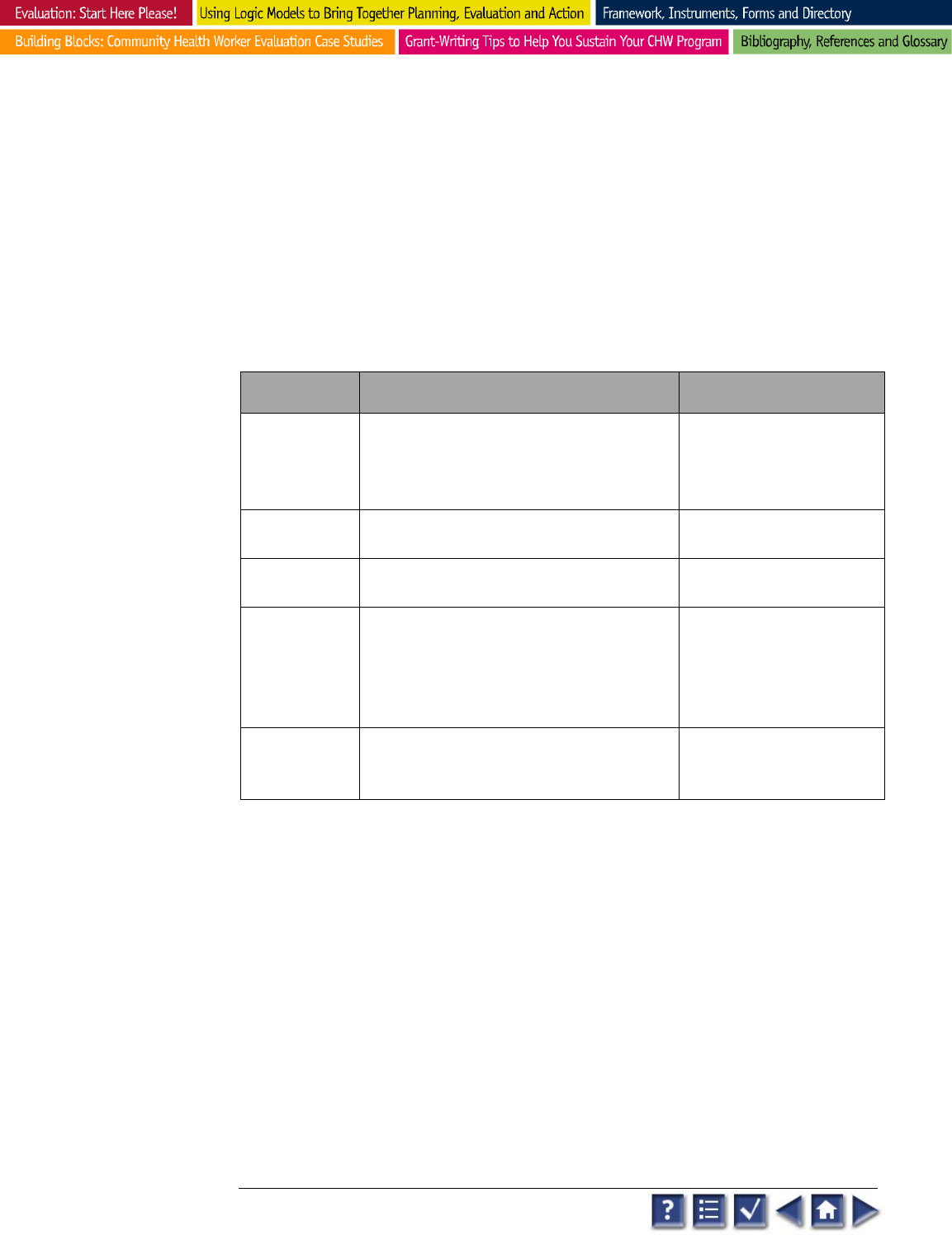

Benefits of Formative and Summative Evaluation Questions (see footnote 3)

| Formative Evaluation: Improve | Summative Evaluation: Prove |
|---|---|
| Provides information that helps you improve your program. Generates periodic reports. Information can be shared quickly. | Generates information that can be used to demonstrate the results of your program to funders and your community. |
| Focuses most on program activities, outputs, and short-term outcomes for the purpose of monitoring progress and making mid-course corrections when needed. | Focuses most on program’s intermediate-term outcomes and impact. Although data may be collected throughout the program, the purpose is to determine the value and worth of a program based on results. |
| Helpful in bringing suggestions for improvement to the attention of staff. | Helpful in describing the quality and effectiveness of your program by documenting its impact on participants and the community. |

Looking at Evaluation from Various Vantage Points

How will you measure your success? What will those “investing” in your program or your target audience want to know?

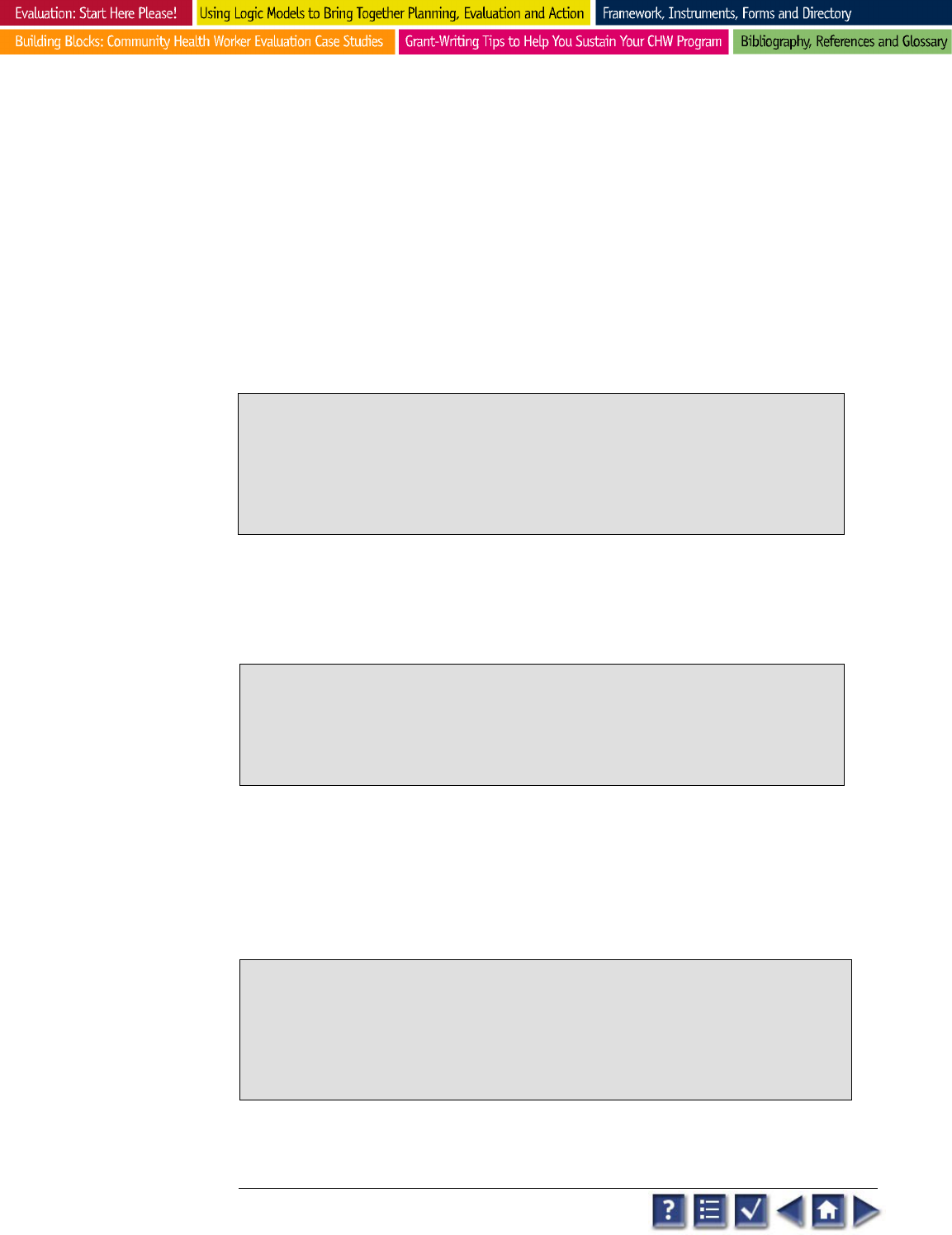

A clear logic model illustrates the purpose and content of your program and makes it easier to develop meaningful evaluation questions from a variety of program vantage points: context, implementation and results (which includes outputs, outcomes, and impact).

What Parts of Your Program Will Be Evaluated? Using a logic model to frame your evaluation questions.

[Figure: the logic model components (Resources, Influences, Activities, Outputs, Short-Term Outcomes, Intermediate Outcomes, Impact) aligned with three evaluation vantage points and with formative and/or summative evaluation.]

Context: Relationships & Capacity
Implementation: Quality & Quantity
Outcomes: Effectiveness, Magnitude, & Satisfaction

Questions shown in the figure:

• What aspects of our situation most shaped our ability to do the work we set out to do in our community?

• What did our program accomplish in our community?

• What is our assessment of what resulted from our work in the community?

• What have we learned about doing this kind of work in a community like ours?

3 Adapted from Bond, S. L., Boyd, S. E., & Montgomery, D. L. (1997). Taking Stock: A Practical Guide to Evaluating Your Own Programs. Chapel Hill, NC: Horizon Research, Inc. Available online at http://www.horizon-research.com.

See Resources Appendix for more information on evaluation planning.

Remember you can draw upon the basic logic model in exercises 1 & 2 and the theory-of-change model in exercise 3. Feasibility studies and needs assessments serve as valuable resources for baseline information on influences and resources collected during program planning.

Context is how the program functions within the economic, social, and political environment of its community and addresses questions that explore issues of program relationships and capacity. What factors might influence your ability to do the work you have planned? These kinds of evaluation questions can help you explain some of the strengths and weaknesses of your program as well as the effect of unanticipated and external influences on it.

SAMPLE CONTEXT QUESTIONS: Can we secure a donated facility? With the low morale created by high unemployment, can we secure the financial and volunteer support we need? How many medical volunteers can we recruit? How many will be needed each evening? How will potential patients find out about the clinic? What kind of medical care will patients need? How can we let possible referral sources know about the clinic and its services? What supplies will we need and how will we solicit suppliers for them? What is it about the free clinic that supports its ability to reduce the numbers of patients seeking care in Memorial Hospital’s ER?

Implementation assesses the extent to which activities were executed as planned, since a program’s ability to deliver its desired results depends on whether activities result in the quality and quantity of outputs specified. Implementation questions tell the story of your program in terms of what happened and why.

SAMPLE IMPLEMENTATION QUESTIONS: What facility was secured? How many patients were seen each night/month/year? What organizations most frequently referred patients to the clinic? How did patients find out about the clinic? How many medical volunteers serve each night/month/year? What was the value of their services? What was the most common diagnosis? What supplies were donated? How many patients per year did the Clinic see in its first/second/third year?

Outcomes determine the extent to which progress is being made toward the desired changes in individuals, organizations, communities, or systems. Outcome questions seek to document the changes that occur in your community as a result of your program. Usually these questions generate answers about the effectiveness of activities in producing changes, the magnitude of those changes, or satisfaction with changes related to the issues central to your program.

SAMPLE OUTCOME QUESTIONS: How many uninsured patients sought inappropriate medical care in Memorial’s ER in the Clinic’s first/second/third year? Was there a reduction in unfunded ER visits? How did the number of uninsured patients compare to previous years when the clinic was not operating? What was the cost/visit in the Free Clinic? What is the cost/visit in Memorial’s ER? How do they compare? What were the cost savings to Memorial Hospital? How satisfied were Clinic patients with the care they received? How satisfied were volunteers with their service to the Clinic?

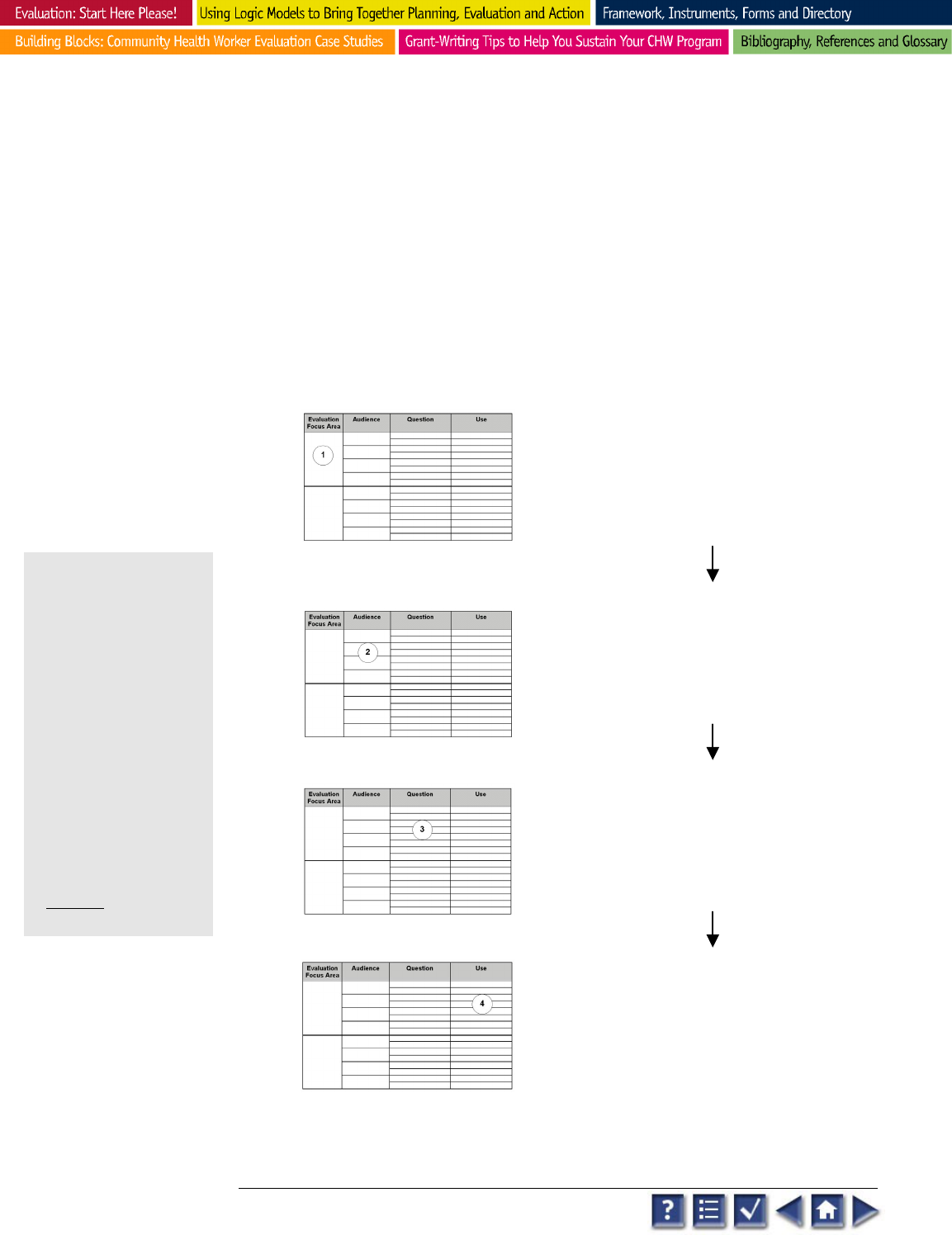

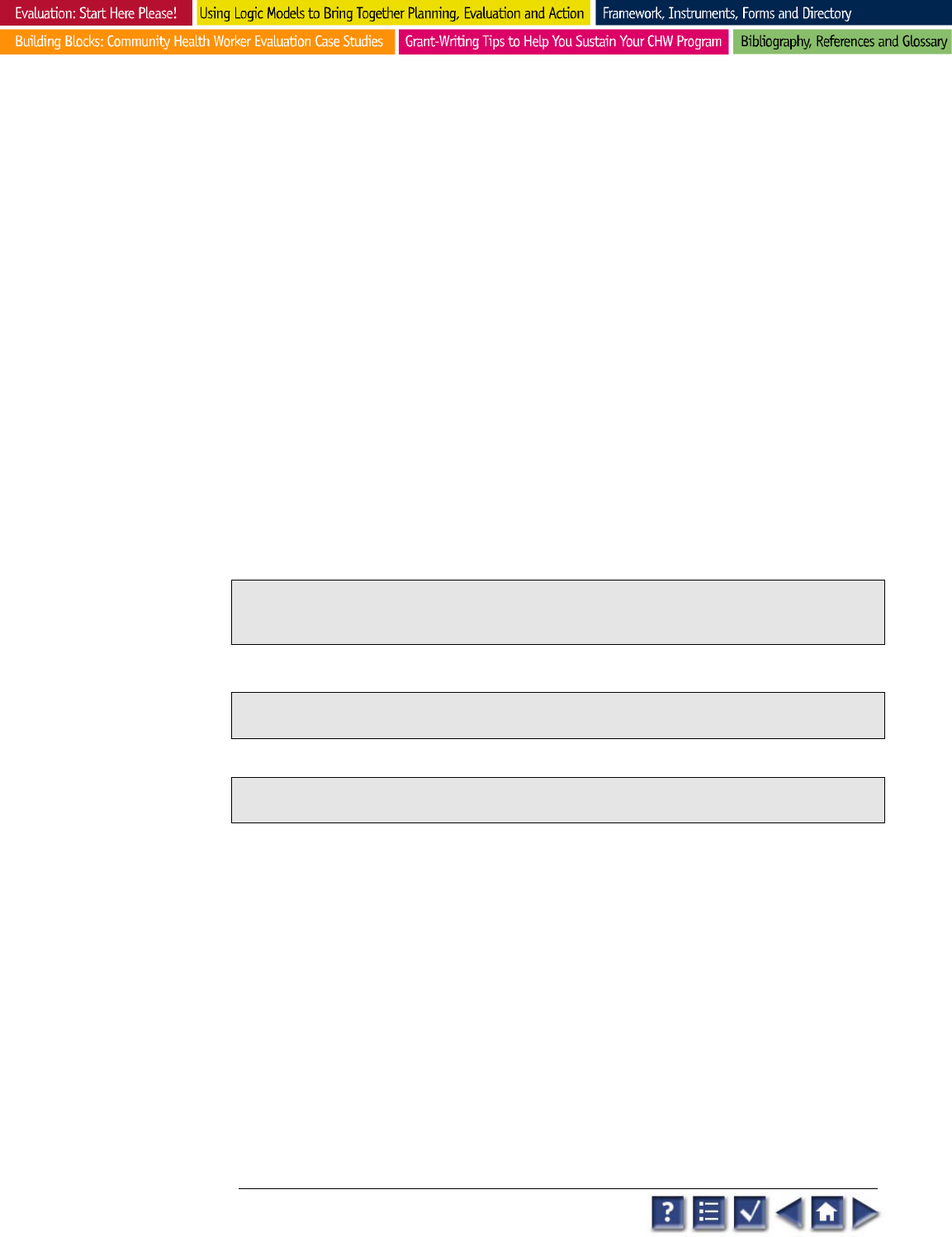

Creating Focus

Though it is rare, you may find that examining certain components of your program is sufficient to satisfy your information needs. Most often, however, you will systematically develop a series of evaluation questions, as shown in the Flowchart for Evaluation Question Development.

Flowchart for Evaluation Question Development

Focus Area: What is going to be evaluated? List those components from your theory and/or logic model that you think are the most important aspects of your program. These areas will become the focus of your evaluation.

Audience: What key audience will have questions about your focus areas? For each focus area you have identified, list the audiences that are likely to be the most interested in that area.

Question: What questions will your key audience have about your program? For each focus area and audience that you have identified, list the questions they might have about your program.

Information Use: If you answer a given question, what will that information be used for? For each audience and question you have identified, list the ways and extent to which you plan to make use of the evaluation information.
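One way to keep the four flowchart steps together is to record each evaluation question as a single row. The sketch below is a minimal illustration in Python and is not part of the original exercise; the class name `EvaluationQuestion`, the helper `questions_by_audience`, and the field names are hypothetical. The sample rows are drawn from the clinic example used in this chapter's Exercise 4 template.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class EvaluationQuestion:
    """One row of a question-development worksheet (hypothetical structure)."""
    focus_area: str        # step 1: what is going to be evaluated
    audience: str          # step 2: who will have questions about it
    question: str          # step 3: what that audience wants to know
    information_use: str   # step 4: what the answer will be used for


def questions_by_audience(rows):
    """Group question text by audience, preserving the order of the rows."""
    grouped = defaultdict(list)
    for row in rows:
        grouped[row.audience].append(row.question)
    return dict(grouped)


# Sample rows taken from the clinic example in this chapter.
rows = [
    EvaluationQuestion("Relationships", "Funders",
                       "Is the program cost effective?",
                       "Cost benefits/fundraising"),
    EvaluationQuestion("Relationships", "Staff",
                       "Are we reaching our target population?",
                       "Evaluation/program promotion"),
    EvaluationQuestion("Relationships", "Staff",
                       "How do patients find us?",
                       "Evaluation and/or improvement"),
]

for audience, questions in questions_by_audience(rows).items():
    print(audience, "->", questions)
```

Grouping by audience makes it easy to see which stakeholders have many questions attached to them and which have none, which can help when the list is later narrowed.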

The use of program theory as a map for evaluation doesn't necessarily imply that every step of every possible theory has to be studied. ...Choices have to be made in designing an evaluation about which lines of inquiry to pursue. ...The theory provides a picture of the whole intellectual landscape so that people can make choices with a full awareness of what they are ignoring as well as what they are choosing to study...

Weiss (1998), Evaluation

What is going to be evaluated? For each area on which your program focuses, list the most important aspects of your program theory and logic model. Focus your evaluation on them.

Focus Area Examples:

Context Examples: Evaluating relationships and capacity. How will the Free Clinic recruit and train effective board and staff members? What is the best way to recruit, manage, retain and recognize medical and administrative volunteers and other Clinic partners? What is the most effective way to recruit and retain uninsured patients? How will the operation of a Free Clinic impact Memorial Hospital’s expenses for providing uninsured medical care in its ER? How many patients can Clinic volunteers effectively serve on a regular basis? What is the ideal patient/volunteer ratio?

Insert focus areas into Focus Area Column of Evaluation Questions Development Template for Evaluation Planning, Exercise 4.

Implementation Examples: Assessing quality and quantity. How many major funding partners does the clinic have? How are volunteers and patients scheduled? How many medical volunteers serve Clinic patients on a regular basis? What is the value of their services? What is the most common diagnosis at the Clinic? What is the most common diagnosis of uninsured patients seen in Memorial’s ER? How long do patients wait to be seen at the Clinic? Is there a patient or volunteer waiting list?

Insert focus areas into Focus Area Column of Evaluation Questions Development Template for Evaluation Planning, Exercise 4.

Outcomes Examples: Measuring effectiveness, magnitude and satisfaction. Has the clinic increased access to care for a significant number of Mytown’s uninsured citizens? How many residents of Mytown, USA do not have health insurance? How many patients does the Clinic serve on a regular basis? What is that ratio? What is the cost per visit in the Clinic and Memorial’s ER? How do the costs compare? What is the satisfaction level of Clinic patients and volunteers with Clinic services and facilities? How many donors does the Clinic have? What is their satisfaction with Clinic services and facilities? How effectively is the Clinic educating, engaging and involving its partners? What organizations have officially endorsed the Clinic? What is the board and staff’s satisfaction with clinic operations, facilities and services?

Insert focus areas into Focus Area Column of Evaluation Questions Development Template for Evaluation Planning, Exercise 4.

The benefits of asking and answering evaluation questions depend on how clear you are about the purpose of your evaluation, who needs to know what when, and the resources you have available to support the evaluation process.

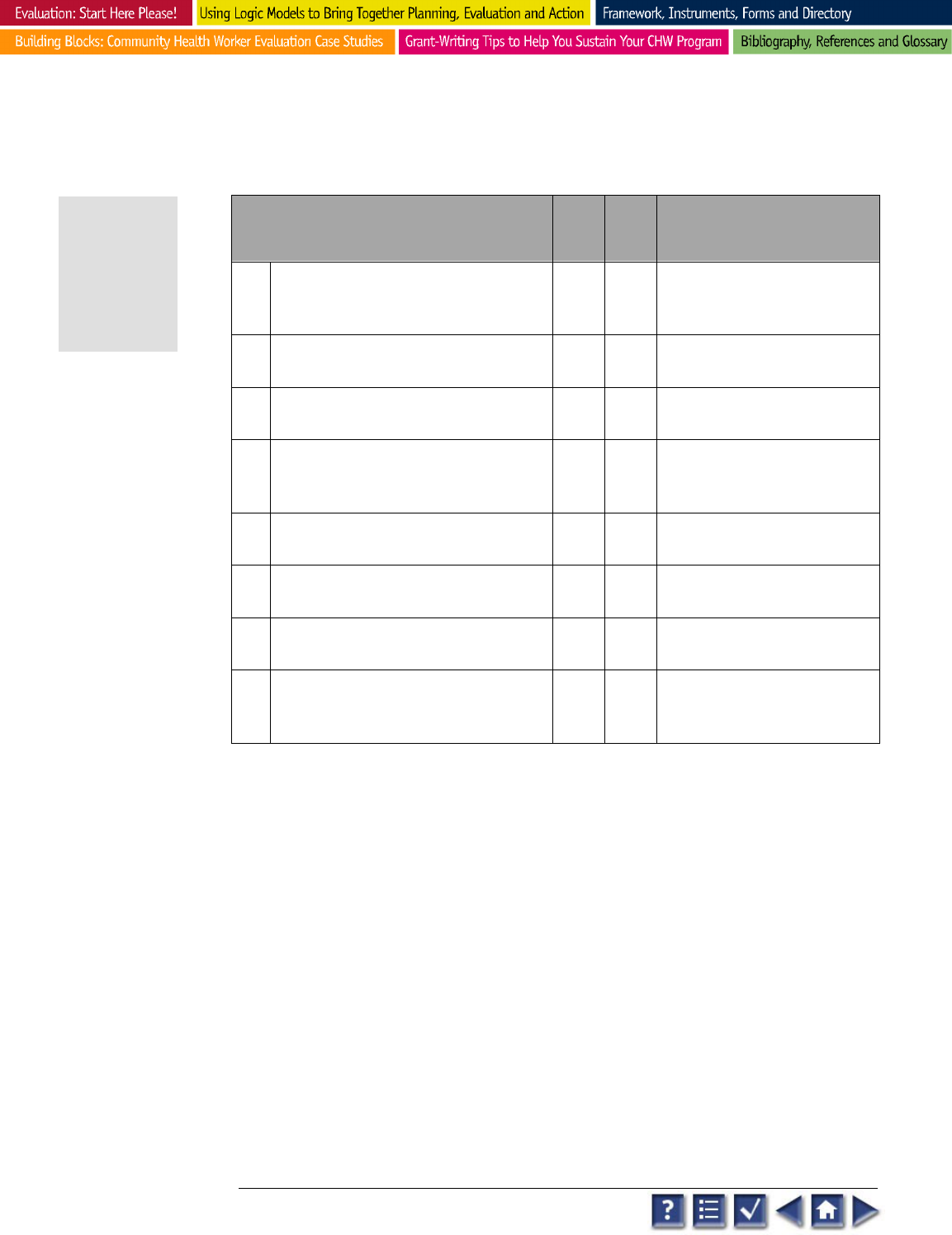

What Information Will Your Program’s Audiences Want?

As shown below, program audiences will be interested in a variety of different kinds of information. Donors may want to know if their money did what you promised it would. Patients might want to know how many patients the clinic serves and how many volunteers it has. Physicians donating their time and talent could be interested in the financial value of their contributions. If you ask your audiences what they want to know, you’ll be sure to build in ways to gather the evaluation data required.

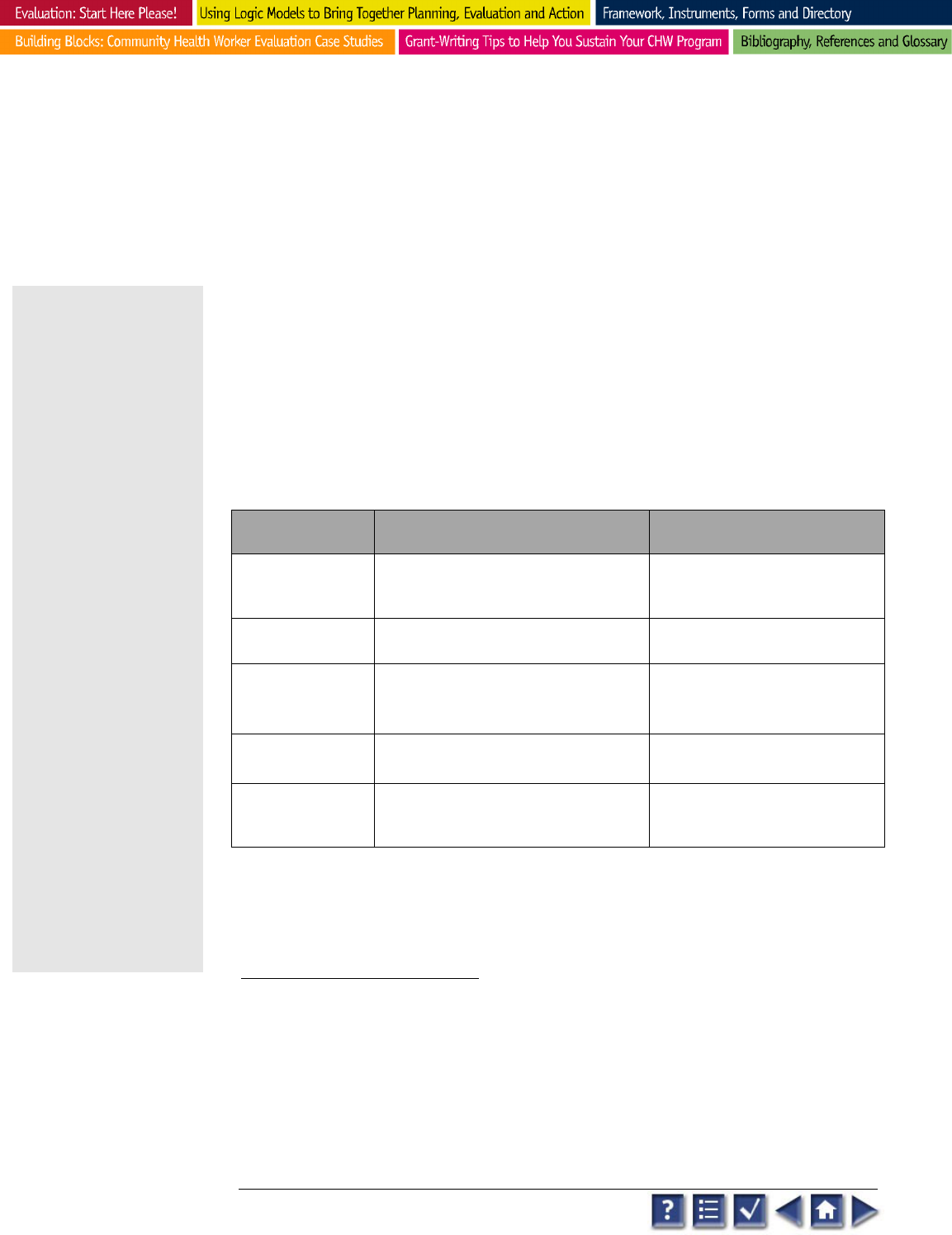

| Audience | Typical Questions | Evaluation Use |
|---|---|---|
| Program Management and Staff | Are we reaching our target population? Are our participants satisfied with our program? Is the program being run efficiently? How can we improve our program? | Programming decisions, day-to-day operations. |
| Participants | Did the program help me and people like me? What would improve the program next time? | Decisions about continuing participation. |
| Community Members | Is the program suited to our community needs? What is the program really accomplishing? | Decisions about participation and support. |
| Public Officials | Who is the program serving? What difference has the program made? Is the program reaching its target population? What do participants think about the program? Is the program worth the cost? | Decisions about commitment and support. Knowledge about the utility and feasibility of the program approach. |
| Funders | Is what was promised being achieved? Is the program working? Is the program worth the cost? | Accountability and improvement of future grantmaking efforts. |

How often do you have to gather data? Whether a question is more formative or summative in nature offers a clue about when information should be collected.

• Formative information should be periodic and reported/shared quickly to improve your efforts.

• Summative information tends to be "before and after" snapshots reported after the conclusion of the program to document the effectiveness and lessons learned from your experience.

Involve Your Audience in Setting Priorities

Program developers often interview program funders, participants, staff, board and partners to brainstorm a list of all possible questions for a key area identified from their program theory or from their logic models. That list helps determine the focus of the evaluation. Involving your audience from the beginning makes sure you gather meaningful information in which your supporters have a real interest.

Prioritization is a critical step. No evaluation can answer all of the questions your program's audiences may ask. The following questions can help you narrow your number of indicators: How many audiences are interested in this information? Could knowing the answer to this question improve your program? Will this information assess your program’s effectiveness?

The final focus for your evaluation is often negotiated among stakeholders. It is important to keep your evaluation manageable. It is preferable to answer a few important questions thoroughly than to answer several questions poorly.

How well you can answer your questions will depend on the time, money, and expertise you have at your disposal to perform the functions required by the evaluation.
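The three screening questions above can be turned into a rough ranking to start the conversation with stakeholders. The sketch below is only an illustration in Python: the additive score, the names `Candidate`, `priority_score` and `shortlist`, and the audience counts in the sample data are all assumptions made for this example. The guide does not prescribe a formula, and the final focus is still negotiated among stakeholders.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    """A candidate evaluation question with answers to the three screening questions."""
    question: str
    interested_audiences: int      # How many audiences are interested?
    could_improve_program: bool    # Could the answer improve the program?
    assesses_effectiveness: bool   # Will the answer assess effectiveness?


def priority_score(candidate):
    # Hypothetical weighting: one point per interested audience,
    # plus one point for each "yes" on the other two screening questions.
    return (candidate.interested_audiences
            + int(candidate.could_improve_program)
            + int(candidate.assesses_effectiveness))


def shortlist(candidates, keep):
    """Return the text of the `keep` highest-scoring questions."""
    ranked = sorted(candidates, key=priority_score, reverse=True)
    return [c.question for c in ranked[:keep]]


# Illustrative data only: the counts below are invented for this sketch.
candidates = [
    Candidate("What do experts say about the clinic?", 1, False, False),
    Candidate("Does the clinic save the hospital money?", 3, False, True),
    Candidate("How satisfied are patients, volunteers, board and staff?", 4, True, True),
]

print(shortlist(candidates, keep=2))
```

A score like this is best treated as a way to order the discussion, since a question that matters to only one audience can still be essential if that audience is the primary funder.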

What key audiences will have questions about your evaluation focus areas? For each focus area that you identified in the previous step, list the audiences that are likely to be most interested in that area. Summarize your audiences and transfer to the Audience Column of the Evaluation Questions Development Template for Evaluation Planning, Exercise 4.

Context--Relationships and Capacity

Example audiences: Medical professionals, Memorial Hospital Board and Staff (especially ER staff), Medical associations, Foundations, The Chamber of Commerce, United Way, The Technical College, uninsured residents, medical supply companies, local media, public officials.

Implementation--Quality and Quantity

Example audiences: Funders, In-kind donors, Medical and administrative volunteers, Board, Staff, Patients, Public Officials, The media, Medical associations, Local businesses, Healthcare organizations.

Outcomes--Effectiveness, Magnitude, and Satisfaction

Example audiences: Funders, In-kind donors, Volunteers, Board, Staff, Patients, Public Officials, The media, Medical associations, Local businesses, Healthcare organizations.

What questions will key audiences ask about your program? For each focus area and key audience you identified in the previous step, list the questions your stakeholders ask about your program. Insert summaries in the Question Column of the Evaluation Questions Development Template for Evaluation Planning, Exercise 4.

Sample of Key Audience Questions:

Who are the collaborative partners for this program? What do they provide?

What is the budget for this program?

How many staff members does the program have?

How many patients does the clinic serve?

How many visits per year does the average patient have?

What is the most common diagnosis?

Does the clinic save the hospital money?

How does the organization undertake and support program evaluation?

How are medical volunteers protected from lawsuits?

How satisfied are patients, volunteers, board and staff with the clinic’s services?

What do experts say about the clinic?

How many uninsured patients still seek inappropriate care in the ER? Why?

How will the evaluation’s information be used? For each question and audience you identified in the previous step, list the ways and extent to which you plan to make use of the evaluation information. Summarize audience use of information. Insert in the Use Column of the Evaluation Questions Development Template for Evaluation Planning, Exercise 4 on page 44.

Context--Relationships and Capacity Examples

• Measure the level of community support

• Assess effectiveness of community outreach

• Assess sustainability of Clinic funding sources

• Improve volunteer and patient recruitment methods

• Secure additional Clinic partners

Implementation--Quality and Quantity Examples

• Assess optimal number of volunteers and patients to schedule per session to improve operating effectiveness while maintaining patient and volunteer satisfaction.

• Measure patient, volunteer, staff, board, donor and community satisfaction with clinic.

• Determine cost savings per visit. Share information with local medical and business groups to encourage their support.

Outcomes & Impact--Examples of Effectiveness, Magnitude, and Satisfaction

• Cost savings of Clinic: use to obtain additional volunteer and financial support from Memorial Hospital.

• Patient satisfaction survey results: use to improve patient services and satisfaction.

• Analysis of most frequent referral sources: use to present information seminars to ER staff, social service workers and unemployment insurance clerks to increase patient referrals and intakes.

• Analysis of most prevalent patient diagnoses: use to create relevant patient health education newsletter. Patient tracking system will measure impact of education program.

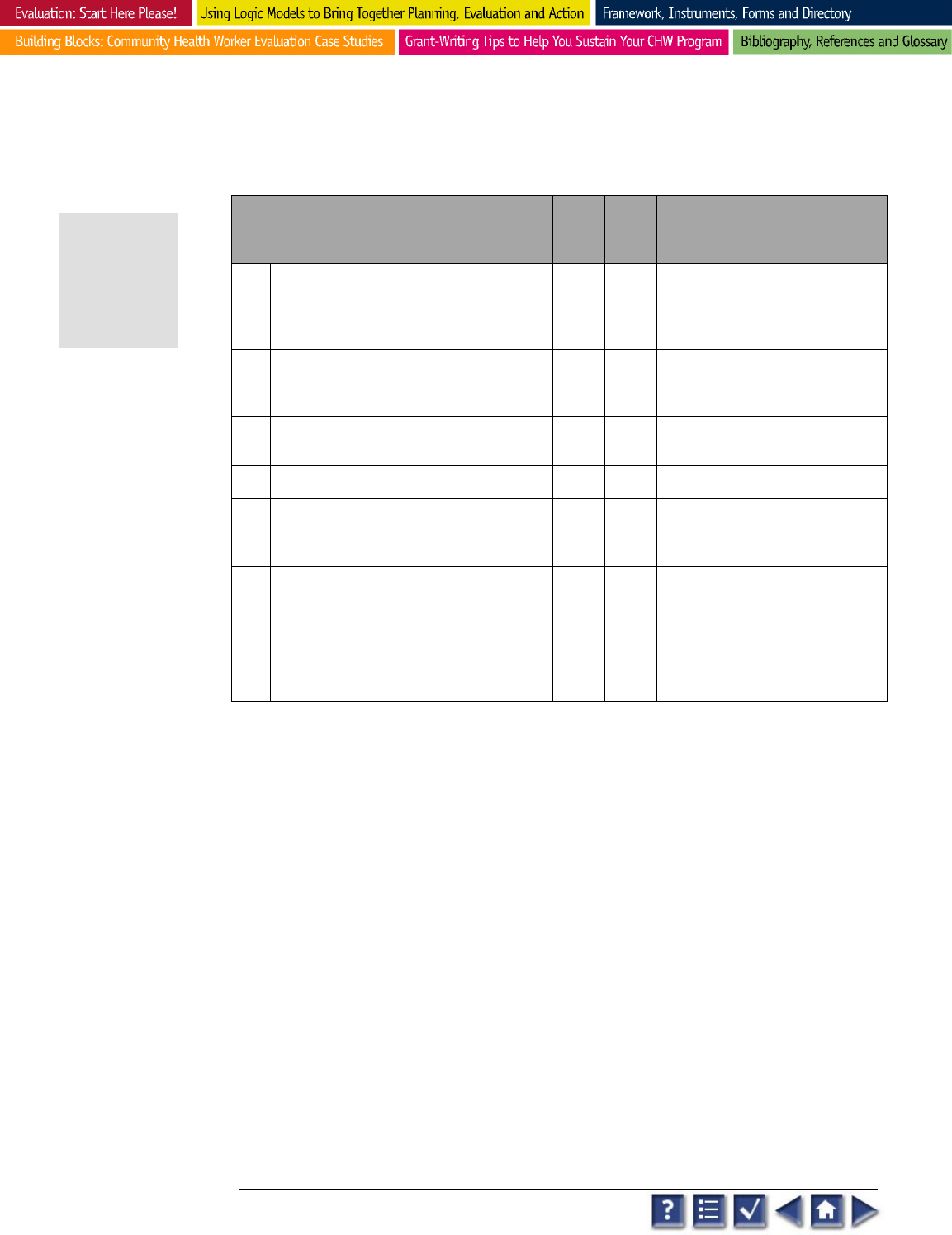

Exercise 4 Checklist: After completing Exercise 4 you can use the following checklist to assess the quality of your draft.

| Posing Questions Quality Criteria | Yes | Not Yet | Comments/Revisions |
|---|---|---|---|
| 1. A variety of audiences are taken into consideration when specifying questions. | | | |
| 2. Questions selected are those with the highest priority. | | | |
| 3. Each question chosen gathers useful information. | | | |
| 4. Each question asks only one question (i.e., "extent of X, Y, and Z" is not appropriate). | | | |
| 5. It is clear how the question relates to the program’s logic model. | | | |
| 6. The questions are specific about what information is needed. | | | |
| 7. Questions capture "lessons learned" about your work along the way. | | | |
| 8. Questions capture "lessons learned" about your program theory along the way. | | | |

| Evaluation Focus Area | Audience | Question | Use |
|---|---|---|---|
| Relationships | Funders | Is the program cost effective? | Cost benefits/fundraising |
| | | Are volunteers & patients satisfied with Clinic services? | Program promotion/fundraising |
| | Medical Volunteers | What is the most common diagnosis? | Quality assurance/Planning |
| | | How will medical volunteers be protected from lawsuits? | Volunteer recruitment |
| | Patients | Am I receiving quality care? | Program improvement & planning |
| | | How long can I receive care here? | Same as above |
| | Staff | Are we reaching our target population? | Evaluation/program promotion |
| | | How do patients find us? What’s our best promotional approach? | Evaluation and/or improvement |
| Outcomes | Funders/Donors | Program Budget? | Cost benefit analysis |
| | | Cost/visit? | Same as above |
| | Volunteers | Visits/month/year? | Annual Report/Program promotion/Public relations |
| | | Cost savings for Memorial Hospital? | Annual Report/Program promotion/Fundraising |
| | Patients | Volunteers/year? | Annual Report/Volunteer recruitment |
| | | Patient satisfaction | Program improvements/staff training |
| | Staff | Patient & volunteer satisfaction | Same as above |
| | | Common DRG(?) | |

Logic Model Development. Evaluation Planning Template – Exercise 4

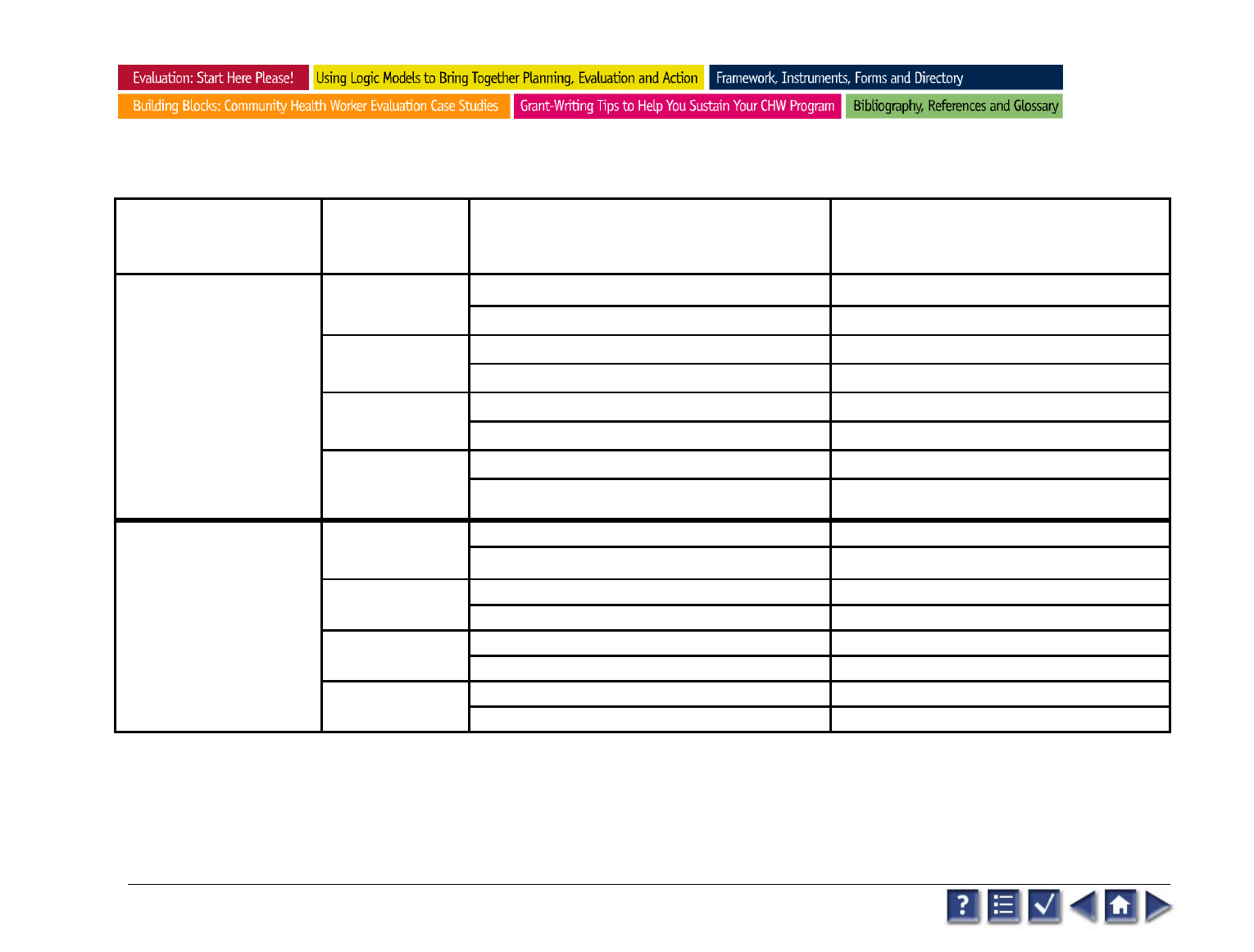

Exercise 5---Establishing Indicators

One of the biggest challenges in developing an evaluation plan is choosing what kind of information best answers the questions you have posed. It is important to have general agreement across your audiences on what success will look like.

Indicators are the measures you select as markers of your success.

In this last exercise you create a set of indicators. They are often used as the starting point for designing the data collection and reporting strategies (e.g., the number of uninsured adults nationally, statewide, in Mytown, USA or the number of licensed physicians in Mytown). Often organizations hire consultants or seek guidance from local experts to conduct their evaluations. Whether or not you want help will depend on your organization’s level of comfort with evaluation and the evaluation expertise among your staff.

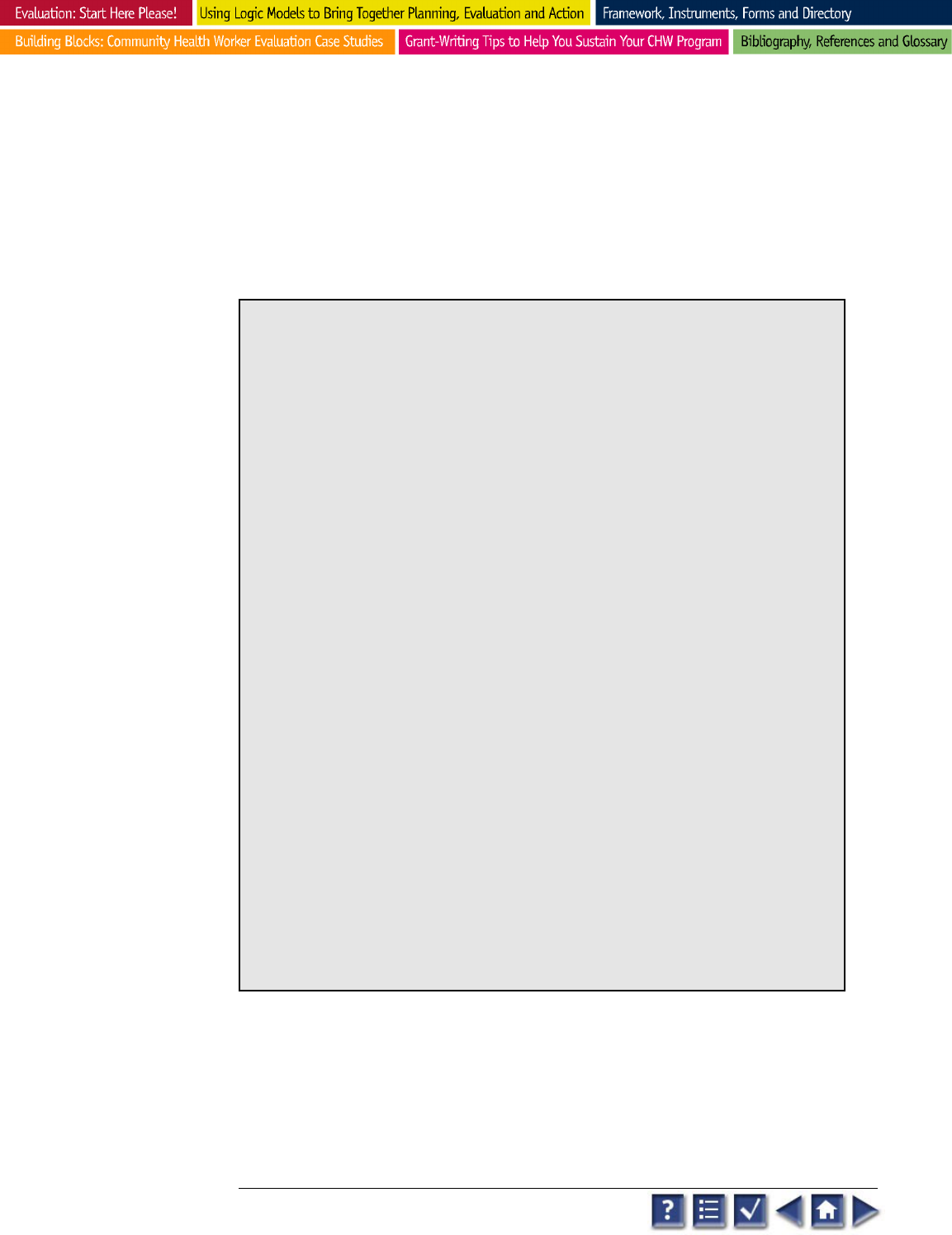

| Focus Area | Indicators | How to Evaluate (see footnote 1) |
|---|---|---|
| Influential Factors | Measures of influential factors; may require general population surveys and/or comparison with national data sets (see footnote 2). | Compare the nature and extent of influences before (baseline) and after the program. |
| Resources | Logs or reports of financial/staffing status. | Compare actual resources acquired against anticipated. |
| Activities | Descriptions of planned activities. Logs or reports of actual activities. Descriptions of participants. | Compare actual activities provided, types of participants reached against what was proposed. |
| Outputs | Logs or reports of actual activities. Actual products delivered. | Compare the quality and quantity of actual delivery against expected. |
| Outcomes & Impacts | Participant attitudes, knowledge, skills, intentions, and/or behaviors thought to result from your activities (see footnote 3). | Compare the measures before and after the program (see footnote 4). |

Examples and Use of Indicators.

Our advice is to keep your evaluation simple and straightforward. The logic model techniques you have been practicing will take you a long way toward developing an evaluation plan that is meaningful and manageable.

1 This table was adapted from A Hands-on Guide to Planning and Evaluation (1993), available from the National AIDS Clearinghouse, Canada.

2 You may want to allocate resources to allow for the assistance of an external evaluation consultant to access national databases or perform statistical analyses.

3 Many types of outcomes and impact instruments (i.e., reliable and valid surveys and questionnaires) are readily available. The Mental Measurements Yearbook published by the Buros Institute (http://www.unl.edu/buros/) and the ERIC Clearinghouse on Assessment and Evaluation (http://ericae.net/) are great places to start.

4 You may need to allocate resources to allow for the assistance of an external evaluation consultant.

The biggest problem is usually that people are trying to accomplish too many results. Once they engage in a discussion of indicators, they start to realize how much more clarity they need in their activities.

I also find that it is important that the program, not the evaluator, is identifying the indicators. Otherwise, the program can easily discredit the evaluation by saying they don’t think the indicators are important, valid, etc.

Beverly Anderson Parsons, WKKF Cluster Evaluator

Context Indicator Examples

Determine the kinds of data you will need and design methods to gather the data (e.g., patient registration forms, volunteer registration forms, daily sign-in sheets, and national, state and local statistics). Sometimes, once an indicator (type of data) is selected, program planners set a specific target as an agreed-upon measure of success (for example, a 25% decrease in the number of inappropriate ER visits).
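Checking an indicator against a target of this kind is simple arithmetic: compare a baseline count with a later count and see whether the percentage decrease reaches the agreed threshold. The sketch below shows one way to do that in Python. The function names `percent_decrease` and `target_met` are hypothetical, and the visit counts are invented for illustration; only the 25% target comes from the example above.

```python
def percent_decrease(baseline, current):
    """Percentage decrease from baseline to current (negative if the count rose)."""
    if baseline <= 0:
        raise ValueError("baseline must be a positive count")
    return (baseline - current) / baseline * 100.0


def target_met(baseline, current, target_decrease_pct=25.0):
    """True when the observed decrease is at least the target percentage."""
    return percent_decrease(baseline, current) >= target_decrease_pct


# Invented counts of inappropriate ER visits, for illustration only.
visits_year_before_clinic = 400
visits_clinic_first_year = 290

decrease = percent_decrease(visits_year_before_clinic, visits_clinic_first_year)
print(f"Decrease: {decrease:.1f}%")
print("Target met:", target_met(visits_year_before_clinic, visits_clinic_first_year))
```

The baseline matters as much as the target: a count taken the year before the program began gives the "before" half of the before-and-after comparison described earlier in this chapter.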

As in the previous exercises use the space below to loosely organize your thoughts. Then, once the exercise is completed and assessed, use the Indicator Development Template on page 60 to record your indicators and technical assistance needs.

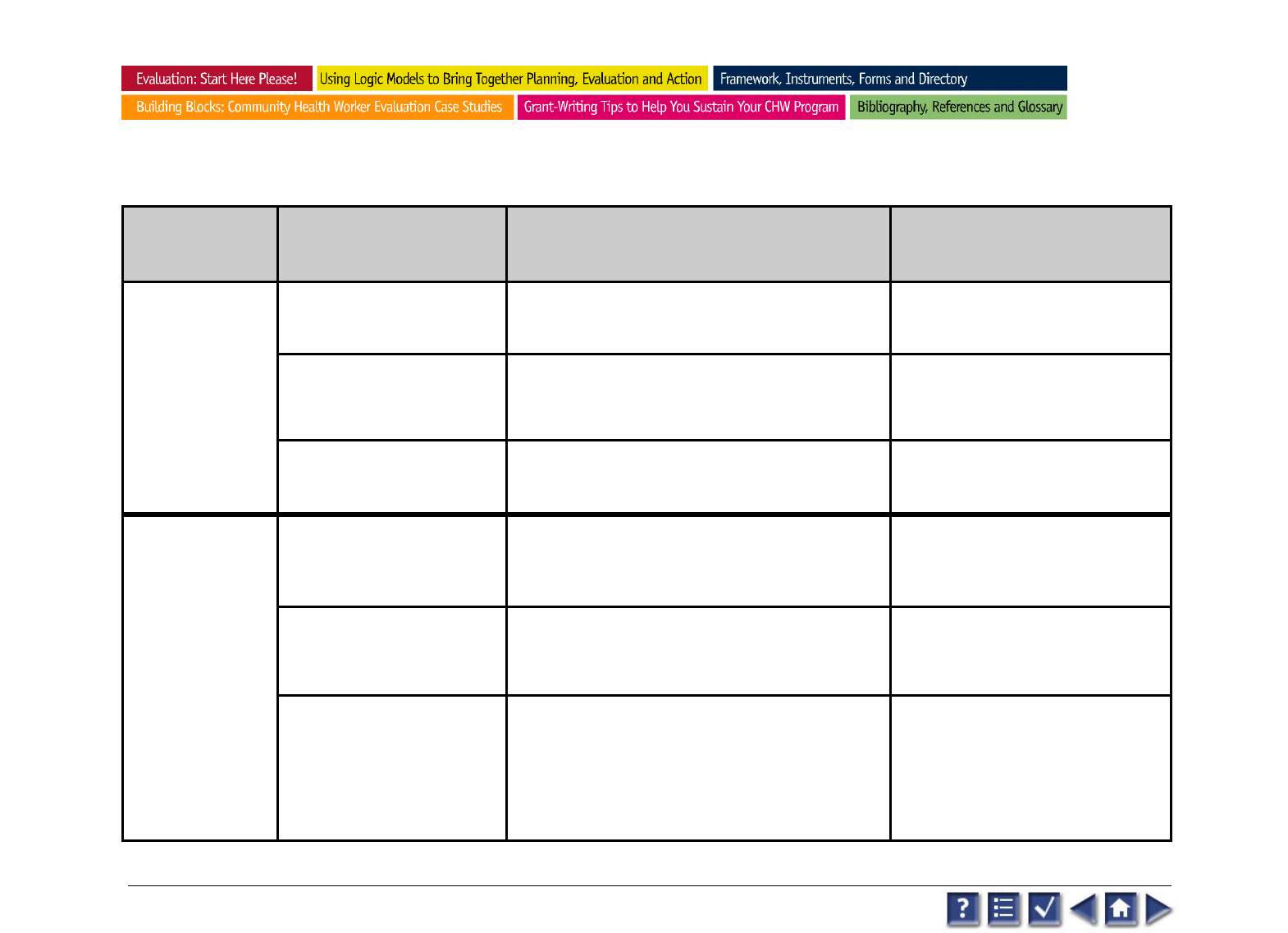

Filling in the Flowchart for Indicator Development

What information will be gathered to “indicate” the status of your program and/or its participants?

| Focus Area | Question | Indicators | Technical Assistance Needed |
|---|---|---|---|
| | | | |

Column 1: Focus Areas. From the information gathered in Exercise 4, transfer the areas on which your evaluation will focus into column one (for example, patient health, volunteer participation, sustaining supporting partnerships).

Column 2: Questions. Transfer from Exercise 4 the major questions related to each focus area: the big questions your key audiences want answered. Remember to keep your evaluation as simple as possible.

Column 3: Indicators. Specify the indicators (types of data) against which you will measure the success/progress of your program. It’s often helpful to record the sources of data you plan to use as indicators (where you are likely to find or get access to these data).

Column 4: Technical Assistance. To what extent does your organization have the evaluation and data management expertise needed to collect and analyze the data related to each indicator? List any assistance that would be helpful: universities, consultants, national and state data experts, foundation evaluation departments, etc.

Exercise 5 Checklist: Review what you have created using the checklist below to assess the quality of your evaluation plan.

| Establishing Indicators Quality Criteria | Yes | Not Yet | Comments/Revisions |
|---|---|---|---|
| 1. The focus areas reflect the questions asked by a variety of audiences. Indicators respond to the identified focus areas and questions. | | | |
| 2. Indicators are SMART: Specific, Measurable, Action-oriented, Realistic, and Timed. | | | |
| 3. The cost of collecting data on the indicators is within the evaluation budget. | | | |
| 4. Source of data is known. | | | |
| 5. It is clear what data collection, management, and analysis strategies will be most appropriate for each indicator. | | | |
| 6. Strategies and required technical assistance have been identified and are within the evaluation budget for the program. | | | |
| 7. The technical assistance needed is available. | | | |

| Focus Area | Question | Indicators | Technical Assistance Needed |
|---|---|---|---|
| Relationships | Are volunteers & patients satisfied w/ clinic care? | Patient satisfaction surveys; Volunteer satisfaction tests | Anywhere’s pt. satisfaction surveys; Anywhere’s volunteer survey |
| | Are we reaching our target population? | % of clinic patients vs. % of uninsured citizens in Mytown, USA; # of qualified clinic patients/year | Reports from Chamber of Commerce; Patient database creation |
| | How do patients find the clinic? | Annual analysis of telephone referral log; Referral question on patient intake form | Telephone log data base; Anywhere’s patient intake form |
| Outcomes | Does the clinic save the community $? | Cost/visit; # of uninsured pts. seen in hospital ER, beginning the year before Clinic opened | Budget figures; patient service records; Tracking database software; Strategic direction for analysis |
| | What does the clinic provide? | Most common diagnosis; Hospital cost/visit for common diagnosis | DRG workbook/tables (hospital staff); Input from hospital billing staff |
| | How has volunteering impacted doctors, nurses, administrators and patients? | Annual volunteer survey; Patient satisfaction survey; # of volunteers/year; # of volunteers donating to clinic operations | Anywhere surveys and analysis instruments; Volunteer management data base; Donor data base (Raiser’s Edge?) |

Logic Model Development Indicators Development Template – Exercise 5
