Words are not enough! Short text classification using words as well as entities

Master's Thesis

by

Qingyuan Bie

Degree program: Computer Science

Student ID: 1795486

Institut für Angewandte Informatik und Formale Beschreibungsverfahren (AIFB)

KIT-Fakultät für Wirtschaftswissenschaften

Examiner: Prof. Dr. Ralf Reussner

Second examiner: Prof. Dr. Harald Sack

Advisor: M.Sc. Rima Türker

Submitted on: 29 August 2019


Abstract

Text classification, also known as text categorization, is a classical task in natural language processing. It aims to assign one or more predefined classes or categories to text documents. Text classification has a wide variety of application scenarios.

Traditional binary and multi-class text classification problems have been studied intensively in machine learning research. However, when machine learning techniques are applied to short texts, most standard text classification approaches suffer from problems such as data sparsity and insufficient text length. Moreover, due to the lack of contextual information, short texts are highly ambiguous. As a result, simple text classification approaches based only on words cannot properly represent the critical features of short texts.

In this work, a novel neural-network-based approach to short text classification is proposed. We enrich the text representation by utilizing words together with the entities mentioned in the given document. Tagme is first utilized to extract named entities from documents. We then utilize the Wikipedia2Vec model to obtain entity vectors. Our model is trained not only on top of pre-trained word vectors, but also on top of pre-trained entity vectors. We compare the performance of the model using words only with that of the model using words as well as entities. The impact of different kinds of word vectors on the classification accuracy is also studied.

INHALTSVERZEICHNIS v

Inhaltsverzeichnis

1 Introduction 1

1.1 Motivation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 1

1.2 Contribution . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2

1.3 Thesis Structure . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2

2 Background 3

2.1 Text Classification . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3

2.2 Text Representation (Feature Engineering) . . . . . . . . . . . . . . . . . . 4

2.3 Text Classification (Classifiers) . . . . . . . . . . . . . . . . . . . . . . . . 7

3 Related Work 11

3.1 Text Classification . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 11

3.1.1 Vector Representation of Words . . . . . . . . . . . . . . . . . . . . 11

3.1.2 Convolutional Neural Network Feature Extraction . . . . . . . . . . 13

3.1.3 Pre-training & Multi-task Learning . . . . . . . . . . . . . . . . . . 15

3.1.4 Context Mechanism . . . . . . . . . . . . . . . . . . . . . . . . . . . 19

3.1.5 Memory Storage Mechanism . . . . . . . . . . . . . . . . . . . . . . 20

3.1.6 Attention Mechanism . . . . . . . . . . . . . . . . . . . . . . . . . . 20

3.2 Short Text Classification . . . . . . . . . . . . . . . . . . . . . . . . . . . . 21

3.3 Text Classification using Named Entities . . . . . . . . . . . . . . . . . . . 21

4 Wikipedia2Vec and Doc2Vec 23

4.1 Word2vec and Skip-gram . . . . . . . . . . . . . . . . . . . . . . . . . . . . 23

4.2 Wikipedia2Vec . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 25

4.2.1 Wikipedia Link Graph Model . . . . . . . . . . . . . . . . . . . . . 25

4.2.2 Anchor Context Model . . . . . . . . . . . . . . . . . . . . . . . . . 27

4.3 Doc2Vec . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 28

5 Convolutional Neural Network for Short Text Classification 29

5.1 Entity Linking . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 29

INHALTSVERZEICHNIS vi

5.2 Overall Architecture of EntCNN . . . . . . . . . . . . . . . . . . . . . . . . 31

5.3 Training . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 33

6 Recurrent Neural Network for Short Text Classification 35

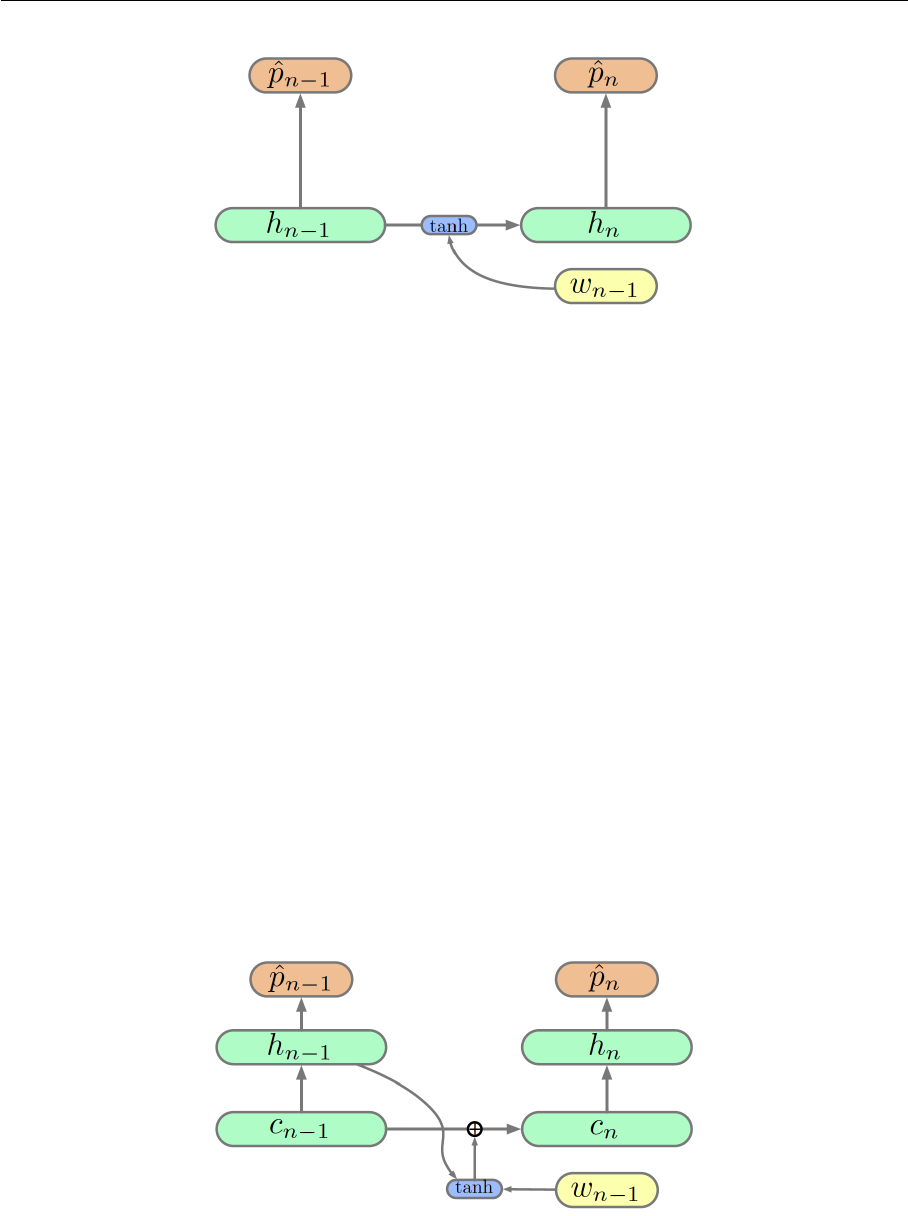

6.1 Vanilla Recurrent Neural Network . . . . . . . . . . . . . . . . . . . . . . . 35

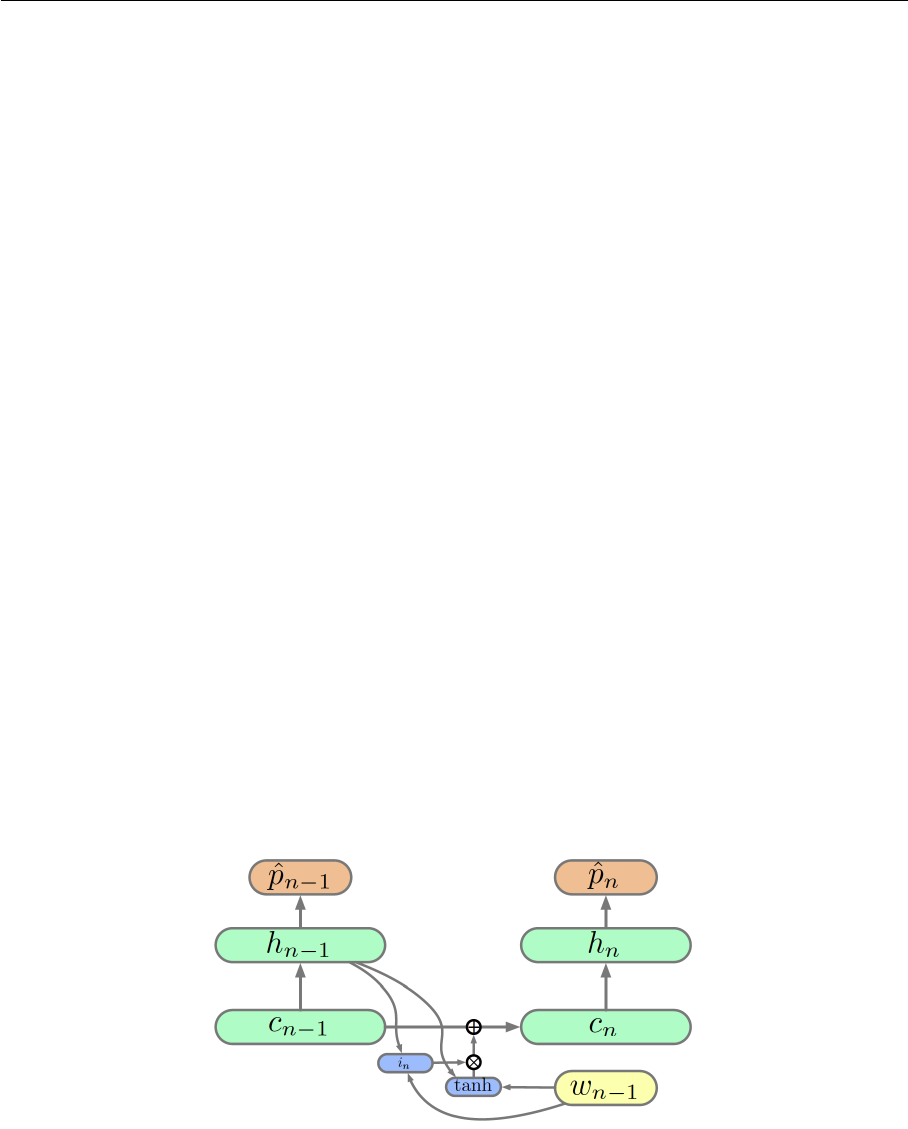

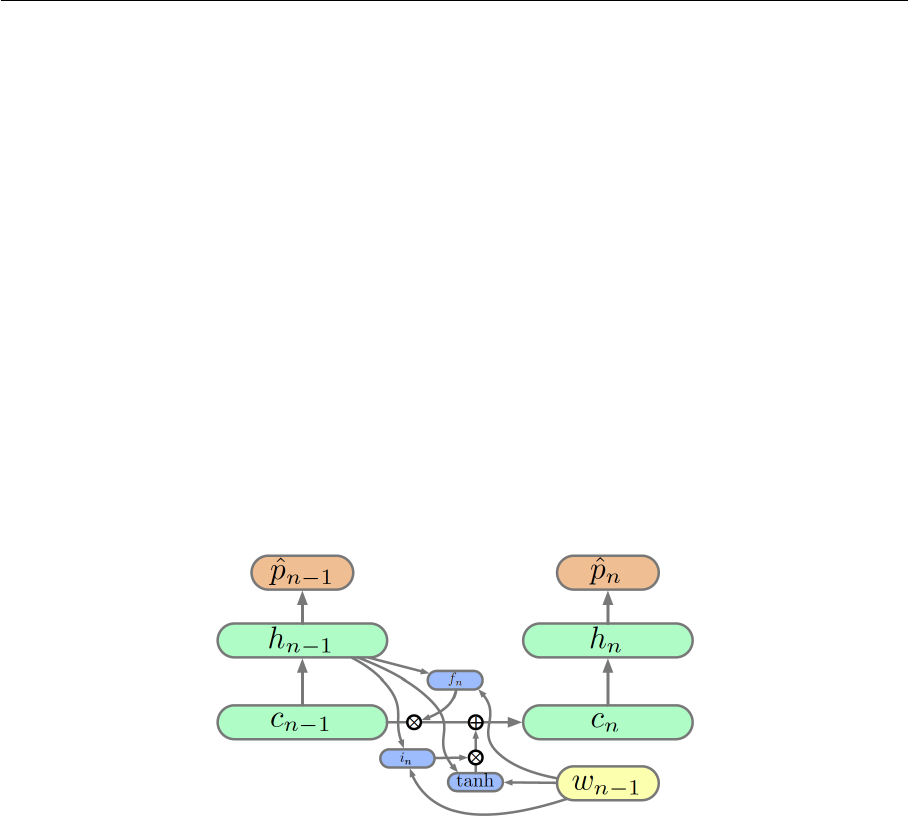

6.2 Long Short-Term Memory . . . . . . . . . . . . . . . . . . . . . . . . . . . 38

6.3 Recurrent Convolutional Neural Network . . . . . . . . . . . . . . . . . . . 42

7 Experiments 45

7.1 Data . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 45

7.2 Preprocessing . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 47

7.3 Evaluation Metrics . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 52

7.4 Computational Complexity . . . . . . . . . . . . . . . . . . . . . . . . . . . 55

7.5 Entity Linking . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 58

7.6 Experimental Setup . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 61

7.7 Results and Analysis . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 62

8 Conclusion 67

8.1 Summary . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 67

8.2 Future Work . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 67

A Feature Statistics 69

B Confusion Matrices 71

List of Abbreviations

BERT Bidirectional Encoder Representations from Transformers.

BoW Bag-of-Words.

CBOW Continuous Bag of Words.

CharCNN Character-level Convolutional Neural Network.

CNN Convolutional Neural Network.

DBOW Distributed Bag of Words.

DCNN Dynamic Convolutional Neural Network.

DMN Dynamic Memory Network.

EL Entity Linking.

ELMo Embeddings from Language Models.

EntCNN Convolutional Neural Network for Text Classification with Entities.

FLOPs Floating Point Operations.

FN False Negative.

FP False Positive.

GloVe Global Vectors for Word Representation.

GPT Generative Pre-Training model.

GPU Graphics Processing Unit.

GRU Gated Recurrent Unit.

HAN Hierarchical Attention Network.

ICA Independent Component Analysis.

KB Knowledge Base.

K-NN K-Nearest Neighbors.

LDA Latent Dirichlet Allocation.


LSA Latent Semantic Analysis.

LSTM Long Short-Term Memory.

NED Named Entity Disambiguation.

NEL Named Entity Linking.

NER Named Entity Recognition.

NLP Natural Language Processing.

NMT Neural Machine Translation.

OOV Out-of-vocabulary.

PCA Principal Component Analysis.

RCNN Recurrent Convolutional Neural Network.

ReLU Rectified Linear Unit.

RNN Recurrent Neural Network.

SGD Stochastic Gradient Descent.

sLDA Spatial Latent Dirichlet Allocation.

SVM Support-Vector Machine.

TextCNN Convolutional Neural Network for Text Classification.

TextRNN Recurrent Neural Network for Text Classification.

TF-IDF Term Frequency–Inverse Document Frequency.

TN True Negative.

TP True Positive.

TPU Tensor Processing Unit.

VDCNN Very Deep Convolutional Neural Network.

VSM Vector Space Model.

WSD Word Sense Disambiguation.

ABBILDUNGSVERZEICHNIS ix

Abbildungsverzeichnis

2.1 Text classification process . . . . . . . . . . . . . . . . . . . . . . . . . . . 4

3.1 Architecture of FastText for text classification . . . . . . . . . . . . . . . . 12

3.2 Architecture of convolutional neural network for text classification . . . . . 13

3.3 Architecture of generative pre-training model for text classification . . . . . 18

3.4 Differences in pre-training model architectures . . . . . . . . . . . . . . . . 19

4.1 Word based skip-gram model . . . . . . . . . . . . . . . . . . . . . . . . . 23

4.2 Wikipedia link graph model . . . . . . . . . . . . . . . . . . . . . . . . . . 26

4.3 Anchor context model . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 27

5.1 Convolutional neural network with words and entities for short text classi-

fication . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 30

6.1 Unfolded vanilla recurrent neural network architecture . . . . . . . . . . . 35

6.2 Vanishing Gradient problem in recurrent neural network . . . . . . . . . . 36

6.3 Basic recurrent neural network architecture . . . . . . . . . . . . . . . . . . 39

6.4 Cell state of long short-term memory . . . . . . . . . . . . . . . . . . . . . 39

6.5 Input gate of long short-term memory . . . . . . . . . . . . . . . . . . . . . 40

6.6 Forget gate of long short-term memory . . . . . . . . . . . . . . . . . . . . 41

6.7 Output gate of long short-term memory . . . . . . . . . . . . . . . . . . . 42

6.8 Recurrent convolutional neural network for text classification . . . . . . . . 43

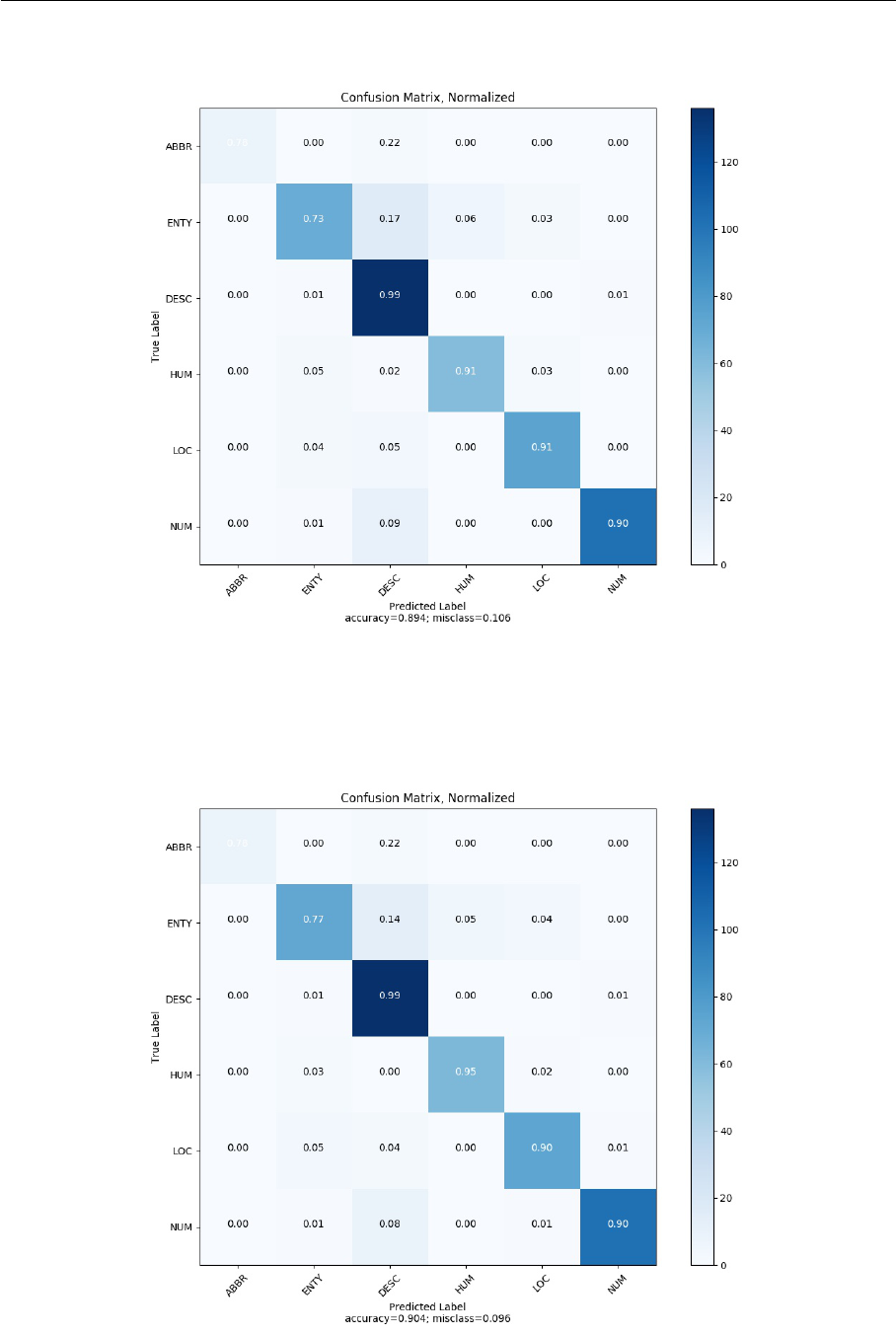

7.1 Baseline model (TextCNN) normalized confusion matrix on TREC . . . . . 64

7.2 Our model (EntCNN) normalized confusion matrix on TREC . . . . . . . 64

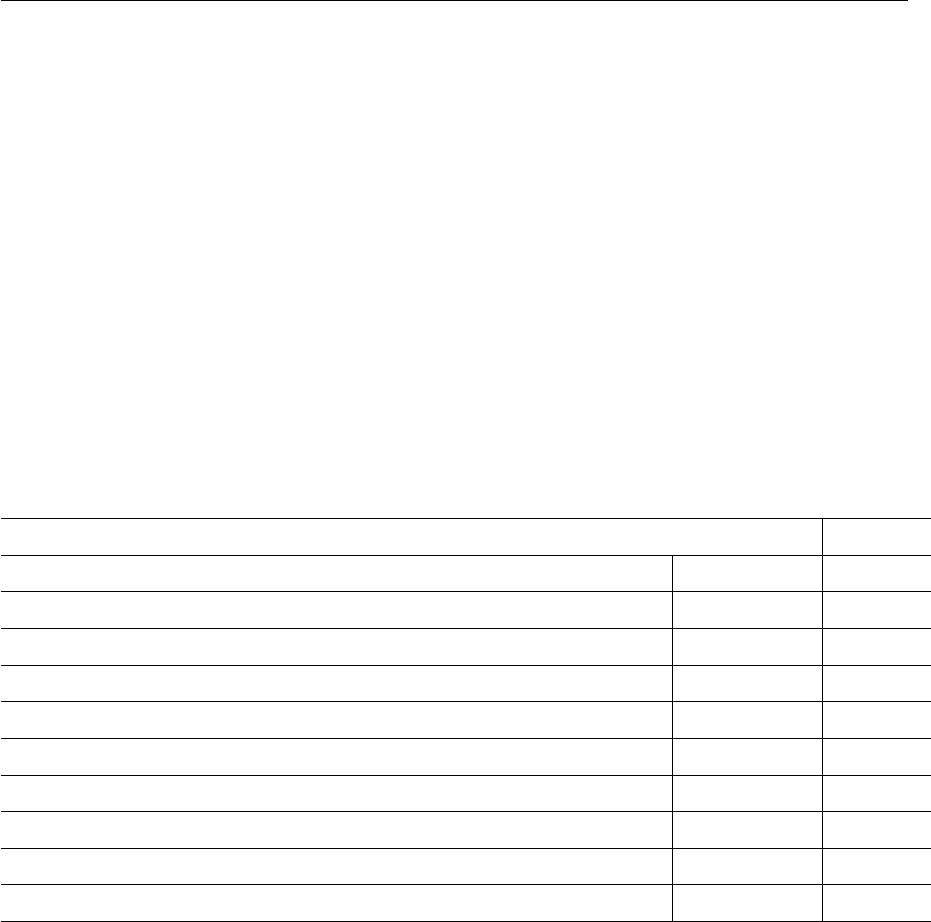

B.1 Baseline model (TextCNN) normalized confusion matrix on TREC . . . . . 71

B.2 Our model (EntCNN) normalized confusion matrix on TREC . . . . . . . 71

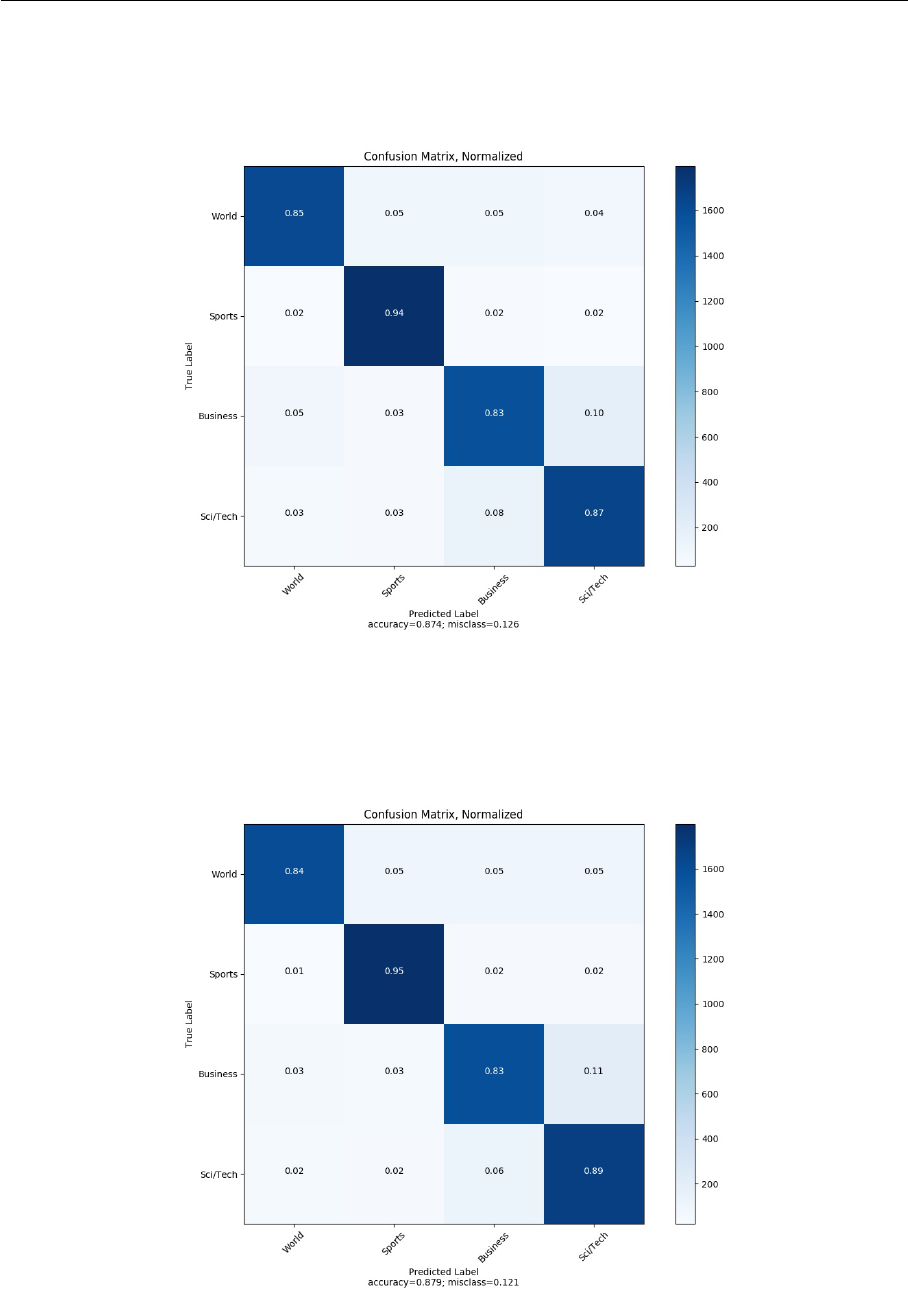

B.3 Baseline model (TextCNN) normalized confusion matrix on Twitter . . . . 72

B.4 Our model (EntCNN) normalized confusion matrix on Twitter . . . . . . . 72

TABELLENVERZEICHNIS x

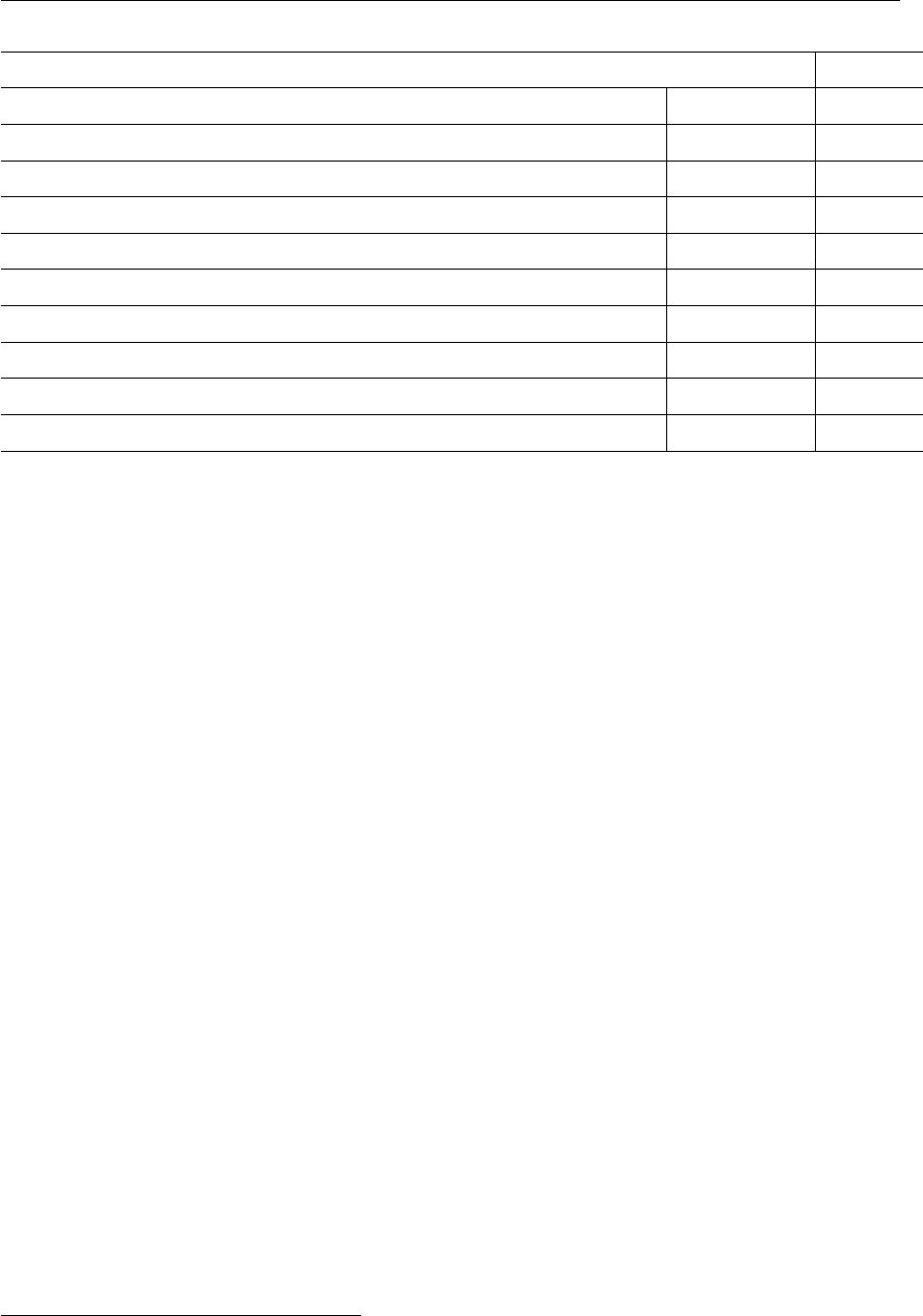

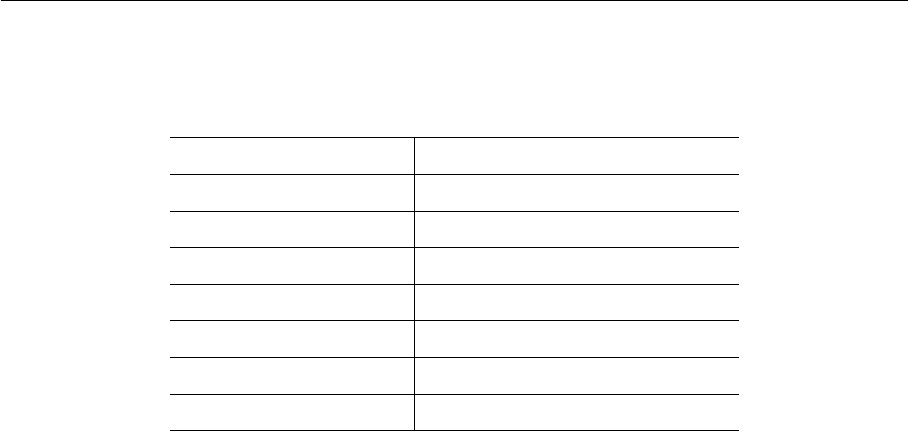

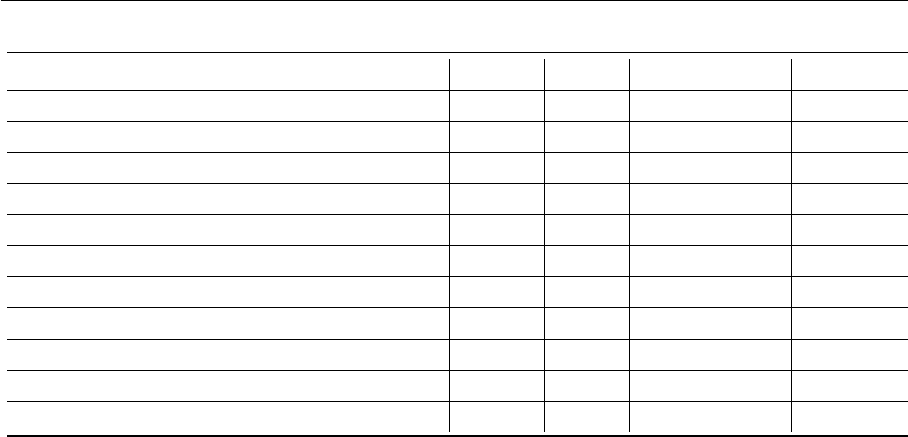

Tabellenverzeichnis

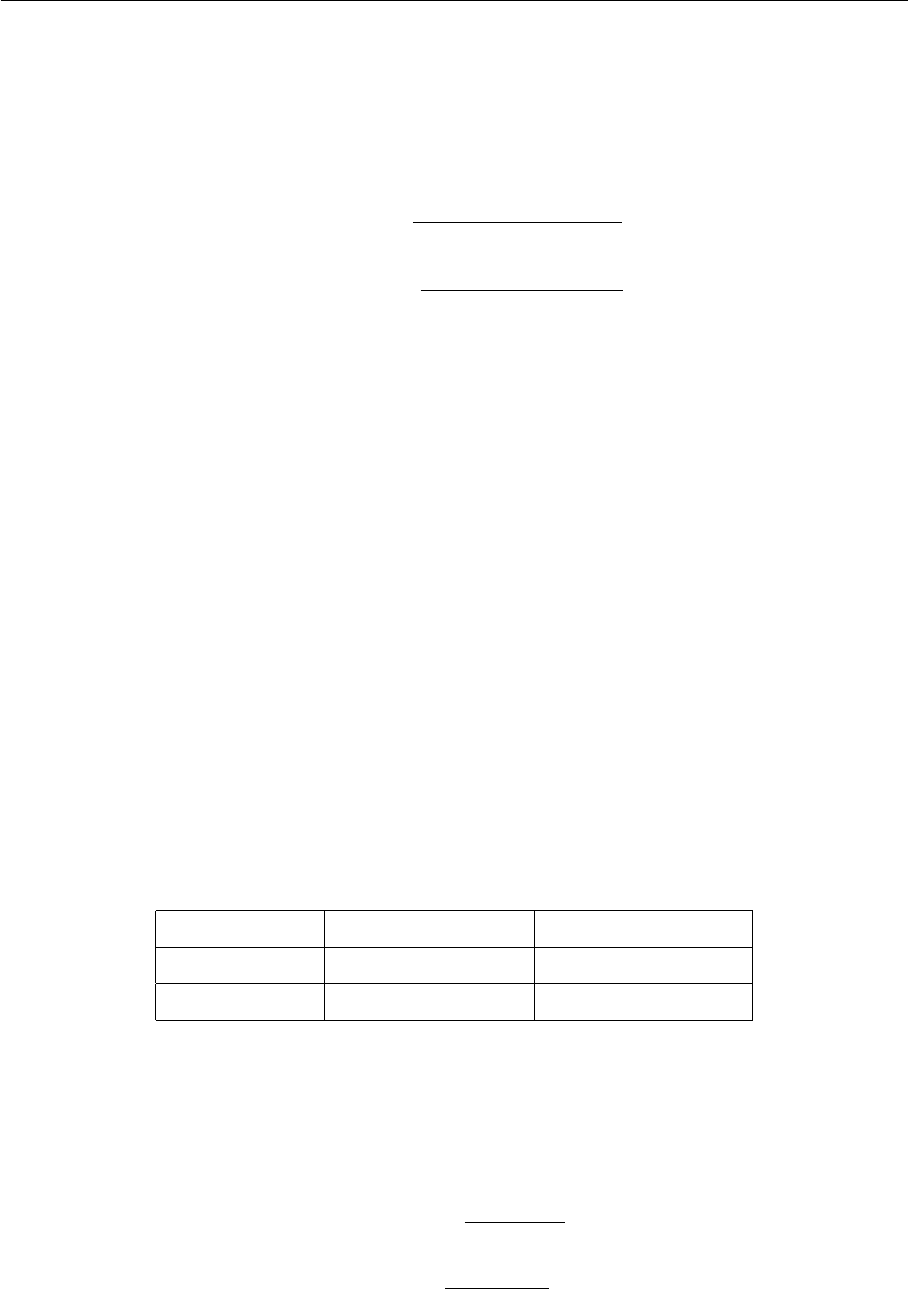

1 Chinese to English translation with different word segmentation methods . 5

2 Chinese to English translation with different word segmentation methods . 5

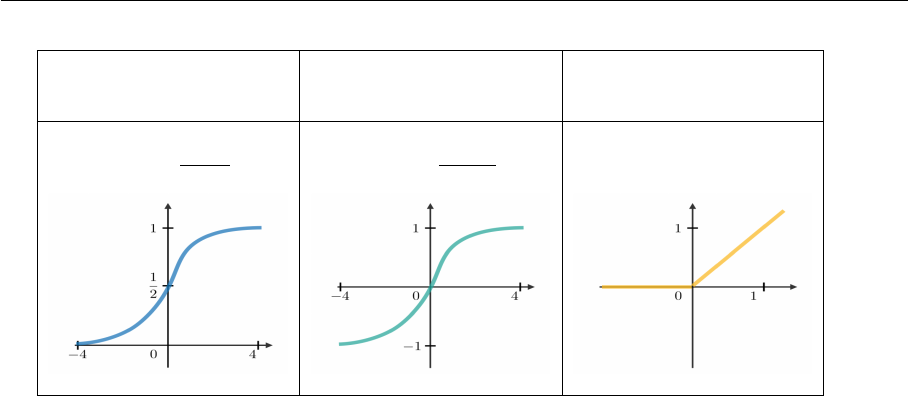

3 Activation Function . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 38

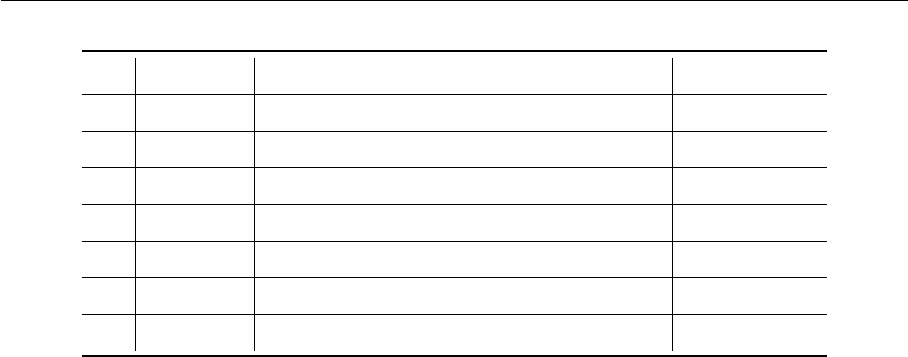

4 Summary statistics of datasets . . . . . . . . . . . . . . . . . . . . . . . . . 45

5 TREC class description . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 45

6 Example sentences of TREC dataset . . . . . . . . . . . . . . . . . . . . . 46

7 Example sentences of Twitter dataset . . . . . . . . . . . . . . . . . . . . . 46

8 Example sentences of AG News dataset . . . . . . . . . . . . . . . . . . . . 46

9 Example sentences of Movie Review dataset . . . . . . . . . . . . . . . . . 47

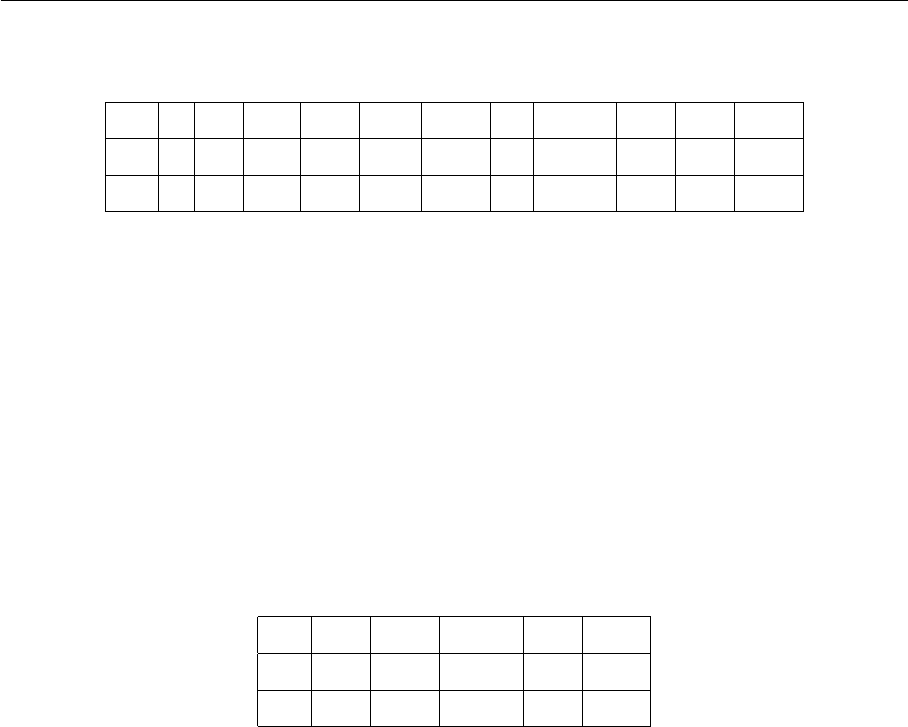

10 Document term matrix with stop words retained . . . . . . . . . . . . . . . 48

11 Document term matrix with stop words removed . . . . . . . . . . . . . . . 48

12 List of some widely used emoticons . . . . . . . . . . . . . . . . . . . . . . 51

13 List of regular expressions used to match emoticons . . . . . . . . . . . . . 52

14 Confusion matrix of binary classification . . . . . . . . . . . . . . . . . . . 53

15 Feature statistics on TREC . . . . . . . . . . . . . . . . . . . . . . . . . . 61

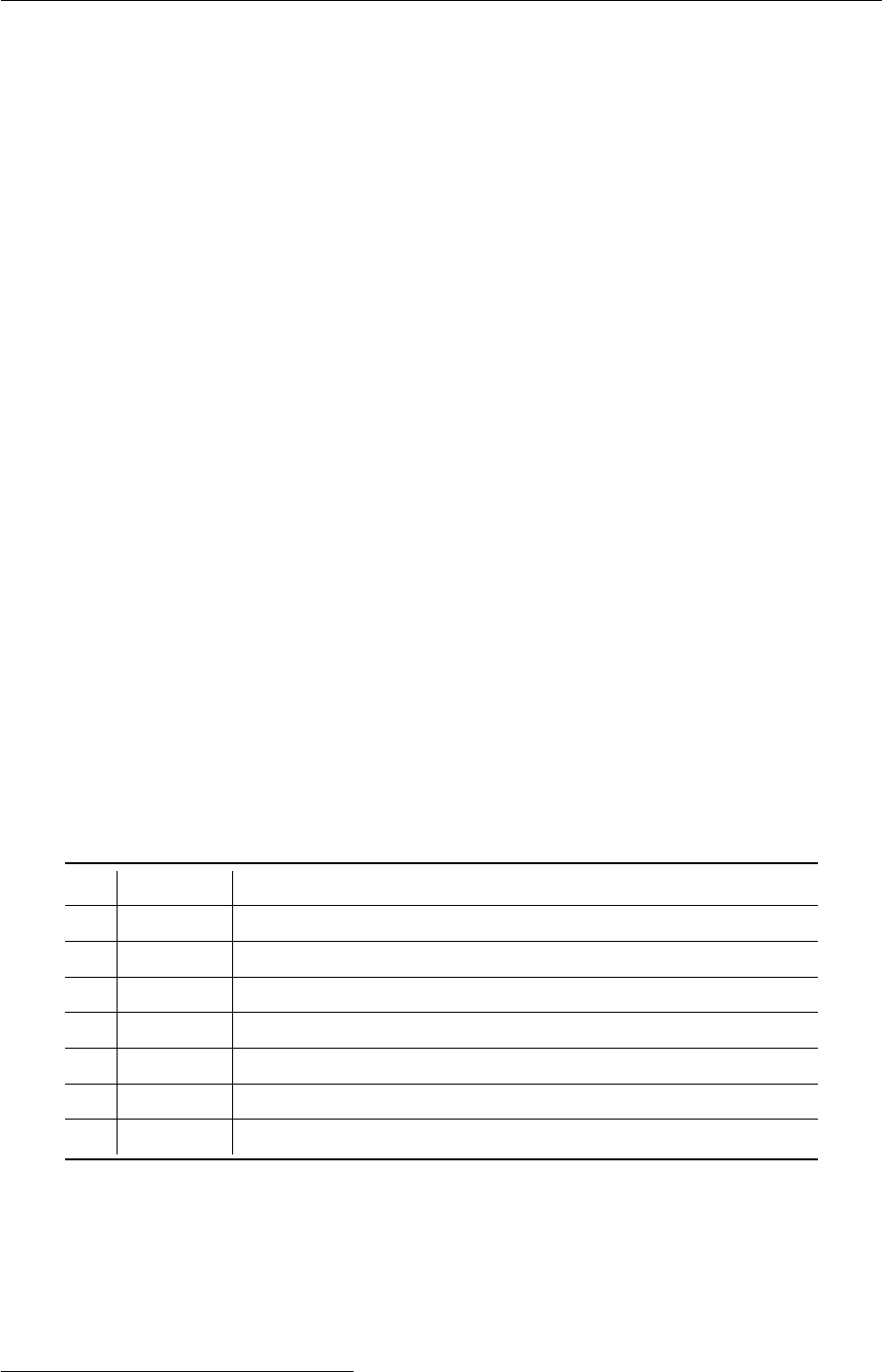

16 Experiment hyperparameters . . . . . . . . . . . . . . . . . . . . . . . . . . 62

17 Classification accuracy of different models on several different datasets . . . 63

18 Comparison between different preprocessing methods on Twitter . . . . . . 65

19 Comparison between different models . . . . . . . . . . . . . . . . . . . . . 66

20 Evaluation of EntCNN on AG News . . . . . . . . . . . . . . . . . . . . . . 66

21 Evaluation of TextCNN on AG News . . . . . . . . . . . . . . . . . . . . . 66

22 Evaluation of EntCNN on TREC . . . . . . . . . . . . . . . . . . . . . . . 66

23 Evaluation of TextCNN on TREC . . . . . . . . . . . . . . . . . . . . . . . 66

24 Feature statistics on Movie Review . . . . . . . . . . . . . . . . . . . . . . 69

25 Feature statistics on AG News . . . . . . . . . . . . . . . . . . . . . . . . . 69

26 Feature statistics on Twitter . . . . . . . . . . . . . . . . . . . . . . . . . . 70


1 Introduction

1.1 Motivation

The advent of the World Wide Web (WWW) and the digitization of all areas of our lives have led to an explosive growth of globally available data in recent decades. Among these resources, text data is the largest in quantity. How to automatically classify, organize and manage this vast amount of data has therefore become an important research topic. Natural language processing (NLP) refers to the analysis and processing of human natural language by computers; it is an interdisciplinary field between computer science and linguistics. Text classification, also known as text categorization, is a classical task in NLP. It aims to assign one or more predefined classes or categories to text documents. Text classification has a wide variety of application scenarios, for instance:

News filtering and organization. News websites publish a large number of articles, which need to be classified automatically by subject based on their content. For example, news can be divided into politics, economics, military, sports, entertainment, etc.

Sentiment analysis and opinion mining. On e-commerce websites, customers leave positive or negative reviews of products after completing a purchase. Merchants need to collect this feedback to learn what works well about a product or service and what should be done to improve the experience.

Email classification and spam filtering. It is often desirable to classify emails automatically, either to determine their subject or to separate junk email from legitimate messages. This can significantly improve the experience of mailbox users.

Document organization and retrieval. Media outlets receive a large number of submissions every day. We rely on text categorization technology to review these articles automatically and to flag pornographic, violent, spam advertising and other illegal content.

Traditional binary and multi-class text classification problems have been studied intensively in machine learning research [6][29]. However, when machine learning techniques are applied to short texts, most standard text classification approaches suffer from problems such as data sparsity and insufficient text length. Moreover, due to the lack of contextual information, short texts are highly ambiguous. As a result, simple text classification approaches based only on words cannot properly represent the critical features of short texts.

In this work, to overcome the shortness and sparsity problems of short texts mentioned above, we enrich the text representation by utilizing words together with the entities mentioned in the given document. Additionally, we compare the performance of the model using words only with that of the model using words as well as entities.

1.2 Contribution

In this work, a novel neural-network-based approach to short text classification is proposed. We utilize and modify the convolutional neural network for sentence classification proposed by Kim (2014) [28]. Our model is trained not only on top of pre-trained word vectors, but also on top of pre-trained entity vectors. Tagme, implemented by Ferragina et al. (2010) [20], is first used to extract named entities from documents. We then utilize the Wikipedia2Vec model developed by Yamada et al. (2018) [57] to obtain entity vectors. The impact of different kinds of word vectors on the classification accuracy is also studied in this work. Comprehensive experiments have been carried out to explore the classification performance of different neural network architectures.

1.3 Thesis Structure

The next chapter provides the background knowledge needed for a basic understanding of the text classification process. Chapter 3 introduces previous work on short text classification and on text classification with entities, which is similar to our work. In particular, various deep learning approaches for text classification and their advantages and disadvantages are discussed. Chapter 4 introduces the Wikipedia2Vec model and its submodels, since Wikipedia2Vec is used to obtain entity vectors in our work. The main contribution of this work, a convolutional neural network for text classification with words and entities, is presented in Chapter 5. Chapter 6 explains the basics of recurrent neural networks and long short-term memory. Our experiments, evaluation and analysis of the utilized models can be found in Chapter 7. Finally, Chapter 8 summarizes the important aspects of this work and briefly discusses possible future work.


2 Background

2.1 Text Classification

Text classification applications

Text classification can be used in a wide variety of real-world applications.

Broadly speaking, there are four different types of tasks. Binary classification is the task of classifying the texts of a given set into two classes. For example, given two labels, we just need to decide whether an email is spam or not spam, or whether a customer review is positive or negative. In multi-class classification, there is a set of classes a document can belong to, and we need to identify the single class it belongs to, for example whether a news article is about sports, science or politics. Multi-label classification is an extension of the previous type in which a given text can have multiple labels, so it can belong to several classes at once rather than just one. Finally, when we try to group a collection of documents whose labels are unknown, we need to learn the structure of the given documents ourselves; this is called clustering.
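The difference between the three supervised task types is easiest to see in how their targets are encoded. The following minimal Python sketch uses a one-hot vector for binary and multi-class labels and a multi-hot vector for multi-label targets; the class names are made-up examples, and this encoding is a common convention rather than something prescribed by this thesis.

```python
def one_hot(label, classes):
    """Binary / multi-class target: exactly one position is 1."""
    return [1 if c == label else 0 for c in classes]


def multi_hot(labels, classes):
    """Multi-label target: every position whose class applies is 1."""
    return [1 if c in labels else 0 for c in classes]


# Binary classification: two possible classes, exactly one is chosen.
binary = one_hot("spam", ["ham", "spam"])                             # [0, 1]
# Multi-class classification: many classes, still exactly one is chosen.
multiclass = one_hot("sports", ["politics", "science", "sports"])    # [0, 0, 1]
# Multi-label classification: a text may belong to several classes at once.
multilabel = multi_hot({"politics", "sports"}, ["politics", "science", "sports"])  # [1, 0, 1]
# Clustering has no target vector at all: only the documents are given.
```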

Text classification methods

There are three different types of methods that we can use to classify documents. In manual classification, a person decides by hand which category each document should belong to. This method has its merits: it is quite accurate and consistent, assuming it is done by experts in the domain. The downside is that it is slow and expensive. Rule-based classification is very accurate if the rules suit the task. Its shortcoming is that the rule-based system has to be built and maintained manually: whenever we want to add a new criterion, we need to come up with a new rule for it. The main idea of statistical classification methods is that the computer automatically learns how to classify documents. Such methods scale well, can be accurate if configured well, and are fully automatic. Their weakness is that they require labeled training data, i.e. the documents we want to classify together with their associated labels. Sometimes a huge amount of such data is needed, which is normally hard to obtain and can thus be a serious problem.

Text classification definition

In statistical classification, we assume that a text is represented by t and its corresponding class by c. We want to learn the probability that t belongs to class c, that is:

$$P(c \mid t) \tag{2.1}$$

We can think of text classification as a two-stage process. In the text representation stage, we convert the given documents into some (fixed-size) representation; this stage is about how to obtain t. In the classification stage, we assign a class to each document based on this representation; this stage is about how to learn P(c|t).
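Once P(c|t) has been learned, the classification stage usually commits to the most probable class. The decision rule below is the standard one, and the rewrite with Bayes' rule shows how generative classifiers such as naïve Bayes (Section 2.3) model the same quantity; it is a general illustration, not the specific formulation used later in this thesis. Here C denotes the set of predefined classes, and P(t) can be dropped because it does not depend on c.

$$
\hat{c} = \arg\max_{c \in C} P(c \mid t)
        = \arg\max_{c \in C} \frac{P(t \mid c)\,P(c)}{P(t)}
        = \arg\max_{c \in C} P(t \mid c)\,P(c)
$$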

Short text classification

Short texts are texts that are relatively short, generally no longer than 160 characters, such as tweets, chat messages, news titles, opinion comments, question texts, mobile phone text messages and document summaries.

2.2 Text Representation (Feature Engineering)

Feature engineering is often the most labor-intensive and time-consuming part of machine learning, but also an extremely important one. Unlike classifiers, feature engineering is not broadly reusable: it usually has to be combined with an understanding of the specific task. The NLP domain, to which text classification belongs, naturally has its own ways of dealing with features, and most of the work in traditional classification tasks is carried out at this stage.

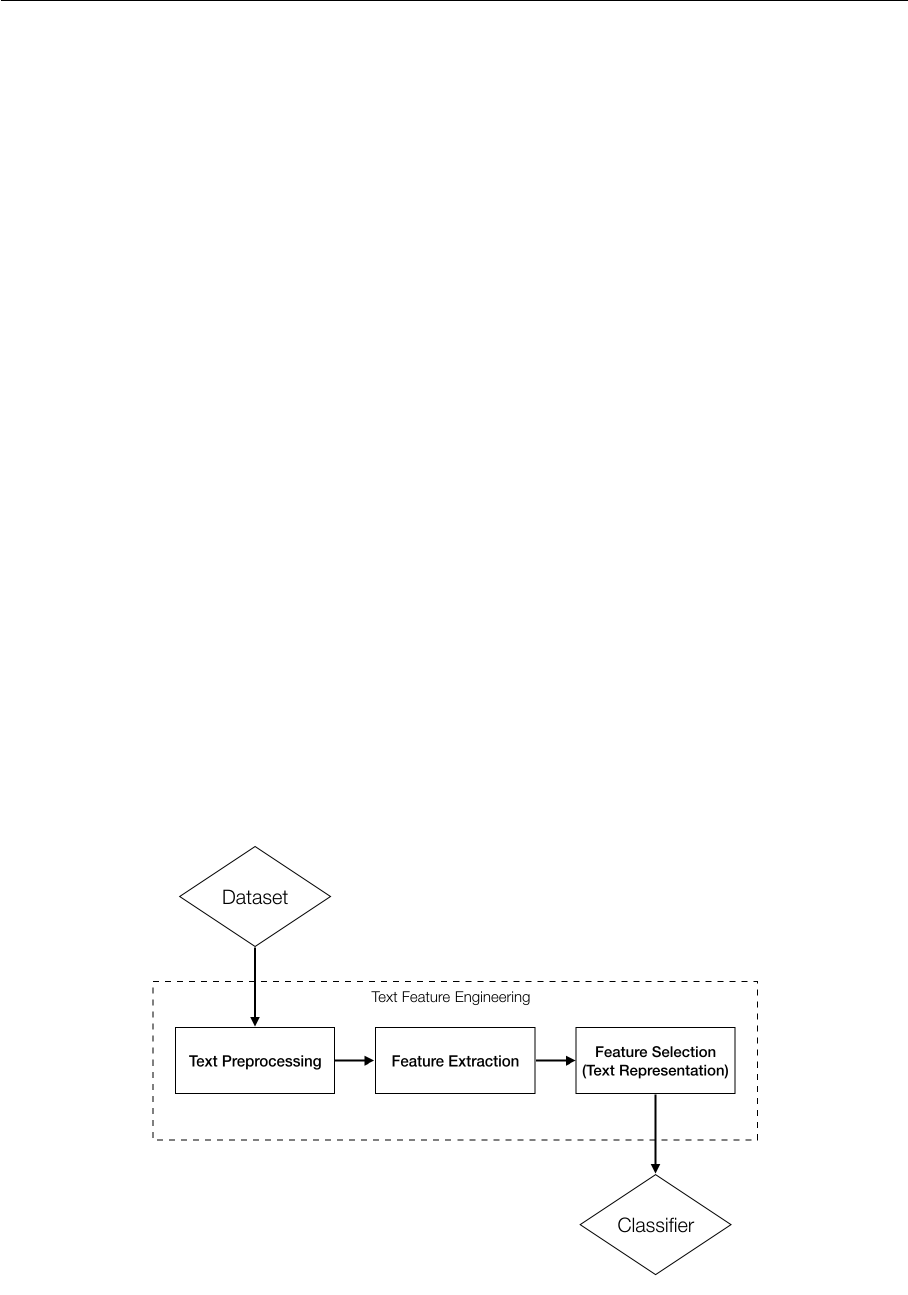

As shown in Figure 2.1, text feature engineering can be divided into three parts: text

preprocessing, feature extraction and feature selection.

Figure 2.1: Text Classification Process


Text preprocessing

Most text data in raw format are not well structured and contain lots of unnecessary information such as misspelled words, stop words, punctuation and emojis. Word segmentation in particular is considered a very important first step for Chinese NLP tasks because, unlike English and other Indo-European languages, Chinese text is written without spaces between words, and a word can be composed of multiple characters. Text preprocessing involves a variety of techniques to convert raw text data into cleaned and standardized sequences, which can further improve the performance of classifiers. Detailed preprocessing methods will be discussed in our experiments. Tables 1 and 2 show Chinese to English translations obtained with different word segmentation methods.

| Segmentation | English translation | Correct |
|---|---|---|
| 中外 科学 名著 | Chinese and foreign scientific masterpiece | ✓ |
| 中 外科学 名著 | mid surgery masterpiece | ✗ |

Table 1: Chinese to English translation of 中外科学名著 with different word segmentation methods

| Segmentation | English translation | Correct |
|---|---|---|
| 北京 大学生 前来 应聘 | Peking undergraduates come to apply for jobs | ✓ |
| 北京大学 生前 来 应聘 | Peking university while living come to apply for jobs | ✗ |

Table 2: Chinese to English translation of 北京大学生前来应聘 with different word segmentation methods
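The ambiguity in Tables 1 and 2 can be reproduced with forward and backward maximum matching, two classic dictionary-based segmentation heuristics. This is a minimal sketch and not the preprocessing used in this thesis: the dictionary `VOCAB` is a hypothetical toy lexicon chosen only for these two phrases, whereas real segmenters rely on large lexicons and statistical models.

```python
# Hypothetical toy lexicon covering the two example phrases of Tables 1 and 2.
VOCAB = {
    "北京", "北京大学", "大学", "大学生", "学生", "生前", "前来", "来", "应聘",
    "中外", "外科", "外科学", "科学", "名著",
}
MAX_LEN = 4  # length of the longest word in VOCAB, in characters


def forward_max_match(text, vocab=VOCAB, max_len=MAX_LEN):
    """Scan left to right, always taking the longest dictionary word."""
    tokens, i = [], 0
    while i < len(text):
        for size in range(min(max_len, len(text) - i), 0, -1):
            piece = text[i:i + size]
            # Fall back to a single character if no longer word matches.
            if size == 1 or piece in vocab:
                tokens.append(piece)
                i += size
                break
    return tokens


def backward_max_match(text, vocab=VOCAB, max_len=MAX_LEN):
    """Scan right to left, always taking the longest dictionary word."""
    tokens, j = [], len(text)
    while j > 0:
        for size in range(min(max_len, j), 0, -1):
            piece = text[j - size:j]
            if size == 1 or piece in vocab:
                tokens.insert(0, piece)
                j -= size
                break
    return tokens


for phrase in ["北京大学生前来应聘", "中外科学名著"]:
    print("forward: ", forward_max_match(phrase))
    print("backward:", backward_max_match(phrase))
```

With this lexicon, forward matching produces the wrong segmentation 北京大学 / 生前 / 来 / 应聘 but the correct 中外 / 科学 / 名著, while backward matching produces the correct 北京 / 大学生 / 前来 / 应聘 but the wrong 中 / 外科学 / 名著. Neither greedy direction is right for both phrases, which is why segmentation errors can propagate into downstream tasks such as classification.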

Feature extraction and text representation

The purpose of text representation is to convert preprocessed texts into a form that computers can process. It is the most important part, as it largely determines the quality of text classification. Traditional feature extraction techniques include syntactic word representations such as n-grams, weighted words such as bag-of-words (BoW), and semantic word representations (word embeddings) such as word2vec and GloVe. Note that the vector space model (VSM) [49] refers to the data structure used for each document, whereas BoW refers to the kind of information we extract from a document. They are different aspects of characterizing texts.

BoW is a simple but powerful model for representing text documents: all we need to do is convert text documents into vectors. Only uni-gram words are extracted; there is no syntax, no semantics and no word position information. Each document is converted into a vector that holds, for every distinct word of the document vector space, how often that word occurs in the document.

Here are two simple text documents:

(1) I don’t like this type of movie, but I like this one.

(2) I like this kind of movie, but I don’t like this one.

The uni-grams of these two text documents are:

I, don’t, like, this, type, of, movie, but, I, like, this, one

I, like, this, kind, of, movie, but, I, don’t, like, this, one

Each bag-of-words can be represented as:

BoW1 = {I:2, don’t:1, like:2, this:2, type:1, of:1, movie:1, but:1, one:1}

BoW2 = {I:2, like:2, this:2, kind:1, of:1, movie:1, but:1, don’t:1, one:1}

The document vector space is:

{I, don’t, like, this, type, of, movie, but, one, kind}

The corresponding document vectors are:

[2, 1, 2, 2, 1, 1, 1, 1, 1, 0]

[2, 1, 2, 2, 0, 1, 1, 1, 1, 1]
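The counting above can be reproduced in a few lines of Python, which also quantifies how similar the two vectors are via cosine similarity. This is a minimal sketch: the tokenizer is a simple regular expression that keeps apostrophes (the sentences are typed with an ASCII apostrophe here), and the vocabulary is ordered by first appearance to match the document vector space listed above.

```python
import math
import re
from collections import Counter

docs = [
    "I don't like this type of movie, but I like this one.",
    "I like this kind of movie, but I don't like this one.",
]


def tokenize(doc):
    # Keep apostrophes so that "don't" stays a single uni-gram; drop other punctuation.
    return re.findall(r"[\w']+", doc)


token_lists = [tokenize(d) for d in docs]
# Document vector space: every distinct word, in order of first appearance.
vocabulary = list(dict.fromkeys(w for tokens in token_lists for w in tokens))
vectors = [[counts[w] for w in vocabulary] for counts in map(Counter, token_lists)]


def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


print(vocabulary)  # ['I', "don't", 'like', 'this', 'type', 'of', 'movie', 'but', 'one', 'kind']
print(vectors)     # [[2, 1, 2, 2, 1, 1, 1, 1, 1, 0], [2, 1, 2, 2, 0, 1, 1, 1, 1, 1]]
print(round(cosine(*vectors), 3))  # 0.944
```

A cosine similarity of 17/18 ≈ 0.944 for two sentences with opposite meanings illustrates the shortcoming discussed next.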

The biggest shortcoming of this representation is that it ignores the context of words. Every word is treated independently of the others, so the representation cannot capture the semantic information of texts. In the example above, the two document vectors are extremely similar, which suggests that the two sentences very likely have the same meaning, yet they mean exactly the opposite. Moreover, the vocabulary in real-world applications can easily reach a million words, so the BoW model suffers from two serious problems: high dimensionality and high sparsity. Since BoW is the basis of the vector space model, we can reduce the dimensionality in the vector space model using feature selection methods and increase the density by calculating feature weights.

Several algorithms are used to reduce dimensionality, such as principal component analysis (PCA), independent component analysis (ICA), linear discriminant analysis (LDA) and latent semantic analysis (LSA). The textual representations obtained with these machine learning methods are generally considered deeper representations of the documents. In addition, distributed semantic word representations are seen as the foundation of deep learning methods.

Feature selection

When performing text categorization, it is often necessary to extract features from the documents. Normally, we train the model using only the subset of features that are useful for learning rather than all of them, since using all features eventually leads to the curse of dimensionality [8].

The feature selection process includes selecting among the extracted features and calculating feature weights.

The basic idea of feature selection is to rank the original features independently according to an evaluation metric, then select the features with the highest scores and filter out the rest. Commonly used supervised feature selection methods include Relief, the Fisher score and methods based on information gain [35], among others [16]. Note that feature selection should be distinguished from feature extraction: feature extraction creates new features from the original data, whereas feature selection returns a subset of the initial features. Feature weight calculation mainly refers to the classic statistical method term frequency–inverse document frequency (TF-IDF) and its extensions.
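As an illustration of feature weighting followed by ranking-based selection, the sketch below computes one common TF-IDF variant, tf(t, d) · log(N / df(t)) with tf taken as the relative term frequency, and then keeps the k terms with the highest weight. Many other TF-IDF variants exist (for example with smoothed idf or sublinear tf), and ranking terms by their maximum TF-IDF is an unsupervised stand-in chosen for brevity, not one of the supervised criteria named above; the toy corpus is hypothetical.

```python
import math
from collections import Counter


def tf_idf(docs):
    """Return one {term: weight} dict per tokenized document.

    Uses relative term frequency times log(N / df); other variants exist.
    """
    n_docs = len(docs)
    # Document frequency: in how many documents each term occurs at least once.
    df = Counter(term for doc in docs for term in set(doc))
    weights = []
    for doc in docs:
        counts = Counter(doc)
        weights.append(
            {t: (c / len(doc)) * math.log(n_docs / df[t]) for t, c in counts.items()}
        )
    return weights


def select_top_k(weights, k):
    """Rank terms by their highest weight in any document and keep the top k."""
    best = {}
    for doc_weights in weights:
        for term, w in doc_weights.items():
            best[term] = max(best.get(term, 0.0), w)
    # Sort by descending score; ties are broken alphabetically for determinism.
    return sorted(best, key=lambda t: (-best[t], t))[:k]


corpus = [
    ["the", "cat", "sat"],
    ["the", "dog", "sat"],
    ["the", "dog", "barked"],
]
w = tf_idf(corpus)
print(w[0]["the"])         # 0.0: a term occurring in every document carries no weight
print(select_top_k(w, 2))  # ['barked', 'cat']
```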

2.3 Text Classification (Classifiers)

Discriminative methods, which directly estimate a decision boundary (such as logistic regression and support vector machines (SVM)), generative models, which build a generative statistical model (such as naïve Bayes), and instance-based classifiers, which use the observations directly (such as k-nearest neighbors (K-NN)), are more traditional but still commonly used machine learning classification approaches. Tree-based classification algorithms, such as decision trees and random forests, can be fast and accurate for text classification.
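To connect these classifiers with P(c|t) from Section 2.1, here is a minimal multinomial naïve Bayes sketch in plain Python. It assumes whitespace tokenization and add-one (Laplace) smoothing, and it ignores words never seen in training; the tiny spam/ham data set is hypothetical. Libraries such as scikit-learn offer production implementations (e.g. `MultinomialNB`).

```python
import math
from collections import Counter


class MultinomialNaiveBayes:
    def fit(self, texts, labels):
        self.classes = sorted(set(labels))
        # Prior P(c): relative frequency of each class in the training labels.
        self.prior = {c: labels.count(c) / len(labels) for c in self.classes}
        self.word_counts = {c: Counter() for c in self.classes}
        for text, label in zip(texts, labels):
            self.word_counts[label].update(text.split())
        self.vocab = set().union(*self.word_counts.values())
        self.totals = {c: sum(self.word_counts[c].values()) for c in self.classes}
        return self

    def log_joint(self, text):
        """log P(c) + sum of log P(w|c), i.e. log P(t, c) up to the ignored words."""
        scores = {}
        for c in self.classes:
            score = math.log(self.prior[c])
            for w in text.split():
                if w in self.vocab:  # words never seen in training are ignored
                    score += math.log(
                        (self.word_counts[c][w] + 1) / (self.totals[c] + len(self.vocab))
                    )
            scores[c] = score
        return scores

    def predict_proba(self, text):
        """Normalize the joint scores into the posterior P(c|t)."""
        scores = self.log_joint(text)
        m = max(scores.values())
        exp = {c: math.exp(s - m) for c, s in scores.items()}
        z = sum(exp.values())
        return {c: v / z for c, v in exp.items()}

    def predict(self, text):
        proba = self.predict_proba(text)
        return max(proba, key=proba.get)


train_texts = ["cheap pills buy now", "buy cheap watches",
               "meeting agenda for monday", "lunch on monday"]
train_labels = ["spam", "spam", "ham", "ham"]
model = MultinomialNaiveBayes().fit(train_texts, train_labels)
print(model.predict("cheap pills"))                           # spam
print(round(model.predict_proba("cheap pills")["spam"], 3))   # 0.857
print(model.predict("lunch on monday"))                       # ham
```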

Deep learning has had a significant influence on the fields of image processing, speech recognition and NLP. Its influence on NLP, and especially on text classification, is mainly reflected in the following aspects.

End-to-end training

In the past, statistical natural language processing required experts to define various features, which demanded a lot of domain knowledge, and sometimes a good feature was not easy to find. With end-to-end training, as long as there are input-output pairs, all we need to do is label the output corresponding to each input to form a training data set. The learning system can then be established through automatic training of neural networks, without manual settings or carefully designed features. This has changed the development of many NLP technologies and greatly lowered their technical barriers: as long as we have enough computational resources (GPU, TPU) and labeled data, we can implement an NLP model and often obtain good results, which has promoted the popularity of NLP technologies.

Semantic representation (word embeddings)

One commonly used kind of semantic representation is context-free embedding, meaning that the representation of a word (a multi-dimensional vector) is fixed regardless of its context. The other kind is context-dependent: since the meaning of the same word can differ across sentences, its embedding differs accordingly. With models such as BERT and GPT, we can obtain such dynamic embeddings of a word based on its context.

Pre-trained model

Neural networks are trained on data: they extract information from the data and store it in their weights. These weights can then be extracted and transferred to other neural networks. Because the learned features are transferred, we do not need to train a new model from scratch when solving a similar problem; instead, we can start from a model that has already been trained on a similar problem. By using pre-trained models, which were previously trained on massive amounts of data, we can apply their structures and weights directly to the problem at hand. This process is called transfer learning. Since the pre-trained model is already well trained, we do not need to change many weights; we only need to adjust some parameters, which is called fine-tuning. As a result, pre-trained models can significantly reduce training time.

Sentence encoding methods (CNN/RNN/LSTM/GRU/Transformer)

A sentence of variable length can be encoded with an RNN, LSTM, GRU or Transformer. Although all of these encoding methods are feasible, Transformers have recently become the preferred choice for encoding sentences. The encoded sentences can be used in various NLP applications such as machine translation, question answering, language modeling, sentiment analysis and text classification.
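The key property of RNN-style encoders is that a sequence of any length is folded into a vector of fixed size. A minimal sketch of a vanilla (Elman) RNN in plain Python makes this concrete: h_t = tanh(W_x x_t + W_h h_{t-1} + b), and the last hidden state serves as the sentence encoding. The weights below are arbitrary hypothetical values; in practice they are learned, and LSTM or GRU cells replace the plain tanh update (see Chapter 6).

```python
import math


def rnn_encode(sequence, W_x, W_h, b):
    """Fold a variable-length list of input vectors into one hidden vector.

    Vanilla RNN update: h_t = tanh(W_x x_t + W_h h_{t-1} + b), with h_0 = 0.
    The final hidden state h_T is returned as the sentence encoding.
    """
    hidden_size = len(b)
    h = [0.0] * hidden_size
    for x in sequence:
        # The comprehension reads the previous h before h is rebound.
        h = [
            math.tanh(
                sum(W_x[i][j] * x[j] for j in range(len(x)))
                + sum(W_h[i][k] * h[k] for k in range(hidden_size))
                + b[i]
            )
            for i in range(hidden_size)
        ]
    return h


# Hypothetical toy parameters: 2-dimensional inputs, 3-dimensional hidden state.
W_x = [[0.5, -0.2], [0.1, 0.3], [-0.4, 0.2]]
W_h = [[0.1, 0.0, 0.2], [0.0, 0.1, 0.0], [0.3, 0.0, 0.1]]
b = [0.0, 0.1, -0.1]

short_sentence = [[1.0, 0.0], [0.0, 1.0]]  # two "word vectors"
long_sentence = short_sentence * 5         # ten "word vectors"
print(len(rnn_encode(short_sentence, W_x, W_h, b)))  # 3
print(len(rnn_encode(long_sentence, W_x, W_h, b)))   # 3
```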

Attention

The Encoder-Decoder model [52], or sequence-to-sequence (seq2seq) model, was first introduced in 2014 by Google. In an Encoder-Decoder neural network, one set of RNNs / LSTMs / GRUs encodes an input sequence into a fixed-length internal representation, and another set reads this internal representation and decodes it into an output sequence. The input and output sequences may differ in length. This Encoder-Decoder architecture has achieved excellent results on a wide range of NLP tasks, such as question answering and machine translation. Nevertheless, it forces the network to compress all the necessary information of an input sequence into a fixed-length internal vector, which makes it difficult to deal with long sequences, especially those in the test set that are longer than the sequences in the training set [7]. First, the internal semantic vector cannot fully represent the information of the entire input sequence. Second, information from earlier inputs is overwritten by newer inputs.

The attention mechanism frees the Encoder-Decoder structure from the fixed-length internal representation. It keeps the encoder's intermediate outputs from every step of the input sequence and trains the model to selectively focus on these inputs and relate them to the items of the output sequence. In other words, each item of the output sequence depends on a weighted selection of the items of the input sequence.[1]
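The following sketch illustrates the idea for a single decoder step. It assumes dot-product scoring, which is only one of several possible scoring functions; Bahdanau et al. [7] use an additive score. Each stored encoder output is scored against the current decoder state, and the scores are normalized with a softmax. The context vector is then the weighted sum of the encoder outputs. The function names and toy vectors are hypothetical.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def attend(decoder_state, encoder_outputs):
    """Return (weights, context) for one decoder step.

    Each encoder output is scored by its dot product with the decoder state
    (an assumed scoring function). The scores are normalized with softmax,
    and the context vector is the weighted sum of the encoder outputs.
    """
    weights = softmax([dot(decoder_state, h) for h in encoder_outputs])
    dim = len(encoder_outputs[0])
    context = [sum(w * h[k] for w, h in zip(weights, encoder_outputs))
               for k in range(dim)]
    return weights, context


# Toy example: three encoder outputs of dimension 2 and one decoder state.
encoder_outputs = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
weights, context = attend([2.0, 0.0], encoder_outputs)
print([round(w, 3) for w in weights])
```

In this example, the first and third encoder outputs match the decoder state equally well, so they receive equal and larger weights. These per-step weights are the quantities that attention visualizations display.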

Although this mechanism increases the model's computational complexity, it can lead to better performance, in some cases state-of-the-art results. In addition, the attention weights show which parts of the input sequence the model attends to when predicting each output item. In computer vision, several approaches for understanding and visualizing CNNs have been developed. In NLP, by contrast, it is usually hard to visualize and understand what neural networks have learned.[5] Attention therefore helps us understand and analyze what the model focuses on, and how strongly, for a particular input-output pair. Further examples of attention visualization can be found in machine translation[7], text summarization[48], speech recognition[13] and image description generation[56].


3 Related Work

A central topic in text classification is feature representation, which is commonly based on the bag-of-words (BoW) model. In addition, feature weighting and selection methods such as TF-IDF, LSA and sLDA are applied to obtain more discriminative features. The major classifiers of the traditional machine learning era are naïve Bayes, maximum entropy, k-nearest neighbors (k-NN), decision trees and support vector machines (SVM). Traditional machine learning algorithms for text classification are well summarized in [6][29].
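The following minimal sketch makes the BoW/TF-IDF representation concrete by building TF-IDF vectors for a tiny tokenized corpus. It assumes raw term counts for TF and the unsmoothed IDF $\log(N/\mathrm{df})$. Libraries such as scikit-learn use smoothed variants, so their numbers differ.

```python
import math
from collections import Counter


def tfidf(docs):
    """Compute TF-IDF weights for a list of tokenized documents.

    TF is the raw count of a term in a document; IDF is log(N / df),
    where df is the number of documents that contain the term.
    """
    n_docs = len(docs)
    df = Counter(term for doc in docs for term in set(doc))
    vectors = []
    for doc in docs:
        tf = Counter(doc)
        vectors.append({term: count * math.log(n_docs / df[term])
                        for term, count in tf.items()})
    return vectors


docs = [["the", "movie", "was", "great"],
        ["the", "movie", "was", "boring"],
        ["great", "acting"]]
for vec in tfidf(docs):
    print({t: round(w, 3) for t, w in sorted(vec.items())})
```

A term such as "boring", which occurs in only one document, receives a higher weight than a term shared across documents such as "the".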

3.1 Text Classification

In the deep learning era, text categorization no longer requires hand-crafted feature design, text similarity comparison or distance definitions. It still involves choices about text preprocessing, feature extraction, model selection, parameter optimization and input encoding (one-hot, n-gram, etc.), as well as the trade-off against time cost.

In summary, there are six main types of deep learning models:

(1) Vector representation of words: word2vec, GloVe, FastText, BERT, GPT, ELMo, etc.

(2) Convolutional neural network feature extraction: TextCNN, CharCNN, DCNN, VDCNN, etc.

(3) Pre-training & multi-task learning: BERT, GPT, ELMo, etc.

(4) Context mechanism: TextRNN, BiRNN, RCNN, etc.

(5) Memory storage mechanism: EntNet, DMN, etc.

(6) Attention mechanism: HAN, etc.

The following subsections describe these models in some detail and give an overall picture of how deep learning has been applied to text classification in recent years.

3.1.1 Vector Representation of Words

FastText[25] was proposed by Mikolov's team at Facebook in 2016. It offers little methodological novelty but performs very well. FastText is a shallow network that can often match the accuracy of deep networks in text classification, while training many orders of magnitude faster. Its core idea is to average the word and n-gram vectors of the whole document into a document vector, which is then classified with a softmax over the classes. Two techniques are involved: character-level n-gram features and hierarchical softmax classification. Hierarchical softmax is also used in word2vec, which we discuss in the next chapter, so here we only focus on character-level n-grams.

Word2vec treats each word in the corpus as an atomic unit and generates one vector per word, ignoring the word's internal morphological structure. For example, "country" and "countries" share many characters, i.e., they have similar internal forms. In the traditional word2vec model, however, this internal information is lost because the two words are mapped to different IDs. To overcome this problem, FastText represents a word by its character-level n-grams. For the word "country" and n = 3, the trigrams are:

"<co", "cou", "oun", "unt", "ntr", "try", "ry>"

where "<" and ">" are special boundary symbols that mark the beginning and the end of the word. The word "country" can then be represented by these seven trigrams: its vector is obtained by summing the vectors of the seven trigrams.
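The trigram decomposition above can be reproduced in a few lines of Python. The function name is hypothetical. Real FastText also uses a range of n values and keeps the whole word "<country>" as one extra feature; this sketch omits both.

```python
def char_ngrams(word, n=3):
    """Return the character n-grams of `word` after adding the boundary
    symbols '<' (start of word) and '>' (end of word)."""
    padded = "<" + word + ">"
    return [padded[i:i + n] for i in range(len(padded) - n + 1)]


print(char_ngrams("country"))
# ['<co', 'cou', 'oun', 'unt', 'ntr', 'try', 'ry>']
```

For instance, "country" and "countries" share the five trigrams "<co", "cou", "oun", "unt" and "ntr". This overlap is how the model captures their similar internal form.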

This brings two benefits. First, it produces better vectors for low-frequency words, because their n-grams are shared with other words. Second, vectors for out-of-vocabulary (OOV) words can still be built by summing their character-level n-gram vectors. Word2vec, by contrast, has no vector for an OOV word, which is then typically represented by a zero or randomly initialized vector.
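The next minimal sketch shows how an OOV word can still receive a vector: each of its character trigrams gets a vector, and these vectors are summed. The vector table is hypothetical and filled lazily with random values that stand in for trained n-gram vectors. Real FastText also hashes n-grams into a fixed number of buckets, which is omitted here.

```python
import random

DIM = 4
rng = random.Random(0)
ngram_vectors = {}  # hypothetical table: trigram -> vector (stands in for trained values)


def char_ngrams(word, n=3):
    """Character n-grams of `word` with '<' and '>' boundary symbols."""
    padded = "<" + word + ">"
    return [padded[i:i + n] for i in range(len(padded) - n + 1)]


def ngram_vector(gram):
    """Return the vector for an n-gram, creating a random one on first use."""
    if gram not in ngram_vectors:
        ngram_vectors[gram] = [rng.uniform(-1, 1) for _ in range(DIM)]
    return ngram_vectors[gram]


def word_vector(word):
    """Sum the trigram vectors of `word`; works for seen and unseen words."""
    vec = [0.0] * DIM
    for gram in char_ngrams(word):
        vec = [a + b for a, b in zip(vec, ngram_vector(gram))]
    return vec


word_vector("country")             # creates vectors for the trigrams of "country"
print(word_vector("countryside"))  # OOV word reuses <co, cou, oun, unt, ntr, try
```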

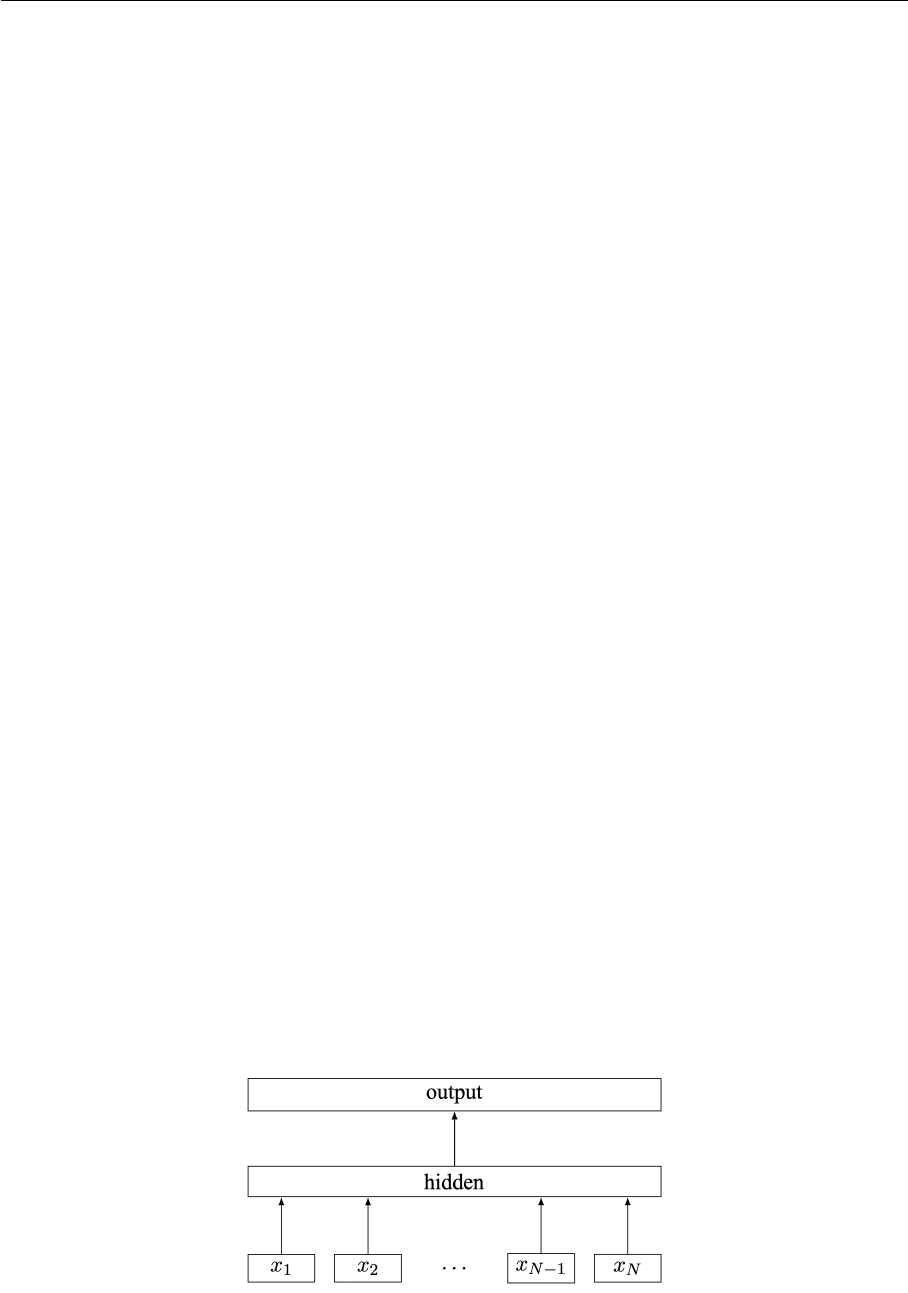

Figure 3.1: Model architecture of FastText for a sentence with N n-gram features $x_1, \ldots, x_N$. The features are embedded and averaged to form the hidden variable.


As shown in Figure 3.1, FastText, like Continuous Bag of Words (CBOW), has three layers: an input layer, a hidden layer and an output layer (hierarchical softmax). The two models have several things in common. Their input consists of multiple words represented as vectors, each has a specific target value, and the hidden layer is the average of multiple word vectors. They differ in three ways. First, the input of CBOW is the context of the target word. The input of FastText is the words of a document together with their character-level n-gram features, which jointly represent that document. Second, the input words of CBOW are one-hot vectors, whereas the input of FastText consists of embedded vectors. Third, the output of CBOW is the target word, whereas the output of FastText is the class label of the document.
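The forward pass just described can be sketched as follows: embed the features, average them into the hidden vector, then apply a linear layer and a softmax over the classes. The sketch makes several simplifications. It uses a plain softmax instead of hierarchical softmax, and its weights are random and untrained. All names are hypothetical. It only illustrates the data flow and does not show training.

```python
import math
import random

rng = random.Random(42)
DIM, N_CLASSES = 8, 3
embeddings = {}  # hypothetical feature -> vector table (words and word n-grams)
W = [[rng.gauss(0.0, 0.1) for _ in range(DIM)] for _ in range(N_CLASSES)]  # output layer


def embed(feature):
    """Look up a feature vector, creating a random (untrained) one on first use."""
    if feature not in embeddings:
        embeddings[feature] = [rng.gauss(0.0, 0.1) for _ in range(DIM)]
    return embeddings[feature]


def predict_proba(features):
    """Average the feature embeddings, then apply a linear layer and softmax."""
    vecs = [embed(f) for f in features]
    hidden = [sum(column) / len(vecs) for column in zip(*vecs)]
    logits = [sum(w * h for w, h in zip(row, hidden)) for row in W]
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Features of a short document: its words plus one word bigram (an assumption).
words = ["great", "movie"]
features = words + [" ".join(words)]
probs = predict_proba(features)
print(probs)
```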

In short, FastText is a very simple but powerful classification model. For reasons of space, global vectors for word representation (GloVe) will not be discussed here.

3.1.2 Convolutional Neural Network Feature Extraction

TextCNN[28], which has become a classical deep learning model in text categorization,

was proposed by Kim in 2014.

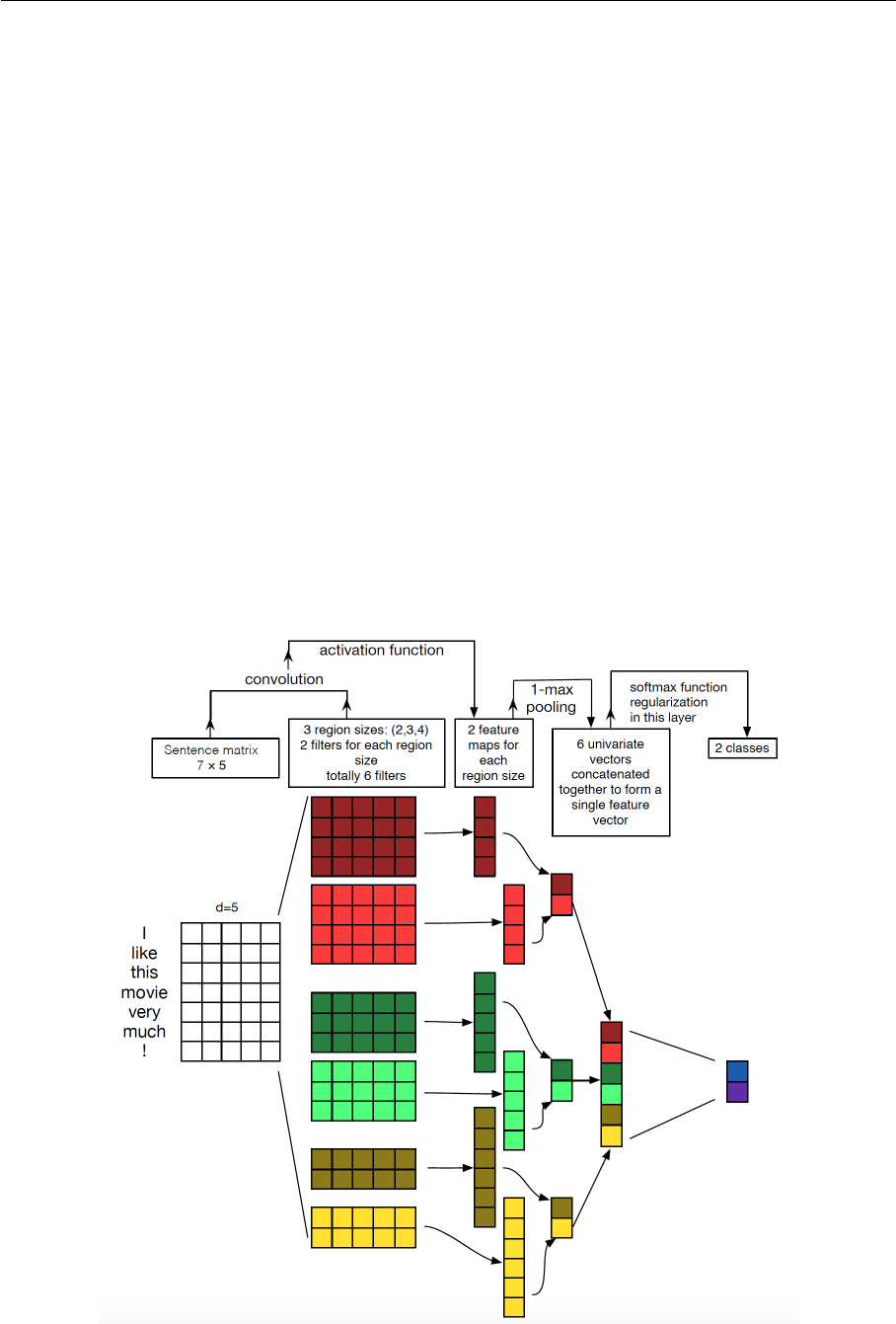

Figure 3.2: CNN architecture for sentence classification (adapted from Zhang et al. 2015 [63])


As shown in Figure 3.2, the input layer is a 7 × 5 matrix: the sentence length is 7 and the word vector dimension is 5. The second layer uses three sets of convolution kernels with widths of 2, 3 and 4, with two kernels of each width. Each kernel slides over the whole sentence to generate a feature map, and in the figure no padding is used. A kernel of width w over a sentence of length 7 therefore produces a feature map of length 7 − w + 1; for example, a kernel of width 4 yields a feature map of length 4. 1-max pooling is then performed over each map, so each of the 6 maps yields a single value. These 6 values are concatenated to form the final feature vector. The output layer receives this feature vector as input and uses it to classify the sentence.
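The shape arithmetic of Figure 3.2 can be checked with a small pure-Python sketch. It uses a 7 × 5 input, two kernels for each width 2, 3 and 4, no padding, and 1-max pooling. The input and kernel values are random placeholders. The sketch omits the bias, the nonlinearity and the output layer.

```python
import random

rng = random.Random(0)
SENT_LEN, EMB_DIM = 7, 5
sentence = [[rng.uniform(-1, 1) for _ in range(EMB_DIM)] for _ in range(SENT_LEN)]


def convolve(sentence, kernel):
    """Slide a (width x EMB_DIM) kernel over the sentence without padding.

    Returns a feature map of length len(sentence) - width + 1.
    """
    width = len(kernel)
    return [sum(kernel[r][c] * sentence[i + r][c]
                for r in range(width) for c in range(EMB_DIM))
            for i in range(len(sentence) - width + 1)]


features = []
for width in (2, 3, 4):
    for _ in range(2):  # two kernels per width
        kernel = [[rng.uniform(-1, 1) for _ in range(EMB_DIM)] for _ in range(width)]
        fmap = convolve(sentence, kernel)
        print(f"width {width}: feature map length {len(fmap)}")
        features.append(max(fmap))  # 1-max pooling: one value per map

print(len(features))  # 6 values concatenated into the final feature vector
```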

Over the last several years, deep learning methods, especially convolutional neural networks (CNN), have been shown to outperform previous state-of-the-art machine learning techniques in computer vision. When CNNs were first applied to NLP, however, different problems arose.

The max pooling operation in CNNs has two advantages. First, it makes the extracted features invariant to position and rotation: no matter where a feature appears in a sentence, it can be extracted. Second, max pooling reduces the size of the representation and thus the number of parameters in subsequent layers, which helps reduce over-fitting. In NLP, however, position invariance is not necessarily a good thing, because in many NLP applications the location of a feature matters. For example, negation words in different positions give completely different sentence meanings:

I don’t like this type of movie, but I like this one.

I like this kind of movie, but I don’t like this one.

For TextCNN, the complete loss of feature location information is therefore its Achilles' heel.

In summary, in TextCNN convolution is used to extract key information similar to n-

grams. We are able to capture features between multiple consecutive words and share

weights and biases when calculating the same type of features.

The overall performance of TextCNN is good, but it has three problems. First, the local receptive field size (filter size) is fixed and difficult to choose. Second, its ability to model longer sequences is limited. Most importantly, the location information of features is lost. Several CNN-based models have recently been proposed to address these problems.

Kalchbrenner et al. (2014)[27] introduced the dynamic convolutional neural network (DCNN). It uses dynamic k-max pooling, where the result of pooling is not a single maximum value but the k largest values, kept in their original order. The result is therefore a subsequence of the original input. This brings several benefits. Dynamic k-max pooling better preserves multiple important features of a sentence. The number of extracted features varies with the length of the sentence. Because the relative order of the selected features is preserved, word position information is retained. Finally, the model takes into account semantic relations between words that are far apart in a sentence.
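The next minimal sketch shows k-max pooling, which keeps the k largest values of a sequence in their original order. The dynamic variant in [27] also chooses k per layer from the sentence length. We assume the rule $k_l = \max(k_{top}, \lceil \frac{L-l}{L} s \rceil)$ for layer $l$ of $L$ convolutional layers and sentence length $s$. This formula is our reading of that paper and should be checked against it.

```python
def k_max_pooling(values, k):
    """Keep the k largest values, preserving their original order."""
    if k >= len(values):
        return list(values)
    top = sorted(range(len(values)), key=lambda i: values[i], reverse=True)[:k]
    return [values[i] for i in sorted(top)]


def dynamic_k(layer, num_layers, sent_len, k_top):
    """Pooling size for convolutional layer `layer` (1-based) of `num_layers`.

    Assumed rule: max(k_top, ceil((num_layers - layer) / num_layers * sent_len)).
    """
    scaled = (num_layers - layer) * sent_len
    return max(k_top, -(-scaled // num_layers))  # integer ceiling division


print(k_max_pooling([3, 1, 5, 2, 4], 3))  # [3, 5, 4]
print(dynamic_k(1, 3, 18, 3))             # 12
```

Unlike 1-max pooling, the output [3, 5, 4] keeps the order in which the selected values appeared in the input.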

Zhang et al. (2015)[63] conducted extensive comparative experiments on the CNN models of [28] and give practical advice on CNN architecture and hyperparameter settings.

Zhang and LeCun (2015)[62] proposed the character-level convolutional neural network (CharCNN), which is also an effective model. The most important conclusion of their experiments is that this model can classify text without using words. This strongly suggests that language can be considered a signal no different from any other type of signal.

Conneau et al. (2016)[14] were the first to use a very deep convolutional neural network (VDCNN) in NLP. Before their work, the CNNs used in text categorization were typically very shallow. They use 29 convolutional layers to improve the accuracy of text classification. By exploring deep architectures, they report several important findings: VDCNN performs better on large datasets, and greater network depth improves model performance.

Zhang and LeCun (2017)[61] conducted an interesting experiment on the FastText model. For Chinese, Japanese and Korean (CJK) texts, it performed better with character-level encoding, while for English texts it performed better with word-level encoding.

3.1.3 Pre-training & Multi-task Learning

Pre-training has been a standard method in computer vision since deep learning became popular. In hierarchical CNN structures, neurons in different layers learn different types of image features. Suppose we train a network for face recognition and then visualize the features learned by each layer. We see that the lowest hidden layer has learned features such as colors and lines, the second layer facial parts, and the third layer facial contours. The lower the layer, the more basic the features; the higher the layer, the more task-specific the extracted features. Because of this, pre-trained network parameters, especially those of the bottom layers, are largely independent of the specific task and therefore more general. This is why new models are normally initialized with the pre-trained parameters of the bottom layers.

What about NLP? The usual choice for pre-training in NLP is a language model. The neural probabilistic language model introduced by Bengio et al. (2003)[9] predicts the next word given the previous few words. It also yields a by-product, a matrix that is in fact the word embedding matrix. Ten years later, the word2vec model proposed by Mikolov et al. (2013)[40], which is designed solely to obtain word embeddings, became increasingly popular.

So what does pre-training mean in NLP? Given an NLP task such as text classification or question answering, each word in a sentence is one-hot encoded as input. The one-hot vector is then multiplied by the learned word embedding matrix, which yields the word's embedding. This looks like a lookup operation, but the word embedding matrix is in fact the parameter matrix of the layer that maps the one-hot layer to the embedding layer. In other words, using pre-trained embeddings is equivalent to initializing this layer with the pre-trained embedding matrix.
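The equivalence between the matrix product and a row lookup can be checked directly. In the following sketch, the small random matrix is a hypothetical stand-in for a pre-trained embedding matrix with one row per vocabulary word.

```python
import random

rng = random.Random(1)
VOCAB = ["he", "swam", "to", "the", "bank"]
DIM = 3
# Hypothetical pre-trained embedding matrix: one row per vocabulary word.
E = [[rng.uniform(-1, 1) for _ in range(DIM)] for _ in VOCAB]


def one_hot(index, size):
    return [1.0 if i == index else 0.0 for i in range(size)]


def matmul_row(vec, matrix):
    """Multiply a row vector (length V) by a V x DIM matrix."""
    return [sum(v * row[j] for v, row in zip(vec, matrix))
            for j in range(len(matrix[0]))]


idx = VOCAB.index("bank")
via_matmul = matmul_row(one_hot(idx, len(VOCAB)), E)
via_lookup = E[idx]
print(via_matmul == via_lookup)  # True
```

In practice, frameworks implement the lookup directly, because multiplying by a one-hot vector only selects one row of the matrix.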

However, the most serious problem of earlier word embedding models is that they cannot deal with polysemy, a phenomenon that occurs frequently in human languages. What is the negative impact of polysemous words on word embeddings? Consider the following example:

He jumped in and swam to the opposite bank.

My salary is paid directly into my bank.

As shown above, "bank" has two meanings, but the word embedding model cannot distinguish between them. Although the context words of the two sentences differ, the model always predicts the same word "bank" during training. This word occupies a single position in the embedding space (the same row of the embedding matrix). Two different kinds of context information are therefore encoded into the same parameters. As a result, word embeddings cannot distinguish the different senses of polysemous words.

NLP made remarkable progress in 2018. Large-scale pre-trained language models such as OpenAI GPT and BERT perform very well on various NLP tasks using generic model architectures. The idea is similar to the pre-training in computer vision discussed above, with an additional advantage: the new approaches do not require labeled data for pre-training. They use unlabeled data, which is available in practically unlimited quantities, to extract a large amount of linguistic knowledge and encode it into the models. Integrating as much linguistic knowledge as possible naturally improves the generalization ability of the models, especially when only a limited amount of labeled data is available. Introducing prior linguistic knowledge has always been one of the main goals of NLP, especially in deep learning scenarios.

Deep contextualized word representations (ELMo), proposed by Peters et al. (2018)[44], dynamically adjust word embeddings to the current context. The core idea of ELMo is as follows. The word embeddings learned while training the language model cannot yet distinguish the senses of polysemous words. When the embeddings are actually used, however, each word appears in a specific context. Its embedding can therefore be adjusted to the meaning of the word in that context. The adjusted embedding better expresses the specific meaning in context, which elegantly addresses the problem of polysemy.

ELMo has a typical two-phase process. The first step is pre-training with a language model. The second step extracts the embedding of each word from each layer of the pre-trained model; this approach is therefore called feature-based pre-training. Each word in a sentence thus gets three embeddings. The bottom layer gives the word embedding, the first long short-term memory (LSTM) layer captures the word's syntactic information, and the second LSTM layer captures its semantic information. These embeddings are then combined into a new feature for downstream NLP tasks. ELMo improved performance on six different NLP tasks.

Generative pre-training model (OpenAI GPT) introduced by Radford et al. (2018)[45] al-

so has a two-phase training process, see Figure 3.3. In the first phase we use the language

model for pre-training and in the second phase we fine-tune the model to solve downstream

tasks. Despite of the similarity between GPT and ELMo, GPT has two major differences

from ELMo. The first difference is that in GPT’s second step, we need to fine-tune the

same base model so that we can use it in the following downstream tasks. We also need

substantial task-specific architecture modifications. The second difference between GPT

and ELMo is that GPT uses Transformer as its feature extractor. Transformer, introduced

by Vaswani et al. (2017)[54], which is first used in neural machine translation (NMT), is

a novel neural network architecture based on a self-attention mechanism. Why do we use

Transformer here? Because Transformer is currently the best feature extractor. Transfor-

mer outperforms recurrent neural network (RNN) because RNN can not be parallelized.

Transformer also outperforms CNN because it can capture long-distance features. Ad-

ditionally, in the pre-training step of GPT, although we still use the language model as

3 RELATED WORK 18

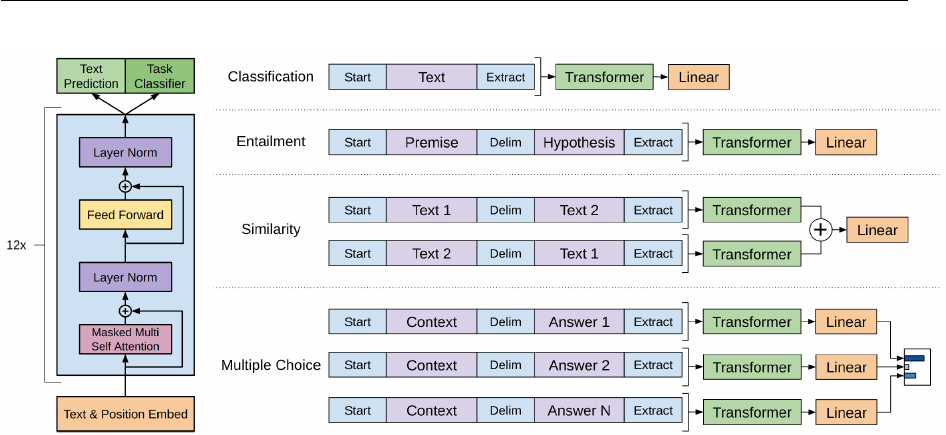

Figure 3.3: (left) Transformer architecture and training objectives used in GPT. (right)

Input transformations for fine-tuning on different tasks. We convert all structured in-

puts into token sequences to be processed by the pre-trained model, followed by a line-

ar+softmax layer. (adopted from [45])

target task, we adopt a single-direction language model. That is, one limitation with GPT

is that it is only trained to predict the future left-to-right context, without right-to-left

context. The result of GPT is very amazing. It achieves the best results in 9 out of 12

NLP tasks.

We now come to the new language representation model, bidirectional encoder representations from Transformers (BERT), proposed by Devlin et al. (2018)[18]. BERT obtains new state-of-the-art results on 11 NLP tasks, including text classification. BERT has the same two-phase process as GPT. It is designed to pre-train deep bidirectional representations by jointly conditioning on both left and right context in all layers. The pre-trained BERT representations can be fine-tuned with just one additional output layer. To make bidirectional pre-training possible, the authors proposed a masked language model (MLM) objective. They combined it with a next-sentence prediction (NSP) task. The BERT input representation is the sum of the token embeddings, the segment embeddings and the position embeddings.
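The following sketch illustrates the input representation just described. Each position's input vector is the element-wise sum of a token embedding, a segment embedding (sentence A or B) and a position embedding. The tables are tiny random placeholders, whereas in BERT they are learned. BERT also applies layer normalization and dropout after the sum, which this sketch omits. The vocabulary is hypothetical.

```python
import random

rng = random.Random(7)
DIM = 4


def table(n):
    """A placeholder embedding table with n rows."""
    return [[rng.uniform(-1, 1) for _ in range(DIM)] for _ in range(n)]


vocab = {"[CLS]": 0, "[SEP]": 1, "my": 2, "dog": 3, "is": 4,
         "cute": 5, "he": 6, "likes": 7, "play": 8}
token_emb, segment_emb, position_emb = table(len(vocab)), table(2), table(16)

tokens = ["[CLS]", "my", "dog", "is", "cute", "[SEP]", "he", "likes", "play", "[SEP]"]
# Segment 0 up to and including the first [SEP], segment 1 afterwards.
first_sep = tokens.index("[SEP]")
segments = [0 if i <= first_sep else 1 for i in range(len(tokens))]

# Input vector at each position = token + segment + position embedding.
inputs = [[t + s + p for t, s, p in zip(token_emb[vocab[tok]],
                                        segment_emb[seg],
                                        position_emb[pos])]
          for pos, (tok, seg) in enumerate(zip(tokens, segments))]
print(len(inputs), len(inputs[0]))  # 10 4
```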

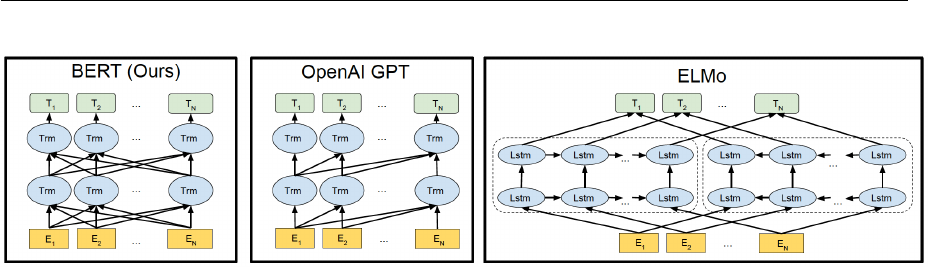

There is a close relationship between the above 3 models, see Figure 3.4. If we change

GPT model in the pre-training phase by using bidirectional language model, then we will

get BERT. If we replace ELMo’s feature extractor LSTM with Transformer, we will also

get BERT.

In summary, inductive transfer learning consists of two steps: pre-training, in which the

3 RELATED WORK 19

Figure 3.4: Differences in pre-training model architectures. BERT uses a bidirectional Trans-

former. OpenAI GPT uses a left-to-right Transformer. ELMo uses the concatenation of inde-

pendently trained left-to-right and right-to-left LSTM to generate features for downstream

tasks. Among three, only BERT representations are jointly conditioned on both left and

right context in all layers. (adopted from [18])

model learns a general-purpose representation of inputs by using a specific feature extrac-

tor (RNN, LSTM, Transformer), and adaptation, in which the representation is transferred

to a new task.[43] As we discussed previously, there are two main paradigms for adap-

tation: feature ensemble (ELMo) and fine-tuning (GPT, BERT). For ELMo, we extract

contextual representations of the words from all layers by feeding the input sentence into

pre-trained bidirectional LSTM network. During adaptation, we learn a linear weighted

combination of word embeddings in all layers which is then used as input to a task-specific

architecture in downstream tasks. In contrast, for GPT and BERT, after obtaining the

pre-trained model, during fine-tuning (adaption), we still adopt the same model, use part

of the training data of the downstream task, train on the same model to correct the

network parameters obtained during the pre-training stage. We only need a task-specific

output layer. So which paradigm is better? Peter et al. (2019)[43] did some research to

empirically compare feature ensemble with fine-tuning approaches across diverse datasets.

They come to a conclusion that the relative performance of two approaches depends on

the similarity of the pre-training and target tasks. So for BERT, we would better fine-tune

the model.
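The learned linear combination used in ELMo adaptation can be sketched as a weighted sum of one token's layer representations. The weights are normalized with a softmax, and the sum is scaled by a task-specific factor $\gamma$. The layer vectors and weights below are made-up numbers. The code follows our understanding of the ELMo formulation and does not correspond to any library API.

```python
import math


def scalar_mix(layer_vectors, weights, gamma):
    """Combine per-layer vectors of one token: gamma * sum_j softmax(w)_j * h_j."""
    m = max(weights)
    exps = [math.exp(w - m) for w in weights]
    total = sum(exps)
    s = [e / total for e in exps]  # softmax-normalized layer weights
    dim = len(layer_vectors[0])
    return [gamma * sum(s_j * h[k] for s_j, h in zip(s, layer_vectors))
            for k in range(dim)]


# Three layers for one token: token embedding, LSTM layer 1, LSTM layer 2 (toy values).
layers = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
print(scalar_mix(layers, [0.0, 0.0, 0.0], gamma=1.0))  # equal weights: layer average
```

During adaptation, the downstream task learns the weights and $\gamma$, while the biLSTM itself stays fixed. This is what distinguishes feature extraction from fine-tuning.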

3.1.4 Context Mechanism

Convolution essentially extracts features, and deep learning is about learning feature representations. Documents and sentences, however, are sequences in which earlier inputs are related to later ones. Using RNNs to process texts therefore seems reasonable. The recurrent neural network for text classification (TextRNN) was proposed by Liu et al. (2016)[37]. Its architecture is similar to TextCNN, and it was one of the first RNN models for document classification. RNNs and their variants can capture variable-length and bidirectional n-gram information, but they also have disadvantages. In our view, this model is not recommended because RNN training is very slow. An RNN is also a biased model, in which later words dominate earlier words.

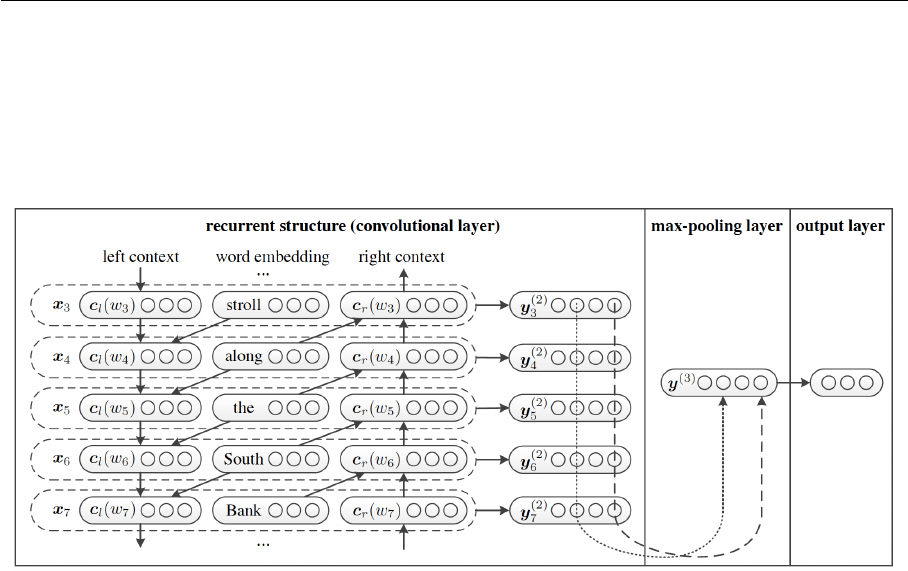

The recurrent convolutional neural network (RCNN) introduced by Lai et al. (2016)[33] combines CNN and RNN. It employs a bidirectional RNN to capture the contexts and learn the word representations. It also employs max pooling to automatically determine which features play a more important role in text classification. This model runs faster than an RNN and achieves good results on three of the four commonly used datasets.

3.1.5 Memory Storage Mechanism

The performance of a typical Encoder-Decoder model is limited by the size of its memory, especially when dealing with longer sequences. The attention mechanism proposed by Bahdanau et al. (2014)[7] addresses this limitation. Attention can be refined further by adding a separate memory component that can be read and written. The dynamic memory network (DMN) proposed by Kumar et al. (2016)[32] has four modules: an input module, a question module, an episodic memory module and an answer module. The input is a list of sentences. The episodic memory module uses an attention-based gating mechanism, conditioned on the encoded question, to selectively store the input information in memory. The answer module then generates the answer from the final episodic memory. The DMN obtains state-of-the-art results on several types of tasks and datasets, including text classification.

3.1.6 Attention Mechanism

The hierarchical attention network (HAN) proposed by Yang et al. (2016)[59] is another model that uses an attention mechanism. HAN rests on two ideas. First, it considers the hierarchical structure of documents: a sentence is a group of words, and a document consists of several sentences. A document can therefore be divided into three levels: words, sentences and the document. Two bidirectional recurrent encoders (bidirectional GRUs in [59]) model the word-to-sentence level and the sentence-to-document level. Second, different words and sentences carry different amounts of information, so they should not be treated equally. An attention mechanism is therefore applied at both levels. It weights the words that form each sentence representation and the sentences that form the document representation.

The HAN model structure closely mirrors the human reading process: word → sentence → document. Most importantly, HAN provides better classification accuracy, and its attention layers can also be visualized well. At the same time, the attention component has a great influence on the expressive power of the model.
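The HAN attention described above can be sketched as follows, based on our understanding of [59]. Each annotation $h_i$ is projected to $u_i = \tanh(W h_i + b)$ and scored against a learned context vector $u_w$. The sentence vector is then the softmax-weighted sum of the $h_i$. All numbers below are made up. In HAN, the annotations would come from the bidirectional encoder, and the same pooling is applied again at sentence level.

```python
import math


def matvec(W, x):
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def attention_pool(annotations, W, b, u_ctx):
    """HAN-style attention pooling over a sequence of annotation vectors."""
    u = [[math.tanh(z + bi) for z, bi in zip(matvec(W, h), b)] for h in annotations]
    scores = [sum(a * c for a, c in zip(ui, u_ctx)) for ui in u]
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    alphas = [e / total for e in exps]  # importance of each word
    dim = len(annotations[0])
    pooled = [sum(a * h[k] for a, h in zip(alphas, annotations)) for k in range(dim)]
    return alphas, pooled


words = [[0.1, 0.2], [0.9, -0.3], [0.0, 0.5]]  # toy word annotations h_i
W = [[1.0, 0.0], [0.0, 1.0]]
b = [0.0, 0.0]
u_w = [1.0, 0.0]  # word-level context vector (learned in practice)
alphas, sentence_vec = attention_pool(words, W, b, u_w)
print([round(a, 3) for a in alphas])
```

The weights `alphas` are what HAN visualizes as word importance.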

3.2 Short Text Classification

Traditional machine learning methods for short text classification are mainly based on widely used models such as SVM. In the following, we focus on deep learning methods for short text classification, which have dominated research in recent years.

Wang et al. (2017)[55] introduced knowledge from a knowledge base and combined words with associated concepts to enrich text features. They also used character-level information to enhance word embeddings, and finally built a joint CNN model to classify short texts. Our work is similar to theirs; the difference is that we enrich word embeddings with entities, whereas they use concepts. We also adopt the datasets used in their experiments.

Zeng et al. (2018)[60] proposed topic memory networks which jointly explore latent topics

and classify documents in an end-to-end system.

3.3 Text Classification using Named Entities

There is little research on text classification using named entities. Kral (2014)[30] compared five different approaches to integrating named entities with a naïve Bayes classifier on a Czech corpus. The experimental results show that entity features do not significantly improve classification performance over the baseline word-based features; the improvement is at most 0.42%.


4 Wikipedia2Vec and Doc2Vec

One of our main concerns is to obtain good entity vectors. Our entity vectors are generated using Wikipedia2Vec and Doc2Vec. Wikipedia2Vec, implemented by Yamada et al. (2018)[57], is an open-source tool that obtains vector representations of words and entities from a large corpus, Wikipedia. It learns word and entity vectors simultaneously and thus places similar words and entities close to each other in a high-dimensional continuous vector space. Compared with existing embedding tools such as Gensim[46], FastText[25] and RDF2Vec[47], the model implemented by this tool has been shown to achieve state-of-the-art results on the named entity disambiguation (NED) task.

4.1 Word2vec and Skip-gram

The word2vec model, proposed by Mikolov et al. (2013)[40], has attracted a great deal of attention from researchers in recent years. Its core idea is to characterize a word by its neighbors, which the model learns by predicting between every word and its context words. The word vectors produced by the model have been shown to carry semantic features and can subsequently be used in many natural language processing applications and in further research.

Word2vec actually contains two algorithms. Skip-gram predicts the context words given the target word, and continuous bag of words (CBOW) predicts the target word from its bag-of-words context. Word2vec also offers two efficient training methods: hierarchical softmax and negative sampling[39].

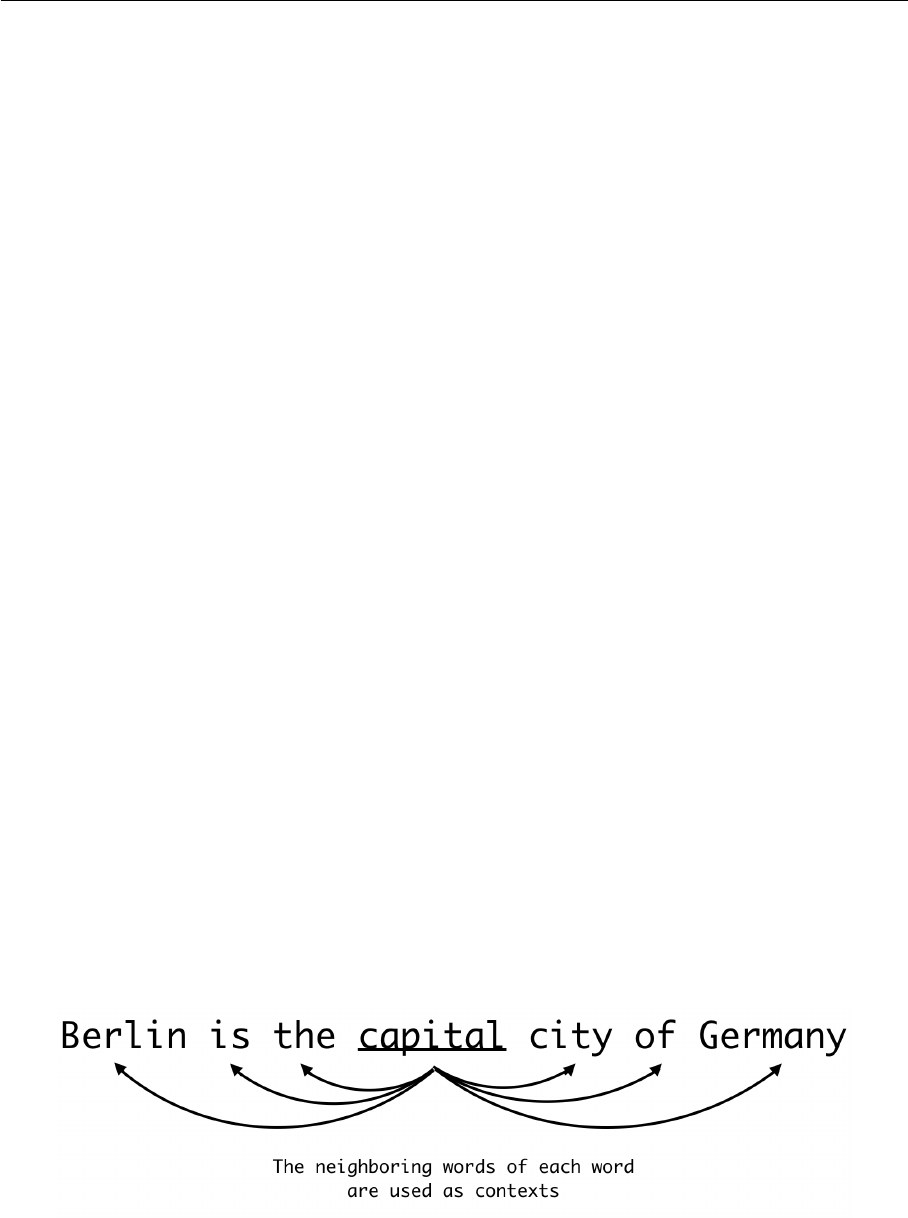

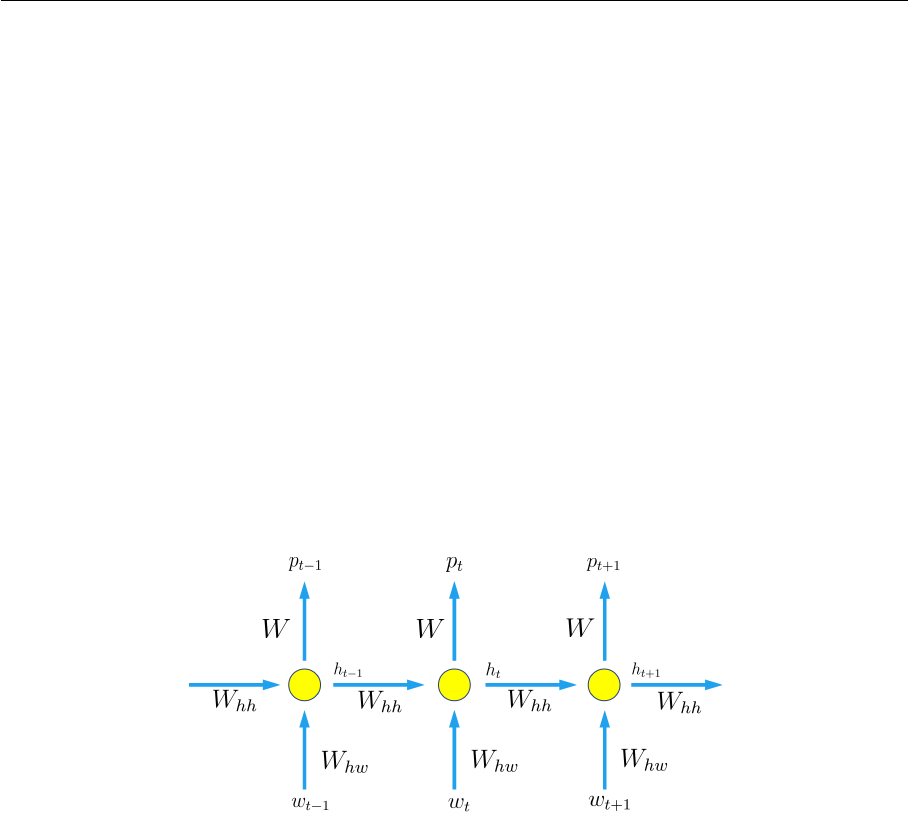

Figure 4.1: Word based skip-gram model

We now focus on the skip-gram algorithm. Given a sentence of $T$ words $w_1, w_2, \ldots, w_T$, for each position $t = 1, \ldots, T$ we predict the surrounding words within a window of "radius" $m$. Here $m$ is the size of the context window, $w_t$ denotes the center word and $w_{t+j}$ is one of its context words. The goal is to maximize the probability of every context word given the current center word, i.e., to maximize the following objective function:

$$J'(\theta) = \prod_{t=1}^{T} \prod_{\substack{-m \le j \le m \\ j \ne 0}} P(w_{t+j} \mid w_t; \theta) \tag{4.1}$$

Since optimization is conventionally formulated as minimization, we take the negative logarithm of the objective. Dividing by $T$ normalizes per word, so the objective does not depend on the length of the sentence:

$$J(\theta) = -\frac{1}{T} \sum_{t=1}^{T} \sum_{\substack{-m \le j \le m \\ j \ne 0}} \log P(w_{t+j} \mid w_t) \tag{4.2}$$

We thus minimize the average negative log-likelihood, where $\theta$ denotes all the parameters to be optimized.
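The double sum in equation 4.2 runs over every (center, context) pair inside the window. The following minimal sketch enumerates these pairs for a toy sentence. Skipping window positions that fall outside the sentence is our assumption about boundary handling.

```python
def skipgram_pairs(tokens, m):
    """Return (center, context) pairs for every t and every -m <= j <= m, j != 0."""
    pairs = []
    for t, center in enumerate(tokens):
        for j in range(-m, m + 1):
            if j != 0 and 0 <= t + j < len(tokens):
                pairs.append((center, tokens[t + j]))
    return pairs


print(skipgram_pairs(["the", "cat", "sat", "down"], m=1))
```

Each pair contributes one term $-\log P(w_{t+j} \mid w_t)$ to the objective.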

For the conditional probability $P(w_{t+j} \mid w_t)$, the simplest formulation is:

$$P(o \mid c) = \frac{\exp(u_o^{\top} v_c)}{\sum_{w=1}^{V} \exp(u_w^{\top} v_c)} \tag{4.3}$$

where $o$ is the outside (or output) word index and $c$ is the center word index. $v_c$ and $u_o$ are the "center" and "outside" vectors of indices $c$ and $o$, and $V$ is the size of the vocabulary, whose words are indexed $1, \ldots, V$. Each word has two vectors, one used when it is the center word and one used when it is an outside word; this makes the mathematics much easier.

This softmax function uses the center word $c$ to obtain the probability of the outside word $o$. Softmax is used because it is the standard way to turn arbitrary real numbers into a probability distribution. To minimize the objective, we now compute the derivative of the logarithm of equation 4.3 with respect to $v_c$:

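$$\frac{\partial}{\partial v_c} \log \frac{\exp(u_o^{\top} v_c)}{\sum_{w=1}^{V} \exp(u_w^{\top} v_c)} = \frac{\partial}{\partial v_c} \log \exp(u_o^{\top} v_c) - \frac{\partial}{\partial v_c} \log \sum_{w=1}^{V} \exp(u_w^{\top} v_c) \tag{4.4}$$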

For the left part of the formula, we have

$$\frac{\partial}{\partial v_c} \log \exp(u_o^{\top} v_c) = \frac{\partial}{\partial v_c} (u_o^{\top} v_c) = u_o \tag{4.5}$$

For the right part of the formula, using the chain rule we have


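$$\begin{aligned}
\frac{\partial}{\partial v_c} \log \sum_{w=1}^{V} \exp(u_w^{\top} v_c)
&= \frac{1}{\sum_{w=1}^{V} \exp(u_w^{\top} v_c)} \cdot \frac{\partial}{\partial v_c} \sum_{x=1}^{V} \exp(u_x^{\top} v_c) \\
&= \frac{1}{\sum_{w=1}^{V} \exp(u_w^{\top} v_c)} \cdot \left( \sum_{x=1}^{V} \frac{\partial}{\partial v_c} \exp(u_x^{\top} v_c) \right) \\
&= \frac{1}{\sum_{w=1}^{V} \exp(u_w^{\top} v_c)} \cdot \left( \sum_{x=1}^{V} \exp(u_x^{\top} v_c) \frac{\partial}{\partial v_c} (u_x^{\top} v_c) \right) \\
&= \frac{1}{\sum_{w=1}^{V} \exp(u_w^{\top} v_c)} \cdot \left( \sum_{x=1}^{V} \exp(u_x^{\top} v_c)\, u_x \right)
\end{aligned} \tag{4.6}$$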

Substituting formulas 4.5 and 4.6 into 4.4 gives the derivative of the log conditional probability $\log P(w_{t+j} \mid w_t)$ with respect to the center vector:

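$$\begin{aligned}
\frac{\partial}{\partial v_c} \log P(o \mid c)
&= u_o - \frac{1}{\sum_{w=1}^{V} \exp(u_w^{\top} v_c)} \cdot \left( \sum_{x=1}^{V} \exp(u_x^{\top} v_c)\, u_x \right) \\
&= u_o - \sum_{x=1}^{V} \frac{\exp(u_x^{\top} v_c)}{\sum_{w=1}^{V} \exp(u_w^{\top} v_c)} \cdot u_x \\
&= u_o - \sum_{x=1}^{V} P(x \mid c) \cdot u_x
\end{aligned} \tag{4.7}$$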

We can further observe that the subtrahend in formula 4.7 is an expectation: the average of all outside vectors $u_x$, weighted by their probability $P(x \mid c)$ under the current model. Formula 4.7 gives only the partial derivatives with respect to the center word vector. The partial derivatives with respect to the outside word vectors are computed in the same way. Once the derivatives with respect to all parameters are available, we can minimize the cost (loss) function using gradient descent.
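Equation 4.7 can be verified numerically. The following minimal sketch compares the analytic gradient $u_o - \sum_x P(x \mid c)\, u_x$ with central finite differences of $\log P(o \mid c)$ from equation 4.3. The vocabulary size, dimension, random vectors and helper names are arbitrary assumptions made for the check. The gradient of $J(\theta)$ in equation 4.2 sums the negatives of such terms over all pairs and divides by $T$.

```python
import math
import random

rng = random.Random(3)
V, D = 5, 3  # vocabulary size and vector dimension (arbitrary)
U = [[rng.uniform(-1, 1) for _ in range(D)] for _ in range(V)]  # outside vectors u_w
v_c = [rng.uniform(-1, 1) for _ in range(D)]                    # center vector v_c
o = 2                                                           # outside word index


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def log_p(o, v):
    """log P(o|c) from equation 4.3, computed stably via log-sum-exp."""
    scores = [dot(u, v) for u in U]
    m = max(scores)
    return scores[o] - (m + math.log(sum(math.exp(s - m) for s in scores)))


def analytic_grad(o, v):
    """Equation 4.7: u_o minus the expected outside vector under P(x|c)."""
    probs = [math.exp(log_p(x, v)) for x in range(V)]
    expected = [sum(p * U[x][k] for x, p in enumerate(probs)) for k in range(D)]
    return [U[o][k] - expected[k] for k in range(D)]


def numeric_grad(o, v, eps=1e-6):
    """Central finite differences of log P(o|c) with respect to v_c."""
    grad = []
    for k in range(D):
        plus = v[:k] + [v[k] + eps] + v[k + 1:]
        minus = v[:k] + [v[k] - eps] + v[k + 1:]
        grad.append((log_p(o, plus) - log_p(o, minus)) / (2 * eps))
    return grad


print(analytic_grad(o, v_c))
print(numeric_grad(o, v_c))
```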

4.2 Wikipedia2Vec

Wikipedia2Vec iterates over all pages of Wikipedia. It extends the conventional word vector model (i.e., the skip-gram model) with two submodels, the Wikipedia link graph model and the anchor context model. It then jointly optimizes all three models.

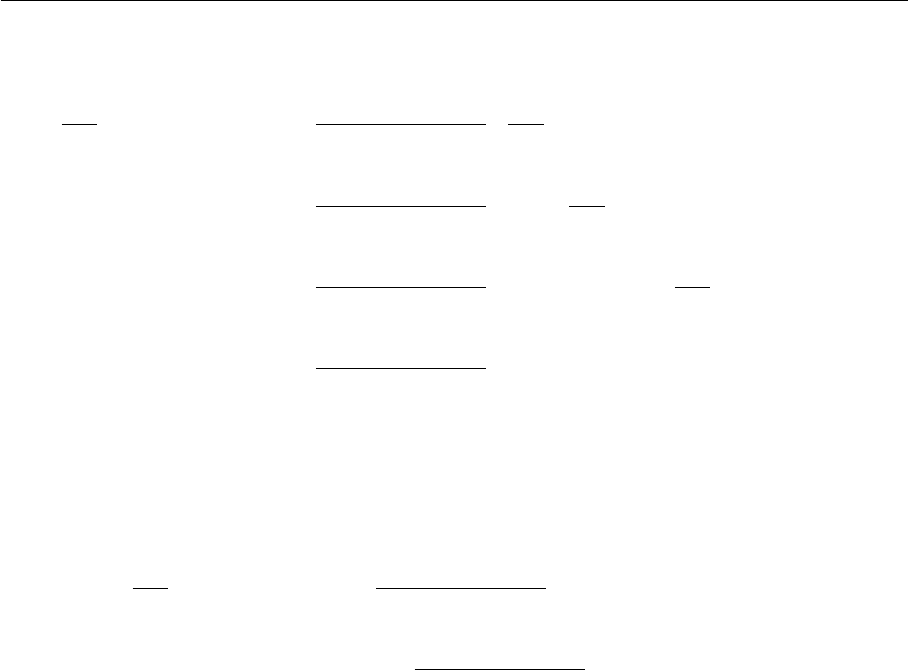

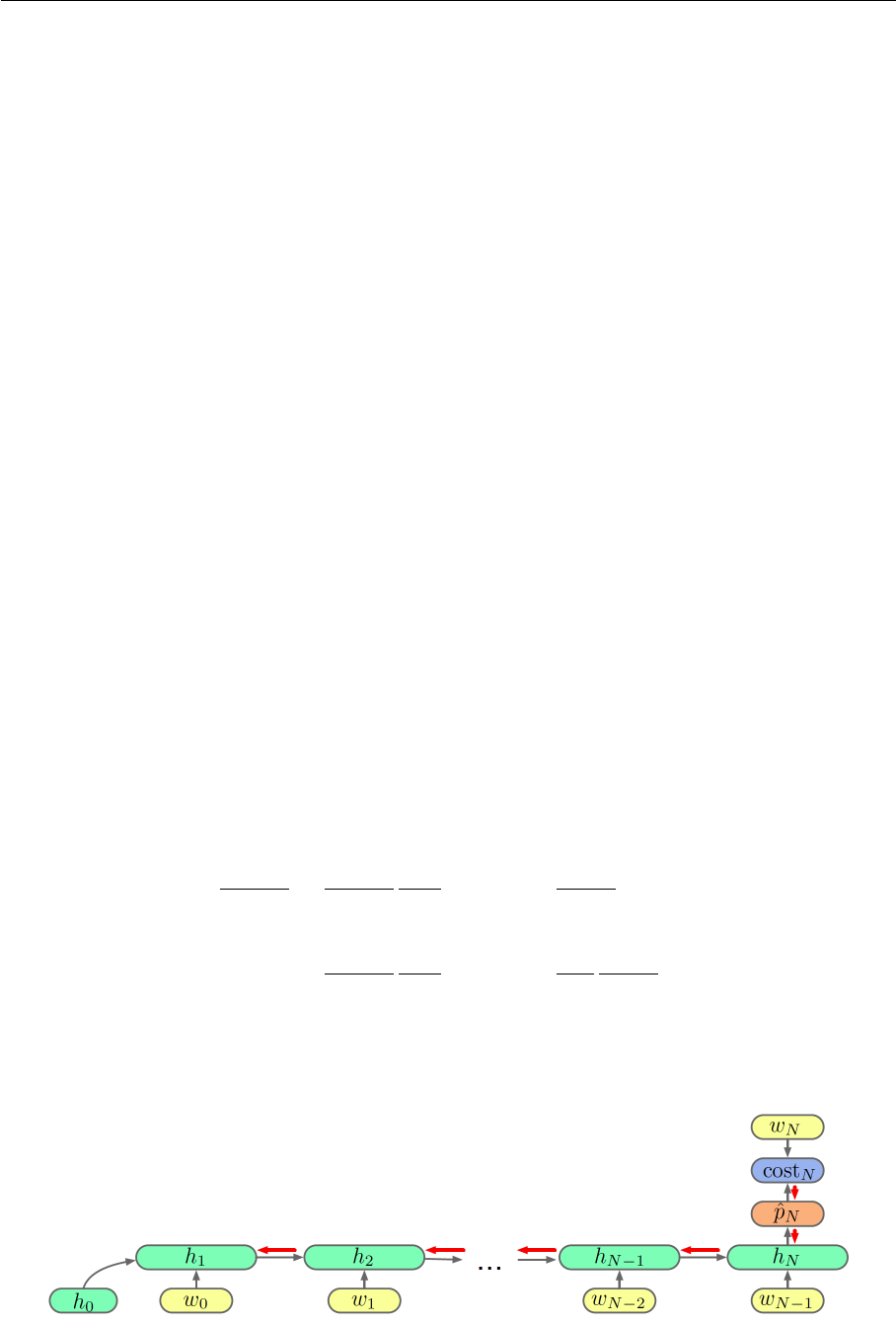

4.2.1 Wikipedia Link Graph Model

The Wikipedia link graph model[58] learns entity vectors by predicting neighboring entities in Wikipedia's link graph. This graph is undirected: its nodes represent entities, and its edges represent links between entities in Wikipedia.

Entity linking (EL) is the task of recognizing a named-entity mention (named entity recognition, NER) and linking it to an instance in a knowledge base (KB) (named entity disambiguation, NED).[12] The KB is typically a Wikipedia-derived resource such as Wikipedia, DBpedia or YAGO.

NED is the NLP task of determining the identity of entities mentioned in documents. For example, given the sentence “Washington is the capital city of the United States”, we aim to determine that “Washington” refers to “Washington, D.C.” and not to “George Washington”, the first President of the United States. EL requires a KB that contains entities. Wikipedia2Vec is based on Wikipedia, where each page is regarded as a named entity. For example, the Wikipedia page of Washington, D.C. links to the page of United States, so there is an edge between this pair of entities. In other cases the link goes both ways: Berlin links to Germany and Germany links to Berlin, so the two entities are linked to each other.
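The construction of the link graph can be illustrated with a minimal Python sketch. It assumes the page links are already available as directed (source page, target page) pairs, a hypothetical input format chosen for illustration, and builds for every entity the set of entities it shares an edge with (the set $S_e$ used in the objective that follows). Because the graph is undirected, a one-way link (Washington, D.C. → United States) and a two-way link (Berlin ↔ Germany) each produce a single edge.

```python
from collections import defaultdict

def build_link_graph(page_links):
    """Build an undirected entity graph from directed Wikipedia page links.

    page_links: iterable of (source_title, target_title) pairs (hypothetical
    input format). Returns a dict mapping each entity to its neighbour set.
    """
    neighbours = defaultdict(set)
    for source, target in page_links:
        if source == target:  # ignore self-links
            continue
        # Undirected graph: a link in either direction creates the edge.
        neighbours[source].add(target)
        neighbours[target].add(source)
    return dict(neighbours)

links = [
    ("Washington, D.C.", "United States"),  # one-way link
    ("Berlin", "Germany"),                  # two-way link ...
    ("Germany", "Berlin"),                  # ... still a single edge
]
graph = build_link_graph(links)
for entity in sorted(graph):
    print(entity, "->", sorted(graph[entity]))
```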

Figure 4.2: Wikipedia link graph model

Given a set of entities $e_1, e_2, \ldots, e_n$, for each entity $e_i$ we compute the probability of every entity in the set that is linked to $e_i$. Let $E$ denote the set of all entities in the KB and $S_e$ the set of entities linked to entity $e$. We want to maximize the probability of each entity linked to $e$, given $e$; equivalently, we minimize the following objective function:

$$
J_e = -\sum_{e_i \in E} \; \sum_{e_o \in S_{e_i},\, e_i \neq e_o} \log P(e_o \mid e_i) \qquad (4.8)
$$

We compute the conditional probability $P(e_o \mid e_i)$ with the following softmax function:


$$
P(e_o \mid e_i) = \frac{\exp(u_{e_o}^{\top} v_{e_i})}{\sum_{e \in E} \exp(u_e^{\top} v_{e_i})} \qquad (4.9)
$$
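Formulas 4.8 and 4.9 can be evaluated directly on a toy graph. The minimal sketch below uses made-up two-dimensional input vectors `V` ($v_e$) and output vectors `U` ($u_e$) and hypothetical helper names. It mirrors the equations only; the training loop and any approximation of the softmax (such as negative sampling) that a practical implementation would typically use are omitted.

```python
import math

def dot(a, b):
    """Inner product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))

def p_entity(e_o, e_i, U, V):
    """Formula 4.9: softmax of u_e^T v_{e_i} over all entities e in E.

    U[e] is the output vector u_e, V[e] the input vector v_e.
    """
    scores = {e: dot(u_e, V[e_i]) for e, u_e in U.items()}
    m = max(scores.values())  # stabilise the exponentials
    z = sum(math.exp(s - m) for s in scores.values())
    return math.exp(scores[e_o] - m) / z

def link_graph_loss(graph, U, V):
    """Formula 4.8: J_e = - sum over e_i in E, e_o in S_{e_i}, e_o != e_i of log P(e_o | e_i)."""
    loss = 0.0
    for e_i, neighbours in graph.items():
        for e_o in neighbours:
            if e_o != e_i:
                loss -= math.log(p_entity(e_o, e_i, U, V))
    return loss

graph = {
    "Berlin": {"Germany"},
    "Germany": {"Berlin"},
    "Washington, D.C.": {"United States"},
    "United States": {"Washington, D.C."},
}
# Made-up 2-dimensional input (V) and output (U) vectors for the entity set E.
V = {"Berlin": [0.5, 0.1], "Germany": [0.4, 0.2],
     "Washington, D.C.": [-0.3, 0.6], "United States": [-0.2, 0.5]}
U = {"Berlin": [0.6, 0.0], "Germany": [0.5, 0.1],
     "Washington, D.C.": [-0.4, 0.7], "United States": [-0.3, 0.6]}
print(round(link_graph_loss(graph, U, V), 4))
```

With all vectors set to zero, each of the four probabilities would be $1/4$ and $J_e$ would equal $4 \log 4$; vectors that score linked entities higher push $J_e$ below that value.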

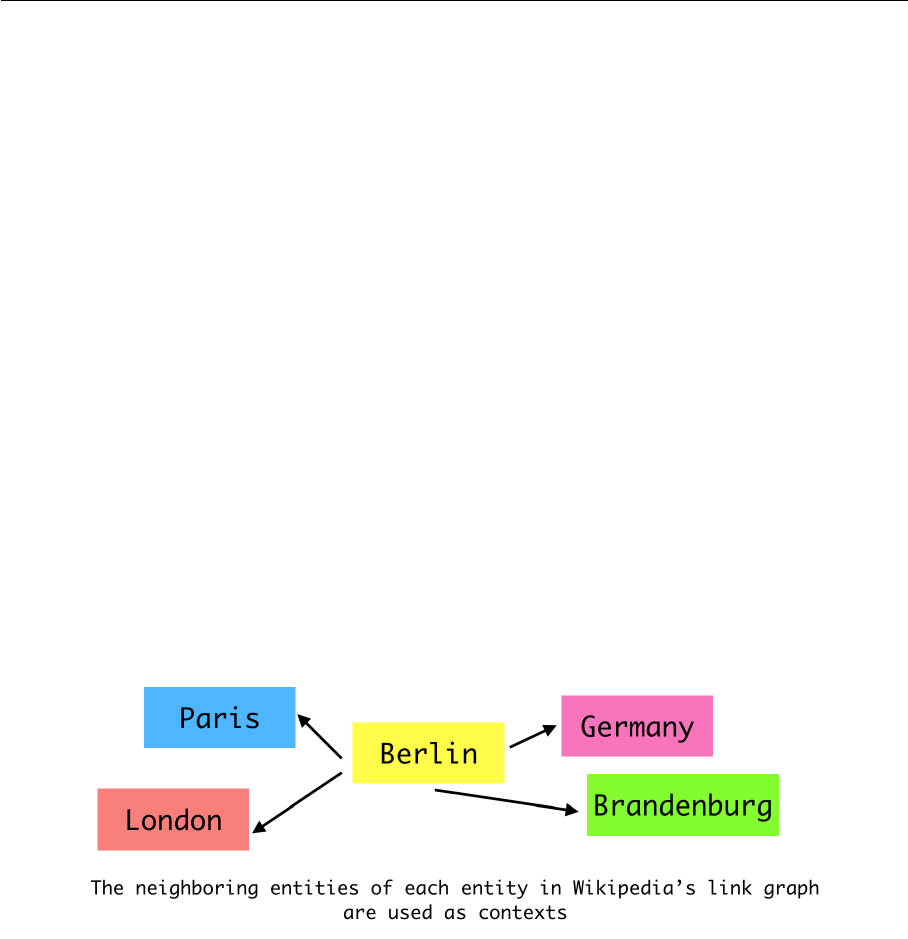

4.2.2 Anchor Context Model

So far, we have learned entity vectors from entities and word vectors from words. Because these vectors are obtained by training two separate models, words and entities are mapped into different vector spaces. To map them into the same vector space, we introduce the anchor context model [58], which learns entity vectors and word vectors simultaneously by predicting the context words of a given entity.

In the anchor context model, we predict the context words of the entity that a target anchor points to [58]. Let $A$ denote the set of anchors in the KB. Each anchor in $A$ consists of a referent entity $e_i$ and a set $S$ of its context words; the $c$ words preceding and the $c$ words following the anchor are taken as its context words. The objective function is:

$$
J_a = -\sum_{(e_i, S) \in A} \; \sum_{w_o \in S} \log P(w_o \mid e_i) \qquad (4.10)
$$

Figure 4.3: Anchor context model

We compute the conditional probability $P(w_o \mid e_i)$ with the following softmax function, where $W$ denotes the set of all words in the vocabulary:

$$
P(w_o \mid e_i) = \frac{\exp(u_{w_o}^{\top} v_{e_i})}{\sum_{w \in W} \exp(u_w^{\top} v_{e_i})} \qquad (4.11)
$$
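The anchor context model can be sketched in the same way. The snippet below assumes a hypothetical representation of an annotated sentence: a list of tokens plus anchors given as (start, end, entity) token spans. It collects the $c$ words before and after each anchor as the context $S$ and evaluates $J_a$ from formulas 4.10 and 4.11 with made-up vectors. Because the score $u_w^{\top} v_{e_i}$ compares word vectors with entity vectors directly, minimizing $J_a$ places words and entities in one shared space.

```python
import math

def dot(a, b):
    """Inner product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))

def anchor_contexts(tokens, anchors, c):
    """Collect (referent entity, context words) pairs, one per anchor in A.

    anchors: (start, end, entity) token spans with `end` exclusive
    (hypothetical format). The c words before and after the anchor text
    form the context S; they are kept as a list, so repeated words count twice.
    """
    return [(entity, tokens[max(0, start - c):start] + tokens[end:end + c])
            for start, end, entity in anchors]

def p_word(w_o, e_i, U_words, V_entities):
    """Formula 4.11: softmax of u_w^T v_{e_i} over every word w in the vocabulary W."""
    scores = {w: dot(u_w, V_entities[e_i]) for w, u_w in U_words.items()}
    m = max(scores.values())  # stabilise the exponentials
    z = sum(math.exp(s - m) for s in scores.values())
    return math.exp(scores[w_o] - m) / z

def anchor_context_loss(pairs, U_words, V_entities):
    """Formula 4.10: J_a = - sum over (e_i, S) in A of sum over w_o in S of log P(w_o | e_i)."""
    return -sum(math.log(p_word(w_o, e_i, U_words, V_entities))
                for e_i, context in pairs for w_o in context)

tokens = ["berlin", "is", "the", "capital", "of", "germany"]
anchors = [(0, 1, "Berlin"), (5, 6, "Germany")]
pairs = anchor_contexts(tokens, anchors, c=2)
print(pairs)

# Made-up 2-dimensional word output vectors and entity input vectors.
U_words = {"berlin": [0.1, 0.0], "is": [0.0, 0.1], "the": [0.1, 0.1],
           "capital": [0.3, 0.2], "of": [0.0, 0.0], "germany": [0.2, 0.1]}
V_entities = {"Berlin": [0.2, 0.3], "Germany": [0.4, 0.1]}
print(round(anchor_context_loss(pairs, U_words, V_entities), 4))
```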


4.3 Doc2Vec

Doc2Vec was proposed by Quoc Le and Tomas Mikolov in 2014 [34]. Doc2Vec, also called Paragraph Vector, is an unsupervised algorithm that learns fixed-length feature representations from variable-length documents. It can be used for tasks such as text classification and sentiment analysis.

Like word2vec, Doc2Vec has two training algorithms: the distributed bag of words (DBOW) model, which is similar to skip-gram in word2vec, and the distributed memory (DM) model, which is similar to continuous bag of words (CBOW) in word2vec. In DBOW, the only input is the paragraph vector, and the model is trained to predict words sampled from a small text window of the paragraph. In DM, by contrast, the paragraph vector and the vectors of the local context words are concatenated or averaged to predict the next word in the context.
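The two Doc2Vec training schemes differ mainly in how a training example is formed. The minimal pure-Python sketch below, with hypothetical function names and made-up two-dimensional vectors, shows the DBOW examples, where the paragraph identifier alone predicts each word of a window, and the DM input in its averaging variant, where the paragraph vector is averaged with the preceding context word vectors to predict the next word. Random window sampling, the output softmax and training are omitted; libraries such as gensim provide complete implementations.

```python
def dbow_examples(doc_id, words, start, window):
    """DBOW: the paragraph vector (identified by doc_id) alone predicts each
    word of the text window words[start:start + window]."""
    return [(doc_id, w) for w in words[start:start + window]]

def dm_example(paragraph_vec, word_vecs, words, position, window):
    """DM, averaging variant: average the paragraph vector with the vectors of
    the `window` words before `position`; the word at `position` is the target."""
    if position < window:
        raise ValueError("not enough preceding words for this window")
    inputs = [paragraph_vec] + [word_vecs[w] for w in words[position - window:position]]
    averaged = [sum(vec[k] for vec in inputs) / len(inputs)
                for k in range(len(paragraph_vec))]
    return averaged, words[position]

words = ["berlin", "is", "my", "favorite", "city"]
print(dbow_examples("doc_0", words, start=1, window=3))

# Made-up 2-dimensional vectors for the paragraph and two context words.
paragraph_vec = [1.0, 0.0]
word_vecs = {"is": [0.0, 1.0], "my": [2.0, 2.0]}
print(dm_example(paragraph_vec, word_vecs, words, position=3, window=2))
```

The concatenation variant of DM would instead join the paragraph vector and the context word vectors end to end, which preserves word order at the cost of a larger input layer.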

Quoc Le and colleagues also conducted experiments on Wikipedia showing that the Paragraph Vector algorithms perform significantly better than other methods and yield higher-quality embeddings [15]. Therefore, we train a Doc2Vec model on a Wikipedia dump and use it to obtain better entity embeddings.

5 CONVOLUTIONAL NEURAL NETWORK FOR SHORT TEXT CLASSIFICATION 29

5 Convolutional Neural Network for Short Text Classification

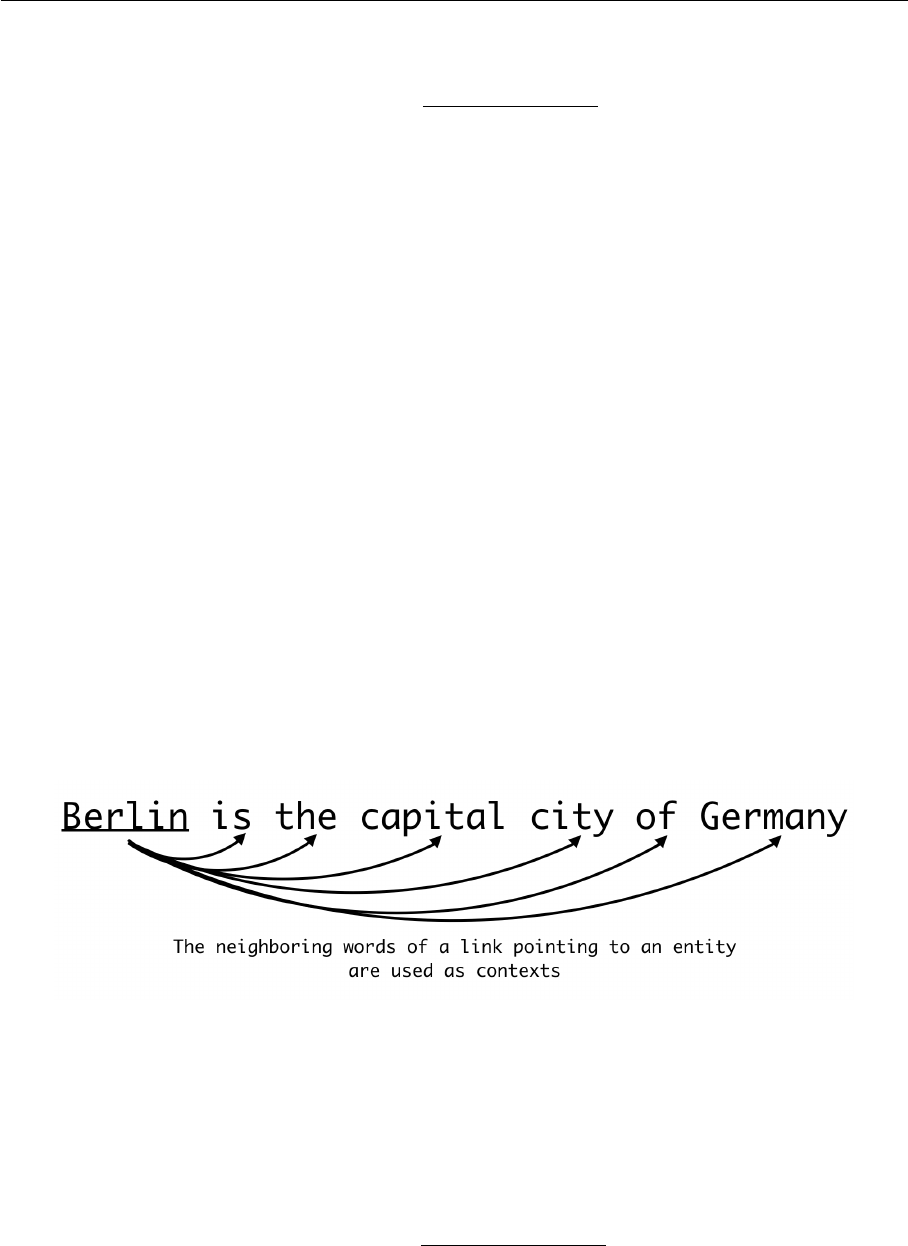

In this chapter, we modify the traditional convolutional neural network for text classification (TextCNN) and propose a new model, the convolutional neural network with entities for text classification (EntCNN), which uses words as well as entities to extract more contextual information from texts. We first describe how entities and entity vectors are obtained from texts, and then describe how our model learns features from both words and entities.

5.1 Entity Linking

The first step of our work is to detect named entities in texts. A named entity is, roughly speaking, anything that can be referred to with a proper name, such as a person, a location or an organization [26]. As mentioned in chapter 4, entity linking (EL) is the task of recognizing named entities and linking them to the correct entries in a knowledge base (KB); it is sometimes also known as named entity recognition and disambiguation. EL is beneficial because, by linking the named entity mentions in a text to their corresponding entities in a KB, we can exploit the rich information the KB contains about these entities to better understand documents. EL is essential to many NLP tasks, including information extraction, information retrieval, content analysis, question answering and knowledge base population [50]. Shen et al. (2014) give a comprehensive survey of EL [50].

In our work, we use TagMe to extract entities and link them to Wikipedia entries. TagMe is an end-to-end system with two major stages: it first processes a text to extract named entities (named entity recognition), and then disambiguates the extracted entities to the correct entries in a given KB (named entity disambiguation). Once the entity entries in the KB are obtained, we use Wikipedia2Vec to get entity vectors. As discussed in the previous chapter, Wikipedia2Vec is a disambiguation-only approach: in contrast to an end-to-end approach, it takes standard named entities directly as input and disambiguates them to the correct entries in a given KB. In our work, we therefore only need to query Wikipedia2Vec with the previously obtained entity entries to get the entity vectors as output.
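The lookup step that follows entity linking can be sketched as below. The sketch assumes the linker output has already been reduced to (mention, Wikipedia title) pairs and uses a plain dictionary of made-up vectors as a stand-in for the pre-trained Wikipedia2Vec entity vectors; both are hypothetical simplifications rather than the actual TagMe or Wikipedia2Vec interfaces. A title without a vector maps to `None`, which the embedding layer in Section 5.2 later turns into a zero row.

```python
def lookup_entity_vectors(linked_entities, entity_vectors):
    """Return (title, vector) pairs; vector is None when no embedding exists.

    linked_entities: (mention, wikipedia_title) pairs, e.g. from an entity linker.
    entity_vectors: dict title -> vector, a stand-in for pre-trained entity vectors.
    """
    return [(title, entity_vectors.get(title)) for _mention, title in linked_entities]

# Hypothetical linker output for "Berlin is my favorite city in Germany".
linked = [("Berlin", "Berlin"), ("city", "City"), ("Germany", "Germany")]
# Made-up 3-dimensional vectors; "City" deliberately has no vector.
entity_vectors = {"Berlin": [0.2, -0.1, 0.4], "Germany": [0.3, 0.0, 0.1]}
for title, vec in lookup_entity_vectors(linked, entity_vectors):
    print(title, vec)
```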


Figure 5.1: Convolutional neural network with words and entities for short text classification


5.2 Overall Architecture of EntCNN

As discussed in chapter 3, CNNs have achieved impressive results on text classification tasks. In our work, we focus on the single-layer CNN because of its strong empirical performance and use it as our baseline model.

We begin with a preprocessed sentence, as shown in Figure 5.1: “Berlin is my favorite city in Germany”. The network consists of an input (embedding) layer, a convolution and max-pooling layer, a dropout layer and an output layer. The details are described in the following paragraphs.

Embedding Layer

We first convert the preprocessed sentence into an embedding matrix. The word embedding matrix maps vocabulary word indices to low-dimensional vector representations; each row of the matrix is a word vector, so the matrix is essentially a look-up table. Each sentence is zero-padded to the maximum sentence length of the document. We initialize the word vectors with publicly available pre-trained word2vec or GloVe models; vocabulary words that are not present in the pre-trained models are initialized randomly. Similarly, our entity embedding matrix is initialized using Wikipedia2Vec. For TensorFlow implementation reasons, the entity embedding matrix must have the same length as the word embedding matrix. This does not affect the final result, because the padded zeros are ultimately ignored after the max-pooling step. Moreover, not all entities have entity vectors: we apply the same zero-padding strategy to entities without entity embeddings, i.e. they are represented by zero rows in the entity embedding matrix.
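The embedding-layer rules above (pre-trained initialization, random initialization of out-of-vocabulary words, zero padding to the maximum length $l$, and zero rows for entities without a vector) can be summarized in a small pure-Python sketch. The dictionaries stand in for the pre-trained word2vec/GloVe and Wikipedia2Vec vectors, and the uniform range used for random initialization is an assumption for illustration, not a value stated in this work.

```python
import random

def build_word_table(vocab, pretrained, d, rng):
    """Word look-up table: pre-trained vector when available, else a random vector.

    The uniform range (-0.25, 0.25) is an assumption for illustration.
    """
    table = {}
    for w in sorted(vocab):  # sorted for reproducible random draws
        if w in pretrained:
            table[w] = list(pretrained[w])
        else:
            table[w] = [rng.uniform(-0.25, 0.25) for _ in range(d)]
    return table

def sentence_matrix(items, table, l, d):
    """l x d matrix (list of rows): look up each item; items missing from the
    table become zero rows, and rows beyond len(items) are zero padding."""
    rows = [list(table[x]) if x in table else [0.0] * d for x in items[:l]]
    rows.extend([0.0] * d for _ in range(l - len(rows)))
    return rows

rng = random.Random(0)
l, d = 8, 3
words = ["berlin", "is", "my", "favorite", "city", "in", "germany"]
# Made-up stand-ins for pre-trained vectors; "favorite" is out of vocabulary.
pretrained_words = {w: [0.1 * (i + 1), 0.0, -0.1 * (i + 1)]
                    for i, w in enumerate(words) if w != "favorite"}
word_table = build_word_table(set(words), pretrained_words, d, rng)
W_w = sentence_matrix(words, word_table, l, d)

# Entities use entity vectors only: "City" has no vector, so it stays a zero row.
entity_vectors = {"Berlin": [0.2, -0.1, 0.4], "Germany": [0.3, 0.0, 0.1]}
W_e = sentence_matrix(["Berlin", "City", "Germany"], entity_vectors, l, d)
print(len(W_w), len(W_e))
```

Building the word table once per vocabulary, rather than drawing a random vector per occurrence, keeps an out-of-vocabulary word's vector identical across sentences.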

Assume that the maximum sentence length of a given document is $l$, and let $d$ denote the dimensionality of the word and entity vectors. We obtain the embedding matrix $W$ by concatenating the embedding matrices of words and entities: $W = W_w \oplus W_e$, where $W_w$ and $W_e$ denote the word and entity embedding matrices, respectively, and $\oplus$ is the concatenation operator. Thus, given a sentence, its embedding matrix can be represented as:

$$
W = v_{w_1} \oplus v_{w_2} \oplus \cdots \oplus v_{w_l} \oplus v_{e_1} \oplus v_{e_2} \oplus \cdots \oplus v_{e_l} \qquad (5.1)
$$
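Formula 5.1 lists the $l$ word vectors followed by the $l$ entity vectors of a sentence. Reading $\oplus$ as stacking these vectors as rows, which is our interpretation of the formula, the input matrix $W$ has shape $2l \times d$. Assuming a valid (unpadded) convolution, a filter of height $f$ spanning the full width $d$ can then be placed at $2l - f + 1$ positions, and some of these windows straddle the boundary between the last word rows and the first entity rows. The sketch below uses made-up toy matrices and hypothetical helper names.

```python
def concat_rows(W_w, W_e):
    """Formula 5.1 read as row-wise stacking: word rows followed by entity rows."""
    if len(W_w) != len(W_e):
        raise ValueError("word and entity matrices must both have l rows")
    return [list(r) for r in W_w] + [list(r) for r in W_e]

def num_windows(n_rows, f):
    """Number of positions for an f x d filter with a valid (unpadded) convolution."""
    return max(0, n_rows - f + 1)

l, d = 4, 2
W_w = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.0, 0.0]]  # last row: zero padding
W_e = [[0.5, 0.5], [0.0, 0.0], [0.0, 0.0], [0.0, 0.0]]  # one entity, rest zero rows
W = concat_rows(W_w, W_e)
print(len(W), len(W[0]))  # 2l rows, d columns
print([num_windows(len(W), f) for f in (3, 4, 5)])
```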

Convolution and Max-Pooling Layer

Unlike convolution operations in computer vision where filter width can be one or any

5 CONVOLUTIONAL NEURAL NETWORK FOR SHORT TEXT CLASSIFICATION 32

other integers, filter width should be equal to the dimensionality of word and entity vectors

(i.e. d) in text classification. It doesn’t make any sense to operate on part of word vectors.

For example, for word example, we can’t recognize it when just seeing xa or ampl. Thus

we just need to vary filter height f. Given a filter (convolution kernel) which has size

f × d, suppose we have a concatenation of words and entities v

i

, v

i+1

, . . . , v

i+j

, denoted

as [v

i

: v

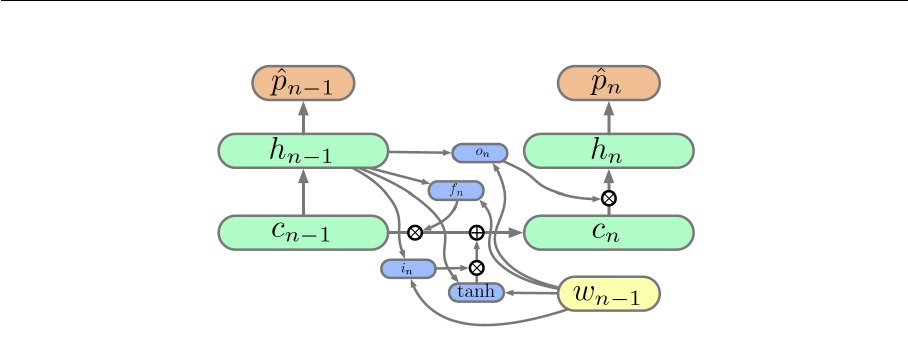

i+f−1