STUDY

Panel for the Future of Science and Technology

EPRS | European Parliamentary Research Service

Scientific Foresight Unit (STOA)

PE 641.530 – June 2020

EN

The impact of

the General Data

Protection

Regulation

(GDPR) on

artificial

intelligence

The impact of the General Data

Protection Regulation (GDPR)

on artificial intelligence

This study addresses the relationship between the General Data

Protection Regulation (GDPR) and artificial intelligence (AI). After

introducing some basic concepts of AI, it reviews the state of the art

in AI technologies and focuses on the application of AI to personal

data. It considers challenges and opportunities for individuals and

society, and the ways in which risks can be countered and

opportunities enabled through law and technology.

The study then provides an analysis of how AI is regulated in the

GDPR and examines the extent to which AI fits into the GDPR

conceptual framework. It discusses the tensions and proximities

between AI and data protection principles, such as, in particular,

purpose limitation and data minimisation. It examines the legal

bases for AI applications to personal data and considers duties of

information concerning AI systems, especially those involving

profiling and automated decision-making. It reviews data subjects'

rights, such as the rights to access, erasure, portability and object.

The study carries out a thorough analysis of automated decision-

making, considering the extent to which automated decisions are

admissible, the safeguard measures to be adopted, and whether

data subjects have a right to individual explanations. It then

addresses the extent to which the GDPR provides for a preventive

risk-based approach, focusing on data protection by design and by

default. The possibility to use AI for statistical purposes, in a way that

is consistent with the GDPR, is also considered.

The study concludes by observing that AI can be deployed in a way

that is consistent with the GDPR, but also that the GDPR does not

provide sufficient guidance for controllers, and that its prescriptions

need to be expanded and concretised. Some suggestions in this

regard are developed.

STOA | Panel for the Future of Science and Technology

AUTHOR

The study was led by Professor Giovanni Sartor, European University Institute of Florence, at the request of the

Panel for the Future of Science and Technology (STOA) and managed by the Scientific Foresight Unit, within

the Directorate-General for Parliamentary Research Services (EPRS) of the Secretariat of the European

Parliament. It was co-authored by Professor Sartor and Dr Francesca Lagioia, European University Institute of

Florence, working under his supervision.

ADMINISTRATOR RESPONSIBLE

Mihalis Kritikos, Scientific Foresight Unit (STOA)

To contact the publisher, please e-mail stoa@ep.europa.eu

LINGUISTIC VERSION

Original: EN

Manuscript completed in June 2020.

DISCLAIMER AND COPYRIGHT

This document is prepared for, and addressed to, the Members and staff of the European Parliament as

background material to assist them in their parliamentary work. The content of the document is the sole

responsibility of its author(s) and any opinions expressed herein should not be taken to represent an official

position of the Parliament.

Reproduction and translation for non-commercial purposes are authorised, provided the source is

acknowledged and the European Parliament is given prior notice and sent a copy.

Brussels © European Union, 2020.

PE 641.530

ISBN: 978-92-846-6771-0

doi: 10.2861/293

QA-QA-02-20-399-EN-N

http://www.europarl.europa.eu/stoa

(STOA website)

http://www.eprs.ep.parl.union.eu (intranet)

http://www.europarl.europa.eu/thinktank (internet)

http://epthinktank.eu (blog)

The impact of the General Data Protection Regulation (GDPR) on artificial intelligence

I

Executive summary

AI and big data

In the last decade, AI has gone through rapid development. It has acquired a solid scientific basis

and has produced many successful applications. It provides opportunities for economic, social, and

cultural development; energy sustainability; better health care; and the spread of knowledge. These

opportunities are accompanied by serious risks, including unemployment, inequality,

discrimination, social exclusion, surveillance, and manipulation.

There has been an impressive leap forward on AI since it began to focus on the application of

machine learning to mass volumes of data. Machine learning systems discover correlations between

data and build corresponding models, which link possible inputs to presumably correct responses

(predictions). In machine learning applications, AI systems learn to make predictions after being

trained on vast sets of examples. Thus, AI has become hungry for data, and this hunger has spurred

data collection, in a self-reinforcing spiral: the development of AI systems based on machine

learning presupposes and fosters the creation of vast data sets, i.e., big data. The integration of AI

and big data can deliver many benefits for the economic, scientific and social progress. However, it

also contributes to risks for individuals and for the whole of society, such as pervasive surveillance

and influence on citizens' behaviour, polarisation and fragmentation in the public sphere.

AI and personal data

Many AI applications process personal data. On the one hand, personal data may contribute to the

data sets used to train machine learning systems, namely, to build their algorithmic models. On the

other hand, such models can be applied to personal data, to make inferences concerning particular

individuals.

Thanks to AI, all kinds of personal data can be used to analyse, forecast and influence human

behaviour, an opportunity that transforms such data, and the outcomes of their processing, into

valuable commodities. In particular, AI enables automated decision-making even in domains that

require complex choices, based on multiple factors and non-predefined criteria. In many cases,

automated predictions and decisions are not only cheaper, but also more precise and impartial than

human ones, as AI systems can avoid the typical fallacies of human psychology and can be subject

to rigorous controls. However, algorithmic decisions may also be mistaken or discriminatory,

reproducing human biases and introducing new ones. Even when automated assessments of

individuals are fair and accurate, they are not unproblematic: they may negatively affect the

individuals concerned, who are subject to pervasive surveillance, persistent evaluation, insistent

influence, and possible manipulation.

The AI-based processing of vast masses of data on individuals and their interactions has social

significance: it provides opportunities for social knowledge and better governance, but it risks

leading to the extremes of 'surveillance capitalism' and 'surveillance state'.

A normative framework

It must be ensured that the development and deployment of AI tools takes place in a socio-tech nical

framework – inclusive of technologies, human skills, organisational structures, and norms – where

individual interests and the social good are preserved and enhanced.

To provide regulatory support for the creation of such a framework, ethical and legal principles are

needed, together with sectorial regulations. The ethical principles include autonomy, prevention of

harm, fairness and explicability; the legal ones include the rights and social values enshrined in the

EU charter, in the EU treaties, as well as in national constitutions. The sectoral regulations involved

include first of all data protection law, consumer protection law, and competition law, but also other

STOA | Panel for the Future of Science and Technology

II

domains of the law, such as labour law, administrative law, civil liability etc. The pervasive impact of

AI on European society is reflected in the multiplicity of the legal issues it raises.

To ensure adequate protection of citizens against the risks resulting from the misuses of AI, beside

regulation and public enforcement, the countervailing power of civil society is also needed to detect

abuses, inform the public, and activate enforcement. AI-based citizen-empowering technologies

can play an important role in this regard, by enabling citizens not only to protect themselves from

unwanted surveillance and 'nudging', but also to detect unlawful practices, identify instances of

unfair treatment, and distinguish fake and untrustworthy information.

AI is compatible with the GDPR

AI is not explicitly mentioned in the GPDR, but many provisions in the GDPR are relevant to AI, and

some are indeed challenged by the new ways of processing personal data that are enabled by AI.

There is indeed a tension between the traditional data protection principles – purpose limitation,

data minimisation, the special treatment of 'sensitive data', the limitation on automated decisions –

and the full deployment of the power of AI and big data. The latter entails the collection of vast

quantities of data concerning individuals and their social relations and processing such data for

purposes that were not fully determined at the time of collection. However, there are ways to

interpret, apply, and develop the data protection principles that are consistent with the beneficial

uses of AI and big data.

The requirement of purpose limitation can be understood in a way that is compatible with AI and

big data, through a flexible application of the idea of compatibility, which allows for the reuse of

personal data when this is not incompatible with the purposes for which the data were o r iginally

collected. Moreover, reuse for statistical purposes is assumed to be compatible, and thus would in

general be admissible (unless it involves unacceptable risks for the data subject).

The principle of data minimisation can also be understood in such a way as to allow for beneficial

applications of AI. Minimisation may require, in some contexts, reducing the 'personality' of the

available data, rather than the amount of such data, i.e., it may require reducing, through measures

such as pseudonymisation, the ease with which the data can be connected to individuals. The

possibility of re-identification should not entail that all re-identifiable data are considered personal

data to be minimised. Rather the re-identification of data subjects should be considered as creation

of new personal data, which should be subject to all applicable rules. Re-identification should

indeed be strictly prohibited unless all conditions for the lawful collection of personal data are met,

and it should be compatible with the purposes for which the data were originally collected and

subsequently anonymised.

The information requirements established by the GDPR can be met with regard to AI-based

processing, even though the complexity of AI application has to be taken into account. The

information made available to data subjects should enable them to understand the purpose of each

AI-based processing and its limits, even without going into unnecessary technical details.

The GDPR allows for inferences based on personal data, provided that appropriate safeguards are

adopted. Profiling is in principle prohibited, but there are ample exceptions (contract, law or

consent). Uncertainties exist concerning the extent to which an individual explanation s h ould be

provided to the data subject. It is also uncertain to what extent reasonableness criteria may apply to

automated decisions.

The GDPR provisions on preventive measures, and in particular those concerning privacy by design

and by default, do not hinder the development of AI systems, if correctly designed and

implemented, even though they may entail some additional costs. It needs to be clarified which AI

applications present high risks and therefore require a preventive data protection assessment, and

possibly the preventive involvement of data protection authorities.

The impact of the General Data Protection Regulation (GDPR) on artificial intelligence

III

Finally, the possibility of using personal data for statistical purposes opens opportunities for the

processing of personal data in ways that do not involve the inference of new personal data.

Statistical processing requires security measures that are proportionate to the risks for the data

subject, and which should include at least pseudonymisation.

The GDPR prescriptions are often vague and open-ended

The GDPR allows for the development of AI and big data applications that successfully balance data

protection and other social and economic interests, but it provides limited guidance on how to

achieve this goal. It indeed abounds in vague clauses and open standards, the application of which

often requires balancing competing interests. In the case of AI/big data applications, the

uncertainties are aggravated by the novelty of the technologies, their complexity and the broad

scope of their individual and social effects.

It is true that the principles of risk-prevention and accountability potentially direct the processing of

personal data toward a 'positive sum' game, in which the advantages of the processing, when

constrained by appropriate risk-mitigation measures, outweigh its possible disadvantages.

Moreover these principles enable experimentation and learning, avoiding the over- and under-

inclusiveness issues involved in the applications of strict rules. However, by requiring controllers to

rely on such principles, the GDPR offloads the task of establishing how to manage risk and find

optimal solutions onto controllers, a task that may be challenging as well as costly. The stiff penalties

for non-compliance, when combined with the uncertainty on the requirements for compliance, may

constitute a novel risk, which, rather than incentivising the adoption of adequate compliance

measure, may prevent small companies from engaging in new ventures.

Thus, the successful application of GDPR to AI-application depends heavily on what guidance data

protection bodies and other competent authorities will provide to controllers and data subjects.

Appropriate guidance would diminish the cost of legal uncertainty and would direct companies –

in particular small ones that mostly need such advice – to efficient and data protection-co mpliant

solutions.

Some policy indications

The study concludes with the following indications on AI and the processing of personal data.

• The GDPR generally provides meaningful indications for data protection in the context of AI

applications.

• The GDPR can be interpreted and applied in such a way that it does not substantially hinder

the application of AI to personal data, and that it does not place EU companies at a

disadvantage by comparison with non-European competitors.

• Thus, the GDPR does not require major changes in order to address AI applications.

• However, a number of AI-related data-protection issues do not have an explicit answer in

the GDPR. This may lead to uncertainties and costs, and may needlessly hamper the

development of AI applications.

• Controllers and data subjects should be provided with guidance on how AI can be applied

to personal data consistently with the GDPR, and on the available technologies for doing so.

Such guidance can prevent costs linked to legal uncertainty, while enhancing compliance.

• Providing guidance requires a multilevel approach, which involves data protection

authorities, civil society, representative bodies, specialised agencies, and all stakeholders.

• A broad debate is needed involving not only political and administrative authorities, but

also civil society and academia. This debate needs to address the issues of determining what

STOA | Panel for the Future of Science and Technology

IV

standards should apply to AI processing of personal data, particularly to ensure the

acceptability, fairness and reasonability of decisions on individuals. It should also address

what applications are to be barred unconditionally, and which ones may instead be

admitted only under specific circumstances and controls.

• Discussion of a large set of realistic examples is needed to clarify which AI applications are

socially acceptable, under what circumstances and with what constraints. The debate on AI

can also be an opportunity to reconsider in depth, with more precision and concreteness,

some basic ideas of law and ethics, such as acceptable and practicable conceptions of

fairness and non-discrimination.

• Political authorities, such as the European Parliament, the European Commission and the

Council should provide general open-ended indications about the values at stake and ways

to achieve them.

• Data protection authorities, and in particular the Data Protection Board, should provide

controllers with specific guidance on the many issues for which no precise answer can be

found in the GDPR. Such guidance can often take the form of soft law instruments designed

with dual legal and technical competence, as in the case of Article 29 Working Party

opinions.

• National Data Protection Authorities should also provide guidance, in particular when

contacted for advice by controllers, or in response to data subjects' queries.

• The fundamental data protection principles – especially purpose limitation and

minimisation – should be interpreted in such a way that they do not exclude the use of

personal data for machine learning purposes. They should not preclude the creation of

training sets and the construction of algorithmic models, whenever the resulting AI systems

are socially beneficial and compliant with data protection rights.

• The use of personal data in a training set, for the purpose of learning general correlations

and connection, should be distinguished from their use for individual profiling, which is

about making assessments about individuals.

• The inference of new personal data, as it is done in profiling, should be considered as

creation of new personal data, when providing an input for making assessments and

decisions. The same should apply to the re-identification of anonymous or pseudonymous

data.

• Guidance is needed on profiling and automated decision-making. It seems that an

obligation of reasonableness – including normative and reliability aspects – should be

imposed on controllers engaging in profiling, mostly, but not only when profiling is aimed

at automated decision-making. Controllers should also be under an obligation to provide

individual explanations, to the extent that this is possible according to the available AI

technologies, and reasonable according to costs and benefits. The explanations may be

high-level, but they should still enable users to contest detrimental outcomes.

• It may be useful to establish obligations to notify data protection authorities of applications

involving individualised profiling and decision-making, possibly accompanied with the

right to ask for indications on compliance.

• The content of the controller's obligation to provide information (and the corresponding

rights of the data subject) about the 'logic' of an AI system need to be specified, with

appropriate examples, an in relation to different technologies.

The impact of the General Data Protection Regulation (GDPR) on artificial intelligence

V

• It needs to be ensured that the right to opt out of profiling and data transfers can easily be

exercised, through appropriate user interfaces. The same applies to the right to be

forgotten.

• Normative and technological requirements concerning AI by design and by default need to

be specified.

• The possibility of repurposing data for AI applications that do not involve profiling –

scientific and statistical ones – need to be broad, as long as appropriate precautions are in

place preventing abuse.

• Strong measures need to be adopted against companies and public authorities that

intentionally abuse the trust of data subjects by using their data against their interests.

• Collective enforcement in the data protection domain should be enabled and facilitated.

In conclusion, controllers engaging in AI-based processing should endorse the values of the GDPR

and adopt a responsible and risk-oriented approach. This can be done in ways that are compa tible

with the available technology and economic profitability (or the sustainable achievement of public

interests, in the case of processing by public authorities). However, given the complexity of the

matter and the gaps, vagueness and ambiguities present in the GDPR, controllers should not be left

alone in this exercise. Institutions need to promote a broad societal debate on AI applications, and

should provide high-level indications. Data protection authorities need to actively engage in a

dialogue with all stakeholders, including controllers, processors, and civil society, in order to

develop appropriate responses, based on shared values and effective technologies. Consistent

application of data protection principles, when combined with the ability to efficiently use AI

technology, can contribute to the success of AI applications, by generating trust and preventing

risks.

STOA | Panel for the Future of Science and Technology

VI

Table of Contents

1. Introduction............................................................................................................................................................ 1

2. AI and personal data ............................................................................................................................................ 2

2.1. The concept and scope of AI...................................................................................................................... 2

2.1.1. A definition of AI ...................................................................................................................................... 2

2.1.2. AI and robotics ......................................................................................................................................... 3

2.1.3. AI and algorithms..................................................................................................................................... 3

2.1.4. Artificial intelligence and big data........................................................................................................ 4

2.2. AI in the new millennium............................................................................................................................ 4

2.2.1. Artificial general and specific intelligence .......................................................................................... 5

2.2.2. AI between logical models and mac hine learning ............................................................................ 8

2.2.3. Approaches to learning ........................................................................................................................ 10

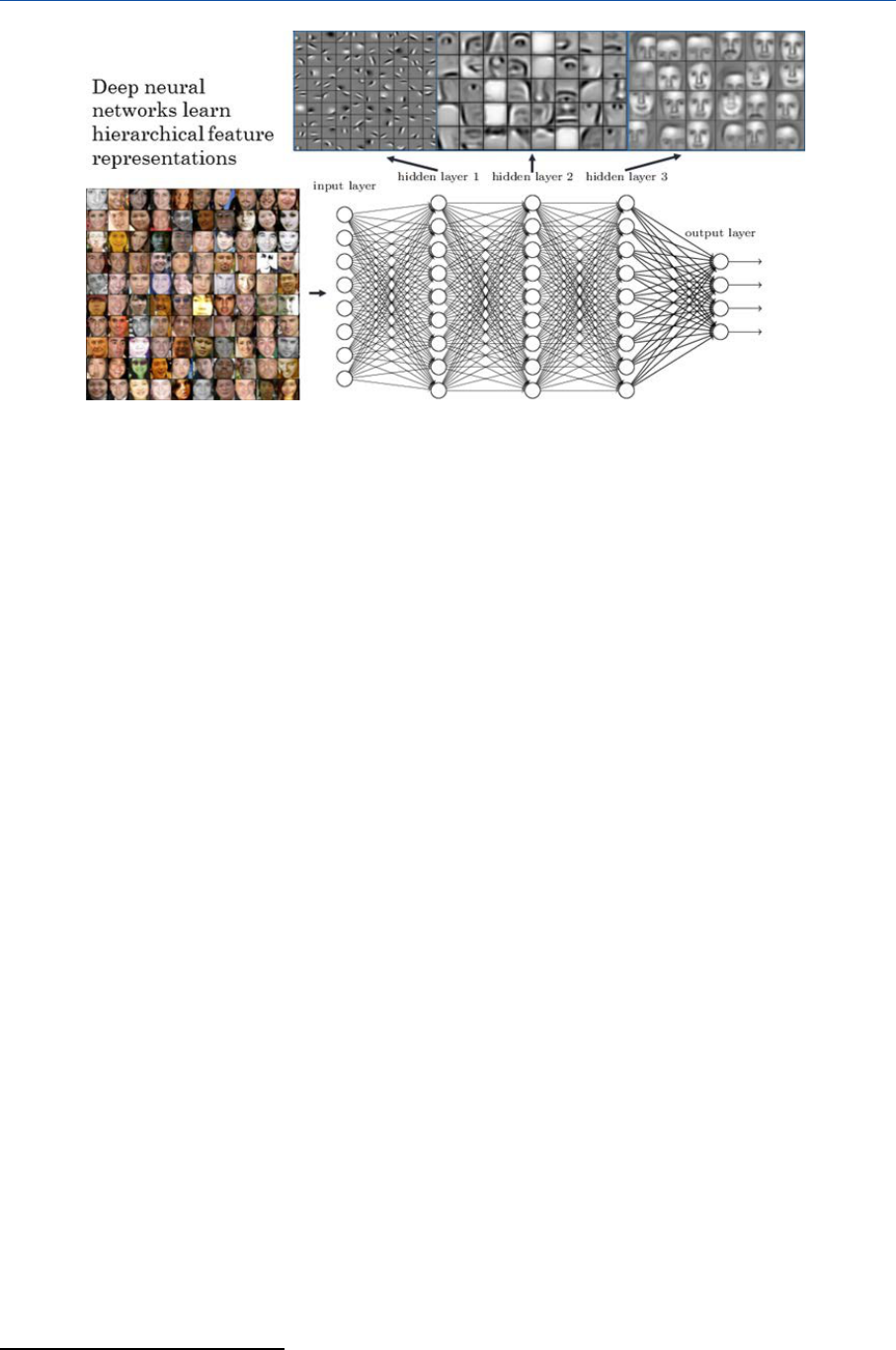

2.2.4. Neural networks and deep learning ................................................................................................... 13

2.2.5. Explicability ............................................................................................................................................. 14

2.3. AI and (personal) data ............................................................................................................................... 15

2.3.1. Data for automated predictions and assessments .......................................................................... 15

2.3.2. AI and big data : risks and opportunities ........................................................................................... 18

2.3.3. AI in decision-making concerning individuals: fairness and discrimination............................... 20

2.3.4. Profiling, influence and manipulation ............................................................................................... 22

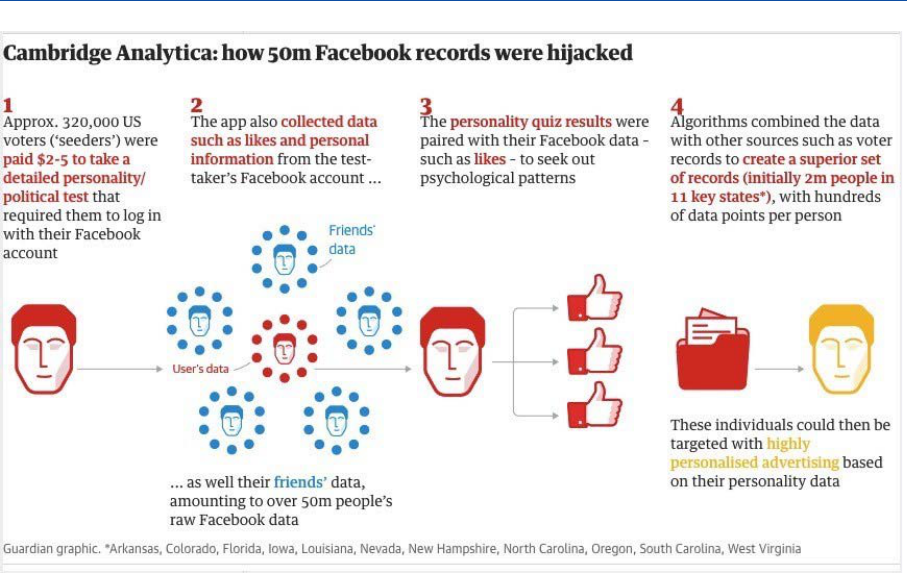

2.3.5. The dangers of profiling: the case of Cambridge Analytica ........................................................... 23

2.3.6. Towards surveillance capitalism or surveillance state? .................................................................. 25

2.3.7. The general problem of social sorting and differential treatment ............................................... 27

2.4. AI, legal values and norms ....................................................................................................................... 30

2.4.1. The ethical framework .......................................................................................................................... 30

2.4.2. Legal principles and norms .................................................................................................................. 31

2.4.3. Some interests at stake ......................................................................................................................... 32

2.4.4. AI technologies for social and legal empowerment........................................................................ 33

3. AI in the GDPR ...................................................................................................................................................... 35

3.1. AI in the conceptual framework of the GDPR..................................................................................... 35

3.1.1. Article 4(1) GDPR: Personal data (identification, identifiability, re-identification)..................... 35

3.1.2. Article 4(2) GDPR: Profiling................................................................................................................... 39

3.1.3. Article 4(11) GDPR: Consent................................................................................................................. 41

3.2. A I and the data pro tection princi ples................................................................................................... 44

The impact of the General Data Protection Regulation (GDPR) on artificial intelligence

VII

3.2.1. Article 5(1)(a) GDPR: Fairness, transparency ..................................................................................... 44

3.2.2. Article 5(1)(b) GDPR: Purpose limitation............................................................................................ 45

3.2.3. Article 5(1)(c) GDPR: Data minimisation ............................................................................................ 47

3.2.4. Article 5(1)(d) GDPR: Accuracy............................................................................................................. 48

3.2.5. Article 5(1)(e) GDPR: Storage limitation............................................................................................. 48

3.3. AI and legal bases ....................................................................................................................................... 49

3.3.1. Article 6(1)(a) GDPR: Consent .............................................................................................................. 49

3.3.2. Article 6(1)(b-e) GDPR: Necessity ........................................................................................................ 49

3.3.3. Article 6(1)(f) GDPR: Legitimate interest ............................................................................................ 50

3.3.4. Article 6(4) GDPR: Repurposing........................................................................................................... 51

3.3.5. Article 9 GDPR: AI and special categories of data ............................................................................ 53

3.4. AI and transparency ................................................................................................................................... 53

3.4.1. Articles 13 and 14 GDPR: Information duties.................................................................................... 53

3.4.2. Information on automated decision-making ................................................................................... 54

3.5. AI and data subjects' rights...................................................................................................................... 56

3.5.1. Article 15 GDPR: The right to access................................................................................................... 56

3.5.2. Article 17 GDPR: The right to erasure ................................................................................................. 57

3.5.3. Article 19 GDPR: The right to portability ........................................................................................... 57

3.5.4. Article 21 (1): The right to object......................................................................................................... 57

3.5.5. Article 21 (1) and (2): Objecting to profiling and direct marketing .............................................. 58

3.5.6. Article 21 (2). Objecting to processing for research and statistical purposes............................. 58

3.6. Automated decision-making................................................................................................................... 59

3.6.1. Article 22(1) GDPR: The prohibition of automated decisions ........................................................ 59

3.6.2. Article 22(2) GDPR: Exceptions to the prohibition of 22(1) ............................................................ 60

3.6.3. Article 22(3) GDPR: Safeguard measures ........................................................................................... 61

3.6.4. Article 22(4) GDPR: Automated decision-making and sensitive data .......................................... 62

3.6.5. A right to explanation? ......................................................................................................................... 62

3.6.6. What rights to information and explanation? .................................................................................. 64

3.7. AI and privacy by design .......................................................................................................................... 66

3.7.1. Right-based and risk-based approaches to data protection ......................................................... 66

3.7.2. A risk-based approach to AI ................................................................................................................. 66

3.7.3. Article 24 GDPR: Responsibility of the controller ............................................................................. 67

3.7.4. Article 25 GDPR: Data protection by design and by default .......................................................... 67

STOA | Panel for the Future of Science and Technology

VIII

3.7.5. Article 35 and 36 GDPR: Data protection impact assessment ....................................................... 68

3.7.6. Article 37 GDPR: Data protection officers.......................................................................................... 68

3.7.7. Articles 40-43 GPDR: Codes of conduct and certification............................................................... 69

3.7.8. The role of data protection authorities.............................................................................................. 69

3.8. AI, statistical processing and scientific research............................................................................... 70

3.8.1. The concept of statistical processing ................................................................................................. 70

3.8.2. Article 5(1)(b) GDPR: Repurposing for research and statistical processing................................. 71

3.8.3. Article 89(1,2) GDPR: Safeguards for research of statistical processing....................................... 71

4. Policy options: How to reconcile AI-based innovation with individual rights & social values,

and ensure the adoption of data protection rules and principles............................................................ 73

4.1. AI and personal data.................................................................................................................................. 73

4.1.1. Opportunities and risks......................................................................................................................... 73

4.1.2. Normative foundations......................................................................................................................... 73

4.2. AI in the GDPR.............................................................................................................................................. 74

4.2.1. Personal data in re-identification and inferences ............................................................................ 74

4.2.2. Profiling.................................................................................................................................................... 74

4.2.3. Consent.................................................................................................................................................... 74

4.2.4. AI and transparency............................................................................................................................... 74

4.2.5. The rights to erasure and portability.................................................................................................. 75

4.2.6. The right to object ................................................................................................................................. 75

4.2.7. Automated decision-making ............................................................................................................... 75

4.2.8. AI and privacy by design....................................................................................................................... 75

4.2.9. AI, statistical processing and scientific research .............................................................................. 76

4.3. AI and GDPR compatibility ...................................................................................................................... 76

4.3.1. No incompatibility between the GDPR and AI and big data ......................................................... 76

4.3.2. GDPR prescriptions are often vague and open-ended ................................................................... 77

4.3.3. Providing for oversight and enforcement......................................................................................... 78

4.4. Final considerations: some policy proposals on AI and the GDPR............................................... 79

5. References.............................................................................................................................................................. 82

The impact of the General Data Protection Regulation (GDPR) on artificial intelligence

IX

Table of figures

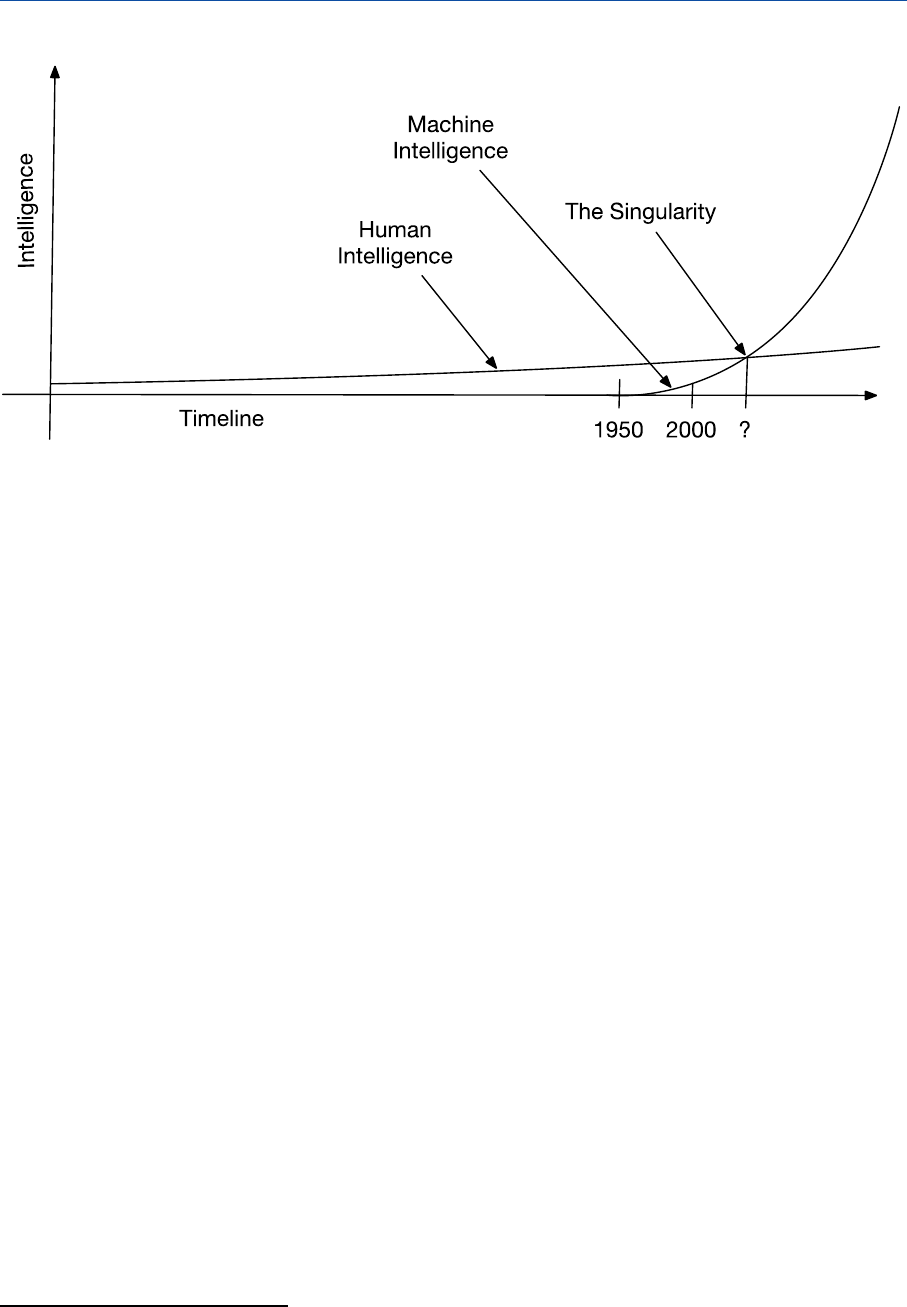

Figure 1 – Hypes and winters of AI _________________________________________________ 5

Figure 2 – General AI: The singularity _______________________________________________ 6

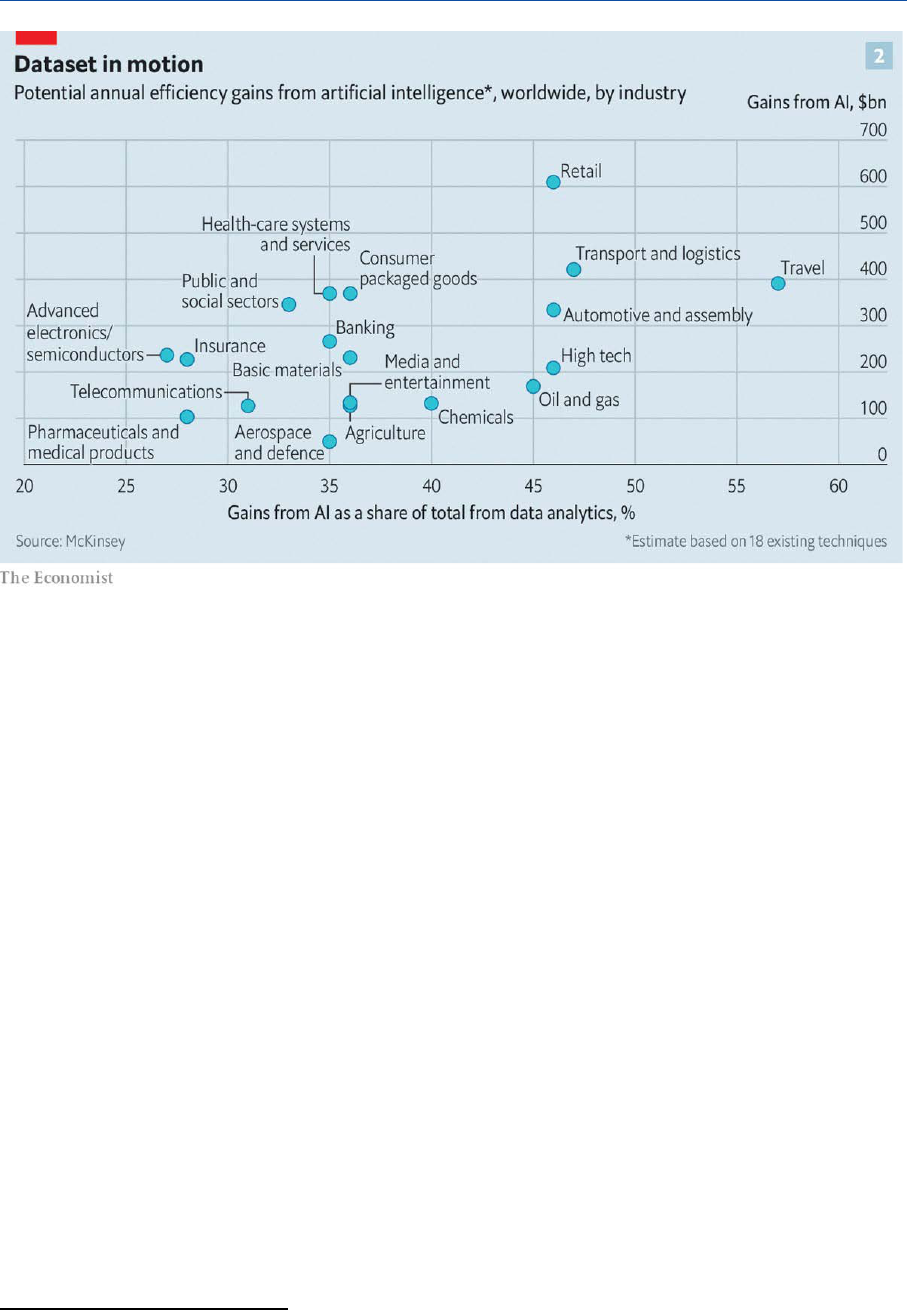

Figure 3 – Efficiency gains from AI _________________________________________________ 7

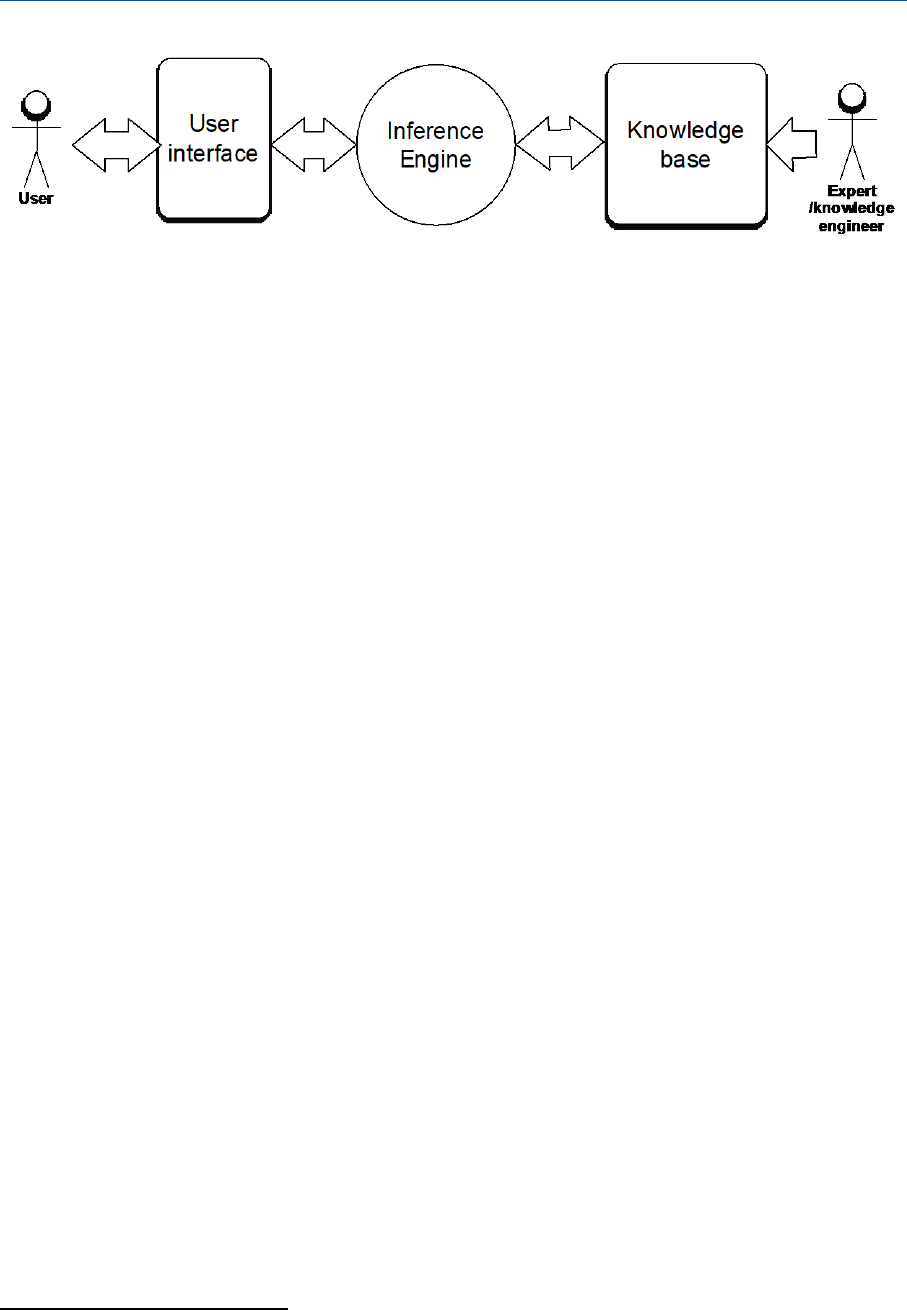

Figure 4 – Basic structure of expert systems__________________________________________ 9

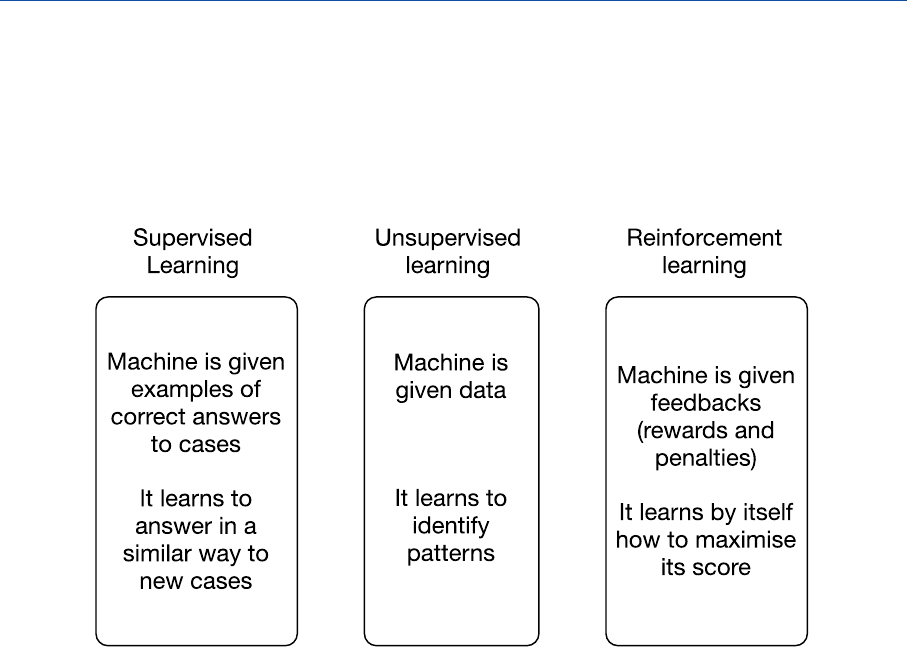

Figure 5 – Kinds of learning _____________________________________________________ 10

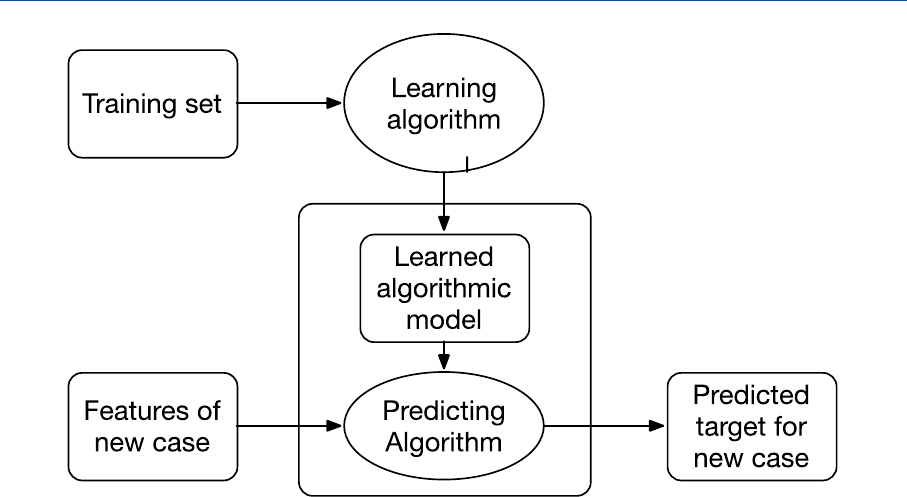

Figure 6 – Supervised learning ___________________________________________________ 11

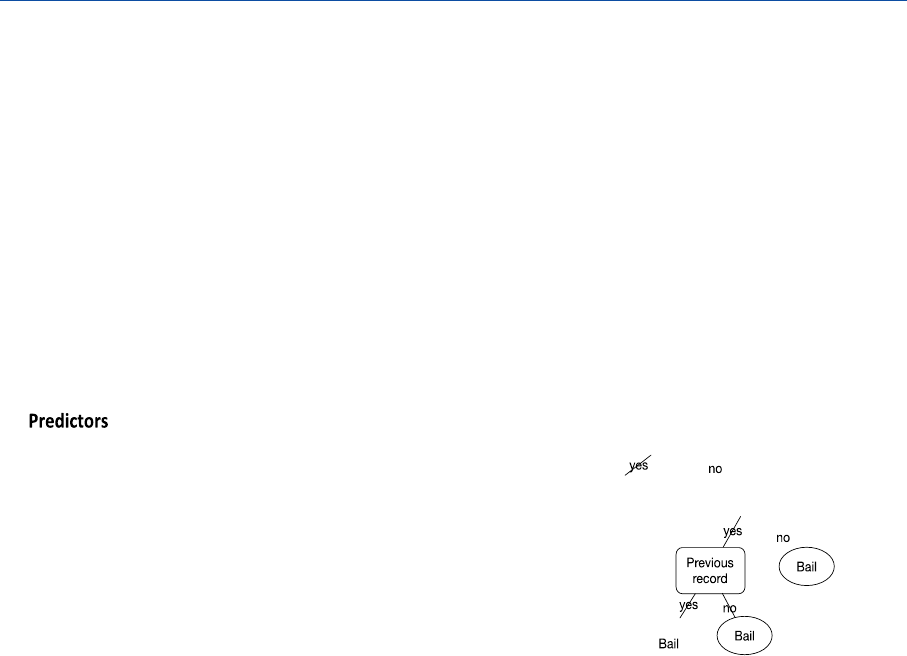

Figure 7 – Training set and decision tree for bail decisions _____________________________ 12

Figure 8 – Multilayered (deep) neural network for face recognition ______________________ 14

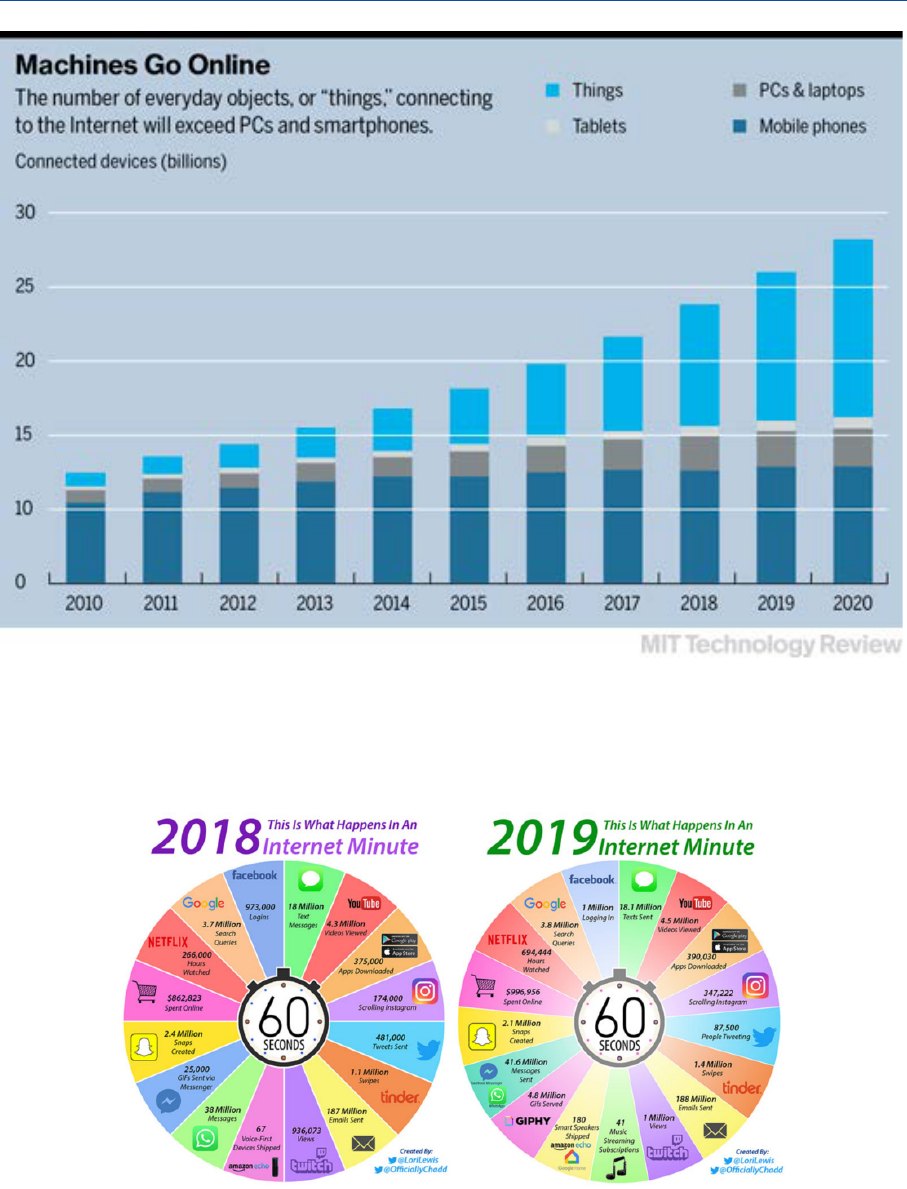

Figure 9 – Number of connected devices ___________________________________________ 17

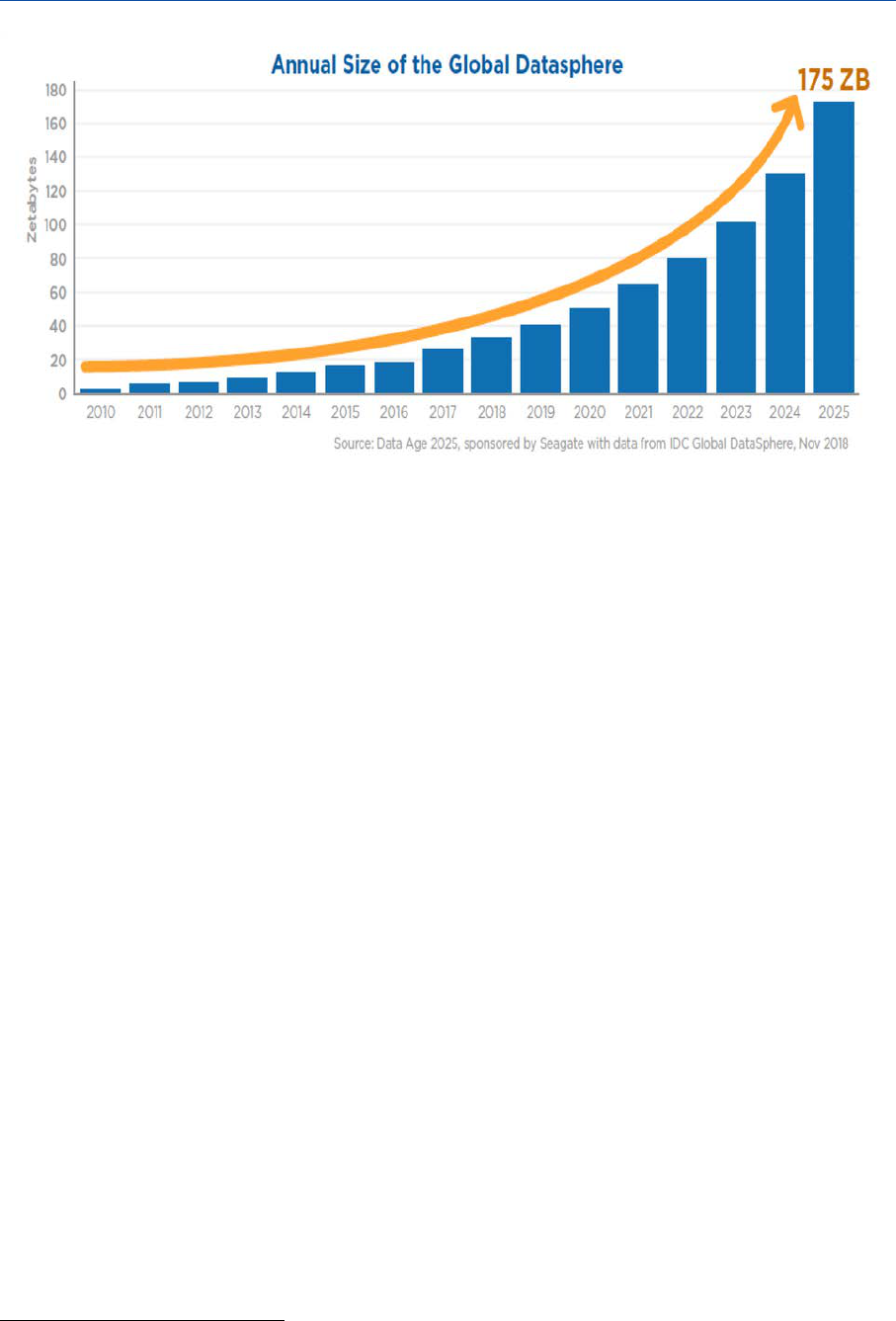

Figure 10 – Data collected in a minute of online activity worldwide ______________________ 17

Figure 11 – Growth of global data ________________________________________________ 18

Figure 12 – The Cambridge Analytica case __________________________________________ 24

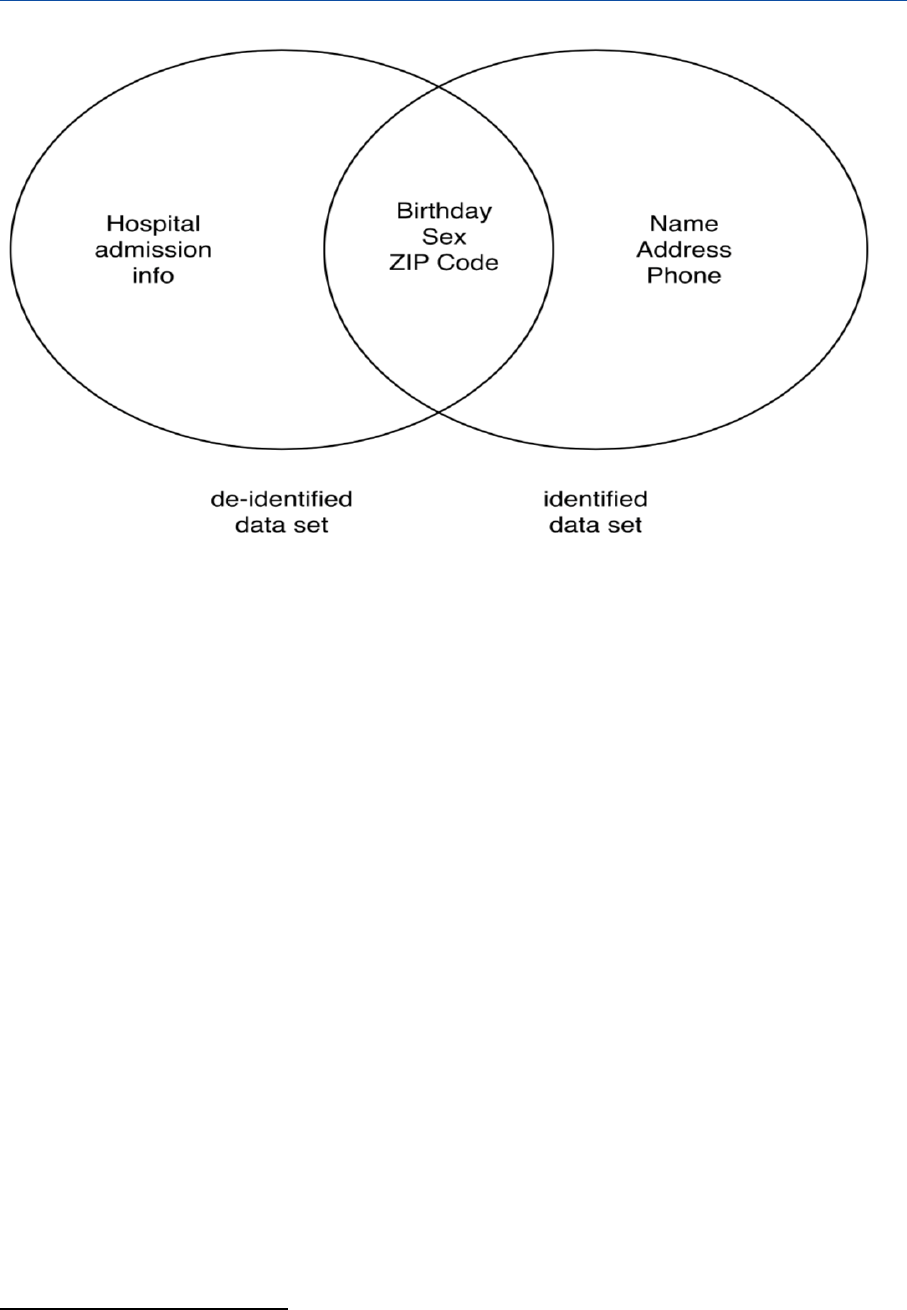

Figure 13 – The connection between identified and de-identified data ___________________ 37

The impact of the General Data Protection Regulation (GDPR) on artificial intelligence

1

1. Introduction

This study aims to provide a comprehensive assessment of the interactions between artificial

intelligence (AI) and data protection, focusing on the 2016 EU General Data Protection Regulation

(GDPR).

Artificial intelligence systems are populating the human and social world in multiple varieties:

industrial robots in factories, service robots in houses and healthcare facilities, autonomous vehicles

and unmanned aircraft in transportation, autonomous electronic agents in e-commerce and

finance, autonomous weapons in the military, intelligent communicating devices embedded in

every environment. AI has come to be one of the most powerful drivers of social transformation: it

is changing the economy, affecting politics, and reshaping citizens' lives and interactions.

Developing appropriate policies and regulations for AI is a priority for Europe, since AI increases

opportunities and risks in ways that are of the greatest social and legal importance. AI may enhance

human abilities, improve security and efficiency, and enable the universal provision of knowledge

and skills. On the other hand, it may increase opportunities for control, manipulation, and

discrimination; disrupt social interactions; and expose humans to harm resulting from technological

failures or disregard for individual rights and social values.

A number of concrete ethical and legal issues have already emerged in connection with AI in several

domains, such as civil liability, insurance, data protection, safety, contracts and crimes. Such issues

acquire greater significance as more and more intelligent systems leave the controlled and limited

environments of laboratories and factories and share the same physical and virtual spaces with

humans (internet services, roads, skies, trading on the stock exchange, other markets, etc.).

Data protection is at the forefront of the relationship between AI and the law, as many AI

applications involve the massive processing of personal data, including the targeting and

personalised treatment of individuals on the basis of such data. This explains why data protection

has been the area of the law that has most engaged with AI, although other domains of the law are

involved as well, such as consumer protection law, competition law, antidiscrimination law, and

labour law.

This study will adopt an interdisciplinary perspective. Artificial intelligence technologies will be

examined and assessed on the basis of most recent scientific and technological research, and their

social impacts will be considered by taking account of an array of approaches, from sociology to

economics and psychology. A normative perspective will be provided by works in sociology and

ethics, and in particular information, computer, and machine ethics. Legal aspects will be analysed

by reference to the principles and rules of European law, as well as to their application in national

contexts. The report will focus on data protection and the GDPR, though it will also consider how

data protection shares with other domains of the law the task of addressing the opportunities and

risks that come with AI.

STOA | Panel for the Future of Science and Technology

2

2. AI and personal data

This section introduces the technological and social background of the study, namely, the

development of AI and its connections with the processing of personal and other data. First the

concept of AI will be introduced (Section 2.1), then the parallel progress of AI and large-sca le data

processing will be discussed (Section 2.2), and finally, the analysis will turn to the relation between

AI and the processing of personal data (Section 2.3).

2.1. The concept and scope of AI

The concept of AI will be introduced, as well as its connections with the robotics and algorithms.

2.1.1. A definition of AI

The broadest definition of artificial intelligence (AI) characterises it as the attempt to build machines

that 'perform functions that require intelligence when performed by people.'

1

A more elaborate

notion has been provided by the High Level Expert Group on AI (AI HLEG), set up by the EU

Commission:

Artificial intelligence (AI) systems are software (and possibly also hardware) systems

designed by humans that, given a complex goal, act in the physical or digital dimension

by perceiving their environment through data acquisition, interpreting the collected

structured or unstructured data, reasoning on the knowledge, or processing the

information, derived from this data and deciding the best action(s) to take to achieve

the given goal. AI systems can either use symbolic rules or learn a numeric model, and

they can also adapt their behaviour by analysing how the environment is affected by

their previous actions.

2

This definition can be accepted with the proviso that most AI systems only perform a fraction of the

activities listed in the definition: pattern recognition (e.g., recognising images of plants or animals,

human faces or attitudes), language processing (e.g., understanding spoken languages, translating

from one language into another, fighting spam, or answering queries), practical suggestions (e.g.,

recommending purchases, purveying information, performing logistic planning, or optimising

industrial processes), etc. On the other hand, some systems may combine many such capacities, as

in the example of self-driving vehicles or military and care robots.

The High-Level Expert Group characterises the scope of research in AI as follows:

As a scientific discipline, AI includes several approaches and techniques, such as

machine learning (of which deep learning and reinforcement learning are specific

examples), machine reasoning (which includes planning, scheduling, knowledge

representation and reasoning, search, and optimization), and robotics (which includes

control, perception, sensors and actuators, as well as the integration of all other

techniques into cyber-physical systems).

To this definition, we could also possibly add also communication, and particularly the

understanding and generation of language, as well as the domains of perception and vision.

1

Kurzweil (1990, 14), Russel and Norvig (2016, Section 1.1).

2

AI-HLEG (2019).

The impact of the General Data Protection Regulation (GDPR) on artificial intelligence

3

2.1.2. AI and robotics

AI constitutes the core of robotics, the discipline that aims to build 'physical agents that performs

tasks by manipulating the physical world.'

3

The High-Level Expert Group describes robotics as

follows

Robotics can be defined as 'AI in action in the physical world' (also called embodied AI).

A robot is a physical machine that has to cope with the dynamics, the uncertainties and

the complexity of the physical world. Perception, reasoning, action, learning, as well as

interaction capabilities with other systems are usually integrated in the control

architecture of the robotic system. In addition to AI, other disciplines play a role in robot

design and operation, such as mechanical engineering and control theory. Examples of

robots include robotic manipulators, autonomous vehicles (e.g. cars, drones, flying

taxis), humanoid robots, robotic vacuum cleaners, etc.

In this report, robotics will not be separately addressed since embodied and disembodied AI systems

raise similar concerns when addressed from the perspective of GDPR: in both cases personal data

are collected, processed, and acted upon by intelligent system. Moreover, also software systems

may have access to sensor on the physical world (e.g., cameras) or govern physical devices (e.g.,

doors, lights, etc.). This fact does not exclude that the specific types of interaction that may exists, or

will exists, between humans and physical robots – e.g., in the medical or care domain– may require

specific considerations and regulatory approaches also in the data protection domain.

2.1.3. AI and algorithms

The term 'algorithm' is often used to refer to AI applications, e.g., through locutions such 'algorithmic

decision-making.' However, the concept of an algorithm is more general that the concept of AI, since

it includes any sequence of unambiguously defined instructions to execute a task, particularly but

not exclusively through mathematical calculations.

4

To be executed by a computer system,

algorithms have to be expressed through programming languages, thus becoming machine-

executable software programs. Algorithms can be very simple, specifying, for instance, how to

arrange lists of words in alphabetical order or how to find the greatest common divisor between

two numbers (such as the so-called Euclidean algorithm). They can also be very complex, such as

algorithms for file encryption, the compression of digital files, speech recognition, or financial

forecasting. Obviously, not all algorithms involve AI, but every AI system, like any computer system,

includes algorithms, some dealing with tasks that directly concern AI functions.

AI algorithms may involve different kinds of epistemic or practical reasoning (detecting patterns and

shapes, applying rules, making forecasts or plans), as well different ways of learning.

5

In the latter

case the system can enhance itself by developing new heuristics (tentative problem-solving

strategies), modifying its internal data, or even generating new algorithms. For instance, an AI

system for e-commerce may apply discounts to consumers meeting certain conditions (apply rules),

provide recommendations (e.g., learn and use correlations between users' features and their buying

habits), optimise stock management (e.g., develop and deploy the best trading strategies). Though

an AI system includes many algorithms, it can also be viewed as a single complex algorithm,

combining the algorithms performing its various functions, as well as the top algorithms that

orchestrate the system's functions by activating the relevant lower-level algorithms. For instance, a

bot that answers queries in natural language will include an orchestrated combination of algorithms

3

Russell and Norvig (2016).

4

Harel (2004).

5

According to Russel and Norvig (2016, 693), 'an agent is learning if it improves its performance on future tasks after

making observations about the world'.

STOA | Panel for the Future of Science and Technology

4

to detect sounds, capture syntactic structures, retrieve relevant knowledge, make inferences,

generate answers, etc.

In a system that is capable of learning, the most important component will not be the learned

algorithmic model, i.e., the algorithms that directly execute the tasks assigned to the system (e.g.,

making classifications, forecasts, or decisions) but rather the learning algorithms that modify the

algorithmic model so that it better performs its function. For instance, in a classifier system that

recognises images through a neural network, the crucial element is the learning algorithm (the

trainer) that modifies the internal structure of the algorithmic model (the trained neural network)

by changing it (by modifying its internal connections and weights) so that it correctly classifies the

objects in its domain (e.g., animals, sounds, faces, attitudes, etc.).

2.1.4. Artificial intelligence and big data

The term big data identifies vast data sets that it is difficult to manage using standard techniques,

because of their special features, the so-called thee V's: huge Volume, high Velocity and great

Variety. Other features associated to big data are low Veracity (high possibility that at least some

data are inaccurate), and high Value. Such data can be created by people, but most often they are

collected by machines, which capture information from the physical word (e.g., street cameras,

sensors collecting climate information, devices for medical testing, etc.), or from computer-

mediated activities (e.g., systems recording transactions or tracking online behaviour etc.).

From a social and legal perspective what is most relevant in very large data sets, and which makes

them 'big data' from a functional perspective, is the possibility of using such data sets for analytics,

namely, for discovering correlations and making predictions, often using AI techniques, as we shall

see when discussing machine learning.

6

In particular, the connection with analytics and AI makes

big data specifically relevant to data protection.

7

Big data can concern the non-human physical world (e.g. environmental, biological, industrial, and

astronomical data), as well as humans and their social interactions (e.g., data on social networks,

health, finance, economics or transportation). Obviously, only the second kind of data is relevant to

this report.

2.2. AI in the new millennium

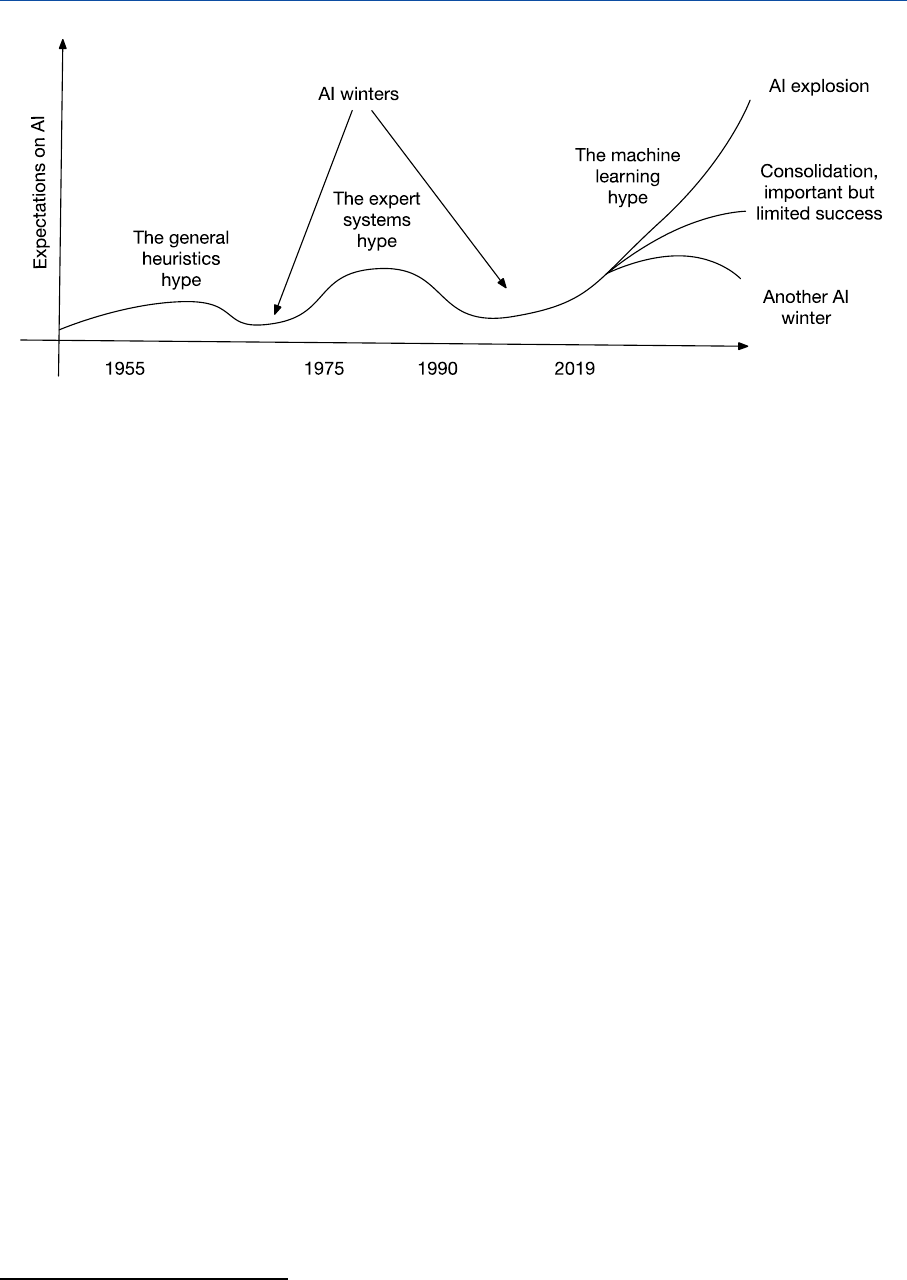

Over the last decades, AI has gone through a number of ups and downs, excessive expectations

being followed by disillusion (the so-called AI winters).

8

In recent years, however, there is no doubt

that AI has been hugely successful. On the one hand, a solid interdisciplinary background has been

constructed for AI research: the original core of computing, mathematics, and logic has been

extended with models and insights from a number of other disciplines, such as statistics, economics,

linguistics, neurosciences, psychology, philosophy, and law. On the other hand, an array of

successful applications has been built, which have already entered our daily lives: voice, image, and

face recognition; automated translation; document analysis; question-answering; games; high-

speed trading; industrial robotics; autonomous vehicles; etc.

Based on the current successes, it is most likely that current successful applications will not only be

consolidate, but will be accompanied by further growth, following probably the middle path

indicated in Figure 1.

6

See Mayer-Schoenberger and Cukier (2013, 15).

7

Hildebrandt (2014)

8

Nilsson (2010).

The impact of the General Data Protection Regulation (GDPR) on artificial intelligence

5

Figure 1 – Hypes and winters of AI

2.2.1. Artificial general and specific intelligence

AI research usually distinguishes two goals: 'artificial general intelligence,' also known as 'strong AI,'

and 'artificial specialised intelligence,' also known as 'weak AI.' Artificial general intelligence pursues

the ambitious objective of developing computer systems that exhibit most human cognitive skills,

at a human or even a superhuman level.

9

Artificial specialised intelligence pursues a more modest

objective, namely, the construction of systems that, at a satisfactory level, are able to engage in

specific tasks requiring intelligence.

The future emergence of a general artificial intelligence is already raising serious concerns. A general

artificial intelligence system may improve itself at an exponential speed and quickly become

superhuman; through its superior intelligence it may then acquire capacities beyond human

control.

10

In relation to self-improving artificial intelligence, humanity may find itself in a condition

of inferiority similar to that of animals in relation to humans. Some leading scientists and

technologists (such as Steven Hawking, Elon Musk, and Bill Gates) have argued for the need to

anticipate this existential risk,

11

adopting measures meant to prevent the creation of general

artificial intelligence or to direct it towards human-friendly outcomes (e.g., by ensuring that it

endorses human values and, more generally, that it adopts a benevolent attitude). Conversely, other

scientists have looked favourably on the birth of an intelligence meant to overcome human

capacities. In an AI system's ability to improve itself could lie the 'singularity' that will accelerate the

development of science and technology, so as not only to solve current human problems (poverty,

underdevelopment, etc.), but also to overcome the biological limits of human existence (illness,

aging, etc.) and spread intelligence in the cosmos.

12

9

Bostrom (2014)

10

Bostrom (2014). This possibility was anticipated by Turing ([1951] 1966).

11

Parkin (2015).

12

See Kurzweil (2005) and Tegmark (2017).

STOA | Panel for the Future of Science and Technology

6

Figure 2 – General AI: The singularity

The risks related to the emergence of an 'artificial general intelligence' should not be

underestimated: this is, on the contrary, a very serious problem that will pose challenges in the

future. In fact, as much as scientists may disagree on whether and when 'artificial general

intelligence,' will come into existence, most of them believe that this objective will be achieved

within the end of this century.

13

In any case, it is too early to approach 'artificial general intelligence'

at a policy level, since it lies decades ahead, and a broader experience with advanced AI is needed

before we can understand both the extent and proximity of this risk, and the best ways to address

it.

Conversely, 'artificial specialised intelligence' is already with us, and is quickly transforming

economic, political, and social arrangements, as well as interactions between individuals and even

their private lives. The increase in economic efficiency already is reality (see Figure 2), but AI pr ovides

further opportunities: economic, social, and cultural development; energy sustainability; better

health care; and the spread of knowledge. In the very recent White Paper by the European

Commission

14

it is indeed affirmed that AI.

will change our lives by improving healthcare (e.g. making diagnosis more precise,

enabling better prevention of diseases), increasing the efficiency of farming,

contributing to climate change mitigation and adaptation, improving the efficiency of

production systems through predictive maintenance, increasing the security of

Europeans, and in many other ways that we can only begin to imagine.

13

A poll among leading AI scientists can be found in Bostrom (2014).

14

White Paper 'On artificial intelligence - A European approach to excellence and trust', Brussels, 19.2.2020 COM(2020) 65

final.

The impact of the General Data Protection Regulation (GDPR) on artificial intelligence

7

Figure 3 – Efficiency gains from AI

The opportunities offered by AI are accompanied by serious risks, including unemployment,

inequality, discrimination, social exclusion, surveillance, and manipulation. It has indeed been

claimed that AI should contribute to the realisation of individual and social interests, and that it

should not be 'underused, thus creating opportunity costs, nor overused and misused, thus creating

risks.'

15

In the just mentioned Commission's White paper, it is indeed observed that the deployment

of AI

entails a number of potential risks, such as opaque decision-making, gender-based or

other kinds of discrimination, intrusion in our private lives or being used for criminal

purposes.

Because the need has been recognised to counter these risks, while preserving scientific research

and the beneficial uses of AI, a number of initiatives have been undertaken in order to design an

ethical and legal framework for 'human-centred AI.' Already in 2016, the White House Office of

Science and Technology Policy (OSTP), the European Parliament's Committee on Legal Affairs, and,

in the UK, the House of Commons' Science and Technology Committee released their initial reports

on how to prepare for the future of AI

16

. Multiple expert committees have subsequently pro duced

reports and policy documents. Among them, the High-Level Expert Group on artificial intelligence

appointed by the European Commission, the expert group on AI in Society of the Organisation for

Economic Co-operation and Development (OECD), and the select committee on artificial

intelligence of the United Kingdom (UK) House of Lords.

17

The Commission's White Paper affirms that two parallel policy objectives should be pursued and

synergistically integrated. On the one hand research and deployment of AI should be promoted, so

15

Floridi et al (2018, 690).

16

See Cath et al (2017).

17

For a recent review of documents on AI ethics and policy, see Jobin (2019).

STOA | Panel for the Future of Science and Technology

8

that the EU is competitive with the US and China. The policy framework setting out measures to

align efforts at European, national and regional level should aim to mobilise resources

to achieve an 'ecosystem of excellence' along the entire value chain, starting in research

and innovation, and to create the right incentives to accelerate the adoption of

solutions based on AI, including by small and medium-sized enterprises (SMEs)

On the other hand, the deployment of AI technologies should be consistent with the EU

fundamental rights and social values. This requires measures to create an 'ecosystem of trust,' which

should provide citizens with 'the confidence to take up AI applications' and 'companies and public

organisations with the legal certainty to innovate using AI'. This ecosystem

must ensure compliance with EU rules, including the rules protecting fundamental

rights and consumers' rights, in particular for AI systems operated in the EU that pose a

high risk.

It is important to stress that the two objectives of excellence in research, innovation and

implementation, and of consistency with individual rights and social values are compatible, but

distinct. On the one hand the most advanced AI applications could be deployed to the detriment of

citizens' rights and social values; on the other hand the effective protection of citizens' from the risks

resulting from abuses AI does not provide in itself the incentives that are needed to stimulate

research and innovation and promote beneficial uses. This report will argue that GDPR can

contribute to address abuses of AI, and that it can be implemented in ways that do not hinder its

beneficial uses. It will not address the industrial and other policies that are needed to ensure the EU

competitiveness in the AI domain.

2.2.2. AI between logical models and machine learning

The huge success that AI has had in recent years is linked to a change in the leading paradigm in AI

research and development. Until a few decades ago, it was generally assumed that in order to

develop an intelligent system, humans had to provide a formal representation of the relevant

knowledge (usually expressed through a combination of rules and concepts), coupled with

algorithms making inferences out of such knowledge. Different logical formalisms (rule languages,

classical logic, modal and descriptive logics, formal argumentation, etc.) and computable models

for inferential processes (deductive, defeasible, inductive, probabilistic, case-based, etc.) have been

developed and applied.

18

The structure for expert systems is represented in Figure 4. Note that humans appear both as users

of the system and as creators of the system's knowledge base (experts, possibly helped by

knowledge engineers).

18

Van Harmelen et al (2008).

The impact of the General Data Protection Regulation (GDPR) on artificial intelligence

9

Figure 4 – Basic structure of expert systems

The theoretical results in knowledge representation and reasoning were not matched by disrupting,

game-changing applications. Expert systems – i.e., computer systems including vast domain-

specific knowledge bases, e.g., in medicine, law, or engineering, coupled with inferential engines –

gave rise to high expectations about their ability to reason and answer users' queries. Unfortunately,

such systems were often unsuccessful or only limitedly successful: they could only provide

incomplete answers, were unable to address the peculiarities of individual cases, and required

persistent and costly efforts to broaden and update their knowledge bases. In particular, expert-

system developers had to face the so-called knowledge representation bottleneck: in order to build a

successful application, the required information – including tacit and common-sense knowledge –

had to be represented in advance using formalised languages. This proved to be very difficult and

in many cases impractical or impossible.

In general, only in some restricted domains the logical models have led to successful application. In

the legal domain, for example, logical models of great theoretical interest have been developed –

dealing, for example, with arguments,

19

norms, and precedents

20

– and some expert systems have

been successful in legal and administrative practice, in particular in dealing with tax and social

security regulations. However, these studies and applications have not fundamentally transformed

the legal system and the application of the law.

AI has made an impressive leap forward since it began to focus on the application of machine

learning to mass amounts of data. This has led to a number of successful applications in many

sectors – ranging from automated translation to industrial optimisation, marketing, robotic visions,

movement control, etc. – and some of these applications already have substantial economic and

social impacts. In machine learning approaches, machines are provided with learning methods,

rather than, or in addition to, formalised knowledge. Using such methods, they can automatically

learn how to effectively accomplish their tasks by extracting/inferring relevant information from

their input data. As noted, and as Alan Turing already theorised in the 1950s, a machine that is able

to learn will achieve its goals in ways that are not anticipated by its creators and trainers, and in some

cases without them knowing the details of its inner workings.

21

Even though the great success of machine learning has overshadowed the techniques for explicit

and formalised knowledge representation, the latter remain highly significant. In fact, in many

domains the explicit logical modelling of knowledge and reasoning can be complementary to

machine learning. Logical models can explain the functioning of machine learning systems, check

and govern their behaviour according to normative standards (including ethical principles and legal

norms), validate their results, and develop the logical implications of such results according to

conceptual knowledge and scientific theories. In the AI community the need to combine logical

modelling and machine leaning is generally recognised, though different views exist on how to

19

Prakken, and Sartor (2015).

20

Ashley (2017).

21

Turing ([1951] 1996)

STOA | Panel for the Future of Science and Technology

10

achieve this goal, and on the aspects to be covered by the two approaches (for a discussion on the

limits of machine learning, see recently Marcus and Davis 2019).

2.2.3. Approaches to learning

Three main approaches to machine learning are usually distinguished: supervised learning,

reinforcement learning and unsupervised learning.

Figure 5 – Kinds of learning

Supervised learning is currently the most popular approach. In this case the machine learns through

'supervision' or 'teaching': it is given in advance a training set, i.e., a large set of (probably) correct

answers to the system's task. More exactly the system is provided with a set of pairs, each linking the

description of a case to the correct response for that case. Here are some examples: in systems

designed to recognise objects (e.g. animals) in pictures, each picture in the training set is tagged

with the name of the kind of object it contains (e.g., cat, dog, rabbit, etc.); in systems for automated

translation, each (fragment of) a document in the source language is linked to its translation in the

target language; in systems for personnel selection, the description of each past applicants (age,

experience, studies, etc.) is linked to whether the application was successful (or to an indicator of

the work performance for appointed candidates); in clinical decision support systems, each patient's

symptoms and diagnostic tests is linked to the patient's pathologies; in recommendation systems,

each consumer's features and behaviour is linked to the purchased objects; in systems for assessing

loan applications, each record of a previous application is linked to whether the application was

accepted (or, for successful applications, to the compliant or non-compliant behaviour of the

borrower). As these examples show, the training of a system does not always require a human

teacher tasked with providing correct answers to the system. In many case, the training set can be

side-product of human activities (purchasing, hiring, lending, tagging, etc.), as is obtained by

recording the human choices pertaining to such activities. In some cases the training set can even

be gathered 'from the wild' consisting in data which is available on the open web. For instance,

manually tagged images or faces, available on social networks, can be scraped and used for training

automated classifiers.

The impact of the General Data Protection Regulation (GDPR) on artificial intelligence

11

Figure 6 – Supervised learning

The learning algorithm of the system (its trainer), uses the training set to build an algorithmic model:

a neural network, a decision tree, a set of rules, etc. The algorithmic model is meant to capture the

relevant knowledge originally embedded in the training set, namely the correlations between cases

and responses. This model is then used, by a predicting algorithm, to provide hopefully correct

responses to new cases, by mimicking the correlations in the training set. If the examples in the

training set that come closest to a new case (with regard to relevant features) are linked to a cer tain

answer, the same answer will be proposed for the new case. For instance if the pictures that are most

similar to a new input were tagged as cats, also the new input will also be tagged in the same way;

if past applicants whose characteristic best match those of the new applicant were linked to

rejection, the system will propose to reject also the new applicant; if the past workers who come

closest to the new applicant performed well (or poorly), the systems will predict that also the

applicant will perform likewise.

The answers by learning systems are usually called 'predictions'. However, often the context of the

system's use often determines whether its proposals are be interpreted as forecasts, or rather as a

suggestion to the system's user. For instance, a system's 'prediction' that a person's application for

bail or parole will be accepted can be viewed by the defendant (and his or her lawyer) as a prediction

of what the judge will do, and by the judge as a suggestion guiding her decision (assuming that she

prefers not to depart from previous practice). The same applies to a system's prediction that a loan

or a social entitlement will be granted.

There is also an important distinction to be drawn concerning whether the 'correct' answers in a

training set are provided by the past choices by human 'experts' or rather by the factual

consequences of such choices. Compare, for instance, a system whose training set consists of past

loan applications linked to the corresponding lending decisions, and a system whose training set

consists of successful applications linked to the outcome of the loan (repayment or non-payment).

Similarly, compare a system whose training set consists of parole applications linked to judges'

decisions on such application with a system whose training set consists of judicial decisions on

parole applications linked to the subsequent behaviour of the applicant. In the first case, the system

will learn to predict the decisions that human decision-makers (bank managers, or judges) would

have made under the same circumstances. In the second case, the system will predict how a certain

choice would affect the goals being pursued (preventing non-payments, preventing recidivism). In

the first case the system would reproduce the virtues – accuracy, impartiality, farness – but also the

STOA | Panel for the Future of Science and Technology

12

vices – carelessness, partiality, unfairness – of the humans it is imitating. In the second case it would

more objectively approximate the intended outcomes.

As a simple example of supervised learning, Figure 7, shows a (very small) training set concerning

bail decisions along with the decision tree that can be learned on the basis of that training set. The

decision tree captures the information in the training set through a combination of tests, to be

performed sequentially. The first test concerns whether the defendant was involved in a drug

related offence. If the answer is positive, we have reached the bottom of the tree with the conclusion

that bail is denied. If the answer is negative, we move to the second test, on whether the defendant

used a weapon, and so on. Notice that the decision tree does not include information concerning

the kind of injury, since all outcomes can be explained without reference to that information. This

shows how the system's model does not merely replicate the training set; it involves generalisation:

it assumes that certain combination of predictors are sufficient to determine the outcomes, other

predictors being irrelevant.

Figure 7 – Training set and decision tree for bail decisions

In this example we can distinguish the elements in Figure 6. The table in Figure 7 is the training set.

The software that constructs the decision tree, is the learning algorithm. The decision tree itself, as

shown in Figure 7 is the algorithmic model, which codes the logic of the human decisions in the

training set. The software that processes new cases, using the decision tree, and makes predictions

based on their features of such cases, is the predicting algorithm. In this example, as noted above,

the decision tree reflects the attitudes of the decision-makers whose decisions are in the training

set: it reproduces their virtues and biases.

For instance, according to the decision tree, the fact that the accuse concerns a drug-related offence

is sufficient for bail to be denied. We may wonder whether this is a fair criterion for assessing bail

requests. Note also that the decision tree (the algorithmic model) also provides answers for cases

that do not fit exactly any example in the training set. For instance, no example in the training set

concerns a drug-related offence with no weapon and no previous record. However, the decision

tree provides an answer also for this case: there should be no bail, as this is what happens in all drug-

related cases in the training set.

As another simplified example of supervised machine learning consider the training set and the

rules in figure 7. In this case too, the learning algorithm, as applied to this very small set of past

decisions, delivers questionable generalisation, such as the prediction that young age would always

lead to a rejection of the loan applications and that middle age would always lead to acceptance.

Usually, in order to give reliable prediction, a training set must include a vast number of examples,

each described through a large set of predictors.

Reinforcement learning is similar to supervised learning, as both involve training by way of exa mples.

However, in the case of reinforcement learning the systems learns from the outcomes of it s own

action, namely, through the rewards or penalties (e.g., points gained or lost) that are linked to the

outcomes of such actions. For instance, in case of a system learning how to play a game, rewards

The impact of the General Data Protection Regulation (GDPR) on artificial intelligence

13

may be linked to victories and penalties to defeats; in a system learning to make investments,

rewards may be linked to financial gains and penalties to losses; in a system learning to target ads

effectively, rewards may be linked to users' clicks, etc. In all these cases, the system observes the

outcomes of its actions, and it self-administers the corresponding rewards or penalties. Being

geared towards maximising its score (its utility), the system will learn to achieve outcomes leading

to rewards (victories, gains, clicks), and to prevent outcomes leading to penalties. With regard to

reinforcement learning too, we can distinguish the learner (the algorithm that learns how to act

successfully, based on the outcomes of previous actions by the system) and the learned model (the

output of the learner, which determines the system's new actions).

In unsupervised learning, finally, AI systems learn without receiving external instructions, either in

advance or as feedback, about what is right or wrong. The techniques for unsupervised learning are

used in particular, for clustering, i.e., for grouping the set of items that present relevant similarities

or connections (e.g., documents that pertain to the same topic, people sharing relevant

characteristics, or terms playing the same conceptual roles in texts). For instance, in a set of cases

concerning bail or parole, we may observe that injuries are usually connected with drugs (not with

weapons as expected), or that people having prior record are those who are related to weapon.

These clusters might turn out to be informative to ground bail or parole policies.

2.2.4. Neural networks and deep learning

Many techniques have been deployed in machine learning: decision trees, statistical regression,

support vector machine, evolutionary algorithms, methods for reinforcement learning, etc.

Recently, deep learning based on many-layered neural networks has been very successfully

deployed especially, but not exclusively, where patterns have to be recognised and linked to

classifications and decisions (e.g., in detecting objects in images, recognising sounds and their

sources, making medical diagnosis, translating texts, choosing strategies in games, etc.). Neural

networks are composed of a set of nodes, called neurons, arranged in multiple layers and connected

by links. They are so-called, since they reproduce some aspects of the human nervous system, which

indeed consists of interconnected specialised cells, the biological neurons, which receive and

transmit information. Neural networks were indeed developed under the assumption that artificial

intelligence could be achieved by reproducing the human brain, rather than by modelling human

reasoning, i.e., that artificial reasoning would naturally emerge out of an artificial brain (though we