
Reviewer Surveys: Feedback on CSR’s Bias Awareness and Mitigation Training

Office of the Director March 8, 2022

Introduction

The mission of the Center for Scientific Review (CSR) is to ensure that National Institutes of Health

(NIH) grant applications receive fair, independent, expert, and timely scientific peer reviews that are free

from inappropriate influences. In 2021 CSR created an online bias awareness and mitigation training

module. Rather than examining implicit bias generally, this training focused on potential biases

specific to NIH peer review and aimed to give reviewers tools to reduce bias. This report presents results

from a survey that was included in the training in order to gather reviewer feedback regarding the value

of the training and to inform future iterations. The survey measured different aspects of the training to

help identify knowledge gained, useful content, and future actions to mitigate bias. The survey also

assessed reviewers’ perceptions of and behaviors regarding peer review bias in the year prior to the

training. Write-in items gathered reviewer comments about bias in NIH review and recommendations

for improving the training. See Appendix A for Methods and a synopsis of the training.

Results

In the 2022/01 council round, 10,382 reviewers were asked to take the bias training, and

6,312 completed the training, for a training completion rate of 61 percent. Among those

reviewers who completed the training, 3,166 completed the optional survey at the end of

the training, for a survey response rate of 50 percent.
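
The two rates above follow directly from the reported counts. The short Python sketch below reproduces the arithmetic; the variable names and the `percent` helper are illustrative assumptions, not part of the report's methods.

```python
# Counts reported in the Results section.
invited = 10_382     # reviewers asked to take the bias training
completed = 6_312    # reviewers who completed the training
surveyed = 3_166     # reviewers who completed the optional survey


def percent(part: int, whole: int) -> int:
    """Return part/whole as a percentage rounded to a whole number."""
    return round(100 * part / whole)


# Completion rate is relative to everyone asked to take the training.
completion_rate = percent(completed, invited)
# Response rate is relative to those who completed the training.
response_rate = percent(surveyed, completed)

print(f"Training completion rate: {completion_rate}%")
print(f"Survey response rate: {response_rate}%")
```

Note that the survey response rate uses training completers as its denominator, not all invited reviewers.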

Key findings

▪ 87% of reviewers stated the NIH has a “moderate” or greater problem with bias in the peer

review process.

▪ 91% of reviewers thought that the training substantially improved their ability to identify bias in

peer review and 93% said it made them substantially more comfortable intervening against bias.

▪ In the previous year, fewer than 20% of reviewers said they intervened always or most of the time

when they perceived bias in peer review; following training, up to 82% said they would

“probably” or “definitely” take specific actions (e.g., contact the SRO, talk with the reviewer) to

combat bias.

▪ 83% of reviewers stated the training helped them become aware of their personal review biases

to a moderate or greater extent and 88% said it would help them present an unbiased review to a

moderate or greater extent.

▪ Over 90% of reviewers were extremely satisfied with the training and found the specific

interactive training activities very useful.


▪ Results of the qualitative analysis of open-ended comments (n = 1,443) were consistent with

many of the quantitative findings. Additionally, the qualitative analysis found that reviewers

want more intervention techniques to help them mitigate different types of bias (e.g., scientific,

socio-demographic characteristics, implicit) and bias in different situational contexts (e.g., power

and group dynamics, critiques). Reviewers also would like for Chairs and SROs to play a more

active role in bias intervention.

Acknowledgment: We would like to thank Dr. Hope Cummings for carrying out this project.


Item analyses

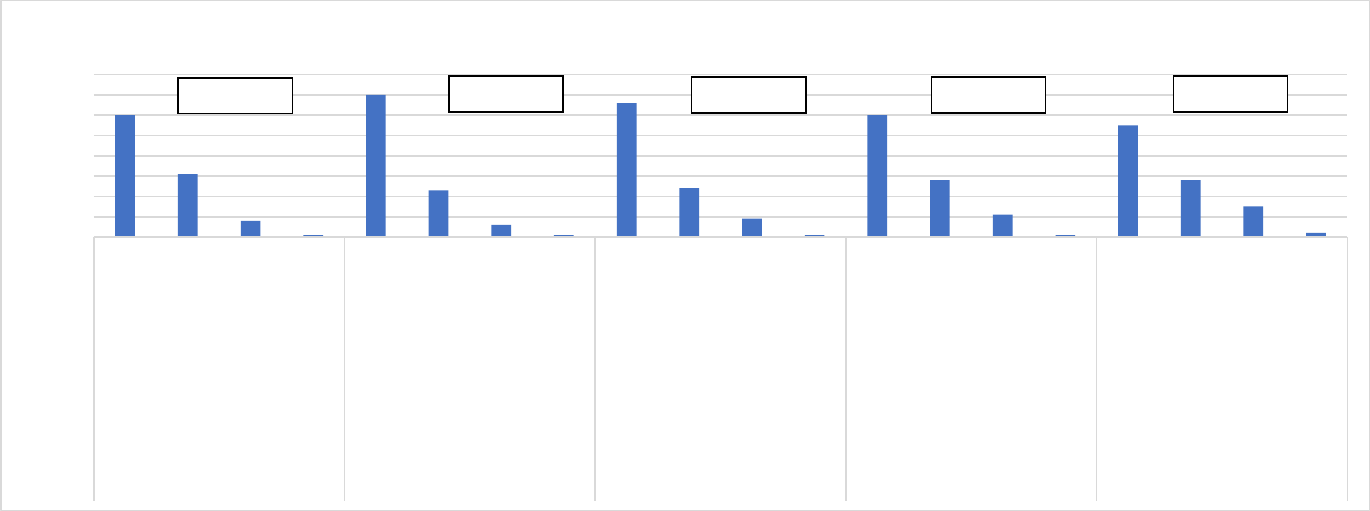

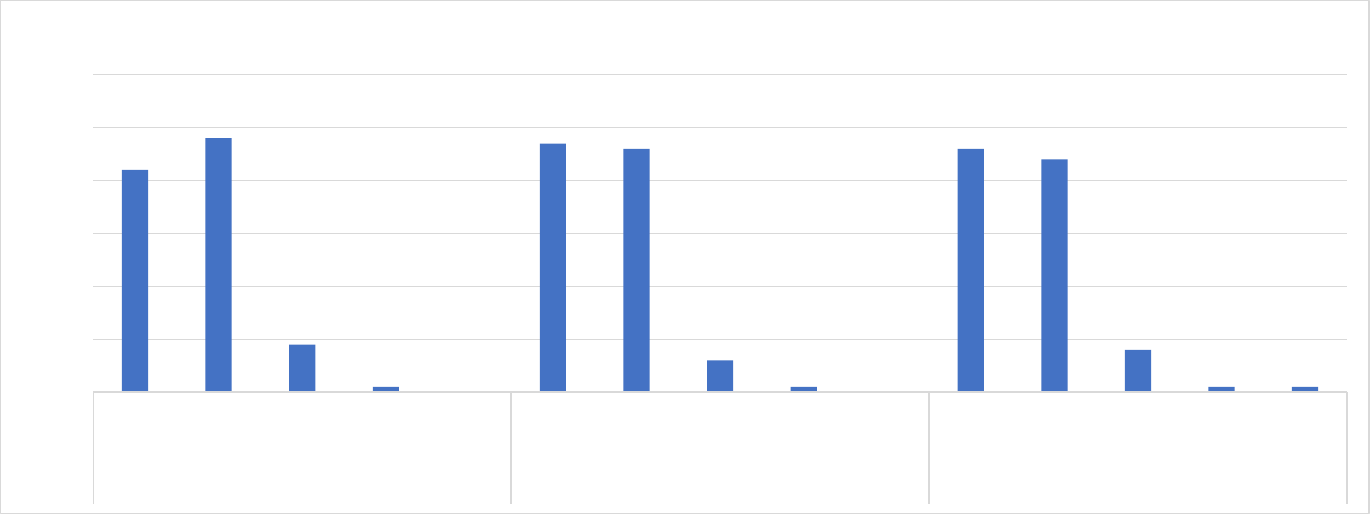

1. To what extent did the training help you in the following areas?

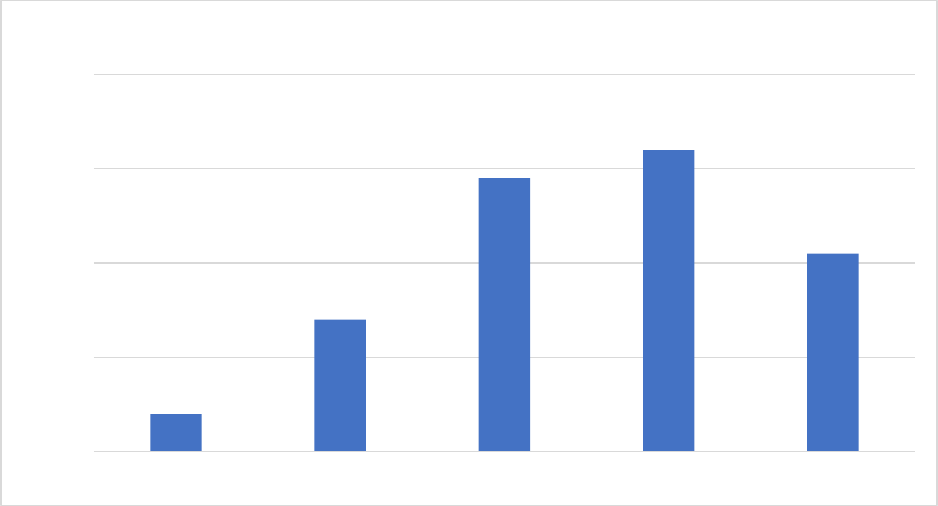

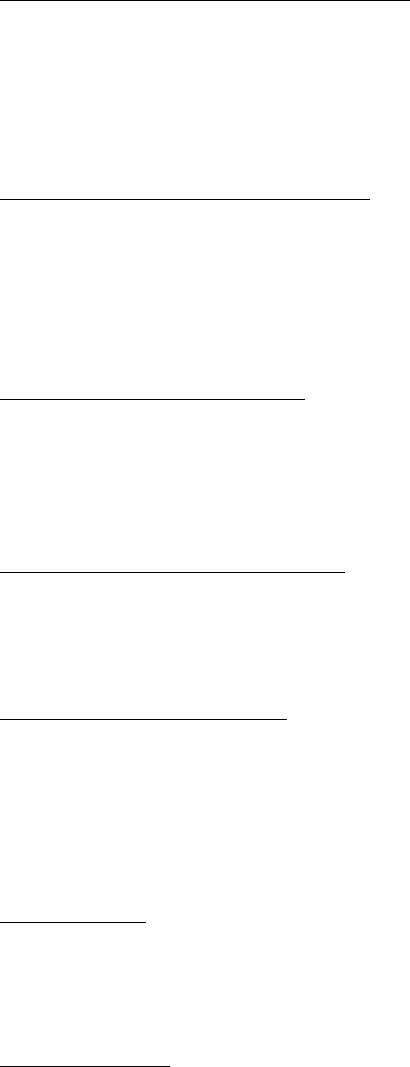

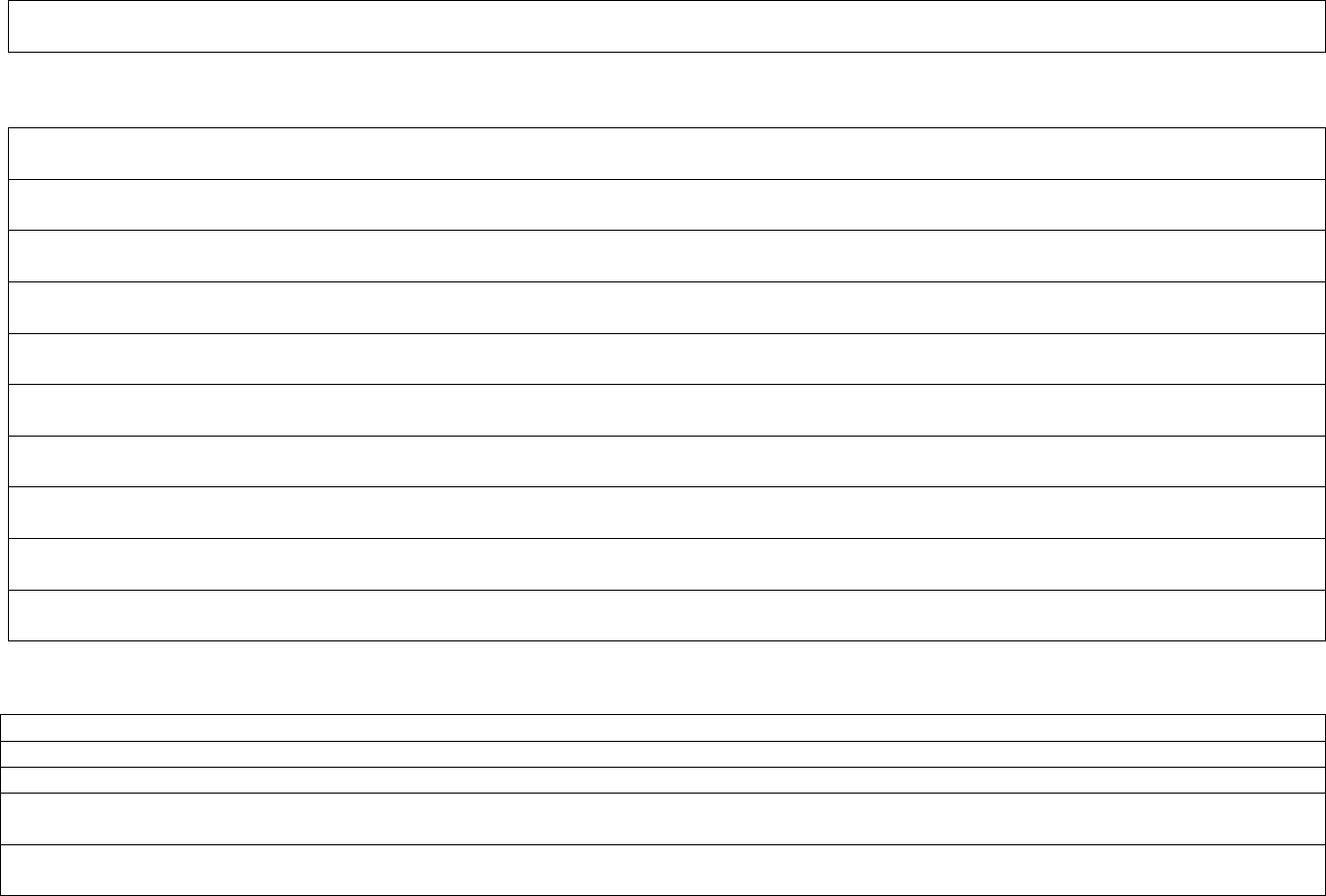

▪ Most reviewers (~ 60%) thought the training helped them to a large extent become more aware of bias in peer review, understand the

importance of the review criteria for preventing bias, and present an unbiased review in the future; ~ 30% indicated that the training

helped them in these areas to a moderate extent.

▪ 70% of reviewers stated that the training helped them to a large extent feel more comfortable intervening on review bias; less than

10% indicated that the training had little or no value in helping them intervene on bias.

▪ ~ 80% of reviewers thought the training helped them to a large or moderate extent become aware of their personal review biases.

Figure 1. Helpfulness of Training for Reviewers’ Knowledge and Awareness of Bias in Peer Review

Note. Items use a 6-point scale where 6 = “very large extent” and 1 = “not at all”. The mean (M) is the mean of those numeric scores. For ease of presentation,

“very large” and “large” were grouped together and defined as “large”, and “very small” and “small” were grouped together and defined as “small”.

[Figure 1 chart. Title: Knowledge and Awareness of Bias. Y-axis: Percent of Reviewers (0 to 80). Panels: Identify Review Bias; Comfortable Intervening on Review Bias; Understand Importance of Review Criteria for Bias Prevention; Present an Unbiased Review; Aware of Personal Review Biases. Response categories in each panel: Large extent, Moderate extent, Small extent, Not at all. Item means as extracted: M = 4.7, 4.9, 4.8, 4.6, 4.6.]


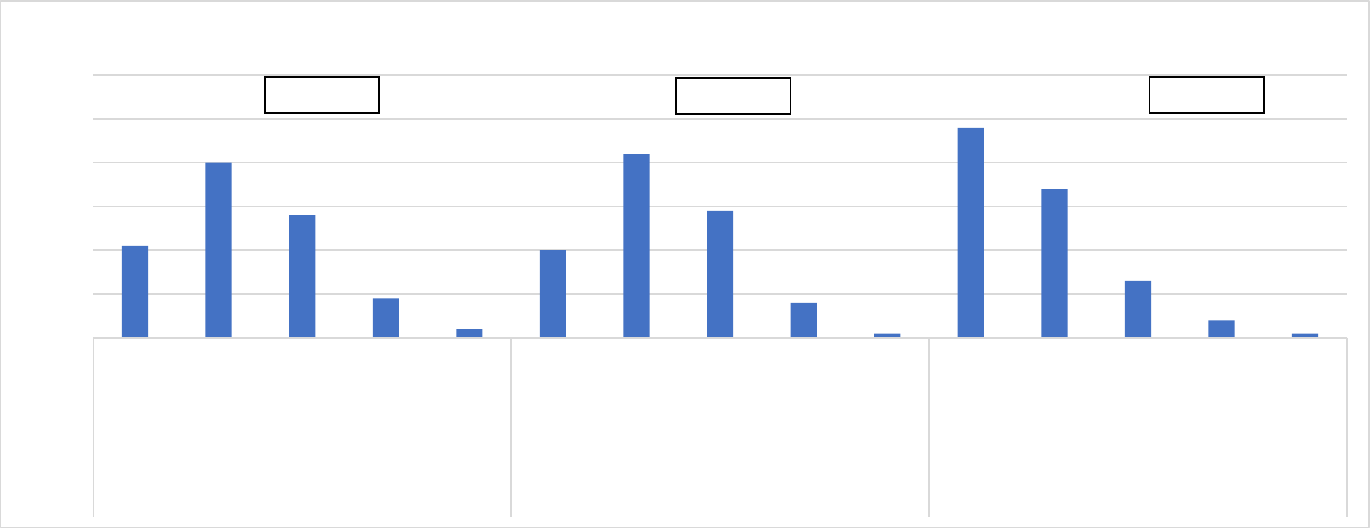

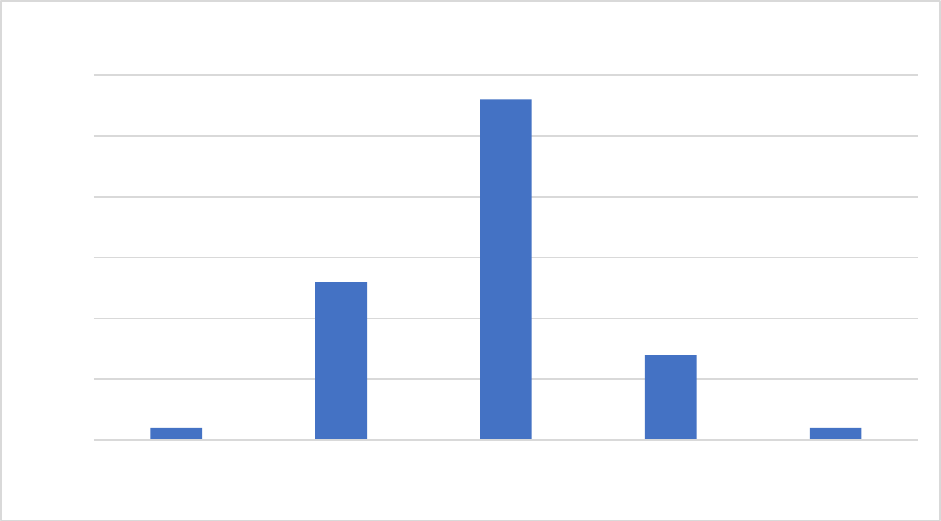

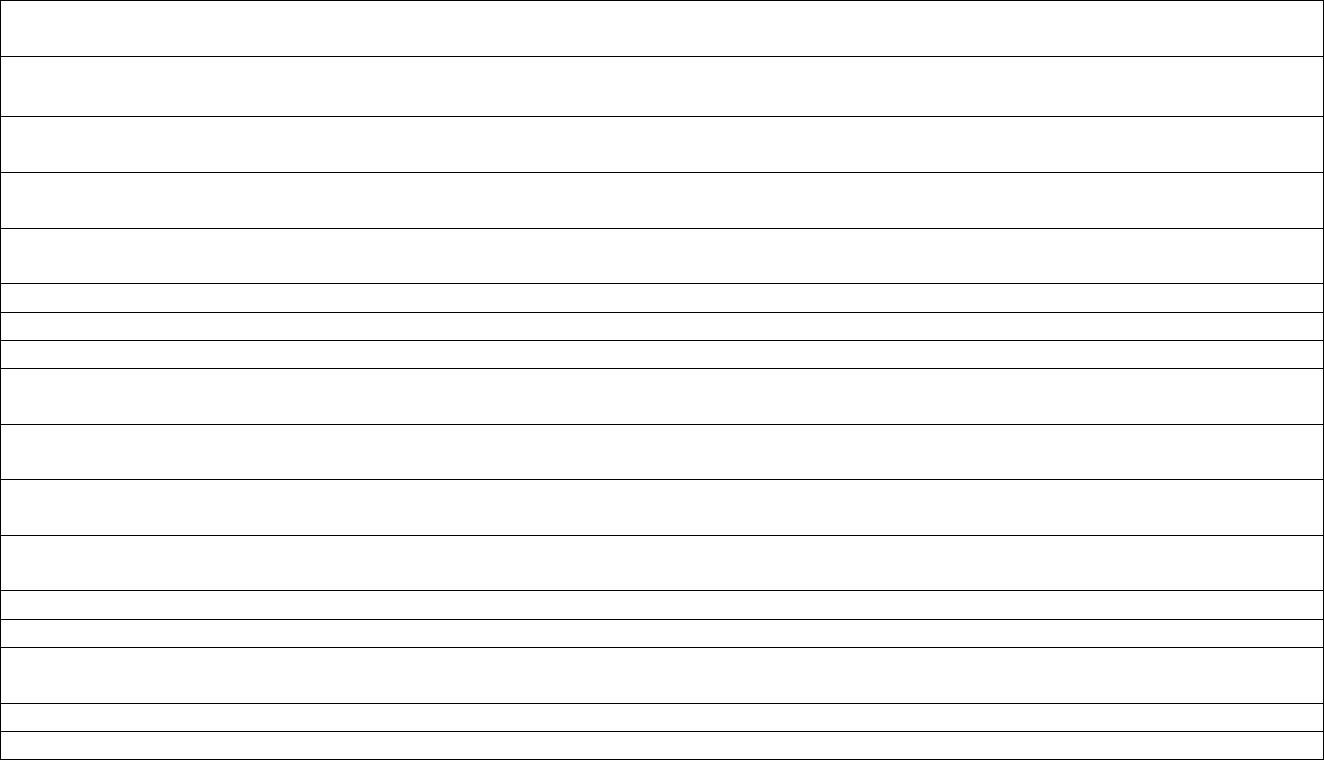

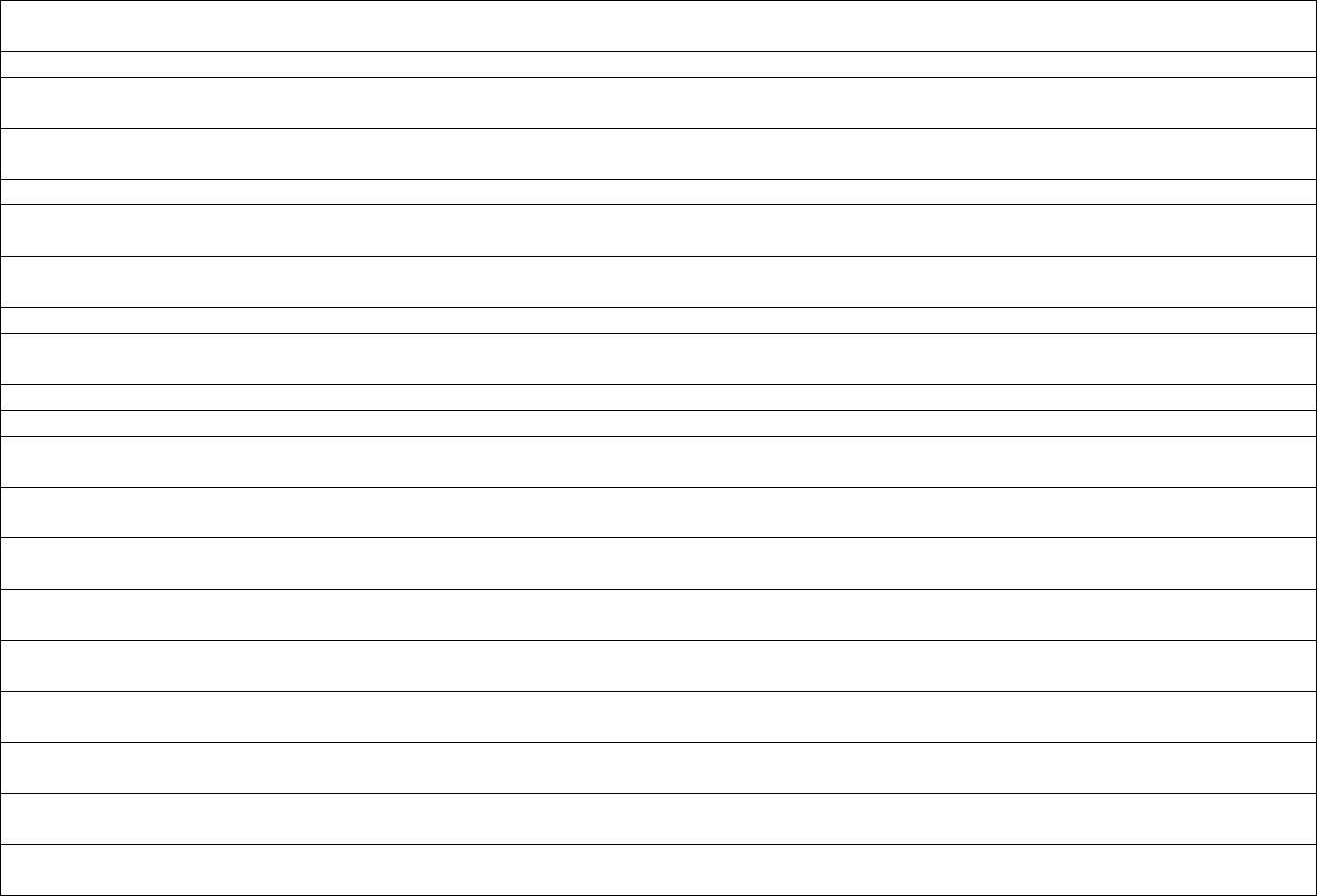

2. In the future, how likely are you to do the following activities?

▪ About half of reviewers (48%) said they would definitely ask for clarification or justification from a reviewer if they thought the

reviewer made a biased statement; about half (47%) said they would probably or maybe do so.

▪ ~ 20% of reviewers stated they would definitely contact the SRO or Chair if they perceived bias in a critique or at the meeting; ~70%

said they probably or maybe would do so. Only 10% indicated that they would probably not or definitely not contact the SRO or Chair

if they perceived bias.

Figure 2. Future Actions to Reduce Bias in Peer Review

Note. Items use a 5-point scale where 5 = “definitely” and 1 = “definitely not”. The mean (M) is the mean of those numeric scores.

[Figure 2 chart. Title: Actions to Reduce Bias in Review. Y-axis: Percent of Reviewers (0 to 60). Panels: Contact SRO: Bias in Critique; Contact SRO or Chair: Bias at Meeting; Openly Discuss with Reviewer: Bias at Meeting. Response categories in each panel: Definitely, Probably, Maybe, Probably not, Definitely not. Item means as extracted: M = 3.68, 3.72, 4.23.]


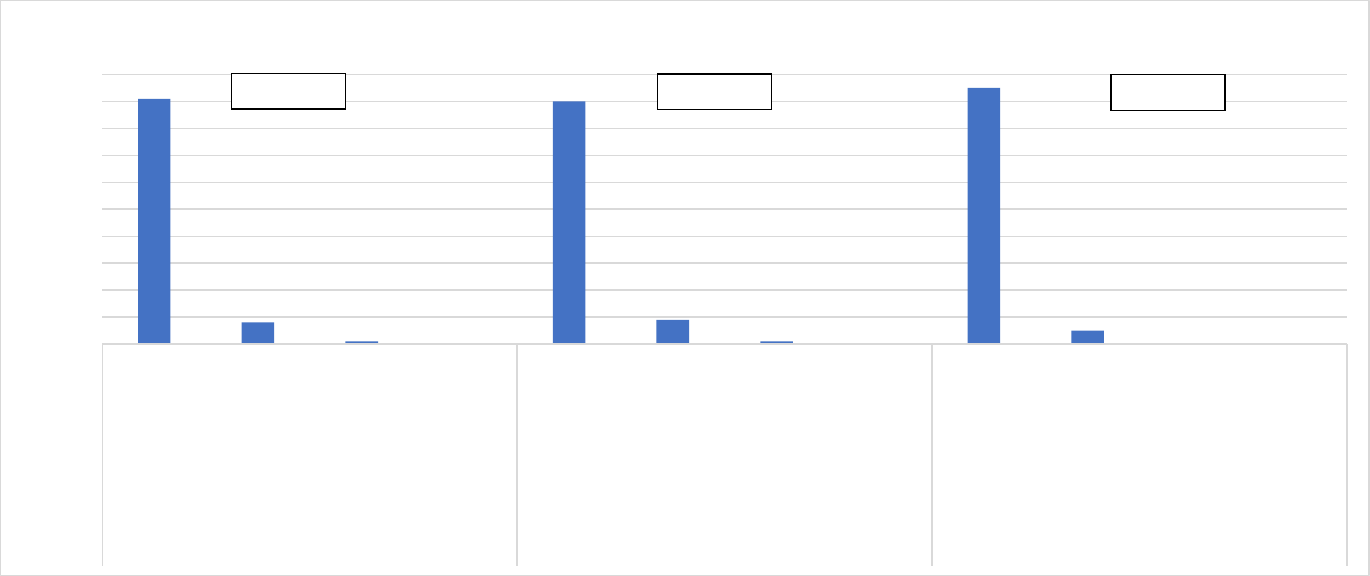

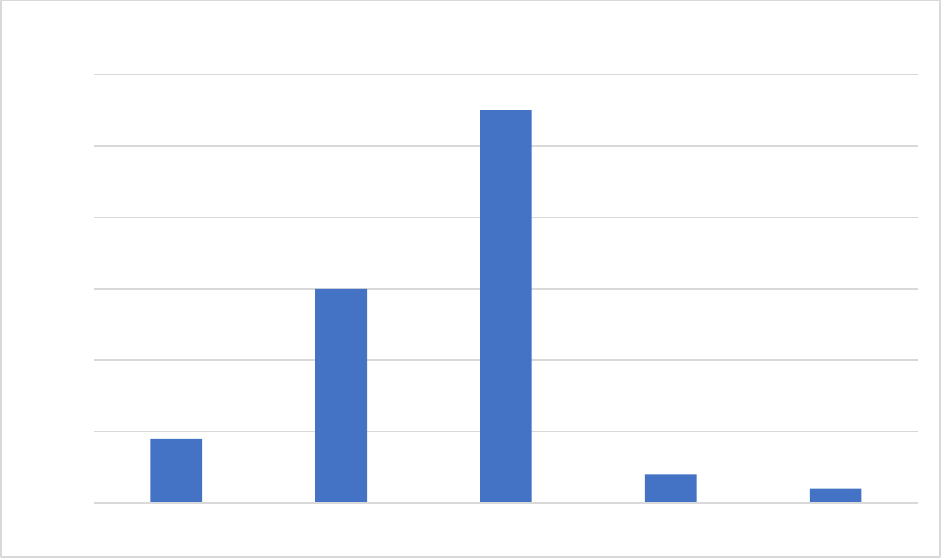

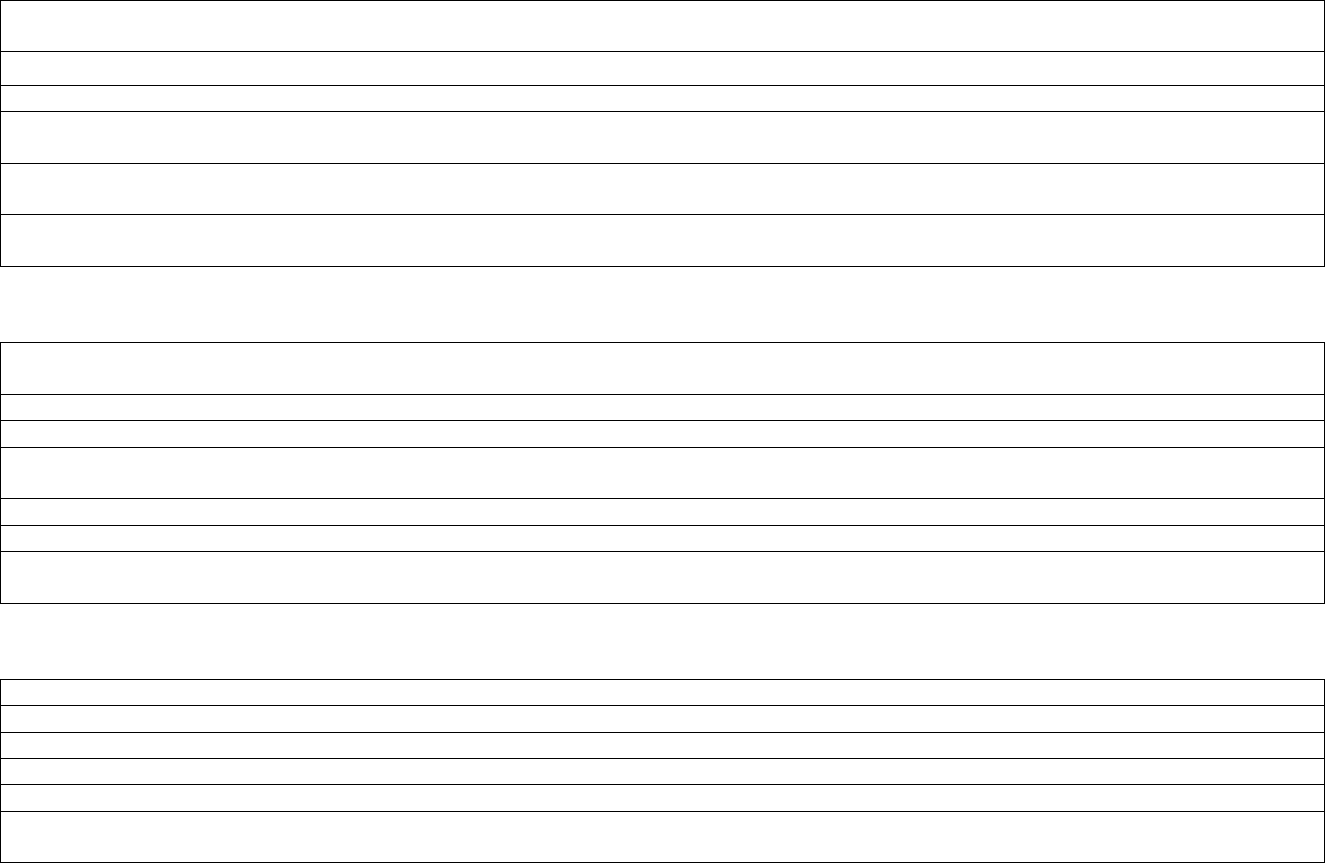

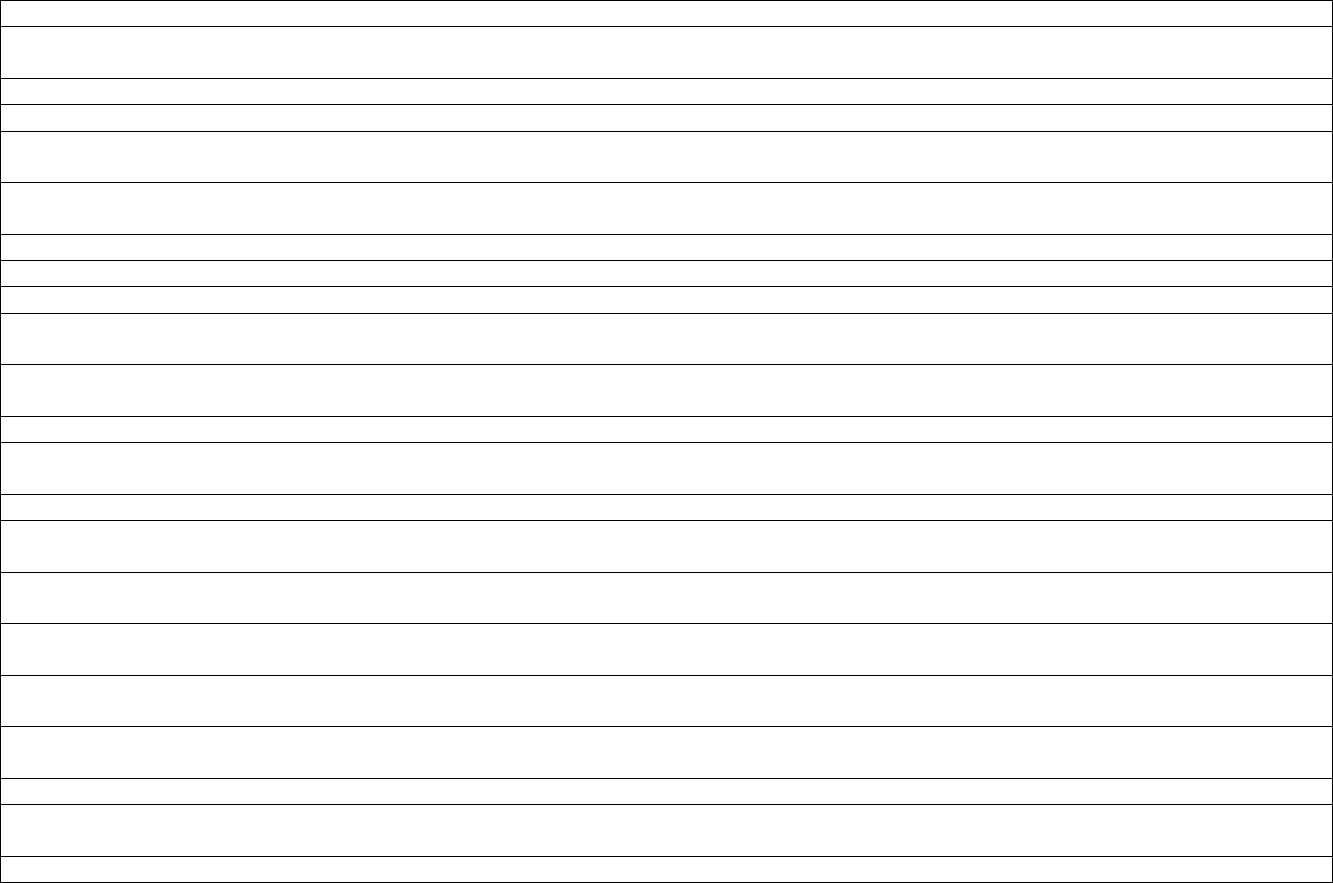

3. How useful did you find the following training activities?

▪ Over 90% of reviewers thought that all the specific training activities were very useful.

Figure 3. Usefulness of Specific Training Activities

Note. Items use a 5-point scale where 5 = “extremely useful” and 1 = “not at all useful”. The mean (M) is the mean of those numeric scores. For ease of presentation,

“extremely useful” and “very useful” were grouped together and defined as “very useful”.

[Figure 3 chart. Title: Specific Training Activities. Y-axis: Percent of Reviewers (0 to 100). Panels: Peer Review Vernacular; Read Phase; Study Section Scenario. Response categories in each panel: Very useful, Moderately useful, Slightly useful, Not at all useful. Item means as extracted: M = 4.5, 4.5, 4.6.]


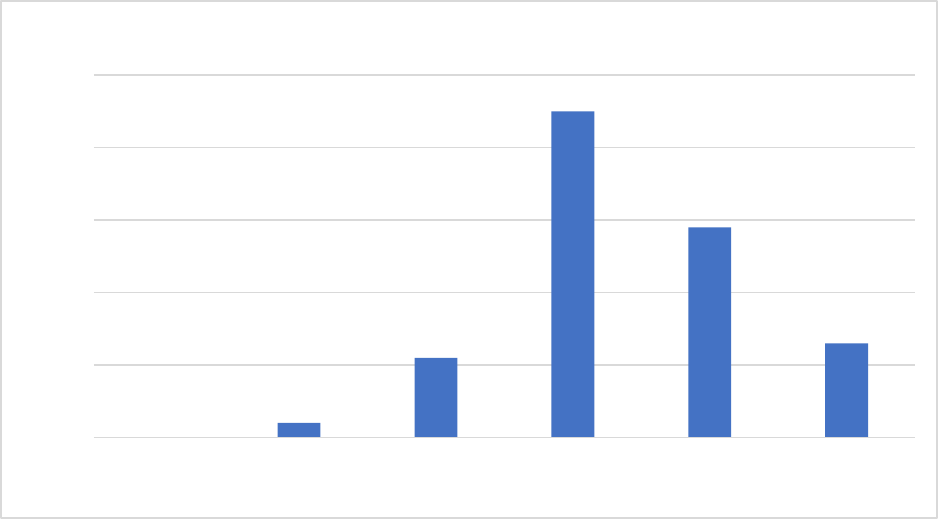

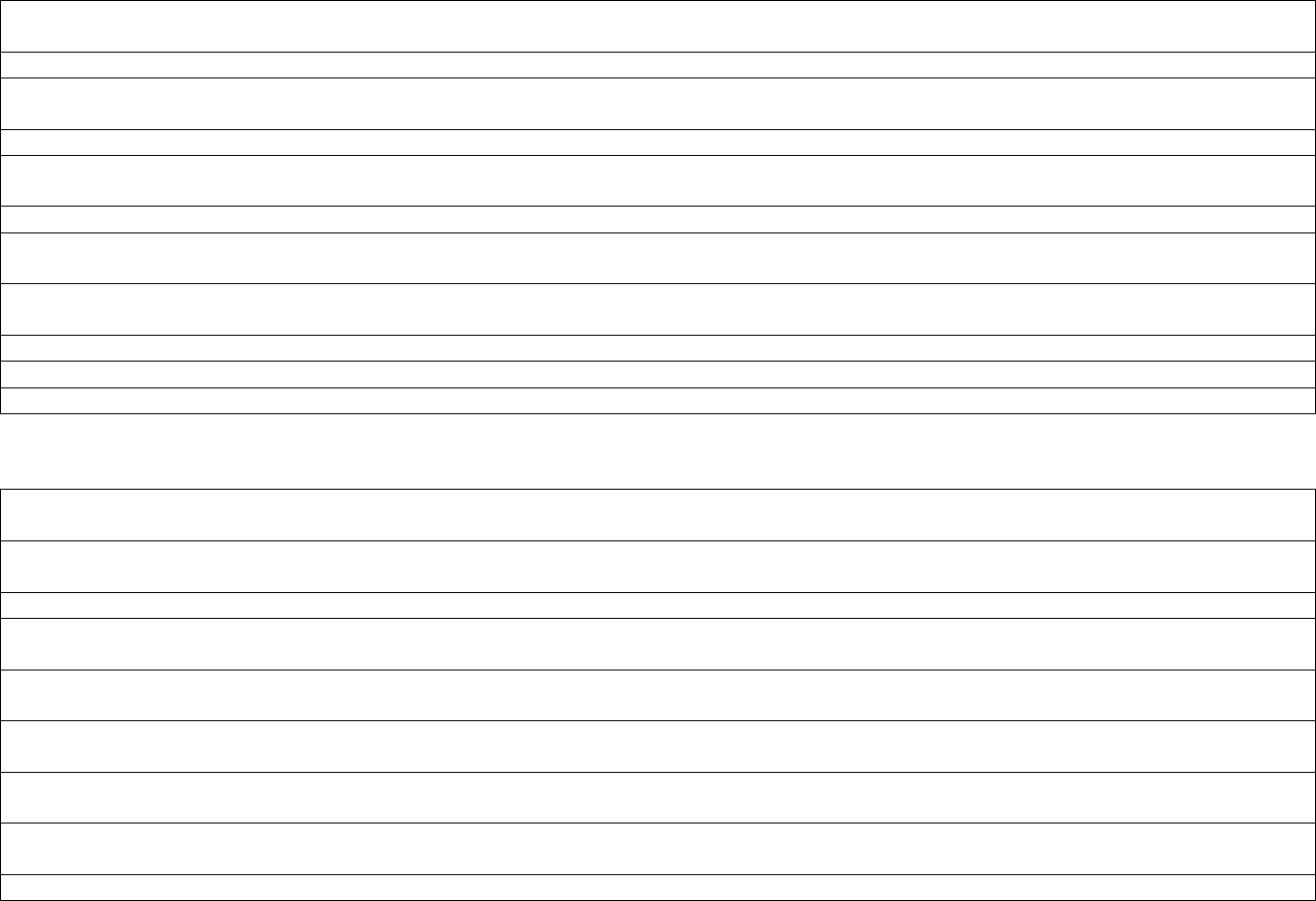

4. Please mark how strongly you agree or disagree with the following statements.

▪ Over 90% of reviewers were highly satisfied with the training.

Figure 4. General Satisfaction with the Training

[Figure 4 chart. Title: General Satisfaction with Training. Y-axis: Percent of Reviewers (0 to 60). Panels: Met Needs and Expectations; Testimonials were Relatable; Interesting and Engaging. Response categories in each panel: Strongly agree, Agree, Neutral, Disagree, Strongly disagree.]


5. In the last year, how confident were you in your ability to identify bias in a review?

▪ Most reviewers (56%) were moderately confident in their ability to identify bias in

review in the last year, pre-training; only 28% were very or extremely confident in their

ability to do so.

Figure 5. Confidence in Ability to Identify Bias in Peer Review in the Last Year (pre-training)

[Figure 5 chart. Title: Pre-training: Confidence in Identifying Bias in Review. Y-axis: Percent of Reviewers (0 to 60). Response categories: Extremely confident, Very confident, Moderately confident, Slightly confident, Not at all confident.]


6. In the last year, how often did you read a critique or attend a review meeting where you

thought bias (or potential bias) was present?

▪ 55% of reviewers stated that they sometimes read critiques or attended meetings where

they thought bias was present in the last year (pre-training).

▪ ~40% indicated that they rarely or never perceived review bias in the last year (pre-

training).

Figure 6. Identified Bias in Peer Review in the Last Year (pre-training)

[Figure 6 chart. Title: Pre-training: Presence of Bias in Peer Review. Y-axis: Percent of Reviewers (0 to 60). Response categories: Never, Rarely, Sometimes, Most of the time, Always.]


7. To what extent do you think bias is a problem in the NIH review process?

▪ Most reviewers believe that NIH has a problem with bias in the review process, with 45%

considering it a moderate problem, 29% a big problem and 13% a very big problem.

Figure 7. Bias as a Problem in the NIH Review Process

[Figure 7 chart. Title: Problem with Bias in the NIH Review Process. Y-axis: Percent of Reviewers (0 to 50). Response categories: Not a problem at all, Very small problem, Small problem, Moderate problem, Big problem, Very big problem.]


8. In the last year, how often did you intervene when you thought bias (or potential bias)

was present in a review (written critique or meeting)?

▪ Most reviewers (53%) rarely or never intervened when they thought bias was present in a

review in the last year, before training was available.

▪ Only 4% of reviewers always intervened when they perceived review bias in the last year

(pre-training).

Figure 8. Frequency of Intervening on Bias in Peer Review in the Last Year

[Figure 8 chart. Title: Pre-training: Intervening on Bias in Peer Review. Y-axis: Percent of Reviewers (0 to 40). Response categories: Always, Most of the time, Sometimes, Rarely, Never.]


Qualitative Survey Results

Please share any general comments about bias in NIH review or recommendations for

improving this training.

▪ Among those who responded to the survey (n = 3,166), 46% provided comments to the

open-ended text question (n = 1,443).

Common and Salient Themes

Listed below are the themes identified in the comments, followed by some examples that help

capture the sentiment (see Appendix B for additional themed comments).

1. General Satisfaction and Value of the Training: The great majority of reviewer comments

were those of simple thanks and appreciation for the training. Reviewers applauded NIH for

tackling bias in review and were very pleased with the high quality of the training. Reviewers

stated that they learned a lot from the training and believed that the training could have a real

impact on reducing bias in review. They also felt that the training activities were very

relatable and were impressed by the study section scenario that displayed bias that they

themselves have seen “many times”. Reviewers overwhelmingly requested more scenarios

that they could watch and learn from. Many also thought that bias training should be required

every year or before every review meeting.

a. “I greatly appreciated this training. Not only did it allow me to understand some potential

biases I may have, but the training was also empowering to understand ways to have a positive

impact during panel discussion. Thank you!”

b. “It's encouraging that the NIH has recognized that bias should be addressed in the review

process. I am cautiously optimistic that this required training will have an impact towards a

fair and equitable process.”

c. “I thought the training was outstanding and it gave me hope for the future. Thank you!”

d. “As a junior member of a study section who is only participating in his 3rd study section (all

of which have been virtual) I have often felt uncomfortable in discussions. It is extremely

helpful to see "speaking up" role modeled as a core NIH value here.”

e. “I thought the modality of revisiting the same clip to see what was wrong and how it could be

fixed was really engaging, and the examples were spot on (all things I regularly see). Thank

you for doing this!”

f. “I didn't think I was going to enjoy this training so much! I would have watched more study

section examples if there were optional additional videos. Thank you for this training and

actively improving the review process.”

g. “This is literally the most practical and helpful anti-bias training I have ever participated in.

Thank you for doing this!”

See Appendix B, Table 1, for additional comments on General Satisfaction and Value of the Training.


2. Empowered and Committed to Future Bias Mitigation Actions: Many reviewers were

motivated and empowered by the training. Reviewers stated that the training helped them

become more confident in speaking up and out against bias. The training also helped many to

recognize their own biases and make vows to correct their biased attitudes in future reviews.

a. “The training was engaging and made me think carefully about my choice of wording in

critiques. It also gave me more confidence to speak up and question other reviewer statements

during study sections.”

b. “Thank you for this. I now feel empowered to speak up when I hear or read bias. Before I

rarely spoke up because I thought it would be held against me or others would think I was not

an expert and out of line.”

c. “I thought the training was very useful - now I need to modify some of my written critiques

before submitting them.”

d. “…I think this training has elevated my awareness of potential bias (in myself and others). I

will be more likely to identify and push back on perceived bias in the read and meeting

phases.”

e. “It will be my first time reviewing this October so I haven’t had any of these experiences as a

reviewer but now I have learned about them and feel empowered to not give biased reviews or

to allow my colleagues to give biased reviews.”

See Appendix B, Table 2, for additional comments on Empowered and Committed to Future Bias Mitigation Actions.

3. Requests for More Direct Approaches to Tackle Bias: Reviewers found the bias

intervention strategies in the training extremely valuable and wanted more resources and

examples on how to intervene in situations where different types of biases are present (e.g.,

scientific, age) and when bias is present in different contexts (e.g., meeting, critiques).

Reviewers also wanted resources and strategies to help prevent themselves from expressing

bias in review. Reviewers requested examples such as clarifying questions to ask when

challenging biased statements, different avenues to raise bias concerns anonymously during

the meeting, templates to assist with writing unbiased critiques, catered intervention

approaches for young, minority and marginalized groups, and a cheat sheet of common

review language that was biased.

a. “Provide examples of sentences, language reviewers can use to ask questions of other

reviewers if they think they are being bias; examples of how to write non-bias reviews for each

of the review sections”

b. “Language is often a barrier, particularly for not native English speakers. A list of appropriate

and inappropriate sentences most commonly used in each sections of the review criteria would

be helpful, particularly to new reviewers.”

c. “…would like more tips for how to approach it in a respectful manner--I can always go back

to the point about the review criteria but when (if ever) is it appropriate to call out bias

explicitly?”


d. “…Would be great to see examples of gender and race based bias and examples of how to

intervene.”

e. “It would be helpful to highlight differences in how ESI's can intervene vs how more well

established researchers can intervene when observing bias.”

See Appendix B for additional comments on Requests for More Direct Approaches to Tackle Bias.

4. The Need for Chairs and SROs to Intervene More and Receive More Bias Training:

Reviewers believed that Chairs and SROs hold the most power in the room and play a major

role in setting the tone for the meeting and in deciding acceptable and unacceptable behavior;

for these reasons, reviewers believed that Chairs and SROs should take the lead in addressing

bias and in changing the review culture to one that does not tolerate bias. Reviewers stated

that they would like to see Chairs and SROs speak up more and intervene first when bias

occurs, especially when biased statements are voiced at review meetings. Many reviewers

noted that they would feel more comfortable and confident intervening on bias if they saw

the Chair and SRO playing a more active role in intervening. They also thought that Chairs

and SROs could benefit from additional training on bias.

a. “train SROs and chairs to be more engaged in identifying bias and clearly stating it during

session. These two jobs have the most weight in the room…”

b. “This training is good. Strongly encourage SROs to intervene, often, to help get reviewers to

change their behavior over time.”

c. “...SRO intervention needs to occur more frequently in order to teach the community about

what is and is not acceptable.”

d. “We need the SROs to step in more when they see or hear bias.”

e. “Chairs should get extra training on this issue - they are the first line of defense”

See Appendix B for additional comments on the Need for Chairs and SROs to Intervene More and Receive

More Bias Training.

5. Experiences with Bias as a Reviewer and Applicant: Reviewers stated that they could

relate to the scenarios in the bias training, with many stating they have been in meetings

where these exact scenarios occurred. Reviewers shared other examples of bias that they

experienced or observed as a reviewer. Others noted that the training highlighted many of the

biases that they have faced as a grant applicant, mainly from reviewer comments on their

applications (summary statements).

a. “I very much appreciate this training and the direct focus on what I've always thought of as

snobbery, but that is indeed bias. As a woman, I have felt talked over or disregarded in

meetings just as this training illustrated and I hope that it has impact.”

b. “I very much liked the emphasis on investigator stature over the application. I have seen this

as an issue many times in study section.”


c. “This training was surprisingly good. I've been in or seen those situations many times before.”

d. “While taking this training it was sad to see how so many of my grants have been hit with

many of the examples that were presented...”

e. “I was 34 when I received my first 2 R01s. I am only now (10 years and many applications

later) no longer getting ageist comments on peer reviews. My perception, and experience on

study section is that peer review is biased against young people.”

f. “…One area of bias that I've personally experienced is in judging a PI by their name. The

reviewer wrote that it was unlikely that I wrote the application myself - it was insulting

considering I'm a native English speaker.”

See Appendix B for additional comments on Experiences with Bias as a Reviewer and Applicant.

6. Power Dynamics and Challenges with Intervention: Reviewers also noted the challenges

and potential consequences that many reviewers face that could deter them from intervening

on bias, namely the power dynamics associated with reviewers’ social and demographic

status. Reviewers felt that young, less experienced reviewers and reviewers from

marginalized and minority groups will be less likely to speak out against bias out of fear of

being ignored or undermined or because of the potential for negative repercussions as a

reviewer or applicant. Reviewers recommended that the training include more

acknowledgement and intervention strategies that take these power dynamics into account.

a. “The training was helpful. I suspect those with more experience as investigators and as NIH

peer reviewers will often hold more "power" to "steer" a conversation, making it difficult for

less experienced reviewers to intervene.”

b. “It is difficult to speak up / intervene against reviewers with strong convictions. I imagine that

people from underrepresented groups, and particularly young investigators, would not feel

comfortable speaking out.”

c. “I enjoyed the training. One of the challenges of intervening during the review process as a

junior or mid-career researcher is that you won't be heard by the chair or other committee

members.”

d. “I think the biggest challenge to this training is learning how to intervene on biases without

negative repercussions.”

e. “One of the issues I find problematic is the fear that reviewers may retaliate when our own

grants are reviewed. So my feeling is that few people want to upset other reviewers by making

antagonistic remarks”

See Appendix B for additional comments on Power Dynamics and Challenges with Intervention.

7. Reviewer Recommendations to Reduce Bias in Review: Reviewers highly appreciated the

training but also gave their own recommendations on how NIH can reduce bias in peer

review. Many of these recommendations focused on policy changes such as modifying the

review criteria, incorporating blind reviews, and adopting a staged review process. Reviewers

also noted that increasing the diversity on panels would help to reduce bias in review.


Some reviewers even suggested removing the environment and investigator criteria since

they were heavily susceptible to bias.

a. “Another aspect of reducing bias in the panel meeting is trying to mix the levels of experience

of members (established, new investigator, new panel member, etc)”

b. “Reduce biases by using double-blind review processing.”

c. “I have believed for a long time that the "environment" criterion should be eliminated. As the

1st speaker said how can the quality of the environment at Meharry be evaluated by people

who have no knowledge of the place.”

d. “You cite that Investigator and Environment are the two areas at highest risk of bias. NIH

should probably get rid of them. So long as these exist, the "top tier" institutions will remain

with systematically higher scores than other institutions.”

e. “Honestly, I think most bias, particularly more subtle and less clear examples that are more

pervasive, will be extremely difficult to remove without blind peer review.”

See Appendix B for additional comments on Reviewer Recommendations to Reduce Bias in Review.

8. Recommendations to Improve Training: Aside from the overwhelming request to add

more scenarios (see #1 above), reviewers stated that the training could be improved by

covering more types of bias and by addressing review biases that are more implicit or subtle

in nature. While reviewers were pleased that the training covered bias associated with the

investigator and environment, many reviewers wanted to learn more about scientific bias

(e.g., preference for one’s own science or approach) and bias associated with the socio-demographic

characteristics of reviewers and applicants (e.g., gender, race). Bias associated

with critique-score mismatch and non-discussed applications was also of concern.

Reviewers also noted that the biases covered in the training were mostly explicit in nature

and therefore easily identifiable and more likely to be addressed and corrected. They wanted

the training to cover more implicit or subtle forms of biases that arise during review.

Reviewers also wanted to learn how to intervene when these types of biases (i.e., different

types of biases and subtle biases) surfaced.

a. “There was too much emphasis on a single bias (preeminence of the PI) and not enough on

other bias.”

b. “This was very good but it only applies to reviewed grants. Much bias goes into giving grants

bad scores so that they are not scored and are, therefore, never reviewed.”

c. “There is another type of bias that is much more challenging to address. Most scientists favor

their own local fields of research and tend to believe their field's approach to science is the

most rigorous, and evaluate other approaches more harshly.”

d. “I commend the NIH for this bias training. I would find it very important to also address

biases with respect to sex, gender expression, race and ethnicity.”

e. “Whenever the reviewer have scored the individual they have no weaknesses but the score

was "4". that ended up in Not discussed application for an early status investigator.”


f. “the video was great but slightly exaggerated. Often there is more implicit bias which is more

difficult to pick up on.”

g. “The examples were an excellent illustration of common pitfalls. The issues were relatively

easy to identify, but the ways to address benefited from the analysis and explanations. It might

be interesting to have examples where the bias was more subtle.”

See Appendix B for additional comments on Recommendations to Improve Training.


Appendix A

Methods

Training Module

The CSR Eliminating Bias in Peer Review training module was developed with significant,

iterative input on both content and format from the CSR Advisory Council Working Group on

Bias Awareness Training, which included a broad, diverse group of extramural scientists as

well as staff from CSR, COSWD and NIGMS, including those with specific backgrounds and

expertise in bias training. The module is designed to raise reviewer awareness of potential

sources of bias in review of scientific grant applications and help them develop ways to mitigate

bias. It is targeted towards mitigating the most common (but not all) biases in the peer review

process and is not designed to be implicit bias training. The overall focus is on bias related to

principal investigator and institutional reputation and on the importance of adhering to

established NIH review criteria in the areas of Investigator and Environment. The training

begins with vignettes from real NIH grant applicants who describe instances of bias they have

personally experienced in review of their applications. The training then engages reviewers in a

short activity that illustrates the implicit biases that language can elicit. The next activity probes

how a reviewer might respond upon reading potentially biased statements in a fellow reviewer’s

critique. Finally, a mock study section presents two versions of the review of a flawed

application from a renowned principal investigator. The first version demonstrates multiple

instances where bias leads to unfair review; then an alternative version is presented in which

reviewers intervene to mitigate potentially biased remarks.

Participants

Participants were 3,166 reviewers who participated in 389 CSR study section meetings between

August and December 2021.

Survey Administration

All reviewers received the training four weeks before their Fall 2021 review meeting. The

survey weblink was located at the end of the training module. Reviewers were told that the

survey was optional, that their responses would be kept private, and that the survey would take

about five minutes to complete. All surveys returned by January 21, 2022 were included for

analysis.

Measures

Knowledge and Awareness of Bias in Peer Review

Five items asked reviewers to rate on a scale from 1 (not at all) to 6 (very large extent) how the

training helped them in the following areas: a) identify potential biases in a review, b) become

more aware of the review biases that I hold, c) understand the importance of using the review

criteria to assess and/or avoid bias in review, d) feel more comfortable intervening when bias is

perceived in a review, and e) write an unbiased critique or present an unbiased argument during

a review meeting. The scale was later recoded into a 4-pt. scale with values 6 (very large extent)

and 5 (large extent) grouped and redefined as “large extent” and values 3 (small extent) and 2

(very small extent) grouped and redefined as “small extent”.
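
The recoding described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the analysis code used for the report: the `RECODE` mapping and `summarize` function are hypothetical names, the label for score 4 ("moderate extent") is taken from the Figure 1 legend, and the example responses are invented for demonstration. The mean is taken over the original 6-point scores, as the note under Figure 1 states.

```python
from collections import Counter
from statistics import mean

# Map original 6-point scores to the four reporting groups.
RECODE = {
    6: "large extent",     # very large extent
    5: "large extent",     # large extent
    4: "moderate extent",  # label assumed from the Figure 1 legend
    3: "small extent",     # small extent
    2: "small extent",     # very small extent
    1: "not at all",
}


def summarize(scores):
    """Return (mean of raw scores, percent of responses per recoded group)."""
    groups = Counter(RECODE[s] for s in scores)
    n = len(scores)
    percents = {label: 100 * count / n for label, count in groups.items()}
    return mean(scores), percents


# Hypothetical responses for illustration only; these are not survey data.
example_scores = [6, 5, 5, 4, 4, 3, 2, 1, 6, 5]
m, pct = summarize(example_scores)

print(f"M = {m:.1f}")
for label, value in pct.items():
    print(f"{label}: {value:.0f}%")
```

Keeping the mean on the raw scale while grouping only for display means the reported M values and the grouped percentages summarize the same responses at different resolutions.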


Future Actions to Reduce Bias in Peer Review

Three items asked reviewers to rate on a scale from 1 (definitely not) to 5 (definitely) how

likely they are to do the following items in the future: a) contact the SRO if you believe bias is

present in reviewers’ written critiques, b) contact the SRO or Chair during a review meeting if

you believe bias is present at the meeting, c) ask for clarification or justification from a

reviewer if you think they made a biased statement during the review meeting.

Usefulness of Specific Training Activities

Three items asked reviewers to rate on a scale from 1 (not at all useful) to 5 (extremely useful)

how useful they found the following specific training activities: a) language matters: peer

review vernacular and biased associations, b) the read phase: review of written critiques and

bias, c) study section meeting. The scale was later recoded into a 4-pt. scale with values 5

(extremely useful) and 4 (very useful) grouped and redefined as “very useful”.

General Satisfaction with Training

Three items asked reviewers to rate on a scale from 1 (strongly disagree) to 5 (strongly agree)

their general satisfaction with training for the following items: a) the training met my needs and

expectations, b) the testimonials were relatable, and c) the training was interesting and

engaging.

Ability to Identify Bias in Peer Review

One item asked reviewers, “In the last year, how confident were you in your ability to identify

bias in a review?” This item was on a scale from 1 (not at all confident) to 5 (extremely

confident).

Presence of Bias in Peer Review

Two items asked reviewers about their perceptions of the presence of bias in peer review: a) In

the last year, how often did you read a critique or attend a review meeting where you thought

bias (or potential bias) was present?; this item was on a scale from 1 (never) to 5 (always), and

b) To what extent do you think bias is a problem in the NIH review process?; this item was on a

scale from 1 (not a problem at all) to 6 (very big problem).

Intervening on Bias

One item asked reviewers, “In the last year, how often did you intervene when you thought bias

(or potential bias) was present in a review (written critique or meeting)?” This item was on a

scale from 1 (never) to 5 (always).

General Comments

In an open-text box, reviewers were given the opportunity to share any general

comments about bias in NIH review or recommendations for improving this training.


Appendix B. Additional Themed Comments

Table 1. General Satisfaction and Value of the Training

I previously thought I was aware but this training, along with external events over the last year, have helped me to understand that the extent of my

own bias and that of my peers is much deeper and insidious than I'd previously realized.

I learned that some things I thought were appropriate actually reflect potential bias (e.g., regarding prior NIH experience on the team, publication

record of investigators)

I think I have not had the bias during the review process, but I might just not realize the issue. By having this training, I will be more cautiousness

about whether there is the bias during the process.

The training was excellent. Among many important points, it brought bias towards other reviewers to my attention, which I have experienced and

did not realize was of concern to the NIH.

This is important, but in my ~15 years of reviewing this is the first time such a training was done. This is not meant as a criticism of the past but as

an encouragement to do more of it in the future

This was extremely useful and eye-opening, even for someone who considers himself a veteran NIH reviewer

Interesting training. Makes you think.

I loved this module! The scenario, discussion and narrated version illustrated very well each issue related to bias and how to intervene. Thank you!

This is an excellent training tutorial. Very engaging and very realistic. All of those scenarios actually happened! And I hope we could do a much

better job going forward.

The study section video was spot-on in matching my experience on study sections, and the types of biases that I've seen in reviews of senior

investigators and the attitudes of some peer reviewers towards others at the table.

The most useful part was the acted scenario. I identified some of the moments of bias when first watching, but not all. And, seeing the examples of

how to intervene was helpful. And it's good to know my fellow panelists will have done this training.

I thought the scenario, giving a senior investigator a pass on a poorly written proposal, was very effective; I consider this a major source of bias.

Additional scenarios would be useful.

I think that the study section scenario was very well done and that reviewers will benefit if more such examples would be added.

An optional longer version would also be helpful with more scenarios.

I would gladly have spent twice the time on this training. Especially liked the bystander training and examples of how things could be done

differently.

This training is an excellent start and should be recommended before every study section meeting to remind reviewers about unconscious bias…

We're all inundated with mandatory training, but this one was timely, useful, and probably effective. Glad I did it.

Table 2. Empowered and Committed to Future Bias Mitigation Actions

This training is particularly helpful because it reinforces the idea that it is our right to voice concerns when biases are perceived during the

discussion of applications.

This training has given me the confidence to step up and say something when I believe I am seeing bias in the review process.

The course was effective, I feel better qualified to identify potential bias in the review process. Thank you

I received biased comments on K99/R00 grant. As a postdoctoral fellow, I had no experience of what to do about those unfair comments. Now as a

junior investigator, this video helped me in understanding that the bias can be voiced out.

I feel I will be more proactive in identifying bias to the SRO in the future. I also am more likely to ask a reviewer for further explanation for their

remarks.

I enjoyed this interesting and engaging training! It will be helpful for me to detect hidden bias during the review process and avoid my own bias

when reviewing the NIH grants.

Table 3. Requests for More Direct Approaches to Tackle Bias

The most useful aspect is to provide tools to reviewers to intervene respectfully if they see bias. In my opinion, the training could be strengthened

by spelling out this goal and providing guidance on this goal that is as concrete as possible.

Perhaps a bit more detail regarding writing the critique in an unbiased manner.

It would be useful to have more training on when/how to intervene…

…You might consider to have an "anonymous" chat function during meetings where everyone present can raise an issue without being named, to

avoid stress of speaking out.

…It would be helpful to provide more trainings for the read phase and in how to write informative, constructive and fair critiques.

Giving more ways to report bias other than speaking up during review process. Point out repeated bias of a reviewer anonymously.

…I've thought about asking the SRO to make a bullet-point "cheat sheet" about things you should not bring up in reviews, whether written or in the

discussion, because some of these problems happen over and over again.

Table 4. The Need for Chairs and SROs to Intervene More and Receive More Bias Training

I think a good Chair that rapidly intervenes when bias occurs is very important and gives confidence for others to act as well.

…Leadership by the SROs and section chairs to encourage such discussions is extremely important.

The SRO and study section chairs should be the ones who are trained and speak up immediately in conjunction with any of these scenarios.

The chair and/or SRO should play a major role in identifying and intervening upon bias during review.

…I hope that chairs / SROs become more aware of their responsibility to hold these standards high...

NIH CSR should also require all SROs to attend this training and more effectively intervene to minimize bias during reading the critiques and the

discussion meeting.

in my opinion the SRO is the one that needs to actively address the obvious bias from the written reviews. You get very inappropriate comments

from reviewers that are supposed to be identified by the SRO before there critiques are released.

have SRO also speak up during meetings

... I have been in reviews similar to the example and someone has tried to step in and has been shouted down with no help from the SRO. Very

troubling, SRO training critical.

…If the SRO states the rules at the beginning of the meeting, it helps (less awkward than calling people out later)

… it would be useful for the SROs to chime in when bias is perceived. In general, SROs keep a much closer handle on NIH guidelines vs

reviewers.

…The chair and SRO of a study section should pay more attention and do more.

As a person who is usually shy from speaking up, especially in case of confrontation, I would appreciate the Chair to speak up more. It would be

nice if the Chair can get more training on identifying bias.

…SROs should be encouraged to speak up during study section if they feel bias is being exerted, or contact reviewers individually if they spot it in

comments submitted.

…In my experience both the Chair and the SRO can help steer a meeting in more unbiased direction...

…I didn't know reviewers were expected to mention when they see/hear bias in another panelist's review - I assumed it should be left to the SRO.

Training for SROs and chairs (mine have been very good), might help particularly when it comes to intervening on subtle issues.

Table 5. Experiences with Bias as a Reviewer and Applicant

When I previously pointed a bias in a study section as an ad-hoc reviewer, later in the men's bathroom I was warned by another reviewer that the

section wanted to "keep" those who think the same way as others. I was removed from the next study section.

The videos were relatable and one almost mirrored what I went through in a review session with reviewers not judging a grant by its merits but

giving applicant a pass because of their institution and big names on the research team.

I think that the scenario in the video was spot on. I've been at review sessions where this exact thing has happened.

I have received multiple reviews where there was clear bias (~50% of my reviews have the statements used here as examples). It is unclear how

appropriate it is to point this out to the SRO - without looking like I am just complaining about a low score.

Seeing these videos made me realize that I have been the victim of bias in reviews of my own applications. It happened so often that I thought the

bias was the norm. I had to buttress my applications with senior investigators just to be acceptable.

I have experienced bias in my critiques: Statements like: prior NIH funding has lapsed, the most senior person on the grant is X and does not have

any effort, stayed in academic rank too long indicates lack of institutional support.

…I too have received comments about where I went to school, and why there was a gap period in my resume. I also have seen comments about

needing a more senior person.

As an investigator recently relocated to the US from Canada and thus no track record in NIH funding I was often seeing comments from reviewers

on my own grants saying "she has not had any NIH funding".

As a woman in science, I have experienced bias in review literally from the very first application I submitted…

As a women of color, I've been discouraged to submit to NIH because of biased reviews or no scores. I feel as if the investigator and environment

(small college) were always against me. My ideas have shown up in higher impact investigators labs.

Table 6. Power Dynamics and Challenges with Intervention

It would be helpful to address the concerns of junior folks speaking up in meetings with senior folks regarding the senior folks' bias -- junior folks

are likely to feel less able to speak up.

…Similarly, the training does not address the issues of power dynamics within meetings and how that can make it more challenging for some

individuals to intervene.

I'd say I'm a mid-career scientist and I'm on my 2nd year as a standing member of a study section. I still get nervous around more experienced,

senior scientists and would have a hard time challenging their opinions/comments in a discussion,

The training was very good and quite helpful. One dynamic that was not discussed (but was suggested) was the age/experience of the reviewers.

People new to the process (younger) can be intimidated by more senior and more confident reviewers and back off.

The training provided very obvious examples of bias in review, though I believe that the biases are harder to see and may not always be apparent. It

is also difficult for junior researchers on review panels to voice concerns about bias.

One of the problems for fighting bias in front of your peers is the potential for indirect retaliation. The other is the feeling that no matter how much

one can speak up nothing changes.

This training as presented is ineffective, it does not take into account that reviewers come from diverse groups that are sometimes incapable of

intervention. The training needs to focus on communication between minoritzed /majority groups and fragility.

While I see that NIH is making the peer review panel in the study sections diverse and inclusive, it will be better to empower women, women of

color to be comfortable to voice their opinions and not shut down by the chair or fellow reviewer.

Ultimately, I think the only way to eliminate bias is for people to speak up. However, it is difficult as science is a relatively small community and

some individuals hold very strong grudges.

Table 7. Reviewer Recommendations to Reduce Bias in Review

Continuing to get diverse reviewers in the room will also improve bias.

...The "environment" criterion remains problematic. Why score environment if you don't want us to score the environment?

A major factor is the makeup of the review panelists. Diversity is important.

As a Chair of a study section and serving as panel member on several study sections in the past, I personally believe the "environment" criterion

should be removed. It is difficult to assess the infrastructure and resources avaialble at each institution.

Yes, bias is intrinsic and widespread. The only way to avoid bias is to adapt double-blind review approach where neither the PI name nor the

Institution name should be given to the Reviewers.

This training addresses bias during the review session, but bias also occurs before then: the applications that might have suffered the most bias

simply don't get discussed. Reviewers should be blind to applicants' funding history.

We could blind the proposals so the investigator and environment are not known until at the time of the study section

The review process needs a fundamental rethink - its broken. Blinded review and removal of affiliations of PI from reviewers might work as a way

to avoid bias. Requirement for Biosketches, facilities etc could be removed. Focus on the science only!

The review criteria for investigators and environment should be totally revised. I am not sure how this is going to work but I think the reviewers

should be blinded to provided a better review.

Most bias could be addressed by having the initial review performed blindly, and then revealed when the application is discussed.

Honestly, I think most bias, particularly more subtle and less clear examples that are more pervasive, will be extremely difficult to remove without

blind peer review.

Fundamental changes are needed--completely blind process (no names, institutions), changes in peer-reviewers, adequate compensation for

reviewers time.

Blind review without revealing PI's identity may be better for avoiding biases.

bias will always be present if you can see who is submitting the application. this is obvious and a primary reason why some proposals do better in

particular study sections. blinding the reviewer to the PI is fairest.

We need to have observers in the study section.

This is an excellent training resource. Getting diverse reviewers on the panel helps reduce the bias.

The testimonial regarding environment exemplifies a problem in the review criteria that might be solved if instead of bullet points listing strengths

and weaknesses, environment was scored as a simple is it acceptable or not.

The beginning slides showed many scenarios related to environment. I think this is one of the most challenging review criteria. I am wondering if it

really is a co-equal to other criteria and whether is can bias the overall score.

Maybe the titles of the review criteria should be changed and made more descriptive "Environment" could be changed to "is the necessary expertise

and equipment available at the institution(s), same for "Investigator"

many reviewers assume reputation is a review criteria... it's going to take a lot of effort to change that view. Why not anonymize the applications?

Sure people can figure it out if they want to but it cuts down on this reputational stuff....

Investigator and Environment criteria are rarely impact score drivers (Significance, Innovation, and Approach are). Indeed, NIH should have the

data to illustrate a restricted range on these. Why do we even rate these as they seem the most liable to bias?

I think the investigator and environment criteria are inherently problematic categories of review and have tried to mitigate this by not using them as

score driving unless there is an unequivocal problem in these categories.

Grounding it in the text of what is being asked for each section is very helpful. Not sure that "environment" should even be a criterion, if its primary

effects end up being to send Hopkins more grants.

an explicit statement by NIH that: (1) Relative considerations of prominence and significance of problems does not matter, (2) the Investigator

criterion concerns qualifications for the proposed research, not general prominence

The names of applicants should be removed from the application and the investigators and environment should be assessed by a separate group, and

the scores become available when a reviewer is asked to assess the science (significance and innovation).

I think separating the peer review from the details of the scientific team and institution may be beneficial at judging the scientific question proposed

with a separate review for analyzing the scientific team may help remove bias.

I'm still a bit unsure how to accurately score an institution in which I am unfamiliar. I still imagine bias will exist towards those institutions that are

more well known.

Table 8. Recommendations to Improve Training

Listing the many forms of bias encountered by reviewers would be useful. While this training highlighted some forms of bias, there must be many

more forms out there. Having a list of potential biases, even if superficially covered, would help viewers.

I haven't reviewed on study section enough to identify bias, but the big issue here is that bias is going to affect the applications that don't get

discussed, leaving no change for intervention.

This training addresses bias during the review session, but bias also occurs before then: the applications that might have suffered the most bias

simply don't get discussed. Reviewers should be blind to applicants' funding history.

This training did not address collusion between specific groups of study section members-i.e., members with a common interest who ensure grants

from their field get good scores. This is perhaps the biggest problem that I have observed in study section.

The training was right to focus on excessive deference to established investigators as a key cause of bias. Addressing "back-scratching" or "horse-

trading" favorable grant reviews would also be helpful.

The scenarios are a good way to learn in a non-ideal environment like online training. I do think we as reviewers could also use specific training

regarding racial/ethnic/gender bias in addition to those presented here.

The course covers some of the biases I've seen in the review process. Of course, there are also some with gender, race, age, etc. I'm glad NIH is

trying to address all this.

I thought this was a very well done training. I did notice that the issue of gender bias or racial/ethnic biases was not discussed very much, at least not

explicitly.

Have examples of racial, ethnic, sexual orientation, gender identity, disability, etc bias in the bias in review examples.

Training should include recognizing ageism in peer review. The Biosketch provides the age of the PI. Reviewers often discuss this as a "senior

researcher who has been doing this research for a long time." This is a potential bias..

The most noxious "code word" I hear consistently during reviews is "this is a well-written application." This basically means "this person is a native

speaker of american english and went to a fancy school" and should be called out a all times

reviewers providing one sentence comment about strength and weakness without much details and favoring good score - give all the score driving

points addressing the strength as well as weakness. add scenarios about written critique.

Training was quite good and I expect it will be valuable to most. Major issues not covered: when scores and written critiques do not match (positive

words, negative scores) or when discussion gets off track on minor issues/consistency between discussions.

The training did not really teach me anything I wasn't already aware of. I have seen examples of most of the cases pointed. I think that many biases

are more subtle than the examples and are often reflected in scores but not comments.

Presentation missed these issues: (1) inequality in time presenting/discussing each application; (2) list of strengths/weakness did NOT match scores;

(3) lack of rigor in reviewing applications; (4) wide range scores; (5) too many scores outside range

This training is of outmost importance, but the testimonial were too extreme. Bias is much more subtile

There was nothing about unconscious bias. In my university bias training, we were shown a video that at least mentioned these tests and I'm not sure

why NIH wouldn't also do this.

The training was very helpful. More tools to identify implicit bias would also be useful…

This was pretty light weight, honestly. I was hoping for more discussion of implicit bias.

I think that some biases are more subtle and difficult to spot or call out. Unless you are a primary reviewer it is hard to tell what is a legitimate

concern vs. a bias that is "wrapped" in the guise of concern.

The real-life scenario of proposal discussion was perhaps brought to an exaggerated extreme. Biases are generally subtle, not as extreme as depicted

in the scenario.

There are many more subtle ways of bias in review. I don't think the current conflict of interest criteria are comprehensive and rigorous enough.

The was helpful in outlining basic biases. Perhaps not enough for more subtle situations.

the training is helpful. Most biases in my experience are subtle and can have cumulative effects.

The study section panel discussion was very blatant. More subtle examples might be more convincing. For example, the often used phrase that

somebody "may not be independent" from their former PI. I agree the accounts of the PI's sounded very true

the study section examples are bit too obvious and extreme. more subtle deviations are less likely to draw attention, but could still have similar

effects.

The review scenario was a little over the top (too obvious), the reviewers if they have biases tend to be more subtle or sophisticated.

The panel discussion was very over-the-top obvious. It would be more helpful to see an example of the subtle bias that's more prevalent in review

sessions.

The incidents of bias were a bit obvious. Making them a bit more subtle might make the training more useful.

The examples were an excellent illustration of common pitfalls. The issues were relatively easy to identify, but the ways to address benefited from

the analysis and explanations. It might be interesting to have examples where the bias was more subtle.

The examples presented were extreme examples, and thus really easy to see the bias. The real devil is the subtle bias which is easy to overlook. I’m

not sure that this training effectively gets to that problem. But it’s a start.

The biases discussed were quite obvious; bias I encounter (and have) and don't know how to deal with is often subtle. For example certain

techniques, approaches, and questions are favored since they are 'sexy' and others are considered are 'outdated.

My experience is that the more extreme cases covered in this training are rare and typically addressed. It is the more subtle situations where bias can

more easily go unchecked.

I think activities like this are important, but think it would be even better to identify scenarios that are more subtle than what was presented in the

mock review video. Biases involved in that review were easily identifiable.

…I found the review scenario over the top. Bias is typically more subtle than that.

The explicit bias of the kind presented is rare, but reviewers may not be as direct as in the video. The more sophisticated presentation of bias is a

larger problem.

The "implicit" piece wasn't addressed as clearly as it could have been. A lot of the bias expressed here seemed pretty explicit.